Abstract

There is continued emphasis on increasing and improving genetics education for grades K–12, for medical professionals, and for the general public. Another critical audience is undergraduate students in introductory biology and genetics courses. To improve the learning of genetics, there is a need to first assess students' understanding of genetics concepts and their level of genetics literacy (i.e., genetics knowledge as it relates to, and affects, their lives). We have developed and evaluated a new instrument to assess the genetics literacy of undergraduate students taking introductory biology or genetics courses. The Genetics Literacy Assessment Instrument is a 31-item multiple-choice test that addresses 17 concepts identified as central to genetics literacy. The items were selected and modified on the basis of reviews by 25 genetics professionals and educators. The instrument underwent additional analysis in student focus groups and pilot testing. It has been evaluated using ∼400 students in eight introductory nonmajor biology and genetics courses. The content validity, discriminant validity, internal reliability, and stability of the instrument have been considered. This project directly enhances genetics education research by providing a valid and reliable instrument for assessing the genetics literacy of undergraduate students.

Significant advances in genetics in recent decades have dramatically increased the impact of genetic information and technologies on society. Genetic issues now play a large role in health and public policy (Miller 1998; Kolstø 2001), and new knowledge in this field continues to have significant implications for individuals and society (Lanie et al. 2004). Despite this increased exposure to genetics, recent studies of the general public's genetics knowledge show a relatively low understanding of genetics concepts (Petty et al. 2000; Human Genetics Commission 2001; Lanie et al. 2004; Bates 2005). Additionally, genetic information presented informally through various media is not always correct (Grinell 1993; Lanie et al. 2004), and without knowledge of basic genetics, many people find it hard to distinguish valid genetic information from misinformation (Jennings 2004). Studies looking specifically at the genetics knowledge of students in kindergarten through grade 12 (K–12) also show low levels of understanding. The 2000 National Assessment of Educational Progress tested ∼49,000 U.S. students, and on average only ∼30% of 12th graders could answer genetics questions completely or partially correctly (National Center for Education Statistics 2000).

Opportunities to learn about genetics begin in grades K–12. The National Science Education Standards (NSES) provide the basis for state science standards. Specifically, the NSES Science Content Standards indicate which genetics concepts students should learn within the clustered grade levels K–4, 5–8, and 9–12 (National Research Council 1996). In grade levels K–4 and 5–8, learning the basic concepts of inheritance and reproduction is expected, while in grades 9–12 the molecular basis of heredity and biological evolution are covered. Students graduating from high school should leave with a very basic, but reasonably broad, understanding of genetics.

Postsecondary education provides an additional opportunity for genetics education. More than 2 million individuals graduate with associate or bachelor's degrees each year in the United States (National Center for Education Statistics 2005a). Approximately 10% of graduates are in the life sciences and health fields (National Center for Education Statistics 2005b), and these students presumably receive adequate exposure to genetics. The other 90% of graduates may receive some genetics instruction through courses taken to satisfy general education requirements: >90% of institutions have such requirements, organized under broad curricular groups, i.e., natural sciences, social sciences, and humanities/fine arts (Hurtado et al. 1991). Within the natural sciences, undergraduate biology courses for nonscience majors are an ideal opportunity for improving genetics education for the general public, but there has been little evaluation of genetics instruction in these courses.

Other disciplines have been actively involved in such assessment. Diagnostic multiple-choice tests, known as “concept diagnostic tests” or “concept inventories,” have been developed in several science disciplines, most notably physics and astronomy (Hestenes et al. 1992; Hufnagel 2002) and chemistry (BouJaoude 1992; Mulford and Robinson 2002). These tests are used to determine students' knowledge of a small set of related concepts. They also allow educators to evaluate the learning outcomes of their courses objectively and can be used to identify gaps in student understanding both precourse and postcourse. Furthermore, adjustments to pedagogy and content can be evaluated effectively when a standardized assessment is used. As stated by Klymkowsky et al. (2003, p. 157), “without an appropriate instrument with which to measure conceptual understanding in our students, educational experiments can lead to unfounded conclusions and self-delusion, particularly in the instructors who initiate them.”

Efforts have been underway to develop a “biology concept inventory” focusing on courses for biology majors, such as developmental biology, immunology, and upper-level genetics (Klymkowsky and Garvin-Doxas 2007), but such an inventory is relevant only for assessing student knowledge in courses for biology majors. Other assessment instruments have been developed to test genetics knowledge at the high school level (Zohar and Nemet 2002; Sadler and Zeidler 2004), but neither was rigorously evaluated for validity and reliability. In response to the need for an instrument that specifically tests the genetics knowledge of nonscience majors, and to evaluate genetics education at the undergraduate level more generally, this study presents the development and evaluation of the Genetics Literacy Assessment Instrument (GLAI).

DEVELOPMENT OF THE GLAI

Our approach to designing the GLAI was similar to the methods outlined by Treagust (1988) and others who have developed assessment tools in the sciences (Hestenes et al. 1992; Anderson et al. 2002; Hufnagel 2002; Mulford and Robinson 2002). The process involved six steps: defining the content, developing and selecting test items, review by professionals, focus group interviews, pilot study data collection, and evaluation.

Defining the content:

The process began by identifying the concepts to be tested by the instrument. The primary source for determining those concepts was a published list of benchmarks of genetics content for nonscience majors' courses identified by a committee of genetics professionals from the American Society of Human Genetics (Hott et al. 2002). This was a comprehensive list, totaling six main concepts and 43 subconcepts, but did not focus on knowledge specifically applicable to genetics literacy. Our definition of genetics literacy is “sufficient knowledge and appreciation of genetics principles to allow informed decision-making for personal well-being and effective participation in social decisions on genetic issues.” This definition is similar to those already in the literature (Andrews et al. 1994; McInerney 2002).

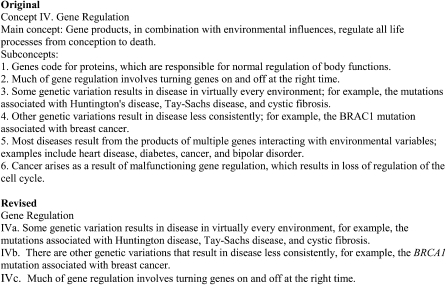

Four of the authors reviewed the 43 published subconcepts for alignment with genetics literacy, because many were not relevant to the narrower objective of this study: measuring genetics literacy. Additionally, a number of the subconcepts appeared to be repetitive or sufficiently related to warrant combining them. Applying these criteria reduced the original 43 subconcepts to the 17 used to develop the GLAI. Finally, several of the subconcepts were edited to improve readability or clarity. Figure 1 gives an example of how the subconcepts were altered and pared down from the original benchmarks established by Hott et al. (2002).

Figure 1.—

Example of revisions made to the original main concept area and subconcepts.

Development and selection of test items:

A number of sources were reviewed for possible test items, including the example questions provided by the College Board's Advanced Placement Biology Exam and the SAT II Biology Exam, the Biological Science Curriculum Study/National Association of Biology Teachers (BSCS/NABT) High School Biology Test, textbook question banks from Lewis (2001, 2003, 2005), Cummings (2003, 2006), and Campbell and Reece (2001). Other instruments for testing genetics knowledge found in the literature, specifically Sadler and Zeidler (2005) and Fagen (2003), were reviewed. Additionally, items developed by the authors for exams in relevant courses were evaluated for potential use. The majority of the sources did not have usable items for the purpose of the instrument; quite often the items did not align specifically with the concepts being tested, or they tested more than one concept. Suitable items were ultimately included in a pool of questions. One source, Sadler and Zeidler (2005), provided seven questions for the initial pool (permission obtained); not all were used in the final version of the instrument, and those included were altered. Additionally, one question from the BSCS/NABT test and four questions from textbook question banks were revised and included in the GLAI pool.

A number of questions were created de novo, and almost all were modified more than once. The literature addressing students' misconceptions regarding genetics was also reviewed (Stewart et al. 1990; Kindfield 1994; Venville and Treagust 1998; Lewis et al. 2000a,b,c; Marbach-Ad and Stavy 2000; Wood-Robinson 2000; Marbach-Ad 2001; Chattopadhyay 2005) and utilized for the development and revision of questions. Additionally, the combined teaching experiences of the researchers served as a significant resource for reviewing and further developing the GLAI items. Originally, we attempted to have four questions per concept, yet several concepts had only three. The initial GLAI item pool included a total of 56 questions.

The next phase of the instrument development was an extensive review by genetics professionals, instructors, and graduate students. Due to the large number of items to be reviewed, the GLAI item pool was divided in half, evenly distributing the concepts between two feedback forms. For each item, the reviewers were asked to answer the following three questions with “yes,” “no,” or “maybe”: (1) “Does the question test the concept?”; (2) “Does the question test genetics literacy, as per our definition?”; and (3) “Is this a quality question?”. The reviewers were also asked to include any helpful comments or suggestions for improvement of the items.

Seventy-three individuals from a variety of genetics professions and from across the United States were asked to review half of the GLAI items, and 31 indicated interest in participating. They were divided into two groups on the basis of their specialties in an attempt to distribute expertise evenly. Twenty-five ultimately participated; because response rates differed between the two sets of reviewers, 10 individuals reviewed one set of items and 15 the other. Two kinds of data were collected from the reviewers: (1) responses to the three questions, which were converted into quantitative data (yes, 3; maybe, 2; no, 1) so that the items given greater approval by the reviewers could be identified; and (2) their comments, which were collated and analyzed as qualitative data. For the quantitative data, the responses were averaged, and the two highest-rated items for each concept were selected for the initial version of the instrument. On the basis of the reviewers' responses and comments, the items again underwent substantial revision. Reviewer feedback was analyzed for themes, and themes that recurred among reviewers were given particular weight when revising questions. This resulted in the first version of the GLAI, which included 33 items, with the vast majority of concepts having two questions.
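The quantitative selection step can be sketched as follows. This is a minimal illustration, assuming each reviewer's answers to the three questions are stored as one triple per item; the data layout, the function names (`average_rating`, `select_top_items`), and the sample ratings are hypothetical and are not the authors' data. Responses are coded yes = 3, maybe = 2, no = 1, averaged over all questions and reviewers, and the two highest-rated items per concept are kept.

```python
from collections import defaultdict
from statistics import mean

# Numeric coding used for reviewer responses (yes = 3, maybe = 2, no = 1).
SCORE = {"yes": 3, "maybe": 2, "no": 1}


def average_rating(responses):
    """Average the coded answers to all three review questions from all reviewers."""
    return mean(SCORE[answer] for reviewer in responses for answer in reviewer)


def select_top_items(pool, per_concept=2):
    """Return the `per_concept` highest-rated item ids for each concept.

    `pool` maps item id -> (concept, list of per-reviewer answer triples).
    This layout is a hypothetical illustration, not the authors' data format.
    """
    by_concept = defaultdict(list)
    for item_id, (concept, responses) in pool.items():
        by_concept[concept].append((average_rating(responses), item_id))
    selected = {}
    for concept, rated in by_concept.items():
        # Highest rating first; ties are broken by item id for determinism.
        rated.sort(key=lambda pair: (-pair[0], pair[1]))
        selected[concept] = [item_id for _, item_id in rated[:per_concept]]
    return selected


# Hypothetical ratings from two reviewers for four candidate items.
pool = {
    "A1": ("mutation", [("yes", "yes", "maybe"), ("yes", "no", "yes")]),
    "A2": ("mutation", [("yes", "yes", "yes"), ("yes", "yes", "maybe")]),
    "A3": ("mutation", [("no", "maybe", "no"), ("maybe", "no", "no")]),
    "B1": ("heredity", [("yes", "yes", "yes"), ("yes", "yes", "yes")]),
}
print(select_top_items(pool))  # {'mutation': ['A2', 'A1'], 'heredity': ['B1']}
```

The text does not say whether the three questions were averaged together or separately; this sketch pools them into one score per item, which is an assumption.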

Focus groups:

Nine students with varying majors self-selected from an introductory psychology course to participate in two focus groups, one with six students and the other with three. The focus groups were conducted as cognitive think-alouds (Weisberg et al. 1996). In each group, the GLAI was administered via a personal response system. After the students answered each item, the group discussed the question, the answer, and the alternatives. Students were probed with questions such as “What is confusing about the question?”, “What words/phrases don't you understand?”, and “What do you think the question is asking?”. The discussions were recorded and transcribed. The students' feedback was used to modify the wording of questions, answers, and answer alternatives (also known as distractors). For instance, the students identified technical words that were not part of their vocabulary, such as “tenet” and “variant,” which were subsequently replaced with more common terms. They also encouraged us to condense the wording when possible, because long questions could discourage completion of the instrument.

Pilot study:

A pilot study was conducted with 11 self-selected students from an introductory biology course for nonmajors, who each completed the GLAI online once. Five students who had not taken a course in the biology sequence emphasizing genetics scored an average of 25%, whereas the remaining six, who had completed the genetics portion of the sequence, scored an average of 50%. This suggested that the instrument can detect increased genetics knowledge. The number of students selecting each alternative answer was also analyzed. Distractors selected by <20% of the students were revisited, and a number of them were reworded or entirely rewritten. The quantitative and qualitative data from the pilot study and the focus groups provided insight into which items to remove or revise. The final version of the GLAI contained 31 items: 14 concepts with 2 items each and 3 concepts with 1 item each.
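The distractor check can be sketched as a frequency count per answer option. This minimal version assumes five options labelled A–E (matching the five-choice format mentioned in the item analysis) and responses stored as the letter each student chose; the function name and the sample responses are hypothetical.

```python
from collections import Counter


def rarely_chosen_distractors(answers, key, options="ABCDE", threshold=0.20):
    """Return the distractors chosen by fewer than `threshold` of respondents.

    `answers` holds the option letter each student chose; `key` is the correct option.
    """
    if not answers:
        return []
    counts = Counter(answers)
    n = len(answers)
    return [opt for opt in options if opt != key and counts[opt] / n < threshold]


# Hypothetical responses from 11 pilot students to one item whose key is "B".
answers = ["A"] * 4 + ["B"] * 3 + ["C"] * 2 + ["D"] + ["E"]
print(rarely_chosen_distractors(answers, key="B"))  # ['C', 'D', 'E']
```

With only 11 respondents, the 20% cutoff flags any distractor chosen by two or fewer students, so the check is coarse at this sample size.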

EVALUATION OF THE GLAI

During the 2006–2007 academic year, three groups of students were recruited to take the online GLAI: undergraduate students in nonmajor introductory biology or genetics courses, undergraduate students in an introductory psychology course, and graduate students in a genetics specialty. For both groups of undergraduates, the GLAI was administered precourse (during the first week of class) and postcourse (during the second-to-last week of class). A total of 395 students from the introductory biology or genetics courses completed the precourse instrument and 330 completed the postcourse instrument. In addition, 113 students from the introductory psychology course completed both the pre- and postcourse instruments, and 23 graduate students from specialized fields of genetics completed the GLAI once. These data were used to evaluate the individual items and the validity and reliability of the GLAI.

Item analysis:

Table 1 reports item difficulty and item discrimination for the 31 GLAI items, based on the precourse results of 395 students in introductory biology and genetics courses. Item difficulty is the proportion of students answering the item correctly (Kaplan and Saccuzzo 1997), so higher values indicate easier items. One convention places the optimal item difficulty halfway between a perfect score (100%) and the probability of answering correctly by guessing (20%, given five choices) (Kaplan and Saccuzzo 1997). By this criterion, the optimal difficulty for GLAI items would be (100% + 20%)/2 = 60%. The items averaged a difficulty index of 43%, ranging from 17 to 80%. Only one question, Q5, appeared exceptionally difficult, with a value of <20%.
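Both quantities reduce to simple proportions. The sketch below assumes responses are scored 1 (correct) or 0 (incorrect); the function names are illustrative, and the sample values for Q5, Q12, and Q14 are taken from Table 1.

```python
def item_difficulty(scores):
    """Proportion of respondents answering an item correctly (scores are 1 or 0)."""
    return sum(scores) / len(scores)


def optimal_difficulty(n_choices):
    """Midpoint between a perfect score (1.0) and the chance rate 1 / n_choices."""
    chance = 1 / n_choices
    return (1.0 + chance) / 2


def below_chance(difficulties, n_choices=5):
    """Items whose proportion correct is lower than expected from random guessing."""
    return [item for item, p in difficulties.items() if p < 1 / n_choices]


print(optimal_difficulty(5))  # 0.6
print(below_chance({"Q5": 0.17, "Q12": 0.80, "Q14": 0.21}))  # ['Q5']
```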

TABLE 1.

Item difficulty and discrimination

| Question | Item difficulty (% correct) | Item discrimination (biserial r) |
|---|---|---|
| Q1 | 52 | 0.27 |
| Q2 | 38 | 0.03 |
| Q3 | 42 | 0.39 |
| Q4 | 29 | 0.51 |
| Q5 | 17 | 0.05 |
| Q6 | 29 | 0.37 |
| Q7 | 55 | 0.56 |
| Q8 | 37 | 0.51 |
| Q9 | 52 | 0.60 |
| Q10 | 68 | 0.59 |
| Q11 | 47 | 0.65 |
| Q12 | 80 | 0.65 |
| Q13 | 25 | 0.20 |
| Q14 | 21 | 0.25 |
| Q15 | 32 | 0.59 |
| Q16 | 48 | 0.48 |
| Q17 | 67 | 0.49 |
| Q18 | 41 | 0.53 |
| Q19 | 23 | 0.53 |
| Q20 | 53 | 0.55 |
| Q21 | 38 | 0.46 |
| Q22 | 28 | 0.38 |
| Q23 | 45 | 0.50 |
| Q24 | 25 | 0.49 |
| Q25 | 23 | 0.37 |
| Q26 | 33 | 0.42 |
| Q27 | 38 | 0.61 |
| Q28 | 47 | 0.49 |
| Q29 | 72 | 0.59 |
| Q30 | 77 | 0.49 |
| Q31 | 36 | 0.44 |
| Average | 43 | 0.45 |

Item discrimination refers to the extent to which success on an item corresponds to success on the inventory as a whole (Nunnally and Bernstein 1994). Item discrimination values were determined by calculating a biserial correlation for each item, an estimate of the Pearson product-moment correlation between the performance on a particular item and the overall performance on the instrument (Henrysson 1971). The values ranged from 0.03 to 0.69 (Table 1). The closer the biserial value of an item is to 1.00, the greater the discriminating power, with values >0.30 being desired (Nunnally and Bernstein 1994; Kaplan and Saccuzzo 1997). Two items, Q2 and Q5, had values ≤0.05, suggesting that they are very poor discriminators.
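The biserial coefficient named above can be computed from an item's 0/1 scores and the students' total scores. This is a minimal sketch using the standard biserial formula, assuming the total score includes the item itself (the text does not say whether a corrected item–total score was used); the function name and the six-student data are hypothetical.

```python
from statistics import NormalDist, mean, pstdev


def biserial(item, totals):
    """Biserial correlation between a 0/1 item and total scores.

    r_bis = (M1 - M0) / s_t * p * q / y, where p is the proportion correct,
    q = 1 - p, M1 and M0 are the mean totals of students answering correctly
    and incorrectly, s_t is the population SD of the totals, and y is the
    standard normal density at the z that splits the distribution into p and q.
    """
    n = len(item)
    p = sum(item) / n
    q = 1 - p
    if p in (0, 1):
        raise ValueError("biserial is undefined when all or no students are correct")
    m1 = mean(t for i, t in zip(item, totals) if i == 1)
    m0 = mean(t for i, t in zip(item, totals) if i == 0)
    s_t = pstdev(totals)
    y = NormalDist().pdf(NormalDist().inv_cdf(p))
    return (m1 - m0) / s_t * p * q / y


# Hypothetical data: six students' scores on one item and on the whole test.
item = [1, 0, 1, 0, 1, 0]
totals = [8, 6, 5, 7, 9, 4]
print(round(biserial(item, totals), 2))  # 0.61
```

Unlike the point-biserial coefficient, the biserial estimate can exceed 1.00 in small or unusual samples, so values near the top of the range deserve a second look.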

Validity:

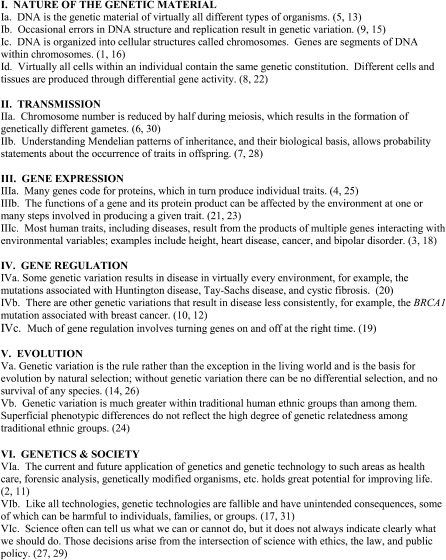

Two indicators of validity have been considered for the GLAI: content validity and discriminant validity. Establishing a table of specifications, which lists the concepts being tested with the corresponding questions, is the best way to ensure high content validity (Black 1999); the table of specifications for the GLAI is given in Figure 2. Additionally, as described above, the items were reviewed by genetics professionals, who answered three questions about each GLAI item: “Does the question test the concept?” received a “no” response 8.4% of the time; “Does the question test genetics literacy, as per our definition?” received a “no” response 7.5% of the time; and “Is this a quality question?” received a “no” response 17.2% of the time. These rates suggest that the reviewers did not simply endorse the authors' judgment but critically assessed the validity of each question. The reviewers' feedback was used both to select items for the final version and to revise them; thus the reviewers' participation in its development supports the content validity of the instrument.

Figure 2.—

Table of specifications. The 17 genetics subconcepts important for genetics literacy and the corresponding question numbers from the final version of the GLAI.

Discriminant validity is an instrument's ability to distinguish among groups that it theoretically should be able to distinguish (Hersen 2004). The precourse GLAI scores of 395 students enrolled in introductory biology or genetics courses, 113 students in a psychology course, and 23 graduate students from specialized fields of genetics were compared. The graduate students averaged 87% on the instrument, whereas each undergraduate group averaged <45%. A one-way analysis of variance with Games–Howell post hoc comparisons indicated a significant difference between the graduate students and each of the two undergraduate groups (Table 2). The Games–Howell test was chosen because it is robust to unequal group variances (Field 2005). The significantly higher scores of the graduate students, together with the lack of a significant difference between the two undergraduate groups, support the discriminant validity of the GLAI, since these results are in line with expectations.

TABLE 2.

Analysis of variance for student groups

| Source | d.f. | Mean square | F |
|---|---|---|---|
| Groups | 2 | 22,475.27 | 100.14 (P < 0.01) |
| Error | 528 | 224.44 | |
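The F statistic in Table 2 is the ratio of the between-group mean square to the within-group (error) mean square. The sketch below computes a one-way ANOVA from plain Python lists of scores and then checks the table's degrees of freedom and F ratio from the reported group sizes and mean squares. The function name is illustrative, and the sketch does not implement the Games–Howell post hoc test.

```python
def one_way_anova(groups):
    """One-way ANOVA for a list of score lists.

    Returns (df_between, df_within, ms_between, ms_within, F).
    """
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [sum(g) / len(g) for g in groups]
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_between, df_within = k - 1, n_total - k
    ms_between = ss_between / df_between
    ms_within = ss_within / df_within
    return df_between, df_within, ms_between, ms_within, ms_between / ms_within


# Consistency check against Table 2 (group sizes 395, 113, and 23).
sizes = [395, 113, 23]
print(len(sizes) - 1, sum(sizes) - len(sizes))  # 2 528
print(round(22475.27 / 224.44, 2))  # 100.14
```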

Reliability:

Two measures of reliability are provided: stability (test–retest reliability) and internal consistency. A test–retest administration of the GLAI was conducted with 112 students in an introductory psychology course who were not enrolled in any class pertaining to biology or genetics, with 7 weeks between the test and the retest. The Pearson correlation between test and retest scores was 0.68. Internal consistency was estimated with Cronbach's α for both the pre- and postcourse scores of the introductory biology/genetics students. Internal consistency refers to the degree to which the items of an instrument measure the same underlying construct, as reflected in how strongly the item scores covary relative to the variance of the total score. The reliability estimates were 0.995 (N = 395) for the precourse and 0.997 (N = 330) for the postcourse administration.
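Both reliability statistics have short closed forms. The sketch below assumes a respondents-by-items matrix of 0/1 scores and computes Cronbach's α as k/(k − 1) × (1 − sum of item variances / total-score variance), plus a test–retest Pearson correlation from paired scores. The function names and toy data are hypothetical.

```python
from statistics import mean, pvariance


def cronbach_alpha(matrix):
    """Cronbach's alpha for a respondents-by-items matrix of item scores.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores)
    """
    k = len(matrix[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    item_vars = [pvariance([row[j] for row in matrix]) for j in range(k)]
    total_var = pvariance([sum(row) for row in matrix])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def pearson_r(x, y):
    """Pearson product-moment correlation between paired scores (e.g., test and retest)."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


# Hypothetical toy data: four students answering three items identically.
consistent = [[1, 1, 1], [0, 0, 0], [1, 1, 1], [0, 0, 0]]
print(round(cronbach_alpha(consistent), 3))  # 1.0
```

Using population variances throughout is a choice; sample variances give the same α because the n/(n − 1) factors cancel in the ratio.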

DISCUSSION

In 2002, a subcommittee of the American Society of Human Genetics published a list of benchmarks of genetics content for nonscience majors (Hott et al. 2002). We have determined which of these concepts are necessary for genetics literacy, developed an assessment tool to test for them, and evaluated the quality of this tool. Few articles on the development of undergraduate science assessments have provided such extensive information on the process and evaluation of such an instrument. However, the report by Anderson et al. (2002) on the development of the Concept Inventory of Natural Selection is comprehensive and will frequently be compared to our study throughout the discussion.

Item difficulty and discrimination values are reported for all questions on the GLAI (Table 1). The items varied from 17 to 80% in difficulty, averaging 43%. In comparison, other similar inventories have reported average item difficulty values of 34% (Hufnagel 2002), 45.5% (Mulford and Robinson 2002), and 46.4% (Anderson et al. 2002). Having items of varying difficulty increases the test's ability to discriminate at all levels of students' knowledge (Nunnally and Bernstein 1994). This variation also suggests that the questions were appropriate for students at the undergraduate nonbiology-major level.

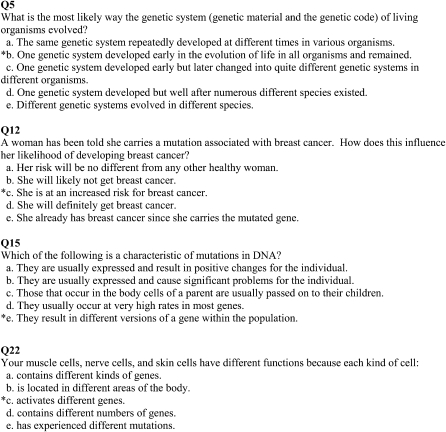

The range in item difficulty scores also implies that students understand some concepts better than others before entering an introductory biology or genetics course. For instance, Q12 (Figure 3) addresses the concept of genetic variations resulting in increased risk of disease and was answered correctly by the majority of students (80%). On the other hand, only 29% correctly answered Q15 (Figure 3), which addresses the concept of mutations. On the basis of constructivist learning theories, learning is a “social process of making sense of experience in terms of existing knowledge” and students must overcome their misconceptions to learn the appropriate concepts (Tobin et al. 1994). Using a tool such as the GLAI precourse can provide instructors with some idea of the knowledge that students possess and may be useful in planning instruction (Hestenes et al. 1992).

Figure 3.—

GLAI sample questions (asterisk indicates the correct answer).

One of the questions, Q5 (Figure 3), appears to be exceptionally difficult, with only 17% of the students answering it correctly, less than the 20% expected from random guessing. Its difficulty is underscored by the fact that only 30% of the genetics graduate students answered it correctly. It also had a poor item discrimination score of 0.05. The question was intended to measure the concept “DNA is the genetic material of virtually all different types of organisms,” but it may be too complicated because it uses the similar terms “genetic system,” “genetic material,” and “genetic code.” “Genetic material” specifically refers to DNA, “genetic code” to the set of rules by which triplet codons in mRNA specify amino acids during translation, and “genetic system” to the overall mechanism of heredity. Another question, Q13 (not shown), was intended to measure the same concept but had only slightly higher difficulty and discrimination scores, 25% and 0.20, respectively. This question also used the terms “genetic code” and “genetic material.” Since the media frequently use the term “genetic code,” sometimes inappropriately (Moran 2007), additional insight into students' perceptions of these terms may help in revising the items and may also have implications for instruction.

The average item discrimination value is 0.45. Over 80% of the questions have item discrimination values >0.30, indicating that the instrument can distinguish between students who understand the concepts and those who do not. The Concept Inventory of Natural Selection reported a similar average discrimination, with 75% of its questions having discrimination values >0.30 (Anderson et al. 2002). In addition to Q5, Q2 had a markedly low discrimination score (0.03), suggesting that it too may need to be replaced or revised before further use of the instrument.
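The summary figures quoted in this paragraph and in Table 1 can be recomputed directly from the table. The values below are transcribed from Table 1; the variable names are illustrative.

```python
from statistics import mean

# Precourse item statistics transcribed from Table 1 (Q1 to Q31, N = 395).
difficulty = [52, 38, 42, 29, 17, 29, 55, 37, 52, 68, 47, 80, 25, 21, 32, 48,
              67, 41, 23, 53, 38, 28, 45, 25, 23, 33, 38, 47, 72, 77, 36]
discrimination = [0.27, 0.03, 0.39, 0.51, 0.05, 0.37, 0.56, 0.51, 0.60, 0.59,
                  0.65, 0.65, 0.20, 0.25, 0.59, 0.48, 0.49, 0.53, 0.53, 0.55,
                  0.46, 0.38, 0.50, 0.49, 0.37, 0.42, 0.61, 0.49, 0.59, 0.49, 0.44]

# Items meeting (or failing) the desired discrimination threshold of 0.30.
above = [f"Q{i}" for i, d in enumerate(discrimination, start=1) if d > 0.30]
weak = [f"Q{i}" for i, d in enumerate(discrimination, start=1) if d <= 0.30]

print(round(mean(difficulty), 1))  # 42.5
print(round(mean(discrimination), 2))  # 0.45
print(f"{len(above)}/{len(discrimination)} items above 0.30")  # 26/31 items above 0.30
print(weak)  # ['Q1', 'Q2', 'Q5', 'Q13', 'Q14']
```

Here 26 of 31 items (about 84%) exceed 0.30, consistent with the "over 80%" figure above.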

Validity and reliability of the instrument were addressed using several methods. The items of the GLAI test specific concepts, as illustrated by the table of specifications (Figure 2), to ensure content validity (Black 1999). This is further supported by the 25 genetics professionals and educators who reviewed proposed questions and provided recommendations. We believe the inclusion of both a wide variety of genetics professionals and a large number of genetics educators strengthened the content validity of the instrument. In addition, the instrument was found to have discriminant validity due to its ability to distinguish among groups with varying genetics knowledge. Two measures of the GLAI's reliability were examined: internal consistency and stability. The internal consistency of the instrument was found to be exceptionally high: both pre- and postcourse values were >0.99. In comparison, the Concept Inventory of Natural Selection reported an internal consistency value of 0.58 (Anderson et al. 2002). The high Cronbach α-values may suggest that the instrument measures the one construct of “genetics literacy.” The measure of stability was calculated as 0.68, marginally lower than the desired 0.70 (Gronlund 1993). One reason for this slightly low reliability could be the relatively long time interval between the test and retest.

The evaluation of the GLAI indicates that it is a reasonable instrument for measuring genetics literacy of students before and after an introductory biology or genetics course. The instrument was developed with the intent for use as a standardized measure of students' knowledge of genetics concepts that are particularly relevant to their lives. We suggest that the GLAI be used in introductory level biology and genetics courses for nonbiology majors, although its applicability may go beyond this particular population. Most notably, the GLAI can be utilized as a standardized measure across instructors, courses, and institutions in coordination with efforts to improve the teaching of genetics, as the Force Concept Inventory has been used in physics education research. In this manner, the instrument should significantly contribute to the advancement of genetics education research.

Only sample questions from the GLAI have been included here to maintain confidentiality (Figure 3) (Hake 2001). Those who are interested in obtaining the full version of the GLAI and/or collaboration in continued research with the instrument should contact the authors.

Acknowledgments

We are extremely grateful to the educators who allowed their courses to be used for this study and the students who agreed to participate. We also thank the many genetics professionals and educators who provided feedback on the instrument items.

References

- Anderson, D. L., K. M. Fisher and G. J. Norman, 2002. Development and evaluation of the conceptual inventory of natural selection. J. Res. Sci. Teach. 39 952–978.

- Andrews, L. B., J. E. Fullarton, N. A. Holtzman and A. G. Motulsky, 1994. Assessing Genetic Risks: Implications for Health and Social Policy. National Academy Press, Washington, DC.

- Bates, B. R., 2005. Warranted concerns, warranted outlooks: a focus group study of public understandings of genetic research. Soc. Sci. Med. 60 331–344.

- Black, T. R., 1999. Doing Quantitative Research in the Social Sciences. Sage Publications, Thousand Oaks, CA.

- BouJaoude, S. B., 1992. The relationship between students' learning strategies and the change in their misunderstandings during a high school chemistry course. J. Res. Sci. Teach. 29 687–699.

- Campbell, N. A., and J. B. Reece, 2001. Biology. Pearson, Benjamin/Cummings, San Francisco.

- Chattopadhyay, A., 2005. Understanding of genetic information in higher secondary students in northeast India and the implications for genetics education. Cell Biol. Educ. 4 97–104.

- Cummings, M. R., 2003. Human Heredity: Principles and Issues, Ed. 6. Brooks/Cole, Pacific Grove, CA.

- Cummings, M. R., 2006. Human Heredity: Principles and Issues, Ed. 7. Brooks/Cole, Pacific Grove, CA.

- Fagen, A. P., 2003. Assessing and Enhancing the Introductory Science Course in Physics and Biology: Peer Instruction, Classroom Demonstrations, and Genetics Vocabulary. Harvard University, Cambridge, MA.

- Field, A., 2005. Discovering Statistics Using SPSS. Sage Publications, Thousand Oaks, CA.

- Grinell, S., 1993. Reaching the nonschool public about genetics. Am. J. Hum. Genet. 52 233–234.

- Gronlund, N. E., 1993. How to Make Achievement Tests and Assessments. Allyn & Bacon, Boston.

- Hake, R. R., 2001. Suggestions for administering and reporting pre/post diagnostic tests. http://www.physics.indiana.edu/∼hake/TestingSuggestions051801.pdf.

- Henrysson, S., 1971. Gathering, analyzing, and using data on test items, pp. 131–159 in Educational Measurement, edited by R. L. Thorndike. American Council on Education, Washington, DC.

- Hersen, M., 2004. Comprehensive Handbook of Psychological Assessment. John Wiley & Sons, Hoboken, NJ.

- Hestenes, D., M. Wells and G. Swackhamer, 1992. Force concept inventory. Phys. Teach. 30 141–157.

- Hott, A. M., C. A. Huether, J. D. McInerney, C. Christianson, R. Fowler et al., 2002. Bioscience 52 1024–1035.

- Hufnagel, B., 2002. Development of the astronomy diagnostic test. Astron. Educ. Rev. 1 47–51.

- Human Genetics Commission, 2001. Public Attitudes to Human Genetic Information: People's Panel Quantitative Study Conducted for the Human Genetics Commission. Human Genetics Commission, London. http://www.hgc.gov.uk.

- Hurtado, S., A. W. Astin and E. L. Dey, 1991. Varieties of general education programs: an empirically based taxonomy. J. Gen. Educ. 40 133–162.

- Jennings, B., 2004. Genetic literacy and citizenship: possibilities for deliberative democratic policymaking in science and medicine. Good Soc. J. 13 38–44.

- Kaplan, R. M., and D. P. Saccuzzo, 1997. Psychological Testing: Principles, Applications, and Issues. Brooks/Cole, Pacific Grove, CA.

- Kindfield, A. C. H., 1994. Assessing understanding of biological processes: elucidating students' models of meiosis. Am. Biol. Teach. 56 367–371.

- Klymkowsky, M. W., and K. Garvin-Doxas, 2007. Bioliteracy: building the biology concept inventory. http://bioliteracy.net.

- Klymkowsky, M. W., K. Garvin-Doxas and M. Zeilik, 2003. Bioliteracy and teaching efficacy: what biologists can learn from physicists. Cell Biol. Educ. 2 155–161.

- Kolstø, S. D., 2001. Scientific literacy for citizenship: tools for dealing with the science dimension of controversial socioscientific issues. Sci. Educ. 85 291–310.

- Lanie, A. D., T. E. Jayaratne, J. P. Sheldon, S. L. R. Kardia, E. S. Anderson et al., 2004. Exploring the public understanding of basic genetic concepts. J. Genet. Couns. 13 305–320.

- Lewis, J., J. Leach and C. Wood-Robinson, 2000a. Chromosomes: the missing link—young people's understanding of mitosis, meiosis, and fertilisation. J. Biol. Educ. 34 189–199.

- Lewis, J., J. Leach and C. Wood-Robinson, 2000b. What's in a cell? Young people's understanding of the genetic relationship between cells, within an individual. J. Biol. Educ. 34 129–132.

- Lewis, J., J. Leach and C. Wood-Robinson, 2000c. All in the genes? Young people's understanding of the nature of genes. J. Biol. Educ. 34 74–79.

- Lewis, R., 2001. Human Genetics: Concepts and Applications, Ed. 4. McGraw Hill, Boston.

- Lewis, R., 2003. Human Genetics: Concepts and Applications, Ed. 5. McGraw Hill, Boston.

- Lewis, R., 2005. Human Genetics: Concepts and Applications, Ed. 6. McGraw Hill, Boston.

- Marbach-Ad, G., 2001. Attempting to break the code in student comprehension of genetic concepts. J. Biol. Educ. 35 183–189.

- Marbach-Ad, G., and R. Stavy, 2000. Students' cellular and molecular explanations of genetic phenomena. J. Biol. Educ. 34 200–205.

- McInerney, J. D., 2002. Education in a genomic world. J. Med. Philos. 27 369.

- Miller, J. D., 1998. The measurement of civic scientific literacy. Public Underst. Sci. 7 203–223.

- Moran, L. A., 2007. Sandwalk: strolling with a skeptical biochemist. http://sandwalk.blogspot.com/2007/02/wrong-version-of-genetic-code.html.

- Mulford, D. R., and W. R. Robinson, 2002. An inventory for alternate conceptions among first-semester general chemistry students. J. Chem. Educ. 79 739–744.

- National Center for Education Statistics, 2000. The nation's report card: science 2000. http://nces.ed.gov/nationsreportcard/itmrls/searchresults.asp.

- National Center for Education Statistics, 2005a. Degrees conferred by degree-granting institutions, by control of institution, level of degree, and discipline division: 2003–04. http://nces.ed.gov/programs/digest/d05/tables/dt05_254.asp?referer=list.

- National Center for Education Statistics, 2005b. Degrees and other formal awards conferred. http://nces.ed.gov/programs/digest/d05/tables/dt05_249.asp.

- National Research Council, 1996. National Science Education Standards. National Academy Press, Washington, DC.

- Nunnally, J. C., and I. H. Bernstein, 1994. Psychometric Theory. McGraw-Hill, New York.

- Petty, E. M., S. R. Kardia, R. Mahalingham, C. A. Pfeffer, S. L. Saksewski et al., 2000. Public understanding of genes and genetics: implications for the utilization of genetic services and technology. Am. J. Hum. Genet. 67 253.

- Sadler, T. D., and D. L. Zeidler, 2004. The morality of socioscientific issues: construal and resolution of genetic engineering dilemmas. Sci. Educ. 88 4–27.

- Sadler, T. D., and D. L. Zeidler, 2005. The significance of content knowledge for informal reasoning regarding socioscientific issues: applying genetics knowledge to genetic engineering issues. Sci. Educ. 89 71–93.

- Stewart, J., B. Hafner and M. Dale, 1990. Students' alternate views of meiosis. Am. Biol. Teach. 52 228–232.

- Tobin, K., D. J. Tippins and A. J. Gallard, 1994. Research on instructional strategies for teaching science, pp. 177–210 in Handbook of Research on Science Teaching and Learning, edited by D. L. Gabel. Macmillan, New York.

- Treagust, D., 1988. Development and use of a diagnostic test to evaluate students' misconceptions in science. Int. J. Sci. Educ. 10 159–169.

- Venville, G. J., and D. F. Treagust, 1998. Exploring conceptual change in genetics using a multidimensional interpretive framework. J. Res. Sci. Teach. 35 1031–1055.

- Weisberg, H. F., J. A. Krosnick and B. D. Bowen, 1996. An Introduction to Survey Research, Polling, and Data Analysis. Sage Publications, Thousand Oaks, CA.

- Wood-Robinson, C., 2000. Young people's understanding of the nature of genetic information in the cells of an organism. J. Biol. Educ. 35 29–36.

- Zohar, A., and F. Nemet, 2002. Fostering students' knowledge and argumentation skills through dilemmas in human genetics. J. Res. Sci. Teach. 39 35–62.