Abstract

With the introduction of minimally invasive surgery (MIS), it became necessary to develop training methods to learn skills outside the operating room. Several training simulators have become commercially available, but fundamental research into the requirements for effective and efficient training in MIS is still lacking. Three aspects of developing a training program are investigated here: what should be trained, how it should be trained, and how to assess the results of training. In addition, studies are presented that have investigated the role of force feedback in surgical simulators. Training should be adapted to the level of behavior: skill-based, rule-based, or knowledge-based. These levels can be used to design and structure a training program. Extra motivation for training can be created by assessment. During MIS, force feedback is reduced owing to friction in the laparoscopic instruments and within the trocar. The friction characteristics vary largely among instruments and trocars. When force feedback is incorporated into training, it should include the large variation in force feedback properties as well. Training different levels of behavior requires different training methods. Although force feedback is reduced during MIS, it is needed for tissue manipulation, and therefore force application should be trained as well.

The tendency to promote minimally invasive surgery for the benefit of the patient and to decrease health care costs requires that more surgeons be well trained in minimally invasive surgery. More efficient and effective training facilities are therefore a real medical need. Traditionally, residents are trained in the classic apprenticeship format with hands-on training in the operating room (OR). However, the specific psychomotor abilities and skills needed for minimally invasive surgery are not easily obtained in the OR because of the complexity of the environment. Furthermore, the use of special instruments and the confined operating space during MIS make basic skills training for this type of surgery highly suitable for training outside the OR.

Currently there are several training methods available. A number of studies already exist that give an overview of the existing surgical simulator systems [1–5], which can roughly be categorized as box, hybrid, and virtual reality systems. An overview of the existing hardware interfaces was given by Chmarra et al. [6], and a description of the tasks used in these simulators was provided by Carter et al. [7]. Despite the number of simulators, currently only basic skills can be trained; therefore, development of training systems is still in its infancy. This study focuses on some fundamental aspects in relation to the development of training systems.

It should be recognized that different behavioral characteristics must be learned for the different training methods. Therefore, the various levels of behavior often used to evaluate performance can be used as a basis for classifying training methods [8]. Furthermore, there is a need for objective assessment methods, which vary depending on the training method. Finally, an important issue is the role of force feedback in simulators. In several places in this article some extra attention is given to the research performed in our department of Biomechanical Engineering at the Delft University of Technology.

Training Methods and Levels of Human Behavior

Two major aspects have to be considered for the development of training simulators. First, the training should be effective (i.e., the objectives have to be met); and, second, the training should be efficient, which means that the cost, and thus the time, should be minimized [9]. To develop training methods that are effective and efficient, it is important to determine the behavioral level at which the training is to be achieved. The training methods are therefore classified at the level of the surgeon’s behavior, which can be devised using Rasmussen’s model of human behavior [10–13]. Rasmussen’s model distinguishes three levels of behavior [8]: skill-based, rule-based, and knowledge-based levels. The various training methods are described here according to these three behavior levels (Table 1).

Table 1.

Training methods

| Level of human behavior | Training methods |
|---|---|
| Skill-based behavior | Pelvi-trainers, VR trainers |
| Rule-based behavior | Courses, literature, internet, VR trainers |
| Knowledge-based behavior | Operating room, animal experiment, future simulators |

Skill-Based Behavior

At this level, human behavior takes place without conscious control, for example when moving laparoscopic instruments subject to the fulcrum effect. Tasks are executed as smooth, automated, and highly integrated patterns of behavior. At the skill-based behavior (SBB) level, training methods are developed related to hand-eye coordination problems, the limited degrees of freedom of the instruments, poor ergonomics, and the lack of force feedback. Because of these limitations, intensive training is required at the SBB level [13]. A large part of training at this level can be performed on low-fidelity pelvi-trainers or virtual reality (VR) simulators.

A pelvi-trainer is basically just a box mimicking the abdomen through which the endoscopic instruments are inserted. The instruments used are similar to the instruments employed during MIS in patients. The contents of the box vary from simple objects to animal organs and synthetically produced organs [14, 15]. Force feedback is naturally obtained in a trainer that uses physical (tissue) materials with which to interact. The use of physical materials allows measurement of instrument-tissue interactions that can be used to optimize the training of tissue handling. Unfortunately, basic tissue handling skills training is difficult to implement in box trainers because the use of (dead animal) tissue has many limitations, and the properties may differ from living tissue. Training in pelvi-trainers is currently not often used, mainly because the pelvi-trainers do not give information about the level of task performance.

In VR simulators, abstract environments with objects or tissues and organs are simulated with computer models. Some of the simulators are equipped with force feedback that is generated by servo motors that augment the instruments [4]. The systems with force feedback are costly, and the level of realism is rather limited. The performance feedback properties of VR simulators are stimulating their use, and automatic supervision of training sessions is easily implemented. An example of such a trainer developed at the Delft University of Technology is the Simendo, which has been commercialized by DelltaTech (www.simendo.eu) (Fig. 1). A mobile, plug-and-play low-fidelity system based on a laptop or desktop system, Simendo focuses on training hand-eye coordination. The goal was to develop an affordable basic skills trainer. Its price is about that of a sophisticated laptop [16].

Fig. 1.

Simendo virtual reality simulator for basic skills training

Rule-Based Behavior

Task execution at the rule-based level is controlled by rules or procedures. An example of tasks at this level is the operation protocol, determining the sequence of steps to be performed. For example, during a cholecystectomy the cystic artery and cystic duct should be isolated and identified (Calot’s triangle) before structures are clipped. The rules on the rule-based behavior (RBB) level should be trained extensively to reduce procedural mistakes. Training at this level can be done by attending courses, getting information from the literature or the Internet, or with the use of VR trainers. With the use of VR trainers, training behavior on the RBB level can be combined with training at the SBB level. However, the use of VR simulators requires that VR protocols are representative of the protocols used in the operating theater. Currently, a standard protocol is not available for most procedures. The lack of standard procedures inhibits the implementation of RBB in VR simulators.

Knowledge-Based Behavior

During unfamiliar situations for which no rules are available, the performance must switch to a higher conceptual level. For example, complex mental processes are required to cope with unexpected anatomic and pathologic variations of the patient or with unpredicted events, such as internal bleeding and damage to the tissue, power failure, or instrument breakdown. Avoiding mistakes at this level requires many decisions and much knowledge and is regarded as the most difficult part of training. A decrease in mistakes at the knowledge-based behavior (KBB) level is difficult to realize, mainly because of limited knowledge about unexpected events. To overcome this difficulty, interactive VR simulators need to be developed in which the subjects are confronted with unexpected complications and where quick decisions have to be made about the next step in the procedure. Such a crisis trainer can be used to train residents to make the right decisions while operating under stress. Presently, the only available methods to decrease the number of mistakes at the KBB level are training on animal models and hands-on training in the operating theater. The basic objectives of hands-on training are mostly not well formulated. Because of the complexity of the tasks at the KBB level, training at this level is difficult to implement in simulators.

Training on the KBB level often involves complex tasks. Novices learn complex tasks differently from the way they do simple tasks [17, 18]. It is sometimes assumed that learning complex sets of interrelated tasks is achievable as “the sum of parts” by sequencing a string of simplified tasks until a complex task is captured. Van Merrienboer et al. showed that complex learning involves achieving integrated sets of learning goals [19]. The whole of the task is more than the sum of its parts because it also includes the ability to coordinate and integrate those parts. This relation is not always recognized. Hence, a well designed training program does not aim at acquiring each of these skills separately but teaches the trainee to acquire the ability to use all of the skills in a coordinated and integrated fashion [17]. Van Merrienboer et al. assumed that four interrelated components are essential for training complex cognitive skills: learning the tasks, supportive information, just-in-time information, and part-task practice [19].

Ideally, the tasks confront the trainees with all constituent skills that make up the whole complex skill [17]. To prevent overloading the trainees, simple constituent skills have to be mastered first. For example, to stretch tissue during dissection, one must first locate the instrument at the correct place, then grasp the tissue in question, and finally stretch in the correct direction with the right force. In a well designed training program, all these tasks are first trained separately and combined thereafter. Supportive information provides the bridge between what is already known and what the trainee should get out of it. Demonstration is a useful form of supportive information. Just-in-time information provides trainees with knowledge at the moment it is needed (e.g., corrective feedback required for wrongly applied rules or procedures). Part-task practice is the training of skills separately in dedicated learning tasks. Many skills have to be practiced many times before the required level of automaticity is obtained. As a consequence, certain tasks can be on the KBB or RBB level at the beginning of training and become a task on the SBB level after training.

Assessment Methods

Assessing the outcome of training is an essential part of the learning process. The purpose in the training outside the OR is to avoid errors, especially at the SBB and RBB levels. Although many training facilities outside the OR are presently available, they are only scarcely used. At this moment, many trainers are classified as being boring, and therefore one of the largest challenges in surgical training is the development of systems in which residents are motivated to train away from patients. Assessment is a strong motivating factor for learners. Assessment can provide a visible result, which motivates the effort to improve but makes it also possible to provide specific feedback [20]. Not reaching certain requirements should have consequences for the resident. Currently, surgical curricula lack structural assessment of residents.

There are various reliable and valid assessment tools available for measuring the various components of surgical competence. They can be divided into cognitive, clinical, and technical assessment tools [21]. Different assessment methods are required for the different levels of human behavior. At the SBB level, a simulator is an example of a technical assessment tool. The performance feedback properties of VR simulators are stimulating their use, and automatic supervision of training sessions is easily implemented. Performance in the pelvi-trainer has been assessed using an electromagnetic hand-motion tracking device [22]. At the Delft University of Technology we developed the TrEndo, a tracking system based on optical sensors able to track instruments’ movements (Fig. 2) [23, 24]. Translating instrument movements into effective feedback, however, is not an easy task. A number of measurements are needed to get a fundamental grip on which parameters are important for assessing task performance. Position sensors to track instruments return information on, for example, the number of actions, time per action, and total trajectory length. Currently, we are investigating whether an often used concept, the shortest path length concept, is valid [25].

Fig. 2.

TrEndo tracking system to measure laparoscopic instrument movements in a box trainer
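The trajectory-based measures mentioned above can be illustrated with a minimal sketch. It assumes that instrument-tip positions are available as a list of (x, y, z) samples and that the "shortest path" is taken to be the straight line between the first and last sample; both are assumptions made for illustration only, and the function names `path_length` and `path_efficiency` are hypothetical rather than part of the TrEndo or any other tracking system.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]  # assumed (x, y, z) tip position


def path_length(points: Sequence[Point]) -> float:
    """Total trajectory length: sum of distances between consecutive samples."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


def path_efficiency(points: Sequence[Point]) -> float:
    """Straight-line distance from first to last sample, divided by the
    travelled path length. Returns 1.0 when the instrument did not move."""
    if len(points) < 2:
        return 1.0
    travelled = path_length(points)
    if travelled == 0:
        return 1.0
    return math.dist(points[0], points[-1]) / travelled


# Hypothetical three-sample trajectory (arbitrary length units).
trajectory = [(0.0, 0.0, 0.0), (3.0, 0.0, 0.0), (3.0, 4.0, 0.0)]
print(path_length(trajectory))
print(path_efficiency(trajectory))
```

A ratio close to 1 would indicate a nearly straight movement. Whether such a ratio is a valid indicator of skill is exactly the open question raised above, so the sketch shows only how the quantity could be computed, not that it should be used for scoring.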

Once the operating protocols are well defined at the RBB level, the effectiveness of the training can be evaluated by written/oral examinations. During practice, these can be evaluated with time-action analysis and learning curves [26, 27]. At the KBB level, the effectiveness of the training can hardly be evaluated objectively and has to be assessed by observations in the operating theater (e.g., using the Objective Structured Assessment of Technical Skills, or OSATS) [28].

To motivate residents to train their SBB and RBB, their task performance must be translated objectively into a score that should be high enough to continue training on, for example, the KBB level in the OR. Standard tests are, however, lacking. Furthermore, requiring residents to pass tests as a prerequisite for training on animals and patients may be a highly motivating factor for exploiting these training facilities. At the moment, this regulation is also lacking.

Kirkpatrick’s four-level model was developed to assess training effectiveness [17, 29, 30]. In this model, evaluation begins at the lowest level; and information from each prior level serves as a basis for the next level’s evaluation. The levels are as follows.

Level 1—reactions: measure how trainees react to the training program (face validity)

Level 2—learning: assess the extent to which trainees have made progress in performance (construct validity)

Level 3—behavior/transfer: measure the change in behavior due to the training program (predictive validity)

Level 4—results: assess training in terms of clinical results (e.g., reduction in the number of complications)

Evaluation at level 4 is increasingly time-consuming and difficult because the link between the effect of the proposed training and its impact on patient care is often difficult to identify [30].

Role of Force Feedback

Force feedback is naturally obtained in box trainers that use standard laparoscopic instruments and physical materials with which to interact. Some of the VR simulators are equipped with force feedback [4]. Current technology, however, is not able to provide highly realistic force feedback in virtual reality, and accurate modeling of soft tissue properties is also not yet possible; therefore, the level of realism is rather limited. The mathematic modeling of nonhomogeneous, hyperelastic materials is not yet solved. Furthermore, the diversity of dynamic soft tissue models and the lack of programs to generate randomly realistic disturbances makes the development of high-fidelity virtual reality trainers with force feedback difficult, complex, and costly [10].

Little is known about the exact role of haptic feedback during minimally invasive surgery. The surgeon has no direct contact with the tissue but manipulates the tissue via long laparoscopic instruments. Owing to the interposition of instruments, haptic feedback is reduced to force and proprioceptive feedback, with tactile feedback being lost. Moreover, force feedback is limited because the instruments used have friction in the hinges and in the trocar.

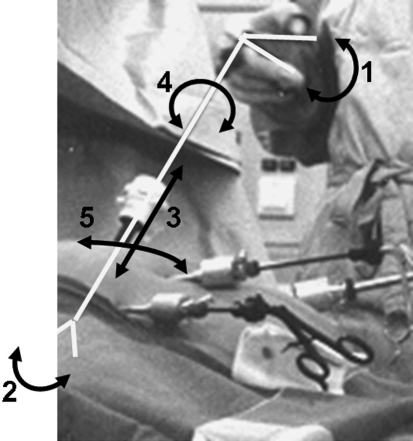

To investigate the role of reduced force feedback during surgery, and therefore also during training, forces must be measured. In our group, a number of studies have been performed to explore the reduction of force feedback in more detail [31]. Force transmission is distorted for several reasons (Fig. 3). Sjoerdsma et al. determined the mechanical transmission characteristics of four different graspers by measuring the ratio between the forces in the jaw and the forces in the handgrip at different opening angles (1 and 2 in Fig. 3). It was found that the mechanical transfer functions were highly nonconstant, differing greatly among the graspers. Furthermore, the transmission function of the grasping forces to the hand was dependent on the opening angle of the handle, and the mechanical efficiency was less than 50% [32]. Den Boer et al. [33] evaluated the feedback quality of commercially available reusable and disposable dissectors. Subjects were asked to feel a simulated arterial pulse in a tube, and a sensory threshold measured by a psychophysical method was determined. It was found that the sensory threshold is highly dependent on the mechanical efficiency of the instruments. Heijnsdijk et al. [34] showed that when the mechanical efficiency was improved the performance during tissue-holding tasks was not. Tasks to determine tissue properties, however, were performed better with instruments having a high mechanical efficiency, indicating that the impact of impaired force feedback depends on the task being performed.

Fig. 3.

Interposition of instruments between the surgeon’s hands and the tissue. Force feedback is reduced because of friction in the instruments (1, 2), friction in the trocar (3, 4), and the limited flexibility of the abdominal wall (5)

The force feedback of the pulling force is impaired as well. The movements are characterized by in-out movements in the trocar (translation along the instrument axis) and rotation around the incision point (3 and 4 in Fig. 3). The trocar has a valve to limit gas leakage, but this valve causes friction with the inward and outward movements of the instruments. Van den Dobbelsteen [35] measured the friction in a number of commercially available trocars. This study showed also that friction between the laparoscopic instrument and the trocar differs extensively across various trocar designs. Rotational movements are hindered by the stiffness of the abdominal wall (5 in Fig. 3). No studies on impaired force feedback relating to the flexibility of the abdominal wall were found in the literature. As a consequence of the limited and varying force feedback, the perception of pinching and pulling forces is inhibited.

When incorporating force feedback into training systems, the effect of distorted force feedback should also be incorporated into the design. Currently, there are no virtual reality trainers that incorporate the large variety of force feedback characteristics. Furthermore, it is currently unknown whether a reduced quality of force feedback affects appropriate force application. Hence, the question of how important it is to have force feedback in trainers still needs to be answered. Force sensors can be added to measure pulling and pinching forces to provide information about safe grasping of tissue (not too-high force) without slip (not too-low force) [36, 37]. Studies have been performed to determine their relation with the performance level, but more research is needed [10, 38, 39]. We tested whether it is possible to train the amount of force applied [10]. In a box trainer, a force measurement system measured the pulling force and the direction of the force. During training, the deviation from the desired force was presented on a monitor using error bars. The performance of the subjects was assessed four times during the training; it showed that the subjects receiving feedback about their performance were more capable of reproducing the desired force than subjects who did not receive performance feedback during training [10]. These results indicate that applied force levels can be trained and therefore suggest that force feedback plays an important role in training.
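The two ideas in this paragraph, a safe force window (high enough to avoid slip, low enough to avoid damage) and feedback on the deviation from a desired force, can be sketched as follows. This is a minimal illustration under stated assumptions, not the measurement system used in the study: the names `ForceWindow`, `classify_force`, and `mean_abs_error` are hypothetical, and all numeric values are made up and carry no clinical meaning.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class ForceWindow:
    """Assumed safe range for a pulling or pinching force, in newtons."""
    low: float   # below this value the tissue may slip
    high: float  # above this value the tissue may be damaged


def classify_force(force: float, window: ForceWindow) -> str:
    """Label a single force sample relative to the safe window."""
    if force < window.low:
        return "too low"
    if force > window.high:
        return "too high"
    return "ok"


def mean_abs_error(samples: Sequence[float], target: float) -> float:
    """Average absolute deviation of the measured forces from the desired force."""
    if not samples:
        raise ValueError("at least one force sample is required")
    return sum(abs(f - target) for f in samples) / len(samples)


# Hypothetical values, chosen only to exercise the functions.
window = ForceWindow(low=1.0, high=3.0)
measured = [1.5, 2.5, 2.0]
for f in measured:
    # The signed deviation is what an error bar on a monitor could display.
    print(f, classify_force(f, window), f - 2.0)
print(mean_abs_error(measured, target=2.0))
```

In a trainer, the signed deviation would be refreshed continuously during the task, whereas the mean absolute error is one possible summary per assessment session; other summaries would be equally reasonable.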

Discussion

To avoid errors in the operating room, training methods for the levels of behavior are needed, with their own objectives, means, and needs [13]. Rasmussen’s model can be used to identify the requirements for training at each behavior level. The purpose of training surgeons outside the OR is to minimize accidents and increase patient safety by avoiding errors, especially at the SBB and RBB levels [40]. In aviation, the introduction of effective training methods for pilots reduced the number of deadly errors at the SBB and RBB levels practically to zero, whereas in medicine the value is estimated to be about 3% to 4% [41], indicating that more effort is still needed to improve safety.

The training method that is most effective depends on the tasks to be trained. The problems in MIS training are fundamental. Unfortunately, there is little basic knowledge about what to train [10–12]. To acquire knowledge about what to train, verbal communication was analyzed during training [42]. A structural analysis of the contents showed that a high percentage of anatomy and pathology explanations was found, followed by the location of the instrument, the direction in which the tissue should be manipulated, and instrument handling. The evaluation of the training methodology is also not standardized, which means that training methods cannot be compared. Training methods and protocols could be developed and evaluation scores determined keeping in mind the concept of avoiding errors at the SBB, RBB, and KBB levels. Until now, it is mainly basic training on the SBB level that has been evaluated. Standard training methods outside the OR to avoid errors at the RBB and KBB levels do not yet exist. Development of trainers at the KBB level is much more difficult because of the lack of knowledge about these errors and the high level of realism that is required.

Manipulation and dissection of tissue is one of the most difficult tasks to perform during surgery. Examples of tissue manipulation during MIS are dissection; blunt dissection; coagulation; and pulling, stretching, and stripping tissue [10]. There is little information available about the manipulation of soft tissue; no mechanical/mathematical models have been developed to predict tissue behavior during the manipulation of tissue; therefore, virtual reality systems do not present realistic tissue manipulation tasks. Master surgeons acknowledge that tissue-handling skills are not well trained with the currently available training methods and that these skills are still acquired while operating on real patients [10, 43, 44].

Training is required not only for resident surgeons. Den Boer et al. showed that there are many problems related to the instrumentation, especially that used during dissection (e.g., coagulators and dissectors) [45]. Verdaasdonk et al. reported a large number of incidents related to the use of a laparoscopic tower [46]. These studies showed also that expert surgeons and the other persons in the OR need extra training to use the instrumentation in an optimal way.

The role of force feedback in training remains to be revealed. For example, force feedback is not required for training eye-hand coordination, but force feedback is probably crucial for training subtle tissue handling tasks. Consequently, the feedback requirements depend on the tasks for which a training device is designed. The use of normal instruments and physical materials in a pelvi-trainer then seems beneficial because realistic force feedback properties are difficult to simulate in virtual reality. The automatic performance assessment is, on the other hand, an advantage of current virtual reality systems. Pelvi-trainers that could track the instruments may combine the advantages of both. Identification of task performance from position (and force) measurements is a subject that requires further research in this respect.

Conclusions

Training systems can be developed and evaluated according to the level of behavior that is trained. Accurate force feedback is required during subtle tissue manipulation, and more research is still needed to reveal the importance of force feedback during training.

References

1. Dunkin B, Adrales GL, Apelgren K, et al. (2007) Surgical simulation: a current review. Surg Endosc 21:357–366
2. Halvorsen FH, Elle OJ, Fosse E (2005) Simulators in surgery. Minim Invas Ther Allied Technol 14:214–223
3. Sutherland LM, Middleton PF, Anthony A, et al. (2006) Surgical simulation, a systematic review. Ann Surg 243:291–300
4. Schijven M, Jakimowicz J (2003) Virtual reality surgical laparoscopic simulators: how to choose. Surg Endosc 17:1943–1950
5. Aggarwal R, Moorthy K, Darzi A (2004) Laparoscopic skills training and assessment. Br J Surg 91:1549–1558
6. Chmarra MK, Grimbergen CA, Dankelman J (2007) Systems for tracking minimally invasive surgical instruments. Minim Invas Ther Allied Technol (in press)
7. Carter FJ, Schijven MP, Aggarwal R, et al. (2005) Consensus guidelines for validation of virtual reality surgical simulators. Surg Endosc 19:1521–1532
8. Rasmussen J (1983) Skills, rules and knowledge; signals, signs and symbols, and other distinctions in human performance models. IEEE Trans Syst Man Cybern B Cybern 13:257–266
9. Cuschieri A, Francis N, Crosby J, et al. (2001) What do master surgeons think of surgical competence and revalidation? Am J Surg 182:110–116
10. Dankelman J (2004) Surgical robots and other training tools in minimally invasive surgery. Proc IEEE Syst Man Cybern B Cybern 3:2459–2464
11. Stassen HG, Dankelman J, Grimbergen CA, et al. (2001) Man-machine aspects of minimally invasive surgery. Annu Rev Control 25:111–122
12. Wentink M, Stassen LPS, Alwayn I, et al. (2003) Rasmussen’s model of human behavior in laparoscopy training. Surg Endosc 17:1241–1246
13. Dankelman J, Wentink M, Stassen HG (2003) Human reliability and training in minimally invasive surgery. Minim Invas Ther Allied Technol 12:1229–1235
14. Derossis AM, Fried GM, Abrahamowicz M, et al. (1998) Development of a model for training and evaluation of laparoscopic skills. Am J Surg 175:482–487
15. Waseda M, Inaki N, Mailaender L, et al. (2005) An innovative trainer for surgical procedures using animal organs. Minim Invas Ther Allied Technol 14:262–266
16. Verdaasdonk EGG, Stassen LPS, Monteny LJ, et al. (2006) Validation of a new basic virtual reality simulator for training of basic endoscopic skills—the Simendo. Surg Endosc 20:511–518
17. Dankelman J, Chmarra MK, Verdaasdonk EGG, et al. (2005) Fundamental aspects of learning minimally invasive surgical skills—review. Minim Invas Ther Allied Technol 14:247–256
18. Ackerman PL (1990) A correlation analysis of skill specificity: learning, abilities, and individual differences. J Exp Psychol Learn 16:883–901
19. Van Merrienboer JG, Clark RE, de Croock MBM (2002) Blueprints for complex learning: the 4C/ID model. Educ Techn Res Dev 50(2):39–64
20. Mahmood T, Darzi A (2004) The learning curve for colonoscopy simulator in the absence of any feedback: no feedback, no learning. Surg Endosc 18:1224–1230
21. Satava RM, Gallagher AG, Pellegrini CA (2003) Surgical competence and surgical proficiency: definitions, taxonomy, and metrics. J Am Coll Surg 196:933–937
22. Hance J, Aggarwal R, Moorthy K, et al. (2005) Assessment of psychomotor skills acquisition during laparoscopic cholecystectomy courses. Am J Surg 190:507–511
23. Chmarra MK, Bakker NH, Grimbergen CA, et al. (2006) TrEndo, a device for tracking minimally invasive surgical instruments in training setups. Sensors Actuators A 126:328–334
24. Chmarra MK, Kolkman W, Jansen FW, et al. (2007) The influence of experience and camera holding on laparoscopic instrument movements measured with the TrEndo tracking system. Surg Endosc May 4; [Epub ahead of print]
25. Chmarra MK, Jansen FW, Wentink M, et al. (2006) Performance during distinct phases in laparoscopic surgery. Proc EAES Berlin 112
26. Sjoerdsma W (1998) Surgeons at work. time and action analysis of the laparoscopic surgical process. Delft: PhD thesis, DUT, ISBN 90-9012069-6, pp 1–103
27. den Boer K (2001) Surgical task performance. assessment using time-action analysis. Delft: PhD thesis, DUT; ISBN 90-370-0189-0, pp 1–134
28. Martin JA, Regehr G, Reznick R, et al. (1997) Objective structured assessment of technical skill (OSATS) for surgical residents. Br J Surg 84:273–278
29. Kirkpatrick DL (1998) Evaluating Training Programs: The Four Levels. San Francisco, Berrett-Koehler, ISBN 1-57675-042-6
30. Hutchinson L (1999) Evaluating and researching the effectiveness of educational interventions. BMJ 318:1267–1269
31. Dankelman J, Stassen HG, Grimbergen CA, editors (2005) Engineering for Patient Safety: Issues in Minimally Invasive Procedures. Hillside, NJ, Lawrence Erlbaum Associates, pp 1–300 ISBN 0-8058-4905-X
32. Sjoerdsma W, Herder JL, Horward MJ, et al. (1997) Force transmission of laparoscopic grasping instruments. Minim Invas Ther Allied Technol 6:274–278
33. Den Boer KT, Herder JL, Sjoerdsma W, et al. (1999) Sensitivity of laparoscopic dissectors: what can you feel? Surg Endosc 13:869–873
34. Heijnsdijk EAM, Pasdeloup A, van der Pijl AJ, et al. (2004) The influence of force feedback and visual feedback in grasping tissue laparoscopically. Surg Endosc 18:980–985
35. Van den Dobbelsteen JJ, Schooleman A, Dankelman J (2007) Friction dynamics of trocars. Surg Endosc Dec 13; [Epub ahead of print]
36. de Visser H, Heijnsdijk EAM, Herder JL, et al. (2002) Forces and displacements in colon surgery. Surg Endosc 16:1426–1430
37. Heijnsdijk EAM, de Visser H, Dankelman J, et al. (2003) Inter- and intra-individual variability of perforation forces of human and pig bowel tissue. Surg Endosc 17:1923–1926
38. Smith SG, Torkington J, Brown TJ, et al. (2002) Motion analysis. Surg Endosc 16:640–645
39. Rosen J (2002) The bluedragon—a system for measuring the kinematics and the dynamics of minimally invasive surgical tools in-vivo. Proc IEEE Int Conf Robotics Automation 1876–1881
40. Dankelman J, Grimbergen CA (2005) System approach to reduce errors in surgery. Surg Endosc 19:1017–1021
41. Kohn LT, Corrigan JM, Donaldson MS, editors (2000) To Err is Human. Building a Safer Health System. Washington, DC, Committee on Quality of Health Care in America, Institute of Medicine, National Academy Press, p 287
42. Blom EM, Verdaasdonk EGG, Stassen LPS, et al. (2007) Analysis of verbal communication during teaching in the operating room and the potentials for surgical training. Surg Endosc Feb 7; [Epub ahead of print]
43. Satava RM, Cuschieri A, Hamdorf J (2003) Metrics for objective assessment; preliminary summary of the surgical skills workshops. Surg Endosc 17:220–226
44. Cuschieri A, Francis N, Crosby J, et al. (2001) What do master surgeons think of surgical competence and revalidation? Am J Surg 182:110–116
45. Den Boer KT, de Jong T, Dankelman J, et al. (2001) Problems with laparoscopic instruments—opinion of experts. J Laparoendosc Adv Surg Tech A 11:147–156
46. Verdaasdonk EGG, Stassen LPS, van der Elst M, et al. (2007) Problems with technical equipment during laparoscopic surgery: an observational study. Surg Endosc 21:275–279