Abstract

We report an experiment in which observations of peers by six 3–5-year-old participants under specific conditions functioned to convert a small plastic disc or, for one participant, a small piece of string, from a nonreinforcer to a reinforcer. Prior to the observational procedure, we compared each participant's responding on (a) previously acquired performance tasks in which the child received either a preferred food item or the disc (string) for correct responses, and (b) the acquisition of new repertoires in which the disc (string) was the consequence for correct responses. Verbal corrections followed incorrect responses in the latter tasks. The results showed that discs and strings did not reinforce correct responses in the performance tasks, but the food items did; nor did the discs and strings reinforce correct responses in learning new repertoires. We then introduced the peer observation condition in which participants engaged in a different performance task in the presence of a peer who also performed the task. A partition blocked the participants from seeing the peers' performance. However, participants could observe peers receiving discs or strings. Participants did not receive discs or strings regardless of their performance. Peer observation continued until the participants either requested discs or strings repeatedly, or attempted to take discs or strings from the peers. Following the peer observation condition, the same performance and acquisition tasks in which participants had engaged prior to observation were repeated. The results showed that the discs and strings now reinforced correct responding for both performance and acquisition for all participants. We discuss the results with reference to research involving nonhuman subjects that demonstrated the observational conditioning of reinforcers.

Keywords: emergence of conditioned reinforcement, observational conditioning, observational learning, conditioned reinforcement, children

Observing others come in contact with the contingencies of reinforcement can function to change already learned performance or result in learning (Baer & Deguchi, 1985; Baer, Peterson, & Sherman, 1967; Bandura, 1986; Bandura, Adams, & Beyer, 1977; Brody, Lahey, & Combs, 1978; Catania, 2007; Deguchi, 1984; Deguchi, Fujita, & Sato, 1988; Dugatkin, 1996; Gewirtz, 1969; Greer et al., 2004; Greer, Singer-Dudek, & Gautreaux, 2006). Catania defined observational learning as changes in behavior resulting from the observer having indirect contact with the operant contingencies that are in effect for those who are observed. However, this definition does not distinguish between performance (i.e., emitting previously learned repertoires) and acquiring new repertoires by observation. These and other distinctions have been suggested by several research findings (Dorrance & Zentall, 2002; Galef, 1988; Goldstein & Mousetis, 1989; Greer et al., 2006; McDonald, Dixon, & Leblanc, 1986; Zentall, 1996; Zentall, Sutton, & Sherburne, 1996).

One review of research on the effects of observing operant contingencies on the behavior of human observers proposed major distinctions between the effects of observation on (a) the emission of previously acquired repertoires, (b) the acquisition of new repertoires, (c) the acquisition of observational learning itself as a new repertoire, and (d) the observational conditioning of reinforcers (Greer et al., 2006). The terms used for these types of observational effects have varied across and within literatures. For example, research on vicarious reinforcement described changes in observers' rates of emitting behavior as instances of “observational learning.” However, what increased in those studies was more likely the rate of previously learned behavior than a newly learned repertoire (Bandura, 1986; Bandura et al., 1977; Kazdin, 1973; Ollendick, Dailey, & Shapiro, 1983). In contrast, studies that investigated the effects of observation on learning new repertoires showed that observing operant contingencies, including corrections received by other individuals who were learning a new repertoire, resulted in the acquisition of new repertoires by observers (Goldstein & Mousetis, 1989; Greer et al., 2004; McDonald et al., 1986). In these studies, preobservation assessments showed that the target repertoires were missing, and probes following observation showed that the new repertoires had been learned as a function of observing others being taught. These studies therefore meet the criterion for learning new repertoires by observation, a distinction also found in research on observational learning in nonhuman animals (Dorrance & Zentall, 2002; Galef, 1988; Zentall, 1996; Zentall et al., 1996).

In the nonhuman research, rather than observing a teaching process, the observer acquires an unusual behavior by observing another animal receive reinforcement after engaging in that behavior. For example, Epstein (1984) reported that pigeons placed their necks in a noose as a function of observing other pigeons doing so and receiving reinforcement.

Indeed, learning by observing others being taught has itself been identified as a repertoire that was originally missing but was subsequently induced in some children (Greer et al., 2004; Greer et al., 2006). Several studies identified human participants who did not learn new repertoires from observing operant contingencies arranged for others but learned to do so after one of two types of intervention (Davies-Lackey, 2005; Gautreaux, 2005; Greer et al., 2006; Pereira Delgado, 2005; Stolfi, 2005). One type used a yoked-contingency procedure (Davies-Lackey, 2005; Stolfi, 2005). In this procedure, children who did not learn from observation (as identified by preintervention probe trials) were paired with peers. The pair received reinforcement under conditions in which the target child emitted a correct response as a result of observing the peer receive reinforcement for correct responses or corrections for incorrect responses. After pairs achieved mastery on one to three sets of five instructional tasks, children who had not learned by observation before the intervention could do so with both the original and novel instructional material, as demonstrated by nonconsequated probe trials.

In the second type of intervention, 6-year-old children (Pereira Delgado, 2005) and middle-school students (Gautreaux, 2005) who originally did not learn from observation did so following an intervention that required them to record the correct and incorrect responses of other students who were receiving instruction. Participants received reinforcement for accurately recording these responses. The sequence involved several steps: participants first recorded the observed students' responses after those responses had been reinforced or corrected, and eventually received reinforcement only when they accurately recorded the observed student's response before reinforcement or correction was delivered. Ultimately, the participants learned new material solely by observing other students receiving instruction, as confirmed by nonconsequated probes.

The categories of observational research identified thus far (i.e., increased performance of previously learned repertoires, acquisition of new repertoires by observation, and the acquisition of the repertoire to learn by observation) all appear to be examples of what Catania (2007) described as indirect contact with operant contingencies. A fourth category, observational conditioning of reinforcers, has been demonstrated in research with nonhuman animals (Galef, 1988; Zentall, 1996). For example, Dugatkin (1996) and Dugatkin and Godin (1992) found that female guppies, normally predisposed to seek out brightly colored males for mating purposes, sought out and mated with dull-colored males after observing other females that appeared to be mating with dull-colored males. In fact, the observed females were not actually mating with the dull-colored males. The experimenters used a sophisticated mirror arrangement to create conditions in which the observing guppies viewed what appeared to be the selection of dull-colored males by other female guppies. The observing guppies “copied” (the term used in that literature) the behavior of the females they observed. Zentall (1996) proposed, and we concur, that the effects reported by Dugatkin and Dugatkin and Godin were, in fact, evidence of observational conditioning of new reinforcers. That is, it was not the behavior of mating that changed; rather, what changed were the reinforcing effects of dull-colored males.

In related work, Mineka and Cook (1988) and Cook, Mineka, Wolkenstein, and Laitsch (1985) conditioned snake fear in rhesus monkeys. Laboratory-reared monkeys that had no prior experience with snakes and did not escape from them before the observational intervention did escape from snakes after observing live conspecifics do so. Referring to these two studies, Zentall (1996) stated, “Presumably, the fearful conspecific serves as the unconditioned stimulus, and the snake serves as the conditioned stimulus. It appears that exposure to the fearful conspecific or to a snake alone is insufficient to produce fear of snakes in the observer” (p. 231). According to Zentall, this appears to be an example of Pavlovian observational learning, in which the monkeys' innate responsiveness to the facial cues, posture, or squeals of conspecifics, paired with the snake stimulus, resulted in conditioned stimulus control.

Similarly, Curio, Ernst, and Vieth (1978) created observational conditions in which blackbirds acquired escape responses to friarbirds, a normally nonthreatening species, by observing what appeared to be a conspecific emitting escape responses in the presence of a live friarbird. In fact, the observed blackbird was responding to a live owl, a natural predator. These studies, and related studies summarized in Dugatkin (2000) and Zentall (1996), involved changes in genetically predisposed responding as a result of observing conspecifics' responses paired with a novel stimulus.

We found no studies in the human literature that directly paralleled the nonhuman precedents that Zentall (1996) characterized as observational conditioning. However, we did find studies with young children that were related to what Zentall (1996) and Galef (1988) identified as stimulus-enhancement effects of observation. These studies reported changes in children's food choices under conditions involving observation (Birch, 1980; Duncker, 1938; Greer, Dorow, Williams, McCorkle, & Asnes, 1991; Greer & Sales, 1997; Rozin & Schiller, 1980). Greer et al. (1991) and Greer and Sales (1997) found that children consumed food they had previously refused after observing other children consume it. Similarly, Rozin and Schiller (1980) found that children acquired a taste for chili peppers as a function of observing others consume them, and Birch (1980) and Duncker (1938) found similar enhancement effects on children's food consumption. In these studies, it is possible that observing others consume the food led the participants to sample it, and that the gustatory effects of the food itself then came to reinforce consumption. In other words, observation may have occasioned sampling of the food rather than producing what Zentall (1996) identified as observational conditioning.

In order to focus on the possibility that stimuli may become conditioned reinforcers for humans through observation, we tested whether small plastic discs or pieces of string that were not reinforcers initially would emerge as reinforcers from conditions involving the observation of peers. Specifically, we tested whether the observation of other children receiving these items as consequences for performance, when the observers were denied access to the same stimuli, would result in the items becoming reinforcers for (a) changes in already existing repertoires and (b) the acquisition of new repertoires.

Method

Participants

Six participants, ages 3–5, participated in this study. Five of them (Participants A, B, C, E, and F) attended a private, publicly funded preschool in a major metropolitan area, and the sixth (Participant D) attended a combined kindergarten–first grade class in a public school in the same geographic area. All participants were classified as students with disabilities because of mild-to-moderate language delays and, in some cases, behavior disorders (see Table 1). In addition, 6 other children served as peer confederates. Three were classmates of the participants: Confederate 1 was paired with Participant A, Confederate 2 with Participant B, and Confederate 3 with Participant C. The other 3 attended the same school as the participants but were in different classrooms: Confederate 4 was paired with Participant D, Confederate 5 with Participant E, and Confederate 6 with Participant F. Given the structure of the school, Participants D, E, and F had little, if any, contact with their peer confederates. Peer confederates were not paired with participants on the basis of shared social or academic repertoires; in fact, we selected them because they did not otherwise engage with the participants in play or other socializing.

Table 1.

Participant descriptions and tasks completed during all experimental conditions.

| Participant | Description | Performance task | Learning tasks | Intervention task |
|---|---|---|---|---|
| A | 4-year-old male who responded age appropriately with adults and peers, read sight words, wrote his name with a model exemplar, and followed two-step vocal directions reliably. | Matching pictures of community helpers using lotto boards consisting of six pictures and corresponding cards | Vocally identifying actions in pictures following the teacher antecedent, “What is he/she doing?” (i.e., sleeping, drinking, eating, or sneezing); answering general knowledge questions (e.g., What do you write with?, What do you sleep in?); and pointing to coins following the teacher antecedent, “Point to the (quarter, nickel, dime, or penny)” | Matching pictures of different colored shapes when the target stimulus and a nonexemplar were presented |
| B | 3-year-old male who emitted speaker/listener exchanges with adults but not with peers. He identified pictures of common objects, followed single-step directions, and looked at books appropriately. | Vocally identifying the gender of individuals in pictures as man or woman | Vocally identifying pictures of community helpers (e.g., pilot, teacher, lifeguard); vocally identifying pictures of common signs (e.g., Information, Hospital, Do Not Enter); and prereading activities that included pointing to pictures, symbols, letters, and words that were the same when presented with several that were different (associated with the Edmark® [Pro Ed., 2001] reading curriculum) | Pointing to pictures of common objects (e.g., cup, ball) when the target stimulus and a nonexemplar were presented |
| C | 4-year-old male whose verbal behavior consisted of single words and simple phrases. He had a generalized imitation repertoire, identified pictures of common objects, and followed single-step instructions. | Coloring with a crayon on a blank sheet of paper for at least 5 s | Edmark prereading activities; vocally identifying pictures of common signs; and vocally identifying pictures of emotions (e.g., sad, mad) | Pointing to pictures of common objects (e.g., cup, ball) when the target stimulus and a nonexemplar were presented |
| D | 5-year-old female whose verbal behavior consisted of single words and simple phrases. She identified common objects and colors as both a listener and speaker. | Matching pictures of community helpers using lotto boards consisting of six pictures and corresponding cards | Sequencing pictures; vocally identifying pictures of community helpers; and answering general knowledge questions | Matching pictures when the target stimulus and a nonexemplar were presented |
| E | 4-year-old male who requested items using single words. He vocally identified pictures of common objects and responded to simple general knowledge questions. | Stringing beads | Vocally identifying opposites in response to the vocal antecedent, “What is the opposite of ___?” (e.g., hot/cold, big/little); vocally responding to “why” questions (e.g., “Why do you sleep?”, “Why do you eat?”, “Why do you go to school?”); and vocally identifying pictures of common objects (e.g., pencil, chair, table) | Pointing to pictures of common objects (e.g., cup, ball) when the target stimulus and a nonexemplar were presented |
| F | 4-year-old male who participated in speaker/listener exchanges with adults and peers. He read single words and had beginning writing responses. | Matching identical pictures of common objects | Vocally identifying pictures of various animals | Matching pictures of different colored shapes when the target stimulus and a nonexemplar were presented |

The participants and peer confederates attended classrooms in which behavior-analytic procedures were applied uniformly as part of instruction. The school programs used a standard curriculum that included individualized assessment and instruction and, in some of the participants' classrooms, conditioned reinforcers in the form of tokens (plastic discs different from those used in the experiment). Those who were in classrooms that used tokens are identified later. In each classroom that used tokens, a menu of the reinforcers for which tokens could be exchanged was posted, along with the exchange rate for each. However, the participants and peer confederates did not have the verbal repertoires needed for the menus to exert verbal stimulus control. The exchanged-for reinforcers included food items, access to specific toys or games, and walks to locations within the school. For various reasons, the participants had not received tokens as reinforcers prior to the study; they did, however, have opportunities to observe other children receiving tokens as reinforcers.

Materials and Setting

The materials tested as conditioned reinforcers in the experiment were small, colored, translucent plastic discs 2.54 cm in diameter (see Figure 1) and small pieces of light-purple string approximately 4 cm long. For all participants, the food items used as reinforcers were candy (Skittles™ or gummy bears) or animal crackers. During all conditions of the experiment, the experimenter delivered the food items and the discs or strings into translucent plastic 8-oz cups placed on the table in front of the upper torso of the participant and, during the intervention condition, of the peer confederate. For all participants, the experiment was conducted at a child-sized table (64 cm × 64 cm) in the participant's classroom at a time when the other children in the classroom were engaged in individualized and small-group instruction. The classrooms were approximately 8.5 m × 8.5 m and contained four or five other tables of similar size, a teacher's desk, and other furnishings typical of a classroom for young children. The table used for all experimental conditions was located at the back of the classroom, a short distance from, but in view of, the rest of the classroom.

Fig 1.

Discs used for Participants A–E.

Design and Procedure

The design of the experiment consisted of pre- and postintervention conditions that tested for changes in the reinforcing effects of the discs or strings. The pre- and postintervention conditions consisted of reversal designs involving multiple stages in an overall multiple-baseline-across-participants design (Johnston & Pennypacker, 1993). Two types of behavior were tested: performance (behavior already acquired) and learning (new behavior acquired). The entire experiment took between 2 and 4 months per participant.

The design of the performance tasks was a reversal design consisting of alternating stages in which correct responses were followed by the delivery of either food items or discs (strings, for Participant F). At no time did the experimenters provide verbal reinforcers (that is, praise or approval) for correct responding, nor did they correct incorrect responses or refusals to respond. Instead, incorrect responses were ignored: the test stimuli were immediately removed, and new test stimuli were presented. Participants were given 3 s in which to respond; if no response occurred, a new trial began. At no point were the discs or strings exchanged for other reinforcers.

The performance tasks used for each participant were based on a prior determination of those skills that each participant had in his or her respective behavioral repertoire. They were skills the participant could readily perform and would be likely to repeat. The performance tasks for each participant are described in Table 1.

Performance sessions consisted of 10 trials that were timed in order to determine the rate of response. On each trial, the experimenter presented the physical stimulus and, when necessary, a verbal antecedent (e.g., “Match,” “Color,” or “String the bead”) and then (a) delivered either a food item or the disc or string by dropping it into a translucent plastic cup on the table in front of the participant for correct responses or (b) removed the stimulus after an incorrect response or 3 s of no response and immediately began a new trial. A timer or stopwatch was started at the onset of the first trial and stopped after the last trial. Between one and four sessions of the performance task were conducted each day, 3–4 days per week.
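The rate measure described above is a response count divided by elapsed session time. The minimal Python sketch below illustrates that arithmetic; the `PerformanceSession` record and the `rates_per_minute` function are hypothetical names, and because the text does not say how 3-s omissions were scored, the sketch simply takes whatever correct and incorrect counts were recorded.

```python
from dataclasses import dataclass


@dataclass
class PerformanceSession:
    """One timed 10-trial performance session (hypothetical record layout)."""
    correct: int            # responses scored correct
    incorrect: int          # responses scored incorrect (omission scoring not specified)
    elapsed_seconds: float  # timer started at onset of first trial, stopped after the last


def rates_per_minute(session: PerformanceSession) -> tuple[float, float]:
    """Return (correct per min, incorrect per min) for one timed session."""
    if session.elapsed_seconds <= 0:
        raise ValueError("elapsed time must be positive")
    minutes = session.elapsed_seconds / 60.0
    return session.correct / minutes, session.incorrect / minutes


# Illustrative values, not data from the study: 9 correct and 1 incorrect in 90 s.
correct_rate, incorrect_rate = rates_per_minute(PerformanceSession(9, 1, 90.0))
print(f"{correct_rate:.2f} correct/min, {incorrect_rate:.2f} incorrect/min")
# prints "6.00 correct/min, 0.67 incorrect/min"
```

Expressing both counts per minute is what allows sessions of different durations to be compared on the same ordinate.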

During the preintervention condition, sessions were repeated until the data demonstrated that (a) performance of the task when followed by food items showed little variability, no overlap between the rates of correct and incorrect responses, and a rate of incorrect responses consistently close to zero; and (b) the discs or strings did not reinforce correct responses (that is, correct responses decreased or remained near zero when discs or strings followed them). For Participants A–E, the postintervention condition consisted of sessions in which only the discs were used, followed by alternating sessions with food items or discs. For Participant F, the postintervention condition alternated strings and food items across the same number of sessions as in the preintervention condition. This was done to test whether the strings continued to reinforce performance across more alternating sessions than the other 5 participants received in the postintervention condition.
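Two parts of criterion (a) can be written as explicit checks on a food stage's per-session rates. "No overlap" means that every correct-response rate exceeds every incorrect-response rate. "Close to zero," however, requires a cutoff that the study does not report. The sketch below assumes a hypothetical cutoff of 0.5 incorrect responses per minute and does not attempt to assess "little variability."

```python
from typing import Sequence


def rates_do_not_overlap(correct: Sequence[float], incorrect: Sequence[float]) -> bool:
    """True if the lowest correct rate exceeds the highest incorrect rate."""
    return min(correct) > max(incorrect)


def food_stage_meets_criterion(
    correct: Sequence[float],
    incorrect: Sequence[float],
    near_zero: float = 0.5,  # hypothetical cutoff (responses/min), not from the study
) -> bool:
    """Check non-overlap and near-zero incorrect rates for one food stage.

    Variability is not assessed here.
    """
    if not correct or len(correct) != len(incorrect):
        raise ValueError("need matching, non-empty per-session rate lists")
    return rates_do_not_overlap(correct, incorrect) and max(incorrect) <= near_zero


# Illustrative rates only.
print(food_stage_meets_criterion([6.0, 6.5, 7.0], [0.0, 0.3, 0.0]))  # True
print(food_stage_meets_criterion([2.0, 6.0], [3.0, 0.0]))            # False
```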

We also tested the effects of the discs and strings as reinforcers in three novel learning tasks for each participant (see Table 1). Preintervention learning-task sessions were interspersed with performance-task sessions. Each learning task was presented in blocks of 20 instructional trials. These blocks were not timed because the response requirements differed across types of instructional tasks. Because the preintervention learning trials lacked effective reinforcement, the three learning tasks were also interspersed with other classroom instruction that allowed for high rates of reinforcement, and blocks of the preintervention learning tasks were often spaced over the course of the school day. Between one and three sessions of the learning tasks were conducted each day, 2–3 days per week. The learning tasks for each participant are listed in Table 1.

For each learning task, the participant received a disc or string that was placed into the translucent plastic cup following correct responses and verbal corrections from the experimenter for incorrect responses. As part of the correction procedure the participant was required to attend to the physical stimulus and repeat the response as corrected by the experimenter. No disc or string was delivered for corrected responses. Participant F received only one learning task due to difficulties that developed during the preintervention instructional sessions.

When a participant's data in a learning task showed that the discs or strings had no reinforcing effect, as indicated by the absence of an ascending trend across trials, we introduced the observational intervention condition. After the intervention was completed, the learning tasks were reintroduced for each participant. Discs or strings again followed correct responses, and corrections followed incorrect responses, until the participant responded correctly on at least 90% of trials (18 of 20) in each of two consecutive sessions.
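This paragraph contains two decision rules, and both can be stated explicitly. In the minimal sketch below, the "absence of an ascending trend" is operationalized as a least-squares slope of correct responses over successive blocks that is not positive. This operationalization is our assumption, not the authors' stated method. The mastery rule follows the text: at least 90% correct (18 of 20 trials) in each of two consecutive sessions. All function names are hypothetical.

```python
from typing import Optional, Sequence


def least_squares_slope(values: Sequence[float]) -> float:
    """Slope of an ordinary least-squares line fit to values over x = 0, 1, 2, ..."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two data points")
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    num = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    den = sum((x - mean_x) ** 2 for x in range(n))
    return num / den


def no_ascending_trend(correct_counts: Sequence[int]) -> bool:
    """Assumed operationalization: a non-positive fitted slope."""
    return least_squares_slope(correct_counts) <= 0


def sessions_to_criterion(
    correct_counts: Sequence[int], trials_per_session: int = 20, percent: int = 90
) -> Optional[int]:
    """Return the 1-based session completing the first pair of consecutive
    sessions at or above `percent` correct, or None if never met.
    Integer arithmetic avoids floating-point rounding at the 90% boundary."""
    meets = [100 * c >= percent * trials_per_session for c in correct_counts]
    for i in range(1, len(meets)):
        if meets[i - 1] and meets[i]:
            return i + 1
    return None


# Illustrative counts, not data from the study.
print(no_ascending_trend([12, 6, 3, 1]))        # True (fitted slope is -3.6)
print(sessions_to_criterion([12, 15, 19, 18]))  # 4
```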

The intervention condition included tasks that both the participant and the peer confederate had previously mastered. During the intervention condition, each participant was seated side by side at a table with the peer confederate. An opaque partition placed on the table between them allowed each child to see the other's head and shoulders but not the tabletop in front of the other or the other's responses to the tasks presented by the experimenter. Translucent plastic cups were placed on the table close to each child so that both children could see their own cup and the other's cup. That is, on each trial the participant could observe whether a disc or string was delivered, as well as the face and shoulders of the peer confederate, but could not observe the peer confederate's response to the task. The tasks used during the observational intervention condition for each participant are listed in Table 1 (Intervention task); the same task was presented to the peer confederate. The observational intervention was conducted once or twice per day, 2–3 days per week.

Trials for the participant and peer confederate were simultaneous, followed by a 3-s response period. Correct responses by the peer confederate resulted in the delivery of a disc or string to the peer confederate's plastic cup. Correct or incorrect responses by the participant did not result in the delivery of discs or strings; the participant's cup remained empty. The participant's incorrect responses were not corrected. Omission of a response by the participant resulted in the removal of the test stimuli following the 3-s response period, and a new trial began for both the participant and the peer confederate. The task was always the same for the peer confederate as it was for the participant, except that at no time did the peer confederates emit incorrect responses or omit responses. They always performed correctly and always received the disc or string. The peer confederates exchanged their discs or strings for other reinforcers following the sessions but never in view of the participants. What the participants observed was the presentation of the test stimuli by the experimenter, their own response, the delivery of a disc or piece of string to the peer confederate's cup, possibly the facial and other upper-body reactions of the peer confederate, and the absence of delivery of a disc or string to their own cup.

The intervention condition continued in 10-trial sessions until the participant requested the peer's discs or strings, or attempted to take them from the peer confederate's cup, during or after each of two consecutive sessions. The experimenter blocked any attempt to take the peer confederate's discs or strings and ignored participants' statements such as “Where's mine?”, “What about me?”, or “My turn.”
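The stopping rule for the intervention amounts to finding the first pair of consecutive sessions in which the participant requested, or tried to take, the peer's discs or strings. The minimal sketch below assumes that each session has already been scored as a single yes/no flag. This is a hypothetical simplification: the per-trial consequences, in which the peer's cup fills while the participant's stays empty, are not modeled.

```python
from typing import Optional, Sequence


def intervention_end_session(flags: Sequence[bool], run_length: int = 2) -> Optional[int]:
    """Return the 1-based session that completes the first run of `run_length`
    consecutive flagged sessions, or None if the criterion is not yet met.

    flags[i] is True if, during or after session i + 1, the participant
    requested the peer's discs/strings or attempted to take them.
    """
    run = 0
    for session_number, flagged in enumerate(flags, start=1):
        run = run + 1 if flagged else 0  # an unflagged session resets the run
        if run >= run_length:
            return session_number
    return None


# Illustrative record: isolated requests in sessions 2 and 4, then again in 5.
print(intervention_end_session([False, True, False, True, True]))  # 5
```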

Participants' correct and incorrect responses on both the performance and learning tasks in the pre- and postintervention conditions were recorded immediately by the experimenter and, for most sessions, by an independent observer. This observer independently collected data on the participants' responses for 83% of the performance-task sessions, 92% of the intervention sessions, and 79% of the learning-task sessions. Interobserver agreement was 100% for every session in which it was assessed. The observer also monitored procedural fidelity, that is, the degree to which the experimenter adhered to the procedures described, and procedural fidelity was 100%.
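The text does not state which agreement formula was used. A common convention in applied behavior analysis is trial-by-trial (point-by-point) agreement: agreements divided by agreements plus disagreements, multiplied by 100. The sketch below assumes that convention. Procedural fidelity could be computed the same way, as the number of steps implemented as described divided by the number of steps observed.

```python
from typing import Sequence


def point_by_point_ioa(observer_a: Sequence[str], observer_b: Sequence[str]) -> float:
    """Percentage of trials on which two observers scored the same response.

    Every trial counts as either an agreement or a disagreement, so
    agreements / (agreements + disagreements) equals agreements / trials.
    """
    if not observer_a or len(observer_a) != len(observer_b):
        raise ValueError("need two non-empty records of equal length")
    agreements = sum(a == b for a, b in zip(observer_a, observer_b))
    return 100.0 * agreements / len(observer_a)


# "C" = correct, "I" = incorrect (illustrative codes and data).
experimenter = ["C", "C", "I", "C"]
observer = ["C", "I", "I", "C"]
print(point_by_point_ioa(experimenter, observer))  # 75.0
```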

During the pre- and postintervention conditions, the experimenter and participant sat at the table with no peer present. During the intervention condition, the peer confederate was seated next to the participant. Another adult, who served as the independent observer, was also frequently near the table. The experimenter was the same for Participants A–E. For Participant F, the intervention condition was conducted by an experimenter who had not been involved in the pre- and postintervention conditions and who was unfamiliar to the participant.

Results

Pre- and Postintervention Performance Conditions

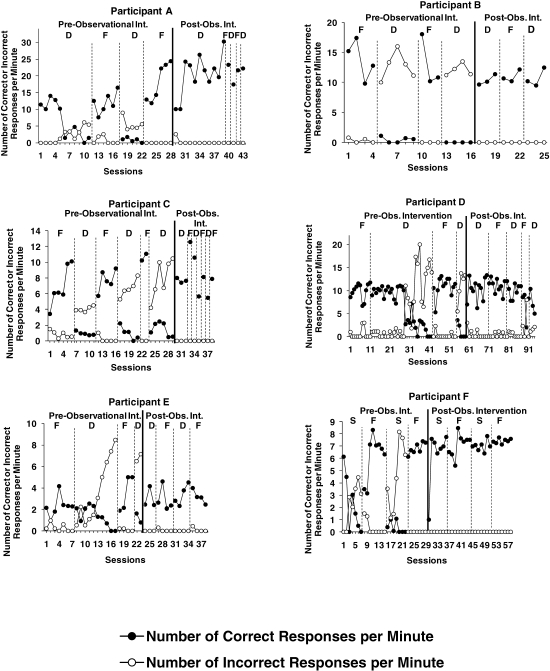

Figure 2 shows per-session response rates during the performance tasks for all 6 participants across the preintervention and postintervention conditions. Participants B, C, D, and E began with food items in the first stage of the preintervention condition and then received alternating stages of discs, food, and discs (in an ABAB design). Participant A began with discs in the first stage, and Participant F began with strings, followed by alternating stages of food, discs or strings, and food (in a BABA design). All 6 participants received discs or strings in the first stage following the observational intervention.

Fig 2.

Correct and incorrect responses per min in the pre- and postintervention (separated by the solid dark line) performance tasks for Participants A–F. Each stage is identified by the reinforcer—F for food, D for discs, or S for strings.

In the preintervention condition, all 6 participants showed high rates of correct responses and correspondingly low rates of incorrect responses during each food stage. During the first disc or string stage, participants either showed low rates of correct responses with correspondingly high rates of incorrect responses (Participants B and C) or showed extinction effects (a decrease in correct responding to zero) after initially higher rates of correct responses. By the second disc or string stage, all participants showed immediate and marked changes in responding from the preceding food stage: low rates of correct responses and high rates of incorrect responses.

After the observational intervention, the data demonstrated consistently high rates of correct responses with very low or zero rates of incorrect responses for all 6 participants. That is, following the intervention, the participants responded to the discs and strings as they did to the food.

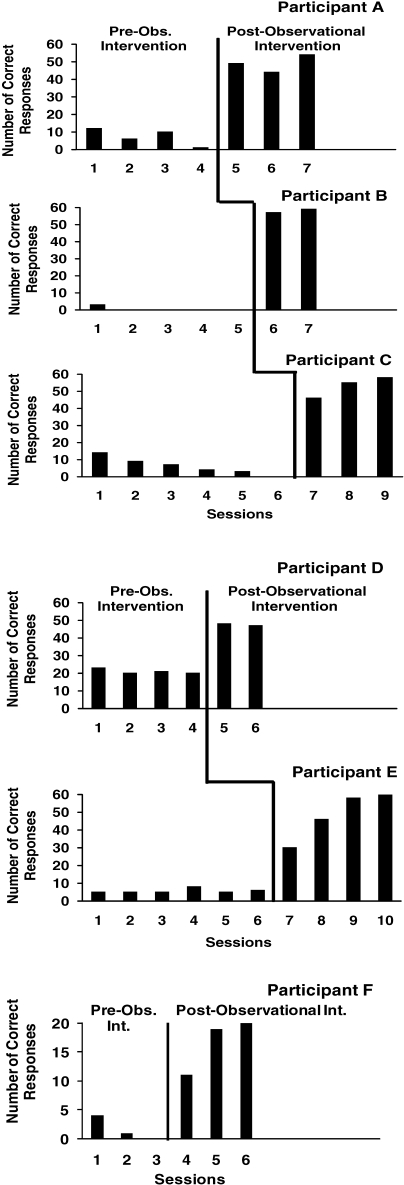

Pre- and Postintervention Learning Conditions

Figure 3 shows the number of correct responses by the 6 participants in the learning tasks presented before and after the observational intervention. For each participant, the data for the three learning tasks were combined and graphed as the number of correct responses over a total of 60 instructional trials, except for Participant F, who completed only one 20-trial learning task (note the different scale on the ordinate). With discs delivered as consequences for correct responses, Participant A's correct responses across the three learning tasks decreased from 12 to 1 within four sessions. After the intervention, his correct responses with discs as consequences ranged from 49 to 54. He achieved criterion-level responding (18/20 responses correct for two consecutive sessions) in three sessions for both the identification of actions and the yes/no questions (see Table 1), but not for the coin identification task. (In this task, the participant had difficulty discriminating between nickels and quarters. The experimenter therefore introduced a stimulus prompt, physically increasing the height of the nickel to highlight its thickness relative to the quarter; once the prompt was faded, Participant A met the criterion. These data are not shown.)
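The mastery criterion used for the learning tasks (18/20 responses correct for two consecutive sessions) amounts to a simple run-length rule over session scores. The Python sketch below illustrates that rule only; it is not the scoring procedure reported in the study, and the function name and example scores are hypothetical.

```python
from typing import Optional, Sequence


def sessions_to_criterion(
    correct_per_session: Sequence[int],
    threshold: int = 18,
    consecutive: int = 2,
) -> Optional[int]:
    """Return the 1-based session at which the mastery criterion is first met
    (at least `threshold` correct in each of `consecutive` successive
    sessions), or None if it is never met. Names here are hypothetical."""
    streak = 0
    for session, correct in enumerate(correct_per_session, start=1):
        # Extend the run of criterion-level sessions, or reset it to zero.
        streak = streak + 1 if correct >= threshold else 0
        if streak >= consecutive:
            return session
    return None
```

For example, hypothetical scores of 14, 18, and 19 meet the criterion at Session 3, whereas 20, 15, and 20 never do, because no two criterion-level sessions are adjacent.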

Fig 3.

Number of correct responses in the pre- and postintervention learning tasks for Participants A–F.

Participant B showed low accuracy prior to the intervention, with correct responses decreasing from three to zero. Following the intervention, he met the criterion within two sessions for all three tasks, with 57 and 59 correct responses, respectively, in those two sessions.

Participant C's preintervention data showed a steadily descending trend from 14 to 0 correct responses across five sessions. After the intervention, his correct responses increased from 46 to 58 across three sessions, and he met the mastery criterion for all three tasks.

During the preintervention condition, Participant D's correct responses on the three tasks decreased from 23 to 20 across four sessions. Following the intervention, she responded correctly on 48 and then 47 of 60 trials. In the first task, sequencing pictures, her responding did not improve. An analysis showed that Participant D lacked the prerequisite skills for the task; once an instructional intervention was introduced to teach those skills, correct responding increased, and she later reached criterion-level responding on the task with the disc as the reinforcer. These results are not reported here.

During the preintervention condition, Participant E's correct responses across the three tasks ranged from 5 to 8. Following the intervention, they increased from 30 to 60, and he met the criterion for all three learning tasks.

Participant F's data indicate that, during the preintervention condition, correct responses for the one learning task decreased from 4 to zero. Following the intervention, they increased from 11 to 20, and he met the criterion.

The results from the preintervention condition showed that the discs or strings did not reinforce learning even though corrective feedback was provided for all incorrect responses. Following the observational intervention, all of the participants either mastered (that is, met the criterion for) the new learning tasks with the discs or strings as reinforcers, or they increased their correct responses markedly. Three of the participants (B, C, and E) mastered all three of the new tasks, and Participant F mastered the one task that was presented. Participants A and D mastered two of the three tasks.

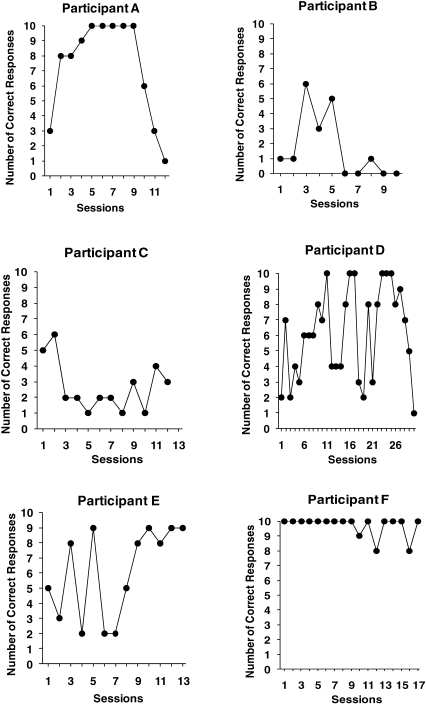

Intervention Condition

Figure 4 shows the participants' number of correct responses during the intervention condition, in which each session consisted of 10 trials. Participants A and B showed extinction effects in 12 and 10 sessions, respectively. Participant C, who received 12 sessions, emitted five and six correct responses in Sessions 1 and 2, respectively, and between one and four correct responses in subsequent sessions. Participant D, who received 30 sessions, showed extreme variability, with a sharp descending trend over the last three sessions and one correct response in the last session. Participant E, who received 13 sessions, showed extreme variability in the first eight sessions, ranging from two to nine correct responses, but his responding stabilized over the last five sessions at eight or nine correct responses. Participant F emitted 10 correct responses in all but three sessions, in which he emitted nine, eight, and eight correct responses.

Fig 4.

Number of correct responses in the intervention condition for Participants A–F.

During the intervention condition, after the 6th intervention session, Participant A began requesting his peer's discs by saying, “It's my turn,” and, after attempting to take the peer's discs, saying, “I won.” Still, 12 sessions were required before he did so in 2 consecutive sessions, as the criterion required. Participant B began requesting the peer's discs by saying, “Me” and “Not me?” during the 6th intervention session but required 13 sessions before he did so in 2 consecutive sessions. Participant C began requesting discs during the 5th intervention session by crying, grabbing his peer confederate's cup, and saying, “I want some,” but required 12 observational sessions before he did so in 2 consecutive sessions. Participant D required 30 sessions to meet the criterion; her number of correct responses varied, but she did not request the discs until after the 24th session. Participant E required 12 sessions before he grabbed his peer confederate's cup and said, “Mine,” and 1 additional session to meet the criterion. Participant F attempted to take the peer confederate's strings several times during the initial sessions of the condition, but not in 2 consecutive sessions, and we continued the condition, waiting for this to occur. Instead, he stopped attempting to take the strings altogether, and we ended the condition after 17 sessions. Thus, Participant F did not meet the criterion for terminating the intervention condition.
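The rule for ending the intervention condition (requests for, or attempts to take, the peer's discs or strings in 2 consecutive sessions) can likewise be sketched as a run-length check. In this hypothetical Python sketch, each session is coded only as whether a request or attempt occurred, and `max_sessions` is an assumed cap standing in for the experimenters' decision to stop the condition, as they did for Participant F; the study did not report a preset cap.

```python
from typing import Optional, Sequence, Tuple


def observation_sessions(
    request_or_grab: Sequence[bool],
    consecutive: int = 2,
    max_sessions: Optional[int] = None,
) -> Tuple[int, bool]:
    """Return (sessions conducted, criterion met).

    request_or_grab[i] is True if, in session i + 1, the observer requested
    the peer's discs or strings or attempted to take them (hypothetical
    coding). The condition ends as soon as this happens in `consecutive`
    successive sessions, or when the assumed `max_sessions` cap is reached.
    """
    limit = len(request_or_grab) if max_sessions is None else max_sessions
    streak = 0
    sessions = 0
    for occurred in request_or_grab[:limit]:
        sessions += 1
        # Count successive sessions with a request or attempt; reset otherwise.
        streak = streak + 1 if occurred else 0
        if streak >= consecutive:
            return sessions, True
    return sessions, False
```

For example, a hypothetical record with requests in Sessions 5, 7, 10, and 11 ends the condition after Session 11 with the criterion met, whereas requests in every other session never satisfy it.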

Discussion

Our data showed that discs and strings emerged as reinforcers for performance and learning following the observational conditioning intervention. The effect appears to be a function of conditions under which the participants were (a) denied direct access to the discs and strings as they (b) observed their peers receive them from an adult. The effect may be attributable to stimulus–stimulus pairings that occurred during observation. As such, it is similar to the observational conditioning reported in research with nonhuman animals (Galef, 1988; Zentall, 1996). However, the stimuli we used differed from those reported in the earlier studies. They also were not tokens, that is, generalized reinforcers exchangeable for preferred items. Typically, tokens quickly become reinforcing for elementary-age children, even when the exchange is delayed by hours. In such cases, rule-governed responding may be involved along with the observed pairing of tokens with already established reinforcers. However, for young children, such as preschoolers, or for older students with developmental delays, conditioning tokens as generalized reinforcers is more difficult, perhaps because stereotypy, as a competing source of reinforcement, interferes (Greer, Becker, Saxe, & Mirabella, 1985) or because prerequisite observing responses are missing (Dinsmoor, 1983; Greer & Ross, 2008).

In cases involving preschoolers or older children with developmental delays, conditioning tokens as reinforcers may require systematic intervention. In one approach, unconditioned reinforcers such as food items, or conditioned reinforcers such as praise, are delivered simultaneously with the tokens. In successive stages, the token is delivered first and exchanged for the established reinforcer after increasingly longer intervals, until the token functions by itself as a reinforcer or as a discriminative stimulus for learning or performance. Another common tactic is to use constructed pathways not unlike those found on game boards. A picture of a preferred item appears at the end of the pathway; the child places a token on each step of the pathway and receives the preferred item when the tokens reach the picture. In some cases, however, neither procedure is successful, and food or access to toys must be used directly for instruction until certain prerequisites are attained (Greer, 1981; Greer & Ross, 2008).
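The game-board pathway described above works like a counter that triggers an exchange when it reaches the end of the path. This Python sketch assumes, for illustration only, that the child earns one token per correct response and that the pathway has a fixed number of steps (`path_length`, a hypothetical parameter); it is not a procedure used in the present study.

```python
from typing import Iterable, Tuple


def token_board(responses: Iterable[bool], path_length: int = 5) -> Tuple[int, int]:
    """Simulate a game-board token pathway (hypothetical parameters).

    Each correct response (True) places one token on the next step of the
    path; incorrect responses (False) place none. When the tokens reach the
    picture at the end of the path, the preferred item is delivered and the
    board is cleared. Returns (items delivered, tokens left on the board).
    """
    if path_length < 1:
        raise ValueError("path_length must be at least 1")
    tokens = 0
    items_delivered = 0
    for correct in responses:
        if correct:
            tokens += 1
            if tokens == path_length:
                items_delivered += 1  # tokens reached the picture: exchange
                tokens = 0            # start a new pathway
    return items_delivered, tokens
```

With a five-step pathway, 12 consecutive correct responses would yield two exchanges and leave two tokens on the board. Lengthening `path_length` across stages would be one way to model gradually delaying the exchange.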

As previously noted, although some of the participants (Participants A, B, C, and D) could observe tokens delivered to others in their respective classrooms, those tokens differed from the discs and strings we used in the present study. Moreover, none of the participants had previously been observed to request or attempt to take the classroom tokens.

Our research, like the social learning research with nonhuman animals, distinguished between performance and learning. Much of the prior research on the effects of observation on behavior change in humans, including a large body of research reviewed in Greer et al. (2006), did not determine whether the reported changes involved the learning of new operants or the performance of operants already in the participants' repertoires. Thus, although this distinction has not been part of the literature on human observation, it has been part of the social learning research with nonhuman animals, which distinguishes among observational learning, true imitation, contagion, social facilitation, and observational conditioning (Galef, 1988; Zentall, 1996).

In our study, a plastic disc or a piece of string initially did not reinforce either performance or the learning of new operants. Following the observational intervention, the discs and strings reinforced both types of responses. For these reasons, we assert that the discs and pieces of string emerged as reinforcers and that the effect was not attributable to copying, imitation, modeling, contagion, or stimulus enhancement (Dugatkin, 2000; Galef, 1988; Zentall, 1996). Rather, the observations likely resulted in a stimulus–stimulus pairing and constitute an instance of observational conditioning. Because the partition prevented the observing participants from seeing whether the peer confederate was performing accurately, inaccurately, or not at all, contagion, imitation, or copying of behavior was not involved. Similarly, because the strings and discs that were conditioned as reinforcers had no inherent reinforcing potential, unlike food items, the effect was not attributable to stimulus enhancement (Birch, 1980; Duncker, 1938; Greer et al., 1991; Rozin & Schiller, 1980).

The fact that the participants did not receive the discs or strings during the intervention condition, whereas the peer confederates repeatedly received them, appears to be a key component of the procedure. That is, it is unlikely that observation alone produced the effects we observed. Rather, observation was effective under conditions in which the consequence was denied to the observer while the observer could see a peer receiving the discs or strings. We hypothesize that denying the participants access to the discs and strings while they observed others receive them paired the peers' receipt of these items with the peers' facial expressions, vocalizations, or both. In this way, the discs and strings became conditioned reinforcers.

It is also possible that other social conditions contributed to the effect. Specifically, the peer confederates and the participants may have discussed the receipt of discs or strings between observational intervention sessions. With older children, this would certainly be a strong possibility. For children in the age group of the participants and peer confederates, and for children who have language delays or who have not achieved certain verbal developmental cusps, it would not be expected (Greer & Ross, 2004, 2008). In addition, we have not observed such exchanges among children this young or among children with language delays like those of the participants, nor did the participants' teachers report any. Also, Participants D, E, and F were not in the same classes as their peer confederates and had little opportunity for contact with them except during the observational intervention. Future research could isolate the participants more systematically from the peers involved in the intervention to eliminate any possibility of interactions outside the experimental setting.

Artifacts of our experimental procedures may have contributed to the results. One possible explanation for the failure of the discs and strings to reinforce performance in the preintervention condition is that alternating them with food items made them less desirable. Also, alternating the learning tasks with the preintervention performance tasks may have extinguished any reinforcing effects the discs or strings may have had. Indeed, this possibility suggests alternative explanations that need to be investigated. For example, denying participants access to nonpreferred items following satiation on preferred items has been shown to temporarily convert the nonpreferred items into reinforcers (Aeschleman & Williams, 1989; Premack, 1971). However, had extensive preintervention sessions with the food items produced satiation, the nonpreferred discs and strings should have reinforced performance as well as learning, and they did not.

The specific role that the peer confederates play in the conditioning process is a subject for further investigation. In the present study, the peer confederates received the discs or strings in a translucent cup, whereas the participants received nothing in their cups. In this situation, the participants did not copy the responses of their peers on which the discs or strings were contingent. Rather, it seems that delivering the discs or strings to the peer confederates, under conditions in which the observing participants did not receive them, functioned to condition them as reinforcers. When the participants returned to the performance and learning tasks, the peer confederates were no longer present. Hence, the discs and strings, not the peers, directly controlled responding in the postintervention condition. In earlier studies (Greer, McCorkle, & Sales, 1998; Greer & Sales, 1997), we conducted experiments with no peer confederate. Instead of delivering the nonpreferred food item to a peer, we placed it into the participant's cup whenever he or she emitted a correct response, and into a separate container to which the participant could not gain access whenever he or she emitted an incorrect response. Thus, incorrect responses resulted in the loss of the item, and correct responses resulted in receipt of the item. This procedure did not condition the item as a reinforcer. In fact, the participants ceased responding or laughed on occasions when the item was placed in the container following an incorrect response. In these earlier studies, the nonpreferred food items were pieces of All Bran® cereal or bean sprouts. When peer confederates were subsequently introduced and the nonpreferred food items went into the peers' cups, the nonpreferred food items were conditioned as reinforcers. The fact that food was used, however, suggests that stimulus enhancement may have been involved (Zentall, 1996).

In addition to the role of the peer, the role of the experimenter in the observational conditioning effect remains to be investigated more thoroughly. That is, would the effect occur if the intervention consisted of an automated delivery of discs or strings rather than delivery by an adult, with or without a complete view of the confederate? It is possible that an unfamiliar or a familiar adult also may play a critical role in the pairing of stimuli that occurs in the observational process. Providing automated delivery of the discs or strings with no adult present would isolate the role of the peer confederate relative to the presence of an adult.

The ontogenetic and phylogenetic origins of the capability for observational conditioning in humans are not well understood, although some of the effects reported in the nonhuman animal literature have been interpreted as phylogenetic (Dugatkin, 2000; Zentall, 1996). The extent to which the effect in children like those we studied originates in individual reinforcement histories remains unknown. Research with other species suggests that observational conditioning may have phylogenetic origins, and this could be the case for young children as well (Cook et al., 1998; Dugatkin, 2000; Epstein, 1984; Galef, 1988; Zentall, 1996).

Acknowledgments

We would like to acknowledge Molly Robson, Rebecca Roderick, Jennifer Longano, Anjalee Nirgudkar, Jeannine Schmelzkopf, and Lynn Yuan, who assisted us in the data collection. We also are grateful for the excellent suggestions made by the JEAB reviewers, all of whom provided useful feedback.

References

- Aeschleman S.R, Williams M.L. A test of the response deprivation hypothesis in a multiple-response context. American Journal on Mental Retardation. 1989;93:345–353.

- Baer D.M, Deguchi H. Generalized imitation from a radical-behavioral viewpoint. In: Reiss S, Bootzin R, editors. Theoretical issues in behavior therapy. New York: Academic Press; 1985. pp. 179–217.

- Baer D.M, Peterson R.F, Sherman J.A. The development of imitation by reinforcing behavioral similarity to a model. Journal of the Experimental Analysis of Behavior. 1967;10:405–416. doi: 10.1901/jeab.1967.10-405.

- Bandura A. Social foundations of thought and action. Englewood Cliffs, NJ: Prentice-Hall; 1986.

- Bandura A, Adams N.E, Beyer J. Cognitive processes mediating behavioral change. Journal of Personality and Social Psychology. 1977;35:125–139. doi: 10.1037//0022-3514.35.3.125.

- Birch L. Effects of peer models' food choice and eating behavior in preschoolers' food preferences. Child Development. 1980;51:14–18.

- Brody G.H, Lahey B.B, Combs M.L. Effects of intermittent modeling on observational learning. Journal of Applied Behavior Analysis. 1978;11:87–90. doi: 10.1901/jaba.1978.11-87.

- Catania A.C. Learning (interim 4th ed.). Cornwall-on-Hudson, NY: Sloan Publishing; 2007.

- Cook M, Mineka S, Wolkstein B, Laitsch K. Conditioning of snake fear in unrelated rhesus monkeys. Journal of Abnormal Psychology. 1998;94:591–610. doi: 10.1037//0021-843x.94.4.591.

- Curio E, Ernest U, Vieth W. Cultural transmission of enemy recognition: One function of mobbing. Science. 1978;202:899–901. doi: 10.1126/science.202.4370.899.

- Davies-Lackey A.J. Yoked peer contingencies and the acquisition of observational learning repertoires (Doctoral dissertation, Columbia University, 2005). Dissertation Abstracts International. 2005;66:138A. ProQuest AAT 3159730.

- Deguchi H. Observational learning from a radical-behavioristic viewpoint. The Behavior Analyst. 1984;7:83–95. doi: 10.1007/BF03391892.

- Deguchi H, Fujita T, Sato M. Reinforcement and the control of observational learning in young children: A behavioral analysis of modeling. Journal of Experimental Child Psychology. 1988;46:326–371.

- Dinsmoor J.A. Observing and conditioned reinforcement. Behavioral and Brain Sciences. 1983;6:693–728.

- Dorrance B.R, Zentall T.R. Imitation of conditional discriminations in pigeons (Columba livia). Journal of Comparative Psychology. 2002;116:277–285. doi: 10.1037/0735-7036.116.3.277.

- Dugatkin L.A. The interface between culturally-based preference and genetic preference: Female mate choices in Poecilia reticulata. Proceedings of the National Academy of Sciences, USA. 1996;93:2770–2773. doi: 10.1073/pnas.93.7.2770.

- Dugatkin L.A. The imitation factor. New York: Free Press; 2000.

- Dugatkin L.A, Godin J.G. Reversal of female mate choice by copying in the guppy (Poecilia reticulata). Proceedings of the Royal Society of London B. 1992;249:179–184. doi: 10.1098/rspb.1992.0101.

- Duncker K. Experimental modification of children's preference through social suggestion. Journal of Abnormal Psychology. 1938;33:489–507.

- Epstein R. Spontaneous and deferred imitation in the pigeon. Behavioural Processes. 1984;9:347–354. doi: 10.1016/0376-6357(84)90021-4.

- Galef B.G, Jr. Imitation in animals: History, definition, and interpretation of data from psychological laboratories. In: Zentall T.R, Galef B.G, Jr, editors. Social learning: Psychological and biological perspectives. Hillsdale, NJ: Lawrence Erlbaum Associates; 1988. pp. 3–28.

- Gautreaux G.G. The effects of monitoring training on the acquisition of an observational learning repertoire under peer tutoring conditions, generalization and collateral effects (Doctoral dissertation, Columbia University, 2005). Dissertation Abstracts International. 2005;66:1713A. ProQuest AAT 3174795.

- Gewirtz J.L. Mechanisms of social learning: Some roles of stimulation and behavior in early human development. In: Goslin D.A, editor. Handbook of socialization theory and research. Chicago: Rand-McNally; 1969. pp. 57–72.

- Goldstein H, Mousetis L. Generalized language learning by children with severe mental retardation: Effects of peers' expressive modeling. Journal of Applied Behavior Analysis. 1989;22:245–259. doi: 10.1901/jaba.1989.22-245.

- Greer R.D. An operant approach to motivation and affect: Ten years of research in music learning. 1981 Oct.

- Greer R.D, Becker B.J, Saxe C.D, Mirabella R.F. Conditioning histories and setting stimuli controlling engagement in stereotypy or toy play. Analysis and Intervention in Developmental Disabilities. 1985;5:269–284.

- Greer R.D, Dorow L, Williams G, McCorkle N, Asnes R. Peer-mediated procedures to induce swallowing and food acceptance in young children. Journal of Applied Behavior Analysis. 1991;24:783–790. doi: 10.1901/jaba.1991.24-783.

- Greer R.D, Keohane D, Meincke K, Gautreaux G, Pereira J, Chavez-Brown M, Yuan L. Key instructional components of effective peer tutoring for tutors, tutees, and peer observers. In: Moran J, Malott R, editors. Evidence-based educational practices. New York: Elsevier/Academic Press; 2004. pp. 295–333.

- Greer R.D, McCorkle N.P, Sales R.D. Conditioned reinforcement as a function of peer contingencies with pre-school children. 1998 Jun.

- Greer R.D, Ross D.E. Verbal behavior analysis: A program of research in the induction and expansion of complex verbal behavior. Journal of Early Intensive Behavioral Intervention. 2004;1:141–165.

- Greer R.D, Ross D.E. Verbal behavior analysis: Inducing and expanding complex communication in children with language delays. Boston: Allyn & Bacon; 2008.

- Greer R.D, Sales C.D. Peer effects on the conditioning of a generalized reinforcer and food choices. 1997 May.

- Greer R.D, Singer-Dudek J, Gautreaux G. Observational learning. International Journal of Psychology. 2006;42:486–489.

- Johnston J.M, Pennypacker H.S. Strategies and tactics of behavioral research (2nd ed.). Hillsdale, NJ: Lawrence Erlbaum Associates; 1993.

- Kazdin A.E. The effect of vicarious reinforcement on attentive behavior in the classroom. Journal of Applied Behavior Analysis. 1973;6:71–78. doi: 10.1901/jaba.1973.6-71.

- McDonald R.P.F, Dixon L.S, Leblanc J.M. Stimulus class formation following observational learning. Analysis and Intervention in Developmental Disabilities. 1986;6:73–87.

- Mineka S, Cook M. Social learning and the acquisition of snake fear in monkeys. In: Zentall T.R, Galef B.G, Jr, editors. Social learning: Psychological and biological perspectives. Hillsdale, NJ: Lawrence Erlbaum Associates; 1988. pp. 51–75.

- Ollendick T.H, Dailey D, Shapiro E.S. Vicarious reinforcement: Expected and unexpected results. Journal of Applied Behavior Analysis. 1983;16:485–491. doi: 10.1901/jaba.1983.16-485.

- Pereira Delgado J.A. Effects of peer monitoring on the acquisition of observational learning (Doctoral dissertation, Columbia University, 2005). Dissertation Abstracts International. 2005;66:1712A. ProQuest AAT 3174775.

- Premack D. Catching up with common sense or two sides of a generalization: Reinforcement and punishment. In: Glaser R, editor. The nature of reinforcement. New York: Academic Press; 1971. pp. 121–150.

- Rozin P, Schiller D. The nature and acquisition of a preference for chili peppers by humans. Motivation and Emotion. 1980;4:77–101.

- Stolfi L. The induction of observational learning repertoires in preschool children with developmental disabilities as a function of peer-yoked contingencies (Doctoral dissertation, Columbia University, 2005). Dissertation Abstracts International. 2005;66:2807A. ProQuest AAT 3174899.

- Zentall T.R. An analysis of social learning in animals. In: Heyes C.M, Galef B.G, Jr, editors. Social learning in animals: The roots of culture. San Diego, CA: Academic Press; 1996. pp. 221–243.

- Zentall T.R, Sutton J.E, Sherburne L.M. True imitative learning in pigeons. Psychological Science. 1996;7:343–346.