Abstract

The emergence of language was a defining moment in the evolution of modern humans. It was an innovation that changed radically the character of human society. Here, we provide an approach to language evolution based on evolutionary game theory. We explore the ways in which protolanguages can evolve in a nonlinguistic society and how specific signals can become associated with specific objects. We assume that early in the evolution of language, errors in signaling and perception would be common. We model the probability of misunderstanding a signal and show that this limits the number of objects that can be described by a protolanguage. This “error limit” is not overcome by employing more sounds but by combining a small set of more easily distinguishable sounds into words. The process of “word formation” enables a language to encode an essentially unlimited number of objects. Next, we analyze how words can be combined into sentences and specify the conditions for the evolution of very simple grammatical rules. We argue that grammar originated as a simplified rule system that evolved by natural selection to reduce mistakes in communication. Our theory provides a systematic approach for thinking about the origin and evolution of human language.

Language remains in the minds of many philosophers, linguists, and biologists a quintessentially human trait (1–3). Attempts to shed light on the evolution of human language have come from many areas including studies of primate social behavior (4–6), the diversity of existing human languages (7, 8), the development of language in children (9–11), and the genetic and anatomical correlates of language competence (12–16), as well as theoretical studies of cultural evolution (17–21) and of learning and lexicon formation (22). Studies of bees, birds, and mammals have shown that complex communication can evolve without the need for a human grammar or for large vocabularies of symbols (23, 24). All human languages are thought to possess the same general structure and permit an almost limitless production of information for communication (25). This limitlessness has been described as “making infinite use of finite means” (45). The lack of obvious formal similarities between human language and animal communication has led some to propose that human language is not a product of evolution but a side-effect of a large and complex brain evolved for nonlinguistic purposes (1, 26). Others suggest that language represents a mix of organic and cultural factors and, as such, can only be understood fully by investigating its cultural history (16, 27). One problem in the study of language evolution has been the tendency to identify contemporary features of human language and suggest scenarios in which these would be selectively advantageous. This approach ignores the fact that if language has evolved, it must have done so from a relatively simple precursor (28, 29). We are therefore required to provide an explanation that proposes an advantage for a very simple language in a population that is prelinguistic (30–32). This work can be seen as part of a recent program to understand language evolution based on mathematical and computational modeling (33–37).

The Evolution of Signal–Object Associations.

We assume that language evolved as a means of communicating information between individuals. In the basic “evolutionary language game,” we imagine a group of individuals (early hominids) that can produce a variety of sounds. Information is to be transferred about a number of “objects.” Suppose there are $m$ sounds and $n$ objects. The matrix $P$ contains the entries $p_{ij}$, denoting the probability that for a speaker object $i$ is associated with sound $j$. The matrix $Q$ contains the entries $q_{ji}$, which denote the probability that for a listener sound $j$ is associated with object $i$. $P$ is called the “active matrix,” whereas $Q$ is called the “passive matrix.” A similar formalism was used by Hurford (22).

Imagine two individuals, A and B, that use slightly different languages $L$ (given by $P$ and $Q$) and $L'$ (given by $P'$ and $Q'$). For individual A, $p_{ij}$ denotes the probability of making sound $j$ when seeing object $i$, whereas $q_{ji}$ denotes the probability of inferring object $i$ when hearing sound $j$. For individual B, these probabilities are given by $p'_{ij}$ and $q'_{ji}$. Suppose A sees object $i$ and signals; then B will infer object $i$ with probability $\sum_{j=1}^{m} p_{ij} q'_{ji}$. A measure of A’s ability to convey information to B is given by summing this probability over all $n$ objects. The overall payoff for communication between A and B is taken as the average of A’s ability to convey information to B and B’s ability to convey information to A. Thus,

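$$F(L,L') = \frac{1}{2}\sum_{i=1}^{n}\sum_{j=1}^{m}\left(p_{ij}\,q'_{ji} + p'_{ij}\,q_{ji}\right) \tag{1}$$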

In this equation, both $L$ and $L'$ are treated once as listener and once as speaker, leading to the intrinsic symmetry of the language game: $F(L,L') = F(L',L)$. Language $L$ obtains from $L'$ the same payoff as $L'$ obtains from $L$. If two individuals use the same language, $L$, the payoff is $F(L,L) = \sum_{i=1}^{n}\sum_{j=1}^{m} p_{ij} q_{ji}$.
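As a minimal sketch of the payoff just described, the following computes the average of the two directions of information transfer from nested-list matrices. The function name `payoff` and the toy matrices are illustrative assumptions, not values from the paper.

```python
def payoff(P1, Q1, P2, Q2):
    """Average of both directions of information transfer between two languages.

    P1, P2: n x m active matrices (object -> sound).
    Q1, Q2: m x n passive matrices (sound -> object).
    """
    n, m = len(P1), len(P1[0])
    total = 0.0
    for i in range(n):
        for j in range(m):
            # Speaker 1 to listener 2, plus speaker 2 to listener 1.
            total += P1[i][j] * Q2[j][i] + P2[i][j] * Q1[j][i]
    return 0.5 * total

# Hypothetical toy languages with n = 2 objects and m = 2 sounds.
P_a = [[0.9, 0.1], [0.2, 0.8]]
Q_a = [[0.8, 0.2], [0.1, 0.9]]
P_b = [[0.6, 0.4], [0.3, 0.7]]
Q_b = [[0.7, 0.3], [0.4, 0.6]]

f_ab = payoff(P_a, Q_a, P_b, Q_b)  # A talking with B
f_ba = payoff(P_b, Q_b, P_a, Q_a)  # B talking with A
f_aa = payoff(P_a, Q_a, P_a, Q_a)  # A talking with a copy of itself
```

Here `f_ab` and `f_ba` coincide, which is the symmetry of the game, and `f_aa` reduces to the double sum of $p_{ij} q_{ji}$.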

Hence, we assume that both speaker and listener receive a reward for mutual understanding. If for example only the listener receives a benefit, then the evolution of language requires cooperation.

In each round of the game, every individual communicates with every other individual, and the accumulated payoffs are summed up. The total payoff for each player represents the ability of this player to communicate information with other individuals of the community. Following the central assumption of evolutionary game theory (38), the payoff from the game is interpreted as fitness: individuals with a higher payoff have a higher survival chance and leave more offspring who learn the language of their parents by sampling their responses to individual objects.

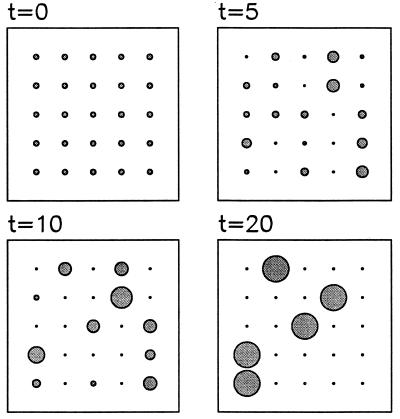

Fig. 1 shows a computer simulation of a group of 100 individuals. Initially, all individuals have different random entries in both active and passive matrices. After some rounds, specific sounds begin to associate with specific objects. Eventually each object is associated with exactly one signal. The simulation shows how a protolanguage can emerge in an originally prelinguistic society.

Figure 1.

Emergence of a protolanguage in an initially prelinguistic society. The population consists of 100 individuals. Each of them starts with a randomly chosen P and Q matrix. There are five objects and five signals (sounds). In one round of the game, every individual interacts with every other individual and the payoff of all interactions is evaluated according to Eq. 1. The total payoff of all individuals is calculated. For the next round, individuals produce offspring proportional to their payoff. Children learn the language of their parents by sampling their responses to each object. The figure shows the population average of the P matrix; the radius of the circle is proportional to the corresponding entry. Initially, all entries are about 0.25. After five generations some initial associations begin to form, which become stronger during subsequent rounds. At t = 20, each object is associated with one signal. Signal 1, however, is used for two objects, whereas signal 5 is not used at all. This solution is suboptimum but evolutionarily stable. Interestingly, errors during language acquisition increase the likelihood of reaching the optimum solution (M.A.N., J. Plotkin, and D.C.K., unpublished work).

For m = n, the evolutionary optimum is reached if each object is associated with one specific sound and vice versa. Evolution does not always lead to the optimum solution, but certain suboptimum solutions, in which the same signal is used for two (or more) objects, can be evolutionarily stable.
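To make the gap between an optimum and a suboptimum language concrete, the sketch below compares the self-payoff $F(L,L)$ of a one-to-one language with one in which two objects share a sound. The specific object-to-sound assignment and the listener's even split between objects sharing a sound are assumptions chosen for illustration; the sketch does not test evolutionary stability.

```python
def self_payoff(P, Q):
    """F(L, L) = sum over objects i and sounds j of p_ij * q_ji."""
    return sum(P[i][j] * Q[j][i]
               for i in range(len(P)) for j in range(len(P[0])))

n = 5  # five objects and five sounds

# Optimum: object i is paired with sound i.
P_opt = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
Q_opt = [row[:] for row in P_opt]  # the identity is its own transpose

# Suboptimum: objects 0 and 1 share sound 0, and sound 4 is never used
# (a hypothetical assignment in the spirit of the Fig. 1 outcome).
sound_for_object = [0, 0, 1, 2, 3]
P_sub = [[1.0 if sound_for_object[i] == j else 0.0 for j in range(n)]
         for i in range(n)]

# Assumed listener: splits evenly among the objects that share a sound.
Q_sub = [[0.0] * n for _ in range(n)]
for j in range(n):
    users = [i for i in range(n) if sound_for_object[i] == j]
    for i in users:
        Q_sub[j][i] = 1.0 / len(users)

F_opt = self_payoff(P_opt, Q_opt)
F_sub = self_payoff(P_sub, Q_sub)
```

Under these assumptions the shared sound costs exactly one unit of payoff: the two objects that share it are each understood half the time.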

A Linguistic Error Limit.

Below, we discuss two essential extensions of the basic model. First, we include the possibility of errors in perception: early in the evolution of communication, signals are likely to have been noisy and can therefore be mistaken for each other (39). We denote the probability of interpreting sound i as sound j by uij. The payoff for L communicating with L′ is now given by

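$$F(L,L') = \frac{1}{2}\sum_{i=1}^{n}\sum_{j=1}^{m}\left(p_{ij}\sum_{k=1}^{m}u_{jk}\,q'_{ki} + p'_{ij}\sum_{k=1}^{m}u_{jk}\,q_{ki}\right) \tag{2}$$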

The probabilities $u_{ij}$ can be expressed in terms of similarities between sounds. We denote the similarity between sounds $i$ and $j$ by $s_{ij}$ and obtain $u_{ij} = s_{ij}/\sum_{k=1}^{m} s_{ik}$. As a simple example, we assume the similarity between two different sounds is constant and given by $s_{ij} = \epsilon$, whereas $s_{ii} = 1$. In this case, the probability of correct understanding is $u_{ii} = 1/[1 + (m-1)\epsilon]$. The maximum payoff for a language with $m$ sounds (when communicating with another individual who is using the same language) is given by $F(m) = \sum_{i=1}^{m} u_{ii}$, and therefore $F(m) = m/[1 + (m-1)\epsilon]$. The fitness, $F$, is an increasing function of $m$ converging to a maximum value of $1/\epsilon$ for large values of $m$. Without error, we would have $F(m) = m$. Thus, in the presence of error, the maximum capacity of information transfer is limited and equivalent to what could be achieved by $1/\epsilon$ sounds without error.
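The saturation of the payoff can be checked numerically. The sketch below builds the confusion probabilities by row-normalizing a similarity matrix and sums the diagonal; the helper names and the value `eps = 0.1` are illustrative assumptions.

```python
def confusion_matrix(S):
    """Row-normalize similarities: u_ij = s_ij / sum_k s_ik."""
    U = []
    for row in S:
        total = sum(row)
        U.append([s / total for s in row])
    return U

def max_payoff(m, eps):
    """F(m) = sum_i u_ii when distinct sounds have constant similarity eps."""
    S = [[1.0 if i == j else eps for j in range(m)] for i in range(m)]
    U = confusion_matrix(S)
    return sum(U[i][i] for i in range(m))

eps = 0.1  # illustrative similarity, not a value from the text
payoffs = {m: max_payoff(m, eps) for m in (1, 2, 10, 100, 1000)}
```

The values in `payoffs` grow with `m` but stay below `1 / eps`, which is the error limit described above.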

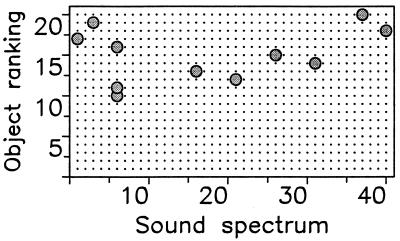

Next, we assume that objects can have different values, $a_i$. (For example, when a leopard represents a higher risk than a python, the word “leopard” may be more valuable than “python.”) We have $F(m) = [1 + (m-1)\epsilon]^{-1}\sum_{i=1}^{m} a_i$, where the objects are ranked according to their value, $a_1 > a_2 > \dots$. This fitness function can adopt a maximum value for a certain number $m$ and decline if the value of $m$ becomes too big. In this case, natural selection will limit the number of sounds used in the language and consequently also limit the number of objects described. Fig. 2 shows a computer simulation of this extended evolutionary language game. The final outcome is a language that uses only a subset of all available sounds to describe the most valuable objects.
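A small worked example shows how an interior optimum can arise once objects differ in value. The object values and the similarity below are made up for illustration, and the function names are our own.

```python
def payoff_with_values(values, m, eps):
    """F(m): the m most valuable objects, each named by its own sound."""
    ranked = sorted(values, reverse=True)
    return sum(ranked[:m]) / (1.0 + (m - 1) * eps)

def best_repertoire(values, eps):
    """Number of sounds m (1..n) that maximizes F(m)."""
    return max(range(1, len(values) + 1),
               key=lambda m: payoff_with_values(values, m, eps))

values = [1.0, 0.9, 0.1]  # hypothetical object values
eps = 0.5                 # hypothetical similarity between sounds

best_m = best_repertoire(values, eps)
curve = [payoff_with_values(values, m, eps) for m in (1, 2, 3)]
```

With these numbers, naming the third, low-value object lowers the total payoff, so the best language uses two sounds and ignores that object.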

Figure 2.

Evolution of protolanguage in the context of misunderstanding. There are 20 objects and 40 sounds, but evolution leads to a language that uses only 9 sounds to describe 11 objects. Sounds are represented on a linear spectrum by numbers between 0 and 1. The similarity between two sounds, xi and xj, is given by sij = exp(−α|xi − xj|). For the computer simulation, m sounds are randomly chosen from a uniform distribution on (0,1). Objects have different values, ai, chosen from a uniform distribution on (0,1). The payoff for language L against L′ is given by Eq. 2. In this simulation, we do not model individual players but simply evaluate whether or not a mutant language will be able to invade and replace the existing language. The simulation is started with a language L whose active matrix P has random elements pij sampled from a uniform distribution on (0,1) and normalized such that all rows have a sum of one. The passive matrix Q is derived from the active matrix: qji = pij/Σipij. Then a mutant language L′ is produced. If F(L′,L) < F(L,L), then L′ cannot invade. Another mutant is generated. If F(L′,L′) > F(L′,L) > F(L,L), then L′ can invade and take over. The original language L is replaced by L′. The simulation searches for mutants that can replace L′. (It is also possible, though very rare, that F(L′,L) > F(L,L), but F(L′,L′) < F(L,L′). In this case, there would be a stable equilibrium between L and L′ and the simulation would stop.) The figure shows the final outcome after 100,000 different mutants, but the basic structure of the language is already present after 4,000 mutants. The x axis indicates the different sounds sorted according to their xi value; the y axis indicates objects ranked according to their ai value (20 being the most valuable). The 11 most valuable objects (y axis) are associated with nine different sounds (x axis). One sound is used for three different objects (including the two least valuable objects described by the language). 
The sounds seem ideally selected to minimize their similarity. Parameter values: α = 2, n = 20, m = 40; mutation process: all pij values between 0 and 1 are randomly changed within an interval ± 0.01; with probability 0.001, any pij is changed to 0 or 1; with probability 0.001, a whole column or a whole row of the P matrix is changed into 0. The mutation rules are designed to provide enough chance to gain or lose sounds or objects.

The principal result of the extended model, including misunderstanding, is that of a “linguistic error limit”: the number of distinguishable sounds in a protolanguage, and therefore the number of objects that can be accurately described by this language, is limited. Adding new sounds increases the number of objects that can be described but at the cost of an increased probability of making mistakes; the overall ability to transfer information does not improve. This obstacle in the evolution of language has interesting parallels with the error-threshold concept of molecular evolution (40). The origin of life has been described as a passage from limited to unlimited hereditary replicators, whereas the origin of language as a transition from limited to unlimited semantic representation (41).

Word Formation.

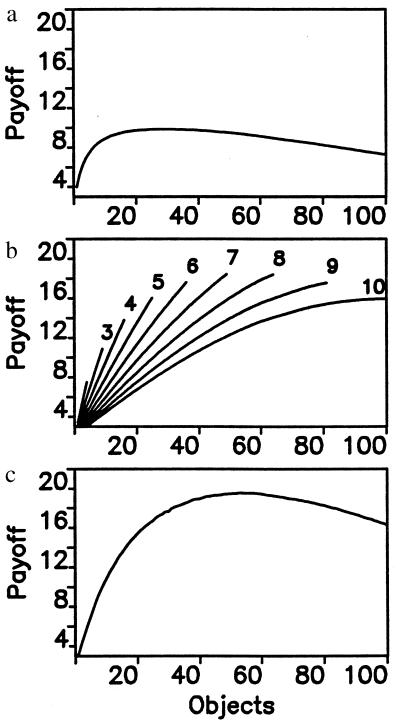

The way to overcome the error limit is by combining sounds into words. Words are strings of sounds. As before, we define the fitness of a language as the total amount of successful information transfer. The maximum fitness is obtained by summing over all probabilities of correct understanding of words. For a language with $m$ sounds (phonemes) and a word-length $l$, the maximum payoff is given by $F(m,l) = m^{l}[1 + (m-1)\epsilon]^{-l}$, which converges to $1/\epsilon^{l}$ for large values of $m$, thus allowing a much greater potential for communication. This equation assumes that understanding of a word is based on the correct understanding of each individual sound.
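The effect of word length on the attainable payoff can be tabulated directly from the formula for $F(m,l)$. This is a sketch with illustrative parameter values; `word_payoff` is a name introduced here.

```python
def word_payoff(m, l, eps):
    """F(m, l) = m**l / (1 + (m - 1) * eps)**l for words of l phonemes."""
    return (m / (1.0 + (m - 1) * eps)) ** l

eps = 0.2  # illustrative similarity between distinct phonemes
single_sounds = word_payoff(5, 1, eps)   # five sounds, no word formation
three_letter = word_payoff(5, 3, eps)    # same five sounds, words of length 3
near_limit = word_payoff(10**6, 2, eps)  # approaches 1 / eps**2 from below
```

With the same five sounds, moving from single sounds to three-phoneme words raises the ceiling from below `1 / eps` to below `1 / eps**3`.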

More realistically, we may assume that correct understanding of a word is based (to some extent) on matching the perceived string of phonemes to known words of the language. Consider a language with $N$ words, $w_i$, which are strings of phonemes: $w_i = (x_{i1}, x_{i2}, \dots, x_{il})$. For $m$ different phonemes there are $m^{l}$ possible words. A particular language will contain a subset of these words, $N \le m^{l}$. We define the similarity between two words as the product of the similarities between individual phonemes in corresponding positions. The similarity between word $w_i$ and $w_j$ is $S_{ij} = \prod_{k=1}^{l} s_{ij}^{(k)}$, where $s_{ij}^{(k)}$ denotes the similarity between the $k$-th phonemes of words $w_i$ and $w_j$. The probability of correctly understanding word $w_i$ is $P_i = 1/\sum_{j=1}^{m^{l}} S_{ij}\sigma_j$, where $\sigma_j = 1$ if word $w_j$ is part of the language, and $\sigma_j = \sigma$ if word $w_j$ is not part of the language. The parameter $\sigma$ is a number between 0 and 1 and specifies the degree to which word recognition is based on correct understanding of every phoneme versus understanding of the whole word. If $\sigma = 0$, then each word is only compared with every other word that is a part of the language; correct understanding of a word consists in comparing the perceived word with all other words that are part of the lexicon. An implicit assumption here is that individuals have perfect knowledge of the whole lexicon. If $\sigma = 1$, then every word is compared with every other possible word that can be formed by combining the phonemes. Correct understanding of a word requires a correct identification of each individual phoneme. The listener does not need to have a list of the lexicon. A value of $\sigma$ between 0 and 1 blends these two possibilities. In this case, recognition of a word is to some extent based on identification of each individual phoneme and to some extent on identification of the word selected from the list of all words that are contained in the language. The maximum payoff for such a language is given by $F = \sum_{i=1}^{N} P_i$ (Fig. 3).
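The role of $\sigma$ is easiest to see by brute force on a tiny example. The sketch below enumerates every possible word, assumes a constant similarity `eps` between distinct phonemes (a simplification of the general $s_{ij}^{(k)}$), and evaluates the recognition probabilities at the two extremes of $\sigma$. The lexicon and parameter values are hypothetical.

```python
from itertools import product

def recognition_probabilities(lexicon, m, l, eps, sigma):
    """P_i = 1 / sum_j S_ij * sigma_j, summed over all m**l possible words."""
    lex = set(lexicon)
    probs = {}
    for w in lexicon:
        denom = 0.0
        for v in product(range(m), repeat=l):
            # Word similarity: product of per-position phoneme similarities.
            S = 1.0
            for a, b in zip(w, v):
                S *= 1.0 if a == b else eps
            # Words outside the lexicon are down-weighted by sigma.
            denom += S * (1.0 if v in lex else sigma)
        probs[w] = 1.0 / denom
    return probs

m, l, eps = 3, 2, 0.2              # 3 phonemes, words of length 2
lexicon = [(0, 0), (1, 1), (2, 2)]  # hypothetical 3-word lexicon

by_phoneme = recognition_probabilities(lexicon, m, l, eps, sigma=1.0)
by_lexicon = recognition_probabilities(lexicon, m, l, eps, sigma=0.0)
F_phoneme = sum(by_phoneme.values())
F_lexicon = sum(by_lexicon.values())
```

With `sigma = 1.0` each word is recognized with probability $1/[1 + (m-1)\epsilon]^{l}$, as in the phoneme-by-phoneme formula. With `sigma = 0.0` only the two other lexicon words compete, so recognition is considerably better.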

Figure 3.

Word formation can overcome the error limit. Suppose there are $n = 100$ objects with values $a_i$ uniformly distributed between 0 and 1. (a) Without word formation, each object is described by one sound. The similarity between different sounds is $\epsilon$. The maximum payoff of a language with $m$ sounds is given by $F = [1 + (m-1)\epsilon]^{-1}\sum_{i=1}^{m} a_i$, where the $a_i$ are ranked in descending order. In our example, $\epsilon = 0.2$. The maximum payoff is obtained if the 28 most valuable objects are described by individual sounds. All other objects are ignored. (b) As a simple example of word formation, we consider that each word consists of two sounds. The maximum payoff of a language with $m$ sounds is $F = [1 + (m-1)\epsilon]^{-2}\sum_{i=1}^{M} a_i$, where $M$ is the smaller of $m^{2}$ or $n$. In this model, correct identification of a word requires correct understanding of each individual sound. The figure shows the payoff curves for languages that have $m = 2$ to 10 different sounds. For each language, it is best to make use of the whole repertoire, that is, to use all $m^{2}$ combinations as words. The maximum payoff is achieved for $m = 7$, which leads to 49 words describing 49 objects. (c) A more sophisticated theory of word recognition assumes that correct understanding of a word is based on matching the perceived sequence of sounds to all combinations that are thought to be part of the lexicon of the language. For our example, we find that the maximum fitness is obtained for $m = 11$ sounds, when 52 of the 121 possible combinations are used as words. A smaller or higher number of sounds, $m$, leads to a lower maximum fitness. If longer words are admitted, then both cases (b and c) lead to optimum solutions that describe a larger fraction of all objects.

Combining sounds into words leads to an essentially unlimited potential for different words. This step in language evolution can be seen as a transition from an analogue to a digital system. The repertoire is not increased by adding more sounds, but by combining a set of easily distinguishable sounds into words. In all existing human languages, only a small subset of the sounds producible by the vocal apparatus are employed to generate a large number of words. These words are then used to construct an unlimited number of sentences. The crucial difference between word and sentence formation is that the first consists essentially of memorizing all (relevant) words of a language, whereas the second is based on grammatical rules. We do not memorize a list of all possible sentences.

The Evolution of Basic Grammatical Rules.

The next step in language evolution is the emergence of a basic syntax or grammar. Recall that by combining sounds into words, the protolanguage achieves an almost limitless potential for generating words with the power of describing a large number of objects or actions. Grammar emerges in the attempt to convey more information by combining these words into phrases or sentences. Simply naming an object will be less valuable than naming it and describing its action. (A leopard can be stalking, in which case it is a serious risk, or merely sleeping and thereby posing a lesser risk.) There is an obvious advantage to describing both objects and actions. Suppose there are n objects and h actions; there are nh possible combinations, but only a fraction, φ, of them may be relevant (for example: leopard runs; monkey runs; but not banana runs). A “nongrammatical” approach would be to conceive N = φnh different words for all combinations. A “grammatical” approach would be to have n words for objects (i.e., nouns) and h words for actions (i.e., verbs). Let us compare the fitness of grammar and nongrammar.

Again, we will include errors, this time as a probability to mistake words, which can include acoustic misunderstanding and/or incorrect assignment of meaning. The maximum fitness of a nongrammatical language with N different words is Fng = N/[1 + (N − 1)ξ]. The maximum fitness for a grammatical language is Fg = N/{[1 + (n − 1)ξ][1 + (h − 1)ξ]}. Here, ξ is the similarity between words. In the nongrammatical language, each event is described by one word, and correct communication requires that this word is distinguished from N − 1 other words. The grammatical language uses two words for every event: we can say that nouns describe objects and verbs describe actions. Each noun has to be distinguished from n − 1 other nouns, and each verb from h − 1 other verbs. Whether grammar wins in the evolutionary language game depends on the number of combinations of nouns and verbs that describe relevant events. Fg > Fng leads to

$$\xi < \xi_{\max} = \frac{N - n - h + 1}{(n-1)(h-1)}, \qquad N = \phi n h \tag{3}$$

If there is no possibility of mistakes (ξ = 0), then there is no difference between grammar and nongrammar. If there are too many mistakes (ξ > ξmax), then grammar is disadvantageous. Between these two limits, there is a “grammar zone” (0 < ξ < ξmax), where grammar has a higher fitness than nongrammar.
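The grammar zone can be explored numerically. Requiring $F_g > F_{ng}$ and solving for $\xi$ gives $\xi < (N - n - h + 1)/[(n-1)(h-1)]$; the sketch below uses that algebra as the threshold and compares the two fitness functions on either side of it. The parameter values are illustrative assumptions.

```python
def fitness_nongrammar(N, xi):
    """One word per event: each word competes with N - 1 others."""
    return N / (1.0 + (N - 1) * xi)

def fitness_grammar(N, n, h, xi):
    """Noun plus verb: n - 1 competing nouns and h - 1 competing verbs."""
    return N / ((1.0 + (n - 1) * xi) * (1.0 + (h - 1) * xi))

def xi_max(N, n, h):
    """Upper edge of the grammar zone, from solving F_g > F_ng for xi."""
    return (N - n - h + 1) / ((n - 1) * (h - 1))

n, h, phi = 10, 10, 0.5  # hypothetical numbers of nouns, verbs, relevant fraction
N = int(phi * n * h)     # 50 relevant events

threshold = xi_max(N, n, h)
inside = (fitness_grammar(N, n, h, 0.1), fitness_nongrammar(N, 0.1))
outside = (fitness_grammar(N, n, h, 0.5), fitness_nongrammar(N, 0.5))
```

For these values the threshold is 31/81, roughly 0.38: grammar has the higher fitness at `xi = 0.1` and the lower fitness at `xi = 0.5`.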

From Eq. 3, it follows that a necessary condition for grammar to win is

$$N \geq n + h \tag{4}$$

The number of events must exceed (or equal) the sum of nouns and verbs that can be constructed to describe these events. In other words, a grammatical system is favored only if the number of relevant sentences (that individuals want to communicate to each other) exceeds the number of words that make up these sentences. Note that the main difference between grammar and nongrammar is not to use one or two words for each event, but the number (and types) of rules that need to be remembered for correct communication. Grammar can be seen as a simplified rule system that reduces the chances of mistakes in implementation and comprehension and is therefore favored by natural selection in a world where mistakes are possible.

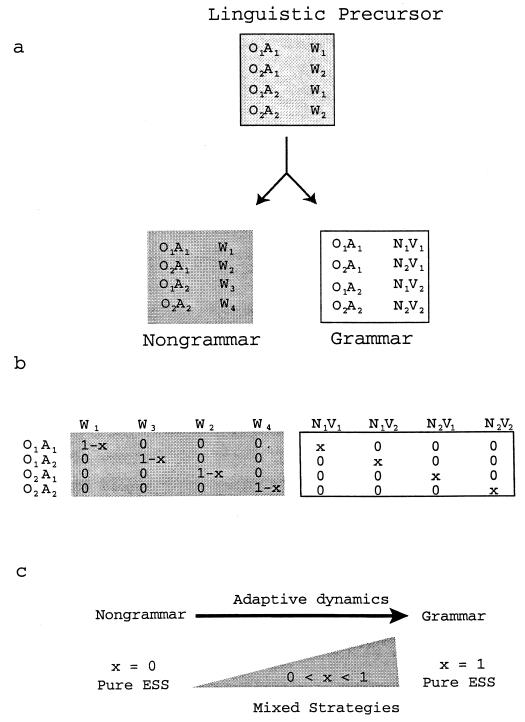

Thus far, we have specified only those conditions conducive for grammar to have a higher fitness than nongrammar. We can also formulate a model describing how grammar can evolve gradually by natural selection (see Fig. 4 and Appendix).

Figure 4.

Grammar can evolve by natural selection. (a) Imagine a simple protolanguage describing two objects, O1 and O2, by two words, W1 and W2. Suppose each object can occur with two actions, A1 and A2. Thus, there are four events, O1A1, O2A1, O1A2, and O2A2, that are described by two words. However, simply specifying the object may be less valuable than specifying the object and describing its action. Therefore, the language can be improved by distinguishing between all 4 events. This improvement can happen in two ways: (i) a nongrammatical approach is to specify four words, W1–W4, for these events; (ii) a grammatical approach is to have two words, N1 and N2 (nouns), for the objects and two words, V1 and V2 (verbs), for the actions. (b) The active matrix, P, for a range of mixed strategies that use the grammatical or the nongrammatical approach with probability x. The pure strategies are nongrammar (x = 0) and grammar (x = 1). (c) Both nongrammar and grammar are evolutionarily stable strategies (ESS), but every mixed strategy, x, is dominated by all mixed strategies, y, with y > x. The adaptive dynamics (42–44) flow from nongrammar to grammar.

The model can be extended in many ways. For example, events can consist of one action and several objects. Objects may be associated with properties, giving rise to adjectives. Events can have similar associations, giving rise to adverbs. The essential result is that a grammatical language that has words for each component of an event receives a higher payoff in the evolutionary language game than a nongrammatical language that has words (or a string of words) for the whole event. In this context, the grammar of human languages evolved to reflect the “grammar of the real world” (that is, the underlying logic of how objects relate to actions and other objects).

Conclusions.

In this paper, we have outlined simple mathematical models that provide new insights into how natural selection can guide three fundamental, early steps in the evolution of human language.

The question concerning why only humans evolved language is hard to answer. Interestingly, however, our models do not suggest that a protolanguage will evolve under all circumstances but outline several obstacles impeding its emergence. (i) In the simplest model (Fig. 1), signal–object associations form only when information transfer is beneficial to both speaker and listener. Otherwise, the evolution of communication requires cooperation between individuals. Thus, cooperation may represent an important prerequisite for the evolution of language. (ii) In the presence of errors, only a very limited communication system describing a small number of objects can evolve by natural selection (Fig. 2). We believe that this error limit is where most animal communication came to a stop. The obvious means to overcome this limit would be to use a larger variety of sounds, but this approach leads into a cul-de-sac. A completely different approach is to restrict the system to a subset of all possible sounds and to combine them into “words” (Fig. 3). (iii) Finally, although grammar can be an advantage for small systems (Fig. 4), it may become necessary only if the language refers to many events. Thus, the need for grammar arises only if communication about many different events is required: a language must have more relevant sentences than words. It is likely that for most animal communication systems, the inequality (4) is not fulfilled.

We view this paper as a contribution toward formalizing the laws that governed the evolution of the primordial human languages. There are, of course, many important and more complex properties of human language that we have not considered here and that should ultimately be part of an evolutionary theory of language. We argue, however, that any such theory has to address the basic questions of signal–object association, word formation, and the emergence of a simple syntax or grammar, for these are the atomic units that make up the edifice of human language.

Acknowledgments

Thanks to Dominic Welsh, Sebastian Bonhoeffer, Lindi Wahl, Nick Grassly, and Robert May for stimulating discussion. Support from The Alfred P. Sloan Foundation, The Florence Gould Foundation, The Ambrose Monell Foundation, and the J. Seward Johnson Trust is gratefully acknowledged.

Appendix

Consider two objects, O1 and O2, that can cooccur with two actions, A1 and A2. Thus, there are four events, O1A1, O2A1, O1A2, and O2A2. The nongrammatical approach is to describe each event with a separate word, W1–W4. The grammatical approach is to have separate words for objects, N1 and N2, and actions, V1 and V2. Consider mixed strategies that use the grammatical system with probability x. The active matrix, P, is given by

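$$P = \begin{pmatrix}
1-x & 0 & 0 & 0 & x & 0 & 0 & 0 \\
0 & 1-x & 0 & 0 & 0 & x & 0 & 0 \\
0 & 0 & 1-x & 0 & 0 & 0 & x & 0 \\
0 & 0 & 0 & 1-x & 0 & 0 & 0 & x
\end{pmatrix}$$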

The rows correspond to the four events: O1A1, O2A1, O1A2, and O2A2. The columns correspond to the eight signals: W1, W2, W3, W4, N1V1, N2V1, N1V2, and N2V2. The pure strategies, x = 0 and x = 1, describe nongrammar and grammar, respectively. The passive matrix, Q, is obtained by replacing all nonzero entries in P by 1 (and transposing this matrix). Note that mixed strategies, 0 < x < 1, have eight nonzero entries, whereas pure strategies have only four nonzero entries in both P and Q. Thus, mixed strategies have the possibility to understand both grammar and nongrammar, whereas the two pure strategies do not understand each other. Finally, we include the possibility of errors, either in implementation or comprehension. The error matrix is given by

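$$U = \begin{pmatrix}
\eta_1 & \xi\eta_1 & \xi\eta_1 & \xi\eta_1 & 0 & 0 & 0 & 0 \\
\xi\eta_1 & \eta_1 & \xi\eta_1 & \xi\eta_1 & 0 & 0 & 0 & 0 \\
\xi\eta_1 & \xi\eta_1 & \eta_1 & \xi\eta_1 & 0 & 0 & 0 & 0 \\
\xi\eta_1 & \xi\eta_1 & \xi\eta_1 & \eta_1 & 0 & 0 & 0 & 0 \\
0 & 0 & 0 & 0 & \eta_2 & \xi\eta_2 & \xi\eta_2 & \xi^2\eta_2 \\
0 & 0 & 0 & 0 & \xi\eta_2 & \eta_2 & \xi^2\eta_2 & \xi\eta_2 \\
0 & 0 & 0 & 0 & \xi\eta_2 & \xi^2\eta_2 & \eta_2 & \xi\eta_2 \\
0 & 0 & 0 & 0 & \xi^2\eta_2 & \xi\eta_2 & \xi\eta_2 & \eta_2
\end{pmatrix}$$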

Here, $\xi$ is the similarity between words or the fraction of times a word is mistaken or misimplemented for another. We used $\eta_1 = 1/(1 + 3\xi)$ and $\eta_2 = 1/(1 + \xi)^2$. We assume that the nongrammatical one-word sentences are not confused with the grammatical two-word sentences. The error matrix specifies the crucial difference between grammar and nongrammar.

The system can be completely understood in analytic terms. The payoff for language $x$ communicating with language $y$ is given (with Eq. 2) by $F(x,y) = (2 - x - y)f_1 + (x + y)f_2$, where $f_1 = 4/(1 + 3\xi)$ and $f_2 = 4/(1 + \xi)^2$. These equations hold for $x$ and $y$ strictly between 0 and 1. Otherwise, we have $F(x,0) = F(0,x) = (2 - x)f_1$ and $F(x,1) = F(1,x) = (1 + x)f_2$. The payoffs for nongrammar and grammar are, respectively, $F(0,0) = 2f_1$ and $F(1,1) = 2f_2$. Because $f_1 < f_2$ and $f_2 < 2f_1$, we have the following interesting dynamics: both $x = 0$ and $x = 1$ are evolutionarily stable strategies that cannot invade any other strategy, but every mixed strategy, $x$, is invaded and replaced by every other strategy, $y$, if $x < y < 1$. Thus, the adaptive dynamics flow toward grammar. Alternatively, one can also assume that the pure strategies can understand each other, that is, the passive matrices of all strategies are the same; in this case, grammar ($x = 1$) is the only evolutionarily stable strategy and can beat every other strategy.
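The closed-form payoffs can be checked against a direct matrix computation. The sketch below rests on several labeled assumptions. The off-diagonal entries of the error matrix are filled in so that each row sums to one, with a factor $\xi$ per mistaken word. The two directions of communication are summed rather than averaged, since that is the normalization under which the expressions quoted above come out; averaging, as in Eq. 2, halves every payoff without changing any comparison. All function names are our own.

```python
def build_P(x):
    """4 x 8 active matrix: event i uses word W_i w.p. 1 - x, its sentence w.p. x."""
    return [[(1.0 - x) if j == i else (x if j == i + 4 else 0.0)
             for j in range(8)] for i in range(4)]

def build_Q(P):
    """8 x 4 passive matrix: transpose of P with nonzero entries set to 1."""
    return [[1.0 if P[i][j] > 0 else 0.0 for i in range(4)] for j in range(8)]

def build_U(xi):
    """8 x 8 error matrix; one-word and two-word signals are never confused."""
    eta1 = 1.0 / (1.0 + 3.0 * xi)
    eta2 = 1.0 / (1.0 + xi) ** 2
    U = [[0.0] * 8 for _ in range(8)]
    for a in range(4):
        for b in range(4):
            U[a][b] = eta1 if a == b else xi * eta1
            # Sentences ordered N1V1, N2V1, N1V2, N2V2: bit 0 = noun, bit 1 = verb.
            # Assumed: one factor of xi per word that differs.
            differing = bin(a ^ b).count("1")
            U[a + 4][b + 4] = eta2 * xi ** differing
    return U

def payoff(x, y, xi):
    """Sum of both directions (no factor 1/2; see the note above)."""
    Px, Py = build_P(x), build_P(y)
    Qx, Qy = build_Q(Px), build_Q(Py)
    U = build_U(xi)
    total = 0.0
    for i in range(4):
        for j in range(8):
            for k in range(8):
                total += Px[i][j] * U[j][k] * Qy[k][i] + Py[i][j] * U[j][k] * Qx[k][i]
    return total

xi = 0.2  # illustrative word similarity
f1 = 4.0 / (1.0 + 3.0 * xi)
f2 = 4.0 / (1.0 + xi) ** 2
mixed = payoff(0.3, 0.6, xi)
row_sums = [sum(row) for row in build_U(xi)]
```

In this sketch only the diagonal of the error matrix enters the payoffs, so the assumed off-diagonal pattern does not affect the comparison with the closed forms. `payoff(0.0, 1.0, xi)` is zero, reflecting that the two pure strategies do not understand each other.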

References

- 1. Chomsky N. Language and Mind. New York: Harcourt Brace Jovanovich; 1972.

- 2. Pinker S. The Language Instinct. New York: Morrow; 1994.

- 3. Eco U. The Search for the Perfect Language. London: Fontana; 1995.

- 4. Seyfarth R, Cheney D, Marler P. Science. 1980;210:801–803. doi: 10.1126/science.7433999.

- 5. Burling R. Curr Anthropol. 1989;34:25–53.

- 6. Cheney D, Seyfarth R. How Monkeys See the World. Chicago: Univ. of Chicago Press; 1990.

- 7. Greenberg J H. Language, Culture and Communication. CA: Stanford Univ. Press; 1971.

- 8. Cavalli-Sforza L L, Cavalli-Sforza F. The Great Human Diasporas. Reading, MA: Addison–Wesley; 1995.

- 9. Newport E. Cogn Sci. 1990;14:11–28.

- 10. Bates E. Curr Opin Neurobiol. 1992;2:180–185. doi: 10.1016/0959-4388(92)90009-a.

- 11. Hurford J R. Cognition. 1991;40:159–201. doi: 10.1016/0010-0277(91)90024-x.

- 12. Lieberman P. The Biology and Evolution of Language. Cambridge, MA: Harvard Univ. Press; 1984.

- 13. Nobre A, Allison T, McCarthy G. Nature (London) 1994;372:260–263. doi: 10.1038/372260a0.

- 14. Aboitiz F, Garcia R. Brain Res Rev. 1997;25:381–396. doi: 10.1016/s0165-0173(97)00053-2.

- 15. Hutsler J J, Gazzaniga M S. Neuroscientist. 1997;3:61–72.

- 16. Deacon T. The Symbolic Species. London: Penguin; 1997.

- 17. Cavalli-Sforza L L, Feldman M W. Cultural Transmission and Evolution: A Quantitative Approach. Princeton: Princeton Univ. Press; 1981.

- 18. Yasuda N, Cavalli-Sforza L L, Skolnick M, Moroni A. Theor Popul Biol. 1974;5:123–142. doi: 10.1016/0040-5809(74)90054-9.

- 19. Aoki K, Feldman M W. Proc Natl Acad Sci USA. 1987;84:7164–7168. doi: 10.1073/pnas.84.20.7164.

- 20. Aoki K, Feldman M W. Theor Popul Biol. 1989;35:181–194. doi: 10.1016/0040-5809(89)90016-6.

- 21. Cavalli-Sforza L L. Proc Natl Acad Sci USA. 1997;94:7719–7724. doi: 10.1073/pnas.94.15.7719.

- 22. Hurford J R. Lingua. 1989;77:187–222.

- 23. Von Frisch K. The Dance Language and Orientation of Bees. Cambridge, MA: Harvard Univ. Press; 1967.

- 24. Hauser M D. The Evolution of Communication. Cambridge, MA: Harvard Univ. Press; 1996.

- 25. Chomsky N. Rules and Representations. New York: Columbia Univ. Press; 1980.

- 26. Bickerton D. Language and Species. Chicago: Univ. of Chicago Press; 1990.

- 27. de Saussure F. Cours de Linguistique Générale. Paris: Payot; 1916.

- 28. Pinker S, Bloom P. In: The Adapted Mind: Evolutionary Psychology and the Generation of Culture. Barkow J, Cosmides L, Tooby J, editors. London: Oxford Univ. Press; 1992. pp. 451–493.

- 29. Dunbar R. Grooming, Gossip and the Evolution of Language. Cambridge, MA: Harvard Univ. Press; 1997.

- 30. MacLennan B. In: Artificial Life II: SFI Studies in the Sciences of Complexity. Langton C G, Taylor C D F, Rasmussen S, editors. Redwood City, CA: Addison–Wesley; 1992. pp. 631–658.

- 31. Hutchins E, Hazelhurst B. How to Invent a Lexicon: The Development of Shared Symbols in Interaction. London: UCL; 1995.

- 32. Akmajian A, Demers R A, Farmer A K, Harnish R M. Linguistics: An Introduction to Language and Communication. Cambridge, MA: MIT Press; 1997.

- 33. Hurford J R, Studdert-Kennedy M, Knight C. Approaches to the Evolution of Language. Cambridge, U.K.: Cambridge Univ. Press; 1998.

- 34. Parisi D. Brain Cogn. 1997;34:160–184. doi: 10.1006/brcg.1997.0911.

- 35. Steels L. Evol Commun J. 1997;1(1):1–34.

- 36. Oliphant M. BioSystems. 1996;37:31–38. doi: 10.1016/0303-2647(95)01543-4.

- 37. Maynard Smith J, Szathmary E. The Major Transitions in Evolution. New York: Freeman; 1995.

- 38. Maynard Smith J. Evolution and the Theory of Games. Cambridge, U.K.: Cambridge Univ. Press; 1982.

- 39. Smith W J. The Behavior of Communicating. Cambridge, MA: Harvard Univ. Press; 1977.

- 40. Eigen M, Schuster P. The Hypercycle: A Principle of Natural Self-Organisation. Berlin: Springer; 1979.

- 41. Szathmary E, Maynard Smith J. Nature (London) 1995;374:227–232. doi: 10.1038/374227a0.

- 42. Nowak M A, Sigmund K. Acta Appl Math. 1990;20:247–265.

- 43. Metz J A J, Geritz S A H, Meszena F G, Jacobs F J A, van Heerwaarden J S. In: Stochastic and Spatial Structures of Dynamical Systems. Van Strien S J, Verduyn Lunel S M, editors. Amsterdam: North Holland; 1996. pp. 183–231.

- 44. Hofbauer J, Sigmund K. Evolutionary Games and Replicator Dynamics. Cambridge, U.K.: Cambridge Univ. Press; 1998.

- 45. von Humboldt W. Ueber die Verschiedenheit des Menschlichen Sprachbaus. Bonn: Dummlers; 1836.