Abstract

BACKGROUND

Knowledge acquisition is a goal of residency and is measurable by in-training exams. Little is known about factors associated with medical knowledge acquisition.

OBJECTIVE

To examine associations of learning habits with medical knowledge acquisition.

DESIGN, PARTICIPANTS

Cohort study of all 195 residents who took the Internal Medicine In-Training Examination (IM-ITE) 421 times over 4 years while enrolled in the Internal Medicine Residency, Mayo Clinic, Rochester, MN.

MEASUREMENTS

Score (percent questions correct) on the IM-ITE adjusted for variables known or hypothesized to be associated with score using a random effects model.

RESULTS

When adjusting for demographic, training, and prior achievement variables, yearly advancement within residency was associated with an IM-ITE score increase of 5.1% per year (95%CI 4.1%, 6.2%; p < .001). In the year before examination, comparable increases in IM-ITE score were associated with attendance at two curricular conferences per week, score increase of 3.9% (95%CI 2.1%, 5.7%; p < .001), or self-directed reading of an electronic knowledge resource 20 minutes each day, score increase of 4.5% (95%CI 1.2%, 7.8%; p = .008). Other factors significantly associated with IM-ITE performance included: age at start of residency, score decrease per year of increasing age, −0.2% (95%CI −0.36%, −0.042%; p = .01), and graduation from a US medical school, score decrease compared to international medical school graduation, −3.4% (95%CI −6.5%, −0.36%; p = .03).

CONCLUSIONS

Conference attendance and self-directed reading of an electronic knowledge resource had statistically and educationally significant independent associations with knowledge acquisition that were comparable to the benefit of a year in residency training.

KEY WORDS: knowledge acquisition, in-training exam, UpToDate

INTRODUCTION

Medical knowledge is one of six general competencies endorsed by the Accreditation Council for Graduate Medical Education (ACGME).1 Standardized exams are well suited to the assessment of medical knowledge.

The Internal Medicine In-Training Examination (IM-ITE) is a multiple choice test used to self-assess medical knowledge of internal medicine residents during their 3-year training program. This national standardized exam developed jointly by the American College of Physicians, Association of Professors of Medicine, and the Association of Program Directors of Internal Medicine2 is administered each October to over 18,000 internal medicine residents.3 This validated exam is predictive of performance on the American Board of Internal Medicine Certification Examination (ABIM-CE).4–7 While some studies have attempted to assess the impact of specific learning habits on medical knowledge acquisition, they have demonstrated either no association or very small associations with ITE performance.8–20 Importantly, these studies did not account for the influence of prior test performance on subsequent test performance in the analyses of factors associated with medical knowledge acquisition.

The purpose of this study was to examine the impact of learning habits such as conference attendance and utilization of an electronic knowledge resource on medical knowledge acquisition as measured by the IM-ITE.

METHODS

Setting

The Mayo Clinic Internal Medicine Residency has approximately 48 categorical residents in each of 3 years of sequential training. All 195 residents from the 4 classes who took the IM-ITE in 2002 and 2003 formed the cohort for this study (see Tables 1 and 2). There were no major changes to the overall residency curriculum during the time of this study. Variables reported or speculated to influence IM-ITE performance were obtained from data on file with the internal medicine residency program and the human resources department of the Mayo Clinic, Rochester, MN (see Table 2). In the manner usual to this residency, an electronic card-swipe system was used to record attendance at 4 residency-required conferences each week: morbidity and mortality, grand rounds, and two core-curriculum conferences. United States Medical Licensing Exam Step 1 (USMLE-1) is the first of 3 standardized tests required for medical licensure in the United States. Most medical students take USMLE-1 after their second year of medical school. These scores were available for residents in our cohort and were obtained from data provided at the time of application to the residency program. Residents were required to take an objective structured clinical examination (OSCE) in which interpretation of 10 patients with physical exam abnormalities was scored on a 100-point scale.12 Class rank is an ordinal numbering of residents in each class based on their grades averaged over the number of residency rotations they have completed. National Resident Matching Program (NRMP) rank is an ordinal list by which medical students are assigned to residency programs after medical school. Information regarding usage of the electronic knowledge resource is described below.

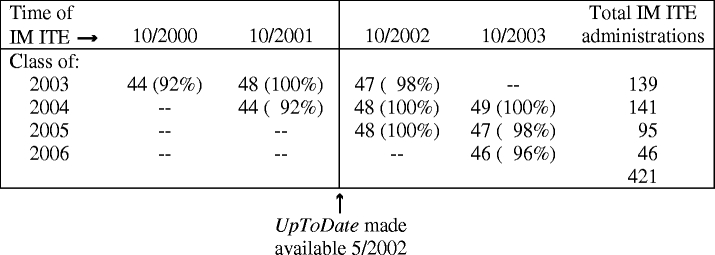

Table 1.

Cohort design: total residents (percent eligible) per class who took the IM-ITE during each year

Table 2.

Cohort and Covariate Characteristics

| Demographics (N = 195*) | | |
|---|---|---|
| Age (years) at entry into residency: mean (SD) | 28.4 (3.8) | |
| Gender, female: n (%) | 61 (31%) | |
| International medical graduates: n (%) | 34 (17%) | |
| Marital status: n (%) | | |
| Married | 106 (54%) | |
| Single | 85 (44%) | |
| Divorced | 4 (2%) | |
| Parenthood† | 47 (11%) | |
| IM-ITE Characteristics (N = 421‡) | Mean Score (SD) | |
| PGY1§ residents (n = 182) | 60% (7.8%) | |
| PGY2§ residents (n = 143) | 68% (7.1%) | |
| PGY3§ residents (n = 96) | 73% (6.8%) | |
| Other resident-specific data | Mean (SD) | Range |
| UpToDate hours of use prior to 2002 IM-ITE// | 17.1 (15.1) | 0 to 106 |
| UpToDate hours of use prior to 2003 IM-ITE// | 33.4 (28.0) | 0 to 142 |
| Conferences attended in year prior to IM-ITE | 59 (29) | 0 to 158 |
| USMLE Step 1 score¶ | 230 (15.3) | 187 to 266 |
| OSCE Score# | 43.8 (11.0) | 17.7 to 73.7 |

*While all residents were encouraged to take the exam each year, not all were available to do so due to absences (for instance: away electives, illnesses, transfers, etc). The total numbers of residents from each class who took the IM-ITE at least once were 48, 49, 52, and 46 for the classes of 2003, 2004, 2005, and 2006, respectively, for a combined total cohort of 195 residents.

†Parenthood is counted in this table if the resident had a child at the time of any IM-ITE, and the denominator for this percentage is 421, the number of test administrations; however, parental status at the time of each separate IM-ITE administration was used for analysis, as this changed in the cohort from year to year.

‡Total number of IM-ITE scores includes every IM-ITE score for each resident in the cohort, including those scores before 2002 (see text and Table 1).

§PGY, Post graduate year, equivalent to year in residency

//Before the 2002 IM-ITE, UpToDate was available to PGY2 and PGY3 residents from the initial subscription activation in May 2002 until IM-ITE administration in October 2002. Before the 2003 IM-ITE, UpToDate was available to PGY2 and PGY3 residents from November 2002 until IM-ITE administration in October 2003. UpToDate was available to PGY1 residents both years from entry into residency in July until IM-ITE administration in October (see also Table 1).

¶USMLE, United States Medical Licensing Examination

#OSCE, objective structured clinical examination

Also included in the model were the ordinally numbered data for calendar year of test administration, class rank, and National Resident Matching Program (NRMP) rank order (see text and Table 3).

Residents had opportunity to withhold IM-ITE scores or electronic knowledge resource usage information from analysis. All elected to be included in the study. This study was approved by the institutional review boards of the Mayo Clinic and the Johns Hopkins Bloomberg School of Public Health.

Electronic Knowledge Resource

UpToDate (Waltham, MA) is a proprietary electronic knowledge resource widely used by trainees and clinicians because of ease of use, broad coverage of multiple topics, and frequent updates.21–24 This learning resource was selected as a study parameter for 2 reasons. First, UpToDate is a common knowledge compendium that is used by many internal medicine residents. A survey of nearly 18,000 residents accompanying the IM-ITE in 2003 noted that UpToDate was the most frequently used electronic resource for clinical information (Schultz, October 4, 2005, personal communication). Second, usage of this learning resource is objectively and quantitatively measurable. To facilitate provision of CME credits, UpToDate tracks usage for each subscriber. Usage data are measured by the amount of time a user is logged on to UpToDate, with a maximum of 10 minutes recorded for any topic per accession. For example, if a topic is accessed for 3 minutes and then the user logs off or accesses another topic within the program, the measured time for the topic is 3 minutes. If a topic is accessed and then left open for more than 10 minutes, the measured time is 10 minutes. Printing a topic review results in 10 minutes of credit. Hence, 1 hour of credit represents reading or printing at least 6 topics.
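The crediting rule described above can be sketched in a few lines of Python. This is an illustrative reading of the rule, not UpToDate's actual tracking code: the function names and the session log are hypothetical, and we assume that printing a topic yields a flat 10 minutes regardless of viewing time.

```python
MAX_MINUTES_PER_ACCESS = 10  # cap per topic accession, as stated in the text


def credited_minutes(minutes_open, printed=False):
    """Minutes credited for one topic accession (hypothetical helper).

    Assumption: printing a topic gives a flat 10 minutes of credit.
    """
    if printed:
        return MAX_MINUTES_PER_ACCESS
    return min(minutes_open, MAX_MINUTES_PER_ACCESS)


def credited_hours(accesses):
    """Total credited hours for an iterable of (minutes_open, printed) pairs."""
    return sum(credited_minutes(m, p) for m, p in accesses) / 60


# Hypothetical session: a 3-minute read, a topic left open for 25 minutes,
# and one printed topic review.
session = [(3, False), (25, False), (1, True)]
print(credited_minutes(3))      # 3
print(credited_minutes(25))     # 10
print(credited_hours(session))  # (3 + 10 + 10) / 60 hours
```

Under this reading, six accessions that each reach the 10-minute cap sum to exactly 1 hour, which is why 1 hour of credit corresponds to at least 6 topics.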

Beginning in May 2002, the Mayo Clinic Internal Medicine Residency, Rochester, MN purchased individual subscriptions to UpToDate for all of its residents for internet use (see Table 1). Residents were provided with UpToDate without any expectations of when it should be used or what should be read. Thus, each individual resident’s use of the resource was self-directed. Neither the residents nor the investigators knew that UpToDate use was being electronically tracked before the 2003 IM-ITE. Residents had access to UpToDate for at most 6 months before the 2002 IM-ITE and 18 months before the 2003 IM-ITE. None of the authors has any affiliation with UpToDate, and none has received any financial support from UpToDate. Other than providing the requested data, UpToDate was not involved in the design of the study or the analysis of the data.

Internal Medicine In-Training Examination

All data were collected retrospectively after completion of the 2003 IM-ITE. Consistent with the recommendations of the developers of the IM-ITE that the test be used for resident and program self-assessment to guide further improvement, all the residents were offered the examination, but were advised not to study for the examination.2 For this cohort, IM-ITE scores were analyzed for all residents who took the IM-ITE in 2002 and/or 2003 from the residency classes graduating in 2003, 2004, 2005, and 2006. To properly account for previous performance, IM-ITE scores were collected from every previous administration of the examination for each resident in the cohort. For example, scores were available for the class of 2003 from 3 previous years: 2000, 2001, and 2002. While all residents were encouraged to take the exam each year, not all were available to do so due to absences (for instance: away electives, illness, transfers, etc). For the entire cohort of 195 residents, 421 separate IM-ITE scores were collected (see Tables 1 and 2). The fact that not all residents took the IM-ITE the same number of times is one reason for use of a random effects model (see “Statistical Analysis” below).

Scores on the IM-ITE are reported as percent questions correct and percentile, based on year of residency, normed for performance of other residents of similar training level across the country. For purposes of clarity, when we refer to IM-ITE score, we are referring to the percent questions correct.

Statistical Analysis

Data were collected electronically into a Microsoft Access database. Graphical and tabular displays were used to examine the data for unusual values and patterns of interest. Linear regression models were used to address the question of whether conference attendance and UpToDate use were associated with IM-ITE score among otherwise similar residents. Because multiple test scores from individual residents are likely correlated, random effects models were used to analyze the repeated measures of IM-ITE.25,26 Each resident can have his/her unique level of performance (random intercept). With at most 3 observations per resident, estimating other random effects, in particular a random slope, is difficult.25,26 We included fixed effects of all variables listed in Table 3, calendar year of test administration, and a random intercept that accounts for unmeasured factors that may influence IM-ITE performance and cause correlation among repeated scores for an individual, including the possibility of modified testing behavior based on previous test scores.11 In a random intercept model, the inferences about each predictor combine test-score differences within an individual resident over time with differences among all residents over time, giving more weight to the former when there is more variation in the residents’ levels of performance. We used robust variance estimates for the fixed effects coefficients to reduce the sensitivity of our findings to incorrect assumptions about the random effects.25 All analyses were conducted by 2 authors (FSM and SLZ) using generalized estimating equations as implemented in Stata 8.2 (StataCorp LP, College Station, TX). Results were considered statistically significant if p values were less than or equal to .05.
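For readers who prefer notation, the random intercept model described above can be written in its conventional form. The symbols are introduced here for illustration and do not appear in the original analysis: $Y_{ij}$ is the IM-ITE score (percent correct) of resident $i$ at administration $j$, $\mathbf{x}_{ij}$ collects the fixed-effect covariates (the Table 3 variables and calendar year), and $b_i$ is the resident-specific intercept. The normality assumptions shown are the usual textbook ones and are an assumption on our part.

$$
Y_{ij} = \beta_0 + \mathbf{x}_{ij}^{\top}\boldsymbol{\beta} + b_i + \varepsilon_{ij},
\qquad b_i \sim N(0, \sigma_b^2), \quad \varepsilon_{ij} \sim N(0, \sigma_\varepsilon^2)
$$

Under this form, two scores from the same resident share $b_i$ and therefore have correlation $\sigma_b^2 / (\sigma_b^2 + \sigma_\varepsilon^2)$, which is the within-resident correlation the random intercept is meant to capture. Each element of $\boldsymbol{\beta}$ corresponds to one adjusted coefficient of the kind reported in Table 3.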

Table 3.

Results expressed as change in IM-ITE score (percent items correct) associated with each covariate

| Covariate | % Score change | P value | 95% CI |
|---|---|---|---|
| Learning habit variables | | | |
| Conferences attended before IM-ITE (per 100 conferences attended) | 3.9 | <.001 | (2.1, 5.7) |
| UpToDate use in year prior to IM-ITE (per 100 hours of use) | 3.7 | .008 | (1.0, 6.4) |
| Prior medical training variables | | | |
| PGY (per increasing year of residency)* | 5.1 | <.001 | (4.1, 6.2) |
| US graduate vs IMG | −3.4 | .03 | (−6.5, −0.36) |
| Prior medical achievement variables | | | |
| USMLE step 1 score† (per unit USMLE score increase) | 0.19 | <.001 | (0.12, 0.27) |
| NRMP rank order (per unit rank)‡ | −0.025 | .03 | (−0.047, −0.002) |
| OSCE score§ (per unit OSCE score increase) | 0.049 | .32 | (−0.048, 0.15) |
| Class Rank (per unit rank)// | −0.045 | .20 | (−0.11, 0.024) |
| Demographic Variables | | | |
| Age at entry into residency (per year) | −0.20 | .01 | (−0.36, −0.042) |
| Gender (female vs male) | −0.35 | .68 | (−2.0, 1.3) |
| Single vs married | −1.5 | .08 | (−3.2, 0.2) |
| Divorced vs married | 6.1 | .20 | (−3.2, 16) |
| No children vs parenthood¶ | 1.9 | .055 | (−0.044, 3.8) |

Results from random effects linear regression analysis of associations of IM-ITE score controlling for multiple covariates. Each coefficient may be interpreted as the change in IM-ITE score associated with that covariate when adjusted for all other covariates in the model.

*PGY, Post graduate year, equivalent to year in residency

†USMLE, United States Medical Licensing Examination

‡NRMP, National Resident Matching Program

§OSCE, Objective structured clinical examination

//Class Rank is an ordinal numbering based on average faculty evaluations (i.e., grades) for all residency rotations.

¶Parenthood indicates whether or not the resident had any children at the time of the IM-ITE administration.

RESULTS

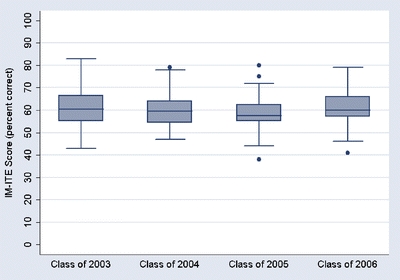

Calendar year of test administration was included in our a priori model in addition to the variables listed in Table 3. When adjusting for these variables, calendar year of test administration was not significantly associated with score: with 2000 as the referent year, the test scores from 2001, 2002, and 2003 demonstrated no significant association with year of administration. Similarly, when year of graduation was included in the model, there was no evidence of a “class” effect: with the class of 2003 as the referent, the classes of 2004, 2005, and 2006 demonstrated no significant association with score, and these additions did not change the coefficients or p values in any meaningful way for the other variables in the model. Additionally, the first year IM-ITE scores of residents in each class were similar (see Fig. 1). Taken together, these results demonstrated that there was no evidence of bias related to cohort, class, or baseline knowledge effects.

Figure 1.

Baseline level of knowledge similar between classes. Shown here are the standard box plots for the first year IM-ITE scores for each class of residents, which together form the overall cohort for the study. The upper and lower edges of each box represent the 75th and 25th percentiles for scores in each class. The horizontal line in the middle of each box represents the median score for each class. The vertical lines extending from each box reach to the next adjacent scores from the 75th and 25th percentile scores. If scores lie outside the adjacent values, they are represented by individual dots so that the full range of scores can be seen. The mean (SD) for the first year IM-ITE scores for the classes of 2003, 2004, 2005, and 2006 were 60 (8.1), 60 (7.7), 59 (7.6), and 61 (7.9), respectively. There was no statistically significant difference in these baseline score distributions as assessed by ANOVA, p = .67.

When adjusting for all variables associated with prior medical training, prior achievement, and demographics, attendance at residency conferences was associated with an increase in IM-ITE score of 3.9% per 100 conferences attended (95% CI 2.1%, 5.7%; p < .001). There were 4 conferences per week or approximately 200 conference opportunities per year. Thus, an IM-ITE score increase of 3.9% would be expected for attendance at one half of the conferences.

Similarly, use of UpToDate was associated with an increase in IM-ITE score of 3.7% per 100 hours of use (95%CI 1.0%, 6.4%; p = .008). Thus, UpToDate use on average of 20 minutes per day or 122 hours in the year before the IM-ITE would be associated with a score increase of 4.5% (95%CI 1.2%, 7.8%).
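The conversion from the per-100-hour coefficient to the 20-minutes-per-day figure is a linear rescaling of the point estimate and its confidence limits. A minimal sketch of that arithmetic follows; the function name is ours, and the only inputs are the values reported in Table 3.

```python
def scale_per_100(estimate_per_100, amount):
    """Rescale a coefficient reported per 100 units to a given amount."""
    return estimate_per_100 * amount / 100


# 20 minutes per day for one year, expressed in hours (about 122 hours)
hours_per_year = 20 / 60 * 365

# UpToDate coefficient and 95% CI limits from Table 3 (per 100 hours of use)
point = scale_per_100(3.7, hours_per_year)
low = scale_per_100(1.0, hours_per_year)
high = scale_per_100(6.4, hours_per_year)

print(f"{hours_per_year:.0f} h -> {point:.1f}% ({low:.1f}%, {high:.1f}%)")
# 122 h -> 4.5% (1.2%, 7.8%)
```

The same rescaling applied to the conference coefficient gives 3.9% for 100 conferences, that is, attendance at 2 of the 4 weekly conferences over a year.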

As expected, variables associated with better prior medical achievement were associated with higher IM-ITE scores. Specifically, each unit score increase on USMLE-1 was associated with an increase in IM-ITE score of 0.19% (95%CI 0.12%, 0.27%; p < .001). Similar increases in IM-ITE score were associated with USMLE-2 and USMLE-3 scores. However, these data were not included in the final model because unlike USMLE-1 scores, USMLE-2 and USMLE-3 scores were not uniformly available for all residents in our cohort due to the timing of these examinations in the training cycle.

For the remainder of the results of the analysis and comparisons of covariates, please see Table 3.

DISCUSSION

Medical knowledge acquisition is an important goal of residency training. Our findings suggest that self-directed reading of an electronic knowledge resource 20 minutes per day or conference attendance 2 times per week is associated with improved IM-ITE scores comparable to the score increase associated with 1 year of internal medicine training. These findings are based on quantitative data from objective sources. There was no reliance on survey data or self-reported study habits for this analysis. In addition to these new findings, this study adds to the literature by demonstrating a methodological approach to the relationship of medical knowledge acquisition with learning habits and variables hypothesized to affect test performance such as prior knowledge.

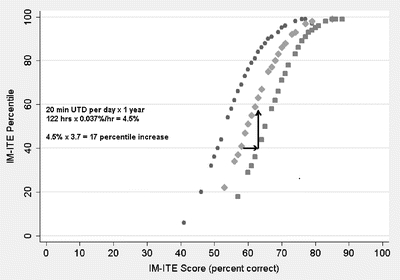

Our findings are educationally as well as statistically significant. Several authors have demonstrated the association between IM-ITE score and passing the ABIM-CE.4–7 Grossman, et al.5 found that IM-ITE scores above the 35th percentile had a positive predictive value of 89% for passing the ABIM-CE. As the correlation between IM-ITE score and percentile is linear below the 90th percentile with a slope of 3.7, utilizing UpToDate for 20 minutes per day would be associated with a 4.5% score increase, which would result in a percentile increase of 17% for those residents who initially scored below the 90th percentile (see Fig. 2). As the range of data for UpToDate use includes amounts greater than the 122 hours associated with use of 20 minutes per day in the year before the IM-ITE, this estimate is appropriate to the model (see Table 2). This level of use is above the mean for our cohort. However, the association of usage with IM-ITE score is proportionate to total usage; so, even the mean usage of 33.4 hours before the 2003 IM-ITE is associated with a score increase of 1.24%, which translates to a percentile increase of 4.6% for those with scores below the 90th percentile. This increase remains educationally significant especially for those who may be near the 35th percentile, which is associated with passing the ABIM-CE.5 It should be noted that mean usage is lower before the 2002 IM-ITE because UpToDate was only available for 6 months before this exam, whereas it was available for the full year before the 2003 IM-ITE (see “Methods” and Tables 1 and 2).
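The score-to-percentile conversions used in this discussion all follow from the linear relationship in Fig. 2 (slope 3.7 below the 90th percentile). The sketch below reproduces that arithmetic for the scenarios discussed in the text; the function and variable names are ours, and the conversion should not be applied to residents who already score at or above the 90th percentile.

```python
PERCENTILE_SLOPE = 3.7  # percentile points per 1% score, below the 90th percentile (Fig. 2)


def score_change(coef_per_100, amount):
    """Score change (%) for a Table 3 coefficient reported per 100 units."""
    return coef_per_100 * amount / 100


def percentile_change(score_delta):
    """Approximate percentile change for a given score change (%)."""
    return PERCENTILE_SLOPE * score_delta


scenarios = {
    "UpToDate, 20 min/day (4.5% score increase)": 4.5,
    "UpToDate, mean use before 2003 IM-ITE (33.4 h)": score_change(3.7, 33.4),
    "Conferences, mean attendance (59 per year)": score_change(3.9, 59),
}

for label, delta in scenarios.items():
    print(f"{label}: score +{delta:.2f}%, percentile +{percentile_change(delta):.1f}")
```

Rounded as in the text, these give percentile increases of about 17, 4.6, and 8.5 points, respectively.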

Figure 2.

IM-ITE percentile vs score relationship. IM-ITE scores are reported as percent items correct (score) as well as a national percentile comparing an individual’s score with those of other residents of similar year in residency throughout the nation. Shown here is the 2003 IM-ITE percentile versus score relationship. A similar relationship exists for the 2002 IM-ITE. Below the 90th percentile, the percentile to score relationship is linear (slope 3.7, r2 = .98). Figure text and arrows demonstrate the association of UpToDate (UTD) use for 1 year on IM-ITE score (%) and percentile for average use of 20 minutes (min) per day. Year in residency: 1 (●) 2 (♦) 3 (■)

Although many learners now use electronic knowledge resources such as UpToDate, some educators have raised concerns about the use of knowledge compendia in preference to primary searches of the literature to answer clinical questions.21,27,28 Our findings do not support the suggestion that use of these compendia may actually be detrimental to the acquisition of medical knowledge.29

Although seemingly intuitive, prior studies investigating the association between conference attendance and test performance have shown no effect.8,9 However, these studies were not sufficiently powered, nor did they control for other variables that could affect IM-ITE performance, most notably previous IM-ITE scores. We demonstrate that conference attendance 2 times per week for 1 year is another independent factor associated with IM-ITE increases similar to that of a year of clinical training. Since 100 conferences attended per year are within the range of our data, the estimate is appropriate to our model (see Table 2). Similar to the calculations above for UpToDate usage, the mean conference attendance of 59 per year in our cohort is associated with an IM-ITE score increase of 2.3%, which would translate to a percentile increase of 8.5% for those scoring below the 90th percentile. Thus, even conference attendance at the mean level is associated with educationally significant improvements on the IM-ITE, given that a score of 35th percentile is associated with passing the ABIM-CE.5

Not unexpectedly, we found a positive association between USMLE-1 scores and IM-ITE scores. More important is that when adjusting for this expected association, the other covariates in the model remained significantly associated with IM-ITE score, indicating that these associations cannot be accounted for just by innate test-taking ability.

The associations of increasing PGY and IMG status with increasing IM-ITE scores are similar in direction and magnitude to the associations previously reported.3 The better performance by IMGs has been speculated to reflect higher board scores, which may become the focus for residency selection committees who have fewer variables of comparison on which to make decisions. However, our study adjusted for USMLE-1 scores in all IMGs in our cohort, suggesting that better IM-ITE performance observed in IMGs is not due to previous board scores or test-taking ability. Thus, IMGs in US residency programs may have learning skills that are as yet unexplained. We speculate that at least some IMGs come from environments where self-directed learning habits are essential to their success and that continuation of these habits in residency is associated with greater knowledge acquisition. Furthermore, IMGs who train in the United States have a diverse spectrum of previous training, sometimes including completing residencies and practicing in other countries.30 This diversity and possible increased experience may account for some of the increase in IM-ITE scores seen in IMGs.

Several limitations should be considered when interpreting this study. First, this is not a randomized controlled trial. However, a randomized controlled trial would be difficult to perform in the residency with a significant possibility of unintended cross-over between groups. Appropriate observational studies are needed for this environment. Thus, we chose a cohort design at a leading medical center that included all eligible residents followed for 4 years during which a major online learning system was made available to them for the first time. Second, this study was conducted at a single institution, and the results may not necessarily be generalizable to other residencies. The characteristics of the curriculum and learning environment may be unique to each program and difficult to quantitate.31 Although this is a large program with 4 years of data acquisition, the high caliber of learners, as noted by relatively high USMLE-1 scores (mean 230), may set this cohort apart from some other programs. However, the range of USMLE-1 scores for this cohort spans the gamut of possible scores (SD = 15.3, range 187 to 266 with minimum passing score 180), so there is an adequate distribution of prior test performance to allow assessment of USMLE-1 score as a factor associated with IM-ITE score. The learning habits of this cohort could, in fact, be different from residents elsewhere. One might also speculate that demonstrating an association between learning behaviors and increased test performance would be more challenging in cohorts with a performance level that is already quite high. The magnitude of the results might actually be larger at institutions with learners who have less baseline knowledge (e.g., lower USMLE-1 scores).

The associations of other learning habits that are difficult to objectively quantitate were not captured in this study. For example, UpToDate usage was the only reading habit that we were able to measure. Although this is one of the most popular learning resources among internal medicine residents, many are likely to use other resources as well. Learning from textbooks, journals, and popular internal medicine review materials (e.g., American College of Physicians Medical Knowledge and Self-Assessment Program) may well have important associations with knowledge acquisition, but their use is not measurable and not captured in this study. We cannot comment on the relative value of any of these reading materials on knowledge acquisition. It is also possible that UpToDate use may be a marker for increased self-directed study of other materials which, although unmeasurable, may be positively associated with increased IM-ITE scores. Finally, the conferences available to residents at our institution are not available to residents at other institutions. While we suspect that conferences at our institution are similar in content to those at many other residency programs, this finding needs verification in other training environments.

It should be noted that this study focused on a single dimension of physician competency, i.e., medical knowledge. This is the competency most easily measured quantitatively through standardized exams but, admittedly, is by itself insufficient for being a good physician.

In conclusion, increased medical knowledge acquisition as measured by IM-ITE performance is associated with regular attendance at educational conferences and with use of an electronic knowledge resource. Previous evidence suggests that residents attempt to modify their learning behaviors based on IM-ITE scores.11 Our study suggests learning modalities that may be helpful in this regard. Furthermore, many residency programs are in the midst of redesign to adjust to duty hour limits, new ACGME requirements to measure competency, and new opportunities to enhance both quality and safety. This study supports the importance of self-directed learning habits in the training of physicians.

Acknowledgments

The authors wish to acknowledge Peter A. Bonis, MD of UpToDate for his assistance in providing the UpToDate usage data. They further wish to thank Dr. Bonis for providing these data without any input into study design, analysis, or publication, which has allowed them to maintain scientific independence for this study. Study findings were presented in oral format at the Association of Medical Education in Europe Annual Meeting, Genoa, Italy, September 14–18, 2006. There were neither internal nor external funding sources for this study.

Conflicts of Interest: None disclosed.

References

- 1. ACGME Outcome Project. ACGME General Competencies Vers. 1.3 (9.28.99). Available at: http://www.acgme.org/outcome/comp/compFull.asp#2. Accessed May 1, 2006.

- 2. Internal Medicine In-Training Examination®. Last update February 7, 2006. Available at: http://www.acponline.org/cme/itelg.htm?idxt. Accessed May 1, 2006.

- 3. Garibaldi RA, Subhiyah R, Moore ME, Waxman H. The in-training examination in internal medicine: an analysis of resident performance over time. Ann Intern Med. 2002;137(6):505–10.

- 4. Cantwell JD. The Mendoza line and in-training examination scores. Ann Intern Med. 1993;119(6):541.

- 5. Grossman RS, Fincher RM, Layne RD, Seelig CB, Berkowitz LR, Levine MA. Validity of the in-training examination for predicting American Board of Internal Medicine certifying examination scores. J Gen Intern Med. 1992;7(1):63–7.

- 6. Rollins LK, Martindale JR, Edmond M, Manser T, Scheld WM. Predicting pass rates on the American Board of Internal Medicine certifying examination. J Gen Intern Med. 1998;13(6):414–6.

- 7. Waxman H, Braunstein G, Dantzker D, et al. Performance on the internal medicine second-year residency in-training examination predicts the outcome of the ABIM certifying examination. J Gen Intern Med. 1994;9(12):692–4.

- 8. FitzGerald JD, Wenger NS. Didactic teaching conferences for IM residents: who attends, and is attendance related to medical certifying examination scores? Acad Med. 2003;78(1):84–9.

- 9. Cacamese SM, Eubank KJ, Hebert RS, Wright SM. Conference attendance and performance on the in-training examination in internal medicine. Med Teach. 2004;26(7):640–4.

- 10. Kolars J, McDonald F, Subhiyah R, Edson R. Knowledge base evaluation of medicine residents on the gastroenterology service: implications for competency assessments by faculty. Clin Gastroenterol Hepatol. 2003;1:64–8.

- 11. Hawkins RE, Sumption KF, Gaglione MM, Holmboe ES. The in-training examination in internal medicine: resident perceptions and lack of correlation between resident scores and faculty predictions of resident performance. Am J Med. 1999;106(2):206–10.

- 12. Dupras DM, Li JT. Use of an objective structured clinical examination to determine clinical competence. Acad Med. 1995;70(11):1029–34.

- 13. Shokar GS. The effects of an educational intervention for “at-risk” residents to improve their scores on the In-training Exam. Fam Med. 2003;35(6):414–7.

- 14. Ellis DD, Kiser WR, Blount W. Is interruption in residency training associated with a change in in-training examination scores? Fam Med. 1997;29(3):184–6.

- 15. Madlon-Kay DJ. When family practice residents are parents: effect on in-training examination scores. Fam Pract Res J. 1989;9(1):53–6.

- 16. Godellas CV, Hauge LS, Huang R. Factors affecting improvement on the American Board of Surgery in-training exam (ABSITE). J Surg Res. 2000;91(1):1–4.

- 17. Godellas CV, Huang R. Factors affecting performance on the American Board of Surgery in-training examination. Am J Surg. 2001;181(4):294–6.

- 18. Pollak R, Baker RJ. The acquisition of factual knowledge and the role of the didactic conference in a surgical residency program. Am Surg. 1988;54(9):531–4.

- 19. Shetler PL. Observations on the American Board of Surgery in-training examination, board results, and conference attendance. Am J Surg. 1982;144(3):292–4.

- 20. Bull DA, Stringham JC, Karwande SV, Neumayer LA. Effect of a resident self-study and presentation program on performance on the thoracic surgery in-training examination. Am J Surg. 2001;181(2):142–4.

- 21. Schilling LM, Steiner JF, Lundahl K, Anderson RJ. Residents’ patient-specific clinical questions: opportunities for evidence-based learning. Acad Med. 2005;80(1):51–6.

- 22. Peterson MW, Rowat J, Kreiter C, Mandel J. Medical students’ use of information resources: is the digital age dawning? Acad Med. 2004;79(1):89–95.

- 23. Fox GN, Moawad N. UpToDate: a comprehensive clinical database. J Fam Pract. 2003;52(9):706–10.

- 24. What is UpToDate? Available at: http://www.uptodate.com/service/faq.asp. Accessed May 1, 2006.

- 25. Diggle P, Heagerty P, Liang K, Zeger S. Analysis of Longitudinal Data, 2nd edn. Oxford, England: Oxford University Press; 2002.

- 26. Liang KY, Zeger SL. Regression analysis for correlated data. Annu Rev Public Health. 1993;14:43–68.

- 27. Green ML, Ruff TR. Why do residents fail to answer their clinical questions? A qualitative study of barriers to practicing evidence-based medicine. Acad Med. 2005;80(2):176–82.

- 28. Green ML, Ciampi MA, Ellis PJ. Residents’ medical information needs in clinic: are they being met? Am J Med. 2000;109(3):218–23.

- 29. Koonce TY, Giuse NB, Todd P. Evidence-based databases versus primary medical literature: an in-house investigation on their optimal use. J Med Lib Assoc. 2004;92(4):407–11.

- 30. Norcini JJ, Shea JA, Webster GD, Benson JA, Jr. Predictors of the performance of foreign medical graduates on the 1982 certifying examination in internal medicine. JAMA. 1986;256(24):3367–70.

- 31. Norcini JJ, Grosso LJ, Shea JA, Webster GD. The relationship between features of residency training and ABIM certifying examination performance. J Gen Intern Med. 1987;2(5):330–6.