Abstract

Background

To practice Evidence-Based Medicine (EBM), physicians must quickly retrieve evidence to inform medical decisions. Internal Medicine (IM) residents receive little formal education in electronic database searching, and have identified poor searching skills as a barrier to practicing EBM.

Objective

To design and implement a database searching tutorial for IM residents on inpatient rotations and to evaluate its impact on residents’ skill and comfort searching MEDLINE and filtered EBM resources.

Design

Randomized controlled trial. Residents randomized to the searching tutorial met for up to six 1-hour small-group sessions to search for answers to questions about current hospitalized patients.

Participants

Second- and 3rd-year IM residents.

Measurements

Residents in both groups completed an Objective Structured Searching Evaluation (OSSE), searching for primary evidence to answer 5 clinical questions. OSSE outcomes were the number of successful searches, search times, and techniques utilized. Participants also completed self-assessment surveys measuring frequency and comfort using EBM databases.

Results

During the OSSE, residents who participated in the intervention utilized more searching techniques overall (p < .01) and used PubMed’s Clinical Queries more often (p < .001) than control residents. Searching “success” and time per completed search did not differ between groups. Compared with controls, intervention residents reported greater comfort using MEDLINE (p < .05) and the Cochrane Library (p < .05) on post-intervention surveys. The groups did not differ in comfort using ACP Journal Club, or in self-reported frequency of use of any databases.

Conclusions

An inpatient EBM searching tutorial improved searching techniques of IM residents and resulted in increased comfort with MEDLINE and the Cochrane Library, but did not impact overall searching success.

KEY WORDS: evidence-based medicine, medical education, internship and residency, searching

INTRODUCTION

Evidence-based medicine (EBM) is an increasingly important component of graduate medical education and is recognized by the Accreditation Council for Graduate Medical Education (ACGME), the Institute of Medicine (IOM), and others as fundamental to residency training. Practicing EBM is a multistep process, which involves asking clinical questions about patients, searching for the best available evidence, critically appraising the validity of the evidence, and applying the evidence to patient care.1 In the past, most EBM education focused on critical appraisal and occurred away from direct clinical care in the setting of journal clubs or other free-standing educational activities. More recently, experts have begun to deemphasize critical appraisal skills2,3 in favor of skills that foster “real-time” evidence-based practice during clinical care.

The ability to efficiently search databases to obtain evidence to guide clinical care in real-time is crucial to practicing EBM. Whereas immediate evidence retrieval can impact more than 50% of patient care decisions,4,5 trainees rarely consult evidence-based resources to answer their clinical questions.6,7 Residents have identified both insufficient time and poor searching skills as barriers to evidence-based practice.8

Several groups have introduced curricula to improve the searching skills of trainees. Brief free-standing searching tutorials for medical students9,10 and residents11,12 have resulted in improved searching strategies. One searching curriculum for pediatric residents during a neonatal intensive care unit rotation improved searching confidence and techniques.13 Whereas these findings suggest that searching instruction may be beneficial, few studies have utilized randomized group comparisons or have assessed the ability of participants to actually retrieve data.

We set out to improve Internal Medicine (IM) residents’ skill in database searching through a new EBM searching tutorial that was integrated with active patient care on the inpatient wards. The goal of the tutorial was to help residents successfully and efficiently obtain the best evidence to guide the care of hospitalized patients in real-time. To assess the effectiveness of our educational intervention, we randomized IM residents to our intervention or a control seminar and used both a survey and skills assessment exercise to assess both groups. We hypothesized that residents who attended the tutorials would self-report greater comfort with searching and would perform real searches more quickly and more successfully.

METHOD

Needs Assessment

Before the implementation of the new curriculum, we conducted a needs assessment using a questionnaire developed by the EBM Task Force of the Society of General Internal Medicine (SGIM), for which construct validity has been demonstrated among practicing clinicians.14 The survey asks participants to self-rate their comfort using MEDLINE, EBM databases, and UpToDate® using a 5-point Likert scale (1=very uncomfortable, 5=very comfortable) and their frequency of use of MEDLINE, EBM databases and UpToDate® on a 5-point Likert scale (1=never, 2=rarely, 3=monthly, 4=weekly, 5=daily). Eighty-eight residents from our IM training program completed needs assessment surveys during the 2004–2005 academic year. Sixty-four of those residents later participated in the study; the remainder completed residency training.

We utilized the results of the needs assessment in designing our educational intervention. Among residents surveyed, nearly all (97%) reported using UpToDate® and a large majority (77.3%) reported using MEDLINE at least weekly. Only 5% and 9.8% of residents reported weekly or daily use of the Cochrane Library and ACP Journal Club, respectively. Most (92.2%) reported feeling very comfortable using UpToDate®, but only 45.5%, 21.3%, and 7.1% reported similar levels of comfort using MEDLINE, ACP Journal Club, or the Cochrane Library, respectively. We therefore designed our educational intervention to improve residents’ skills and comfort with MEDLINE and filtered EBM resources such as ACP Journal Club and the Cochrane Library.

Educational Intervention

During the 2005–2006 academic year, all 2nd- and 3rd-year IM residents on inpatient ward rotations at Mount Sinai Hospital in New York City were randomized by concealed selection of assigned numbers to participate in the EBM Searching Tutorial (n = 40) or to attend a usual medical conference (n = 37) occurring simultaneously. The weekly searching tutorials were attended by 3–6 residents rotating on the inpatient wards. Residents randomized to the intervention attended up to 6 tutorials between August 2005 and March 2006. Each tutorial was supervised jointly by a medical research librarian and 1–3 faculty members from the Division of General Internal Medicine. Involved faculty members had an interest in EBM, but no formal training in database searching. Tutorials were held in a conference room on the inpatient wards. The room was equipped with 3 computers with access to the online resources of the medical school library, including MEDLINE, UpToDate®, ACP Journal Club, and the Cochrane Library. All MEDLINE searches utilized the PubMed interface. Each tutorial participant received a 3 × 4 inch laminated card of “searching pearls”, which included descriptions of databases and suggested techniques in PubMed.

At the start of the searching tutorial, each resident gave a brief oral presentation about a current hospitalized patient and, with the help of the group, generated 1 or more clinical questions relating to that patient. Questions were formulated using the PICO (Patient Intervention Comparison Outcome)15,16 format and addressed issues pertaining to diagnosis, prognosis, or treatment of their patients. Using online resources, residents then searched for answers to their clinical questions while faculty observed. Subsequent search attempts were made with the guidance of faculty members who stressed techniques including the use of Medical Subject Headings (MeSH), Limits, Clinical Queries, and Related Articles in PubMed. Faculty also instructed residents in the use of ACP Journal Club and the Cochrane Library when appropriate to the clinical question. At the end of each session, participants reconvened and shared their search strategies and the answer to the clinical question they investigated.

Assessment of Educational Intervention

We assessed the effectiveness of our intervention using 2 methods: responses to the survey developed by the SGIM EBM Task Force and performance on a novel Objective Structured Searching Examination (OSSE) developed by our group. The survey, which had also been used in the needs assessment, was administered to all residents in both the intervention and control groups several months after the completion of the tutorial sessions.

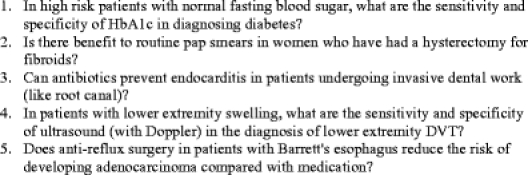

All residents participated in the OSSE on the same day as the survey. We created this novel OSSE to assess whether residents were capable of performing both successful and time-efficient searches to answer simulated clinical questions. A secondary goal was to assess their use of searching strategies and filtered resources. The 45-minute OSSE was conducted in a computer laboratory in the medical school library. Seven to 12 participants attended each OSSE session. Participants were asked to independently search EBM databases for primary literature to answer 5 clinical questions pertaining to diagnosis, prognosis, and treatment (Fig. 1). Acceptable forms of primary literature included clinical trials, systematic reviews/meta-analyses, and EBM-filtered summaries such as those in ACP Journal Club. Participants were discouraged from using UpToDate® or other online textbooks to answer the clinical questions; narrative reviews were not accepted as primary evidence.

Figure 1.

Clinical questions used in the EBM OSSE.

OSSE clinical questions were reviewed by EBM experts for face validity. Before implementation, the OSSE was piloted on members of the General IM faculty, including both novice and proficient searchers.

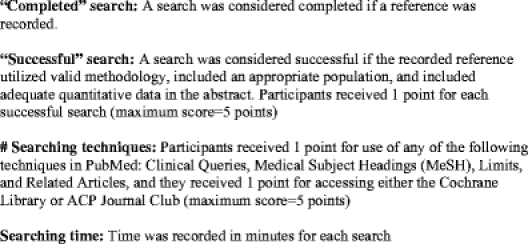

For each clinical question, participants documented the time they spent searching and the reference for the best article they found to answer it.

Two investigators (DK and NT) scored each OSSE. Scorers were blinded to participants’ group allocation. Scorers used participants’ documentation and PubMed search histories to evaluate 4 criteria: the number of completed searches, the number of successful searches, searching efficiency, and the utilization of searching techniques (Fig. 2). Scorers recorded the number of successful searches for each resident by reviewing abstracts for the referenced articles. Searches were considered successful if the referenced study utilized valid methodology, if the population studied was applicable to the question, and if the abstract included quantitative data to answer the specific question. To determine the interrater reliability of the reviews of OSSE results, the 2 investigators independently scored 20 randomly selected OSSEs and agreement (kappa statistic) was measured. Investigators scoring OSSEs demonstrated good interrater reliability (κ = 0.76).
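As an illustration of the agreement statistic, the following is a minimal sketch of Cohen's kappa for two scorers. It assumes each search is rated simply as successful (1) or unsuccessful (0); the text does not specify the exact unit of agreement, and the ratings below are hypothetical, not study data.

```python
from collections import Counter


def cohen_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who labelled the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    # Observed agreement: share of items given the same label by both raters.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: sum over labels of the product of marginal frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_chance = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if p_chance == 1.0:
        return 1.0  # both raters used one identical label throughout
    return (p_observed - p_chance) / (1 - p_chance)


# Hypothetical ratings (1 = successful search, 0 = unsuccessful); not study data.
scorer_1 = [1, 1, 0, 1, 0, 0, 1, 1, 0, 1]
scorer_2 = [1, 1, 0, 1, 0, 1, 1, 1, 0, 0]
kappa = cohen_kappa(scorer_1, scorer_2)
print(f"kappa = {kappa:.2f}")
```

Kappa discounts the agreement expected by chance, which is why it is lower than the raw proportion of matching ratings.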

Figure 2.

Items scored on the OSSE.

The study was reviewed by the Mount Sinai School of Medicine Institutional Review Board and exempted from ongoing oversight.

Statistical Analysis

SPSS software (version 14.0; SPSS Inc, Chicago, IL) was used to perform all statistical analyses. The Wilcoxon rank-sum test was used to identify differences in responses on the self-assessment questionnaires before and after the intervention and to compare OSSE performances.
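For readers unfamiliar with the test, the following is a minimal sketch of a two-sided Wilcoxon rank-sum (Mann-Whitney) comparison of two independent groups. It uses the normal approximation with a tie correction and no continuity correction, which is an assumption of this sketch and may differ from the SPSS procedure used in the study; the sample values are hypothetical.

```python
import math


def midranks(values):
    """Return 1-based ranks (ties get the average rank) and tie-group sizes."""
    order = sorted(range(len(values)), key=values.__getitem__)
    ranks = [0.0] * len(values)
    tie_sizes = []
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1  # mean of ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        tie_sizes.append(j - i + 1)
        i = j + 1
    return ranks, tie_sizes


def rank_sum_test(x, y):
    """Two-sided rank-sum test; returns (U for x, z statistic, p value)."""
    n1, n2 = len(x), len(y)
    if n1 == 0 or n2 == 0:
        raise ValueError("both samples must be non-empty")
    n = n1 + n2
    ranks, tie_sizes = midranks(list(x) + list(y))
    u = sum(ranks[:n1]) - n1 * (n1 + 1) / 2  # Mann-Whitney U for sample x
    mean_u = n1 * n2 / 2
    tie_term = sum(t ** 3 - t for t in tie_sizes) / (n * (n - 1))
    var_u = n1 * n2 / 12 * ((n + 1) - tie_term)
    if var_u == 0:
        return u, 0.0, 1.0  # all observations identical
    z = (u - mean_u) / math.sqrt(var_u)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail probability
    return u, z, p


# Hypothetical counts of searching techniques used per resident; not study data.
control = [1, 2, 1, 0, 3, 2, 1, 2]
intervention = [3, 2, 4, 3, 1, 3, 2, 4]
u_demo, z_demo, p_demo = rank_sum_test(control, intervention)
print(f"U = {u_demo}, z = {z_demo:.2f}, p = {p_demo:.3f}")
```

Because the test works on ranks rather than raw values, it suits ordinal Likert responses and small, skewed count data such as the OSSE outcomes.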

RESULTS

A total of 77 residents were randomized, 40 to the intervention group and 37 to the control group. Two residents in the control group left the training program and were excluded from the study after randomization, and OSSE data for 1 resident in the control group were lost. Six residents randomized to the intervention did not actually receive it because of scheduling conflicts, but all were analyzed in the group to which they were originally assigned. The groups were similar with regard to gender and year of training.

We conducted a total of 30 searching tutorials, and residents randomized to the intervention group attended a mean of 2.6 (SD 1.5) tutorials. A total of 101 clinical questions were asked: 58% concerned therapy or harm, 21% concerned diagnosis, 14% concerned prognosis, and 7% were classified as “other.” During tutorial sessions, residents successfully answered 62% of their clinical questions; success rates varied by question type. Eighty-six percent of prognosis or “other” questions were successfully answered, whereas 58% of therapy/harm questions and 52% of diagnosis questions were answered.
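The overall success rate can be cross-checked against the per-category figures with a weighted average. The sketch below uses only the rounded percentages reported in the text, so the result is approximate.

```python
# Question-type shares and success rates as reported in the text (rounded).
# Prognosis and "other" are pooled because one success rate is given for both.
categories = {
    "therapy/harm": (0.58, 0.58),
    "diagnosis": (0.21, 0.52),
    "prognosis/other": (0.14 + 0.07, 0.86),
}

# Weighted average: each category's success rate weighted by its share.
overall = sum(share * rate for share, rate in categories.values())
print(f"Implied overall success rate: {overall:.1%}")
```

The implied rate is about 62.6%, in line with the reported 62% given that the inputs are rounded percentages.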

The mean time between the last tutorial session and the post-intervention evaluation was 112 days (SD 57) for residents who attended at least 1 tutorial session. On self-assessment surveys, residents in the intervention group reported higher mean levels of comfort than control residents in the use of both MEDLINE (4.53 vs 4.15, p = .05) and the Cochrane Library (3.37 vs 2.79, p = .03). There was no significant difference in self-reported comfort with ACP Journal Club between the groups (3.72 vs 3.55, p = .52). There was no difference in the reported frequency of use of any of the EBM databases between groups.

OSSE data are available for 74 residents. Residents who received the intervention utilized more searching techniques than residents in the control group (2.48 vs 1.65, p = .004). In terms of specific searching techniques, there was a trend toward more usage of MeSH headings and filtered resources (i.e., ACP Journal Club or the Cochrane Library) among intervention residents, although these differences were not significant (Table 1). Residents in the intervention group were more likely than controls to utilize PubMed’s Clinical Queries during the OSSE (78% vs 32%, p < .001). There were no significant differences between the groups in the number of successful searches and the time per completed search, although there did appear to be a trend toward better performance in the intervention group (Table 2).

Table 1.

Utilization of Searching Techniques: Percent of Participants in Each Group Utilizing Searching Techniques in the OSSE

| Searching technique | Control (n = 34) | Intervention (n = 40) | p value |
|---|---|---|---|
| Clinical queries (%) | 32 | 78 | <.001 |
| MeSH (%) | 15 | 30 | .12 |
| Limits (%) | 35 | 30 | .63 |
| Related articles (%) | 29 | 48 | .41 |
| Filtered resources (%) | 53 | 63 | .12 |

Table 2.

Results of EBM OSSE

| Outcome | Control mean (SD) | Intervention mean (SD) | p value* |
|---|---|---|---|
| Number of completed searches (out of 5) | 4.41 (0.78) | 4.63 (0.74) | .119 |
| Number of searching techniques utilized (out of 5) | 1.65 (1.04) | 2.48 (1.11) | .004 |
| Number of successful searches (out of 5) | 2.76 (1.46) | 3.15 (1.62) | .302 |
| Time/completed search (min) | 3.93 (2.86) | 3.09 (2.03) | .353 |

*Using Wilcoxon rank-sum test

DISCUSSION

In this study, we set out to evaluate the impact of an educational intervention on the ability of medical residents to use electronic databases to find medical literature. Our needs assessment revealed a comfort gap in medical residents’ attitudes toward different online information resources. Residents reported the greatest comfort with an online textbook (UpToDate®), intermediate comfort with MEDLINE, and lowest comfort with EBM-filtered resources. We designed a searching tutorial to improve residents’ skills and comfort with both MEDLINE and filtered resources. To maximize their relevance, tutorials occurred during inpatient rotations and focused on answering questions about active inpatients.

We rigorously evaluated our intervention by conducting a randomized trial, which demonstrated that medical residents who attended searching tutorials were more likely to use a variety of searching techniques when compared with control residents. Despite the difference in searching techniques, control and intervention groups did not differ in searching success in our OSSE. There are several possible explanations for this negative result. First, because intervention and control residents worked together regularly, intervention residents frequently taught their colleagues new searching techniques, resulting in contamination that may have minimized between-group differences. Second, our 5-search OSSE may have been underpowered to detect meaningful differences. We designed the OSSE to test residents’ skills with online resources as they are currently utilized, as the searching evaluations in the published literature10,21,22 were poorly applicable to our setting. Designing a tool to measure searching skill was a challenge, because many searches are successful even by unskilled searchers, and others are difficult even in experienced hands. Demonstrating differences in skill among advanced learners may require many searches, but time constraints limited our ability to use a longer OSSE. Because of its brevity, we feel the OSSE may fail to measure small differences in searching skills and is likely to have underestimated between-group differences, especially in a small sample such as ours. Differences in searching techniques may be a more sensitive measure of searching skill in our study.

The intervention group reported significantly higher levels of comfort using both MEDLINE and the Cochrane Library, but similar comfort as controls with use of ACP Journal Club. There are several possible explanations for this result. First, although we attempted to emphasize ACP Journal Club during tutorial sessions, participants’ clinical questions were often highly specific and detailed, reflecting the patient population at our tertiary referral center. Answers to such questions can rarely be found in ACP Journal Club or any filtered resource. We therefore had less opportunity to use filtered resources than MEDLINE/PubMed. Second, the ACP Journal Club search engine utilizes no controlled vocabulary or advanced searching techniques, so there was little specific instruction for us to offer. It is also possible that our control group overestimated their competence with ACP Journal Club and other resources and obscured a small but real difference in competence between the groups. There is evidence in the literature to suggest that trainees overestimate their skills in other areas of EBM;17 it is possible they are also overconfident in their searching ability.

We did not find a difference in self-reported frequency of EBM database usage between groups at the end of the study. This finding is not surprising. Residents in our program are expected to consult primary literature to support patient care decisions, and to present data to faculty regularly. The lack of difference between the groups in frequency of resource use is likely a function of our institutional culture. Our intervention did not create more frequent users of the evidence, only more skilled ones. Intervention residents did not use filtered resources more frequently than control residents, which may reflect the complexity of their inpatient clinical questions, which are more often answered in MEDLINE.

Our study is an important addition to the literature. We implemented a curriculum in which residents improved their searching skills by answering clinical questions about hospitalized patients in real-time. Our participants were on inpatient rotations, searching the literature daily, which allowed for continuous skill reinforcement. EBM educational activities like ours, which occur within the context of active patient care, act to guide clinical decision making and demonstrate to learners the importance and feasibility of incorporating evidence-based practice into their clinical routines. A recent systematic review concluded that EBM teaching that is integrated with patient care has a greater impact on learners’ skills, attitudes, and behavior than stand-alone curricula.18 Further, whereas most teaching in medical residency occurs in the hospital, our study is among the first to measure outcomes of educational interventions in the inpatient setting.19

Our study is unique in evaluating searching skill by measuring success in real searches. Other groups have challenged learners with real searches, but have measured only their approach to searching, not their actual success.9,12,20 Whereas our outcomes included the use of good searching techniques, we also measured the quality of search results and the time to complete each search, resulting in a more complete evaluation.

Another strength of our study was its overall design. We performed a randomized trial, which included all 2nd- and 3rd-year residents in our program, and our analysis was by intention-to-treat. Whereas many authors have recognized the importance of formally evaluating the impact of EBM curricula,2,3,21 there have been few published studies as rigorous as ours.

There were several important limitations to our study design. The searching OSSE that we used has not been previously validated. Our OSSE does have face validity through expert review and piloting, which may be adequate in a low-stakes educational environment. The dynamic nature of web-based databases makes rigorous validation of tools like ours unfeasible, as search results may change dramatically over time. However, the lack of content validity may have led us to over- or underestimate the impact of our intervention, and may limit the applicability of our results.

Other limitations include the fact that the study took place at a single institution with a small number of participants. As a result of its sample size, the study was not powered to detect small improvements in searching skills. Further, whereas our completion rate was reasonable, not all residents who were randomized to receive the intervention actually received it. Within the intervention group, there was variability in the number of tutorials attended, with a mean of 2.6 sessions. We did not find a relationship between the number of sessions attended and OSSE success, although our study was not powered to find such a relationship. It is possible that more sessions would have resulted in more improvement in searching skill. In addition, our OSSE did not measure real-world searching behavior and may not reflect residents’ behavior when caring for real patients.

Our study demonstrates that an integrated inpatient EBM searching tutorial improves medical residents’ use of searching techniques and comfort with EBM databases, but we were unable to demonstrate an impact on overall searching success. Further investigations will include an exploration of the most successful searching strategies, and a reemphasis on filtered databases, possibly through a curriculum in a different setting.

Acknowledgments

Dr. Stark was supported by grants from the Empire Clinical Research Investigator Program, New York State Department of Health and U.S. Department of Health and Human Services, Health Resources and Services Administration CFDA 93-895.

The authors thank the EBM Task Force of the SGIM for making their survey tool available to our group.

Conflict of Interest: None disclosed.

Footnotes

This paper was presented at the SGIM 29th Annual Meeting in April 2006, the SGIM 2007 Mid-Atlantic Meeting on March 9, 2007, and at the SGIM 30th Annual Meeting in April 2007.

References

- 1.Sackett DL, Rosenberg WM, Gray JM, Haynes RB, Richardson WS. Evidence-based medicine: what it is and what it isn't. BMJ. 1996;312(7023):71–2.

- 2.Hatala R, Keitz SA, Wilson MC, Guyatt G. Beyond journal clubs. Moving toward an integrated evidence-based medicine curriculum. J Gen Intern Med. 2006;21(5):538–41.

- 3.Straus SE, Green ML, Bell DS, et al. Evaluating the teaching of evidence based medicine: conceptual framework. BMJ. 2004;329:1029–32.

- 4.McGinn T, Seltz M, Korenstein D. A method for real-time, evidence-based general medical attending rounds. Acad Med. 2002;77(11):1150–2.

- 5.Schilling LM, Steiner JF, Lundahl K, Anderson RJ. Residents' patient-specific clinical questions: opportunities for evidence-based learning. Acad Med. 2005;80(1):51–6.

- 6.Ramos K, Linscheid R, Schafer S. Real-time information-seeking behavior of residency physicians. Fam Med. 2003;35(4):257–60.

- 7.Green ML, Ciampi MA, Ellis PJ. Residents' medical information needs in clinic: are they being met? Am J Med. 2000;109:218–23.

- 8.Green ML, Ruff TR. Why do residents fail to answer their clinical questions? A qualitative study of barriers to practicing evidence-based medicine. Acad Med. 2005;80(2):176–82.

- 9.Gruppen LD, Rana GK, Arndt TS. A controlled comparison study of the efficacy of training medical students in evidence-based medicine literature searching skills. Acad Med. 2005;80(10):940–4.

- 10.Rosenberg WM, Deeks J, Lusher A, Snowball R, Dooley G, Sackett D. Improving searching skills and evidence retrieval. J R Coll Physicians Lond. 1998;32(6):557–63.

- 11.Smith CA, Ganschow PS, Reilly BM, et al. Teaching residents evidence-based medicine skills: a controlled trial of effectiveness and assessment of durability. J Gen Intern Med. 2000;15:710–5.

- 12.Vogel EW, Block KR, Wallingford KT. Finding the evidence: teaching medical residents to search MEDLINE. Journal of the Medical Library Association. 2002;90(3):327–30.

- 13.Bradley DR, Rana GK, Martin PW, Schumacher RE. Real-time, evidence-based medicine instruction: a randomized controlled trial in a neonatal intensive care unit. Journal of the Medical Library Association. 2002;90(2):194–201.

- 14.Mangrulkar RS, Lencoski K, Gerrity M, Hayward R, Straus S, Green ML. The impact of a series of workshops to improve evidence based clinical practice. J Gen Intern Med. 2004;19(suppl 1):219.

- 15.Oxman AD, Sackett D, Guyatt G. Users' guides to the medical literature: I. How to get started. J Am Med Assoc. 1993;270(17):2093–5.

- 16.Richardson WS, Wilson MC, Nishikawa J, Hayward R. The well-built clinical question: a key to evidence-based decisions. ACP J Club. 1995;123:A-12.

- 17.Caspi O, McKnight P, Kruse L, Cunningham V, Figueredo AJ, Sechrest L. Evidence-based medicine: discrepancy between perceived competence and actual performance among graduating medical students. Med Teach. 2006;28(4):318–25.

- 18.Coomarasamy A, Khan KS. What is the evidence that postgraduate teaching in evidence based medicine changes anything? A systematic review. BMJ. 2004;329(7473):1017–22.

- 19.DiFrancesco L, Pistoria MJ, Auerbach AD, Nardino RJ, Holmboe ES. Internal medicine training in the inpatient setting - A review of published educational interventions. J Gen Intern Med. 2005;20(12):1173–80.

- 20.Frohna JG, Gruppen LD, Fliegel JE, Mangrulkar RS. Development of an evaluation of medical student competence in evidence-based medicine using a computer-based OSCE station. Teaching and Learning in Medicine. 2006;18(3):267–72.

- 21.Shaneyfelt T, Baum KD, Bell D, et al. Instruments for evaluating education in evidence-based practice. J Am Med Assoc. 2006;296(9):1116–27.

- 22.Haynes RB, Johnston ME, McKibbon KA, Walker CJ, Willan AR. A program to enhance clinical use of MEDLINE. A randomized controlled trial. Online J Curr Clin Trials. May 11 1993;56:Doc No 56.