Abstract

Objectives

To develop methods to facilitate the ‘systematic’ review of evidence from a range of methodologies on diffuse or ‘soft’ topics, as exemplified by ‘access to healthcare’.

Data sources

28 bibliographic databases, research registers, organisational web sites or library catalogues. Reference lists from identified studies. Contact with experts and service users. Current awareness and contents alerting services in the area of learning disabilities.

Review methods

Inclusion criteria were English language literature from 1980 onwards, relating to people with learning disabilities of any age and all study designs. The main criterion for assessment was relevance to Gulliford’s model of access to health care, which was adapted to the circumstances of people with learning disabilities. Selected studies were evaluated for scientific rigour, then data were extracted and the results synthesised. Quality assessment was by an initial set of ‘generic’ quality indicators. This enabled further evidence selection before evaluation of findings according to specific criteria for qualitative, quantitative or mixed-method studies.

Results

82 studies were fully evaluated. Five studies were rated ‘highly rigorous’, 22 ‘rigorous’ and 46 ‘less rigorous’; 9 ‘poor’ papers were retained as the sole evidence covering aspects of the guiding model. The majority of studies were quantitative but used only descriptive statistics. Most evidence lacked methodological detail, which often lowered final quality ratings.

Conclusions

The application of a consistent structure to quality evaluation can facilitate data appraisal, extraction and synthesis across a range of methodologies in diffuse or ‘soft’ topics. Synthesis can be facilitated further by using software, such as the Microsoft ‘Access’ database, for managing information.

Keywords: Research quality evaluation, Review literature, Health Services Research, Information Storage and Retrieval, Human

Key messages for Practice

Participating in the evaluation phase of a literature review is a valuable role that provides skill development opportunities for health librarians

Traditional librarian skills of organising and classifying information can assist in identifying and mapping underlying themes and components in literature reviews of diffuse topics

Librarians need training in both qualitative and quantitative methods, and evaluation of research quality to participate in research and become evidence-based practitioners

Introduction

The evidence-base to inform methods for carrying out systematic reviews is developing rapidly. The Health Technology Assessment programme has funded a number of studies to further our understanding of the application of aspects of the systematic review process1-4. The growth of the Cochrane Reviews Methodology Collaborative5, which conducts and monitors this research, also reflects the development of this subject. This is the second of two papers on literature review methodology in diffuse subject areas. The first focussed on identification of relevant literature8. This paper describes an approach to the evaluation of quality across research employing different methods, used for a review of a ‘diffuse’ topic – ‘access to healthcare’.

As the amount of published research evidence increases exponentially, syntheses of evidence are an important resource in informing decision making by policy makers, practitioners and health service users7. The evaluation of literature described here was part of a literature review funded by the National Co-ordinating Centre for Service Delivery and Organisation research programme (NCCSDO), which has itself produced guidance on methods for researching the organisation and delivery of health services29. The purpose of review in this context is to summarise the available evidence to inform decision-making within healthcare services, while identifying areas where research is lacking. It is against this background of increased commissioning of literature reviews in diffuse topics27 that the role of the librarian in the systematic review process is now being highlighted26.

Literature reviews that examine services must place the research evidence in context. To this end the review included a consultation (described in paper one8) to gather evidence on barriers to, and facilitators of, access to healthcare for people with learning disabilities. The challenges in developing systematic review methods include how to incorporate evidence using different research methodologies into a single review6, and how to include different types of ‘knowledge’, such as that gathered from consultation, generated to meet the need of stakeholders10. We felt it was important to discriminate between different types of ‘evidence’ and ‘knowledge’ as research evidence, in itself, is of limited value unless stakeholders are able to translate it into their own context and use it to support their practice or decision-making. Only when this translation has taken place can research evidence be said to have become ‘knowledge’ either tacitly or formally within organisations. Different types of evidence are recognised as being more or less valid, depending on their quality and the purpose for which they were gathered. The consultation exercise gathered non-written forms of evidence and was designed to capture the tacit and experiential knowledge of service users. Identification of evidence context is one area where the librarian can make a significant contribution to the review process.

The concept of ‘access to healthcare’ is multi-faceted with barriers and facilitators operating both outside and across health services. Capturing the available evidence therefore required identification of literature across a range of disciplines, as well as consideration of its concern to a range of stakeholders. Details of the model of access used and search strategy are given in the previous paper8. The full report is available on the SDO website9.

Rationale

There are two ways in which the rationale for the methods employed in this review differed from a systematic review of effectiveness. Firstly, it was deemed inappropriate to adopt a criterion for study design, or ‘hierarchy of evidence’28, because research evidence on diffuse topics is likely to reflect a plurality of methods and approaches, in keeping with their multi-faceted nature. We therefore made no value judgements about the relative merits of qualitative versus quantitative methods, but reasoned that it was possible and justifiable not to limit evidence to a particular methodological approach or study type.

Secondly, as a key purpose of the review was to identify gaps in the evidence base and practical interventions to promote ‘access’, it was important not to exclude research solely on the basis of poor methodological quality. It was acknowledged that such research could be substantive in illuminating the underpinning model, or “innovative” in terms of potential for future service development. A conscious decision was therefore made to balance the ‘signal’ of a publication (i.e. our judgement of its potential value in illuminating access to health care for people with learning disabilities) against its ‘noise’ or poor methodological quality11.

Quality evaluation tool development

Critical appraisal of the literature in this review draws on a number of established criteria12,13 and approaches14,15,16. There is some agreement on the most important elements of research that embody methodological and epistemological quality that should be appraised to establish the credentials of evidence. In diffuse topics many of the titles and/or abstracts retrieved are inadequate, there can be an extensive grey literature and, almost certainly, a wide range of methodologies is in use. It is therefore preferable to use a parsimonious tool that provides opportunities to decide that further analysis is unproductive. The quality evaluation tool described here was designed to incorporate the multifarious nature of research evidence on ‘access to healthcare’ and provide two opportunities for data rejection before appraisal of findings and final quality rating.

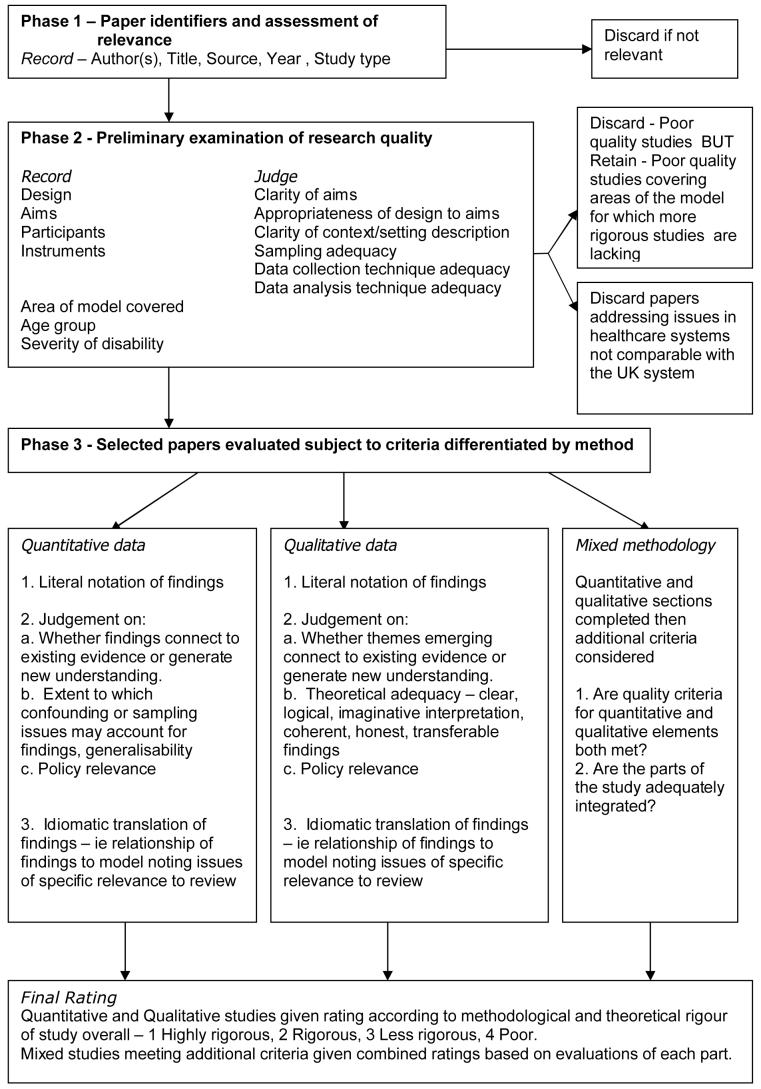

The tool is described in Figure 1 and below. The quality evaluation tool is available from http://www.npcrdc.ac.uk/publications/Quality%20Evaluation%20Tool.doc

Figure 1. Quality Evaluation Tool summary.

Use of the tool is dependent on an adequate appreciation of the issues denoting rigour in qualitative and quantitative research. These are alluded to in the description of this tool. However, the reader is recommended to see the work of Popay, Rogers and Williams14 and Mays and Pope15 regarding evaluation of qualitative research, and Gomm17 for a discussion of the issues affecting quality in quantitative research.

Quality Evaluation tool

Phase 1 - Paper identifiers and assessment of relevance

Fields - Author(s), title, source, year, study type

Paper identifiers (noted above) were recorded for every paper. Those not relevant to our model on examining the full text were discarded and the reason noted. Full details of selection criteria are given in the first paper8.

Phase 2 - Evaluation of methodological rigour

Part 1: Method - Fields

Design, aims, participants, instruments.

Methodological details were recorded for remaining papers. The design and other details informed assessment of methodological quality, and enabled the reviewer to interpret the findings in context during synthesis. Adequate description of methodological detail in research papers is crucial to evaluation of quality18.

Part 2: Methodological Quality Indicators - Fields

Clarity of aims, appropriateness of design, clarity of context/setting description, sampling adequacy, data collection technique adequacy, data analysis techniques adequacy.

These indicators were applied to both quantitative and qualitative papers as they have been identified as central to methodological rigour in both types of research14,15,17.

It is a pre-requisite of any piece of rigorous research that the aim of the study, hypothesis or research question is clearly stated, as this is the yardstick against which findings are considered. The design of the study should then be capable of providing evidence to answer the question, or to support or refute the hypothesis.

The reader is unable to adequately assess the extent to which findings provide answers to research questions without knowledge of the context within which the research was conducted. Settings may exert significant influence on outcomes. For example, interviews conducted in participants’ own homes may allow more reflective and open comment than those either conducted in the high street or in the presence of an authority figure such as their manager, doctor or teacher.

Issues of sampling also have a bearing on research outcome. In quantitative research, considerations of statistical power19 often mean that large numbers of participants must be included in order to detect statistically significant effects. In qualitative research the absolute number of participants is less significant than the range14 whose experiences reflect different perspectives on the research subject and therefore allow a more considered analysis of the topic.

Methods used to collect data also influence outcome. Face to face interview, telephone interview, postal survey, using structured, semi-structured or grounded questioning/interviewing will all produce data, but with varying levels of detail. Data collection must be appropriate to the aims of the study and its participants.

Data analysis, therefore, whether conducted on conclusion of data collection, as in much quantitative research, or concurrently, as is common in qualitative research, needs to be appropriate to the sample, type of data collected and the question it is trying to answer.

Part 3: Review organisers - Fields

Area of model, age group, severity of disability.

Details were recorded of which aspects of the model were covered in the paper, participants’ age group and disability range. The review aimed to reflect differences in access to healthcare across the lifespan and any effect severity of disability had on access. These details were recorded at this point to aid decisions on the usefulness of including the paper where the indicators showed the study to be lacking rigour.

Phase 3 - Evaluation of Findings

The remaining analysis was differentiated by method. However, the overall structure for each section was consistent.

Part 1: Key findings

Findings from the research paper, relevant to the review topic, were recorded.

Part 2: Evaluation of findings by method

Whether the findings connected with existing bodies of knowledge or generated new research evidence was considered, and formed the ‘knowledge indicator’. Such connections were considered to signify rigour by positioning the findings within the wider evidence base. This involved reflection on the findings and their relation to evidence cited in the introduction to the paper, as well as other ‘bodies of knowledge’ that the reviewer became aware of through the process of review.

Quantitative studies were assessed with respect to the aim of the research, and particularly whether confounding might offer an alternative explanation for the findings and whether there had been attempts to recognise, control for and address confounding16. Confounding occurs where statistical significance, or association, is apparent between an independent and dependent (or outcome) variable but may in fact be attributable to a third factor, or variable, that is related to both. For example, research may demonstrate a statistical relationship between socio-economic status (SES) and computer literacy amongst 8 year-old children. Further investigation may suggest that an intermediate factor – access to a computer – may mediate the relationship between SES and computer literacy. The research is therefore confounded, as it is unclear whether SES relates to aptitude or opportunity differences between the children.
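The SES example can be made concrete with a small worked calculation. The sketch below uses invented counts (they are illustrative assumptions, not data from any reviewed study) in which computer literacy depends only on access to a computer, yet a crude comparison by SES still shows a large difference. Comparing children within the same access stratum makes the apparent SES effect disappear.

```python
from collections import defaultdict

# Hypothetical counts, invented for illustration only:
# (ses, has_computer_access, n_children, n_computer_literate)
CELLS = [
    ("high", True, 80, 60),
    ("high", False, 20, 5),
    ("low", True, 20, 15),
    ("low", False, 80, 20),
]


def literacy_rate(rows):
    """Proportion of computer-literate children across the given cells."""
    total = sum(n for _, _, n, _ in rows)
    literate = sum(k for _, _, _, k in rows)
    return literate / total


def crude_rates(cells):
    """Literacy rate by SES, ignoring access to a computer."""
    by_ses = defaultdict(list)
    for row in cells:
        by_ses[row[0]].append(row)
    return {ses: literacy_rate(rows) for ses, rows in by_ses.items()}


def stratified_rates(cells):
    """Literacy rate by SES within each access stratum."""
    by_stratum = defaultdict(list)
    for row in cells:
        by_stratum[(row[1], row[0])].append(row)
    return {key: literacy_rate(rows) for key, rows in by_stratum.items()}


if __name__ == "__main__":
    print("Crude:", crude_rates(CELLS))
    print("Stratified by access:", stratified_rates(CELLS))
```

In these invented data the crude rates differ between high and low SES, while the rates within each access stratum are identical, which is the pattern a reviewer would look for when judging whether a study has addressed a third variable.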

Qualitative research was assessed using established standards14,15 with particular consideration given to the validity and adequacy of studies. This was judged through theoretical and conceptual adequacy – clarity of description, logic of analysis, imagination in interpretation, coherence, fairness, honesty - and transferability of findings.

For both types of study, consideration was given to its policy relevance – the extent that the study addresses issues of concern to practitioners and policy makers and empowers service users.

Part 3: Notes - Idiomatic translation

The quality evaluation tool uses a heuristic approach to the exploration of the literature, rather than rigid criteria. It was designed to include idiomatic translation of findings, to place the study within the wider literature. Notes were therefore made on the focus of the research and its fit within the model of access guiding the review, along with other relevant concerns.

The literature reflected a wide range of themes, disciplines and paradigms, which represented forms of narrative and therefore issues of audience, language and knowledge. This is in keeping with literature synthesis as a form of ethnography where the synthesiser identifies key metaphors and concepts in order to understand competing ‘world views’ of a topic20. This section guided final synthesis.

Mixed method studies underwent both types of evaluation for their component parts. Final consideration was given to whether these met quality criteria and were adequately integrated.

Part 4: Final Quality rating

Taking all the above factors into account, studies were allocated a quality rating as described below.

Highly rigorous - All or most quality indicators met including knowledge indicator.

Rigorous - Main quality indicators met including knowledge indicator.

Less rigorous - Some lack of methodological detail but knowledge indicator met.

Poor - Lack of methodological detail and/or knowledge indicator not met.
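The phases above amount to a single record per paper. As a rough illustration, the fields named in the text could be held in a structure like the one below. This is a sketch only: the class, attribute and indicator names are assumptions made for the example and are not taken from the published pro-forma. No scoring thresholds are encoded, because the final rating was a reviewer judgement rather than a formula.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List, Optional


class Rating(Enum):
    HIGHLY_RIGOROUS = "highly rigorous"
    RIGOROUS = "rigorous"
    LESS_RIGOROUS = "less rigorous"
    POOR = "poor"


# The six generic indicators listed in Phase 2, Part 2 (names are illustrative).
INDICATORS = (
    "clarity_of_aims",
    "appropriateness_of_design",
    "context_description",
    "sampling",
    "data_collection",
    "data_analysis",
)

MAX_MODEL_AREAS = 5  # coding boxes were increased from three to five after piloting


@dataclass
class EvaluationRecord:
    # Phase 1: paper identifiers
    authors: str
    title: str
    source: str
    year: int
    study_type: str
    # Phase 2: generic indicators (True = adequately met) and review organisers
    indicators: Dict[str, bool] = field(default_factory=dict)
    model_areas: List[str] = field(default_factory=list)
    age_group: str = ""
    disability_severity: str = ""
    # Phase 3: findings, knowledge indicator, notes and reviewer-assigned rating
    key_findings: str = ""
    knowledge_indicator_met: bool = False
    notes: str = ""
    rating: Optional[Rating] = None

    def __post_init__(self):
        unknown = set(self.indicators) - set(INDICATORS)
        if unknown:
            raise ValueError(f"Unknown indicators: {sorted(unknown)}")
        if len(self.model_areas) > MAX_MODEL_AREAS:
            raise ValueError("At most five model areas can be coded per paper")

    def indicators_met(self) -> int:
        """Number of generic indicators judged adequate (a summary aid only)."""
        return sum(1 for name in INDICATORS if self.indicators.get(name))
```

A reviewer would still assign `rating` by hand; `indicators_met` is only a convenience for sorting or filtering records.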

Piloting

Two researchers were involved in reviewing literature but given that over 800 papers were identified as potentially relevant and a timescale of one year, it was not possible for both researchers to review every paper. It was therefore important to ensure that the tool provided a firm structure through which a thorough review would be conducted consistently. The tool was piloted on a sample of six qualitative, quantitative, and mixed-method papers, to allow reviewer familiarisation and evaluate its capacity to capture the dimensions of the publications to be assessed. Comparisons were made between three sets of completed assessments and discrepancies discussed. A further three sets of papers were then completed in light of these discussions to ensure compatibility between the reviewers in application of the tool. The pilot phase was brief but considered adequate as the reviewers were not expected to conduct their reviews in isolation. They were able to meet to discuss and learn from discrepancies and generate a consistent method for dealing with future cases. The co-location of the reviewers was an important factor in being able to conduct data extraction and evaluation in this way.

As a result of the pilot exercise some amendments were made to the tool to extend the number of options for classifying the literature by type, design, age group and disability range. The number of coding boxes for identifying the areas of the model explored by the publication was also increased from three to five. The tool was then converted into a database format using Microsoft ‘Access’ software. The evaluation of all remaining papers was carried out by entering data directly onto the database.

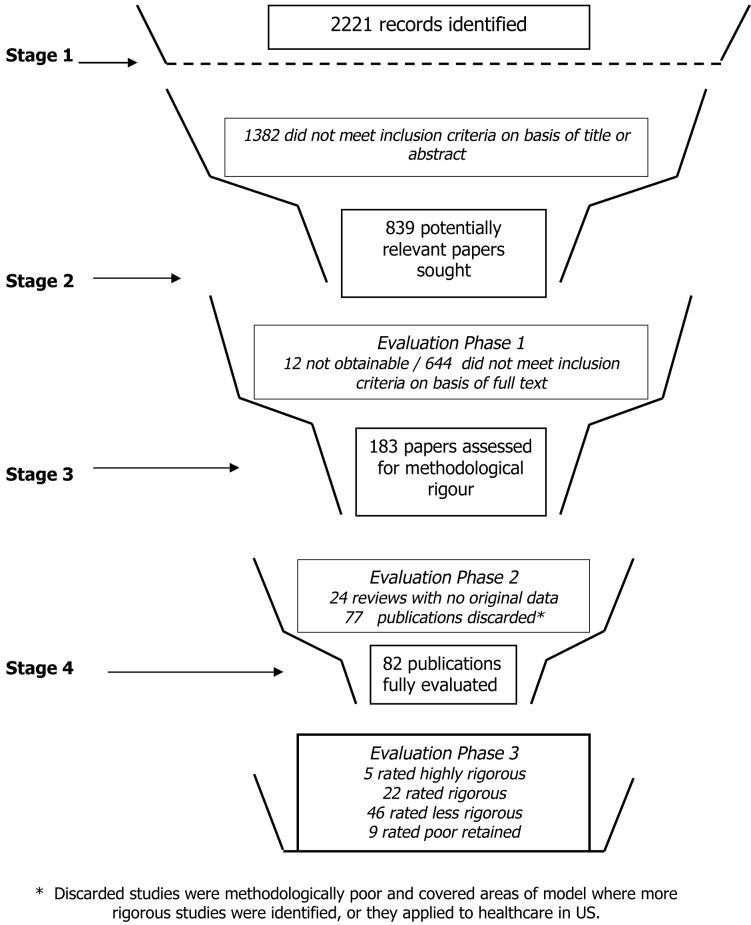

Application of the evaluation tool

The tool facilitated ‘funnelling’ of literature18, that is, stages of manual scanning and filtering of search results to compensate for the lower level of specificity occurring in diffuse topic searches. The process is illustrated in Figure 2 below.

Figure 2. Funnelling of references.

Stage 1 - Initial inclusion/exclusion

Stage 1 funnelling was completed during the search phase, where inclusion/exclusion criteria were applied to records retrieved from databases (titles and abstracts). Of the 2221 records screened, 839 appeared to meet our criteria. This stage was deliberately over-inclusive because we did not wish to exclude relevant papers/publications due to obscure titling or inadequate abstracts (see previous paper8). Implementation of ‘quality evaluation’ therefore began in earnest with the consideration of these 839 records.

Stage 2 - Confirmation of relevance

Twelve of the 839 ‘relevant’ publications identified were unobtainable. The reviewers considered the full text of remaining publications. After recording paper identifiers, confirmation was sought that the paper addressed one or more of the areas of the model adopted for the research. Any publication where the assessor was unsure or doubtful was discussed jointly to agree a status. 644 publications did not address ‘access’ as defined by our criteria and were abandoned.

Stage 3 - Methodological quality evaluation

Twenty-four of the 183 publications confirmed as relevant were reviews. These were read to ensure that papers we had failed to identify were included. Methodological rigour was poor in many of the remaining papers. The audiences for these publications were often practitioners, and the evidence cited was based on very small samples or anecdotes of experience. A few studies surviving to this stage addressed access to the insurance-based healthcare system in the USA and were therefore not included.

Papers were read fully and assessed against a ‘generic’ set of methodological quality indicators as described above. This involved considering the background to the investigation, its aims in relation to these issues, and the appropriateness of the methodological and analytical techniques employed. The areas of the model of ‘access’ covered in the study, age groups and disability range were recorded and used to organise the review in synthesis. These items were valuable for highlighting ‘gaps’ in research.

Papers which scored well on these ‘generic’ quality indicators, and/or those that applied to areas of the model for which no more rigorous literature was identified, proceeded to a detailed evaluation of their findings based on study design. Poor quality papers had their limitations made explicit.

Stage 4 - Method specific quality evaluation

Eighty-two studies were considered of adequate quality and relevance to the ‘access’ model, including 15 qualitative, 62 quantitative and 5 mixed-method studies. These proceeded to the final stage of evaluation of findings according to specific quality criteria.
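The counts reported for the funnelling stages can be checked against one another with simple arithmetic. The sketch below uses only figures stated in this paper; the variable names and the `retention` helper are illustrative.

```python
# Counts reported in the text for each funnelling stage.
screened = 2221          # records screened on title and abstract (Stage 1)
met_criteria = 839       # appeared to meet inclusion criteria
unobtainable = 12        # full text could not be obtained (Stage 2)
not_about_access = 644   # did not address 'access' as defined by the model

full_text_read = met_criteria - unobtainable
confirmed_relevant = full_text_read - not_about_access

# Breakdown of the studies that were fully evaluated (Stage 4).
by_method = {"qualitative": 15, "quantitative": 62, "mixed": 5}
by_rating = {"highly rigorous": 5, "rigorous": 22, "less rigorous": 46, "poor": 9}
fully_evaluated = sum(by_method.values())


def retention(kept, total):
    """Percentage of `total` retained, rounded to one decimal place."""
    return round(100 * kept / total, 1)


if __name__ == "__main__":
    print("Full texts read:", full_text_read)
    print("Confirmed relevant:", confirmed_relevant)
    print("Fully evaluated:", fully_evaluated)
    print("Retained after Stage 1 (%):", retention(met_criteria, screened))
    print("Fully evaluated as % of screened:", retention(fully_evaluated, screened))
```

The subtraction reproduces the 183 publications confirmed as relevant, and both breakdowns (by method and by final rating) sum to the 82 studies that were fully evaluated.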

The data extraction and quality evaluation process included evaluation of the literature using both literal and idiomatic techniques20. That is, the main details of each paper were transcribed literally (word-for-word) from the original text onto the quality evaluation pro-forma to accurately represent original findings. These were then evaluated in light of the criteria for the study type, as described above, and using idiomatic translation, comments were added on the quality, paradigms and approaches used, comparisons with previously reviewed literature, and relationship to the model.

Idiomatic translation identifies the underlying “meaning” of the text, from its literal meaning, and has been highlighted as a precursor for synthesising and interpreting qualitative literature into a new construction or dialectic20. Diffuse literature review needs to recognise competing “world views” and evaluate their relevance to the model employed. This translation means that reviewer perspectives, along with the substance of the literature, are reflected in synthesis of findings. This is a feature of literature review in more diffuse, “soft” topics.

Final ratings are shown in Figure 2. Many papers evaluated failed to attain higher ratings due to lack of detail on the methodology, which made judgements on confounding, representativeness and transferability difficult. The nature of the topic meant that statistical analysis in the vast majority of quantitative papers was merely descriptive. Statistical considerations were therefore not critical to evaluation and many of the papers rated ‘less rigorous’ were considered adequate in terms of the soundness of the data on which conclusions were based.

Use of a Microsoft ‘Access’ database to manage information generated by data extraction and evaluation proved invaluable. It allowed the reviewer to extract data easily according to areas of the model underpinning the literature review. Equally important, in the light of evidence from the consultation exercise, it enabled us to describe the distribution of the evidence across the model and hence identify ‘gaps’. Time and effort in converting the hard copy pro-forma to a database format were more than repaid at this stage.
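The kind of query this made possible can be sketched with any relational database. The example below uses Python's built-in `sqlite3` module as a stand-in for Microsoft Access. The table names, column names, area codes and sample rows are all hypothetical and do not reproduce the project database. It counts papers per model area and lists areas with no evidence at all.

```python
import sqlite3


def build_demo_db():
    """Create an in-memory database with a hypothetical, simplified schema."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE model_area (code TEXT PRIMARY KEY);
        CREATE TABLE paper (
            id INTEGER PRIMARY KEY,
            title TEXT,
            study_type TEXT,
            rating TEXT
        );
        -- One row per (paper, model area) pair, so a paper can cover several areas.
        CREATE TABLE paper_area (
            paper_id INTEGER REFERENCES paper(id),
            area_code TEXT REFERENCES model_area(code)
        );
        """
    )
    conn.executemany(
        "INSERT INTO model_area VALUES (?)",
        [("area_1",), ("area_2",), ("area_3",)],
    )
    conn.executemany(
        "INSERT INTO paper VALUES (?, ?, ?, ?)",
        [
            (1, "Example paper A", "quantitative", "rigorous"),
            (2, "Example paper B", "qualitative", "less rigorous"),
        ],
    )
    conn.executemany(
        "INSERT INTO paper_area VALUES (?, ?)",
        [(1, "area_1"), (2, "area_1"), (2, "area_2")],
    )
    return conn


def evidence_by_area(conn):
    """Number of papers coded against each model area, including zero counts."""
    rows = conn.execute(
        """
        SELECT m.code, COUNT(pa.paper_id)
        FROM model_area AS m
        LEFT JOIN paper_area AS pa ON pa.area_code = m.code
        GROUP BY m.code
        ORDER BY m.code
        """
    ).fetchall()
    return dict(rows)


def gaps(conn):
    """Model areas for which no paper was coded."""
    return [area for area, n in evidence_by_area(conn).items() if n == 0]


if __name__ == "__main__":
    db = build_demo_db()
    print(evidence_by_area(db))
    print("Gaps:", gaps(db))
```

The `LEFT JOIN` from the list of model areas is the design point: starting from the model rather than from the papers is what allows areas with no evidence to appear in the output.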

Discussion

Implications for Librarians

The type of literature evaluation described here is increasingly being commissioned to inform decision-making on future priorities for research, and to assess the impact of policy27, 24 from a stakeholder perspective. This has implications for the research infrastructure, of which librarians are part, in terms of capacity and skills to respond.

Librarians are familiar with literature across a range of methods and disciplines and are therefore well placed to contribute to the evaluation of literature, particularly in identifying and categorising the different narratives that converge in a diffuse topic. Identifying the underlying assumptions, or ontology, on which research is based draws on traditional librarian skills of classifying the literature. The evaluation phase of the literature review required the authors to focus on the content of the evidence gathered, during which the librarian identified and categorised the variety of “world views”20 reflected. This facilitated the mapping of the literature.

Quantitative papers using parametric statistics, though a small proportion of the studies using this methodology, were evaluated by the first author who has the necessary skills in this area. Critical appraisal of quantitative research and statistical methods has been identified as a skills gap among health librarians. To contribute effectively to all stages of the systematic review process, and provide an evidence-based service, it is important that skills are developed in this area22.

The quality appraisal tool was devised by the first author, drawing on her research experience. However, ensuring the tool was ‘fit for purpose’ required further development work during the pilot phase as the tool was applied to a variety of literature. Librarians have done a good deal of work in assisting research users to identify and apply the plethora of quality evaluation tools and checklists available, through the Critical Appraisal Skills Programme30 (CASP). They can extend this role by contributing to the development and piloting of quality appraisal tools, by using their extensive knowledge of the literature, and by further testing and applying these tools in subsequent literature reviews.

To this end, participating in all stages of a literature review, from the funding proposal to the dissemination of findings, can provide a vehicle for developing the role of the librarian in the health services context. At the same time their developing research skills will enable them to conduct research both on the services they provide, and, as a member of a team, on research projects.

Implications for systematic review methods

Literature reviews of diffuse concepts require additional stages of manual sieving, or ‘funnelling’, of data, as final criteria for inclusion only emerge after examining the full range of available literature18. A high level of wastage would then seem to be an unavoidable feature of this type of review, as many references initially obtained as being of potential or indeterminable relevance are later discarded on examining the full text.

The evaluation of the literature was an intense, iterative process, reflecting the developing literature around quality assessment in qualitative research, which suggests that further criteria refinement may be necessary during evaluation as qualitative research incorporates a wide range of techniques and approaches21.

The development and piloting of the quality appraisal tool was of necessity a rapid process. Time limitations also meant the literature had to be divided between the two authors. However, ongoing consultation was conducted as necessary. Given more time, each paper could have been evaluated by both assessors. This would have enhanced the scientific validity of the review findings by enabling calculation of inter-rater reliability, and further assessment of the evaluation tool.
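Had both assessors rated every paper, agreement could have been summarised with a chance-corrected statistic. The sketch below computes Cohen's kappa, one commonly used measure for two raters; the choice of kappa is an assumption made for illustration, since the text does not name a statistic, and the ratings shown are invented.

```python
from collections import Counter


def cohens_kappa(ratings_a, ratings_b):
    """Cohen's kappa for two raters assigning one category to each item."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("Both raters must rate the same non-empty set of items")
    n = len(ratings_a)
    # Observed agreement: share of items given the same category by both raters.
    observed = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n
    # Expected agreement by chance, from each rater's marginal frequencies.
    freq_a = Counter(ratings_a)
    freq_b = Counter(ratings_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        # Both raters used a single identical category throughout.
        return 1.0
    return (observed - expected) / (1 - expected)


if __name__ == "__main__":
    # Invented ratings for ten papers, for illustration only.
    rater_a = ["rigorous", "rigorous", "less", "less", "poor",
               "poor", "rigorous", "less", "poor", "rigorous"]
    rater_b = ["rigorous", "rigorous", "less", "poor", "poor",
               "poor", "rigorous", "less", "less", "rigorous"]
    print(round(cohens_kappa(rater_a, rater_b), 2))
```

In this invented example the raters agree on 8 of 10 papers (observed agreement 0.80) against an expected chance agreement of 0.34, giving a kappa of about 0.70.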

A common practice in literature review, particularly within short time-scales, is for reviewers to take the lead on specific pieces of the overall work, which are later assembled and honed into a complete review. The advantage of researchers reviewing the full range, as opposed to carving out discrete areas of the literature, is the depth of knowledge acquired around the topic. As quality appraisal progressed, the reviewers’ understanding of the literature and its relationship to the model of ‘access to healthcare’ deepened and they were therefore able to feed this more rounded or ‘holistic’ view into the synthesis.

The authors plan to further test and develop the tool and would encourage other researchers and practitioners involved in reviewing different types of evidence to provide feedback on its use. In this regard, the section of the evaluation tool entitled “Area(s) of the model” was specific to the research project described here. However, this section is interchangeable and could be replaced with criteria from models or frameworks underpinning any review through which the evidence is to be organised.

Finally, this literature review adopted a relativist approach congruent with the multifaceted nature of healthcare service delivery, recognising that human activity reveals a wide range of subjective perspectives and narratives. Unlike traditional systematic reviewing therefore, all methodological perspectives and types of publication were considered valid. Consequently, the findings of this review challenge conventional ways of delivering health services by identifying both the ‘thick’ and ‘thin’ descriptions25 of contextual factors that may result in failure to gain access to services by people with learning disabilities.

The authors believe that the value of this approach to literature review is demonstrated by the range and depth of publications identified and gaps in knowledge highlighted. They suggest that it is possible to conduct such reviews systematically, though they do not constitute a ‘systematic review’ in the traditional sense.

Acknowledgements

We thank our colleagues Angela Swallow and Caroline Glendinning, all those who participated in the consultation exercise and the NCC SDO for funding this project.

Footnotes

The definitive version is available at: http://www.blackwell-synergy.com/links/doi/10.1111%2Fj.1471-1842.2004.00543.x

A full report of the project is available from the NCC SDO web site at: http://www.sdo.lshtm.ac.uk/sdo232002.html

The full quality evaluation tool is available on the NPCRDC, University of Manchester website at: http://www.npcrdc.ac.uk/publications/Quality%20Evaluation%20Tool.doc

Contributor Information

Alison Alborz, Research Fellow, National Primary Care Research & Development Centre, 5th Floor, Williamson Building, University of Manchester, Oxford Road, Manchester, M13 9PL, Alison.Alborz@man.ac.uk.

Rosalind McNally, Library and Information Officer, National Primary Care Research & Development Centre, 5th Floor, Williamson Building, University of Manchester, Oxford Road, Manchester, M13 9PL, Rosalind.McNally@man.ac.uk.

References

- 1. Moher D, Pham B, Lawson ML, Klassen TP. The inclusion of reports of randomised trials published in languages other than English in systematic reviews. Health Technol Assess. 2003;7(41):1–90. doi: 10.3310/hta7410.

- 2. Sutton A, Abrams K, Jones D, Sheldon T, Song F. Systematic reviews of trials and other studies. Health Technol Assess. 1998;2(19):1–276.

- 3. Song F, Eastwood A, Gilbody S, Duley L, Sutton A. Publication and related biases. Health Technol Assess. 2000;4(10):1–115.

- 4. Egger M, Juni P, Bartlett C, Holenstein F, Sterne J. How important are comprehensive literature searches and the assessment of trial quality in systematic reviews? Empirical study. Health Technol Assess. 2003;7(1):1–76.

- 5. The Cochrane Library. 2. Chichester, UK: John Wiley & Sons, Ltd; 2004. The Cochrane Methodology Register. 2004.

- 6. Thomas J, Harden A, Oakley A, Oliver S, Sutcliffe K, Rees R, et al. Integrating qualitative research with trials in systematic reviews. BMJ. 2004;328:1010–1012. doi: 10.1136/bmj.328.7446.1010.

- 7. Boaz A, Ashby D, Young K. Systematic reviews: What have they got to offer evidence based policy and practice? London: ESRC UK Centre for Evidence Based Policy and Practice, Queen Mary, University of London; 2002. (ESRC UK Centre for Evidence Based Policy and Practice: Working Paper 2).

- 8. McNally R, Alborz A. Developing methods for systematic reviewing in health services delivery and organisation: an example from a review of access to health care for people with learning disabilities I. Identifying the literature. Health Information and Libraries Journal. 2004;21:182–193. doi: 10.1111/j.1471-1842.2004.00512.x.

- 9. Alborz A, McNally R, Swallow A, Glendinning C. From the cradle to the grave. A literature review of access to health care for people with learning disabilities. Manchester: National Primary Care Research and Development Centre, University of Manchester; 2003. http://www.sdo.lshtm.ac.uk/access.htm.

- 10. Pawson R, Boaz A, Grayson L, Long A, Barnes C. Types and quality of knowledge in social care. London: Social Care Institute for Excellence; 2004. pp. 1–83. (Knowledge Review 3).

- 11. Edwards A, Russell I, Stott N. Signal versus noise in the evidence base for medicine: an alternative to hierarchies of evidence? Fam Pract. 1998;15:322. doi: 10.1093/fampra/15.4.319.

- 12. University of Salford. Health Care Practice Research and Development Unit Evaluation Tool for Quantitative Research Studies. 2001. [accessed 11.8.2004]. http://www.fhsc.salford.ac.uk/hcprdu/tools/quantitative.htm.

- 13. University of Salford. Health Care Practice Research and Development Unit Evaluation Tool for Qualitative Research Studies. 2001. [accessed 11.8.2004]. http://www.fhsc.salford.ac.uk/hcprdu/tools/qualitative.htm.

- 14. Popay J, Rogers A, Williams G. Rationale and standards for the systematic review of qualitative literature in health services research. Qualitative Health Research. 1998;8:341–351. doi: 10.1177/104973239800800305.

- 15. Mays N, Pope C. Assessing quality in qualitative research. British Medical Journal. 2000;320:52. doi: 10.1136/bmj.320.7226.50.

- 16. Reeves D, Alborz A, Hickson F, Bamford J. Community provision of hearing aids and related audiology services. Health Technol Assess. 2000;4(4):1–198.

- 17. Gomm R, Needham G, Bullman A, editors. Evaluating Research in Health and Social Care. London: Open University Press; 2000.

- 18. Hawker S, Payne S, Kerr C, Hardey M, Powell J. Appraising the evidence: reviewing disparate data systematically. Qualitative Health Research. 2002;12:1284–1299. doi: 10.1177/1049732302238251.

- 19. Cohen J. Statistical Power Analysis for the Behavioral Sciences. 2nd ed. Hillsdale, New Jersey: Lawrence Erlbaum; 1988.

- 20. Noblit G, Hare D. Meta-ethnography: synthesizing qualitative studies. Newbury Park, California: Sage; 1988.

- 21. Dixon-Woods M, Shaw RL, Agarwal S, Smith JA. The problem of appraising qualitative research. Quality and Safety in Health Care. 2004;13:223–225. doi: 10.1136/qshc.2003.008714.

- 22. Booth A, Brice A. Clear-cut? Facilitating health librarians to use information research in practice. Health Information and Libraries Journal. 2004;20(supplement 1):45–52. doi: 10.1046/j.1365-2532.20.s1.10.x.

- 23. Gulliford M, Morgan M, Hughes D, Beech R, Figueroa-Munoz J, Gibson B, et al. Access to Health Care. Report of a scoping exercise for the National Co-ordinating Centre for NHS Service Delivery and Organisation R&D (NCC SDO). London: NCCSDO; 2001.

- 24. Milner S, Bailey C, Deans J. “Fit for purpose” health impact assessment: a realistic way forward. Public Health. 2003;117:295–300. doi: 10.1016/S0033-3506(03)00127-6.

- 25. Geertz C. The interpretation of cultures: selected essays. New York: Basic Books; 1973.

- 26. Beverley C, Booth A, Bath P. The role of the information specialist in the systematic review process: a health information case study. Health Information and Libraries Journal. 2003;20:64–75. doi: 10.1046/j.1471-1842.2003.00411.x.

- 27. Grayson L, Gomersall A. A difficult business: finding the evidence for social science reviews. London: ESRC UK Centre for Evidence Based Policy and Practice, Queen Mary, University of London; 2003. (ESRC UK Centre for Evidence Based Policy and Practice: Working Paper 19).

- 28. Sackett DL, Richardson WS, Rosenberg W, Haynes RB. Evidence Based Medicine: How to Practice and Teach EBM. New York: Churchill Livingstone; 1997.

- 29. Fulop N, Allen P, Clarke A, Black N, editors. Studying the organisation and delivery of health services: Research Methods. London: Routledge; 2001.

- 30. NHS Public Health Resource Unit. Critical Appraisal Skills Programme. 2003. [accessed 23.9.2003]. http://www.phru.nhs.uk/casp/casp.htm.