SYNOPSIS

Objective

We determined the competency of the public health epidemiology workforce within state health agencies based on the Centers for Disease Control and Prevention/Council of State and Territorial Epidemiologists Competencies for Applied Epidemiologists in Governmental Public Health Agencies (AECs).

Methods

The competence level of current state health agency staff and the need for additional training were assessed against 30 mid-level AECs. Respondents used a five-point Likert scale, ranging from “strongly agree” to “strongly disagree,” to designate whether staff were competent in each area and whether additional training was needed for each of the competencies.

Results

Most states indicated their epidemiology workforce was competent in most of the AEC subject areas. The subject areas with the greatest number of states reporting competency (82%) were creating and managing databases and applying privacy laws. However, at least one-third of the states reported a need for additional training in all competencies assessed. The greatest reported needs were for additional training in surveillance system evaluation and use of knowledge of environmental and behavioral science in epidemiology practice.

Conclusion

The results indicate that most epidemiologists mastered the traditional discipline-specific competencies. However, it is unclear how this level of competency was achieved and what strategies are in place to sustain and strengthen it. The results indicate that epidemiologists have lower levels of competence in the nontraditional epidemiologic fields of knowledge. Future steps to ensure a well-qualified epidemiology workforce include assessing the full AECs in a subgroup of Tier 2 epidemiologists and implementing competencies in academic curricula to sustain reported competency achievements.

In November 2001, the Council of State and Territorial Epidemiologists (CSTE) conducted the first comprehensive nationwide assessment of core epidemiology capacity in state and territorial health departments. The assessment found that approximately 42% of 1,366 epidemiologists lacked formal training in epidemiology, indicating a large training gap in the public health workforce.1

The CSTE Epidemiology Capacity Assessment (ECA) was revised and again administered in 2004, this time to focus on the infrastructure of public health surveillance programs, core epidemiology capacity, and training opportunities for epidemiologists employed in health departments. Results revealed that 29% of epidemiologists lacked formal training or academic coursework in epidemiology, and the assessment indicated a need for additional training in several key areas: design and evaluation of surveillance systems, design of epidemiologic studies, design of data collection tools, data management, evaluation of public health interventions, and leadership and management training.2 However, 94% of respondents reported providing or funding training or education to enhance the competence of epidemiologists during the 12 months preceding the 2004 assessment.3

The findings from the 2001 and 2004 assessments prompted CSTE to focus its workforce initiatives on strengthening public health epidemiology. Four priority areas for building workforce capacity and enhancing the competency of epidemiologists were identified:

Measuring epidemiology capacity and filling the need for trained epidemiologists within the public health system;

Establishing public health competencies and addressing the training gap;

Identifying unique barriers to recruiting and retaining applied epidemiologists; and

Addressing funding gaps and leadership issues.4

CSTE collaborated with the Centers for Disease Control and Prevention (CDC) to build epidemiology workforce capacity through the CDC/CSTE Applied Epidemiology Fellowship program, convened the ECA workgroup to revise the epidemiologic assessment tool for 2006, and supported a team assigned to the National Public Health Leadership Institute. In addition, CDC and CSTE convened a workgroup of epidemiology experts—comprising representatives from state and local health agencies, schools of public health, private industry, and CDC—to develop Competencies for Applied Epidemiologists in Governmental Public Health Agencies (AECs) for local, state, and federal public health epidemiologists.

The objectives of the AECs are to define the discipline of applied epidemiology and describe what skills four different levels of practicing epidemiologists working in government public health agencies should have to accomplish required tasks.5 The AECs were created within the framework of the Core Competencies for Public Health Professionals, developed by the Council on Linkages Between Academia and Public Health Practice (COL).6 The expert panel modified the eight skill domains of the Core Competencies to address the unique elements of epidemiologic practice.

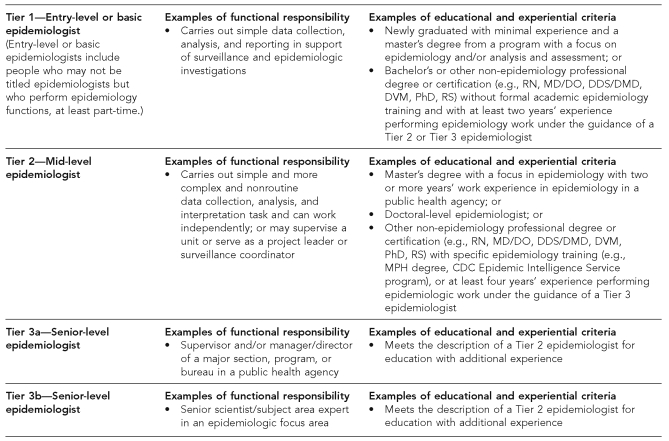

The panel, which first convened in October 2004, developed the AECs in four tiers. Each tier focused on epidemiologists with increasing levels of experience and responsibilities (Figure 1).5 The panel approved the final competencies in 2006 after a validation period and with broad input from practicing epidemiologists and public health organizations.

Figure 1.

Functional responsibility and educational and experiential criteria of epidemiologists from the CDC/CSTE AECs^a

^a Centers for Disease Control and Prevention (US) and Council of State and Territorial Epidemiologists. CDC/CSTE applied epidemiology competencies for governmental public health agencies: preface [cited 2007 Jan 28]. Available from: URL: http://www.cste.org/Assessment/competencies/Appiled EPI preface jan4.pdf

RN = registered nurse

MD = medical degree

DO = Doctor of Osteopathy

DDS = Doctor of Dental Surgery

DMD = Doctor of Dental Medicine

DVM = Doctor of Veterinary Medicine

PhD = Doctor of Philosophy

RS = registered sanitarian

CDC = Centers for Disease Control and Prevention

CSTE = Council of State and Territorial Epidemiologists

AECs = Competencies for Applied Epidemiologists in Governmental Public Health Agencies

MPH = Master of Public Health

METHODS

Questions to measure competency-specific training needs were included in the 2006 ECA tool.7 All questions were based on the perspective of the state epidemiologist or a designated senior-level health official. After the 2006 ECA was pilot-tested in seven states, it was made available to state health agencies in all 50 states, the District of Columbia, and eight U.S. jurisdictions and territories from May through August 2006. The competence level of current staff and the need for additional training were assessed against 30 competencies from part II of the 2006 ECA titled “Workforce Competency: Recruitment and Retention.”7

In formulating 30 competency statements for the 2006 ECA, a CSTE working group mapped the questions to the CDC/CSTE AECs for Tier 2 (mid-level) epidemiologists (Figure 1).8 Questions covered all eight Core Competency domains: skills of analytic assessment; basic public health sciences; cultural competency; communication; community dimensions of practice; financial planning and management; leadership and systems thinking; and policy development/program planning.

The 30 competencies were selected based on a review of the relevance and the predominant day-to-day applicability of each competency. While the authors strived to remain at the competency level, in some instances, subcompetencies—which were perceived to have special relevance to working epidemiologists' daily activities—were included. The discipline-specific competency domains were intentionally overrepresented to reflect current general practice nationwide. An attempt was made to include some key cross-cutting competencies such as systems thinking, communication, and evaluation.

Respondents to the 2006 ECA used a five-point Likert scale, ranging from strongly agree (1) to strongly disagree (5), to designate whether their staff were competent in an area and whether additional training was needed for each of the 30 selected Tier 2 competencies.7,8

For each competency, response to “staff are competent in this area” was interpreted as agreement (i.e., competence) if the respondent selected a 1 or 2, neutral if the respondent selected a 3, and disagreement (i.e., not competent) if the respondent selected a 4 or 5. Response to “additional training is needed” was interpreted as agreement (i.e., more training needed) if the respondent selected a 1 or 2, neutral if the respondent selected a 3, and disagreement (i.e., more training not needed) if the respondent selected a 4 or 5.
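The recoding rule described above can be sketched in a few lines of Python. This is an illustrative sketch only, not the analysis code used for the 2006 ECA: the function names `recode_likert` and `percent_agree` and the sample responses are hypothetical, and excluding skipped items from the denominator is an assumption rather than a documented step.

```python
from collections import Counter


def recode_likert(score: int) -> str:
    """Collapse a five-point Likert score into agree / neutral / disagree."""
    if score in (1, 2):
        return "agree"
    if score == 3:
        return "neutral"
    if score in (4, 5):
        return "disagree"
    raise ValueError(f"Likert score must be 1-5, got {score!r}")


def percent_agree(scores):
    """Percentage of respondents who selected 1 or 2.

    Assumption: skipped items (None) are dropped from the denominator.
    """
    answered = [s for s in scores if s is not None]
    if not answered:
        raise ValueError("no responses to summarize")
    counts = Counter(recode_likert(s) for s in answered)
    return 100 * counts["agree"] / len(answered)


# Hypothetical responses from five states to one statement; None is a skipped item.
sample = [1, 2, 3, 5, None]
print(recode_likert(4))
print(percent_agree(sample))
```

The same function applies to both statements ("staff are competent in this area" and "additional training is needed"), because the 1 to 5 coding is identical; only the interpretation of agreement differs.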

The response rate for the 2006 CSTE ECA was 92% (54/59: 50 states, the District of Columbia, and three U.S. jurisdictions); however, not all participants responded to each question.

RESULTS

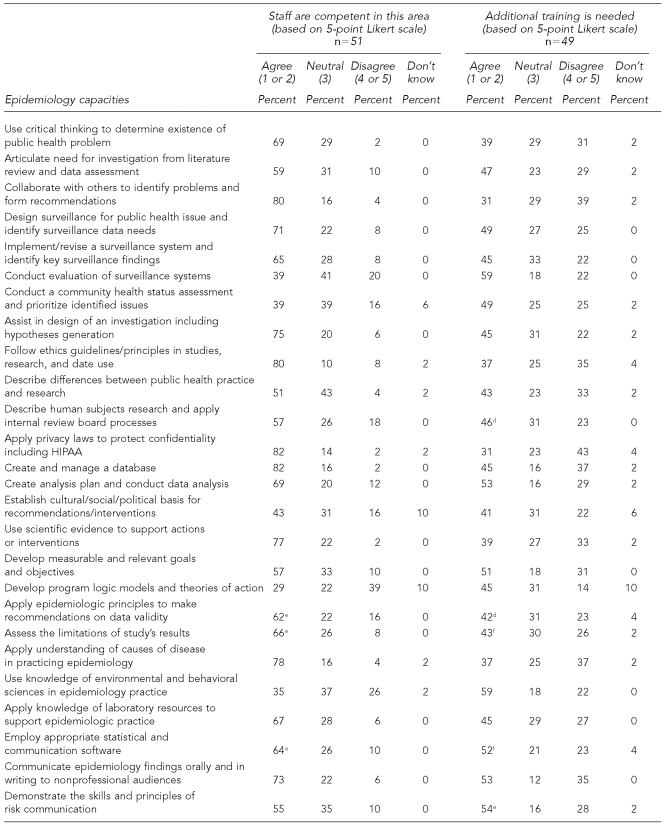

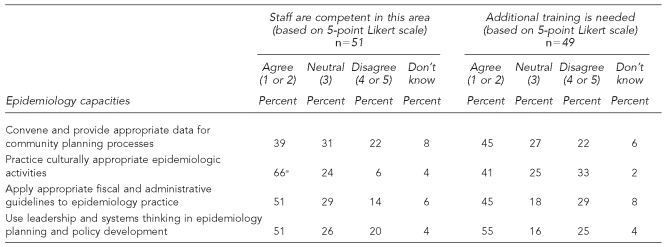

Staff are competent in this subject area

Most states indicated their epidemiology workforce was competent in most of the competency areas. For 24 (80%) of the 30 competencies, more than half of respondents indicated their staff were competent. For the remaining six competencies, relatively few states reported adequate levels of training (Table). Subject areas with the greatest number of states reporting competency (82%) were creating and managing databases and applying privacy laws to protect confidentiality, including the Health Insurance Portability and Accountability Act of 1996. In seven other subject areas, comprising a mix of topics, approximately three-quarters of states (71% to 80%) reported staff competence.

Table.

Competency and training needs of Tier 2^a epidemiologists,^b from the CDC/CSTE AECs^c

^a Tier 2 competencies were selected as a general level of assessment for all epidemiologists.

^b Centers for Disease Control and Prevention (US) and Council of State and Territorial Epidemiologists. Competencies for applied epidemiologists in governmental public health agencies [cited 2007 Jan 28]. Available from: URL: http://www.cdc.gov/od/owcd/cdd/aec or http://www.cste.org/competencies.asp

^c Council of State and Territorial Epidemiologists. CDC/CSTE applied epidemiology competencies for governmental public health agencies: preface [cited 2007 Jan 28]. Available from: URL: http://www.cste.org/Assessment/competencies/AppiledEPIprefacejan4.pdf

n=48

n=50

n=47

CDC = Centers for Disease Control and Prevention

CSTE = Council of State and Territorial Epidemiologists

AECs = Competencies for Applied Epidemiologists in Governmental Public Health Agencies

HIPAA = Health Insurance Portability and Accountability Act

For half of the 30 competencies, states reported acceptable training. This contrasts with the six competencies that states indicated were areas of substantial training weakness. A relatively high proportion of states (69%) reported competence both in the use of critical thinking to determine the existence of public health problems and in creating an analysis plan and conducting data analysis. Also in this group were competencies at the lower end of the range, for which approximately half of the states reported competence (51%), including using leadership and systems thinking in epidemiologic planning and policy development, and applying appropriate fiscal and administrative guidelines to epidemiology practice. As with competencies for which a fairly large proportion of states reported adequate training, these competencies cut across the spectrum of topics, from those typically included in an academic program in epidemiology (e.g., using appropriate statistical software), to those primarily learned on the job (e.g., developing measurable project goals), to those for which public health workers often received continuing education (e.g., describing human subjects research and internal review board).

Additional training is needed

Approximately one-third of states reported a need for additional training in all 30 competencies. The greatest needs were reported for additional training in surveillance system evaluation and use of knowledge of environmental and behavioral science in epidemiology practice (59%). Other subject areas of significant training need were: leadership and systems thinking (55%), risk communications (54%), communicating findings to lay audiences (53%), using statistical software (52%), developing measurable goals and objectives (51%), and creating an analysis plan and analyzing data (53%).

In general, reporting staff competency in a given subject area correlated with indicating no need for additional training in that area. For example, 82% of states reported staff competence in applying privacy laws to protect confidentiality, and 31% reported a need for additional training in this area. Conversely, 35% of states indicated staff competency in using knowledge of environmental and behavioral science in epidemiology practice, and 59% reported a need for additional training in this competency. For a few competencies, states reported both an area of training strength and a need for additional training. For example, 82% of states reported staff competence in creating and managing databases; yet 45% also indicated staff needed additional training in that subject area.

DISCUSSION

Although the 2004 ECA seemed to indicate gaps in knowledge at many levels within the cadre of government epidemiologists, the data were insufficient to clarify those gaps. Specifically, the limited information hampered the development of an action plan to address those gaps. Thus, for the 2006 ECA, CSTE redesigned and expanded the ECA training section, most notably to include a subset of the recently developed AECs. In addition, more detailed information was collected on training opportunities.

The 2006 ECA findings brought good news, anticipated news, and opportunities to design a road map for the future. The results (shown in the Table) clearly indicate that most Tier 2 epidemiologists had mastered the traditional discipline-specific competencies. Although reassuring, the ECA does not indicate how epidemiologists achieved this level of competency and what strategies are in place to sustain and strengthen it. Most likely, these results were influenced by a variety of factors, such as years of employment, market availability, and infrastructure capacity. For example, the competency addressing the application of privacy laws typically is learned on the job and is influenced by employment seniority. On the other hand, market availability and infrastructure capacity are local determinants pointing to the ability to attract and train epidemiologists and may support the degree of mastery associated with educational competencies, such as the creation of databases.

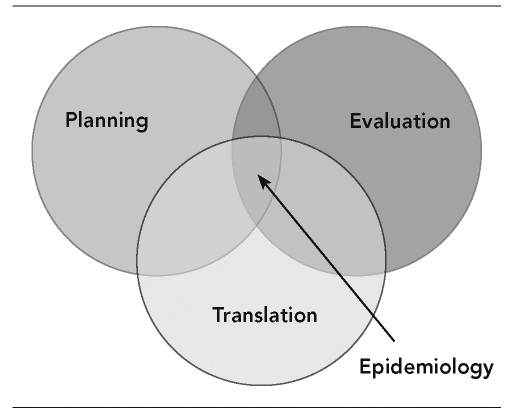

The findings of the 2006 ECA confirmed the anticipated news: lower levels of competence in the nontraditional epidemiologic fields of knowledge. Lower scores fell into three intertwined competency categories: systems thinking and planning, evaluation, and translation to practice (Figure 2). Gaps in competency to employ a systems-driven holistic approach to planning hamper the development and implementation of evaluation frameworks designed to translate research findings into practical, community-based interventions.

Figure 2.

The interconnectedness of systems thinking and planning, evaluation, and translation to practice

The lower scores in two competencies—application of fiscal and administrative guidelines to epidemiology practice and use of leadership and systems thinking in epidemiologic planning and policy development—express competency deficiencies in systems thinking. In addition, community health status assessment and data generation for community planning processes point to the lack of knowledge to employ a holistic approach to planning and to underutilization of epidemiology to connect problem identification with community-based solutions. Lower percentages for evaluation indicate not only a lack of knowledge of evaluation as a competency domain, but also the effect of that deficiency on the application in a discipline-specific setting. Specifically, the development of logic models and ability to evaluate surveillance systems are related.

The third area of deficiency is making science work for people—for example, describing the differences between research and practice, establishing the cultural and sociopolitical basis for interventions, and applying environmental and behavioral science in epidemiology practice. Of special note is the apparent discrepancy in scores between communicating findings (73%) and demonstrating skills in risk communication (55%). Environmental epidemiologic studies, regardless of how well executed, rarely have findings that do not require a careful explanation of the risks and limitations.

The 2006 ECA provides some data regarding training the epidemiology workforce.7 The data indicate that accessibility to training opportunities has improved substantially. The trend toward distance learning is cost-effective and readily available to employees. Also positive is a movement toward institutionalizing training by integrating it in performance-review processes and supporting a training coordinator for internal training. Only 6% of state health agencies reported requiring continuing education; raising that proportion, combined with the high (90%) reported access to training, would substantially help sustain workforce competency.

To ascertain how states would assure access to training and continuing education, specific questions were added to the 2006 ECA, giving respondents a series of choices. Based on the answers to those questions, most states indicated that training in epidemiology was provided through partnerships.7 CDC's role is affirmed as a known knowledge and practice base in epidemiology. Similarly, schools of public health are the leading producers of epidemiologists and discipline-specific academic course work. The influence of public health infrastructure support from financial resources targeting preparedness is evident in the finding that the nation's Centers for Public Health Preparedness are also a commonly used training venue for epidemiologists.

Limitations

The results of the 2006 ECA should be interpreted within the context of a few key limitations. Self-reporting inherently limits how accurately the good news, that most epidemiologists achieved high levels of competency in the discipline-specific domains, can be interpreted. The 2006 ECA respondents were asked to describe the competence and training needs in the selected competency areas. While the definition of competence was not explicitly included within the 2006 ECA, all respondents had access to the full AECs, which include a preface that describes competence as well as the context and use of the competency statements. However, the methodology respondents used to determine competence most likely varied.

Furthermore, the respondent providing the information on competence and training needs may or may not have had direct supervision over the entire epidemiology staff. Feedback from senior-level epidemiologists who served as respondents to the ECA indicated that while completion of the ECA tool by every mid-level epidemiologist within their respective organizations was difficult to achieve, every attempt was made to canvass the target audience. The design of the assessment prohibited the collection of information that could have described how those competency levels were achieved. This makes it difficult to identify workable strategies aimed at sustaining these positive results.

Lastly, only a subset of 30 competencies from the more comprehensive AECs was included. The results should be viewed with that in mind.

CONCLUSION

The 2006 ECA provides unique insights into the quality of the epidemiology workforce. Three observations provide a road map for the future:

The first assessment efficiently validates the utility of the AECs as an important tool to measure and improve the knowledge and skills of epidemiologists employed in state and territorial health departments. To gain a more comprehensive understanding of competency in the existing epidemiology workforce, an assessment of the full AECs in a subgroup of Tier 2 epidemiologists is recommended.

The higher scores in the discipline-specific competencies must not be misinterpreted to connote mastery; this was revealed in some of the responses in which states indicated staff competency (e.g., in creating databases [82%]) but also the need for additional training (e.g., in creating databases [45%]). Future assessments should include questions ascertaining the methods that were employed to achieve such competency levels and that document best practices to sustain those results.

Competencies need to be implemented in existing and future academic curricula to sustain this self-reported achievement. Priority should be given to the development and implementation of curricula addressing the nontraditional competency domains, specifically planning, evaluation, and translation to practice.

Because the AECs uniquely blend educational and workforce (practice) competencies, translating those competencies into curricula and ultimately into training courses demands a committed collaboration between academia and practice. Examples of productive partnerships exist in the form of academic health departments, but the lack of sustainable resources for such endeavors threatens this proven path to success. If the epidemiologic workforce is to maintain the reported level of competence, government support as articulated by the Institute of Medicine is imperative.9 Workforce quality and quantity are closely linked. Failure to address the lack of epidemiologists in specific disciplines, such as environmental health and chronic disease prevention, will commensurately affect competence.

Footnotes

This work was supported by cooperative agreement U60/CCU07277 from the Centers for Disease Control and Prevention to the Council of State and Territorial Epidemiologists.

REFERENCES

- 1. Boulton ML, Malouin RA, Hodge K, Robinson L. Assessment of the epidemiologic capacity in state and territorial health departments—United States, 2001. MMWR Morb Mortal Wkly Rep. 2003;52(43):1049–51.

- 2. Boulton ML, Abellera J, Lemmings J, Robinson L. Assessment of epidemiologic capacity in state and territorial health departments—United States, 2004. MMWR Morb Mortal Wkly Rep. 2005;54(18):457–9.

- 3. Council of State and Territorial Epidemiologists. 2004 national assessment of epidemiologic capacity: findings and recommendations [cited 2007 Jan 28]. Available from: URL: http://www.cste.org//Assessment/ECA/pdffiles/ECAfinal05.pdf.

- 4. Council of State and Territorial Epidemiologists. CSTE special report: workforce development initiative. 2004 Jun [cited 2007 Jan 28]. Available from: URL: http://www.cste.org/pdffiles/Workforcesummit.pdf.

- 5. Centers for Disease Control and Prevention (US) and Council of State and Territorial Epidemiologists. CDC/CSTE applied epidemiology competencies for governmental public health agencies (AECs): preface [cited 2007 Jan 28]. Available from: URL: http://www.cste.org/Assessment/competencies/Appiled EPI preface jan4.pdf.

- 6. Council on Linkages Between Academia and Public Health Practice. Core competencies of public health professionals [cited 2007 Jan 28]. Available from: URL: http://www.phf.org/Link.htm.

- 7. Council of State and Territorial Epidemiologists. 2006 national assessment of epidemiologic capacity: findings and recommendations [cited 2007 Jun 21]. Available from: URL: http://www.cste.org/pdffiles/2007/2006CSTEECAFINALFullDocument.pdf.

- 8. Council of State and Territorial Epidemiologists. Competencies for applied epidemiologists in governmental public health agencies (AECs) [cited 2007 Jan 28]. Available from: URL: http://www.cdc.gov/od/owcd/cdd/aec or http://www.cste.org/competencies.asp.

- 9. Gebbie KM, Rosenstock L, Hernandez LM, editors. Who will keep the public healthy? Educating public health professionals for the 21st century. Washington: National Academies Press; 2003. p. 157.