Abstract

The national program to report hospital-level outcomes for transplantation has been in place since 1991, yet it has not been addressed in the existing literature on hospital report cards. We study the impact of reported outcomes on demand at kidney transplant centers. Using a negative binomial regression with hospital fixed effects, we estimate the number of patients choosing each center as a function of reported outcomes. Parameters are identified by the within-hospital variation in outcomes over five successive report cards. We find some evidence that report cards influence younger and college-educated patients, but overall report cards do not affect demand.

Quality report cards for providers and health plans have proliferated in recent years. While report cards supply valuable feedback to the profiled institutions, the primary objective of releasing the data publicly is to influence patient behavior (Wicks and Meyer 1999; Palmer 1995). Most of the previous literature on the impact of provider report cards has focused on the cardiac surgery profiling programs in New York and Pennsylvania. Each led to improvements in patient outcomes and the process of care (Baker et al. 2002; Bentley and Nash 1998; Chassin 2001; Hannan et al. 1994), though it is unclear whether the report cards were used by patients or their referring physicians. One study found that market shares increased more rapidly at New York hospitals with good outcomes following the release of report cards (Mukamel and Mushlin 1998), and another (Mukamel et al. 2004) found that the release of reports influenced patients’ choice of cardiac surgeon. However, Romano and Zhou (2004) found no relationship between reported outcomes and choice behavior, and Schneider and Epstein (1999) found that few Pennsylvania patients were aware of report cards prior to undergoing surgery.

In general, studies of the impact of publicly releasing outcomes data on patient choice and market share report negative results (Marshall et al. 2000; Mennemeyer, Morrisey, and Howard 1997; Schauffler and Mordavsky 2001; Vladeck et al. 1988). These studies must be interpreted cautiously due to small sample sizes, presence of capacity constraints in some hospitals (limiting the ability of favorable report cards to increase volume), and lack of new information in report cards relative to pre-existing perceptions of quality (Mukamel and Mushlin 2001).

While the cardiac outcome reporting programs in Pennsylvania and New York have been subject to extensive evaluation, outcome report cards for solid organ transplantation have received scant attention in the quality improvement literature. The goal of this study is to determine whether report cards influence the number of kidney waiting list registrations and live donor transplants at transplant hospitals. Our results suggest that they do not: hospitals that demonstrate an improvement (deterioration) in outcomes from one report card to the next do not experience a proportional increase (decrease) in patient demand.

Background on Kidney Transplantation

In 2001, there were 26,882 kidney transplants performed at more than 230 hospitals. About one-half of transplant recipients receive a kidney from a living donor, usually a friend or family member. Candidates who cannot obtain a living donor kidney are placed on the waiting list for deceased donor kidneys. Most major cities now have at least two kidney transplant centers, and, although the majority of procedures continue to be performed at large academic medical centers, kidney transplantation increasingly is viewed as a “routine” medical procedure on par with other major surgeries.

End-stage renal disease patients deemed suitable candidates for transplantation typically choose a transplant center shortly after diagnosis in consultation with their nephrologist. From a patient’s perspective, transplant centers are differentiated primarily by travel time and perceived quality. Nearly all transplant operations are covered by insurance, and patients’ copayments do not vary as long as they choose an in-network hospital. Candidates for deceased donor transplants who live near regional boundaries also may consider expected waiting times when choosing a transplant center, but most face little variation among nearby centers.

Patients’ choices are constrained by their insurers. Medicare covers transplantation at any center meeting a fairly minimal set of criteria in terms of staffing and procedure volume, but state-run Medicaid programs cover kidney transplantation at in-state facilities only. Private insurers bargain aggressively with transplant programs, and most restrict coverage to a few centers in each geographic area under the guise of “centers of excellence” programs.

Report Cards in Transplantation

Hospital-specific graft and patient survival reports for solid organ transplantation have been available to patients and physicians since 1991, and are produced as part of the federal government contract for the Scientific Registry of Transplant Recipients. (The “graft” is the transplanted organ and the “graft survival rate” measures the proportion of patients with functioning transplanted organs at a specific time post-transplant. A patient who dies with a functioning transplant is counted against the graft survival rate, regardless of the cause of death.) Reports list by organ type each hospital’s actual graft and patient survival rates and expected graft and patient survival rates. According to the Scientific Registry (2004), “the ‘Expected Graft Survival’ is the fraction of grafts that would be expected to be functioning at each reported time point, based on the national experience for patients similar to those at this center.” The registry computes expected rates by estimating a Cox proportional hazards model (covariates include primary diagnosis, age, sex, race, and various physiologic measures) for all patients receiving a kidney transplant in the United States during a specific time period and using the estimated coefficients to project survival rates for the patient populations of each hospital. Reports for 1991, 1994, and 1997 were released in hardcopy format to medical libraries and government document depositories. They were not widely publicized.

In 1998 and later in 1999, the Division of Transplantation in the Department of Health and Human Services, which oversees the Scientific Registry contract, criticized the center-specific report card program on the grounds that the data in the reports were out of date by the time they were released and the hardcopy reports were inaccessible to patients and nephrologists (U.S. DHHS 1998; 1999). The Division of Transplantation directed the United Network for Organ Sharing (UNOS), which held the Scientific Registry contract at the time, to “use rapidly advancing Internet technology to make information swiftly, conveniently, and inexpensively available throughout the nation” (U.S. DHHS 1998). In response, UNOS began releasing reports on the Internet on an annual basis.

In October 2000, the contract for the Scientific Registry of Transplant Recipients was re-bid and awarded to the University Renal Research and Education Association (URREA) at the University of Michigan. URREA releases reports on the Internet every six months (see http://www.ustransplant.org/csr_0507/csrDefault.aspx). The types of information provided in a report are displayed in Table 1. The performance measures illustrated in the table are based on transplants performed at an unnamed hospital between July 1, 2001 and Dec. 31, 2003, as stated in the January 2005 report.

Table 1.

Example of a center-specific report card

| Graft survival by time since transplant, adults 18+ | Hospital X: 1 Month | Hospital X: 1 Year | United States: 1 Month | United States: 1 Year |
|---|---|---|---|---|
| Transplants (n = number) | 677 | 677 | 34,696 | 34,696 |
| Graft survival (%) | 98.38 | 94.35 | 96.60 | 91.38 |
| Expected graft survival (%) | 96.64 | 91.81 | | |
| Ratio of observed-to-expected failures | .48 | .66 | 1.00 | 1.00 |
| 95% Confidence interval | | | | |
| Lower bound | .24 | .46 | | |
| Upper bound | .86 | .92 | | |
| P-value (2-sided) | <.01 | .012 | | |
| How do the rates at this center compare to those in the nation? | Statistically higher | Statistically higher | | |
| Follow-up days reported by center (%) | 100.0 | 98.4 | 99.4 | 95.7 |
| Maximum days of follow-up (n) | 30 | 365 | 30 | 365 |
| Transplant time period | July 1, 2001 to Dec. 31, 2003 | July 1, 2001 to Dec. 31, 2003 | July 1, 2001 to Dec. 31, 2003 | July 1, 2001 to Dec. 31, 2003 |

Note: Center-specific report data released at www.ustransplant.org in January 2005.

Methods

Overview

We created a data set listing the combined number of patients registering for deceased donor kidney transplants and patients receiving living donor transplants (i.e., patient demand) at each hospital during the time periods between the release of successive report cards. Using a regression model for panel data, we studied the impact of reported outcomes on patient demand. Parameters were identified from the within-hospital variation in the number of patients choosing the hospital and reported outcomes. For the sake of brevity, we discuss the impact of report cards on patients’ choices, recognizing that in reality the choice of transplant hospital is made jointly by patients, their physicians, and insurers.

Study Population

The study sample consists of all patients age 18 and older who received living donor transplants, and all patients age 18 years or older who registered on the deceased donor waiting list (and who did not subsequently undergo a live donor transplant) in the continental United States between Sept. 1, 1999 (the release date of the first report card on the Internet) and Oct. 30, 2002. Some of the wait list registrants have been transplanted since registering; others have died or are still waiting. The data were obtained from the Scientific Registry of Transplant Recipients, which compiles the information from forms that transplant centers are required to file with the Organ Procurement and Transplantation Network.

From the initial sample of 75,821 transplant recipients (i.e., patients with living donors) and candidates (i.e., patients registering on the waiting list), we excluded: patients who had been transplanted previously (the vast majority of whom register at the institution where they received the first procedure), candidates for multi-organ transplants (who must choose from a much narrower set of hospitals), non-citizens, hospitalized patients, candidates for double kidney transplants, patients with missing data for their state of residence, patients at centers with fewer than 10 listings during the study period, patients at Veterans Administration hospitals or children’s hospitals, and patients choosing transplant centers that were not in operation long enough to be included in the most recent center-specific survival report. The final sample consisted of 59,332 patients, aggregated to the hospital-period level, as later described.

Results must be interpreted cautiously in light of the fact that we did not observe a “control” sample of patients who lacked access to report cards (for example, registrants in the pre-report card era). The interpretation of a negative finding (i.e., that registrations are not influenced by report card measures) is straightforward: report cards do not affect choice. However, a finding that hospitals with better report card performance experience proportionately larger increases in demand does not imply causality. If registrants or their referring physicians learned about quality through informal channels, then it might appear as though report cards affect choice, even though choices would be responsive to quality in the absence of report cards. In summary, failure to observe a control sample may lead us to incorrectly reject the null hypothesis of no effect (i.e., a false positive), but it does not lead us to fail to reject the null when the alternative hypothesis is correct (i.e., a false negative). Fortunately (from the standpoint of interpretability), we observe mostly negative effects in this analysis.

Variable Construction

The dependent variable is the number of patients choosing transplant center i during period t (t=1, 2, 3, 4, 5), where periods are defined by the release of five outcome report cards beginning in September of 1999. We separately examine demand among patients with a college degree (N = 6,760), patients between 18 and 40 years of age (N = 15,486; the main sample includes patients between 18 and 91 years of age, and we chose 40 arbitrarily as the upper bound for “young” patients), privately insured patients (N = 21,307), and transplant patients who received a kidney from a living donor (N = 18,640). Note that we only observe educational attainment for patients registering on the deceased donor wait list. Thus, the analysis of demand among college-educated patients excludes the 70% or so of living donor recipients who did not register on the waiting list (30% of living donor recipients register on the deceased donor wait list pre-transplant in the event the potential living donor is unable to donate). Also note that for patients registering at more than one hospital (about 5%), we counted only the first registration. Results for models that counted the second registration were similar.

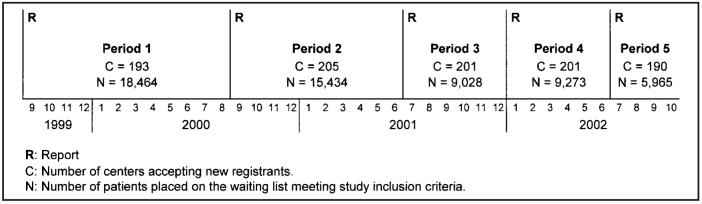

Figure 1 displays the study timeline, the number of centers represented in the data in each period, and the number of patients in the study sample choosing transplant centers in each period. The number of transplant centers varies over time because some hospitals opened transplant programs while others closed programs. To be included in the data, a hospital had to accept registrants for a minimum of two consecutive periods. Period 1 is defined as the time between the release of the first center-specific survival report on the Internet in September 1999 and the release of the second report in September 2000. Periods 2 through 5 are defined similarly.

Figure 1.

Timeline of the release of report cards during the study period

The principal independent variable is hospital i’s actual one-year post-transplant graft survival rate minus its expected graft survival rate, as listed in the center-specific survival report released at the beginning of period t: $\text{actual survival}_{it} - \text{expected survival}_{it}$. The variable varies by hospital and by period. Positive values indicate better performance (i.e., actual survival exceeds expected survival). We use graft survival rates rather than patient survival rates on the theory that patient survival rates are more likely to be influenced by factors beyond hospitals’ control. In any event, the two are highly correlated. URREA computes expected survival rates by: 1) estimating graft survival time as a function of patient and donor characteristics using data on all transplants in the United States in a given time span, and then 2) computing expected survival rates for each transplant center based on the estimated parameters and the subsample of patients transplanted at the center. According to URREA (Scientific Registry of Transplant Recipients 2004), “the ‘Expected Graft Survival’ is the fraction of grafts that would be expected to be functioning at each reported time point, based on the national experience for patients similar to those at this center.”

To determine the sensitivity of results to different hospital performance measures, we separately estimate three additional sets of models where performance is characterized by: 1) the actual graft survival rate, unadjusted for differences in patient mix; 2) the ratio of observed to expected graft failure rates; and 3) the actual graft survival rate minus the expected graft survival rate, divided by the standard error of the expected graft survival rate. The last is a “z-score” (as in Luft et al. 1990) with a theoretical range of -∞ to ∞. Again, higher values are better. Adjusting for the standard error reflects the degree of statistical confidence in the observed difference between the actual and expected graft survival rates. Hereafter, we refer to these various measures of graft survival rates as hospital “performance,” recognizing that quality is multi-dimensional and survival rates are affected by many factors excluded from the statistical model used to compute expected survival rates.
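The four performance measures can be illustrated with a short sketch. It uses the one-year figures for Hospital X from Table 1 and rests on two assumptions that are not from the report: the standard error of the expected rate (`se_expected`) is a made-up value, and the observed-to-expected failure ratio is approximated as a simple ratio of failure proportions, whereas the published ratio is model-based and can differ (Table 1 lists .66).

```python
def performance_measures(actual, expected, se_expected):
    """Return the four performance measures for one hospital-report pair.

    actual, expected: one-year graft survival rates as proportions (0 to 1).
    se_expected: standard error of the expected rate (hypothetical input).
    """
    difference = actual - expected
    # Simple ratio of failure proportions; an approximation to the
    # model-based ratio shown in the published report.
    oe_failure_ratio = (1.0 - actual) / (1.0 - expected)
    z_score = difference / se_expected
    return {
        "actual": actual,
        "actual_minus_expected": difference,
        "observed_over_expected_failure": oe_failure_ratio,
        "z_score": z_score,
    }


# One-year values for Hospital X in Table 1; the standard error is invented.
measures = performance_measures(actual=0.9435, expected=0.9181, se_expected=0.01)
for name, value in measures.items():
    print(f"{name}: {value:.4f}")
```

For the first, second, and fourth measures higher values indicate better performance; for the failure ratio, lower values do.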

We include two additional independent variables to measure temporal changes in the pool of patients from which hospitals draw transplant registrants. The first is total patient demand within a 100-mile radius of the hospital. The second is total patient demand in the donut-shaped region between 100 and 200 miles of the hospital. Only 2.5% of patients choose a hospital that is more than 200 miles from their home. Typically, hospital-level regression models also control for teaching status, bed size, and ownership status. Use of a fixed-effects model, as subsequently described, obviates the need to control for these and other time-invariant hospital attributes.
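As an illustration of how the two catchment-area controls could be built, the sketch below counts patients in the 0 to 100 mile circle and the 100 to 200 mile ring around a hospital. It assumes great-circle (haversine) distance between coordinates; the text does not say how distances were measured, and the function names and coordinates are hypothetical.

```python
import math

EARTH_RADIUS_MILES = 3958.8  # mean Earth radius


def haversine_miles(lat1, lon1, lat2, lon2):
    """Great-circle distance in miles between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_MILES * math.asin(math.sqrt(a))


def ring_demand(hospital, patients):
    """Count patients within 100 miles and between 100 and 200 miles.

    hospital: (lat, lon); patients: iterable of (lat, lon) home locations
    for everyone choosing any center in the period.
    """
    dist100 = dist200 = 0
    for lat, lon in patients:
        d = haversine_miles(hospital[0], hospital[1], lat, lon)
        if d <= 100:
            dist100 += 1
        elif d <= 200:
            dist200 += 1
    return dist100, dist200


# Invented coordinates: one degree of latitude is roughly 69 miles.
hospital = (40.0, -75.0)
patients = [(40.0, -75.0), (41.0, -75.0), (42.0, -75.0), (44.0, -75.0)]
print(ring_demand(hospital, patients))
```

Repeating the count for each hospital and period would give the two control variables, which vary over time as the local pool of patients changes.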

Analysis

The dependent variable - the number of registrations - is a count variable observed for the same set of hospitals over multiple periods, so a regression model for handling count dependent variables for panel data is indicated. Poisson models are frequently used in this context, but they assume that the expected value of the dependent variable is equal to its variance. We tested for and rejected this assumption using Cameron and Trivedi’s (1990) regression test. Following the advice of Allison and Waterman (2004), we instead use a negative binomial regression model with a complete set of period and hospital fixed effects. The model is:

$$E[Y_{it} \mid \varepsilon_{it}] = \exp\left(\alpha_i + \gamma_t + \beta_1\,\mathrm{PERFORM}_{it} + \beta_2\,\mathrm{DIST100}_{it} + \beta_3\,\mathrm{DIST200}_{it} + \varepsilon_{it}\right)$$

where $Y_{it}$ is the number of patients choosing hospital i in period t; $\alpha_i$ represents hospital i’s fixed effect; $\gamma_t$ is a fixed effect for period t; $\beta_1$, the parameter of interest, captures the marginal effect of hospital performance (PERFORM); $\beta_2$ and $\beta_3$ are parameters on the variables representing total demand within 100 miles (DIST100) and between 100 and 200 miles (DIST200); and $\varepsilon_{it}$ is an independently and identically distributed error term. Hospital fixed effects capture the impact on demand of observed and unobserved hospital characteristics that do not change over time. The β parameters are identified from the within-hospital variation in performance and demand. Several statistical programs (for example, Stata, Stata Corporation, College Station, Texas) contain fixed-effects estimators for negative binomial models that do not require estimating parameters on the individual fixed effects. However, as shown by Allison and Waterman, these are not true fixed-effects models in the sense that it is possible to estimate a coefficient on a time-invariant characteristic. We estimate period and hospital fixed effects explicitly.
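The overdispersion check that motivates the negative binomial model can be sketched with the auxiliary-regression form of the Cameron and Trivedi test. The version below is a minimal illustration, not the authors' code: it tests the Poisson restriction Var(y) = μ against Var(y) = μ + αμ², the counts are invented, and the fitted means come from an intercept-only Poisson model (the sample mean) rather than the full specification.

```python
import math


def overdispersion_test(y, mu):
    """Auxiliary-regression test of Var(y) = mu against Var(y) = mu + alpha * mu**2.

    y: observed counts; mu: fitted Poisson means (same length).
    Returns (alpha_hat, t_statistic) from OLS of z on mu with no intercept,
    where z_i = ((y_i - mu_i)**2 - y_i) / mu_i.
    """
    z = [((yi - mi) ** 2 - yi) / mi for yi, mi in zip(y, mu)]
    sxx = sum(mi * mi for mi in mu)
    alpha_hat = sum(mi * zi for mi, zi in zip(mu, z)) / sxx
    residuals = [zi - alpha_hat * mi for zi, mi in zip(z, mu)]
    s2 = sum(r * r for r in residuals) / (len(y) - 1)
    t_statistic = alpha_hat / math.sqrt(s2 / sxx)
    return alpha_hat, t_statistic


# Invented registration counts for twelve hospital-periods.
counts = [5, 12, 30, 48, 59, 64, 75, 90, 110, 150, 20, 45]
# Intercept-only Poisson fit: every fitted mean equals the sample mean.
mu_hat = [sum(counts) / len(counts)] * len(counts)

alpha_hat, t_stat = overdispersion_test(counts, mu_hat)
print(f"alpha_hat = {alpha_hat:.3f}, t = {t_stat:.2f}")
```

A positive and statistically significant `alpha_hat` indicates that the variance exceeds the mean, which is the situation in which a negative binomial model is preferred to Poisson.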

Results

Summary statistics for the dependent variable (period-by-period patient demand) and independent variables are displayed in Table 2. By construction, the mean of the actual graft survival rate minus the expected graft survival rate is zero. Hospitals with positive values have better than expected performance and hospitals with negative values have worse than expected performance.

Table 2.

Summary statistics

| | Mean | S.D.^a | Minimum | Maximum |
|---|---|---|---|---|
| Registrations | 59 | 64 | 1 | 634 |
| Performance | | | | |
| Actual graft survival rate | .89 | .07 | .37 | 1.00 |
| Actual minus expected graft survival rate | .00 | .06 | -.52 | .18 |
| Observed ÷ expected graft failure rate | 1.02 | .58 | .00 | 5.84 |
| Z-score | -.10 | 1.61 | -20.69 | 4.44 |
| Number of registrants, 0 to 100 miles | 262 | 246 | 3 | 1,337 |
| Number of registrants, 100 to 200 miles | 133 | 136 | 0 | 1,009 |

^a S.D. = standard deviation.

Temporal variation in the independent variable of interest - hospital performance - is necessary to estimate a fixed effects model. Correlations between actual minus expected graft survival rates are displayed across reports in Table 3. Correlation coefficients between adjacent reports exceed .5, but correlations between reports separated by at least one period (for example, periods 1 and 3 or periods 2 and 4) are less than .5, suggesting that current performance is not necessarily indicative of past or future performance.

Table 3.

Correlation matrix for actual minus expected graft survival rates

| Period | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| 1 | 1.00 | | | | |
| 2 | .55 | 1.00 | | | |
| 3 | .46 | .73 | 1.00 | | |
| 4 | .33 | .46 | .61 | 1.00 | |
| 5 | .29 | .34 | .48 | .62 | 1.00 |

Table 4 displays incidence rate ratios (“rate ratios” hereafter) for hospital performance, equal to exp(β1), from negative binomial regressions for several different specifications. Incidence rate ratios are analogous to odds ratios. For the difference between actual and expected graft survival, actual graft survival, and the z-score, values greater than one indicate that better performance increases patient demand at a center, while values less than one indicate that better performance decreases demand. For the ratio of observed-to-expected graft failure rates, the reverse is true. Each panel of the table reports results for a different measure of quality.
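Because a rate ratio refers to a one-unit change in the performance measure, it helps to rescale it to a realistic change. The sketch below does this under the assumption that performance enters the regression on the proportion scale shown in Table 2, so that one unit equals 100 percentage points; the function name is hypothetical.

```python
import math


def demand_change_pct(irr, delta):
    """Percent change in expected demand for a `delta` change in performance,
    given the rate ratio for a one-unit change (irr = exp(beta))."""
    beta = math.log(irr)
    return (math.exp(beta * delta) - 1.0) * 100.0


# Table 4, column 2, age 18-40, actual minus expected survival: IRR = 2.07.
# Table 2: the standard deviation of that measure is .06.
print(round(demand_change_pct(2.07, 0.06), 1))  # 4.5
```

Under that assumption, a rate ratio of 2.07 implies that a one standard deviation improvement in actual minus expected graft survival raises expected demand among patients aged 18 to 40 by about 4.5%.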

Table 4.

Incidence rate ratios from negative binomial models

| Sample | (1) No fixed effects, all centers | (2) Hospital fixed effects, all centers | (3) Hospital fixed effects, >10 registrations | (4) Hospital fixed effects, >20 registrations |
|---|---|---|---|---|
| Performance = actual graft survival rate - expected graft survival rate | | | | |
| All registrants | 3.66 [1.69, 7.96]** | 1.10 [.77, 1.57] | 1.07 [.73, 1.57] | 1.14 [.75, 1.73] |
| College degree^a | 6.01 [1.95, 18.56]** | 1.84 [.76, 4.45] | 1.98 [.74, 5.34] | 3.39 [1.09, 10.53]** |
| Age 18-40 | 4.81 [1.96, 11.77]** | 2.07 [1.27, 3.35]** | 2.03 [1.21, 3.40]** | 2.35 [1.33, 4.13]** |
| Private insurance | 5.21 [2.11, 12.84]** | 1.19 [.70, 2.03] | 1.09 [.61, 1.97] | 1.39 [.72, 2.67] |
| Living donor | 2.90 [1.06, 7.93]** | 1.34 [.83, 2.16] | 1.37 [.82, 2.28] | 1.13 [.65, 1.96] |
| Performance = actual graft survival rate | | | | |
| All registrants | 3.00 [1.50, 6.00]** | 1.16 [.82, 1.63] | 1.04 [.72, 1.52] | 1.19 [.80, 1.77] |
| College degree^a | 4.04 [1.54, 10.58]** | 1.50 [.64, 3.53] | 1.59 [.61, 4.16] | 2.98 [1.00, 8.84]** |
| Age 18-40 | 3.83 [1.73, 8.49]** | 2.06 [1.30, 3.25]** | 1.92 [1.18, 3.12]** | 2.21 [1.30, 3.76]** |
| Private insurance | 4.39 [1.95, 9.85]** | 1.23 [.74, 2.07] | 1.06 [.60, 1.88] | 1.45 [.77, 2.72] |
| Living donor | 3.09 [1.27, 7.52]** | 1.47 [.93, 2.32]* | 1.42 [.87, 2.31] | 1.24 [.73, 2.10] |
| Performance = observed/expected graft failure^b | | | | |
| All registrants | .89 [.82, .96]** | .99 [.96, 1.03] | 1.00 [.96, 1.04] | 1.00 [.96, 1.04] |
| College degree^a | .84 [.75, .94]** | .95 [.87, 1.04] | .96 [.87, 1.06] | .93 [.83, 1.05] |
| Age 18-40 | .87 [.79, .96]** | .94 [.89, .98]** | .94 [.89, .99]** | .93 [.87, .98]** |
| Private insurance | .85 [.78, .94]** | .97 [.92, 1.03] | .99 [.93, 1.05] | .97 [.91, 1.04] |
| Living donor | .93 [.84, 1.03] | .99 [.94, 1.04] | .98 [.93, 1.04] | 1.00 [.94, 1.06] |
| Performance = z-score | | | | |
| All registrants | 1.04 [1.02, 1.07] | 1.00 [.99, 1.01] | 1.00 [.99, 1.01] | 1.00 [.99, 1.02] |
| College degree^a | 1.08 [1.04, 1.11]** | 1.02 [1.00, 1.05]* | 1.02 [1.00, 1.05]* | 1.03 [1.00, 1.06]** |
| Age 18-40 | 1.05 [1.03, 1.08]** | 1.02 [1.01, 1.03]** | 1.02 [1.01, 1.03]** | 1.02 [1.01, 1.03]** |
| Private insurance | 1.06 [1.03, 1.08]** | 1.01 [.99, 1.02] | 1.01 [.99, 1.02] | 1.01 [.99, 1.03] |
| Living donor | 1.04 [1.01, 1.07]** | 1.00 [.99, 1.02] | 1.00 [.99, 1.02] | 1.00 [.99, 1.01] |
| Number of hospitals | 206 | 206 | 151 | 104 |
| Number of observations^c | 1,005 | 1,005 | 745 | 513 |

Note: IRR = incidence rate ratio; CI = confidence interval. Cells report the IRR followed by its 95% CI in brackets.

^a We observe educational attainment only for patients who registered on the waiting list, not for the 68% of living donor recipients who did not register prior to transplantation.

^b Higher values indicate worse performance.

^c Each center is observed for, at most, five periods.

\* p < .10.

\*\* p < .05.

Incidence rate ratios for the control variables measuring the total demand in period t within a 100-mile radius and a 100 to 200 mile donut are not shown, but the estimated coefficients were positive and the ratios were in most cases significantly different from one. The ratio for demand within a 100-mile radius was larger than the ratio for demand within a 100 to 200 mile donut. Also omitted from the table for the sake of brevity are the coefficients on year and hospital fixed effects. Complete results are available from the authors upon request.

The first column in Table 4 displays rate ratios from negative binomial models that include year fixed effects but, for purposes of comparison with the main specification, exclude hospital fixed effects. With one exception, the ratios in the first column are significantly different from unity at conventional levels, indicating that in the cross section better performance is associated with higher patient demand. For example, the rate ratio from the model for total demand with actual minus expected graft survival as the performance measure is 3.66 (95% CI: 1.69, 7.96) and is significantly different from one at the 5% level.

Once hospital fixed effects are introduced into the model (columns 2 to 4), rate ratios are no longer significant for the most part. Column 2 presents results from models estimated on all hospitals; columns 3 and 4 report results from models that include only hospitals with at least 10 registrations in every period and 20 registrations in every period, respectively. Hospitals with more than 10 and 20 registrations account for 81% and 60% of total registrations, respectively. We estimated separate regressions on these subsets to examine the sensitivity of results to the exclusion of hospitals with small, marginal transplant programs.

The only cases in which rate ratios achieve statistical significance at the 5% level occur when the dependent variable is restricted to 1) patients age 18 to 40, and 2) demand by patients with college degrees at centers with more than 20 registrations in every period. The incidence rate ratios are higher for living donor transplant recipients, but fail to achieve significance at the 5% level, suggesting that the results for the main specification are not biased by the omission of waiting time as a transplant center characteristic (living donor recipients do not need to consider waiting times).

To examine the sensitivity of results to specification, we estimated models that included transplant procedure volume as a control and models that allowed for a one- or two-month lag between the release of a report and the period used to measure registrations. Results were similar to the baseline specification.

Of the 193 centers performing transplants in the first period, 177 (92%) were performing transplants in the fifth and final period. The remaining 16 programs reported no transplants in the fifth period. Eleven of these exited between the fourth and fifth periods. The decision by hospitals to exit transplantation may be related to performance. In some cases, “star” transplant surgeons are lured to competitors, making it difficult for small programs to continue operations. In other cases, hospitals may shutter poorly performing programs. Failure to account explicitly for the exit process may impart bias to our results. To examine the potential for bias, we compared quality measures between exiting and surviving transplant programs. Differences in the quality measures were insignificant, and not in a consistent direction, although the infrequency of exits made it difficult to study the exit decision in a systematic fashion.

Discussion

The national program to report hospital-specific survival rates for organ transplantation has been in place since 1991, yet it has not been examined in the existing literature on report cards in health care. We find some evidence that publicly reported outcome measures influence the choices of younger patients and patients with college degrees, but overall we are unable to detect an impact of report cards for kidney transplantation on demand. In this last respect, our results echo previous work employing similar study designs (Mennemeyer, Morrisey, and Howard 1997; Romano and Zhou 2004).

There are several plausible explanations for why results differ by age group. Younger patients may be more comfortable using the Internet, interpreting report cards, and applying the culture of consumer choice to health care. If this were the case, we would expect the impact of report cards to increase over time. Alternatively, age may proxy for health status, with younger, healthier patients better able to travel to a distant transplant center in cases where it achieves better patient outcomes than nearby hospitals. Interestingly, models with both unadjusted performance (i.e., the actual graft survival rate) and adjusted performance (i.e., actual minus expected graft survival rates) yield significant results for younger patients. If patients were using report cards optimally, then only adjusted performance measures should influence choice behavior. We also find weak evidence that college-educated patients are responsive to report cards. Results are consistent with studies showing that many patients have difficulty understanding report cards (Hibbard and Jewett 1997; Hibbard et al. 2001).

In our study design, each hospital essentially acts as its own control. Identification is achieved from the within-hospital variation in reported outcome measures. Of course this begs the question: If publicly reported outcome measures vary so much from one report to the next, what use do they serve? The purpose of this study is to examine whether patients do use the information, not whether they should use it. URREA maintains that ease of interpretation and transparency are important goals in compiling outcome reports, necessitating use of easily understood methods to compute expected graft survival rates. While we agree with the first point, methods that produce wide swings in reported outcomes are suspect, especially since the time periods on which adjacent reports are based overlap. Recognizing that hospital-level outcomes are variable over short time horizons, McClellan and Staiger (1999) propose a method of hospital profiling that dampens the effect of transient fluctuations in outcomes (which are by no means unique to organ transplantation). The procedure is complicated, but it would be worth comparing with the current methods to determine whether it yields qualitatively different rankings of centers.

In contrast to the fixed-effects results, we find that in cross-sectional regressions better outcomes are associated with registration volume. A previous analysis of these data found a similar result using a conditional logit model of registrants’ choice behavior (Howard 2006). After controlling for the distance between registrants’ homes and transplant hospitals, the study concluded that at the hospital level a one standard deviation increase in actual graft failure rates, holding expected graft failure rates fixed, is associated with a 6% decline in patient registrations. The divergence between the cross-sectional and panel data results is puzzling. One explanation is that the cross-sectional results are biased by omitted hospital attributes. However, it is difficult to identify characteristics that fit the bill, beyond those included in the conditional logit model (Howard 2006). An alternative explanation is that patients discount short-term fluctuations in reported outcomes and instead focus on transplant program reputation, which is a function of both current and past performance. In this case, panel data models that rely on report-to-report changes in outcomes will be insensitive to the impact of survival rates on choice behavior.

Understanding the role of nephrologists (who provide primary care to patients with kidney failure) and health insurers in the choice process is key to explaining the mechanism by which reputation and past performance could affect patient behavior. Patients rarely choose transplant centers in isolation. Rather, most are referred to transplant centers by nephrologists. Additionally, patients with private insurance (about 35% of patients) must select centers in their insurance plan’s “centers of excellence” transplant network. Most large private insurers maintain exclusive networks for transplantation services, which are distinct from contracting programs for other hospital services. To the extent that nephrologists and plans value quality, choice patterns will reflect transplant center reputation, even if patients themselves lack information. Because nephrologists and plans deal with transplant patients on a continual basis, they possess the institutional memory necessary to judge whether fluctuations in the survival rates listed in any given report reflect underlying program quality or are simply short-term deviations from long-run performance.

Even if report cards do not affect patients’ choices, the report card program still may yield important benefits for patients. Several studies have shown that publicly reporting outcomes for cardiac surgery led hospitals to undertake quality-improvement efforts (Baker et al. 2002; Bentley and Nash 1998; Chassin 2001; Hannan et al. 1994). Of course, hospitals’ behavioral responses to the dissemination of outcome data are not always so benign. One way to improve reported outcomes is to increase quality; another is to alter treatment patterns so that only patients with a favorable prognosis are counted toward a hospital’s success rate (Werner and Asch 2005). Dranove et al. (2003) show that high-risk patients were less likely to undergo cardiac bypass surgery following the release of cardiac report cards in New York and Pennsylvania. The risk-adjustment models used to compute expected graft survival rates for transplant centers include a fairly extensive set of controls that limit opportunities for gaming, but there are still patient and organ attributes that are observed by physicians yet cannot be measured for purposes of statistical adjustment. Counteracting any incentive to turn away high-risk patients or reject poor-quality organs is the pressure to maintain and increase transplant volume. Given these countervailing incentives, it is not clear whether transplant centers are too selective or not selective enough in registering patients on the kidney waiting list.

In their present form, transplant report cards do not appear to influence hospital-level demand. However, this does not imply that patients are inherently unresponsive to publicly reported quality information. Currently the report cards reference technical terms like “expected” graft survival, “p-values,” and “confidence intervals.” Interpreting this information is cognitively demanding, requiring a high degree of numerical literacy. Distributing reports over the Internet, while preferable to the original format of thick paper volumes, also limits patient access. Only 60% of U.S. adults use the Internet regularly (U.S. Census Bureau 2004), and it seems likely that usage is even lower among patients with end-stage renal disease. In fairness to the Scientific Registry, patients are not the primary audience for report cards, and the most recent report includes a “plain English” user’s guide. Nevertheless, making report cards more user-friendly and redesigning them along the principles outlined by Hibbard et al. (2001) may help patients make better use of the data.

Acknowledgments

Support for this study came from the National Institute of Diabetes and Digestive and Kidney Diseases of the National Institutes of Health (grant no. NIDDK/NIH DK067611).

The data reported here have been supplied by the University Renal Research and Education Association (URREA) as the contractor for the Scientific Registry of Transplant Recipients (SRTR). The interpretation and reporting of these data are the responsibility of the author(s) and in no way should be seen as an official policy of or interpretation by the SRTR or the U.S. government. Institutional Review Board approval or exemption determination is the responsibility of the authors as well.

References

- Allison PD, Waterman R. Fixed Effects Negative Binomial Regression Models. 2004. Unpublished manuscript. Available at http://www.ssc.upenn.edu/~allison/FENB.pdf [accessed Feb. 29, 2004].

- Baker DW, Einstadter D, Thomas CL, Husak SS, Gordon NH, Cebul RD. Mortality Trends During a Program that Publicly Reported Hospital Performance. Medical Care. 2002;40:879–890. doi: 10.1097/00005650-200210000-00006.

- Bentley JM, Nash DB. How Pennsylvania Hospitals Have Responded to Publicly Released Reports on Coronary Artery Bypass Graft Surgery. Joint Commission Journal on Quality Improvement. 1998;24:40–49. doi: 10.1016/s1070-3241(16)30358-3.

- Cameron A, Trivedi P. Regression Based Tests for Overdispersion in the Poisson Model. Journal of Econometrics. 1990;46:347–364.

- Chassin MR. Achieving and Sustaining Improved Quality: Lessons from New York State and Cardiac Surgery. Health Affairs. 2002;21:40–51. doi: 10.1377/hlthaff.21.4.40.

- Dranove D, Kessler D, McClellan M, Satterthwaite M. Is More Information Better? The Effects of Health Care Quality Report Cards. Journal of Political Economy. 2003;111:555–588.

- Hannan EL, Kilburn H, Jr., Racz M, Shields E, Chassin MR. Improving the Outcomes of Coronary Artery Bypass Surgery in New York State. Journal of the American Medical Association. 1994;271:761–766.

- Hibbard JH, Peters EM, Slovic P, Finucane ML. Making Health Care Report Cards Easier to Use. Joint Commission Journal on Quality Improvement. 2001;27(11):591–604. doi: 10.1016/s1070-3241(01)27051-5.

- Hibbard JH, Jewett JJ. Will Report Cards Help Consumers? Health Affairs. 1997;16:218–228. doi: 10.1377/hlthaff.16.3.218.

- Howard DH. Quality and Consumer Choice in Healthcare: Evidence from Kidney Transplantation. Topics in Economic Analysis and Policy. Berkeley Electronic Press. 2005;5(1): article 24. doi: 10.2202/1538-0653.1349.

- Luft HS, Garnick DW, Mark DH, Peltzman DJ, Phibbs CS, Lichtenberg E, McPhee SJ. Does Quality Influence Choice of Hospital? Journal of the American Medical Association. 1990;263:2899–2906.

- Marshall MN, Shekelle PG, Leatherman S, Brook RH. The Public Release of Performance Data. What Do We Expect to Gain? A Review of the Evidence. Journal of the American Medical Association. 2000;283(14):1866–1874. doi: 10.1001/jama.283.14.1866.

- McClellan M, Staiger D. The Quality of Health Care Providers. National Bureau of Economic Research (NBER) Working Paper Number 7327. Cambridge, Mass.: NBER; 1999.

- Mennemeyer ST, Morrisey MA, Howard LZ. Death and Reputation: How Consumers Acted Upon HCFA Mortality Information. Inquiry. 1997;34:117–128.

- Mukamel DB, Mushlin AI. Quality of Care Information Makes a Difference: An Analysis of Market Share and Price Changes after Publication of the New York State Cardiac Surgery Mortality Reports. Medical Care. 1998;36:945–954. doi: 10.1097/00005650-199807000-00002.

- Mukamel DB, Mushlin AI. The Impact of Quality Report Cards on Choice of Physicians, Hospitals, and HMOs: A Midcourse Evaluation. Joint Commission Journal on Quality Improvement. 2001;27(1):20–27. doi: 10.1016/s1070-3241(01)27003-5.

- Mukamel DB, Wiemer DL, Zwanziger J, Huang Gorthy S, Mushlin AI. Quality Report Cards, Selection of Cardiac Surgeons, and Racial Disparities: A Study of the Publication of the New York State Cardiac Surgery Reports. Inquiry. 2004;41:435–446. doi: 10.5034/inquiryjrnl_41.4.435.

- Palmer RH. Securing Health Care Quality for Medicare. Health Affairs. 1995;14:89–100. doi: 10.1377/hlthaff.14.4.89.

- Romano PS, Zhou H. Do Well-Publicized Risk-Adjusted Outcomes Reports Affect Hospital Volume? Medical Care. 2004;42(4):367–377. doi: 10.1097/01.mlr.0000118872.33251.11.

- Schauffler HH, Mordavsky JK. Consumer Reports in Health Care: Do They Make a Difference? Annual Review of Public Health. 2001;22:69–89. doi: 10.1146/annurev.publhealth.22.1.69.

- Schneider EC, Epstein AM. Use of Public Performance Reports: A Survey of Patients Undergoing Cardiac Surgery. Journal of the American Medical Association. 1999;279:1638–1642. doi: 10.1001/jama.279.20.1638.

- Scientific Registry of Transplant Recipients. Guide to the Center-specific Reports, v. 6.0. 2004. Available at http://www.ustransplant.org/csr_0105/csr.pdf [accessed March 18, 2005].

- U.S. Census Bureau. Statistical Abstract of the United States 2004-2005 (124th Edition). Washington D.C.: U.S. Census Bureau; 2004. Table 1150.

- U.S. Department of Health and Human Services (DHHS). 1999 Report to Congress on the Scientific and Clinical Status of Organ Transplantation. Washington D.C.: U.S. Department of Health and Human Services; 1999.

- U.S. Department of Health and Human Services (DHHS). Organ Procurement and Transplantation Network; Final Rule. Federal Register. 1998;63:16295–16338.

- Vladeck BC, Goodwin EJ, Myers LP, Sinisi M. Consumers and Hospital Use: the HCFA “Death List.” Health Affairs. 1988;7(1):122–125. doi: 10.1377/hlthaff.7.1.122.

- Werner RM, Asch DA. The Unintended Consequences of Publicly Reporting Quality Information. Journal of the American Medical Association. 2005;293(10):1239–1244. doi: 10.1001/jama.293.10.1239.

- Wicks EW, Meyer JA. Making Report Cards Work. Health Affairs. 1999;18:152–155. doi: 10.1377/hlthaff.18.2.152.