In The Future of Public Health, the Institute of Medicine (IOM) recommended improved instruction in quantitative methods and research skills in public health.1 The IOM emphasized the ethical commitment of public health professionals to use quantitative knowledge to reduce suffering and enhance quality of life. A Harvard study found that two-thirds of the alumni ranked quantitative skills as directly applicable to their profession, supporting IOM's position.2 The IOM report recognized inadequate education and training of the workforce as one of the causes of disarray in the public health system.1

The Public Health Faculty/Agency Forum addressed the educational dimensions of IOM's findings, and core competencies were developed for Master of Public Health (MPH) students.3 Embedded in these competencies were specific objectives: the ability to (1) define, assess, and understand factors that lead to health promotion and disease prevention, (2) understand research designs and methods, (3) make inferences based on data, (4) collect and summarize data, (5) develop methods to evaluate programs, (6) evaluate the integrity of data, and (7) present scientific information to professional and lay audiences. The Forum also suggested that faculty review, evaluate, and refine established courses and develop new ones. However, the Forum did not outline teaching strategies to achieve these objectives.4 Improving teaching methods requires rigorous evaluation of existing classes, from which stronger methods can be derived.

Two methods of instruction have been empirically validated: Keller's Personalized System of Instruction (PSI)5 and Socratic-Type Programming.5,6 Keller emphasized the following components: (1) identification of specific terminal skills or knowledge, (2) individualized instruction, (3) use of teaching assistants, and (4) use of lectures as a way to motivate students, not just to transmit information. PSI has been more effective than conventional instruction in a variety of educational settings and has increased student achievement and consumer satisfaction more than conventional instruction.7,8

Socratic-Type instruction emphasizes student responses during lecture, rather than presentations by instructors.6,9,10 PSI calls for active responding in the classroom and the Socratic Method is one means of achieving this response. Moran and Malott summarize the empirical evidence and most reliable teaching technologies to date, from which it is clear that frequent quizzing coupled with Socratic procedures contributes importantly to learning.11 A recent study of active learning assessed the use of study guide questions for pending lectures, and outcomes were greater for the study guide than for traditional lecturing.12

Research methods should be taught and evaluated by the very methods being taught. This requires experimental or quasi-experimental methods to model the application of science for teaching evaluations. Connelly and associates called for the development of reliable and valid measurement of specified competencies in public health education.13 Sarrel found that the use of pretests, interim testing, and a formal posttest evaluation of their training program demonstrated clear learning and strengthened their program.14

The public health infrastructure relies on people who have the skills to deliver and evaluate interventions. Common teaching methods, such as lectures, limited homework assignments, and final exams, may not maximize learning and do not enable assessment of learning attributable to specific teaching methods. Thus, research methods should be applied to evaluate public health teaching methods to refine them continuously over time. We propose an educational model to assess behavior change over time and describe a course that effectively teaches principles of research design using PSI and Socratic Methods.

METHODS

Participants

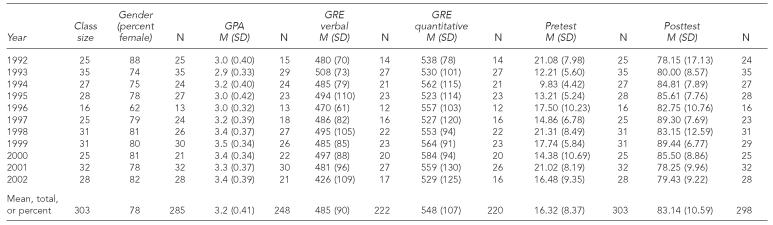

Graduate students' performance in Public Health 607 (Research Methods and Proposal Writing) was assessed over an 11-year period (1992 through 2002). Approximately 62% of each class was female, with class sizes varying from 16 to 35 students. Twenty-five out of the 303 students were in the process of or had already completed a doctoral degree. Table 1 shows demographics and student academic backgrounds.

Table 1.

Class size, gender makeup, GPA, GRE, pretest, and posttest scores over 11 years

GPA = grade point average

GRE = graduate record examination

SD = standard deviation

M = mean

Procedures

Data collection and design.

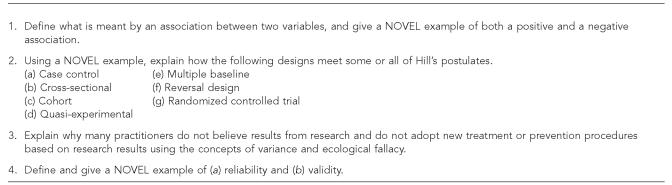

The class was taught once a year with a pretest/posttest design replicated across the 11 years. Students entering the MPH program came from different fields with at least a bachelor's degree, but many held master's or doctoral degrees. A comprehensive pretest consisting of short-answer and essay items was administered on the first day of class to measure students' prior knowledge of research design. Questions covered content that ranged from concepts of measurement and sources of variance, to operational means of obtaining associations, to nonexperimental and experimental designs. The questions measured knowledge of research designs and related concepts, proper use of designs to applied circumstances, and ability to critique research methodology and develop novel studies. The Figure shows example questions. Variations of the same questions were included in a comprehensive final exam.

To encourage high effort on the pretest, students were told that anyone who scored 80% or higher would be exempt from quizzes and that a one-letter grade bonus would be assigned to their final grade in the course. No student met the 80% criterion. Graduate assistants and the instructor graded the tests with discrepancies judged by the instructor. Students' gender, prior degrees, graduate record examination (GRE), and grade point average (GPA) were used to predict change in examination performance.

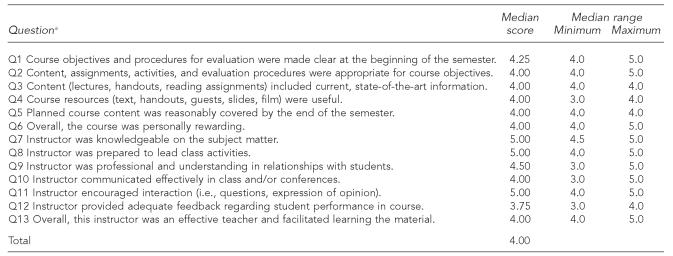

Students also evaluated 13 aspects of the class and instructor at the end of every semester. These evaluations were administered by volunteer students. The class instructor was not present during the evaluations and was blind to student ratings. Completed evaluations were given to the graduate school of public health administrator, who produced aggregated summary scores the following semester. Evaluation questions are provided in Table 2. Students responded to each item on a five-point ordinal scale, with anchors of 1 = strongly disagree to 5 = strongly agree.

Table 2.

Students' aggregated quantitative evaluations from 1997 to 2002

Responses were based on a five-point scale: 1 = strongly disagree, 2 = disagree, 3 = neither agree nor disagree, 4 = agree, 5 = strongly agree.

Class content.

The class emphasized Hill's postulates of causal inference and their foundation for research.15 Designs taught included fully controlled trials, quasi-experimental, within subject time series, case control, cross-sectional, cohort, and nested case control. Each design was evaluated on its strengths and weaknesses for concluding causal associations, including strength of association, consistency of association, temporal order, ruling out alternative explanations, and theoretical plausibility. Concepts such as variance, reliability, validity, fidelity, bias, and confounding were reviewed in the context of each design.

Multicomponent intervention.

The class met for two hours and 40 minutes every week for 15 weeks. Six short-answer/short-essay quizzes were administered every other week. Quiz questions required students to generalize from major course concepts and provide novel examples to demonstrate understanding. The questions were similar in format and content to questions on the pretest and final exam. Students who scored less than 80% to 85% (the criterion varied from semester to semester) on a quiz had an option to take a second form of the quiz the following week. Overall, quizzes accounted for 40% of each student's grade in the course.

Lecture and lab time were divided evenly. Lecture was an interactive period where the instructor, using the Socratic Method, asked the students increasingly demanding questions. Correct answers were praised, while incorrect answers prompted the instructor to probe for more basic understanding while avoiding explicit criticism. The main topics of discussion were concepts presented in the readings. Lab time provided clarification of concepts by the teaching assistant in a more relaxed environment. Students were able to practice using the concepts and ask questions in smaller groups or a more casual environment. Exercises were used to provide research experience. Guest speakers offered specialized information such as library research methods and ethics in research.

Over years of administration, two to three texts were used. The primary text was a current edition of Designing Clinical Research.16 The second text emphasized quasi-experimental and time-series designs.17 These were supplemented by research articles to illustrate issues and methodological errors.

In addition to answering specific questions concerning each reading, homework assignments required incremental preparation of components of a grant application based on the PH398 form used by the National Institutes of Health. These included specific aims, operational definitions, literature review, research design, subjects, procedures, and protection of human subjects. Each student was also required to prepare a written critique of a published article. Critical feedback was provided on writing style, use of concepts, design, and understanding of methodological issues. These assignments were iterative and cumulative, leading to both a draft research proposal and refined article critique by the end of the semester. Homework was worth 15% of the semester grade.

Statistical analyses.

Descriptive statistics such as percentages, means, and medians were computed for each class. Separate analyses were conducted with classes and with students as the unit of analysis. An ANOVA model was used to evaluate whether classes differed by year on pretest and posttest scores, GPA, and GRE scores. Student change scores were computed by subtracting pretest from posttest scores, with mean differences evaluated by a paired sample t-test. Pearson correlation coefficients were used to assess associations among students' covariates, test scores, and change scores. We estimated a regression model with students' pre- to posttest change as the dependent variable and pretest, gender, class year, doctoral status, GPA, and GRE scores as covariates. The strength of each predictor was estimated by standardized betas. All analyses were performed using SPSS.18
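The change-score and paired t-test steps described above can be sketched in a few lines. This is a minimal illustration using only the Python standard library, not the SPSS procedure the authors ran; the function name `paired_t` and the five-student data set are hypothetical and are not study data.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(pre, post):
    """Return (mean change, t statistic, degrees of freedom) for paired scores."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    # Change score for each student: posttest minus pretest.
    changes = [b - a for a, b in zip(pre, post)]
    mean_change = mean(changes)
    # Standard error of the mean change (sample SD divided by sqrt(n)).
    # Assumes the change scores are not all identical (SD > 0).
    se = stdev(changes) / sqrt(len(changes))
    return mean_change, mean_change / se, len(changes) - 1


# Hypothetical percent-correct scores for five students (illustration only).
pre_scores = [10.0, 15.0, 20.0, 12.0, 18.0]
post_scores = [80.0, 85.0, 82.0, 78.0, 90.0]

mean_change, t_stat, df = paired_t(pre_scores, post_scores)
print(f"mean change = {mean_change:.1f}, t({df}) = {t_stat:.2f}")
```

The t statistic is the mean change divided by its standard error, with n − 1 degrees of freedom, which is why a large and consistent change across many students yields a very large t value.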

RESULTS

Table 1 presents a summary of class size, proportion of female students, GPA, GRE, and pre- and posttest scores over the 11 years. Mean GPA scores ranged per class from 2.9 to 3.5, based on a four-point scale. Mean class GRE verbal scores ranged from 426 to 508, and quantitative scores ranged from 523 to 584. For all 11 years combined, the total mean GPA was 3.2 (standard deviation [SD] = 0.41) and the mean GRE verbal and quantitative scores were 485 (SD=90) and 548 (SD=107), respectively. An ANOVA showed that classes differed on mean GPA scores, F(10, 247)=6.03, p<0.001. However, GRE verbal and quantitative scores were not significantly different among years.

Class pretest scores were consistently low and ranged from 9.8% to 21.3% correct, while posttest scores were consistently higher, ranging from 78.2% to 89.4% correct. The mean pre- and posttest scores were 16.3% (SD=8.4) and 83.1% (SD=10.6) correct, respectively. With limited variance around the posttest scores, a high proportion of students attained more than 75% correct by the end of the semester. Pre- to posttest change ranged from 57.1% to 75.0%. The 11-year mean increase from pre- to posttest for all students was 66.8%. As expected, this difference was significant by a paired sample t-test, t(297)=98.72, p<0.001. For comparison, students pursuing or holding a doctoral degree had comparable pretest (M=23.26%, SD=7.96), posttest (M=86.56%, SD=10.52), and change (M=63.30%, SD=10.52) scores.

To estimate the likelihood that consistent change in scores from pre- to posttest was attributable to the class methods, a binomial probability with a 50% chance of changing at least 50 percentage points was computed for 11 consecutive years of change. The probability of obtaining the observed result was less than 0.001.
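The arithmetic behind this binomial probability can be checked directly. The sketch below assumes, as the text states, an independent 50% chance per year of a class changing at least 50 percentage points; the helper `binomial_pmf` and the variable names are illustrative and not part of the original analysis.

```python
from math import comb


def binomial_pmf(k: int, n: int, p: float) -> float:
    """Probability of exactly k successes in n independent trials."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


n_years = 11      # consecutive class years in the study
p_success = 0.5   # assumed per-year chance of a change of 50+ points

# Probability that all 11 years show the change under the 50% assumption.
prob_all = binomial_pmf(n_years, n_years, p_success)
print(f"{prob_all:.6f}")  # 0.000488
```

Because all 11 trials are successes, the result reduces to 0.5 raised to the 11th power, or 1 in 2,048, which is below the 0.001 threshold reported.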

A Pearson correlation coefficient was used to determine associations among students' characteristics, test scores, and change scores. There was a significant negative correlation between students' class year and pretest scores (r=−0.19, p=0.003), but no significant correlation between class year and posttest scores (r=−0.02, p=0.78). Significant positive relationships were found between students' pretest scores and GRE verbal (r=0.31, p<0.001), GRE quantitative (r=0.25, p<0.001), and GPA (r=0.37, p<0.001) scores. Similar significant positive associations were seen with students' posttest scores and GRE verbal (r=0.26, p<0.001), GRE quantitative (r=0.30, p<0.001), and GPA (r=0.34, p<0.001) scores. While GRE quantitative scores had a marginally significant positive correlation with the pre- to posttest change scores (r=0.11, p=0.09), GRE verbal and GPA scores were not associated with the students' change scores (r=0.03, p=0.64 and r=0.05, p=0.45). Pretest, GPA, and GRE scores were included in further multivariate analyses.

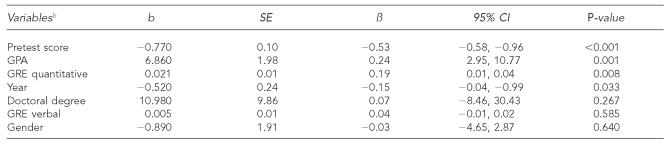

Table 3 presents students' change scores regressed on predictor variables. The model explained 29.4% of the variance. Variables significantly associated with change scores included students' pretest scores (standardized beta = −0.53), GPA (standardized beta = 0.24), GRE quantitative score (standardized beta = 0.19), and class year (standardized beta = −0.15). The results indicate that, for every one standard deviation decrease from the mean pretest score, change scores increased 0.53 SDs, after controlling for all other variables. This suggests that students with poorer understanding of the material at pretest may benefit most from the class. Moreover, students with higher GPA and GRE quantitative scores showed greater change.
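To make the interpretation of a standardized beta concrete, the following sketch rescales an ordinary least-squares slope into standard deviation units. It covers only the one-predictor case, where the standardized beta equals the Pearson correlation; the study's model had several covariates, so this is a simplification. The function name `standardized_beta` and the data are hypothetical.

```python
from statistics import mean, stdev


def standardized_beta(x, y):
    """Standardized slope for a one-predictor least-squares regression of y on x."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    b = sxy / sxx                   # unstandardized slope (y units per x unit)
    return b * stdev(x) / stdev(y)  # rescaled to SDs of y per SD of x


# Hypothetical pretest and change scores for five students (illustration only).
pretest = [10.0, 15.0, 20.0, 12.0, 18.0]
change = [70.0, 70.0, 62.0, 66.0, 72.0]

beta = standardized_beta(pretest, change)
print(f"standardized beta = {beta:.3f}")
```

A negative value means that students who start lower on the pretest tend to show larger change, which is the direction of the pretest coefficient reported in Table 3.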

Table 3.

Linear regression model for students' change scores

R² = 0.294

Variables are ordered according to beta weight, from highest to lowest.

b = unstandardized coefficient

SE = standard error

β = standardized coefficient

CI = confidence interval

GPA = grade point average

GRE = graduate record examination

Students rated qualitative aspects of the class each year. However, data were available only from 1997 to 2002 (Table 2). Overall, students rated the class favorably. Median scores ranged from 3.0 to 5.0, and the overall median across the six years was 4.0 (“agree”) on the 13 positively framed evaluation questions.

DISCUSSION

This article describes an effective way to teach research design to graduate students in public health. The teaching method specified objectives, frequent assessments of student behavior, individualized feedback, and the opportunity to do assignments until mastered. Pre- to posttest change demonstrated dramatic increases in competencies by semester's end. These changes were replicated over 11 consecutive cohorts.

On the pretest, most students demonstrated a lack of conceptual understanding of public health science. Many students demonstrated what might best be described as a memorized understanding of procedures with little understanding of how specific procedures controlled for error, or without the ability to use novel methods to solve research problems. While this class did not equip students with advanced research ability, it moved them in that direction through active participation and feedback. The pretest also showed the need for more training of most behavioral science majors in public health, including an important proportion of students who had completed relatively advanced training in medicine. This feedback tended to have a humbling effect on students that motivated them to participate fully in the class.

The class was based on Keller's Personalized System of Instruction and the Socratic Method. Teaching assistant tutorials, repeated quizzes, and the Socratic Method resulted in reliable mastery of the content. Most students ended the class with a B grade or higher. The few who obtained a C were invited to repeat the class, and all obtained a B or higher on the second attempt. Moreover, those who obtained a C grade demonstrated remarkable change from about 10% correct answers on pretests to more than 70% correct at posttest.

The bivariate analyses showed weak relationships between most demographic and baseline knowledge characteristics and final change from pretest. Scores at pretest and posttest performance tended to be weakly related to pre- to posttest change, but this is essentially a mechanical relationship as they are components of the change score. Examination of GRE and GPA scores showed weak prediction of change in bivariate analyses. This suggests that they did not predict change in the context of this class.
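The mechanical relationship noted above can be written out explicitly. As a sketch, let X denote a student's pretest score and Y the posttest score (symbols introduced here for illustration, not taken from the article), so that the change score is Y − X:

$$
\operatorname{Cov}(X,\, Y - X) = \operatorname{Cov}(X, Y) - \operatorname{Var}(X)
$$

Unless the pretest-posttest covariance exceeds the pretest variance, the pretest is negatively associated with change simply because it is subtracted to form the change score.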

The multivariate analysis showed that pretest scores, GPA, quantitative GRE scores, and year of enrollment were significant predictors of change scores. However, pretest scores are again a function of the mechanical relationship as a component of the change score, providing more opportunity for change with lower-level pretest scores. GPA and GRE predictors are face valid and probably reflect past academic skills, both specific knowledge such as quantitative skills, and general knowledge of how to study for classes. Year of enrollment may reflect subtle changes in the demography of the students or in the teaching procedures. However, all of these factors explained less than 30% of the variance in the pre- to posttest performance changes. This provides circumstantial evidence that the changes achieved were due to the teaching procedures. This evidence is strengthened when one examines the role of doctoral training (mostly physicians), verbal GRE, and gender, none of which reached significance as predictors of change. These findings suggest that individuals with a relatively high GPA and relatively advanced degrees, including a doctoral degree, can benefit from a teaching technology of the sort tested here.

Limitations

This study lacked random assignment to a comparison group for more complete control for alternate explanations of change. However, the class included interactive feedback through biweekly quizzes, frequent student and instructor interaction, homework, and tutoring. These repeated interactions served as student performance “process measures” that corroborated the pre- to posttest changes observed. Moreover, our results showed that the likelihood of obtaining 11 consecutive years of substantial change was less than one in 1,000, with students differing in academic achievement and backgrounds. Although the efficacy of PSI and the Socratic Method was not compared to other pedagogical methods, the current research provides substantive evidence for attributing change in performance to the current instructional methods, and suggests a high level of generalizability.

Standards for teaching public health

The IOM called for more quantitative instruction in public health. This article provides a model for well-researched PSI and related Socratic teaching methods that can be applied to all graduate public health instruction.19 While more formal control procedures could be used in the experimental evaluation of similar classes, we recommend that, at a minimum, objective measures of knowledge be assessed routinely on a pretest and posttest basis. Doing so provides quasi-experimental evidence of learning attributable to the specific class, and it provides a model to students for the routine use of research methods in the delivery of services. These procedures also lend themselves to use by academic personnel committees for remedial assistance for instructors in those instances where outcomes do not appear satisfactory. Such procedures should supplement subjective student evaluations. The Association of Schools of Public Health and the Council on Education in Public Health might consider criteria for evaluating public health instruction that follows this model. Such policies and teaching procedures could meet the standards recommended by the IOM.

Figure.

Examples of questions presented on pretests and posttests

Footnotes

Research for this article was conducted with intramural support from CBEACH.

REFERENCES

- 1. Committee for the Study of the Future of Public Health, Division of Health Care Services, Institute of Medicine. The future of public health. Washington: National Academy Press; 1988. Also available from: URL: http://www.nap.edu/catalog.php?record_id=1091 [cited 2007 Apr 23].

- 2. Kahn K, Tollman SM. Planning professional education at schools of public health. Am J Public Health. 1992;82:1653–7. doi: 10.2105/ajph.82.12.1653.

- 3. Sorensen AA, Bialek RG. The public health faculty/agency forum: linking graduate education and practice: final report. Atlanta: Bureau of Health Professions, Health Resources and Services Administration and Public Health Practice Program Office, Centers for Disease Control and Prevention (US); 1991.

- 4. Potter MA, Pistella CL, Fertman CI, Dato VM. Needs assessment and a model agenda for training the public health workforce. Am J Public Health. 2000;90:1294–6. doi: 10.2105/ajph.90.8.1294.

- 5. Keller FS. “Good-bye teacher…”. J Appl Behav Anal. 1968;1:79–89. doi: 10.1901/jaba.1968.1-79.

- 6. Mahan HC. The use of Socratic type programmed instruction in college courses in psychology. Paper read at Western Psychological Association; 1967 May; San Francisco.

- 7. Kulik C-LC, Kulik JA, Cohen PA. Instructional technology and college teaching. Teaching of Psychology. 1980;7:199–205.

- 8. Kulik JA, Kulik C-LC, Cohen PA. A meta-analysis of outcome studies of Keller's Personalized System of Instruction. Am Psychol. 1979;34:307–18.

- 9. The American Heritage Dictionary of the English Language. 4th ed. Boston: Houghton Mifflin; 2006.

- 10. Binker A. Socratic questioning. In: Paul RW, editor. Critical thinking: what every person needs to survive in a rapidly changing world. Dillon Beach (CA): Foundation for Critical Thinking; 1993. pp. 360–90.

- 11. Moran DJ, Malott RW, editors. Evidence-based educational methods. San Diego: Elsevier Academic Press; 2004.

- 12. Saville BK, Zinn TE, Neef NA, Van Norman R, Ferreri SJ. A comparison of interteaching and lecture in the college classroom. J Appl Behav Anal. 2006;39:49–61. doi: 10.1901/jaba.2006.42-05.

- 13. Connelly J, Knight T, Cunningham C, Duggan M, McClenahan J. Rethinking public health: new training for new times. J Manag Med. 1999;13:210–7. doi: 10.1108/02689239910292927.

- 14. Sarrel PM, Sarrel LJ, Faraclas WG. Evaluation of a continuing education program in sex therapy. Am J Public Health. 1982;72:839–43. doi: 10.2105/ajph.72.8.839.

- 15. Hill A. The environment and disease: association or causation? Proc R Soc Med. 1965;58:295–300.

- 16. Hulley SB, Cummings SR. Designing clinical research: an epidemiologic approach. 1st ed. Baltimore: Williams & Wilkins; 1988.

- 17. Hayes SC, Barlow DH, Nelson-Gray RO. The scientist practitioner: research and accountability in the age of managed care. Boston: Allyn & Bacon; 1999.

- 18. SPSS, Inc. SPSS for Windows: Version 12.0.1. Chicago: SPSS, Inc.; 2004.

- 19. Greenhoot AF, Semb G, Colombo J, Schreiber T. Prior beliefs and methodological concepts in scientific reasoning. J Appl Cogn Psychol. 2004;18:203–21.