Abstract

Objective

To evaluate the data quality of ventilator settings recorded by respiratory therapists using a computer charting application and assess the impact of incorrect data on computerized ventilator management protocols.

Design

An analysis of 29,054 charting events gathered over 12 months from 678 ventilated patients (1,736 ventilator days) in four intensive care units at a tertiary care hospital.

Measurements

Ten ventilator settings were examined, including fraction of inspired oxygen (Fio2), positive end-expiratory pressure (PEEP), tidal volume, respiratory rate, peak inspiratory flow, and pressure support. Respiratory therapists entered values for each setting approximately every two hours using a computer charting application. Manually entered values were compared with data acquired automatically from ventilators using an implementation of the ISO/IEEE 11073 Medical Information Bus (MIB). Data quality was assessed by measuring the percentage of time that the two sources matched. Charting delay, defined as the interval between data observation and data entry, was also measured.

Results

The percentage of time that settings matched ranged from 99.0% (PEEP) to 75.9% (low tidal volume alarm setting). The average charting delay for each charting event was 6.1 minutes, including an average of 1.8 minutes spent entering data in the charting application. In 559 (3.9%) of 14,263 suggestions generated by computerized ventilator management protocols, one or more manually charted setting values did not match the MIB data.

Conclusion

Even at institutions where manual charting of ventilator settings is performed well, automatic data collection can eliminate delays, improve charting efficiency, and reduce errors caused by incorrect data.

For patients treated with continuous positive-pressure ventilation in the intensive care unit (ICU), numerous ventilator settings are adjusted to provide appropriate oxygenation and ventilation and to facilitate weaning. Nurses or respiratory therapists periodically observe settings on the ventilator that control oxygen, flow, volume, and pressures, and chart these values either on paper or into electronic patient records. Variation exists in how and when such values are observed and documented. 1–3 For ICU staff, access to timely and accurate ventilator settings is essential for making treatment decisions and for maintaining situation awareness. Computerized decision support tools, such as ventilator management protocols, require accurate and timely data to generate instructions for caregivers and to effect changes in patient care. 4,5

We hypothesized that the ventilator settings entered manually into a computer charting application would not always match the settings automatically acquired from ventilators at 5-second intervals. To test this hypothesis, we measured the percentage of time that the manually charted settings matched 5-second ventilator values. To assess the possible impact of incorrect data on computerized ventilator management protocols, we measured the number of suggestions generated by computerized ventilator management protocols where one or more manually charted setting values did not match the automatically acquired data.

Background

In 1985, Andrews et al. described one of the first computer charting applications for respiratory care data at LDS Hospital in Salt Lake City, Utah. 6 Evaluations of that system showed improvements in the completeness, legibility, and descriptiveness of computer-charted data. In addition, clinicians reported that the enhanced accessibility, organization, and presentation of computer-charted data improved medical decision making. 6,7 The integration of coded, time-stamped respiratory data into electronic patient records enabled the development of computerized decision support tools, including protocols for managing and weaning ventilated patients. 8,9

Data Quality

The development by East et al. of computerized ventilator protocols for managing patients with acute lung injury revealed problems of timeliness in manually recorded data. 5 Prior to use of the computerized protocols, respiratory therapists were known to wait hours before entering data, and sometimes guessed when documenting the time a particular setting was observed. In a small sample of 399 measurements for the fraction of inspired oxygen (Fio2) setting, East noted that 119 (30%) failed to be recorded in the computer within 30 minutes of the time they were observed. As East explained, “This condition is devastating…for protocol decisions because ‘old’ respiratory care data will mistakenly be used for the decisions since the ‘new’ data is not yet in the computer.” 5 More recently, Nelson et al. identified a similar occurrence in computer charting performed by nurses, noting that prior to an educational intervention, only 59% of medication administrations were charted in real time (within 1 minute of the time medications were administered), and only 40% were charted at the bedside. 10 Nelson warned that computerized decision support applications, including systems that check for dosing errors and administration of discontinued medications, are ineffective when charting is delayed.

When clinicians record dozens of values for each patient multiple times per shift, data entry errors are inevitable. Quality control mechanisms, such as cross-field edits, range checking, and double data entry, can reduce but do not eliminate these errors. 11,12 Studies that have assessed the quality of manually recorded data have been performed in anesthesia monitoring 13–17 and critical care monitoring. 18–20 Each of these studies focused on physiologic variables such as heart rate or blood pressure that were inherently unstable and not directly manipulated by care providers. The primary goal of the clinicians who monitored these physiologic variables was to filter out artifactual values and to record data points that were representative of the patient’s true condition. A careful review of the literature found no studies that examined the data quality of manually charted nonphysiologic parameters such as ventilator settings.

Automated Data Collection

Data quality problems associated with manual charting were anticipated by the earliest developers of computerized patient monitoring systems. In 1968, after 15 months of evaluating a cardiopulmonary monitoring system, Osborne et al. stated that automatically collected data were “far more useful and reliable than the clinical log maintained by house staff or nurses, because hand-entered logs often fail in accuracy just at crucial times, and because the nurses necessarily have to give first thought to whatever emergency has arisen.” 21 Successful computerized data collection and bedside monitoring systems for critically ill patients were developed and evaluated by other investigators. 22–25 In spite of the advantages of these systems, automatic data acquisition from bedside monitoring devices was not widely used in the 1970s and 1980s.

Microprocessor-controlled ventilators introduced in the 1980s provided greater opportunities for bedside data acquisition as vendors began including RS-232 and other interfaces for digital output. QuickChart/RT (Puritan Bennett, Pleasanton, CA), a software application that automatically captured settings and measurements from a ventilator and displayed trends on a personal computer, was developed in 1989. 26 In the same year, a computerized decision support tool called RESPAID was created that automatically acquired ten ventilator parameters every 5 seconds and identified events such as alarms and setting changes. 27

QuickChart/RT and RESPAID were not widely used, possibly because each was a proprietary system that only communicated with a single ventilator model. To address limitations of interoperability, representatives from device manufacturers, health care institutions, and academic departments began developing a set of communication standards to allow “plug-and-play” data sharing among medical devices made by various manufacturers. This resulted in the Institute of Electrical and Electronics Engineers (IEEE) 1073 Medical Information Bus (MIB) standard being adopted in 1996. 28–31 In 2004, IEEE 1073 was ratified by the International Organization for Standardization (ISO) and named ISO 11073 Point-of-Care Medical Device Communication Standard. Although the ISO/IEEE standard has been endorsed by the U.S. National Committee on Vital and Health Statistics (NCVHS) and adopted as part of the U.S. Consolidated Health Informatics (CHI) initiative, it is still not widely used by the medical device industry. Consequently, most institutions that collect data directly from bedside devices rely on proprietary systems that are typically expensive and not well-integrated with current hospital information systems.

Methods

The LDS Hospital in Salt Lake City, Utah, is a 520-bed community-based tertiary care and level-one trauma center that is part of the Intermountain Healthcare network of hospitals and clinics. The hospital’s electronic medical record, the Health Evaluation through Logical Processing (HELP) System, has been in use for over 30 years and contains most inpatient clinical information. 32 Computer-based charting of respiratory and ventilator data has been a component of the HELP System since 1985. 6 Computerized protocols, first developed in the late 1980s to standardize the care of patients with acute lung injury, have been refined and expanded to manage oxygenation, ventilation, and ventilator weaning. 33 During our study, about 60% of ventilated patients in the hospital were enrolled in a protocol.

Study Design

We prospectively collected data from August 2004 through July 2005 for ventilated patients in four ICUs at LDS Hospital: Shock/Trauma/Respiratory (STRICU, 12 beds), Medical/Surgical (MSICU, 16 beds), Coronary (CCU, 16 beds), and Thoracic (TICU, 16 beds). Each ICU room was equipped with ISO/IEEE 11073 MIB hardware that could be connected to Puritan Bennett 7200A and 840 series ventilators (Puritan Bennett, Pleasanton, CA) that were outfitted with MIB interfaces. 34,35 Prior to the start of the present study, ventilators were not routinely connected to the MIB in the study ICUs. In 2004, a system was implemented that issued a unit-wide computer alert when an unintended patient–ventilator circuit disconnection occurred. 36 The disconnection alerting system was effective only when ventilators were connected to the MIB. Respiratory therapists were instructed to verify that the MIB-ventilator cable was connected whenever patients were attached to the ventilators.

When ventilators were connected to the MIB, the ten ventilator settings listed in Table 1 were automatically collected every 5 seconds and stored in files on a bedside computer. Each data file stored up to 24 hours of ventilator data and required about 7 megabytes of disk space. The data files were transferred monthly to a network server and later imported into a MySQL database (www.mysql.com) for analysis. Approval for the study and a waiver of informed consent were obtained from the institutional review boards at LDS Hospital and the University of Utah.

Table 1. Ten Ventilator Settings Collected Automatically Using the Medical Information Bus (MIB)

| Ventilator Settings | Units |
|---|---|
| Fraction of inspired oxygen (Fio2) ∗ | %† |
| Positive end-expiratory pressure (PEEP) ∗ | cm H2O |
| Respiratory rate ∗ | breaths/min |
| Tidal volume (VT) ∗ | mL |
| Pressure support ∗ | cm H2O |
| Peak inspiratory flow rate | L/min |
| Low minute ventilation alarm limit | L/min |
| High pressure alarm limit | cm H2O |
| High respiratory rate alarm limit | breaths/min |
| Low tidal volume alarm limit | mL |

∗ Denotes ventilator settings that were used in the logic of the computerized ventilator management protocols in the study intensive care units.

† In the computer system, the term Fio2 was associated with a percentage (e.g., 40% or 100%) rather than a decimal fraction (e.g., 0.40 or 1.0).

While MIB data were stored in the background, respiratory therapists continued regular manual computer charting, without being made aware that ventilator settings were being collected automatically. Once the collection of MIB data was complete, the corresponding manually charted ventilator settings were extracted from a research data warehouse. The data warehouse also provided information on all computerized ventilator protocol instructions, including the value of each ventilator setting used by the protocol logic.

Manual Charting Process

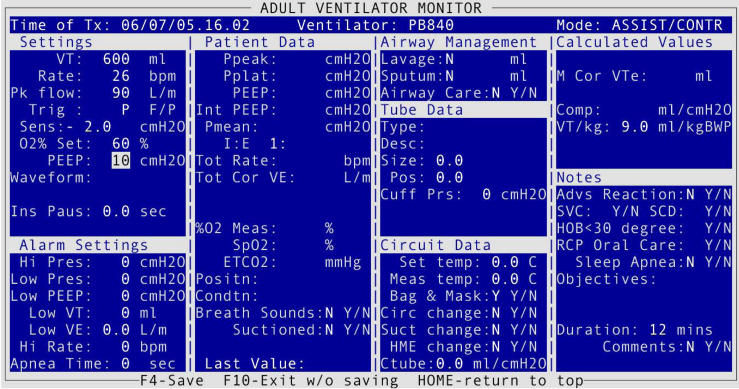

The respiratory care documentation policy in the four study ICUs required respiratory therapists to observe each ventilated patient at least every two hours and enter data into the HELP System. Figure 1 shows a screen from the charting application for Assist/Control ventilation using a Puritan Bennett 840 (PB840) ventilator. In addition to ventilator settings and alarm limits, respiratory therapists recorded measured physiologic variables, breath sounds and other observations, patient–ventilator circuit information, and procedures performed. The time when values were observed was entered in the “Time of Tx” field located near the top of Figure 1. The field defaulted to the current time, so it was necessary for respiratory therapists to edit the default time if they were back-charting data. All data entered into the charting application for a given observation time constituted a charting instance. Although not visible on the data entry screen, a charting instance also recorded the time when the application was started and the time when the data were stored in the electronic record. Time in the HELP System was limited to 1-minute granularity.

Figure 1.

Computer charting application in the HELP System used by respiratory therapists to document ventilator settings (far left column) along with other patient-specific ventilator and respiratory data. This screen is specific to the Puritan Bennett 840 ventilator operating in Assist/Control mode. Similar screens were displayed depending on the type of ventilator and mode of operation.

A HELP terminal was located at each bedside, and additional terminals were available at central nursing stations in each unit and throughout the hospital. Ventilators were typically located next to the bedside HELP terminals, making it easy for respiratory therapists to document ventilator settings. The first time charting was performed for a patient, all fields in the charting application were empty, and about 50 to 80 keystrokes were required to enter the setting values. At subsequent chartings, each field contained the previously charted value; this saved time for respiratory therapists, as they could simply press Enter to document settings that had not changed. When no settings had changed, as few as 18 keystrokes were required to document the settings shown in Figure 1.

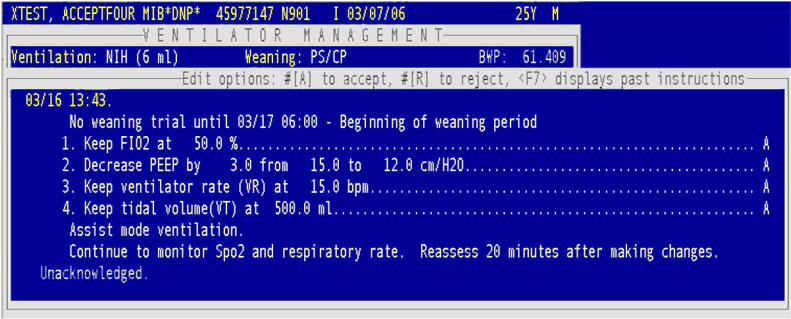

If a patient was enrolled in a computerized ventilator management protocol, respiratory therapists ran the protocol after saving the data entered in the charting application. The protocol generated a list of instructions to guide the respiratory therapist in adjusting ventilator settings (Figure 2). The computer-generated instructions also reminded respiratory therapists to measure blood gases and spontaneous breathing parameters when appropriate, and prompted therapists when a patient met criteria for extubation. Each protocol instruction was individually acknowledged or refused by the therapist, and reasons for refusal were documented. If an attempt was made to run the protocol, but ventilator data had not been charted in the previous 30 minutes, the computer prompted the user to chart the data needed for the protocol.

Figure 2.

Example of instructions generated by a HELP computerized ventilator management protocol. The protocol logic relied on Fio2, PEEP, respiratory rate, tidal volume, and pressure support settings that were manually charted by respiratory therapists. Fio2 = fraction of inspired oxygen, PEEP = positive end-expiratory pressure.

Data Analysis

Data quality was assessed by measuring the percentage of time each manually charted ventilator setting matched the corresponding MIB values. Also measured was charting delay, defined as the interval between the time therapists reported observing values and the time the values were actually “charted” into the computer. Charting delay did not necessarily affect the percentage of time that the manual and MIB data matched, but rather provided an indication of whether charting was performed in a timely fashion. For example, if a patient’s positive end-expiratory pressure (PEEP) setting remained constant, but the therapist did not record the setting until 15 minutes after it was observed, this charting delay would have no effect on the percentage of time that PEEP was charted correctly. Part of the charting delay consisted of the charting duration, which was defined as the time respiratory therapists spent entering data. Because timekeeping in the HELP System only had 1-minute granularity, a correction factor of 0.5 minutes was added to each charting duration measurement to eliminate situations in which zero time would have been recorded.
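The two measures defined above can be sketched in a few lines of Python. This is a minimal illustration and not the analysis code used in the study: the function names, the data layout as `(time, value)` pairs, and the rule that a charted value remains in effect until the next charting instance are all assumptions made for the example.

```python
from bisect import bisect_right


def percent_time_correct(mib_samples, charted):
    """Percentage of MIB samples whose value equals the charted value in effect.

    mib_samples: list of (time_s, value) pairs at a fixed sampling interval.
    charted:     list of (time_s, value) pairs sorted by time. Assumption: a
                 charted value stays in effect until the next charting instance.
    """
    chart_times = [t for t, _ in charted]
    matched = total = 0
    for t, mib_value in mib_samples:
        i = bisect_right(chart_times, t) - 1  # latest charting at or before t
        if i < 0:
            continue  # nothing charted yet, so the sample cannot be scored
        total += 1
        matched += charted[i][1] == mib_value
    return 100.0 * matched / total if total else float("nan")


def charting_delay_min(observed_min, stored_min):
    """Interval between the reported observation time and the time of storage."""
    return stored_min - observed_min


def charting_duration_min(started_min, stored_min, correction=0.5):
    """Time spent entering data, plus the 0.5-minute correction for 1-minute clocks."""
    return (stored_min - started_min) + correction
```

Because the MIB samples are evenly spaced, counting matching samples is equivalent to measuring matching time. A charting instance that is started and stored within the same clock minute receives a duration of 0.5 minutes rather than zero.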

The value of each ventilator setting used in the protocol logic (Fio2, PEEP, respiratory rate, tidal volume, and pressure support) was compared with MIB values measured at the time of protocol execution and 2 minutes prior to that time. If a manually charted value used in the protocol logic did not match any MIB-acquired value within the 2-minute window, the manually charted value was considered to be in error. Using a 2-minute window prevented situations in which a new setting was recorded after a protocol instruction was generated, but due to the 1-minute time granularity of the HELP System, the new setting was time-stamped at the same minute the protocol was executed.
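The 2-minute window rule can be expressed as a small check. The sketch below is illustrative only: the function name, the use of seconds as the time unit, and the choice to return `None` when no MIB samples fall inside the window are assumptions, not details reported in the study.

```python
def charted_value_in_error(charted_value, mib_samples, run_time_s, window_s=120):
    """Return True when no MIB sample in the window equals the charted value.

    mib_samples: iterable of (time_s, value) pairs for one setting.
    The window covers [run_time_s - window_s, run_time_s], 2 minutes by default.
    Returns None when the window holds no MIB samples (an assumption of this sketch).
    """
    in_window = [v for t, v in mib_samples
                 if run_time_s - window_s <= t <= run_time_s]
    if not in_window:
        return None
    return charted_value not in in_window
```

With this rule, a charted Fio2 of 70% is not flagged if the ventilator was still set to 70% at any point in the 2 minutes before the protocol ran, even if the setting had just been changed to 60%.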

Results

At least one hour of ventilator setting data was collected via the MIB for 722 ICU admissions, which included 678 unique patients. Table 2 shows characteristics of the ICU admissions. More than 41,660 hours (1,736 days) of MIB ventilator data were collected, during which time 29,054 manual charting instances were recorded. Depending on the mode of ventilation, up to ten individual setting values were recorded for each charting instance. Fio2, PEEP, and the alarm limit settings were recorded for all modes of ventilation. Tidal volume (VT) and respiratory rate settings were recorded only for controlled modes of ventilation. The pressure support setting was recorded only for modes of ventilation that used pressure support. Table 3 shows the average number of setting changes per 24 hours for manually charted and MIB-acquired data. Also in Table 3 are the percentages of time that manually charted values matched the MIB values. About 25% of the settings recorded by the MIB lasted for less than 1 minute, and events with such short durations were not typically charted by respiratory therapists.
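One way to derive setting changes and short-lived settings from a stream of 5-second samples is to collapse consecutive identical values into runs. The sketch below assumes that approach; the study does not describe how changes were counted, and the function names and the 60-second threshold parameter are hypothetical.

```python
from itertools import groupby


def setting_runs(values, sample_seconds=5):
    """Collapse consecutive identical samples into (value, duration_s) runs."""
    return [(v, sum(1 for _ in grp) * sample_seconds) for v, grp in groupby(values)]


def changes_per_24h(values, sample_seconds=5):
    """Number of setting changes, scaled to a 24-hour period."""
    hours = len(values) * sample_seconds / 3600
    if hours == 0:
        return float("nan")
    return (len(setting_runs(values, sample_seconds)) - 1) * 24 / hours


def share_of_short_runs(values, threshold_s=60, sample_seconds=5):
    """Fraction of runs that lasted less than threshold_s seconds."""
    runs = setting_runs(values, sample_seconds)
    return sum(d < threshold_s for _, d in runs) / len(runs) if runs else float("nan")
```

A setting that is raised for 30 seconds and then returned to its earlier value counts as two changes in the sampled data, although a therapist charting every two hours would likely record neither.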

Table 2. Patient Characteristics by Intensive Care Unit

| Characteristic | CCU (n = 31) | MSICU (n = 143) | STRICU (n = 341) | TICU (n = 207) |
|---|---|---|---|---|
| Age, yr, mean ± SD | 61 ± 13 | 54 ± 19 | 48 ± 19 | 63 ± 14 |
| Female, % | 39 | 52 | 42 | 33 |
| In-hospital mortality, % | 32 | 11 | 24 | 9.2 |
| Hospital length of stay, days, median (IQR) | 9.6 (6.3–18) | 11 (4.2–19) | 12 (5.8–20) | 12 (7.1–25) |
| ICU length of stay, days, median (IQR) | 6.0 (4.1–12) | 5.0 (1.8–12) | 7.3 (2.3–15) | 4.8 (1.1–15) |
| Total hours on ventilator, median (IQR) | 99 (35–240) | 38 (15–150) | 97 (23–231) | 22 (6.7–234) |
| Weaning hours on ventilator, ∗ median (IQR) | 12 (3.2–37) | 13 (2.9–80) | 29 (4.5–103) | 2.5 (0.4–39) |
| Highest APACHE-II score during admission, mean ± SD | 22 ± 9 | 16 ± 9 | 20 ± 9.1 | 18 ± 10 |
| Lowest GCS score during admission, mean ± SD | 7.1 ± 4.0 | 6.9 ± 3.8 | 5.2 ± 3.2 | 9.0 ± 4.7 |
| Enrolled in computerized ventilator management protocol,† % | 61 | 82 | 83 | 25 |

∗ Weaning modes used in study ICUs were continuous positive airway pressure and pressure support ventilation.

† Computerized ventilator management protocols were ordered by physicians to help maintain oxygenation and ventilation and to assist in weaning.

ICU = intensive care unit; CCU = coronary care unit; MSICU = medical/surgical ICU; STRICU = shock/trauma ICU; TICU = thoracic ICU; IQR = interquartile range; APACHE = Acute Physiology and Chronic Health Evaluation; GCS = Glasgow Coma Score.

Table 3. Percentage of Time That Manually Charted Settings Were Correct (Matched the MIB Values) and Average Number of Changes Recorded for Each Setting (HELP and MIB) per 24 Hours

| Setting | HELP Changes ∗ | MIB Changes† | Total Hours | Incorrect Hours | Percentage of Time Correct |
|---|---|---|---|---|---|
| Fio2 | 2.16 | 4.01 | 41,660 | 1,116.1 | 97.3 |
| PEEP | 0.85 | 2.33 | 41,660 | 412.2 | 99.0 |
| VT | 0.32 | 2.68 | 25,001 | 431.8 | 98.3 |
| Respiratory rate | 1.27 | 3.52 | 25,001 | 521.7 | 97.9 |
| Pressure support | 1.57 | 6.42 | 6,134 | 260.1 | 95.8 |
| Peak flow | 0.88 | 3.99 | 41,660 | 2,237 | 94.6 |
| Low VE alarm | 0.80 | 1.88 | 41,660 | 1,926 | 95.4 |
| High pressure alarm | 0.37 | 0.76 | 41,660 | 3,745 | 91.0 |
| High respiratory rate alarm | 0.69 | 2.19 | 41,660 | 7,977 | 80.9 |
| Low VT alarm | 0.90 | 3.12 | 41,660 | 10,032 | 75.9 |

∗ Average number of setting changes recorded in the computer charting application (HELP) per 24 hours.

† Average number of setting changes recorded by the MIB per 24 hours.

MIB = Medical Information Bus; Fio2 = fraction of inspired oxygen; PEEP = positive end-expiratory pressure; VT = tidal volume; VE = minute ventilation.
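The last column of Table 3 follows from the two preceding columns as 100 × (total hours − incorrect hours) / total hours. The short Python check below recomputes it from the published figures; the dictionary layout and function name are illustrative choices, and the numbers are copied from the table.

```python
TABLE_3 = {
    # setting: (total hours, incorrect hours, reported % of time correct)
    "Fio2": (41660, 1116.1, 97.3),
    "PEEP": (41660, 412.2, 99.0),
    "VT": (25001, 431.8, 98.3),
    "Respiratory rate": (25001, 521.7, 97.9),
    "Pressure support": (6134, 260.1, 95.8),
    "Peak flow": (41660, 2237, 94.6),
    "Low VE alarm": (41660, 1926, 95.4),
    "High pressure alarm": (41660, 3745, 91.0),
    "High respiratory rate alarm": (41660, 7977, 80.9),
    "Low VT alarm": (41660, 10032, 75.9),
}


def percent_correct(total_hours, incorrect_hours):
    """Share of monitored time during which the charted value matched the MIB."""
    return 100.0 * (total_hours - incorrect_hours) / total_hours


# Recompute the final column, rounded to one decimal place as in the table.
recomputed = {name: round(percent_correct(total, bad), 1)
              for name, (total, bad, _) in TABLE_3.items()}
```

Each recomputed value agrees with the reported percentage when rounded to one decimal place.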

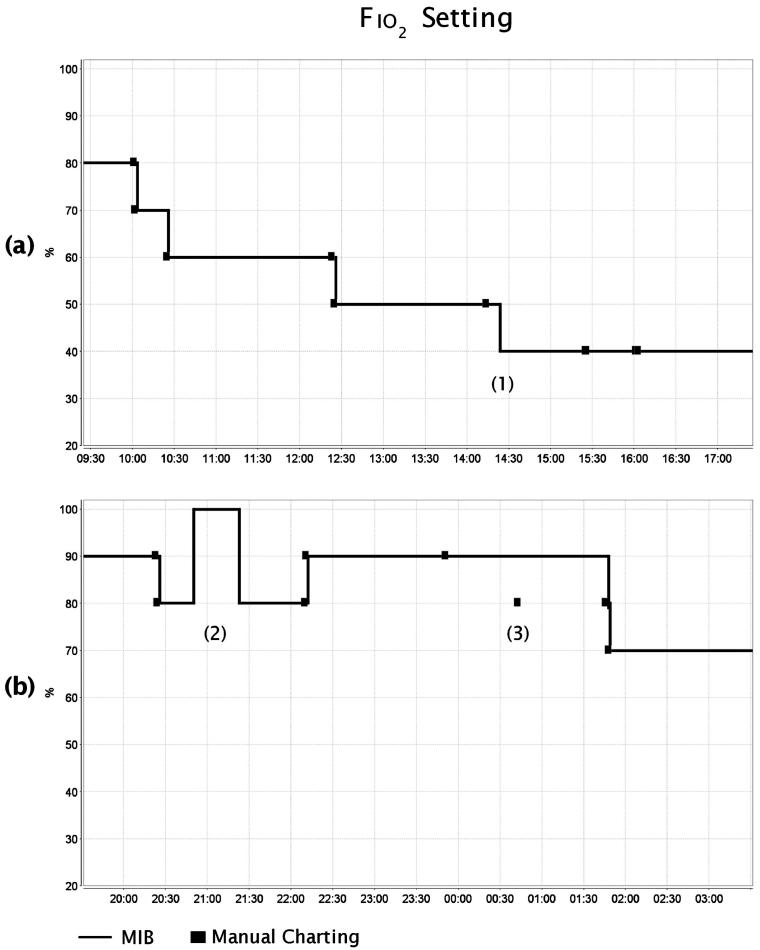

The percentage of time that manually charted values matched the MIB values was affected by (1) delayed charting of setting changes, (2) failure to chart setting changes, and (3) incorrect charting of settings. Figure 3 illustrates these three errors. Each graph in the figure presents eight hours of Fio2 settings collected via the MIB, along with the manually charted Fio2 values for the same period. In Figure 3A, a setting change occurred at 14:22 but was not recorded in HELP until 15:25, which was 63 minutes (1.05 hours) later. The manually charted Fio2 value for the 8-hour window matched the MIB data for only 6.95 hours, or 86.9% of the time. In Figure 3B near label (2), a setting change took place that was not recorded in HELP before the setting changed again. In this case, 37 minutes elapsed while the Fio2 was set at 100% and the HELP value did not change from 80%. At the time labeled (3) in Figure 3B, a value entered into HELP at 00:46 (80%) differed from the corresponding MIB value (90%). This error persisted for 68 minutes until the Fio2 setting was changed to 80%. For the 8-hour time period in Figure 3B, the Fio2 setting was charted incorrectly for 37 + 68 = 105 minutes (or 1.75 hours), so the percentage of time the Fio2 setting was charted correctly was only 78.1%.

Figure 3.

Examples of charting errors that affected the percentage of time that manually charted values were correct. (A) The delayed recording of an Fio2 setting change is shown near (1), where a setting change occurred at time 14:22, but was not manually charted until 15:25. The Fio2 setting was correct 86.9% of the time in this 8-hour window. (B) A failure to record an Fio2 setting change is shown near label (2), and the recording of an incorrect value is shown near (3). The Fio2 setting was correct 78.1% of the time in this 8-hour window. Fio2 = fraction of inspired oxygen.

Table 4 shows the number of manual charting instances and edited charting instances by ICU, along with the average charting delay and charting duration for each charting event. The number of charting instances that were edited after they had been initially entered was 405 (1.39%). Of these edits, 158 (39.0%) added missing information, 206 (50.9%) corrected previously entered values, and 41 (10.1%) were performed for other reasons (e.g., deleting duplicate chartings, deleting data entered for the wrong patient or at the wrong time). The combined average charting delay was 6.1 minutes (median 1.5 minutes, range: <1–795 minutes), including an average of 1.8 minutes spent entering data into the charting application.

Table 4. Charting Instances, Modifications, Charting Delay, and Charting Duration by ICU

| | CCU | MSICU | STRICU | TICU | Total |
|---|---|---|---|---|---|
| Charting instances | 933 | 3,483 | 16,038 | 8,600 | 29,054 |
| Charting instances edited (%) | 7 (0.75%) | 48 (1.38%) | 176 (1.10%) | 174 (2.02%) | 405 (1.39%) |
| Add missing information (%) | 3 (42.9%) | 10 (20.8%) | 68 (38.6%) | 77 (44.3%) | 158 (39.0%) |
| Correct values (%) | 4 (57.1%) | 38 (79.2%) | 94 (53.4%) | 70 (40.2%) | 206 (50.9%) |
| Other (%) | 0 (0%) | 0 (0%) | 14 (8.0%) | 27 (15.5%) | 41 (10.1%) |
| Charting delay, minutes, mean (range) | 7.9 (<1–721.5) | 3.7 (<1–502.5) | 3.6 (<1–795.5) | 11.8 (<1–649.5) | 6.1 (<1–795.5) |
| Charting duration, minutes, mean (SD) | 1.9 (1.72) | 1.5 (1.21) | 1.7 (1.49) | 1.9 (1.61) | 1.8 (1.51) |

ICU = intensive care unit; CCU = coronary care unit; MSICU = medical/surgical ICU; STRICU = shock/trauma ICU; TICU = thoracic ICU.

Of the 722 ICU admissions, 469 (65.0%) were enrolled in a computerized ventilator management protocol. The protocol generated a total of 14,263 sets of instructions (see Table 5). The number of protocol instructions that were generated with one or more incorrectly charted setting values was 559 (3.9%).

Table 5. Ventilator Management Protocol Instructions Generated with Incorrect Setting Values (Number of Protocol Instructions = 14,263)

| Setting | Number Incorrect | Percent Incorrect |
|---|---|---|
| Fio2 | 172 | 1.2 |
| PEEP | 200 | 1.4 |
| VT | 40 | 0.3 |
| Respiratory rate | 66 | 0.5 |
| Pressure support | 116 | 0.8 |
| Any incorrect | 559 ∗ | 3.9 |

∗ Some instructions were generated with more than one incorrect setting. There were 594 total incorrect settings.

Fio2 = fraction of inspired oxygen; PEEP = positive end-expiratory pressure; VT = tidal volume; VE = minute ventilation.

Discussion

Previous studies evaluating the quality of manually charted data have examined either (1) accuracy at a single point in time (which was usually the case in anesthesia monitoring studies), or (2) the extent to which a manually charted value compared with the mean or median of a block of automatically acquired data (e.g., Turner et al. 19 and Cunningham et al. 20). To the best of our knowledge, this was the first study that reported the percentage of time a clinical variable was documented correctly in an electronic medical record. We suggest the “percentage of time correct” could be a valuable metric for evaluating the quality of manual documentation for many types of clinical variables, such as ventilator settings, vital signs, and infusion pump drip rates.

For the ventilator settings examined in this study, the percentage of time correct was highest for PEEP (99.0%), set tidal volume (98.3%), set respiratory rate (97.9%), Fio2 (97.3%), and pressure support level (95.8%). Of note, these were the settings used by the computerized ventilator management protocols. Peak inspiratory flow setting, which was not used by the protocols, was correct 94.6% of the time. The percentage of time correct for the low minute ventilation alarm was 95.4% compared to 91.0% for the high pressure alarm setting, 80.9% for the high respiratory rate alarm setting, and 75.9% for the low tidal volume alarm setting. Regarding the uncharacteristically low percentages for the high respiratory rate alarm setting (80.9%) and the low tidal volume alarm setting (75.9%), respiratory therapists anecdotally suggested that these alarms were more prevalent and had a higher false positive rate than the high pressure and low minute ventilation alarms. It is probable that changes to alarm limit settings were less likely to be documented when the adjustments were made as a result of clinicians responding to alarms that may have interrupted other tasks.

Keystroke errors, mental lapses, distractions, and fatigue likely contributed to inaccurate charting, but the computer charting application may have contributed to data entry errors as well. When respiratory therapists accessed the computer charting application, the setting fields contained the previously charted values. Prefilling the fields saved respiratory therapists from repeatedly charting the same information, but required therapists to explicitly enter any setting changes rather than simply pressing Enter to accept the previous values. On occasion, most notably with alarm limit settings, a setting was changed but the respiratory therapist continued to chart the old (incorrect) value for several hours.

The 559 protocol instructions that were generated with one or more incorrectly charted setting values were a potential source of error and confusion. The protocols were transparent in the sense that data used in the instruction-generating logic were explicitly displayed along with the instruction. When a setting change was suggested, the current setting value (i.e., the most recently charted value) and the suggested value were displayed in the computer instruction, for example: “Decrease the Fio2 from 70% to 60%.” This instruction would have been illogical if the ventilator was already set at 60%.

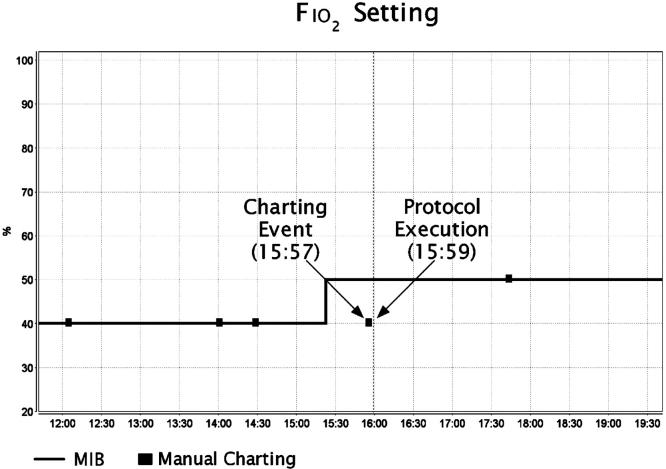

Illogical protocol instructions could be confusing, especially to a therapist unfamiliar with the protocol logic. At a minimum, when incorrect instructions were generated, respiratory therapists had to spend time identifying the charting problem, correcting it, and documenting it in the patient record. Repeated cases might lead to frustration and decreased confidence in future protocol instructions. Figure 4 shows the MIB and manually charted measurements of Fio2 for one of the study patients. The instructions generated by the protocol execution at 15:59 were based on the incorrect Fio2 of 40% that was charted at 15:57. As a result, the protocol incorrectly instructed the respiratory therapist to change the mode of ventilation from pressure support to continuous positive airway pressure. In this case, the respiratory therapist declined to follow the protocol instruction, noting in the record that the patient was sleepy and not breathing deeply and as a result had a low blood oxygen saturation.

Figure 4.

Example of an incorrect Fio2 setting that was used to generate protocol instructions. Fio2 = fraction of inspired oxygen.

Limitations

There were no exclusion criteria for patients to participate in the study; however, MIB data were not collected from several ventilated patients in the study ICUs. There were both human and technological reasons for not collecting data from these patients. For example, respiratory therapists did not always connect ventilators to the MIB to enable the unit-wide disconnection alarm; many times the necessary cable was not connected when patients were admitted to the unit, or the cable was not reconnected when patients returned from being transported to the operating room or the imaging laboratory. Data could not be collected when the ventilator was not connected to the MIB. Technological limitations affecting the data collection process were caused by MIB hardware failures and the difficulty of associating raw ventilator data with a specific patient. Because of the large amount of ventilator data collected via the MIB (over 1,736 ventilator days), we believe that the human and technical deficiencies did not compromise the validity of the study.

This study did not specifically analyze the magnitude of differences in manually vs. automatically collected setting values. However, for both clinical decision makers and computerized decision support tools, incorrectly charted settings are important notwithstanding the magnitude of error. We did not determine prior to data analysis a useful way to combine incorrect duration with the magnitude of error. Such a method would be particularly relevant when dealing with measured physiologic variables as compared to setting values.

The charting instances extracted from the research data warehouse did not contain both the original and edited values for each setting, measured physiologic variable, and clinical observation; instead, only the most recently saved values and a marker identifying whether the charting instance had been edited were available. Because the data were extracted from the research data warehouse and not the main clinical data repository (the legal record), it was impossible to determine how many edits affected setting values. However, the low number of charting instances that were edited to correct previously entered values—206 (0.7%) out of a total of 29,054 instances—suggested that respiratory therapists were either largely unaware of periods when setting values were incorrect, or they thought that retroactively correcting the record was not necessary.

The observed 6.1-minute average charting delay (including an average of 1.8 minutes spent charting) suggested that, in most cases, data entry was performed soon after settings were observed. Because the time that settings were observed was self-reported, it is possible that respiratory therapists underestimated or misrepresented the amount of time that elapsed between observing and entering data. When a respiratory therapist started the charting application, current time was entered by default into the field for observation time. Because additional effort was required to edit the observation time from the default current time, it is probable that some charting instances contained values that had been observed in the past but were associated with the current time. Such situations would have caused underestimation of the charting delay.
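The underestimation described above can be illustrated with a minimal sketch. It assumes that charting delay is computed as the difference between the recorded observation time and the time the entry was saved. The function name and all timestamps are hypothetical and are not taken from the study data.

```python
from datetime import datetime


def charting_delay_minutes(observed_at, saved_at):
    """Minutes between the recorded observation time and the time the entry was saved."""
    return (saved_at - observed_at).total_seconds() / 60.0


# Hypothetical charting event: settings were actually observed at 10:00,
# the charting application was opened at 10:10 (which becomes the default
# observation time), and the entry was saved at 10:12.
actually_observed = datetime(2006, 1, 1, 10, 0)
default_observation_time = datetime(2006, 1, 1, 10, 10)
saved = datetime(2006, 1, 1, 10, 12)

true_delay = charting_delay_minutes(actually_observed, saved)
recorded_delay = charting_delay_minutes(default_observation_time, saved)
underestimate = true_delay - recorded_delay
```

If the therapist does not edit the default observation time, the recorded delay in this example is 2 minutes although the true delay is 12 minutes, so the delay is underestimated by 10 minutes.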

Future Work

Although many of today’s infusion pumps, patient monitors, and ventilators are fitted with an RS-232 serial port for data transfer, manufacturers have been slow to adopt the ISO/IEEE 11073 standard. Lack of consumer demand has been identified as a major reason for slow adoption. 37 The lack of compliance with medical device communication standards contributes to the fact that relatively few institutions are automatically acquiring data from bedside devices for integration into the patient record. Clinically based strategies must be developed to specify which data elements should be collected from bedside devices and at what frequency. At LDS Hospital, we are collecting ventilator data at 5-second intervals to detect patient–ventilator disconnections and to identify ventilator setting changes in a timely manner. 36 We anticipate the further development of clinical decision support tools that rely on automatically acquired ventilator data, such as graphical displays of trends, integration of ventilator data with blood gas and medication administration data, and increasingly intelligent ventilator management protocols.
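To make the idea of identifying setting changes from periodically sampled data concrete, the following sketch scans a series of samples and reports each point at which the value differs from the previous sample. It is a simplified assumption about how such detection could work, not a description of the system in use at LDS Hospital. The function name and the sample data are hypothetical.

```python
def setting_changes(samples):
    """Return (time, old_value, new_value) for each change between consecutive samples.

    samples: list of (time_in_seconds, value) tuples ordered by time,
    for example one Fio 2 reading every 5 seconds (hypothetical format).
    """
    changes = []
    # Compare every sample with the one immediately before it.
    for (_, previous), (time, current) in zip(samples, samples[1:]):
        if current != previous:
            changes.append((time, previous, current))
    return changes


# Hypothetical Fio 2 samples at 5-second intervals.
fio2_samples = [(0, 40), (5, 40), (10, 50), (15, 50), (20, 40)]
detected = setting_changes(fio2_samples)
```

With 5-second sampling, a change detected this way is known to have occurred within the 5 seconds preceding the reported time, whereas a manually charted change may not appear in the record until the next charting event.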

Conclusion

Our study evaluated data quality of ventilator settings entered by respiratory therapists into a computer charting application. Data were entered directly into the bedside computer while the therapist was observing the patient. Prior to the study, computer charting of respiratory care data had been used for over 20 years in the study ICUs, and the implementation and use of computerized ventilator protocols reinforced the necessity of accurate and timely charting. The minimal charting delay and the high level of accuracy observed for most settings were certainly influenced by the availability of computer terminals in each patient room, the culture of bedside charting prevalent among the respiratory therapists, and the extensive use of computerized ventilator protocols.

In the intensive care environment, which is frequently noisy, stressful, and prone to interruption, charting in an accurate and timely fashion is difficult. 38 Collecting data using the MIB provided an opportunity to evaluate the quality of ventilator setting data entered by respiratory therapists into a computer charting system. Although it may be challenging to apply the results observed in this study to ICUs where charting relies primarily on paper flowsheets, recent studies by Akhtar et al. 1 and Soo Hoo et al. 3 have noted important inconsistencies in respiratory care charting among practitioners and institutions. Poor data quality adversely affects both human and computer decision making. Even at institutions where manual charting of ventilator settings is performed well, automatic data collection should be used to eliminate delays, improve charting efficiency, and reduce errors caused by incorrect data.

Footnotes

Dr. Vawdrey is funded through a training grant in medical informatics from the National Library of Medicine, LM007124. Additional support for this research was provided by Intermountain Healthcare.

The authors thank Kyle Johnson, Bill Hawley, and Tupper Kinder of the Department of Medical Informatics at LDS Hospital for technical assistance throughout the data collection process. Lori Carpenter and Vrena Flint from the Department of Respiratory Care provided helpful suggestions about the study design and the data analysis.

References

- 1. Akhtar SR, Weaver J, Pierson DJ, Rubenfeld GD. Practice variation in respiratory therapy documentation during mechanical ventilation. Chest 2003;124:2275-2282.

- 2. Holt AA, Sibbald WJ, Calvin JE. A survey of charting in critical care units. Crit Care Med 1993;21:144-150.

- 3. Soo Hoo GW, Park L. Variations in the measurement of weaning parameters: a survey of respiratory therapists. Chest 2002;121:1947-1955.

- 4. Dojat M, Harf A, Touchard D, Lemaire F, Brochard L. Clinical evaluation of a computer-controlled pressure support mode. Am J Respir Crit Care Med 2000;161:1161-1166.

- 5. East TD, Henderson S, Morris AH, Gardner RM. Implementation issues and challenges for computerized clinical protocols for management of mechanical ventilation in ARDS patients. Symposium for Computer Applications in Medical Care (SCAMC) 1989;13:583-587.

- 6. Andrews RD, Gardner RM, Metcalf SM, Simmons D. Computer charting: an evaluation of a respiratory care computer system. Respir Care 1985;30:695-707.

- 7. Clemmer TP, Gardner RM. Data gathering, analysis, and display in critical care medicine. Respir Care 1985;30:586-601.

- 8. East TD, Morris AH, Wallace CJ, et al. A strategy for development of computerized critical care decision support systems. Int J Clin Monit Comput 1991;8:263-269.

- 9. Sittig DF, Gardner RM, Pace NL, Morris AH, Beck E. Computerized management of patient care in a complex, controlled clinical trial in the intensive care unit. Comput Methods Programs Biomed 1989;30:77-84.

- 10. Nelson NC, Evans RS, Samore MH, Gardner RM. Detection and prevention of medication errors using real-time bedside nurse charting. J Am Med Inform Assoc 2005;12:390-397.

- 11. Blumenstein BA. Verifying keyed medical research data. Stat Med 1993;12:1535-1542.

- 12. Mullooly JP. The effects of data entry error: an analysis of partial verification. Comput Biomed Res 1990;23:259-267.

- 13. Benson M, Junger A, Quinzio L, et al. Influence of the method of data collection on the documentation of blood-pressure readings with an Anesthesia Information Management System (AIMS). Methods Inf Med 2001;40:190-195.

- 14. Edsall DW, Deshane P, Giles C, Dick D, Sloan B, Farrow J. Computerized patient anesthesia records: less time and better quality than manually produced anesthesia records. J Clin Anesth 1993;5:275-283.

- 15. Edsall DW, Deshane PD, Gould NJ, Mehta Z, White SP, Solod E. Elusive artifact and cost issues with computerized patient records for anesthesia (CPRA). Anesthesiology 1997;87:721-722.

- 16. Block FE. Normal fluctuation of physiologic cardiovascular variables during anesthesia and the phenomenon of “smoothing.” J Clin Monit 1991;7:141-145.

- 17. Cook RI, McDonald JS, Nunziata E. Differences between handwritten and automatic blood pressure records. Anesthesiology 1989;71:385-390.

- 18. Taylor DE, Whamond JS. Reliability of human and machine measurements in patient monitoring. Eur J Intens Care Med 1975;1:53-59.

- 19. Turner HB, Anderson RL, Ward JD, Young HF, Marmarou A. Comparison of nurse and computer recording of ICP in head injured patients. J Neurosci Nurs 1988;20:236-239.

- 20. Cunningham S, Deere S, Elton RA, McIntosh N. Comparison of nurse and computer charting of physiological variables in an intensive care unit. Int J Clin Monit Comput 1996;13:235-241.

- 21. Osborn JJ, Beaumont JO, Raison JC, Russell J, Gerbode F. Measurement and monitoring of acutely ill patients by digital computer. Surgery 1968;64:1057-1070.

- 22. Warner HR, Gardner RM, Toronto AF. Computer-based monitoring of cardiovascular functions in postoperative patients. Circulation 1968;37(Suppl):II68-II74.

- 23. Lewis FJ. Monitoring of patients in intensive care units. Surg Clin North Am 1971;51:15-23.

- 24. Peters RM, Stacy RW. Automatized clinical measurement of respiratory parameters. Surgery 1964;56:44-52.

- 25. Blumenfeld W, Marks S, Cowley RA. An inexpensive data acquisition system for a multi-bed intensive care unit. Am J Surg 1972;123:580-584.

- 26. Roccaforte P, Woodring PL. An automatic data acquisition system for ventilator patients. Int J Clin Monit Comput 1989;6:123-126.

- 27. Chambrin MC, Ravaux P, Chopin C, Mangalaboyi J, Lestavel P, Fourrier F. Computer-assisted evaluation of respiratory data in ventilated critically ill patients. Int J Clin Monit Comput 1989;6:211-215.

- 28. Shabot MM. Standardized acquisition of bedside data: the IEEE P1073 Medical Information Bus. Int J Clin Monit Comput 1989;6:197-204.

- 29. Kennelly RJ. Improving acute care through use of medical device data. Int J Med Inf 1998;48:145-149.

- 30. Garnsworthy J. Standardizing medical device communications: The Medical Information Bus. Med Device Technol 1998;9:18-21.

- 31. Gardner RM, Tariq H, Hawley WL, East TD. Medical Information Bus: the key to future integrated monitoring. Int J Clin Monit Comput 1989;6:205-209.

- 32. Kuperman GJ, Gardner RM, Pryor TA. HELP: A Dynamic Hospital Information System. New York: Springer-Verlag; 1991.

- 33. Morris AH, Wallace CJ, Menlove RL, et al. Randomized clinical trial of pressure-controlled inverse ratio ventilation and extracorporeal CO2 removal for adult respiratory distress syndrome. Am J Respir Crit Care Med 1994;149:295-305.

- 34. East TD, Young WH, Gardner RM. Digital electronic communication between ICU ventilators and computers and printers. Respir Care 1992;37:1113-1123.

- 35. Gardner RM, Hawley WL, East TD, Oniki TA, Young HF. Real time data acquisition: recommendations for the Medical Information Bus (MIB). Int J Clin Monit Comput 1991;8:251-258.

- 36. Evans RS, Johnson KV, Flint VB, et al. Enhanced notification of critical ventilator events. J Am Med Inform Assoc 2005;12:589-595.

- 37. Kennelly RJ, Gardner RM. Perspectives on development of IEEE 1073: the Medical Information Bus (MIB) standard. Int J Clin Monit Comput 1997;14:143-149.

- 38. Drews FA. The frequency and impact of task interruptions on patient safety in the intensive care unit. 16th World Congress on Ergonomics. Maastricht, the Netherlands: Elsevier; 2006. Spring 2006.