Abstract

Objective

Few instruments are available to measure the performance of intensive care unit (ICU) clinical information systems. Our objectives were: 1) to develop a survey-based metric that assesses the automation and usability of an ICU’s clinical information system; 2) to determine whether higher scores on this instrument correlate with improved outcomes in a multi-institution quality improvement collaborative.

Design

This is a cross-sectional study of the medical directors of 19 Michigan ICUs participating in a state-wide quality improvement collaborative designed to reduce the rate of catheter-related blood stream infections (CRBSI). Respondents completed a survey assessing their ICU’s information systems.

Measurements

The mean of 54 summed items on this instrument yields the clinical information technology (CIT) index, a global measure of the ICU’s information system performance on a 100 point scale. The dependent variable in this study was the rate of CRBSI after the implementation of several evidence-based recommendations. A multivariable linear regression analysis was used to examine the relationship between the CIT score and the post-intervention CRBSI rates after adjustment for the pre-intervention rate.

Results

In this cross-sectional analysis, we found that a 10 point increase in the CIT score is associated with 4.6 fewer catheter related infections per 1,000 central line days for ICUs who participate in the quality improvement intervention for 1 year (95% CI: 1.0 to 8.0).

Conclusions

This study presents a new instrument to examine ICU information system effectiveness. The results suggest that the presence of more sophisticated information systems was associated with greater reductions in the bloodstream infection rate.

Introduction

Intensive care units are complex environments, where rapid access to clinical information can be life-determining. Clinical information technologies (CIT) such as electronic medical records (EMRs), computerized provider order entry (CPOE), clinical decision support systems (CDSS), and picture archiving and communication systems (PACS) may profoundly affect ICU performance and clinical outcomes. The availability and use of information technology may favorably impact clinical endpoints, costs, and efforts to improve quality of care. 1, 2 However, the degree to which CIT improves outcomes is difficult to assess. Although case reports suggest the benefit of ICU information systems, we do not know of any studies that have examined this impact across ICUs. 3, 4 Standardized instruments that measure ICU performance, particularly from the perspective of the clinician, do not exist to allow for such comparisons.

We have previously developed and validated a clinical information technology assessment tool (CITAT) that quantitatively assesses the automation and usability of a hospital’s general inpatient information system. We now describe development of a similar ICU-specific clinical information technology assessment tool and its use in conjunction with a standardized quality improvement intervention to reduce catheter-related bloodstream infections (CRBSI).

Methods

Overview of Instrument Development

We developed the clinical information technology assessment tool for intensive care units (CITAT-ICU) based on an earlier version of this survey constructed for physicians who practice on the general inpatient wards. The original instrument was produced in eight steps according to established methods of survey design. These steps included: development of a conceptual model, literature review, content identification, item construction, pre-testing, and item selection and reclassification. This instrument was further tested and validated in four U.S. hospitals, and demonstrated discriminant validity, convergent validity, reliability, and precision. 5 To develop the CITAT-ICU we followed the same development sequence, modifying previous items, constructing new items, and re-selecting items based on their suitability for ICU use.

Conceptual Model

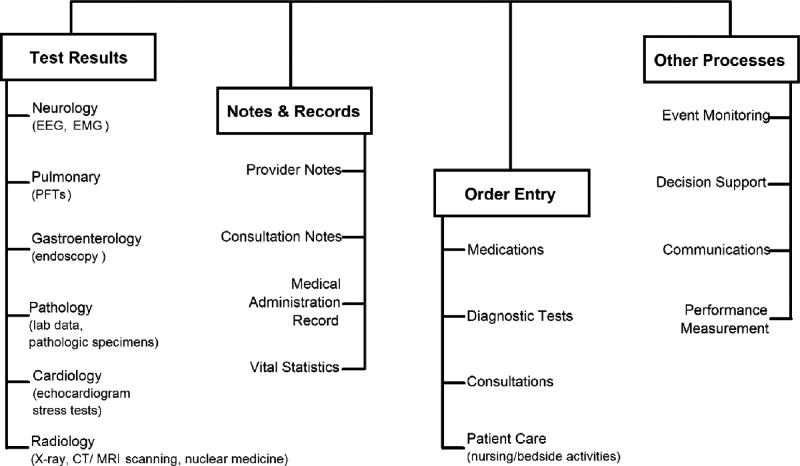

We constructed a measurement model for a hospital information system’s automation and usability, two concepts used to characterize information systems. 6 Automation represents the degree to which clinical information processes in the intensive care unit are fully computerized. We divide automation into four distinct sub-domains: test results, notes & records, order entry, and other processes (Figure 1). 5 Usability represents the degree to which the information system is effective, easy to use, and well supported. Usability is thus separated into three sub-domains: effectiveness, ease, and support. Automation, usability, and all seven sub-domains were defined according to our previous specifications. 5

Figure 1.

Simplified conceptual framework of a hospital clinical information system, including four major components and associated clinical subcomponents. Each box represents types of clinical information that can be potentially computerized. Test Results represent unique results formats specific to a medical specialty. A few examples are listed in parentheses. EEG, electroencephalogram; EMG, electromyogram; PFT, pulmonary function tests. (Reproduced from: Amarasingham R, Diener-West M, Weiner M, Lehmann H, Herbers JE, and Powe NR. Clinical information technology capabilities in four U.S. hospitals: testing a new structural performance measure. Med Care. 2006;44:216–24.)

New Item Construction

Each of the 90 items from the original CITAT was re-interpreted and re-written in the context of the ICU. For each sub-domain we then developed new ICU-specific items, including items that reflect emerging information technologies, such as the ability to electronically measure and chart urine volumes for critically ill patients. This resulted in 30 additional items.

Item Selection

In order to reduce the number of items from 120 items to 60 or fewer, four of the investigators independently reviewed the instrument. Two investigators are academic critical care physicians with experience in the acquisition or design of ICU clinical information systems. Each of the four investigators assigned an ICU-specific priority score from 1–5 for each automation item on three different scales. A high score on the first scale, Impact on Clinical Outcomes, indicates that the automation of that clinical information process is likely to contribute to positive clinical outcomes, both in terms of aggregate measures, such as mortality and death, and process measures, such as obtaining blood cultures prior to giving antibiotics. A high score on the second scale, Impact on Efficiency, indicates that the automation of that process would improve the efficiency of a physician or ICU team in providing care. A high score on the third scale, Relevance, indicates that the process is deemed extremely relevant to the daily work of ICU providers. Usability items were also rated on the Impact on Clinical Outcomes and Impact on Efficiency scales; because the usability of an item is not directly related to its relevance in the ICU, the Relevance scale was not used for usability items.

Scores for each item were summed for each investigator, with a maximum of 15 points for automation items (3 scales × 5 points) and 10 points for usability items (2 scales × 5 points). The items were then ranked from highest to lowest score for each reviewer in both the automation and usability domains; items in the top 50% were assigned a binary score of 1 and the remainder were assigned 0. The resulting binary scores from all four reviewers were then summed for each item, with a maximum score of 4 points if the item was in the top 50% for all reviewers. All automation items with ≥ 3 points were selected. Automation items with 2 points that were also ranked in the top 50% by both critical care physicians were also included. All usability items with ≥ 2 points were included in the instrument. This ranking system selected 30 automation items and 24 usability items for the final CITAT-ICU. The CITAT-ICU scoring items are available in the JAMIA on-line appendix, www.jamia.org, accompanying this article.
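
As an illustration only, the selection rule described above can be sketched in Python. The reviewer names, item labels, and scores below are hypothetical, and the handling of ties at the 50% cutoff is an assumption, since the procedure described here does not specify it.

```python
from typing import Dict, List

Scores = Dict[str, int]  # item label -> summed priority score from one reviewer


def top_half_flags(scores: Scores) -> Dict[str, int]:
    """Flag items in the top 50% of one reviewer's ranking with 1, others with 0."""
    ranked = sorted(scores, key=lambda item: scores[item], reverse=True)
    cutoff = len(ranked) // 2  # assumption: ties are broken by input order
    top = set(ranked[:cutoff])
    return {item: int(item in top) for item in scores}


def item_votes(reviewer_scores: Dict[str, Scores]) -> Dict[str, int]:
    """Sum the binary flags across reviewers (0 to 4 with four reviewers)."""
    flags = [top_half_flags(s) for s in reviewer_scores.values()]
    items = list(next(iter(reviewer_scores.values())))
    return {item: sum(f[item] for f in flags) for item in items}


def select_automation(reviewer_scores: Dict[str, Scores],
                      physicians: List[str]) -> List[str]:
    """Keep items with >= 3 votes, or exactly 2 votes from both physicians."""
    votes = item_votes(reviewer_scores)
    flags = {r: top_half_flags(s) for r, s in reviewer_scores.items()}
    selected = []
    for item, n in votes.items():
        both_physicians = all(flags[p][item] == 1 for p in physicians)
        if n >= 3 or (n == 2 and both_physicians):
            selected.append(item)
    return selected


def select_usability(reviewer_scores: Dict[str, Scores]) -> List[str]:
    """Keep usability items with >= 2 votes."""
    votes = item_votes(reviewer_scores)
    return [item for item, n in votes.items() if n >= 2]


# Hypothetical scores for four items (maximum 15 per automation item).
example = {
    "physician_1": {"a": 15, "b": 9, "c": 12, "d": 5},
    "physician_2": {"a": 14, "b": 8, "c": 13, "d": 6},
    "reviewer_3": {"a": 13, "b": 12, "c": 7, "d": 6},
    "reviewer_4": {"a": 9, "b": 15, "c": 5, "d": 12},
}
automation_selected = select_automation(example, ["physician_1", "physician_2"])
print(automation_selected)
```

In this toy example, items "b" and "c" both receive 2 votes, but only "c" is retained under the automation rule because both physician reviewers placed it in their top half.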

Testing within a CRBSI Intervention

We tested the CITAT-ICU instrument in 19 Michigan ICUs participating in the Keystone ICU Project, a collaborative research study between the Johns Hopkins University Quality and Safety Research Group and the Michigan Health & Hospital Association (MHA)–Keystone Center for Patient Safety & Quality. The Keystone Project was designed as a prospective cohort study using ICU-specific historical controls as the baseline comparator to evaluate a multi-faceted, evidence-based intervention to reduce CRBSI. In the original study, 108 ICUs voluntarily participated in the MHA Keystone ICU project. For each of these ICUs, CRBSI rates were obtained in four three-month intervals: 3 months prior to implementation, 0–3 months (peri-implementation), 3–6 months post-implementation, and 6–9 months post-implementation. 7

Before implementing any patient safety intervention, each ICU designated at least one physician and nurse as team leaders. Team leaders were responsible for disseminating the interventions to their colleagues. Training was carried out through biweekly conference calls, coaching from research staff, and two state-wide meetings during the year. Teams were provided a manual of operations that included details regarding the efficacy of the intervention for CRBSI, evidence supporting the intervention, suggestions for implementing the intervention, and methods of data collection. 7 The CRBSI intervention sought to increase the extent to which caregivers used five evidence-based interventions to reduce CRBSI: 1) washing hands prior to the insertion of the central line; 2) using full barrier precautions; 3) cleaning the skin around the insertion site with chlorhexidine; 4) avoiding the femoral site if possible; and 5) removing unnecessary catheters. Team leaders were encouraged to partner with their local hospital infection control practitioner to assist with the implementation of the intervention and data collection.

Study Population and Study Sample

The physician ICU directors of each of the 19 ICUs completed the clinical information technology assessment tool for intensive care units (CITAT-ICU) between March and June 2005. We also wanted to examine differences between the physician director and physician staff scores among the ICUs in order to assess the inter-rater reliability of the instrument. We therefore requested each ICU to have at least one of their staff physicians independently complete the CITAT-ICU.

Statistical Analysis

The independent variables in our analyses were the information technology scores. A CIT Index score and seven subdomain scores were calculated for each ICU based on the responses from each physician ICU director. Each item on the instrument is individually assigned a score from 0 to 5 points. Total scores were calculated for each ICU by adding the total number of points based on the responses, dividing by the maximum number of points, and multiplying by 100. The methods for scoring the instrument, identical to the original CITAT, have been extensively described. 5
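
The scoring arithmetic described above can be sketched as follows. This is a minimal illustration assuming every item carries the same 0 to 5 point range; the function name and the example responses are hypothetical and do not come from the study data.

```python
from typing import Sequence


def cit_score(item_points: Sequence[int], max_points_per_item: int = 5) -> float:
    """Return a 0-100 score: points earned / maximum possible points * 100."""
    if not item_points:
        raise ValueError("at least one item response is required")
    for points in item_points:
        if not 0 <= points <= max_points_per_item:
            raise ValueError(f"item score {points} is outside 0..{max_points_per_item}")
    maximum = max_points_per_item * len(item_points)
    return 100.0 * sum(item_points) / maximum


# Hypothetical director response: all 54 items scored 2 out of 5.
example_score = cit_score([2] * 54)
print(example_score)
```

The same function could be applied to the items of a single sub-domain to obtain a sub-domain score on the same 100 point scale.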

Two CRBSI rates were devised for each ICU: the pre-rate (the rate of CRBSI for the first time period in which data were collected for the ICU) and the post-rate (the CRBSI rate for the last time period in which data were collected for the ICU). The pre- and post-CRBSI rates were calculated by adding the total number of central line infections reported for the 3 month time period and dividing by the total number of central line days for that period. Because some hospitals joined the study late or dropped out early we had to account for the ICU’s “exposure” to the study, since it is theoretically possible that ICUs who participated longer in the study might show greater reductions in CRBSI rates because of greater exposure to the study’s interventions (i.e., a dose-response effect of the intervention). We therefore divided the post-rate by the total number of days the ICU participated in the study. To make the post-rate meaningful we subsequently multiplied the rate by the total number of days in the entire study (365 days). Thus, the post-rate reflects the expected number of infections per thousand central line days after a 365 day exposure to the study. One hospital reported data for only one time period and was thus eliminated from the CRBSI analysis.
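
A short sketch of the rate calculation and the exposure adjustment described above is given below. The function names and the example counts are hypothetical; the multiplication by 1,000 reflects the reporting of rates per 1,000 central line days.

```python
def crbsi_rate_per_1000(infections: int, central_line_days: int) -> float:
    """CRBSI rate for one 3-month period, per 1,000 central line days."""
    if central_line_days <= 0:
        raise ValueError("central_line_days must be positive")
    return 1000.0 * infections / central_line_days


def exposure_adjusted_post_rate(post_rate: float,
                                days_in_study: int,
                                study_length_days: int = 365) -> float:
    """Scale the post-rate to a full-length (365 day) exposure to the study."""
    if days_in_study <= 0:
        raise ValueError("days_in_study must be positive")
    return post_rate / days_in_study * study_length_days


# Hypothetical ICU: 3 infections over 1,500 central line days in its last
# reporting period, after 270 days of participation.
raw_post_rate = crbsi_rate_per_1000(3, 1500)
adjusted_post_rate = exposure_adjusted_post_rate(raw_post_rate, 270)
print(raw_post_rate, round(adjusted_post_rate, 2))
```

An ICU that participated for the full 365 days keeps its observed post-rate, while an ICU with shorter participation has its post-rate scaled up in proportion.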

We tested the association between each category of IT score (CIT index score, automation and usability scores, and seven subdomain scores) and the post-intervention CRBSI rates using a linear regression model. The relationship between the IT score and the post-rate may be affected by the baseline or pre-rate. We therefore adjusted for the pre-rate in a multi-variate model considering the IT variable and post-rate. We also examined the relationship between three hospital covariates (teaching status [teaching vs. non-teaching], bed size [one of nine categories of increasing bed size], and urban status [urban vs. rural]) and the post-intervention CRBSI rate. Results were considered statistically significant at a p-value ≤ 0.05. STATA version 8.2 (College Station, TX) was used for all analyses. The Johns Hopkins University School of Medicine Institutional Review Board approved our research protocol.
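
The structure of the adjusted model (post-rate regressed on the IT score and the pre-rate) can be illustrated with a small ordinary least squares sketch. The analyses in this study were run in STATA; the pure-Python solver, the function names, and the synthetic data below are assumptions made for illustration and are not the study's code or data.

```python
from typing import List, Sequence, Tuple


def ols(X: Sequence[Sequence[float]], y: Sequence[float]) -> List[float]:
    """Ordinary least squares via the normal equations (X'X) b = X'y."""
    k = len(X[0])
    # Augmented matrix [X'X | X'y].
    A = [
        [sum(row[i] * row[j] for row in X) for j in range(k)]
        + [sum(row[i] * yi for row, yi in zip(X, y))]
        for i in range(k)
    ]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(A[r][col]))
        if abs(A[pivot][col]) < 1e-12:
            raise ValueError("design matrix is singular")
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(k):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    return [A[i][k] / A[i][i] for i in range(k)]


def fit_adjusted_model(it_scores: Sequence[float],
                       pre_rates: Sequence[float],
                       post_rates: Sequence[float]) -> Tuple[float, float, float]:
    """Fit post_rate = b0 + b_it * it_score + b_pre * pre_rate."""
    X = [[1.0, s, p] for s, p in zip(it_scores, pre_rates)]
    intercept, beta_it, beta_pre = ols(X, post_rates)
    return intercept, beta_it, beta_pre


# Synthetic, noise-free data generated from known coefficients (not study data).
synthetic_scores = [30.0, 40.0, 50.0, 60.0, 35.0, 55.0]
synthetic_pre = [10.0, 4.0, 8.0, 2.0, 6.0, 12.0]
synthetic_post = [30.0 - 0.46 * s + 0.5 * p
                  for s, p in zip(synthetic_scores, synthetic_pre)]

b0, b_it, b_pre = fit_adjusted_model(synthetic_scores, synthetic_pre, synthetic_post)
# Change in post-rate associated with a 10 point higher IT score,
# holding the pre-rate fixed.
change_per_10_points = 10 * b_it
print(round(b_it, 3), round(change_per_10_points, 2))
```

Because the synthetic data are generated without noise, the fit recovers the coefficients used to create them. The sketch shows only how the adjusted coefficient is interpreted: the coefficient on the IT score is the change in post-rate per 1 point increase in score at a fixed pre-rate, so a 10 point difference corresponds to ten times that coefficient.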

Results

Characteristics of Study Hospitals

A total of 19 ICU directors completed the Clinical Information Technology Assessment Tool for intensive care units (CITAT-ICU) for their facility. Compared to all Michigan hospitals, our study sample had more teaching hospitals (58% in this study vs. 24% overall in Michigan), fewer rural hospitals (11% vs. 25%, respectively), and more hospitals with greater than 300 beds (58% vs. 23%, respectively). In Table 1 we report the mean CIT, automation, and usability scores calculated from the ICU director responses for each category of hospitals. Across all scores, non-teaching hospitals scored higher than teaching hospitals, but the difference was statistically significant only in the case of usability (p < 0.05). Likewise, urban hospitals tended to have higher scores than rural hospitals, but the differences did not achieve statistical significance. In the case of bed size, hospitals with fewer than 300 beds scored statistically significantly higher on the CIT and automation scores (p < 0.05) than hospitals with larger bed size; the difference in usability scores did not reach significance (p = 0.06). However, there were only two very small hospitals (75–100 beds); the higher IT scores for hospitals with fewer than 300 beds were due to several hospitals between 200–300 beds that scored very highly on the instrument.

Table 1. Characteristics of Study Hospitals

| Hospital Characteristic | N (%) | CIT: Mean (SD) | CIT: p | Automation: Mean (SD) | Automation: p | Usability: Mean (SD) | Usability: p |
|---|---|---|---|---|---|---|---|
| Teaching Status | | | | | | | |
| Non-Teaching | 8 (42%) | 48 (16) | .11 | 41 (24) | .34 | 55 (14) | <.05 |
| Teaching | 11 (58%) | 37 (11) | | 32 (13) | | 42 (11) | |
| Rural Status | | | | | | | |
| Urban | 17 (89%) | 43 (15) | .52 | 37 (19) | .54 | 48 (14) | .61 |
| Rural | 2 (11%) | 35 (6) | | 28 (14) | | 43 (2.2) | |
| Bed size | | | | | | | |
| Bed size <300 | 8 (42%) | 50 (17) | <.05 | 46 (24) | <.05 | 55 (13) | .06 |
| Bed size ≥300 | 11 (58%) | 36 (9) | | 29 (10) | | 43 (13) | |

Association between IT Score and CRBSI Rates

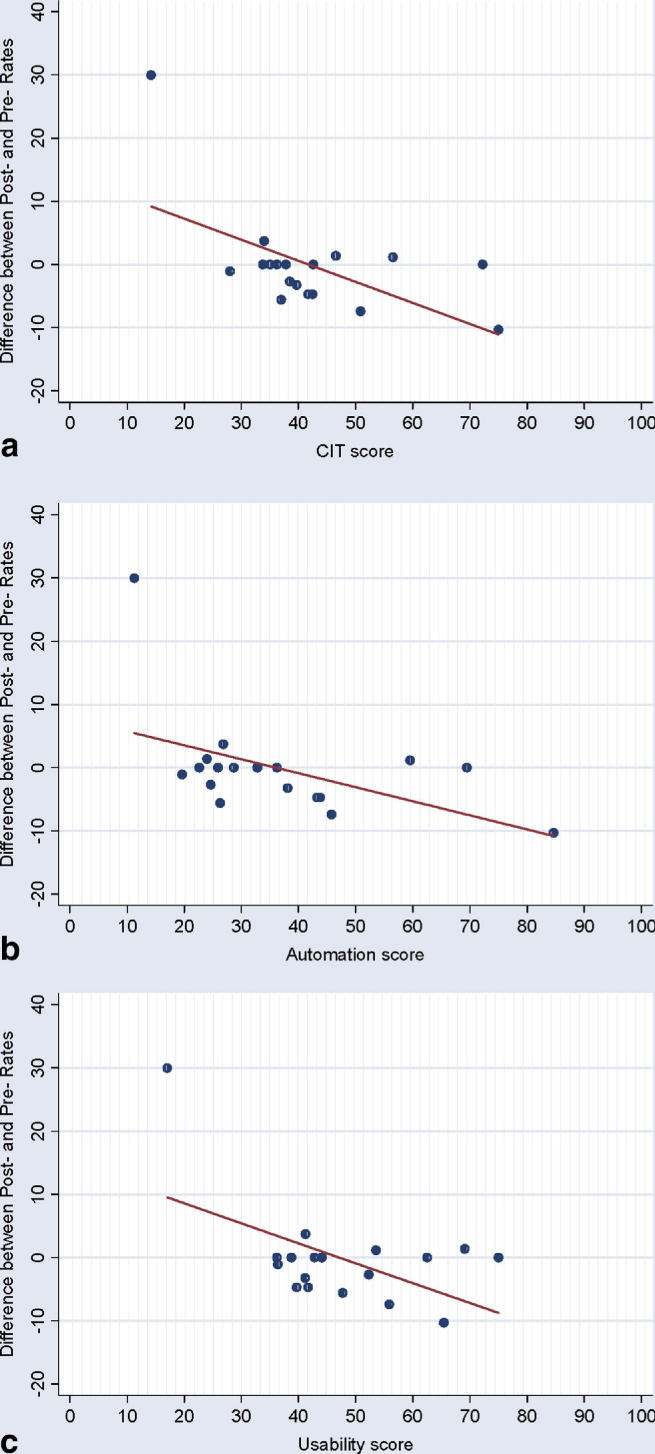

Figure 2 shows the difference between the post-rate (last CRBSI rate collected per 1,000 central line days) and the pre-rate (first CRBSI rate collected per 1,000 central line days) as a function of the director’s IT score for each of the ICUs. The graphs for CIT, automation, and usability all reveal a downward trend, suggesting that with increasing IT score greater reductions in CRBSI are achieved with the quality improvement intervention.

Figure 2.

Relationship between the difference in the post- and pre-CRBSI rate and a) CIT; b) Automation; and c) Usability scores.

In the bi-variate linear regression analysis we found that for each IT category, an increase in the IT score was associated with a corresponding decrease in the post-intervention CRBSI rate (Table 2). However, only in the cases of CIT (p = 0.05), usability (p = 0.03), and effectiveness (p = 0.05) did the results reach the threshold for statistical significance. In multi-variate analysis examining the relationship between the IT score and the post-intervention rate after adjusting for the pre-intervention rate, the magnitude of change was not appreciably altered; however, several IT variables (CIT, automation, usability, and test results) became statistically significant. Processes (p = 0.06) and effectiveness (p = 0.07) also approached statistical significance. In separate models we also examined the relationship between bed size (p = 0.14), urban/rural status (p = 0.71), and teaching status (p = 0.93) and the post-intervention rate. None of these were found to be statistically significantly associated with the post-intervention rate even after adjustment for the pre-intervention rate.

Table 2. Association between IT Scores and Rate of Post-intervention Catheter-related Bloodstream Infections (CRBSI) per 1,000 Central Line Days ∗

| Independent Variable | Unadjusted: Change in Post-intervention Rate of CRBSI (95% CI)† | Unadjusted: P | Adjusted†: Change in Post-intervention Rate of CRBSI (95% CI)‡ | Adjusted†: P |
|---|---|---|---|---|
| IT scores | | | | |
| CIT | −0.42 (−0.82, −0.01) | 0.05 | −0.46 (−0.80, −0.10) | 0.02 |
| Automation | −0.23 (−0.57, 0.10) | 0.16 | −0.31 (−0.60, −0.02) | 0.04 |
| Usability | −0.47 (−8.8, −0.6) | 0.03 | −0.42 (−0.79, −0.04) | 0.03 |
| Automation subdomains | | | | |
| Test Results | −0.23 (−0.57, 0.11) | 0.17 | −0.30 (−0.60, −0.01) | 0.04 |
| Notes & Records | −0.08 (−0.46, 0.30) | 0.35 | −0.14 (−0.48, 0.20) | 0.39 |
| Order Entry | −0.12 (−0.27, 0.08) | 0.13 | −0.12 (−0.27, 0.04) | 0.13 |
| Processes | −0.21 (−0.54, 0.12) | 0.20 | −0.27 (−0.56, 0.01) | 0.06 |
| Usability subdomains | | | | |
| Effectiveness | −0.44 (−0.87, −0.01) | 0.05 | −0.37 (−0.78, 0.04) | 0.07 |
| Ease | −0.16 (−0.38, 0.07) | 0.15 | −0.09 (−0.33, 0.14) | 0.41 |
| Support | −0.17 (−0.41, 0.08) | 0.17 | −0.19 (−0.41, 0.03) | 0.08 |
| Hospital characteristics | | | | |
| Bed size | 3.2 (−0.97, 7.4) | 0.12 | 2.8 (−1.1, 6.7) | 0.14 |
| Rural location | −4.0 (−32, 24) | 0.77 | −4.7 (−30, 21) | 0.71 |
| Teaching status | 5.2 (−7.9, 18.3) | 0.84 | 5.2 (−6.8, 17) | 0.93 |

∗ One ICU reported data for one phase of the intervention only. Since a change in CRBSI rate could not be calculated for this ICU over the study intervention, it was removed for this analysis leaving 18 hospitals.

† Multi-variate model adjusted for the pre-intervention rate.

‡ Change in Post-rate (per 10,000 central line days) after a 1 year exposure to the study.

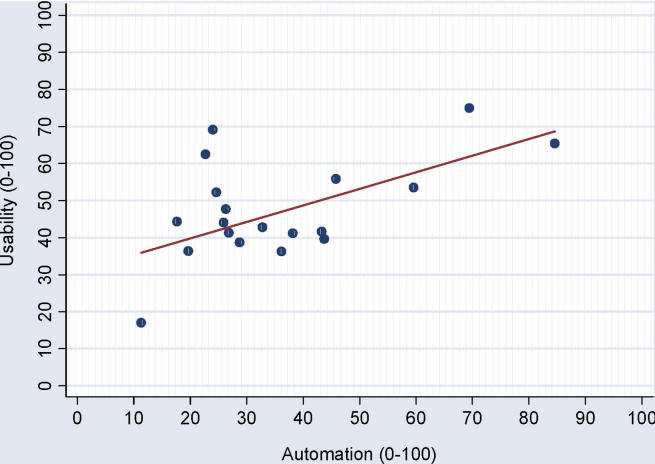

Association between Usability and Automation

Among the sample of 19 ICUs, the usability score appears to positively correlate with the automation score (Figure 3); in a linear regression model, for every 10 point increase in automation, usability increases by 4 points (β = 0.41; p = 0.01). In contrast, teaching status, rural status, and bed size were not statistically significantly associated with the automation score.

Figure 3.

Relationship between Usability and Automation (β = 0.41; p = 0.01).

Relation between Physician Director and Staff Scores

Of the 19 ICUs for which we had director scores, we were able to obtain at least one independent physician staff score at 13 of the ICUs. The number of staff physician responses at each of these 13 ICUs ranged from one physician to 36 physicians. The correlation (r) between the mean staff physician scores and the director score for varying sets of ICUs ranged from 0.40 (when all 13 ICUs were included, including those hospitals for which we had only one physician staff response) to 1.0 (when only the two hospitals with at least 34 physician staff responses were analyzed). The correlation ranges from 0.65 to 1.0 when at least six physician staff responses are available for an ICU (Table 3).

Table 3. Correlation between Mean Physician and Director Score

| Minimum # of Physician Responses per ICU | No. of Corresponding ICUs | Correlation (r) |
|---|---|---|
| 1 | 13 | 0.40 |
| 2 | 9 | 0.27 |
| 3 | 7 | 0.27 |
| 6 | 5 | 0.81 |
| 7 | 4 | 0.86 |
| 12 | 3 | 0.65 |
| 34 | 2 | 1 |
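
The comparison summarized in Table 3 can be sketched as a Pearson correlation between the director score and the mean staff score, restricted to ICUs with a minimum number of staff responses. The data structure, function names, and scores below are hypothetical and are not the study data.

```python
from math import sqrt
from statistics import mean
from typing import Dict, List, Sequence, Tuple


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length samples."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def director_staff_correlation(icus: List[Dict],
                               min_responses: int) -> Tuple[int, float]:
    """Correlate director scores with mean staff scores for eligible ICUs."""
    eligible = [icu for icu in icus if len(icu["staff_scores"]) >= min_responses]
    directors = [icu["director_score"] for icu in eligible]
    staff_means = [mean(icu["staff_scores"]) for icu in eligible]
    return len(eligible), pearson_r(directors, staff_means)


# Hypothetical ICUs with differing numbers of staff responses.
example_icus = [
    {"director_score": 40, "staff_scores": [38, 42]},
    {"director_score": 50, "staff_scores": [45, 55, 50]},
    {"director_score": 60, "staff_scores": [70]},
]
n_all, r_all = director_staff_correlation(example_icus, min_responses=1)
n_two, r_two = director_staff_correlation(example_icus, min_responses=2)
print(n_all, round(r_all, 2), n_two, round(r_two, 2))
```

Note that when only two ICUs remain, as in the last row of Table 3, Pearson's r is necessarily +1 or −1 whenever both scores differ between the two ICUs, so that value carries little information on its own.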

Discussion

The Clinical Information Technology Assessment Tool for the ICU (CITAT-ICU) retains features of an earlier instrument for the general inpatient ward that was previously shown to be valid and reliable. 5 In this site-specific study, we modified the instrument to reflect the ways that normal hospital information processes might differ in the ICU setting. To examine the instrument’s potential application among a cohort of ICUs, we considered how scores on the CITAT-ICU correlated with the ability to reduce catheter-related bloodstream infections in 19 ICUs participating in a state-wide quality improvement program. Our results suggest that higher scores on several information technology domains (CIT, automation, usability, and test results) are associated with lower post-intervention rates of CRBSI. These results are significant after accounting for the baseline or pre-intervention rate. In addition, scores on both the processes and effectiveness sub-domains approached significance. In contrast, bed size, rural status, and teaching status do not appear to be associated with the post-intervention rate of CRBSI, even after adjusting for the pre-intervention rate.

These results are meaningful. Catheter-related bloodstream infections (CRBSI) are costly and can be fatal. 8,9 Each year within the United States, central venous catheters cause an estimated 80,000 CRBSIs and up to 28,000 deaths in intensive care units (ICUs). Average potential cost per CRBSI is $45,000. 10 According to our findings, an ICU with a 10 point higher CIT score is associated with 4.6 fewer central line infections per 1,000 central line days compared to an ICU with a lower IT score that implements the same evidence based intervention. Several potential explanations exist for these positive findings. Catheter-related bloodstream infections are the result of multiple factors including a system’s organization and structural environment. Providers equipped with systems that can more easily retrieve test results, provide ubiquitous access to clinical information, and employ order sets that reduce variations in clinical care may be more likely to deliver higher quality of care. Highly automated, carefully designed information systems may allow ICU teams to focus on truly clinical tasks by reducing paperwork, enhancing patient monitoring, and simplifying data extraction. In the case of central line placement, efficiencies created by a powerful information system may allow physicians and nurses to better comply with effective, but potentially time consuming, interventions such as those introduced in this study. Such steps include performing the central line insertion using checklists and enabling more team members, such as nurses, to participate. An electronic medical record, or complex decision support system, may prompt daily consideration of central line removal. In the future, clinical information systems might incorporate other key data elements about central lines, and provide automatic tracking, with warnings if certain signals appear (e.g., fever and tachycardia in the presence of a catheter that has been in place for an extended period).

Most quality improvement efforts are data intensive. Interventions need to be accompanied by tenaciously collected baseline and follow-up data. Powerful information systems may reduce the burden of data collection, freeing quality improvement teams to focus on efforts to change provider behavior, re-engineer processes, champion interventions, and sustain gains. Allowing staff to concentrate on the “human” aspects of quality improvement may be a significant benefit of well designed clinical information systems and may explain some of our findings.

The CITAT was designed so that automation and usability would represent, as much as possible, two separate constructs. This distinction was confirmed through previous factor analyses. 5 This separation allows us to evaluate whether increasing automation is associated with greater usability. In this study we found that hospitals with higher automation scores also tend to have higher usability scores, suggesting that the conversion of paper-based systems to well-designed electronic systems does improve physician director perceptions of their system’s effectiveness and ease of use.

Survey-based assessment tools often suffer from low response rates, particularly among physicians. We therefore reduced the instrument to its smallest possible size by prioritizing items for selection according to their overall relevance to the ICU and their potential impact on clinical outcomes and efficiencies. We also sought to create an instrument that could be administered to the medical director alone. To test the reliability of this approach, we administered the assessment tool to a varying number of physician staff at all 19 of the participating ICUs. Thirteen hospitals (68% of the sample) had at least one additional staff physician complete the survey. We found that when the sample was restricted to ICUs with at least six staff physicians completing the survey, the correlation coefficient between the mean physician scores and the director score was at least 0.65. This suggests that the instrument reaches a relatively high degree of reliability with a relatively low number of respondents and that a single medical director’s score may be an efficient and valid way to score an ICU’s information systems. This finding will need to be replicated in further studies.

Our study has important limitations. First, the 19 ICUs in this study represent a convenience sample of the ICUs that participated in the larger quality improvement study in Michigan. It is possible that the ICUs that were willing to participate might respond to the CITAT-ICU in a systematically different way than those that did not participate, producing a selection bias. In addition, because no other instruments exist with which to measure an ICU information system’s performance, we did not have an independent method to corroborate the validity of using responses from a single medical director. However, at a sub-sample of hospitals, independent physician staff scores appear to correlate with the director scores, lending evidence of inter-rater reliability.

We examined whether bed size, teaching status, and rural location were associated with both the post-intervention rates and with the IT score. These hospital characteristics do not appear to be confounders. However, it is possible that other unmeasured organizational factors (e.g., financial strength of the organization) could play a role in the relationship we observed.

Conclusion

Many examinations of critical care information systems are single site evaluations with limited explanatory power. This study presents a new instrument that can be used to quantitatively evaluate ICU information systems across a broad cross-section of hospitals. Additionally, we provide evidence that higher IT scores as measured on the CITAT-ICU are associated with greater reductions in the rate of catheter related bloodstream infections among hospital ICUs that apply the same quality improvement intervention. Although further testing is needed, this instrument could have important applications in health services research, quality improvement, and clinical IT design in the ICU setting.

Footnotes

The authors thank the Robert Wood Johnson Clinical Scholars Program and the Agency for Healthcare Research and Quality (1UC1HS14246) for their support of this work.

References

- 1. Bates DW, Cohen M, Leape LL, Overhage JM, Shabot MM, Sheridan T. Reducing the frequency of errors in medicine using information technology. J Am Med Inform Assoc. 2001;8(4):299–308.

- 2. Bates DW, Gawande AA. Improving safety with information technology. N Engl J Med. 2003;348(25):2526–2534.

- 3. Cordero L, Kuehn L, Kumar RR, Mekhjian HS. Impact of computerized physician order entry on clinical practice in a newborn intensive care unit. J Perinatol. 2004;24(2):88–93.

- 4. Wong DH, Gallegos Y, Weinger MB, Clack S, Slagle J, Anderson CT. Changes in intensive care unit nurse task activity after installation of a third-generation intensive care unit information system. Crit Care Med. 2003;31(10):2488–2494.

- 5. Amarasingham R, Diener-West M, Weiner M, Lehmann H, Herbers JE, Powe NR. Clinical information technology capabilities in four U.S. hospitals: testing a new structural performance measure. Med Care. 2006;44(3):216–224.

- 6. Parasuraman R, Sheridan TB, Wickens CD. A model for types and levels of human interaction with automation. IEEE Transactions on Systems, Man, and Cybernetics—Part A: Systems and Humans. 2000;30(3):286–297.

- 7. Pronovost P, Needham D, Berenholtz S, Sinopoli D, Chu H, Cosgrove S, et al. An intervention to decrease catheter-related bloodstream infections in the ICU. N Engl J Med. 2006;355(26):2725–2732.

- 8. Mermel LA. Prevention of intravascular catheter-related infections. Ann Intern Med. 2000;132(5):391–402.

- 9. Burke JP. Infection control—a problem for patient safety. N Engl J Med. 2003;348(7):651–656.

- 10. O’Grady NP, Alexander M, Dellinger EP, Gerberding JL, Heard SO, Maki DG, et al. Guidelines for the prevention of intravascular catheter-related infections. Am J Infect Control. 2002;30(8):476–489.