Abstract

Objective

To describe the characteristics of unanswered clinical questions and propose interventions that could improve the chance of finding answers.

Design

In a previous study, investigators observed primary care physicians in their offices and recorded questions that arose during patient care. Questions that were pursued by the physician, but remained unanswered, were grouped into generic types. In the present study, investigators attempted to answer these questions and developed recommendations aimed at improving the success rate of finding answers.

Measurements

Frequency of unanswered question types and recommendations to increase the chance of finding answers.

Results

In an earlier study, 48 physicians asked 1062 questions during 192 half-day office observations. Physicians could not find answers to 237 (41%) of the 585 questions they pursued. The present study grouped the unanswered questions into 19 generic types. Three types accounted for 128 (54%) of the unanswered questions: (1) “Undiagnosed finding” questions asked about the management of abnormal clinical findings, such as symptoms, signs, and test results (What is the approach to finding X?); (2) “Conditional” questions contained qualifying conditions that were appended to otherwise simple questions (What is the management of X, given Y? where “given Y” is the qualifying condition that makes the question difficult.); and (3) “Compound” questions asked about the association between two highly specific elements (Can X cause Y?). The study identified strategies to improve clinical information retrieval, listed below.

Conclusion

To improve the chance of finding answers, physicians should change their search strategies by rephrasing their questions and searching more clinically oriented resources. Authors of clinical information resources should anticipate questions that may arise in practice, and clinical information systems should provide clearer and more explicit answers.

Introduction

Physicians cannot readily find answers to many of their questions about patient care. 1–9 Most questions that arise in practice go unanswered, either because the physician does not search for an answer, or because an answer is difficult to find. 3,6,9–13 Unanswered questions can lead to failings in quality of care, patient safety, and cost-effectiveness. 4,8,14–23

One reason for failing to find an answer may be that the question is poorly phrased. Physicians have been advised to phrase their questions to match the evidence from randomized clinical trials. 24–29 For example, rather than asking “When should patients with infectious mononucleosis return to sports?” one approach advises physicians to use the “PICO” format: “In children with infectious mononucleosis (Patient population) does sports participation during the 6-week period following the onset of symptoms (Intervention) compared with no sports participation (Comparison) raise the risk of splenic rupture (Outcome)?” An answer to a PICO question is more likely to be evidence-based. But, as in this question about infectious mononucleosis, it may not answer the physician’s original question.

Bergus and colleagues found that specialists providing an email consultation service more often answered questions that explicitly included an intervention and outcome in the query than questions lacking those elements. 28 In contrast, Cheng found that most questions posed by hospital clinicians were not “well-built” (e.g., using the PICO formulation) and that well-built questions were not more likely to be answered than poorly built questions. 7 In a study of 200 questions asked by family physicians, only 71 (36%) could be recast as PICO questions. 30 In a study of Oregon physicians, only 46% of questions arising in practice could be answered using the medical literature. 12

Many factors besides the phrasing of the question could contribute to the physician’s inability to find an answer. For example, the literature searching skill of the physician, the resources available, and the time available could all affect the likelihood of finding an answer. 9,31 In a previous study, physicians cited lack of time and doubt about the existence of relevant information as common reasons for not pursuing answers to their questions. 9 Once pursued, the most common reason for not finding an answer was the absence of relevant information in the selected resource. 9

This study focused on the nature of the questions themselves. Our goals were to understand why some questions go unanswered and to develop recommendations to improve the likelihood of finding answers. Finding good answers to questions that arise in practice is important because they occur commonly, 2–4,9,10,13,32,33 and the answers affect patient care decisions. 4,8,14–22 If certain kinds of questions are routinely not answered well in the print and electronic literature, authors could learn to anticipate and effectively answer these questions. Likewise, it would be useful to offer practical guidance for physicians on locating answers when they are readily available but missed.

Methods

Subjects

The unanswered questions in this study were selected from the authors’ previous study of 1062 questions asked by primary care physicians during the course of patient care. 9 In that study, we recruited family physicians, general internists, and general pediatricians from the eastern third of Iowa. This geographic area includes rural towns and small cities. To identify physicians, the previous study used a database of practicing physicians, which is maintained by the University of Iowa. In the earlier study, we assigned a random number to each physician, ordered the list by this random number, divided physicians according to specialty (general internal medicine, general pediatrics, and family medicine), and invited physicians from each specialty in random order. In addition to the random sample, we invited ten minority physicians to improve the generalizability of our findings. In the previous study, we stopped inviting after 48 physicians agreed to participate (16 general internists, 17 general pediatricians, and 15 family physicians). This sample size had been based on our estimate of the number needed to collect a large number of questions (1000) and on the funding available.
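The random invitation procedure described above can be sketched in Python. This is a minimal illustration under stated assumptions, not the authors' actual procedure: the function name `invitation_order`, the roster format, the seed, and the physician names are all hypothetical, and the sketch does not model the additional invitation of minority physicians or the stopping rule of 48 participants.

```python
import random
from collections import defaultdict


def invitation_order(physicians, seed=0):
    """Return, per specialty, the physicians in random invitation order.

    `physicians` is a list of (name, specialty) tuples (hypothetical format).
    """
    rng = random.Random(seed)
    # Assign a random number to each physician.
    keyed = [(rng.random(), physician) for physician in physicians]
    # Order the whole list by that random number.
    keyed.sort(key=lambda pair: pair[0])
    # Divide physicians by specialty, preserving the random order.
    by_specialty = defaultdict(list)
    for _, (name, specialty) in keyed:
        by_specialty[specialty].append(name)
    return dict(by_specialty)


# Hypothetical roster for illustration only.
roster = [
    ("A", "family medicine"),
    ("B", "general pediatrics"),
    ("C", "family medicine"),
    ("D", "general internal medicine"),
    ("E", "general pediatrics"),
]
order = invitation_order(roster, seed=1)
```

Each specialty list in `order` would then be worked through from the top until enough physicians agreed to participate.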

Data Collection Procedures

The questions from the previous study were collected between July 31, 2001 and August 19, 2003. During that study, one of us (JE) visited each physician for four half-day observation periods, during which he stood in the hallway outside the clinic exam rooms. Between patient visits, the physician reported any questions that arose related to the care of the patient. The observer recorded the verbatim question along with notes that described attempts to answer it. The data collection methods were further described elsewhere. 9 The present study represents an additional analysis of those data.

From the total of 1062 questions, we limited the analysis in this study to questions that physicians pursued but failed to answer (see Appendix 1 for the derivation of the number of unanswered questions in the present study). A question was defined as “pursued” if the physician searched for an answer at any time during the observation period using one or more print or electronic resources. A question was defined as “easily answered” if the needed information was readily found in the first resource consulted. A question was defined as “not answered” if the information found did not address the question or did not adequately answer it as judged by the physician. We excluded questions when the only resource consulted was a human (e.g., a phone call to a specialist) because our goal was to improve the answering process when applied to generally available print and electronic resources. We also excluded unanswered questions when the search for an answer was prematurely and permanently interrupted (e.g., nurse told the physician to hurry because patients were waiting).

Data Analysis

We used a qualitative analysis technique known as the “constant comparison method” 34 to identify recurring types of questions whose format or content might explain why they went unanswered. Using this technique, one of us (JE) developed an initial taxonomy of question types by reading unanswered questions in random order, identifying recurring types, and writing definitions for each type. As each question was read, it was “constantly compared” to existing types to determine whether a new type was needed or an existing type could accommodate it, possibly with a modification of the definition. Working independently, two other investigators (JO, SM) used a random sample of 50 questions to develop their own versions of the taxonomy. JE synthesized the three independent efforts and sent the revised taxonomy along with 3 to 4 examples of each question type back to JO and SM, who were asked, “Would you agree this is an appropriate definition for this type of question?” and “Does the definition adequately describe what you see in the question?” Comments from the investigators were used to revise the taxonomy. An iterative process was used, in which the taxonomy was revised by JE, reviewed by JO and SM, revised again, reviewed again, and so on until a final taxonomy was developed. A similar method was used to classify easily answered questions. At this stage, the taxonomy of unanswered questions consisted of 13 question types.

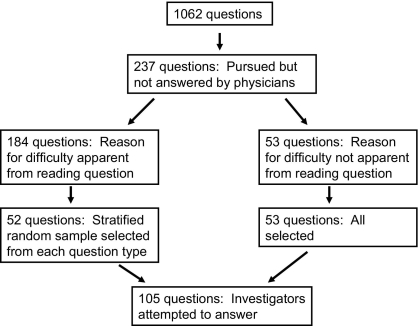

One of the 13 types was designated “reason for difficulty not apparent,” and it included 53 questions. Many of the questions in this category appeared similar to easily answered questions. To better understand why these “reason-not-apparent” questions went unanswered, we attempted to answer them using 10 preselected resources: UpToDate.com (available at www.uptodate.com), Thomson Healthcare, Micromedex (available at www.thomsonhealthcare.com), MEDLINE (available at www.ncbi.nlm.nih.gov), National Guideline Clearinghouse (available at www.guideline.gov), Harrison’s Principles of Internal Medicine, 35 Nelson Textbook of Pediatrics, 36 The Sanford Guide to Antimicrobial Therapy, 37 Red Book: 2003 Report of the Committee on Infectious Diseases, 38 eMedicine (available at www.emedicine.com), and Google (available at www.google.com). These resources were selected by the investigators because they are clinically oriented, generally available, and commonly used in practice. 9 Each resource was searched for a maximum of 10 minutes, and the searcher recorded the time spent for each resource. In addition to the 53 unclassified questions, we searched for answers to a stratified random sample of up to 5 questions from each of the remaining 12 question types. In total, we attempted to answer 105 questions (Figure 1). We attempted to answer all reason-for-difficulty-not-apparent questions because they comprised a heterogeneous group, and we hoped the search would shed light on why they were difficult. In contrast, the other questions fell into homogeneous groups and the reason for difficulty seemed apparent just from reading the question. Therefore, we answered a random sample from each of these groups.

Figure 1.

Selection of Questions for Answering Attempts by Investigators.
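The selection rule (every “reason for difficulty not apparent” question, plus a random sample of up to 5 questions from each other type) can be sketched as follows. This is an illustrative sketch only: the function name `select_for_answering`, its parameters, and the toy question identifiers are assumptions introduced here and do not come from the study data.

```python
import random


def select_for_answering(questions_by_type,
                         take_all="reason for difficulty not apparent",
                         per_type=5,
                         seed=0):
    """Pick all questions of one type and up to `per_type` from each other type."""
    rng = random.Random(seed)
    selected = []
    for question_type, questions in questions_by_type.items():
        if question_type == take_all:
            # The heterogeneous group is searched in full.
            selected.extend(questions)
        else:
            # Homogeneous groups contribute a random sample of at most per_type.
            sample_size = min(per_type, len(questions))
            selected.extend(rng.sample(questions, sample_size))
    return selected


# Toy identifiers for illustration only (not the study's questions).
toy_questions = {
    "reason for difficulty not apparent": [f"r{i}" for i in range(7)],
    "type A": [f"a{i}" for i in range(8)],
    "type B": [f"b{i}" for i in range(3)],
}
chosen = select_for_answering(toy_questions, seed=42)
```

With the toy input, the result contains all 7 questions of the first type, 5 of the 8 “type A” questions, and all 3 “type B” questions, because a type with fewer than 5 questions is taken whole.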

Search terms were taken from the text of the question for all resources except MEDLINE, where MeSH terms were used. The most helpful search terms often became apparent only after becoming familiar with the literature on the topic.

For each resource, we determined whether the question was completely answered, partially answered, or not answered at all. These judgments were made by one of the investigators (JE), who performed all of the searches after three authors (JE, JO, SM) pilot tested the process. An answer was considered “complete” if it was adequate to direct patient care without the need for additional information. Based on our attempts to answer questions, we modified the existing taxonomy of unanswered questions by changing definitions and adding new question types. For example, “Is alpha streptococcus on blood culture likely to be a pathogen in a 17-day-old?” was originally classified as “reason for difficulty not apparent.” After searching for answers to questions like this one, we discovered that clinical resources do well answering questions about diseases, like neonatal sepsis, but not as well answering questions about non-disease entities, like blood culture contaminants. We labeled this category “Misalignment Between Question and Resource Format.” We were able to reclassify all but 7 of the 53 “reason-for-difficulty-not-apparent” questions, using the modified taxonomy.

(Note: We use the term “author” throughout, in place of the more accurate but unwieldy “clinical information resource developer.”)

During our attempts to answer questions, we took notes on what the physician could have done to find a better answer and what the author could have done to provide a better answer. These notes were organized into their own taxonomies of recommendations for physicians and recommendations for authors. The investigators completed an iterative process using email and conference calls to label, describe, and exemplify each recommendation. The recommendations for physicians and authors were developed independently of the question type taxonomy. To help avoid constraints on the recommendations, we did not attempt to develop one-to-one relationships between specific question types and specific recommendations that addressed them. The question types provided an implicit background for the recommendations, but the recommendations arose primarily from our attempts to answer questions rather than our attempts to classify them.

We used descriptive statistics and one-way ANOVA to summarize the time spent searching for answers. The significance level was set at .05. We used Stata (Stata Corp., College Station, TX) for all statistical analyses.
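For readers unfamiliar with one-way ANOVA, the F statistic compares the variation between group means with the variation within groups. The sketch below computes it in plain Python on toy numbers. It is not the authors' analysis, which was run in Stata on the recorded search times; the function name `one_way_anova_f` and the toy values are assumptions made for illustration.

```python
def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of numeric groups."""
    all_values = [value for group in groups for value in group]
    grand_mean = sum(all_values) / len(all_values)
    group_means = [sum(group) / len(group) for group in groups]

    # Between-group sum of squares: group size times squared distance
    # of each group mean from the grand mean.
    ss_between = sum(
        len(group) * (mean - grand_mean) ** 2
        for group, mean in zip(groups, group_means)
    )
    # Within-group sum of squares: squared distance of each value
    # from its own group mean.
    ss_within = sum(
        (value - mean) ** 2
        for group, mean in zip(groups, group_means)
        for value in group
    )

    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Toy values only; three groups stand in for the three answer outcomes.
toy_groups = [[1, 2, 3], [2, 3, 4], [3, 4, 5]]
f_stat, df_between, df_within = one_way_anova_f(toy_groups)
```

A large F relative to the F distribution with the returned degrees of freedom indicates that the group means differ more than within-group scatter would explain.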

Our methods resulted in three work products: a taxonomy of unanswered questions, a list of recommendations for physicians, and a list of recommendations for authors. The study was approved by the University of Iowa Human Subjects Office.

Results

Unanswered Questions

Of the 56 physicians invited, 48 (86%) agreed to participate in the previous study. In that earlier study, each physician was observed for approximately 16 hours (4 visits per physician with 4 hours per visit), resulting in a total of 768 hours of observation time. Physicians did not pursue answers to 477 (45%) of the 1062 questions, usually because they doubted that they could find a good answer quickly. 9 Of the remaining 585 questions that were pursued, physicians were unable to find answers to 237 (41%) in print and electronic resources. 9

In the present study, after searching for answers, the investigators found “complete” answers to 74 (70%) of 105 questions in at least one of the 10 resources. The investigators may have been more successful in finding answers than the previous study physicians because the investigators completed a leisurely search of 10 resources, whereas the physicians had only a minute or two between patients. One example of a question that none of the 10 resources answered was “If adults smoke in the house, but not when the child is visiting, is that associated with asthma?”

With 105 questions and 10 resources per question, there were 1050 question/resource pairs that could have yielded an answer. However, the current study did not seek answers from resources deemed inappropriate for the question (e.g., Harrison’s Principles of Internal Medicine was not searched for pediatric questions). This left 818 appropriate question/resource pairs. For 224 of these pairs (27%), the current study found a complete answer; for 204 (25%), a partial answer was found; and, for 390 (48%), no answer was found. The average time spent searching a single resource for a given question was 3.8 minutes. An average of 3.2 minutes was required to find a complete answer, 5.0 minutes to determine that only a partial answer was available, and 3.5 minutes to determine that no answer was available in the resource (F = 34.8, P < 0.001).
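The counts and times reported above can be cross-checked with a few lines of Python. The sketch uses only the figures given in the text; the variable names are introduced here for illustration. Because the per-outcome times are themselves rounded, the weighted mean is only an approximate reconstruction of the overall average.

```python
# (number of question/resource pairs, mean search minutes) per outcome,
# taken from the figures reported in the text.
pairs = {
    "complete": (224, 3.2),
    "partial": (204, 5.0),
    "none": (390, 3.5),
}

# Total appropriate question/resource pairs.
total_pairs = sum(count for count, _ in pairs.values())

# Share of pairs per outcome, rounded to whole percentages.
shares = {
    outcome: round(100 * count / total_pairs)
    for outcome, (count, _) in pairs.items()
}

# Overall mean search time as a count-weighted mean of the outcome means.
weighted_mean_minutes = (
    sum(count * minutes for count, minutes in pairs.values()) / total_pairs
)
```

The three outcome counts sum to the 818 appropriate pairs, the rounded shares match the reported 27%, 25%, and 48%, and the weighted mean rounds to the reported 3.8 minutes.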

Unanswered Questions Taxonomy

The initial taxonomy of unanswered questions consisted of 13 types. After adding new types based on our attempts to answer questions, the final taxonomy consisted of 19 types (see Table 1, available as a JAMIA online data supplement at www.jamia.org). The three most common types accounted for 128 (54%) of the questions: (1) “Undiagnosed finding” questions asked about the management of abnormal clinical findings, such as symptoms, signs, and test results (What is the approach to finding X? (n = 45 questions)); (2) “Conditional” questions contained qualifying conditions that were appended to otherwise simple questions (What is the management of X, given Y? where “given Y” is the qualifying condition that makes the question difficult. (n = 45)); and (3) “Compound” questions asked about the association between two highly specific elements (Can X cause Y? (n = 38)).

The difference between conditional questions and compound questions is subtle but distinct: Compound questions can be divided into two questions, either of which is easier to answer than the original, whereas conditional questions cannot be split this way. For example:

Compound: Can cocaine precipitate a sickle cell crisis? (difficult to answer)

Simple: What are the adverse effects of cocaine? (easy to answer)

Simple: What can precipitate a sickle cell crisis? (easy to answer)

Conditional: What are the screening guidelines for breast cancer when the patient has a family history of breast cancer? (difficult to answer)

Unconditional: What are the screening guidelines for breast cancer? (easy to answer)

Recommendations for Physicians

The current study identified seven recommendations for physicians (“What could the physician do to get a better answer?”) that fell into 3 main categories (see Table 2, available as an online JAMIA data supplement at www.jamia.org): (1) Select the most appropriate resource. For example, search clinical handbooks and clinically oriented reviews rather than comprehensive textbooks that attempt to meet the needs of basic scientists and medical students as well as clinicians. (2) Rephrase the question to match the type of information in clinical resources. For example, do not ask “Should a woman with previous benign breast biopsies be on tamoxifen for prophylaxis?” Instead ask “Should a woman with fibrocystic disease be on tamoxifen for prophylaxis?” or, after becoming familiar with the literature, “At what Gail model risk level should prophylactic tamoxifen be prescribed?” (3) Use more effective search terms. For example, do not ask “What is the treatment for an elevated very-low-density-lipoprotein cholesterol?” Instead ask “What is the treatment for an elevated triglyceride level?” because current guidelines use “triglycerides” as the preferred term. 39 For 77 (73%) of the 105 questions, we had no recommendations for the physician because the question seemed adequate as stated, and it appeared that clinically oriented resources should have included the answer.

Recommendations for Authors

The 33 recommendations for authors fell into two main categories (see Table 3, available as an online data supplement at www.jamia.org). First, authors should be explicit. For example, “What are the recommendations for household contacts of hepatitis B carriers?”

Explicit answer: “Household contacts, especially sexual contacts of individuals with chronic HBV [hepatitis B virus] infection, should be tested and those who are seronegative, should be vaccinated.”40

Less explicit answer: “For unvaccinated persons sustaining an exposure to HBV, postexposure prophylaxis with a combination of HBIG [hepatitis B immune globulin] … and hepatitis B vaccine … is recommended.”35 (In this answer, the nature of the exposure is not specified and “household contacts” are not explicitly addressed.)

Second, authors should provide practical, actionable, clinically oriented information. For example, “Should a 13-year-old, premenarchal girl be referred or observed if she has a 22-degree dorsal scoliosis?”

Clinically oriented answer: “For curves between 20 and 29 degrees and Risser grade 0 to 3, repeat x-ray every 6 months, refer to orthopedist, and brace after 25 degrees; for curves between 20 and 29 degrees and Risser grade 4, repeat x-ray every 6 months, refer to orthopedist, and observe without bracing.”41 (Note: Risser grades, which define the extent of bony fusion of the iliac apophysis, are defined in the article.)41

Non-clinically oriented answer: “Premenarchal girls with curves between 20 and 30 degrees have a significantly higher risk for progression than do girls 2 yr after menarche with similar curves.”36 (This answer discusses the problem but does not explain what should be done.)

The most common recommendation for authors, related to providing clinically oriented information, was to anticipate questions that are likely to arise in practice. Seven of the 19 questions that prompted this recommendation could have been anticipated if authors had covered common variations of straightforward questions. For example, “How do you treat a calf vein thrombosis (since they are not supposed to be as dangerous)?” “What are screening guidelines in the elderly?” “How should premenopausal women with hot flashes be treated?” Authors who write about deep vein thrombosis, screening guidelines, and hot flashes could anticipate these questions by considering logical variations on the underlying straightforward questions.

Easy Questions

The 48 physicians in this study easily answered 166 (28%) of the 585 questions they pursued using the first print or electronic resource they consulted. The two largest categories of easily answered questions were (1) straightforward prescribing questions (n = 53; e.g., “Does amitriptyline come in 50 milligram tablets?”), which were readily answered in prescribing resources, and (2) straightforward questions about diagnosed diseases, as opposed to undiagnosed clinical findings (n = 31; e.g., “What is the treatment for deQuervain’s tenosynovitis?”). A few of the easy questions were similar in structure to unanswered questions. For example, nine easy questions were compound and six asked about undiagnosed findings.

Discussion

Main Findings

In this study, physicians’ unanswered questions were readily grouped into generic types and most questions fit into one of three types: questions about abnormal findings, conditional questions, and compound questions. In our attempts to answer these questions, we identified deficiencies in question phrasing and resource selection that could be addressed by physicians and gaps in knowledge resources that could be addressed by authors. The process of answering a potentially difficult question can be thought of sequentially: initially, the burden is on the physician to optimally phrase the question, and subsequently it is on the author to anticipate and explicitly answer questions that arise in practice.

Link between Question Types and Recommendations

The taxonomy of unanswered questions served as an intermediate step in developing the taxonomy of recommendations, but we did not force one-to-one links between question types and recommendations because our attempts to answer questions seemed to provide a more direct path to the recommendations. In retrospect, however, we found that one or more recommendations could be applied to each question type. For example, authors could address conditional questions better if they anticipate the common conditional factors that are appended to simple questions, such as screening mammogram recommendations for women with a family history of breast cancer. Authors could address questions about clinical findings better if they provide explicit, step-by-step advice on the approach to common undiagnosed findings (symptoms, signs, and abnormal test results).

Physicians might be more likely to answer their compound questions if they split them into their components (What are the adverse effects of cocaine? What are the precipitating events that can lead to a sickle cell crisis?). However, without an explicit statement (Cocaine is not associated with sickle cell crisis), the physician will have doubts about the answer to the original compound question. Such explicit negative statements are rare in the medical literature, and the potential number could be overwhelming (X does not cause sickle cell crisis, Y does not cause sickle cell crisis, …). However, authors should explicitly answer recurring compound questions that commonly arise in practice (e.g., Oral iron does not cause the stool guaiac to turn positive. 42 Asking depressed patients about suicidal thoughts does not increase the likelihood of suicide. 43 ). How can authors anticipate these pertinent negatives, and how can they anticipate other types of unanswered questions? In addition to including clinically knowledgeable providers, the resource development team might benefit from a checklist to help them provide clinically useful and comprehensive information. For example:

∗ When discussing the treatment of a disease, such as heart failure, state how that treatment should be changed when the disease is accompanied by a common comorbid condition, such as renal failure.44

∗ When recommending antibiotic treatment, include the duration of treatment.

Authors could also refer to large public repositories of questions such as the ClinicalQuestions Collection at the National Library of Medicine (http://clinques.nlm.nih.gov/JitSearch.html) to find practice-based questions related to their topics. Authors may be reluctant to answer questions when supporting evidence is lacking or fragmented, 45 but the lack of evidence does not make the question go away. The content of clinical knowledge resources often seems to be based more on the information available than on the information needed. When evidence is lacking, many practicing physicians request information on the usual practices of experts, with this information clearly marked as opinion. 9,46

Other Studies

Previous studies have developed a variety of classification systems for physicians’ questions. 32,47–53 For example, Florance found that literature search requests from practicing physicians could be grouped into four types: prediagnostic assessment, diagnosis, treatment choice, and learning. 50 Allen and colleagues found that physicians’ questions could be grouped into seven categories, such as laboratory, pharmacy, differential diagnosis, and so on. 47 Ely and colleagues analyzed 1396 office-based questions and categorized them into 64 generic types (e.g., What is the drug of choice for condition x? What is the cause of symptom x? and so on). 52 However, we were unable to find previous attempts to classify unanswered questions.

To help improve the likelihood of finding evidence-based answers, physicians have been advised to rephrase their questions to include the “PICO” elements: population, intervention, comparison, and outcome. 24–29 However, most questions that arise in practice are not amenable to such rephrasing, 30 and it is not clear that including the PICO elements increases the likelihood of successfully answering questions. 7

Many recommendations are available to help authors write systematic review articles by rigorously summarizing original research. 54–65 For example, the Cochrane Collaboration provides an extensive handbook that describes this process. 65 However, these guidelines have generally focused on the needs of the author rather than the needs of the clinician. For example, authors of systematic reviews are advised to limit their scope to highly focused questions about the efficacy of single interventions (Does X work to treat Y?), 55,65,66 whereas clinicians have a different perspective (What is the best treatment for Y? or What is the best approach to abnormal finding Y?). 9,46

Limitations

Our findings should be interpreted in light of several limitations. For example, answering strategies that work well for difficult questions may not work well for easy questions, and that possibility was not addressed in this study. Also, we made no attempt to verify the accuracy of the answers or to evaluate the evidence supporting them. In this study, a “complete” answer was not necessarily a “correct” answer. In a previous study, trust in the validity of information was found to be an important factor when physicians were asked to describe the characteristics of ideal information resources. 9 The concept of trust did not arise in this study because the resources were preselected, and we focused more on the existence of information than on its validity.

We attempted to answer only 105 of the 237 unanswered questions, and it is possible that answering all the questions would have led to additional or different recommendations. However, this seems unlikely because the 105 questions were selected randomly from each question type.

We did not address the distinction between unanswered questions and unanswerable questions, meaning questions for which there is no evidence, or even published opinion, to provide an answer. We studied a relatively small number of questions that were asked by physicians in a small geographic area. Thus our findings may not apply to questions from other settings or questions that arise in non-primary care practices.

Conclusion

We found that unanswered primary care questions fall into a relatively small number of generic types. Some of these types were apparent from the questions themselves, whereas others required attempts to answer them before they could be categorized. We found that rephrasing questions was occasionally helpful, but more often, there were important gaps in the information provided by clinical resources. Filling these gaps with evidence-based information, supplemented with opinion and best practices as appropriate, is needed to successfully answer questions that are currently unanswered. Although our findings should be viewed as preliminary, they may be useful to authors who are interested in developing resources that effectively answer important patient-care questions that arise in practice. Innovative methods that automate links between questions and answers could address some of the problems we found. 22,67 Future research that analyzes more unanswered questions from a wider variety of clinicians could further clarify the nature of difficult questions and extend the recommendations proposed in this study.

Appendix 1:

Derivation of number of unanswered questions in present study

In the authors’ 2005 paper, physician-subjects pursued 585 questions. Of those, 238 questions were easily answered, 167 were not answered at all, and 180 were answered “with difficulty.” The 180 questions that were “answered-with-difficulty” included 128 that were incompletely answered, 45 that were completely answered but required more than one resource, and 7 that were completely answered but difficult to find in the first resource consulted. The present study included all 167 previous study questions that were not answered at all, plus the 128 previous study questions that were incompletely answered (167 + 128 = 295). From those 295 previous questions, the current study deleted 12 questions for which data on the physician search was inadequate, 27 for which the only resource consulted was a human, 6 for which the search was prematurely and permanently interrupted (e.g., nurse told the physician to hurry because patients were waiting), 8 for which further inspection of previous study field notes indicated that the question appeared to be adequately answered, 4 that were not related to patient care, and 1 for which the physician did not have access to any appropriate nonhuman resource. This left 237 questions, which was the sample for the current study.
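The derivation above can be verified with simple arithmetic. The sketch below uses only the counts stated in this appendix; the variable names and dictionary labels are shorthand introduced here.

```python
# Counts from the previous study, as stated in the appendix.
easily_answered = 238
not_answered = 167
answered_with_difficulty = {
    "incompletely answered": 128,
    "complete, more than one resource": 45,
    "complete, hard to find in first resource": 7,
}
pursued = (
    easily_answered + not_answered + sum(answered_with_difficulty.values())
)

# Candidate pool for the present study.
candidates = not_answered + answered_with_difficulty["incompletely answered"]

# Questions removed from the candidate pool, with the stated reasons.
exclusions = {
    "inadequate data on physician search": 12,
    "only resource consulted was a human": 27,
    "search prematurely and permanently interrupted": 6,
    "appeared adequately answered on re-inspection": 8,
    "not related to patient care": 4,
    "no access to an appropriate nonhuman resource": 1,
}

final_sample = candidates - sum(exclusions.values())
```

The categories account for all 585 pursued questions, the candidate pool is 295, the exclusions total 58, and the remainder is the 237 questions analyzed in the present study.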

Footnotes

This study was supported by the National Institutes of Health, National Library of Medicine (1R01LM07179-01). This study was presented in abstract form at the Annual Meeting of the North American Primary Care Research Group in Tucson, Arizona, on October 17, 2006. JWE has served as a consultant for Thomson, which publishes topic reviews and related material for clinicians on managing clinical conditions as part of Micromedex. JAO is chief clinical informatics officer of Thomson. SMM has served as a consultant for Thomson and is the developer of KnowledgeLink, which provides context-specific clinical information at the point of care.

References

- 1.Gorman PN, Helfand M. Information seeking in primary care: How physicians choose which clinical questions to pursue and which to leave unanswered. Med Decis Making 1995;15:113-119.

- 2.Barrie AR, Ward AM. Questioning behaviour in general practice: a pragmatic study. BMJ 1997;315:1512-1515.

- 3.Fozi K, Teng CL, Krishnan R, Shajahan Y. A study of clinical questions in primary care. Med J Malaysia 2000;55:486-492.

- 4.Gorman PN. Information needs in primary care: A survey of rural and nonrural primary care physicians. Medinfo 2001;10:338-342.

- 5.Ramos K, Linscheid R, Schafer S. Real-time information-seeking behavior of residency physicians. Fam Med 2003;35:257-260.

- 6.Currie LM, Graham M, Allen M, Bakken S, Patel V, Cimino JJ. Clinical information needs in context: an observational study of clinicians while using a clinical information system. Proc AMIA Symp 2003:190-194.

- 7.Cheng GY. A study of clinical questions posed by hospital clinicians. J Med Libr Assoc 2004;92:445-458.

- 8.Alper BS, White DS, Bin G. Physicians answer more clinical questions and change clinical decisions more often with synthesized evidence: a randomized trial in primary care. Ann Fam Med 2005;3:507-513.

- 9.Ely JW, Osheroff JA, Chambliss ML, Ebell MH, Rosenbaum M. Answering physicians’ clinical questions: obstacles and potential solutions. J Am Med Inform Assoc 2005;12:217-224.

- 10.Covell DG, Uman GC, Manning PR. Information needs in office practice: are they being met? Ann Intern Med 1985;103:596-599.

- 11.Gorman P. Does the medical literature contain the evidence to answer the questions of primary care physicians? Preliminary findings of a study. Proc Annu Symp Comp Appl Med Care 1993:571-575.

- 12.Gorman PN, Ash J, Wykoff L. Can primary care physicians’ questions be answered using the medical journal literature? Bull Med Libr Assoc 1994;82:140-146.

- 13.Green ML, Ciampi MA, Ellis PJ. Residents’ medical information needs in clinic: are they being met? Am J Med 2000;109:218-223.

- 14.Del Mar CB, Silagy CA, Glasziou PP, Weller D, Spinks AB, Bernath V, et al. Feasibility of an evidence-based literature search service for general practitioners. Med J Aust 2001;175:134-137.

- 15.Hayward JA, Wearne SM, Middleton PF, Silagy CA, Weller DP, Doust JA. Providing evidence-based answers to clinical questions. A pilot information service for general practitioners. Med J Aust 1999;171:547-550.

- 16.Lindberg DA, Siegel ER, Rapp BA, Wallingford KT, Wilson SR. Use of MEDLINE by physicians for clinical problem solving. JAMA 1993;269:3124-3129.

- 17.Sackett DL, Straus SE. Finding and applying evidence during clinical rounds: The “evidence cart.” JAMA 1998;280:1336-1338.

- 18.Schilling LM, Steiner JF, Lundahl K, Anderson RJ. Residents’ patient-specific clinical questions: opportunities for evidence-based learning. Acad Med 2005;80:51-56.

- 19.Schwartz K, Northrup J, Israel N, Crowell K, Lauder N, Neale AV. Use of on-line evidence-based resources at the point of care. Fam Med 2003;35:251-256.

- 20.Swinglehurst DA, Pierce M, Fuller JCA. A clinical informaticist to support primary care decision making. Qual Health Care 2001;10:245-249.

- 21.Chambliss ML, Conley J. Answering clinical questions. J Fam Pract 1996;43:140-144.

- 22.Maviglia SM, Yoon CS, Bates DW, Kuperman G. KnowledgeLink: impact of context-sensitive information retrieval on clinicians’ information needs. J Am Med Inform Assoc 2006;13:67-73. Epub 2005 Oct 12.

- 23.Marshall JG. The impact of the hospital library on clinical decision making: the Rochester study. Bull Med Libr Assoc 1992;80:169-178.

- 24.Sackett DL, Richardson WS, Rosenberg W, Haynes RB. Evidence-Based Medicine. How to Practice and Teach EBM. New York: Churchill Livingstone; 1997.

- 25.Allison JJ, Kiefe CI, Weissman NW, Carter J, Centor RM. The art and science of searching MEDLINE to answer clinical questions. Finding the right number of articles. Int J Technol Assess Health Care 1999;15:281-296.

- 26.Armstrong EC. The well-built clinical question: the key to finding the best evidence efficiently. Wis Med J 1999;98:25-28.

- 27.Bergus GR, Emerson M. Family medicine residents do not ask better-formulated clinical questions as they advance in their training. Fam Med 2005;37:486-490.

- 28.Bergus GR, Randall CS, Sinift SD, Rosenthal DM. Does the structure of clinical questions affect the outcome of curbside consultations with specialty colleagues? Arch Fam Med 2000;9:541-547.

- 29.Richardson WS, Wilson MC, Nishikawa J, Hayward RSA. The well-built clinical question: a key to evidence-based decisions (editorial). ACP J Club 1995;123:A12-A13.

- 30.Ely JW, Osheroff JA, Ebell MH, Chambliss ML, Vinson DC, Stevermer JJ, et al. Obstacles to answering doctors’ questions about patient care with evidence: qualitative study. BMJ 2002;324:710-713.

- 31.Ely JW, Levy BT, Hartz A. What clinical information resources are available in family physicians’ offices? J Fam Pract 1999;48:135-139.

- 32.Gorman PN. Information needs of physicians. J Am Soc Inf Sci 1995;46:729-736.

- 33.Ely JW, Osheroff JA, Ebell MH, Bergus GR, Levy BT, Chambliss ML, et al. Analysis of questions asked by family doctors regarding patient care. BMJ 1999;319:358-361.

- 34.Strauss A, Corbin JM. Basics of Qualitative Research: Techniques and Procedures for Developing Grounded Theory. Sage; 1998.

- 35.Kasper DL, Braunwald E, Fauci AS, Hauser SL, Longo DL. Harrison’s Principles of Internal Medicine. 16th ed. New York: McGraw-Hill; 2004.

- 36.Behrman RE, Kliegman RM, Jenson HB. Nelson Textbook of Pediatrics. 17th ed. Philadelphia: Saunders; 2004.

- 37.Gilbert DN, Moellering Jr RC, Eliopoulos GM, Sande MA. The Sanford Guide to Antimicrobial Therapy 2006. 36th ed. Sperryville, VA: Antimicrobial Therapy, Inc; 2006.

- 38.American Academy of Pediatrics, Committee on Infectious Diseases. Red Book: 2003 Report of the Committee on Infectious Diseases. 26th ed. Elk Grove Village, IL: American Academy of Pediatrics; 2003.

- 39.Third Report of the National Cholesterol Education Program (NCEP) Expert Panel on Detection, Evaluation, and Treatment of High Blood Cholesterol in Adults (Adult Treatment Panel III) final report. Circulation 2002;106:3143-3421.

- 40.Teo EK, Lok ASF. Hepatitis B virus vaccination. Available at http://www.utdol.com/utd/content/topic.do?topicKey=heptitis/15188&type=A&selectedTitle=1∼63. Accessed on 9/27/2006.

- 41.Reamy BV, Slakey JB. Adolescent idiopathic scoliosis: review and current concepts. Am Fam Physician 2001;64:111-116.

- 42.McDonnell WM, Ryan JA, Seeger DM, Elta GH. Effect of iron on the guaiac reaction. Gastroenterology 1989;96:74-78.

- 43.Gould MS, Marrocco FA, Kleinman M, Thomas JG, Mostkoff K, Cote J, et al. Evaluating iatrogenic risk of youth suicide screening programs: a randomized controlled trial. JAMA 2005;293:1635-1643.

- 44.Shlipak MG. Pharmacotherapy for heart failure in patients with renal insufficiency. Ann Intern Med 2003;138:917-924.

- 45. Reviews: making sense of an often tangled skein of evidence. Ann Intern Med 2005;142:1019-1020.

- 46.Rochon PA, Bero LA, Bay AM, Gold JL, Dergal JM, Binns MA, et al. Comparison of review articles published in peer-reviewed and throwaway journals. JAMA 2002;287:2853-2856.

- 47.Allen M, Currie LM, Graham M, Bakken S, Patel VL, Cimino JJ. The classification of clinicians’ information needs while using a clinical information system. Proc AMIA Symp 2003:26-30.

- 48.Cimino C, Barnett GO. Analysis of physician questions in an ambulatory care setting. Proc Annu Symp Comp Appl Med Care 1991:995-999.

- 49.Cimino J. Generic queries for meeting clinical information needs. Bull Med Libr Assoc 1993;81:195-206.

- 50.Florance V. Medical knowledge for clinical problem solving: a structural analysis of clinical questions. Bull Med Libr Assoc 1992;80:140-149.

- 51.Forsythe DE, Buchanan BG, Osheroff JA, Miller RA. Expanding the concept of medical information: an observational study of physicians’ information needs. Comp Biomed Res 1992;25:181-200.

- 52.Ely JW, Osheroff JA, Gorman PN, Ebell MH, Chambliss ML, Pifer EA, et al. A taxonomy of generic clinical questions: classification study. BMJ 2000;321:429-432.

- 53.Graham MJ, Currie LM, Allen M, Bakken S, Patel V, Cimino JJ. Characterizing information needs and cognitive processes during CIS use. Proc AMIA Symp 2003:852.

- 54.Stroup DF, Berlin JA, Morton SC, Olkin I, Williamson GD, Rennie D, et al; Meta-analysis Of Observational Studies in Epidemiology (MOOSE) group. Meta-analysis of observational studies in epidemiology: a proposal for reporting. JAMA 2000;283:2008-2012.

- 55.Siwek J, Gourlay ML, Slawson DC, Shaughnessy AF. How to write an evidence-based clinical review article. Am Fam Physician 2002;65:251-258.

- 56.Thomas J, Harden A, Oakley A, Oliver S, Sutcliffe K, Rees R, et al. Integrating qualitative research with trials in systematic reviews. BMJ 2004;328:1010-1012.

- 57.Bigby M, Williams H. Appraising systematic reviews and meta-analyses. Arch Dermatol 2003;139:795-798.

- 58.Khan KS, Kunz R, Kleijnen J, Antes G. Five steps to conducting a systematic review. J R Soc Med 2003;96:118-121.

- 59. Proceedings of the 3rd Symposium on Systematic Review Methodology. Oxford, England. July 3–5, 2000. Stat Med 2002;21:1501-1640.

- 60.Silagy CA, Middleton P, Hopewell S. Publishing protocols of systematic reviews: comparing what was done to what was planned. JAMA 2002;287:2831-2834.

- 61.Williamson RC. How to write a review article. Hosp Med 2001;62:780-782.

- 62.Magarey A, Veale B, Rogers W. A guide to undertaking a literature review. Aust Fam Phys 2001;30:1013-1015.

- 63.Oxman AD. Checklists for review articles. BMJ 1994;309:648-651.

- 64.Oxman AD, Guyatt GH. The science of reviewing research. Ann N Y Acad Sci 1993;703:125-133.

- 65.The Cochrane Collaboration. Cochrane Handbook for Systematic Reviews of Interventions. Available at: http://www.cochrane.org/resources/handbook/index.htm. Accessed on: 8/28/2006.

- 66.Hutchison BG. Critical appraisal of review articles. Can Fam Physician 1993;39:1097-1102.

- 67.Cimino JJ, Li J. Sharing infobuttons to resolve clinicians’ information needs. Proc AMIA Symp 2003:815.