Abstract

Objective

Communication of abnormal test results in the outpatient setting is prone to error. Information technology can improve communication and patient safety. We standardized processes and procedures in a computerized test result notification system and examined their effectiveness in reducing errors in communication of abnormal imaging results.

Design

We prospectively analyzed outcomes of computerized notification of abnormal test results (alerts) that providers did not explicitly acknowledge receiving in the electronic medical record of an ambulatory multispecialty clinic.

Measurements

In the study period, 190,799 outpatient visits occurred and 20,680 outpatient imaging tests were performed. We tracked 1,017 transmitted alerts electronically. Using a taxonomy of communication errors, we focused on alerts in which errors in acknowledgment and reception occurred. Unacknowledged alerts were identified through electronic tracking. Among these, we performed chart reviews to determine any evidence of documented response, such as ordering a follow-up test or consultation. If no response was documented, we contacted providers by telephone to determine their awareness of the test results and any follow-up action they had taken. These processes confirmed the presence or absence of alert reception.

Results

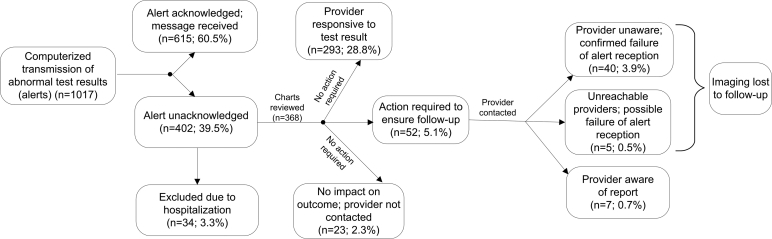

Providers failed to acknowledge receipt of over one-third (368 of 1,017) of transmitted alerts. In 45 of these cases (4% of abnormal results), the imaging study was completely lost to follow-up 4 weeks after the date of study. Overall, 0.2% of outpatient imaging was lost to follow-up. The rate of lost to follow-up imaging was 0.02% per outpatient visit.

Conclusion

Imaging results continue to be lost to follow-up in a computerized test result notification system that alerted physicians through the electronic medical record. Although comparison data from previous studies are limited, the rate of results lost to follow-up appears to be lower than that reported in systems that do not use information technology comparable to what we evaluated.

Introduction

Recent studies have raised concern about errors related to critical test result communication in ambulatory care. 1–6 Communication failures are responsible for a significant number of outpatient medical errors and adverse events and are often implicated in liability claims. 7–9 In response to the increased recognition that delayed communication in radiology is a major cause of litigation in the United States, the American College of Radiology updated its guidelines for communicating critical diagnostic imaging findings in 2005. 10 Radiologists are now strongly advised to expedite reports that indicate significant or unexpected findings to referring physicians “in a manner that reasonably ensures timely receipt of the findings.” 10 Information technology (IT) could improve such communication processes and thereby enhance physician awareness of abnormal imaging test results, a problem of significant magnitude in previous studies. 4,11 We hypothesized that with standardized policies and procedures and educational efforts, use of IT to notify providers of abnormal radiology results would lead to few, if any, imaging results lost to follow-up. Our goals were to assess the effectiveness of a computerized test result notification system designed to minimize lapses in communication in the outpatient setting, to identify breakdowns in communication that could result from the use of this system, and to determine the potential impact of communication breakdowns on patients’ health outcomes.

Background

To improve communication and patient safety, the Institute of Medicine has called for redesigning and error-proofing health care delivery systems. 12 One method for achieving this goal is to develop electronic information systems for the delivery of health care data. 12,13 The use of IT and adoption of electronic medical records (EMRs) is thought to hold promise in improving the quality of information transfer. 14 To this end, the Veterans Affairs (VA) system adopted the use of a “View Alert” system to notify clinicians of abnormal test results, although the VA has since encountered several problems with the effectiveness of this system, including the lack of adequate policies and procedures in its use. 15 In 2004, our institution developed standard operating procedures to minimize breakdowns in critical communication between radiologists and clinicians. Several key stakeholders, including lead representatives from the sections of Radiology, IT, several clinical services, and administrators, contributed to the implementation of these standard operating procedures.

The VA uses computerized physician order entry so that the ordering clinician is always known. This reduces the problem of whom to notify about test results. The system relies primarily on computerized notification of abnormal test results (alerts) shown prominently in a View Alert window that appears in the EMR every time a provider signs on. The View Alert window also appears when providers switch between patient records and displays alerts on all of their patients. With the exception of life-threatening findings, which are communicated by telephone, the radiologist alerts the referring provider electronically to the presence of “significant unexpected findings” using codes specific to our institution (Table 1). Based on his or her interpretation, the radiologist manually assigns one of the codes to the imaging report, which is necessary to generate an alert. The use of three of these codes (101 to 103) results in an alert immediately appearing prominently in the View Alert window of the ordering (and sometimes an alternative) provider. The provider is then expected to click on the alert to view the patient’s entire abnormal report. Clicking the alert indicates that the provider received the imaging result and is the only mechanism for the EMR to record the provider’s acknowledgment. However, the system does not require providers to read alerts, and providers can bypass the View Alert window by ignoring it. All providers who use the EMR receive an initial orientation about use of alerts. Use of the alert system is reinforced periodically (about once or twice per year) through lectures and staff meetings, at which providers are made aware of its potential for improving patient safety through better test result notification. Informal feedback has shown that many physicians found the system useful for the timely receipt of imaging test results.

Table 1.

Table 1 Codes Used to Flag Imaging Reports Generated within the Institution ∗

| Code | Description |
|---|---|
| 101 | Major new abnormality† |
| 102 | New abnormality (tuberculosis, etc.)† |
| 103 | Suspicious for new malignancy† |
| 104 | Discrepancy with prior examination report |
| 105 | Technically unsatisfactory |
| 106 | Comparison with prior examination, no discrepancy |
| 107 | Comparison with prior examination, minimal discrepancy |

∗ Codes are specific to authors’ institution.

† Generates an electronic alert in the electronic medical record.
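As an illustrative sketch only, the flagging rule in Table 1 can be written as a simple lookup in which codes 101 to 103 are the ones that trigger an alert. The names `IMAGING_CODES`, `ALERTING_CODES`, and `generates_alert` are hypothetical and are not taken from the VA software.

```python
# Hypothetical sketch of the Table 1 flagging rule; the names are
# illustrative and are not taken from the VA's EMR software.
IMAGING_CODES = {
    101: "Major new abnormality",
    102: "New abnormality (tuberculosis, etc.)",
    103: "Suspicious for new malignancy",
    104: "Discrepancy with prior examination report",
    105: "Technically unsatisfactory",
    106: "Comparison with prior examination, no discrepancy",
    107: "Comparison with prior examination, minimal discrepancy",
}

# Per the table footnote, only these codes generate an electronic alert.
ALERTING_CODES = frozenset({101, 102, 103})


def generates_alert(code: int) -> bool:
    """Return True if a report flagged with `code` produces an alert."""
    if code not in IMAGING_CODES:
        raise ValueError(f"unknown imaging code: {code}")
    return code in ALERTING_CODES
```

Under this sketch, `generates_alert(103)` is true and `generates_alert(104)` is false, mirroring the dagger footnote.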

Although the View Alerts mechanism is the same across VA facilities, our facility designed a critical value notification protocol and established guidelines to track all radiology alerts. We also established responsibilities of the requesting physician, the reporting radiologist, and the leadership of the facility. For example, the Radiology section sends information on all alerts that remain unacknowledged to the ordering provider’s chief of service, the administrative officer of that service, and the chief of staff of the hospital. We also educated the practitioners to assign surrogate backup providers in the EMR when they are away so that alerts can be sent to the surrogates in their absence.

The established codes for flagging imaging test results (shown in Table 1) are used to monitor and track radiology alerts. Standardization of policies and procedures in the use of the alerts system addresses several criteria suggested by Bates and Leape 1 for effective critical results reporting systems. These criteria include consensus about which results are considered critical (several stakeholders agreed on the codes), effective processes for communicating results (including computerized tracking) to key clinicians, and backup procedures (such as informing an alternate provider when the ordering provider is absent). Backup procedures are critical, for example, when the ordering provider is unavailable or is a part-time consultant, a temporary covering provider, or an emergency room provider. To ensure continued follow-up care in such cases, all alerts are sent to the patients’ primary care physicians (PCPs), who thus serve as backup alternate providers. If the alert is sent to more than one provider, it only requires acknowledgment by any responsible provider (any provider to whom the system sent an alert) for resolution. In addition, all patients assigned to resident PCPs are also assigned to a staff physician, who in the absence of the resident is considered the responsible provider. Because of its tracking capability, the system also addresses two factors that lead to malpractice claims against radiologists: failure to directly contact the referring physician and failure to document any attempt to make contact. 8 Hence, we considered this computerized test result notification system a significant improvement over previous noncomputerized systems.
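The backup and resolution rules described above can be sketched as follows. This is a minimal model under stated assumptions, not the EMR's actual data structure: the `Alert` class and its field names are hypothetical, and surrogate assignment and staff-physician coverage for residents are omitted.

```python
from dataclasses import dataclass, field


@dataclass
class Alert:
    """Hypothetical model of one radiology alert and its recipients."""
    ordering_provider: str
    primary_care_physician: str
    acknowledged_by: set = field(default_factory=set)

    @property
    def recipients(self) -> set:
        # The ordering provider plus the patient's PCP as backup; a set
        # collapses the two when the PCP is also the ordering provider.
        return {self.ordering_provider, self.primary_care_physician}

    def acknowledge(self, provider: str) -> None:
        # Only providers to whom the alert was sent can acknowledge it.
        if provider not in self.recipients:
            raise ValueError(f"{provider} did not receive this alert")
        self.acknowledged_by.add(provider)

    @property
    def resolved(self) -> bool:
        # Acknowledgment by any one recipient resolves the alert.
        return bool(self.acknowledged_by)
```

For example, an alert created as `Alert("ed_physician", "pcp")` would count as resolved once either of the two recipients acknowledges it.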

The View Alert mechanism can be customized for use in receiving other types of clinical information, such as receipt of critical laboratory alerts and notes for co-signature, which are mandatory. Providers also have the option of receiving some nonmandatory information, such as reports from consultant services, and are well accustomed to using this tool in their daily routine. More than 1 year after the radiology alerts system was initiated, we sought to evaluate how effectively it communicated results, defining effectiveness as “clinicians becoming aware of, and responding appropriately to, alerts about critical radiology results.”

Looking for possible breakdowns of information transfer, we adapted a taxonomy of communication breakdowns to address three key steps involved in the communication process in our system. 16 This taxonomy includes errors of message transmission (e.g., failure to create an alert), errors of message reception (e.g., failure to notice or act upon an alert), and errors of message acknowledgment (e.g., failure to confirm receipt of the alert). We found the taxonomy suitable to address the types of communication breakdowns identified in our computerized test result notification system. Although transmission failures could occur if the interpreting radiologist did not code the report in the alerting system correctly, we focused only on errors of reception and acknowledgment for a series of alerts that were confirmed to be transmitted electronically. Specifically, we sought evidence that ordering physicians received and acknowledged alerts of critical findings and took appropriate follow-up action based on their awareness of abnormal reports.

Methods

Setting

The study was carried out at the multispecialty ambulatory clinics of the Michael E. DeBakey Veterans Affairs Medical Center in Houston, Texas. The clinic includes primary care, medical subspecialties, general surgery, and all surgical subspecialties. Each VA patient is assigned to a PCP responsible for supervising and coordinating all outpatient services for their assigned patients. 17 Some of the clinics included residents who were supervised by staff physicians. The study was approved by the Baylor College of Medicine Institutional Review Board and the Michael E. DeBakey Veterans Affairs Medical Center Research and Development Committee.

Outcomes of Alert Acknowledgment

Between March 7 and May 28, 2006, we used weekly computerized tracking to identify alerts that represented abnormal imaging reports coded as 101 to 103. The tracking system only identified alerts that were confirmed to have been transmitted electronically to the providers.

Inclusion and Exclusion Criteria

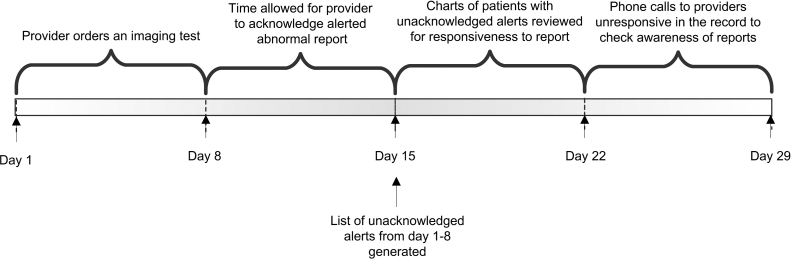

Each week during the study time period, the system downloaded alerts that were not acknowledged by the providers (see Figure 1 for timeline). The downloaded list included patient identifiers, names of providers to whom the alert was sent, the date and type of imaging study, and the location of the patient at the time of the imaging study. We excluded unacknowledged alerts resulting from imaging studies that we confirmed to have been performed on inpatients. We then reviewed the medical records of all patients whose imaging tests were done in the outpatient setting and whose results were unacknowledged at the time the list was generated. On pilot testing of the medical record review process, we found that some outpatients with abnormal imaging findings were admitted to the hospital for further evaluation and treatment. We anticipated that such scenarios implied alert reception and would lead to appropriate follow-up of the abnormal test, and this was confirmed on several chart reviews at the time of pilot testing. Thus, patients who were found to be admitted any time after the imaging test but before the time of our medical record review were excluded from further analysis. This period would typically be about 14 days (range 8 to 21 days) depending on the date of imaging test and when the chart review was conducted (Figure 1).

Figure 1.

Timeline for assessing communication of an alert (computerized notification of abnormal test results).

Outcomes of Alert Reception

We anticipated that providers also receive information from other modes of communication (such as patients’ requests for their test results, via a nurse-generated printout, or by discussing findings with radiologists by visiting or calling them). It was also possible for providers to be aware of a result by directly accessing the report in the patient’s record without acknowledging the alert in the View Alert window. Therefore, when alerts were not acknowledged, we reviewed the medical record for documentation of alert reception.

On medical record review, we determined whether the providers documented appropriate follow-up action related to the alert, indicating they had “received” the message. We defined this documentation as responsiveness to the alert. Responsiveness included appropriate follow-up on the alert by either direct mention of the report in the medical record or an action taken thereupon, such as ordering a recommended follow-up imaging test or a consultation or procedure. We did a comprehensive review of the electronic medical record for entries under “notes,” “orders,” and “consults” to ascertain actions taken.

Because providers may not always document their actions, absence of responsiveness in the medical record did not necessarily imply that providers failed to receive the alert. As a final step in the process of determining reception failure, we contacted the providers to determine their awareness and actions on the report. After initial chart review, a second investigator confirmed findings of the chart reviewer and checked the record for new documentation before calling the ordering provider. In most cases, by the time the provider was called, nearly 4 weeks had elapsed from the generation of the alert, an adequate time period for providers to respond to the alert. If providers were aware of the report, we sought to determine reasons why they had not taken any follow-up action. However, if the providers were unaware of the report, they were advised of a recommended follow-up action based on the report of the radiologist (e.g., ordering a computed tomography scan for a lung nodule). These instances of unawareness were classified as confirmed failures of alert reception, and their outcomes were categorized as near misses, defined as events that could have harmed the patient but did not cause harm as a result of chance, prevention, or mitigation. 13

If the ordering provider could not be reached on three tries on 3 different days, we called the alternate provider. If both providers were unavailable, the case was handed over to the Section of Radiology for further nonresearch-related notification detailed earlier to ensure adequacy of follow-up. We could not confirm the failure of communication in these cases and thus classified them as possible failures of alert reception. Both confirmed and possible failures of reception were classified as lost to follow-up imaging.

If on medical record review we determined that the diagnosis was not new and the patient was already receiving appropriate care for the condition, we did not contact the providers even though they had not documented a response in the medical record. In such cases, two investigators reviewed the case at length and discussed their findings to confirm that calling the provider was unnecessary and would not likely impact patient management because the provider who ordered a test was obviously aware of the patient’s condition prior to ordering the test. As an example, a computerized tomography of the neck revealed a suspicious thyroid mass in a patient who had already been worked up and scheduled for a future thyroidectomy by the appropriate service. Usually these situations occurred when the radiologist was not made aware of case details such as ongoing therapy, and detailed prior information from medical record review was found to support the facts. These situations were called no impact on outcome situations.
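The review steps described in this section can be summarized as a decision sequence. The sketch below is one possible reading of that sequence, with hypothetical names (`Outcome`, `classify`, and the boolean arguments); it is not code used in the study.

```python
from enum import Enum


class Outcome(Enum):
    EXCLUDED_ADMITTED = "excluded: patient admitted before chart review"
    RESPONSIVE = "response documented in the medical record"
    NO_IMPACT = "no impact on outcome; provider not called"
    POSSIBLE_FAILURE = "possible failure of alert reception"
    AWARE_UNDOCUMENTED = "provider aware but response not documented"
    CONFIRMED_FAILURE = "confirmed failure of alert reception (near miss)"


def classify(admitted, response_documented, known_condition_under_care,
             provider_reached, provider_aware):
    """Classify one unacknowledged outpatient alert, checking steps in order."""
    if admitted:
        return Outcome.EXCLUDED_ADMITTED
    if response_documented:
        return Outcome.RESPONSIVE
    if known_condition_under_care:
        return Outcome.NO_IMPACT
    # provider_reached is False when neither the ordering nor the
    # alternate provider could be contacted by telephone.
    if not provider_reached:
        return Outcome.POSSIBLE_FAILURE
    if provider_aware:
        return Outcome.AWARE_UNDOCUMENTED
    return Outcome.CONFIRMED_FAILURE


def lost_to_follow_up(outcome):
    # Confirmed and possible reception failures both count as lost to follow-up.
    return outcome in {Outcome.CONFIRMED_FAILURE, Outcome.POSSIBLE_FAILURE}
```

The ordering of the checks matters: a documented response ends the review before any telephone call, and only alerts that reach the last two branches depend on what the provider reports.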

We collected data on the type of imaging and characteristics of the ordering providers such as specialty and training. The person who first responded to the alert was identified if other than the ordering provider. We also assessed whether the responsiveness resulted from the patients’ visits to the clinic, by the patients calling for their results, or by the providers themselves. Finally, the reviewers made judgments based on extensive case review from the medical chart about the possible anticipated outcomes that might have occurred if the providers remained unaware of the report (e.g., possibility of delayed diagnosis of outcomes such as lung cancer if the study team had not intervened by calling the patient for a computerized tomography of the chest).

We analyzed the data using the SAS version 9.13 software package (SAS Institute Inc., Cary, NC). The χ² and Fisher’s exact tests were performed to determine whether alerts associated with lost to follow-up imaging differed in several characteristics (e.g., type of study, provider specialty, etc.) from alerts that were unacknowledged but for which imaging was not lost to follow-up. Agreement between reviewers on the determination of no impact on outcome situations was assessed using the kappa statistic. A kappa value >0.75 was used to denote excellent agreement.
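For readers unfamiliar with the kappa statistic, the following sketch computes Cohen's kappa for two reviewers in plain Python. The analysis itself was run in SAS; the function name `cohen_kappa` is hypothetical, and the ratings shown are toy values, not study data.

```python
def cohen_kappa(ratings_a, ratings_b):
    """Cohen's kappa for two raters labeling the same cases."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(ratings_a)
    labels = set(ratings_a) | set(ratings_b)
    # Observed proportion of cases on which the raters agree.
    observed = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n
    # Agreement expected by chance from each rater's marginal frequencies.
    expected = sum(
        (ratings_a.count(lab) / n) * (ratings_b.count(lab) / n)
        for lab in labels
    )
    if expected == 1.0:
        return 1.0  # both raters used a single identical label throughout
    return (observed - expected) / (1 - expected)


# Toy ratings, NOT study data: 1 = "no impact on outcome", 0 = "call provider".
reviewer_1 = [1, 1, 0, 0]
reviewer_2 = [1, 0, 0, 0]
kappa = cohen_kappa(reviewer_1, reviewer_2)
```

With these toy ratings the observed agreement is 0.75 and the chance agreement is 0.5, giving a kappa of 0.5, which would fall below the 0.75 threshold for excellent agreement.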

Results

A total of 190,799 outpatient visits occurred between March 7, 2006, and May 28, 2006. In this time period, 20,680 outpatient studies (including radiographs, computed tomography scans, ultrasounds, magnetic resonance imaging, and mammography) were performed, and 1,017 (4.9%) alerts were electronically transmitted. Figure 2 illustrates the outcomes of these 1,017 alerts, 402 of which providers did not acknowledge. Medical record review revealed 34 cases in which the patient was admitted shortly after the imaging test, and these alerts were excluded from study of outcomes of alert reception. Therefore, we evaluated alert reception outcomes in 368 charts (36.2%). The imaging modality and characteristics of the ordering providers are listed in Table 2. Of 368 unacknowledged alerts, 181 (49.2%) were sent to both the ordering and an alternate provider. On chart review, we found 293 cases in which a provider had responded to the alert without acknowledging it electronically (Figure 2). In 163 (56%) of these cases, the ordering provider first responded to the alert on their own (data not shown). In 52 (18%) cases, an alternate provider first documented the response. In the remaining cases, the first response was documented when the patient returned for a clinic visit (n = 65), when patients called for their reports (n = 5), or when a trainee supervisor (n = 7) or a nurse (n = 1) noticed an abnormal report.
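The counts in this paragraph can be cross-checked with a few lines of arithmetic. The sketch below uses only figures reported above; the variable names are illustrative.

```python
transmitted = 1017
unacknowledged = 402
admitted_excluded = 34

# Unacknowledged outpatient alerts evaluated for reception outcomes.
evaluated = unacknowledged - admitted_excluded
responded_without_ack = 293
unresponsive = evaluated - responded_without_ack

# Who first documented a response among the 293 responsive cases.
first_responder = {
    "ordering provider": 163,
    "alternate provider": 52,
    "patient clinic visit": 65,
    "patient called for report": 5,
    "trainee supervisor": 7,
    "nurse": 1,
}
first_responder_total = sum(first_responder.values())

pct_evaluated = round(100 * evaluated / transmitted, 1)
pct_ordering = round(100 * first_responder["ordering provider"] / responded_without_ack)
pct_alternate = round(100 * first_responder["alternate provider"] / responded_without_ack)
```

The first-responder categories sum to 293, and 368 evaluated minus 293 responsive leaves the 75 cases with no documented response that proceed to the next stage of review.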

Figure 2.

Outcomes of computerized notification of abnormal imaging results.

Table 2.

Table 2 Characteristics of Unacknowledged Abnormal Imaging Reports and of Providers Who Ordered Them

| | n (%) |
|---|---|
| Types of abnormal imaging reported ∗ | |
| General radiology | 164 (44.5) |
| Ultrasounds | 25 (6.8) |
| Computerized tomography scans | 127 (34.5) |
| Magnetic resonance imaging | 31 (8.4) |
| Mammography | 5 (1.4) |
| Fluoroscopic radiographs | 16 (4.3) |
| Ordering provider characteristics | |
| Trainees (interns, residents, and fellows) | 76 (20.6) |
| Physician assistants | 85 (23.1) |
| Nurse practitioners | 20 (5.4) |
| Attending physicians | 187 (50.8) |
| Ordering provider specialty | |
| Generalist/primary care | 222 (60.3) |
| Hematology/oncology | 28 (7.6) |
| Other specialty medicine† | 32 (8.7) |
| Emergency medicine | 24 (6.5) |
| General surgery/gynecology | 20 (5.4) |
| Specialty surgery‡ | 42 (11.4) |

∗ Denotes 368 alerts that were unacknowledged in the electronic medical record.

† Included medicine subspecialties other than Hematology-Oncology.

‡ Included all surgical subspecialties, e.g., otolaryngology, neurosurgery, plastic surgery, etc.

Of the remaining 75 unresponsive cases, 23 cases were classified as no impact on outcome situations and a decision was made not to call the providers (kappa value = 0.88 on interinvestigator agreement). We could not reach five providers and turned these cases over to the Section of Radiology according to study protocol. In these cases the provider was either out of the office for an extended period of time or had recently left the institution. Of the remaining 47 providers, 7 were aware of the report but had not documented anything in the medical record. The reasons cited were either that a decision was still awaited on the patient’s next course of action or that the abnormality was old and had been worked up at an outside institution. We confirmed failures of alert reception in 40 cases (3.9% of all alerts) in which providers were unaware of the alert. Table 3 compares the characteristics of the 45 alerts lost to follow-up with the 323 cases for which no further action was required to ensure follow-up. In general, alerts associated with imaging lost to follow-up were not associated with any particular type of imaging study, ordering provider characteristics, ordering provider specialty, or notification of an additional provider, although our statistical power to assess these differences was limited.
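The breakdown of the 75 unresponsive cases, and how it yields the 45 alerts lost to follow-up, can be traced with the arithmetic below. All figures come from the paragraph above; the variable names are illustrative.

```python
unresponsive = 75
no_impact = 23           # reviewed, provider not called
unreachable = 5          # possible failures of alert reception
contacted = unresponsive - no_impact - unreachable
aware_undocumented = 7
confirmed_failures = contacted - aware_undocumented

# Lost to follow-up = confirmed plus possible failures of reception.
lost_to_follow_up = confirmed_failures + unreachable

evaluated = 368
no_action_required = evaluated - lost_to_follow_up

transmitted = 1017
confirmed_pct = round(100 * confirmed_failures / transmitted, 1)
```

This reproduces the 47 providers contacted, the 40 confirmed failures (3.9% of all alerts), the 45 alerts lost to follow-up, and the 323 comparison cases.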

Table 3.

Table 3 Characteristics of Providers and Tests in 45 Cases of Imaging Lost to Follow-up Versus 323 Cases in Which No Further Action Was Required to Ensure Follow-up ∗

| | Lost to Follow-up n (%) | No Action Was Required n (%) | χ² (Fisher’s exact test) | df | p |
|---|---|---|---|---|---|
| Type of study | | | | | |
| General radiology | 23 (51) | 141 (44) | | | |
| Ultrasounds | 2 (4.4) | 23 (7.1) | | | |
| Computerized tomography | 9 (20) | 118 (37) | | | |
| Magnetic resonance imaging | 6 (13) | 25 (7.7) | | | |
| Mammography | 2 (4.4) | 3 (1.0) | | | |
| Fluoroscopic radiograph | 3 (6.7) | 13 (4.0) | (Fisher’s exact test) | | 0.0559 |
| Total | 45 | 323 | | | |
| Ordering provider characteristics | | | | | |
| Trainee | 10 (22) | 66 (20) | | | |
| Physician assistants | 14 (31) | 71 (22) | | | |
| Nurse practitioners | 3 (6.7) | 17 (5.3) | | | |
| Attending physician | 18 (40) | 169 (52) | 2.810 | 3 | 0.4219 |
| Total | 45 | 323 | | | |
| Specialty | | | | | |
| Generalist/primary care | 26 (58) | 196 (61) | | | |
| Hematology/oncology | 2 (4.4) | 26 (8.0) | | | |
| Nononcology specialty | 5 (11) | 27 (8.4) | | | |
| Emergency medicine | 5 (11) | 19 (5.9) | | | |
| General surgery/gynecology | 3 (6.7) | 17 (5.2) | | | |
| Specialty surgery | 4 (8.9) | 38 (12) | (Fisher’s exact test) | | 0.6464 |
| Total | 45 | 323 | | | |
| Number of providers notified | | | | | |
| 1 | 28 (62) | 159 (49) | | | |
| 2 | 17 (38) | 164 (51) | 2.669 | 1 | 0.1023 |
| Total | 45 | 323 | | | |
| Alternate provider characteristics | | | | | |
| Trainee | 1 (5.6) | 3 (1.8) | | | |
| Physician assistant | 2 (11) | 17 (10) | | | |
| Nurse practitioners | 0 (0.0) | 5 (3.0) | | | |
| Attending physician | 15 (83) | 141 (85) | (Fisher’s exact test) | | 0.5681 |
| Total† | 18 | 166 | | | |

∗ Medical record review determined whether action was required to ensure follow-up of imaging by calling the provider.

† Not every alert was transmitted to a second alternate provider.
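To make the test statistics in Table 3 concrete, the sketch below recomputes the comparison for "Number of providers notified" as a 2×2 Pearson chi-square without continuity correction. This is an assumption about how the reported value was obtained (the study used SAS); the function name `chi_square_2x2` is hypothetical.

```python
import math


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square (no continuity correction) for [[a, b], [c, d]].

    Returns (statistic, p_value) with 1 degree of freedom.
    """
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    statistic = n * (a * d - b * c) ** 2 / (row1 * row2 * col1 * col2)
    # For 1 df, the chi-square upper-tail probability is erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value


# Rows: lost to follow-up, no action required.
# Columns: 1 provider notified, 2 providers notified.
statistic, p_value = chi_square_2x2(28, 17, 159, 164)
```

Under this assumption the statistic comes out at approximately 2.669 with p of approximately 0.102, in line with the values reported in the table.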

The types of abnormalities related to imaging lost to follow-up are shown in Table 4. All of these had the potential to lead to missed or delayed diagnosis of the given condition if we had not intervened. A majority of near misses (73%) were related to some form of a suspected new malignancy.

Table 4.

Table 4 Types of Near Misses Associated with Imaging Lost to Follow-Up ∗

| Type of Near Miss | n |
|---|---|
| Suspicious for new malignancy | |
| Chest radiograph with nodular density not followed up with computerized tomography or repeat imaging | 13 |
| Other chest imaging suspicious for neoplasm (hilar nodes, mediastinal widening) with no follow-up | 4 |
| Abnormal imaging suspicious for a gastrointestinal neoplasm necessitating an endoscopic procedure that was not ordered | 5 |
| Abnormal imaging suspicious for intra-abdominal neoplasm (kidney, liver, ovarian); further imaging to evaluate not ordered | 5 |
| Abnormal mammogram requiring further evaluation that was not ordered | 2 |
| Abnormal imaging suspicious for other neoplasms; recommended further imaging/test not ordered | 4 |
| Other major new abnormalities | |
| Chest imaging showing nonneoplastic abnormalities (e.g., consolidation) | 3 |
| Spinal canal imaging abnormalities (e.g., severe canal stenosis) | 6 |
| Other abnormal nonneoplastic imaging (e.g., aneurysm on abdominal radiograph) | 3 |

∗ All 45 cases had absence of both radiologic and clinical follow-up at the time of our 4-week telephone intervention.

Discussion

Our institution implemented several standard operating procedures to enhance communication through the View Alert computerized test result notification system, a process currently in use in all VA facilities to report specified abnormal test results. To test its effectiveness we studied communication outcomes as a result of using this system and evaluated physician awareness of abnormal imaging test results, a problem of significant magnitude in previous studies. 4,11 Using a taxonomy of communication breakdowns, we categorized problems with alert acknowledgment and reception and identified lost to follow-up imaging results. Providers failed to electronically acknowledge over one-third of alerts according to established protocols and were unaware of abnormal results in 4% of cases 4 weeks after reporting. We found that 45 (0.2%) of 20,680 imaging reports were lost to follow-up in the study period; when translated to 190,799 outpatient visits, the rate was 0.02% per outpatient visit. Although we lack data about these rates prior to using this system, our findings suggest that lack of physician awareness of abnormal imaging results and subsequent loss of appropriate follow-up occur despite the use of a computerized test result notification system that followed standardized policies and procedures.
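The headline rates quoted above follow directly from the study counts. The sketch below shows the arithmetic; the helper `pct` and the variable names are illustrative.

```python
lost = 45
imaging_tests = 20_680
outpatient_visits = 190_799
alerts = 1_017
unacknowledged_evaluated = 368


def pct(numerator, denominator, digits):
    """Percentage rounded to the given number of decimal places."""
    return round(100 * numerator / denominator, digits)


rate_per_imaging_test = pct(lost, imaging_tests, 1)
rate_per_visit = pct(lost, outpatient_visits, 2)
rate_per_alert = pct(lost, alerts, 1)
unacknowledged_rate = pct(unacknowledged_evaluated, alerts, 1)
```

These evaluate to 0.2% of imaging tests, 0.02% per outpatient visit, 4.4% of transmitted alerts (reported as 4%), and 36.2% of alerts unacknowledged, which is the "over one-third" figure.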

Surprisingly, there is a dearth of studies that report such overall rates. Two studies have reported similar data in systems that do not use similar IT and suggest that physician unawareness of abnormal imaging results is a substantial problem. 4,11 One of them reported 36% of abnormal mammograms to be lost to follow-up, 4 whereas another reported that 23% of abnormal dual-energy x-ray absorptiometry scans had no evidence of review by the provider. 11 These numbers are strikingly high compared to the 4% reported in our study. The dual-energy x-ray absorptiometry study also reported about a 2% overall lost to follow-up imaging rate compared to the 0.2% seen in our study. Although our data are from a single site, they appear to suggest an improvement over noncomputerized notification systems.

Limited data exist from computerized notification systems related to imaging. In a recent study at another VA facility, 8 of 395 (2%) abnormal radiology reports were lost to follow-up, a number that is not statistically different from ours. 18 However, their system was semiautomated, i.e., the radiologists notified the provider in every abnormal case, a fairly time-intensive process. The vast majority of radiologists have found this method to be frustrating, with lengthy wait times on the telephone for referring clinicians, and for this reason this method is generally not popular among radiologists. 19 Other techniques of automation-based test result notification are emerging 19,20 ; however, their performance and outcome data are lacking. In general, electronic alerting has been shown to improve critical laboratory results communication in the inpatient setting, and we believe that it has a promising future in improving test result follow-up in the outpatient setting. 21–23

Our findings affirm the need for high reliability of tracking abnormal test results to achieve high-quality health care. 1 System redesign should include formal policies and procedures regarding communication and appropriate use of technology 19–21,24,25 to achieve high standards of patient safety. Several studies report communication errors in test result reporting and have addressed issues related to test result follow-up. 1–4,17,26–32 However, few studies have comprehensively evaluated outcomes by using a taxonomy of communication failures to identify points of breakdowns in the test result notification process. 11,29,32 We believe our study may be one of the first to adapt such a taxonomy to evaluate a computerized test result notification system in ambulatory care.

Our study included alerts related to a broad range of imaging studies ordered by providers of several types, including trainees. The majority of outpatient care at our institution is delivered by primary care providers, who often act as gatekeepers and can serve as a safety net to maintain quality care in a complex multispecialty health care system. The transmission of every alert to the patient’s primary care provider could have contributed to enhanced communication as evidenced by the number of cases (18%) in which the alternate (primary care) physician was the first to document responsiveness to the alert. Although this suggests that our back-up system seems to be working to an extent, it is still concerning that providers at times did not document any response to the alert in the medical record.

Despite enthusiasm about computerized test result notification systems and their potential for improving patient safety, there were several examples of near misses for which results were not followed up with additional testing, work-up, or consultation. Because we do not have appropriate comparison data for our system prior to this intervention, we cannot speculate whether this is an improvement over our previous nonelectronic notification, such as pages or telephone calls to ordering providers. Although lost to follow-up imaging accounted for only 4% of all abnormal results, in a system in which millions of imaging tests are performed the potential impact of lost test results is high. For example, in the brief 11-week time period at our institution, most of these near misses (including 33 reports that were coded as suspicious for a new malignancy) could have resulted in adverse outcomes. At the time of provider contact in these cases, providers reported recalling the case, but when asked specifically about their abnormal imaging tests, providers did not recall reading the results and admitted to being unaware and not taking action.

Practicing physicians in the ambulatory care setting are subject to many breakdowns in care processes that lead to lost test results. Primary care physicians may receive up to 40 radiology reports per week 3 in addition to several other laboratory reports, and at our institution providers may receive anywhere from 20 to 60 alerts per day. There are several other constraints, such as time and workload, that could affect the communication process, but much needs to be learned about why alerts remain unacknowledged and why test results get lost to follow-up. Future work should aim to lower the rate of lost-to-follow-up results without putting additional constraints on providers’ tasks or time.

Our study has several limitations. This was a single-institution VA study performed over a relatively brief time period with a predominantly male study population, raising questions about generalizability outside the VA. We also have no similar communication error data prior to initiation of the electronic alert system, limiting our conclusions about its effectiveness compared to previous methods. In addition, we did not examine possible alert transmission failures, such as circumstances in which the radiologist did not appropriately code the abnormal report. We did not study harm and adverse events, but only near misses at 4 weeks, which was a convenient and feasible outcome measure given our study methodology. It is possible that follow-up would have occurred for some alerts after this 4-week time period had we not intervened. We also did not assess the characteristics of the 615 abnormal imaging results that were acknowledged by the providers. Our results also may not be generalizable to the inpatient setting where the prevalence of abnormal results is likely to be higher and care is provided by teams that rotate more frequently and have a more complex structure. Because of the unique IT environment at the VA (the VA information management system integrates the EMR with ancillary systems such as the radiology information system), our results are not generalizable to other settings, such as free-standing diagnostic imaging centers, which may not communicate alerts directly to physicians’ EMR screens. Nevertheless, we believe that the VA system can be viewed as a model for communicating alerts to physicians and an important system within which innovations in patient safety methods can be developed and tested. 33

In conclusion, imaging results continue to be lost to follow-up in a computerized test result notification system that alerted physicians on their EMR screens. Although previous data comparisons are limited, communication failures in this system appear to occur at a lower rate than those reported in systems that do not use comparable IT. Future research should assess the effectiveness of various methods of notifying providers of abnormal test results and determine why some alerts are lost in computerized notification systems.

Footnotes

Dr. Singh is the recipient of National Institutes of Health K12 Mentored Clinical Investigator Award grant number K12RR17665 to Baylor College of Medicine. Dr. Petersen is a Robert Wood Johnson Foundation Generalist Physician Faculty Scholar (grant number 045444) and a recipient of the American Heart Association Established Investigator Award (grant number 0540043N). These sources had no role in design and conduct of the study; collection, management, analysis, and interpretation of the data; or preparation, review, and approval of the manuscript or the decision to submit the manuscript for publication. Dr. Singh had full access to all of the data in the study and takes responsibility for the integrity of the data and the accuracy of the data analysis.

The views expressed in this article are those of the authors and do not necessarily represent the views of the Department of Veterans Affairs.

The authors thank Carol Swartsfager for her assistance with figures and Annie Bradford for assistance with technical writing.

References

- 1. Bates DW, Leape LL. Doing better with critical test results. Jt Comm J Qual Patient Saf 2005;31:66-67, 61.

- 2. Berlin L. Communicating radiology results. Lancet 2006;367:373-375.

- 3. Poon EG, Gandhi TK, Sequist TD, Murff HJ, Karson AS, Bates DW. “I wish I had seen this test result earlier!”: dissatisfaction with test result management systems in primary care. Arch Intern Med 2004;164:2223-2228.

- 4. Poon EG, Haas JS, Louise PA, et al. Communication factors in the follow-up of abnormal mammograms. J Gen Intern Med 2004;19:316-323.

- 5. Roy CL, Poon EG, Karson AS, et al. Patient safety concerns arising from test results that return after hospital discharge. Ann Intern Med 2005;143:121-128.

- 6. Schiff GD. Introduction: communicating critical test results. Jt Comm J Qual Patient Saf 2005;31:63-65, 61.

- 7. Joint Commission on Accreditation of Healthcare Organizations. Sentinel event statistics, March 31, 2006. Joint Commission on Accreditation of Healthcare Organizations; 2005.

- 8. Physician Insurers Association of America American College of Radiology. Practice Standards Claims Survey. Rockville, MD: Physicians Insurers Association of America; 1997.

- 9. Woolf SH, Kuzel AJ, Dovey SM, Phillips Jr RL. A string of mistakes: the importance of cascade analysis in describing, counting, and preventing medical errors. Ann Fam Med 2004;2:317-326.

- 10. American College of Radiology. ACR Practice Guideline for Communication of Diagnostic Imaging Findings. Practice Guidelines and Technical Standards 2005. Reston, VA: American College of Radiology; 2005. pp. 5-9.

- 11. Cram P, Rosenthal GE, Ohsfeldt R, Wallace RB, Schlechte J, Schiff GD. Failure to recognize and act on abnormal test results: the case of screening bone densitometry. Jt Comm J Qual Patient Saf 2005;31:90-97.

- 12. Institute of Medicine. To Err is Human: Building a Safer Health System. Washington, DC: National Academy Press; 1999.

- 13. Institute of Medicine of the National Academies. Patient Safety: Achieving a New Standard for Care. Washington, DC: National Academy Press; 2004.

- 14. Bates DW, Gawande AA. Improving safety with information technology. N Engl J Med 2003;348:2526-2534.

- 15. Final Report: Summary review: evaluation of Veterans Health Administration procedures for communicating abnormal test results. Report number 01-01965-24. 11-25-2002.

- 16. Weinger MB, Blike G. Intubation Mishap. Agency for Health Care Research and Quality Web M & M Rounds. 2003. Available at: http://www.webmm.ahrq.gov/case.aspx?caseID=29. Accessed January 11, 2007.

- 17. Kizer KW. The changing face of the Veterans Affairs health care system. Minn Med 1997;80:24-28.

- 18. Choksi VR, Marn CS, Bell Y, Carlos R. Efficiency of a semiautomated coding and review process for notification of critical findings in diagnostic imaging. AJR Am J Roentgenol 2006;186:933-936.

- 19. Brantley SD, Brantley RD. Reporting significant unexpected findings: the emergence of information technology solutions. J Am Coll Radiol 2005;2:304-307.

- 20. Brenner RJ. To err is human, to correct divine: the emergence of technology-based communication systems. J Am Coll Radiol 2006;3:340-345.

- 21. Kuperman GJ, Teich JM, Tanasijevic MJ, et al. Improving response to critical laboratory results with automation: results of a randomized controlled trial. J Am Med Inform Assoc 1999;6:512-522.

- 22. Rind DM, Safran C, Phillips RS, et al. Effect of computer-based alerts on the treatment and outcomes of hospitalized patients. Arch Intern Med 1994;154:1511-1517.

- 23. Choksi V, Marn C, Piotrowski M, Bell Y, Carlos R. Illustrating the root cause analysis process: creation of safety net with a semi-automated process for notification of critical findings in diagnostic imaging. J Am Coll Radiol 2005;2:768-776.

- 24. Poon EG, Wang SJ, Gandhi TK, Bates DW, Kuperman GJ. Design and implementation of a comprehensive outpatient results manager. J Biomed Inform 2003;36:80-91.

- 25. Berlin L. Using an automated coding and review process to communicate critical radiologic findings: one way to skin a cat. Am J Roentgenol 2005;185:840-843.

- 26. Boohaker EA, Ward RE, Uman JE, McCarthy BD. Patient notification and follow-up of abnormal test results: a physician survey. Arch Intern Med 1996;156:327-331.

- 27. Brenner RJ, Bartholomew L. Communication errors in radiology: a liability cost analysis. J Am Coll Radiol 2005;2:428-431.

- 28. Hanna D, Griswold P, Leape LL, Bates DW. Communicating critical test results: safe practice recommendations. Jt Comm J Qual Patient Saf 2005;31:68-80.

- 29. Hickner JM, Fernald DH, Harris DM, Poon EG, Elder NC, Mold JW. Issues and initiatives in the testing process in primary care physician offices. Jt Comm J Qual Patient Saf 2005;31:81-89.

- 30. Peterson NB, Han J, Freund KM. Inadequate follow-up for abnormal Pap smears in an urban population. J Natl Med Assoc 2003;95:825-832.

- 31. Haas JS, Kaplan CP, Brawarsky P, Kerlikowske K. Evaluation and outcomes of women with a breast lump and a normal mammogram result. J Gen Intern Med 2005;20:692-696.

- 32. Massachusetts Coalition for the Prevention of Medical Errors: Communicating Critical Test Results. Available at: http://www.macoalition.org/Initiatives/docs/CRTOverview.pdf. Massachusetts Hospital Association. Accessed January 11, 2007.

- 33. Singh H, Thomas EJ, Khan M, Petersen LA. Identifying diagnostic errors in primary care using an electronic screening algorithm. Arch Intern Med 2007;167:302-308.