Conflicts of interest in biomedical research can endanger the independent judgement of researchers and, in a worst-case scenario, can result in harm to humans, animals or the environment, or avoidable damage to scientifically validated truths. Highly publicized cases of scientists who have downplayed the risk of passive smoking—while receiving funding from the tobacco industry—or researchers who have questioned anthropogenic global climate change—yet are supported by the coal or oil industries—(LaDou et al, 2007) have attracted persistent, and often appropriate, criticism.

A conflict of interest occurs when someone in a position of trust (for example, an academic researcher, lawyer or physician) has competing private and professional interests that make it more difficult to fulfil his or her professional duties without bias. However, a conflict of interest in itself is not necessarily bad, as long as the ‘right’ interests prevail.

Nevertheless, conflicts of interest can create an impression of impropriety that, in the long run, might undermine the credibility of an individual or even an entire profession. At a time when policy-makers, politicians and the public increasingly rely on scientific advice about controversial issues—for example, human embryonic stem cells, genetically modified crops or global climate change—conflicts of interest diminish the public's trust in the independence and unbiased judgement of academic scientists. To maintain trust, researchers must remain visibly trustworthy, which requires a careful and explicit management of conflicts of interest. Amidst growing concerns about the rising prevalence of conflicts of interest (Bekelman et al, 2003) and the attendant risks, various commentators and scientists have proposed several measures to handle conflicts of interest, which range from injunctions for more systematic disclosures to outright bans (Kaiser, 2005).


Yet, research funding from private benefactors—who often pursue their own interests when they finance research or collaborate with academic scientists—contributes considerably to and benefits research. Therefore, should scientists regard these sources of funding as opportunities or moral problems? It is necessary to find a sensible way of regulating conflicts of interest that both allows research to benefit from private contributions and collaborations, and protects the independence and unbiased judgement of research scientists.

An example of the difficulties that conflicts of interest pose for regulation is the question of selective bans on funding from tobacco companies in medical research (Cohen, 2001). A few years ago, the University of Geneva was affected when a public health researcher with whom it was associated was accused of scientific fraud. For decades he had been secretly paid as a consultant for the tobacco company Philip Morris (Richmond, VA, USA) and his research had disingenuously sustained a controversy about the health risks of passive smoking (Diethelm et al, 2005). Pressing libel charges, the researcher initiated legal proceedings that eventually came before the Federal Tribunal, Switzerland's highest court of appeal. Simultaneously, the University conducted an inquiry into his scientific misconduct. Both proceedings concluded that he could not be considered to be independent from the tobacco industry, that his involvement was part of a deliberate strategy by the tobacco industry to raise doubt about the health risks of passive smoking, and that the interests of the industry were in contradiction to those of public health and medical science (Mauron et al, 2004; Anon, 2006).

New regulations—or modifications to existing regulations—that deal with conflicts of interest usually come in the wake of scandals or attacks on scientific integrity (Check, 2004). Not surprisingly, these are often heavily influenced by the particular details of a given case. For example, in a press release issued in December 2001—shortly after its internal committee concluded its investigation—the University of Geneva announced that it was asking its members to no longer accept funding from the tobacco industry, and instructing its researchers to systematically declare the source of funding in their publications and when presenting results (University of Geneva, 2001). It thus joined a growing list of academic institutions that prevent their faculty from obtaining funding from the tobacco industry (Cohen, 2001). However, it is difficult to decide how to regulate funding and the potential conflict of interest it entails. Given the strong particular interests that usually lead to detailed rules on the subject, such regulations are often vulnerable to allegations of partiality. Conversely, to advocate no regulation at all downplays or disregards the problem (Stossel, 2005). In addition, an outright ban on private funding would be misguided because it would not allow academia and industry to join forces when both have a genuine convergence of interest (Korn & Ehringhaus, 2005).

Selective bans on certain collaborations—for example, with the tobacco industry—have been criticized both as too strict and too lenient (Cohen, 2001). Such bans have been regarded as inequitable both to researchers and to sponsors—contrary to the neutrality of research (Glantz, 2005)—and some fear that they could be the first steps down a slippery slope that would eventually lead to intrusive regulations and prevent financial partnerships that are necessary for academia (Jones, 2005; Stossel, 2005). Some are also concerned that selective bans would merely act to evade debates about more prevalent conflicts of interest: for example, funding by pharmaceutical companies and the risk that it could distort the priorities of medical research.


Critics of a general ban on funding from select industries, such as the tobacco industry, share a common premise that there should be no distinction between the sources of private funding for academic research; though in fact, this premise is invalid. However, the alternative distinctions, based on the size or type of financial contribution, are also problematic (Morin et al, 2002; Brennan et al, 2006). Instead, we need a consistent approach to manage conflicts of interest in a manner that preserves trust and is mutually beneficial for partnerships based on genuine common interests. This article therefore proposes a unified approach to evaluate, manage and disclose conflicts of interest by focusing on academic medical research—where recent conflicts of interest have received the most attention.

Thompson (1993) gives a clear and useful definition of a conflict of interest in the context of medicine: “a conflict of interest is a set of conditions in which professional judgment concerning a primary interest (such as a patient's welfare or the validity of research) tends to be unduly influenced by a secondary interest (such as financial gain).” It is realistic because it recognizes that professionals, including researchers, have potentially conflicting interests that need to be taken into account.

On the basis of Thompson's definition, we can categorize interests into two groups. Primary interests define a professional's identity; everyone engaged in a particular activity, such as academic research, should share these. The primary interest of a researcher is the generation and public dissemination of knowledge; intrinsic to this aim are other primary interests, such as scientific rigour, research integrity and openness.

Secondary interests are required, or are useful, for supporting research and professional standing—such as obtaining funding or publication in high-impact journals—but are not crucial for scientific activity to retain its fundamental identity. Secondary interests can also be completely different from work-related ones, “such as preference for family and friends or the desire for prestige and power” (Thompson, 1993).

Public and private partners, and collaborators in research have their own primary and secondary interests. A company, for example, has a legitimate primary interest in making money. The pharmaceutical and biotechnology industries have an additional primary interest based on their specific identity and market: to improve health by developing new therapies and diagnostics. Companies, of course, have secondary interests too, such as maintaining a degree of social trust or consumer acceptance.

Secondary interests are wholly legitimate. It is only when a secondary interest gains priority over a primary interest, or when either partner fails to minimize the risk of sacrificing primary interests, that the risk of criminal or morally unacceptable behaviour arises. A conflict of interest per se, therefore, is not intrinsically wrong; instead, conflicts of interest represent situations in which the primary interests of partners do not fully overlap. Under this definition, there can be no ‘appearance of a conflict of interest’: either a conflict exists or it does not. Evaluating the possibility of a conflict of interest on these terms therefore does not mean judging someone's character; a lack of moral fibre is not required for the conflict to represent a risk of morally questionable behaviour (Dana & Loewenstein, 2003). Moreover, conflicts of interest do not always have to be based on financial considerations, nor do they require a partner: scientific fraud is a prime example of putting a secondary interest (getting published) before a primary interest (conducting and reporting research honestly).

To avoid sacrificing primary interests in partnerships between academics and industry partners, or between scientists and private funders, it is necessary to establish clear rules that enable either partner to preserve their primary interests. Moreover, by accepting only those conflicts of interest where this is reasonably possible, scientists can avoid a situation in which their work and principles are compromised. Furthermore, these assessments should not be left entirely to individuals, given that conflicts of interest can cloud one's judgement (Wazana, 2000; Steinman et al, 2001).

How can we apply these principles as a practical regulatory framework that will help academics to maintain their priorities and thus maintain public trust in academic freedom (Steinbrook, 2005)? Detailed disclosure of the involvement of all parties keeps conflicts of interest visible, but it fails as a sole regulatory mechanism for two reasons. First, disclosure can inaccurately make a problematic conflict of interest seem acceptable. Second, the mere knowledge that a conflict exists is not sufficient to assess the likelihood of bias. Disclosure thus fails both in making conflicts of interest acceptable and in allowing readers to assess their acceptability. Quantitative caps on gifts, consultancy fees or equity are also insufficient because independent judgement is not a function of the amount of money received (Dana & Loewenstein, 2003).

We propose that a conflict of interest is acceptable if the joint activity is motivated by at least one shared primary interest, if regulation is possible to protect primary interests and if there is an exit strategy in case the partners' aims diverge too far. Academics ought to give priority to their primary interests—generating knowledge, integrity, transparency and openness—and make it clear to all their partners that they will stick to these. Organizational structures should support and enable them to assess their priorities as if the conflict of interest did not exist.

The four-step approach detailed in Table 1 provides a unified strategy to evaluate conflicts of interest in academic research. It includes an assessment of shared interests, an assessment of divergence and regulatory planning, and an assessment of applicability and disclosure.

Table 1. Applying this framework: a few examples from academic medicine

| Examples | Shared primary interests? | Divergence and required regulations? | Applicability? | Disclosure? |
|---|---|---|---|---|
| Research on passive smoking with tobacco industry funding | No. Although this industry might assert an aim of finding out the truth, they have no interest that this truth be known | → Fails at step one | | |
| Phase III clinical trial run by an academic hospital with pharmaceutical funding | Yes. Knowing efficacy and risks of a new drug | Yes. Regulations often identifiable | Usually, yes. Will depend on the context. Independent review by IRBs | Yes. At publication as part of the methods |
| Pharmaceutical representatives to help physicians keep up with new information | No. If primary interest of the industry is to improve financial margins | → Fails at step one | | |
| | Yes. If primary interest of the industry is to improve patient access to beneficial drugs | Yes. Regulations often identifiable | No. Unclear threshold involved, independent review not applicable | → Fails at step three |
| Pharmaceutical industry funding of continuing education | No. If primary interest of the industry is to improve financial margins | → Fails at step one | | |
| | Yes. If primary interest of the industry is keeping physicians knowledgeable of recent innovation | Yes. Regulations often identifiable | Usually, yes. Will depend on the context | Yes. During educational intervention, as part of the methods |
| Clinical investigator whose spouse holds shares in the company | Yes. Strictly speaking, there is no partnership involved in the decision that could be affected | Will depend crucially on the sort of decision that is contemplated | | |

IRBs, institutional review boards.

Generally, it is justified to accept a conflict of interest if the partnership will be beneficial for at least one of the primary interests shared by both parties—which should, of course, be investigated on a case-by-case basis (Johns et al, 2003). Simple coexistence of either primary or secondary interests of both partners, such as the participation of academics in advertising, for example, is not sufficient (MacDonald & Chrisp, 2005). If both partners do not share at least one primary interest, one of them will lack the incentive to protect its integrity.

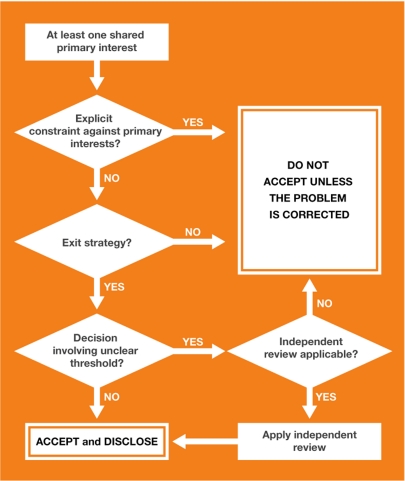

The purpose of the second step, the assessment of divergence and regulatory planning, is to create rules that protect academics and help them to assess their priorities as if the conflict of interest did not exist. It therefore requires freedom from constraints that could otherwise lead researchers to sacrifice their primary interests, an exit strategy and, under some circumstances, independent review. It involves identifying divergences, that is, interests that are legitimate for one partner but not for the other, because these could jeopardize freedom from constraints. For example, academics have a primary interest in publishing the results of their research, and a ‘gag rule’ (a contract that forbids them to publish results without approval from an industrial partner) is in clear contradiction to this. Freedom from constraints would require that such a contract be refused (Fig 1). Similarly, interpreting results in a biased way that is favourable to one partner (Bekelman et al, 2003; Leavell et al, 2006) in order to publish more quickly might not require illegitimate aims per se, but it is an inappropriate prioritization of secondary interests over primary interests. The second step therefore needs to assess the differing priorities that both partners assign to their interests.

Figure 1. Regulatory planning.

Protecting the ability of researchers to judge priorities in the absence of explicit constraints inevitably requires that they should not become exclusively dependent on one partner. Such dependence would give that partner the leeway to disregard or adapt rules on which they had agreed, or even lead to self-censorship by researchers. Regulatory mechanisms should therefore include an exit strategy (Cech & Leonard, 2001) and academic institutions should set aside a small proportion of research grants to pay into a common pool. Researchers who need to sever a financial partnership to preserve their primary aims could then draw on these funds to maintain their research until they are able to secure funding from another source. Multi-sponsoring, that is, pooling resources from several different industrial partners, would also allow academics to preserve their primary interests.

Finally, the very decisions that could be biased by conflicts of interest often involve unclear thresholds. For example, how much evidence does a research community need to carry it beyond reasonable doubt? How much benefit must a drug show in clinical trials to be approved? How large must a potential danger be before a product is banned? There is inevitably a degree of personal judgement in these answers. Of course, there are academics who change their standards and points of view owing to conflicts of interest to such a degree that they stand out, but there is also the risk of systematic bias within a more reasonable range. In situations of unclear thresholds and systematic bias such as these, review by an external, neutral third party is a common approach to counteract potential biases. The regulation of conflicts of interest should therefore include the identification of decision points that would require external review.

The third step—an assessment of applicability—does not focus on the conflict itself, but investigates the specific local context to ascertain whether regulation of a particular conflict of interest is feasible—and therefore the partnership acceptable—at that time and place. The result of this step will differ not only with the type of partnership, but also in time and between institutions.

All steps of this framework should be disclosed; making all the details public will increase the likelihood that bias can be independently identified and that the decision will be open to critique, which is a central component of the scientific method. Dealing with conflicts of interest should be no different to dealing with any other source of potential bias in science. Researchers should describe the methods used to manage conflicts of interest, including the nature of the partnership, the main shared interests and divergences, and the steps taken to protect independent judgement. This should become an integral part of publications, akin to other subparts in the methods section of a paper. It would also make disclosure statements more intelligible and the likelihood of bias easier to assess. Furthermore, it would create a potential for shared experience and refinement through peer review.
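The sequence of the four steps can be illustrated with a minimal sketch. It is not part of the framework itself: the `Partnership` record, its field names and the `evaluate` function are hypothetical, and reducing each assessment to a yes/no flag is a simplifying assumption, since in practice each step calls for case-by-case judgement. The sketch only shows that the steps are applied in order, that a partnership is rejected at the first step it fails, and that disclosure follows acceptance rather than replacing the earlier steps.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Partnership:
    # Hypothetical record; field names are illustrative, not taken from the article.
    name: str
    shared_primary_interests: List[str] = field(default_factory=list)  # step 1
    protective_rules_possible: bool = False  # step 2: rules plus an exit strategy
    feasible_in_local_context: bool = False  # step 3: applicability here and now


def evaluate(p: Partnership) -> str:
    """Return the first step at which the partnership fails, or the disclosure duty."""
    if not p.shared_primary_interests:
        return "fails at step one"
    if not p.protective_rules_possible:
        return "fails at step two"
    if not p.feasible_in_local_context:
        return "fails at step three"
    # Step 4 is an obligation that follows acceptance, not a further filter.
    return "acceptable, disclose all steps"


# Three rows of Table 1, encoded under the simplifying assumptions above.
tobacco = Partnership("Passive-smoking research with tobacco funding")
trial = Partnership(
    "Phase III trial with pharmaceutical funding",
    ["knowing efficacy and risks of a new drug"],
    True,
    True,
)
rep_visits = Partnership(
    "Drug representative visits",
    ["patient access to beneficial drugs"],
    True,
    False,
)

for p in (tobacco, trial, rep_visits):
    print(f"{p.name}: {evaluate(p)}")
```

The three encoded cases reproduce the outcomes given for the corresponding rows of Table 1. Whether a real partnership satisfies each flag remains a matter for the assessments described in the text.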

A prospective partnership could fail either at step one—assessment of shared interests—or step three—assessment of applicability (Table 1). Research into the risks of passive smoking by academic researchers who received funding from the tobacco industry is a paradigmatic example of a lack of convergence of primary interests at step one. Research into smoking cessation treatments funded by the same industry could be another (Shamasunder & Bero, 2002; Rose, 2005). Deliberate deceit about prioritized interests can disqualify a partnership at this stage; a minimal threshold of trustworthiness and accuracy in outlining priorities should be required as a condition of financial partnerships. However, reliance on the truthfulness of a partner is not entirely necessary. Even if a partner's actual aims can be exceedingly difficult to identify, as indeed the tobacco industry's aims were for decades as a result of its secretive policies, their primary interests are sometimes apparent in other ways.

Specific problems exist in situations in which a conflict of interest is not part of a formalized agreement, such as consulting contracts or investigators who hold shares in a company that stands to benefit from the results of their research. These issues raised considerable controversy in 2005 when the US National Institutes of Health (Bethesda, MD, USA) banned its researchers from taking part in such activities (Kaiser, 2004, 2005). The danger is that the people involved in this type of conflict of interest could undermine their primary interest in favour of pursuing research or bending results to suit the company in which they hold shares or for which they consult. Thus, the crucial element is to identify where the primary interest of research and the secondary interest of financial benefits diverge, and to introduce regulations to prevent these conflicts of interest from influencing decisions. It also raises the questions of whether unclear thresholds require independent review and whether this would be feasible. Despite attempts to introduce the regulation of such conflicts of interest by distinguishing their source (Brennan et al, 2006), it is actually more important to distinguish the type of decision that could be affected by the potential bias. For example, this type of conflict of interest could be acceptable in research activities that involve explicit planning and severe scrutiny by external review, as is the case with much clinical research.

By contrast, visits by drug representatives to academic physicians fail at the first step of the proposed framework, if the primary interest of the industry is to increase profit margins. Alternatively, if the primary interest is to improve patient access to beneficial drugs, it fails at step three. Drug indications often have unclear thresholds, and prescription bias has been shown in such situations (Orlowski & Wateska, 1992; Spingarn et al, 1996). This is a situation where protecting independent judgement would require a neutral review of individual prescriptions, which would not be feasible; therefore, physicians should avoid such situations altogether.

At a time when even the formulation of a research question can be subject to financial interests (Wolinsky, 2005), other conflicts of interest can emerge when formulating research strategies or focusing on specific research topics. In theory, bias could be addressed by an independent review, even if the reviewers have conflicts of interest themselves, as long as these are sufficiently diverse. However, this does not address the risk of a general prioritization of research that is beneficial to the industry as a whole (Drucker, 2004). This would require independent review by peers without any industrial conflicts of interest (Collier, 2006). Reserving several faculty positions for people without industrial conflicts of interest would represent one way of addressing this problem. The number of these people might even increase once academics became aware that receiving honoraria from industry makes them ineligible for certain activities that are not amenable to explicit regulations.

In summary, it is possible to distinguish and choose between conflicts of interest based on the primary interests of the partners, the types of decision that the conflict is likely to affect, and by introducing local regulations to protect the freedom and judgement of academic researchers. These include freedom from explicit constraints that could lead academics to discount their primary interests, the presence of an exit strategy and an independent review of all decisions made with unclear thresholds. To be effective, the entire process should be public. Our proposed approach (Fig 1) provides researchers and regulators with an equitable mechanism to evaluate the acceptability of specific conflicts of interest and to make disclosure more effective.

Rejecting a conflict of interest does not constitute an indictment of the researchers who were involved. Taking ethical risks with conflicts of interest is not the same as yielding to them. Using a systematic approach would contribute to keeping this risk within more acceptable limits than are currently tolerated. It would also help to distinguish between the conflicts of interest that are acceptable and those that are not. Thus, it might simultaneously protect academics against the increased ethical risk of some conflicts of interest and clear the air of wholesale distrust of academic partnerships with industry.

Acknowledgments

We thank Bernard Baertschi for criticism of the manuscript. This work was funded by the Institute for Biomedical Ethics at the Geneva University Medical School and by the Swiss National Science Foundation. The views expressed are the authors' own and not necessarily those of the Geneva University Medical School or of the Swiss National Science Foundation.

Footnotes

Both authors are employed by a medical school—one of the many institutions that could stand to lose some funding and, in our view, gain public trust if our views are taken seriously. A.M. was Chairman of the inquiry committee set up by the University of Geneva in the Rylander case mentioned in this article and was the main author of the inquiry report.

References

- Anon (2006) Editorial note. Eur J Public Health 16: 233

- Bekelman JE, Li Y, Gross CP (2003) Scope and impact of financial conflicts of interest in biomedical research: a systematic review. JAMA 289: 454–465

- Brennan TA et al. (2006) Health industry practices that create conflicts of interest: a policy proposal for academic medical centers. JAMA 295: 429–433

- Cech TR, Leonard JS (2001) Science and business. Conflicts of interest: moving beyond disclosure. Science 291: 989

- Check E (2004) Ethics accusations spark rapid reaction from NIH chief. Nature 427: 187

- Cohen JE (2001) Universities and tobacco money. BMJ 323: 1–2

- Collier J (2006) The price of independence. BMJ 332: 1447–1449

- Dana J, Loewenstein G (2003) A social science perspective on gifts to physicians from industry. JAMA 290: 252–255

- Diethelm PA, Rielle JC, McKee M (2005) The whole truth and nothing but the truth? The research that Philip Morris did not want you to see. Lancet 366: 86–92

- Drucker J (2004) Beyond conflict of interest: maybe wrong questions are being asked. BMJ 329: 686

- Glantz SA (2005) Tobacco money at the University of California. Am J Respir Crit Care Med 171: 1067–1069

- Johns MM, Barnes M, Florencio PS (2003) Restoring balance to industry–academia relationships in an era of institutional financial conflicts of interest: promoting research while maintaining trust. JAMA 289: 741–746

- Jones RN (2005) Tobacco money and academic freedom. Am J Respir Crit Care Med 172: 933–934

- Kaiser J (2004) NIH ethics. Staff scientists protest plan to ban outside fees. Science 306: 1276

- Kaiser J (2005) Conflict of interest. NIH chief clamps down on consulting and stock ownership. Science 307: 824–825

- Korn D, Ehringhaus SH (2005) NIH conflicts rules are not right for universities. Nature 434: 821

- LaDou J, Teitelbaum DT, Egilman DS, Frank AL, Kramer SN, Huff J (2007) American College of Occupational and Environmental Medicine (ACOEM): a professional association in service to industry. Int J Occup Environ Health 13: 404–426

- Leavell NR, Muggli ME, Hurt RD, Repace J (2006) Blowing smoke: British American Tobacco's air filtration scheme. BMJ 332: 227–229

- MacDonald S, Chrisp T (2005) Acknowledging the purpose of partnership. J Business Ethics 59: 307–317

- Mauron A, Morabia A, Perneger T, Rochat T (2004) Inquiry Commission Report on Professor Ragnar Rylander. Geneva, Switzerland: University of Geneva

- Morin K et al. (2002) Managing conflicts of interest in the conduct of clinical trials. JAMA 287: 78–84

- Orlowski JP, Wateska L (1992) The effects of pharmaceutical firm enticements on physician prescribing patterns. There's no such thing as a free lunch. Chest 102: 270–273

- Rose JE (2005) Ethics of tobacco company funding. Science 308: 632

- Shamasunder B, Bero L (2002) Financial ties and conflicts of interest between pharmaceutical and tobacco companies. JAMA 288: 738–744

- Spingarn RW, Berlin JA, Strom LB (1996) When pharmaceutical manufacturers' employees present grand rounds, what do residents remember? Acad Med 71: 86–88

- Steinbrook R (2005) Standards of ethics at the National Institutes of Health. N Engl J Med 352: 1290–1292

- Steinman MA, Shlipak MG, McPhee SJ (2001) Of principles and pens: attitudes and practices of medicine housestaff toward pharmaceutical industry promotions. Am J Med 110: 551–557

- Stossel TP (2005) Regulating academic–industrial research relationships: solving problems or stifling progress? N Engl J Med 353: 1060–1065

- Thompson DF (1993) Understanding financial conflicts of interest. N Engl J Med 329: 573–576

- University of Geneva (2001) L'Université de Genève prend ses distances avec l'industrie du tabac. Geneva, Switzerland: University of Geneva

- Wazana A (2000) Physicians and the pharmaceutical industry: is a gift ever just a gift? JAMA 283: 373–380

- Wolinsky H (2005) Disease mongering and drug marketing. Does the pharmaceutical industry manufacture diseases as well as drugs? EMBO Rep 6: 612–614