Abstract

Animals prefer an immediate over a delayed reward, just as they prefer a large over a small reward. Exposure to psychostimulants causes long-lasting changes in structures critical for this behavior and might disrupt normal time-discounting performance. To test this hypothesis, we exposed rats to cocaine daily for 2 weeks (30 mg/kg, i.p.). Approximately 6 weeks later, we tested them on a variant of a time-discounting task, in which the rats responded to one of two locations to obtain reward while we independently manipulated the delay to reward and reward magnitude. Performance did not differ between cocaine-treated and saline-treated (control) rats when delay lengths and reward magnitudes were equal at the two locations. However, cocaine-treated rats were significantly more likely to shift their responding when we increased the delay or reward size asymmetrically. Furthermore, they were slower to respond and made more errors when forced to the side associated with the lower value. We conclude that previous exposure to cocaine makes choice behavior hypersensitive to differences in the time to and size of available rewards, consistent with a general effect of cocaine exposure on reward valuation mechanisms.

Keywords: time-discounting, behavior, cocaine, delayed gratification, rat, reward

Introduction

Animals prefer an immediate over a delayed reward, just as they prefer a large over a small reward (Herrnstein, 1961; Evenden and Ryan, 1996; Ho et al., 1999; Cardinal et al., 2001; Mobini et al., 2002; Winstanley et al., 2004; Kalenscher et al., 2005). This time-discounting function is evident in studies that ask subjects to choose between a small reward delivered immediately and a large reward delivered after some delay. Because the total length of each trial is held constant, the optimal strategy is to always choose the large reward. However, in all species tested thus far, normal subjects fail to follow this strategy, instead biasing their choices toward the small, immediate reward as the delay to the large reward becomes longer. This pattern of behavior has been termed “impulsive choice.”

In some situations, high levels of impulsivity (choosing immediate over delayed outcomes) can be maladaptive, resulting in suboptimal choices. One example is the behavior of drug addicts, which is often characterized by impulsive, apparently ill-considered choices that favor short-term gains. Such behavior has been termed “myopia for the future” and has been proposed to reflect persistent drug-induced changes in corticolimbic areas (Jentsch and Taylor, 1999; Bechara et al., 2002). These circuits include many of the same areas shown to be critical to making choices about delayed rewards (Kheramin et al., 2002; Mobini et al., 2002; Cardinal et al., 2004; Winstanley et al., 2004; Rudebeck et al., 2006). Thus, decision-making deficits in addicts might reflect difficulties in discounting functions mediated by these circuits. Consistent with such speculation, it has been reported that addicts show impairments in discounting tasks (Monterosso et al., 2001; Coffey et al., 2003).

However, in humans, deficits may reflect a pre-existing condition. Furthermore, because delayed-discounting tasks typically confound time to and size of reward, it is not clear whether deficits reflect selective impairments in discounting functions, general changes in reward valuation (e.g., size), or both. We have recently shown that information regarding time to and size of rewards is encoded by dissociable neural populations in the orbitofrontal cortex (Roesch et al., 2006). Furthermore, the human literature suggests that impulsivity can be broken down into these two factors (Dawe et al., 2004).

To address these issues, we tested the long-term effects of cocaine exposure on performance in a choice task in which rats responded to one of two wells to receive reward. During testing, we independently manipulated the time to reward or the size of reward available in one well. We found that choice behavior of cocaine-treated rats was significantly more sensitive to both manipulations than was the behavior of saline-treated controls.

Materials and Methods

Rats were tested at the University of Maryland School of Medicine in accordance with the School of Medicine and National Institutes of Health guidelines.

Subjects.

Twenty-four male Long–Evans rats (300–350 g), obtained from Charles River Laboratories (Wilmington, MA), served as subjects. Rats were housed individually on a 12 h light/dark cycle with ad libitum access to food and water, except during testing, when their access to water was restricted to ∼30 min a day.

Cocaine sensitization.

Cocaine sensitization was conducted as in previous experiments (Schoenbaum et al., 2004; Schoenbaum and Setlow, 2005; Burke et al., 2006; Stalnaker et al., 2006). Locomotor activity was monitored using a set of eight Plexiglas chambers (25 cm on a side) equipped with activity monitors (Coulbourn Instruments, Allentown, PA). The day before the start of the treatment regimen, the rats were placed into the activity chambers for 1 h and then divided into two groups with similar activity levels. Over the next 14 d, one group (n = 14) received daily intraperitoneal injections of 30 mg/kg cocaine HCl (20 mg/ml; National Institute on Drug Abuse); the other (n = 10) received similar-volume injections of 0.9% saline. After each injection, rats were monitored for 1 h.
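The injection volume implied by the stated dose (30 mg/kg) and stock concentration (20 mg/ml) follows from simple arithmetic. The sketch below is illustrative only: the function name is hypothetical, and the two example body weights are simply the ends of the 300–350 g range given under Subjects, not values reported for individual animals.

```python
def injection_volume_ml(body_weight_g, dose_mg_per_kg=30.0, conc_mg_per_ml=20.0):
    """Volume (ml) that delivers the dose at the given stock concentration."""
    dose_mg = dose_mg_per_kg * body_weight_g / 1000.0  # convert g to kg
    return dose_mg / conc_mg_per_ml


# Example weights are assumptions taken from the stated 300-350 g range.
for weight_g in (300, 350):
    print(f"{weight_g} g rat: {injection_volume_ml(weight_g):.3f} ml")
```

Under these assumptions the dose corresponds to 1.5 ml of solution per kilogram of body weight.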

Time-discounting choice task.

Training in the time-discounting task began 6 weeks after sensitization. Before training, the rats were tested in a place preference task, which was conducted in a different room, using training boxes and training materials different from those used in the discounting task. The discounting task was conducted in custom aluminum chambers ∼18 inches on each side with sloping walls narrowing to an area of 12 × 12 inches at the bottom. A central odor port was located above two fluid wells. The odor port was connected to an air flow dilution olfactometer to allow the rapid delivery of olfactory cues. Odors were obtained from International Flavors and Fragrances (New York, NY).

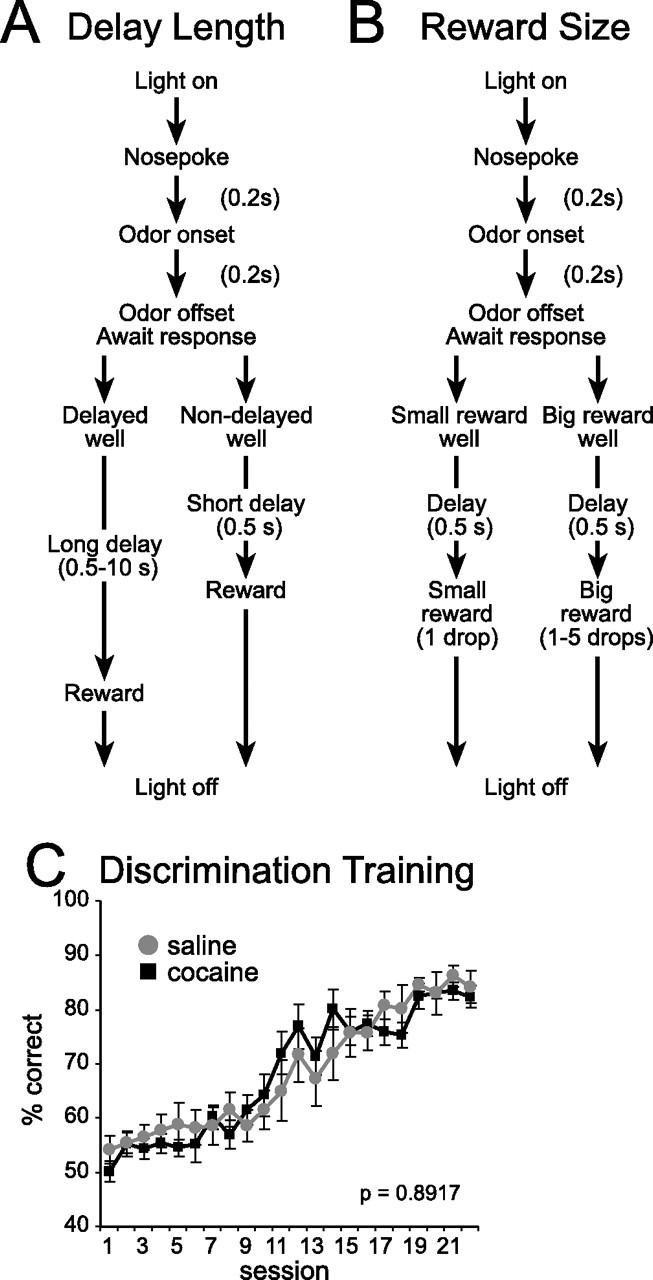

The task is illustrated in Figure 1A,B. Trials were signaled by illumination of the panel lights. When these lights were on, a nosepoke into the odor port resulted in delivery of an odor. One of two different odors was delivered to the port on each trial, in a pseudorandom order. One odor instructed the rat to go to the left to get a reward and a second odor instructed the rat to go to the right to get a reward. Training proceeded until average performance exceeded 75% for 2 d in a row. Once the rats were shaped to perform this basic task, we introduced delays (1–8 s) that preceded reward delivery (6 d) and rewards of different sizes (1 d). These manipulations were always varied in both wells simultaneously, which allowed animals to become accustomed to them before testing without biasing behavior in one direction.

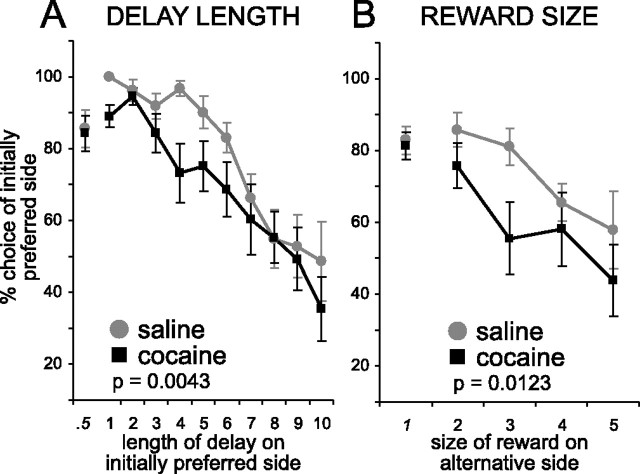

Figure 1.

A, B, Choice task during which we varied delay length (A) and reward size (B). The figure shows the sequence of events in each trial. C, One of two different odors was delivered to the port on each trial. One odor instructed the rat to go to the left to get reward, and a second odor instructed the rat to go to the right to get reward. C plots the percentage of correct scores during learning. Error bars indicate SE.

After this initial training, rats were tested in two probe sessions, in which we systematically increased either the delay to reward or the size of the reward in one of the two wells (see Fig. 1). For these sessions, which occurred on different days, a new odor was presented in pseudorandom sequence with the other two. We will refer to trials with the new odor as “free choice” because responding to either well led to reward. Trials involving odors 1 and 2 will be referred to as “forced choice” because on these trials the rats had to respond according to the previously acquired contingencies or no reward would be delivered. This design allowed us to control which well the animal was responding to by forcing the animal to sample both sides, while at the same time determining the rat's preference.

In the first session, we systematically increased the delay preceding reward delivery while holding reward size constant (see Fig. 1A). Both wells initially produced a reward (1/20 ml of sucrose) after 500 ms. Then the delay was increased in one well over 30-trial blocks until it reached 10 s. All rats began by responding more in one well than the other on free-choice trials in the initial 30-trial block (>60%); the delay was titrated in the preferred well. For example, if the rat chose left more often than right (>60%) during the first 30 trials, then reward in the left well was delayed.
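The delay session can be sketched as two small steps: identify the preferred well from the initial block, then look up the delay for a given trial. This is a minimal illustration, not the authors' control software. The function names are hypothetical, and the delay levels (0.5 s, then 1 to 10 s in 1 s steps) are an assumption inferred from the baseline and range given in the text; the exact step size is not stated.

```python
def preferred_well(free_choices, threshold=0.60):
    """Return the well chosen on more than `threshold` of the free-choice
    trials in the initial block, or None if neither well passes it."""
    n = len(free_choices)
    if n == 0:
        return None
    for well in ("left", "right"):
        if free_choices.count(well) / n > threshold:
            return well
    return None


# ASSUMPTION: 0.5 s baseline followed by 1-10 s in 1 s steps.
DELAY_LEVELS_S = [0.5] + [float(s) for s in range(1, 11)]


def delay_for_trial(trial_index, block_len=30):
    """Delay (s) in the preferred well for a 0-based trial index."""
    block = min(trial_index // block_len, len(DELAY_LEVELS_S) - 1)
    return DELAY_LEVELS_S[block]
```

For instance, a rat that chose left on 7 of 10 free-choice trials in the first block would have the left well delayed, and under the assumed levels the delay would step from 0.5 s to 1 s at trial 30.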

In the second session, we systematically increased reward size while holding delay constant (see Fig. 1B). Both directions initially produced a reward (0.05 ml bolus) after 500 ms. Then the reward size was increased by 1 bolus every 50 trials up to a maximum reward of 5 boli. Again, rats began by responding more in one well than the other in the initial trial block (>60%); reward was increased in the nonpreferred well.
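The reward-size schedule reduces to a capped step function of the trial count. The sketch below uses the bolus volume, step interval, and ceiling given in the text; the function names are hypothetical, and treating the first 50 trials as the 1-bolus baseline block is an assumption.

```python
BOLUS_ML = 0.05  # volume of one bolus, from the text
MAX_BOLI = 5     # largest reward, from the text


def boli_for_trial(trial_index, block_len=50):
    """Number of boli in the nonpreferred well for a 0-based trial index.

    ASSUMPTION: the first block of 50 trials is the 1-bolus baseline.
    """
    return min(1 + trial_index // block_len, MAX_BOLI)


def reward_volume_ml(trial_index):
    """Total reward volume (ml) in the nonpreferred well on this trial."""
    return boli_for_trial(trial_index) * BOLUS_ML
```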

Behavioral analysis.

For each session, we examined choice rate, percent correct, and reaction time. Choice rate was defined as the percentage of choices made to the initially preferred side relative to the total number of choices made on free-choice trials. Percent correct measured the rate at which animals responded correctly on forced-choice trials. Reaction time was defined as the time it took for the rat to exit the odor port after odor sampling on forced-choice trials. Percent correct scores and reaction times were examined for both directions, that is, for responses made to the initially preferred side and for those made to the alternative side. For each measure, we performed a two-factor ANOVA with group (cocaine vs saline) and delay (short vs long) or size (big vs small) as factors.
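The three behavioral measures can be computed from a per-trial record along the following lines. This is a sketch under stated assumptions: the `Trial` record and its field names are hypothetical, and summarizing reaction time by the mean is an assumption, since the text does not say which summary statistic was used. Each function expects at least one matching trial.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class Trial:
    kind: str                          # "free" or "forced"
    response: str                      # well chosen: "left" or "right"
    instructed: Optional[str] = None   # rewarded well on forced-choice trials
    reaction_time_s: float = 0.0       # time to exit the odor port after sampling


def choice_rate(trials, preferred):
    """Percent of free-choice responses made to the initially preferred well."""
    free = [t for t in trials if t.kind == "free"]
    return 100.0 * sum(t.response == preferred for t in free) / len(free)


def percent_correct(trials, side):
    """Percent of forced-choice trials instructing `side` answered correctly."""
    forced = [t for t in trials if t.kind == "forced" and t.instructed == side]
    return 100.0 * sum(t.response == side for t in forced) / len(forced)


def mean_reaction_time(trials, side):
    """Mean reaction time (s) on forced-choice trials instructing `side`.

    ASSUMPTION: the mean is used as the summary statistic.
    """
    forced = [t for t in trials if t.kind == "forced" and t.instructed == side]
    return mean(t.reaction_time_s for t in forced)
```

Computing percent correct and reaction time separately for each instructed side mirrors the analysis of the initially preferred and alternative directions described above.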

Results

Cocaine sensitization

Cocaine-treated rats exhibited increased locomotor activity after injection of cocaine (day 14, mean, 247; SD, 128.7) as compared with controls (day 14, mean, 40; SD, 36.2). A two-factor ANOVA revealed significant effects of treatment (F(1,22) = 149.4; p < 0.00001) and session (F(14,308) = 3.83; p < 0.00001), and a significant interaction between them (F(14,308) = 6.27; p < 0.00001). Post hoc testing showed a significant difference between groups on every day (p < 0.0001) except the pretreatment session (cocaine, mean, 121; SD, 37.6; saline, mean, 122; SD, 43.8; p = 0.78).

Training on odor discrimination

There was no significant effect of cocaine treatment on acquisition of the left/right discrimination or on performance in the sessions in which we manipulated delay or reward size simultaneously in both wells (Fig. 1C). This was confirmed by a two-factor ANOVA, which revealed a significant main effect of session (F(21,471) = 471; p = 0.0001) but no significant main effect of treatment nor any interaction involving treatment (F values < 0.78; p values > 0.7472).

Cocaine-treated animals are hypersensitive to delay length

To assess the effects of delay on choice behavior, we systematically increased the length of the delay in the preferred well while at the same time introducing a novel odor, which signaled that the rats were free to respond at either well for reward. Choice behavior on these free-choice trials is illustrated in Figure 2A. Cocaine-treated rats were more sensitive to the delay length, shifting responding away from the preferred side more quickly than saline-treated controls as the delay increased (Fig. 2A). Importantly, there was no difference between groups when delays were equal (0.5 s) (Fig. 2A), and both groups reduced responding to the delayed well as the delay became longer (1–10 s) (Fig. 2A). Thus, cocaine appeared to affect sensitivity to short delays rather than behavior at, or perception of, very prolonged delays. Consistent with this interpretation, a two-factor ANOVA revealed significant main effects of treatment (F(1,215) = 8.32; p = 0.0043) and delay (F(10,215) = 12.37; p < 0.0001).

Figure 2.

A, B, Choice rate expressed as the percentage of all choices made to the initially preferred side during manipulations of delay length (A) and reward size (B). The p value reflects the significance level for a main effect of group (cocaine vs saline) in a two-factor ANOVA taking group and delay (or reward) as factors. Error bars indicate SE.

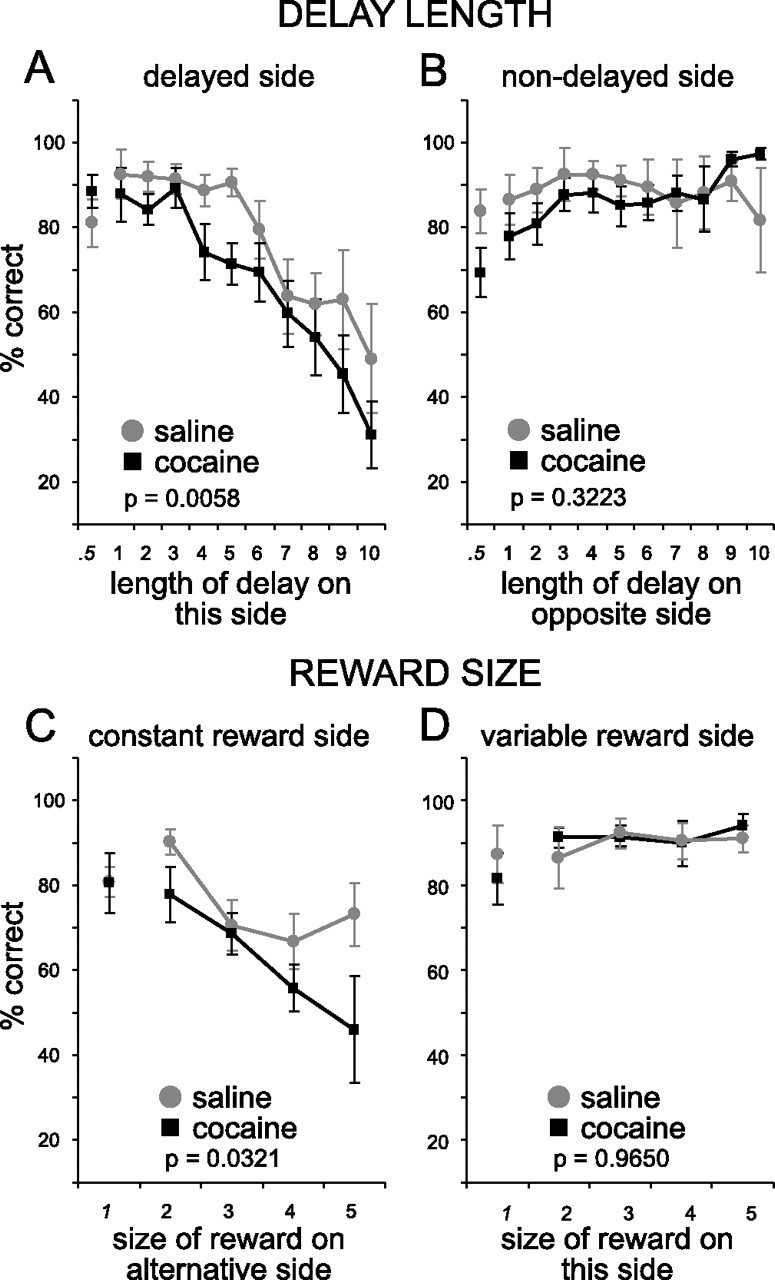

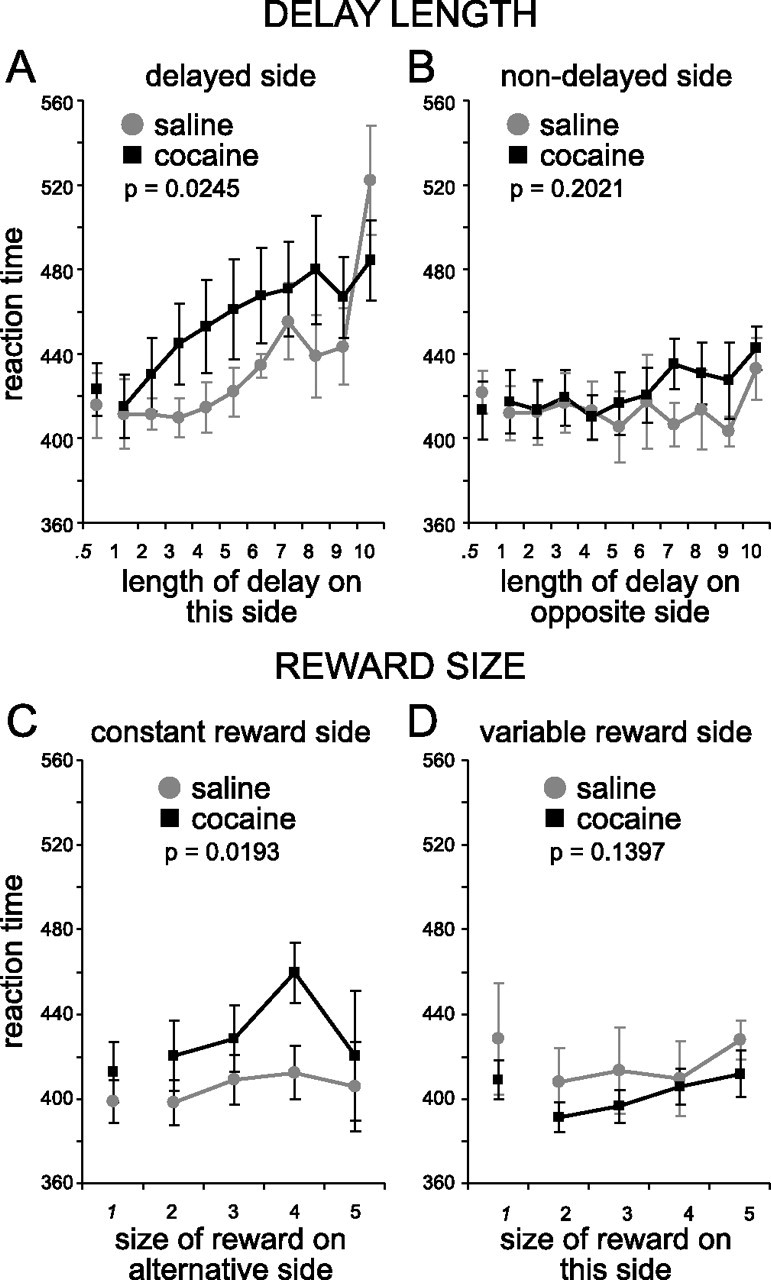

Cocaine-treated rats also showed increased sensitivity to delays on forced-choice trials, exhibiting less accurate and longer latency responses to the delayed well (Figs. 3, 4). Two-factor ANOVAs of percent correct and reaction time on the delayed side found significant main effects of treatment (percent correct, F(1,204) = 7.79, p < 0.0058; reaction time, F(1,214) = 5.13, p = 0.0245) and delay (percent correct, F(10,204) = 9.19, p < 0.0001; reaction time, F(10,214) = 2.75, p = 0.0033). These effects were only observed on the delayed side; ANOVAs of percent correct and reaction time on the nondelayed side found no significant effects (F values < 1.64; p values > 0.2021). Cocaine-treated rats did not differ from controls in any of these measures in the initial trial block, before the delay was increased (t test; not significant).

Figure 3.

A–D, Percentage correct expressed as the rate at which animals correctly responded on forced-choice trials when either delay length (A, B) or reward size (C, D) varied. A, Percentage correct as the delay became longer. B, Percentage correct as the delay on the other side got longer. C, Percentage correct as the reward size on the other side got bigger. D, Percentage correct as the reward size got bigger on that side. The p value reflects the significance level for a main effect of group (cocaine vs saline) in a two-factor ANOVA taking group and delay (or reward) as factors. Error bars indicate SE.

Figure 4.

Reaction times are defined by the time it took for the rat to exit the odor port after odor sampling. A, Reaction time as the delay became longer. B, Reaction time as the delay on the other side got longer. C, Reaction time as the reward size on the other side got bigger. D, Reaction time as the reward size got bigger. The p value reflects the significance level for a main effect of group (cocaine vs saline) in a two-factor ANOVA taking group and delay (or reward) as factors. Error bars indicate SE.

Cocaine-treated animals are hypersensitive to reward size

To determine whether the altered sensitivity to discounted reward reflected a more general change in sensitivity to reward value, we conducted a second probe test in which we made the preferred well relatively less valuable by systematically increasing the reward magnitude in the nonpreferred well. Effects are illustrated in Figure 2B. Although both groups shifted choices to the larger rewards, cocaine-treated rats did so more rapidly, suggesting that cocaine-treated animals were more sensitive to changes in reward size (Fig. 2B). Consistent with this interpretation, a two-factor ANOVA revealed significant main effects of treatment (F(1,84) = 6.54; p = 0.0123) and reward size (F(4,84) = 5.73; p = 0.0004).

Cocaine-treated rats also exhibited less accurate and longer latency responses to the less-valued, small reward well (Figs. 3, 4). Two-factor ANOVAs of performance at this well also revealed significant main effects of treatment (F(1,74) = 4.77; p = 0.0321) and reward (F(4,74) = 4.3; p = 0.0035). Analysis of reaction time revealed a main effect of treatment (F(1,79) = 5.4; p < 0.0193) but not of reward (F(4,79) = 1.36; p = 0.2552). ANOVAs on percent correct and reaction time on the opposite side showed no effects (F values < 2.23; p values > 0.1397). Cocaine-treated rats showed no differences in any of these measures in the initial trial block (t test; not significant).

Discussion

Addicts are generally described as impulsive, prone to poor decisions that seem to maximize immediate reward over long-term benefit. This is reflected in reports that addicts are impulsive in delay-discounting tasks (Monterosso et al., 2001; Coffey et al., 2003). This deficit may reflect persistent drug-induced changes in areas that are critical for discounting delayed rewards. Consistent with this proposal, we found that exposure to cocaine increased the sensitivity of rats to delay and reward magnitude.

These results were obtained by pitting changes in delay and reward magnitude against the initial bias of each animal. Importantly, this bias was present during performance of both delay and reward blocks, and was unaffected by cocaine exposure. Thus, cocaine treatment altered sensitivity to delay length and reward size without affecting the strength of the initial well bias.

Notably, the difference between treatment groups became less clear as trial blocks progressed. This may reflect the contribution of neural circuits not affected by cocaine that compensate for disrupted delay/reward processing, albeit at a slower rate. Another possibility is that cocaine-treated rats were initially more flexible. This seems unlikely because studies have demonstrated that animals chronically exposed to psychostimulants are actually less flexible (Jentsch et al., 2002; Schoenbaum et al., 2004) and more prone to forming habits (Nelson and Killcross, 2006). Still another possibility is that cocaine-treated animals extinguished response-reward contingencies faster than controls. If true, the smaller number of delayed reward choices would result from weaker representations of response-reward contingencies; however, cocaine-treated animals do not appear to extinguish any faster than controls in other settings (Schoenbaum and Setlow, 2005).

Putting these alternative interpretations aside, these findings address two important shortcomings in the literature. First, in humans, it is unclear whether deficits in delay discounting tasks are caused by drug exposure or whether they reflect a pre-existing condition. Our results show that the deficit can be caused by exposure to addictive drugs (Simon et al., 2006). Second, because delayed-discounting tasks typically confound delay and reward magnitude, it is not clear whether deficits reflect selective impairments in discounting or more general changes in reward valuation mechanisms or both. Although we cannot rule out independent effects of cocaine on discounting and perception of reward magnitude, the most parsimonious explanation of our results is that cocaine exposure has a general effect on reward valuation mechanisms.

Interestingly, hypersensitivity to delay and size would actually counteract each other in normal discounting tasks. According to our data, sensitized rats would be more motivated to respond for the large reward and also show greater sensitivity to the delay, leading to more or less impulsive behavior depending on what combination of delays and reward sizes were tested (Kheramin et al., 2002). Consistent with this, discounting behavior has been somewhat variable (Logue et al., 1992; Charrier and Thiebot, 1996; Evenden and Ryan, 1996; Richards et al., 1999; Monterosso et al., 2001; Bechara et al., 2002; de Wit et al., 2002; Coffey et al., 2003; Paine et al., 2003; Cardinal et al., 2004; Simon et al., 2006).

Fundamentally, however, these data are consistent with the prevailing notion that addicts are impulsive. Notably, a preference for immediate over delayed rewards can also be caused by acute administration of dopaminergic agents (Logue et al., 1992; Charrier and Thiebot, 1996; Evenden and Ryan, 1996; Cardinal et al., 2000; Wade et al., 2000). Thus, changes in delay sensitivity in rats exposed to cocaine may be the result of increased sensitivity to dopamine. According to recent proposals, phasic dopamine reflects error signaling or unexpected reward (Hollerman and Schultz, 1998; Waelti et al., 2001). Increased sensitivity of target regions would amplify this signal, thereby causing cocaine-treated rats to bias their choices toward more valuable rewards (e.g., immediate or larger). Increased sensitivity could also affect responses to tonic levels of dopamine that have been proposed to signal the average rate of reward (Niv et al., 2005, 2006). As a result, waiting for the delayed reward would be more costly, leading cocaine-treated rats to choose the immediate reward at shorter delays. Such an effect would be consistent with evidence that addicts remain sensitive to the costs of rewards (Carroll, 1993; Grossman and Chaloupka, 1998; Higgins et al., 2004; Redish, 2004; Negus, 2005).

Finally, the finding that cocaine-treated rats are hypersensitive to the relative value of different available outcomes is at odds with recent reports that animals exposed to psychostimulants are insensitive to the value of expected outcomes. For example, animals exposed to psychostimulants are unable to modify conditioned responding when the predicted food is devalued (Schoenbaum and Setlow, 2005; Nelson and Killcross, 2006; Schoenbaum et al., 2006a) or after reversal (Jentsch et al., 2002; Schoenbaum et al., 2004). Similarly, addicts are slower to change their initial choices in gambling tasks to avoid large penalties (Bechara et al., 2002). These results suggest that exposure to psychostimulants makes animals less sensitive to the value of expected outcomes.

One way to reconcile these results would be if drug-induced changes in these circuits altered the dynamic range in which outcomes could be effectively represented, thereby shifting neural resources to representation of appetitive outcomes at the expense of aversive outcomes. Such a shift would result in hypersensitivity to relative differences in the value of a reward, while at the same time diminishing the ability of animals to respond properly to penalties. This explanation would be consistent with the studies cited above and with recording studies, in which we found that neurons in corticolimbic areas in cocaine-treated rats are less responsive to cues that predict a quinine penalty but more responsive to cues that predict a sucrose reward (Calu et al., 2005; Schoenbaum et al., 2006b; Stalnaker et al., 2006).

Footnotes

This work was supported by National Institute on Drug Abuse Grant R01-DA015718 (G.S.) and National Institute of Neurological Disorders and Stroke Grant T32-NS07375 (M.R.).

References

- Bechara A, Dolan S, Hindes A. Decision-making and addiction (part II): myopia for the future or hypersensitivity to reward? Neuropsychologia. 2002;40:1690–1705. doi: 10.1016/s0028-3932(02)00016-7.

- Burke KA, Franz TM, Gugsa N, Schoenbaum G. Prior cocaine exposure disrupts extinction of fear conditioning. Learn Mem. 2006;13:416–421. doi: 10.1101/lm.216206.

- Calu D, Stalnaker T, Roesch M, Franz T, Schoenbaum G. Basolateral amygdala generates abnormally persistent representations of predicted outcomes and cue value after cocaine exposure. Soc Neurosci Abstr. 2005;31:112.1.

- Cardinal RN, Robbins TW, Everitt BJ. The effects of d-amphetamine, chlordiazepoxide, alpha-flupenthixol and behavioural manipulations on choice of signalled and unsignalled delayed reinforcement in rats. Psychopharmacology (Berl) 2000;152:362–375. doi: 10.1007/s002130000536.

- Cardinal RN, Pennicott DR, Sugathapala CL, Robbins TW, Everitt BJ. Impulsive choice induced in rats by lesions of the nucleus accumbens core. Science. 2001;292:2499–2501. doi: 10.1126/science.1060818.

- Cardinal RN, Winstanley CA, Robbins TW, Everitt BJ. Limbic corticostriatal systems and delayed reinforcement. Ann NY Acad Sci. 2004;1021:33–50. doi: 10.1196/annals.1308.004.

- Carroll ME. The economic context of drug and non-drug reinforcers affects acquisition and maintenance of drug-reinforced behavior and withdrawal effects. Drug Alcohol Depend. 1993;33:201–210. doi: 10.1016/0376-8716(93)90061-t.

- Charrier D, Thiebot MH. Effects of psychotropic drugs on rat responding in an operant paradigm involving choice between delayed reinforcers. Pharmacol Biochem Behav. 1996;54:149–157. doi: 10.1016/0091-3057(95)02114-0.

- Coffey SF, Gudleski GD, Saladin ME, Brady KT. Impulsivity and rapid discounting of delayed hypothetical rewards in cocaine-dependent individuals. Exp Clin Psychopharmacol. 2003;11:18–25. doi: 10.1037//1064-1297.11.1.18.

- Dawe S, Gullo MJ, Loxton NJ. Reward drive and rash impulsiveness as dimensions of impulsivity: implications for substance misuse. Addict Behav. 2004;29:1389–1405. doi: 10.1016/j.addbeh.2004.06.004.

- de Wit H, Enggasser JL, Richards JB. Acute administration of d-amphetamine decreases impulsivity in healthy volunteers. Neuropsychopharmacology. 2002;27:813–825. doi: 10.1016/S0893-133X(02)00343-3.

- Evenden JL, Ryan CN. The pharmacology of impulsive behaviour in rats: the effects of drugs on response choice with varying delays of reinforcement. Psychopharmacology (Berl) 1996;128:161–170. doi: 10.1007/s002130050121.

- Grossman M, Chaloupka FJ. The demand for cocaine by young adults: a rational addiction approach. J Health Econ. 1998;17:427–474. doi: 10.1016/s0167-6296(97)00046-5.

- Herrnstein RJ. Relative and absolute strength of response as a function of frequency of reinforcement. J Exp Anal Behav. 1961;4:267–272. doi: 10.1901/jeab.1961.4-267.

- Higgins ST, Heil SH, Lussier JP. Clinical implications of reinforcement as a determinant of substance use disorders. Annu Rev Psychol. 2004;55:431–461. doi: 10.1146/annurev.psych.55.090902.142033.

- Ho MY, Mobini S, Chiang TJ, Bradshaw CM, Szabadi E. Theory and method in the quantitative analysis of “impulsive choice” behaviour: implications for psychopharmacology. Psychopharmacology (Berl) 1999;146:362–372. doi: 10.1007/pl00005482.

- Hollerman JR, Schultz W. Dopamine neurons report an error in the temporal prediction of reward during learning. Nat Neurosci. 1998;1:304–309. doi: 10.1038/1124.

- Jentsch JD, Taylor JR. Impulsivity resulting from frontostriatal dysfunction in drug abuse: implications for the control of behavior by reward-related stimuli. Psychopharmacology (Berl) 1999;146:373–390. doi: 10.1007/pl00005483.

- Jentsch JD, Olausson P, De La Garza R, II, Taylor JR. Impairments of reversal learning and response perseveration after repeated, intermittent cocaine administrations to monkeys. Neuropsychopharmacology. 2002;26:183–190. doi: 10.1016/S0893-133X(01)00355-4.

- Kalenscher T, Windmann S, Diekamp B, Rose J, Gunturkun O, Colombo M. Single units in the pigeon brain integrate reward amount and time-to-reward in an impulsive choice task. Curr Biol. 2005;15:594–602. doi: 10.1016/j.cub.2005.02.052.

- Kheramin S, Body S, Mobini S, Ho MY, Velazquez-Martinez DN, Bradshaw CM, Szabadi E, Deakin JF, Anderson IM. Effects of quinolinic acid-induced lesions of the orbital prefrontal cortex on inter-temporal choice: a quantitative analysis. Psychopharmacology (Berl) 2002;165:9–17. doi: 10.1007/s00213-002-1228-6.

- Logue AW, Tobin H, Chelonis JJ, Wang RY, Geary N, Schachter S. Cocaine decreases self-control in rats: a preliminary report. Psychopharmacology (Berl) 1992;109:245–247. doi: 10.1007/BF02245509.

- Mobini S, Body S, Ho MY, Bradshaw CM, Szabadi E, Deakin JF, Anderson IM. Effects of lesions of the orbitofrontal cortex on sensitivity to delayed and probabilistic reinforcement. Psychopharmacology (Berl) 2002;160:290–298. doi: 10.1007/s00213-001-0983-0.

- Monterosso J, Ehrman R, Napier KL, O'Brien CP, Childress AR. Three decision-making tasks in cocaine-dependent patients: do they measure the same construct? Addiction. 2001;96:1825–1837. doi: 10.1046/j.1360-0443.2001.9612182512.x.

- Negus SS. Effects of punishment on choice between cocaine and food in rhesus monkeys. Psychopharmacology (Berl) 2005;181:244–252. doi: 10.1007/s00213-005-2266-7.

- Nelson A, Killcross S. Amphetamine exposure enhances habit formation. J Neurosci. 2006;26:3805–3812. doi: 10.1523/JNEUROSCI.4305-05.2006.

- Niv Y, Daw ND, Dayan P. How fast to work: response vigor, motivation and tonic dopamine. Adv Neural Inf Process Syst. 2005;18:1019–1026.

- Niv Y, Daw ND, Joel D, Dayan P. Tonic dopamine: opportunity costs and the control of response vigor. Psychopharmacology (Berl) 2006 doi: 10.1007/s00213-006-0502-4. in press.

- Paine TA, Dringenberg HC, Olmstead MC. Effects of chronic cocaine on impulsivity: relation to cortical serotonin mechanisms. Behav Brain Res. 2003;147:135–147. doi: 10.1016/s0166-4328(03)00156-6.

- Redish AD. Addiction as a computational process gone awry. Science. 2004;306:1944–1947. doi: 10.1126/science.1102384.

- Richards JB, Sabol KE, de Wit H. Effects of methamphetamine on the adjusting amount procedure, a model of impulsive behavior in rats. Psychopharmacology (Berl) 1999;146:432–439. doi: 10.1007/pl00005488.

- Roesch MR, Taylor AR, Schoenbaum G. Encoding of time-discounted rewards in orbitofrontal cortex is independent of value representation. Neuron. 2006;51:509–520. doi: 10.1016/j.neuron.2006.06.027.

- Rudebeck PH, Walton ME, Smyth AN, Bannerman DM, Rushworth MF. Separate neural pathways process different decision costs. Nat Neurosci. 2006;9:1161–1168. doi: 10.1038/nn1756.

- Schoenbaum G, Setlow B. Cocaine makes actions insensitive to outcomes but not extinction: implications for altered orbitofrontal-amygdalar function. Cereb Cortex. 2005;15:1162–1169. doi: 10.1093/cercor/bhh216.

- Schoenbaum G, Roesch MR, Stalnaker TA. Orbitofrontal cortex, decision-making and drug addiction. Trends Neurosci. 2006a;29:116–124. doi: 10.1016/j.tins.2005.12.006.

- Schoenbaum G, Stalnaker TA, Roesch MR. Ventral striatum fails to represent bad outcomes after cocaine exposure. Soc Neurosci Abstr. 2006b;32:485.16.

- Schoenbaum G, Saddoris MP, Ramus SJ, Shaham Y, Setlow B. Cocaine-experienced rats exhibit learning deficits in a task sensitive to orbitofrontal cortex lesions. Eur J Neurosci. 2004;19:1997–2002. doi: 10.1111/j.1460-9568.2004.03274.x.

- Simon NW, Mendez IA, Setlow B. Cocaine exposure causes long-term increases in impulsive choice. Soc Neurosci Abstr. 2006;32:485.15. doi: 10.1037/0735-7044.121.3.543.

- Stalnaker TA, Roesch MR, Franz TM, Burke KA, Schoenbaum G. Abnormal associative encoding in orbitofrontal neurons in cocaine-experienced rats during decision making. Eur J Neurosci. 2006;24:2643–2653. doi: 10.1111/j.1460-9568.2006.05128.x.

- Wade TR, de Wit H, Richards JB. Effects of dopaminergic drugs on delayed reward as a measure of impulsive behavior in rats. Psychopharmacology (Berl) 2000;150:90–101. doi: 10.1007/s002130000402.

- Waelti P, Dickinson A, Schultz W. Dopamine responses comply with basic assumptions of formal learning theory. Nature. 2001;412:43–48. doi: 10.1038/35083500.

- Winstanley CA, Theobald DE, Cardinal RN, Robbins TW. Contrasting roles of basolateral amygdala and orbitofrontal cortex in impulsive choice. J Neurosci. 2004;24:4718–4722. doi: 10.1523/JNEUROSCI.5606-03.2004.