Abstract

People make sense of continuous streams of observed behavior in part by segmenting them into events. Event segmentation seems to be an ongoing component of everyday perception. Events are segmented simultaneously at multiple timescales, and are grouped hierarchically. Activity in brain regions including the posterior temporal and parietal cortex and lateral frontal cortex increases transiently at event boundaries. The parsing of ongoing activity into events is related to the updating of working memory, to the contents of long-term memory, and to the learning of new procedures. Event segmentation might arise as a side effect of an adaptive mechanism that integrates information over the recent past to improve predictions about the near future.

Making sense by segmenting

Imagine walking with a friend to a coffee shop. If asked to describe this activity in more detail, you might list a few of the events that make it up. The events listed could be broken up by changes in the physical features of the activity, such as location: ‘We started out by going down to the laboratory. We grabbed our coats and put them on. Then we walked out of the building to the corner by the subway station…’ Or, they could be broken up by changes in conceptual features, such as your goals: ‘We started our walk talking about how much construction is going on. When the topic turned to the new building with the coffee shop we decided to head over there to give it a try…’ Such descriptions are typical of how people talk about events, and they illustrate something important about perception: people make sense of a complex dynamic world in part by segmenting it into a modest number of meaningful units. Recent research on event perception reveals that, as an ongoing part of normal perception, people segment activity into events and subevents. This segmentation is related to core functions of cognitive control and memory encoding, and is subserved by isolable neural mechanisms.

Events and their boundaries

By ‘event’ we mean a segment of time at a given location that is conceived by an observer to have a beginning and an end [1]. In particular we focus on the events that make up everyday life on the timescale of a few seconds to tens of minutes – things like opening an envelope, pouring coffee into a cup, changing a baby’s diaper or calling a friend on the phone. Event Segmentation Theory (EST) [2] (see Glossary) proposes that perceptual systems spontaneously segment activity into events as a side effect of trying to anticipate upcoming information (see Box 1). When perceptual or conceptual features of the activity change, prediction becomes more difficult and errors in prediction increase transiently. At such points, people update memory representations of ‘what is happening now’. This processing cascade, detecting a transient increase in error and then updating memory, is experienced subjectively as the beginning of a new event.

Box 1. One step ahead of the game

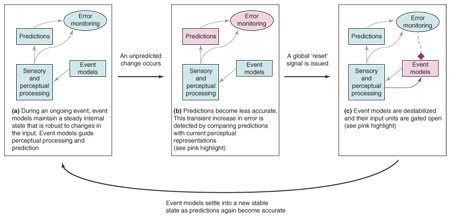

Reacting quickly to changes is good – but anticipating them is better. Humans and many other animals make predictions about the near future when perceiving and acting. For example, when watching someone wrap a present you can make precise predictions about the trajectories of the arms based on the recent paths of the arms combined with previously learned information about how bodies typically move. Or, you can make broader predictions about sequences of intentional actions based on the recent sequence of actions combined with knowledge about present-wrapping and inferences about the current goals of the actor. Sequential predictions depend in part on a neural pathway involving the anterior cingulate cortex, which might represent the disparity between predictions and outcomes [54], and subcortical catecholamine nuclei, which might broadcast error signals throughout the brain [55,56]. Event Segmentation Theory (EST, [2]) proposes that the perception of event boundaries arises as a side effect of using prediction for perception. According to EST, perceivers form working memory representations that capture 'what is happening now', called event models. When predictions are accurate, event models are maintained in a stable state to guide prediction, integrating information over the recent past. When prediction errors transiently increase relative to their current baseline, event models are updated based on currently present information (Figure I). This updating affects what information is currently actively maintained, and the transient increase in processing during updating produces more robust encoding into long-term memory. The error-based updating mechanism has been modeled in a set of connectionist simulations [57]. If the training environment contains recurring sequences of inputs, the updating mechanism can exploit this predictable sequential structure to improve prediction.

Figure I.

A schematic depiction of how event segmentation emerges from perceptual prediction and the updating of event models. (a) Most of the time, sensory and perceptual processing leads to accurate predictions, guided by event models that maintain a stable representation of the current event. Event models are robust to moment-to-moment fluctuations in the perceptual input. (b) When an unexpected change occurs, prediction error increases and this is detected by error monitoring processes. (c) The error signal is broadcast throughout the brain. The states of event models are reset based on the current sensory and perceptual information available; this transient processing is an event boundary. Prediction error then decreases and the event models settle into a new stable state.
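The updating rule described in Box 1 can be made concrete with a toy sketch. It is not the connectionist model of [57]; it assumes a one-dimensional input stream, treats the event model as the running mean of the inputs since the last boundary, and uses hypothetical `threshold` and `alpha` parameters to decide when prediction error has risen transiently above its recent baseline.

```python
from typing import List, Sequence


def segment_stream(stream: Sequence[float], threshold: float = 2.0,
                   alpha: float = 0.2) -> List[int]:
    """Toy error-gated event segmentation (illustrative, not the model in [57]).

    Returns the indices at which the event model was reset, i.e. the
    positions treated as event boundaries.
    """
    if not stream:
        return []
    boundaries: List[int] = []
    model = stream[0]  # event model: running mean of inputs in the current event
    count = 1          # number of inputs integrated into the current event model
    baseline = 0.0     # running average of recent prediction error
    for t in range(1, len(stream)):
        x = stream[t]
        error = abs(x - model)  # prediction: the next input resembles the event model
        if error > baseline + threshold:
            # Error rose transiently above its baseline: mark a boundary and
            # rebuild the event model from the currently available input.
            boundaries.append(t)
            model, count = x, 1
            baseline = 0.0  # assumption: the error baseline restarts with the new event
        else:
            # Prediction is adequate: keep the model stable while integrating the input.
            count += 1
            model += (x - model) / count
            baseline += alpha * (error - baseline)
    return boundaries


stream = [1.0, 1.1, 0.9, 1.0, 5.0, 5.2, 4.9, 5.1, 1.2, 1.0]
print(segment_stream(stream))  # prints [4, 8]
```

Small fluctuations are absorbed by the stable event model, whereas the two abrupt shifts exceed the error baseline and trigger a reset, mirroring panels (a) to (c) of Figure I.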

Segmentation tasks

How can a researcher discover when a person perceives that a new event has begun? One simple but surprisingly powerful answer is to ask them, usually by having them press a button [3]. Viewers tend to identify event boundaries at points of change in the stimulus, ranging from physical changes, such as changes in the movements of the actors, to conceptual changes, such as changes in goals or causes. To investigate movements of actors, Newtson et al. [4] asked participants to segment movies of an actor conducting everyday activities. The physical pose of the actor was coded at one-second intervals. Event boundaries tended to be marked at larger changes in pose. Zacks [5] extended this qualitative finding to a quantitative analysis of movement variables in a set of studies using simple animated stimuli. Event boundaries were predicted by changes in movement parameters including the acceleration of the objects and their location relative to one another (see also Ref. [6]). Larger physical changes have been studied using commercial cinema as a stimulus, in which the locations and temporal setting of characters can change from shot to shot. In one study, such changes were found to predict where viewers segmented Hollywood movies [7]. Conceptual changes that are correlated with event boundaries include changes in goals of actors, in causal relations and in interactions amongst characters [8,9].

Automatic event segmentation

Does asking people for conscious judgments about event boundaries really tell us anything about ongoing perception outside the laboratory? Event segmentation tasks have good intersubjective agreement [10] and reliability [11], which suggests they tap into ongoing processing. Nonetheless, a basic limitation of directly applying segmentation tasks is that they might interfere with the ongoing perceptual processes they attempt to measure. Stronger evidence that event segmentation is automatic comes from implicit behavioral measures and from neurophysiological measures that require no overt task.
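Agreement of this kind is often quantified by comparing each observer's boundaries with those of the rest of the group. The sketch below assumes boundary times in seconds, bins them into 1-s intervals, and correlates each observer's binned responses with the proportion of the other observers who marked each bin. The function names, bin size and data are illustrative assumptions, not the procedure used in [10] or [11].

```python
import math
from typing import List, Sequence


def bin_boundaries(times_s: Sequence[float], duration_s: float,
                   bin_s: float = 1.0) -> List[int]:
    """Mark each bin that contains at least one boundary press (1) or none (0)."""
    n_bins = math.ceil(duration_s / bin_s)
    binned = [0] * n_bins
    for t in times_s:
        binned[min(int(t // bin_s), n_bins - 1)] = 1
    return binned


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation; NaN if either series is constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return float("nan")
    return sxy / math.sqrt(sxx * syy)


def segmentation_agreement(observers: Sequence[Sequence[float]],
                           duration_s: float, bin_s: float = 1.0) -> List[float]:
    """Correlate each observer's binned boundaries with the leave-one-out group norm."""
    binned = [bin_boundaries(obs, duration_s, bin_s) for obs in observers]
    scores = []
    for i, own in enumerate(binned):
        others = [b for j, b in enumerate(binned) if j != i]
        # Proportion of the other observers who marked a boundary in each bin.
        norm = [sum(col) / len(others) for col in zip(*others)]
        scores.append(pearson(own, norm))
    return scores


# Hypothetical data: three observers roughly agree; the fourth does not.
observers = [[2.3, 5.1, 8.7], [2.0, 5.4, 8.2], [2.8, 5.9, 8.5], [0.5, 3.5, 6.5]]
print([round(r, 2) for r in segmentation_agreement(observers, duration_s=10)])
```

Leaving each observer out of their own norm avoids inflating the correlation with their own responses.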

Reading-time evidence for automaticity of event segmentation

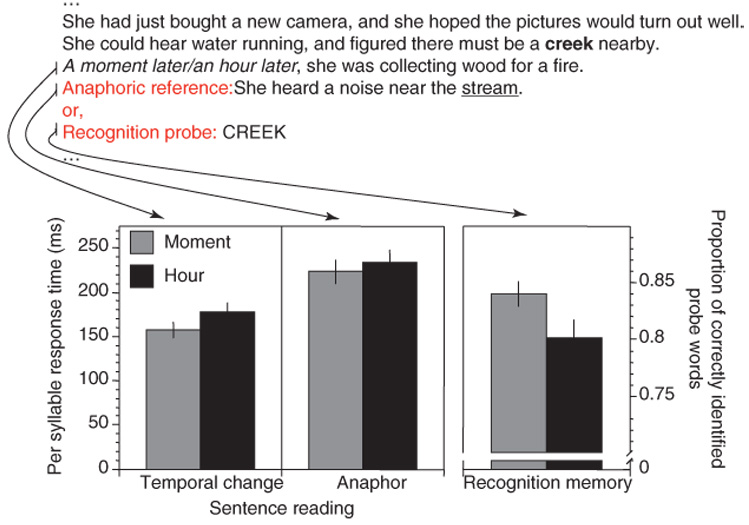

Studies of the pace of reading during narrative text comprehension indicate that readers slow down at event boundaries. These studies are motivated mostly by discourse comprehension theories, which propose that readers construct a series of mental models of a situation described by a narrative. In the Structure Building Framework [12], new mental models are initiated when the text refers to a new set of people, places or things, when the action in the text changes its temporal or spatial location, or when a new causal sequence is initiated. In the Event Indexing Model [13], new mental models are initiated when there is a change in space, time, protagonist, objects, goals or causes. When the changes identified by these models occur, readers have been found to read more slowly. This is consistent with the processing load hypothesis put forward by the Event Indexing Model, which states that changes in situational features increase the difficulty of integrating newly encountered information into the current mental model. In one such study [14], participants read two literary narratives one sentence at a time on a computer screen, and reading time was recorded. The texts were coded to identify changes in time, space and causal contingency. Reading time increased consistently at changes in time and cause; reading time sometimes increased at spatial changes, but this depended on the reader's prior knowledge and task goals. More recent work has found that reading time increases at shifts in characters and their goals [15,16]. Such results support the notion that reading times increase at event boundaries because, as noted previously, event boundaries are associated with changes in time, space, causes, characters and goals. Although most reading-time studies have not measured event segmentation, two recent studies have directly compared event segmentation and narrative reading time.
In one [17], cues to event boundaries were experimentally manipulated by changing the temporal contiguity of events (Figure 1). Phrases marking a temporal discontinuity (‘an hour later’) increased the likelihood that a clause would be identified as an event boundary during segmentation, and slowed reading during self-paced comprehension. The second study looked at the relation between event segmentation and reading time correlationally [8]. Two groups of participants read narratives about a young boy. One group segmented them into events; the other read them one clause at a time while reading time was recorded. Clauses in which more readers identified event boundaries produced longer reading times. These results support the view that when comprehenders encounter boundaries between events they perform extra processing operations.

Figure 1.

Temporal changes are perceived as event boundaries, and this affects working memory [17]. Participants read narratives containing sentences (marked here in italics) that either indicated a significant interval of time had passed ('an hour later') or not ('a moment later'). These temporal reference sentences often were identified as event boundaries – particularly in the 'hour later' condition. Subsequent experiments assessed memory for objects mentioned before the temporal shift (creek, in this example). Memory was tested in two ways: by measuring the time it took to read an anaphoric reference to the previously mentioned object, or by asking directly whether the participant recognized the word as having been read. Bottom left: Sentences in the 'hour later' condition were read more slowly than the nearly identical 'moment later' versions. Bottom middle: Anaphoric references to previously mentioned objects were read more slowly in the 'hour later' condition. Bottom right: Recognition memory for previously mentioned objects was less accurate in the 'hour later' condition. Such results indicate that working memory is updated at event boundaries. Error bars are standard errors.

Neurophysiological evidence for automaticity of event segmentation

Converging evidence for the automaticity of event segmentation comes from noninvasive neurophysiological measures, including functional magnetic resonance imaging (fMRI) and electroencephalography (EEG). These studies have been motivated by the hypothesis that if the brain is undertaking processing that relates to event boundaries, transient changes in brain activity should be observed at those points in time corresponding to event boundaries – whether or not the perceiver is attending to event segmentation. In one study [18], participants passively viewed short movies of everyday activities while their brain activity was recorded with fMRI. During the initial viewing and fMRI data recording, participants were asked simply to watch the movies and try to remember as much as possible. In the second phase of the experiment these participants segmented the movies into events. Event boundaries were associated with increases in brain activity during passive viewing in bilateral posterior occipital, temporal and parietal cortex and right lateral frontal cortex. The posterior activation included the MT complex [11], an area associated with the processing of motion [19]. A subsequent study using simple animations of geometric objects found that activity in the MT complex was correlated with the speed of motion of objects, and with the presence of event boundaries [20].

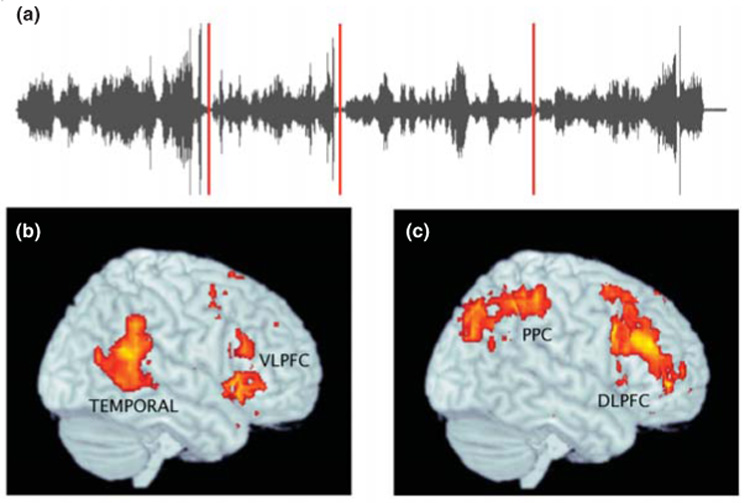

Whereas most of the neurophysiological research on event perception involves visual events, a new study by Sridharan et al. [21] investigated the perception of event structure in music. This study examined the extent to which musically untrained listeners use transitions between movements to segment classical pieces into coarse-grained events. A particular advantage in studying musical movements is that they provide objective, normative events. As Figure 2 illustrates, two dissociable networks in the right hemisphere were selectively responsive at transitions between movements: an early-responding ventral network that included the ventrolateral prefrontal cortex and posterior temporal cortex, and a late-responding dorsal network that included the dorsolateral prefrontal cortex and posterior parietal cortex.

Figure 2.

The boundaries between musical movements elicited increased activity at a network of brain regions. (a) In Western concert music, symphonies are made up of movements. The sound wave is plotted, and the breaks between movements are illustrated with red lines. In [21], musically untrained participants listened to two 8–10-min segments of symphonies by William Boyce, consisting of movements lasting an average of 1 min and 10 s, while their brain activity was recorded using fMRI. (b) A ventral network including the ventrolateral prefrontal cortex (VLPFC) and posterior temporal cortex (TEMPORAL) increased in activity first at movement boundaries. (c) A dorsal network including the dorsolateral prefrontal cortex (DLPFC) and posterior parietal cortex (PPC) increased in activity slightly later. Both networks were lateralized to the right hemisphere. The authors interpreted these data by suggesting that the response of the ventral network reflected the processing of violations of musical expectancy, whereas the response of the dorsal network reflected consequent top-down modulation of the processing of new musical information. Reproduced, with permission, from [21].

fMRI data also have provided evidence for automatic event segmentation during reading. Speer et al. [22] got participants to read narrative texts, one word at a time, while brain activity was recorded with fMRI. Participants subsequently segmented the texts into events. Brain activity increased at the later-identified event boundaries in a set of regions that corresponded substantially with the regions that increased at event boundaries in movies; these included bilateral regions in medial posterior temporal, occipital and parietal cortex, in the lateral temporal-parietal and anterior temporal cortex, and in the right posterior dorsal frontal cortex.

Converging with the fMRI data, recent experiments using EEG indicate that perceptual processing is modulated at event boundaries on an ongoing basis. In one set of experiments [23], participants viewed movies of goal-directed activities while undergoing EEG recording, after which they segmented the movies into events. Evoked responses were detected at frontal and parietal electrode sites, and these responses were modulated by whether or not participants were familiar with the movies themselves or with the activities they depicted.

Finally, measurements of pupil diameter provide further evidence that information processing increases transiently after the perception of an event boundary. Pupil diameter provides an online measure of cognitive processing load, as demonstrated in studies using a variety of motor and cognitive paradigms [24]. In one study [25], participants viewed movies while their pupil diameters and eye movements were recorded. They then segmented the movies into events. Pupil diameter transiently increased following those points that were later identified as event boundaries. (Saccades also became more frequent around event boundaries, which might reflect the reorienting of viewers at the beginning of a new event.)
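A transient increase of this kind is commonly assessed by averaging the signal in windows time-locked to the boundaries. The sketch below is a minimal illustration under hypothetical assumptions: a uniformly sampled pupil trace, boundary positions given as sample indices, and the few samples before each boundary used as that epoch's baseline. It is not the analysis pipeline of [25].

```python
from typing import List, Sequence


def event_locked_average(signal: Sequence[float], events: Sequence[int],
                         pre: int, post: int) -> List[float]:
    """Average baseline-corrected epochs from `pre` samples before to `post` after each event.

    Epochs that would run past either end of the signal are skipped.
    """
    if pre < 1:
        raise ValueError("pre must be at least 1 sample to form a baseline")
    epochs = []
    for e in events:
        if e - pre < 0 or e + post > len(signal):
            continue
        baseline = sum(signal[e - pre:e]) / pre  # mean of the pre-event window
        epochs.append([signal[i] - baseline for i in range(e - pre, e + post)])
    if not epochs:
        return []
    # Average across epochs, sample by sample.
    return [sum(col) / len(epochs) for col in zip(*epochs)]


pupil = [3.0] * 30  # hypothetical trace (mm), one sample per time step
for b in (10, 20):
    pupil[b + 1] = pupil[b + 2] = 3.5  # transient dilation just after each boundary
print(event_locked_average(pupil, events=[10, 20], pre=3, post=5))
# prints [0.0, 0.0, 0.0, 0.0, 0.5, 0.5, 0.0, 0.0]
```

Subtracting a per-epoch baseline removes slow drifts in overall pupil size, so the average reflects change relative to the moments just before each boundary.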

Together, the reading-time, neurophysiological and oculomotor data strongly suggest that there are transient changes in brain activity correlated with the subjective experience that one event has ended and another has begun. We can be confident these effects are independent of task demands such as the conscious intention to segment activity or covert attention to the location of event boundaries, because for all the studies discussed here the crucial reading-time or physiological data were collected before participants were introduced to the event segmentation task. An important question for ongoing research is: to what extent do such effects reflect neural processing that has a causal role in event segmentation, and to what extent do they reflect processing that is correlated with the presence of event boundaries but not a cause or consequence of event segmentation as such?

Hierarchical event perception

Events can be identified at a range of temporal grains, from brief (fine-grained) to extended (coarse-grained). In goal-directed human activity it is natural to think of such events as being hierarchically organized, with groups of fine-grained events clustering into larger units; this is partly because actions are hierarchically organized by goals and subgoals [1]. For example, the activity of making a sandwich includes the coarse-grained events of (i) removing ingredients from the refrigerator, and (ii) assembling them. The event of assembling the ingredients, in turn, might include subevents such as adding meat, adding cheese and spreading mayonnaise. When understanding activities, people seem spontaneously to track the hierarchical grouping of events. Evidence for hierarchical grouping has been found by asking a viewer or reader to segment an activity twice, on different occasions, once to identify coarse-grained event boundaries and once to identify fine-grained boundaries. If coarse-grained events subsume a group of finer-grained events, each coarse event boundary would be expected to fall slightly later in time than the fine event boundary to which it is closest. That is exactly what is observed [26]. Hierarchical division of coarse events into finer-grained events would also predict that each coarse event boundary should fall near one of the fine boundaries; this is also the case [27].
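Both predictions can be examined by measuring, for each coarse boundary, its signed distance from the nearest fine boundary: if coarse events enclose groups of fine events, these offsets should be small and tend to be positive. A sketch, assuming boundary times in seconds from a single observer; the function name and data are hypothetical.

```python
from typing import List, Sequence


def coarse_to_fine_offsets(coarse: Sequence[float],
                           fine: Sequence[float]) -> List[float]:
    """Signed distance (s) from each coarse boundary to its nearest fine boundary.

    Positive values mean the coarse boundary falls after the nearest fine one.
    """
    if not fine:
        raise ValueError("need at least one fine boundary")
    offsets = []
    for c in coarse:
        nearest = min(fine, key=lambda f: abs(c - f))
        offsets.append(c - nearest)
    return offsets


fine = [3.0, 6.1, 10.0, 15.2, 22.0, 29.9, 35.0]  # hypothetical fine-grained boundaries
coarse = [10.4, 30.2]                              # hypothetical coarse-grained boundaries
offsets = coarse_to_fine_offsets(coarse, fine)
print([round(o, 2) for o in offsets], sum(offsets) / len(offsets))
```

To test the alignment prediction properly, one would also compare these distances with those expected if the coarse boundaries were placed at random within the activity.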

Events on different temporal grains can be sensitive to different features of activities. Running descriptions by viewers of coarse- and fine-grained events in movies has provided evidence for such differences [27]. Descriptions of coarse-grained events focused on objects, using more precise nouns and less precise verbs. Descriptions of fine-grained events focused on actions on those objects, using more precise verbs but specifying the objects less precisely. If different temporal grains depend on different features, you would expect that segmentation of fine- or coarse-grained events could be selectively impaired. Selective impairments of coarse-grained segmentation have been found in patients with frontal lobe lesions [28] and in patients with schizophrenia [29] (Box 2).

Box 2. Event segmentation and neuropathology

Recent results suggest that event segmentation is impaired as a result of frontal lobe lesions, schizophrenia and neuropathology associated with aging. In a pair of studies by Zalla and colleagues [28,29], participants segmented brief movies of everyday activities and then completed recall and recognition memory tests. Compared with controls, patients with frontal lobe lesions [28] and patients with schizophrenia [29] were less likely to identify normative coarse-grained units. The fine-grained segmentation of the patient groups did not differ from that of the controls.

Zacks et al. [52] investigated the effect of aging and Alzheimer-type dementia on event segmentation and event memory. Healthy younger adults, healthy older adults and older adults with mild dementia segmented movies of everyday activities. They then completed a recognition memory test for pictures from each movie and a test of order memory. Segmentation of older adults differed more from group norms than did that of younger adults. This was particularly pronounced for those with dementia, and held both for fine- and coarse-grained segmentation. Older adults (particularly those with dementia) also performed less well on the memory tests. Moreover, those older adults who segmented less well also remembered less.

One possibility is that damage to prefrontal cortex is the common cause of impaired event segmentation in all three of these groups. This could reflect selective damage to the neural substrate of working memory representations of events (see Box 1). Alternatively, the event segmentation impairments of these groups could reflect damage to other mechanisms that participate in event segmentation, such as perceptual processing or error monitoring. Further studies including other conditions such as Parkinson disease and obsessive-compulsive disorder will help to improve our understanding of the causes of impairments in event segmentation.

Parsing ongoing activity into hierarchically organized events and subevents might be important for relating ongoing perception to knowledge about activities. Studies of memory for events and story comprehension suggest that people use hierarchically organized event representations (scripts or schemata) to understand a particular activity in relation to similar previously experienced activities (see, e.g., Refs [30,31]). Infants as young as 12 months seem to be sensitive to the hierarchical organization of behavior [32,33] (Box 3), and non-human primates seem to be sensitive to hierarchical organization in the behavior of conspecifics and can use that organization to learn manual skills [34]. Recent research has found that learning of a new procedure by adults can be facilitated by explicitly representing the hierarchical structure of the activity, or impaired by misrepresenting that structure [35]. Such learning might be related to the mechanisms by which people form ‘chunks’ in semantic knowledge [36]. In sum, people seem to track the hierarchical structure of activity during perception because this enables them to use prior knowledge for understanding, and to adapt that prior knowledge to learning new skills.

Box 3. Developing into an adult event segmentation system

How does event segmentation emerge in early development? There is evidence that some components of this ability are present by 10–11 months of age. The relevant studies have used two infant-friendly paradigms: habituation paradigms, in which infants tend to look longer at stimuli they perceive as more different from what came just before; and cross-modal matching paradigms, in which infants tend to look longer at visual stimuli that they perceive as synchronized with auditory stimuli.

In one habituation experiment [58], infants as young as 6 months were familiarized with displays in which a puppet jumped two or three times; they then looked longer when tested on sequences with a different number of jumps. In another habituation experiment [59], 10–11-month-old infants were familiarized with 4-s movies of everyday actions, and then shown movies with 1.5-s still frames inserted at different moments. They looked longer if the still frame interrupted an intentional action than if it came at the completion of the action.

In a study using the cross-modal matching paradigm [60], 10–11-month-old infants viewed two visual displays side by side; in each, an actor performed sequences of simple actions involving objects in, on and next to containers. While the two displays played, a series of tones sounded that were synchronized to the boundaries between actions in one of them. Infants looked longer at the display whose action boundaries matched the tones.

By 12 months, infants seem to be sensitive to how sequences of actions are grouped to achieve higher-level goals. In one habituation study [61], infants were familiarized with action sequences involving pulling objects toward the experimenter. They then looked longer at actions that were physically similar but not goal-appropriate, compared with sequences that were physically dissimilar but were goal-appropriate (see also Refs [62,63]). By 24 months, toddlers clearly code action in terms of hierarchical goals, and this affects memory recall [64].

Memory for events

Event boundaries relate systematically to both the online maintenance of information (working memory) and the permanent storage of information for later retrieval (long-term memory). According to EST [2], this is because at event boundaries people update representations of the current event, which frees information from working memory, and orient to incoming perceptual information, which encodes it particularly strongly for long-term memory. Evidence for working memory updating at event boundaries comes from the comprehension of text narratives, picture stories and cinema.

Working memory for events in text

Studies of memory access during text reading generally have not directly measured event boundaries, but have found that cues associated with event boundaries reduce access to recently mentioned information. Memory for recently mentioned objects is poorer after reading sentences that indicate a shift in time or space [37–41]. As noted previously, one study [17] experimentally manipulated the presence of event boundaries in narratives by varying the passage of time indicated in an auxiliary phrase. As is shown in Figure 1, implicit and explicit measures of memory indicated that information became less accessible following a time shift.

Working memory for events in pictures

Gernsbacher [42] got participants to identify episode boundaries in a picture story. New participants then viewed the picture story, and from time to time were probed to discriminate pictures that had recently been presented in the story from pictures that were left–right reversed. Recognition was better for images from within the current episode than for images from the previous episode, indicating that some of the surface information in the pictures was less available once a new event had begun.

Working memory for events in cinema and virtual reality

Converging with the results from text and picture stories, experiments looking at recognition memory for objects in cinema and virtual reality indicate that working memory is updated at event boundaries. In one recent study [43], participants navigated in a virtual reality environment, and memory for recently appearing objects was tested. Memory was reduced after walking through a door into an adjacent room – a probable event boundary. In another recent set of studies [44], participants viewed excerpts from movies that were occasionally interrupted by recognition memory tests for objects that had been on the screen five seconds previously. The information that participants could retrieve about these objects differed systematically depending on whether an event boundary had occurred during those five seconds. Further, neuroimaging data suggested that the basis for responding also differed: retrieval of information about objects from within the current event selectively activated brain areas including the bilateral occipital and lateral temporal cortices and the right inferior lateral frontal cortex, whereas retrieval of information about objects from the previous event selectively activated brain areas including the medial temporal cortex and medial parietal cortex. The medial temporal regions correspond to the hippocampal formation, which is known to have a key role in long-term memory storage and retrieval [45]. This is consistent with the hypothesis that participants depended preferentially on working memory for within-event retrieval and shifted to long-term memory for across-event retrieval – although the information to be retrieved was only a few seconds old.

Long-term memory for events

Of course, the core function of long-term memory is to support access to information over much longer delays. Evidence from tests in which participants retrieve information over delays of minutes to hours indicates that event boundaries serve as anchors in long-term memory. Recognition memory for pictures drawn from event boundaries has been found to be better than memory for pictures drawn from points between the boundaries [46]. Manipulations that affect where viewers perceive event boundaries in a film also affect their memory for the film when tested later. Boltz [47] got participants to watch feature films with or without embedded commercials. The commercials were inserted at event boundaries, or between event boundaries. Memory for the activity was better for movies without commercials and for movies in which commercials were inserted at event boundaries. Similarly, Schwan and Garsoffky [48] got participants to view movies of everyday events with or without deletions. The deletions were either of segments of time surrounding an event boundary, or segments of time within an event. The researchers found that recall was better for events when there were no deletions and when the deletions were of segments within an event, preserving the event boundaries. Segmentation grain also affects memory: recall for details is better after fine-grained segmentation than after coarse-grained segmentation [49–51].

If event boundaries serve as anchors for long-term memory encoding, then individuals who segment an activity effectively should have better later memory for it. As described in Box 2, recent evidence supports this hypothesis [52].

How does segmentation help?

Why do people segment ongoing activity into events? According to EST, segmentation results from the continual anticipation of future events. This anticipation enables you to encode structure from the continuous perceptual stream adaptively, to understand what an actor will do next, and to select your own future actions [53]. Segmentation simplifies, enabling you to treat an extended interval of time as a single chunk. If you segment well, this chunking saves on processing resources and improves comprehension. However, to segment well, you must identify the correct units of activity – the correct events. One possible mechanism for identifying events is to monitor your ongoing comprehension and break activity into units when comprehension begins to falter (Box 1). Once events have been individuated you can start to learn to recognize sequences of events and plan reactions based on such sequences. Grouping fine-grained events hierarchically into larger units enables you to learn not just rote one-after-the-other relations but more complex ones. One such relation is partial ordering, which is ubiquitous in problem-solving and planning. Think of baking a cake, where there are several different orders in which you could mix the ingredients, but all those steps must be complete before the cake goes in the oven.
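Partial ordering can be illustrated by enumerating every step order that respects a set of precedence constraints. In this sketch the step names and constraints are hypothetical stand-ins for the cake example: the mixing steps may occur in any order, but all must precede baking.

```python
from typing import Dict, List, Sequence, Set


def valid_orders(steps: Sequence[str],
                 prerequisites: Dict[str, Set[str]]) -> List[List[str]]:
    """Enumerate every order of `steps` in which each step comes after all of its prerequisites."""
    results: List[List[str]] = []

    def extend(order: List[str], remaining: Set[str]) -> None:
        if not remaining:
            results.append(list(order))
            return
        done = set(order)
        for step in sorted(remaining):  # sorted() copies, so mutating `remaining` is safe
            if prerequisites.get(step, set()) <= done:  # all prerequisites already done
                order.append(step)
                remaining.remove(step)
                extend(order, remaining)
                remaining.add(step)  # backtrack
                order.pop()

    extend([], set(steps))
    return results


steps = ["add eggs", "add flour", "add sugar", "bake"]
prereqs = {"bake": {"add eggs", "add flour", "add sugar"}}
for order in valid_orders(steps, prereqs):
    print(" -> ".join(order))
```

The three mixing steps can be arranged in 3! = 6 ways, so six orders are printed, each ending with "bake". A learner who encodes the mixing steps as one coarse-grained unit needs to remember only the single constraint, rather than one memorized sequence.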

Events segmented during perception can also form the units for memory encoding, enabling you to store compact representations of extended activities. Identifying the correct events facilitates memory, much as it is easier to remember a sequence of vocalizations if it comes from a language you know, because you can segment it into words, clauses and sentences.

Event segmentation is automatic and important for perception, comprehension, problem-solving and memory (see Box 4 for open questions). None of this would be true if the structure of the world were not congenial to segmentation. If sequential dependencies were not predictable, or if activity were not hierarchically organized, there would be no advantage to imposing chunking and grouping on the stream of behavior. In this regard, as in many others, human perceptual systems seem to be specialized information-processing devices that are tuned to the structure of their environment.

Box 4. Questions for future research

Recent work on event segmentation raises a number of interesting challenges for future studies. These relate both to the neural mechanisms of perception and to broader aspects of cognition and memory.

Changes have been observed in the central nervous system and in the oculomotor system at points that observers identify as event boundaries. Which changes reflect neural processing that plays a causal role in event segmentation, and which reflect processing that is correlated with the presence of event boundaries but is neither a cause nor a consequence of event segmentation as such?

Segmenting activity hierarchically is associated with better learning of procedural skills. Is a hierarchical understanding of an activity necessary for learning it, or does hierarchical encoding simply tend to occur in the same learning circumstances that produce good performance?

How do prior knowledge and expectation affect which points are identified as event boundaries? There is some evidence that participants segment familiar activities at a coarser grain than unfamiliar activities [6], although this effect is not consistent across the literature [27]. The mechanisms underlying the role of event knowledge in segmentation remain unclear; one possibility is that possessing a schema or script for a type of activity might reduce prediction error during perception of that activity.

How is event segmentation related to the understanding and planning of self-initiated events? Ideomotor theories of action control [65–67] propose that actions are coded in terms of the goals they are intended to satisfy rather than in terms of the motor movements themselves. Paralleling the predictive nature of event perception, actions are preceded by the anticipation of a desired perceptual effect [53,68]. A recent theory of perception and action planning extends this idea, proposing that the perception of events and the production of events (actions) share a representational medium [69], possibly subserved by a mirror system that maps the actions of others onto your own action representations [70]. On such accounts, the events that you are currently producing constrain the events that you can concurrently perceive.

Acknowledgements

Preparation of this article was supported in part by grants R01-MH070674 and T32 AG000030–31 from the National Institutes of Health. We thank Devarajan Sridharan for providing figure materials, and thank the following colleagues for thoughtful comments on the manuscript: Dare Baldwin, Joe Magliano, G.A. Radvansky, Stephan Schwan, Ric Sharp, Khena Swallow and Barbara Tversky.

Glossary

- Event model

an actively maintained representation of the current event, which is updated at perceptual event boundaries.

- Event segmentation

the perceptual and cognitive processes by which a continuous activity is segmented into meaningful events.

- Temporal grain

events can be perceived on a range of temporal grains, or timescales, from a second or less to tens of minutes.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- 1. Zacks JM, Tversky B. Event structure in perception and conception. Psychol. Bull. 2001;127:3–21. doi: 10.1037/0033-2909.127.1.3.

- 2. Zacks JM, et al. Event perception: A mind/brain perspective. Psychol. Bull. 2007;133:273–293. doi: 10.1037/0033-2909.133.2.273.

- 3. Newtson D. Attribution and the unit of perception of ongoing behavior. J. Pers. Soc. Psychol. 1973;28:28–38.

- 4. Newtson D, et al. Objective basis of behavior units. J. Pers. Soc. Psychol. 1977;12:847–862.

- 5. Zacks JM. Using movement and intentions to understand simple events. Cognit. Sci. 2004;28:979–1008.

- 6. Hard BM, et al. Making sense of abstract events: Building event schemas. Mem. Cognit. 2006;34:1221–1235. doi: 10.3758/bf03193267.

- 7. Magliano JP, et al. Indexing space and time in film understanding. Appl. Cognit. Psychol. 2001;15:533–545.

- 8. Speer NK, et al. Perceiving narrated events. In: Forbus K, et al., editors. Proceedings of the 26th Annual Meeting of the Cognitive Science Society. Chicago, IL: Cognitive Science Society; 2004. p. 1637.

- 9. Zacks JM, et al. The human brain’s response to change in cinema. Abstr. Psychon. Soc. 2006;11:9.

- 10. Newtson D. Foundations of attribution: the perception of ongoing behavior. In: Harvey JH, et al., editors. New Directions in Attribution Research. Erlbaum; 1976. pp. 223–248.

- 11. Speer NK, et al. Activation of human motion processing areas during event perception. Cogn. Affect. Behav. Neurosci. 2003;3:335–345. doi: 10.3758/cabn.3.4.335.

- 12. Gernsbacher MA. Language Comprehension as Structure Building. Erlbaum; 1990.

- 13. Zwaan RA, et al. The construction of situation models in narrative comprehension: An event-indexing model. Psychol. Sci. 1995;6:292–297.

- 14. Zwaan RA, et al. Dimensions of situation model construction in narrative comprehension. J. Exp. Psychol. Learn. Mem. Cogn. 1995;21:386–397.

- 15. Rinck M, Weber U. Who when where: an experimental test of the event-indexing model. Mem. Cognit. 2003;31:1284–1292. doi: 10.3758/bf03195811.

- 16. Zwaan RA, et al. Constructing multidimensional situation models during reading. Sci. Stud. Read. 1998;2:199–220.

- 17. Speer NK, Zacks JM. Temporal changes as event boundaries: processing and memory consequences of narrative time shifts. J. Mem. Lang. 2005;53:125–140.

- 18. Zacks JM, et al. Human brain activity time-locked to perceptual event boundaries. Nat. Neurosci. 2001;4:651–655. doi: 10.1038/88486.

- 19. Sekuler R, et al. Perception of visual motion. In: Pashler H, Yantis S, editors. Stevens’ Handbook of Experimental Psychology: Sensation and Perception. 3rd edn. Vol. 1. John Wiley & Sons; 2002. pp. 121–176.

- 20. Zacks JM, et al. Visual movement and the neural correlates of event perception. Brain Res. 2006;1076:150–162. doi: 10.1016/j.brainres.2005.12.122.

- 21. Sridharan D, et al. Neural dynamics of event segmentation in music: Converging evidence for dissociable ventral and dorsal networks. Neuron. 2007;55:521–532. doi: 10.1016/j.neuron.2007.07.003.

- 22. Speer NK, et al. Human brain activity time-locked to narrative event boundaries. Psychol. Sci. 2007;18:449–455. doi: 10.1111/j.1467-9280.2007.01920.x.

- 23. Sharp RM, et al. Electrophysiological correlates of event segmentation: how does the human mind process ongoing activity? In: Proceedings of the Annual Meeting of the Cognitive Neuroscience Society. New York: Cognitive Neuroscience Society; 2007. p. 235.

- 24. Beatty J, Lucero-Wagoner B. The pupillary system. In: Cacioppo JT, et al., editors. Handbook of Psychophysiology. Cambridge University Press; 2000. pp. 142–162.

- 25. Swallow KM, Zacks JM. Hierarchical grouping of events revealed by eye movements. Abstr. Psychon. Soc. 2004;9:81.

- 26. Hard BM, et al. Hierarchical encoding of behavior: translating perception into action. J. Exp. Psychol. Gen. 2006;135:588–608. doi: 10.1037/0096-3445.135.4.588.

- 27. Zacks JM, et al. Perceiving, remembering, and communicating structure in events. J. Exp. Psychol. Gen. 2001;130:29–58. doi: 10.1037/0096-3445.130.1.29.

- 28. Zalla T, et al. Perception of action boundaries in patients with frontal lobe damage. Neuropsychologia. 2003;41:1619–1627. doi: 10.1016/s0028-3932(03)00098-8.

- 29. Zalla T, et al. Perception of dynamic action in patients with schizophrenia. Psychiatry Res. 2004;128:39–51. doi: 10.1016/j.psychres.2003.12.026.

- 30. Lichtenstein ED, Brewer WF. Memory for goal-directed events. Cognit. Psychol. 1980;12:412–445.

- 31. Rumelhart DE. Understanding and summarizing brief stories. In: Laberge D, Samuels SJ, editors. Basic Processes in Reading: Perception and Comprehension. Erlbaum; 1977. pp. 265–303.

- 32. Sommerville JA, Woodward AL. Pulling out the intentional structure of action: the relation between action processing and action production in infancy. Cognition. 2005;95:1–30. doi: 10.1016/j.cognition.2003.12.004.

- 33. Bauer PJ, Mandler JM. One thing follows another: effects of temporal structure on 1- to 2-year-olds’ recall of events. Dev. Psychol. 1989;25:197–206.

- 34. Byrne RW. Seeing actions as hierarchically organized structures: great ape manual skills. In: Meltzoff A, Prinz W, editors. The Imitative Mind: Development, Evolution, and Brain Bases. Cambridge University Press; 2002. pp. 122–142.

- 35. Zacks JM, Tversky B. Structuring information interfaces for procedural learning. J. Exp. Psychol. Appl. 2003;9:88–100. doi: 10.1037/1076-898x.9.2.88.

- 36. Gobet F, et al. Chunking mechanisms in human learning. Trends Cogn. Sci. 2001;5:236–243. doi: 10.1016/s1364-6613(00)01662-4.

- 37. Glenberg AM, et al. Mental models contribute to foregrounding during text comprehension. J. Mem. Lang. 1987;26:69–83.

- 38. Bower GH, Rinck M. Selecting one among many referents in spatial situation models. J. Exp. Psychol. Learn. Mem. Cogn. 2001;27:81–98.

- 39. Morrow DG, et al. Updating situation models during narrative comprehension. J. Mem. Lang. 1989;28:292–312.

- 40. Morrow DG, et al. Accessibility and situation models in narrative comprehension. J. Mem. Lang. 1987;26:165–187.

- 41. Rinck M, Bower G. Temporal and spatial distance in situation models. Mem. Cognit. 2000;28:1310–1320. doi: 10.3758/bf03211832.

- 42. Gernsbacher MA. Surface information loss in comprehension. Cognit. Psychol. 1985;17:324–363. doi: 10.1016/0010-0285(85)90012-X.

- 43. Radvansky GA, Copeland DE. Walking through doorways causes forgetting: situation models and experienced space. Mem. Cognit. 2006;34:1150–1156. doi: 10.3758/bf03193261.

- 44. Swallow KM, et al. Perceptual events may be the "episodes" in episodic memory. Abstr. Psychon. Soc. 2007;12:25.

- 45. Squire LR, Zola-Morgan S. The medial temporal lobe memory system. Science. 1991;253:1380–1386. doi: 10.1126/science.1896849.

- 46. Newtson D, Engquist G. The perceptual organization of ongoing behavior. J. Exp. Soc. Psychol. 1976;12:436–450.

- 47. Boltz M. Temporal accent structure and the remembering of filmed narratives. J. Exp. Psychol. Hum. Percept. Perform. 1992;18:90–105. doi: 10.1037//0096-1523.18.1.90.

- 48. Schwan S, Garsoffky B. The cognitive representation of filmic event summaries. Appl. Cognit. Psychol. 2004;18:37–55.

- 49. Hanson C, Hirst W. On the representation of events: A study of orientation, recall, and recognition. J. Exp. Psychol. Gen. 1989;118:136–147. doi: 10.1037//0096-3445.118.2.136.

- 50. Lassiter GD. Behavior perception, affect, and memory. Soc. Cognit. 1988;6:150–176.

- 51. Lassiter GD, et al. Memorial consequences of variation in behavior perception. J. Exp. Soc. Psychol. 1988;24:222–239.

- 52. Zacks JM, et al. Event understanding and memory in healthy aging and dementia of the Alzheimer type. Psychol. Aging. 2006;21:466–482. doi: 10.1037/0882-7974.21.3.466.

- 53. Schütz-Bosbach S, Prinz W. Prospective coding in event representation. Cogn. Process. 2007;8:93–102. doi: 10.1007/s10339-007-0167-x.

- 54. Holroyd CB, Coles MG. The neural basis of human error processing: reinforcement learning, dopamine, and the error-related negativity. Psychol. Rev. 2002;109:679–709. doi: 10.1037/0033-295X.109.4.679.

- 55. Schultz W, Dickinson A. Neuronal coding of prediction errors. Annu. Rev. Neurosci. 2000;23:473–500. doi: 10.1146/annurev.neuro.23.1.473.

- 56. Bouret S, Sara SJ. Network reset: a simplified overarching theory of locus coeruleus noradrenaline function. Trends Neurosci. 2005;28:574–582. doi: 10.1016/j.tins.2005.09.002.

- 57. Reynolds JR, et al. A computational model of event segmentation from perceptual prediction. Cognit. Sci. 2007;31:613–643. doi: 10.1080/15326900701399913.

- 58. Wynn K. Infants’ individuation and enumeration of actions. Psychol. Sci. 1996;7:164–169.

- 59. Baldwin DA, et al. Infants parse dynamic action. Child Dev. 2001;72:708–717. doi: 10.1111/1467-8624.00310.

- 60. Saylor MM, et al. Infants’ on-line segmentation of dynamic human action. J. Cognit. Dev. 2007;8:113–128.

- 61. Sommerville JA, Woodward AL. Pulling out the intentional structure of action: the relation between action processing and action production in infancy. Cognition. 2005;95:1–30. doi: 10.1016/j.cognition.2003.12.004.

- 62. Woodward AL. Infants selectively encode the goal object of an actor’s reach. Cognition. 1998;69:1–34. doi: 10.1016/s0010-0277(98)00058-4.

- 63. Woodward AL, Sommerville JA. Twelve-month-old infants interpret action in context. Psychol. Sci. 2000;11:73–77. doi: 10.1111/1467-9280.00218.

- 64. Travis LL. Goal-based organization of event memory in toddlers. In: van den Broek PW, et al., editors. Developmental Spans on Event Comprehension and Representation: Bridging Fictional and Actual Events. LEA; 1997. pp. 111–139.

- 65. Greenwald AG. A choice reaction time test of ideomotor theory. J. Exp. Psychol. 1970;6:20–25. doi: 10.1037/h0029960.

- 66. Greenwald AG. Sensory feedback mechanisms in performance control: with special reference to ideo-motor mechanism. Psychol. Rev. 1970;77:73–99. doi: 10.1037/h0028689.

- 67. James W. The Principles of Psychology. Holt; 1890.

- 68. Schütz-Bosbach S, Prinz W. Perceptual resonance: action-induced modulation of perception. Trends Cogn. Sci. 2007;11:349–355. doi: 10.1016/j.tics.2007.06.005.

- 69. Hommel B, et al. The theory of event coding (TEC): a framework for perception and action planning. Behav. Brain Sci. 2001;24:849–937. doi: 10.1017/s0140525x01000103.

- 70. Rizzolatti G, Craighero L. The mirror-neuron system. Annu. Rev. Neurosci. 2004;27:169–192. doi: 10.1146/annurev.neuro.27.070203.144230.