Abstract

We present the self-paced 3-class Graz brain-computer interface (BCI), which is based on the detection of sensorimotor electroencephalogram (EEG) rhythms induced by motor imagery. Self-paced operation means that the BCI is able to determine whether the ongoing brain activity is intended as a control signal (intentional control) or not (non-control state). The presented system automatically reduces electrooculogram (EOG) artifacts, detects electromyographic (EMG) activity, and uses only three bipolar EEG channels. Two applications are presented: the freeSpace virtual environment (VE) and the Brainloop interface. freeSpace is a computer-game-like application in which subjects navigate through the environment and collect coins by autonomously selecting navigation commands. Three subjects participated in these feedback experiments, and each learned to navigate through the VE and collect coins. Two of the three succeeded in collecting all three coins. The Brainloop interface provides an interface between the Graz-BCI and Google Earth.

1. INTRODUCTION

A brain-computer interface (BCI) transforms electrophysiological or metabolic brain activity into control signals for applications and devices (e.g., spelling systems or neuroprostheses). Instead of muscle activity, a specific type of mental activity is used to operate such a system. For a review of BCI technologies, see [1–4].

After years of basic research, modern BCIs are moving out of the laboratory and are being evaluated in hospitals and at patients' homes (e.g., [5–11]). However, BCIs have to meet several technical requirements before they become practical alternatives to motor-controlled communication devices. The most important requirements are high information transfer rates, ease of use, robustness, on-demand operability, and safety [12]. In summary, for the end-user, BCI systems have to convey information as quickly and accurately as the individual application requires, have to work in most environments, and should be available without the assistance of other people (self-initiation). To address these requirements, the Graz group focused on the development of small and robust systems that are operated with only one or two bipolar electroencephalogram (EEG) channels [13]. Motor imagery (MI), that is, the imagination of movements, is used as the experimental strategy.

In this work, we address two issues that are important for practical BCI systems: first, the detection of electromyographic (EMG) artifacts and the reduction of electrooculographic (EOG) artifacts, and second, the self-paced operation mode. Artifacts are undesirable signals that can interfere with, and may change the characteristics of, the brain signal used to control the BCI [14]. EMG artifacts are especially common in early BCI training sessions [15]. It is therefore crucial to ensure that (i) brain activity and not muscle activity is used as the source of control and that (ii) artifacts do not produce undesired BCI output. Self-paced BCIs are able to discriminate between intentionally generated brain activity (intentional control, IC) and ongoing brain activity (non-control, NC) [16]. This means that the system is able to determine whether the ongoing brain pattern is intended as a control signal (IC) or not (NC). In this mode, the user controls the timing and speed of communication.

In addition to the methods stated above, two applications designed for self-paced operation are presented. The first is a computer-game-like virtual environment (VE) through which users navigate and collect coins; the second is a user interface for operating the Google Earth (Google, Mountain View, CA, USA) application.

2. METHODS

2.1. Electromyography (EMG) artifact detection

The results of [17] showed that muscle and movement artifacts can be detected well by using the principle of inverse filtering. The inverse filtering method estimates an autoregressive (AR) filter model of the EEG activity (see (1)). The output $y_t$ of the AR model is the weighted sum of the $p$ most recent sample values $y_{t-i}$ (where $p$ is the model order), weighted by the model parameters $a_i$ with $i = 1, \dots, p$, plus $v_t$, a zero-mean Gaussian noise process with variance $\sigma_v^2$. Applying the filter model inversely (using the inverted transfer function) to the measured EEG yields a residual (i.e., prediction error) that is usually much smaller than the measured EEG. In effect, all EEG frequencies are suppressed. If transient or other high-frequency artifacts (such as EMG artifacts) are recorded on the EEG channels, the average prediction error increases. This increase can be detected by computing the time-varying root-mean-square (RMS) of the residual and comparing it with a predefined threshold value. Once the AR parameters $a_i$ have been identified from an artifact-free EEG segment, they can be applied inversely to estimate the prediction error $x_t$ from the observed EEG $y_t$:

$$
y_t = \sum_{i=1}^{p} a_i \, y_{t-i} + v_t, \qquad x_t = y_t - \sum_{i=1}^{p} a_i \, y_{t-i} \tag{1}
$$

For on-line experiments, a detection threshold of five times the RMS obtained from artifact-free EEG was used. Each time the inversely filtered signal exceeded this threshold, the occurrence of an EMG artifact was reported back to the user by a yellow warning marker in the lower-left part of the screen. Users were instructed to relax until the warning disappeared. The warning was removed once the threshold had not been exceeded for 1 second.
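The on-line decision rule described above can be sketched as follows, assuming the inverse-filter residual $x_t$ arrives sample by sample at 250 Hz. The 5× threshold and the 1-second release period come from the text; the length of the sliding RMS window (`win_s`, 0.2 s here) is a hypothetical choice, because the text does not state it, and `detect_emg` is a hypothetical name.

```python
import math
from collections import deque


def detect_emg(residual, baseline_rms, fs=250, factor=5.0, win_s=0.2, hold_s=1.0):
    """Return one warning flag per residual sample.

    residual     -- inverse-filter output x_t (prediction error)
    baseline_rms -- RMS of the residual of the artifact-free EEG
    factor       -- detection threshold = factor * baseline_rms (5 in the text)
    win_s        -- sliding RMS window in seconds (hypothetical value)
    hold_s       -- warning is cleared after this many seconds below threshold
    """
    threshold = factor * baseline_rms
    window = deque(maxlen=max(1, int(win_s * fs)))
    hold = int(hold_s * fs)
    quiet = hold  # samples since the last threshold crossing; start "quiet"
    flags = []
    for x in residual:
        window.append(x)
        current_rms = math.sqrt(sum(v * v for v in window) / len(window))
        if current_rms > threshold:
            quiet = 0  # artifact: (re)start the release period
        else:
            quiet += 1
        # the warning stays on until `hold` consecutive samples were below threshold
        flags.append(quiet < hold)
    return flags
```

Because the warning is tied to a count of consecutive sub-threshold samples, a short burst keeps the marker visible for at least 1 second after the residual settles, matching the behavior described in the text.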

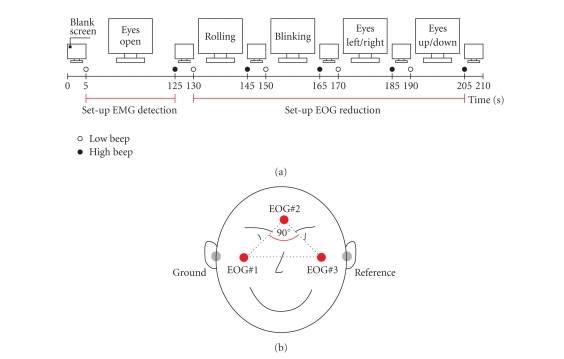

At the beginning of each BCI session, a 2-minute segment of artifact-free EEG was recorded in order to estimate the AR parameters $a_i$ (model order $p = 10$) using the Burg method. Figure 1(a) details the protocol used to collect the artifact-free EEG. Subjects were instructed to sit relaxed and not to move.

Figure 1.

(a) Protocol used to collect the EEG and EOG samples for setting up the EMG detection and the EOG reduction. The recording was divided into several segments, each separated by a 5-s resting period. Instructions were presented on a computer screen. A low warning tone marked the beginning and a high warning tone the end of each task. (b) Positions of the EOG electrodes (reference left mastoid, ground right mastoid). The three EOG electrodes are placed above the nasion and below the outer canthi of the eyes, forming a right-angled triangle. The legs of the triangle form two spatially orthogonal components (modified from [18]).
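The calibration step of Section 2.1 (Burg estimation of the AR parameters $a_i$) and the inverse filtering in Eq. (1) can be sketched in plain Python as follows. This follows the textbook form of Burg's recursion and is not taken from the BioSig implementation; `burg_ar` and `inverse_filter` are hypothetical names. In the setup described above, `burg_ar` would be called with `p=10` on the 2-minute artifact-free segment.

```python
def burg_ar(y, p):
    """Estimate a_1..a_p of y_t = sum_i a_i * y_{t-i} + v_t with Burg's method."""
    n = len(y)
    f = [float(v) for v in y]  # forward prediction errors
    b = list(f)                # backward prediction errors
    a = [1.0]                  # prediction-error filter [1, c_1, ..., c_m]
    for m in range(p):
        num = sum(f[t] * b[t - 1] for t in range(m + 1, n))
        den = sum(f[t] ** 2 + b[t - 1] ** 2 for t in range(m + 1, n))
        k = -2.0 * num / den   # reflection coefficient of order m + 1
        ext = a + [0.0]
        # Levinson update of the prediction-error filter
        a = [ext[i] + k * ext[m + 1 - i] for i in range(m + 2)]
        # update the errors in place, from high to low index, so that the
        # old b[t - 1] is still available when it is needed
        for t in range(n - 1, m, -1):
            f_old, b_old = f[t], b[t - 1]
            f[t] = f_old + k * b_old
            b[t] = b_old + k * f_old
    # A(z) = 1 + sum c_i z^-i, hence a_i = -c_i in the notation of Eq. (1)
    return [-c for c in a[1:]]


def inverse_filter(y, ar):
    """Prediction error x_t = y_t - sum_i a_i * y_{t-i}; first p samples skipped."""
    p = len(ar)
    return [y[t] - sum(ar[i] * y[t - 1 - i] for i in range(p)) for t in range(p, len(y))]
```

The RMS of `inverse_filter(...)` on the artifact-free calibration segment is a natural baseline for the five-times-RMS threshold used on-line.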

2.2. Automatic reduction of electrooculography (EOG) artifacts

Electrooculogram (EOG) artifacts are potential shifts on the body surface resulting from movements of the retinal dipole or from eye blinks. Since both eyes generally share the same line of sight, a single dipole with three spatial components (horizontal, vertical, and radial) should be sufficient to model the bioelectrical eye activity [18]. Assume that (i) for each channel, the recorded EEG $Y_t$ is a linear superposition of the true EEG signal $S_t$ and the three spatial EOG components ($N_{t,\mathrm{horizontal}}$, $N_{t,\mathrm{vertical}}$, and $N_{t,\mathrm{radial}}$) weighted by coefficients $b$ (2), and that (ii) EEG $S$ and EOG $N$ are independent. Then the weighting coefficients $b$ can be estimated according to (3) (matrix notation) by computing the autocorrelation matrix $C_{N,N}$ of the EOG and the cross-correlation $C_{N,Y}$ between EEG $Y$ and EOG $N$. Equation (4) describes how the “EOG-corrected” EEG is computed.

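$$
Y_t = S_t + \left[ N_{t,\mathrm{horizontal}},\ N_{t,\mathrm{vertical}},\ N_{t,\mathrm{radial}} \right] \cdot b = S_t + N_t \cdot b \tag{2}
$$

$$
b = C_{N,N}^{-1} \, C_{N,Y} \tag{3}
$$

$$
S_t = Y_t - N_t \cdot b \tag{4}
$$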

To limit the total number of channels, the EOG was measured by using three monopolar electrodes, from which two bipolar EOG channels, covering the horizontal and the vertical EOG activity, were derived (Figure 1(b)).

To compute the weighting coefficients $b$, a 1-minute segment of EEG and EOG containing eye movement artifacts was recorded at the beginning of each BCI session. Subjects were instructed to repeatedly blink, roll their eyes clockwise and counter-clockwise, and perform horizontal and vertical eye movements. The eye movements were to cover the whole field of view without moving the head. Figure 1(a) summarizes the recording protocol used. A more detailed description and an evaluation of the EOG correction method can be found in [18].
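The regression in Eqs. (2)–(4) can be sketched in plain Python as follows, assuming zero-mean (e.g., high-pass filtered) calibration data and the two bipolar EOG channels described above. `estimate_eog_weights`, `correct_eeg`, and `solve` are hypothetical names. Because $b = C_{N,N}^{-1} C_{N,Y}$ does not change when both matrices are scaled by the same factor, the correlation matrices are computed as plain sums of products.

```python
def solve(A, rhs):
    """Solve A x = rhs for a small dense system (Gaussian elimination, partial pivoting)."""
    n = len(A)
    M = [list(row) + [r] for row, r in zip(A, rhs)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def estimate_eog_weights(eog, eeg):
    """b = C_NN^-1 C_NY (Eq. 3), one weight vector per EEG channel.

    eog -- list of samples, each a list of k EOG values (here k = 2)
    eeg -- list of samples, each a list of m recorded EEG values Y_t
    """
    k, m = len(eog[0]), len(eeg[0])
    c_nn = [[sum(n[i] * n[j] for n in eog) for j in range(k)] for i in range(k)]
    weights = []
    for ch in range(m):
        c_ny = [sum(n[i] * y[ch] for n, y in zip(eog, eeg)) for i in range(k)]
        weights.append(solve(c_nn, c_ny))
    return weights


def correct_eeg(eog, eeg, weights):
    """EOG-corrected EEG S_t = Y_t - N_t * b (Eq. 4)."""
    return [
        [y[ch] - sum(w * v for w, v in zip(weights[ch], n)) for ch in range(len(y))]
        for n, y in zip(eog, eeg)
    ]
```

The weights are estimated once from the calibration recording and then applied sample by sample during the session.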

2.3. Self-paced BCI operation

Essential for the development of self-paced motor imagery (MI)-based BCIs is to train (i) the user to reliably induce distinctive brain patterns and (ii) the BCI to detect those patterns in the ongoing EEG. In this work, before participating in self-paced experiments, subjects learned to generate three different MI patterns by performing cue-based feedback training. The resulting classifier is referred to as $\mathrm{CFR}_{\mathrm{MI}}$. Once a reliable classification performance was achieved, a second classifier ($\mathrm{CFR}_{\mathrm{IC}}$) was trained to discriminate between characteristic EEG changes induced by MI and the ongoing EEG. Self-paced control was obtained by combining both classifiers: each time MI-related brain activity was detected by $\mathrm{CFR}_{\mathrm{IC}}$, the type of motor imagery task was determined by $\mathrm{CFR}_{\mathrm{MI}}$. If $\mathrm{CFR}_{\mathrm{IC}}$ detected no MI-related activity, the output was “0” or “NC.”
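The combination of the two classifiers amounts to a simple gating rule. The sketch below assumes that each classifier is available as a callable (`cfr_ic` returning a Boolean, `cfr_mi` returning a class label); these names and the separate feature vectors for the two classifiers are hypothetical, and the threshold and transition-time logic of $\mathrm{CFR}_{\mathrm{IC}}$ is omitted here.

```python
NC = 0  # output for the non-control state


def self_paced_output(ic_features, mi_features, cfr_ic, cfr_mi):
    """Two-stage self-paced decision.

    cfr_ic(ic_features) -> True if intentional control (IC) is detected
    cfr_mi(mi_features) -> motor imagery class label, e.g. 1, 2 or 3
    """
    if cfr_ic(ic_features):
        return cfr_mi(mi_features)  # IC: the MI classifier chooses the command
    return NC  # no MI-related activity: non-control state
```

Keeping the IC detector separate means the 3-class MI classifier never has to model the non-control state itself.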

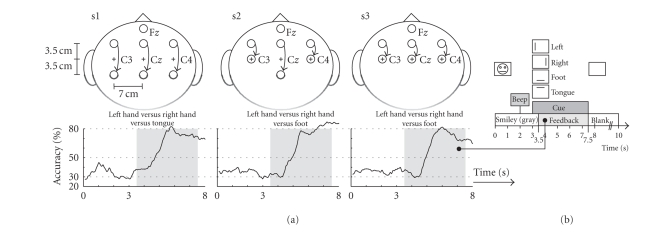

Three healthy subjects (2 males, 1 female, all right-handed) participated in the self-paced experiments. Subject-specific electrode positions (according to the international 10–20 system), motor imagery tasks, and the on-line classification accuracies of $\mathrm{CFR}_{\mathrm{MI}}$ after about 4 hours of cue-based 3-class feedback training are summarized in Figure 2(a). Three bipolar EEG channels (named C3, Cz, and C4) and three monopolar EOG channels (Figure 1(b)) were recorded from Ag/AgCl electrodes, analog-filtered between 0.5 and 100 Hz, and sampled at 250 Hz. Figure 2(b) shows the timing of the cue-based paradigm. Classifier $\mathrm{CFR}_{\mathrm{MI}}$ was realized by combining three pairwise trained Fisher's linear discriminant analysis (LDA) functions with a majority vote. A maximum of six band power (BP) features were extracted from the EEG by band-pass filtering the signal (5th-order Butterworth filter), squaring it, and applying a 1-second moving average filter. The logarithm of the averaged value was then computed ($\mathrm{BP}_{\log}$).

Figure 2.

(a) Electrode positions used for self-paced feedback experiments. Fz served as ground. The curves show the average classification accuracy (40 trials/class) of the specified motor imagery tasks. (b) Timing of the cue-based training paradigm. The task was to move a smiley-shaped cursor in the direction indicated by the cue.
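Two pieces of $\mathrm{CFR}_{\mathrm{MI}}$ described above can be sketched compactly: the $\mathrm{BP}_{\log}$ feature (squaring, 1-second moving average, logarithm) and the majority vote over three pairwise classifiers. The sketch assumes the input has already been band-pass filtered (the 5th-order Butterworth stage is not shown) and represents each trained pairwise LDA as a callable returning the winning class. How ties (one vote per class) were resolved is not stated; returning `None` is an assumption, and `bp_log` and `majority_vote` are hypothetical names.

```python
import math
from collections import deque


def bp_log(filtered, fs=250, avg_s=1.0):
    """Log band power of an already band-pass-filtered signal.

    Returns one value per sample once the moving-average window is full.
    """
    n = int(avg_s * fs)
    window = deque(maxlen=n)
    acc = 0.0  # running sum of the squared samples in the window
    out = []
    for x in filtered:
        sq = x * x
        if len(window) == n:
            acc -= window[0]  # oldest squared sample leaves the window
        window.append(sq)     # deque(maxlen=n) drops window[0] automatically
        acc += sq
        if len(window) == n:
            # floor guards against log(0) and tiny negative rounding residue
            out.append(math.log(max(acc / n, 1e-12)))
    return out


def majority_vote(x, pairwise):
    """pairwise: {(class_a, class_b): classifier}, each returning the winning class."""
    votes = {}
    for classifier in pairwise.values():
        winner = classifier(x)
        votes[winner] = votes.get(winner, 0) + 1
    best = max(votes.values())
    leaders = [c for c, v in votes.items() if v == best]
    return leaders[0] if len(leaders) == 1 else None  # None: tie (assumed handling)
```

With three classes and three pairwise classifiers, either one class wins two votes or the votes form a cycle with one vote each, so a tie rule is only needed for the cyclic case.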

$\mathrm{CFR}_{\mathrm{IC}}$ consisted of a single LDA. To identify the most discriminative $\mathrm{BP}_{\log}$ features, the distinction sensitive learning vector quantization (DSLVQ [19, 20]) method was used. DSLVQ is an extended learning vector quantizer (LVQ) that employs a weighted distance function for dynamic scaling and feature selection [20]. The major advantage of DSLVQ is that it requires neither expert knowledge nor any a priori knowledge or assumptions about the distribution of the data. To obtain reliable values for the discriminative power of each $\mathrm{BP}_{\log}$ feature, the DSLVQ method was repeated 100 times. For each DSLVQ run, 50% of the feature vectors were randomly selected for training and the remaining 50% were kept to test the classifier. The classifier was fine-tuned with DSLVQ type C training (10000 iterations). During this training, the learning rate $\alpha_t$ decreased from an initial value of $\alpha_t = 0.05$ to $\alpha_t = 0$. The DSLVQ relevance values were updated with the learning rate $\lambda_t = \alpha_t/10$.

DSLVQ was applied to the last session of the cue-based feedback training data (4 runs with 30 trials each; 10 per class). From each trial, ninety-three $\mathrm{BP}_{\log}$ features were extracted at two time points $t_1$ and $t_2 = t_1 + 1.0$ s around the time of best on-line classification accuracy during motor imagery: thirty-one for each bipolar EEG channel, covering 6–36 Hz with a bandwidth of 2 Hz (1 Hz overlap). Motor imagery tasks were pooled together and labeled as class IC (intentional control). For class NC (non-control), in contrast, $\mathrm{BP}_{\log}$ features were extracted at the time of cue onset ($t = 3.0$ s, see Figure 2(b)). This time was selected to prevent the classifier from detecting “unspecific” MI pre-activations resulting from the “beep” presented 1 second before the cue. Additionally, $\mathrm{BP}_{\log}$ features were extracted in 1-second steps from the 2-minute segment of artifact-free EEG used to set up the EMG detection (Figure 1(a)). The 6 most discriminative $\mathrm{BP}_{\log}$ features were selected and used to set up $\mathrm{CFR}_{\mathrm{IC}}$. To increase the robustness of $\mathrm{CFR}_{\mathrm{IC}}$, an additional threshold $\mathrm{TH}_{\mathrm{IC}}$ was introduced, which the classifier output had to exceed (fall below) for a subject-specific transition time $t_T$ before a switch from NC to IC (IC to NC) occurred. Raising or lowering the threshold is equivalent to shifting the optimal LDA decision boundary. In this way, nonstationary changes of the EEG from session to session could be compensated, at least to some extent.
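The threshold and transition-time rule described above can be sketched as a small state machine over the stream of LDA outputs. `th_ic` and `t_T` correspond to $\mathrm{TH}_{\mathrm{IC}}$ and $t_T$; the output rate `rate` (classifier outputs per second), the strict comparison `>`, and the name `ic_states` are assumptions.

```python
def ic_states(outputs, th_ic, t_T, rate=250):
    """Map CFR_IC outputs to a per-output IC (True) / NC (False) state.

    outputs -- stream of LDA outputs of CFR_IC
    th_ic   -- threshold TH_IC
    t_T     -- transition time in seconds
    rate    -- classifier outputs per second (assumed)
    """
    n_T = max(1, round(t_T * rate))
    state = False  # start in the non-control state
    run = 0        # consecutive outputs that argue for switching
    states = []
    for d in outputs:
        wants_ic = d > th_ic
        if wants_ic != state:
            run += 1
            if run >= n_T:  # condition held for the whole transition time
                state, run = wants_ic, 0
        else:
            run = 0  # condition interrupted: restart the count
        states.append(state)
    return states
```

Short excursions across the threshold that last less than $t_T$ therefore never change the state, which is what makes the detector more robust against isolated misclassifications.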

2.4. Navigating the freeSpace virtual environment

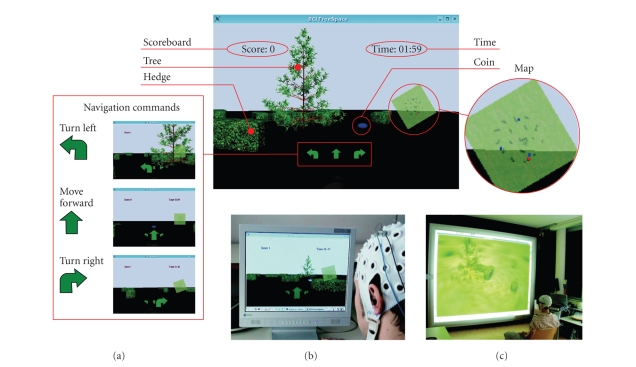

The “freeSpace” virtual environment (VE) was developed as a platform-independent application based on the Qt application framework (Trolltech, Oslo, Norway) and is intended as a test platform for self-paced BCIs. The virtual park consists of a flat meadow, several hedges, and a tree positioned in the middle (Figure 3(a)). As in computer games, coins were placed at fixed locations inside the park, and users had the task of navigating through the virtual world and collecting them. Coins were picked up automatically on contact; hedges and the tree had to be bypassed (collision detection). Four commands were implemented: “turn left,” “turn right,” “move forward,” and “no operation.” The user datagram protocol (UDP) was used for the communication between the BCI and the VE. For easier orientation, a map showing the current position was presented (Figure 3(a)). The VE was presented in first-person perspective on a conventional computer screen (Figure 3(b)). If suitable hardware is available, however, a stereoscopic 3D representation is also possible (Figure 3(c)). To provide feedback on received navigation commands and upcoming state switches as quickly as possible, the command arrows grew steadily (NC to IC) or shrank (IC to NC) during the transition time $t_T$ before the requested action was performed.

Figure 3.

(a) The freeSpace virtual environment. The screenshot shows the tree, some hedges, and a coin to collect. In the lower mid part of the screen, the navigation commands are shown. The number of collected coins and the elapsed time are presented in the upper left and right sides, respectively. For orientation, a map showing the current position (red dot) was presented. (b) Presentation of the VE on a conventional computer screen. (c) Stereoscopic visualization of the VE on a projection wall (perspective point of view).
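The BCI-to-VE link can be illustrated with one UDP datagram per navigation command. The message format (the command name as ASCII text) and the helper names `send_command` and `receive_command` are hypothetical; the text states only that UDP was used and which four commands exist.

```python
import socket

# Navigation commands of the freeSpace VE (from the text); the encoding is hypothetical.
COMMANDS = ("no operation", "turn left", "turn right", "move forward")


def send_command(sock, address, command):
    """Send one navigation command as a single UDP datagram."""
    if command not in COMMANDS:
        raise ValueError(f"unknown command: {command!r}")
    sock.sendto(command.encode("ascii"), address)


def receive_command(sock, bufsize=64):
    """Block until one datagram arrives and decode it as a command."""
    data, _sender = sock.recvfrom(bufsize)
    command = data.decode("ascii")
    if command not in COMMANDS:
        raise ValueError(f"unknown command: {command!r}")
    return command
```

UDP needs no connection setup but does not guarantee delivery; in this sketch a lost datagram would simply mean that one command is not executed.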

By autonomously selecting the navigation commands, subjects had to pick up the three coins within a 3-minute time limit. The starting position inside the VE was changed for each run. No instructions on how to reach the coins were given to the subjects. Two sessions with 3 feedback training runs each were recorded. Each session started with about 20 minutes of free training. At the beginning of this period, the subject-specific threshold $\mathrm{TH}_{\mathrm{IC}}$ and transition time $t_T$ (maximum value 1 second) were identified empirically based on the subjects' statements and fixed for the rest of the session. At the end of each session, subjects were interviewed about the classification performance they had subjectively experienced. The BCI and the VE ran on different computers.

For more details on user training, self-paced BCI system setup, and evaluation of the freeSpace VE, see [21].

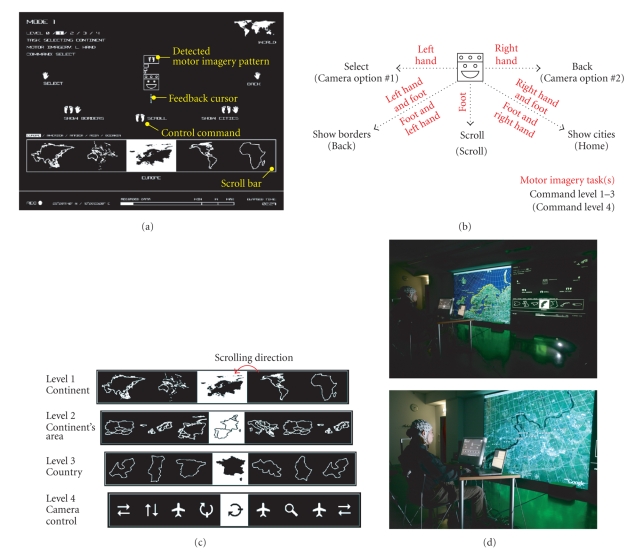

2.5. Operating Google Earth: Brainloop

The Brainloop interface for Google Earth was implemented in Java (Sun Microsystems Inc., Santa Clara, CA, USA). The communication with the BCI was realized via UDP, and the communication with Google Earth via TCP/IP. A multilevel selection procedure was created to access the full functional range of Google Earth. Figure 4(a) shows a screenshot of the interface. The user was represented by an icon positioned in the center of the display. The commands at the user's disposal were placed around this icon and could be selected by moving the feedback cursor in the desired direction. The three main commands “scroll,” “select,” and “back” were selected by moving the cursor to the left, right, or down, respectively. After each command, Google Earth's virtual camera moved to the corresponding position. By combining the downward cursor movement with a left or right movement, the commands “show borders” and “show cities” were activated (Figure 4(b)). During the transition time $t_T$, the feedback cursor moved towards the desired control command (NC to IC) or back to the user icon in the middle of the screen (IC to NC). Once the feedback cursor was close to the command, the command was highlighted and accepted. Figure 4(c) summarizes the four hierarchically arranged selection levels. Levels 1 to 3 were used to select the continent, the continental area, and the country. The scroll bar at level 4 contained commands for the virtual camera (“scan,” “move,” “pan,” “tilt,” and “zoom”). At this level, the assignment of the commands was also changed (see Figure 4(b)). Every selection was made by scrolling through the available options and picking the highlighted one. While the “scroll” command was selected, the options scrolled from right to left at a speed of approximately 2 items/s. For more details on the interface, see [22].

Figure 4.

(a) Screenshot of the “Brainloop” interface. The upper part of the screen was used to select the command. The available options were presented in a scroll bar in the lower part of the screen. (b) Available commands for operating Google Earth and used motor imagery tasks. (c) The four levels of selections. (d) Photographs of the “Brainloop” performance.
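The scroll-and-select logic of the Brainloop interface can be modeled as a walk through a nested menu. In this hypothetical sketch the class name, the menu contents, the behavior of “back” (return to the previous level), and the wrap-around at the end of the scroll bar are assumptions; the four levels (continent, area, country, camera command) and the scroll speed of about 2 items/s follow the description above.

```python
class SelectionMenu:
    """Hypothetical model of a hierarchical scroll-and-select menu.

    `tree` is a nested dict; a value of None marks a terminal option
    (e.g. a camera command at the last level).
    """

    def __init__(self, tree, items_per_s=2.0):
        self.tree = tree
        self.items_per_s = items_per_s  # scroll speed (about 2 items/s in the text)
        self.path = []                  # options chosen on previous levels
        self.index = 0                  # highlighted option on the current level
        self._carry = 0.0               # fractional scroll progress

    def _node(self):
        node = self.tree
        for key in self.path:
            node = node[key]
        return node

    def options(self):
        return list(self._node())

    def highlighted(self):
        return self.options()[self.index]

    def scroll(self, seconds):
        """Advance the highlight while the 'scroll' command is active."""
        self._carry += seconds * self.items_per_s
        steps = int(self._carry)
        self._carry -= steps
        self.index = (self.index + steps) % len(self.options())  # wrap-around assumed

    def select(self):
        """Descend one level, or return the terminal option that was picked."""
        choice = self.highlighted()
        child = self._node()[choice]
        if child is None:
            return choice
        self.path.append(choice)
        self.index, self._carry = 0, 0.0
        return None

    def back(self):
        """Return to the previous level."""
        if self.path:
            self.path.pop()
        self.index, self._carry = 0, 0.0
```

For example, with continents at the top level, holding “scroll” for half a second moves the highlight by one item before “select” descends to the continental areas.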

Subject s2 took part in self-paced on-line experiments. Figure 4(b) summarizes the MI tasks used to operate the feedback cursor. After three consecutive days of training (about 2.5 hours/day), a media performance lasting about 40 minutes was presented to an audience on December 14, 2006. Figure 4(d) shows pictures taken during the Brainloop media performance. Because objective performance measures are very difficult to compute for self-paced BCIs, the subject was asked after the media performance to self-report the BCI classification accuracy.

3. RESULTS

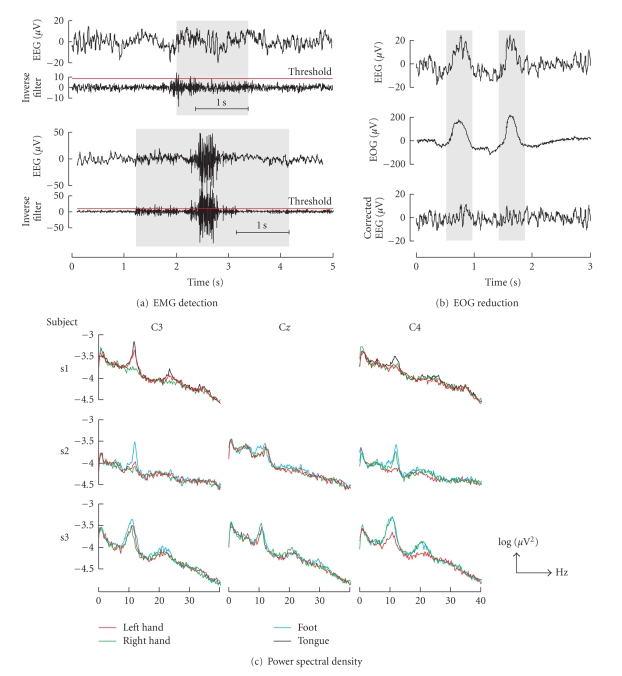

The percentage of EEG samples classified as EMG artifacts during the training procedure was less than 0.9% for each subject. Figure 5(a) shows example EMG detections. The method works well for minor (upper plot) as well as massive muscle activity (lower plot). The power density spectrum for each channel and motor imagery task is summarized in Figure 5(c). It was computed by averaging the power spectra of the forty motor imagery trials per class recorded during the last cue-based feedback session. A Hamming window was applied to each 4-second motor imagery segment (see Figure 2(b)) before computing its discrete Fourier transform. The spectra show clear peaks in the upper-alpha (10–12 Hz) and upper-beta (20–25 Hz) bands.

Figure 5.

(a) EMG detection example. The EEG and the inverse filter output are shown for minor (upper part) and massive (lower part) EMG artifacts. (b) Example of EOG reduction. The recorded EEG, EOG channel #2, and the corrected EEG are presented in the upper, middle, and lower parts, respectively. (c) Logarithmic power density spectra of cue-based motor imagery trials (40 each) after 4 hours of feedback training.
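The spectral estimate described above (Hamming window, discrete Fourier transform, averaging over trials, logarithmic scale) can be sketched as follows. A naive DFT is used for clarity, so an FFT routine would be preferable for real 4-second segments; the power scaling, the choice to average before taking the logarithm, and the function names are assumptions.

```python
import cmath
import math


def hamming(n):
    """Symmetric Hamming window of length n."""
    return [0.54 - 0.46 * math.cos(2 * math.pi * k / (n - 1)) for k in range(n)]


def power_spectrum(x, fs):
    """One-sided power spectrum of a Hamming-windowed segment (naive DFT)."""
    n = len(x)
    xw = [v * w for v, w in zip(x, hamming(n))]
    freqs, power = [], []
    for k in range(n // 2 + 1):
        coef = sum(xw[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        freqs.append(k * fs / n)
        power.append(abs(coef) ** 2 / (fs * n))
    return freqs, power


def mean_log_spectrum(trials, fs=250):
    """Average the spectra of all trials of one class, then take 10*log10."""
    freqs, total = None, None
    for x in trials:
        freqs, p = power_spectrum(x, fs)
        total = p if total is None else [a + b for a, b in zip(total, p)]
    # the floor only protects log10 against an exact zero
    return freqs, [10 * math.log10(max(v / len(trials), 1e-300)) for v in total]
```

With 4-second segments at 250 Hz the frequency resolution is 0.25 Hz, which is fine enough to separate the upper-alpha and upper-beta peaks.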

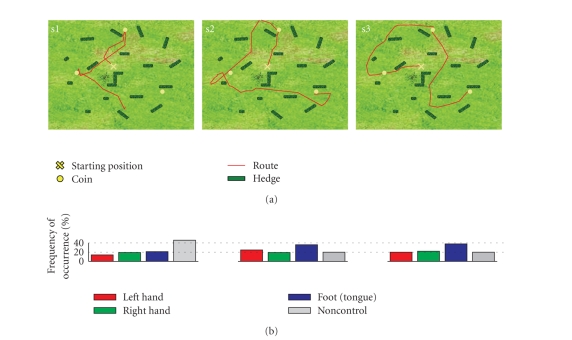

Figure 6.

(a) Map of the freeSpace virtual environment showing the best performance (covered distance) for each subject. (b) Frequencies of occurrence of the detected motor-imagery tasks (selected navigation commands).

The EOG reduction method is able to reduce about 80% of EOG artifacts [18]. The example in Figure 5(b) shows clearly that eye blinks were removed from the EEG.

The relevant frequency components for discriminating between IC and NC identified by DSLVQ for each subject are summarized in Table 1. The LDA classifiers trained on these features achieved classification accuracies (10 × 10 cross-validation) of 77%, 84%, and 78% for subjects s1, s2, and s3, respectively [21].

Table 1.

Relevant frequency components in Hz identified by DSLVQ for the discrimination of intentional control (IC) and the non-control state (NC).

| Subject | C3 | Cz | C4 |
|---|---|---|---|
| s1 | 12–14, 15–17, 20–22, 25–27 | 9–11, 21–23 | none |
| s2 | 12–14, 19–21, 27–29 | 9–11, 11–13 | 21–23 |
| s3 | 8–10, 16–18 | 8–10 | 15–17, 24–26 |
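The 10 × 10 cross-validation of a two-class LDA can be sketched as follows. The fold construction (unstratified random folds, re-shuffled for each repetition), the small ridge term added to the within-class scatter matrix, and all function names are assumptions, since these details are not given here.

```python
import random


def solve(A, rhs):
    """Solve A x = rhs (Gaussian elimination with partial pivoting)."""
    n = len(A)
    M = [list(row) + [r] for row, r in zip(A, rhs)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def lda_train(X, y, reg=1e-6):
    """Fisher LDA for labels 0/1; returns (w, bias), predict 1 if w.x + bias > 0."""
    d = len(X[0])
    groups = {c: [x for x, lab in zip(X, y) if lab == c] for c in (0, 1)}
    means = {c: [sum(col) / len(g) for col in zip(*g)] for c, g in groups.items()}
    sw = [[reg if i == j else 0.0 for j in range(d)] for i in range(d)]  # ridge term
    for c, g in groups.items():
        for x in g:
            diff = [a - b for a, b in zip(x, means[c])]
            for i in range(d):
                for j in range(d):
                    sw[i][j] += diff[i] * diff[j]  # within-class scatter
    w = solve(sw, [m1 - m0 for m0, m1 in zip(means[0], means[1])])
    midpoint = [(m0 + m1) / 2 for m0, m1 in zip(means[0], means[1])]
    bias = -sum(wi * mi for wi, mi in zip(w, midpoint))
    return w, bias


def lda_predict(model, x):
    w, bias = model
    return 1 if sum(a * b for a, b in zip(w, x)) + bias > 0 else 0


def cross_validate(X, y, folds=10, repeats=10, seed=0):
    """Mean test accuracy over `repeats` x `folds` train/test splits."""
    rng = random.Random(seed)
    order = list(range(len(X)))
    accuracies = []
    for _ in range(repeats):
        rng.shuffle(order)
        for f in range(folds):
            test = order[f::folds]
            held_out = set(test)
            train = [i for i in order if i not in held_out]
            model = lda_train([X[i] for i in train], [y[i] for i in train])
            hits = sum(lda_predict(model, X[i]) == y[i] for i in test)
            accuracies.append(hits / len(test))
    return sum(accuracies) / len(accuracies)
```

Repeating the 10-fold split ten times with different shuffles reduces the dependence of the reported accuracy on one particular partition of the trials.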

Each subject successfully navigated through the freeSpace VE and collected coins. Subjects s2 and s3 succeeded in collecting all three coins within the 3-minute time limit; subject s1 collected only 2 of the 3 coins. While s1 and s2 were able to improve their performance (reflected in the covered distance and the number of collected coins), the results of s3 in session two were poor compared to the first session. The best performance of each subject out of the 6 runs recorded on 2 different days is shown in Figure 6. The covered distances (Figure 6(a)) show that the subjects chose different routes to collect the coins, depending partly on their ability to control the BCI. The corresponding distribution of BCI classifications in Figure 6(b) shows that each class occurred during the experiment. Interviews with the subjects confirmed that all 3 motor imagery mental states as well as NC were deliberately used for navigation. The “no operation” command (non-control state) was needed during non-MI-related mental activity, for example, orientation or route planning, or whenever subjects needed a break.

The Brainloop interface is an intuitive graphical user interface for Google Earth. The developed selection procedure enables users to quickly pick a country and manipulate the virtual camera. Although an audience was present during the performance, subject s2 succeeded in operating Google Earth. After the performance, the subject stated that most of the time the BCI correctly detected the intended motor imagery patterns as well as the non-control state.

4. DISCUSSION

The presented BCI automatically reduces EOG artifacts, detects EMG activity, and supports the self-paced operation mode. Each of these features is important for the realization of practical BCI systems. Additionally, only three bipolar EEG channels were used, which makes the system easier to use and less expensive than a system with more channels.

The proposed EOG reduction and EMG detection methods are simple to implement, computationally inexpensive, and can easily be adapted at the beginning of each feedback session. One open issue, however, is the long-term stability of the methods. Both methods are part of the BioSig open-source toolbox [23] and are freely available under the GNU General Public License.

Since the proposed EOG reduction method modifies the recorded EEG, its influence on classification accuracy was analyzed. DSLVQ was applied to the EEG (cue-based training) before and after EOG reduction. The computed DSLVQ feature relevances showed that the same frequency components were relevant before and after EOG reduction. A statistical analysis of the DSLVQ classification results revealed no significant difference (P > 0.05). This result was not surprising, since EOG artifacts are most pronounced at frequencies below 4 Hz (whereas we analyzed the range 8–30 Hz) and over anterior head regions [14].

Although neither method directly affects the classification performance of the system, the robustness of the BCI was increased. Artifacts did not cause wrong system responses; they were either reduced or detected and reported back. After an artifact is detected, different options are possible, including a “pause mode” (or “freeze mode”) or a “reset” of the system to its initial state. In both cases, the BCI suspends execution. In the former case, the BCI resumes working after a predefined period of artifact-free EEG; in the latter case, the system resets itself. The choice, however, primarily depends on the robustness of the selected signal processing method in the presence of artifacts.

Even though very simple feature extraction and classification methods were used to create the self-paced system, subjects reported that they were quite satisfied with the BCI classification performance. An open question is how to determine the optimal detection threshold $\mathrm{TH}_{\mathrm{IC}}$ and transition time $t_T$. We used an empirical approach and adjusted the parameters according to the subjects' statements, which is only a suboptimal solution.

For cue-based systems, a variety of performance measures exist. Since only a few groups investigate asynchronous or self-paced systems [24–26], appropriate benchmark tests and performance measures are not yet available [27].

The “freeSpace” paradigm was introduced because no instructions, except the overall aim of collecting coins, had to be given to the subjects. The paradigm is motivating and entertaining and, most importantly, offers an unlimited number of ways to collect the coins.

The Brainloop interface provides a new way to interact with complex applications such as Google Earth. By remapping commands and options, the interface can also be customized for other applications. Self-report was selected to characterize BCI performance, since performance is difficult to measure objectively with asynchronous BCIs. Interestingly, there was no need to adapt the detection threshold $\mathrm{TH}_{\mathrm{IC}}$ and the transition time $t_T$; the values fixed during the last freeSpace session were used.

The results of the experiments show that subjects learned to successfully use applications by autonomously switching between different mental states and thereby operating the self-paced Graz-BCI.

ACKNOWLEDGMENTS

This work is supported by the “Fonds zur Förderung der Wissenschaftlichen Forschung” in Austria, project P16326-BO2 and funded in part by the EU research Project no. PRESENCCIA IST 2006-27731. The authors would also like to thank Suncica Hermansson, Brane Zorman, Seppo Gründler, and Markus Rapp for helping to realize the Brainloop interface, and Brendan Allison for proofreading the manuscript.

References

1. Wolpaw J, Birbaumer N, McFarland D, Pfurtscheller G, Vaughan T. Brain-computer interfaces for communication and control. Clinical Neurophysiology. 2002;113(6):767–791. doi: 10.1016/s1388-2457(02)00057-3.
2. Pfurtscheller G, Neuper C, Birbaumer N. Human brain-computer interface (BCI). In: Riehle A, Vaadia E, editors. Motor Cortex in Voluntary Movements: A Distributed System for Distributed Functions. Boca Raton, Fla, USA: CRC Press; 2005. pp. 367–401.
3. Birbaumer N. Brain-computer-interface research: coming of age. Clinical Neurophysiology. 2006;117(3):479–483. doi: 10.1016/j.clinph.2005.11.002.
4. Allison BZ, Wolpaw EW, Wolpaw JR. Brain-computer interface systems: progress and prospects. Expert Review of Medical Devices. 2007;4(4):463–474. doi: 10.1586/17434440.4.4.463.
5. Sellers EW, Donchin E. A P300-based brain-computer interface: initial tests by ALS patients. Clinical Neurophysiology. 2006;117(3):538–548. doi: 10.1016/j.clinph.2005.06.027.
6. Piccione F, Giorgi F, Tonin P, et al. P300-based brain computer interface: reliability and performance in healthy and paralysed participants. Clinical Neurophysiology. 2006;117(3):531–537. doi: 10.1016/j.clinph.2005.07.024.
7. Birbaumer N, Kübler A, Ghanayim N, et al. The thought translation device (TTD) for completely paralyzed patients. IEEE Transactions on Rehabilitation Engineering. 2000;8(2):190–193. doi: 10.1109/86.847812.
8. Pfurtscheller G, Müller-Putz GR, Pfurtscheller J, Gerner HJ, Rupp R. ‘Thought’—control of functional electrical stimulation to restore hand grasp in a patient with tetraplegia. Neuroscience Letters. 2003;351(1):33–36. doi: 10.1016/s0304-3940(03)00947-9.
9. Müller-Putz GR, Scherer R, Pfurtscheller G, Rupp R. EEG-based neuroprosthesis control: a step towards clinical practice. Neuroscience Letters. 2005;382(1-2):169–174. doi: 10.1016/j.neulet.2005.03.021.
10. Neuper C, Müller-Putz GR, Kübler A, Birbaumer N, Pfurtscheller G. Clinical application of an EEG-based brain-computer interface: a case study in a patient with severe motor impairment. Clinical Neurophysiology. 2003;114(3):399–409. doi: 10.1016/s1388-2457(02)00387-5.
11. Neuper C, Müller-Putz GR, Scherer R, Pfurtscheller G. Motor imagery and EEG-based control of spelling devices and neuroprostheses. Progress in Brain Research. 2006;159:393–409. doi: 10.1016/S0079-6123(06)59025-9.
12. Cincotti F, Bianchi L, Birch G, et al. BCI Meeting 2005-Workshop on technology: hardware and software. IEEE Transactions on Neural Systems and Rehabilitation Engineering. 2006;14(2):128–131. doi: 10.1109/TNSRE.2006.875584.
13. Pfurtscheller G, Müller-Putz GR, Schlögl A, et al. 15 years of BCI research at Graz university of technology: current projects. IEEE Transactions on Neural Systems and Rehabilitation Engineering. 2006;14(2):205–210. doi: 10.1109/TNSRE.2006.875528.
14. Fatourechi M, Bashashati A, Ward RK, Birch GE. EMG and EOG artifacts in brain computer interface systems: a survey. Clinical Neurophysiology. 2007;118(3):480–494. doi: 10.1016/j.clinph.2006.10.019.
15. McFarland DJ, Sarnacki WA, Vaughan TM, Wolpaw JR. Brain-computer interface (BCI) operation: signal and noise during early training sessions. Clinical Neurophysiology. 2005;116(1):56–62. doi: 10.1016/j.clinph.2004.07.004.
16. Mason SG, Bashashati A, Fatourechi M, Navarro KF, Birch GE. A comprehensive survey of brain interface technology designs. Annals of Biomedical Engineering. 2007;35(2):137–169. doi: 10.1007/s10439-006-9170-0.
17. Schlögl A. The Electroencephalogram and the Adaptive Autoregressive Model: Theory and Applications. Aachen, Germany: Shaker; 2000.
18. Schlögl A, Keinrath C, Zimmermann D, Scherer R, Leeb R, Pfurtscheller G. A fully automated correction method of EOG artifacts in EEG recordings. Clinical Neurophysiology. 2007;118(1):98–104. doi: 10.1016/j.clinph.2006.09.003.
19. Pregenzer M, Pfurtscheller G. Frequency component selection for an EEG-based brain to computer interface. IEEE Transactions on Rehabilitation Engineering. 1999;7(4):413–419. doi: 10.1109/86.808944.
20. Pregenzer M. DSLVQ. Ph.D. thesis. Graz, Austria: Graz University of Technology; 1998.
21. Scherer R, Lee F, Schlögl A, Leeb R, Bischof H, Pfurtscheller G. Towards self-paced brain computer communication: navigation through virtual worlds. To appear in IEEE Transactions on Biomedical Engineering. doi: 10.1109/TBME.2007.903709.
22. Grassi D. Brainloop media performance. June 2007. http://www.aksioma.org/brainloop/index.html.
23. Schlögl A. BioSig—an open source software package for biomedical signal processing. http://biosig.sourceforge.net/
24. Scherer R, Müller-Putz GR, Neuper C, Graimann B, Pfurtscheller G. An asynchronously controlled EEG-based virtual keyboard: improvement of the spelling rate. IEEE Transactions on Biomedical Engineering. 2004;51(6):979–984. doi: 10.1109/TBME.2004.827062.
25. Millán JDR, Mouriño J. Asynchronous BCI and local neural classifiers: an overview of the adaptive brain interface project. IEEE Transactions on Neural Systems and Rehabilitation Engineering. 2003;11(2):159–161. doi: 10.1109/TNSRE.2003.814435.
26. Borisoff J, Mason S, Bashashati A, Birch G. Brain-computer interface design for asynchronous control applications: improvements to the LF-ASD asynchronous brain switch. IEEE Transactions on Biomedical Engineering. 2004;51(6):985–992. doi: 10.1109/TBME.2004.827078.
27. Mason S, Kronegg J, Huggins J, Fatourechi M, Schlögl A. Evaluating the performance of self-paced brain computer interface technology. Vancouver, Canada: Neil Squire Society; 2006. http://www.bci-info.tugraz.at/Research_Info/documents/articles/