Abstract

Purpose

We characterized variation in adherence to quality measures of external beam radiotherapy (EBRT) for localized prostate cancer and its relation to patient and provider characteristics in a population-based, representative sample of US men.

Methods and Materials

Using data from the linked Surveillance, Epidemiology, and End Results (SEER)-Medicare program, we evaluated EBRT quality measures proposed by a RAND expert panel of physicians among men aged 65 or older who were diagnosed from 2000 to 2002 with localized prostate cancer and treated with primary EBRT. We assessed adherence to five EBRT quality measures amenable to analysis using SEER-Medicare data: 1) use of conformal radiotherapy treatment planning; 2) use of high-energy (>10MV) photons; 3) use of custom immobilization; 4) completion of two follow-up visits with a radiation oncologist in the year following therapy; and 5) radiation oncologist board certification.

Results

Of the 11,674 patients, 85% received conformal radiotherapy treatment planning, 75% received high-energy photons, and 97% received custom immobilization. One-third of patients completed two follow-up visits with a radiation oncologist, though 91% had at least one visit with a urologist or a radiation oncologist. The majority of patients (85%) were treated by a board certified radiation oncologist.

Conclusions

Overall high adherence to EBRT quality measures masked substantial variation by geography, socioeconomic status in area of residence, and teaching affiliation of the radiotherapy facility. Future research should examine reasons for variation in these measures and whether variation is associated with important clinical outcomes.

Keywords: Prostate cancer, quality of care, external beam radiotherapy, SEER-Medicare, health services research

INTRODUCTION

External beam radiotherapy (EBRT) is a potentially curative therapy for patients with clinically localized prostate cancer. The delivery of EBRT is highly technical, and, as in other technical fields of medicine, quality assessment and improvement are essential. Previous studies have identified variation in radiotherapy quality in clinical trials1, 2, prompting the establishment of rigorous quality assurance procedures within the confines of the research setting.3, 4 Yet measuring and improving cancer care quality for the 97% of patients who are cared for outside the clinical trial context has become a national priority5, 6, and several groups have initiated programs to examine quality across the spectrum of cancer care.7

Given the substantial healthcare and economic burdens of treating localized prostate cancer8, attention has been directed toward characterizing variation in the quality of definitive therapies for this disease.9 In 2000, the RAND Corporation, a nonprofit institution that undertakes health policy research, assembled an expert panel of physicians that proposed an inventory of quality measures for the treatment of localized prostate cancer.10, 11 The project endorsed 25 structure and process measures germane to the evaluation of prostate cancer EBRT quality. Of these 25 measures, 9 obtained panel agreement on both validity and feasibility, reflecting a high level of consensus as to their appropriateness for quality assessment using medical records.11

However, initial efforts relying on medical record review to evaluate the highly technical processes of EBRT have confronted challenges stemming from the inaccessibility of radiotherapy records. One study of 186 men at a single academic institution found that documentation of EBRT planning was not accessible in the clinical data available to investigators.12 Radiotherapy documentation is often managed and stored within outpatient radiotherapy facilities, separate from inpatient hospital documentation, and is not consistently included in patient charts. Therefore, a knowledge gap has emerged in the quality assessment of EBRT, despite its substantial use as definitive treatment for patients with localized prostate cancer.

Because radiation oncologists and radiotherapy facilities must report specific technical information on radiotherapy delivery for reimbursement, we anticipated that adherence to RAND-proposed measures of radiotherapy quality might be discernible in billing claims data. Accordingly, we undertook this study to characterize variation in adherence to RAND-proposed EBRT quality measures using the linked Surveillance, Epidemiology and End Results (SEER)-Medicare database, a population-based source of information on patients 65 years and older with cancer, and, secondarily, to examine the relation between variation in adherence and patient and provider characteristics.

METHODS AND MATERIALS

Data Sources

The study cohort comprised patients from the SEER-Medicare database, which links patient demographic and tumor-specific data collected by SEER cancer registries to health care claims for Medicare enrollees. Information on incident cancer cases was available from 16 cancer registries from 1994 through 2002, covering 26% of the US population.13 Case contributions from the Greater California, Kentucky, Louisiana, and New Jersey registries began in 2000. SEER registries collect data on each patient’s cancer site, extent of disease, histology, date of diagnosis, and initial treatment. We staged patients according to the American Joint Committee on Cancer (AJCC) Staging Manual, Sixth Edition.14

The Medicare program provides health care benefits to 97% of the US population 65 years old or older. SEER data have been linked to Medicare claims for inpatient and outpatient care, which provide information about initial treatment and allow patients to be followed longitudinally. Approximately 94% of patients in SEER aged 65 years or older have been successfully linked with their Medicare claims.15

Cohort Definition

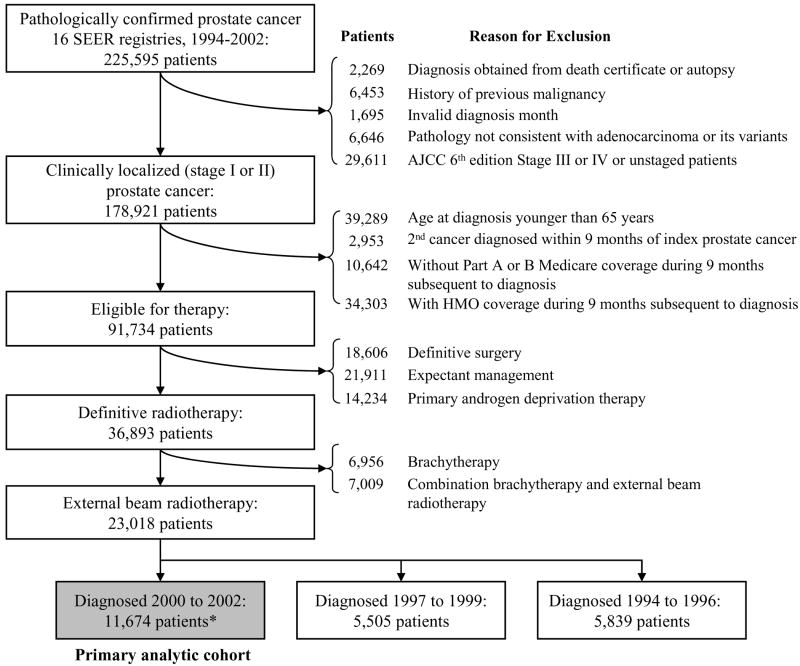

Figure 1 shows the criteria used to create the cohort of 23,018 patients with localized prostate cancer diagnosed between 1994 and 2002 who received primary EBRT. These patients were further stratified into three cohorts by year of diagnosis: 1994-96, 1997-99, and 2000-02. To characterize adherence to EBRT quality measures (our primary aim) in a contemporary cohort, we focused on the 11,674 men diagnosed from 2000 to 2002 (Figure 1). To report secular trends in adherence, we considered men in all three cohorts by year of diagnosis.

Figure 1.

Definition of study cohort

Text legend:

* Includes cases from Greater California, Kentucky, Louisiana, and New Jersey registries starting in 2000.

Note: Patients with inadequate Medicare records were excluded from the analysis. Medicare Part A and B coverage is required to ascertain treatment from Medicare records. Patients with health maintenance organization (HMO) coverage were also excluded because Medicare data do not include records for HMO enrollees.

We classified initial therapy as definitive surgery, definitive radiotherapy (defined as EBRT, brachytherapy (BRT), or combined BRT and EBRT), primary androgen deprivation therapy (PADT), or expectant management, based on treatment occurring within 9 months after diagnosis. This window was chosen to allow sufficient time after diagnosis for multidisciplinary consultation and initiation of adjuvant androgen deprivation therapy. We identified corresponding SEER variables and Medicare diagnosis and procedure billing codes for each therapy using International Classification of Diseases, Ninth Revision (ICD-9)16 and Current Procedural Terminology (CPT)17 codes, and searched for these codes in SEER data and Medicare claims. To enhance specificity, we required stringent evidence of radiotherapy delivery in ICD-9 or CPT codes. Codes used for classification of treatment are available from the authors on request.

Quality Measure Selection

Our objective was to evaluate the extent to which observed practice adheres to RAND-proposed EBRT structure and process quality measures.18 We chose a priori to evaluate only those EBRT structure and process measures that attained RAND expert panel agreement on both feasibility and validity and were amenable to analysis with SEER-Medicare data. In the RAND report, a measure was considered valid if adequate scientific evidence or professional consensus supported it as one that would differentiate lower-quality from higher-quality care. A measure was considered feasible if the information necessary to assess it could be obtained from a medical record, cancer registry, or other systematically collected data source. In addition, the RAND report characterized each measure by whether there was agreement or disagreement among the panel as to its validity or feasibility.11

Nine EBRT measures (two structure and seven process measures) attained RAND panel agreement on both feasibility and validity. Of these, one structure measure and four process measures were suitable for SEER-Medicare analysis (Table 1, "Study inclusion" = Yes). Therefore, a total of five quality measures met our selection criteria: 1) use of conformal radiotherapy treatment planning; 2) use of high-energy (>10MV) photons; 3) use of custom immobilization; 4) completion of two follow-up visits with a radiation oncologist in the year following therapy; and 5) radiation oncologist board certification.

Table 1.

RAND-proposed EBRT Quality Measures with Agreement on Both Feasibility and Validity and SEER-Medicare Suitability

| Measure | Evidence level* | Suitable for claims-based analysis | Study inclusion | Strategy for identification | Average reimbursement, 2002¥ |
|---|---|---|---|---|---|
| Structure measures | | | | | |
| 1. Radiation oncologist board certification | III | Yes | Yes | Physician UPIN; HCFA specialty code 92 or HCFA and AMA specialty codes 30 and “RO”; AMA board certification codes 1019, 1034, 1033, and 1060 | N/A |
| 2. Radiation oncologist prostate case volume | II | No (total case volume not documented in Medicare) | No | | |
| Process measures | | | | | |
| 1. Use of conformal radiotherapy treatment planning | II | Yes | Yes | 77295 (conformal planning); 77301, G0178 (IMRT planning) | $1,468 per treatment course (conformal); $2,204 per treatment course (IMRT) |
| 2. Use of high-energy (>10 MV) photons | II | Yes | Yes | 77404-06, 77409-11, or 77414-16 | $3,429 per treatment course¶ |
| 3. Use of custom immobilization during radiotherapy | II | Yes | Yes | 77334 | $427 per immobilization |
| 4. Completion of two follow-up visits with radiation oncologist in first posttreatment year | III | Yes | Yes | 9921x, 9922x, 9923x, 9924x, 9925x, 9938x, 9939x | $127 per visit |
| 5. Digital rectal examination, pretreatment clinical stage, total PSA, Gleason grade | II | No (not reliably reported in SEER or Medicare) | No | | |
| 6. Documented assessment of comorbidity | II | No (not documented in SEER or Medicare) | No | | |
| 7. Delivering escalated doses (70–80 Gy) with conformal radiation therapy | II | No (not reported in SEER or Medicare) | No | | |

Text legend: CPT, Current Procedural Terminology.

* Evidence level: II, nonrandomized controlled clinical trials, cohort or case-control studies, or multiple time series; III, expert opinion.

¥ Average 2002 reimbursement calculated among cohort members from Medicare claims.

¶ Beam energy claims are reported for each fraction of radiotherapy delivered. Average reimbursement per treatment course was calculated as the average reimbursement per patient among patients in 2002 who received high-energy photons.

Primary Endpoints

The primary endpoints were adherence to the five EBRT quality measures proposed by the RAND expert panel that were amenable to analysis using SEER-Medicare data. We identified conformal radiotherapy treatment planning, high-energy (>10MV) photons, and custom immobilization using the strategy outlined in Table 1. CPT codes for conformal treatment planning are specific because they require three-dimensional beam’s eye view volume reconstruction and calculation of dose-volume histograms as part of the radiotherapy simulation procedure.17, 19 CPT codes for each technical measure were in use throughout the study period, except for the IMRT treatment planning codes, which were introduced in 2001.
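The identification strategy for the three technical measures amounts to set membership over a patient's billed procedure codes. The sketch below is a minimal illustration using the code lists transcribed from Table 1; it is not the authors' code. The input format (a flat list of code strings per patient) and the function name are hypothetical, and expanding ranges such as "77404-06" as inclusive numeric ranges is our reading of Table 1. The sketch treats a missing code as "not performed", which mirrors the conservative defaults in the Table 3 footnotes but omits the exception for IMRT patients with unreported photon energy.

```python
# Code sets transcribed from Table 1. Ranges written there as "77404-06" etc.
# are expanded as inclusive numeric ranges (an interpretive assumption).
CONFORMAL_PLANNING = {"77295", "77301", "G0178"}  # 3D conformal or IMRT planning
HIGH_ENERGY_DELIVERY = {
    str(code)
    for low, high in [(77404, 77406), (77409, 77411), (77414, 77416)]
    for code in range(low, high + 1)
}
CUSTOM_IMMOBILIZATION = {"77334"}


def technical_measures(claim_codes):
    """Flag the three technical measures for one patient.

    claim_codes: iterable of procedure code strings billed during the
    patient's radiotherapy course (hypothetical input format).
    A measure with no matching code is treated as not performed.
    """
    codes = set(claim_codes)
    flags = {
        "conformal": bool(codes & CONFORMAL_PLANNING),
        "high_energy": bool(codes & HIGH_ENERGY_DELIVERY),
        "custom_immobilization": bool(codes & CUSTOM_IMMOBILIZATION),
    }
    # Composite technical measure: all three must be present.
    flags["composite"] = all(flags.values())
    return flags
```

For example, a patient billed 77295, 77414, and 77334 is flagged as adherent to all three technical measures and therefore to the composite.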

We identified the number of follow-up visits with a radiation oncologist in the 12 months after completion of radiotherapy using Medicare claims for radiation oncologist visits (Table 1). We used physician specialty codes from Medicare claims and the American Medical Association (AMA) Physician Masterfile to identify radiation oncologists.20 Medicare claims were linked to the AMA Masterfile using Unique Physician Identifier Numbers (UPINs). Following the RAND quality measure, we dichotomized follow-up as ≥2 versus <2 visits.10, 11 For comparison, we also identified follow-up visits with urologists in the year after radiotherapy using the same approach with Medicare claims and AMA data. We obtained the board certification status of the treating radiation oncologist from the AMA Masterfile.
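The follow-up measure can be sketched as a count of qualifying visits in a post-treatment window, followed by the ≥2 dichotomy. This is a minimal illustration under stated assumptions: visits are claims whose evaluation and management codes match the Table 1 prefixes, billed by a physician already identified as a radiation oncologist (the UPIN linkage is taken as done), and dated within 365 days after the end of radiotherapy (treating "12 months" as 365 days is our assumption). Each qualifying claim counts as one visit; the text does not say how same-day claims were handled. The tuple layout and function name are hypothetical.

```python
from datetime import date, timedelta

# Evaluation and management code prefixes from Table 1 (9921x, 9922x, ...).
FOLLOW_UP_PREFIXES = ("9921", "9922", "9923", "9924", "9925", "9938", "9939")


def meets_follow_up_measure(rt_end, visits, window_days=365, required=2):
    """Count radiation oncologist visits after radiotherapy and dichotomize.

    rt_end: date radiotherapy was completed.
    visits: list of (visit_date, code, is_radiation_oncologist) tuples,
        a hypothetical layout for claims already linked to physician specialty.
    Returns (visit_count, adheres), where adheres means count >= required.
    """
    window_end = rt_end + timedelta(days=window_days)
    count = sum(
        1
        for visit_date, code, is_rad_onc in visits
        if is_rad_onc
        and rt_end < visit_date <= window_end
        and code.startswith(FOLLOW_UP_PREFIXES)
    )
    return count, count >= required
```

Passing the urologist flag instead of the radiation oncologist flag gives the comparison analysis of urologist follow-up described above.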

For each patient, “adherence” to a radiotherapy quality measure was defined as having Medicare claims indicating that the measure was performed. We calculated an “adherence rate” for each measure, in which the numerator was the number of adherent patients and the denominator was the number of patients in the primary analytic cohort diagnosed from 2000 to 2002, less any patients excluded from that particular measure (exclusions are listed in the Table 3 footnotes). We also calculated adherence to a composite technical measure, defined as the proportion of patients adhering to all three technical measures (conformal radiotherapy, high-energy photons, and custom immobilization).
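The adherence rate and the composite measure can be sketched as simple proportions over per-patient flags. This is a minimal illustration, not the authors' SAS code: the dictionary layout is hypothetical, and encoding a measure-specific exclusion (for example, fewer than 12 months of post-treatment claims) as `None` is our assumption.

```python
TECHNICAL = ("conformal", "high_energy", "custom_immobilization")


def add_composite(patient):
    """Return a copy of one patient's flags with the composite measure added:
    adherent only if all three technical measures were performed."""
    flagged = dict(patient)
    flagged["composite"] = all(patient[m] for m in TECHNICAL)
    return flagged


def adherence_rates(patients, measures):
    """Adherence rate per measure = adherent patients / eligible patients.

    Each patient is a dict of measure -> True, False, or None, where None
    (an assumed encoding) excludes the patient from that measure's
    denominator only.
    """
    rates = {}
    for m in measures:
        eligible = [p[m] for p in patients if p.get(m) is not None]
        rates[m] = sum(eligible) / len(eligible) if eligible else float("nan")
    return rates
```

Because exclusions are handled per measure, the follow-up measure can use a smaller denominator than the technical measures within the same cohort.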

Other Variables

Demographic characteristics included age at diagnosis, race and ethnicity according to SEER designation, and marital status. Clinical characteristics included clinical tumor stage (AJCC 6th edition), Gleason’s sum, pretreatment PSA level, and use of adjuvant androgen deprivation therapy. We calculated a modified Charlson comorbidity index21 from Part A and Part B Medicare claims during a prediagnosis interval extending from 13 months to 1 month before diagnosis. Socioeconomic and geographic characteristics included diagnosis year, SEER registry, population of patients’ county or metropolitan area, and proxies for patient income and educational attainment. Because individual-level socioeconomic data are not available in either SEER or Medicare records, proxy measures were obtained from the 2000 US Census. The SEER-Medicare program links each patient’s census tract of residence, as reported to SEER, to census tract socioeconomic variables collected by the US Bureau of the Census. Income and educational attainment were estimated by census tract median household income and the percentage of adults in the census tract with less than a high school education. For approximately 6% of cases, the census tract of residence was unavailable, and zip code-level data were used instead.
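The comorbidity lookback window can be expressed as a date filter that selects the claims feeding the Charlson index. This is a minimal sketch assuming calendar-month arithmetic (with the day clamped at month ends) and inclusive bounds, neither of which the text specifies; it does not compute the Charlson index itself, and both function names are hypothetical.

```python
import calendar
from datetime import date


def shift_months(d, months):
    """Move a date by a whole number of calendar months, clamping the day
    to the target month's length (e.g., Mar 31 minus 1 month -> Feb 28/29)."""
    total = d.year * 12 + (d.month - 1) + months
    year, month_index = divmod(total, 12)
    month = month_index + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def in_comorbidity_window(claim_date, diagnosis_date):
    """True if a claim falls between 13 months and 1 month before diagnosis.
    Inclusive bounds at both ends are an assumption."""
    start = shift_months(diagnosis_date, -13)
    end = shift_months(diagnosis_date, -1)
    return start <= claim_date <= end
```

Excluding the final month before diagnosis keeps diagnostic workup claims for the cancer itself out of the comorbidity assessment.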

We classified hospital-based radiotherapy facilities as teaching affiliated or community. Hospital teaching affiliation was self-reported in the Healthcare Cost Report and Provider of Service surveys available from the Centers for Medicare & Medicaid Services for 2000, 2001, and 2002. Hospital surveys were linked to Medicare claims using hospital provider identification numbers (PINs). We classified facilities whose radiotherapy claims were reported only through non-hospital-based Medicare National Claims History (NCH) records as stand-alone facilities.

Statistical Analysis

For each quality measure and for the composite technical measure, we performed univariate and multivariate logistic regression to evaluate the relation between patient characteristics and measure adherence. We chose a priori to include all covariates in the adjusted analysis. Lastly, we evaluated secular trends in the adherence rate for each measure among the 23,018 patients diagnosed between 1994 and 2002. All statistical analyses were conducted using SAS version 9.1 (SAS Institute, Cary, NC). Reported analyses were two-sided and considered statistically significant when P ≤ 0.05.
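The analyses were run in SAS 9.1; the sketch below is not the authors' code but a minimal, dependency-free Python illustration of the model class they describe: logistic regression fitted by maximum likelihood using Newton-Raphson. Supplying a single covariate gives an unadjusted (univariate) estimate; supplying several gives adjusted (multivariate) estimates. Function names and the toy data in the usage example are hypothetical.

```python
import math


def _solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_logistic(X, y, iterations=25):
    """Maximum-likelihood logistic regression via Newton-Raphson.

    X: list of covariate rows (an intercept is prepended here); y: 0/1 outcomes.
    Returns coefficients [intercept, b1, b2, ...] on the log-odds scale.
    """
    rows = [[1.0] + [float(v) for v in row] for row in X]
    k = len(rows[0])
    beta = [0.0] * k
    for _ in range(iterations):
        score = [0.0] * k                      # gradient of the log-likelihood
        info = [[0.0] * k for _ in range(k)]   # information matrix X'WX
        for xi, yi in zip(rows, y):
            mu = 1.0 / (1.0 + math.exp(-sum(b * v for b, v in zip(beta, xi))))
            w = mu * (1.0 - mu)
            for j in range(k):
                score[j] += (yi - mu) * xi[j]
                for h in range(k):
                    info[j][h] += w * xi[j] * xi[h]
        step = _solve(info, score)             # Newton step: (X'WX)^-1 X'(y - mu)
        beta = [b + s for b, s in zip(beta, step)]
    return beta
```

The exponentiated coefficient exp(b1) is an odds ratio; for a single binary covariate it equals the cross-product ratio of the 2×2 table. For instance, with hypothetical counts of 30 adherent and 10 non-adherent patients in one group versus 20 and 20 in the other, exp(b1) converges to (30/10)/(20/20) = 3. A fixed iteration count keeps the sketch short; statistical software additionally reports standard errors and convergence diagnostics.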

RESULTS

Baseline Characteristics

Demographic, clinical, socioeconomic, and provider characteristics are shown in Table 2. Of the 11,674 patients diagnosed from 2000 to 2002, 9,944 (85%) were white and 1,052 (9%) were black. Comorbidity was absent in 7,895 (68%), mild in 2,229 (19%), and moderate to severe in 902 (8%) patients. There were 5,219 (45%) patients treated at teaching hospitals, 2,900 (25%) at community hospitals, and 3,555 (30%) at stand-alone radiotherapy facilities.

Table 2.

Baseline characteristics of study cohort, 2000 to 2002

| Characteristic | No. | (%) |
|---|---|---|
| Demographic | | |
| Age at diagnosis | | |
| 65-69 | 2,626 | (22) |
| 70-74 | 4,188 | (36) |
| 75-79 | 3,684 | (32) |
| 80-84 | 1,009 | (9) |
| 85+ | 167 | (1) |
| Race | | |
| White | 9,944 | (85) |
| Black | 1,052 | (9) |
| Other | 511 | (4) |
| Unknown | 167 | (1) |
| Hispanic ethnicity | | |
| Not Hispanic | 10,865 | (93) |
| Hispanic | 541 | (5) |
| Unknown | 268 | (2) |
| Marital status | | |
| Married | 8,417 | (72) |
| Not married | 2,213 | (19) |
| Unknown | 1,044 | (9) |
| Clinical | | |
| Tumor stage (AJCC 6th Edition) | | |
| T1 | 4,464 | (38) |
| T2 | 7,210 | (62) |
| Gleason’s sum | | |
| 8-10 | 2,716 | (23) |
| 5-7 | 8,451 | (72) |
| 2-4 | 290 | (2) |
| Unknown | 217 | (2) |
| PSA (ng/ml) | | |
| 10.0+ | 7,228 | (62) |
| 4.1-9.9 | 706 | (6) |
| 0-4.0 | 437 | (4) |
| Unknown | 3,303 | (28) |
| Adjuvant androgen deprivation therapy | | |
| Androgen deprivation therapy | 7,117 | (61) |
| None | 4,557 | (39) |
| Comorbidity index¶ | | |
| 0 | 7,895 | (68) |
| 1 | 2,229 | (19) |
| 2+ | 902 | (8) |
| Unknown | 648 | (6) |
| Socioeconomic | | |
| Diagnosis year | | |
| 2000 | 3,640 | (31) |
| 2001 | 3,948 | (34) |
| 2002 | 4,086 | (35) |
| SEER registry | | |
| Greater California | 1,953 | (17) |
| New Jersey | 1,923 | (16) |
| Detroit | 1,489 | (13) |
| Connecticut | 994 | (9) |
| Iowa | 847 | (7) |
| Louisiana | 784 | (7) |
| Kentucky | 773 | (7) |
| Los Angeles | 714 | (6) |
| Seattle | 503 | (4) |
| San Francisco | 452 | (4) |
| New Mexico | 366 | (3) |
| San Jose | 255 | (2) |
| Hawaii | 241 | (2) |
| Utah | 190 | (2) |
| Atlanta | 174 | (1) |
| Rural Georgia | 16 | (0) |
| Population of county of residence | | |
| 0-249,999 | 3,011 | (26) |
| 250,000-999,999 | 2,230 | (19) |
| 1,000,000+ | 6,433 | (55) |
| Percentage of men with less than a high school education in census tract of residence‡ | | |
| Bottom quartile (24-100) | 2,908 | (25) |
| Second quartile (14-24) | 2,905 | (25) |
| Third quartile (8-14) | 2,908 | (25) |
| Top quartile (0-8) | 2,911 | (25) |
| Median household income (U.S. dollars) in census tract of residence‡ | | |
| Bottom quartile (7-35,641) | 2,911 | (25) |
| Second quartile (35,641-47,220) | 2,905 | (25) |
| Third quartile (47,220-64,719) | 2,908 | (25) |
| Top quartile (64,719-200,008) | 2,908 | (25) |
| Provider | | |
| Radiotherapy facility | | |
| Teaching hospital | 5,219 | (45) |
| Community hospital | 2,900 | (25) |
| Stand-alone facility | 3,555 | (30) |

Text legend:

¶ A comorbidity index of ≥ 2 represents the highest level of comorbidity.

‡ Data not shown for 42 patients with unknown education and income status.

Measure Adherence

Table 3 demonstrates adherence to each of the five RAND-proposed EBRT quality measures. The adherence rate for each technical measure was high: 85% received conformal radiotherapy treatment planning, 75% received high-energy photons, and 97% received custom immobilization. The overall adherence rate to the composite technical measure was lower (64%), due to discordance between receipt of conformal radiotherapy and high-energy photons. While 7,610 (65%) patients received both conformal radiotherapy and high-energy photons, 2,301 patients received conformal radiotherapy without high-energy photons and 1,095 patients received high-energy photons without conformal radiotherapy. Only 34% of patients adhered to the RAND quality metric for two follow-up visits with a radiation oncologist within one year of the completion of radiotherapy. The majority of patients (85%) were treated by a board certified radiation oncologist.
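As a consistency check on these counts (our arithmetic, not an analysis reported by the authors), the two-way counts reproduce the marginal adherence rates in Table 3. The proportion receiving both conformal planning and high-energy photons is an upper bound on the composite rate, because the composite additionally requires custom immobilization:

```latex
\begin{aligned}
\text{Conformal RT} &: 7{,}610 + 2{,}301 = 9{,}911, & 9{,}911 / 11{,}674 &\approx 84.9\% \\
\text{High-energy photons} &: 7{,}610 + 1{,}095 = 8{,}705, & 8{,}705 / 11{,}674 &\approx 74.6\% \\
\text{Both} &: 7{,}610, & 7{,}610 / 11{,}674 &\approx 65.2\% \;\ge\; 64.2\% \text{ (composite)}
\end{aligned}
```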

Table 3.

EBRT quality measure adherence, 2000 to 2002

| Characteristic | N | Conformal RT† (%) | High-energy photons†† (%) | Custom immobilization§ (%) | Follow-up visits* (%) | Board certification¶ (%) | Composite technical measure¥ (%) |
|---|---|---|---|---|---|---|---|
| Overall adherence | 11,674 | 84.9 | 74.6 | 97.1 | 34.3 | 85.3 | 64.2 |
| Demographic | | | | | | | |
| Age at diagnosis | | | | | | | |
| 65-69 | 2,626 | 84.5 | 73.8 | 96.1 | 34.5 | 84.6 | 62.8 |
| 70-74 | 4,188 | 85.3 | 75.2 | 97.3 | 35.2 | 86.2 | 64.7 |
| 75-79 | 3,684 | 84.6 | 74.5 | 97.6 | 34.3 | 84.8 | 64.3 |
| 80-84 | 1,009 | 85.9 | 73.4 | 97.7 | 31.1 | 84.4 | 64.7 |
| 85+ | 167 | 80.8 | 78.4 | 95.2 | 25.9 | 86.5 | 65.3 |
| Race | | | | | | | |
| White | 9,944 | 84.9 | 73.8 | 97.3 | 34.1 | 85.7 | 63.4 |
| Black | 1,052 | 85.9 | 81.0 | 97.0 | 37.8 | 81.8 | 69.8 |
| Other | 511 | 84.0 | 80.4 | 95.7 | 29.3 | 83.8 | 69.9 |
| Unknown | 167 | 80.8 | 60.5 | 93.4 | 39.3 | 86.1 | 55.1 |
| Hispanic ethnicity | | | | | | | |
| Not Hispanic | 10,865 | 85.1 | 75.0 | 97.4 | 34.0 | 85.5 | 64.4 |
| Hispanic | 541 | 83.7 | 71.2 | 93.4 | 39.2 | 80.5 | 61.9 |
| Unknown | 268 | 80.2 | 63.1 | 94.4 | 35.2 | 85.8 | 57.8 |
| Marital status | | | | | | | |
| Married | 8,417 | 85.4 | 74.9 | 97.2 | 34.4 | 86.0 | 64.4 |
| Not married | 2,213 | 85.0 | 75.4 | 97.3 | 33.4 | 84.1 | 65.3 |
| Unknown | 1,044 | 80.5 | 70.2 | 96.3 | 35.6 | 81.7 | 59.7 |
| Clinical | | | | | | | |
| Tumor stage | | | | | | | |
| T1 | 4,464 | 85.2 | 76.3 | 97.0 | 35.1 | 85.0 | 65.9 |
| T2 | 7,210 | 84.7 | 73.5 | 97.2 | 33.8 | 85.4 | 63.1 |
| Gleason’s sum | | | | | | | |
| 8-10 | 2,716 | 84.2 | 75.9 | 97.9 | 33.4 | 85.1 | 65.3 |
| 5-7 | 8,451 | 85.5 | 74.5 | 97.0 | 32.6 | 85.3 | 64.3 |
| 2-4 | 290 | 76.6 | 70.0 | 95.2 | 36.6 | 84.2 | 54.1 |
| Unknown | 217 | 80.2 | 68.2 | 95.9 | 32.7 | 87.7 | 59.0 |
| PSA (ng/ml) | | | | | | | |
| 10.0+ | 7,228 | 84.5 | 78.1 | 97.7 | 33.5 | 85.5 | 67.1 |
| 4.1-9.9 | 706 | 86.8 | 68.8 | 98.3 | 34.4 | 89.0 | 61.3 |
| 0-4.0 | 437 | 86.5 | 74.1 | 98.9 | 34.1 | 87.0 | 65.5 |
| Unknown | 3,303 | 85.2 | 68.1 | 95.4 | 38.5 | 83.7 | 58.3 |
| Adjuvant androgen deprivation therapy | | | | | | | |
| Androgen deprivation therapy | 7,117 | 85.0 | 75.0 | 97.1 | 32.5 | 86.1 | 64.4 |
| None | 4,557 | 84.8 | 73.9 | 97.2 | 36.9 | 83.9 | 63.8 |
| Comorbidity index | | | | | | | |
| 0 | 7,895 | 85.7 | 74.3 | 97.4 | 34.3 | 85.4 | 64.4 |
| 1 | 2,229 | 83.7 | 76.0 | 97.1 | 35.4 | 84.7 | 64.4 |
| 2+ | 902 | 83.8 | 73.2 | 96.1 | 34.8 | 84.7 | 63.1 |
| Unknown | 648 | 81.5 | 74.7 | 95.7 | 29.8 | 86.8 | 61.7 |
| Socioeconomic and geographic | | | | | | | |
| SEER registry** | | | | | | | |
| Greater California | 1,953 | 85.3 | 65.0 | 94.0 | 29.9 | 83.9 | 54.7 |
| New Jersey | 1,923 | 86.4 | 72.8 | 98.3 | 38.4 | 89.8 | 63.6 |
| Detroit | 1,489 | 94.9 (H) | 92.6 | 99.6 (H) | 45.9 | 76.4 | 88.7 (H) |
| Connecticut | 994 | 87.5 | 51.1 (L) | 97.9 | 25.3 | 95.5 | 44.2 (L) |
| Iowa | 847 | 69.2 | 81.9 | 96.9 | 21.6 | 83.7 | 62.7 |
| Louisiana | 784 | 90.4 | 84.8 | 94.0 | 31.6 | 79.0 | 72.1 |
| Kentucky | 773 | 64.6 (L) | 79.8 | 98.5 | 28.2 | 83.6 | 59.5 |
| Los Angeles | 714 | 89.9 | 70.6 | 96.4 | 48.8 (H) | 80.5 | 64.6 |
| Seattle | 503 | 81.5 | 59.6 | 99.6 | 29.5 | 98.4 | 45.7 |
| San Francisco | 452 | 87.2 | 80.8 | 98.7 | 20.0 (L) | 87.6 | 75.2 |
| New Mexico | 366 | 86.9 | 78.4 | 93.7 (L) | 48.4 | 80.1 | 71.3 |
| San Jose | 255 | 91.4 | 64.3 | 98.0 | 40.5 | 88.7 | 60.8 |
| Hawaii | 241 | 85.5 | 99.2 (H) | 96.7 | 24.7 | 73.5 (L) | 82.2 |
| Utah | 190 | 87.9 | 79.0 | 99.5 | 47.9 | 98.4 | 68.4 |
| Atlanta | 174 | 69.5 | 92.0 | 97.1 | 48.7 | 100.0 (H) | 60.9 |
| Population of county of residence | | | | | | | |
| 0-249,999 | 3,011 | 78.1 | 73.2 | 96.7 | 32.4 | 81.7 | 57.4 |
| 250,000-999,999 | 2,230 | 84.4 | 71.0 | 95.8 | 32.6 | 87.6 | 62.0 |
| 1,000,000+ | 6,433 | 88.3 | 76.4 | 97.8 | 35.8 | 86.1 | 68.1 |
| Percentage of men with < a high school education in census tract‡ | | | | | | | |
| Bottom quartile (24-100) | 2,908 | 81.0 | 71.9 | 95.2 | 35.6 | 81.8 | 60.8 |
| Second quartile (14-24) | 2,905 | 84.0 | 75.7 | 97.4 | 33.5 | 85.1 | 64.3 |
| Third quartile (8-14) | 2,908 | 85.6 | 73.8 | 98.1 | 32.7 | 85.8 | 63.2 |
| Top quartile (0-8) | 2,911 | 89.1 | 77.2 | 97.8 | 35.6 | 88.2 | 68.7 |
| Median household income (U.S. dollars) in census tract‡ | | | | | | | |
| Bottom quartile (7-35,641) | 2,911 | 79.6 | 75.0 | 95.4 | 34.5 | 81.4 | 61.7 |
| Second quartile (35,641-47,220) | 2,905 | 82.7 | 73.9 | 97.1 | 31.3 | 85.4 | 61.8 |
| Third quartile (47,220-64,719) | 2,908 | 87.5 | 72.4 | 97.8 | 36.0 | 86.0 | 63.8 |
| Top quartile (64,719-200,008) | 2,908 | 89.9 | 77.4 | 98.1 | 35.5 | 88.2 | 69.6 |
| Provider | | | | | | | |
| Radiotherapy facility | | | | | | | |
| Teaching hospital | 5,219 | 88.8 | 83.1 | 98.6 | 35.9 | 88.2 | 75.4 |
| Community hospital | 2,900 | 77.1 | 79.2 | 97.1 | 34.5 | 82.5 | 60.5 |
| Stand-alone facility | 3,555 | 85.6 | 58.2 | 94.9 | 31.8 | 83.2 | 50.7 |

Text legend: Bold character type indicates both unadjusted and adjusted trend P value < 0.05. Multivariate model included all covariates. Data for year of diagnosis not shown. Data for Rural Georgia not shown (N=16) according to SEER-Medicare patient confidentiality guidelines.

† Conformal radiotherapy includes 3D conformal and intensity-modulated radiotherapy (IMRT). We classified 89 patients whose treatment planning technique was not reported as having received non-conformal radiotherapy.

†† We classified 1,007 patients in 2001 and 2002 who received IMRT but whose photon energy was not reported as having received high-energy photons. We classified 333 patients whose photon energy was not reported as having received photon energies ≤ 10 MV.

§ We classified 306 patients whose immobilization was not reported as having received non-custom immobilization.

* We excluded 1,731 patients in 2002 who had less than 12 months of claims follow-up after completion of radiotherapy. The total N for the follow-up measure is therefore 9,943 patients.

¶ We excluded 3% (370) of patients who could not be linked to their physician in the AMA Masterfile.

¥ Indicates adherence to all three technical measures (conformal radiotherapy, high-energy photons, and custom immobilization).

** Among registries, (H) indicates highest adherence; (L) indicates lowest adherence.

‡ Data not shown for 42 patients with unknown education and income status.

Because adherence to the radiation oncologist follow-up measure was uniformly low, we also analyzed the proportion of patients who had follow-up visits with either a urologist or a radiation oncologist in the year after completion of radiotherapy. During that year, 80% of patients had at least 2 follow-up visits with either a urologist or a radiation oncologist. Furthermore, 68% of patients had at least 1 (rather than 2, as proposed by RAND) follow-up visit with a radiation oncologist, and 91% had at least 1 follow-up visit with either a urologist or a radiation oncologist.

Demographic characteristics

In Table 3, characteristics significantly associated (P ≤ 0.05) with measure adherence on both unadjusted and adjusted analysis are displayed in bold type. No significant difference was noted between white and black patients in the use of conformal radiotherapy (85% vs. 86%, respectively). Older age was significantly associated with declining adherence to the follow-up visit measure. Notably, older age was not associated with less follow-up among patients seen by both a urologist and a radiation oncologist (data not shown).

Clinical characteristics

We did not find any meaningful trends to suggest that adherence varied substantially by clinical characteristics such as tumor stage, Gleason’s sum, pretreatment PSA, adjuvant androgen deprivation, or comorbidity index (Table 3).

Geographic and socioeconomic characteristics

For all of the measures, adjusted analysis showed substantial variation in adherence by SEER registry and by county or metropolitan population. For example, adherence to the conformal radiotherapy measure ranged from 65% in Kentucky to 95% in Detroit, and from 78% in less populated areas (<250,000 persons) to 88% in highly populated areas (≥1,000,000 persons). Higher census tract education and income levels were also associated with higher adherence (Table 3).

Radiotherapy facility characteristics

Radiotherapy facilities affiliated with teaching hospitals had significantly higher adherence to each quality measure compared to community hospital affiliated facilities and stand-alone facilities (Table 3). However, teaching hospitals and stand-alone facilities both had high adherence to conformal radiotherapy (89% and 86%, respectively).

Composite technical measure adherence

Table 3 also identifies patient characteristics associated with adherence to the composite technical measure (conformal radiotherapy, high-energy photons, and custom immobilization). On adjusted analysis, adherence did not vary meaningfully by demographic or clinical characteristics. However, adherence varied significantly by SEER registry area, population, radiotherapy facility teaching affiliation, census tract education level, and year of diagnosis. For example, adherence to the composite technical measure was lower at stand-alone facilities (51%) and community hospitals (61%) than at teaching hospitals (75%), and facility type remained significantly associated with adherence on adjusted analysis. Unadjusted and adjusted regression models for each measure are available from the authors on request.

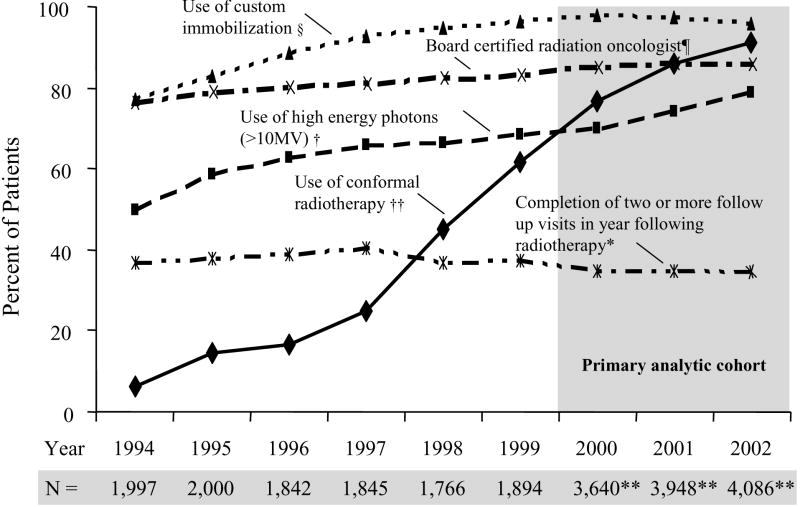

Secular Trends in Measure Adherence

Figure 2 illustrates secular trends in adherence to the five radiotherapy quality measures for the 23,018 men diagnosed from 1994 to 2002. Conformal radiotherapy adherence increased from 6% (122 of 1,997 patients) in 1994 to 91% (3,730 of 4,086) in 2002. Adherence to the use of high-energy photons and custom immobilization also increased, though less dramatically. The proportion of patients with consistent follow-up with radiation oncologists plateaued at approximately one-third, while self-reported board certification increased slightly during the study period.

Figure 2.

Secular Trends in Adherence to Five Radiotherapy Quality Measures among 23,018 Medicare Beneficiaries with Localized Prostate Cancer, 1994 to 2002

Text legend:

§ We classified 1,257 patients whose immobilization was not reported as having received non-custom immobilization.

¶ We excluded 3% (789) of patients who could not be linked to their physician in the AMA Masterfile.

† We classified 1,007 patients in 2001 and 2002 who received IMRT but whose photon energy was not reported as having received high energy photons. We classified 380 patients whose photon energy was not reported as having received photon energies ≤ 10 MV.

†† Conformal radiotherapy includes 3D conformal and intensity modulated radiotherapy. We classified 217 patients whose treatment planning technique was not reported as having received non-conformal radiotherapy.

*We excluded 1,731 patients in 2002 who had less than 12 months of follow-up for evaluation of claims following completion of radiotherapy.

**Includes cases from Greater California, Kentucky, Louisiana, and New Jersey registries starting in 2000.

DISCUSSION

We conducted this study to characterize variation in adherence to RAND-proposed EBRT quality measures for the treatment of localized prostate cancer using the linked SEER-Medicare database. Analyzing administrative claims data from 11,674 elderly men diagnosed between 2000 and 2002, we observed a high level of adherence to each of the three EBRT technical measures. However, overall high technical measure adherence masked variation in adherence by geography, socioeconomic status, and radiotherapy facility teaching affiliation.

Our findings are consistent with and extend previous studies describing cancer care quality. Results of the National Initiative for Cancer Care Quality documented overall high adherence to evidence-based quality measures among patients with colorectal (86% adherence) and breast cancer (78% adherence), but noted variability in adherence across metropolitan areas.7 Other studies of breast cancer care quality have found that geography and higher socioeconomic status are associated with higher adherence to quality measures and variation in treatment.22, 23 While previous work has noted the importance of geographic and socioeconomic factors in initial therapy selection for men with prostate cancer24, 25, our work highlights these factors as potentially contributing to variation in the quality of radiotherapy treatment delivery. Lastly, our work extends findings from patterns of care surveys that have documented increasing use of conformal radiotherapy for the treatment of localized prostate cancer.26

The SEER-Medicare data lack sufficient detail to determine the reasons underlying the observed variation in adherence. The principal advantage of using SEER-Medicare data is that they provide a broad overview of radiation oncology treatment delivery to older Americans. Follow-up studies using more detailed data sources may identify the reasons for this variation. The variation we observed has several plausible explanations. Patients may receive care in settings that differ in their capacity to deliver high-quality care.27 This may relate to the geographic distribution of facilities with advanced technologies and to patients’ ability to access them.28 Because all patients in our cohort had Medicare insurance, financial access barriers are a less likely source of variation. Nonetheless, financial hurdles beyond insurance coverage (such as co-payments, transportation costs, or time away from work) may present challenges for some patients.

Adherence to the follow-up measure differed markedly from adherence to the technical measures. The RAND report specifies that the treating physician complete at least two follow-up visits in the first post-treatment year.10, 11 However, although only one-third of patients consistently followed up with their radiation oncologists, 80% followed up with either their radiation oncologists or urologists, suggesting that urologists play an active role in caring for patients who receive radiotherapy. We also found that two-thirds of patients saw their radiation oncologists at least once in the year following therapy. Perhaps, following radiotherapy, radiation oncologists triage patients back to referring urologists. It is also possible that patients prefer to maintain their care relationships with both referring urologists and treating radiation oncologists. In addition, urologists and treating radiation oncologists may take a cooperative approach to follow-up that incorporates both specialties.

Multidisciplinary care is important and likely beneficial to men after radiotherapy for prostate cancer. Yet, patients may also gain from maintaining follow-up with their radiation oncologists, who have particular training in evaluating and managing the potential long-term toxicities of radiotherapy. Whether care quality or outcomes differ among patients followed by referring urologists compared to treating radiation oncologists is uncertain and cannot be ascertained from our data. It is clear that observed practice patterns deviate from the RAND metric specifying two follow-up visits by treating radiation oncologists. As a result, greater awareness of follow-up in the radiation oncology community may be required.29 Furthermore, in view of the prevalence of “shared care” models between urologists and radiation oncologists, the quality metric itself merits clarification.

This work augments the emerging literature examining the RAND-proposed quality measures for localized prostate cancer.12, 30 One study assessed RAND measures for 168 men at a single academic institution and found generally high adherence.12 However, the investigators were unable to measure adherence to the technical measures of radiotherapy despite extensive review of electronic data and medical charts. Often, radiotherapy documentation is stored within outpatient radiotherapy departments or stand-alone facilities, separate from inpatient hospital documentation and not consistently included in patient charts. We found that important, specific technical measures of radiotherapy quality can be assessed for large numbers of patients using administrative claims data. Nonetheless, challenges in accessing radiotherapy documentation could be alleviated by developing standardized treatment summaries that include appropriate quality metrics and become part of patients’ permanent medical records.

Recently, Miller et al assessed adherence to RAND radiotherapy quality metrics among a sample of 1,385 men diagnosed with localized prostate cancer between 2000 and 2001 who received EBRT.31 The great majority (93%) of the cohort was 60 years or older. Using explicit chart review to assess quality measure adherence, the investigators found similarly high adherence to measures of computed tomography planning (88% adherence), high-energy photons (82%), and board certification (94%), compared to 85%, 75% and 85%, respectively, observed in the current claims-based analysis. However, they found lower adherence to immobilization (66%) and higher adherence to follow-up visits (66%) than we observed (97% and 34% in the current study, respectively). While the similarities lend external validity to our findings, the differences raise questions about the extent to which quality measure performance is accurately reflected in medical chart documentation versus Medicare claims.32

Differences in reimbursement among quality measures may be one reason for the discordance between the findings of Miller et al and the current study. Claims for conformal radiotherapy and beam energy represent the largest portion of EBRT reimbursement.19 Chart documentation and Medicare claims are likely to agree for these measures, because omitting chart documentation or claims for these highly reimbursed processes (Table 1) would be akin to not documenting or not billing for a surgery. There may be less financial incentive to submit claims for follow-up visits, leading to differences between what is noted in patient charts and what is reported in claims. Nonetheless, the high proportion of patients (91%) who had at least one visit with a radiation oncologist or urologist suggests that unbilled visits are unlikely to explain the lower follow-up observed in our study. Furthermore, we observed higher adherence to the immobilization measure in claims data than in chart data, despite its lower reimbursement, suggesting that immobilization may have been provided but not documented in the chart. It is also possible that the definition of what constitutes follow-up (and with whom), and of the extent of immobilization, differs between claims data and chart data. These differences highlight the need for collaborative, complementary methods of care quality assessment that combine the broad view of administrative databases with the nuanced, detail-rich information offered by direct medical chart abstraction.33

The use of administrative claims data for quality assessment has both advantages and limitations. While claims data can be efficiently analyzed to monitor population-based adherence to quality measures, such data are not collected for research purposes, can lack important, clinically relevant information, and may under-report treatments or processes of care that are critical to quality ascertainment.34-37 Our research did not examine a comprehensive set of quality measures for EBRT. For example, we were not able to assess radiotherapy dose, a RAND-proposed quality measure, because it is not captured in SEER data or Medicare claims. Furthermore, while we were able to assess claims for conformal radiotherapy, we could not measure the quality of conformal planning itself (e.g., protection of the rectal mucosa, another RAND-proposed measure). Another limitation is that measures of socioeconomic status were captured at the census tract rather than the individual level. While previous studies have validated the use of proxy census indicators for individual socioeconomic status in health services research, such estimates may not provide precise measures of individual socioeconomic status.38 Lastly, our conclusions may reflect variation in coding practices rather than variation in care quality. Although measure adherence differed among SEER registries, this may reflect systematically less detailed claims reporting in certain geographic areas rather than variation in care quality.35

In a population-based cohort of men with localized prostate cancer, we found that high adherence to care quality measures proposed by a RAND expert panel masked substantial variation in care quality by geography, socioeconomic status, and radiotherapy facility teaching affiliation. Future research should examine reasons for variation in these measures and whether variation is associated with important clinical outcomes.

Acknowledgments

This study used the linked Surveillance, Epidemiology, and End Results (SEER)–Medicare database. The interpretation and reporting of these data are the sole responsibility of the authors. The authors acknowledge the efforts of the Applied Research Program, National Cancer Institute, the Office of Research, Development and Information, Centers for Medicare & Medicaid Services, Information Management Services, Inc., and the SEER Program tumor registries in the creation of the SEER–Medicare database. We thank Yihai Liu, MS for assistance with data manipulation in the early stages of this project.

Funding/Support: Supported by a Ruth L. Kirschstein National Research Service Award individual postdoctoral fellowship (National Cancer Institute 1F32 CA 123964-01, Dr. Bekelman).

Footnotes

Meeting Presentation: This work was selected to be presented in part at the American Society of Clinical Oncology 43rd Annual Meeting, Chicago, IL, June 1 - 4, 2007 and to receive a 2007 ASCO Foundation Merit Award and a C. Julian Rosenthal Fellowship Award.

Institutional Review Board Approval: SEER-Medicare data are submitted electronically to the National Cancer Institute without personal identifiers (ie, are de-identified) and are available to the public for research purposes. Because portions of this study required access to restricted variables identifying radiation oncologists and urologists, this research was approved by the review boards of the Memorial Sloan-Kettering Cancer Center and of each of the 16 individual SEER registries from which data were obtained.

Conflicts of Interest Notification: The authors report no actual or potential conflicts of interest.

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- 1. Macdonald JS, Smalley SR, Benedetti J, et al. Chemoradiotherapy after Surgery Compared with Surgery Alone for Adenocarcinoma of the Stomach or Gastroesophageal Junction. New England Journal of Medicine. 2001 September 6;345(10):725–730. doi: 10.1056/NEJMoa010187.

- 2. Gebski V, Lagleva M, Keech A, Simes J, Langlands AO. Survival Effects of Postmastectomy Adjuvant Radiation Therapy Using Biologically Equivalent Doses: A Clinical Perspective. J Natl Cancer Inst. 2006 January 4;98(1):26–38. doi: 10.1093/jnci/djj002.

- 3. RTOG Procedure Manual. Philadelphia, PA: Radiation Therapy Oncology Group; 2005. Available at http://www.rtog.org/pdf_document/manual.pdf. Last accessed February 22, 2007.

- 4. Müller R, Eich H. The Development of Quality Assurance Programs for Radiotherapy within the German Hodgkin Study Group (GHSG). Strahlentherapie und Onkologie. 2005;181:557–566. doi: 10.1007/s00066-005-1437-0.

- 5. Hewitt M, Simone JV, editors. Ensuring Quality Cancer Care. Washington, DC: National Academy Press; 1999.

- 6. Niederhuber JE. The Nation’s Investment in Cancer Research: A Plan and Budget Proposal for Fiscal Year 2008. Washington, DC: US Department of Health and Human Services, National Institutes of Health, National Cancer Institute; 2006.

- 7. Malin JL, Schneider EC, Epstein AM, Adams J, Emanuel EJ, Kahn KL. Results of the National Initiative for Cancer Care Quality: How Can We Improve the Quality of Cancer Care in the United States? J Clin Oncol. 2006;24:626–634. doi: 10.1200/JCO.2005.03.3365.

- 8. Chan JM, Jou RM, Carroll PR. The relative impact and future burden of prostate cancer in the United States. Journal of Urology. 2004 Nov;172(5 Pt 2):S13–16; discussion S17.

- 9. Miller DC, Montie JE, Wei JT. Measuring the quality of care for localized prostate cancer. Journal of Urology. 2005 Aug;174(2):425–431. doi: 10.1097/01.ju.0000165387.20989.91.

- 10. Litwin M, Steinberg M, Malin J, et al. Prostate Cancer Patient Outcomes and Choice of Providers: Development of an Infrastructure for Quality Assessment. Santa Monica, CA: RAND; 2000. Available at http://www.rand.org/publications/MR/MR1227/

- 11. Spencer BA, Steinberg M, Malin J, Adams J, Litwin MS. Quality-of-Care Indicators for Early-Stage Prostate Cancer. J Clin Oncol. 2003 May 15;21(10):1928–1936. doi: 10.1200/JCO.2003.05.157.

- 12. Miller DC, Litwin MS, Sanda MG, et al. Use of quality indicators to evaluate the care of patients with localized prostate carcinoma. Cancer. 2003;97(6):1428–1435. doi: 10.1002/cncr.11216.

- 13. Miller M, Swan J. News: SEER doubles coverage by adding registries for four states. J Natl Cancer Inst. 2001;93(7):500. doi: 10.1093/jnci/93.7.500.

- 14. AJCC Cancer Staging Manual. 6th ed. New York, NY: Springer-Verlag New York, Inc; 2002.

- 15. Potosky AL, Riley GF, Lubitz JD, Mentnech RM, Kessler LG. Potential for cancer related health services research using a linked Medicare-tumor registry database. Med Care. 1993 Aug;31(8):732–748.

- 16. International Classification of Diseases, 9th Revision (ICD-9-CM). Chicago (IL): American Medical Association Publications; 2000.

- 17. Current procedural terminology. Chicago (IL): American Medical Association; 2000–2002.

- 18. Mant J. Process versus outcome indicators in the assessment of quality health care. Int J Qual Health Care. 2001;13(6):475–480. doi: 10.1093/intqhc/13.6.475.

- 19. The ASTRO/ACR Guide to Radiation Oncology Coding. Chicago, IL: American Medical Association; 2004.

- 20. Baldwin LM, Adamache W, Klabunde CN, Kenward K, Dahlman C, Warren JL. Linking physician characteristics and medicare claims data: issues in data availability, quality, and measurement. Med Care. 2002 Aug;40(8 Suppl):IV-82–95. doi: 10.1097/00005650-200208001-00012.

- 21. Klabunde C, Potosky A, Legler J, Warren J. Development of a comorbidity index using physician claims data. J Clin Epidemiol. 2000;53:1258–1267. doi: 10.1016/s0895-4356(00)00256-0.

- 22. Griggs JJ, Culakova E, Sorbero MES, et al. Effect of Patient Socioeconomic Status and Body Mass Index on the Quality of Breast Cancer Adjuvant Chemotherapy. J Clin Oncol. 2007;25:277–284. doi: 10.1200/JCO.2006.08.3063.

- 23. Breen N, Wesley M, Merrill R, Johnson K. The relationship of socio-economic status and access to minimum expected therapy among female breast cancer patients in the National Cancer Institute Black-White Cancer Survival Study. Ethn Dis. 1999;9:111–125.

- 24. Krupski TL, Kwan L, Afifi AA, Litwin MS. Geographic and socioeconomic variation in the treatment of prostate cancer. J Clin Oncol. 2005 Nov 1;23(31):7881–7888. doi: 10.1200/JCO.2005.08.755.

- 25. Shavers VL, Brown ML, Potosky AL, et al. Race/ethnicity and the receipt of watchful waiting for the initial management of prostate cancer. Journal of General Internal Medicine. 2004 Feb;19(2):146–155. doi: 10.1111/j.1525-1497.2004.30209.x.

- 26. Zelefsky MJ, Moughan J, Owen J, Zietman AL, Roach M III, Hanks GE. Changing trends in national practice for external beam radiotherapy for clinically localized prostate cancer: 1999 patterns of care survey for prostate cancer. International Journal of Radiation Oncology*Biology*Physics. 2004;59(4):1053. doi: 10.1016/j.ijrobp.2003.12.011.

- 27. Bach PB. Racial Disparities and Site of Care. Ethnicity & Disease. 2005 Spring;15(2 Suppl 2):S31–33.

- 28. Skinner J, Weinstein J, Sporer S, Wennberg J. Racial, ethnic, and geographic disparities in rates of knee arthroplasty among Medicare patients. New England Journal of Medicine. 2003;349(14):1350–1359. doi: 10.1056/NEJMsa021569.

- 29. Glatstein E. The whirligig of time. International Journal of Radiation Oncology*Biology*Physics. 2006;65(2):322–323. doi: 10.1016/j.ijrobp.2006.01.042.

- 30. Krupski TL, Bergman J, Kwan L, Litwin MS. Quality of prostate carcinoma care in a statewide public assistance program. Cancer. 2005 Sep 1;104(5):985–992. doi: 10.1002/cncr.21272.

- 31. Miller DC, Spencer BA, Ritchey J, et al. Treatment Choice and Quality of Care for Men With Localized Prostate Cancer. Med Care. 2007;45:401–409. doi: 10.1097/01.mlr.0000255261.81220.29.

- 32. Luck J, Peabody JW, Dresselhaus TR, Lee M, Glassman P. How well does chart abstraction measure quality? A prospective comparison of standardized patients with the medical record. The American Journal of Medicine. 2000;108(8):642–649. doi: 10.1016/s0002-9343(00)00363-6.

- 33. Schneider EC, Epstein AM, Malin JL, Kahn KL, Emanuel EJ. Developing a System to Assess the Quality of Cancer Care: ASCO’s National Initiative on Cancer Care Quality. J Clin Oncol. 2004 August 1;22(15):2985–2991. doi: 10.1200/JCO.2004.09.087.

- 34. Malin JL, Kahn KL, Adams J, Kwan L, Laouri M, Ganz PA. Validity of Cancer Registry Data for Measuring the Quality of Breast Cancer Care. J Natl Cancer Inst. 2002;94:835–844. doi: 10.1093/jnci/94.11.835.

- 35. Warren JL, Harlan LC. Can Cancer Registry Data Be Used to Study Cancer Treatment? Medical Care. 2003;41(9):1003–1005. doi: 10.1097/01.MLR.0000086827.00805.B5.

- 36. Cress R, Zaslavsky A, West D, Wolf R, Felter M, Ayanian J. Completeness of information on adjuvant therapies for colorectal cancer in population-based cancer registries. Med Care. 2003;41:1006–1012. doi: 10.1097/01.MLR.0000083740.12949.88.

- 37. Bickell NA, Chassin MR. Determining the Quality of Breast Cancer Care: Do Tumor Registries Measure Up? Ann Intern Med. 2000 May 2;132(9):705–710. doi: 10.7326/0003-4819-132-9-200005020-00004.

- 38. Geronimus AT, Bound J. Use of census-based aggregate variables to proxy for socioeconomic group: Evidence from national samples. American Journal Of Epidemiology. 1998 Sep 1;148(5):475–486. doi: 10.1093/oxfordjournals.aje.a009673.