Abstract

Previous studies demonstrated that induced EEG activity in the gamma band (iGBA) plays an important role in object recognition and is modulated by stimulus familiarity and its compatibility with pre-existent representations. In the present study we investigated the modulation of iGBA by the degree of familiarity and perceptual expertise that observers have with stimuli from different categories. Specifically, we compared iGBA in response to human faces with iGBA in response to stimuli with which subjects are not expert (ape faces, human hands, buildings and watches). iGBA elicited by human faces was higher and peaked earlier than that elicited by all other categories, which did not differ significantly from each other. These findings can be accounted for by two characteristics of perceptual expertise. One is the activation of a richer, stronger and, therefore, more easily accessible mental representation of human faces. The second is the more detailed perceptual processing necessary for within-category distinctions, which is the hallmark of perceptual expertise. In addition, the sensitivity of iGBA to human but not ape faces was contrasted with the face-sensitive N170-effect, which was similar for human and ape faces. In concert with previous studies, this dissociation suggests a multi-level neuronal model of face recognition, manifested by these two electrophysiological measures, which is discussed in this paper.

Cognitive neuroscience research has been enriched during the last decade by studies associating high-frequency EEG activity with the generation of unified perceptual representations in both vision and audition, and with the activation of pre-stored memory representations (Busch et al., 2006; Kaiser and Lutzenberger, 2003). Changing the conception that physiologically pertinent EEG frequencies are lower than 20 Hz, studies in animals (Singer and Gray, 1995) and in humans (e.g. Tallon-Baudry et al., 1996, 1997, 2003; Gruber et al., 2004, 2005; Rodriguez et al., 1999) explored activity in a range of frequencies higher than 20 Hz, referred to as the “gamma band”. Specifically, many studies have associated high-level perceptual mechanisms with the so-called “induced” gamma-band activity (iGBA; for a review see Bertrand and Tallon-Baudry, 2000).

iGBA is a measure of local neural synchronization appearing as bursts of high-frequency EEG activity that is event-related but not necessarily phase-locked to stimulus onset (and thus may be cancelled out in traditional ERP analysis). The amplitude of these bursts is modulated by physical stimulus attributes such as size, contrast and spatial-frequency spectrum (Gray et al., 1990; Bauer et al., 1995; Busch et al., 2004), perceptual factors acting bottom-up, such as the ability to form coherent gestalts (Keil et al., 1999; Tallon-Baudry et al., 1996), and top-down factors such as attention (Gruber et al., 1999; Tiitinen et al., 1993; Fan et al., 2007) or memory (Osipova et al., 2006; Gruber and Müller, 2006; Gruber et al., 2004; Tallon-Baudry et al., 1998, 2001; Jensen et al., 2007). In concert, these bottom-up and top-down modulations raised the hypothesis that iGBA reflects the integration of sensory input with pre-existent memory representations to form experienced entities (Tallon-Baudry, 1997, 2003). This hypothesis is further supported by studies demonstrating that iGBA amplitude is considerably larger for meaningful than for meaningless stimuli. Specifically, such effects were shown when comparing objects with meaningless shapes (Gruber et al., 2005; Busch et al., 2006), upright with inverted Mooney faces (Rodriguez et al., 1999), or regularly configured with scrambled faces (Zion-Golumbic and Bentin, in press). The association of iGBA with the activation of memory representations was convincingly demonstrated in a recent study showing that familiar faces elicit larger iGBA amplitudes than unfamiliar faces (Anaki et al., in press). Since both familiar and unfamiliar faces are coherent meaningful stimuli, the enhanced iGBA for the former could only be explained by their richer memory associations.

In addition to the modulation of iGBA amplitude, a recent attempt was made to explore the modulation of iGBA peak latency during the processing of meaningful upright and rotated line drawings (Martinovic et al., 2007). Naming was delayed for rotated drawings relative to upright presentation, and this delay in performance was accompanied by a delay in iGBA peak latency. These data are evidence that the latency of iGBA can also be of value in investigations of the neural mechanisms of perception.

As an extension to the familiar/unfamiliar distinction cited above, and the prevalent account for it, it is conceivable that different categories of objects could elicit different magnitudes of iGBA according to the observers’ familiarity with objects, and perceptual expertise. Yet, there has been no systematic exploration to date of iGBA modulations by different perceptual categories. In the present study we explored such modulations with a special focus on human faces, which are a prominent example of perceptual expertise.

Two aspects of perceptual expertise lead to our hypothesis that human faces may elicit higher iGBA than objects for which humans do not develop special expertise. One is evidence showing higher expertise for faces within race than across races (Byatt and Rhodes, 2004), which strongly suggests that it is based on increased familiarity. Hence, the repeated neural activation elicited by frequent exposure to faces would render stronger representations than for less familiar stimuli (cf. Hebb, 1949), a difference that might be reflected by larger iGBA for faces. The second aspect of expertise is that different processing strategies are employed for human faces (or items of expertise, e.g. Tarr and Gauthier, 2000) than for objects. Specifically, the default level of categorization for human faces is at the individual exemplar level whereas other objects, even if familiar, are categorized only at a basic level (Tanaka 2001). We postulated that these more detailed processes invoked for items of expertise, which subsequently lead to within-category distinction, may be manifested by higher iGBA.

In the current study we compared the amplitude and latency of iGBA elicited by human faces with those elicited by two additional biological categories - ape faces and human hands - and two man-made categories - buildings and watches. We postulated that if iGBA is mainly affected by the pre-experimental existence of a neural representation, it should be similar in response to stimuli from all the categories. However, if iGBA is influenced by the degree of familiarity with a stimulus or is modulated by the detailed processing applied when within-category distinction is necessary (as for items of expertise), it should be higher for human faces than for stimuli from the other categories. The comparison between iGBA elicited by human and ape faces is of special interest since ape faces share the same structure as human faces but are less familiar and, indeed, humans are not experts in identifying ape faces at a subordinate level (Mondloch et al., 2006). This comparison enables us to distinguish between structure-based selectivity for faces (as exhibited by the face-sensitive N170 component; see Carmel and Bentin, 2002; Itier et al., 2006) and processes related to the special status of human faces.

Material and Methods

Participants

The participants were 16 undergraduates (9 female) from the Hebrew University ranging in age from 18 to 30 (median age 22). All participants reported normal or corrected to normal visual acuity and had no history of psychiatric or neurological disorders. Among them 4 were left handed. They signed an informed consent according to the institutional review board of the Hebrew University, and were paid for participation.

Stimuli

The stimuli consisted of 600 photographs of human faces, ape faces, human hands, buildings, watches and flowers (100 in each category). All the pictures were equated for luminance and contrast. The spectral energy was not equated; however, previous studies did not find this factor to change the N170 effect for faces (Rousselet et al., 2005). The stimuli were presented at fixation and, seen from a distance of approximately 60 cm, occupied 9.5° × 13° in the visual field (10 cm × 14 cm).
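The relation between physical stimulus size and visual angle can be checked with a short calculation. The sketch below assumes the standard formula for an object centered at fixation, 2·atan(size / (2·distance)); the function name is illustrative and not taken from the study.

```python
import math

def visual_angle_deg(size_cm: float, distance_cm: float) -> float:
    """Full visual angle (degrees) subtended by an object centered at fixation."""
    return math.degrees(2.0 * math.atan((size_cm / 2.0) / distance_cm))

# Stimulus dimensions and viewing distance as reported in the text.
width_deg = visual_angle_deg(10.0, 60.0)
height_deg = visual_angle_deg(14.0, 60.0)
print(f"{width_deg:.1f} x {height_deg:.1f} degrees")
```

For a 10 cm × 14 cm image at 60 cm this gives roughly 9.5° × 13.3°, in line with the reported 9.5° × 13°.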

Task and Procedure

The 600 stimuli were fully randomized and presented in 7 blocks with a short break between blocks for refreshment. Each stimulus was presented for 700 ms with Inter-stimulus Intervals (ISI) varying between 500 ms and 1250 ms. Participants were requested to press a button each time a flower appeared on the screen. This procedure ensured that all other stimulus categories were equally task-relevant, that is, they were all task-defined distracters. The experiment was run in an acoustically treated and electrically isolated booth.

EEG recording

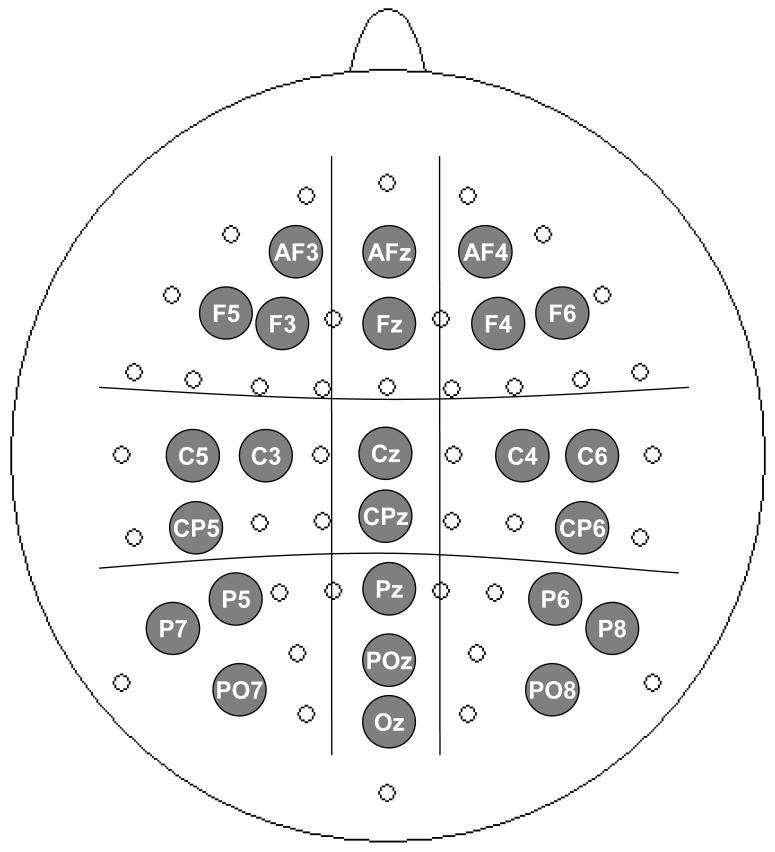

The EEG analog signals were recorded continuously by 64 Ag-AgCl pin-type active electrodes mounted on an elastic cap (ECI) according to the extended 10-20 system (Figure 1), and from two additional electrodes placed at the right and left mastoids, all reference-free. Eye movements, as well as blinks, were monitored using bipolar horizontal and vertical EOG derivations via two pairs of electrodes, one pair attached to the external canthi, and the other to the infraorbital and supraorbital regions of the right eye. Both EEG and EOG were sampled at 1024 Hz using a Biosemi Active II system (www.biosemi.com).

Figure 1.

The 64 sites from which EEG was recorded and the demarcation of the 9 regions where iGBA was analyzed. The gray-labeled sites were included in the statistical analysis.

Data processing

Data were analyzed using Brain Vision Analyzer (Brain Products GmbH; www.brainproducts.com) as well as in-house Matlab routines. Raw EEG data were high-pass filtered at 1.0 Hz (24 dB) and referenced to the tip of the nose. Eye movements were corrected using an ICA procedure (Jung et al., 2000). Remaining artifacts exceeding ±100 μV in amplitude or containing a change of over 100 μV within a period of 50 ms were rejected. The EEG was then segmented into epochs ranging from 300 ms before to 800 ms after stimulus onset for all distracter conditions (targets were excluded from the analysis).
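The two rejection criteria can be illustrated with a minimal sketch. It is not the Brain Vision Analyzer implementation; in particular, reading "a change of over 100 μV within 50 ms" as the peak-to-peak range inside any sliding 50 ms window is an assumption, and the function name and synthetic segments are hypothetical.

```python
import math

def is_artifact(samples_uv, fs_hz, abs_limit_uv=100.0,
                step_limit_uv=100.0, window_ms=50.0):
    """Return True if a single-channel segment violates either criterion."""
    if not samples_uv:
        return False
    # Criterion 1: any sample outside +/- abs_limit_uv.
    if any(abs(v) > abs_limit_uv for v in samples_uv):
        return True
    # Criterion 2 (assumed reading): peak-to-peak change above step_limit_uv
    # inside any sliding window of window_ms.
    win = max(1, int(round(window_ms * fs_hz / 1000.0)))
    for start in range(max(1, len(samples_uv) - win)):
        chunk = samples_uv[start:start + win + 1]
        if max(chunk) - min(chunk) > step_limit_uv:
            return True
    return False

FS_HZ = 1024.0  # sampling rate reported in the text
N = 1024        # one second of synthetic data

clean = [20.0 * math.sin(2.0 * math.pi * 10.0 * i / FS_HZ) for i in range(N)]
spike = list(clean)
spike[500] = 150.0                                       # exceeds +/-100 uV
jump = [-60.0] * 512 + [60.0] * 512                      # 120 uV step
drift = [-60.0 + 120.0 * i / (N - 1) for i in range(N)]  # slow 120 uV drift

for name, seg in [("clean", clean), ("spike", spike), ("jump", jump), ("drift", drift)]:
    print(name, is_artifact(seg, FS_HZ))
```

Under this reading, the abrupt step is rejected even though no sample exceeds ±100 μV, whereas a drift of the same size spread over one second is kept.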

Induced gamma-band amplitudes were calculated by applying a wavelet analysis to individual trials and averaging the resulting time-frequency plots, using a procedure described in previous studies (e.g. Tallon-Baudry et al., 1997; Zion-Golumbic and Bentin, in press). Data were convolved with a complex Gaussian Morlet wavelet, $w(t,f) = A \exp(-t^2/2\sigma_t^2) \exp(2i\pi ft)$, using a constant ratio of $f/\sigma_f = 8$, where $\sigma_f = 1/(2\pi\sigma_t)$, and normalization factor $A = (\sigma_t\sqrt{\pi})^{-1/2}$. This procedure was applied to frequencies ranging from 20 Hz to 80 Hz in steps of 0.75 Hz, and the amplitudes were extracted and baseline-corrected relative to an epoch between -200 and -100 ms from stimulus onset, yielding time-frequency plots (with some amount of smearing due to the time-frequency tradeoff). This method yields the sum of the evoked (i.e. phase-locked) and the induced (non-phase-locked) activity.
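The single-trial wavelet procedure can be sketched in a few lines of plain Python. This is an illustration under stated assumptions, not the authors' Matlab code: it uses the complex Morlet form described by Tallon-Baudry et al. (1997), truncates the wavelet at ±3σt, analyzes a single frequency, and runs on synthetic trials sampled at a hypothetical 256 Hz. All function names are illustrative.

```python
import cmath
import math
import random

def morlet_wavelet(freq_hz, fs_hz, ratio=8.0, n_sd=3.0):
    """Sampled complex Morlet wavelet with f / sigma_f = ratio."""
    sigma_f = freq_hz / ratio
    sigma_t = 1.0 / (2.0 * math.pi * sigma_f)
    norm = (sigma_t * math.sqrt(math.pi)) ** -0.5
    half = int(math.ceil(n_sd * sigma_t * fs_hz))  # truncation is an assumption
    taps = []
    for k in range(-half, half + 1):
        t = k / fs_hz
        envelope = math.exp(-t * t / (2.0 * sigma_t ** 2))
        taps.append(norm * envelope * cmath.exp(2j * math.pi * freq_hz * t))
    return taps

def wavelet_amplitude(signal, freq_hz, fs_hz):
    """Modulus of the convolution of one trial with the wavelet (zero-padded)."""
    taps = morlet_wavelet(freq_hz, fs_hz)
    half = len(taps) // 2
    n = len(signal)
    out = []
    for i in range(n):
        acc = 0j
        for k, w in enumerate(taps):
            j = i - (k - half)
            if 0 <= j < n:
                acc += signal[j] * w
        out.append(abs(acc) / fs_hz)
    return out

def mean_amplitude(trials, freq_hz, fs_hz, times_ms, baseline_ms=(-200.0, -100.0)):
    """Average single-trial amplitudes, then subtract the mean baseline level."""
    per_trial = [wavelet_amplitude(tr, freq_hz, fs_hz) for tr in trials]
    mean_amp = [sum(col) / len(col) for col in zip(*per_trial)]
    base = [a for a, t in zip(mean_amp, times_ms)
            if baseline_ms[0] <= t <= baseline_ms[1]]
    base_level = sum(base) / len(base)
    return [a - base_level for a in mean_amp]

# Synthetic demo: a 40 Hz burst between 200 and 500 ms with random phase per trial.
FS_HZ = 256.0
N_SAMPLES = int(1.1 * FS_HZ)  # epoch from -300 ms to about 800 ms
times_ms = [-300.0 + 1000.0 * i / FS_HZ for i in range(N_SAMPLES)]
rng = random.Random(0)
trials = []
for _ in range(10):
    phase = rng.uniform(0.0, 2.0 * math.pi)
    trials.append([
        math.sin(2.0 * math.pi * 40.0 * t / 1000.0 + phase)
        if 200.0 <= t <= 500.0 else 0.0
        for t in times_ms
    ])

corrected = mean_amplitude(trials, 40.0, FS_HZ, times_ms)
peak_ms = times_ms[corrected.index(max(corrected))]
print(f"peak of baseline-corrected 40 Hz amplitude at {peak_ms:.0f} ms")
```

Because the amplitude is taken on each trial before averaging, the burst survives even though its phase varies randomly across trials; averaging the raw trials first would largely cancel it.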

In all conditions a burst of activity was seen between 200 and 500 ms across the entire gamma frequency range, and this time window was defined as the region of interest in this study. In order to verify that the activity seen during this time window is of an induced (rather than evoked) nature, we calculated the mean inter-trial phase-locking values for each subject during this period (described by Tallon-Baudry et al., 1996; Delorme and Makeig, 2003). This measure produces a number between 0 and 1 reflecting the amount of phase locking between trials (1 = completely phase-locked). Additional details regarding this analysis, as well as the values obtained for all the subjects, can be found in the Supplementary material. This analysis confirmed that during the time window of interest there was very little phase locking, and therefore we will refer to the activity as induced (iGBA).
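As a rough illustration of the phase-locking measure, the sketch below normalizes each trial's complex wavelet coefficient to unit length and takes the modulus of the across-trial mean. This is the usual definition of the inter-trial phase-locking factor; the function name and the synthetic coefficients are assumptions made for the example.

```python
import cmath
import math
import random

def phase_locking_value(coeffs):
    """Inter-trial phase locking: |mean of unit phase vectors|, between 0 and 1."""
    units = [c / abs(c) for c in coeffs if abs(c) > 0.0]
    if not units:
        raise ValueError("need at least one non-zero coefficient")
    return abs(sum(units) / len(units))

rng = random.Random(0)
# Evoked-like case: same phase on every trial, amplitudes vary freely.
locked = [cmath.rect(rng.uniform(0.5, 2.0), 0.3) for _ in range(200)]
# Induced-like case: phase is uniformly random across trials.
jittered = [cmath.rect(1.0, rng.uniform(-math.pi, math.pi)) for _ in range(200)]

plv_locked = phase_locking_value(locked)
plv_jittered = phase_locking_value(jittered)
print(f"locked: {plv_locked:.2f}, jittered: {plv_jittered:.2f}")
```

Values near 1 indicate phase-locked (evoked) activity, while values near 0 are what would be expected from activity whose phase varies from trial to trial.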

The scalp distribution of this iGBA was widely spread; therefore, our main analysis compared the mean iGBA in left, midline and right clusters of electrodes at anterior, central and posterior regions (see Figure 1). iGBA was averaged over 20-80 Hz, resulting in a single waveform for each subject, condition and electrode cluster.

Based on visual screening of the results, as well as previous results, the mid-posterior cluster was chosen for determining the latency of the iGBA peak. The peak was defined as the most positive point during an epoch between 200 and 500 ms for each subject and condition. Subsequently, for each electrode cluster the amplitudes at the peak latency were quantified for analysis of the distribution of iGBA amplitudes across the scalp.
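The peak-picking step amounts to a windowed maximum search. The following minimal sketch assumes one band-averaged waveform per subject and condition with a matching time axis in milliseconds; the function name and the synthetic waveform are hypothetical and are not study data.

```python
import math

def peak_in_window(waveform, times_ms, window_ms=(200.0, 500.0)):
    """Return (latency_ms, amplitude) of the most positive point in the window."""
    in_window = [(t, v) for t, v in zip(times_ms, waveform)
                 if window_ms[0] <= t <= window_ms[1]]
    latency, amplitude = max(in_window, key=lambda pair: pair[1])
    return latency, amplitude

# Synthetic waveform: a bump centered at 286 ms plus a larger value outside the window.
times_ms = [float(t) for t in range(-300, 801)]
waveform = [3.0 * math.exp(-((t - 286.0) ** 2) / (2.0 * 40.0 ** 2)) for t in times_ms]
waveform[times_ms.index(100.0)] = 10.0  # must be ignored by the search

latency_ms, amplitude_uv = peak_in_window(waveform, times_ms)
print(latency_ms, amplitude_uv)
```

Restricting the search to the window keeps earlier or later deflections, such as the larger value placed at 100 ms here, from being picked as the peak.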

In addition, since in all conditions an earlier epoch of lower-than-baseline activity (negative amplitudes) could be observed in the time-frequency plot, we calculated the mean iGBA during an epoch between 50 and 200 ms, in the same frequency range.

The iGBA amplitudes were subjected to ANOVAs with repeated measures in order to determine statistical reliability. The factors were stimulus Category (human faces, ape faces, hands, buildings and watches), Anterior-Posterior distribution (anterior, central, posterior), and Laterality (left, medial, right). Significant main effects and interactions were followed up by one-way ANOVAs for each level of the interacting factors and by planned contrasts. Latencies were analyzed in a one-way ANOVA with repeated measures comparing the 5 categories. A similar statistical design was used for assessing potential effects during the earlier epoch, except that the dependent variable was the mean (rather than peak) activity and, therefore, there was no latency analysis.

In addition, we analyzed the amplitude and latency of the N170 ERP component elicited by stimuli from the different categories. For each subject the EEG was segmented separately for each condition and averaged across trials. The ERPs were digitally filtered with a band-pass of 1-17 Hz (24 dB) and baseline-corrected relative to the waveforms recorded during the 200 ms preceding stimulus onset (-200 to 0 ms). The N170 component was identified for each subject as the most negative peak between 150 and 200 ms in the ERP. Based on previous studies and on scrutiny of the present N170 distribution, the statistical analysis was restricted to the posterior-lateral temporal sites P10 (right) and P9 (left). ANOVAs with repeated measures were applied to N170 amplitudes and latencies, as assessed at these two sites. The factors were Category and Hemisphere. Significant main effects and interactions were followed up by Bonferroni-corrected pair-wise comparisons. In all the analyses, degrees of freedom and MSEs were corrected for non-sphericity using the Greenhouse-Geisser correction (for simplicity, the uncorrected degrees of freedom are presented).

Results

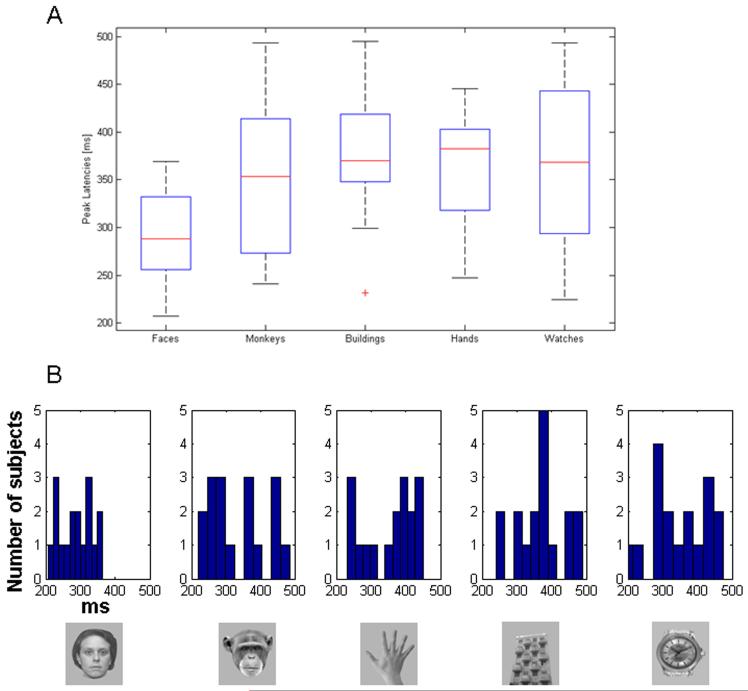

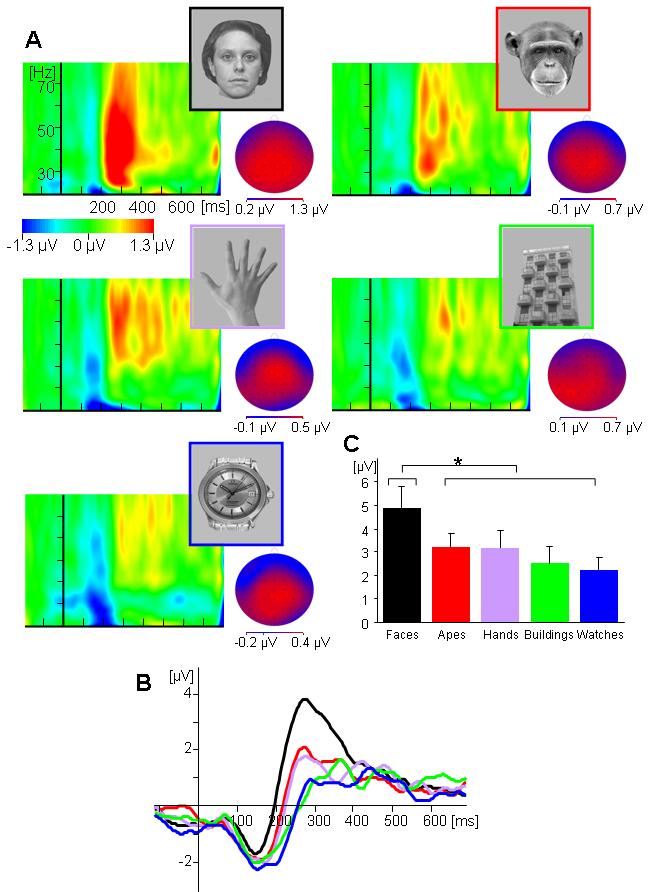

The amplitude of iGBA was higher for human faces (4.882 μV) than for all other stimulus categories [ape faces (3.217 μV), human hands (3.2 μV), buildings (2.52 μV) and watches (2.23 μV), see Figure 2]. The statistical significance of this pattern was determined by a main effect for Category [F(4,64)=3.112, p<0.05, MSE=88.2] and an interaction of Category with Anterior-Posterior distribution [F(8,120)=4.471, p<0.01, MSE=2.52], indicating that this effect was strongest in posterior clusters. Indeed, individual ANOVAs performed at each Anterior-Posterior location revealed an effect for Category at central [F(4,64)=3.593, p<0.05, MSE=29.3] and posterior [F(4,64)=3.761, p<0.05, MSE=36.9] but not at anterior sites [F(4,64)=1.736, p>0.1, MSE=24.5]. In order to ensure the reliability of the Category effect given the possibility that iGBA does not have a Gaussian distribution, we also applied a non-parametric statistical test to the mean iGBA from the mid-posterior cluster, where iGBA was maximal. Using a Monte Carlo randomization test, we created a distribution of F-values based on 1000 random permutations of the iGBA from the different categories. This yielded a critical F-value threshold of 3.396 for a predetermined Type-I error rate (alpha) of 5%, whereas the F-value obtained from the experimental data at this cluster was 3.835, which confirmed the above results.
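The logic of such a randomization test can be sketched as follows. The text does not specify how the permutations were constructed, so shuffling category labels within each subject is an assumption, as are the function names; the data are synthetic and unrelated to the reported values.

```python
import random

def rm_anova_f(data):
    """One-way repeated-measures F for data[subject][category]."""
    n, k = len(data), len(data[0])
    grand = sum(sum(row) for row in data) / (n * k)
    cat_means = [sum(row[c] for row in data) / n for c in range(k)]
    subj_means = [sum(row) / k for row in data]
    ss_cat = n * sum((m - grand) ** 2 for m in cat_means)
    ss_err = sum(
        (data[s][c] - cat_means[c] - subj_means[s] + grand) ** 2
        for s in range(n) for c in range(k)
    )
    return (ss_cat / (k - 1)) / (ss_err / ((k - 1) * (n - 1)))

def permutation_critical_f(data, n_perm=1000, alpha=0.05, seed=0):
    """Approximate (1 - alpha) quantile of F under within-subject label shuffling."""
    rng = random.Random(seed)
    null_f = sorted(
        rm_anova_f([rng.sample(row, len(row)) for row in data])
        for _ in range(n_perm)
    )
    index = min(n_perm - 1, int(round((1.0 - alpha) * n_perm)))
    return null_f[index]

# Synthetic data: 16 subjects x 5 categories, first category shifted upward.
rng = random.Random(1)
data = []
for _ in range(16):
    subject_offset = rng.gauss(0.0, 2.0)
    data.append([subject_offset + rng.gauss(0.0, 1.0) + (3.0 if c == 0 else 0.0)
                 for c in range(5)])

observed_f = rm_anova_f(data)
critical_f = permutation_critical_f(data)
print(f"observed F = {observed_f:.2f}, critical F = {critical_f:.2f}")
```

The effect is considered reliable when the observed F exceeds the critical value obtained from the shuffled data, which avoids relying on the Gaussian assumption behind the tabulated F distribution.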

Figure 2.

iGBA activity elicited by the five stimulus categories (A) Time-frequency plots from mid-posterior electrode Pz for human faces, ape faces, human hands, buildings and watches. The scalp distributions of iGBA are presented for all categories, at the latency of their respective maximal peak; note that whereas the same scale is used for the time-frequency plots, different scales are used for each distribution to allow visualization. (B) The time course of the iGBA amplitude (averaged over 20-80Hz) for each stimulus category. (C) Mean iGBA amplitudes for each stimulus category, as measured from the maximal peaks.

The electrode cluster analysis showed that overall iGBA amplitudes were largest in the middle-posterior cluster, as indicated by a main effect for Anterior-Posterior [posterior (3.971μV), central (3.421 μV), anterior (2.234 μV ); F(2,32)=20.334, p<0.001, MSE=16.6], and an interaction between Anterior-Posterior and Laterality [F(4,64)=11.421, p<0.001, MSE=1.04].

A planned contrast comparing iGBA at the middle-posterior cluster elicited by faces to that elicited by all other stimulus categories revealed a significant difference [F(1,16)=8.66, p<0.01, MSE=248]. Importantly, an additional contrast comparing iGBA elicited by ape faces and hands with that elicited by buildings and watches was not significant [F(1,16)<1, MSE=19.8].

iGBA peaked earlier for human faces (286 ms) than for stimuli from all other categories [ape faces (335 ms), human hands (356 ms), buildings (367 ms) and watches (348 ms), see Figure 3]. ANOVA on the iGBA latencies at the middle-posterior cluster revealed a main effect for Category [F(4,64)=3.928, p<0.01, MSE=4326], and a contrast comparing the latency of the iGBA peak for human faces with the other categories was significant [F(1,16)=16.909, p<0.01, MSE=69594]. Once again, the other categories did not differ significantly in latency [F(1,16)<1, MSE=21917]. Interestingly, in addition to peaking earlier than the rest of the categories, the latency of iGBA for human faces was more consistent across subjects. This is evident from the smaller standard deviation in the iGBA latencies for human faces than for the other categories and from the distribution of the latencies from individual subjects, presented in Figure 3 (A and B). The variability of the latencies was tested by replacing the latency of each subject i in each condition j ($X_{ij}$) with its absolute deviation from the mean of that condition, $|X_{ij} - \bar{X}_j|$. A one-way ANOVA was applied to these deviation values comparing the 5 categories, yielding a main effect of Category [F(4,64)=3.928, p<0.05, MSE=1367]. Subsequent contrasts showed that the deviation of iGBA latency for human faces was significantly smaller than that of the other categories [F(1,17)=10.005, p<0.01, MSE=25285], which did not differ among themselves [F(1,17)=1.425, p>0.2, MSE=1949].
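The deviation transform used for the variability analysis is similar in spirit to Levene's test, which also compares absolute deviations from group means. A minimal sketch of the transform is given below; the function name is illustrative and the latencies are made-up numbers for two conditions, not values from the study.

```python
def absolute_deviations(latencies):
    """Replace latencies[subject][condition] by |X_ij - mean of condition j|."""
    n, k = len(latencies), len(latencies[0])
    cond_means = [sum(row[c] for row in latencies) / n for c in range(k)]
    return [[abs(row[c] - cond_means[c]) for c in range(k)] for row in latencies]

# Hypothetical latencies (ms) for 4 subjects in 2 conditions.
latencies = [
    [280.0, 300.0],
    [290.0, 360.0],
    [285.0, 330.0],
    [289.0, 390.0],
]
deviations = absolute_deviations(latencies)
mean_dev = [sum(row[c] for row in deviations) / len(deviations) for c in range(2)]
print(deviations)
print(mean_dev)
```

The transformed values can then be entered into the same repeated-measures ANOVA as the raw latencies; a smaller mean deviation for a condition indicates that its latencies cluster more tightly around that condition's mean.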

Figure 3.

Distribution of the peak latencies of maximal iGBA activity for each stimulus category (A) Box plots of the latencies. The line in the middle of each box is the sample median, while the top and bottom of the box are the 25th and 75th percentiles of the samples, respectively. Lines extend from the box to show the furthest observations. (B) Distribution of latency values from all subjects for each category. The mean latency of iGBA peaks for human faces was earlier than for the other categories. In addition, the peak latencies of individual subjects in response to human faces are more concentrated around the mean while for the other categories there is more dispersion.

The analysis of the mean iGBA amplitudes in the 50-200 ms time window (Figure 2) revealed no effect of stimulus category [F(4,64)=1.39, p>0.2] and no interactions. Therefore, we did not address this pattern in any additional analyses.

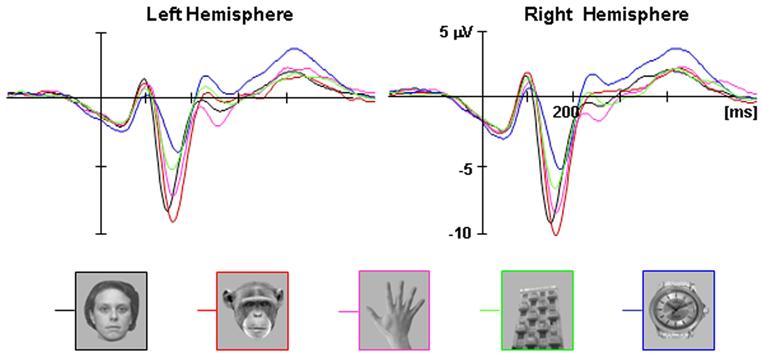

The analysis of the N170 amplitude showed the expected Category effect [F(4,64)=30.823, p<0.001, MSE=8.09] as well as an effect for Hemisphere [F(1,16)=6.94, p<0.05, MSE=13.35]. Replicating previous reports, the N170 elicited by ape faces (-10.45 μV) was slightly higher than that elicited by human faces (-9.64 μV); however, this difference was not significant (p=0.8). In contrast, the N170 amplitude elicited by both types of faces was significantly higher than that elicited by watches (-6.65 μV, p<0.01 in both cases) and buildings (-5.25 μV, p<0.01 in both cases). The N170 elicited by hands was unexpectedly higher than reported in previous studies (-8.869 μV), and although it was lower than for both face categories, this difference did not reach significance (p>0.1). Since the trend found here is consistent with previous results which found significant differences between the N170 elicited by faces and human hands (e.g. Bentin et al., 1996; Eimer, 2000; Onitsuka et al., 2006; Kovacs et al., 2006), and since it was not the focus of the study, we did not further investigate the current null face-hands N170 effect.

Discussion

The major finding in this study is that the iGBA elicited by human faces was larger and peaked earlier than that elicited by several natural (ape faces, human hands) and man-made (buildings and watches) objects. Importantly, although the iGBA elicited by non-human-face categories varied, these differences were not statistically significant. Moreover, the timing of the iGBA response to human faces was more consistent across participants than for the other categories. The variability in the latencies of iGBA between subjects in the non-human-face categories led to a smearing of the amplitudes for these categories, as observed in the grand average of the iGBA (Figure 2).

The particular sensitivity of iGBA to human faces found in the present study, coupled with current theories about factors affecting the iGBA amplitude, adds a new perspective on the perceptual and neural characteristics of face processing. As reviewed in the introduction, iGBA is a measure of stimulus-driven synchronization whose amplitude reflects the size of the synchronized neural population within a local assembly and/or the degree of synchronicity within a given neural population (Singer and Gray, 1995). Correlations between the amplitude of this activity and different experimental manipulations could, therefore, suggest factors that increase or decrease the amount of neural synchronization during perceptual processing. In line with this hypothesis, the present results suggest that larger and/or more synchronized neural assemblies are activated during the processing of human faces relative to ape faces, human hands, buildings or watches.

Why should human faces induce larger, earlier and more time-locked neural synchronization than other stimulus categories? We suggest that this difference is a consequence of the higher expertise that humans have in distinguishing among human faces, which entails a different level of categorization; the default level of categorization (entry level) is basic for non-face categories but subordinate or single-exemplar level for faces (Rosch et al., 1976; Johnson and Mervis, 1997; Tanaka, 2001; for a detailed discussion of the relationship between perceptual expertise and level of categorization see Bukach et al., 2006). This distinction has implications regarding both the structural characteristics of the mental representations and, at the functional level, the processes applied during perception. At the structural level, the neural population encoding human faces might be more strongly connected due to repeated exposure to faces and, therefore, would elicit stronger synchronized activity. In addition, the mental representation of human faces may contain richer information that allows subordinate distinctions between faces of different gender, race, age, etc. Such information might be absent or reduced in the representation of other objects, for which humans are less expert and which are represented primarily according to their function (for a review see Capitani et al., 2003). Hence, the amplitude differences between iGBA elicited by human faces and other objects may reflect the amount of information contained in their respective neural representations. The latency effect suggests that richer and stronger representations for faces are more accessible, which leads to a fairly consistent and faster activation of the face neural representation than for other objects, which show a more variable time course.

At the functional level, the default processing of human faces at the subordinate or individual exemplar level implies that information pertinent to subordinate categorization is extracted and integrated during structural encoding. This process includes a detailed analysis of inner components and the computation of the spatial relations between them during their integration into the global face structure (Cabeza and Kato, 2000; Maurer et al., 2002). Accordingly, the higher iGBA amplitude for human faces might reflect the analysis of this information, which is not necessary for the categorization of objects. Another process that may be reflected by the increase in iGBA is the allocation of attention, since iGBA has been shown to be modulated by attention (Gruber et al., 1999; Tiitinen et al., 1993; Fan et al., 2007; Jensen et al., 2007). However, although some have shown that faces “pop out” in a visual search task when presented among other stimuli (Hershler and Hochstein, 2005), others suggested that this effect can be attributed to low-level visual characteristics (VanRullen, in press). Alternatively, attention may be necessary for a more detailed analysis of the face and for subordinate categorization (Palermo and Rhodes, 2002, 2007; but see Boutet et al., 2002). Still, it is unclear whether different or additional attentional resources are involved in the processing of human faces relative to the processing of objects.

It is important to realize that the above two accounts are not mutually exclusive. Indeed, the information needed to make subordinate or single exemplar distinctions is, by necessity, more elaborate than that needed for basic-level categorization. Therefore, on the one hand, more detailed processes are required for subordinate categorizations and, on the other hand, the representations that are formed (or brought up from memory) during this process are richer. Further research is necessary in order to determine which of these factors, or perhaps both, are reflected in the iGBA effect for human faces.

Nevertheless, the higher iGBA found in this study for faces versus other objects establishes iGBA as a new face-sensitive electrophysiological measure, alongside the well-documented N170 ERP component, which exhibits larger amplitude for faces than for non-face stimuli. However, this study, as well as our previous reports, demonstrates that these two electrophysiological measures are dissociated and reflect different stages in face processing. Our previous studies show that, whereas the N170 ERP effect is elicited by face components regardless of their spatial configuration, the iGBA is significantly reduced when the inner components of the face are spatially scrambled (Zion-Golumbic and Bentin, in press). Moreover, we found that the N170-effect is enhanced by face inversion and is insensitive to face familiarity, while iGBA is reduced by face inversion and enhanced by face familiarity (Anaki et al., in press). In the current study we found additional evidence for this dissociation. Whereas the current ERP findings replicate the similar N170 effects for ape and human faces reported by Carmel and Bentin (2002), the iGBA significantly distinguished between these two categories. This suggests that during basic-level categorization tasks (such as monitoring for flowers) the mechanism eliciting the N170 is particularly sensitive to stimuli that have the global structure of a face (regardless of whether the face is human, ape or schematic; cf. Sagiv and Bentin, 2001). In fact, the N170 is higher for any stimulus that includes unequivocal physiognomic information. In contrast, here we show that iGBA is particularly sensitive to human faces, and not to ape faces, with which humans are less familiar and which they do not usually distinguish at subordinate levels (Mondloch et al., 2006).

Therefore we propose that, in contrast to the N170, the iGBA is a manifestation of forming a detailed perceptual representation that includes sufficient information for individuating exemplars of a perceptually well-defined category such as faces.

Taken together, these results lead us to propose a multi-level hierarchical model for face processing (cf. Rotshtein et al., 2005) that involves different neural mechanisms indexed by separate electrophysiological manifestations. First, there is a mechanism of face detection - that is, a basic-level categorization of a face, regardless of species, based on the global configuration and/or on the detection of face-specific features. This mechanism is reflected by the N170-effect (see footnote 1). The detection of a human face triggers additional, more detailed processing aimed at within-category distinctions. This process (frequently labeled “structural encoding”; e.g. Bruce and Young, 1986) is aimed at integrating the configural metrics and face-feature characteristics into an individualized, informationally rich, global face representation. This process might be influenced not only by bottom-up perceptual factors but also by the pre-existence of well-formed and easily accessible mental representations. The increased iGBA for human faces may reflect this second process.

Finally, we should stress that there is nothing in our above conceptualization of the role of iGBA in face processing that should necessarily be domain-specific. Indeed, it is possible that, like the N170, similar iGBA effects could be generalized and observed for familiar buildings or for objects of expertise. Further studies should explore this possibility.

Supplementary Material

Figure 4.

Average ERPs for human faces, ape faces, hands, buildings and watches over posterior lateral scalp sites in each hemisphere (P10 from the right hemisphere and P9 from the left hemisphere). Both human and ape faces exhibit higher N170 amplitudes than the non-face categories.

Acknowledgement

This study was funded by NIMH grant R01 MH 64458 to Shlomo Bentin.

Footnotes

1. We acknowledge findings showing N170 effects for other objects of expertise (e.g. Tanaka and Curran, 2001; Scott et al., 2006). It is possible that the N170 mechanism is a more general flag for items that are potentially streamed into subordinate categorization processes.

References

- Anaki D, Zion-Golumbic E, Bentin S. Electrophysiological neural mechanisms for detection, configural analysis and recognition of faces. NeuroImage. doi: 10.1016/j.neuroimage.2007.05.054. in press. [DOI] [PubMed] [Google Scholar]

- Bauer R, Brosch M, Eckhorn R. Different rules of spatial summation from beyond the receptive field for spike rates and oscillation amplitudes in cat visual cortex. Brain Res. 1995;669(2):291–7. doi: 10.1016/0006-8993(94)01273-k. [DOI] [PubMed] [Google Scholar]

- Bertrand O, Tallon-Baudry C. Oscillatory gamma activity in humans: a possible role for object representation. Int. J. Psychophysiol. 2000;38:211–223. doi: 10.1016/s0167-8760(00)00166-5.

- Boutet I, Gentes-Hawn A, Chaudhuri A. The influence of attention on holistic face encoding. Cognition. 2002;84:321–341. doi: 10.1016/s0010-0277(02)00072-0.

- Bruce V, Young A. Understanding face recognition. Br J Psychol. 1986;77:305–27. doi: 10.1111/j.2044-8295.1986.tb02199.x.

- Bukach CM, Gauthier I, Tarr MJ. Beyond faces and modularity: the power of an expertise framework. Trends Cogn Sci. 2006;10:159–66. doi: 10.1016/j.tics.2006.02.004.

- Busch NA, Debener S, Kranczioch C, Engel AK, Herrmann CS. Size matters: effects of stimulus size, duration and eccentricity on the visual gamma-band response. Clin Neurophysiol. 2004;115:1810–20. doi: 10.1016/j.clinph.2004.03.015.

- Busch NA, Herrmann CS, Müller MM, Lenz D, Gruber T. A cross-laboratory study of event-related gamma activity in a standard object recognition paradigm. NeuroImage. 2006;33:1169–77. doi: 10.1016/j.neuroimage.2006.07.034.

- Byatt G, Rhodes G. Identification of own-race and other-race faces: Implications for the representation of race in face space. Psychonomic Bulletin and Review. 2004;11:735–741. doi: 10.3758/bf03196628.

- Cabeza R, Kato T. Features are also important: contributions of featural and configural processing to face recognition. Psychol. Sci. 2000;11:429–433. doi: 10.1111/1467-9280.00283.

- Capitani E, Laiacona M, Mahon B, Caramazza A. What are the facts of semantic category-specific deficits? A critical review of the clinical evidence. Cognitive Neuropsychology. 2003;20:213–261. doi: 10.1080/02643290244000266.

- Carmel D, Bentin S. Domain specificity versus expertise: factors influencing distinct processing of faces. Cognition. 2002;83:1–29. doi: 10.1016/s0010-0277(01)00162-7.

- Delorme A, Makeig S. EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J. Neurosci. Methods. 2003;134(1):9–21. doi: 10.1016/j.jneumeth.2003.10.009.

- Eimer M. The face-specific N170 component reflects late stages in the structural encoding of faces. Neuroreport. 2000;11(10):2319–24. doi: 10.1097/00001756-200007140-00050.

- Fan J, Byrne J, Worden MS, Guise KG, McCandliss BD, Fossella J, Posner MI. The relation of brain oscillations to attentional networks. J Neurosci. 2007;27(23):6197–206. doi: 10.1523/JNEUROSCI.1833-07.2007.

- Gray CM, Engel AK, Konig P, Singer W. Stimulus-Dependent Neuronal Oscillations in Cat Visual Cortex: Receptive Field Properties and Feature Dependence. Eur J Neurosci. 1990;2:607–619. doi: 10.1111/j.1460-9568.1990.tb00450.x.

- Gruber T, Müller MM, Keil A, Elbert T. Selective visual-spatial attention alters induced gamma band responses in the human EEG. Clin Neurophysiol. 1999;110:2074–85. doi: 10.1016/s1388-2457(99)00176-5.

- Gruber T, Tsivilis D, Montaldi D, Müller MM. Induced gamma band responses: an early marker of memory encoding and retrieval. Neuroreport. 2004;15(11):1837–41. doi: 10.1097/01.wnr.0000137077.26010.12.

- Gruber T, Müller MM. Oscillatory brain activity dissociates between associative stimulus content in a repetition priming task in the human EEG. Cereb Cortex. 2005;15(1):109–16. doi: 10.1093/cercor/bhh113.

- Gruber T, Müller MM. Oscillatory brain activity in the human EEG during indirect and direct memory tasks. Brain Res. 2006;1097:194–204. doi: 10.1016/j.brainres.2006.04.069.

- Hebb DO. The organization of behavior: a neuropsychological theory. J. Wiley; New York: 1949.

- Itier RJ, Latinus M, Taylor MJ. Face, eye and object early processing: What is the face specificity? NeuroImage. 2006;29:667–676. doi: 10.1016/j.neuroimage.2005.07.041.

- Jensen O, Kaiser J, Lachaux JP. Human gamma-frequency oscillations associated with attention and memory. Trends Neurosci. 2007;30(7):317–324. doi: 10.1016/j.tins.2007.05.001.

- Johnson KE, Mervis CB. Effects of varying levels of expertise on the basic level of categorization. J Exp Psychol Gen. 1997;126:248–77. doi: 10.1037//0096-3445.126.3.248.

- Jung TP, Makeig S, Westerfield M, Townsend J, Courchesne E, Sejnowski TJ. Removal of eye activity artifacts from visual event-related potentials in normal and clinical subjects. Clin. Neurophysiol. 2000;111:1745–1758. doi: 10.1016/s1388-2457(00)00386-2.

- Kaiser J, Lutzenberger W. Induced gamma-band activity and human brain function. Neuroscientist. 2003;9:475–84. doi: 10.1177/1073858403259137.

- Keil A, Müller MM, Ray WJ, Gruber T, Elbert T. Human gamma band activity and perception of a gestalt. J. Neurosci. 1999;19:7152–7161. doi: 10.1523/JNEUROSCI.19-16-07152.1999.

- Kovács G, Zimmer M, Bankó E, Harza I, Antal A, Vidnyánszky Z. Electrophysiological correlates of visual adaptation to faces and body parts in humans. Cereb Cortex. 2006;16:742–53. doi: 10.1093/cercor/bhj020.

- Onitsuka T, Niznikiewicz MA, Spencer KM, Frumin M, Kuroki N, Lucia LC, Shenton ME, McCarley RW. Functional and structural deficits in brain regions subserving face perception in schizophrenia. Am J Psychiatry. 2006;163(3):455–62. doi: 10.1176/appi.ajp.163.3.455.

- Osipova D, Takashima A, Oostenveld R, Fernandez G, Maris E, Jensen O. Theta and gamma oscillations predict encoding and retrieval of declarative memory. J Neurosci. 2006;26(28):7523–31. doi: 10.1523/JNEUROSCI.1948-06.2006.

- Martinovic J, Gruber T, Müller MM. Induced Gamma Band Responses Predict Recognition Delays during Object Identification. J. Cogn Neurosci. 2007;19(6):921–34. doi: 10.1162/jocn.2007.19.6.921.

- Maurer D, Le Grand R, Mondloch CJ. The many faces of configural processing. Trends Cogn. Sci. 2002;6:255–260. doi: 10.1016/s1364-6613(02)01903-4.

- Meissner CA, Brigham JC. Thirty years of investigating the own-race bias in memory for faces: A meta-analytic review. Psychology, Public Policy and Law. 2001;7:3–35.

- Mondloch CJ, Maurer D, Ahola S. Becoming a face expert. Psychol. Sci. 2006;17:930–934. doi: 10.1111/j.1467-9280.2006.01806.x.

- Palermo R, Rhodes G. The influence of divided attention on holistic face perception. Cognition. 2002;82(3):225–257. doi: 10.1016/s0010-0277(01)00160-3.

- Palermo R, Rhodes G. Are you always on my mind? A review of how face perception and attention interact. Neuropsychologia. 2007;45(1):75–92. doi: 10.1016/j.neuropsychologia.2006.04.025.

- Rodriguez E, George N, Lachaux JP, Martinerie J, Renault B, Varela FJ. Perception’s shadow: long-distance synchronization of human brain activity. Nature. 1999;397:430–433. doi: 10.1038/17120.

- Rosch E, Mervis CB, Gray WD, Johnson DM, Boyes-Braem P. Basic objects in natural categories. Cognitive Psychology. 1976;8:382–439.

- Rousselet GA, Husk JS, Bennett PJ, Sekuler AB. Spatial scaling factors explain eccentricity effects on face ERPs. J. Vis. 2005;5:755–63. doi: 10.1167/5.10.1.

- Rotshtein P, Henson RN, Treves A, Driver J, Dolan RJ. Morphing Marilyn into Maggie dissociates physical and identity face representations in the brain. Nat Neurosci. 2005;8(1):107–13. doi: 10.1038/nn1370.

- Sagiv N, Bentin S. Structural encoding of human and schematic faces: Holistic and part-based processes. Journal of Cognitive Neuroscience. 2001;13:937–951. doi: 10.1162/089892901753165854.

- Scott LS, Tanaka JW, Scheinberg DL, Curran T. A re-evaluation of the electrophysiological correlates of expert object processing. J. Cog Neurosci. 2006;18:1–13. doi: 10.1162/jocn.2006.18.9.1453.

- Tanaka JW. The entry point of face recognition: Evidence for face expertise. J. Exp. Psychol. General. 2001;130:534–543. doi: 10.1037//0096-3445.130.3.534.

- Tanaka JW, Curran T. A neural basis for expert object recognition. Psychol Sci. 2001;12(1):43–7. doi: 10.1111/1467-9280.00308.

- Tallon-Baudry C, Bertrand O, Delpuech C, Pernier J. Stimulus specificity of phase-locked and non-phase locked 40 Hz visual responses in human. J. Neurosci. 1996;16:4240–4249. doi: 10.1523/JNEUROSCI.16-13-04240.1996.

- Tallon-Baudry C, Bertrand O, Delpuech C, Pernier J. Oscillatory gamma-band (30-70 Hz) activity induced by a visual search task in humans. J. Neurosci. 1997;17:722–734. doi: 10.1523/JNEUROSCI.17-02-00722.1997.

- Tallon-Baudry C, Bertrand O, Peronnet F, Pernier J. Induced gamma-band activity during the delay of a visual short-term memory task in humans. J Neurosci. 1998;18(11):4244–54. doi: 10.1523/JNEUROSCI.18-11-04244.1998.

- Tallon-Baudry C, Bertrand O, Fischer C. Oscillatory synchrony between human extrastriate areas during visual short-term memory maintenance. J. Neurosci. 2001;21:RC117, 1–5. doi: 10.1523/JNEUROSCI.21-20-j0008.2001.

- Tallon-Baudry C. Oscillatory synchrony and human visual cognition. J Physiol Paris. 2003;97:355–63. doi: 10.1016/j.jphysparis.2003.09.009.

- Tiitinen H, Sinkkonen J, Reinikainen K, Alho K, Lavikainen J, Naatanen R. Selective attention enhances the auditory 40-Hz transient response in humans. Nature. 1993;364:59–60. doi: 10.1038/364059a0.

- VanRullen R. On second glance: Still no high-level pop-out effect for faces. Vision Research. In press. doi: 10.1016/j.visres.2005.07.009.

- Zion-Golumbic E, Bentin S. Dissociated Neural Mechanisms for Face Detection and Configural Encoding: Evidence from N170 and Induced Gamma-Band Oscillation Effects. Cereb Cortex. In press. doi: 10.1093/cercor/bhl100.
