Abstract

By Fourier's theorem1, signals can be decomposed into a sum of sinusoids of different frequencies. This is especially relevant for hearing, because the inner ear performs a form of mechanical Fourier transform by mapping frequencies along the length of the cochlear partition. An alternative signal decomposition, originated by Hilbert2, is to factor a signal into the product of a slowly varying envelope and a rapidly varying fine time structure. Neurons in the auditory brainstem3–6 sensitive to these features have been found in mammalian physiological studies. To investigate the relative perceptual importance of envelope and fine structure, we synthesized stimuli that we call ‘auditory chimaeras’, which have the envelope of one sound and the fine structure of another. Here we show that the envelope is most important for speech reception, and the fine structure is most important for pitch perception and sound localization. When the two features are in conflict, the sound of speech is heard at a location determined by the fine structure, but the words are identified according to the envelope. This finding reveals a possible acoustic basis for the hypothesized ‘what’ and ‘where’ pathways in the auditory cortex7–10.

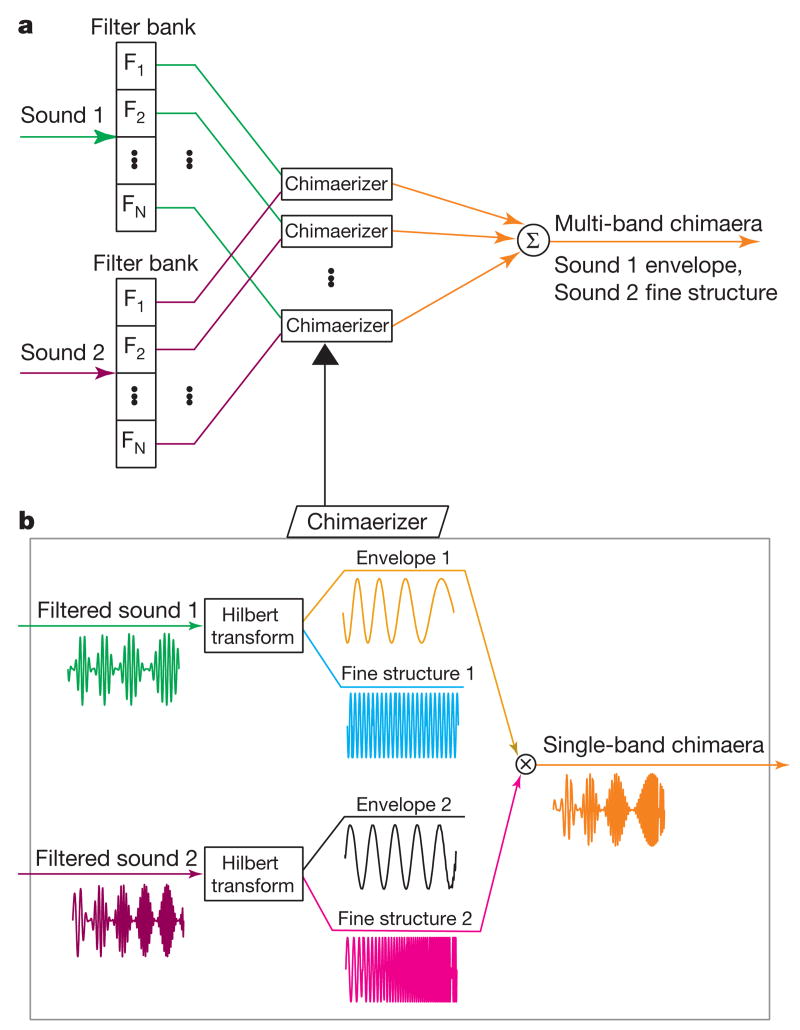

Combinations of features from different sounds have been used in the past to produce new, hybrid sounds for use in electronic music11,12. Our aim in combining features from different sounds was to study the perceptual relevance of the envelope and fine structure in different acoustic situations. To synthesize auditory chimaeras, two sound waveforms are used as inputs. A bank of band-pass filters is used to split each sound into 1 to 64 complementary frequency bands spanning the range 80–8,820 Hz. Such splitting into frequency bands resembles the Fourier analysis performed by the cochlea and by processors for cochlear implants. The output of each filter is factored into its envelope and fine structure using the Hilbert transform (see Methods). The envelope of each filter output from the first sound is then multiplied by the fine structure of the corresponding filter output from the second sound. These products are finally summed over all frequency bands to produce an auditory chimaera that is made up of the envelope of the first sound and the fine structure of the second sound in each band. The primary variable in this study is the number of frequency bands, which is inversely related to the width of each band. A block diagram of chimaera synthesis is shown in Fig. 1 with example waveforms for a single frequency band. For an audio demonstration of auditory chimaeras, see ref. 13.

Figure 1.

Auditory chimaera synthesis. a, Two sounds are used as input. Each sound is split into N complementary frequency bands with a perfect-reconstruction filter bank. Filtered signals from matching frequency bands are processed through a chimaerizer, which exchanges the envelope and the fine time structure of the two signals, producing a single-band chimaera. Partial chimaeras are summed over all frequency bands to produce a multi-band chimaera. b, Example waveforms within a chimaerizer, where band-limited input signals are factored into their envelope and fine structure using the Hilbert transform. A single-band auditory chimaera is made from the product of envelope 1 and fine structure 2.
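The synthesis steps described above (band splitting, Hilbert factoring, multiplication of one envelope by the other fine structure, and summation over bands) can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: brick-wall FFT masks stand in for the perfect-reconstruction filter bank, the function names `analytic_signal`, `split_bands` and `chimaera` are hypothetical, and both inputs are assumed to share the same length and sampling rate.

```python
import numpy as np

def analytic_signal(x):
    """Analytic signal via FFT: keep DC, double positive frequencies, zero negative ones."""
    n = len(x)
    spectrum = np.fft.fft(x)
    weights = np.zeros(n)
    weights[0] = 1.0
    if n % 2 == 0:
        weights[n // 2] = 1.0          # Nyquist bin is kept once
        weights[1:n // 2] = 2.0
    else:
        weights[1:(n + 1) // 2] = 2.0
    return np.fft.ifft(spectrum * weights)

def split_bands(x, fs, edges):
    """Split x into adjacent bands using brick-wall FFT masks (assumption, for illustration)."""
    spectrum = np.fft.rfft(x)
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    bands = []
    for lo, hi in zip(edges[:-1], edges[1:]):
        mask = (freqs >= lo) & (freqs < hi)
        bands.append(np.fft.irfft(spectrum * mask, n=len(x)))
    return bands

def chimaera(env_sound, fine_sound, fs, edges):
    """Envelope of env_sound times fine structure of fine_sound, summed over all bands."""
    out = np.zeros(len(env_sound))
    for band1, band2 in zip(split_bands(env_sound, fs, edges),
                            split_bands(fine_sound, fs, edges)):
        envelope = np.abs(analytic_signal(band1))            # Hilbert envelope of sound 1
        fine = np.cos(np.angle(analytic_signal(band2)))      # fine structure of sound 2
        out += envelope * fine
    return out
```

One sanity check on such a sketch is that a sound chimaerized with itself, `chimaera(x, x, fs, edges)`, returns the sum of its own band signals, because each band equals its envelope multiplied by its fine structure.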

Speech is a robust signal that can be perturbed in many different ways while remaining intelligible14,15. Speech chimaeras were created either by combining a speech sentence with noise or by combining two separate speech sentences. The speech material comprised sentences from the Hearing in Noise Test (HINT)16. Speech–noise chimaeras were synthesized from individual HINT sentences and spectrally matched noise. These chimaeras contain speech information in either their envelope or their fine structure. Speech–speech chimaeras were synthesized from two different HINT sentences of similar duration. The envelope of each speech–speech chimaera contains information about one utterance; its fine structure contains information about another.

Listening tests with speech–noise chimaeras showed that speech reception is highly dependent on the number of frequency bands used for synthesis (Fig. 2). When speech information is contained solely in the envelope, speech reception is poor with one or two frequency bands and improves as the number of bands increases. Good performance (>85% word recognition) is achieved with as few as four frequency bands, consistent with previous findings that bands of noise modulated by speech envelope can produce good speech reception with very limited spectral information17. In contrast, when speech information is only contained in the fine structure, speech reception is generally better with fewer frequency bands. The best performance is achieved with two bands; performance then deteriorates as the number of bands increases until, with eight or more bands, there is essentially no speech reception. Good performance with one and two frequency bands of fine structure is consistent with previous findings that peak-clipping (which flattens out the envelope) does not severely degrade speech reception14. Poorer performance with increasing numbers of bands is consistent with the auditory system's insensitivity to the fine structure of critical-band signals at high frequencies18.

Figure 2.

Speech reception of sentences in the envelope and fine structure of auditory chimaeras. Speech–noise chimaeras (solid lines) only contain speech information in either the envelope or the fine structure. Speech–speech chimaeras (dashed lines) have conflicting speech information in the envelope and the fine structure.

Speech–speech chimaeras measure the relative salience of the speech information transmitted through the envelope and fine structure when the two types of information are conflicting. Even though speech–speech chimaeras are constructed with two distinct utterances, listeners almost invariably heard words from only one of the two sentences. In general, the speech information contained in the envelope dominated the information contained in the fine structure. Speech reception based on the fine structure was much poorer with speech–speech chimaeras than with speech–noise chimaeras, and was only above chance with one and two frequency bands. Speech reception based on envelope information was also degraded, but not as severely, and performance still exceeded 80% with eight or more frequency bands. Thus, envelope information in speech is more resistant to conflicting fine-structure information from another sentence than vice versa.

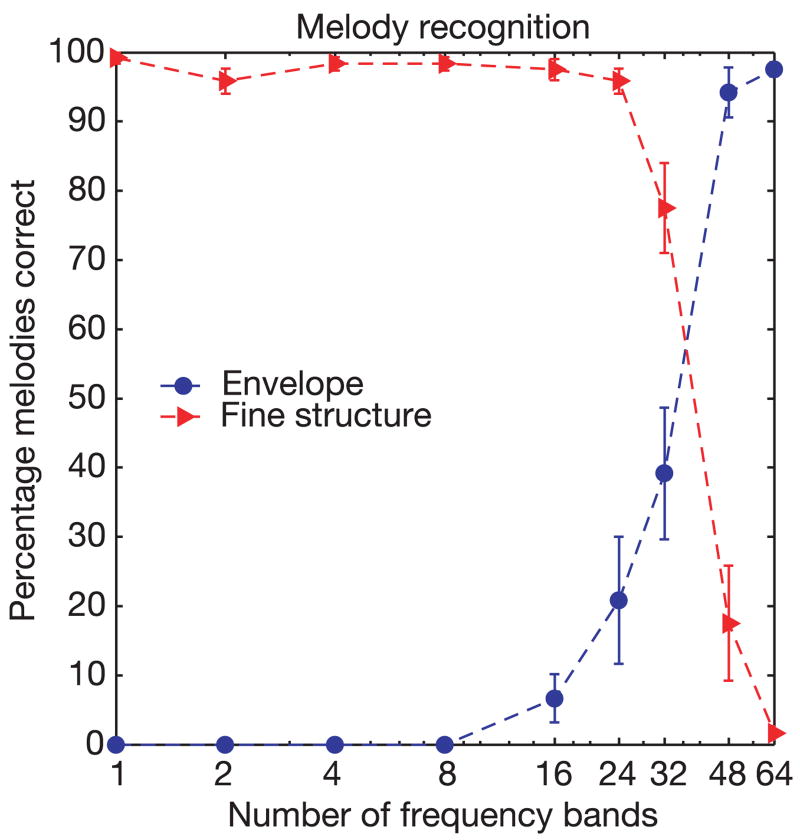

We used a melody recognition task to assess pitch perception of complex harmonic sounds. For this purpose, we synthesized chimaeras based on two different melodies, one in the envelope and the other in the fine structure. Melody–melody chimaeras show a reversal in the relative importance of envelope and fine structure when compared to speech–speech chimaeras (Fig. 3). Listeners always heard the melody based on the fine structure with up to 32 frequency bands. With 48 and 64 frequency bands, however, they identified the envelope-based melody more often than they did the melody based on fine structure. In responses to these melody–melody chimaeras, subjects sometimes reported hearing two melodies and often picked the two melodies represented in the envelope and the fine structure, respectively. This can be seen in the data with 16 to 48 frequency bands where scores based on envelope and fine structure add up to more than 100% correct.

Figure 3.

Recognition of melodies in the envelope and in the fine structure of auditory chimaeras. Melody–melody chimaeras contain conflicting melodies in the envelope and fine structure.

The crossover point, where the envelope begins to dominate over the fine structure, occurs for a much higher number of frequency bands (about 40) for melody–melody chimaeras than it does for speech–speech and speech–noise chimaeras, further suggesting that speech reception depends primarily on envelope information in broad frequency bands. For melody recognition, this crossover occurs approximately when the bandwidths of the bandpass filters become narrower than the critical bandwidths. For such narrow bandwidths, precise information about the frequency of each spectral component, and hence the overall pitch, is available in the spectral distribution of the envelopes.

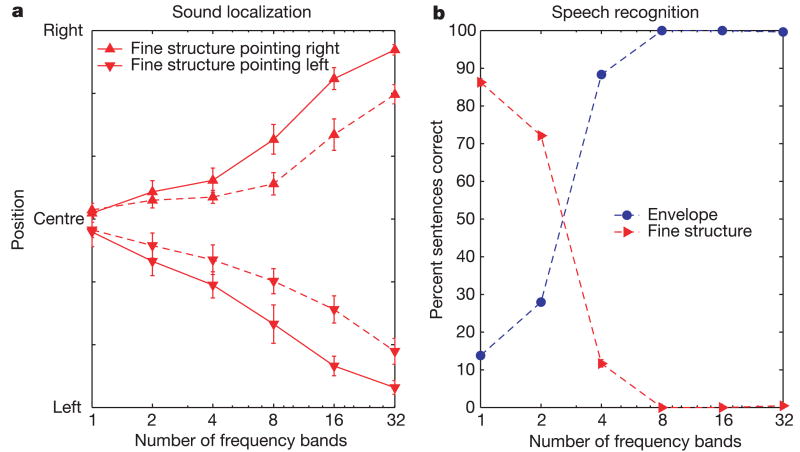

Sound localization in the horizontal plane is based on interaural differences in time and level. Interaural time differences (ITD) are the dominant cue for low-frequency sounds such as speech19. A delay of 700 μs was introduced into either the right or left channel of each HINT sentence to create ITDs that would produce completely lateralized sound images. To synthesize dichotic chimaeras, a sentence with an ITD pointing to the right was combined with a sentence having an ITD pointing to the left to produce a chimaera with its envelope information pointing to one side and its fine structure pointing to the other side. Two types of dichotic chimaeras were constructed, one using the same sentence for both the envelope and fine structure, and the other using different sentences. Lateralization of dichotic chimaeras was always based on the ITD of the fine structure (Fig. 4), consistent with results using non-speech stimuli20,21. Chimaeras synthesized with a small number of frequency bands were difficult to lateralize, but lateralization improved with increasing number of bands. Dichotic chimaeras based on the same sentence in the envelope and fine structure were more easily lateralized than those based on two different sentences.
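The ITD manipulation described above can be illustrated with a short NumPy sketch. It assumes a 44.1-kHz sampling rate and rounds the 700-μs delay to a whole number of samples; neither detail is stated in the text, and the function name `apply_itd` is hypothetical. The delayed channel is the lagging ear, so the sound image is heard toward the opposite (leading) side.

```python
import numpy as np

def apply_itd(mono, fs, itd_s=700e-6, lead="right"):
    """Return (left, right) channels with the lagging ear delayed by about itd_s seconds."""
    delay = int(round(itd_s * fs))                      # whole-sample delay (assumption)
    delayed = np.concatenate([np.zeros(delay), mono])   # lagging channel
    direct = np.concatenate([mono, np.zeros(delay)])    # leading channel, padded to equal length
    if lead == "right":
        return delayed, direct    # left ear lags, image pulled to the right
    return direct, delayed        # right ear lags, image pulled to the left

# Example: at an assumed fs of 44,100 Hz, 700 microseconds is about 31 samples.
left, right = apply_itd(np.ones(100), fs=44100, lead="right")
```

A dichotic chimaera would then presumably be built one ear at a time, taking the envelope from the left (or right) channel of one lateralized sentence and the fine structure from the same-side channel of the oppositely lateralized sentence.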

Figure 4.

‘What’ and ‘where’ for dichotic chimaeras are determined by different cues. a, Dichotic chimaeras constructed with the same sentence are shown with solid lines and those made from different sentences are shown with dashed lines. b, Subjects heard the sentence in the envelope more than the sentence in the fine structure when four or more bands were used.

When dichotic chimaeras based on different sentences were presented, listeners were asked to pick which of the two sentences they heard in addition to reporting the lateral position of the sound image. Consistent with our results for speech–speech chimaeras, subjects most often heard the sentence based on the fine structure with one and two frequency bands, whereas they heard the sentence based on the envelope for four or more bands. With eight or more frequency bands, subjects clearly identified the sentence based on the envelope but lateralized the speech to the side to which the fine structure was pointing. Thus the fine structure determines ‘where’ the sound is heard, whereas the envelope determines ‘what’ sentence is heard. In this respect, auditory chimaeras are consistent with evidence for separate ‘where’ and ‘what’ pathways in the auditory cortex7–10.

The Hilbert transform provides a mathematically rigorous definition of envelope and fine structure free of arbitrary parameters. The squared Hilbert envelope contains frequencies up to the bandwidth of the original signal. Thus, for low numbers of frequency bands (less than about six), and hence large filter bandwidths, the fluctuations in the envelope can become rather rapid, making the functional distinction between fine structure and envelope based on fluctuation rate difficult. One of our most important findings is that the perceptual importance of the envelope increases with the number of frequency bands, while that of the fine structure diminishes (Figs 2 and 3). Had we used a different technique (such as rectification followed by low-pass filtering) to extract a smoother envelope, the smooth envelope would have contained even less information for small numbers of frequency bands, and therefore its perceptual importance would probably have been even smaller. Furthermore, a previous study17 has indicated that eliminating all rapid envelope fluctuations from bands of noise modulated by speech envelope has little or no effect on speech reception. Thus, for our purposes, it is valid to consider the envelope as slowly varying relative to the fine structure.

Cochlear implants are prosthetic devices that seek to restore hearing in the profoundly deaf by stimulating the auditory nerve via electrodes inserted into the cochlea. Current processors for cochlear implants discard the fine time structure, and present only about six to eight bands of envelope information22. Our results suggest that modifying cochlear implant processors to deliver fine-structure information may improve patients' pitch perception and sensitivity to ITD. Better pitch perception should benefit music appreciation. It should also help convey prosody cues in speech and may enhance speech reception among speakers of tonal languages, such as Mandarin Chinese, where pitch is used to distinguish different words. Better ITD sensitivity may help the increasing number of patients with bilateral cochlear implants in taking advantage of binaural cues that normal-hearing listeners use to distinguish speech among competing sound sources. Strategies for improving the representation of fine structure in cochlear implants have been proposed23 and supported by single-unit data24.

Methods

Stimulus synthesis

The perfect-reconstruction digital filter banks used for chimaera synthesis spanned the range 80–8,820 Hz, spaced in equal steps along the cochlear frequency map25 (nearly logarithmic frequency spacing). For example, with six bands, the cutoff frequencies were 80, 260, 600, 1,240, 2,420, 4,650 and 8,820 Hz. The transition over which adjacent filters overlap significantly was 25% of the bandwidth of the narrowest filter in the bank (the lowest in frequency). Thus, for the six band case, each filter transition was 45 Hz wide.
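The idea of spacing band edges in equal steps along a cochlear frequency map can be sketched as follows. The sketch assumes Greenwood's human frequency-position function F = A(10^(ax) − k) with A = 165.4, a = 2.1 and k = 0.88, where x is the proportional distance along the cochlea; the text does not state which constants were used, and the function names are hypothetical. Under these assumed constants the six-band edges fall close to, but not exactly on, the cutoff frequencies listed above.

```python
import math

# Greenwood-style map F = A * (10**(a * x) - k); these constants are an assumption.
A, a, k = 165.4, 2.1, 0.88

def freq_to_pos(f):
    """Proportional cochlear position for frequency f in Hz."""
    return math.log10(f / A + k) / a

def pos_to_freq(x):
    """Frequency in Hz at proportional cochlear position x."""
    return A * (10 ** (a * x) - k)

def band_edges(n_bands, f_lo=80.0, f_hi=8820.0):
    """Edges of n_bands adjacent bands, equally spaced in cochlear position."""
    x_lo, x_hi = freq_to_pos(f_lo), freq_to_pos(f_hi)
    step = (x_hi - x_lo) / n_bands
    return [pos_to_freq(x_lo + i * step) for i in range(n_bands + 1)]

edges = band_edges(6)
transition = 0.25 * (edges[1] - edges[0])   # 25% of the narrowest (lowest) band
```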

To compute the envelope and fine structure in each band, we used the analytic signal26 $s(t) = s_r(t) + i\,s_i(t)$, where $s_r(t)$ is the filter output in one band, $s_i(t)$ the Hilbert transform of $s_r(t)$, and $i = \sqrt{-1}$. The Hilbert envelope is the magnitude of the analytic signal, $a(t) = \sqrt{s_r^2(t) + s_i^2(t)}$. The fine structure is $\cos\phi(t)$, where $\phi(t) = \arctan(s_i(t)/s_r(t))$ is the phase of the analytic signal. The original signal can be reconstructed as $s_r(t) = a(t)\cos\phi(t)$. In practice, the Hilbert transform was combined with the band-pass filtering operation using complex filters whose real and imaginary (subscripts r, i) parts are in quadrature27.
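The decomposition of a single band into envelope a(t) and fine structure cos ϕ(t) can be checked numerically. The sketch below assumes an FFT-based Hilbert transform applied to a finite, periodic test signal rather than the quadrature complex filters mentioned in the text; the function name `hilbert_decompose` and the test signal are hypothetical.

```python
import numpy as np

def hilbert_decompose(s_r):
    """Return (envelope a, fine structure cos(phi)) of a real band-limited signal."""
    n = len(s_r)
    spectrum = np.fft.fft(s_r)
    weights = np.zeros(n)
    weights[0] = 1.0
    if n % 2 == 0:
        weights[n // 2] = 1.0
        weights[1:n // 2] = 2.0
    else:
        weights[1:(n + 1) // 2] = 2.0
    s = np.fft.ifft(spectrum * weights)     # analytic signal s_r + i * s_i
    a = np.abs(s)                           # Hilbert envelope
    phi = np.arctan2(s.imag, s.real)        # arctan2 resolves the quadrant of the phase
    return a, np.cos(phi)

# Test signal: a 100-Hz carrier with a slow 4-Hz amplitude modulation.
fs = 1000
t = np.arange(fs) / fs
modulator = 1.0 + 0.5 * np.cos(2 * np.pi * 4 * t)
s_r = modulator * np.cos(2 * np.pi * 100 * t)

a, fine = hilbert_decompose(s_r)
reconstructed = a * fine                    # should match s_r
```

For this test signal the recovered envelope matches the slow modulator, and the product of envelope and fine structure returns the original waveform.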

Subjects and procedure

Six native speakers of American English with normal hearing thresholds participated in each part of the study. Five of these subjects participated in the entire series of tests. Speech reception, melody recognition, and lateralization tests were conducted separately. Within each individual experiment, the order of all conditions was randomized. Stimuli were presented in a soundproof booth through headphones at a root-mean-square sound pressure level of 67 dB.

In the speech reception experiment, subjects listened to the processed sentences and were instructed to type the words they heard into a computer. Each subject listened to a total of 273 speech chimaeras with an additional seven for training. Speech reception was measured as percentage words correct. ‘The’, ‘a’ and ‘an’ were not scored. When speech–speech chimaeras were used, each word in a subject's response could count for either a sentence in the envelope or in the fine structure, but this condition rarely occurred in practice.
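The word-scoring rule described above can be sketched as a small function. The matching details are assumptions not given in the text: exact spelling is required, word order is ignored, and each target word can be credited only once. The example sentences are invented for illustration and are not taken from the HINT corpus.

```python
import re

UNSCORED = {"the", "a", "an"}   # articles are not scored

def scored_words(sentence):
    """Lower-case words of a sentence with the unscored articles removed."""
    words = re.findall(r"[a-z']+", sentence.lower())
    return [w for w in words if w not in UNSCORED]

def percent_words_correct(target, response):
    """Percentage of scored target words that appear in the typed response."""
    target_words = scored_words(target)
    remaining = scored_words(response)
    hits = 0
    for word in target_words:
        if word in remaining:
            remaining.remove(word)   # credit each response word at most once
            hits += 1
    return 100.0 * hits / len(target_words) if target_words else 0.0

score = percent_words_correct("The boy ran to a big house",
                              "a boy ran to the house")
```

For a speech–speech chimaera, the same response would be scored twice, once against the envelope sentence and once against the fine-structure sentence.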

Before the melody recognition experiment, each subject selected ten melodies that he/she was familiar with. Each melody was taken from a set of 34 simple melodies with all rhythmic information removed28, consisting of 16 equal-duration notes and synthesized with MIDI software that used samples of a grand piano. During the experiment, subjects selected from their own list of ten melodies which one(s) they heard on each trial. Melodies were scored as percentage correct even when subjects reported multiple melodies in a single trial without penalty for incorrect responses.

In the lateralization experiment, subjects used a seven-point scale to rate the lateral position of the sound image inside the head. This scale ranges from -3 to +3, with -3 corresponding to the left ear and +3 to the right ear. Lateralization scores were averaged for each condition. In addition, subjects had to select which of two possible sentences they heard, one choice corresponding to the envelope and the other one to the fine structure.

Acknowledgments

We thank C. Shen for assistance with data analysis and J. R. Melcher and L. D. Braida for comments on an earlier version of the manuscript. A.J.O. is currently a fellow at the Hanse Institute for Advanced Study in Delmenhorst, Germany. This work was supported by grants from the National Institutes of Health (NIDCD).

Footnotes

Competing interests statement: The authors declare that they have no competing financial interests.

References

- 1. Fourier JBJ. La théorie analytique de la chaleur. Mém Acad R Sci. 1829;8:581–622.

- 2. Hilbert D. Grundzüge einer allgemeinen Theorie der linearen Integralgleichungen. Teubner; Leipzig: 1912.

- 3. Rhode WS, Oertel D, Smith PH. Physiological response properties of cells labeled intracellularly with horseradish peroxidase in cat ventral cochlear nucleus. J Comp Neurol. 1983;213:448–463. doi: 10.1002/cne.902130408.

- 4. Joris PX, Yin TC. Envelope coding in the lateral superior olive. I. Sensitivity to interaural time differences. J Neurophysiol. 1995;73:1043–1062. doi: 10.1152/jn.1995.73.3.1043.

- 5. Yin TC, Chan JC. Interaural time sensitivity in medial superior olive of cat. J Neurophysiol. 1990;64:465–488. doi: 10.1152/jn.1990.64.2.465.

- 6. Langner G, Schreiner CE. Periodicity coding in the inferior colliculus of the cat. I. Neuronal mechanisms. J Neurophysiol. 1988;60:1799–1822. doi: 10.1152/jn.1988.60.6.1799.

- 7. Rauschecker JP, Tian B. Mechanisms and streams for processing of “what” and “where” in auditory cortex. Proc Natl Acad Sci USA. 2000;97:11800–11806. doi: 10.1073/pnas.97.22.11800.

- 8. Recanzone GH. Spatial processing in the auditory cortex of the macaque monkey. Proc Natl Acad Sci USA. 2000;97:11829–11835. doi: 10.1073/pnas.97.22.11829.

- 9. Tian B, Reser D, Durham A, Kustov A, Rauschecker JP. Functional specialization in rhesus monkey auditory cortex. Science. 2001;292:290–293. doi: 10.1126/science.1058911.

- 10. Maeder PP, et al. Distinct pathways involved in sound recognition and localization: a human fMRI study. NeuroImage. 2001;14:802–816. doi: 10.1006/nimg.2001.0888.

- 11. Dodge C, Jerse TA. Computer Music: Synthesis, Composition, and Performance. Schirmer Books; New York: 1997.

- 12. Depalle P, Garcia G, Rodet X. A virtual castrato (!?). Proc Int Computer Music Conf.; Aarhus, Denmark. 1994. pp. 357–360.

- 13. Shen C, Smith ZM, Oxenham AJ, Delgutte B. Auditory Chimera Demo. 2001. Available at <http://epl.meei.harvard.edu/∼bard/chimeral>.

- 14. Licklider J. Effect of amplitude distortion upon the intelligibility of speech. J Acoust Soc Am. 1946;18:429–434.

- 15. Saberi K, Perrot DR. Cognitive restoration of reversed speech. Nature. 1999;398:760. doi: 10.1038/19652.

- 16. Nilsson M, Soli SD, Sullivan JA. Development of the hearing in noise test for the measurement of speech reception thresholds in quiet and in noise. J Acoust Soc Am. 1994;95:1085–1099. doi: 10.1121/1.408469.

- 17. Shannon RV, Zeng FG, Kamath V, Wygonski J, Ekelid M. Speech recognition with primarily temporal cues. Science. 1995;270:303–304. doi: 10.1126/science.270.5234.303.

- 18. Ghitza O. On the upper cutoff frequency of the auditory critical-band envelope detectors in the context of speech perception. J Acoust Soc Am. 2001;110:1628–1640. doi: 10.1121/1.1396325.

- 19. Wightman FL, Kistler DJ. The dominant role of low-frequency interaural time differences in sound localization. J Acoust Soc Am. 1992;91:1648–1661. doi: 10.1121/1.402445.

- 20. Henning GB, Ashton J. The effect of carrier and modulation frequency on lateralization based on interaural phase and interaural group delay. Hear Res. 1981;4:184–194. doi: 10.1016/0378-5955(81)90005-8.

- 21. Bernstein LR, Trahiotis C. Lateralization of low-frequency complex waveforms: The use of envelope-based temporal disparities. J Acoust Soc Am. 1985;77:1868–1880. doi: 10.1121/1.391938.

- 22. Wilson BS, et al. Better speech recognition with cochlear implants. Nature. 1991;352:236–238. doi: 10.1038/352236a0.

- 23. Rubinstein JT, Wilson BS, Finley CC, Abbas PJ. Pseudospontaneous activity: stochastic independence of auditory nerve fibers with electrical stimulation. Hear Res. 1999;127:108–118. doi: 10.1016/s0378-5955(98)00185-3.

- 24. Litvak L, Delgutte B, Eddington D. Auditory nerve fiber responses to electrical stimulation: modulated and unmodulated pulse trains. J Acoust Soc Am. 2001;110:368–379. doi: 10.1121/1.1375140.

- 25. Greenwood DD. A cochlear frequency-position function for several species—29 years later. J Acoust Soc Am. 1990;87:2592–2604. doi: 10.1121/1.399052.

- 26. Ville J. Théorie et applications de la notion de signal analytique. Cables Transmission. 1948;2:61–74.

- 27. Troullinos G, Ehlig P, Chirayil R, Bradley J, Garcia D. In: Digital Signal Processing Applications with the TMS320 Family. Papamichalis P, editor. Texas Instruments; Dallas: 1990. pp. 221–330.

- 28. Hartmann WM, Johnson D. Stream segregation and peripheral channeling. Music Percept. 1991;9:155–184.