Abstract

Emotionally arousing stimuli are at once highly attention-grabbing and memorable. We examined whether emotional enhancement of memory (EEM) reflects an indirect effect of emotion on memory, mediated by enhanced attention to emotional items during encoding. We tested a critical prediction of the mediation hypothesis: that regions conjointly activated by emotion and attention would correlate with subsequent EEM. Participants were scanned with fMRI while they watched emotional or neutral pictures under instructions to attend to them a lot or a little, and were then given an immediate recognition test. A region in the left fusiform gyrus was activated by emotion, voluntary attention, and subsequent EEM. A functional network, different for each attention condition, connected this region and the amygdala, which was associated with emotion and EEM, but not with voluntary attention. These findings support an indirect cortical mediation account of immediate EEM that may complement a direct modulation model.

The viewing of a gruesome roadside accident is at once emotionally arousing, highly attention-grabbing, and memorable. The experiential, cognitive, and neurohormonal impact of an emotional stimulus is thought to be an adaptive reflection of its evolutionary significance. The inherently interdependent nature of these processes adds to the challenge of understanding the unfolding of events following the perception of an emotional stimulus. As such, the parallel enhancing effects of emotion on attention and memory are difficult to separate both in nature and under laboratory conditions.

Evidence from a variety of sources shows that when both emotional and neutral items compete for processing resources, emotion is able to bias the competition (Desimone and Duncan 1995) so that emotional items receive priority, garnering the greater share of available resources (for review, see Vuilleumier 2005; Vuilleumier and Driver 2007). For example, emotion modulates the attentional blink (Anderson and Phelps 2001; Anderson 2005), impairs concurrent task performance in divided-attention paradigms (Kensinger and Corkin 2004; Schimmack 2005; Thomas and Hasher 2006; Talmi et al. 2007) and slows down font-color naming in the Stroop task (Pratto and John 1991; Algom et al. 2004; Hadley and Mackay 2006).

In addition to the effect of emotion on attention, emotion also has an enhancing effect on memory (for reviews, see Cahill and McGaugh 1998; Dolan 2002; LaBar and Cabeza 2006), which we refer to as emotional enhancement of memory (EEM). This functional correlation between the emotional influences on attention and memory is consistent with the underlying neuroanatomy, because the amygdala has a central role in both processes. Studies with amygdala-lesioned patients have shown that the amygdala is crucial both to enhanced attention to emotionally significant events (Anderson and Phelps 2001; Vuilleumier et al. 2004) and to later memory for these events (Cahill et al. 1995; LaBar and Phelps 1998; Phelps et al. 1998; Richardson et al. 2004; Adolphs et al. 2005). Similarly, functional imaging studies of emotion and attention have consistently linked amygdala activation during emotional item encoding with enhanced processing of these items (e.g., Morris et al. 1998, 1999; Vuilleumier et al. 2001b, 2004), and studies of emotion and memory have linked amygdala activation at encoding with subsequent EEM (e.g., Canli et al. 2000, 2002; Cahill et al. 2001, 2004b; Dolcos et al. 2004; Kensinger and Corkin, 2004). Because allocation of attention improves subsequent memory regardless of emotionality (Craik et al. 1996), the demonstrated correlation between amygdala activation at encoding and subsequent EEM could be due, at least partly, to the amygdala’s role in enhancing the amount of attention allocated to emotional events during initial encoding, which then causes EEM. In line with these findings, the attention mediation hypothesis of EEM (noted by Cahill and McGaugh 1998, and developed in Hamann 2001) proposes that EEM is at least partly dependent on the extra attention given to emotional items at encoding.

The proposal that the well-documented enhanced attention to emotional items has memory consequences has not been supported. Instead, previous research has provided evidence that emotion has parallel effects on attention at encoding and on memory formation. Behavioral studies show that recall and recollection of emotionally arousing items, relative to nonarousing ones, do not suffer as much from division of attention at encoding (Kensinger and Corkin 2004; Kern et al. 2005; Talmi et al. 2007). Moreover, the greater allocation of attention toward negative emotional items when attention was divided did not account significantly for the relatively preserved emotional memory (Talmi et al. 2007). However suggestive this behavioral evidence is for separate effects of emotion on attention and memory formation, at present there has been no direct examination of the neural systems supporting these processes to assess whether they interact or are dissociable.

A large number of studies show that emotion can enhance activity in occipital and inferotemporal sensory processing regions, in a manner similar to that of top-down attention (e.g., Lang et al. 1998; Bradley et al. 2003; Sabatinelli et al. 2007). Studies that manipulated both attention and emotion to visually presented stimuli, and found overlapping activations, support the notion that the functional role of this enhanced signal is to improve processing of visual input (Lane et al. 1999; Vuilleumier et al. 2001a, 2004; Keil et al. 2005; Schupp et al. 2007), and Canli et al. (2000) showed a correlation of EEM and activation in extrastriate regions—the fusiform and inferior temporal gyri. In addition to inferotemporal regions, emotion and attention were also found to activate conjointly parietal sources localized in event-related potentials (ERP) and steady-state visual evoked potentials studies (Keil et al. 2005; Schupp et al. 2007). However, none of these studies conjointly manipulated attention and emotion to examine their mutual or independent effect on subsequent memory. The current study uses functional magnetic resonance imaging (fMRI) to examine this question.

Emotion has been shown to influence memory through a different mechanism, independently from its putative influence on memory via attention. According to the modulation model of EEM (Cahill and McGaugh 1998; McGaugh 2004), memory for emotional events is better than memory for neutral events because the long-term consolidation of emotional memory traces is better than the consolidation of neutral traces. The modulation model emphasizes the importance of emotional arousal in activating the basolateral nucleus of the amygdala, which then directly modulates memory, a process that is postulated to be independent of the additional influence emotion also exerts on the allocation of resources at encoding. This model accounts for the finding that when attention to emotional and neutral items was equated, EEM was not found in an immediate memory test, but appeared in a delayed memory test (Sharot and Phelps 2004). Similarly, the effect of post-encoding amygdala stimulation, and of local infusions of pharmacological agents that influence noradrenergic function—both manipulations that enhance memory in rats in the one-trial avoidance paradigm (e.g., Bianchin et al. 1999; for review, see McGaugh 2004)—also cannot be attributed to attentional differences at encoding. Importantly, post-encoding modulation of arousal has also shown robust effects on memory consolidation in humans (Cahill and Alkire 2003; Cahill et al. 2003; Anderson et al. 2006). These findings provide ample evidence for the modulation model of EEM.

The duration of the delay required for modulatory effects of consolidation to appear in human data is currently a matter of debate, a point we take up in the discussion section. For now, we note that the bulk of the evidence suggests that immediate memory tests minimize differential consolidation effects on EEM (e.g., Bianchin et al. 1999; Izquierdo et al. 2002; Sharot and Phelps 2004), thereby highlighting the potential explanatory role that mediation via attention may play in accounting for the pattern of immediate EEM data. Therefore, in order to test the hypothesis that EEM is attentionally mediated, the present study examined memory immediately. Clearly, however, although these two routes for the effect of emotion on memory (directly through consolidation and, putatively, indirectly through attention) are distinct, they are not mutually exclusive.

The primary goal of the present experiment was to examine a central prediction of the attention mediation hypothesis of EEM, namely, that regions associated with enhanced attention allocation to emotional pictures during encoding would also be associated with subsequent EEM. To examine this prediction we scanned participants with fMRI while they viewed briefly presented negative emotional and neutral items under “high-attention” or “low-attention” conditions (Pinsk et al. 2004). In the “low-attention” condition, participants simply had to press a key whenever a picture appeared, while in the “high-attention” condition, they performed a task requiring greater attentional allocation, deciding which side of the picture had more information. Recognition memory was tested after a short study-test interval (10 min). The results corresponded to the prediction of the attention mediation hypothesis: EEM was correlated with activations associated with increased attention to emotionally arousing pictures in the fusiform gyrus.

Results

Behavioral results

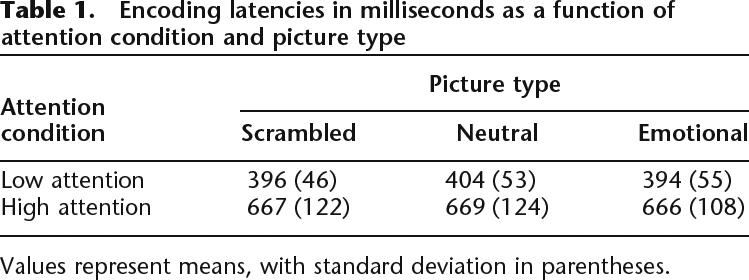

Encoding reaction times

Median reaction times were analyzed with a 2 (attention: high, low) × 3 (picture type: intact emotional, intact neutral, scrambled) repeated measures ANOVA. As expected, participants were slower in the high-attention relative to the low-attention condition (F(1,10) = 63.76, P < 0.001). None of the other effects was significant (see Table 1). Because of the inherent latency differences in the two attention conditions, we included an analysis of the attention effects that regressed out “time on task” (see below).

Table 1.

Encoding latencies in milliseconds as a function of attention condition and picture type

Values represent means, with standard deviation in parentheses.

Memory performance

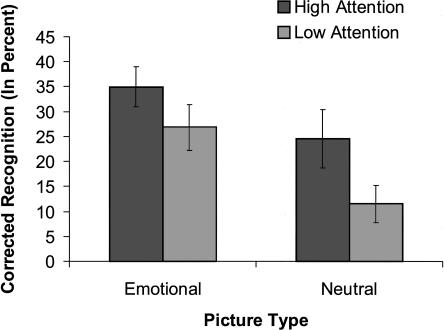

Corrected recognition (hits minus false alarms) scores were analyzed with a 2 (attention: high, low) × 2 (picture type: intact emotional, intact neutral) repeated measures ANOVA (see Fig. 1). Recognition memory was greater for emotional relative to neutral pictures, consistent with EEM, and greater for high- relative to low-attention conditions, consistent with successful manipulation of attention during encoding. The significant main effects of picture type (F(1,10) = 23.12, MSE = 0.007, P < 0.001, η2 = 0.70) and of attention (F(1,10) = 17.74, MSE = 0.011, P < 0.01, η2 = 0.64) were qualified by a significant interaction (F(1,10) = 5.02, MSE = 0.004, P < 0.05, η2 = 0.33), due to a larger EEM under low than under high attention. Planned paired t-tests showed that both neutral pictures (t(10) = 3.76, P < 0.01) and emotional pictures (t(10) = 3.89, P < 0.01) benefited from high attention. The difference between overall recognition of emotional and neutral items was significant under low attention (t(10) = 6.25, P < 0.001) but was only present as a trend under high attention (t(10) = 2.12, P = 0.06). A similar pattern was found when only the “sure” (highly confident) memory responses were taken into account (emotion: F(1,10) = 19.34, MSE = 34.76, P < 0.001, η2 = 0.66; attention: F(1,10) = 18.13, MSE = 11.55, P < 0.01, η2 = 0.64; and a trend for the interaction between them, F(1,10) = 3.90, MSE = 8.42, P = 0.08, η2 = 0.28). The interaction between picture type and attention replicates prior behavioral work demonstrating more pronounced EEM during conditions of diminished volitional attention (Kensinger and Corkin 2004; Talmi et al. 2007).
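As an illustrative check, not part of the original analysis, the sketch below computes a corrected recognition score and recovers the effect sizes from the F statistics reported above. It assumes that η2 denotes partial eta squared, computed as F × df_effect / (F × df_effect + df_error); the function names are hypothetical.

```python
def corrected_recognition(hit_rate, false_alarm_rate):
    """Corrected recognition score: hit rate minus false-alarm rate."""
    return hit_rate - false_alarm_rate


def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic (assumed definition)."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# F values reported above for the 2 x 2 ANOVA, each with df = (1, 10).
reported = {"picture type": 23.12, "attention": 17.74, "interaction": 5.02}
effect_sizes = {name: round(partial_eta_squared(f, 1, 10), 2)
                for name, f in reported.items()}
print(effect_sizes)
```

Under this assumption the computed values round to 0.70, 0.64, and 0.33, matching the reported effect sizes.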

Figure 1.

Behavioral results. Corrected recognition (hits-FA, in percent, collapsed across confidence ratings) as a function of emotion and attention. Recognition was greater for emotional relative to neutral pictures, a difference larger in the low-attention condition. Error bars represent SE.

Sex and gender differences

Following Cahill et al. (2004a), gender was determined using the Bem Sex Role Inventory. One participant had an “undifferentiated” gender and was excluded from this analysis. Neither sex nor gender had any main effects or interactions with the behavioral memory results.

Imaging results

Experimental contrasts

Conjoint activation for emotion and attention could be examined with a formal conjunction analysis of their main effects, but we argue that the spatial overlap of the simple effects of emotion under low attention, and attention for neutral pictures only, operationalizes these variables more precisely. Regions involved in attention were defined according to the contrast high attention > low attention. This was done separately for the neutral and the scrambled pictures; those activated for neutral pictures defined content-dependent attention, and those activated for both neutral and scrambled pictures defined content-independent attention. This allowed for an examination of attention effects on lower-level versus higher-level visual cortical analysis. Emotional picture trials were not included in the attention contrasts to enable an unbiased examination of top-down attention networks independently of emotion effects on attention. Regions involved in emotion were defined according to the contrast emotion > neutral, within low-attention blocks only. We confined ourselves to the simple effect of emotion under low attention: (1) to examine how emotion influences processing independently of top-down attention effects, (2) because previous studies have shown that the effect of emotionality on the amygdala is often reduced when participants are engaged in a demanding cognitive orienting task such as ours (Critchley et al. 2000; Hariri et al. 2000; Adolphs 2002; Lieberman et al. 2007), and (3) because EEM is more pronounced under low attention (Kensinger and Corkin 2004; Talmi et al. 2007), as replicated in the present study. The spatial overlap of these simple effects of attention and emotion defined regions that were responsive both to top-down attention demands and to bottom-up emotional content; we propose that the overlapping regions are involved in attentional enhancement of emotional picture processing.
We separately conducted a formal conjunction analysis of the main effects of emotion and attention, which is not reported here for brevity; this analysis replicated the critical findings we report below. Finally, regions involved in EEM were those significantly activated in the interaction between picture type (intact emotional, intact neutral) and memory performance (hits, misses), and in which the emotional difference due to memory (Dm) was higher than the neutral Dm.
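The EEM contrast described above is an interaction that can be written as a difference of two Dm effects. The following is a minimal sketch, assuming one activation estimate per condition for a single voxel or cluster. The function names and the numbers in the usage line are hypothetical and are not data from this study; the sketch shows only the direction of the effect, not its statistical significance.

```python
def dm(hit_activation, miss_activation):
    """Difference due to memory (Dm): subsequent hits minus subsequent misses."""
    return hit_activation - miss_activation


def eem_effect(emo_hit, emo_miss, neu_hit, neu_miss):
    """Picture type x memory interaction: emotional Dm minus neutral Dm.

    Equivalent to contrast weights [+1, -1, -1, +1] over
    (emotional hit, emotional miss, neutral hit, neutral miss).
    """
    return dm(emo_hit, emo_miss) - dm(neu_hit, neu_miss)


def shows_eem(emo_hit, emo_miss, neu_hit, neu_miss):
    """True when the emotional Dm exceeds the neutral Dm (direction only)."""
    return eem_effect(emo_hit, emo_miss, neu_hit, neu_miss) > 0


# Hypothetical activation estimates (arbitrary units), for illustration only.
print(eem_effect(emo_hit=1.5, emo_miss=0.5, neu_hit=1.0, neu_miss=0.75))
```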

Attention

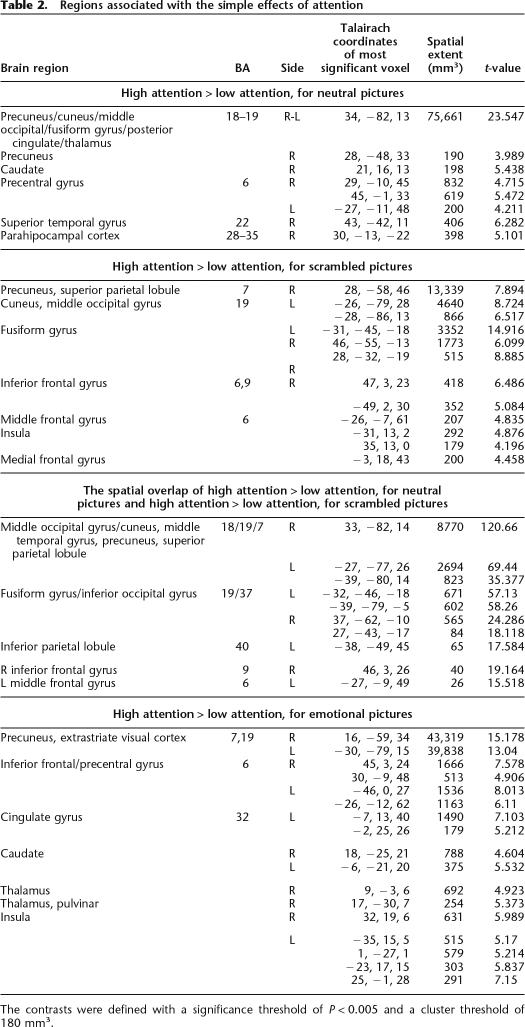

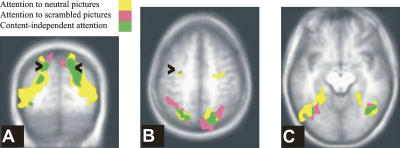

Table 2 lists regions involved in attention. Content-independent attention was associated with bilateral activations in the extrastriate visual cortex, extending to the cuneus and precuneus, fusiform gyrus, superior parietal lobule, including the intraparietal sulcus, middle frontal gyrus, including the frontal eye fields, and left inferior parietal lobule (see Fig. 2). Regions associated with attending to neutral pictures (content-dependent attention) included additional bilateral activations in inferotemporal regions, parahippocampal place area (Epstein and Kanwisher 1998), posterior cingulate gyrus, and caudate. Comparing these results with the results of the same attention contrast, but from a model that regressed out reaction times, showed that activations associated with content-dependent attention to neutral pictures were not crucially dependent on differential latencies under low- and high-attention conditions. Table 2 also lists regions involved in attention to emotional pictures. We note that there was no significant amygdala activation in the high- vs. low-attention contrast, even with a lenient P-value of P = 0.01.

Table 2.

Regions associated with the simple effects of attention

The contrasts were defined with a significance threshold of P < 0.005 and a cluster threshold of 180 mm3.

Figure 2.

Regions associated with attention. Regions associated with attending to neutral pictures (yellow), scrambled pictures (pink), and the spatial overlap between these contrasts (green). (A) Y = −64; the arrowheads point to the intraparietal sulcus. (B) Z = 49; the arrowheads point to the frontal eye field. (C) Z = −10.

Emotion

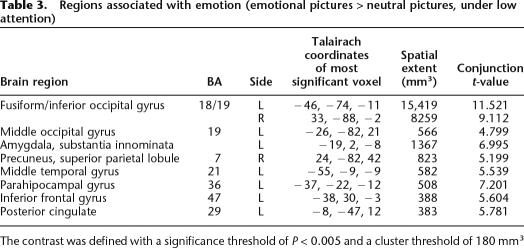

Encoding emotional, relative to neutral, pictures under low attention activated bilateral occipital and inferotemporal cortex, the right dorsal parietal cortex, and, in the left hemisphere, the amygdala, anterior cingulate, inferior frontal gyrus, parahippocampal gyrus, and anterior middle temporal gyrus (see Table 3). Amygdala activation was dorsal, a location corresponding to the location of the central nucleus. As expected, the difference in amygdala activation for emotional and neutral pictures was greater under low- than under high-attention conditions, and only reached significance under low-attention conditions.

Table 3.

Regions associated with emotion (emotional pictures > neutral pictures, under low attention)

The contrast was defined with a significance threshold of P < 0.005 and a cluster threshold of 180 mm3.

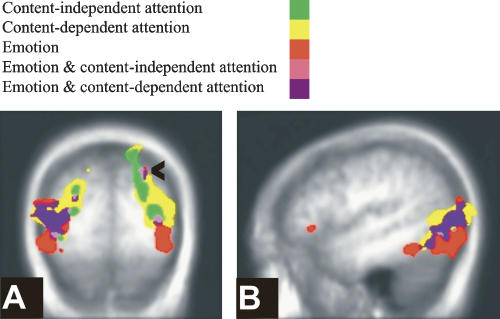

Conjoint activations of attention and emotion

Sensory-processing sites and top-down sources of attention modulation, which were associated with attention according to the contrasts specified above, were also associated with emotion. Emotion and content-independent attention conjointly activated the bilateral middle occipital gyrus and bilateral fusiform gyrus, as well as the right dorsal parietal cortex centered on the intraparietal sulcus (IPS). Emotion and content-dependent attention conjointly activated additional regions in inferotemporal cortices (see Fig. 3; Table 4).

Figure 3.

Spatial overlap of attention and emotion. The overlap (pink) of emotion (red) and content-independent attention (green), and the overlap (purple) of emotion and content-dependent attention (yellow). (A) Y = −79; the arrowhead points to the intraparietal sulcus. (B) X = −47.

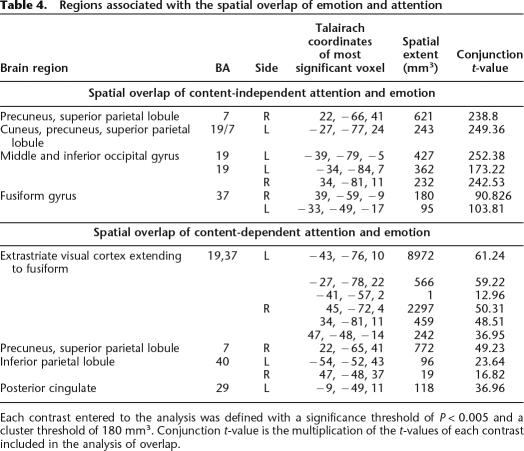

Table 4.

Regions associated with the spatial overlap of emotion and attention

Each contrast entered into the analysis was defined with a significance threshold of P < 0.005 and a cluster threshold of 180 mm3. The conjunction t-value is the product of the t-values of the contrasts included in the analysis of overlap.
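The overlap logic in the note above can be sketched as follows. This is a simplified illustration under stated assumptions and not the authors' pipeline: each contrast is represented as a hypothetical mapping from voxel coordinates to t-values, the critical t-value is a placeholder, and the cluster-extent threshold is omitted for brevity.

```python
def suprathreshold(t_map, t_crit):
    """Keep voxels whose t-value exceeds the critical value."""
    return {voxel: t for voxel, t in t_map.items() if t > t_crit}


def spatial_overlap(t_map_a, t_map_b, t_crit):
    """Voxels significant in both contrasts, scored by the product of t-values."""
    sig_a = suprathreshold(t_map_a, t_crit)
    sig_b = suprathreshold(t_map_b, t_crit)
    shared = sig_a.keys() & sig_b.keys()
    return {voxel: sig_a[voxel] * sig_b[voxel] for voxel in shared}


# Hypothetical t-maps keyed by (x, y, z) voxel coordinates.
emotion = {(-44, -52, -14): 4.0, (28, -64, 48): 3.5, (-20, -5, -12): 5.0}
attention = {(-44, -52, -14): 6.0, (28, -64, 48): 2.0, (10, 20, 30): 7.0}
T_CRIT = 3.0  # placeholder threshold, not the value used in the study

print(spatial_overlap(emotion, attention, T_CRIT))
```

Only the voxel that passes the threshold in both maps survives, and its score is the product of its two t-values.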

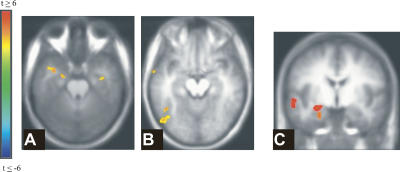

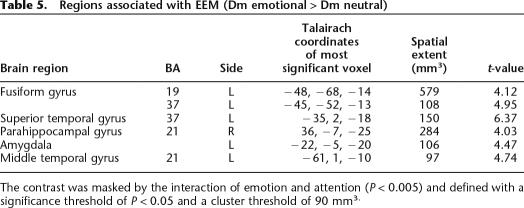

EEM

Figure 4 and Table 5 depict regions activated by the contrast of Dm emotional > Dm neutral (EEM). Figure 4C shows that amygdala activation for emotion and for EEM dissociated spatially, in that while emotion activated the dorsal amygdala, EEM correlated with more ventral amygdala activation, a location that corresponds more closely to the basolateral nucleus of the amygdala.

Figure 4.

Regions associated with EEM (emotional Dm > neutral Dm). (A) Z = −23. (B) Z = −13. (C) Spatial dissociation in the left amygdala’s association with emotion and EEM, Y = −5. Dorsal amygdala correlated with emotion (red), while ventral amygdala correlated with EEM. These locations correspond to the central nucleus and the basolateral nucleus of the amygdala, respectively.

Table 5.

Regions associated with EEM (Dm emotional > Dm neutral)

The contrast was masked by the interaction of emotion and attention (P < 0.005) and defined with a significance threshold of P < 0.05 and a cluster threshold of 90 mm3.

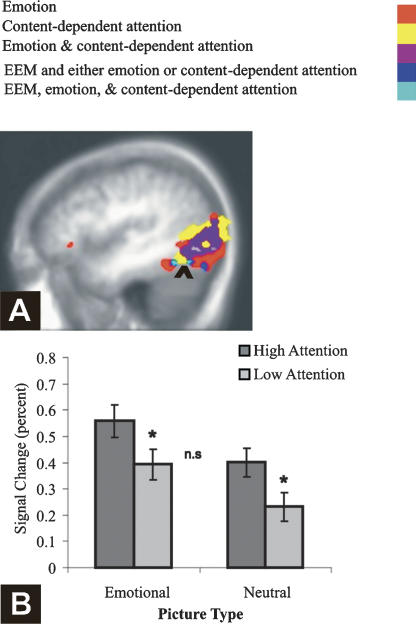

Conjoint activations of attention, emotion, and EEM

Critically, EEM was correlated with activation in a region conjointly activated by content-dependent attention and emotion—the fusiform gyrus (see Fig. 5), suggesting that enhanced processing of the higher-order content of emotional images supported their enhanced subsequent memory. Emotion and attention activated this cluster additively (F < 1 for the interaction) and to the same degree (t < 1 for the comparison of the simple effects, Fig. 5B). None of the regions conjointly activated by content-independent attention and emotion was associated with subsequent EEM, even with a lenient threshold (P < 0.05). Finally, EEM and attention also conjointly activated the right parahippocampal cortex.

Figure 5.

Spatial overlap of emotion, content-dependent attention, and EEM. (A) Activations associated with attention (yellow), with emotion (red), with the overlap of attention and emotion (purple), and with the overlap of EEM with either emotion or attention (dark blue). The arrowhead points to the spatial overlap of emotion, content-dependent attention, and EEM (light blue). X = −44. (B) Additive effects of emotion and attention as a function of picture type in the cluster activated by emotion, attention, and EEM. Error bars represent SE.

Sex and gender differences in the amygdala ROI

A 2 (picture type: emotional, neutral) × 7 (TRs, 2-sec bins from picture onset) repeated measures ANOVA of Dms was carried out with sex as a between-subject factor. Analysis of the left amygdala ROI revealed a marginal main effect of sex (with a higher activation in males, P = 0.06) but no interactions. In the right amygdala ROI, sex had a marginal interaction with picture type, P < 0.06. Upon further analysis, it was determined that only males showed a marginal effect of Type (F(1,5) = 4.36, P = 0.09). When Gender was entered instead of sex, it had no significant main effects or interactions. Previous reports of sex differences in EEM tested memory after a prolonged delay (a week or more; Cahill et al. 2001, 2004b; Canli et al. 2002; Mackiewicz et al. 2006), and Mackiewicz and colleagues reported sex differences in EEM only in the delayed, but not in the immediate, memory test. Thus, the absence of marked sex differences in amygdala response in the present study may be attributed to the immediate memory testing as well as to our small sample size.

Inter-regional interactions

The attention-mediation hypothesis predicted that emotional picture content would preferentially recruit the amygdala to enhance picture processing in posterior visual-stream areas. The univariate analysis supported this notion, by showing increased activation relative to baseline in the left amygdala for emotion, and in the left fusiform region in the overlap of emotion, attention, and EEM. However, the univariate analysis can only implicate each region's activity in its own right; it cannot speak to their interaction (Horwitz et al. 2000). The attention-mediation hypothesis predicts not only coactivation, but also that the two regions should covary as a function of the amygdala’s response to emotion. Functional connectivity analysis provides a test of this more specific prediction. For this purpose, we used a second data-analytic approach, seed partial least squares (PLS) (McIntosh et al. 1996; McIntosh and Lobaugh 2004). To demonstrate a functional connection between the amygdala and the fusiform, a connection that is sensitive to the amygdala’s response to emotion, we asked whether there was any task condition in which three seed voxels (in the left dorsal amygdala, the left fusiform, and the difference score between the left amygdala’s response to emotional and neutral pictures) were functionally connected to a common latent variable (LV). This analysis yielded two significant LVs, which accounted for 38% (P < 0.001) and 16% (P < 0.01) of the cross-block covariance, respectively. Each LV reflects a distributed pattern of brain activity that is functionally connected to the seeds. The correlations between activity in the seed regions and the network’s activity in the four relevant experimental conditions (emotional and neutral pictures under high and low attention) are plotted in Figure 6, with larger correlations reflecting stronger functional connections.
The correlation between activation in the three seed voxels and the LV was reliable and had the same sign for the first LV only when participants viewed emotional pictures under low-attention conditions, and for the second LV only when they viewed emotional pictures under high-attention conditions. Interestingly, the first brain LV included the right IPS region, which was activated for emotion and attention in the univariate analysis, as well as left anterior temporal cortex, a region that could mediate the connection of the amygdala and the IPS (Amaral and Price 1984; Webster et al. 1994; Sereno et al. 2001; Amaral et al. 2003; Price 2003). Notably, the right amygdala was also functionally connected to this LV. The second brain LV did not include significant parietal or right amygdala activations. To summarize, the left amygdala and the left fusiform gyrus form a functional network for emotional picture processing that is differentially connected to other brain regions depending on the attention requirements of the task. Under low attention, this network also includes the right amygdala and IPS. Thus, the multivariate results support the stronger prediction of the mediation hypothesis, that activity in the fusiform region, detected in the univariate analysis of emotion, attention, and EEM, would covary with activity in the amygdala as a function of its response to emotion.
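Two ingredients of a seed PLS analysis of this kind can be sketched in a few lines. The sketch assumes the usual formulation, in which seed values are correlated with every voxel across participants within each condition, the resulting correlation maps are stacked and decomposed by singular value decomposition (SVD), and each LV's share of the cross-block covariance is its squared singular value divided by the sum of all squared singular values. The SVD step itself is omitted, and all names and numbers are hypothetical rather than taken from the study.

```python
import math


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def seed_voxel_correlations(seed_values, voxel_values):
    """Correlate one seed with every voxel across participants (one condition).

    seed_values: per-participant seed values.
    voxel_values: list of voxels, each a list of per-participant values.
    """
    return [pearson_r(seed_values, voxel) for voxel in voxel_values]


def percent_covariance(singular_values):
    """Share of cross-block covariance per LV, in percent: s_i^2 / sum_j s_j^2."""
    total = sum(s ** 2 for s in singular_values)
    return [100.0 * s ** 2 / total for s in singular_values]


# Toy data: four participants, one seed, two voxels (illustration only).
seed = [1.0, 2.0, 3.0, 4.0]
voxels = [[2.0, 4.0, 6.0, 8.0], [4.0, 3.0, 2.0, 1.0]]
print(seed_voxel_correlations(seed, voxels))

# Toy singular values, not those of the reported analysis.
print(percent_covariance([3.0, 1.0]))
```

In the toy data the first voxel covaries perfectly with the seed and the second varies inversely with it, which is the kind of condition-specific seed-brain correlation plotted in Figure 6.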

Figure 6.

Pearson R correlations of brain scores and seed values as a function of task condition in the first (top) and second (bottom) LV in the PLS analysis. Error bars represent 95% confidence intervals based on a permutation test.

Discussion

We tested a central prediction of the attention mediation hypothesis of EEM, namely, that regions activated by enhanced attention allocation to emotional pictures relative to neutral ones would also support immediate EEM. That participants paid more attention to emotional pictures than neutral ones was evidenced, firstly, by the larger behavioral EEM under low attention, and secondly, by the stronger signal, under low attention, for emotional than for neutral pictures, in regions which were functionally identified as associated with content-dependent attention to pictures. The spatial overlap of emotion under low attention and attention for neutral pictures defined regions associated with emotionally enhanced attention. The prediction of the attention mediation hypothesis was supported, in that activation in a region within the fusiform gyrus, which was associated with emotionally enhanced attention, was correlated with subsequent EEM.

Activations in top-down sources of attention—the IPS and frontal regions—and in sites of attention influence in the ventral visual object processing stream (Posner and Driver 1992; Buchel and Friston 1997; Kastner and Ungerleider 2000; Corbetta and Shulman 2002) were enhanced by content-independent attention. The content-dependent attention contrast identified more extensive activations in ventral processing sites. Emotional picture content, even when these pictures were viewed under low attention, activated a subset of these regions: the right IPS and bilateral ventral visual stream regions, an overlap pattern that was also identified by Keil et al. (2002), using source localization of ERP. The spatial overlap in the effect of emotion and attention on regions responsive to the processing of scenes is in line with previous findings with positron emission tomography (PET) (Lane et al. 1999) and ERP (measures of electrocortical responses) (Keil et al. 2005; Schupp et al. 2007), and is similar to the pattern of emotion-attention spatial overlap demonstrated for emotional facial expressions (Vuilleumier et al. 2001a, 2004; Anderson et al. 2003).

Our key finding, in accordance with the attention mediation hypothesis of EEM, was that activation in the fusiform gyrus, which was associated with enhanced picture processing when the content of the pictures was emotional, also was associated with the memory advantage that these pictures had over neutral ones in an immediate subsequent memory test. The multivariate analysis showed that this region and the amygdala were functionally connected to the same LV. This functional connection was significant under both high- and low-attention conditions, but explained a larger proportion of the variance under low attention. Importantly, under both attention conditions, this functional connection was stronger when the differential response of the amygdala to emotion was stronger. These findings further support the notion that the amygdala’s response to emotion recruits sensory-processing regions to enhance attention toward, and encoding of, emotional items, in line with the amygdala’s documented direct re-entrant connections to the extrastriate cortex (Amaral and Price 1984; Amaral et al. 2003; for a similar pattern in a study of the effects of pain and attention, see Bingel et al. 2007). Greater encoding of perceptual information may increase mnemonic resolution during the subsequent recognition memory test.

Only a handful of studies have examined how emotion interacts with sources of attention, because previous studies typically used paradigms in which attention was required in every trial (e.g., when the task manipulated the focus of spatial attention). By manipulating the amount of attention required in each experimental block, we found activation associated with emotionally enhanced attention in the right IPS, in accordance with findings from ERP studies. The multivariate analysis showed that the right IPS, the right and left amygdala, the anterior temporal cortex, and ventral visual stream regions were functionally connected to the same LV under low- but not high-attention conditions, suggesting that these regions form a functional network when alternative, volitional attentional resources are diminished. Intriguingly, while activation in the amygdala and the fusiform were correlated with EEM, activation in the IPS was not, even with a liberal statistical threshold. Future research will be required to shed more light on the precise role that the IPS played when participants viewed emotional pictures under low-attention conditions.

EEM was also correlated with activation in regions outside of the overlap of emotion and attention. First and foremost, replicating previous research (for review, see LaBar and Cabeza 2006), both emotion and EEM were correlated with amygdala activation. The amygdala response to emotion and EEM dissociated spatially in that dorsal amygdala correlated with emotion, while ventral amygdala correlated with EEM. These locations correspond to the central nucleus and the basolateral nucleus of the amygdala, respectively. The dorsal amygdala activation for emotion was obtained in a contrast that included only low-attention trials, and therefore, reflected not only differences in experiential arousal, but also the neural correlates of the enhanced attention to emotional pictures under limited volitional attention. The possible role of the dorsal amygdala in attention focusing in this study, and its functional connection with the fusiform gyrus, are in line with animal studies that show that the central nucleus is involved in emotion-dependent attention focusing (Gallagher and Schoenbaum 1999; McGaugh 2004) and with the anatomical reciprocal connections between the central nucleus and extrastriate regions (Pitkanen 2000). The dorsal amygdala may thus be involved in attentionally mediated EEM, a possibility compatible with recent findings of Mackiewicz et al. (2006), who found that dorsal amygdala activation during the anticipation of aversive pictures correlated with immediate EEM (if we assume that anticipation involved allocation of attention resources to the upcoming stimuli).

While the central nucleus has primarily attentional effects, but is not associated with direct modulation of memory traces in the medial temporal lobe, the unique involvement of the basolateral nucleus in this function has been extensively documented (McGaugh 2004). Thus, it is possible that activation in the ventral amygdala in the present study reflected its direct modulatory effects on memory traces, according to the modulation model, and in line with Mackiewicz et al.’s finding that this region only correlated with delayed EEM. The time frame within which amygdalar modulation of memory traces should be expected is critical here, but, unfortunately, the parallels between human and animal “long-term memory” effects are yet to be resolved. While animal models of the modulation account required a prolonged retention interval before the effects of arousal on the amygdala were manifested in improved memory (Bianchin et al. 1999; Izquierdo et al. 2002; McGaugh 2004), there are some human data to support a direct correlation of the amygdala with EEM at much shorter delays, such as 1 h (Dolcos et al. 2004; Sharot et al. 2004) or even a few seconds (Strange et al. 2003; Anderson et al. 2006). These time frames, and the differences in methodology among the studies, leave open the question of which model better accounts for the correlation we obtained here between ventral amygdala activation and immediate EEM.

A second area activated for EEM and emotion, but not for attention, was the left temporal pole. A recent review of the literature suggested that this region is involved in storing bound linkages between emotion and perceptual analysis of social stimuli that ‘tell a story’ (Olson et al. 2007). The complex scenes we used, which all depicted people, are prime examples of social emotional stimuli, and this might underlie the involvement of the temporal pole in EEM. The amygdala and the anterior temporal cortex are connected anatomically (Webster et al. 1994) and were connected here functionally. Each region could support the other’s responsiveness to emotion. Because subsequent memory was assessed with a recognition test, and because subsequent recognition memory is associated with parahippocampal (rather than hippocampal) activation (Brown and Aggleton 2001; Paller and Wagner 2002), we expected that the parahippocampal cortex would correlate with subsequent EEM. Such a correlation was found in the right parahippocampal cortex. The fact that the amygdala’s connections to this region are mostly ipsilateral (Amaral and Price 1984), and the finding that this region was activated by attention but not by emotion, suggest that the left amygdala may not be the underlying source for this correlation; however, in interpreting these results, note that a larger sample size may be required to shed light on this pattern of activation.

The behavioral and imaging data attest, as predicted, that both emotional and neutral pictures were processed to a greater degree under high- relative to low-attention conditions. Because we planned to examine memory for pictures studied under both attention conditions, we used display and timing parameters (central presentation for 1 sec) that degraded picture processing to a certain extent, but did not abolish it altogether. Previous studies of emotion and attention (e.g., Vuilleumier et al. 2001a, 2004; Pessoa et al. 2002) had a different aim, and therefore, their “low-attention” condition used shorter presentation durations (peripheral presentation for 200–250 msec) and a peripheral concurrent task; relative to their high-attention condition, these parameters attenuated amygdala activation to emotional faces in the Pessoa et al. (2002) study. The significance of this methodological difference is that in the present study, picture content could be processed even under low-attention conditions. As a result, we did not observe any significant differences in amygdala activation for emotional pictures between the high- and low-attention conditions, although note that the small sample size challenges the interpretation of null effects. Replicating previous studies, we found that the differential activation in the amygdala for emotional relative to neutral pictures, which we interpreted as correlated with emotional arousal, was larger under low- than under high-attention conditions, an effect likely due to participants’ engagement in an effortful cognitive task under high-attention conditions (Critchley et al. 2000; Hariri et al. 2000; Adolphs 2002; Lieberman et al. 2007).

In conclusion, our study supported the specific prediction of the attention mediation hypothesis of immediate EEM. We showed that EEM was associated with activation in the amygdala and extrastriate regions, in particular, the fusiform gyrus, and that the fusiform gyrus was functionally connected to the amygdala and involved in emotion-dependent enhancement of attention to pictures. Emotional recruitment of sensory processing resources has memory consequences that are evident immediately following encoding and could boost the direct influence emotion also exerts on memory consolidation.

Materials and Methods

Participants

Eleven young adults (five females; mean age 24.43, SD = 6.23) participated in the study and were paid at a rate of $25/hour. Four additional participants were excluded, one for excessive motion exceeding the cutoff of 1.5 mm, one because of very low memory performance, and two because of failure to comply with the attention instructions.

Materials

A total of 288 pictures, 210 × 280 pixels in size, were drawn from the International Affective Picture System (IAPS) (Lang et al. 2005) and from the Internet. A total of 144 pictures were judged by the authors (D.T. and L.R.) to be negative and arousing, and the others were emotionally neutral. A separate group of five participants rated the pictures for visual complexity on a 1 to 9 scale, and on valence and emotional arousal using the computerized SAM scale (Bradley and Lang 1994). Emotional and neutral pictures were equivalent on visual complexity, t(288) = 1.62, P > 0.10. The emotional pictures had a more negative valence, t(286) = −40.82, P < 0.001, and were more arousing, t(286) = 43.52, P < 0.001, than the neutral pictures. All pictures depicted people. We used Corel Photo-Paint 8.232 to match pictures on luminance, t(286) = −1.45, P > 0.10.

Scrambled pictures were created from the intact pictures with Adobe Photoshop software (wave type: square; number of Generators: 25; wavelength min: 10, wavelength max: 30; amplitude min: 5; amplitude max: 100; scale horizontal: 10; scale vertical: 100; undefined areas: wrap around). All scrambled pictures participants saw in the study phase were created from unstudied pictures (the lures for the test phase).

The study phase used 96 emotional, 96 neutral, and 96 scrambled pictures. The test phase used all intact pictures, with a ratio of 2:1 between old and new pictures. Picture presentation was completely randomized for each participant. Pictures were presented centrally on a gray background that was used throughout the experiment. We used E-Prime 1.1 (Psychology Software Tools) to present the stimuli and collect the data.

Behavioral procedure

Study phase

Memory encoding was incidental to ensure compliance with the attention instructions. To make it easier to maintain the orienting set, the attention conditions were blocked, and both emotional and neutral pictures were mixed within each block (a mixed blocked/event-related design). Block instructions (the words “attend” or “detect” for the high- and low-attention blocks, respectively) were presented at the bottom of the screen for 14 sec before the block started, and remained on the screen throughout the block. To minimize differences in eye movement between attention conditions, participants fixated their gaze on a green cross-hair, which appeared at the center of the screen for 2 sec before the block began, and remained throughout the block.

The study phase included four runs; each run included six attention blocks that alternated. The attention condition used in the first block of a run alternated as well, so that participants started two runs with a high-attention block and two runs with a low-attention block. Each block included four emotional pictures, four neutral pictures, and four scrambled pictures. Each picture was presented for 1 sec. The inter-trial interval was 3- to 8-sec long, with the constraint that each block was 64-sec long. Each run began with a 20-sec “get ready” signal and ended with a 16-sec fixation period.
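The timing constraint described above can be illustrated with a short sketch. It is not the E-Prime script used in the study. It assumes whole-second intervals, one inter-trial interval after each of the 12 pictures, and a hypothetical helper `draw_itis` that starts every interval at the 3-sec minimum and distributes the remaining seconds at random, so that picture time plus intervals sums to exactly 64 sec.

```python
import random


def draw_itis(n_trials=12, block_sec=64, picture_sec=1,
              iti_min=3, iti_max=8, rng=None):
    """Draw whole-second ITIs in [iti_min, iti_max] that fill the block exactly.

    Assumption (not stated in the paper): one ITI follows every picture.
    """
    if rng is None:
        rng = random.Random()
    total_iti = block_sec - n_trials * picture_sec
    if not n_trials * iti_min <= total_iti <= n_trials * iti_max:
        raise ValueError("block length cannot be met with these ITI bounds")
    # Start every interval at the minimum, then hand out the spare seconds.
    itis = [iti_min] * n_trials
    spare = total_iti - n_trials * iti_min
    while spare > 0:
        i = rng.randrange(n_trials)
        if itis[i] < iti_max:
            itis[i] += 1
            spare -= 1
    return itis


def make_block(rng=None):
    """Return a shuffled block of (picture_type, iti_sec) pairs."""
    if rng is None:
        rng = random.Random()
    pictures = ["emotional"] * 4 + ["neutral"] * 4 + ["scrambled"] * 4
    rng.shuffle(pictures)
    return list(zip(pictures, draw_itis(n_trials=len(pictures), rng=rng)))


if __name__ == "__main__":
    for picture_type, iti in make_block(random.Random(1)):
        print(picture_type, iti)
```

With the default values, the 12 intervals must sum to 52 sec, so most intervals fall near the lower end of the 3- to 8-sec range.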

Before the experiment began, participants practiced performing the low- and the high-attention tasks. The experimenter viewed their eye movement through a mirror mounted on top of the monitor and ensured that participants were fixating on the cross-hair in both attention conditions.

Test phase

To avoid floor effects in memory for low-attended pictures, we tested memory with a recognition rather than a recall test. During the study-test interval, participants received instructions about the test phase and were given an additional structural scan; all participants but the first performed the test in the scanner (these data will be reported separately). The test was divided into three runs, with 96 pictures in each run. Pictures were allocated randomly to each run for each participant, with the constraint that each run included an equal number of emotional and neutral pictures from each one of the attention conditions in each one of the study runs, and an equal number of unstudied emotional and neutral pictures. Each picture was presented for 4 sec. The interval between picture presentations was 0, 2, 4, or 8 sec, with the frequency of each of these intervals based on a random-selection probability distribution. Participants were required to fixate on the bright green cross during the interval between pictures, but were allowed to move their eyes freely during picture presentation to improve their memory performance. They were encouraged to emphasize accuracy, but to respond as fast as possible once they knew what the answer was. Participants responded to each picture by choosing one of four response options to indicate that they were sure the picture was new, thought the picture was new but were unsure, were sure the picture was old, or thought the picture was old but were unsure. Participants responded by pressing one of four keys with their dominant hand.

Picture rating

Following the scan, participants saw all of the pictures again and rated them for emotional arousal using the computerized SAM scale (Bradley and Lang 1994). These ratings were used to bin pictures as low in emotionality (ratings 1–5) or high in emotionality (ratings 6–9). There was a significant difference between picture arousal ratings for those in the two bins, emotional (M = 7.45, SD = 0.40) and neutral (M = 3.50, SD = 0.78), t(10) = 21.42, P < 0.001. Participants’ own ratings were used in all analyses.
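The binning rule can be written out as a minimal sketch. The function name `bin_by_arousal` and the picture identifiers are hypothetical; only the cutoff (ratings 1–5 versus 6–9) comes from the text.

```python
def bin_by_arousal(ratings, cutoff=5):
    """Map each picture to 'emotional' (rating > cutoff) or 'neutral'.

    `ratings` is a dict of picture id -> one participant's SAM arousal
    rating on the 1-9 scale.
    """
    bins = {}
    for picture, rating in ratings.items():
        if not 1 <= rating <= 9:
            raise ValueError(f"rating out of range for {picture}: {rating}")
        bins[picture] = "emotional" if rating > cutoff else "neutral"
    return bins


if __name__ == "__main__":
    # Hypothetical ratings from a single participant.
    print(bin_by_arousal({"pic_001": 8, "pic_002": 5, "pic_003": 2}))
```

Because each participant's own ratings are used, the same picture can fall into different bins for different participants.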

Gender differences

Following reports of gender differences in emotional memory that were even stronger than effects of sex (Cahill et al. 2004a), participants were mailed the Bem Sex Role Inventory (Bem 1981) approximately 6 mo after completing the study.

Image acquisition

Participants were scanned with a Signa 3-tesla (T) magnet with a standard head coil (CV/i hardware, LX8.3 software; General Electric Medical Systems). A standard high-resolution, 3D T1-weighted fast spoiled gradient echo image (TR = 7.0 msec; TE = 3.1 msec; flip angle = 15°; acquisition matrix = 256 × 192; FOV = 22 cm; 124 axial slices; slice thickness = 1.4 mm) was first obtained to register functional maps against brain anatomy. Functional imaging was performed to measure brain activation by means of the blood oxygenation level-dependent (BOLD) effect (Ogawa et al. 1990) with optimal contrast. Functional scans were acquired with a single-shot T2*-weighted pulse sequence with spiral in-out readout (TR = 2000 msec; TE = 30 msec; flip angle = 70°; effective acquisition matrix = 64 × 64; FOV = 20 cm; 26 slices; slice thickness = 5.0 mm), including off-line gridding and reconstruction of the raw data (Glover and Lai 1998). Images were projected to a projector mirror mount using a rear projection screen. Participants responded by pressing buttons on a Rowland USB Response Box (The Rowland Institute at Harvard) with their dominant hand.

Imaging data analysis

Univariate analysis

Image processing and analysis were performed using the Analysis of Functional Neuroimages (AFNI, version 2.25) software package (Cox 1996; Cox and Hyde 1997). The initial 10 images, in which transient signal changes occur as brain magnetization reaches a steady state, were obtained prior to task presentation and excluded from all analyses. Time series data were spatially coregistered to correct for head motion using a 3D Fourier transform interpolation, and data exceeding a 1.5-mm range of head motion were excluded. The four scanning runs were then concatenated and detrended to a third-order polynomial. Based on the individual fMRI signal data and the input stimulus function (time series), AFNI estimates the impulse response function by performing a multiple linear regression analysis. To improve power, two such analyses were conducted. The encoding model included the encoding condition regardless of subsequent memory performance. It thus included six regressors, corresponding to attention (high, low) × type (scrambled, neutral, emotional pictures). Individual participants’ own ratings of emotional intensity were used to classify the pictures as emotional (all pictures rated >5 on the 1–9 scale) or neutral (all other pictures). The memory model classified encoding activation for each studied picture according to participants’ memory performance at test, regardless of the attention encoding condition. The model thus included four regressors, corresponding to memory (hits, misses) × type (neutral, emotional pictures). To increase power in the analysis of subsequent memory, we collapsed across confidence levels and attention levels at encoding. In addition to the experimental regressors, each model also regressed out activation due to the interblock interval and instruction period. We examined the encoding model with and without regressing out encoding latency, but included latency as a regressor in the memory model (removing it did not change the results).
The estimated impulse response was then convolved with the input stimulus time series to provide the impulse-response function associated with the experimental conditions. The estimated response from the period of 4–8 sec after picture onset (the peak of the hemodynamic response) was then extracted, transformed into Talairach coordinates (Talairach and Tournoux 1998), and smoothed with a Gaussian filter of 6-mm full-width-at-half-maximum (FWHM) to increase the signal-to-noise ratio in order to permit subsequent group analysis.
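The assignment of study trials to the regressors of the two models can be sketched as follows. This illustrates the classification logic only, not the AFNI analysis itself. The trial dictionary keys and response labels are hypothetical, and the sketch assumes that a hit is any "old" response to a studied picture, regardless of confidence.

```python
from collections import Counter

ATTENTION = ("high", "low")
TYPES = ("scrambled", "neutral", "emotional")
RESPONSES = ("sure_old", "unsure_old", "sure_new", "unsure_new")


def encoding_regressor(trial):
    """One of six labels: attention (high, low) x type (three levels)."""
    if trial["attention"] not in ATTENTION or trial["type"] not in TYPES:
        raise ValueError(f"unexpected trial: {trial}")
    return f"{trial['attention']}_{trial['type']}"


def memory_regressor(trial):
    """One of four labels: memory (hit, miss) x type (neutral, emotional).

    Collapses across confidence and attention; scrambled pictures were not
    tested, so they get no memory regressor (returns None).
    """
    if trial["type"] == "scrambled":
        return None
    if trial["response"] not in RESPONSES:
        raise ValueError(f"unexpected response: {trial['response']}")
    outcome = "hit" if trial["response"].endswith("_old") else "miss"
    return f"{outcome}_{trial['type']}"


if __name__ == "__main__":
    # Hypothetical study trials for one participant.
    trials = [
        {"attention": "high", "type": "emotional", "response": "sure_old"},
        {"attention": "low", "type": "emotional", "response": "unsure_new"},
        {"attention": "low", "type": "neutral", "response": "unsure_old"},
        {"attention": "high", "type": "scrambled", "response": None},
    ]
    print(Counter(encoding_regressor(t) for t in trials))
    print(Counter(memory_regressor(t) for t in trials if memory_regressor(t)))
```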

Group analysis consisted of two voxel-wise, mixed-model, repeated-measure ANOVAs (one for the encoding model and one for the memory model) with participants as a random factor and experimental condition as a fixed factor. For the encoding model, general linear tests of simple effects defined three contrasts: “attention” (high attention > low attention, separately for neutral pictures and for scrambled pictures, P < 0.005) and emotion (emotional > neutral pictures, under low attention). For the memory model, the interaction between emotion and memory defined regions that were potentially associated with EEM (P < 0.005). We used the interaction mask to search for voxels in which there were significant differences between the emotional and the neutral Dms (difference due to memory, defined as hits > misses); these contrasts were computed for each participant and tested with a third repeated-measures ANOVA (P < 0.05).

The crux of our data analysis depended on a series of conjunctions, which we take here to mean spatial overlap between separate contrasts. Our emotion and attention contrasts were not independent of each other (see Experimental Contrasts section above), but both were independent of the EEM contrast. According to Friston et al. (2005), a conjunction analysis of high-thresholded contrasts is too conservative. However, Nichols et al. (2005) cautioned about interpreting results from conjunction analysis of contrasts when each contrast was not significant on its own. In order to permit interpretation of both conjunctions and disjunctions, it was important to use only statistically significant effects in the conjunction, but with a significance threshold that is not exceedingly conservative. A deliberately lenient threshold of P < 0.005 and a minimum cluster size of 180 mm³ (four original voxels) were used throughout for all contrasts reported here, apart from those where a mask reduced the search volume substantially (EEM contrast above); there, we used P < 0.05 and a cluster threshold of 90 mm³. The coordinates of clusters were determined by the location corresponding to the peak intensity. For the composite maps shown here, the statistical maps averaged across subjects were superimposed onto a structural image averaging across participants. The cerebellum was not included in the functional maps. Finally, the amygdala ROI was defined per participant with AFNI’s Talairach atlas “drawdataset” plug-in in order to extract its hemodynamic response.
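A conjunction in the sense used here, spatial overlap between contrasts that are each significant on their own, can be sketched as below. This is not the AFNI procedure. It assumes that each contrast is given as a mapping from voxel coordinates to P values, that clusters are defined by face adjacency, and that the cluster-size threshold is applied to each contrast before the overlap is taken; all function names are hypothetical.

```python
from collections import deque

# Face-adjacent neighbours in a 3D voxel grid (an assumption of this sketch).
NEIGHBOURS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def clusters(voxels):
    """Split a set of (x, y, z) voxels into connected components."""
    remaining = set(voxels)
    found = []
    while remaining:
        seed = remaining.pop()
        component = {seed}
        queue = deque([seed])
        while queue:
            x, y, z = queue.popleft()
            for dx, dy, dz in NEIGHBOURS:
                neighbour = (x + dx, y + dy, z + dz)
                if neighbour in remaining:
                    remaining.remove(neighbour)
                    component.add(neighbour)
                    queue.append(neighbour)
        found.append(component)
    return found


def significant_voxels(p_map, alpha=0.005, min_voxels=4):
    """Voxels with P < alpha that belong to a cluster of at least min_voxels."""
    supra = {voxel for voxel, p in p_map.items() if p < alpha}
    kept = set()
    for component in clusters(supra):
        if len(component) >= min_voxels:
            kept |= component
    return kept


def conjunction(*p_maps, alpha=0.005, min_voxels=4):
    """Spatial overlap of contrasts that each pass the thresholds on their own."""
    surviving = [significant_voxels(m, alpha, min_voxels) for m in p_maps]
    return set.intersection(*surviving)


if __name__ == "__main__":
    # Two hypothetical contrasts sharing part of one cluster.
    emotion = {(x, 0, 0): 0.001 for x in range(0, 4)}
    attention = {(x, 0, 0): 0.001 for x in range(2, 6)}
    print(sorted(conjunction(emotion, attention)))
```

Thresholding each contrast first follows the caution attributed to Nichols et al. (2005): a voxel enters the overlap only if it is significant in every contrast.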

Multivariate analysis

Partial least squares (PLS) (McIntosh et al. 1996) is a multivariate analysis technique that identifies assemblies of brain regions that covary together in relation to a given input. Regions that vary their activity together across participants are thought to belong to the same interactive network. Regions that vary their activity independently of each other across participants may not be part of the same network, even if they are activated at the same time (Horwitz et al. 2000). Seed PLS examines how the inter-regional covariation relates both to the experimental conditions and to activity in seed (reference) voxels (McIntosh and Lobaugh 2004). On the basis of the univariate results, we wished to investigate the relationship between the peak-activated voxel for emotion in the left amygdala, and the peak-activated voxel in the conjunction of emotion, content-dependent attention and EEM in the left fusiform gyrus. In order to constrain our analysis to the covariance of interest (those networks that were more strongly connected as a function of the amygdala’s response to emotion), we included a third seed, namely, the difference in activation in the amygdala seed for emotional > neutral pictures. This difference score is a measure of the responsiveness of the amygdala to emotion. Seed PLS identifies a new set of latent variables (LVs), analogous to contrasts, which reveals the nature of the brain-seed covariance and the way this covariance varies across task conditions. Each LV is associated with a singular image; the numeric values (“salience”) within the image are weighted linear combinations of voxels that covary with the LV and can be positive or negative. This means that negative correlations could reflect a positive functional relationship and vice versa, but same-signed correlations across seeds or brain regions reflect a same-signed functional relationship.
Statistical significance of each LV as a whole was assessed with 500 permutations (Edgington 1980; McIntosh et al. 1996; McIntosh and Lobaugh 2004), and the stability of each brain voxel’s contribution to the LV was determined with 100 bootstrap resamples (Efron and Tibshirani 1993). Voxels in the singular images were considered reliable if they had a bootstrap ratio of salience to SE corresponding to a 95% confidence interval (Efron and Tibshirani 1993; McIntosh and Gonzalez-Lima 1998; McIntosh 1999).
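The two resampling steps can be illustrated with a deliberately simplified sketch. It is not the PLS software used in the study: full seed PLS applies a singular value decomposition to the seed-by-voxel correlation matrix, whereas this sketch substitutes a single seed-voxel correlation across participants for the salience. The function names are hypothetical, and reading a ratio of about 2 as a 95% interval rests on a normal approximation assumed here.

```python
import math
import random


def pearson(x, y):
    """Pearson correlation; returns None when either variable has no variance."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return None
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    return sxy / math.sqrt(sxx * syy)


def permutation_p(seed, voxel, n_perm=500, rng=None):
    """Two-sided permutation P value: shuffle seed values across participants."""
    if rng is None:
        rng = random.Random()
    observed = pearson(seed, voxel)
    if observed is None:
        raise ValueError("seed or voxel values have zero variance")
    shuffled = list(seed)
    extreme = 0
    for _ in range(n_perm):
        rng.shuffle(shuffled)
        if abs(pearson(shuffled, voxel)) >= abs(observed):
            extreme += 1
    return (extreme + 1) / (n_perm + 1)


def bootstrap_ratio(seed, voxel, n_boot=100, rng=None):
    """Estimate divided by its bootstrap SE (participants resampled with replacement)."""
    if rng is None:
        rng = random.Random()
    n = len(seed)
    estimate = pearson(seed, voxel)
    if estimate is None:
        raise ValueError("seed or voxel values have zero variance")
    boots = []
    while len(boots) < n_boot:
        idx = [rng.randrange(n) for _ in range(n)]
        r = pearson([seed[i] for i in idx], [voxel[i] for i in idx])
        if r is not None:  # skip degenerate resamples
            boots.append(r)
    mean = sum(boots) / n_boot
    se = math.sqrt(sum((b - mean) ** 2 for b in boots) / (n_boot - 1))
    return estimate / se


if __name__ == "__main__":
    # Hypothetical values for 11 participants.
    seed = [0.2, 0.5, 0.1, 0.9, 0.4, 0.7, 0.3, 0.8, 0.6, 0.0, 1.0]
    voxel = [0.3, 0.4, 0.2, 1.0, 0.5, 0.6, 0.2, 0.9, 0.7, 0.1, 0.8]
    rng = random.Random(0)
    print(permutation_p(seed, voxel, rng=rng), bootstrap_ratio(seed, voxel, rng=rng))
```

The permutation test asks whether the seed-voxel relationship as a whole exceeds chance, while the bootstrap ratio asks how stable the estimate is across resampled groups of participants; the two answer different questions, which is why both steps are used.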

Acknowledgments

We thank M. Ziegler for her assistance in programming and administering the Bem questionnaire, A.R. McIntosh for helpful discussions, F. Tam for assistance in scanning, and B.A. Strange for comments on a draft of this manuscript. This study has been funded by NSERC grant CFC205055 fund 454119 to M.M. and the American Psychological Foundation’s Graduate Research Scholarship in Psychology grant to D.T.

Footnotes

Article is online at http://www.learnmem.org/cgi/doi/10.1101/lm.722908.

References

- Adolphs R. Neural systems for recognizing emotion. Curr. Opin. Neurobiol. 2002;12:169–177. doi: 10.1016/s0959-4388(02)00301-x.

- Adolphs R., Tranel D., Buchanan T.W. Amygdala damage impairs emotional memory for gist but not details of complex stimuli. Nat. Neurosci. 2005;8:512–518. doi: 10.1038/nn1413.

- Algom D., Chaiut E., Lev S. A rational look at the emotional stroop phenomenon: A generic slowdown, not a stroop effect. J. Exp. Psychol. Gen. 2004;133:323–338. doi: 10.1037/0096-3445.133.3.323.

- Amaral D.G., Price J.L. Amygdalo-cortical projections in the monkey (Macaca fascicularis). J. Comp. Neurol. 1984;230:465–496. doi: 10.1002/cne.902300402.

- Amaral D.G., Behniea H., Kelly J.L. Topographic organization of projections from the amygdala to the visual cortex in the macaque monkey. Neuroscience. 2003;118:1099–1120. doi: 10.1016/s0306-4522(02)01001-1.

- Anderson A.K. Affective influences on the attentional dynamics supporting awareness. J. Exp. Psychol. Gen. 2005;134:258–281. doi: 10.1037/0096-3445.134.2.258.

- Anderson A.K., Phelps E.A. Lesions of the human amygdala impair enhanced perception of emotionally salient events. Nature. 2001;411:305–309. doi: 10.1038/35077083.

- Anderson A.K., Christoff K., Panitz D., De Rosa E., Gabrieli J.D. Neural correlates of the automatic processing of threat facial signals. J. Neurosci. 2003;23:5627–5633. doi: 10.1523/JNEUROSCI.23-13-05627.2003.

- Anderson A.K., Wais P.E., Gabrieli J.D. Emotion enhances remembrance of neutral events past. Proc. Natl. Acad. Sci. 2006;103:1599–1604. doi: 10.1073/pnas.0506308103.

- Bem S.L. Bem sex-role inventory. Consulting Psychologists Press, Inc.; Redwood City, CA: 1981.

- Bianchin M., Mello e Souza T., Medina J.H., Izquierdo I. The amygdala is involved in the modulation of long-term memory, but not in working or short-term memory. Neurobiol. Learn. Mem. 1999;71:127–131. doi: 10.1006/nlme.1998.3881.

- Bingel U., Rose M., Glascher J., Buchel C. fMRI reveals how pain modulates visual object processing in the ventral visual stream. Neuron. 2007;55:157–167. doi: 10.1016/j.neuron.2007.05.032.

- Bradley M.M., Lang P.J. Measuring emotion: The self-assessment manikin and the semantic differential. J. Behav. Ther. Exp. Psychiatry. 1994;25:49–59. doi: 10.1016/0005-7916(94)90063-9.

- Bradley M.M., Sabatinelli D., Lang P.J., Fitzsimmons J.R., King W., Desai P. Activation of the visual cortex in motivated attention. Behav. Neurosci. 2003;117:369–380. doi: 10.1037/0735-7044.117.2.369.

- Brown M.W., Aggleton J.P. Recognition memory: What are the roles of the perirhinal cortex and hippocampus? Nat. Rev. Neurosci. 2001;2:51–61. doi: 10.1038/35049064.

- Buchel C., Friston K.J. Modulation of connectivity in visual pathways by attention: Cortical interactions evaluated with structural equation modelling and fMRI. Cereb. Cortex. 1997;7:768–778. doi: 10.1093/cercor/7.8.768.

- Cahill L., Alkire M.T. Epinephrine enhancement of human memory consolidation: Interaction with arousal at encoding. Neurobiol. Learn. Mem. 2003;79:194–198. doi: 10.1016/s1074-7427(02)00036-9.

- Cahill L., McGaugh J.L. Mechanisms of emotional arousal and lasting declarative memory. Trends Neurosci. 1998;21:294–299. doi: 10.1016/s0166-2236(97)01214-9.

- Cahill L., Babinsky R., Markowitsch H.J., McGaugh J.L. The amygdala and emotional memory. Nature. 1995;377:295–296. doi: 10.1038/377295a0.

- Cahill L., Haier R.J., White N.S., Fallon J., Kilpatrick L., Lawrence C., Potkin S.G., Alkire M.T. Sex-related difference in amygdala activity during emotionally influenced memory storage. Neurobiol. Learn. Mem. 2001;75:1–9. doi: 10.1006/nlme.2000.3999.

- Cahill L., Gorski L., Le K. Enhanced human memory consolidation with post-learning stress: Interaction with the degree of arousal at encoding. Learn. Mem. 2003;10:270–274. doi: 10.1101/lm.62403.

- Cahill L., Gorski L., Belcher A., Huynh Q. The influence of sex versus sex-related traits on long-term memory for gist and detail from an emotional story. Conscious. Cogn. 2004a;13:391–400. doi: 10.1016/j.concog.2003.11.003.

- Cahill L., Uncapher M., Kilpatrick L., Alkire M., Turner J. Sex-related hemispheric lateralization of amygdala function in emotionally influenced memory: An fMRI investigation. Learn. Mem. 2004b;11:261–266. doi: 10.1101/lm.70504.

- Canli T., Zhao Z., Brewer J., Gabrieli J.D., Cahill L. Event-related activation in the human amygdala associates with later memory for individual emotional experience. J. Neurosci. 2000;20:RC99. doi: 10.1523/JNEUROSCI.20-19-j0004.2000. http://www.jneurosci.org/cgi/content/full/20004570v1.

- Canli T., Desmond J.E., Zhao Z., Gabrieli J.D. Sex differences in the neural basis of emotional memories. Proc. Natl. Acad. Sci. 2002;99:10789–10794. doi: 10.1073/pnas.162356599.

- Corbetta M., Shulman G.L. Control of goal-directed and stimulus-driven attention in the brain. Nat. Rev. Neurosci. 2002;3:201–215. doi: 10.1038/nrn755.

- Cox R.W. AFNI: Software for analysis and visualization of functional magnetic resonance neuroimages. Comput. Biomed. Res. 1996;29:162–173. doi: 10.1006/cbmr.1996.0014.

- Cox R.W., Hyde J.S. Software tools for analysis and visualization of fMRI data. NMR Biomed. 1997;10:171–178. doi: 10.1002/(sici)1099-1492(199706/08)10:4/5<171::aid-nbm453>3.0.co;2-l.

- Craik F.I., Govoni R., Naveh-Benjamin M., Anderson N.D. The effects of divided attention on encoding and retrieval processes in human memory. J. Exp. Psychol. Gen. 1996;125:159–180. doi: 10.1037//0096-3445.125.2.159.

- Critchley H., Daly E., Phillips M., Brammer M., Bullmore E., Williams S., Van Amelsvoort T., Robertson D., David A., Murphy D. Explicit and implicit neural mechanisms for processing of social information from facial expressions: A functional magnetic resonance imaging study. Hum. Brain Mapp. 2000;9:93–105. doi: 10.1002/(SICI)1097-0193(200002)9:2<93::AID-HBM4>3.0.CO;2-Z.

- Desimone R., Duncan J. Neural mechanisms of selective visual attention. Annu. Rev. Neurosci. 1995;18:193–222. doi: 10.1146/annurev.ne.18.030195.001205.

- Dolan R.J. Emotion, cognition, and behavior. Science. 2002;298:1191–1194. doi: 10.1126/science.1076358.

- Dolcos F., Labar K.S., Cabeza R. Interaction between the amygdala and the medial temporal lobe memory system predicts better memory for emotional events. Neuron. 2004;42:855–863. doi: 10.1016/s0896-6273(04)00289-2.

- Edgington E.S. Randomization tests. Marcel Dekker; New York: 1980.

- Efron B., Tibshirani R.J. An introduction to the bootstrap. Chapman & Hall, Inc.; New York: 1993.

- Epstein R., Kanwisher N. A cortical representation of the local visual environment. Nature. 1998;392:598–601. doi: 10.1038/33402.

- Friston K.J., Penny W.D., Glaser D.E. Conjunction revisited. Neuroimage. 2005;25:661–667. doi: 10.1016/j.neuroimage.2005.01.013.

- Gallagher M., Schoenbaum G. Functions of the amygdala and related forebrain areas in attention and cognition. Ann. N. Y. Acad. Sci. 1999;877:397–411. doi: 10.1111/j.1749-6632.1999.tb09279.x.

- Glover G.H., Lai S. Self-navigated spiral fMRI: Interleaved versus single-shot. Magn. Reson. Med. 1998;39:361–368. doi: 10.1002/mrm.1910390305.

- Hadley C.B., Mackay D.G. Does emotion help or hinder immediate memory? Arousal versus priority-binding mechanisms. J. Exp. Psychol. Learn. Mem. Cogn. 2006;32:79–88. doi: 10.1037/0278-7393.32.1.79.

- Hamann S. Cognitive and neural mechanisms of emotional memory. Trends Cogn. Sci. 2001;5:394–400. doi: 10.1016/s1364-6613(00)01707-1.

- Hariri A.R., Bookheimer S.Y., Mazziotta J.C. Modulating emotional responses: Effects of a neocortical network on the limbic system. Neuroreport. 2000;11:43–48. doi: 10.1097/00001756-200001170-00009.

- Horwitz B., Friston K.J., Taylor J.G. Neural modeling and functional brain imaging: An overview. Neural Netw. 2000;13:829–846. doi: 10.1016/s0893-6080(00)00062-9.

- Izquierdo L.A., Barros D.M., Vianna M.R., Coitinho A., deDavid e Silva T., Choi H., Moletta B., Medina J.H., Izquierdo I. Molecular pharmacological dissection of short- and long-term memory. Cell. Mol. Neurobiol. 2002;22:269–287. doi: 10.1023/a:1020715800956.

- Kastner S., Ungerleider L.G. Mechanisms of visual attention in the human cortex. Annu. Rev. Neurosci. 2000;23:315–341. doi: 10.1146/annurev.neuro.23.1.315.

- Keil A., Bradley M.M., Hauk O., Rockstroh B., Elbert T., Lang P.J. Large-scale neural correlates of affective picture processing. Psychophysiology. 2002;39:641–649. doi: 10.1017.S0048577202394162.

- Keil A., Moratti S., Sabatinelli D., Bradley M.M., Lang P.J. Additive effects of emotional content and spatial selective attention on electrocortical facilitation. Cereb. Cortex. 2005;15:1187–1197. doi: 10.1093/cercor/bhi001.

- Kensinger E.A., Corkin S. Two routes to emotional memory: Distinct neural processes for valence and arousal. Proc. Natl. Acad. Sci. 2004;101:3310–3315. doi: 10.1073/pnas.0306408101. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kern R.P., Libkuman T.M., Otani H., Holmes K. Emotional stimuli, divided attention, and memory. Emotion. 2005;5:408–417. doi: 10.1037/1528-3542.5.4.408. [DOI] [PubMed] [Google Scholar]

- LaBar K.S., Cabeza R. Cognitive neuroscience of emotional memory. Nat. Rev. Neurosci. 2006;7:54–64. doi: 10.1038/nrn1825. [DOI] [PubMed] [Google Scholar]

- LaBar K.S., Phelps E.A. Arousal-mediated memory consolidation: Role of the medial temporal lobe in humans. Psychol. Sci. 1998;9:490–493. [Google Scholar]

- Lane R.D., Chua P.M., Dolan R.J. Common effects of emotional valence, arousal and attention on neural activation during visual processing of pictures. Neuropsychologia. 1999;37:989–997. doi: 10.1016/s0028-3932(99)00017-2. [DOI] [PubMed] [Google Scholar]

- Lang P.J., Bradley M.M., Fitzsimmons J.R., Cuthbert B.N., Scott J.D., Moulder B., Nangia V. Emotional arousal and activation of the visual cortex: An fMRI analysis. Psychophysiology. 1998;35:199–210. [PubMed] [Google Scholar]

- Lang P.J., Bradley M.M., Cuthbert B.N. International affective picture system (IAPS): Affective ratings of pictures and instruction manual. Technical Report A-6. University of Florida; Gainesville, FL: 2005.

- Lieberman M.D., Eisenberger N.I., Crockett M.J., Tom S.M., Pfeifer J.H., Way B.M. Putting feelings into words: Affect labeling disrupts amygdala activity in response to affective stimuli. Psychol. Sci. 2007;18:421–428. doi: 10.1111/j.1467-9280.2007.01916.x.

- Mackiewicz K.L., Sarinopoulos I., Cleven K.L., Nitschke J.B. The effect of anticipation and the specificity of sex differences for amygdala and hippocampus function in emotional memory. Proc. Natl. Acad. Sci. 2006;103:14200–14205. doi: 10.1073/pnas.0601648103.

- McGaugh J.L. Memory reconsolidation hypothesis revived but restrained: Theoretical comment on Biedenkapp and Rudy (2004). Behav. Neurosci. 2004;118:1140–1142. doi: 10.1037/0735-7044.118.5.1140.

- McIntosh A.R. Mapping cognition to the brain through neural interactions. Memory. 1999;7:523–548. doi: 10.1080/096582199387733.

- McIntosh A.R., Gonzalez-Lima F. Large-scale functional connectivity in associative learning: Interrelations of the rat auditory, visual, and limbic systems. J. Neurophysiol. 1998;80:3148–3162. doi: 10.1152/jn.1998.80.6.3148.

- McIntosh A.R., Lobaugh N.J. Partial least squares analysis of neuroimaging data: Applications and advances. Neuroimage. 2004;23:S250–S263. doi: 10.1016/j.neuroimage.2004.07.020.

- McIntosh A.R., Bookstein F.L., Haxby J.V., Grady C.L. Spatial pattern analysis of functional brain images using partial least squares. Neuroimage. 1996;3:143–157. doi: 10.1006/nimg.1996.0016.

- Morris J.S., Friston K.J., Buchel C., Frith C.D., Young A.W., Calder A.J. A neuromodulatory role for the human amygdala in processing emotional facial expressions. Brain. 1998;121:47–57. doi: 10.1093/brain/121.1.47.

- Morris J.S., Ohman A., Dolan R.J. A subcortical pathway to the right amygdala mediating “unseen” fear. Proc. Natl. Acad. Sci. 1999;96:1680–1685. doi: 10.1073/pnas.96.4.1680.

- Nichols T., Brett M., Andersson J., Wager T., Poline J.B. Valid conjunction inference with the minimum statistic. Neuroimage. 2005;25:653–660. doi: 10.1016/j.neuroimage.2004.12.005.

- Ogawa S., Lee T.M., Nayak A.S., Glynn P. Oxygenation-sensitive contrast in magnetic-resonance image of rodent brain at high magnetic-fields. Magn. Reson. Med. 1990;14:68–78. doi: 10.1002/mrm.1910140108.

- Olson I.R., Plotzker A., Ezzyat Y. The enigmatic temporal pole: A review of findings on social and emotional processing. Brain. 2007;130:1718–1731. doi: 10.1093/brain/awm052.

- Paller K.A., Wagner A.D. Observing the transformation of experience into memory. Trends Cogn. Sci. 2002;6:93–102. doi: 10.1016/s1364-6613(00)01845-3.

- Pessoa L., Kastner S., Ungerleider L.G. Attentional control of the processing of neutral and emotional stimuli. Brain Res. Cogn. Brain Res. 2002;15:31–45. doi: 10.1016/s0926-6410(02)00214-8.

- Phelps E.A., LaBar K.S., Anderson A.K., O'Connor K.J., Fulbright R.K., Spencer D.D. Specifying the contributions of the human amygdala to emotional memory: A case study. Neurocase. 1998;4:527–540.

- Pinsk M.A., Doniger G.M., Kastner S. Push-pull mechanism of selective attention in human extrastriate cortex. J. Neurophysiol. 2004;92:622–629. doi: 10.1152/jn.00974.2003.

- Pitkanen A. Connectivity of the rat amygdaloid complex. In: The amygdala: A functional analysis (ed. J.P. Aggleton), pp. 31–116. Oxford University Press, New York; 2000.

- Posner M.I., Driver J. The neurobiology of selective attention. Curr. Opin. Neurobiol. 1992;2:165–169. doi: 10.1016/0959-4388(92)90006-7.

- Pratto F., John O.P. Automatic vigilance: The attention-grabbing power of negative social information. J. Pers. Soc. Psychol. 1991;61:380–391. doi: 10.1037//0022-3514.61.3.380.

- Price J.L. Comparative aspects of amygdala connectivity. Ann. N. Y. Acad. Sci. 2003;985:50–58. doi: 10.1111/j.1749-6632.2003.tb07070.x.

- Richardson M.P., Strange B.A., Dolan R.J. Encoding of emotional memories depends on amygdala and hippocampus and their interactions. Nat. Neurosci. 2004;7:278–285. doi: 10.1038/nn1190.

- Sabatinelli D., Lang P.J., Keil A., Bradley M.M. Emotional perception: Correlation of functional MRI and event-related potentials. Cereb. Cortex. 2007;17:1085–1091. doi: 10.1093/cercor/bhl017.

- Schimmack U. Attentional interference effects of emotional pictures: Threat, negativity, or arousal? Emotion. 2005;5:55–66. doi: 10.1037/1528-3542.5.1.55.

- Schupp H.T., Stockburger J., Codispoti M., Junghofer M., Weike A.I., Hamm A.O. Selective visual attention to emotion. J. Neurosci. 2007;27:1082–1089. doi: 10.1523/JNEUROSCI.3223-06.2007.

- Sereno M.I., Pitzalis S., Martinez A. Mapping of contralateral space in retinotopic coordinates by a parietal cortical area in humans. Science. 2001;294:1350–1354. doi: 10.1126/science.1063695.

- Sharot T., Phelps E.A. How arousal modulates memory: Disentangling the effects of attention and retention. Cogn. Affect. Behav. Neurosci. 2004;4:294–306. doi: 10.3758/cabn.4.3.294.

- Sharot T., Delgado M.R., Phelps E.A. How emotion enhances the feeling of remembering. Nat. Neurosci. 2004;7:1376–1380. doi: 10.1038/nn1353.

- Strange B.A., Hurlemann R., Dolan R.J. An emotion-induced retrograde amnesia in humans is amygdala- and β-adrenergic-dependent. Proc. Natl. Acad. Sci. 2003;100:13626–13631. doi: 10.1073/pnas.1635116100.

- Talairach J., Tournoux P. Co-planar stereotaxic atlas of the human brain. Thieme; Stuttgart, Germany: 1998.

- Talmi D., Schimmack U., Paterson T., Moscovitch M. The role of attention and relatedness in emotionally enhanced memory. Emotion. 2007;7:89–102. doi: 10.1037/1528-3542.7.1.89.

- Thomas R.C., Hasher L. The influence of emotional valence on age differences in early processing and memory. Psychol. Aging. 2006;21:821–825. doi: 10.1037/0882-7974.21.4.821.

- Vuilleumier P. How brains beware: Neural mechanisms of emotional attention. Trends Cogn. Sci. 2005;9:585–594. doi: 10.1016/j.tics.2005.10.011.

- Vuilleumier P., Driver J. Modulation of visual processing by attention and emotion: Windows on causal interactions between human brain regions. Philos. Trans. R. Soc. Lond. B Biol. Sci. 2007;362:837–855. doi: 10.1098/rstb.2007.2092.

- Vuilleumier P., Sagiv N., Hazeltine E., Poldrack R.A., Swick D., Rafal R.D., Gabrieli J.D. Neural fate of seen and unseen faces in visuospatial neglect: A combined event-related functional MRI and event-related potential study. Proc. Natl. Acad. Sci. 2001a;98:3495–3500. doi: 10.1073/pnas.051436898.

- Vuilleumier P., Armony J.L., Driver J., Dolan R.J. Effects of attention and emotion on face processing in the human brain: An event-related fMRI study. Neuron. 2001b;30:829–841. doi: 10.1016/s0896-6273(01)00328-2.

- Vuilleumier P., Richardson M.P., Armony J.L., Driver J., Dolan R.J. Distant influences of amygdala lesion on visual cortical activation during emotional face processing. Nat. Neurosci. 2004;7:1271–1278. doi: 10.1038/nn1341.

- Webster M.J., Bachevalier J., Ungerleider L.G. Connections of inferior temporal areas TEO and TE with parietal and frontal cortex in macaque monkeys. Cereb. Cortex. 1994;4:470–483. doi: 10.1093/cercor/4.5.470.