Abstract

Several sensory-motor integration regions have been identified in parietal cortex, which appear to be organized around motor effectors (e.g., eyes, hands). We investigated whether a sensory-motor integration area might exist for the human vocal tract. Speech requires extensive sensory-motor integration, as do other abilities such as vocal musical skills. Recent work found that a posterior region, area Spt, has both sensory (auditory) and motor response properties (for both speech and tonal stimuli). Brain activity of skilled pianists was measured with fMRI while they listened to a novel melody and either covertly hummed the melody (vocal tract effectors) or covertly played the melody on a piano (manual effectors). Activity in area Spt was significantly higher for the covert hum versus covert play condition. A region in the anterior IPS (aIPS) showed the reverse pattern, suggesting its involvement in sensory-manual transformations. This finding suggests that area Spt is a sensory-motor integration area for vocal tract gestures for, at least, speech and music.

Keywords: Auditory system, manual, motor system, music, speech, visuomotor

Introduction

There has been a great deal of work over the last decade aimed at understanding the neural circuits supporting sensory-motor interaction. Most of this research has centered on sensory-motor integration within the context of the visual system, and much has been learned. For example, regions in the posterior parietal cortex (PPC) in both human and non-human primates have been identified as critical components of visuomotor integration circuits (Andersen, 1997; Milner & Goodale, 1992). These regions appear to be organized primarily around motor effector systems, such as ocular versus hand/limb action systems (Colby & Goldberg, 1999; Culham & Kanwisher, 2001; Grefkes, Ritzl, Zilles, & Fink, 2004; Kertzman, Schwarz, Zeffiro, & Hallett, 1997) and they may be computing coordinate transformations, mapping between sensory representations and motor commands during movement planning, online updating, and control of movement (Andersen, 1997; Castiello, 2005; Colby & Goldberg, 1999).

Much less research has been conducted on sensory-motor integration within the context of the auditory system. This is likely because most investigators who are interested in sensory-motor interactions have focused on eye or limb movements where visual information plays a dominant role in movement planning and online guidance. However, there is a domain of action where the auditory system plays a critical role, namely in the movements of the vocal tract for speech. For example, the developmental task of learning to articulate the sound patterns of one’s language is cued externally by auditory input primarily (somatosensory feedback plays an additional important role, see Tremblay, Shiller, & Ostry, 2003). As adults, we can, simply by listening, learn to pronounce new words, or pick up regional accents, sometimes unconsciously. Experimental work has also shown that delayed or otherwise altered auditory speech feedback affects speech articulation (Houde & Jordan, 1998; Yates, 1963), and it is well-known that late onset deafness results in articulatory decline (Waldstein, 1989). Finally, neuropsychological research has shown that damage to left hemisphere auditory regions leads to deficits in speech production (Damasio & Damasio, 1980). All of this shows clearly that the auditory system plays an important role in speech production and therefore, there must be a neural mechanism for interfacing auditory and motor representations of speech (Doupe & Kuhl, 1999). See Hickok and Poeppel (2007) for a recent review.

Until recently, little was known about the neural circuit(s) supporting auditory-motor integration, but recent fMRI experiments have made some progress in this respect. The design of these studies relied on the observation from the visual domain that many neurons in PPC sensory-motor integration areas have both sensory and motor response properties (Murata, Gallese, Kaseda, & Sakata, 1996). Thus, an area supporting auditory-motor integration for speech should respond both during perception and production of speech (covert production is used in prior studies, including the present study, to avoid overt auditory feedback; Buchsbaum, Hickok, & Humphries, 2001; Hickok, Buchsbaum, Humphries, & Muftuler, 2003). Using this approach, a fronto-parietal-temporal network of auditory + motor responsive regions was identified in human cortex. Included in this network is a region in the left posterior Sylvian fissure at the parietal-temporal boundary, area Spt (see Footnotes). Area Spt appears to be functionally and anatomically connected with a frontal area known to be important for speech (area 44) (Buchsbaum et al., 2001; Buchsbaum, Olsen, Koch, & Berman, 2005a; Catani, Jones, & Ffytche, 2005; Galaburda & Sanides, 1980; Hickok & Poeppel, 2004), and disruption of the area Spt region via lesion or electrical stimulation results in speech production deficits (Damasio & Damasio, 1980; Anderson et al., 1999). For these reasons, and also because of its anatomical location, area Spt has been hypothesized to be a sensory-motor integration area analogous in function to the sensory-motor integration areas previously identified in the PPC (Andersen, 1997; Colby & Goldberg, 1999).

Additional work showed that area Spt is not speech-specific, but responds equally well to the perception and production (covert humming) of tonal melodies (Hickok et al., 2003). Area Spt also responds during the temporary maintenance of a list of words in short-term memory independently of whether the items to be maintained were presented auditorily or visually (Buchsbaum, Olsen, Koch, Kohn, Kippenhan, & Berman, 2005b). This latter finding parallels claims that visual-motor integration areas have working memory-related properties (Murata et al., 1996), as well as the observation that sensory input from multiple modalities can drive neurons in PPC sensory-motor fields (Cohen, Batista, & Andersen, 2002; Mullette-Gillman, Cohen, & Groh, 2005). In sum, the response properties of area Spt – particularly that it shows both sensory and motor responses – are consistent with the hypothesis that it is a sensory-motor integration region similar to those found in the primate intraparietal sulcus (Buchsbaum et al., 2005b).

While area Spt has been shown to respond quite well in tasks involving a variety of sensory inputs (speech, tones, written words), its response to varying motor output conditions has not yet been investigated. Baumann et al. (2007) report that the planum temporale is active in musicians generating hand/arm movements associated with playing a piece of music (the piece was “played” on a board), suggesting that Spt is active not only during orofacial-associated output tasks but also during manual tasks. However, without an orofacial task to use as a contrast, it is impossible to gauge whether that region may be relatively selective for one or another output modality. If area Spt is organized in a manner analogous to PPC sensory-motor regions, which are organized around motor-effector systems, it should be fairly tightly linked to the vocal tract-related motor system. This, in turn, predicts that manipulation of the output modality should affect activity levels in area Spt even if the sensory stimulation were held constant. We set out to test this prediction in the present experiment.

One population in which auditory inputs can be mapped efficiently to two different motor effector systems is skilled musicians. In at least some musicians, an aurally presented novel melody can be reproduced either by covertly humming the melody (vocal tract effectors) or by covertly playing the melody (manual effectors).

Based on our hypothesis that area Spt is a sensory-motor integration area involving vocal tract-related motor systems, we predicted that area Spt would activate more strongly when musicians (pianists) were asked to covertly hum a heard melody than when they were asked to covertly play a heard melody. As a secondary hypothesis, we postulated that the use of manual effectors in covertly playing the melody would activate PPC, as this region has been implicated in several manual tasks including visually-guided reaching, apraxic assessments, and piano-playing (Burnod et al., 1999; Haslinger et al., 2005; Makuuchi, Kaminaga, & Sugishita, 2005; Meister et al., 2004).

Materials and Methods

Subjects

Seven (2 male) skilled pianists (age range 18–35 years) participated in the experiment after giving written informed consent. The approval of the University of California, Irvine Institutional Review Board (IRB) was received prior to the start of the study. All participants were screened for prerequisite skill level prior to inclusion in the study. Potential subjects were seated in front of a piano keyboard and presented with novel 3-second piano melodies one at a time. After each melody, participants had to accurately reproduce the melody on the keyboard with only one exposure (approximately 50% of screened participants were able to perform the task and were included in the study). All participants who satisfied criteria had several years of piano experience including professional piano training (mean = 13 years, s.d. = 5), four were music majors, and all continued to play regularly. One participant was self-reported as having perfect pitch.

Stimuli and experimental procedure

Auditory stimuli were novel 3-second piano melodies (no chords) composed in C major and recorded using a MIDI synthesizer. The melodies ranged in complexity, with an average of 7.69 notes per 3-second stimulus (s.d. = 1.75) and varying meters (3/4, 4/4, 6/8). A trial began with the presentation of a melody followed by a variable rehearsal period during which subjects were asked to either covertly (subvocally) hum the melody repeatedly, or to covertly (submanually) play the melody on a piano keyboard. The rehearsal period lasted 0, 6, or 12 seconds. Rehearsal conditions were counterbalanced across runs. A visual cue (an asterisk) indicated the end of the covert hum/play phase of the trial and was followed by 12 seconds of rest before the onset of the next trial. There were 16 trials of music processing, eight of each condition (i.e., covert hum, covert play). Auditory stimuli were presented through air conduction. DMDX software (http://www.u.arizona.edu/~kforster/dmdx/dmdx.htm) was used to deliver the stimuli and to control the timing of stimulus presentation. The visual stimulus indicating the end of a rehearsal period was displayed on a magnet-compatible LCD monitor.
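
The trial structure described above can be laid out as a simple timeline. The following is a minimal sketch: the durations come from the text, while the function names and the assumption that each phase follows the previous one with no gap are illustrative and are not details of the original DMDX script.

```python
# Durations (seconds) taken from the text; all names are hypothetical.
MELODY_S = 3               # each melody lasts 3 seconds
REST_S = 12                # rest following the visual cue
REHEARSAL_S = (0, 6, 12)   # possible rehearsal durations


def trial_events(rehearsal_s):
    """Return (label, onset, offset) tuples in seconds from trial start.

    Assumes the phases are back to back with no gaps between them.
    """
    cue = MELODY_S + rehearsal_s  # the asterisk appears when rehearsal ends
    return [
        ("melody", 0, MELODY_S),
        ("rehearsal", MELODY_S, cue),
        ("rest", cue, cue + REST_S),
    ]


def trial_duration(rehearsal_s):
    """Total trial length in seconds: offset of the final (rest) phase."""
    return trial_events(rehearsal_s)[-1][2]


if __name__ == "__main__":
    for r in REHEARSAL_S:
        print(f"rehearsal {r:2d} s -> trial lasts {trial_duration(r)} s")
```

Under these assumptions the three trial types last 15, 21, and 27 seconds, respectively.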

FMRI Procedure

Data were collected at the University of California, Irvine in a 4T scanner (Magnex Scientific Inc.) interfaced with a Marconi Medical Systems’ EDGE console for pulse sequence generation and data acquisition. A high resolution anatomical image was acquired (axial plane) with a 3D SPGR pulse sequence for each subject (FOV = 240 mm, TR = 50 ms, flip angle = 50 deg, voxel size = 0.9375 mm × 0.9375 mm × 2.5 mm). A series of EPI acquisitions was then collected to obtain the magnetic field map (Chiou, Ahn, Muftuler, & Nalcioglu, 2003). Functional MRI data were acquired using single-shot EPI (FOV = 240 mm, TR = 2 s, TE = 31.3 ms, flip angle = 75 deg, voxel size = 2.55 mm × 3.75 mm × 5 mm).

Data Analysis

Motion correction was achieved by aligning all functional volumes to the sixth volume in the series using a 6-parameter rigid-body model in AIR 3.0 (Woods et al., 1998). Field map correction was later performed on all volumes during the postprocessing of the raw data to correct for geometric and intensity distortions in the EPI scans (Chiou et al., 2003). The high resolution structural image was co-registered to the sixth volume of the scan. The timecourse of the BOLD signal was temporally filtered (bandpass between 0.0147 Hz and 0.125 Hz). Using AFNI software (Cox, 1996), ROI-based single subject analysis was performed with separate regressors for music perception, covert hum production, and covert play production. Each predictor variable was convolved with a hemodynamic response function and entered into the analysis. An F-statistic was calculated for each voxel, and statistical parametric maps (SPM) were created for each subject. ROIs were determined using the rehearsal conditions (i.e., covert hum, covert play) versus rest. Area Spt has been shown to activate strongly to covert humming of novel melodies (Hickok et al., 2003). Thus, the area Spt ROI was identified using the covert hum regressor associated with the longest rehearsal period: the top 4 activated contiguous voxels (above a cutoff threshold of p < .001, uncorrected) within the left planum temporale/parietal operculum of each subject were selected for subsequent analysis. All seven subjects had significant activations in this region. Based on previous studies showing that production of manual movements (e.g., gesture copying) activated posterior parietal areas (Makuuchi et al., 2005; Muhlau et al., 2005), we also defined a parietal ROI using the covert play regressor: a region within the left aIPS was activated in all seven subjects. The top 4 activated contiguous voxels in this ROI, in each subject, were selected for further analysis. See Table 1 for ROI coordinates in each subject.

This ROI selection process has the potential to induce task-specific selection bias. That is, we may see differences in amplitude in these two ROIs not because there is any real difference between the covert hum and covert play conditions within these regions, but because we used different selection criteria that biased voxel selection toward randomly higher values in one condition versus the other. To determine whether this bias was present in our analysis, we used the opposite regressors to select voxels in the two ROI regions; that is, we used the covert play regressor to select planum voxels, and the covert hum regressor to select PPC voxels. This procedure selected nearly identical sets of voxels, and did not change the direction of the effects reported below. Thus, selection bias cannot explain our findings.
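
Two of the steps in the Data Analysis description above, temporal bandpass filtering and convolution of each predictor with a hemodynamic response function, can be sketched in a few lines. This is only an illustration under stated assumptions and not the AFNI processing actually used: the filter zeroes frequency bins with a naive discrete Fourier transform, the response function is a gamma-variate shape with assumed parameters (the text does not state which function was used), and all function names are hypothetical. Only the TR and the passband limits come from the text.

```python
import cmath
import math

TR = 2.0                          # seconds, from the fMRI Procedure section
LOW_HZ, HIGH_HZ = 0.0147, 0.125   # passband stated in the text


def bandpass(signal, tr=TR, low=LOW_HZ, high=HIGH_HZ):
    """Zero every DFT bin outside [low, high] Hz, then transform back.

    Naive O(n^2) DFT for clarity; a real pipeline would use an FFT library.
    """
    n = len(signal)
    spectrum = [
        sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(signal))
        for k in range(n)
    ]
    for k in range(n):
        freq = min(k, n - k) / (n * tr)  # frequency magnitude of bin k in Hz
        if not (low <= freq <= high):
            spectrum[k] = 0
    return [
        (sum(c * cmath.exp(2j * math.pi * k * t / n)
             for k, c in enumerate(spectrum)) / n).real
        for t in range(n)
    ]


def gamma_hrf(t, b=8.6, c=0.547):
    """Gamma-variate response t**b * exp(-t/c), scaled to peak at 1.

    The shape and its parameters are assumptions made for illustration.
    """
    if t <= 0:
        return 0.0
    peak = b * c  # time of the maximum, in seconds
    return (t / peak) ** b * math.exp(-(t - peak) / c)


def regressor(onset, duration, n_vols, tr=TR, dt=0.1):
    """Convolve a boxcar (onset and duration in seconds) with the HRF.

    Returns one predicted value per acquired volume.
    """
    n_lags = int(20 / dt)  # use 20 seconds of HRF support
    values = []
    for v in range(n_vols):
        t_vol = v * tr
        total = 0.0
        for i in range(n_lags):
            lag = i * dt
            stim_time = t_vol - lag  # stimulus time contributing at this lag
            if onset <= stim_time < onset + duration:
                total += gamma_hrf(lag) * dt
        values.append(total)
    return values


if __name__ == "__main__":
    # One 12 s rehearsal trial: melody at 0-3 s, covert rehearsal at 3-15 s.
    perception = regressor(onset=0, duration=3, n_vols=14)
    rehearsal = regressor(onset=3, duration=12, n_vols=14)
    print([round(v, 2) for v in perception])
    print([round(v, 2) for v in rehearsal])
```

In this sketch the perception regressor peaks earlier than the rehearsal regressor, which is what allows a regression model to assign separate weights to the sensory and rehearsal phases of a trial.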

Table 1.

Talairach coordinates of peak activations within each subject for both ROIs (area Spt, anterior IPS).

| Subject | Area Spt x | Area Spt y | Area Spt z | Anterior IPS x | Anterior IPS y | Anterior IPS z |
|---|---|---|---|---|---|---|
| S1 | −56 | −37 | 15 | −45 | −32 | 34 |
| S2 | −57 | −25 | 6 | −42 | −31 | 37 |
| S3 | −53 | −30 | 9 | −53 | −26 | 29 |
| S4 | −51 | −39 | 11 | −43 | −41 | 27 |
| S5 | −60 | −36 | 6 | −41 | −31 | 27 |
| S6 | −41 | −31 | 22 | −30 | −21 | 43 |
| S7 | −53 | −15 | 6 | −20 | −35 | 42 |

Analysis of ROI data focused on the condition with the longest rehearsal period because it was in this condition that motor-effector based responses were most evident (see Fig. 3). Outliers (more than 2 s.d. above or below the mean) were removed from the timecourse data. The remaining data points were converted to z-scores, and the baselines for each condition in each ROI were aligned. Statistically significant differences were calculated using paired t-tests on the 10 voxel values surrounding the activation peaks.
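
The ROI timecourse steps described above (outlier removal, conversion to z-scores, and a paired t-test) can be sketched as follows. This is a minimal illustration using only the Python standard library; the function names are hypothetical, the sample standard deviation is an assumption (the text does not say which estimator was used), and the baseline alignment step is not covered. The values in the usage example are made up for demonstration and are not data from the study.

```python
import math
import statistics


def remove_outliers(values, n_sd=2.0):
    """Drop points lying more than n_sd sample s.d. from the mean."""
    mean = statistics.mean(values)
    sd = statistics.stdev(values)
    return [v for v in values if abs(v - mean) <= n_sd * sd]


def zscore(values):
    """Convert values to z-scores (zero mean, unit sample s.d.)."""
    mean = statistics.mean(values)
    sd = statistics.stdev(values)
    return [(v - mean) / sd for v in values]


def paired_t(a, b):
    """Return (t, degrees of freedom) for a paired t-test."""
    if len(a) != len(b):
        raise ValueError("paired samples must have equal length")
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    standard_error = statistics.stdev(diffs) / math.sqrt(n)
    return statistics.mean(diffs) / standard_error, n - 1


if __name__ == "__main__":
    # Synthetic values for illustration only (10 paired points, so df = 9).
    hum = [2.0, 4.0, 4.0, 6.0, 6.0, 8.0, 8.0, 10.0, 10.0, 12.0]
    play = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0, 10.0]
    t, df = paired_t(hum, play)
    print(f"t({df}) = {t:.2f}")
```

With 10 paired values the test has 9 degrees of freedom, which matches the t(9) statistics reported in the Results.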

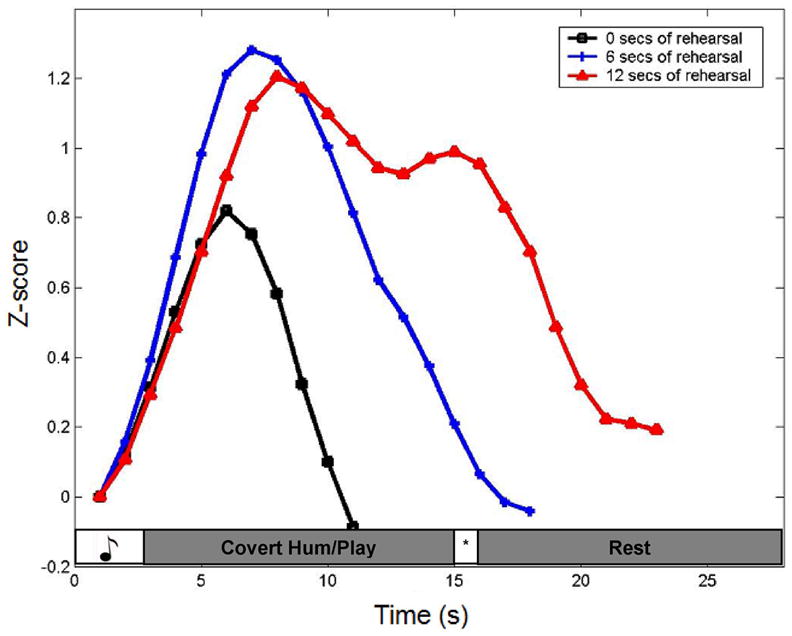

Figure 3.

Averaged timecourses for the three rehearsal periods, 0 sec, 6 sec, and 12 sec, in area Spt. Notice the increase in the width of the response function with progressively longer rehearsal periods, showing clearly the contribution of rehearsal to the region’s hemodynamic response. The sensory-only response can be seen in the zero rehearsal condition, showing that this region is responsive to acoustic events alone. The increase in amplitude of the initial peak in the rehearsal trials (both 6 and 12 sec) relative to the no rehearsal trials reveals additivity in the response to the sensory event and the early part of the rehearsal period. In the 12 sec rehearsal condition the drop in amplitude after the initial peak presumably reflects the decay of the sensory response, leaving only the rehearsal-related activation. This relative isolation of the rehearsal-related response is evident only in the longer 12 sec rehearsal condition.

Results

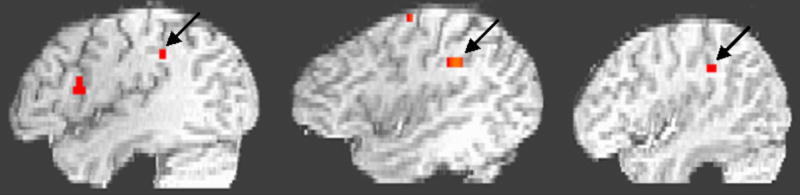

Our main objective was to identify regions that have both perceptual and motor response properties. Our assumption is that rehearsal-related activity taps motor-articulatory networks (Meister et al., 2004). The theoretical motivation for focusing on regions that respond during both the sensory and motor phases of the task is that these regions are likely to be good candidates for performing sensory-motor integration. A left Sylvian parietal-temporal ROI (area Spt), a region previously identified as part of an auditory-motor network (Buchsbaum et al., 2001; Hickok et al., 2003), was active in all 7 subjects for the music perception + covert hum task. Representative activation maps from 3 subjects are shown in Fig. 1. In contrast, left aIPS was active in all 7 subjects during the music perception + covert play condition; representative activation maps from 3 subjects are shown in Fig. 2.

Figure 1.

Representative activation maps from 3 subjects in area Spt during covert hum

Figure 2.

Representative activation maps from 3 subjects in anterior IPS during covert play

To show the distinct contributions of sensory and motor responses, Fig. 3 plots the activation of area Spt during 0 (sensory only), 6, and 12 seconds of rehearsal. The response of area Spt is driven not only by the sensory condition but is also modulated by the duration of rehearsal.

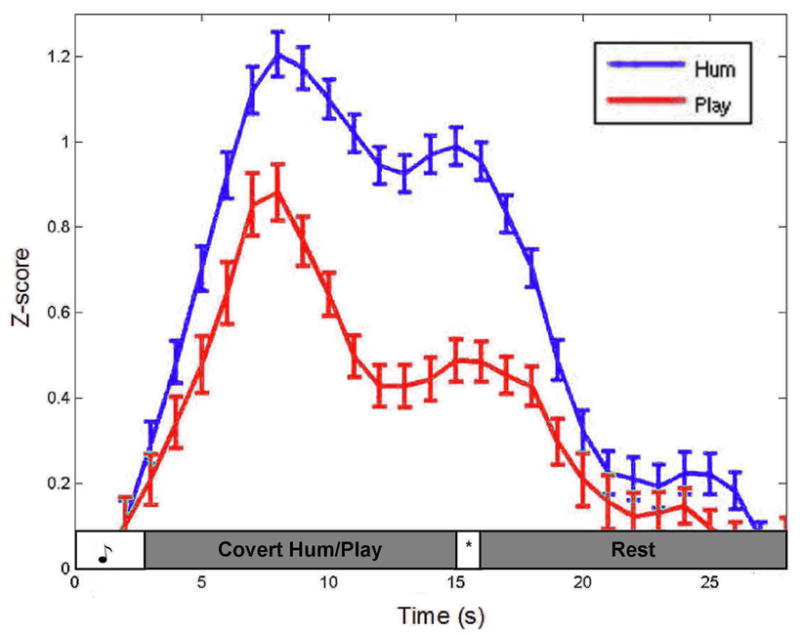

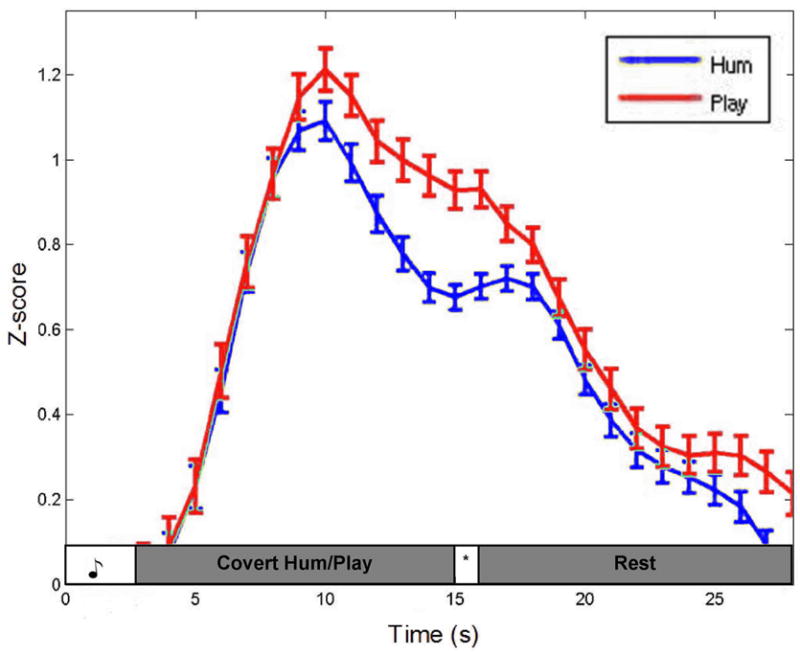

Timecourse graphs for the 12 sec rehearsal trials in the area Spt ROI and the left aIPS ROI are shown in Figs. 4 and 5, respectively. Area Spt shows greater activation during the covert hum trials compared with the covert play trials, particularly during the rehearsal phase. The aIPS shows the reverse pattern, with greater activation for covert play than covert hum, again most prominently during the rehearsal phase. Paired t-tests on the time points between the first and second peaks indicated that the amplitude differences were highly significant both in the Spt ROI (t(9) = 15.87, p < .001) and in the aIPS ROI (t(9) = 8.28, p < .001).

Figure 4.

The averaged timecourse of the top 4 activated voxels from 7/7 subjects in area Spt during the covert hum condition.

Figure 5.

The averaged timecourse of the top 4 activated voxels from 7/7 subjects in anterior IPS during the covert play condition.

Although our focus and theoretical hypotheses centered on posterior regions, we also found frontal activations during both the covert hum and play conditions, as expected. Two regions were found to be activated consistently across subjects and for both tasks: a premotor location [−48 −4 33], and a region in the posterior inferior frontal gyrus [−46 19 18].

Fig. 6 shows the Talairach coordinates averaged across subjects for both ROIs (area Spt: −53 −30 11; anterior IPS: −39 −31 34), overlaid onto a high-resolution, volume-rendered brain to reflect the relative location of the ROIs, smoothing across individual differences.

Figure 6.

The averaged peak activations pooled across subjects in each ROI overlaid on a volume-rendered brain. The blue cluster represents the activations in area Spt [−53 −30 11] during the covert hum condition and the green cluster represents the activations in anterior IPS [−39 −31 34] during the covert play condition.
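
The averaged coordinates reported for Fig. 6 can be checked against the per-subject peaks listed in Table 1. The sketch below copies the values from Table 1; the variable and function names are hypothetical, and rounding to the nearest integer is an assumption about how the averages were reported.

```python
# Per-subject Talairach peaks (x, y, z) copied from Table 1, subjects S1-S7.
AREA_SPT = [
    (-56, -37, 15), (-57, -25, 6), (-53, -30, 9), (-51, -39, 11),
    (-60, -36, 6), (-41, -31, 22), (-53, -15, 6),
]
ANTERIOR_IPS = [
    (-45, -32, 34), (-42, -31, 37), (-53, -26, 29), (-43, -41, 27),
    (-41, -31, 27), (-30, -21, 43), (-20, -35, 42),
]


def mean_coordinate(coords):
    """Average each axis across subjects and round to the nearest integer."""
    n = len(coords)
    return tuple(round(sum(c[axis] for c in coords) / n) for axis in range(3))


if __name__ == "__main__":
    print("area Spt:", mean_coordinate(AREA_SPT))          # (-53, -30, 11)
    print("anterior IPS:", mean_coordinate(ANTERIOR_IPS))  # (-39, -31, 34)
```

Both results agree with the averaged coordinates given in the text and in the Figure 6 caption.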

Discussion

A region in the posterior Sylvian fissure at the parietal-temporal boundary (area Spt), known to have auditory-motor response properties (Buchsbaum et al., 2001, 2005b; Hickok et al., 2003; Hickok & Poeppel, 2004; Okada & Hickok, 2006), was found to be substantially more active during covert humming of novel melodies than during covert playing of those same melodies on a keyboard. This finding supports the hypothesis that area Spt is a sensory-motor integration area for the vocal tract motor system. There is substantial evidence from previous studies suggesting that area Spt, a sub-region of PT, is involved in sensory and motor outputs of the vocal tract (Buchsbaum et al., 2001; Hickok et al., 2003; Buchsbaum et al., 2005b). Until now, this specialization of area Spt has not been contrasted with a different motor effector in a within-subjects design. Some studies investigating the function of PT have not shown this specialization, suggesting instead that PT is a computational hub or is involved in rhythm processing (Griffiths & Warren, 2002; Chen et al., 2006); however, these studies were not designed to functionally dissociate the nature and location of area Spt, as they were designed with other theoretical motivations in mind and may have smoothed over our area Spt site.

Somatosensory feedback from the vocal tract is an important component of the production of speech and oro-facial gestures generally (Tremblay et al., 2003). Further, somatosensory responses are commonly observed in parietal sensory-motor integration areas in monkey (Murata et al., 1996). It is, therefore, conceivable that a portion of our activation pattern results from modulation of somatosensory feedback. This possibility does not undermine our fundamental claim of separable motor-effector based networks, and in fact may strengthen the claim. However, a somatosensory explanation of our findings seems unlikely as sensory stimulation of the vocal tract appears to activate primarily regions anterior to our ROIs (Miyamoto et al., 2006). This is an important issue, however, that deserves further investigation.

Consistent with previous functional imaging studies on the production of manual gestures including piano-playing and gesture imitation (Meister et al., 2004; Peigneux et al., 2004; Makuuchi et al., 2005), the present study found a region in the left aIPS which showed an effector bias in the opposite direction, responding significantly more during the covert play than covert hum condition. This finding is consistent with the idea that the aIPS contains a system that functions as a sensory-motor interface for the manual effectors (Colby & Goldberg, 1999; Culham & Kanwisher, 2001). A study comparing musical imagery with performance found a similar region active during both piano-playing conditions, using a visual stimulus rather than an aurally presented stimulus to induce playing, and suggested a role for this region in visuomotor transformations (Meister et al., 2004). Further, a study by Bangert et al. (2006) found BA40 (encompassing aIPS) active in a conjunction analysis of a musical acoustic task and a motion-related task of piano-playing. These findings, along with the present findings, suggest that aIPS (a subregion of BA40) may be involved in both visuo- and auditory-to-manual transformations, and that this region is recruited for imagined and overt play in musicians. Interestingly, recent diffusion tensor imaging work has identified not only the classic arcuate fasciculus pathway connecting posterior superior temporal regions with Broca’s area, but also a parallel pathway that projects to the inferior parietal region (Catani et al., 2005). This finding may provide the anatomical substrate for our functional interactions.

No conclusions are being drawn about left-hemisphere dominance for integrating auditory and manual actions as the covert play task in this study relied on covert right-hand movements for performing the task which would lead to left-hemisphere dominance. This predominantly left hemisphere activation has also been found in other musical studies, but again, may simply be due to methodological idiosyncrasies (Bangert et al., 2006).

Although we observed a clear double-dissociation between the covert hum and covert play conditions in the two ROIs, the dissociations were relative. That is, area Spt activated during both covert hum and covert play, but with a significantly greater amplitude to covert hum. The aIPS ROI also activated during both covert hum and covert play, but with a significantly greater amplitude to covert play. This observation does not contradict our claim that these regions are functionally linked to specific motor effector systems. Skilled pianists are likely to have tight associations between auditory representations of tonal stimuli and both vocal tract (for singing/humming) and manual motor systems (for playing), such that hearing a piece of music may automatically activate both auditory-vocal and auditory-manual circuits. Although we instructed participants to avoid covert humming in the covert play condition and vice versa, this may have been impossible to prevent altogether. A recent study showing greater activation in posterior inferior parietal cortex among musicians compared to non-musicians during passive auditory stimulation supports this speculation (Bangert et al., 2006). It appears, however, that our instructions were able to bias the activation patterns in these circuits sufficiently well to detect the double-dissociation.

The present result extends much existing work on sensory-motor integration systems in the primate and human parietal lobe. The vast majority of this work has focused on manual or ocular motor effector systems, and has been carried out in the context of vision, the sensory system most relevant to manual and ocular action systems. We have shown that the recent work on auditory-motor integration, which has been carried out mostly in the context of speech (Buchsbaum et al., 2001; Hickok et al., 2003), fits nicely within the broader sensory-motor literature. Specifically, we argue that previously identified area Spt, which had been characterized as an auditory-motor integration area, might instead be considered a sensory-motor integration area for the vocal tract action system, much as the lateral intraparietal sulcus (area LIP) is a sensory-motor integration area for eye movements, and anterior intraparietal sulcus (area AIP) is a sensory-motor integration area for grasping (Culham, 2004; Grefkes et al., 2004). The fact that the planum temporale region activates to non-auditory stimulation (e.g., during silent lip reading; Calvert et al., 1997) is consistent with this characterization.

On this view, posterior parietal regions, extending down into the dorsal-most aspect of the posterior temporal lobe, contain systems critical for interfacing sensory information with action systems. These areas are organized around particular motor-effector systems (Andersen, 1997; Colby & Goldberg, 1999; Simon et al., 2002). While these areas can take input from multiple sensory modalities (Cohen et al., 2002; Mullette-Gillman et al., 2005; Xing & Andersen, 2000), these systems may nonetheless be biased towards certain sensory modalities depending on the demands of the particular action systems involved. For example, manual action systems may be biased towards visual input in most individuals because of visually-guided reaching/grasping functions, whereas vocal tract systems may be biased toward auditory input for reasons discussed in the Introduction. These biases have led many writers (including ourselves) to discuss these posterior parietal/dorsal temporal areas as being part of the dorsal stream of one sensory modality or another. But on the current view, a more accurate conceptualization may be that these parietal-temporal regions comprise a network of interfaces between particular motor effector systems on the one hand and multiple sensory systems on the other (for a comprehensive review of parietal lobe function, please see Andersen, 1997; Culham & Kanwisher, 2001). Thus, it may be more accurate to refer to this network as a set of sensory-effector integration regions, rather than as visual-motor or auditory-motor integration areas.

Acknowledgments

Thanks to Susan Anderson for creating the melodic stimuli.

This work was supported by NIH DC03681. Address correspondence to Gregory Hickok, Department of Cognitive Sciences, University of California, Irvine, Irvine, CA 92697, USA. Email: greg.hickok@uci.edu.

Footnotes

Spt is functionally defined within an anatomically constrained region of interest. It was initially identified in an anatomically unconstrained analysis that specified regions showing both auditory (speech responsive) and motor (responsive during covert speech production) response properties (Buchsbaum et al., 2001; Hickok et al., 2003). A network of regions is identified in such an analysis, including area 44 and a more dorsal pre-motor site in the frontal lobe, a region in the superior temporal sulcus, and a region in the posterior aspect of the planum temporale, sometimes extending up into the parietal operculum. Anatomically, this latter area, Spt, appears to be a sub-portion of Galaburda and Sanides’ (1980) area Tpt. Although auditory-motor responses are identifiable within this region very consistently in individual subjects (using their own anatomy as a guide to localization), the activation location in standardized space can vary substantially. Thus Spt is defined as a region within the posterior portion of the Sylvian fissure that exhibits both auditory and motor response properties.

References

- Andersen R. Multimodal integration for the representation of space in the posterior parietal cortex. Philos Trans R Soc Lond B Biol Sci. 1997;352:1421–1428. doi: 10.1098/rstb.1997.0128. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Anderson J, Gilmore R, Roper S, Crosson B, Bauer R, Nadeau S, et al. Conduction aphasia and the arcuate fasciculus: A reexamination of the Wernicke–Geschwind model. Brain and Language. 1999;70:1–12. doi: 10.1006/brln.1999.2135. [DOI] [PubMed] [Google Scholar]

- Bangert B, Peschel T, Schlaug G, Rotte M, Drescher D, Hinrichs H, et al. Shared networks for auditory and motor processing in professional pianists: Evidence from fMRI conjunction. NeuroImage. 2006;30:917–926. doi: 10.1016/j.neuroimage.2005.10.044. [DOI] [PubMed] [Google Scholar]

- Baumann S, Koeneke S, Schmidt C, Meyer M, Lutz K, Jancke L. A network for audio-coordination in skilled pianists and non-musicians. Brain Research. doi: 10.1016/j.brainres.2007.05.045. in press. [DOI] [PubMed] [Google Scholar]

- Buchsbaum B, Hickok G, Humphries C. Role of Left Posterior Superior Temporal Gyrus in Phonological Processing for Speech Perception and Production. Cognitive Science. 2001;25:663–678. [Google Scholar]

- Buchsbaum BR, Olsen RK, Koch P, Berman KF. Human dorsal and ventral auditory streams subserve rehearsal-based and echoic processes during verbal working memory. Neuron. 2005a;48:687–97. doi: 10.1016/j.neuron.2005.09.029. [DOI] [PubMed] [Google Scholar]

- Buchsbaum B, Olsen RK, Koch PF, Kohn P, Kippenhan JS, Berman KF. Reading, hearing, and the planum temporale. Neuroimage. 2005b;24:444–454. doi: 10.1016/j.neuroimage.2004.08.025. [DOI] [PubMed] [Google Scholar]

- Burnod Y, Baraduc P, Battaglia-Mayer A, Guigon E, Koechlin E, Ferraina S, et al. Parieto-frontal coding of reaching: an integrated framework. Experimental Brain Research. 1999;129:325–346. doi: 10.1007/s002210050902. [DOI] [PubMed] [Google Scholar]

- Calvert GA, Bullmore ET, Brammer MJ, Campbell R, Williams SC, McGuire PK, Woodruff PW, Iverson SD, David AS. Activation of auditory cortex during silent lipreading. Science. 1997;276:593–6. doi: 10.1126/science.276.5312.593. [DOI] [PubMed] [Google Scholar]

- Castiello U. The Neuroscience of Grasping. Nature Reviews Neuroscience. 2005;6:726–736. doi: 10.1038/nrn1744.

- Catani M, Jones DK, Ffytche DH. Perisylvian language networks of the human brain. Ann Neurol. 2005;57(1):8–16. doi: 10.1002/ana.20319.

- Chen J, Zatorre R, Penhune V. Interactions between auditory and dorsal premotor cortex during synchronization to musical rhythms. Neuroimage. 2006;32(4):1771–81. doi: 10.1016/j.neuroimage.2006.04.207.

- Chiou J, Ahn CB, Muftuler T, Nalcioglu O. A simple simultaneous geometric and intensity correction method in echo planar imaging by EPI based phase modulation. IEEE TMI. 2003;22:200–205. doi: 10.1109/TMI.2002.808362.

- Cohen Y, Batista A, Andersen R. Comparison of neural activity preceding reaches to auditory and visual stimuli in the parietal reach region. Neuroreport. 2002;13:891–894. doi: 10.1097/00001756-200205070-00031.

- Colby C, Goldberg M. Space and attention in parietal cortex. Annual Review of Neuroscience. 1999;22:319–349. doi: 10.1146/annurev.neuro.22.1.319.

- Cox RW. AFNI: software for analysis and visualization of functional magnetic resonance neuroimages. Comput Biomed Res. 1996;29:162–173. doi: 10.1006/cbmr.1996.0014.

- Culham J. Human brain imaging reveals a parietal area specialized for grasping. In: Kanwisher N, Duncan J, editors. Attention and Performance XX: Functional Brain Imaging of Visual Cognition. Oxford: Oxford University Press; 2004. pp. 417–438.

- Culham J, Kanwisher N. Neuroimaging of cognitive functions in human parietal cortex. Current Opinion in Neurobiology. 2001;11:157–163. doi: 10.1016/s0959-4388(00)00191-4.

- Damasio H, Damasio AR. The anatomical basis of conduction aphasia. Brain. 1980;103:337–350. doi: 10.1093/brain/103.2.337.

- Doupe AJ, Kuhl PK. Birdsong and human speech: Common themes and mechanisms. Annual Review of Neuroscience. 1999;22:567–631. doi: 10.1146/annurev.neuro.22.1.567.

- Galaburda A, Sanides F. Cytoarchitectonic organization of human auditory cortex. Journal of Comparative Neurology. 1980;190:597–610. doi: 10.1002/cne.901900312.

- Grefkes C, Ritzl A, Zilles K, Fink G. Human medial intraparietal cortex subserves visuomotor coordinate transformation. Neuroimage. 2004;23:1494–1506. doi: 10.1016/j.neuroimage.2004.08.031.

- Griffiths T, Warren J. The planum temporale as a computational hub. Trends in Neurosciences. 2002;25(7):348–353. doi: 10.1016/s0166-2236(02)02191-4.

- Haslinger B, Erhard P, Altenmuller E, Schroeder U, Boecker H, Ceballos Baumann AO. Transmodal Sensorimotor Networks during Action Observation in Professional Pianists. Journal of Cognitive Neuroscience. 2005;17(2):282–293. doi: 10.1162/0898929053124893.

- Hickok G, Buchsbaum B, Humphries C, Muftuler T. Auditory-motor interaction revealed by fMRI: Speech, music, and working memory in area Spt. Journal of Cognitive Neuroscience. 2003;15:673–682. doi: 10.1162/089892903322307393.

- Hickok G, Poeppel D. Dorsal and ventral streams: A framework for understanding aspects of the functional anatomy of language. Cognition. 2004;92:67–99. doi: 10.1016/j.cognition.2003.10.011.

- Hickok G, Poeppel D. The cortical organization of speech processing. Nature Reviews Neuroscience. 2007;8(5):393–402. doi: 10.1038/nrn2113.

- Houde JF, Jordan MI. Sensorimotor adaptation in speech production. Science. 1998;279:1213–1216. doi: 10.1126/science.279.5354.1213.

- Kertzman C, Schwarz U, Zeffiro T, Hallett M. The role of posterior parietal cortex in visually guided reaching movements in humans. Experimental Brain Research. 1997;114:170–183. doi: 10.1007/pl00005617.

- Makuuchi M, Kaminaga T, Sugishita M. Brain activation during ideomotor praxis: imitation and movements executed by verbal command. Journal of Neurol Neurosurg Psychiatry. 2005;76:25–33. doi: 10.1136/jnnp.2003.029165.

- Meister IG, Krings T, Foltys H, Boroojerdi B, Muller M, Topper R. Playing piano in the mind – an fMRI study on music imagery and performance in pianists. Brain Res Cog. 2004;19:219–28. doi: 10.1016/j.cogbrainres.2003.12.005.

- Milner A, Goodale M. The visual brain in action. Oxford: Oxford University Press; 1995.

- Miyamoto JJ, Honda M, Saito DN, Okada T, Ono T, Ohyama K, Sadato N. The representation of the human oral area in the somatosensory cortex: a functional MRI study. Cereb Cortex. 2006;16:669–675. doi: 10.1093/cercor/bhj012.

- Muhlau M, Hermsdorfer J, Goldenberg G, Wohlschlager A, Castrop F, Stahl R, et al. Left inferior parietal dominance in gesture imitation: an fMRI study. Neuropsychologia. 2005;43:1068–1098. doi: 10.1016/j.neuropsychologia.2004.10.004.

- Mullette-Gillman O, Cohen Y, Groh J. Eye-centered, head-centered, and complex coding of visual and auditory targets in the intraparietal sulcus. Journal of Neurophysiology. 2005;94:2259–2260. doi: 10.1152/jn.00021.2005.

- Murata A, Gallese V, Kaseda M, Sakata H. Parietal neurons related to memory guided hand manipulation. Journal of Neurophysiology. 1996;75:2180–2186. doi: 10.1152/jn.1996.75.5.2180.

- Okada K, Hickok G. Left posterior auditory-related cortices participate both in speech perception and speech production: Neural overlap revealed by fMRI. Brain and Language. 2006;98:112–117. doi: 10.1016/j.bandl.2006.04.006.

- Peigneux P, Van der Linden M, Garraux G, Laureys S, Degueldre C, Aerts J, et al. Imaging a cognitive model of apraxia: The neural substrate of gesture-specific cognitive processes. Human Brain Mapping. 2004;21:119–142. doi: 10.1002/hbm.10161.

- Simon O, Mangin J, Cohen L, Bihan D, Dehaene S. Topographical layout of hand, eye, calculation, and language-related areas in the human parietal lobe. Neuron. 2002;33:475–487. doi: 10.1016/s0896-6273(02)00575-5.

- Tremblay S, Shiller DM, Ostry DJ. Somatosensory basis of speech production. Nature. 2003;19:866–889. doi: 10.1038/nature01710.

- Waldstein R. Effects of postlingual deafness on speech production: Implications for the role of auditory feedback. Journal of the Acoustical Society of America. 1989;99:2099–2144. doi: 10.1121/1.400107.

- Woods RP, Grafton ST, Holmes CJ, Cherry SR, Mazziotta JC. Automated image registration: I. General methods and intrasubject, intramodality validation. J Comput Assist Tomography. 1998;22:141–154. doi: 10.1097/00004728-199801000-00027.

- Xing J, Andersen R. Models of the posterior parietal cortex which perform multimodal integration and represent space in several coordinate frames. Journal of Cognitive Neuroscience. 2000;12:601–614. doi: 10.1162/089892900562363.

- Yates AJ. Delayed auditory feedback. Psychological Bulletin. 1963;60:213–251. doi: 10.1037/h0044155.