Abstract

The primate prefrontal cortex (PF) plays a central role in choosing goals and strategies. To better understand its mechanisms, we recorded from PF neurons as monkeys used abstract response strategies to select a spatial goal. A visual cue, selected randomly from a set of three cues, appeared on each trial. All three cues were novel when neuronal recording commenced. From one trial to the next, the cue either repeated or changed, defining repeat trials and change trials, respectively. On repeat trials, the monkeys used a Repeat-stay strategy to gain a reward by choosing the same spatial goal as on the previous trial; on change trials, they used a Change-shift strategy to reject the previous goal in favor of an alternative. We reported previously that when monkeys performed the task correctly, many PF neurons had activity encoding one of these two strategies. The monkeys sometimes chose the incorrect strategy, however. On error trials, strategy coding was weak or absent during the cue period, the time when, on correct trials, the monkeys used a strategy to choose a future goal. By contrast, later in the trial, after the chosen goal had been attained and the monkeys awaited feedback, strategy coding was present, and it reflected the strategy used, whether correct or incorrect. The weak cue-period strategy signal could, whatever its cause, have contributed to the errors made, whereas the activity prior to feedback suggests a role in monitoring task performance.

Keywords: frontal lobe, strategies, rules, Macaca mulatta, errors

Introduction

The prefrontal cortex (PF) contributes to several cognitive operations, but fundamental to many of these is the ability to select goals and implement strategies. We reported previously that different subpopulations of PF neurons encoded two strategies: one called Repeat-stay, the other Change-shift (Genovesio et al., 2005). In studies of conditional motor learning, monkeys spontaneously adopted these two strategies as they attempted to learn novel stimulus–response associations (Murray & Wise 1996; Wise & Murray 1999). In the present strategy task (Genovesio et al., 2005), we operantly conditioned monkeys to use the abstract response strategies that these other monkeys had spontaneously adopted. The present report describes the results of additional analyses on this data set.

In the Repeat-stay strategy, when the cue on the current trial repeated the one from the immediately previous trial, the monkeys had to stay with their most recently chosen goal in order to gain a reward. In the Change-shift strategy, when the cue on the current trial differed from that on the previous trial, the monkeys had to reject the most recent goal and shift to an alternative goal. On a small number of trials, the monkeys performed the task incorrectly, choosing a goal according to the wrong strategy. Here we report the properties of PF neurons during these error trials at two critical time periods: (1) the time that the cue was visible, during which the monkeys had to decide which strategy to use, and (2) the period after the strategy had been chosen and implemented, during which the monkeys awaited feedback (reward or nonreward) about their choice.

Materials and Methods

Two male rhesus monkeys (Macaca mulatta), 8.8 kg and 7.7 kg, were seated in a primate chair with their heads fixed. They faced a video screen, 32 cm away. All procedures accorded with the Guide for the Care and Use of Laboratory Animals.

The monkeys performed the strategy task of Genovesio et al. (2005). First, a white fixation spot (0.7°) appeared at the center of the video screen. Once the monkey fixated this spot, three 2.2° unfilled white squares came on: 14° left, up, and right from center (Fig. 1A1). If the monkeys maintained fixation within 7.5° for 1.0 s, a visual cue replaced the fixation spot for 1.0, 1.5, or 2.0 s, selected pseudorandomly (Fig. 1A2). Each cue comprised two ASCII characters (~2°) of different colors. The cue on each trial was selected pseudorandomly from a set of three such cues, which were novel at the beginning of each recording block (a block of trials in which neuronal activity was collected). The offset of the cue was the trigger (“go”) stimulus (Fig. 1A3). The monkeys then made a saccade (Fig. 1A4) to one of the squares (within 7.7°) and fixated it for 1.0 s (Fig. 1A5), after which all three targets became solid white (Fig. 1A6). A 0.1 ml drop of fluid reward might be delivered 0.5 s later (Fig. 1A7), but regardless of reward or nonreward, the squares disappeared at that time and a 2.5-s intertrial interval began (Fig. 1A8).

Figure 1.

Task and recording zone. A. Sequence of events in the strategy task. Dashed lines indicate the fixation point, and the white horizontal arrow shows the saccade vector. Abbreviations: imp, impulses; ITI, intertrial interval; TS, trigger (“go”) stimulus. B. Cues and responses for two consecutive trials. Repeat trials call for staying with the right goal. Change trials require the rejection of that goal. C. Recording zones (shading). Rostral, right; dorsal, up. Abbreviations: AS, arcuate sulcus; PS, principal sulcus.

By design, the previous trial always ended with a reward; the goal chosen on that trial was called the previous goal. A current trial followed. Each current trial had a future goal, which was the response target chosen after the cue appeared. We use the term future goal for the entirety of each current trial, even though the goal was acquired by fixation after the trigger signal and was, at that time, no longer in the future. On each current trial (Fig. 1B), if the cue repeated from the previous trial (a repeat trial), the monkeys should have stayed with the previous goal (the Repeat-stay strategy). If the cue differed from that on the previous trial (a change trial), the monkeys should have shifted from the previous goal to one of the two remaining alternatives (the Change-shift strategy). On repeat trials, staying with the previous goal always produced a reward (Suppl. Fig. 1A). On change trials, only rejection of the previous goal did so. The cue was repeated until the monkey received a reward (Suppl. Fig. 1A).
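The trial logic above can be made concrete with a small classifier that decides which strategy a cue called for and which strategy a choice actually expressed. This is a minimal sketch, not the task software used in the study: the goal labels, the cue labels in the usage note, and all function names are hypothetical. It only checks whether a choice conforms to the required strategy; the exact reward contingencies are given in Suppl. Fig. 1A, which is not reproduced here.

```python
from enum import Enum

# Hypothetical labels for the three targets (14 deg left, up, and right of center).
GOALS = ("left", "up", "right")


class Strategy(Enum):
    REPEAT_STAY = "Repeat-stay"
    CHANGE_SHIFT = "Change-shift"


def required_strategy(previous_cue: str, current_cue: str) -> Strategy:
    """A repeat trial calls for Repeat-stay; a change trial calls for Change-shift."""
    if current_cue == previous_cue:
        return Strategy.REPEAT_STAY
    return Strategy.CHANGE_SHIFT


def strategy_used(previous_goal: str, chosen_goal: str) -> Strategy:
    """Choosing the previous goal counts as staying; any other target counts as shifting."""
    if chosen_goal == previous_goal:
        return Strategy.REPEAT_STAY
    return Strategy.CHANGE_SHIFT


def strategy_consistent_goals(previous_cue: str, current_cue: str, previous_goal: str) -> set:
    """Targets that conform to the strategy the current cue calls for."""
    if required_strategy(previous_cue, current_cue) is Strategy.REPEAT_STAY:
        return {previous_goal}
    return {g for g in GOALS if g != previous_goal}


def is_strategic_error(previous_cue: str, current_cue: str,
                       previous_goal: str, chosen_goal: str) -> bool:
    """True when the monkey used the strategy that the cue did not call for."""
    return (strategy_used(previous_goal, chosen_goal)
            is not required_strategy(previous_cue, current_cue))
```

For example, with hypothetical cues "A" and "B", `is_strategic_error("A", "A", "left", "up")` is `True` (a repeat trial on which the monkey shifted), and `strategy_consistent_goals("A", "B", "left")` returns `{"up", "right"}`.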

The recording procedures have been described elsewhere (Genovesio et al., 2005; 2006). Quantitative analysis was based on average trial-by-trial activity rates within specified time windows (described in the Results) using ANOVA (α=0.05). Paired comparisons also used α=0.05, with one-tailed, paired t tests employed for evaluating whether the differences between preferred and anti-preferred activity rates diverged significantly from zero and two-tailed paired t tests for examining differences between correct and error trials. Near the end of data collection, we made electrolytic lesions (15 μA for 10 s) in selected locations, and later plotted the recording sites in Nissl-stained sections by reference to these lesions and to pins inserted at known coordinates.
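The preferred-versus-anti-preferred comparison works on one pair of rates per neuron. A minimal pure-Python sketch of the paired t statistic, assuming each neuron is summarized by its mean rate in the analysis window for each strategy (the function name is hypothetical):

```python
import math
from statistics import mean, stdev


def paired_t(preferred, anti_preferred):
    """Paired t statistic for per-neuron differences (preferred - anti-preferred).

    Each neuron contributes one pair of mean rates (imp/s), so df = n_neurons - 1.
    Returns (t, df).
    """
    if len(preferred) != len(anti_preferred):
        raise ValueError("need one preferred and one anti-preferred rate per neuron")
    diffs = [p - a for p, a in zip(preferred, anti_preferred)]
    n = len(diffs)
    if n < 2:
        raise ValueError("need at least two neurons")
    sd = stdev(diffs)  # sample SD, n - 1 denominator
    if sd == 0:
        raise ValueError("differences have zero variance; t is undefined")
    t = mean(diffs) / (sd / math.sqrt(n))
    return t, n - 1
```

With 88 neurons this gives df=87, matching the cue-period tests in the Results. A one-tailed p-value for the hypothesis that preferred activity exceeds anti-preferred activity can then be read from the t distribution, for example with `scipy.stats.t.sf(t, df)`; in SciPy 1.6 and later, `scipy.stats.ttest_rel(preferred, anti_preferred, alternative="greater")` performs the whole test.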

Results

The neuronal database contained 1,456 isolated single neurons, 700 from monkey 1 and 756 from monkey 2: the same data set used by Genovesio et al. (2005). The sample of neurons was taken from dorsolateral PF, mainly area 46, and dorsal PF, spanning areas 6, 8 and 9 (Fig. 1C). We found no differences in neuronal properties among these regions, either in the representation of strategies (Genovesio et al., 2005) or in the representation of previous and future goals (see Supplemental Figure 1 in Genovesio et al., 2006).

The monkeys performed the strategy task very accurately. On repeat trials, monkey 1 chose the correct strategy on 96.8% of trials and monkey 2 on 96.0%. On change trials, the two monkeys chose the correct strategy on 98.9% and 98.3% of trials, respectively. Here we report strategy encoding on the remaining, incorrect trials. We included only neurons with at least one error for both the cell’s preferred strategy (the strategy associated with the highest discharge rate) and its anti-preferred strategy (the strategy associated with the lowest discharge rate). To examine whether the monkeys remained engaged in the task throughout each day of recording, we calculated the error rate as each day progressed. Error rates remained fairly constant during each day of recording (Suppl. Table 1). Reaction times on correct trials were comparable to those on error trials (270±84 ms and 267±105 ms, respectively, mean ± SD).
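The inclusion rule can be sketched as follows, assuming that preferred and anti-preferred strategies are defined from mean rates on correct trials, and that error trials are assigned to a strategy by the sorting convention described under Strategy coding during the cue period. Function names and the dictionary layout are hypothetical:

```python
from statistics import mean


def preferred_and_anti(correct_rates):
    """Return (preferred, anti_preferred) strategy labels for one neuron.

    correct_rates maps each strategy label to the neuron's firing rates (imp/s)
    on correct trials; the preferred strategy has the highest mean rate and the
    anti-preferred strategy the lowest.
    """
    means = {strategy: mean(rates) for strategy, rates in correct_rates.items()}
    preferred = max(means, key=means.get)
    anti_preferred = min(means, key=means.get)
    return preferred, anti_preferred


def include_neuron(correct_rates, error_counts):
    """Keep a neuron only if it has at least one error trial for both its
    preferred and its anti-preferred strategy.

    error_counts maps each strategy label to the number of error trials assigned to it.
    """
    preferred, anti_preferred = preferred_and_anti(correct_rates)
    return error_counts.get(preferred, 0) >= 1 and error_counts.get(anti_preferred, 0) >= 1
```

Under this rule every included neuron has at least two error trials, consistent with the minimum of 2 error trials per cell given in the figure captions.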

Strategy coding during the cue period

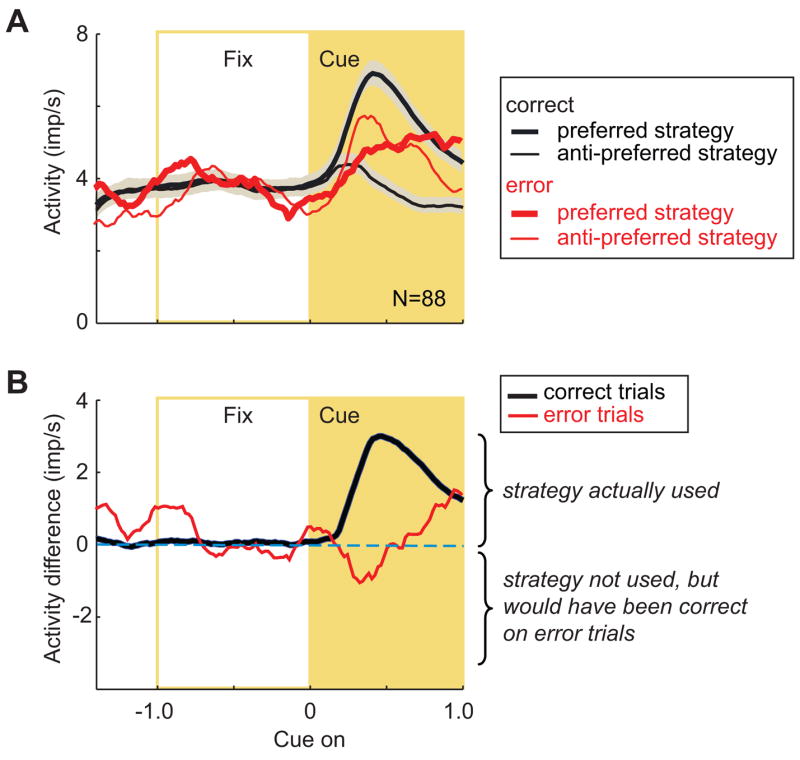

The cue persisted for a minimum of 1.0 s. Separate analyses were performed on two periods: 80–400 ms after cue onset and 400–1000 ms after cue onset, as well as those periods combined. Of the 472 neurons that showed a strategy effect in either period, 88 had a sufficient number of error trials for the present analysis.
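Per-trial rates for the three cue-period windows can be computed directly from spike times. A minimal sketch, assuming spike times in ms relative to cue onset and half-open windows; the window names and functions are hypothetical:

```python
def window_rate(spike_times_ms, start_ms, end_ms):
    """Mean firing rate (imp/s) in the half-open window [start_ms, end_ms).

    Spike times are in ms relative to cue onset.
    """
    n_spikes = sum(1 for t in spike_times_ms if start_ms <= t < end_ms)
    return n_spikes / ((end_ms - start_ms) / 1000.0)


# Analysis windows from the text, in ms after cue onset.
CUE_WINDOWS = {
    "early": (80, 400),
    "late": (400, 1000),
    "combined": (80, 1000),  # both periods together, within the minimal cue period
}


def cue_period_rates(spike_times_ms):
    """Rate in each cue-period window for one trial."""
    return {name: window_rate(spike_times_ms, start, end)
            for name, (start, end) in CUE_WINDOWS.items()}
```

Because the cue lasted at least 1.0 s on every trial, all three windows end before the earliest possible trigger stimulus.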

Fig. 2A shows separate population averages for each cell’s preferred and anti-preferred strategy. For correct trials, the cells encoded their preferred strategy by a mean difference of more than 3 impulses/s, reaching a peak difference 451 ms after cue onset (Fig. 2B, black line). This difference between preferred and anti-preferred strategies differed significantly from zero (t=9.80, df=87, p<0.0001), and activity for the preferred and anti-preferred strategies differed significantly from each other (Fig. 2A, thick vs. thin black lines), as reported previously (Genovesio et al., 2005). Normalizing the activity of each neuron to its arithmetic mean, set to a value of 1.0, produced averages with the same properties (Suppl. Fig. 2) and the same statistical results.
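The normalization can be sketched as a per-neuron rescaling. This assumes that the mean is taken over all of the activity values passed in for that neuron (for example, every bin of its averages for both strategies and both trial outcomes); the text does not specify which epochs enter the mean, and the function name is hypothetical:

```python
from statistics import mean


def normalize_to_mean(activity):
    """Scale one neuron's activity values so that their arithmetic mean is 1.0."""
    m = mean(activity)
    if m == 0:
        raise ValueError("cannot normalize a neuron with zero mean activity")
    return [x / m for x in activity]
```

Rescaling each neuron this way keeps high-rate cells from dominating the population average, which is why the normalized averages serve as a check on the raw ones.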

Figure 2.

Strategy selectivity in cue period. A. Mean discharge rate (bin width, 25 ms) for preferred strategy (thick lines) and anti-preferred strategy (thin lines), for correct (black) and error (red) trials. Means were 4.0 error trials/cell (range 2–8) and 107.3 correct trials/cell (range 46–160). Shading shows the standard error for correct trials. Plots show mean discharge rates in 20 ms bins. Population curves were smoothed with a 10-bin moving average. Abbreviations: Fix, fixation period (gold box); N, the number of cells; imp, impulses. B. Difference in activity between preferred and anti-preferred strategies. Note that, on error trials, negative values would reflect the population’s encoding of the strategy that would have been correct but was not chosen.
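The binning and smoothing described in the caption can be sketched as below. Because the caption gives both 25 ms and 20 ms as the bin width, the width is left as a parameter. Whether the 10-bin moving average was centered or trailing is not stated, so this sketch assumes "valid" mode, in which each output value is the mean of 10 consecutive bins; function names are hypothetical:

```python
def bin_rates(spike_times_ms, t_start_ms, t_end_ms, bin_ms):
    """Spike rates (imp/s) in consecutive bins of width bin_ms from t_start_ms.

    A trailing partial bin, if any, is dropped.
    """
    n_bins = int((t_end_ms - t_start_ms) // bin_ms)
    counts = [0] * n_bins
    for t in spike_times_ms:
        if t_start_ms <= t < t_start_ms + n_bins * bin_ms:
            counts[int((t - t_start_ms) // bin_ms)] += 1
    return [c * 1000.0 / bin_ms for c in counts]


def moving_average(values, window=10):
    """Average over each run of `window` consecutive bins ('valid' mode)."""
    if not 1 <= window <= len(values):
        raise ValueError("window must be between 1 and len(values)")
    running = sum(values[:window])
    out = [running / window]
    for i in range(window, len(values)):
        running += values[i] - values[i - window]  # slide the window by one bin
        out.append(running / window)
    return out
```

In "valid" mode the smoothed curve is `window - 1` bins shorter than the input; a centered average would instead shift each value to the middle of its window.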

In contrast, during trials in which the monkeys used the wrong strategy, the cells did not encode their preferred strategy immediately after cue onset (Fig. 2B, red line). On such error trials, we sorted trials according to the strategy that the monkeys eventually used on each trial, not according to the one that they should have used. For example, if a cell preferred the repeat-stay strategy on correct trials, repeat trials were always classed as preferred, regardless of whether the monkey chose the correct or incorrect strategy and regardless of the relative activity for the two strategies on error trials. Using this convention, difference plots such as the ones in Figures 2B and 3B always show the strategy used as positive values and the strategy not used as negative values. On error trials (Fig. 2B, red line), the mean difference between the cells’ preferred and anti-preferred strategies fluctuated near zero, and did not significantly differ from zero for the minimal cue period as a whole (t=0.58, df=87, p=0.56, n.s.). In normalized averages, the difference between preferred and anti-preferred strategies also failed to reach statistical significance (t=1.33, df=87, p=0.10, n.s.).
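The sorting convention can be expressed as a short function: each trial is labeled by the strategy the monkey actually used, and the cell's preferred strategy (defined from correct trials) decides which label counts as preferred. A minimal sketch for one neuron, with hypothetical names and a (strategy_used, rate) pair per trial:

```python
from statistics import mean


def preferred_minus_anti(trials, preferred):
    """Difference in mean rate (imp/s) between preferred and anti-preferred trials.

    trials is a list of (strategy_used, rate) pairs. Trials are grouped by the
    strategy the monkey actually used, not the one it should have used, so a
    positive value means the cell fired more when the monkey used its preferred
    strategy, i.e., it signaled the strategy used.
    """
    pref_rates = [rate for strategy, rate in trials if strategy == preferred]
    anti_rates = [rate for strategy, rate in trials if strategy != preferred]
    return mean(pref_rates) - mean(anti_rates)
```

Averaging this difference across cells gives population difference curves like those in the B panels; a negative value on error trials would mean the cell signaled the strategy that should have been used.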

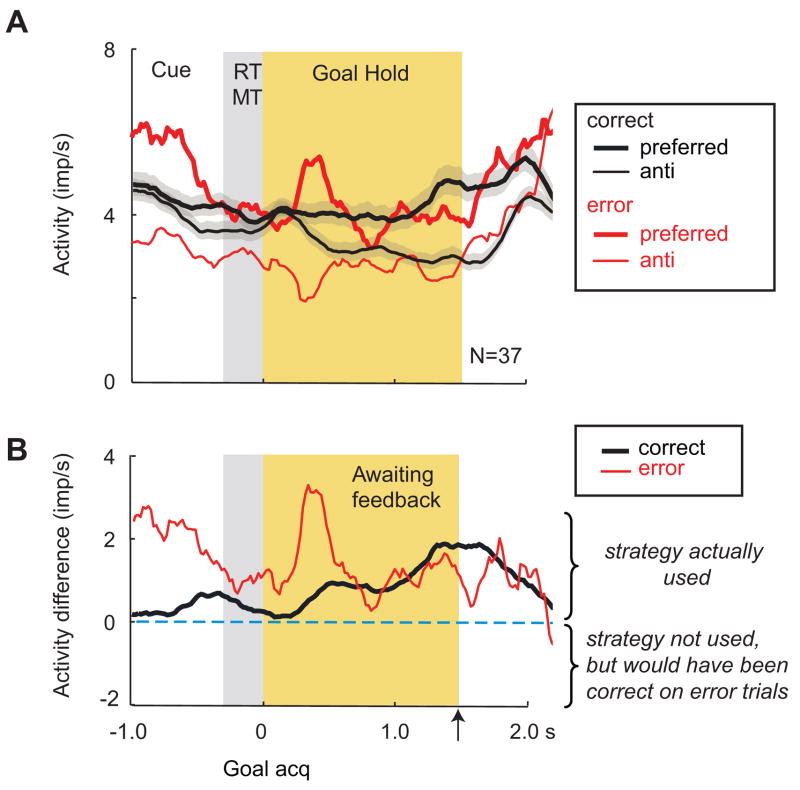

Figure 3.

Strategy selectivity prior to feedback. A. Population averages aligned on goal acquisition (acq), otherwise in format of Fig. 2A. Abbreviations: anti-, anti-preferred goal; MT, movement time; RT, reaction time; Rew, reward. Means were 3.8 error trials/cell (range 2–8) and 55.2 correct trials/cell (range 53–149). B. Difference in activity between preferred and anti-preferred strategies. Format as in Fig. 2B. The time of the reward/nonreward event is marked with the upward arrow.

In accord with this finding, error-trial activity during this period differed significantly from correct-trial activity (Fig. 2B, black vs. red lines, t=3.08, df=87, p=0.003), as it did in the normalized averages (t=5.40, df=87, p≪0.0001).

We also tested activity during portions of the cue period. For error trials (Fig. 2B, red line), the brief negative difference during the early part of the cue period (80–400 ms after cue onset) failed to reach statistical significance when tested separately (t=0.56, df=87, p=0.57, n.s.). The final 250 ms of the minimal cue period, aligned on cue onset, did differ from zero for error trials (t=1.97, df=87, p=0.03, uncorrected), but this difference was not significant after correction for multiple paired comparisons. There was no significant activity difference between correct and error trials during this time (t=0.58, df=87, p=0.56, n.s.).
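The text does not name the correction used for multiple paired comparisons. Purely as an illustration, and assuming a Bonferroni correction (not the authors' stated method), the threshold is alpha divided by the number of comparisons m; an uncorrected p=0.03 then survives only when m=1, because 0.05/2=0.025 is already below 0.03. The function name is hypothetical:

```python
def bonferroni_significant(p_values, alpha=0.05):
    """Flag each p-value as significant after a Bonferroni correction.

    The family-wise alpha is split evenly across len(p_values) comparisons.
    """
    threshold = alpha / len(p_values)
    return [p < threshold for p in p_values]
```

Other corrections (for example, Holm's step-down procedure) are less conservative but would also reject p=0.03 once two or more comparisons with smaller p-values are not involved.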

A strategy index was defined as the contrast ratio for preferred (p) versus anti-preferred (a) strategies: (p−a)/(p+a). The mean strategy index for correct trials was 0.35, which corresponds to preferred-strategy activity roughly 200% of anti-preferred activity, whereas for error trials the strategy index was 0.11, which corresponds to preferred-strategy activity only ~125% of anti-preferred activity (a 25% difference).
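The contrast-ratio index can be converted back into a ratio of rates. Solving SI = (p−a)/(p+a) for p/a gives SI(p+a) = p−a, so p(1−SI) = a(1+SI) and p/a = (1+SI)/(1−SI). A minimal sketch with hypothetical function names:

```python
def strategy_index(p, a):
    """Contrast ratio (p - a) / (p + a) for preferred (p) and anti-preferred (a) rates."""
    return (p - a) / (p + a)


def rate_ratio_from_index(si):
    """Invert si = (p - a) / (p + a) to recover p / a = (1 + si) / (1 - si)."""
    if not -1 < si < 1:
        raise ValueError("index must lie strictly between -1 and 1")
    return (1 + si) / (1 - si)
```

Here `rate_ratio_from_index(0.35)` is about 2.08 and `rate_ratio_from_index(0.11)` is about 1.25. Because the reported values are means of per-cell indices, these ratios describe a typical cell only approximately.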

Strategy coding prior to feedback

The immediate pre-reward period, late in the goal-hold period, consisted of the final 420 ms prior to the reward or nonreward feedback (Fig. 1A6; see also Genovesio et al., 2005; 2006). Of the 213 neurons showing a strategy effect during this period, 37 neurons had sufficient errors for the present analysis.
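From the task timing, feedback arrived 1.5 s after goal acquisition (1.0 s of target fixation, then 0.5 s after the targets filled), so the pre-reward window spans 1080–1500 ms after acquisition. A minimal sketch, assuming spike times aligned on goal acquisition and a half-open window; constant and function names are hypothetical:

```python
# Event timing relative to goal acquisition, taken from the task description.
GOAL_HOLD_MS = 1000       # fixation of the chosen square (Fig. 1A5)
FEEDBACK_DELAY_MS = 500   # targets fill, then reward or nonreward 0.5 s later
PRE_REWARD_MS = 420       # final 420 ms before feedback


def pre_reward_window(acquisition_ms=0):
    """Return (start, end) of the pre-reward window in ms."""
    feedback_ms = acquisition_ms + GOAL_HOLD_MS + FEEDBACK_DELAY_MS
    return feedback_ms - PRE_REWARD_MS, feedback_ms


def pre_reward_rate(spike_times_ms):
    """Mean rate (imp/s) in the pre-reward window; spikes in ms after goal acquisition."""
    start, end = pre_reward_window()
    n_spikes = sum(1 for t in spike_times_ms if start <= t < end)
    return n_spikes / ((end - start) / 1000.0)
```

The window ends at feedback, so activity in it cannot reflect reward or nonreward.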

Figure 3A shows separate population averages for each cell’s preferred (thick lines) and anti-preferred (thin lines) strategy, defined on the basis of correct trials (Genovesio et al., 2005). The difference between preferred and anti-preferred strategies on error trials (Fig. 3A, thick vs. thin red lines) was highly significant (t=7.46, df=36, p<0.0001), as, of course, was the analogous difference on correct trials (Fig. 3A, thick vs. thin black lines), as reported previously (Genovesio et al., 2005). These differences were also significant in the normalized averages (Suppl. Fig. 3, t=2.55, df=36, p=0.008).

On correct trials (Fig. 3B, black line), this population had an increasing difference between preferred and anti-preferred strategies, reaching a peak at approximately the time that feedback occurred, 1.5 s after goal acquisition. During the intertrial interval, this value decreased to nearly zero. On error trials (Fig. 3B, red line), the same population encoded the strategy that the monkeys had used, rather than the correct one. The mean activity difference between correct and error trials (Fig. 3B, red vs. black lines) was not statistically significant (t=1.02, df=36, p=0.31, n.s.), with similar results for the normalized averages (Suppl. Fig. 3, t=1.02, df=36, p=0.32, n.s.). The strategy index [(p−a)/(p+a)] also differed little: 0.26 and 0.18 for correct and error trials, respectively. [Note that the apparent difference between error and correct trials in the 800 ms immediately after goal acquisition resulted from an outlier and was not significant (t=1.43, df=36, p=0.16, n.s.)]. Our findings thus indicate that during the immediate pre-reward period, the strategy used was represented on both correct and error trials.

Spatial tuning for previous and future goals

We previously reported that, among neurons with significant spatial tuning, most showed selectivity for either the previous goal or the future goal (see Fig. 4A), but not both (Genovesio et al., 2006). From a population of 827 cells, 87 were selective for the previous (but not the future) goal (ANOVA, p<0.05), and 21 of these neurons had sufficient error data for the present analysis. A separate subpopulation of 84 cells was selective for the future (but not the previous) goal, and 30 of these could be analyzed.
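The text states only "ANOVA, p<0.05". As an assumption, the sketch below uses a one-way ANOVA across the three goal locations, run separately for the previous goal and for the future goal, and then classifies each cell from the two p-values. Function names are hypothetical:

```python
from statistics import mean


def one_way_anova_f(groups):
    """One-way ANOVA F statistic; groups holds one list of rates per goal location.

    Returns (F, df_between, df_within).
    """
    k = len(groups)
    n = sum(len(g) for g in groups)
    grand_mean = mean([x for g in groups for x in g])
    group_means = [mean(g) for g in groups]
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    df_between, df_within = k - 1, n - k
    return (ss_between / df_between) / (ss_within / df_within), df_between, df_within


def goal_selectivity(p_previous, p_future, alpha=0.05):
    """Classify a cell from its ANOVA p-values for the previous and the future goal."""
    previous, future = p_previous < alpha, p_future < alpha
    if previous and not future:
        return "previous-goal"
    if future and not previous:
        return "future-goal"
    return "both" if previous else "neither"
```

The p-value for each F can be obtained from the F distribution, for example with `scipy.stats.f.sf(F, df_between, df_within)`.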

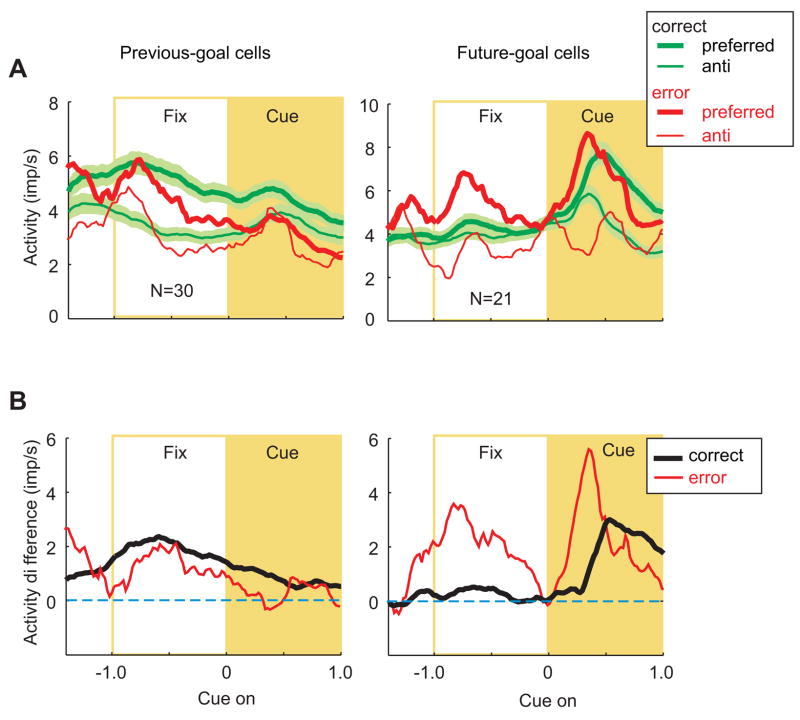

Figure 4.

Previous- and future-goal selectivity. A. Population averages for preferred goals (thick lines) and anti-preferred goals (thin lines). Mean discharge rate (bin width, 200 ms) for correct trials (green lines), with the standard error depicted by the shading, and for error trials (red lines). For both previous-goal cells (left) and future-goal cells (right), averages are aligned on cue onset. Abbreviations as in Figs. 2 and 3. B. Difference in activity between preferred and anti-preferred goals for correct (black) and error (red) trials.

As described previously (Genovesio et al., 2006), on correct trials the previous goal was well represented during the fixation period preceding cue onset (Fig. 4A and B, left). The previous goal had to be remembered during this period in order for the monkeys to successfully stay with or shift from their most recent goal. During trials in which the monkeys chose the wrong strategy (Fig. 4B, left, red line), the population of previous-goal cells showed no significant activity difference compared to correct trials (t=0.42, df=29, p=0.67, n.s.). The same result was obtained for normalized averages (Suppl. Fig. 4, t=0.32, df=20, p=0.76).

The future goal became well represented during the cue period for both correct and error trials (Fig. 4A and B, right). Following the convention used for the strategy signal (Figs. 2 and 3), we sorted the trials according to the goal that the monkeys eventually chose, rather than the one they should have chosen. Although one outlier cell caused an apparent difference between correct trials and error trials, they did not differ statistically (t=1.86, df=20, p=0.08, n.s.), with the same result being obtained for the normalized averages (Suppl. Fig. 4, t=0.42, df=29, p=0.68).

In both correct and error trials, these cells appeared to encode the goal that was chosen and not the correct one (see also Fig. 12 in Genovesio et al., 2006). Because of the high variance, however, the differences between preferred and anti-preferred goals on error trials (Fig. 4A, thick vs. thin red lines) did not reach statistical significance for the time periods tested.

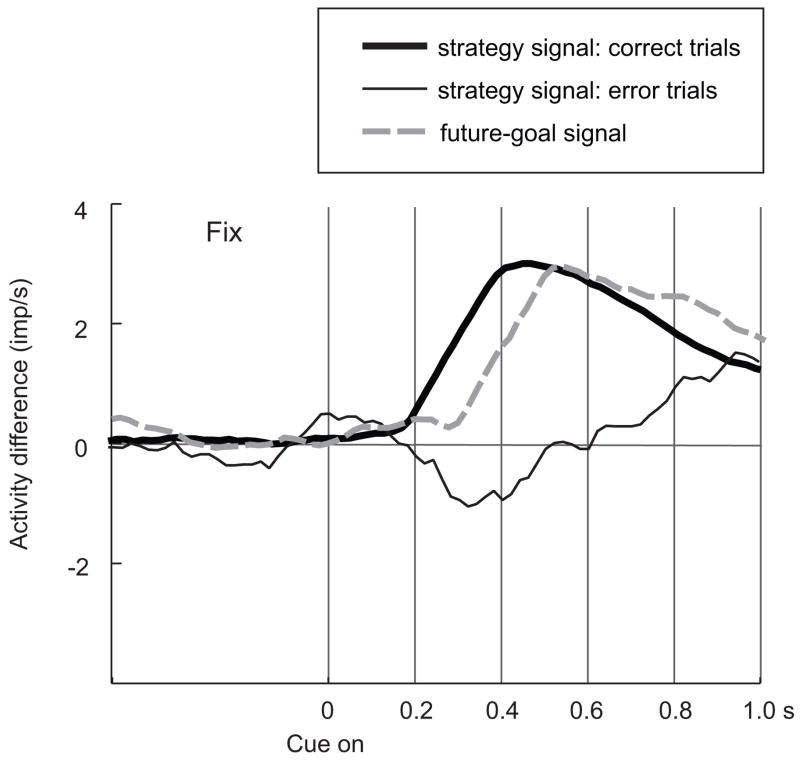

Figure 5 shows the relative time course for the strategy signal and the future-goal signal, from Figures 2B and 4B (right). Note that on correct trials, the future-goal signal developed shortly (~110 ms) after the strategy signal, within the first 200–400 ms after cue onset and long before the earliest trigger signal, which occurred no earlier than 1000 ms after cue onset. On error trials, although a strategy signal eventually developed toward the end of the minimal cue period, at the time that the network would normally produce the future-goal signal, the strategy signal remained weak or absent.

Figure 5.

Time course of strategy and future-goal signals. Format as in Fig. 2B, except as noted in key.

Discussion

The present study yielded two main findings: (1) the correct strategy was weakly represented, if at all, when the monkeys made strategic errors (Fig. 2); and (2) prior to receiving feedback, PF neurons encoded the strategy used, whether it was correct or incorrect (Fig. 3). Thus, on both correct and error trials, PF neurons encoded the actual strategy pursued while awaiting feedback (Fig. 3), but this differential strategy encoding was absent during the cue period of error trials (Fig. 2).

The first finding suggests a general cause of the monkeys’ strategic errors: They seem to be related to choosing the wrong strategy rather than failing to remember the previous goal. The specific cause of these errors cannot be addressed with the present data. Although we know that the monkeys continued to fixate throughout the 1-s period leading to cue onset, did not have long periods (e.g., at the end of recording sessions) when they became disengaged from the task (Suppl. Table 1), and seemed to retain the location of the previous goal in memory, we cannot rule out the possibility that the monkeys’ inattention to the cue led to errors in strategy. The second finding indicates that the strategy selected was still available after the response for monitoring functions (Owen et al., 1996; Rowe & Passingham 2001; Petrides et al., 2002; Rowe et al., 2002; Lau et al., 2004).

Interpretational issues

Interpretations of these results in terms of outcomes or knowledge of the correctness of a choice can be ruled out: in these time periods the monkeys had not yet received any feedback about their choice. Interpretations in terms of general arousal can be ruled out on similar grounds; there was no basis for differential arousal until after feedback arrived in the form of reward or nonreward. It is also implausible that the activity reported here represented error, per se, independent of strategy. In the present analysis we compared activity on certain error trials with that on other error trials (i.e., for preferred vs. anti-preferred strategies), rather than comparing correct-trial activity to error-trial activity without reference to neuronal preferences, as has commonly been done in the past. Task-set or rule-set accounts can be ruled out because the present task required the strategy to change from trial to trial; unlike in much previous work, no cue established a task set or rule set over a block of trials. Because the cues did not map uniquely to a given saccade or saccade target, accounts in terms of sensory and motor signals can also be eliminated.

Relation to previous studies

Several previous reports have examined whether, on error trials, neuronal activity reflected what the monkeys did or what they should have done. In a task involving perceptual decisions, PF activity reflected the monkey’s choice, even when wrong (Kim & Shadlen 1999). PF activity also correlated with choices that monkeys made in the test phase of a match-to-sample task for visual motion, unlike cells in visual cortex (the middle temporal area), which veridically reflected the sensory input (Zaksas & Pasternak 2006). Averbeck and Lee (2007) found that when monkeys executed incorrect sequences of actions, PF neuronal activity reflected the sequence planned rather than the correct one. At a more abstract level, Shima et al. (2007) reported that prior to erroneous sequential movements, the activity of PF neurons reflected the category of sequence that their monkeys eventually executed, rather than the kind of sequence the monkeys should have performed. Similarly, in our task, as the monkeys awaited feedback, the population’s activity reflected the strategy used rather than the correct one. The present findings thus extend previous results on the perception of stimuli, motor responses, and movement sequences to the choice of an abstract response strategy, at least for the period in which the monkeys awaited feedback.

Mansouri et al. (2006) recorded PF activity in monkeys performing a task modeled on the Wisconsin card sorting task (WCST), which involved changing rules from one block of trials to the next. They found a maintained representation of the correct, current rule on error trials. In contrast with their result, we found that, during the period of cue presentation, the representation of the correct strategy was lost in PF (Fig. 2B). Unlike the WCST and related rule-switching tasks, the present task cannot be performed by maintaining a rule or strategy in short-term memory across trials, because the strategy changes from trial to trial. Perhaps this aspect of our task accounts for the discrepancy. Johnston and Everling (2006), however, obtained results similar to ours using a block design, which required monkeys to remember the task to be performed within each block of trials. Comparing activity in delayed matching-to-sample and conditional motor tasks, Johnston and Everling found that PF neurons were modulated by task context on correct trials, but that this effect was lost on error trials.

In summary, whereas representations of goals, actions, and movement sequences reflect what the monkeys actually do, however erroneously, representations of more abstract principles guiding behavior, such as strategies, rules, or task context, often deteriorate in PF when errors occur, especially at the times when decisions must be made on the basis of those abstractions (Fig. 5).

Monitoring

In contrast to the loss of the strategy signal during the cue period, PF activity reflected the strategy used as the monkeys awaited feedback (Fig. 3). This information could be useful for monitoring performance in order to maintain strategies or change them in the future, as appropriate.

Monitoring functions have often been attributed to the medial frontal cortex. The rostral cingulate motor area, for example, has been reported to participate in monitoring individual behavioral events (Hoshi et al., 2005). Oculomotor and medial frontal areas such as the supplementary eye field and the anterior cingulate cortex (ACC) are thought to play a role in monitoring responses in tasks requiring behavioral inhibition (Stuphorn et al., 2000; Ito et al., 2003; Stuphorn & Schall 2006). Walton et al. (2004), likewise, found activation in the dorsal ACC when subjects selected their actions, as opposed to when someone else selected them, which requires less monitoring of past actions. Also based on neuroimaging results, Carter et al. (1998) concluded that the ACC detects conditions under which errors are likely to occur rather than the errors per se. Detection of such conditions may lead to enhanced monitoring.

Despite the focus on medial frontal cortex in monitoring, other parts of the frontal lobe also play a role (Owen et al., 1996; Stern et al., 2000). Lesions of mid-dorsolateral PF yield deficits when people monitor their choices or the choices made by others (Petrides 2005), and neuroimaging activations have been found there in such tasks (Curtis et al., 2000; Petrides et al., 2002). Similarly, Gehring and Knight (2000) concluded that lateral PF interacted with ACC in monitoring behavior and in guiding systems that compensate for errors. In addition to neuroimaging and neuropsychological results, neurophysiological evidence also points to a role for more lateral parts of PF in monitoring. There is ample evidence, for example, that PF neurons modulate their activity according to task context. Such context-dependent modulation, which effectively represents what a monkey recently did or is currently doing, could play an important role in monitoring behaviors, such as those guided by a rule (Asaad et al., 2000) or a predicted outcome (Watanabe et al., 2002). For example, the reward-related activity of PF neurons showed modulation that depended on the direction of a prior saccade (Tsujimoto & Sawaguchi 2004). When the same reward occurred during periods of steady fixation, those cells lacked such position-dependency, even for fixation of the same location. Much as the strategy signal shown in Figure 3 could be used to monitor what the monkey had done in order to guide future strategies, a signal that reflected recent actions as well as outcomes (Tsujimoto & Sawaguchi 2004) could be used to monitor recent decisions in order to guide the selection of future ones (Lee & Seo 2007).

The present result shows that PF neurons, including those in dorsolateral PF, have a neural signal that provides the information needed for monitoring behavior at an abstract level, one involving knowledge about which strategy the monkeys used on a given trial. Whether and how such information is used in monitoring remains an issue for future investigation.

Overview of PF function

PF performs several cognitive functions, which have so far defied an elegant summary. Neurophysiological studies indicate that PF neurons contribute to the prospective coding of objects (Rainer et al., 1999) and places (Hasegawa et al., 1998; Fukushima et al., 2004; Genovesio et al., 2006) that will be future goals, and that PF cells encode both remembered (Fuster & Alexander 1971; Funahashi et al., 1989; Quintana & Fuster 1993; Rao et al., 1997; Rainer et al., 1998) and attended (Miller et al., 1996; Lebedev et al., 2004) objects and places. These functions, however, have been difficult to relate to many others: the categorization of objects (Freedman et al., 2002), sounds (Cohen et al., 2006) or action sequences (Shima et al., 2007), monitoring and affecting the sequence of events (Quintana & Fuster 1999; Averbeck et al., 2002; Ninokura et al., 2003; 2004; Hoshi & Tanji 2004), and the selection of task rules (Hoshi et al., 1998; Asaad et al., 2000), among others. Almost all of these findings have their counterparts for PF in humans, as revealed by lesion and neuroimaging studies, yet a unifying theme has been difficult to articulate.

Perhaps a synthesis concerning PF functions can center around behavior-guiding rules and strategies (White & Wise 1999; Hoshi et al., 2000; Asaad et al., 2000; Wallis & Miller 2003; Barraclough et al., 2004; Genovesio et al., 2005). According to this view, PF encodes knowledge about behavior (Wood & Grafman 2003), by analogy with the idea that inferotemporal and perirhinal cortex encode knowledge about objects. This knowledge includes previously encountered sequences of events and decisions, decisions that have produced identifiable outcomes in the past, together with an evaluation of the current value of those outcomes, and a repertoire of behavior-guiding rules and problem-solving strategies (Owen et al., 1990; 1996; Collins et al., 1998; Rushworth et al., 2002; Gaffan et al., 2002; Bunge et al., 2003; Bunge 2004).

Supplementary Material

Acknowledgments

We thank Mr. Alex Cummings for the histological material, Mr. James Fellows for help training the animals, Dr. Peter Brasted for numerous contributions, and Dr. Andrew Mitz for assistance with data analysis and engineering. This research was supported by the Intramural Research Program of the National Institutes of Health, NIMH (Z01MH-01092-28). S.T. was supported by a research fellowship from the Japan Society for the Promotion of Science.

Abbreviations

- ACC

anterior cingulate cortex

- ANOVA

analysis of variance

- ASCII

American Standard Code for Information Interchange

- PF

prefrontal cortex

- WCST

Wisconsin card sorting task

References

- Asaad WF, Rainer G, Miller EK. Task-specific neural activity in the primate prefrontal cortex. J Neurophysiol. 2000;84:451–459. doi: 10.1152/jn.2000.84.1.451.

- Averbeck BB, Chafee MV, Crowe DA, Georgopoulos AP. Parallel processing of serial movements in prefrontal cortex. Proc Natl Acad Sci USA. 2002;99:13172–13177. doi: 10.1073/pnas.162485599.

- Averbeck BB, Lee D. Prefrontal neural correlates of memory for sequences. J Neurosci. 2007;27:2204–2211. doi: 10.1523/JNEUROSCI.4483-06.2007.

- Barraclough DJ, Conroy ML, Lee D. Prefrontal cortex and decision making in a mixed-strategy game. Nat Neurosci. 2004;7:404–410. doi: 10.1038/nn1209.

- Bunge SA. How we use rules to select actions: A review of evidence from cognitive neuroscience. Cogn Affect Behav Neurosci. 2004;4:564–579. doi: 10.3758/cabn.4.4.564.

- Bunge SA, Kahn I, Wallis JD, Miller EK, Wagner AD. Neural circuits subserving the retrieval and maintenance of abstract rules. J Neurophysiol. 2003;90:3419–3428. doi: 10.1152/jn.00910.2002.

- Carter CS, Braver TS, Barch DM, Botvinick MM, Noll D, Cohen JD. Anterior cingulate cortex, error detection, and the online monitoring of performance. Science. 1998;280:747–749. doi: 10.1126/science.280.5364.747.

- Cohen YE, Hauser MD, Russ BE. Spontaneous processing of abstract categorical information in the ventrolateral prefrontal cortex. Biol Lett. 2006;2:261–265. doi: 10.1098/rsbl.2005.0436.

- Collins P, Roberts AC, Dias R, Everitt BJ, Robbins TW. Perseveration and strategy in a novel spatial self-ordered sequencing task for nonhuman primates: Effects of excitotoxic lesions and dopamine depletions of the prefrontal cortex. J Cogn Neurosci. 1998;10:332–354. doi: 10.1162/089892998562771.

- Curtis CE, Zald DH, Pardo JV. Organization of working memory within the human prefrontal cortex: a PET study of self-ordered object working memory. Neuropsychologia. 2000;38:1503–1510. doi: 10.1016/s0028-3932(00)00062-2.

- Freedman DJ, Riesenhuber M, Poggio T, Miller EK. Visual categorization and the primate prefrontal cortex: Neurophysiology and behavior. J Neurophysiol. 2002;88:929–941. doi: 10.1152/jn.2002.88.2.929.

- Fukushima T, Hasegawa I, Miyashita Y. Prefrontal neuronal activity encodes spatial target representations sequentially updated after nonspatial target-shift cues. J Neurophysiol. 2004;91:1367–1380. doi: 10.1152/jn.00306.2003.

- Funahashi S, Bruce CJ, Goldman-Rakic PS. Mnemonic coding of visual space in the monkey’s dorsolateral prefrontal cortex. J Neurophysiol. 1989;61:331–349. doi: 10.1152/jn.1989.61.2.331.

- Fuster JM, Alexander GE. Neuron activity related to short-term memory. Science. 1971;173:652–654. doi: 10.1126/science.173.3997.652.

- Gaffan D, Easton A, Parker A. Interaction of inferior temporal cortex with frontal cortex and basal forebrain: Double dissociation in strategy implementation and associative learning. J Neurosci. 2002;22:7288–7296. doi: 10.1523/JNEUROSCI.22-16-07288.2002.

- Gehring WJ, Knight RT. Prefrontal-cingulate interactions in action monitoring. Nat Neurosci. 2000;3:516–520. doi: 10.1038/74899.

- Genovesio A, Brasted PJ, Mitz AR, Wise SP. Prefrontal cortex activity related to abstract response strategies. Neuron. 2005;47:307–320. doi: 10.1016/j.neuron.2005.06.006.

- Genovesio A, Brasted PJ, Wise SP. Representation of future and previous spatial goals by separate neural populations in prefrontal cortex. J Neurosci. 2006;26:7281–7292. doi: 10.1523/JNEUROSCI.0699-06.2006.

- Hasegawa R, Sawaguchi T, Kubota K. Monkey prefrontal neuronal activity coding the forthcoming saccade in an oculomotor delayed matching-to-sample task. J Neurophysiol. 1998;79:322–333. doi: 10.1152/jn.1998.79.1.322.

- Hoshi E, Shima K, Tanji J. Neuronal activity in the primate prefrontal cortex in the process of motor selection based on two behavioral rules. J Neurophysiol. 2000;83:2355–2373. doi: 10.1152/jn.2000.83.4.2355.

- Hoshi E, Shima K, Tanji J. Task-dependent selectivity of movement-related neuronal activity in the primate prefrontal cortex. J Neurophysiol. 1998;80:3392–3397. doi: 10.1152/jn.1998.80.6.3392.

- Hoshi E, Tanji J. Area-selective neuronal activity in the dorsolateral prefrontal cortex for information retrieval and action planning. J Neurophysiol. 2004;91:2707–2722. doi: 10.1152/jn.00904.2003.

- Hoshi E, Sawamura H, Tanji J. Neurons in the rostral cingulate motor area monitor multiple phases of visuomotor behavior with modest parametric selectivity. J Neurophysiol. 2005;94:640–656. doi: 10.1152/jn.01201.2004.

- Ito S, Stuphorn V, Brown JW, Schall JD. Performance monitoring by the anterior cingulate cortex during saccade countermanding. Science. 2003;302:120–122. doi: 10.1126/science.1087847.

- Johnston K, Everling S. Neural activity in monkey prefrontal cortex is modulated by task context and behavioral instruction during delayed-match-to-sample and conditional prosaccade-antisaccade tasks. J Cogn Neurosci. 2006;18:749–765. doi: 10.1162/jocn.2006.18.5.749.

- Kim JN, Shadlen MN. Neural correlates of a decision in the dorsolateral prefrontal cortex of the macaque. Nat Neurosci. 1999;2:176–185. doi: 10.1038/5739.

- Lau HC, Rogers RD, Ramnani N, Passingham RE. Willed action and attention to the selection of action. Neuroimage. 2004;21:1407–1415. doi: 10.1016/j.neuroimage.2003.10.034.

- Lebedev MA, Messinger A, Kralik JD, Wise SP. Representation of attended versus remembered locations in prefrontal cortex. PLoS Biol. 2004;2:1919–1935. doi: 10.1371/journal.pbio.0020365.

- Lee D, Seo H. Mechanisms of reinforcement learning and decision making in the primate dorsolateral prefrontal cortex. Ann NY Acad Sci. 2007;1104:108–122. doi: 10.1196/annals.1390.007.

- Mansouri FA, Matsumoto K, Tanaka K. Prefrontal cell activities related to monkeys’ success and failure in adapting to rule changes in a Wisconsin Card Sorting Test analog. J Neurosci. 2006;26:2745–2756. doi: 10.1523/JNEUROSCI.5238-05.2006.

- Miller EK, Erickson CA, Desimone R. Neural mechanisms of visual working memory in prefrontal cortex of the macaque. J Neurosci. 1996;16:5154–5167. doi: 10.1523/JNEUROSCI.16-16-05154.1996.

- Murray EA, Wise SP. Role of the hippocampus plus subjacent cortex but not amygdala in visuomotor conditional learning in rhesus monkeys. Behav Neurosci. 1996;110:1261–1270. doi: 10.1037//0735-7044.110.6.1261.

- Ninokura Y, Mushiake H, Tanji J. Representation of the temporal order of visual objects in the primate lateral prefrontal cortex. J Neurophysiol. 2003;89:2868–2873. doi: 10.1152/jn.00647.2002.

- Ninokura Y, Mushiake H, Tanji J. Integration of temporal order and object information in the monkey lateral prefrontal cortex. J Neurophysiol. 2004;91:555–560. doi: 10.1152/jn.00694.2003.

- Owen AM, Downes JJ, Sahakian BJ, Polkey CE, Robbins TW. Planning and spatial working memory following frontal lobe lesions in man. Neuropsychologia. 1990;28:1021–1034. doi: 10.1016/0028-3932(90)90137-d.

- Owen AM, Evans AC, Petrides M. Evidence for a two-stage model of spatial working memory processing within the lateral frontal cortex: A positron emission tomography study. Cereb Cortex. 1996;6:31–38. doi: 10.1093/cercor/6.1.31.

- Petrides M. Lateral prefrontal cortex: architectonic and functional organization. Phil Trans R Soc Lond B Biol Sci. 2005;360:781–795. doi: 10.1098/rstb.2005.1631.

- Petrides M, Alivisatos B, Frey S. Differential activation of the human orbital, midventrolateral, and mid-dorsolateral prefrontal cortex during the processing of visual stimuli. Proc Natl Acad Sci USA. 2002;99:5649–5654. doi: 10.1073/pnas.072092299.

- Quintana J, Fuster JM. Spatial and temporal factors in the role of prefrontal and parietal cortex in visuomotor integration. Cereb Cortex. 1993;3:122–132. doi: 10.1093/cercor/3.2.122.

- Quintana J, Fuster JM. From perception to action: Temporal integrative functions of prefrontal and parietal neurons. Cereb Cortex. 1999;9:213–221. doi: 10.1093/cercor/9.3.213.

- Rainer G, Asaad WF, Miller EK. Memory fields of neurons in the primate prefrontal cortex. Proc Natl Acad Sci USA. 1998;95:15008–15013. doi: 10.1073/pnas.95.25.15008.

- Rainer G, Rao SC, Miller EK. Prospective coding for objects in primate prefrontal cortex. J Neurosci. 1999;19:5493–5505. doi: 10.1523/JNEUROSCI.19-13-05493.1999.

- Rao SC, Rainer G, Miller EK. Integration of what and where in the primate prefrontal cortex. Science. 1997;276:821–824. doi: 10.1126/science.276.5313.821.

- Rowe J, Friston K, Frackowiak R, Passingham R. Attention to action: Specific modulation of corticocortical interactions in humans. Neuroimage. 2002;17:988–998.

- Rowe JB, Passingham RE. Working memory for location and time: activity in prefrontal area 46 relates to selection rather than maintenance in memory. Neuroimage. 2001;14:77–86. doi: 10.1006/nimg.2001.0784.

- Rushworth MFS, Hadland KA, Paus T, Sipila PK. Role of the human medial frontal cortex in task switching: A combined fMRI and TMS study. J Neurophysiol. 2002;87:2577–2592. doi: 10.1152/jn.2002.87.5.2577.

- Shima K, Isoda M, Mushiake H, Tanji J. Categorization of behavioural sequences in the prefrontal cortex. Nature. 2007;445:315–318. doi: 10.1038/nature05470.

- Stern CE, Owen AM, Tracey I, Look RB, Rosen BR, Petrides M. Activity in ventrolateral and mid–dorsolateral prefrontal cortex during nonspatial visual working memory processing: Evidence from functional magnetic resonance imaging. Neuroimage. 2000;11:392–399. doi: 10.1006/nimg.2000.0569.

- Stuphorn V, Schall JD. Executive control of countermanding saccades by the supplementary eye field. Nat Neurosci. 2006;9:925–931. doi: 10.1038/nn1714.

- Stuphorn V, Taylor TL, Schall JD. Performance monitoring by the supplementary eye field. Nature. 2000;408:857–860. doi: 10.1038/35048576.

- Tsujimoto S, Sawaguchi T. Neuronal representation of response-outcome in the primate prefrontal cortex. Cereb Cortex. 2004;14:47–55. doi: 10.1093/cercor/bhg090.

- Wallis JD, Miller EK. From rule to response: Neuronal processes in the premotor and prefrontal cortex. J Neurophysiol. 2003;90:1790–1806. doi: 10.1152/jn.00086.2003.

- Walton ME, Devlin JT, Rushworth MF. Interactions between decision making and performance monitoring within prefrontal cortex. Nat Neurosci. 2004;7:1259–1265. doi: 10.1038/nn1339.

- Watanabe M, Hikosaka K, Sakagami M, Shirakawa S. Coding and monitoring of motivational context in the primate prefrontal cortex. J Neurosci. 2002;22:2391–2400. doi: 10.1523/JNEUROSCI.22-06-02391.2002.

- White IM, Wise SP. Rule-dependent neuronal activity in the prefrontal cortex. Exp Brain Res. 1999;126:315–335. doi: 10.1007/s002210050740.

- Wise SP, Murray EA. Role of the hippocampal system in conditional motor learning: mapping antecedents to action. Hippocampus. 1999;9:101–117. doi: 10.1002/(SICI)1098-1063(1999)9:2<101::AID-HIPO3>3.0.CO;2-L.

- Wood JN, Grafman J. Human prefrontal cortex: processing and representational perspectives. Nat Rev Neurosci. 2003;4:139–147. doi: 10.1038/nrn1033.

- Zaksas D, Pasternak T. Directional signals in the prefrontal cortex and in area MT during a working memory for visual motion task. J Neurosci. 2006;26:11726–11742. doi: 10.1523/JNEUROSCI.3420-06.2006.
