Abstract

This study used item response theory (IRT) to examine the extent to which criminal justice facilities and community-based agencies are using evidence-based substance abuse treatment practices (EBPs), which EBPs are most commonly used, and how EBPs cluster together. The study used data collected from wardens, justice administrators, and treatment directors as part of the National Criminal Justice Treatment Practices survey (NCJTP; Taxman et al., 2007a), and includes both adult criminal and juvenile justice samples. Results of Rasch modeling demonstrated that a reliable measure can be formed to gauge the extent to which juvenile and adult correctional facilities, and community treatment agencies serving offenders, have adopted various treatment practices supported by research. We also demonstrated the concurrent validity of the measure by showing that features of the facilities’ organizational contexts were associated with the extent to which facilities were using EBPs, and which EBPs they were using. Researchers, clinicians, and program administrators may find these results interesting not only because they show the program factors most strongly related to EBP use, but also because they suggest that certain treatment practices tend to cluster together, which may help stakeholders plan and prioritize the adoption of new EBPs in their facilities. The study has implications for future research focused on understanding the adoption and implementation of EBPs in correctional environments.

Keywords: Item response theory, Rasch model, substance abuse, criminal justice, evidence-based practice

1. Introduction

The criminal justice system has emerged as a primary service delivery system for nearly 9 million adults and adolescents facing challenges of drug and alcohol abuse, mental illness, and other service needs (National Institute of Justice, 2003; Taxman et al., 2007b; Young et al., 2007). The overwhelming needs of the population, compounded by accompanying public safety and health issues, have spurred a growing body of research focused on service delivery within the criminal and juvenile justice systems. Central to this research is an interest in characteristics of programs, services, and systems that address the goals of reducing crime and improving public health and social productivity. Service provision for this population is complicated; increasingly, programs are subject to the high standard of achieving each of these multifaceted goals.

Recently, a focus on evidence-based practices (EBPs) has provided a new perspective on the value of treatment services--along with optimism for achieving it--as a means of protecting society by reducing the recidivism rates of offenders, while at the same time improving the quality of life and social productivity of individual offenders. Consequently, researchers, service providers, and policy-makers have devoted much attention to identifying correctional and/or treatment practices that have research support for improving the health of offenders and lowering recidivism. However, enthusiasm for programs adopting EBPs has run ahead of the development of psychometrically strong and clinically useful assessment tools for evaluating EBP use and ultimately the effectiveness of the EBPs programs are using.

This paper applies state-of-the-art item response theory (IRT) methods to examine the extent to which programs that serve the offender population utilize EBPs and which EBPs are most frequently used. The data were collected from a recent national survey of substance abuse treatment practices in the adult and juvenile justice systems (Taxman et al., 2007a). Using this data source, Friedmann et al. (2007) and Henderson et al. (2007) showed that these programs varied considerably in the number and types of EBPs they were using, and that organizational variables were associated with the extent to which EBPs were in place. However, because these studies used an inventory approach to assessing EBP use (i.e., taking a simple sum of items), the measurement properties of their dependent variable were limited in important ways that restricted the conclusions that could be drawn. The two existing studies provided a substantively crude thumbnail sketch of EBP use without being able to address the important questions of which EBPs were most difficult to implement and how programs go about implementing EBPs. A key question remains unanswered: do programs tend to implement EBPs sequentially, or do they tend to implement some EBPs in clusters, as suggested by Knudsen et al. (2007) in their recent study of private sector substance abuse organizations? The primary objectives of this paper are to address these limitations by illustrating: (1) how modern measurement analytical tools (i.e., the Rasch model) can provide a stronger assessment of EBP use in corrections agencies and community agencies serving offenders, and (2) how a more thorough assessment of EBP use can contribute to a greater understanding of EBP adoption patterns, which may foster better research-to-practice transportation models.
We hypothesized that there would be systematic differences in the EBPs that were more versus less widely used, and that these differences would be partially explained by organizational characteristics. Further, following Knudsen et al. (2007), we hypothesized that the pattern of EBPs programs were using would indicate that some EBPs reliably clustered together.

1.1 Defining Evidence-Based Practices (EBPs)

The Institute of Medicine has defined EBP as “the integration of best research evidence with clinical expertise and patient values” (Institute of Medicine, 2001, p. 147). Scientists use several tools to identify and articulate clinical practices designed to reach these goals. One technique that remains popular is a consensus-driven model in which scientists, often along with clinicians and practitioners, join together to review research findings and practice. Through a deliberative process of compiling, reviewing, and evaluating findings from various research studies and also integrating clinical experience, the consensus panel develops a series of recommendations regarding treatment practices with the best empirical and clinical support. These are frequently called “best practices,” with prime examples being the National Institute on Drug Abuse’s (NIDA) Principles of Drug Abuse Treatment for Criminal Justice Populations: A Research Based Guide (National Institute on Drug Abuse, 2006), and Drug Strategies’ Bridging the Gap: A Guide to Drug Treatment in the Juvenile Justice System (2005).

Consensus panels have been criticized for being selective and/or biased in their review of research literature, and typically some recommendations have greater empirical support than others (Barlow, 2004; Miller et al., 2005). Meta-analysis has arisen as a more objective, deliberative strategy for identifying EBPs based on an accumulation of empirical evidence across studies (Farrington and Welsh, 2005). Treatment practices associated with the largest effect sizes with respect to important outcome variables (e.g., recidivism, substance use) are then established as EBPs. Results from meta-analyses have been used to identify and describe programs, services, and system features that reliably achieve improved offender outcomes (Landenberger and Lipsey, 2005; Lipsey and Wilson, 1998, 2001; Farrington and Welsh, 2005).

A synthesis of nearly two decades of consensus reviews and meta-analyses has painted an increasingly detailed picture of the nature of EBPs in adult and juvenile correctional settings. Several key features comprise this picture, which we summarize as: (1) specific treatment orientations that have been successful (e.g., cognitive-behavioral, therapeutic community, and family-based treatments); (2) effective re-entry services designed to build upon initial treatment gains as well as integrated services provided by the justice and treatment systems; (3) the use of sanctions and incentives to improve program retention; (4) interventions to engage the offender in treatment services and motivate him/her for change; (5) treatment of sufficient duration and intensity to produce change (typically defined as 90 days or longer, Simpson et al., 1999); (6) quality review designed to monitor treatment progress and outcomes; (7) family involvement in treatment; (8) assessment practices, particularly the use of standardized substance abuse screening tools; (9) comprehensive services that address co-occurring medical and psychiatric disorders; and (10) qualified staff delivering treatment (Brannigan et al., 2004; Knudsen and Roman, 2004; Landenberger and Lipsey, 2006; Mark et al., 2006; National Institute on Drug Abuse, 2006; Taxman, 1998). In addition, research with juvenile offenders has indicated that the services must appropriately address key developmental themes of adolescence (Dennis et al., 2003; Drug Strategies, 2005; Grella, 2006).

1.2 Organizational Factors Related to Adoption of Evidence-Based Practices

Everett Rogers’ (2003) diffusion of innovations theory has been extremely influential in understanding the lag between the development of innovations (in our case EBPs) and their adoption in practice. Rogers defines diffusion as “the process by which an innovation is communicated…over time among the members of a social system” (p. 5), with innovations consisting of new ideas or ways of achieving functional activities. Integrating a broad, interdisciplinary literature on organizational innovation, Rogers proposes that innovative organizations tend to be characterized as having: (1) leaders that have positive attitudes toward new developments, (2) less centralized authority structures, (3) members with a high level of knowledge and expertise (i.e., good training), (4) less formal rules and procedures for carrying out activities, (5) opportunities for organizational members to share ideas, (6) available funding and resources for implementing innovations, (7) large size (which Rogers characterizes as a proxy for such elements as resources and technical expertise), and (8) a high degree of linkage to other individuals external to the organizational system. There is an emerging literature, conducted primarily on community substance abuse treatment programs, which indicates that a similar array of organizational factors is related to programs’ adoption and utilization of EBPs; these factors include: (a) organizational structure (Backer et al., 1986; Knudsen et al., 2006; Roman and Johnson, 2002); (b) organizational climate (Aarons and Sawitzky, 2006; Glisson, 2002; Glisson and Hemmelgarn, 1998; Lehman et al., 2002); (c) training opportunities (Brown and Flynn, 2002; Knudsen et al., 2005); (d) resource adequacy (Lehman et al., 2002; Simpson, 2002; Stirman et al., 2004); (e) network connectedness (Knudsen and Roman, 2004); and (f) administrator and staff attitudes (Knudsen et al., 2005; Liddle et al., 2002; Schmidt and Taylor, 2002).

In terms of research in the criminal justice system, Friedmann et al. (2007) found that adult offender treatment programs that provided more EBPs were community-based, accredited, and integrated with various agencies, with a performance-oriented, non-punitive culture and more training resources. The organizations also tended to be led by individuals with a background in human services, a high regard for the value of substance abuse treatment, and a greater understanding of EBPs identified in the criminal justice and substance abuse treatment literature. In a companion paper on juvenile offenders, Henderson et al. (2007) found that programs that provided more EBPs were community-based, had more extensive networking connections, and received more support for new programs and training opportunities. The organizational culture of these agencies is defined by an emphasis on performance quality and by leaders who understand that public safety and health issues are intertwined.

1.3 Limitations of Current Approaches to Assessing Adoption of Evidence-Based Practices

Although previous research has provided greater understanding of the organizational factors influencing the adoption of evidence-based practice, there are some common limitations to this research as well. First, investigators employ different measures of adoption that are not comparable, and some are limited to respondents’ self-reports of their intentions to adopt EBPs (Miller et al., 2006; Simpson, 2002). Second, the research has either focused on the adoption of single innovations, which presumably serves as a proxy for the organization’s capability or motivation to adopt other innovations, or employed an inventory approach, in which programs receive a “score” based on a simple count of practices they have adopted (e.g., Roman and Johnson, 2002; Knudsen and Roman, 2004). The inventory approach is limited in treating all practices as equally important and equally likely to be adopted, and it assumes that all practices have the same value to the organization.

In contrast, the IRT approach we take in this paper mathematically models the likelihood that programs are using various EBPs along an underlying continuous dimension of EBP use. More widely used EBPs receive lower scores, less used EBPs receive higher scores, and EBPs approximately equally used receive similar scores. Likewise programs using more EBPs receive higher scores, and those using fewer receive lower scores on the underlying dimension of EBP use. In sum, the IRT approach represents a significant advance over more commonly used methods, as it provides a much more precise meaning of the measurement of EBP use, as well as allowing consumers of the research to understand: (1) which EBPs are most difficult for programs to adopt, and (2) which EBPs may be most amenable to being adopted, given a facility’s current pattern of EBP use. The current study applies Rasch modeling with data from nationally representative surveys of substance abuse treatment practices in the adult and juvenile justice systems (Taxman et al., 2007a) to produce continuous measures (adult and juvenile) of the extent to which such agencies are using EBPs.

1.4 Rasch Modeling

Rasch modeling (Rasch, 1960) improves on previous investigations of EBP adoption by using a model-based approach to develop scales with strong measurement properties including greater generalizability and improved accuracy and statistical validity (Cole et al., 2004; Embretson and Reise, 2000; Hambleton et al., 1991). Rasch modeling provides a number of advantages over traditional methods of scale development (Embretson and Reise, 2000), two of which we describe in detail here. First, a Rasch analysis will independently scale items (EBPs in our case) and persons (programs in our case) along a continuous, intervally scaled latent trait (in our case self-reported extent of EBP use). Therefore, when data show adequate fit to the Rasch model, the underlying trait will be measured on a true interval scale (Bond and Fox, 2001). This property of Rasch measures has obvious advantages in statistical analyses such as multiple regression that assume the data are intervally measured. Second, because the Rasch analysis will independently estimate EBP difficulty (or probability that a given EBP is used) and a program’s score on the latent trait, Rasch-derived measures (in contrast to measures derived by methods other than IRT) are also considered sample-independent (Embretson and Reise, 2000). Therefore, the measurement properties are more likely to generalize to other similar populations. In explicating this point, Bond and Fox (2001) use the metaphor of a yard stick, which has objective value for measuring the length of a variety of objects irrespective of the nature of the objects themselves. Rasch-derived scales possess the same objectivity, and therefore, the measure’s usefulness is not constrained by the sample on which it was standardized.
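For readers less familiar with the model, the dichotomous Rasch model is conventionally written as shown below. The notation is introduced here for illustration only and is an assumption on our part: \(\theta_n\) denotes the location of program \(n\) on the latent trait of EBP use, \(\delta_i\) denotes the difficulty of EBP \(i\), and \(X_{ni} = 1\) indicates that program \(n\) reports using EBP \(i\).

```latex
\[
P(X_{ni} = 1 \mid \theta_n, \delta_i)
  = \frac{\exp(\theta_n - \delta_i)}{1 + \exp(\theta_n - \delta_i)},
\qquad
\ln \frac{P(X_{ni} = 1)}{1 - P(X_{ni} = 1)} = \theta_n - \delta_i .
\]
```

Because the log odds of use depend only on the difference between the program and EBP parameters, both are expressed on a common logit scale, which is the sense in which items and programs are scaled along the same continuum.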

2. Methods

The National Criminal Justice Treatment Practices (NCJTP) survey is a multilevel survey designed to assess state and local adult and juvenile justice systems in the United States. The primary goals of the survey are to examine organizational factors that affect substance abuse treatment practices in correctional settings as well as to describe available programs and services. The NCJTP survey solicited information from diverse sources ranging from executives of state criminal justice and substance abuse agencies to staff working in correctional facilities and drug treatment programs. Details of the study samples and survey methodology are provided in Taxman et al. (2007a). The present study analyzes findings on use of EBPs from surveys of administrators of correctional facilities and directors of substance abuse treatment programs serving adult and juvenile offenders in both secure facilities and non-secure community settings.

2.1 Sample and Procedure

The survey obtained representative samples of adult prisons, juvenile residential facilities, and community corrections agencies using a two-stage stratification scheme (first counties then facilities located within counties) that utilizes region of the country and size of the facility (or jurisdictions in the case of the community corrections sample) as stratification variables. We report sample sizes and response rates for four targeted populations: (1) a sample of corrections administrators in the adult criminal justice system (the adult correctional sample; n = 302, response rate = 70%), (2) a sample of corrections administrators in the juvenile justice system (the juvenile correctional sample; n = 141, response rate = 65%), (3) a sample of treatment directors either located in corrections facilities or community treatment agencies, both providing services to adult offenders (the adult treatment director sample; n = 183, response rate = 61%), and (4) a sample of treatment directors in corrections and community settings providing services to juvenile offenders (the juvenile treatment director sample; n = 122, response rate = 49%). The response rates meet or exceed those typically found for mailed, self-administered organizational surveys (Baruch, 1999), and an analysis of response bias indicated no systematic differences between responders and non-responders (Taxman et al., 2007a). As we describe below, the two adult samples were analyzed together, and the two juvenile samples were analyzed together.

2.2 Instrumentation

Recent research on substance abuse treatment with adult and juvenile offenders guided the selection of survey items representing evidence-based practice. The survey instrument was developed by a team of 12 researchers who contributed to the NCJTP survey as part of the National Institute on Drug Abuse’s Criminal Justice Drug Abuse Treatment Studies (CJ-DATS) research cooperative. The items selected were derived from a number of sources including NIDA’s thirteen principles of effective treatment practices (National Institute on Drug Abuse, 1999), meta-analysis findings in the correctional and/or drug treatment areas (Farrington and Welsh, 2005) and a recent report released by Drug Strategies (2005). See Table 1 for the key elements and their operationalization by the NCJTP survey items. All items are dichotomized on the basis of whether or not they met the EBP criteria set by Friedmann et al. (2007) and Henderson et al. (2007) (see Table 1).

Table 1.

Evidence-Based Practices (EBPs) and the National Criminal Justice Treatment Practices Survey Items Operationalizing the EBPs

| Evidence-Based Practice | Content of Survey Item | Consensus or Empirical Basis |
|---|---|---|
| Developmental Appropriateness | Respondent reports whether their program provides specialized services for adolescent offenders | Consensus |
| Treatment Orientation | Respondent reports whether their program provides cognitive-behavioral treatment, therapeutic community, or manualized treatment approach | Empirical |
| Use of Standardized Risk Assessment Tool | Respondent reports whether their program uses a standardized risk assessment tool | Empirical |
| Continuing Care | Respondent reports that all clients have a referral to a substance abuse treatment program and that most or all clients have pre-arranged appointments with substance abuse treatment programs | Empirical |
| Graduated Sanctions | Respondent reports that their program uses 3 or more of the following as sanctions for undesirable behavior: extra work duty; extra homework/written response; wearing signs; time outs/hot seats; loss of privileges/points; report to court/parole boards; more time added to sentence; terminations from treatment | Consensus |
| Drug Testing | Respondent reports that at least 50% of clients are tested | Consensus |
| Systems Integration | Respondent reports that they have joint participation of judiciary, community corrections, and community-based treatment agencies in providing services to offenders | Consensus |
| Engagement Techniques | Respondent reports that their program “always” or “often” uses specific engagement techniques such as motivational interviewing | Empirical |
| 90 Day Duration | Respondent reports that clients receiving services have planned durations of 90 days or greater | Empirical |
| Assessment of Treatment Outcomes | Respondent reports that they “agree” or “strongly agree” that they are kept informed about the effectiveness of their substance abuse treatment programs | Consensus |
| Family Involvement | Respondent reports that their clients receive family therapy services | Empirical |
| Co-Occurring Disorders | Respondent reports that their program has specific services for clients with co-occurring substance abuse and mental health disorders | Consensus |
| Use of Standardized Substance Abuse Assessment Tool | Respondent reports whether their program uses a standardized substance abuse assessment tool | Empirical |
| Qualified Staff | Respondent reports that 75% or more program staff have specialized training or credentials in substance abuse treatment | Consensus |
| Comprehensive Services | Respondent reports that clients receive medical, mental health/substance abuse, and case management services | Consensus |
| Incentives | Respondent reports that their program uses 2 or more of the following as incentives for desirable behavior: good time credits; certificate of completion; graduation ceremony; praise; tokens/points redeemable for material items | Consensus |

After examining the fit of the EBPs to the Rasch model, we examined organizational context correlates of the underlying latent trait measuring programs’ extensiveness of EBP use. The predictor variables are the same as those used by Friedmann et al. (2007) and Henderson et al. (2007). Organizational Structure and Leadership measures included items indicating whether the setting was corrections- or community-based, whether the administrator had education or experience in human service provision, and an item assessing facility size. In addition, the corrections survey included measures assessing the leadership style of the lead administrator (transformative and transactional; Arnold et al., 2000; Podsakoff et al., 1990) and a measure assessing the administrator’s knowledge of EBP (Melnick et al., 2004; Young and Taxman, 2004). Organizational Climate measures in the treatment director’s survey included subscales that assessed management emphasis on treatment quality and correctional staff support for treatment (Schneider et al., 1998). Subscales in the correctional survey assessed organizational culture (cohesive, hierarchical, performance achievement, and innovation/adaptability), as well as the extent to which it promoted new learning (Cameron and Quinn, 1999; Denison and Mishra, 1995; Orthner et al., 2004; Scott and Bruce, 1994).

Training and Resources measures were adapted from the Survey of Organizational Functioning for correctional institutions (Lehman et al., 2002). Subscales assessed respondents’ views about the adequacy of funding, the physical plant, staffing, resources for training and development, and internal support for new programming. Administrators’ Attitudes about corrections and treatment were measured through subscales that assessed beliefs about crime (rehabilitation, punishment, deterrence), as well as support for substance abuse treatment; these scales were adapted from previous similar surveys of public opinion and justice system stakeholders (Cullen et al., 2000). Other attitude scales in the treatment director’s survey included scales adapted from standardized measures of organizational commitment (Balfour and Wechsler, 1996), cynicism for change (Tesluk et al., 1995), and personal value fit with the agency (Parker and Axtell, 2001). Network Connectedness was assessed by the extent to which the institution had working relationships with justice agencies, mental health programs, health clinics, housing services, vocational support agencies, and victim and faith-based organizations.

2.3 Data Analysis

Rasch modeling techniques used in this study proceeded as follows. First, we conducted separate Rasch analyses with the data from the adult and juvenile samples. Some programs reported providing services to both adult and juvenile populations. Therefore, we included these programs in both the adult and juvenile analyses (which were always analyzed separately). A primary assumption of the Rasch model is that responses to items reflect variation on a single underlying dimension and that responses to a given item are independent of other item responses, once the items’ contribution to the latent trait is taken into account. Therefore, before interpreting the fit of the data to the Rasch model, we conducted a principal components analysis (PCA) of residual variance after the Rasch model was fit (Linacre, 1998; Smith and Maio, 1994). The presence of additional factors accounting for greater than 2 units of variance is considered significant, indicating some conditional dependence between the items (Linacre, 1998).

We next examined how well each EBP fit the Rasch model using item fit indices (infit and outfit) provided by the software WINSTEPS (Linacre and Wright, 1999). Infit and outfit statistics are sensitive to violations of the Rasch model, such as unexpected variation in response patterns (e.g., a lower frequency EBP is endorsed by a program that reports using fewer EBPs). Linacre and Wright (1994) provide a guideline of infit and outfit values of 0.6–1.4 as indicating that an item adequately fits the Rasch model.
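As an illustration of what these indices capture, the following is a minimal sketch of the standard mean-square formulas for a single dichotomous item, assuming program measures and the item difficulty have already been estimated. The function names and the example values are hypothetical; this is not the WINSTEPS implementation, which also handles estimation and missing responses.

```python
import math


def rasch_prob(theta, delta):
    """Model probability that a program at theta reports using an EBP of difficulty delta."""
    return 1.0 / (1.0 + math.exp(-(theta - delta)))


def item_fit(responses, thetas, delta):
    """Return (infit, outfit) mean squares for one item.

    responses: observed 0/1 values, one per program (hypothetical data)
    thetas:    program measures in logits, in the same order
    delta:     item difficulty in logits
    """
    sq_resid_sum = 0.0   # sum of squared residuals (x - p)^2
    variance_sum = 0.0   # sum of model variances p(1 - p)
    z_sq_sum = 0.0       # sum of squared standardized residuals
    for x, theta in zip(responses, thetas):
        p = rasch_prob(theta, delta)
        var = p * (1.0 - p)
        sq_resid = (x - p) ** 2
        sq_resid_sum += sq_resid
        variance_sum += var
        z_sq_sum += sq_resid / var
    infit = sq_resid_sum / variance_sum   # information-weighted mean square
    outfit = z_sq_sum / len(responses)    # unweighted mean square, outlier-sensitive
    return infit, outfit


def within_guideline(value, low=0.6, high=1.4):
    """Check a fit value against the 0.6 to 1.4 guideline cited in the text."""
    return low <= value <= high


# Hypothetical example: the last program has a low measure but endorses the item,
# an unexpected response of the kind these statistics are designed to flag.
infit, outfit = item_fit([1, 1, 0, 1], [1.0, 0.5, -0.5, -3.0], 0.0)
print(round(infit, 2), round(outfit, 2), within_guideline(outfit))
```

Because outfit averages the squared standardized residuals without weighting, a single highly unexpected response from a program far from the item's difficulty inflates it more than it inflates infit.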

Corrections administrators and treatment program directors completed different versions of the survey, including questions about EBPs (see Table 2 and Table 3). Responses from both respondent groups were combined in the Rasch analysis (conducted separately for the adult and juvenile samples) using maximum likelihood estimation to accommodate the missing data (Schafer and Graham, 2002). Graham et al. (2006) demonstrate that data that are missing entirely under the researcher’s control (data missing by design) satisfy the assumption that the data are missing at random (MAR). Combining responses from the corrections administrator and treatment director samples allows us to account for the differing perspectives of the two types of respondents as well as increasing our statistical power to detect significant correlates of EBP use.

Table 2.

Frequency and Percentage of Respondents from Juvenile Programs Indicating Use of Evidence-Based Practice Items and Rasch Parameter Estimates, Fit Statistics, Standard Errors, and DIF Between Responses of Corrections Administrators and Treatment Directors

| Item | Frequency (%) | Latent Trait Score | SE | Infit | Outfit | DIF t |
|---|---|---|---|---|---|---|
| Developmental Appropriateness | 13 (11) | 2.78 | 0.34 | 0.88 | 0.63 | N/A |
| Treatment Orientation | 22 (16) | 1.29 | 0.24 | 0.98 | 0.82 | N/A |
| Use of Standardized Risk Assessment Tool | 24 (17) | 1.18 | 0.24 | 0.97 | 0.81 | N/A |
| Continuing Care | 85 (33) | 0.57 | 0.15 | 1.19 | 1.25 | −1.58 |
| Graduated Sanctions | 41 (29) | 0.40 | 0.20 | 0.95 | 0.81 | N/A |
| Drug Testing | 49 (35) | 0.09 | 0.19 | 1.11 | 1.08 | N/A |
| Systems Integration | 112 (43) | 0.06 | 0.14 | 1.11 | 1.17 | −1.61 |
| Engagement Techniques | 122 (46) | −0.13 | 0.14 | 0.87 | 0.83 | 1.74 |
| 90 Day Duration | 57 (40) | −0.19 | 0.19 | 0.87 | 0.81 | N/A |
| Assessment of Treatment Outcomes | 72 (59) | −0.34 | 0.20 | 0.88 | 0.85 | N/A |
| Family Involvement | 146 (56) | −0.58 | 0.14 | 0.93 | 0.92 | 2.87** |
| Co-Occurring Disorders | 146 (56) | −0.60 | 0.14 | 0.89 | 0.89 | 0.70 |
| Use of Standardized Substance Abuse Assessment Tool | 161 (61) | −0.87 | 0.14 | 0.93 | 0.88 | 1.20 |
| Qualified Staff | 85 (70) | −0.91 | 0.22 | 1.09 | 1.05 | N/A |
| Comprehensive Services | 164 (62) | −0.93 | 0.14 | 1.25 | 1.31 | −2.85 |
| Incentives | 102 (72) | −1.82 | 0.21 | 0.99 | 0.91 | N/A |

Note. Frequencies fluctuate for the EBPs depending on whether the item was administered to both corrections administrator and treatment director samples or only one of the samples. Negative numbers in the Latent Trait Score column reflect practices more programs report using; positive numbers reflect practices fewer programs report using. Infit and outfit values between 0.6 and 1.4 reflect adequate fit to the Rasch model.

* p < .05

** p < .01

*** p < .001

Table 3.

Frequency and Percentage of Respondents from Adult Programs Indicating Use of Evidence-Based Practice Items and Rasch Parameter Estimates, Fit Statistics, Standard Errors, and DIF Between Responses of Corrections Administrators and Treatment Directors

| Item | Frequency (%) | Latent Trait Score | SE | Infit | Outfit | DIF t |
|---|---|---|---|---|---|---|
| Treatment Orientation | 46 (15) | 1.68 | 0.17 | 1.02 | 0.85 | N/A |
| Use of Standardized Risk Assessment Tool | 81 (27) | 0.87 | 0.14 | 1.03 | 0.94 | N/A |
| Continuing Care | 173 (36) | 0.61 | 0.10 | 1.14 | 1.23 | 1.54 |
| Family Involvement | 185 (38) | 0.48 | 0.10 | 0.89 | 0.83 | 3.29** |
| Graduated Sanctions | 102 (34) | 0.48 | 0.13 | 0.86 | 0.79 | N/A |
| Engagement Techniques | 204 (42) | 0.28 | 0.10 | 0.93 | 0.93 | 1.32 |
| Co-Occurring Disorders | 220 (45) | 0.11 | 0.10 | 0.97 | 0.99 | 0.88 |
| Drug Testing | 138 (46) | −0.12 | 0.13 | 1.17 | 1.22 | N/A |
| 90 Day Duration | 140 (46) | −0.15 | 0.13 | 0.88 | 0.84 | N/A |
| Assessment of Treatment Outcomes | 110 (60) | −0.27 | 0.17 | 0.94 | 0.89 | N/A |
| Systems Integration | 268 (55) | −0.38 | 0.10 | 1.05 | 1.05 | −1.54 |
| Use of Standardized Substance Abuse Assessment Tool | 299 (62) | −0.71 | 0.10 | 0.88 | 0.83 | 1.11 |
| Qualified Staff | 130 (71) | −0.88 | 0.18 | 1.01 | 0.95 | N/A |
| Comprehensive Services | 324 (67) | −0.99 | 0.11 | 1.28 | 1.40 | −3.22** |
| Incentives | 192 (64) | −1.00 | 0.13 | 0.89 | 0.83 | N/A |

Note. Frequencies fluctuate for the EBPs depending on whether the item was administered to both corrections administrator and treatment director samples or only one of the samples. Negative numbers in the Latent Trait Score column reflect practices more programs report using; positive numbers reflect practices fewer programs report using. Infit and outfit values between 0.6 and 1.4 reflect adequate fit to the Rasch model.

* p < .05

** p < .01

*** p < .001

Next, we conducted analyses to determine whether there was significant differential item functioning (DIF) across the corrections administrators and treatment program directors. As implemented in WINSTEPS, DIF measures are calculated from the difficulty (likelihood of use) of each EBP in each sample. A DIF contrast is then calculated as the difference between the DIF measures derived in the two samples. This DIF contrast is equivalent to the Mantel-Haenszel DIF size. Dividing the DIF contrast by the joint standard error of the two DIF measures yields a t-statistic (Holland and Wainer, 1993). Significant values for this statistic would indicate that DIF had occurred.
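The arithmetic of the DIF test just described can be sketched as follows. This is a hedged illustration only: the function name and the input values are hypothetical, and the joint standard error is assumed to be the square root of the sum of the two squared standard errors, the usual form for independent estimates.

```python
import math


def dif_t(measure_a, se_a, measure_b, se_b):
    """Return (DIF contrast, t) for one item estimated separately in two groups.

    measure_a, measure_b: item difficulty (logits) in each respondent group
    se_a, se_b:           standard errors of those two difficulty estimates
    """
    contrast = measure_a - measure_b              # DIF contrast in logits
    joint_se = math.sqrt(se_a ** 2 + se_b ** 2)   # assumed joint standard error
    return contrast, contrast / joint_se


# Hypothetical values, not taken from Table 2 or Table 3:
# the item is 0.8 logits harder for group A than for group B.
contrast, t = dif_t(0.5, 0.3, -0.3, 0.4)
print(round(contrast, 2), round(t, 2))
```

The sign of the resulting t-statistic depends only on which group is subtracted from which, so it indicates the direction of the difference in difficulty between the two respondent groups.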

Finally, we examined correlates of the Rasch-derived measure following Friedmann et al. (2007) and Henderson et al. (2007) to examine the extent to which organizational context variables predicted the use of EBPs. Whereas Friedmann et al. and Henderson et al. used a simple sum of the number of practices programs reported using, we replicate their results using the Rasch measure as the criterion variable. The predictor variables are the same as those used by these authors, namely organizational structure/leadership, culture and climate, resources and training opportunities, administrator attitudes, and network connectedness. These blocks of variables were assessed in hierarchical regression models, which we first adjusted for region of the country in which the facility was located to control for any effects of using region as a stratum in sample selection. Because we limited the number of comparisons we tested to those that replicated the procedures used by Friedmann et al. and Henderson et al., we opted not to adjust alpha for multiple comparisons, using an alpha of .05 for each analysis.

3. Results

3.1 Juvenile Corrections/Treatment Agencies

Before assessing the fit of the data to the Rasch model, we conducted a PCA of residual variance to examine the assumption of local independence. Results indicated that the first residual component accounted for approximately 2 units of variance, suggesting that the data are arguably unidimensional (Linacre, 1998; Raiche, 2005); there was no evident pattern in the component loadings, suggesting that the data meet the assumptions of the Rasch model.

Table 2 shows the item-level estimates of the 16 EBP items, listed in ascending order of the probability of their use, indicated by the measure estimate and expressed in logit units (i.e., log odds ratios). The measure estimates indicate the point along the continuous latent trait of EBP use at which 50% of the respondents endorsed that they were using a given EBP, also known as the item thresholds. The measure estimates are standardized so that the average is assigned the value of 0; EBPs that are less likely to be used have positive estimates, and EBPs that are more likely to be used have negative estimates.
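To make the logit scale concrete, the following minimal sketch evaluates the dichotomous Rasch model, in which the probability of reporting an EBP depends only on the difference between a program's latent trait level and the item's measure estimate. The function name and the example values are illustrative assumptions, not output from the study.

```python
import math

def rasch_probability(theta, difficulty):
    """Probability of endorsing an item under the dichotomous Rasch model.

    theta: program's position on the latent trait (logits)
    difficulty: item measure estimate (logits)
    """
    return 1.0 / (1.0 + math.exp(-(theta - difficulty)))

# A program at the scale average (theta = 0) facing three hypothetical items.
for difficulty in (-1.0, 0.0, 1.0):
    p = rasch_probability(0.0, difficulty)
    print(f"difficulty={difficulty:+.1f}  P(use)={p:.2f}")
```

When the program's trait level equals the item's measure estimate, the probability is exactly .50, which is the threshold interpretation given above; an item one logit below the program's level is used with a probability of about .73, and one a logit above with a probability of about .27.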

As shown in Table 2, the EBP items showed good fit to the Rasch model, with infit values ranging from .87 to 1.25 and outfit values ranging from .63 to 1.31. Both values fit within the target range of .60 to 1.40 (Linacre and Wright, 1994). Measure parameters ranged from −1.82 to 2.78, indicating that the items cover a broad range of probability of use.
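For readers unfamiliar with the fit statistics, the sketch below shows one common way infit and outfit mean squares are defined for a single dichotomous item: outfit is the average squared standardized residual, and infit is the information-weighted version. It is a simplified illustration under those standard definitions, with hypothetical inputs; it does not reproduce the Winsteps estimation used in the study.

```python
def item_fit(responses, probabilities):
    """Infit and outfit mean squares for one dichotomous item.

    responses: observed 0/1 values, one per respondent
    probabilities: model-expected P(x = 1) for the same respondents
    """
    squared_residuals = [(x - p) ** 2 for x, p in zip(responses, probabilities)]
    variances = [p * (1.0 - p) for p in probabilities]
    # Outfit: unweighted mean of squared standardized residuals.
    outfit = sum(r / v for r, v in zip(squared_residuals, variances)) / len(responses)
    # Infit: squared residuals weighted by the model variance (information).
    infit = sum(squared_residuals) / sum(variances)
    return infit, outfit

def within_target_range(value, low=0.6, high=1.4):
    """Check a mean square against the 0.6 to 1.4 range used in this paper."""
    return low <= value <= high

# Hypothetical responses and model probabilities for five respondents.
infit, outfit = item_fit([1, 0, 1, 1, 0], [0.8, 0.3, 0.6, 0.7, 0.4])
print(round(infit, 2), within_target_range(infit))
print(round(outfit, 2), within_target_range(outfit))
```

Values near 1 indicate that responses are about as variable as the model expects; because outfit is unweighted, it is more sensitive to unexpected responses from programs far from the item's difficulty.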

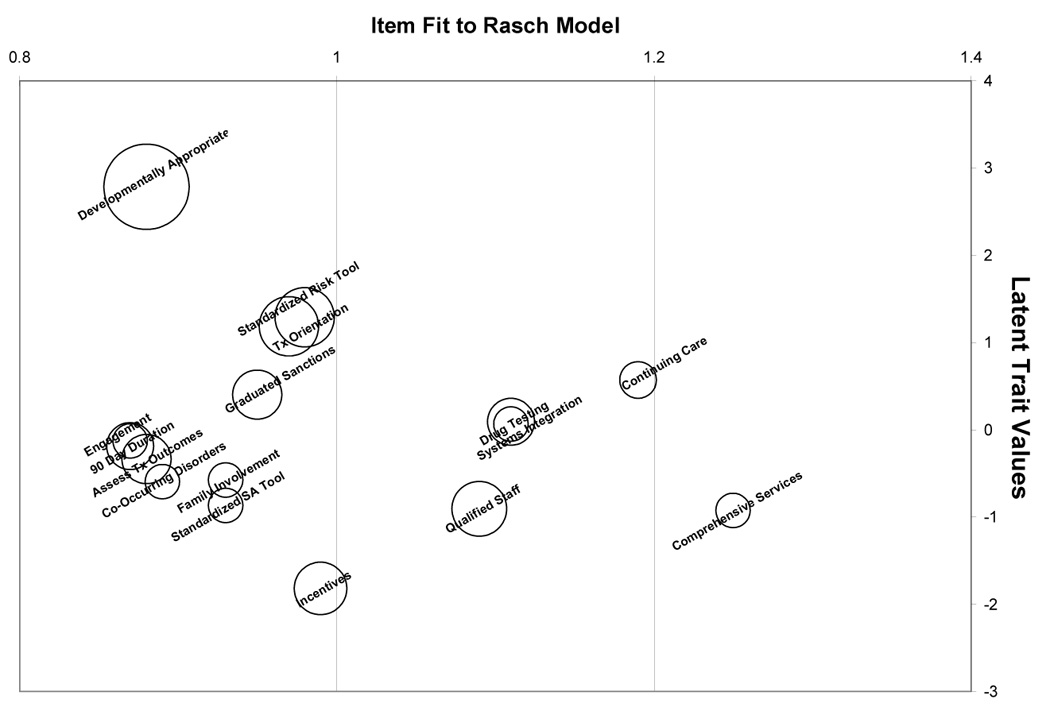

Figure 1 depicts a bubble chart showing the difficulty (i.e., prevalence of use) of the EBPs, their fit to the Rasch model, and the standard error of measurement. As shown in Figure 1, the EBPs were measured with varying precision and difficulty, and verifying what is shown in Table 2, each of the items showed good fit to the Rasch model. Items located closely along the vertical axis of Figure 1 indicate EBPs with a similar likelihood of use. For example, programs that reported assessing their own treatment outcomes were also likely to use engagement methods and planned treatment durations for at least 90 days. Interestingly, the least frequently implemented (as well as the least precisely measured) EBP for the juvenile sample was developmentally appropriate treatment. More frequently used EBPs (by respondent self-report) included the use of incentives, qualified staff, comprehensive services, the use of standardized substance abuse assessment measures, family involvement in treatment, and treatment designed to address co-occurring disorders. In addition to developmentally appropriate treatment, less frequently used EBPs included the use of standardized recidivism risk assessment tools, and the use of CBT, TC, or another manualized treatment approach.

Figure 1.

Bubble chart of the 16 EBPs administered to the juvenile sample. The vertical displacement of the bubbles illustrates the “difficulty” of the item; items with negative values indicate a greater likelihood of use. Fit to the Rasch model is illustrated by the horizontal displacement of the items, with better-fitting items obtaining infit values closer to 1 and acceptable infit values occurring between 0.6 and 1.4. The size of the bubbles reflects the precision of the estimates or standard errors.

Assessment of DIF revealed two items that corrections administrators and treatment directors rated differently, family involvement in treatment (t = 2.85) and whether the program offered comprehensive services (t = 2.87; see Table 2). While treatment directors indicated that they were likely to involve families in treatment (DIF measure = −2.31, SE = 0.31), corrections administrators indicated that families were relatively uninvolved in treatment (DIF measure = 0.52, SE = 0.20). The opposite pattern was apparent for comprehensive services (corrections administrators: DIF measure = −2.34, SE = 0.23; treatment directors: DIF measure = 0.51, SE = 0.20), with corrections administrators reporting that their programs were likely to provide comprehensive services and treatment directors reporting that comprehensive services were relatively unavailable. However, we did not delete these items from the measure because we determined that given the differences between the two samples (corrections and treatment directors), these findings were likely due to true differences between the settings (recall that a number of the respondents in the treatment director sample directed community-based programs) and not bias due to respondents interpreting the items differently.

3.2 Adult Corrections/Treatment Agencies

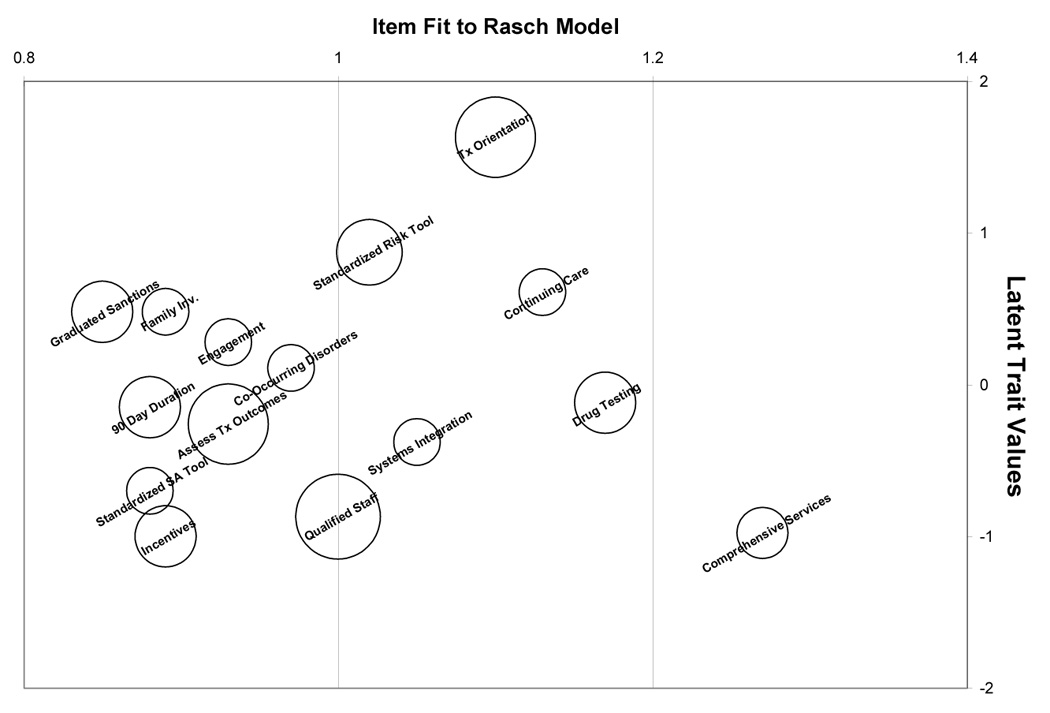

For the sample of respondents in adult facilities, results of the unidimensionality tests indicated that the first residual component accounted for 2 units of variance, suggesting that the data are most likely unidimensional. Item thresholds for the 15 EBP items (developmental appropriateness was deleted for the adult sample) are listed in Table 3 and shown graphically in Figure 2. The EBP items showed good fit to the Rasch model, with infit values ranging from .88 to 1.28 and outfit values ranging from .79 to 1.40. Measure parameters for the items ranged from −1.00 to 1.68, indicating adequate coverage of the range of EBP use. As with the juvenile agencies, several EBPs showed similar likelihoods of use. For example, similar to the juvenile sample, respondents who reported assessing their own treatment outcomes were also likely to plan treatment durations for at least 90 days. However, while respondents in the juvenile sample reported that engagement methods corresponded with assessing treatment outcomes and 90-day treatment durations, engagement methods were less likely to be used in the adult system. Instead, drug testing occurred with approximately the same frequency.

Figure 2.

Bubble chart of the 15 EBPs administered to the adult sample. The vertical displacement of the bubbles illustrates the “difficulty” of the item; items with negative values indicate a greater likelihood of use. Fit to the Rasch model is illustrated by the horizontal displacement of the items, with better-fitting items obtaining infit values closer to 1 and acceptable infit values occurring between 0.6 and 1.4. The size of the bubbles reflects the precision of the estimates or standard errors.

Similar to the juvenile sample, the EBPs were measured with varying precision and difficulty in the adult sample (see Figure 2). Less frequently used EBPs included using CBT, TC, or a manualized treatment approach and standardized risk assessment tools; more frequently used EBPs included participant incentives, staff qualified to provide substance abuse treatment, comprehensive services, and the use of standardized substance abuse assessment tools.

With respect to DIF, the same items found to differ between the corrections and treatment director samples from the juvenile facilities—family involvement in treatment and comprehensive services—also differed for adults. Likewise, treatment directors were more likely to indicate that they involved families in treatment (DIF measure = −1.41, SE = 0.20) than corrections administrators (DIF measure = 1.87, SE = 0.18), and corrections administrators were more likely to indicate that their programs provided comprehensive services (DIF measure = −2.32, SE = 0.17) than treatment directors (DIF measure = 0.88, SE = 0.17).

3.3 Correlates of the EBP Adoption Measure

3.3.1 Juvenile Justice/Treatment Sample

Table 4 shows the results of the regression models predicting EBP use among juvenile facilities and programs. The set of organizational structure variables as a group significantly predicted EBP use (F [4, 116] = 3.32, p = .013, R2 = .10). In terms of individual predictors, community-based programs were using more of the EBPs than treatment programs based in institutional settings (β = .30, t = 2.00, p = .048). The treatment climate variables as a group also predicted EBP use (F [3, 99] = 5.64, p = .001, R2 = .15), with management emphasis on the quality of treatment predicting EBP use (β = .34, t = 3.63, p < .001). The set of training and resources variables also predicted EBP use (F [7, 251] = 5.31, p < .001, R2 = .13), with internal support (β = .18, t = 2.72, p = .007), training (β = .19, t = 2.92, p = .004), physical facilities (β = .51, t = 2.97, p = .003), resources (β = −.52, t = −2.96, p = .003), and funding (β = −.14, t = −2.17, p = .031) each predicting use among the individual predictors. The network connectedness variables as a group showed the strongest relationship with EBP use (F [3, 114] = 9.18, p < .001, R2 = .20). In terms of individual variables, connections with non-criminal justice agencies were a significant predictor of EBP use (β = .30, t = 2.77, p = .007). Finally, administrator attitudes as a group were significantly associated with EBP use (F [5, 109] = 2.45, p = .038, R2 = .10), with commitment to the organization related to more use (β = .28, t = 2.21, p = .030).

Table 4.

Multiple Regression Analyses of Organizational Variables (IVs) and Rasch Measure (DV) in Juvenile Sample

| Variable | B | SE B | β | t |
|---|---|---|---|---|
| Organizational Structure (F = 3.32*, R2 = .10) | | | | |
| Institution vs. Community Setting | 0.83 | 0.41 | 0.30 | 2.00* |
| Substance Abuse Treatment Facility | 0.37 | 0.22 | 0.15 | 1.67 |
| Offenders vs. Mixed Populations | −0.39 | 0.42 | −0.14 | −0.92 |
| Organizational Climate (F = 5.64**, R2 = .15) | | | | |
| Management Emphasis on Quality Treatment | 0.62 | 0.17 | 0.34 | 3.62*** |
| Correctional Staff Respect for Treatment | 0.11 | 0.15 | 0.06 | 0.69 |
| Training & Resources (F = 5.31**, R2 = .13) | | | | |
| Funding | −0.21 | 0.10 | −0.14 | −2.17* |
| Physical Plant | 0.69 | 0.23 | 0.51 | 2.97** |
| Staffing | −0.14 | 0.09 | −0.10 | −1.57 |
| Training Development | 0.36 | 0.12 | 0.19 | 2.92** |
| Internal Support | 0.28 | 0.10 | 0.18 | 2.72** |
| Network Connectedness (F = 9.18***, R2 = .20) | | | | |
| Non-criminal Justice Facilities | 0.43 | 0.12 | 0.31 | 3.55*** |
| Criminal Justice Facilities | 0.26 | 0.09 | 0.24 | 2.78** |
| Administrator Attitudes (F = 2.45*, R2 = .10) | | | | |
| Punishment/Deterrence | −0.20 | 0.18 | −0.11 | −1.13 |
| Rehabilitation | 0.16 | 0.22 | 0.07 | 0.72 |
| Organizational Commitment | 0.54 | 0.25 | 0.28 | 2.21* |
| Cynicism for Change | 0.13 | 0.22 | 0.07 | 0.58 |

Note. B = Unstandardized regression coefficient, SE B = Standard error, β = Standardized regression coefficient. F statistics and R2 values reflect the impact of the predictor variables as a group on the Rasch-derived measure of EBP use.

* p < .05

** p < .01

*** p < .001

3.3.2 Adult Corrections/Treatment Sample

Table 5 shows the results of the regression models predicting EBP use in the adult sample. Because some of the predictors were administered to both corrections administrator and treatment director samples, and some were administered only to corrections administrators or treatment directors, we report results separately for the different samples. Across samples, the training and resources domain correlated with EBP use (F [7, 466] = 9.62, p < .001, R2 = .13), especially training (β = .20, t = 3.85, p < .001), resources (β = −.46, t = −3.45, p = .001), physical facilities (β = .43, t = 3.34, p = .001), and internal support (β = .22, t = 4.46, p < .001). Administrator attitudes were also related to EBP use (F [5, 378] = 7.88, p < .001, R2 = .09), with more prominent attitudes toward rehabilitation (β = .17, t = 3.20, p = .001) and attitudes emphasizing less punishment (β = −.18, t = −3.36, p = .001) associated with greater use.

Table 5.

Multiple Regression Analyses of Organizational Variables (IVs) and Rasch Measure (DV) in Adult Sample

| Variable | B | SE B | β | t |
|---|---|---|---|---|
| Organizational Structure and Leadership (F = 6.63***, R2 = .15) | | | | |
| Institution vs. Community Setting | −0.38 | 0.12 | −0.18 | −3.13** |
| Size of Facility | <0.01 | 0.06 | <0.01 | −0.08 |
| Administrator Background in Human Services | 0.32 | 0.09 | 0.21 | 3.64*** |
| Transformational Leadership | −0.03 | 0.15 | −0.02 | −0.19 |
| Transactional Leadership | 0.11 | 0.14 | 0.09 | 0.82 |
| Administrator Knowledge about EBPs | 0.04 | 0.01 | 0.24 | 4.09*** |
| Organizational Culture and Climate (F = 4.99***, R2 = .09) | | | | |
| Climate for Learning | 0.46 | 0.16 | 0.25 | 2.82** |
| Cohesive Culture | −0.05 | 0.12 | −0.03 | −0.36 |
| Hierarchical Culture | −0.19 | 0.12 | −0.12 | −1.61 |
| Performance Achievement Culture | 0.21 | 0.10 | 0.15 | 2.05* |
| Innovation/Adaptability Culture | 0.06 | 0.11 | 0.04 | 0.58 |
| Management Emphasis on Quality Treatment | 0.68 | 0.14 | 0.42 | 4.99*** |
| Correctional Staff Respect for Treatment | −0.01 | 0.12 | −0.01 | −0.07 |
| Training & Resources (F = 9.62***, R2 = .13) | | | | |
| Funding | −0.02 | 0.07 | −0.01 | −0.27 |
| Physical Plant | 0.52 | 0.16 | 0.43 | 3.34** |
| Staffing | −0.10 | 0.07 | −0.07 | −1.52 |
| Training Development | 0.34 | 0.09 | 0.20 | 3.85*** |
| Resources | −0.62 | 0.18 | −0.46 | −3.45** |
| Internal Support | 0.36 | 0.08 | 0.22 | 4.46*** |
| Network Connectedness (F = 18.07***, R2 = .26) | | | | |
| Non-criminal Justice Facilities | 0.45 | 0.13 | 0.31 | 3.53** |
| Criminal Justice Facilities | 0.25 | 0.10 | 0.23 | 2.57* |
| Administrator Attitudes (F = 7.88***, R2 = .09) | | | | |
| Punishment/Deterrence | −0.26 | 0.08 | −0.18 | −3.21** |
| Rehabilitation | 0.39 | 0.12 | 0.17 | 3.20** |
| Importance of Substance Abuse Treatment-Prison | <0.01 | 0.02 | <0.01 | −0.07 |
| Importance of Substance Abuse Treatment-Community | 0.04 | 0.03 | 0.07 | 1.30 |
| Organizational Commitment | 0.13 | 0.19 | 0.07 | 0.72 |
| Cynicism for Change | −0.27 | 0.18 | −0.15 | −1.51 |

Note. B = Unstandardized regression coefficient, SE B = Standard error, β = Standardized regression coefficient. F statistics and R2 values reflect the impact of the predictor variables as a group on the Rasch-derived measure of EBP use.

* p < .05

** p < .01

*** p < .001

Among prison, jail, and community corrections administrators, organizational structure and leadership correlated with EBP use (F [7, 263] = 6.63, p < .001, R2 = .15). Prisons use more EBPs than county-based corrections facilities (i.e., jails and probation/parole offices; β = −.18, t = −3.13, p = .002). Facilities whose administrators reported greater knowledge of EBPs (β = .24, t = 4.09, p < .001) and education or experience in human services (β = .21, t = 3.64, p < .001) were more likely to use EBPs. Organizational culture and climate variables were also related to EBP use (F [6, 292] = 4.99, p < .001, R2 = .09), with performance achievement culture (β = .15, t = 2.05, p = .041) and climates more conducive to learning (β = .25, t = 2.82, p = .005) associated with more use.

Finally, among treatment directors, organizational climate correlated with EBP use (F [3, 148] = 10.82, p < .001, R2 = .18), with management emphasis on the quality of treatment associated with greater EBP use (β = .42, t = 4.99, p < .001). Network connectedness was also related to EBP use (F [3, 171] = 18.07, p < .001, R2 = .26) with stronger relationships with both criminal justice (β = .24, t = 2.79, p = .006) and non-criminal justice agencies correlated with more use of EBPs (β = .31, t = 3.54, p = .001).

4. Discussion

Results of item response analyses of the 16 EBP items (15 items for the adult sample, which excluded the item for developmentally appropriate treatment) demonstrated that Rasch modeling can yield a reliable measure of the extent to which juvenile and adult correctional facilities, and community treatment agencies serving offenders, have adopted research-supported treatment practices. The literature has made a distinction between EBP adoption and implementation, with adoption characterized by a facility’s use of EBPs and implementation characterized by the extent to which eligible recipients receive them (Roman and Johnson, 2002). We have focused on EBP adoption in this study. Future studies incorporating measures of treatment participation, utilization, and fidelity are needed to study EBP implementation.

The observed patterns of EBP use were consistent with the Rasch model, reflecting both the difficulty of using the EBPs across programs as well as the extent to which the programs were using them. These findings advance the assessment of EBPs, particularly with substance-abusing offenders, which has been limited by either focusing on individual treatment practices or by assessing an array of practices using an inventory approach.

Inventory approaches to EBP assessment implicitly assume that all EBPs are equally important to the persons responsible for adopting innovative treatment practices. In contrast, and consistent with Knudsen et al. (2007), these data suggest that clusters of EBPs tend to occur together. This adoption pattern suggests that facilities using more EBPs may have overcome key resource-related and philosophical barriers to EBP use such that additional EBPs may be adopted with less difficulty. As such, these findings are consistent with Rogers’ (2003) diffusion of innovation theory, which posits that new innovations are likely to be adopted when they are consistent with previously introduced technologies. While Knudsen et al. tested a clustering hypothesis with respect to medication use in private sector treatment facilities, the current study tests the idea with respect to psychosocial interventions adopted in criminal justice settings.

The fact that EBPs clustered together introduces new hypotheses concerning EBP adoption behavior in criminal justice settings. Namely, the findings suggest that innovators do not necessarily implement one EBP at a time but that they instead may implement certain practices together. For example, drug testing and systems integration tended to be equally likely to be used in both adult and juvenile samples. One process that may explain these seemingly distinct activities occurring together is that committing to do good drug testing necessitates that a program deal with other agencies in determining how to arrive at valid, clinically useful results, deciding with whom to share the results, etc. Similarly, the use of engagement techniques, assessment of treatment outcomes, and a planned 90-day duration of treatment have approximately equal difficulty levels. This pattern of results may suggest that programs serious about assessing the impact of their treatment practices take additional measures to ensure that offenders are also appropriately engaged in treatment, given the consistency in the literature linking engagement and treatment outcome. Such programs may also want to ensure that treatment is of sufficient intensity and duration to produce the desired impact on treatment outcomes. Because EBPs tended to cluster together, these results may help researchers, clinicians, and program administrators plan for and prioritize new EBP adoption by starting with “easier” treatment practices or strategically targeting EBPs with difficulty levels similar to those of EBPs that facilities are currently using.
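One simple way to make the notion of "similar difficulty" operational is to sort the items by their measure estimates and start a new group wherever the gap between neighbors exceeds a tolerance. The sketch below does this for a subset of the adult-sample measure estimates reported in Table 3. The grouping rule, the function name, and the 0.15-logit tolerance are illustrative assumptions and were not part of the study's analyses.

```python
def group_by_difficulty(measures, max_gap=0.15):
    """Group items whose sorted difficulties differ by at most max_gap logits.

    measures: dict mapping item name to its measure estimate (logits)
    max_gap: assumed tolerance; a larger gap between neighbors starts a new group
    """
    ordered = sorted(measures.items(), key=lambda item: item[1])
    clusters = []
    previous = None
    for name, difficulty in ordered:
        if previous is None or difficulty - previous > max_gap:
            clusters.append([])
        clusters[-1].append(name)
        previous = difficulty
    return clusters

# Subset of adult-sample measure estimates (logits) from Table 3.
adult_measures = {
    "Drug Testing": -0.12,
    "90 Day Duration": -0.15,
    "Assessment of Treatment Outcomes": -0.27,
    "Systems Integration": -0.38,
    "Engagement Techniques": 0.28,
    "Qualified Staff": -0.88,
}
for cluster in group_by_difficulty(adult_measures):
    print(cluster)
```

With this tolerance, drug testing, 90-day duration, assessment of treatment outcomes, and systems integration fall into one group, while engagement techniques and qualified staff stand apart. Because the result depends on the chosen tolerance, the groups should be read as a descriptive aid rather than a formal cluster analysis.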

The Rasch measure we derived also shows evidence of concurrent validity because it was significantly associated with numerous organizational variables, namely the extent to which the facilities carried out joint activities, particularly with non-criminal justice focused organizations, internal support for new programs, training opportunities, management emphasis on the quality of treatment, and administrator commitment to the organization. As such, these findings are consistent with other organizational and health services research focused on predictors of innovation, including EBP adoption (Glisson, 2002; Knudsen and Roman, 2004; Rogers, 2003; Wejnert, 2002). With a few exceptions, the pattern of relationships was similar to what Henderson et al. (2007) and Friedmann et al. (2007) found in previous studies using the same data source, and those exceptions pointed to the benefits of Rasch modeling. First, the relationships were typically stronger using the Rasch measure, through smaller standard errors, which suggests that the Rasch measure produces estimates with improved precision. A second difference is that more of the training and resources variables were significant in these models. Namely, resources in the adult sample and physical facilities, resources, and funding in the juvenile sample significantly predicted EBP use. Predictably, training and quality facilities were positively correlated with EBP use; however, funding and other resources showed a negative association with EBP use. It may be that training and facilities comprise an infrastructure important to EBP use, while additional, more general resources play a superfluous role. In our experience, programs that adopt and implement EBPs are those that find a way to do so in spite of funding and resource limitations (within reasonable limits); therefore, these negative relationships may be associated with other administrator attitudes (e.g., hopelessness, comfort with the status quo) that were not assessed in this survey.

In comparison to the findings reported in Friedmann et al. (2007) and Henderson et al. (2007), in which simple summary counts of the number of EBPs present served as the dependent measures, results using the Rasch-developed measure showed few substantive differences. However, the Rasch measure has stronger measurement properties in that it takes into account both the likelihood that programs are using the various EBPs (i.e., item difficulty in an IRT sense) and programs’ patterns of EBP use. Moreover, the Rasch-developed technique allows us to examine patterns or clustering of EBPs in a manner that is not obvious from the counts of EBPs. In addition, because the Rasch model is a linear model producing latent trait estimates on a true interval scale, we can be certain that our Rasch-derived EBP measure has these same measurement properties. In essence, although the concurrent validity of the Rasch measure is not superior to the summary counts we reported previously (at least in this particular application), the construct validity of the Rasch measure is stronger given its superior measurement properties.
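The contrast between a summary count and a Rasch measure can be illustrated with a small sketch that estimates a program's latent trait score by maximum likelihood, treating the item difficulties as known. This is a simplification for illustration only: Winsteps estimates item and person parameters jointly, and the function name, difficulties, and response patterns below are hypothetical.

```python
import math

def estimate_theta(responses, difficulties, tol=1e-6, max_iter=50):
    """Newton-Raphson ML estimate of a program's latent trait (logits).

    responses: 0/1 per item, or None when the item was not administered
    difficulties: item measure estimates, treated as fixed (an assumption)
    """
    pairs = [(x, b) for x, b in zip(responses, difficulties) if x is not None]
    raw = sum(x for x, _ in pairs)
    if raw == 0 or raw == len(pairs):
        raise ValueError("ML estimate is not finite for zero or perfect scores")
    theta = 0.0
    for _ in range(max_iter):
        probs = [1.0 / (1.0 + math.exp(-(theta - b))) for _, b in pairs]
        information = sum(p * (1.0 - p) for p in probs)
        step = (raw - sum(probs)) / information
        theta += step
        if abs(step) < tol:
            break
    return theta

difficulties = [-1.0, -0.5, 0.0, 0.5, 1.0]    # hypothetical item measures
program_a = [1, 1, 1, 0, 0]                   # 3 EBPs reported, all 5 items asked
program_b = [1, 1, None, 1, 0]                # 3 EBPs reported, 1 item not asked
print(round(estimate_theta(program_a, difficulties), 2))
print(round(estimate_theta(program_b, difficulties), 2))
```

Both hypothetical programs report three EBPs, so a simple count treats them identically, yet the program that was asked about fewer items receives a higher estimate. This matters in a design such as the present one, where some items were administered to only one of the respondent samples.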

In terms of substantive interpretations of the results, it is of some concern, though not necessarily surprising, that developmental appropriateness and research-supported treatment modalities (i.e., CBT, therapeutic community, or other manualized treatment) were the least used EBPs. Research on empirically supported substance abuse treatments has indicated that treatment type does matter, and that with respect to adolescents, developmental concerns must be taken into account to maximize treatment gains (Dennis et al., 2003; Liddle and Rowe, 2006). However, a large body of research has indicated that transporting empirically supported treatments to naturalistic settings is plagued by many difficulties (Institute of Medicine, 1998). Findings from the current study suggest that EBPs that do not necessarily require changing the service delivery infrastructure, or that are driven by legislation and/or accreditation (e.g., incentives, qualified staff, use of standardized substance abuse assessment tools, comprehensive services), are used fairly frequently. However, EBPs requiring “deep structure” modifications of service delivery or the content of programming are less likely to be used. Therefore, we are encouraged by recent studies (Baer et al., 2007; Liddle et al., 2006) suggesting that empirically supported treatments can be successfully transported to naturalistic settings, and by policy movements supporting studies designed to disseminate research-supported treatment models and investigate their implementation and sustainability (e.g., NIDA’s Clinical Trials Network, http://www.nida.nih.gov/ctn; National Institutes of Health, 2006; Oregon’s passage of the Evidence-Based Practices legislation, etc.). Similar studies are greatly needed in criminal justice settings.

4.1 Limitations

The current study is limited in certain respects. First, the items were selected from a broad survey assessing many different constructs. Although one goal of the survey was to assess EBPs, this was only one of several goals. As such, certain items needed to be defined by establishing threshold performances and combining items. It is possible that more parsimonious items could be derived for future investigations. Second, DIF between the corrections administrators and treatment directors was evidenced for two items, family involvement in treatment and comprehensive services. Some researchers have recommended removing items with DIF, as they may reflect bias in how groups interpreted and responded to the items. We have chosen to retain them in the current study given the strong empirical base for family involvement in treatment (Henggeler et al., 1995) and comprehensive services (Etheridge et al., 2001). Our assumption is that rather than reflecting item bias, the DIF results from real differences in respondent perceptions between the two populations. Corrections settings face many more logistical difficulties providing family services than community settings (Henderson et al., 2007); conversely, because offenders cannot access services from other facilities when incarcerated and secure institutions often have legal mandates to provide services, corrections settings are more likely to provide offenders with comprehensive services (Taxman et al., 2007b; Young et al., 2007). Third, the response rate for juvenile facilities was substantially lower than the response rate for adult facilities. Although Taxman et al. (2007a) found no evidence of response bias among the juvenile respondents, it is nevertheless possible that we may have found different results if a larger number of respondents working with juvenile offenders had completed the survey. Fourth, this is a cross-sectional survey, and longitudinal data would permit a better understanding of EBP use. Fifth, the data are limited to self-reports of program administrators, and therefore, there is no way of verifying their use of EBPs or examining the quality or fidelity with which they are used.

4.2 Conclusions

Despite these limitations, the current study possesses noteworthy strengths. Foremost among these is the fact that the parent study obtained nationally representative estimates of substance abuse treatment practices in juvenile and adult correctional and community settings (Taxman et al., 2007a). Second, to our knowledge, this is the first application of IRT methods to the study of EBPs, and our findings suggest there is promise in conducting similar studies in the future. Such studies will help advance further stages of inquiry, namely assessing predictors of EBPs, and potentially setting the foundation for future interventions aimed at modifying the organizational context of corrections agencies so that EBPs may be implemented more effectively. However, consensus on what constitutes evidence-based practice, and on measures for assessing its use, is a necessary first step in developing effective interventions.

References

- Aarons GA, Sawitzky AC. Organizational culture and climate and mental health provider attitudes toward evidence-based practice. Psychological Services. 2006;3:61–72. doi: 10.1037/1541-1559.3.1.61. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Arnold JA, Arad S, Rhoades JA, Drasgow F. The empowering leadership questionnaire: The construction and validation of a new scale for measuring leader behaviors. Journal of Organizational Behavior. 2000;21:249–269. [Google Scholar]

- Backer TE, Liberman RP, Kuehnel TG. Dissemination and adoption of innovative psychological interventions. J Consult Clin Psychol. 1986;54:111–118. doi: 10.1037//0022-006x.54.1.111. [DOI] [PubMed] [Google Scholar]

- Baer JS, Ball SA, Campbell BK, Miele GM, Schoener EP, Tracy K. Training and fidelity monitoring og behavioral interventions in multi-site addictions research. Drug Alcohol Depend. 2007;87:107–118. doi: 10.1016/j.drugalcdep.2006.08.028. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Balfour D, Wechsler B. Organizational commitment: Antecedents and outcomes in public organizations. Public Productivity and Management Review. 1996;29:256–277. [Google Scholar]

- Barlow D. Psychological treatments. Am Psychol. 2004;59:869–878. doi: 10.1037/0003-066X.59.9.869. [DOI] [PubMed] [Google Scholar]

- Baruch Y. Response rate in academic studies: A comparative analysis. Human Relations. 1999;52:421–438. [Google Scholar]

- Bond TG, Fox CM. Applying the Rasch model: Fundamental measurement in the human sciences. Mahwah, NJ: Lawrence Erlbaum Associates; 2001. [Google Scholar]

- Brannigan R, Schackman BR, Falco M, Millman RB. The quality of highly regarded adoelscent substance abuse treatemnt programs: Results of an in-depth national survey. Arch Pediatr Adolesc Med. 2004;158:904–909. doi: 10.1001/archpedi.158.9.904. [DOI] [PubMed] [Google Scholar]

- Brown BS, Flynn PM. The federal role in drug abuse technology transfer: A history and perspective. J Subst Abuse Treat. 2002;22:245–257. doi: 10.1016/s0740-5472(02)00228-3. [DOI] [PubMed] [Google Scholar]

- Cameron KS, Quinn RE. Diagnosing and changing organizational culture. Addison-Wesley: Reading; 1999. [Google Scholar]

- Cole JC, Rabin AS, Smith TL, Kaufman AS. Development and validation of a Rasch-derived CES-D short form. Psychol Assess. 2004;16:360–372. doi: 10.1037/1040-3590.16.4.360. [DOI] [PubMed] [Google Scholar]

- Cullen FT, Fisher BS, Applegate BK. Public opinion about punishment and corrections. In: Michael Tonry., editor. Crime and justice: a review of research. Vol. 27. Chicago: University of Chicago Press; 2000. pp. 1–79. [Google Scholar]

- Denison DR, Mishra AK. Toward a theory of organizational culture and effectiveness. Organization Science. 1995;6:204–223. [Google Scholar]

- Dennis ML, Dawud-Noursi S, Muck RD, McDermeit M. The need for developing and evaluating adolescent treatment models. In: Stevens SJ, Morral AR, editors. Adolescent substance abuse treatment in the United States. New York: Haworth Press; 2003. pp. 3–34. [Google Scholar]

- Drug Strategies. Bridging the gap: A guide to drug treatment in the juvenile justice system. Washington, DC: Drug Strategies; 2005. [Google Scholar]

- Embretson SE, Reise SP. Item response theory for psychologists. Mahwah, NJ: Lawrence Erlbaum Associates; 2000. [Google Scholar]

- Etheridge RM, Smith JC, Rounds-Bryant JL, Hubbard RL. Drug abuse treatment and comprehensive services for adolescents. Journal of Adolescent Research. 2001;16:563–589. [Google Scholar]

- Farrington DP, Welsh BC. Randomized experiments in criminology: What have we learned in the last two decades? Journal of Experimental Criminology. 2005;1:9–38. [Google Scholar]

- Friedmann PD, Taxman FS, Henderson CE. Evidence-based treatment practices for drug-involved adults in the criminal justice system. J Subst Abuse Treat. 2007 doi: 10.1016/j.jsat.2006.12.020. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Glisson C. The organizational context of children’s mental health services. Clin Child Fam Psychol Rev. 2002;5:233–253. doi: 10.1023/a:1020972906177. [DOI] [PubMed] [Google Scholar]

- Glisson C, Hemmelgarn A. The effects of organizational climate and interorganizational coordination on the quality and outcomes of children’s service systems. Child Abuse Negl. 1998;22:401–421. doi: 10.1016/s0145-2134(98)00005-2. [DOI] [PubMed] [Google Scholar]

- Graham JW, Taylor BJ, Olchowski AE, Cumsille PE. Planned missing data designs in psychological research. Psychol Methods. 2006;11:323–343. doi: 10.1037/1082-989X.11.4.323. [DOI] [PubMed] [Google Scholar]

- Grella C. The Drug Abuse Treatment Outcome Studies: Outcomes with adolescent substance abusers. In: Liddle HA, Rowe CL, editors. Adolescent substance abuse: Research and clinical advances. New York: Cambridge University Press; 2006. pp. 148–173. [Google Scholar]

- Hambleton RK, Swaminathan H, Rogers HJ. Fundamentals of item response theory. Newbury Park, CA: Sage; 1991. [Google Scholar]

- Henderson CE, Young DW, Jainchill N, Hawke J, Farkas S, Davis RM. Adoption of evidence-based drug abuse treatment practices for juvenile offenders. J Subst Abuse Treat. 2007 doi: 10.1016/j.jsat.2006.12.021. [DOI] [PubMed] [Google Scholar]

- Henggeler SW, Schoenwald SK, Pickrel SG. Multisystemic therapy: Bridging the gap between university- and community-based treatment. J Consult Clin Psychol. 1995;63:709–717. doi: 10.1037//0022-006x.63.5.709. [DOI] [PubMed] [Google Scholar]

- Holland PW, Wainer H, editors. Differential item functioning. Hillsdale, NJ: Lawrence Erlbaum Associates; 1993. [Google Scholar]

- Institute of Medicine. Bridging the gap between practice and research: Forging partnerships with community-based drug and alcohol treatment. Washington, DC: National Academy Press; 1998. [PubMed] [Google Scholar]

- Institute of Medicine. Crossing the quality chasm: A new health system for the 21st century. Washington, DC: National Academy Press; 2001. [Google Scholar]

- Kahler CW, Strong DR, Read JP, Palfai TP, Wood MD. Mapping the continuum of alcohol problems in college students: A Rasch model analysis. Psychol Addict Behav. 2004;18:322–333. doi: 10.1037/0893-164X.18.4.322. [DOI] [PubMed] [Google Scholar]

- Knudsen HK, Ducharme LJ, Roman PM. Early adoption of buprenorphine in substance abuse treatment centers: Data from the private and public sectors. J Subst Abuse Treat. 2006;30:363–373. doi: 10.1016/j.jsat.2006.03.013. [DOI] [PubMed] [Google Scholar]

- Knudsen HK, Ducharme LJ, Roman PM. The adoption of medications in substance abuse treatment: associations with organizational characteristics and technology clusters. Drug Alcohol Depend. 2007;87:164–174. doi: 10.1016/j.drugalcdep.2006.08.013. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Knudsen HK, Ducharme LJ, Roman PM, Link T. Buprenorphine diffusion: The attitudes of substance abuse treatment counselors. J Subst Abuse Treat. 2005;29:95–106. doi: 10.1016/j.jsat.2005.05.002. [DOI] [PubMed] [Google Scholar]

- Knudsen HK, Roman PM. Modeling the use of innovations in private treatment organizations: the role of absorptive capacity. J Subst Abuse Treat. 2004;26:353–361. doi: 10.1016/s0740-5472(03)00158-2. [DOI] [PubMed] [Google Scholar]

- Landenberger N, Lipsey MW. The positive effects of cognitive–behavioral programs for offenders: A meta-analysis of factors associated with effective treatment. Journal of Experimental Criminology. 2005;1:451–476. [Google Scholar]

- Lehman WK, Greener JM, Simpson DD. Assessing organizational readiness for change. J Subst Abuse Treat. 2002;22:197–209. doi: 10.1016/s0740-5472(02)00233-7. [DOI] [PubMed] [Google Scholar]

- Liddle HA, Rowe CL, editors. Adolescent substance abuse: Research and clinical advances. New York: Cambridge University Press; 2006. [Google Scholar]

- Liddle HA, Rowe CL, Gonzalez A, Henderson CE, Dakof GA, Greenbaum PE. Changing provider practices, program environment, and improving outcomes by transporting Multidimensional Family Therapy to an adolescent drug treatment setting. Am J Addict. 2006;15:102–112. doi: 10.1080/10550490601003698. [DOI] [PubMed] [Google Scholar]

- Liddle HA, Rowe CL, Quille TJ, Dakof GA, Mills DS, Sakran E, Biaggi H. Transporting a research-based adolescent drug treatment into practice. J Subst Abuse Treat. 2002;22:231–243. doi: 10.1016/s0740-5472(02)00239-8. [DOI] [PubMed] [Google Scholar]

- Linacre JM. Structure in Rasch residuals: Why principal components analysis? Rasch Measurement Transactions. 1998;12:636. [Google Scholar]

- Linacre JM, Wright BD. Reasonable mean-square fit values. Rasch Measurement Transactions. 1994;8:370. [Google Scholar]

- Linacre JM, Wright BD. WINSTEPS Rasch-model computer program [computer software] Chicago: MESA Press; 1999. [Google Scholar]

- Lipsey MW, Wilson DB. Effective intervention for serious juvenile offenders: A synthesis of research. In: Loeber R, Farrington DP, editors. Serious and violent juvenile offenders: Risk factors and successful interventions. Thousand Oaks, CA: Sage Publications; 1998. pp. 313–345. [Google Scholar]

- Lipsey MW, Wilson DB. Practical meta-analysis. Thousand Oaks, CA: Sage Publications; 2001. [Google Scholar]

- Mark TL, Song X, Vandivort R, Duffy S, Butler J, Coffey R, Schabert VF. Characterizing substance abuse programs that treat adolescents. J Subst Abuse Treat. 2006;31:59–65. doi: 10.1016/j.jsat.2006.03.017. [DOI] [PubMed] [Google Scholar]

- Melnick G, Hawke J, Wexler HK. Client perceptions of prison-based therapeutic community drug treatment programs. Prison Journal. 2004;84:121–138. (Special Edition) [Google Scholar]

- Miller WR, Sorensen JL, Selzer JA, Brigham GS. Disseminating evidence-based practices in substance abuse treatment: A review with suggestions. J Subst Abuse Treat. 2006;31:25–39. doi: 10.1016/j.jsat.2006.03.005. [DOI] [PubMed] [Google Scholar]

- Miller WR, Zweben J, Johnson WR. Evidence-based treatment: Why, what, where, when, and how? J Subst Abuse Treat. 2005;29:267–276. doi: 10.1016/j.jsat.2005.08.003. [DOI] [PubMed] [Google Scholar]

- National Institute of Justice. 2000 Arrestee drug abuse monitoring: Annual report (NIJ 193013). 2003. Accessed March 6, 2006, from http://www.ncjrs.gov/pdffiles1/nij/193013.pdf

- National Institutes of Health. Dissemination and implementation research in health (PAR-06-039). 2006. Accessed March 13, 2007, from http://grants.nih.gov/grants/guide/pafiles/PAR-06-039.html

- National Institute on Drug Abuse. Principles of Drug Addiction Treatment: A Research Based Guide (NIH Publication No. 00-4180). Rockville, MD: National Institute on Drug Abuse; 1999.

- National Institute on Drug Abuse. Principles of Drug Abuse Treatment for Criminal Justice Populations (NIH Publication No. 06-5316). Rockville, MD: National Institute on Drug Abuse; 2006.

- Orthner DK, Cook PG, Sabah Y, Rosenfeld J. Measuring organizational learning in human services: development and validation of the Program Style Assessment Instrument. Bethesda, MD: Invited Presentation to the National Institute on Drug Abuse; 2004. [Google Scholar]

- Parker SK, Axtell CM. Seeing another viewpoint: Antecedents and outcomes of employee perspective taking. Acad Manage J. 2001;44:1085–1100. [Google Scholar]

- Podsakoff PM, MacKenzie SB, Moorman RH, Fetter R. Transformational leader behaviors and their effects on followers' trust in leader, satisfaction, and organizational citizenship behaviors. Leadership Quarterly. 1990;1:107–142. [Google Scholar]

- Raiche G. Critical eigenvalue sizes in standardized residual principal components analysis. Rasch Measurement Transactions. 2005;19:1012. [Google Scholar]

- Rasch G. Probabilistic models for some intelligence and attainment tests. Denmark: Danish Institute for Educational Research; 1960. [Google Scholar]

- Rogers EM. Diffusion of innovations. 5th ed. New York: Free Press; 2003. [Google Scholar]

- Roman PM, Johnson JA. Adoption and implementation of new technologies in substance abuse treatment. J Subst Abuse Treat. 2002;22:211–218. doi: 10.1016/s0740-5472(02)00241-6. [DOI] [PubMed] [Google Scholar]

- Schafer JL, Graham JW. Missing data: Our view of the state of the art. Psychol Methods. 2002;7:147–177. [PubMed] [Google Scholar]

- Schmidt F, Taylor TK. Putting empirically supported treatment into practice: Lessons learned in a children’s mental health center. Professional Psychology: Research and Practice. 2002;33:483–489. [Google Scholar]

- Schneider B, White SS, Paul MC. Linking service climate and customer perceptions of service quality: Tests of a causal model. J Appl Psychol. 1998;83:150–163. doi: 10.1037/0021-9010.83.2.150. [DOI] [PubMed] [Google Scholar]

- Scott SG, Bruce RA. Determinants of innovative behavior: A path model of individual innovation in the workplace. Acad Manage J. 1994;37:580–607. [Google Scholar]

- Simpson DD. A conceptual framework for transferring research to practice. J Subst Abuse Treat. 2002;22:171–182. doi: 10.1016/s0740-5472(02)00231-3. [DOI] [PubMed] [Google Scholar]

- Simpson DD, Joe GW, Fletcher BW, Hubbard RL, Anglin MD. A national evaluation of treatment outcomes for cocaine dependence. Arch Gen Psychiatry. 1999;56:507–514. doi: 10.1001/archpsyc.56.6.507. [DOI] [PubMed] [Google Scholar]

- Smith RM, Miao CY. Assessing unidimensionality for the Rasch measurement. In: Wilson M, editor. Objective measurement: Theory into practice. Vol. 2. Norwood, NJ: Ablex; 1994. pp. 316–327. [Google Scholar]

- Stirman SW, Crits-Christoph P, DeRubeis RJ. Achieving successful dissemination of empirically supported psychotherapies: A synthesis of dissemination theory. Clinical Psychology: Science and Practice. 2004;11:343–358. [Google Scholar]

- Taxman FS. Reducing recidivism through a seamless system of care: Components of effective treatment, supervision, and transition services in the community. Paper presented at the Office of National Drug Control Policy Treatment and Criminal Justice System Conference; Washington, DC; February 1998. [Google Scholar]

- Taxman FS, Perdoni M, Harrison LD. Drug treatment services for adult offenders: The state of the state. J Subst Abuse Treat. 2007b;32:239–254. doi: 10.1016/j.jsat.2006.12.019. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Taxman FS, Young D, Wiersema B, Mitchell S, Rhodes AG. The National Criminal Justice Treatment Practices Survey: Multi-level survey methods and procedures. J Subst Abuse Treat. 2007a;32:225–238. doi: 10.1016/j.jsat.2007.01.002. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Tesluk PE, Farr JL, Mathieu JE, Vance RJ. Generalization of employee involvement training to the job setting: Individual and situational effects. Personnel Psychology. 1995;48:607–632. [Google Scholar]

- Wejnert B. Integrating models of diffusion of innovations: A conceptual framework. Annual Review of Sociology. 2002;28:297–326. [Google Scholar]

- Young DW, Dembo R, Henderson CE. A national survey of substance abuse treatment for juvenile offenders. J Subst Abuse Treat. 2007;32:255–266. doi: 10.1016/j.jsat.2006.12.018. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Young DW, Taxman FS. Instrument development on treatment practices and rehabilitation philosophy. College Park, MD: CJ-DATS Coordinating Center Working Paper; 2004. [Google Scholar]