Abstract

Associative reinforcement provides a powerful explanation of learned behavior. However, an unproven but long-held conjecture holds that spatial learning can occur incidentally rather than by reinforcement. Using a carefully controlled virtual-reality object-location memory task, we formally demonstrate that locations are concurrently learned relative to both local landmarks and local boundaries but that landmark-learning obeys associative reinforcement (showing “overshadowing” and “blocking” or “learned irrelevance”), whereas boundary-learning is incidental, showing neither overshadowing nor blocking nor learned irrelevance. Crucially, both types of learning occur at similar rates and do not reflect differences in levels of performance, cue salience, or instructions. These distinct types of learning likely reflect the distinct neural systems implicated in processing of landmarks and boundaries: the striatum and hippocampus, respectively [Doeller CF, King JA, Burgess N (2008) Proc Natl Acad Sci USA 105:5915–5920]. In turn, our results suggest the use of fundamentally different learning rules by these two systems, potentially explaining their differential roles in procedural and declarative memory more generally. Our results suggest a privileged role for surface geometry in determining spatial context and support the idea of a “geometric module,” albeit for location rather than orientation. Finally, the demonstration that reinforcement learning applies selectively to formally equivalent aspects of task-performance supports broader consideration of two-system models in analyses of learning and decision making.

Keywords: associative learning, blocking, hippocampus, overshadowing, striatum

The dominant model of learning from repeated feedback (or “reinforcement”) associates environmental cues with expected reinforcement and with actions, using a single prediction-error signal (the difference between actual and expected reinforcement) to modify these associations (1–5). However, spatial learning, a crucial aspect of daily life, has long been proposed to exemplify a qualitatively different type of learning (6, 7), whereby “incidental” and “latent” learning occur independent of reinforcement. Perhaps surprisingly, formal demonstration that spatial learning deviates from the predictions of associative reinforcement, given the additional assumption that exploration can be rewarding in itself, has not been forthcoming (8–11).

Here, we carefully dissociate two contributions to spatial learning in virtual environments: learning locations relative to a local boundary and learning locations relative to a local landmark. Learning relative to a local landmark has been shown to follow the predictions of associative reinforcement learning (10). By contrast, we predicted that boundary-related learning would be incidental, for two reasons. First, the hippocampus has been specifically implicated in incidental learning in spatial (12) and other (13–19) domains. Second, the hippocampus seems to specifically process location relative to local environmental boundaries. The firing of hippocampal place cells reflects distances and directions to local boundaries (20, 21) but not to intramaze landmarks (22), and human hippocampal activation corresponds to learning of locations relative to local boundaries but not to local landmarks (23).

Associative reinforcement operating on multiple cues, using a single error term, predicts “blocking” (24) and “overshadowing” (24, 25) between cues. We give a standard analysis, based on ref. 1, but note that these predictions also apply to other formulations of associative learning based on an error-correcting principle (2, 4, 26). Expected reinforcement v is related to the vector of stimuli u via the vector of associative strengths or “weights” w according to:

$$v = \mathbf{w} \cdot \mathbf{u} \tag{1}$$

and the learning rule adjusts these weights according to:

$$\mathbf{w} \rightarrow \mathbf{w} + \epsilon \, \delta \, \mathbf{u} \tag{2}$$

where ε is a constant and δ, the prediction-error term, is the difference between expected and actual reinforcement r:

$$\delta = r - v \tag{3}$$

It can be seen that learning to cue 2 (i.e., adjusting weight w2 from element u2 of the stimulus vector) is reduced by the extent to which reinforcement is already predicted by another cue 1 (e.g., if w2 = 0 and v = w1u1 = r, then δ = 0 and w2 will not be modified). Put simply, if one cue already accurately predicts feedback, the error term δ is reduced, blocking the learning of associations from other cues. Similarly, when learning to two cues occurs concurrently, learning to one of the cues can overshadow learning to the other by reducing δ. In models of learning to act through reinforcement, the above mechanism is referred to as the “critic,” whereas an “actor” learns to associate stimuli to actions, using the same prediction-error and learning rule to modify these associations [more generally, Eq. 3 is adapted to include the effects of actions on the prediction of future reward, e.g., via the temporal difference rule: δ(t) = r(t) + v(t + 1) − v(t); see refs. 5 and 27]. Thus, learned behavior (“instrumental” conditioning) is predicted to show blocking and overshadowing in the same way as learned expectation of reward (Pavlovian or “classical” conditioning). We predicted that learning relative to the landmark would show blocking and overshadowing, whereas learning relative to the boundary would continue irrespective of prior or concurrent learning to other cues.
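The blocking and overshadowing predictions can be illustrated with a minimal simulation of the error-correcting rule described above (expected reinforcement as the weighted sum of the stimuli, prediction error as actual minus expected reinforcement). This is a sketch only: the function name `rescorla_wagner`, the learning rate of 0.2, the number of trials, and the binary cue coding are illustrative assumptions, not parameters taken from the experiments.

```python
def rescorla_wagner(trials, n_cues, epsilon=0.2, weights=None):
    """Apply the delta rule to a list of (u, r) trials and return the final weights.

    u is a tuple of cue activations (1 = present, 0 = absent); r is the reinforcement.
    All parameter values here are illustrative assumptions.
    """
    w = list(weights) if weights is not None else [0.0] * n_cues
    for u, r in trials:
        v = sum(wi * ui for wi, ui in zip(w, u))   # expected reinforcement (Eq. 1)
        delta = r - v                               # prediction error (Eq. 3)
        w = [wi + epsilon * delta * ui for wi, ui in zip(w, u)]  # weight update (Eq. 2)
    return w

# Simple learning: cue 2 presented alone.
w_simple = rescorla_wagner([((0, 1), 1.0)] * 50, n_cues=2)

# Overshadowing: cues 1 and 2 always presented together from the start.
w_compound = rescorla_wagner([((1, 1), 1.0)] * 50, n_cues=2)

# Blocking: cue 1 is trained alone first, then both cues are presented together.
w_pre = rescorla_wagner([((1, 0), 1.0)] * 50, n_cues=2)
w_blocked = rescorla_wagner([((1, 1), 1.0)] * 50, n_cues=2, weights=w_pre)

print("cue 2 alone:        ", round(w_simple[1], 3))    # approaches 1
print("cue 2 overshadowed: ", round(w_compound[1], 3))  # settles near 0.5
print("cue 2 blocked:      ", round(w_blocked[1], 3))   # stays near 0
```

Because both cues share one error term, the weight to cue 2 is smaller after compound training than after simple training, and it barely changes at all when cue 1 already predicts the reinforcement.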

To test these predictions, we designed a virtual environment in which locations can be learned relative to a local circular boundary or to a local intramaze landmark or landmarks. Distal cues were always present to provide orientation but could not be associated with locations because they were rendered at infinity. The virtual environment (a modified video game; see Methods) was presented in first-person perspective on a screen; the participant could navigate through it by pressing buttons to turn left or right or to move forwards. Participants initially collected several objects in turn from the virtual arena by navigating to them. In each subsequent trial, they were cued to replace an object (the object appeared on a blank background; they then navigated to where they thought they had collected it and pressed a button). Performance was measured as the distance between the participant's response and the correct location. Learning trials included feedback (the object appearing in the correct location to be collected again) from which participants learned to improve performance. “Test” trials omitted this feedback. See Fig. 1. Start positions varied between trials so that path integration could not be used.
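The performance measure can be made concrete with a short sketch. It assumes that locations are stored as (x, y) coordinates in virtual meters on the arena floor and that the distance is Euclidean; the function names and the example coordinates are hypothetical and are not taken from the study.

```python
import math

def replacement_error(response_xy, correct_xy):
    """Euclidean distance (virtual meters) between the response and the correct location."""
    return math.hypot(response_xy[0] - correct_xy[0],
                      response_xy[1] - correct_xy[1])

def mean_replacement_error(trials):
    """Average replacement error over a list of (response_xy, correct_xy) pairs."""
    errors = [replacement_error(resp, corr) for resp, corr in trials]
    return sum(errors) / len(errors)

# Hypothetical trials for one participant (coordinates are made up for illustration).
example_trials = [((3.0, 4.0), (0.0, 0.0)), ((10.0, 0.0), (10.0, 15.0))]
print(mean_replacement_error(example_trials))
```

A smaller mean replacement error indicates more accurate memory for the object locations.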

Fig. 1.

Virtual reality task. (A) Trial structure (after initial collection of objects). Participants replaced the cued object after a short delay phase and received feedback during learning trials (object appears in correct location immediately after the response and is collected) but not testing trials. (B) Virtual arena from the participant's perspective (i, replace phase; ii, feedback phase; different viewpoints) showing the intramaze landmark (traffic cone), the boundary (circular wall), the extramaze orientation cues (mountains, which were projected at infinity), and one object (vase). ITI, intertrial interval.

Results

Overshadowing Experiments.

In everyday life, locations are learned in the presence of multiple stable cues of both types [local landmarks (L) and boundaries (B)]. How do both types of learning interact? Four groups of 12 participants each learned four object-locations over four trials per object with either a landmark or a boundary present alone (“simple learning,” groups L and B) or both cues present together (“compound learning,” groups LB1 and LB2). Performance on all four objects was then tested with one or other cue alone (group LB1 tested with L; group LB2 tested with B) to compare the strengths of associations formed to that cue during compound learning with the strengths of equivalent associations formed during simple learning (i.e., group L tested with L; group B tested with B) (see Fig. 2). Associative reinforcement predicts a reduced associative strength after compound learning compared with simple learning because of overshadowing.

Fig. 2.

Overshadowing experiment. The landmark is overshadowed by the boundary but not vice versa. (A) Four different groups (columns, 12 participants per group) learned four object-locations with either one of (simple learning) or both of the landmark (L) and boundary (B) present (compound learning; Upper) and were tested with either landmark or boundary alone (Lower). (B) Boundary overshadows landmark (i.e., the presence of the boundary during learning reduces learning to the landmark in group LB1 compared with group L) but not vice versa (i.e., the presence of the landmark during learning does not reduce learning to the boundary in group LB2 compared with group B). Bars indicate mean distance between response and correct location during test phase in virtual meters, ± SEM; *, P < 0.05; ns, not significant. +, landmark; circle, boundary; dots, object locations.

As predicted, learning to the landmark was reduced (overshadowed) by the presence of the boundary during compound learning, consistent with associative reinforcement, whereas learning to the boundary occurred incidentally, unaffected by the presence or absence of the landmark. ANOVA of the test performance of the four groups as a function of learning-situation (simple vs. compound) × test-cue (L vs. B) revealed a significant interaction (F(1,44) = 5.57; P < 0.05) and no main effects of learning-situation (F(1,44) = 3.16; P > 0.08) or test-cue (F(1,44) = 2.79; P > 0.1). Posthoc tests confirmed that replacement error was greater in group LB1 than in group L (both tested with landmark; t(22) = 2.58, P < 0.05), whereas groups LB2 and B did not differ (both tested with boundary; t(22) < 1, P > 0.6). These differential effects were not due to differences in performance during the learning phase with either cue [no difference in simple learning to L and B: t(22) < 1, P > 0.7; see supporting information (SI) Fig. 5 and SI Text].

Thus, the presence of the boundary during compound learning overshadowed learning to the landmark, as predicted by associative reinforcement. By contrast, the presence of the landmark during compound learning did not affect learning to the boundary. Simple learning to either cue alone did not differ, ruling out differences in the salience of the two cues. Nonetheless, we also explicitly manipulated the visual salience of the landmark, running new groups L′, LB1′, and LB2′ in a second experiment with a landmark three times as tall as previously (group B never saw the landmark). We found identical effects; overshadowing of the landmark was unaffected by its visual salience (see SI Fig. 4 and SI Text).

Blocking Experiments.

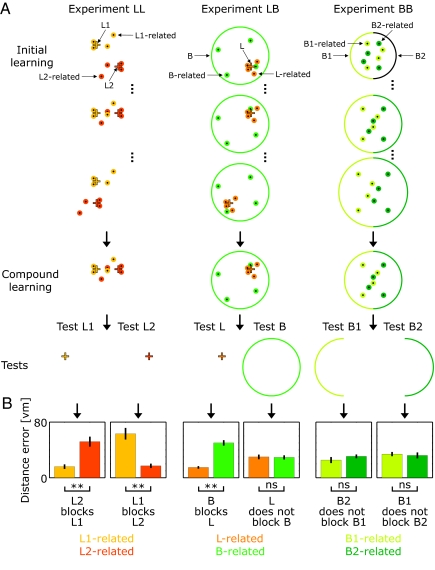

In three further experiments, we examined blocking between two local landmarks L1 and L2 (Experiment LL), between a local landmark L and a local boundary B (Experiment LB), and between opposite sections B1 and B2 of the local boundary (Experiment BB). Each experiment occurred in three phases: (i) During an initial learning phase (known as “prelearning”), the two cues (L1 and L2, L and B, or B1 and B2) moved relative to each other at the start of each block of trials (eight blocks, 14 trials per object in total) with four object-locations paired with either cue; i.e., after the two cues moved, four objects kept a fixed bearing to the first cue (but not to the second), whereas the other four objects kept a fixed bearing to the second cue (but not to the first). (ii) Next, during compound learning, both cues and object-locations remained fixed (six trials per object), allowing associations to be learned to the previously unpaired cue. (iii) Finally, performance was tested with one or other cue alone (four objects tested with each cue, two paired with either cue during initial learning) to compare the strength of association to object-locations previously paired with it or with the other cue (see Fig. 3 for details). Associative reinforcement predicts that, during the compound learning phase, learning to the initially unpaired cue will be blocked by prior learning to the initially paired cue.

Fig. 3.

Blocking experiments. Landmark learning obeys associative reinforcement; boundary learning does not. (A) Three experiments investigate blocking between two local landmarks (Experiment LL), between a local landmark and a boundary (Experiment LB), and between opposing sections of a boundary (Experiment BB). In each experiment, 16 participants performed initial learning of eight object-locations over eight blocks (three example blocks shown) with different spatial configurations of the two cues: landmarks L1 (light orange) and L2 (dark orange) in Experiment LL (Left); landmark L (orange) and boundary B (green) in Experiment LB (Center); or opposite sections B1 (light green) and B2 (dark green) of an enclosing boundary in Experiment BB (Right; note here that the boundary radius changed from block to block). Four object locations were paired with one cue (L1, L, or B1), and four were paired with the other cue (L2, B, or B2), indicated by little dots in the same colors as the associated cues. For example, when landmarks L1 and L2 were moved relative to each other, the four L1-associated objects (light orange dots) kept a fixed bearing to landmark L1 (light orange plus sign) but not to landmark L2 (dark orange plus sign) and vice versa for L2-associated objects (dark orange dots). Subsequently, participants performed compound learning, where both cues were fixed, allowing associations to be learned to the previously unpaired cue. (B) Performance was tested with either cue alone. Learning to the landmark was blocked by prior learning to either another landmark (see Test L1 and Test L2 in Experiment LL) or to the boundary (see Test L in Experiment LB). Learning to the boundary was unaffected by prior learning to the landmark (see Test B in Experiment LB) or to the opposite section of the boundary (see Test B1 and Test B2 in Experiment BB). **, P < 0.001; ns, not significant. 
Orange +, landmark; green circle/half circle, boundary; dots, object locations (orange is associated with the landmark; green is associated with the boundary).

This design maximizes the power of the effects predicted by associative reinforcement, because the strengths of associations to either cue can be compared within-subject and will also include any effects of “learned irrelevance” (28) of the unpaired cue during initial learning. This latter effect is consistent with the associative reinforcement framework and explained by a reduction in the learning rate (or “associability”; ε in Eq. 2) specifically for the unpaired cue (28); or by association of the unpaired cue to the absence of reinforcer (i.e., a negative or “inhibitory” association from that cue in Eq. 1). We refer to these as blocking experiments for convenience and because the primary effect of sufficient initial learning to the paired cue (i.e., reducing the error term to zero) would be to prevent any subsequent learning to the unpaired cue, notwithstanding the retardation of any potential learning to it due to learned irrelevance.
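The two accounts of learned irrelevance mentioned above can both be expressed within the same error-correcting rule, as the following sketch suggests. It assumes a per-cue learning rate (a reduced ε for the unpaired cue) in one case and a negative starting weight (an inhibitory association) in the other. The function name `delta_rule` and the specific values (0.2, 0.05, −0.3) are arbitrary illustrations, not estimates from the data, and treating each of six model updates as one compound learning trial is a simplification.

```python
def delta_rule(trials, weights, epsilons):
    """Error-correcting update with a separate learning rate for each cue.

    weights and epsilons are per-cue sequences; all values used below are
    illustrative assumptions.
    """
    w = list(weights)
    for u, r in trials:
        v = sum(wi * ui for wi, ui in zip(w, u))   # expected reinforcement
        delta = r - v                               # shared prediction error
        w = [wi + ei * delta * ui for wi, ei, ui in zip(w, epsilons, u)]
    return w

compound = [((1, 1), 1.0)] * 6  # six compound trials with both cues present

# Baseline: both cues naive and equally associable.
w_equal = delta_rule(compound, weights=(0.0, 0.0), epsilons=(0.2, 0.2))

# Account 1: reduced associability (smaller learning rate) for cue 2.
w_low_eps = delta_rule(compound, weights=(0.0, 0.0), epsilons=(0.2, 0.05))

# Account 2: cue 2 starts with an inhibitory (negative) association.
w_inhibitory = delta_rule(compound, weights=(0.0, -0.3), epsilons=(0.2, 0.2))

print(w_equal[1], w_low_eps[1], w_inhibitory[1])
```

Under either assumption, the weight to cue 2 after compound learning ends up lower than in the baseline, which is the sense in which learned irrelevance adds to the blocking effect in this design.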

In all three experiments, performance improved at similar rates during initial learning for both types of cue, reaching near-asymptotic levels from which compound learning produced negligible improvement (see SI Fig. 6 and SI Text). Blocking during the compound learning phase was assessed by testing with one cue alone (e.g. L1) and comparing performance on objects initially paired with that cue with performance on objects initially paired with the other cue (e.g. L2). If learning to cue L1 during the compound phase is blocked by prior learning to L2, performance will be worse for objects initially associated with L2 compared with those initially associated with L1.

As predicted, learning to a local landmark during the compound phase was blocked by prior learning to either another landmark (experiment LL; test L1: t(15) = 5.04; P < 0.001; test L2: t(15) = 4.79; P < 0.001) or to the boundary (experiment LB; test L: t(15) = 8.71; P < 0.001). Thus, landmark-learning appears to operate via associative reinforcement. By contrast, learning to the boundary during the compound phase was unaffected by prior learning to either a landmark (experiment LB; test B: t(15) < 1; P > 0.75) or to the opposite section of boundary (experiment BB; test B1: t(15) = 1.12; P > 0.25; test B2: t(15) < 1; P > 0.7) (see Fig. 3). See SI Text for results of the basic ANOVAs.

Thus, unlike learning to the landmark, learning to the boundary occurs incidentally, irrespective of the level of error: Object-locations already successfully predicted by another cue were nonetheless learned relative to the boundary and to the same accuracy as object-locations paired with the boundary throughout.

As with the overshadowing results, the differential blocking of landmarks but not boundaries does not reflect differences in learning rates (see above) or greater salience of boundaries (initial performance is, if anything, slightly better for objects paired with landmarks; see SI Fig. 6 and SI Text). In further analyses of objects equidistant to both cues during compound learning, we additionally ruled out that differential blocking effects were due to differential cue proximity (see SI Fig. 7 and SI Text).

Finally, we note that the absence of any performance differences, when tested with a boundary in experiments LB and BB, between objects initially paired or unpaired with the boundary also rules out any effects of learned irrelevance. Both learned irrelevance and blocking should produce a performance difference in this situation, and none was found.

Discussion

Although associative reinforcement via a single prediction-error signal provides a powerful, almost ubiquitous, model for learning over repeated experience, it captures the acquisition of some types of knowledge, but not others, even when both are learned concurrently in formally identical conditions (see ref. 29; O. Hardt, A. Hupbach, and L. Nadel, unpublished data). More specifically, learning object-locations relative to intramaze landmarks obeys associative reinforcement (showing overshadowing and blocking or learned irrelevance), whereas learning relative to environmental boundaries is incidental, occurring independently of behavioral error or the presence of other predictive cues (showing neither overshadowing nor blocking nor learned irrelevance).

The two types of learning occur in parallel within the same task, without differences in the time-courses of learning and stimulus presentation, performance levels, instructions, location-cue proximity, or cue salience. Thus, learning to the boundary seems fundamentally inconsistent with associative reinforcement based on a single prediction-error term, and would potentially require separate error signals for landmarks and for each segment of boundary in the environment (see also ref. 30).

The differential learning relative to the boundary compared with that relative to the landmark over the three blocking experiments is also inconsistent with the many elaborations of basic associative reinforcement. Thus, increased learning rates to more salient stimuli (2) cannot explain our results, because performance indicates that the landmark was always at least as salient as the boundary. There was also no effect of tripling the landmark's height. Goal-directed reinforcement learning, in which information about the nature of the reinforcer is learned (refs. 31 and 32; see also ref. 33), cannot explain our differential results, because learning to both cues results from the same feedback. Other potential explanations also fall short: that poor predictors during initial learning will have an increased learning rate during compound learning (4), that the presence of a good predictor will aid the learning to a poor predictor by increasing reward frequency (“feature enhancement”) (34), that a cue's associability varies with its associative history (35), or that performance changes after removal of a cue reflect a “generalization decrement” (36, 37). None of these can easily explain the differential blocking seen in experiment LL compared with experiment BB, in which the contingencies of the two landmarks and the two boundaries are identical.

What causes the distinct characteristics of learning to landmarks and boundaries in our experiments? One potential explanation would be that the two cues interact differently with visual behavior (see also ref. 34) such that the previously paired landmark is not seen during some compound learning trials, allowing learning to the boundary, whereas the (larger) boundary is nearly always seen and therefore provides more potent blocking. However, participants started each trial from a position toward the edge of the arena looking inwards, with all cues visible, and often made an entire rotation during the feedback phase. In addition, this explanation would predict at most a graded difference (i.e., with some learning for the unpaired landmark in experiment LL and some blocking of the unpaired boundary in experiment BB), rather than the stark contrast observed. Thus, this explanation applies more readily to failures of transiently presented stimuli in blocking continuous “contextual” cues (38) (i.e., temporal or environmental cues, although incidental learning to environmental boundaries might also contribute to the latter).

Our interpretation, and the hypothesis behind our design, is that the contributions of landmarks and boundaries to spatial memory are supported by distinct striatal and hippocampal systems operating different learning rules. To test for this, participants in an fMRI experiment (23) performed four blocks of trials, each block identical to the learning phase of compound groups LB1 and LB2 in our overshadowing experiment. However, at the start of each block, the landmark and boundary were moved relative to each other, with two of the four objects paired with either cue (similarly to the initial learning phase of our blocking experiments). The association of each object location to landmark or boundary was learned at similar rates for either cue. Significantly, learning relative to the boundary corresponded to activation in the right posterior hippocampus, whereas right dorsal striatum activation reflected learning of landmark-related locations. In this view, the incidental learning to boundaries comprises unsupervised Hebbian association between hippocampal place cells [whose firing represents conjunctions of bearings to boundaries (20, 21)] and object locations, whenever both representations are coincidentally active.
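To illustrate why an unsupervised Hebbian rule would not show blocking, the sketch below uses a bounded Hebbian update in which each weight grows whenever its own cue and the object-location representation are coactive, with no shared error term. This is an illustrative assumption rather than the model proposed in the text: the saturating form `(1 - w)`, the rate `eta`, the binary coding, and the function name `hebbian` are all hypothetical choices. The trial counts (14 initial, 6 compound) mirror the per-object counts in the blocking experiments.

```python
def hebbian(trials, n_cues, eta=0.2, weights=None):
    """Bounded Hebbian learning: each weight depends only on its own cue's coactivity.

    trials is a list of (u, y) pairs, where u holds cue activations and y is the
    activity of the object-location representation. The rule is an illustrative
    assumption, not a fitted model.
    """
    w = list(weights) if weights is not None else [0.0] * n_cues
    for u, y in trials:
        # No prediction error: other cues' weights never enter the update.
        w = [wi + eta * ui * y * (1.0 - wi) for wi, ui in zip(w, u)]
    return w

# Cue 2 learned alone for six trials.
w_alone = hebbian([((0, 1), 1.0)] * 6, n_cues=2)

# Cue 1 trained first (14 trials), then six compound trials with both cues.
w_pre = hebbian([((1, 0), 1.0)] * 14, n_cues=2)
w_after = hebbian([((1, 1), 1.0)] * 6, n_cues=2, weights=w_pre)

print(w_alone[1], w_after[1])  # identical: prior learning to cue 1 does not block cue 2
```

In contrast to an error-correcting rule, the association to cue 2 reaches the same strength whether or not cue 1 already predicts the outcome.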

Previous studies of associative reinforcement in spatial learning have produced mixed results. Our present data, in combination with our identification of distinct systems for processing local boundaries versus local landmarks (23), help to clarify these previous findings. Thus, rats in the water maze task use the boundary to locate the platform (39), a task that is hippocampal-dependent (40), and boundary-learning did not show blocking in our study. Conversely, learning the platform location relative to an intramaze landmark in the water maze is not hippocampal-dependent (41), and blocking has been observed in the processing of intramaze landmarks both in rats (10) and our study. Note that we avoided using intramaze landmarks placed exactly at the target location, because these can impede learning of more distant cues simply by focusing attention on the target location alone (11, 42). We also ensured that orientation depended solely on distal cues. Otherwise, orientation might depend on many types of cue, combining outside of the hippocampus in the head-direction circuit (43) and possibly by associative reinforcement. Thus, asymmetrical boundary geometry and distal cues combine to orient participants (44) [and place cell firing (45)] and can overshadow and block each other in doing so (8, 9, 46, 47). Whether or not distal cues do block each other in determining orientation depends on many factors, including task instructions (O. Hardt, A. Hupbach, and L. Nadel, unpublished data). Even though boundary geometry can dominate intramaze cues to orientation in some circumstances (7, 48) and this can result in an absence of blocking or overshadowing of the boundary in determining orientation (49), it is hard to rule out associative explanations invoking the differential salience of the cues (2) or feature enhancement because of interactions between cues mediated by behavioral choice (34).

What distinguishes a local boundary from a local landmark? The finding that firing of hippocampal place cells is well described as a match to the distances to obstacles in all directions around the animal (20, 21) indicates that an important attribute for hippocampal processing is simply the horizontal angle subtended by the obstacle at the animal. Thus, “boundaryness” may simply reflect extent as seen from the participant's viewpoint. Note that the distinction is not due to differential proximity to the object-location, because we found the same differential pattern of blocking between boundaries and landmarks equidistant to the object (see SI Fig. 7 and ref. 23 for further discussion).

How do our results relate to findings that disoriented rats (48) and toddlers (50) reorient themselves using only boundary geometry—ignoring salient local features? These are interpreted as evidence of a “geometric module” for reorientation (refs. 7, 48, and 50 but see also ref. 51). Our results are not explainable by this type of nonspecific dominance of the boundary over the landmark: Performance and learning rates to both types of cue are matched. In addition, orientation is controlled by the distal cues rather than the circular boundary. By contrast, we show that there may be specialized learning of locations relative to surface geometry, even when it does not dominate behavior, in that it proceeds via a different rule than learning relative to intramaze landmarks.

How do our results relate to the involvement of the hippocampal and striatal systems in respectively supporting “place” versus “response” learning (12, 52), or flexible–relational/declarative/episodic versus procedural memory (13–15, 17, 18, 53)? Consistent with many of these suggestions, we formally demonstrate that the two systems' distinct roles may result from differences in the learning rule implemented in either system—and not necessarily from other differences, such as in learning rate, that were not controlled in previous less formal approaches. In this view, striatal synaptic plasticity is controlled by a single error signal reflecting deviations from expected reinforcement (1, 3, 5) possibly mediated by dopamine (54–56). This produces “trial-and-error learning” or “learning by doing” appropriate for procedural memory (15). Unsupervised hippocampal Hebbian synaptic plasticity occurs independent of error or reinforcement, although it may be boosted by novelty [i.e., inconsistency between perception and relationships stored in hippocampus (12, 17)]. This produces incidental learning or “learning by observation” appropriate for maintenance of a flexible mental model (17), “mediating representation” (19), or “cognitive map” (6, 12), and for efficient encoding of experience into episodic memory (15, 16). In addition, our results suggest that the local boundaries may dominate over local landmarks in defining the spatial context of hippocampally mediated episodic memories. More generally, our demonstration of concurrent incidental and reinforcement learning requires a broadening of the models of learning and decision-making applied to social and economic neuroscience (see also ref. 33).

Methods

Participants.

One hundred thirty-two male participants (aged 18–37, mean age 21.9 years) took part in this study, which was approved by the local Research Ethics Committee. Data from three additional participants had to be excluded because of technical problems or misunderstanding of the task. Participants gave full informed written consent and were paid for participating.

Virtual Reality Environment.

We used UnrealEngine2 Runtime software (Epic Games) to display a first-person perspective view of a grassy plane surrounded by a landscape of two mountains, clouds, and the sun (created by using Terragen; Planetside Software) and projected at infinity, to provide distal cues to orientation but not to location. The arena was surrounded by a circular boundary (a cliff) in some experiments (or contained a semicircular portion of it in the BB blocking test). In the absence of the cliff, the grassy plane extended to infinity. A traffic cone was used as an intramaze landmark (and a bowling pin as the second landmark in the LL blocking experiment). Both the boundary and landmarks were rotationally symmetric, leaving the distal cues as the main source of orientation. Participants moved the viewpoint by pressing keys to move forward and turn left or right. The viewpoint was ≈2 virtual meters above ground, the standard boundary was ≈180 virtual meters in diameter (this varied in the BB blocking experiment), and the virtual heading and location were recorded every 100 ms. Participants received training in an unrelated virtual environment before performing the experiment (see SI Text).

Stimuli, Task, and Trial Structure.

Pictures of everyday objects were used as experimental stimuli. Before each experiment, participants familiarized themselves with the arena by exploring for 2–3 min. Next, objects were presented sequentially (once each) within the arena and participants collected the objects by running over them and were instructed to remember their locations. At the beginning of each subsequent trial, a picture of an object was presented for 2 s on a blank background (the cue phase), followed by a variable delay period (fixation cross for 2–6 s; mean 4 s). Participants then started at a variable position within the arena (see SI Text) and had to move to where they thought the cued object had been (the replace phase; mean duration, 8.20 s). After participants had indicated their response by a button press, feedback was provided, i.e., the object appeared in its correct position and participants collected it by running over it (the feedback phase; mean duration 6.23 s). Feedback was not provided in the test phases of the experiments (see below); in the blocking experiments, participants waited in the arena for 6 s (mean) after their response. A fixation cross was then presented for a variable intertrial interval (ITI) (overshadowing experiments: 2–10 s, mean 6 s; blocking experiments: 2–6 s, mean = 4 s) before the start of the next trial.

Overshadowing Experiments.

Procedure and design.

The overshadowing experiments comprised two phases: learning and test. Each phase was one block of 16 trials (4 trials per object; four objects). Objects were presented in pseudorandom order within blocks. Feedback was provided in the learning phase but not in the test phase. To reduce the error variance in the planned between-group comparisons, each participant of a particular group had the same configuration of object order and delay and ITI times as a matching participant in the other groups. Participants were randomly assigned to different groups.

Basic experiment.

Forty-eight participants were tested in four groups of 12. In the learning phase of groups LB1 and LB2, both the landmark and the boundary were present. For group L only the landmark was present, and for group B only the boundary was present. In the test phase, either solely the landmark (groups LB1 and L) or solely the boundary (groups LB2 and B) was present [see Fig. 2; see SI Text for details of the control experiment with a taller landmark (three groups of 12 participants)].

Blocking Experiments.

Procedure and design.

The three blocking experiments (16 participants in each) comprised three phases: initial learning (or prelearning), separated into eight blocks of one to three trials per object (14 trials per object in total; eight experimental objects); compound learning (one block of six trials per object); and a test phase comprising two blocks, in which memory for four experimental object locations was tested in the presence of one cue alone, followed by testing of memory for the other four object locations in the presence of the other cue alone (six trials per object). Feedback about the correct object position was provided in the initial learning and compound learning phases but not in the test phase. Objects were presented in pseudorandom order within blocks. Trials with two control objects were interspersed among the regular trials; these trials did not require spatial memory, because the objects were collected from an infinite grassy plane with a blue background and were always visible, as in our fMRI experiment (see ref. 23 for details).

Initial learning phase.

The experimental objects were separated into two sets: four objects associated with cue 1 (L1 in Experiment LL; L in Experiment LB; or B1 in Experiment BB) and four objects associated with cue 2 (L2 in Experiment LL; B in Experiment LB; or B2 in Experiment BB).

In Experiment LL, the two landmarks were moved relative to each other between blocks (using three different spatial configurations of the two landmarks). After each change, four objects maintained a fixed bearing to landmark L1 (but not to landmark L2); the other four objects maintained a fixed bearing to landmark L2 (but not to landmark L1).

In Experiment LB, the landmark and boundary moved relative to each other between blocks (using four different landmark positions approximately in the middle of the NE, SE, SW, and NW sectors of the arena, as defined by the distal orientation cues). After each change, four objects maintained a fixed bearing to the boundary B (but not to the landmark L); the other four objects maintained a fixed bearing to the landmark L (but not to the boundary B).

In Experiment BB, the radius of the circular boundary changed between blocks (three sizes: the one used in all other experiments including a boundary, a 20% smaller radius, and a 20% larger radius). After each change, four objects maintained a fixed bearing to the Western part of the cliff, boundary B1 (but not to boundary B2); the other four objects maintained a fixed bearing to the Eastern part of the cliff, boundary B2 (but not to boundary B1).

Thus, over the course of the initial learning phase, the positions of four objects were predicted by cue 1 but not by cue 2, whereas the positions of the other four objects were predicted by cue 2 but not by cue 1 (see Fig. 3).
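The logic of the initial learning phase can be sketched in a few lines of Python for Experiment LB. The coordinates, offsets, and function names below are hypothetical and in arbitrary units. "Fixed bearing" is modeled here as a fixed displacement vector from the cue, and the boundary is assumed to stay centered on the origin while the landmark moves; both are simplifying assumptions rather than the authors' implementation.

```python
def place_object(cue_position, offset):
    """Object location = cue location + a fixed displacement from that cue."""
    return (cue_position[0] + offset[0], cue_position[1] + offset[1])

def displacement(position, reference):
    """Vector from a reference point to a position."""
    return (position[0] - reference[0], position[1] - reference[1])

# Hypothetical layout: the boundary is centered on the origin and the
# landmark takes one position per sector (NE, SE, SW, NW).
BOUNDARY_CENTER = (0.0, 0.0)
landmark_by_block = [(20.0, 20.0), (20.0, -20.0), (-20.0, -20.0), (-20.0, 20.0)]

landmark_object_offset = (5.0, -3.0)    # fixed relative to landmark L
boundary_object_offset = (-30.0, 10.0)  # fixed relative to boundary B

placements = []
for landmark in landmark_by_block:
    placements.append(
        {
            "landmark": landmark,
            # moves with the landmark, so only L predicts its location
            "landmark_object": place_object(landmark, landmark_object_offset),
            # stays put in the arena, so only B predicts its location
            "boundary_object": place_object(BOUNDARY_CENTER, boundary_object_offset),
        }
    )
```

Across blocks the landmark-related object keeps the same displacement from L but changes position relative to B, whereas the boundary-related object keeps its position relative to B but changes its displacement from L.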

Compound learning phase.

During compound learning, both cues predicted the position of all eight experimental objects, remaining in the same locations throughout.

Test phase.

Memory for four of the object locations defined in the compound learning phase was tested with only one cue present (L1 in Experiment LL, L in Experiment LB, B1 in Experiment BB), and the other four were tested with only the other cue present (L2 in Experiment LL, B in Experiment LB, B2 in Experiment BB). Two cue 1-related objects and two cue 2-related objects entered each test. The order of test runs was counterbalanced across subjects.
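For orientation, the prediction of an associative account for this three-phase design can be sketched with the Rescorla–Wagner rule (ref. 1), in which every cue present on a trial is updated by a shared prediction error. The sketch below is an illustration, not the authors' analysis. The learning rate and cue labels are assumptions, and the initial learning phase is simplified to training cue 1 alone, whereas in the experiments both cues were present and cue 2 was merely nonpredictive. Only the trial counts (14 initial and 6 compound trials per object) are taken from the text.

```python
def rescorla_wagner(trials, alpha=0.3, lam=1.0):
    """Apply dV = alpha * (lam - sum of V over present cues) on each trial."""
    strength = {}
    for present_cues in trials:
        prediction = sum(strength.get(cue, 0.0) for cue in present_cues)
        error = lam - prediction  # one prediction error shared by all cues
        for cue in present_cues:
            strength[cue] = strength.get(cue, 0.0) + alpha * error
    return strength

PRETRAIN_TRIALS = 14  # initial learning trials per object (from the text)
COMPOUND_TRIALS = 6   # compound learning trials per object (from the text)

# Blocking: cue 1 is trained first, then cue 1 and cue 2 are trained together.
blocked = rescorla_wagner(
    [("cue1",)] * PRETRAIN_TRIALS + [("cue1", "cue2")] * COMPOUND_TRIALS
)

# Comparison case: compound training without any pretraining of cue 1.
control = rescorla_wagner([("cue1", "cue2")] * COMPOUND_TRIALS)
```

Under these assumptions, cue 2 acquires almost no associative strength in the blocked case, because cue 1 already predicts the outcome and leaves little prediction error, whereas in the comparison case the two cues share the available strength. A test with cue 2 alone should therefore show poor performance only if learning to that cue follows this rule.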

Acknowledgments.

This work was funded by the Biotechnology and Biological Sciences Research Council and the U.K. Medical Research Council. We thank J. King for technical help; M. Lehmann for help with testing; and P. Dayan, U. Frith, M. Good, R. Honey, M. Mishkin, J. O'Keefe, and R. Rescorla for useful discussions.

Footnotes

The authors declare no conflict of interest.

This article contains supporting information online at www.pnas.org/cgi/content/full/0711433105/DC1.

References

- 1. Rescorla RA, Wagner AR. A theory of Pavlovian conditioning: Variations in the effectiveness of reinforcement and non-reinforcement. In: Black AH, Prokasy WF, editors. Classical conditioning II. Current research and theory. New York: Appleton-Century-Crofts; 1972. pp. 64–99.

- 2. Mackintosh NJ. A theory of attention: Variations in the associability of stimuli with reinforcement. Psychol Rev. 1975;82:276–298.

- 3. Dickinson A. Contemporary animal learning theory. Cambridge, UK: Cambridge Univ Press; 1980.

- 4. Pearce JM, Hall G. A model for Pavlovian learning: Variations in the effectiveness of conditioned but not of unconditioned stimuli. Psychol Rev. 1980;87:532–552.

- 5. Sutton RS, Barto AG. Reinforcement learning: An introduction. Cambridge, MA: MIT Press; 1998.

- 6. Tolman EC. Cognitive maps in rats and men. Psychol Rev. 1948;55:189–208. doi: 10.1037/h0061626.

- 7. Gallistel R. The organization of learning. Cambridge, MA: MIT Press; 1990.

- 8. Rodrigo T, Chamizo VD, McLaren IP, Mackintosh NJ. Blocking in the spatial domain. J Exp Psychol Anim Behav Process. 1997;23:110–118. doi: 10.1037//0097-7403.23.1.110.

- 9. Hamilton DA, Sutherland RJ. Blocking in human place learning: Evidence from virtual navigation. Psychobiol. 1999;27:453–461.

- 10. Biegler R, Morris RGM. Blocking in the spatial domain with arrays of discrete landmarks. J Exp Psychol Anim Behav Process. 1999;25:334–351.

- 11. Chamizo VD. Acquisition of knowledge about spatial location: Assessing the generality of the mechanism of learning. Q J Exp Psychol B. 2003;56:102–113. doi: 10.1080/02724990244000205.

- 12. O'Keefe J, Nadel L. The hippocampus as a cognitive map. Oxford, UK: Oxford Univ Press; 1978.

- 13. Hirsh R. The hippocampus and contextual retrieval of information from memory: A theory. Behav Biol. 1974;12:421–444. doi: 10.1016/s0091-6773(74)92231-7.

- 14. Mishkin M, Malamut B, Bachevalier J. Memories and habits: Two neural systems. In: Lynch G, McGaugh JL, Weinberger M, editors. Neurobiology of Learning and Memory. New York: Guilford; 1984. pp. 65–77.

- 15. Squire LR, Zola SM. Structure and function of declarative and nondeclarative memory systems. Proc Natl Acad Sci USA. 1996;93:13515–13522. doi: 10.1073/pnas.93.24.13515.

- 16. Morris RGM, Frey U. Hippocampal synaptic plasticity: Role in spatial learning or the automatic recording of attended experience? Philos Trans R Soc Lond B. 1997;352:1489–1503. doi: 10.1098/rstb.1997.0136.

- 17. Eichenbaum H, Cohen NJ. From Conditioning to Conscious Recollection: Memory Systems of the Brain. Oxford: Oxford Univ Press; 2001.

- 18. Poldrack RA, Clark J, Pare-Blagoev EJ, Shohamy D, Creso MJ, Myers C, Gluck MA. Interactive memory systems in the human brain. Nature. 2001;414:546–550. doi: 10.1038/35107080.

- 19. Gluck MA, Meeter M, Myers CE. Computational models of the hippocampal region: Linking incremental learning and episodic memory. Trends Cogn Sci. 2003;7:269–276. doi: 10.1016/s1364-6613(03)00105-0.

- 20. O'Keefe J, Burgess N. Geometric determinants of the place fields of hippocampal neurons. Nature. 1996;381:425–428. doi: 10.1038/381425a0.

- 21. Hartley T, Burgess N, Lever C, Cacucci F, O'Keefe J. Modeling place fields in terms of the cortical inputs to the hippocampus. Hippocampus. 2000;10:369–379. doi: 10.1002/1098-1063(2000)10:4<369::AID-HIPO3>3.0.CO;2-0.

- 22. Cressant A, Muller RU, Poucet B. Failure of centrally placed objects to control the firing fields of hippocampal place cells. J Neurosci. 1997;17:2531–2542. doi: 10.1523/JNEUROSCI.17-07-02531.1997.

- 23. Doeller CF, King JA, Burgess N. Parallel striatal and hippocampal systems for landmarks and boundaries in spatial memory. Proc Natl Acad Sci USA. 2008;105:5915–5920. doi: 10.1073/pnas.0801489105.

- 24. Kamin LJ. Predictability, surprise, attention and conditioning. In: Campbell BA, Church RM, editors. Punishment and aversive behavior. New York: Appleton-Century-Crofts; 1969. pp. 279–296.

- 25. Pavlov IP. Conditioned Reflexes. Oxford: Oxford Univ Press; 1927.

- 26. Wagner AR. SOP: A model of automatic memory processing in animal behaviour. In: Spear NE, Miller RR, editors. Information Processing in Animals: Memory Mechanisms. Hillsdale, NJ: Lawrence Erlbaum; 1981. pp. 5–47.

- 27. Dayan P, Balleine BW. Reward, motivation, and reinforcement learning. Neuron. 2002;36:285–298. doi: 10.1016/s0896-6273(02)00963-7.

- 28. Baker AG, Mackintosh NJ. Excitatory and inhibitory conditioning following uncorrelated presentations of CS and UCS. Anim Learn Behav. 1977;5:315–319.

- 29. Raby CR, Alexis DM, Dickinson A, Clayton NS. Planning for the future by western scrub-jays. Nature. 2007;445:919–921. doi: 10.1038/nature05575.

- 30. Rescorla RA. Effect of following an excitatory-inhibitory compound with an intermediate reinforcer. J Exp Psychol Anim Behav Process. 2002;28:163–174.

- 31. Dickinson A. Actions and habits: The development of behavioural autonomy. Philos Trans R Soc Lond B. 1985;308:67–78.

- 32. Balleine BW, Dickinson A. Goal-directed instrumental action: Contingency and incentive learning and their cortical substrates. Neuropharmacology. 1998;37:407–419. doi: 10.1016/s0028-3908(98)00033-1.

- 33. Daw ND, Niv Y, Dayan P. Uncertainty-based competition between prefrontal and dorsolateral striatal systems for behavioral control. Nat Neurosci. 2005;8:1704–1711. doi: 10.1038/nn1560.

- 34. Miller NY, Shettleworth SJ. Learning about environmental geometry: An associative model. J Exp Psychol Anim Behav Process. 2007;33:191–212. doi: 10.1037/0097-7403.33.3.191.

- 35. Le Pelley ME. The role of associative history in models of associative learning: A selective review and a hybrid model. Q J Exp Psychol B. 2004;57:193–243. doi: 10.1080/02724990344000141.

- 36. Pearce JM. A model for stimulus generalization in Pavlovian conditioning. Psychol Rev. 1987;94:61–75.

- 37. Brandon SE, Vogel EH, Wagner AR. A componential view of configural cues in generalization and discrimination in Pavlovian conditioning. Behav Brain Res. 2000;110:67–72. doi: 10.1016/s0166-4328(99)00185-0.

- 38. Williams DA, LoLordo VM. Time cues block the CS, but the CS does not block time cues. Q J Exp Psychol B. 1995;48:97–116.

- 39. Maurer R, Derivaz V. Rats in a transparent Morris water maze use elemental and configural geometry of landmarks as well as distance to the pool wall. Spatial Cognit Comput. 2000;2:135–156.

- 40. Morris RGM, Garrud P, Rawlins JN, O'Keefe J. Place navigation impaired in rats with hippocampal lesions. Nature. 1982;297:681–683. doi: 10.1038/297681a0.

- 41. Pearce JM, Roberts AD, Good M. Hippocampal lesions disrupt navigation based on cognitive maps but not heading vectors. Nature. 1998;396:75–77. doi: 10.1038/23941.

- 42. Redhead ES, Roberts A, Good M, Pearce JM. Interaction between piloting and beacon homing by rats in a swimming pool. J Exp Psychol Anim Behav Process. 1997;23:340–350. doi: 10.1037//0097-7403.23.3.340.

- 43. Taube JS. Head direction cells and the neuropsychological basis for a sense of direction. Prog Neurobiol. 1998;55:225–256. doi: 10.1016/s0301-0082(98)00004-5.

- 44. Hartley T, Trinkler I, Burgess N. Geometric determinants of human spatial memory. Cognition. 2004;94:39–75. doi: 10.1016/j.cognition.2003.12.001.

- 45. Jeffery KJ, Donnett JG, Burgess N, O'Keefe JM. Directional control of hippocampal place fields. Exp Brain Res. 1997;117:131–142. doi: 10.1007/s002210050206.

- 46. Fenton AA, Arolfo MP, Nerad L, Bureš J. Place navigation in the Morris water maze under minimum and redundant extramaze cue conditions. Behav Neural Biol. 1994;62:178–189. doi: 10.1016/s0163-1047(05)80016-0.

- 47. Pearce JM, Graham M, Good MA, Jones PM, McGregor A. Potentiation, overshadowing, and blocking of spatial learning based on the shape of the environment. J Exp Psychol Anim Behav Process. 2006;32:201–214. doi: 10.1037/0097-7403.32.3.201.

- 48. Cheng K. A purely geometric module in the rat's spatial representation. Cognition. 1986;23:149–178. doi: 10.1016/0010-0277(86)90041-7.

- 49. Hayward A, McGregor A, Good MA, Pearce JM. Absence of overshadowing and blocking between landmarks and the geometric cues provided by the shape of a test arena. Q J Exp Psychol B. 2003;56:114–126. doi: 10.1080/02724990244000214.

- 50. Hermer L, Spelke E. Modularity and development: The case of spatial reorientation. Cognition. 1996;61:195–232. doi: 10.1016/s0010-0277(96)00714-7.

- 51. Pearce JM, Good MA, Jones PM, McGregor A. Transfer of spatial behavior between different environments: Implications for theories of spatial learning and for the role of the hippocampus in spatial learning. J Exp Psychol Anim Behav Process. 2004;30:135–147. doi: 10.1037/0097-7403.30.2.135.

- 52. Packard MG, McGaugh JL. Inactivation of hippocampus or caudate nucleus with lidocaine differentially affects expression of place and response learning. Neurobiol Learn Mem. 1996;65:65–72. doi: 10.1006/nlme.1996.0007.

- 53. Knowlton BJ, Mangels JA, Squire LR. A neostriatal habit learning system in humans. Science. 1996;273:1399–1402. doi: 10.1126/science.273.5280.1399.

- 54. Schultz W, Dayan P, Montague PR. A neural substrate of prediction and reward. Science. 1997;275:1593–1599. doi: 10.1126/science.275.5306.1593.

- 55. Waelti P, Dickinson A, Schultz W. Dopamine responses comply with basic assumptions of formal learning theory. Nature. 2001;412:43–48. doi: 10.1038/35083500.

- 56. Pessiglione M, Seymour B, Flandin G, Dolan RJ, Frith CD. Dopamine-dependent prediction errors underpin reward-seeking behaviour in humans. Nature. 2006;442:1042–1045. doi: 10.1038/nature05051.