Abstract

Psychophysical experiments have shown that the discrimination of human vowels chiefly relies on the frequency relationship of the first two peaks F1 and F2 of the vowel’s spectral envelope. It has not been possible, however, to relate the two-dimensional (F1,F2)-relationship to the known organization of frequency representation in auditory cortex. We demonstrate that certain spectral integration properties of neurons are topographically organized in primary auditory cortex in such a way that a transformed (F1,F2) relationship sufficient for vowel discrimination is realized.

Keywords: auditory cortex, gerbil, speech, electrophysiology, 2-deoxyglucose

In 1952, Delattre et al. (1) reported that the first two peaks on the spectral envelopes of vowel sounds, the so-called first and second formants, F1 and F2, are necessary and sufficient for identifying a vowel.† In the same year, Peterson and Barney (5) showed that different vowels of American English uttered by numerous speakers form almost disjunct clusters in a coordinate system spanned by the center frequencies of the two formants F1 and F2 (Peterson–Barney map). This was taken to imply that a mechanism of vowel identification relied on the extraction of separate formants, using spectral or temporal codes (6–9),‡ at some point in the auditory pathway (“formant extraction” principle) and on subsequent representation in a two-dimensional frequency map (11). Although two-formant receptive fields of single units for synthetic two-formant vowels have been demonstrated in an animal model of vowel perception (12) and combination-sensitive units have been described in a specialized area of bat auditory cortex (13–15), it has not been possible to translate the principle of the Peterson–Barney map (i.e., systematic vowel representation in terms of a two-dimensional frequency map) into any of the known maps of mammalian auditory systems. Tonotopic maps typically contain a one-dimensional frequency gradient (tonotopic axis) with an isofrequency axis showing little variation of frequency sensitivity. On the other hand, recent experiments using complex sounds with sinusoidally shaped spectral envelopes (“ripple spectra”) demonstrated a systematic representation of the periodicity of the modulated envelope in these maps (16–19). This can be interpreted as a neuronal sensitivity for interactions between spectral components, a principle that may be applied to vowel discrimination without requiring separate formant representations in the sense of feature extraction.§ However, it has not been clarified whether such a mechanism would separate natural vowels with their characteristic variation of F1 and F2 as implied by the Peterson–Barney map. We demonstrate that a synthesis between the two views, namely a first-formant sensitivity together with a formant interaction, achieved by a reformulation of the principle of the Peterson–Barney map, conserves vowel separation. With brain imaging and electrophysiological recording, we show that the new principle is indeed represented in mammalian auditory cortex.

MATERIALS AND METHODS

Stimuli.

Natural vowels, synthetic two-formant vowels, and artificial two-formant stimuli were used. Natural vowels were obtained from speech samples of 25 male and 25 female native speakers (age 28–55 years) uttering the German words vital (/i/), viel (/i:/), Methan (/e/), Beet (/e:/), Moral (/o/), Boot (/o:/), kulant (/u/), and Hut (/u:/). Speech samples were recorded through a condenser microphone on a DAT tape and 12-bit digitized for further analysis. Steady-state segments (50 ms) of the vowels were visually identified from calculated spectrograms [wide-band fast Fourier transform (FFT), 8-ms time segment, 300-Hz filter, and 2048 points] and subsequently verified acoustically by replaying. Within these segments, formants F1 and F2 were identified by narrow-band FFT (25-ms time segment, 45-Hz filter, and 2048 points). Synthetic two-formant vowels were generated by harmonic synthesis of three spectral lines per formant: a central line at F1 or F2 and two flanking lines of 50% amplitude at a 100-Hz distance. Formant combinations (F1 [Hz], F2 [Hz]) were /i/: (300, 2500), /e/: (600, 2500), /u/: (300, 800), and /o/: (600, 800). Formants of artificial two-formant stimuli were identically synthesized with F1 fixed at 300 Hz and F2 at a variable distance to F1.
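The harmonic synthesis described above can be illustrated with a minimal sketch. It is not the original stimulus-generation code: the function name `two_formant_vowel`, the 44.1-kHz sampling rate, the zero starting phase of every line, and the peak normalization are assumptions made here for illustration. The 250-ms duration is taken from the stimulation protocol of the FDG experiments.

```python
import math

def two_formant_vowel(f1_hz, f2_hz, duration_s=0.25, sample_rate_hz=44100,
                      flank_hz=100.0, flank_gain=0.5):
    """Sum three sinusoidal lines per formant: a central line at the formant
    frequency and two flanking lines of 50% amplitude at +/- 100 Hz.
    Sampling rate, phase, and normalization are assumptions of this sketch."""
    n_samples = int(round(duration_s * sample_rate_hz))
    lines = []
    for centre_hz in (f1_hz, f2_hz):
        lines += [(centre_hz - flank_hz, flank_gain),
                  (centre_hz, 1.0),
                  (centre_hz + flank_hz, flank_gain)]
    signal = [sum(gain * math.sin(2.0 * math.pi * freq_hz * n / sample_rate_hz)
                  for freq_hz, gain in lines)
              for n in range(n_samples)]
    peak = max(abs(s) for s in signal)
    return [s / peak for s in signal]  # scale to a peak amplitude of 1

# Formant combinations (F1, F2) in Hz as listed in the text.
SYNTHETIC_VOWELS = {"i": (300, 2500), "e": (600, 2500),
                    "u": (300, 800), "o": (600, 800)}
waveforms = {v: two_formant_vowel(f1, f2)
             for v, (f1, f2) in SYNTHETIC_VOWELS.items()}
```

Under this reading of the synthesis rule, the upper flanking line of F1 and the lower flanking line of F2 of /o/ both fall at 700 Hz, so their amplitudes add at that frequency.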

2-Fluoro-2-Deoxy-d-[14C(U)]Glucose (FDG) Autoradiography.

Vowel-evoked cortical activity patterns were studied by using FDG imaging (26). Sixteen awake subadult Mongolian gerbils (Meriones unguiculatus) were injected i.p. with 18 μCi of FDG (1 Ci = 37 GBq) and exposed individually for 45 min to repetitive free-field stimulation with synthesized two-formant vowels (250 ms, vowel; 750 ms, pause) at 70-dB sound pressure level (SPL) in an anechoic sound-attenuated chamber, four animals per vowel. Animals were killed by an injection of T61 (Hoechst Pharmaceuticals), brains were removed, and autoradiographs of consecutive 40-μm horizontal sections were prepared and reconstructed by using anatomical landmarks as detailed in ref. 26. By using these landmarks, the topography and geometry of AI in gerbils are precise enough to accomplish spatial averages of tonotopic organization across groups of animals and, thus, to predict tonotopic locations in a given animal (26, 27).

Electrophysiology.

Animals were prepared for chronic recording under deep halothane anesthesia. After several days of recovery, recordings in five awake but stereotaxically fixed animals took place in an anechoic chamber; for details, see ref. 28. Formant interaction patterns in single units and small clusters of units were studied in tangential penetrations of AI along low-frequency (<4 kHz) isofrequency contours in layers III and IV by using standard extracellular electrophysiological recording techniques (1- to 3-MΩ impedance tungsten microelectrodes, 1- to 3-kHz bandpass filtering, ×10,000 amplification, and spike windowing). Reference for recording depth was the most dorsal responsive unit encountered in each track, i.e., the dorsal roof of auditory cortex (28, 29). Artificial two-formant stimuli were presented through a loudspeaker in front of the contralateral ear at 65-dB SPL. Experiments and maintenance of animals were in accordance with National Institutes of Health guidelines.

RESULTS

Formant Structure of Vowels.

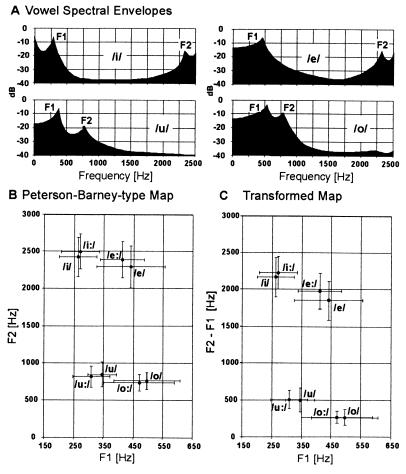

To investigate alternative principles of vowel discrimination and representation that might be compatible with auditory maps, we first analyzed F1 and F2 in short and long versions of the German vowels /i/, /e/, /u/, and /o/ (Fig. 1A). These were chosen for symmetry of shared formant pairs [F1 shared by the pair (/u/, /i/) and the pair (/o/, /e/); F2 shared by (/u/, /o/) and (/i/, /e/)]. This configuration allowed us to draw maximum information about potential neuronal correlates of F1 and F2. When the plot of the formant frequencies according to Peterson and Barney (Fig. 1B) was transformed into an (F1, F2−F1) coordinate system (Fig. 1C), the gross structure of the map was maintained, with a comparable separation of the individual vowels. This identified the spectral relation F2−F1 as a potentially useful parameter for vowel identification. The demonstration of the physiological relevance of this relation required evidence for a systematic neuronal representation along the isofrequency axis.¶
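The coordinate transformation from (F1, F2) to (F1, F2−F1) can be made concrete with a short sketch. Because the measured averages of the natural vowels are not tabulated in the text, the sketch uses the nominal formant values of the synthetic two-formant vowels from Materials and Methods as stand-ins; the function name `to_interaction_coordinates` is hypothetical.

```python
# Nominal (F1, F2) values in Hz of the synthetic two-formant vowels.
# They stand in for the measured natural-vowel averages, which are not
# listed in the text (an assumption of this sketch).
NOMINAL_FORMANTS_HZ = {"i": (300, 2500), "e": (600, 2500),
                       "u": (300, 800), "o": (600, 800)}

def to_interaction_coordinates(f1_hz, f2_hz):
    """Map a Peterson-Barney point (F1, F2) to (F1, F2 - F1)."""
    return f1_hz, f2_hz - f1_hz

transformed = {vowel: to_interaction_coordinates(*formants)
               for vowel, formants in NOMINAL_FORMANTS_HZ.items()}

# Order the vowels by decreasing formant distance F2 - F1.
by_decreasing_distance = sorted(transformed,
                                key=lambda v: transformed[v][1],
                                reverse=True)

print(transformed)
print(by_decreasing_distance)  # ['i', 'e', 'u', 'o']
```

The mapping is linear and invertible, since F2 is recovered as F1 + (F2−F1), so the four points remain distinct after the transformation. With these nominal values the formant distances are 2200, 1900, 500, and 200 Hz for /i/, /e/, /u/, and /o/, respectively.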

Figure 1.

Spectral envelopes (A), Peterson–Barney type map (B), and F2−F1 transformed map (C) for the German vowels /i/, /i:/, /e/, /e:/, /o/, /o:/, /u/, and /u:/. Averages and standard deviations of F1, F2, and F2−F1 across the 50 samples of each vowel were plotted. The transformed map (interaction principle) not only has a gross structure similar to the original Peterson–Barney type map (extraction principle) but also specifically shows a comparable amount of separation of the individual vowels, demonstrating the usefulness of the parameter F2−F1 for vowel identification.

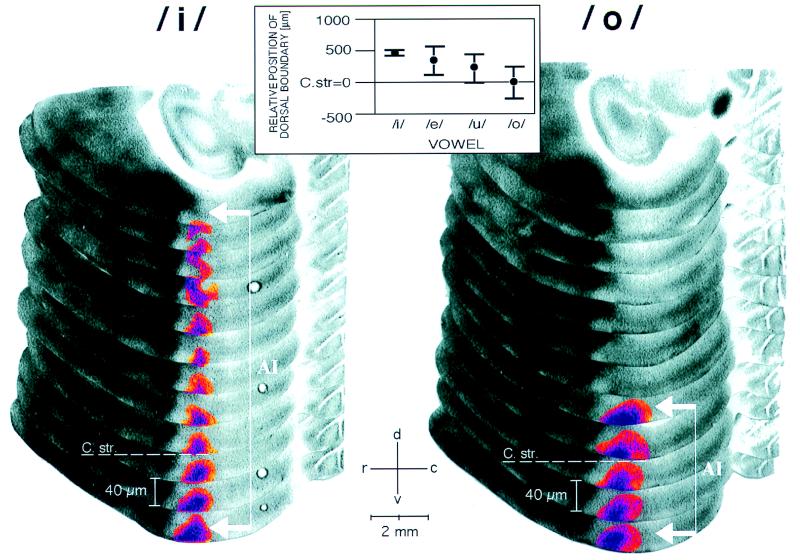

Metabolic Cortical Activity Patterns.

The first evidence was provided by analyzing the auditory cortical activity patterns evoked by stimulation with the synthesized two-formant vowels /i/, /e/, /u/, and /o/ using the FDG functional mapping method in gerbils (26). The general usefulness of animal models in auditory speech research has been theoretically analyzed and convincingly demonstrated in the pioneering work of Kuhl and Miller (30) and Kuhl (31–34). Gerbils were chosen for the present experiments because their cortical maps show an unusually high spatial resolution for frequencies in the speech range (26, 28, 29) and they learn to discriminate vowels (unpublished data) as many other mammals do (35–37).

The metabolic activity patterns obtained were interpretable in the light of a previous analysis of tonotopic organization of auditory cortical fields in the gerbil with the FDG method (26). In consecutive horizontal sections through the cortex, a vowel-evoked pattern in the primary field AI appeared as a vertical stripe of increased metabolic activity parallel to the dorso-ventral isofrequency contours (Fig. 2). Although the method resolves frequencies in the range of the two formants (at least for /i/ and /e/) (26), none of the vowels used produced two parallel stripes; rather, each vowel produced one broad stripe, in contrast to expectations based on the idea of separate formant extraction and representation. In the rostro-caudal direction, a stripe was centered on tonotopic positions corresponding to frequencies between 1 and 2 kHz (see Materials and Methods) but did not allow differentiation between vowels. However, in the dorso-ventral direction along the isofrequency contour, the position of the stripe was vowel-dependent: the dorsal boundary of the activated region was located more ventrally as the formant distance F2−F1 decreased in the sequence /i/, /e/, /u/, and /o/ (Fig. 2 Inset). This sequence is also the succession of vowels along the F2−F1 axis in the transformed Peterson–Barney map (Fig. 1C). This result demonstrates that the spectral relation F2−F1 as proposed by the transformed Peterson–Barney map has a neuronal representation.

Figure 2.

Activity patterns in dorsal auditory cortex evoked by vowels /i/ and /o/ demonstrated with FDG mapping and reconstruction procedures (26). Autoradiographs of every second serial horizontal section (40 μm per section) of the left hemisphere are mounted in a partially overlapping fashion. Vowel representation in field AI appeared as a dorso-ventral stripe along the isofrequency axis and was highlighted by using a pseudo-color transformation relative to the optical density of the corpus callosum. The dorso-ventral boundary of the labeling was determined as the position where the activity peak had completely vanished, measured relative to the dorsal roof of the corpus striatum (C str. dashed line), which serves as a reliable topographic anatomical reference (26). Large formant distances F2−F1, as in /i/, led to stripes that extended far dorsally (white bracket with arrows), whereas stripes obtained with small formant distances, as in /o/, ended close to the dorso-ventral level of the roof of the corpus striatum. r, Rostral; c, caudal; d, dorsal; v, ventral. (Inset) Topographic representation of formant distance F2−F1 along the isofrequency axis in AI. The dorsal boundary of the vowel-evoked FDG labeling was located more ventrally with decreasing formant distance in the sequence /i/, /e/, /u/, and /o/ (cf. Fig. 1) and, therefore, provided a topographic measure of the formant distance (ANOVA, P < 0.05).

Additional tests with single formants F1 or F2 produced stripes of labeling with maximal dorsal extent (data not shown). This indicated that inhibition plays a role in the vowel-specific reduction of neuronal activity along the isofrequency axis.‖ Specifically, inhibitory formant interaction may depend on vowel formant distance F2−F1 and may be topographically organized along isofrequency contours, causing the activity patterns to reflect the structure of the transformed Peterson–Barney map.

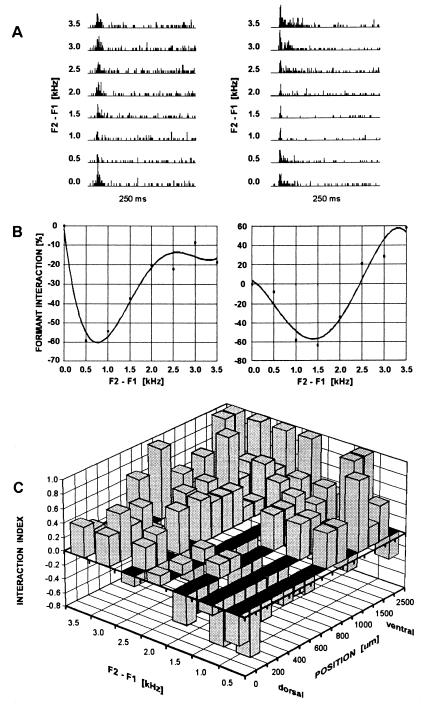

Topographic Organization of Formant Interaction.

This hypothesis was tested electrophysiologically by making single and multiunit recordings (n = 87) along isofrequency (<4 kHz) contours in dorsal AI (Fig. 3A). Stimuli were synthetic two-formant vowels similar to those used in FDG experiments but with formant distances F2−F1 continuously varied from 0.5 to 3.5 kHz, keeping F1 constant at 300 Hz. Fig. 3B shows examples of formant interaction profiles of two units demonstrating inhibition (left diagram) and inhibition and summation (right diagram) of responses as a result of formant combination. The proportions of purely summational, purely inhibitory, and mixed formant interaction types were 18.4%, 9.2%, and 72.4%, respectively. The types of interaction varied with respect to the formant distance that produced maximum inhibition or summation. To clarify the topographic distribution of formant interactions across all units, we determined the proportion of units showing an inhibitory or summational interaction for a given position along the isofrequency contour and a given formant distance F2−F1. This analysis revealed that inhibitory responses after stimulation with vowels with small /o/-like formant distances occurred most abundantly in dorsal parts of the isofrequency contour. The proportion of such responses decreased ventrally and with increasing formant distances (Fig. 3C). The proportion of inhibitory formant interactions depended on both the formant distance F2−F1 and the spatial coordinates along isofrequency contours, which supports our initial hypothesis and provides an explanation for the topographic shift of metabolic activity in the FDG data observed with different vowels. Thus, together with the prominent inhibitory mechanisms, the summational responses at larger formant distances F2−F1 indicate the presence of a sensitive contrast mechanism for formant interaction along isofrequency contours. The spatial range of (F2−F1)-dependent inhibitory modulation of neuronal activity encompassed the dorsal third of AI (29), in agreement with the FDG data (roughly 800 μm corresponding to 20 horizontal sections; Fig. 2 Inset).

Figure 3.

Illustration of neuronal sensitivity to formant interaction and topographic distribution of proportion of summational and inhibitory interactions along the isofrequency axis. (A) Peristimulus time histograms (250 ms; bin width, 1 ms; 10 repetitions) of spike counts from a dorsal unit (Left) and a more ventral unit (Right) responding to eight synthetic 2-formant vowels with variable formant distance F2−F1. At 0.0, responses to F1 alone are shown. (B) Formant interaction profiles of the same units with fourth-order polynomial data fit. Formant interaction at a given formant distance F2−F1 was defined as [r(F2−F1) − r(F1)]/r(F1) × 100%, where r(F1) is the spike count after stimulation with F1 alone and r(F2−F1) is the spike count after addition of F2 at a frequency distance F2−F1. (C) Topographic distribution of summational and inhibitory responses to formant interaction based on 87 units. An interaction index I = (sum − in)/(sum + in), where sum and in are the numbers of occurrences of summational and inhibitory formant interaction, respectively, was plotted as a function of formant distance F2−F1 (0.5-kHz bins) and dorso-ventral position along the isofrequency axis (100-μm bins dorsal to 1000 μm and 250-μm bins ventral to 1000 μm). An I value of +1 corresponds to summational interactions only and an I value of −1 corresponds to inhibitory interactions only. Inhibitory formant interaction occurred most abundantly in dorsal parts of the isofrequency contour for vowels with small formant distances.
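The two measures defined in this legend, the formant interaction in percent and the interaction index I, can be written out as a small sketch. The function names, the example spike counts, and the rule that a positive interaction counts as summational and a negative one as inhibitory are assumptions made here for illustration; the depth binning follows one reading of the legend, with depth measured from the dorsal roof, 100-μm bins above 1000 μm, and 250-μm bins below.

```python
from collections import defaultdict

def formant_interaction_percent(rate_f1_alone, rate_with_f2):
    """[r(F2-F1) - r(F1)] / r(F1) x 100%, with spike counts as inputs."""
    if rate_f1_alone == 0:
        raise ValueError("interaction is undefined when r(F1) is zero")
    return (rate_with_f2 - rate_f1_alone) / rate_f1_alone * 100.0

def interaction_index(n_summational, n_inhibitory):
    """I = (sum - in) / (sum + in); None if no interaction was counted."""
    total = n_summational + n_inhibitory
    if total == 0:
        return None
    return (n_summational - n_inhibitory) / total

def depth_bin_um(depth_um):
    """Lower edge of the depth bin (assumed reading of the legend)."""
    if depth_um < 1000:
        return int(depth_um // 100) * 100
    return 1000 + int((depth_um - 1000) // 250) * 250

def distance_bin_khz(distance_khz, width_khz=0.5):
    """Lower edge of the 0.5-kHz formant-distance bin."""
    return (distance_khz // width_khz) * width_khz

def interaction_map(observations):
    """observations: iterable of (depth_um, distance_khz, interaction_percent).
    Returns {(depth_bin, distance_bin): I}. Using the sign of the interaction
    to classify a response is an assumption of this sketch."""
    counts = defaultdict(lambda: [0, 0])  # [summational, inhibitory]
    for depth_um, distance_khz, interaction in observations:
        key = (depth_bin_um(depth_um), distance_bin_khz(distance_khz))
        if interaction > 0:
            counts[key][0] += 1
        elif interaction < 0:
            counts[key][1] += 1
    return {key: interaction_index(s, i) for key, (s, i) in counts.items()}

# Hypothetical spike counts, not data from the study.
print(formant_interaction_percent(40, 10))  # -75.0 (inhibition)
print(formant_interaction_percent(40, 60))  # 50.0 (summation)
print(interaction_index(3, 1))              # 0.5
```

An index of +1 or −1 arises only when all counted interactions in a bin are of one type, which matches the interpretation of the extreme values given in the legend.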

DISCUSSION

The present study made use of the almost crystalline topographic organization of the gerbil auditory cortex (26, 29) to tackle the old question of how information about the formant structure of vowels (5) might be represented in the auditory nervous system. The results reported herein suggest that vowels are represented in auditory cortex by activity distributions in the low-frequency areas along the tonotopic axis that do not resolve formant peaks but map relations between spectral peaks in a topographic manner along the isofrequency axis. This result indicates the principal physiological relevance of spectral relations (feature interaction), as was proposed in the literature on the basis of psychophysical considerations (41), as opposed to the exclusive separation of frequencies (feature extraction) sought in past physiological studies.

Relation to Other Physiological Studies of Vowel Representation.

The weak extraction of vowel formant peaks by rate codes or temporal codes in the auditory nerve (3), which is compatible with the lack of separate F1 and F2 peaks of FDG labeling in our data, poses a problem for attempts to explain psychophysical data of vowel perception (1–3, 5) by physiological data under the implicit assumption that units contribute to the coding only of those frequencies in a vowel spectrum that correspond to their characteristic frequencies (extraction principle). This problem is circumvented by the proposed mechanism of vowel representation based on formant interaction (interaction principle). This principle implies a partial overlap of vowel representations, in agreement with theoretical arguments given by modeling studies (42).

Relevance for Cortical Mechanisms of Speech Perception.

Functional imaging and psycholinguistic (43–46) studies have revealed cortical territories and human-specific modes of auditory speech processing that leave open the possibility that vowel-specific maps exist in humans. However, the present findings indicate that some underlying neuronal mechanisms of phoneme discrimination could be based upon phylogenetically old principles of functional organization of auditory cortex. In this light and under the assumption that formant extraction in the peripheral human auditory system is as weak as in animals, the described mechanisms of auditory cortical representation may in humans have the role of preprocessing for a subsequent more detailed analysis of the multitude of human vowels.

Acknowledgments

We thank Drs. J. Altmann (London) and G. Ehret (Ulm) for critical comments about earlier versions of the manuscript. Part of this work was supported by the Schwerpunktprogramm “Physiologie und Theorie neuronaler Netzwerke” of the Deutsche Forschungsgemeinschaft.

ABBREVIATION

- FDG: 2-fluoro-2-deoxy-d-[14C(U)]glucose

Footnotes

‡ For overview and general critique, see ref. 10.

§ Effective interactions of spectral components of complex sounds have been variously demonstrated in the auditory cortex (20–23). Spectral interactions have been discussed in terms of critical bands in the midbrain (24). Nonlinear formant interactions have been reported in monkey auditory cortex in ref. 25.

¶ It is noteworthy that the demonstration of topography in the neural representation of the spectral relation F2−F1 does not exclude the topographic representation of other spectral relations such as F2/F1 or more complicated transformations. Herein, we did not aim to distinguish between these alternatives, which would yield only differently warped maps but would all share the same monotonicity, i.e., topography, which is the essential scope of the study.

References

- 1. Delattre P, Liberman A M, Cooper F S, Gerstman L J. Word. 1952;8:195–210.

- 2. Pickett J M. J Acoust Soc Am. 1957;29:613–620.

- 3. Hose B, Langner G, Scheich H. Hear Res. 1983;9:13–25. doi: 10.1016/0378-5955(83)90130-2.

- 4. Rosner B S, Pickering J B. Vowel Perception and Production. Oxford: Oxford Univ. Press; 1994.

- 5. Peterson G E, Barney H L. J Acoust Soc Am. 1952;24:175–184.

- 6. Delgutte B. J Acoust Soc Am. 1984;75:879–886. doi: 10.1121/1.390597.

- 7. Delgutte B, Kiang N Y S. J Acoust Soc Am. 1984;75:866–878. doi: 10.1121/1.390596.

- 8. Sachs M B, Young E D. J Acoust Soc Am. 1979;66:470–479. doi: 10.1121/1.383098.

- 9. Young E D, Sachs M B. J Acoust Soc Am. 1979;66:1381–1403. doi: 10.1121/1.383532.

- 10. Pickles J O. An Introduction to the Physiology of Hearing. 2nd Ed. London: Academic; 1988. pp. 287–291.

- 11. Sussman H M. Brain Lang. 1986;28:12–23. doi: 10.1016/0093-934x(86)90087-8.

- 12. Langner G, Bonke D, Scheich H. Exp Brain Res. 1981;43:11–24. doi: 10.1007/BF00238805.

- 13. Suga N. J Physiol (London) 1968;198:51–80. doi: 10.1113/jphysiol.1968.sp008593.

- 14. Suga N, O’Neill W E, Kujirai K, Manabe T. J Neurophysiol. 1983;49:1573–1626. doi: 10.1152/jn.1983.49.6.1573.

- 15. Fitzpatrick D C, Kanwal J S, Butman J A, Suga N. J Neurosci. 1993;13:931–940. doi: 10.1523/JNEUROSCI.13-03-00931.1993.

- 16. Schreiner C E, Calhoun B M. Auditory Neurosci. 1994;1:39–61.

- 17. Shamma S A, Versnel H, Kowalski N. Auditory Neurosci. 1995;1:233–254.

- 18. Shamma S A, Versnel H. Auditory Neurosci. 1995;1:255–270.

- 19. Versnel H, Kowalski N, Shamma S A. Auditory Neurosci. 1995;1:271–285.

- 20. Nelken I, Prut Y, Vaadia E, Abeles M. Hear Res. 1994;42:206–222. doi: 10.1016/0378-5955(94)90220-8.

- 21. Nelken I, Prut Y, Vaadia E, Abeles M. Hear Res. 1994;42:223–236. doi: 10.1016/0378-5955(94)90220-8.

- 22. Nelken I, Prut Y, Vaadia E, Abeles M. Hear Res. 1994;42:237–253. doi: 10.1016/0378-5955(94)90222-4.

- 23. Rauschecker J P, Tian B, Hauser M. Science. 1995;268:111–114. doi: 10.1126/science.7701330.

- 24. Ehret G, Merzenich M M. Science. 1985;225:1245–1247. doi: 10.1126/science.3975613.

- 25. Steinschneider M, Arezzo J C, Vaughan H G Jr. Brain Res. 1990;519:158–168. doi: 10.1016/0006-8993(90)90074-l.

- 26. Scheich H, Heil P, Langner G. Eur J Neurosci. 1993;5:898–914. doi: 10.1111/j.1460-9568.1993.tb00941.x.

- 27. Scheich H, Zuschratter W. Behav Brain Res. 1995;66:195–205. doi: 10.1016/0166-4328(94)00140-b.

- 28. Ohl F W, Scheich H. Eur J Neurosci. 1996;8:1001–1017. doi: 10.1111/j.1460-9568.1996.tb01587.x.

- 29. Thomas H, Tillein J, Heil P, Scheich H. Eur J Neurosci. 1993;5:882–897. doi: 10.1111/j.1460-9568.1993.tb00940.x.

- 30. Kuhl P K, Miller J D. Science. 1975;190:69–72. doi: 10.1126/science.1166301.

- 31. Kuhl P K. Brain Behav Evol. 1979;16:374–408. doi: 10.1159/000121877.

- 32. Kuhl P K. J Acoust Soc Am. 1981;70:340–349.

- 33. Kuhl P K. Exp Biol. 1986;45:233–265.

- 34. Kuhl P K. Hum Evol. 1988;3:19–43.

- 35. Dewson J H III. Science. 1964;144:555–556. doi: 10.1126/science.144.3618.555.

- 36. Baru A V. In: Auditory Analysis and Perception of Speech. Fant G, Tatham M A A, editors. London: Academic; 1975. pp. 91–101.

- 37. Burdick C K, Miller J D. J Acoust Soc Am. 1975;58:415–427. doi: 10.1121/1.380686.

- 38. Shamma S A. J Acoust Soc Am. 1985;78:1612–1621. doi: 10.1121/1.392799.

- 39. Shamma S A. J Acoust Soc Am. 1985;78:1622–1632. doi: 10.1121/1.392800.

- 40. Shamma S A, Symmes D J. Hear Res. 1985;19:1–13. doi: 10.1016/0378-5955(85)90094-2.

- 41. Miller J D. J Acoust Soc Am. 1989;85:2114–2134. doi: 10.1121/1.397862.

- 42. Sejnowski T J, Rosenberg C R. Complex Systems. 1987;1:145–168.

- 43. Paulesu E, Frith C D, Frackowiak R S J. Nature (London) 1993;362:342–345. doi: 10.1038/362342a0.

- 44. Frackowiak R S J. Trends Neurosci. 1994;17:109–115. doi: 10.1016/0166-2236(94)90119-8.

- 45. Nobre A C, Allison T, McCarthy G. Nature (London) 1994;372:260–263. doi: 10.1038/372260a0.

- 46. Kuhl P K. Percept Psychophys. 1991;50:93–107. doi: 10.3758/bf03212211.