Abstract

Objective

To investigate the temporal trends in the volume–outcome relationship in coronary artery bypass graft (CABG) surgery in California from 1998 to 2004, and to assess the selection effects on this relationship by using data from periods of voluntary and mandatory hospital reporting.

Data Sources

We used patient-level clinical data collected for the California CABG Mortality Reporting Program (CCMRP, a voluntary reporting program with between 68 and 81 hospitals) from 1998 to 2002 and the California CABG Outcomes Reporting Program (CCORP, a mandatory reporting program with 121 and 120 hospitals) from 2003 to 2004.

Study Design

The patient was the primary unit of analysis, and in-hospital mortality was the primary outcome. We used hierarchical logistic regression models (generalized linear mixed models) to assess the association of hospital annual volume with hospital mortality while controlling for detailed patient-level covariates in each of the 7 years.

Data Collection Methods

All data were systematically collected, reviewed for accuracy, and validated by the State of California's Office of Statewide Health Planning and Development (OSHPD).

Principal Findings

We found that during the period of voluntary hospital reporting (1998–2002), with the exception of 1998, higher volume hospitals had significantly lower risk-adjusted in-hospital mortality rates, on average, than lower volume hospitals (1998 odds ratio [OR] per 100 operations performed = 0.962, 95 percent confidence interval [CI]: 0.912–1.015; 1999 OR = 0.955, 95 percent CI: 0.920–0.991; 2000 OR = 0.942, 95 percent CI: 0.897–0.989; 2001 OR = 0.935, 95 percent CI: 0.887–0.986; 2002 OR = 0.946, 95 percent CI: 0.899–0.997). We also found that in the period of mandatory reporting (2003 and 2004) there was no volume–outcome relationship (2003 OR = 0.997, 95 percent CI: 0.939–1.058; 2004 OR = 0.984, 95 percent CI: 0.915–1.058) and that this lack of association was not due to a reporting bias from the addition of data from hospitals that did not originally contribute during the voluntary program.

Conclusions

In California, where no state regulations support regionalization of CABG surgeries, a weak volume–outcome relationship was present from 1998 to 2002, but was absent in 2003 and 2004. The disappearance of the volume–outcome association was temporally related to the implementation of a statewide mandatory CABG surgery reporting program.

Keywords: Volume–outcome relationship, coronary artery bypass graft surgery, regionalization of services, risk adjustment, multilevel modeling

The association between the quantity of care that a physician or hospital provides and the quality of care that patients receive has been rigorously studied by clinicians and health services researchers (Luft, Bunker, and Enthoven 1979; Birkmeyer et al. 2003; Gandjour, Bannenberg, and Lauterbach 2003). This “volume–outcome” relationship has been documented for a wide variety of medical conditions and surgical procedures at the physician, clinical team, and hospital level of care (Halm, Lee, and Chassin 2002; Elixhauser, Steiner, and Fraser 2003). In a report reviewing the volume–outcome relationship published by the Institute of Medicine, the authors noted that 77 percent of the published volume–outcome studies had demonstrated a significant relationship between higher physician and hospital volumes and better health outcomes (Hewitt et al. 2001).

The volume–outcome relationship has been most intensely studied for patients receiving coronary artery bypass grafting (CABG) surgery, perhaps because regionalization is a particularly promising policy strategy to improve care for high-risk elective surgery. Although many studies have found that hospitals performing relatively more CABG surgeries have better outcomes (Luft, Bunker, and Enthoven 1979; Birkmeyer et al. 2002, 2003; Hannan et al. 2003; Peterson et al. 2004), the importance of this relationship for health policy remains controversial. First, several recent studies using robust statistical methods and careful risk adjustment have found either minor or insignificant hospital CABG volume–outcome relationships, or no relationship at all (Christiansen and Morris 1997; Shahian et al. 2001; Kalant and Shrier 2004; Peterson et al. 2004). Second, studies of regionalization policies have been inconclusive regarding their impact on improving care (Vaughan-Sarrazin et al. 2002; Nobilio and Ugolini 2003). Third, there is some evidence that the observed volume–outcome association may reflect selective referral of patients to high-quality surgeons and hospitals, rather than greater experience leading to improved skills (Luft et al. 1990; Escarce et al. 1999).

In addition, the effect of public reporting of CABG surgery performance on the volume–outcome relationship is unknown. In several studies, the publication of CABG surgery quality reports has been associated with reduced CABG mortality (Hannan et al. 1995; Rosenthal, Quinn, and Harper 1997; Romano and Zhou 2004) and enhanced quality improvement efforts, particularly for low-quality and low-volume outliers (Bentley and Nash 1998; Rainwater, Romano, and Antonius 1998; Chassin 2002). Therefore, public performance data may help stimulate standardization of procedures, improved training, acquisition of better equipment, and diffusion of innovation, thereby improving outcomes among low-quality and low-volume outliers and attenuating the volume–outcome relationship. Further, as some of the studies that have reported a significant volume–outcome relationship used data from voluntary reporting programs, selection bias could have contributed to misestimation of the volume effect (Clark 1996; Christian et al. 2003; Peterson et al. 2004). For example, the volume–outcome relationship could be overestimated if nonparticipating low-volume hospitals had better than average outcomes or if nonparticipating high-volume hospitals had worse than average outcomes.

The goal of this study was to investigate the volume–outcome relationship for CABG surgery in California from 1998 to 2004, a period during which public reporting of CABG outcomes transitioned from a voluntary basis to a state-mandated program. We analyzed data from the California CABG Mortality Reporting Program (CCMRP), a voluntary reporting program with between 68 and 81 participating nonfederal hospitals from 1998 to 2002, and from the California CABG Outcomes Reporting Program (CCORP), a mandatory reporting program with 121 and 120 nonfederal hospitals in 2003 and 2004, respectively. Our objectives were (1) to use hierarchical logistic regression to determine whether hospitals performing more CABG surgeries have lower risk-adjusted mortality than hospitals performing fewer cases, and (2) to assess potential explanations for any change in the magnitude of the volume–outcome association between the voluntary reporting period (1998–2002) and the mandatory reporting period (2003 and 2004).

METHODS

The CCMRP was established in 1995 as a joint activity between the California Office of Statewide Health Planning and Development (OSHPD) and the Pacific Business Group on Health (PBGH). This voluntary program collected data from 1997 through 2002. The first CCMRP report was publicly released in July 2001 and presented data from 1997 to 1998 (Damberg, Chung, and Steimle 2001). The second report was released in August 2003 and presented data from 1999 (Damberg et al. 2003). The third report was released in February 2005 and presented data from 2000 to 2002 (Parker et al. 2005a). Beginning in 2003, data reporting became mandatory for all licensed nonfederal hospitals performing CABG surgery in California. The mandatory reporting program, referred to as the CCORP, published its first report in February 2006, based on data collected during 2003 (Parker et al. 2005b). The second CCORP report, to be published in 2007, will include physician-level data from 2003 to 2004.

The data used in this article include 5 years of data from the voluntary CCMRP (1998–2002) plus the first 2 years of data from the mandatory CCORP program (2003 and 2004). The data from 1997, the first year of the CCMRP, only included 34 hospitals and were therefore not included in these analyses. Further details on the CCMRP and CCORP reports including specifics on data collection, validation procedures, and statistical methods have been published (Parker et al. 2005a, b) and are available online (http://www.oshpd.ca.gov/hqad/outcomes/clinical.htm).

Data Elements

CCMRP and CCORP collect patient-level data elements defined by the Society of Thoracic Surgeons (STS) in its National Adult Cardiac Surgery database (Welke et al. 2004) as well as other state programs (Table 1) (Shroyer et al. 2003; Massachusetts Data Analysis Center [MASS-DAC] 2004; New York State Department of Health 2004; New Jersey Department of Health and Senior Services 2005; Pennsylvania Health Care Cost Containment Council 2005). To ensure the quality and comparability of data submitted, OSHPD provided hospitals with training sessions and written guidance in the form of a data collection handbook.

Table 1.

Risk Factors Included in the CCMRP and CCORP CABG Risk-Adjustment Models Compared with Other Risk-Adjustment Models

| Risk Factor | CCMRP 1998–2002 | CCORP 2003–2004* | STS† | NY‡ | NJ | PA | MA§ |
|---|---|---|---|---|---|---|---|
| Dependent Variable | Inpatient Mortality | Inpatient and Operative Mortality | 30-Day Mortality | Inpatient Mortality | Operative Mortality | 30-Day Mortality | 30-Day Mortality |
| Race | √ | √ | √ | | | | |
| Gender | √ | √ | √ | √ | √ | √ | |
| Age | √ | √ | √ | √ | √ | √ | √ |
| Body mass index | √ | √ | √¶ | | | | |
| Status of procedure | √ | √ | √ | √∥ | √ | | |
| Creatinine (mg/dl) | √ | √ | RF | RF | RF | RF | RF |
| Hypertension | √ | √ | √ | √ | | | |
| Dialysis | √ | √ | √ | √ | √ | √ | |
| Peripheral vascular disease | √ | √ | √ | √ | √ | | |
| Cerebrovascular disease | √ | √ | √ | √ | | | |
| Cerebrovascular accident | √ | √ | √ | | | | |
| Diabetes | √ | √ | √ | √ | √ | | |
| Chronic lung disease | √ | √ | √ | √ | √ | √ | |
| Immunosuppressive treatment | √ | √ | √ | | | | |
| Hepatic failure | √ | | | | | | |
| Arrhythmia type | √ | √ | | | | | |
| Myocardial infarction | √ | √ | √ | √ | √ | √ | |
| Cardiogenic shock | √ | √ | √ | √ | √ | √ | |
| Congestive heart failure | √ | √ | √ | | | | |
| NYHA Class IV | √ | √ | √ | | | | |
| Prior cardiac surgery | √** | √ | √ | √ | √ | √ | |
| PTCA | √ | √ | | | | | |
| Ejection fraction | √ | √ | √ | √ | √ | | |
| Left main disease (% stenosis) | √ | √ | √ | √ | | | |
| Number of diseased vessels | √ | √ | √ | √ | | | |
| Mitral insufficiency | √ | √ | | | | | |
| c-statistic | 0.828 | 0.854†† | 0.78 | 0.803 | 0.765 | 0.772 | Not available |

Note:

* Although the CCORP public reports use operative (inpatient or 30-day) mortality as the outcome measure, for this manuscript we used only inpatient mortality and kept all risk factors unchanged for a fair comparison across years.

† STS model also includes “PTCA within 6 hours.”

‡ NY model also includes “unstable hemodynamic state” and “extensively calcified aorta.”

§ MA uses a multilevel, hierarchical model and includes “prior PTCA.”

¶ Body surface area.

∥ MediQual measure.

** On bypass pump only.

†† c-statistic reported for inpatient mortality.

CCMRP, California Coronary Artery Bypass Graft Mortality Reporting Program; CCORP, California CABG Outcomes Reporting Program; STS, Society of Thoracic Surgeons; NY, New York; NJ, New Jersey; PA, Pennsylvania; MA, Massachusetts; RF, renal failure; NYHA, New York Heart Association; PTCA, percutaneous transluminal coronary angioplasty.

Data Quality Review and Verification

The data submitted by each hospital for both the CCMRP and CCORP were systematically reviewed for completeness, data errors, and data discrepancies. The first step was to compare each hospital's specific rates for each data element to the state average. Invalid, missing, and abnormally high- or low-risk mean values and prevalences were identified. Reports were then generated for each hospital with discrepancies and each of these hospitals was asked to check for possible coding errors. The second step was to link the CCMRP and CCORP data with the State of California's Patient Discharge Data (PDD) to conduct further validation. This matched data set was used to generate additional reports to alert hospitals with apparent discrepancies in the number of isolated CABG surgeries performed or the number of in-hospital deaths. The relevant hospitals were contacted and asked to check the cases by reviewing medical records.

The PDD-CCMRP and PDD-CCORP linkages were also used to verify selected risk factors. Although the selected risk factors reviewed varied between years, they generally included the prevalence of cardiogenic shock, the use of percutaneous transluminal coronary angioplasty, the need for dialysis, myocardial infarction, and whether the surgery was considered a “salvage” case. The specific findings of these discrepancy reports and the hospital reconciliations are summarized in the CCMRP and CCORP reports (Parker et al. 2005a, b). Finally, independent medical record audits were undertaken for the CCORP data, but not the CCMRP data. Details of the audit, the audit results, and subsequent interventions are available in the CCORP report (Parker et al. 2005b).

Risk Adjustment Model

Multivariable logistic regression models were used to determine the relationship between each of the demographic and preoperative risk factors and the likelihood of in-hospital mortality. Details regarding risk model development are available elsewhere (Parker et al. 2005a, b). Briefly, the risk models were developed for the CCMRP and CCORP data separately. Table 1 lists the factors used in the risk adjustment compared with the factors included in the STS model and models used by the states of New York, New Jersey, Pennsylvania, and Massachusetts. The coefficients were developed on the patients included in the data sets without any missing variables (more than 89 percent of the cases in both data sets) to ensure that the effects of risk factors were estimated based on the most complete data available. Then, for all patient fields that were incomplete, parameters were imputed using the lowest risk category, so that there were no missing data in any of the seven final data sets (one for each year).
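The imputation rule described above can be illustrated with a short Python sketch. It is not the program's actual code: the field names and the lowest-risk values in `LOWEST_RISK` are hypothetical placeholders, and the real categories are those defined in the CCMRP and CCORP reports.

```python
# Hypothetical lowest-risk category for each risk factor (illustrative only;
# the actual categories are defined in the CCMRP/CCORP reports).
LOWEST_RISK = {
    "cardiogenic_shock": "no",
    "dialysis": "no",
    "nyha_class": "I",
}


def impute_lowest_risk(record, lowest_risk=LOWEST_RISK):
    """Return a copy of a patient record with missing fields set to the lowest-risk category."""
    completed = dict(record)  # copy so the submitted record is left unchanged
    for field, default in lowest_risk.items():
        if completed.get(field) is None:
            completed[field] = default
    return completed


patient = {"cardiogenic_shock": None, "dialysis": "yes", "nyha_class": None}
print(impute_lowest_risk(patient))
```

One consequence of this rule is that a missing field can never raise a patient's expected mortality, while recorded values are left as submitted.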

Volume–Outcome Models

To assess the association between annual hospital volume of isolated CABGs and risk-adjusted mortality, annual hospital volume (the only fixed-effects hospital-level variable) was specified in three ways. First, we entered annual volume as a continuous variable for each year-specific model. Second, we entered annual volume as a quadratic (linear plus squared term) for each year-specific model to evaluate a possible curvilinear relation between mortality and volume. Third, we dichotomized volume using two thresholds that we selected before any analyses: fewer than 250 versus at least 250 CABG cases/year, and fewer than 450 versus at least 450 cases/year, consistent with previously used volume thresholds (Birkmeyer et al. 2003; Parker et al. 2005a, b). Of note, a hospital could therefore be categorized as low volume in one year and high volume in another year if its number of CABG procedures crossed the specified threshold. Finally, to assess whether other transformations should be considered, for each year as well as for all years combined, we generated scatterplots of observed mortality versus volume and of risk-adjusted mortality versus volume, and produced locally weighted scatterplot smoothing (LOWESS) curves for visual inspection (Cleveland 1979; Royston 1991).
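The three volume specifications can be sketched in Python as follows. This is an illustration under stated assumptions rather than the code used for the analyses: the function name, the dictionary keys, and the scaling of the squared term (raw volume squared) are assumptions, and the example hospital is hypothetical.

```python
def volume_terms(annual_volume):
    """Return the volume covariates for one hospital in one year."""
    return {
        "volume_per_100": annual_volume / 100.0,   # continuous specification
        "volume_squared": annual_volume ** 2,      # added for the quadratic specification
        "high_250": int(annual_volume >= 250),     # 1 if at least 250 cases/year
        "high_450": int(annual_volume >= 450),     # 1 if at least 450 cases/year
    }


# Hypothetical hospital whose volume crosses the 250-case threshold between years.
yearly_volume = {2002: 262, 2003: 238}
for year, volume in sorted(yearly_volume.items()):
    print(year, volume_terms(volume))
```

Because the covariates are rebuilt for each year-specific model, the same hospital is coded as high volume in one year and low volume in the next, as noted above.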

For data collected during the mandatory reporting program in 2003 and 2004, we conducted separate analyses on the 86 and 85 hospitals, respectively, that had previously reported data during any of the 5 years of the voluntary reporting period (CCMRP). This was done to assess potential explanations for any change in the magnitude of the volume–outcome association between the voluntary reporting period (1998–2002) and the mandatory reporting period (2003 and 2004).

Statistical Methods

The CABG volume–outcome relationship was analyzed with a hierarchical logistic regression model (PROC GLIMMIX, SAS, version 9.1) with hospital-specific random intercepts. These models are increasingly used in health services research to analyze multilevel data, such as patient data that are aggregated by hospital (Christiansen and Morris 1997; Shahian et al. 2001). They are more appropriate than conventional logistic regression for using patient data to make inferences at the hospital level because they account for the “clustering” of patients with similar characteristics within hospitals (Christiansen and Morris 1997; Shahian et al. 2001). To aid in the visual inspection of the volume–mortality scatterplots, LOWESS curves were fitted to the data using Stata, version 9.2 (Cleveland 1979; Royston 1991).
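One common way to write a random-intercept logistic model of this kind is shown below. The notation is illustrative and is not taken from the program reports; the exact covariate set and parameterization are those described in Parker et al. (2005a, b).

```latex
\operatorname{logit}\,\Pr(Y_{ij} = 1)
  = \beta_0 + \mathbf{x}_{ij}^{\top}\boldsymbol{\beta}
  + \gamma\,\frac{V_j}{100} + u_j,
\qquad u_j \sim N(0, \sigma_u^2)
```

Here $Y_{ij}$ indicates in-hospital death for patient $i$ at hospital $j$, $\mathbf{x}_{ij}$ holds the patient-level risk factors, $V_j$ is the hospital's annual CABG volume, and $u_j$ is the hospital-specific random intercept. Under this parameterization, $\exp(\gamma)$ is the odds ratio per 100 additional operations.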

RESULTS

Overall hospital volume and mortality data are presented in Table 2. During the voluntary reporting program years of 1998, 1999, 2000, 2001, and 2002, the numbers of hospitals contributing data were 68, 81, 76, 71, and 70, respectively. During the mandatory program years of 2003 and 2004, the numbers of hospitals performing CABG surgeries were 121 and 120, respectively. Of these hospitals, 86 and 85, respectively, had previously participated in at least one of the years during the prior voluntary program. The median annual hospital CABG volume generally decreased over the years studied; from its peak of 217 in 2000, it fell by 2004 to 120 for all hospitals, and 121 for those 85 hospitals that had previously reported during the voluntary reporting program.

Table 2.

Overall Volume, Mortality, Hospital Volume, and Hospital Mortality Rates, by Year

| Year | Patients (n) | Hospitals (n) | Overall Mortality (%) | Hospital Volume (n): Median | Hospital Volume (n): Mean | Hospital Volume (n): Range | Hospital Mortality Rate (%): Median | Hospital Mortality Rate (%): Mean | Hospital Mortality Rate (%): Range |
|---|---|---|---|---|---|---|---|---|---|
| 1998 | 17,459 | 68 | 2.70 | 178 | 257 | 45–1,289 | 3.02 | 3.19 | 0.00–8.05 |
| 1999 | 21,973 | 81 | 2.83 | 196 | 271 | 58–1,597 | 3.03 | 3.32 | 0.00–8.28 |
| 2000 | 21,109 | 76 | 2.63 | 217 | 278 | 50–1,531 | 2.88 | 3.23 | 0.00–10.48 |
| 2001 | 18,550 | 71 | 2.84 | 197 | 261 | 38–1,237 | 2.94 | 3.37 | 0.60–15.00 |
| 2002 | 17,728 | 70 | 2.66 | 187 | 253 | 29–1,062 | 2.76 | 3.16 | 0.00–10.34 |
| 2003 (v)* | 16,420 | 81 | 2.25 | 151 | 203 | 25–992 | 2.29 | 2.49 | 0.00–5.71 |
| 2003 (m)† | 21,272 | 121 | 2.34 | 140 | 176 | 25–992 | 2.50 | 2.58 | 0.00–10.34 |
| 2004 (v)* | 14,562 | 80 | 2.77 | 128 | 182 | 4–975 | 2.76 | 3.02 | 0.00–9.62 |
| 2004 (m)† | 19,133 | 120 | 2.73 | 120 | 159 | 4–975 | 2.58 | 2.94 | 0.00–11.54 |

* 2003 (v) and 2004 (v) = 2003 and 2004 data from the hospitals that participated at least 1 year in the voluntary reporting program.

† 2003 (m) and 2004 (m) = 2003 and 2004 data from the hospitals that participated in the mandatory reporting program.

Table 3 shows that when hospital volume was entered as a continuous variable, there was a statistically significant association between higher hospital volume and lower mortality in 1999, 2000, 2001, and 2002. While this association was not statistically significant in 1998, the OR associated with hospital volume was similar to those in the four following years; however, there were fewer patients in the 1998 cohort. To give an example of the magnitude of this association, in the year 2000, the OR associated with each additional 100 CABG procedures performed was 0.942. Therefore, a hospital with the median in-hospital mortality rate of 2.88 percent would be expected to have its mortality rate drop by 0.17 percentage points to 2.71 percent if it performed 100 additional procedures. During 2003 and 2004, there was no significant volume–outcome association among either the hospitals that had previously reported during the voluntary program or the entire set of hospitals reporting in the mandatory program.
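The arithmetic behind this example can be checked with a short Python sketch. The function name is ours and the calculation is an illustration, not part of the original analysis. It applies the 2000 OR to the median hospital mortality rate in two ways: by converting to odds and back, and by treating the OR as a risk ratio, which is a close approximation when the baseline risk is low.

```python
def apply_odds_ratio(baseline_risk, odds_ratio):
    """Convert a baseline probability to the probability implied by an odds ratio."""
    odds = baseline_risk / (1.0 - baseline_risk)
    new_odds = odds * odds_ratio
    return new_odds / (1.0 + new_odds)


baseline = 0.0288    # median hospital mortality rate in 2000 (Table 2)
or_per_100 = 0.942   # OR per 100 additional operations in 2000 (Table 3)

exact = apply_odds_ratio(baseline, or_per_100)
approx = baseline * or_per_100  # treats the OR as a risk ratio

print(f"odds-based:  {exact:.4%}")
print(f"risk-ratio:  {approx:.4%}")
```

The risk-ratio shortcut reproduces the 2.71 percent quoted above; the odds-based conversion gives a marginally higher figure, and the difference is negligible at this level of risk.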

Table 3.

Results of Hierarchical Multivariable Regression, Assessing Association between In-Hospital Mortality and Hospital Annual Volume

| Year | Volume Effect | SE | p-Value | OR | 95% CI |
|---|---|---|---|---|---|
| Annual volume as a continuous variable (per 100 patients per year) | | | | | |
| 1998 | −0.039 | 0.027 | 0.154 | 0.962 | 0.912–1.015 |
| 1999 | −0.046 | 0.019 | 0.017 | 0.955 | 0.920–0.991 |
| 2000 | −0.060 | 0.025 | 0.020 | 0.942 | 0.897–0.989 |
| 2001 | −0.067 | 0.027 | 0.015 | 0.935 | 0.887–0.986 |
| 2002 | −0.055 | 0.027 | 0.041 | 0.946 | 0.899–0.997 |
| 2003 (v)* | −0.007 | 0.032 | 0.826 | 0.993 | 0.932–1.058 |
| 2003 (m)† | −0.003 | 0.030 | 0.911 | 0.997 | 0.939–1.058 |
| 2004 (v)* | −0.026 | 0.034 | 0.448 | 0.974 | 0.911–1.042 |
| 2004 (m)† | −0.016 | 0.037 | 0.658 | 0.984 | 0.915–1.058 |
| Annual volume >250 (<250 is reference group) | | | | | |
| 1998 | −0.077 | 0.155 | 0.619 | 0.926 | 0.684–1.254 |
| 1999 | −0.199 | 0.112 | 0.079 | 0.820 | 0.659–1.020 |
| 2000 | −0.423 | 0.132 | 0.002 | 0.655 | 0.506–0.849 |
| 2001 | −0.348 | 0.143 | 0.017 | 0.706 | 0.534–0.934 |
| 2002 | −0.292 | 0.132 | 0.031 | 0.747 | 0.576–0.968 |
| 2003 (v)* | 0.007 | 0.159 | 0.965 | 1.007 | 0.737–1.376 |
| 2003 (m)† | 0.086 | 0.141 | 0.544 | 1.089 | 0.827–1.435 |
| 2004 (v)* | −0.001 | 0.160 | 0.995 | 0.999 | 0.731–1.366 |
| 2004 (m)† | 0.163 | 0.162 | 0.317 | 1.177 | 0.856–1.618 |
| Annual volume >450 (<450 is reference group) | | | | | |
| 1998 | −0.286 | 0.203 | 0.164 | 0.751 | 0.505–1.119 |
| 1999 | −0.263 | 0.140 | 0.064 | 0.769 | 0.584–1.011 |
| 2000 | −0.323 | 0.202 | 0.114 | 0.724 | 0.487–1.076 |
| 2001 | −0.533 | 0.204 | 0.011 | 0.587 | 0.394–0.873 |
| 2002 | −0.327 | 0.174 | 0.064 | 0.721 | 0.513–1.013 |
| 2003 (v)* | 0.050 | 0.208 | 0.810 | 1.052 | 0.699–1.582 |
| 2003 (m)† | 0.016 | 0.206 | 0.940 | 1.016 | 0.678–1.522 |
| 2004 (v)* | −0.106 | 0.204 | 0.605 | 0.900 | 0.603–1.341 |
| 2004 (m)† | −0.050 | 0.231 | 0.829 | 0.951 | 0.605–1.495 |

Reported are the coefficients and statistics for volume, years 1998–2004. Data for covariates are not shown.

* 2003 (v) and 2004 (v) = 2003 and 2004 data from the hospitals that participated at least 1 year in the voluntary reporting program.

† 2003 (m) and 2004 (m) = 2003 and 2004 data from the hospitals that participated in the mandatory reporting program.

CI, confidence interval.
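The OR and CI columns in Table 3 follow from the volume effect (a log-odds coefficient) and its standard error. The Python sketch below shows the conversion for the 2000 continuous-volume row. It assumes a Wald interval with a normal critical value of 1.96, which may differ slightly from the degrees-of-freedom method used by the fitting software, and the function name is ours.

```python
import math


def odds_ratio_with_ci(coef, se, z=1.96):
    """Return (OR, lower, upper) from a log-odds coefficient and its standard error."""
    return (
        math.exp(coef),
        math.exp(coef - z * se),
        math.exp(coef + z * se),
    )


# Volume effect and SE for 2000, continuous volume per 100 patients (Table 3).
or_, lower, upper = odds_ratio_with_ci(-0.060, 0.025)
print(f"OR = {or_:.3f}, 95% CI: {lower:.3f}-{upper:.3f}")
# prints: OR = 0.942, 95% CI: 0.897-0.989
```

Under that assumption the result agrees with the tabulated row for 2000 to three decimal places.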

When hospital volume was entered as a quadratic term (linear plus squared), the squared term was not significant in eight of the nine models. In the year-specific model for 2000, the squared term was significant (coefficient = 0.000002; 95 percent confidence interval [CI]: 0.0000007–0.0000033; p = .004).

When annual hospital volume was dichotomized as fewer than 250 cases versus 250 or more, a significant association was found between volume and outcome during the years 2000–2002. This association disappeared during the mandatory reporting years, 2003 and 2004, with ORs of 1.007 (95 percent CI: 0.737–1.376) and 0.999 (95 percent CI: 0.731–1.366) for the hospitals that had participated in the voluntary program, and 1.089 (95 percent CI: 0.827–1.435) and 1.177 (95 percent CI: 0.856–1.618) for all hospitals performing CABG surgeries, respectively. This suggests that the loss of association did not result from including hospitals that had not participated in the voluntary program.

When annual hospital volume was dichotomized as fewer than 450 cases versus 450 or more, the ORs for mortality among high-volume hospitals compared with low-volume hospitals ranged from 0.587 to 0.769 from 1998 to 2002. For the years 2003 and 2004, the ORs were 1.016 and 0.951, respectively, for all hospitals and 1.052 and 0.900, respectively, for hospitals that had previously reported during the voluntary CCMRP reporting period. Of note, only six hospitals during the mandatory program performed 450 or more cases/year.

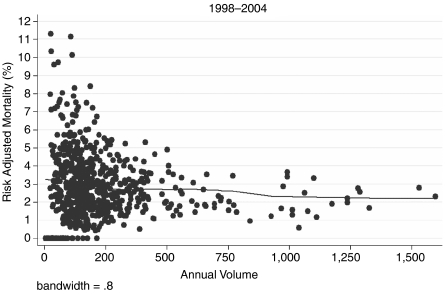

Finally, upon visual inspection of scatterplots and LOWESS curves of observed mortality and hospital volume, and risk-adjusted mortality and hospital volume, we found no evidence of nonlinear associations or evidence to suggest that other transformations or dichotomization thresholds should be considered. Figure 1 shows the scatterplot of risk-adjusted mortality versus annual volume for all years, 1998–2004. The scatterplots and LOWESS curves for observed mortality and risk-adjusted mortality for individual years are available in the electronic version for publication (Appendices A and B).

Figure 1.

Risk-Adjusted Mortality versus Annual Volume for all Years, 1998–2004. A LOWESS Curve Has Been Fitted to the Data for Visual Inspection

DISCUSSION

In California, we found that the hospital volume–outcome relationship for CABG surgery was consistent but small during the years 1998–2002, and that this relationship disappeared in 2003 and 2004. We found that the use of data collected during a voluntary statewide reporting program (1998–2002) appeared to yield unbiased estimates of the volume effect. That is, when we assessed the volume–outcome relationship for 2003 and 2004, conducting separate analyses on all hospitals as well as those hospitals that had previously participated in the voluntary reporting program, we found similar results. The disappearance of the volume–outcome relationship resulted, in part, from improved risk-adjusted outcomes among lower volume hospitals. It is unknown whether the overall improvement in mortality, especially at low-volume hospitals, during this study period was partially attributable to the state's public reporting of CABG surgery performance data. Low-volume hospitals may have implemented improved training programs, quality improvement activities, or other innovations to improve outcomes; these interventions may have been stimulated partially by the prospect of mandatory public reporting.

The policy implications of the volume–outcome association are important. If a clinically significant volume–outcome association exists, then legislators and policy makers should establish minimum volume guidelines and/or promote the regionalization of such services, as the New York Department of Health has done for many years. More patients would then receive care in higher volume centers, and average quality of care would improve. There are data that support this policy direction. Two large observational studies (Grumbach et al. 1995; Vaughan-Sarrazin et al. 2002) have compared outcomes of patients after CABG surgery in areas of high regionalization (e.g., Canada and New York) to areas of low regionalization (e.g., California). These reports have suggested that higher volume centers have lower mortality rates and that areas of high regionalization have better outcomes and less variability in outcomes when compared with areas of low regionalization. However, these studies have two limitations. First, both used administrative data, which are inferior to clinical data in their ability to support risk adjustment (Wray et al. 1997). Second, the data used in these analyses were from 1987–1989 (Grumbach et al. 1995) and 1994–1999 (Vaughan-Sarrazin et al. 2002), when overall CABG surgery mortality was higher and the volume–outcome association was more pronounced.

Our finding that the volume–outcome relationship has diminished over time is consistent with previous reports (Sowden, Deeks, and Sheldon 1995; Clark 1996; Kalant and Shrier 2004). In a recent literature review, Kalant and Shrier (2004) found that studies from the 1980s demonstrated that the risk of death after CABG surgery was 3–5 percent less at high-volume centers than at low-volume centers. Studies since 1990, on the other hand, have generally found that the risk of death after CABG surgery is between 0 and 3 percent less at high-volume centers (Kalant and Shrier 2004). These and other authors suggest that this trend is due to both general advances in medicine and maturation of the CABG procedure (Shahian and Normand 2003; Kalant and Shrier 2004). Peterson et al. (2004) analyzed data from 2000 to 2001 using the STS National Cardiac Database and found only a very small volume–outcome association, corresponding to a reduction in mortality of 0.07 percent for every additional 100 CABG surgeries performed. Birkmeyer et al. (2002) also found a <2 percent difference in adjusted mortality between very low- and very high-volume hospitals performing CABG surgery in a national sample of Medicare patients. The most current data and systematic reviews of the CABG volume–outcome relationship conclude that caution should be exercised to avoid overinterpreting the significant findings of previous studies and that hospital volume may no longer be an adequate quality metric for CABG surgery (Sowden, Deeks, and Sheldon 1995; Sowden and Sheldon 1998; Shahian and Normand 2003; Kalant and Shrier 2004).

Using databases in which hospitals voluntarily report performance data to estimate the volume–outcome relationship could lead to selection bias. Several analyses reporting significant volume–outcome associations have used such databases (Clark 1996; Christian et al. 2003; Peterson et al. 2004). Bias could be introduced if either low- or high-volume hospitals not participating in the voluntary program had worse than average outcomes, resulting in either an under- or overestimation of the volume–outcome relationship, respectively. While other studies have shown that nonparticipating entities tend to have worse outcomes than participating entities (McCormick et al. 2002), ours is the first study that directly evaluated this potential bias for the CABG surgery volume–outcome relationship. Although we found that the hospitals that did not participate in the voluntary program tended to be smaller and to have higher observed mortality rates, including them in the full analyses did not significantly change the lack of volume–outcome association during the mandatory reporting program. On average, these previously nonparticipating hospitals had risk-adjusted mortality rates in 2003 and 2004 similar to those of the previously participating hospitals.

It is also possible that in California, the introduction of a mandatory CABG surgery outcome reporting program stimulated quality improvement efforts, particularly among low-quality outliers. While several studies have suggested that the publication of CABG surgery reports has been associated with reductions in CABG mortality (Hannan et al. 1995; Rosenthal, Quinn, and Harper 1997) and improvements in quality of care (Bentley and Nash 1998; Rainwater, Romano, and Antonius 1998; Chassin 2002), it is unknown whether the observed reduction in hospital mortality, particularly among hospitals performing fewer than 250 surgeries/year, and the recent lack of a volume–outcome association in California reflect such improvements. Clearly, other factors may have contributed to this temporal finding, including patient selection, standardization of procedures, improved training, better equipment, and diffusion of innovation. Of note, expected mortality rates remained stable among low-volume hospitals throughout the study period, arguing against the possibility that low-volume hospitals inflated clinical risk factors during the mandatory program. It is also not known whether our findings reflect trends in other states or nationally, or whether they might be limited to states with public reporting programs or those that collect clinical data. Another limitation of our study is that we only examined 7 years of data, and the absence of the volume–outcome association may not be sustained. In addition, relative to other states, California has low-volume CABG centers, and there may be a volume–outcome association above the volumes reflected in the California data. Finally, our risk-adjustment models, although based on detailed clinical data similar to those collected in New York and New Jersey, may have omitted unidentified confounders.

Although many studies have supported the existence of an association between CABG hospital volume and mortality, this association has decreased with time, and in our analyses of the most recent California data, the relationship has disappeared. Our findings, in combination with the findings of other researchers, challenge the proposition that regionalizing services will result in improved quality of care, and raise questions about the validity of the Leapfrog Group's Evidence-based Hospital Referral standards for CABG volume. Other interventions, such as public reporting and collaborative quality improvement, may foster resolution of outcome disparities between low- and high-volume hospitals. Future analyses are warranted to investigate whether the volume–outcome association still exists for other cardiovascular procedures or in other states that do not impose regionalization.

Acknowledgments

This work was supported, in part, by a contract from the State of California's Office of Statewide Health Planning and Development, Agreement 03-4275.

Disclosures: The authors have no conflicts of interest or financial interests to disclose.

Disclaimers: None.

Supplementary material

The following supplementary material for this article is available:

Scatter-plots of Observed Hospital Mortality versus Hospital Annual Volume, Years 1998–2004.

Scatter-plots of Risk-Adjusted Hospital Mortality versus Hospital Annual Volume, Years 1998–2004.

This material is available as part of the online article from: http://www.blackwell-synergy.com/doi/abs/10.1111/j.1475-6773.2007.00740.x (this link will take you to the article abstract).

Please note: Blackwell Publishing is not responsible for the content or functionality of any supplementary materials supplied by the authors. Any queries (other than missing material) should be directed to the corresponding author for the article.

REFERENCES

- Bentley JM, Nash DB. How Pennsylvania Hospitals Have Responded to Publicly Released Reports on Coronary Artery Bypass Graft Surgery. Joint Commission Journal on Quality Improvement. 1998;24(1):40–9. doi: 10.1016/s1070-3241(16)30358-3.

- Birkmeyer JD, Siewers AE, Finlayson EV, Stukel TA, Lucas FL, Batista I, Welch HG, Wennberg DE. Hospital Volume and Surgical Mortality in the United States. New England Journal of Medicine. 2002;346(15):1128–37. doi: 10.1056/NEJMsa012337.

- Birkmeyer JD, Stukel TA, Siewers AE, Goodney PP, Wennberg DE, Lucas FL. Surgeon Volume and Operative Mortality in the United States. New England Journal of Medicine. 2003;349(22):2117–27. doi: 10.1056/NEJMsa035205.

- Chassin MR. Achieving and Sustaining Improved Quality: Lessons from New York State and Cardiac Surgery. Health Affairs (Millwood). 2002;21(4):40–51. doi: 10.1377/hlthaff.21.4.40.

- Christian CK, Gustafson ML, Betensky RA, Daley J, Zinner MJ. The Leapfrog Volume Criteria May Fall Short in Identifying High-Quality Surgical Centers. Annals of Surgery. 2003;238(4):447–55; discussion 55–7. doi: 10.1097/01.sla.0000089850.27592.eb.

- Christiansen CL, Morris CN. Improving the Statistical Approach to Health Care Provider Profiling. Annals of Internal Medicine. 1997;127(8, part 2):764–8. doi: 10.7326/0003-4819-127-8_part_2-199710151-00065.

- Clark RE. Outcome as a Function of Annual Coronary Artery Bypass Graft Volume. Annals of Thoracic Surgery. 1996;61(1):21–6. doi: 10.1016/0003-4975(95)00734-2.

- Cleveland WS. Robust Locally Weighted Regression and Smoothing Scatterplots. Journal of the American Statistical Association. 1979;74:829–36.

- Damberg CL, Chung RE, Steimle AE. The California Report on Coronary Artery Bypass Graft Surgery: 1997–1998 Hospital Data. 2001 Technical Report. San Francisco: Pacific Business Group on Health and the California Office of Statewide Health Planning and Development.

- Damberg CL, Danielsen B, Parker JP, Castles AG, Steimle AE. The California Report on Coronary Artery Bypass Graft Surgery: 1999 Hospital Data. 2003 Technical Report. San Francisco: Pacific Business Group on Health and the California Office of Statewide Health Planning and Development.

- Elixhauser A, Steiner C, Fraser I. Volume Thresholds and Hospital Characteristics in the United States. Health Affairs (Millwood). 2003;22(2):167–77. doi: 10.1377/hlthaff.22.2.167.

- Escarce JJ, Van Horn RL, Pauly MV, Williams SV, Shea JA, Chen W. Health Maintenance Organizations and Hospital Quality for Coronary Artery Bypass Surgery. Medical Care Research and Review. 1999;56(3):340–62; discussion 63–72. doi: 10.1177/107755879905600304.

- Gandjour A, Bannenberg A, Lauterbach KW. Threshold Volumes Associated with Higher Survival in Health Care: A Systematic Review. Medical Care. 2003;41(10):1129–41. doi: 10.1097/01.MLR.0000088301.06323.CA.

- Grumbach K, Anderson GM, Luft HS, Roos LL, Brook R. Regionalization of Cardiac Surgery in the United States and Canada. Journal of the American Medical Association. 1995;274(16):1282–8.

- Halm EA, Lee C, Chassin MR. Is Volume Related to Outcome in Health Care? A Systematic Review and Methodologic Critique of the Literature. Annals of Internal Medicine. 2002;137(6):511–20. doi: 10.7326/0003-4819-137-6-200209170-00012.

- Hannan EL, Siu AL, Kumar D, Kilburn H, Jr, Chassin MR. The Decline in Coronary Artery Bypass Graft Surgery Mortality in New York State. The Role of Surgeon Volume. Journal of the American Medical Association. 1995;273(3):209–13.

- Hannan EL, Wu C, Ryan TJ, Bennett E, Culliford AT, Gold JP, Hartman A, Isom OW, Jones RH, McNeil B, Rose EA, Subramanian VA. Do Hospitals and Surgeons with Higher Coronary Artery Bypass Graft Surgery Volumes Still Have Lower Risk-Adjusted Mortality Rates? Circulation. 2003;108(7):795–801. doi: 10.1161/01.CIR.0000084551.52010.3B.

- Hewitt ME, Petitti DB; National Cancer Policy Board (U.S.), National Research Council (U.S.), and Division on Earth and Life Studies. Interpreting the Volume–Outcome Relationship in the Context of Cancer Care. Washington, DC: National Academy Press; 2001.

- Kalant N, Shrier I. Volume and Outcome of Coronary Artery Bypass Graft Surgery: Are More and Less the Same? Canadian Journal of Cardiology. 2004;20(1):81–6.

- Luft HS, Bunker JP, Enthoven AC. Should Operations Be Regionalized? New England Journal of Medicine. 1979;301(25):1364–9. doi: 10.1056/NEJM197912203012503.

- Luft HS, Garnick DW, Mark DH, Peltzman DJ, Phibbs CS, Lichtenberg E, McPhee SJ. Does Quality Influence Choice of Hospital? Journal of the American Medical Association. 1990;263(21):2899–906.

- Massachusetts Data Analysis Center (MASS-DAC). Adult Coronary Artery Bypass Graft Surgery in the Commonwealth of Massachusetts, January 1–December 31, 2002. Boston: Department of Health Care Policy, Harvard Medical School; 2004. [2007 May 4]. Available at http://www.massdac.org/reports/CABG%202004.pdf.

- McCormick D, Himmelstein DU, Woolhandler S, Wolfe SM, Bor DH. Relationship between Low Quality-of-Care Scores and HMOs' Subsequent Public Disclosure of Quality-of-Care Scores. Journal of the American Medical Association. 2002;288(12):1484–90. doi: 10.1001/jama.288.12.1484.

- New Jersey Department of Health and Senior Services. “Cardiac Surgery in New Jersey 2002.” 2005. Technical Report, June.

- New York State Department of Health. “Adult Cardiac Surgery in New York State 2000–2002.” 2004. [2007 May 4]. Available at http://www.health.state.ny.us/nysdoh/heart/pdf/2000-2002_cabg.pdf.

- Nobilio L, Ugolini C. Different Regional Organisational Models and the Quality of Health Care: The Case of Coronary Artery Bypass Graft Surgery. Journal of Health Services Research and Policy. 2003;8(1):25–32. doi: 10.1177/135581960300800107.

- Parker JP, Li Z, Damberg CL, Danielsen B, Marcin J, Dai J, Steimle AE. The California Report on Coronary Artery Bypass Graft Surgery 2000–2002 Hospital Data. San Francisco: California Office of Statewide Health Planning and Development and the Pacific Business Group on Health; 2005a.

- Parker JP, Li Z, Danielsen B, Marcin J, Dai J, Mahendra G, Steimle AE. The California Report on Coronary Artery Bypass Graft Surgery 2003 Hospital Data. San Francisco: California Office of Statewide Health Planning and Development and the Pacific Business Group on Health; 2005b.

- Pennsylvania Health Care Cost Containment Council. “Pennsylvania's Guide to Coronary Artery Bypass Graft Surgery 2003 Information About Hospitals and Cardiothoracic Surgeons.” 2005. [2007 May 4]. Available at http://www.phc4.org/reports/cabg/03/docs/cabg2003report.pdf.

- Peterson ED, Coombs LP, DeLong ER, Haan CK, Ferguson TB. Procedural Volume as a Marker of Quality for CABG Surgery. Journal of the American Medical Association. 2004;291(2):195–201. doi: 10.1001/jama.291.2.195.

- Rainwater JA, Romano PS, Antonius DM. The California Hospital Outcomes Project: How Useful Is California's Report Card for Quality Improvement? Joint Commission Journal on Quality Improvement. 1998;24(1):31–9. doi: 10.1016/s1070-3241(16)30357-1.

- Romano PS, Zhou H. Do Well-Publicized Risk-Adjusted Outcomes Reports Affect Hospital Volume? Medical Care. 2004;42(4):367–77. doi: 10.1097/01.mlr.0000118872.33251.11.

- Rosenthal GE, Quinn L, Harper DL. Declines in Hospital Mortality Associated with a Regional Initiative to Measure Hospital Performance. American Journal of Medical Quality. 1997;12(2):103–12. doi: 10.1177/0885713X9701200204.

- Royston P. “Lowess Smoothing.” Stata Technical Bulletin. 1991:7–9.

- Shahian DM, Normand SL. The Volume–Outcome Relationship: From Luft to Leapfrog. Annals of Thoracic Surgery. 2003;75(3):1048–58. doi: 10.1016/s0003-4975(02)04308-4.

- Shahian DM, Normand SL, Torchiana DF, Lewis SM, Pastore JO, Kuntz RE, Dreyer PI. Cardiac Surgery Report Cards: Comprehensive Review and Statistical Critique. Annals of Thoracic Surgery. 2001;72(6):2155–68. doi: 10.1016/s0003-4975(01)03222-2.

- Shroyer AL, Coombs LP, Peterson ED, Eiken MC, DeLong ER, Chen A, Ferguson TB, Jr, Grover FL, Edwards FH. The Society of Thoracic Surgeons: 30-Day Operative Mortality and Morbidity Risk Models. Annals of Thoracic Surgery. 2003;75(6):1856–64; discussion 64–5. doi: 10.1016/s0003-4975(03)00179-6.

- Sowden AJ, Deeks JJ, Sheldon TA. Volume and Outcome in Coronary Artery Bypass Graft Surgery: True Association or Artefact? British Medical Journal. 1995;311(6998):151–5. doi: 10.1136/bmj.311.6998.151.

- Sowden AJ, Sheldon TA. Does Volume Really Affect Outcome? Journal of Health Services Research and Policy. 1998;3(3):187–90. doi: 10.1177/135581969800300311.

- Vaughan-Sarrazin MS, Hannan EL, Gormley CJ, Rosenthal GE. Mortality in Medicare Beneficiaries following Coronary Artery Bypass Graft Surgery in States with and without Certificate of Need Regulation. Journal of the American Medical Association. 2002;288(15):1859–66. doi: 10.1001/jama.288.15.1859.

- Welke KF, Ferguson TB, Jr, Coombs LP, Dokholyan RS, Murray CJ, Schrader MA, Peterson ED. Validity of the Society of Thoracic Surgeons National Adult Cardiac Surgery Database. Annals of Thoracic Surgery. 2004;77(4):1137–9. doi: 10.1016/j.athoracsur.2003.07.030.

- Wray NP, Hollingsworth JC, Peterson NJ, Ashton CM. Case-Mix Adjustment Using Administrative Databases: A Paradigm to Guide Future Research. Medical Care Research and Review. 1997;54(3):326–56. doi: 10.1177/107755879705400306.
