Abstract

BACKGROUND

The Veterans Health Administration (VHA) is a leader in developing computerized clinical reminders (CCRs). Primary care physicians’ (PCPs) evaluation of VHA CCRs could influence their future development and use within and outside the VHA.

OBJECTIVE

Survey PCPs about usefulness and usability of VHA CCRs.

DESIGN AND PARTICIPANTS

In a national survey, VHA PCPs rated the usefulness and usability of VHA CCRs on a 7-point scale, from which standardized scales (0–100) were constructed. A hierarchical linear mixed (HLM) model identified physician- and facility-level variables associated with more positive global assessment of CCRs.

RESULTS

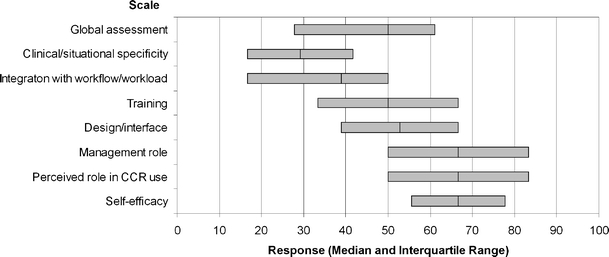

Four hundred sixty-one PCPs participated (response rate, 69%). Scale Cronbach’s alpha ranged from 0.62 to 0.82. Perceptions of VHA CCRs were primarily in the midrange, where higher ratings indicate more favorable attitudes (weighted standardized median, IQR): global assessment (50, 28–61), clinical/situational specificity (29, 17–42), integration with workflow/workload (39, 17–50), training (50, 33–67), VHA’s management of CCR use (67, 50–83), design/interface (53, 40–67), perceived role in CCR use (67, 50–83), and self-efficacy (67, 57–78). In an HLM model, design/interface (p < .001), self-efficacy (p < .001), integration with workflow/workload (p < .001), and training (p < .001) were associated with more favorable global assessments of CCRs. Facilities in the west as compared to the south had less favorable global assessments of CCRs (p = .033), whereas physicians with academic affiliation had more favorable global assessments (p = .045).

CONCLUSIONS

Our systematic assessment of end-users’ perceptions of VHA CCRs suggests that CCRs need to be developed and implemented with a continual focus on improvement based on end-user feedback. Potential target areas include better integration into the primary care clinic workflow/workload.

KEY WORDS: decision support systems clinical, primary health care, quality of health care, evidence-based medicine, medical records systems computerized

INTRODUCTION

Randomized controlled trials have demonstrated that computerized clinical reminders (CCRs) can improve adherence to preventive and chronic disease guidelines.1–6 CCRs, which are triggered by information in specific data fields, are automated decision support tools that can consistently prompt clinicians to take evidence-based actions for prespecified types of conditions. As such, CCRs, as part of a multicomponent approach described in the Chronic Care Model,7 are a promising quality improvement technology to help implement evidence-based care and improve care.

Despite the promise of CCRs, their effectiveness in practice is mixed.1,8 Understanding and characterizing clinicians’ perceptions of current CCR features and implementation strategies are important steps toward improving CCR technology. A conceptual framework developed for a study of Human Immunodeficiency Virus (HIV) CCRs described several factors that influence perceived usefulness and usability of CCRs.9 These include system, team, and individual factors as well as factors related to the CCR interface design and clinical appropriateness.

The Veterans Health Administration (VHA), a leader in the development of clinical reminders,10,11 uses a variety of CCRs to improve care.12 Some VHA CCRs apply to all patients, whereas others are triggered based upon diagnostic codes or other information embedded in the electronic health record (EHR). The types of CCRs available and the rules used to determine eligibility, periodicity, and requirements for satisfying the CCRs closely mirror those found in the VHA’s External Peer Review Program (EPRP),12 a performance measurement program. Although the EPRP does not require CCR use, facilities implement CCRs to help clinicians meet EPRP targets. However, a study of CCRs involving 451 clinicians in 8 VHA facilities found considerable range in adherence to use of CCRs (29–100%).13 A recent study found widespread implementation of information technology (IT) clinical support in the VHA, particularly in hospitals that were urban and had cooperative cultures, but the study also found opportunities to enhance the use of IT to support clinical decision making, such as better use of CCRs.14

Asking clinicians from VHA facilities across the United States (U.S.) to provide feedback about CCR usefulness and usability is an important step toward developing and implementing CCRs more effectively. Our main objective was to describe primary care physicians’ (PCPs) perceptions of CCR usability and usefulness. A secondary objective was to identify correlates of more favorable global assessment (overall satisfaction, perceived effectiveness, and perceived usefulness) of CCRs.

METHODS

Study Design

Reminder Design and Conceptual Framework

Common features characterize all the CCRs currently in use within the VHA. The resolution of the CCR requires the clinician to access a list of CCRs that are “due” for the patient. Selecting a particular CCR launches a CCR pop-up window, which includes a list of questions with prespecified response options. The clinician’s responses are inserted into the clinic note. Some CCRs are linked to other parts of the EHR, such as lab orders. As CCR resolution requires clinicians to be proactive, it is possible for CCRs to remain unresolved.

We used a previously developed conceptual framework to study physician perceptions regarding these CCRs.9 Organizational factors include CCR-related training, opinion leaders, clinic workload, time constraints, maintenance and management of CCRs, and financial constraints. Team factors include coordinating responsibilities and defining team member roles. Individual factors include attitude (overall satisfaction with CCRs), self-efficacy for using CCRs, and expertise. Interface design factors encompass ease of use, efficiency, and function of the CCR interface. Factors related to interaction with other tools include redundancy and dependency of CCRs with other tools. Finally, clinical appropriateness is how well the CCR applies to the clinical situation or the patient for whom the CCR is triggered.

Data Sources

Our data sources were a national survey we designed for this study that assessed physicians’ perceptions of CCRs, the VHA Personnel and Accounting Integrated Data (PAID) database,15 the 1996 and 1999 VHA Managed Care Surveys, and the 2005 Department of Health and Human Services Area Resource File.

Sampling Frame and Data Collection

The survey’s sampling frame was the PAID database’s list of primary care physicians (internists, family physicians, and geriatricians). We used a stratified random sampling approach to obtain an initial sample of 1,000 physicians: At four sites (Greater Los Angeles, Cincinnati, Indianapolis, and Minneapolis), we sampled all PCPs. At the remaining VHA sites, we randomly sampled PCPs (random sampling fraction, 15%). We chose this stratified approach because qualitative studies on CCRs were underway at the 4 sites,16 enabling us to compare our results with those obtained through other methods.
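Because the two strata were sampled at different rates, weighted estimates depend on design weights. The Python sketch below illustrates one common choice, inverse-probability-of-selection weights. This is an assumption: the text does not state the exact weighting scheme or whether a nonresponse adjustment was applied, and the names `SAMPLING_FRACTION`, `design_weight`, and `weighted_share` are hypothetical.

```python
# Hypothetical design weights for the two sampling strata described above.
# Assumption: weight = 1 / probability of selection, with no nonresponse adjustment.
SAMPLING_FRACTION = {
    "oversampled_site": 1.00,  # all PCPs sampled at the 4 sites
    "other_site": 0.15,        # 15% random sample at the remaining sites
}


def design_weight(stratum: str) -> float:
    """Return the inverse-probability-of-selection weight for a stratum."""
    return 1.0 / SAMPLING_FRACTION[stratum]


def weighted_share(strata: list[str], target: str) -> float:
    """Weighted proportion of sampled physicians who belong to the target stratum."""
    weights = [design_weight(s) for s in strata]
    in_target = sum(w for s, w in zip(strata, weights) if s == target)
    return in_target / sum(weights)
```

Under this scheme, each physician from a randomly sampled site stands in for roughly 6.7 physicians, whereas each physician from an oversampled site represents only himself or herself.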

We collected survey data in 3 waves between March 2005 and October 2005: Web-based (n = 403, 71%), paper (n = 98, 17%), and telephone (n = 69, 12%). Trained staff telephoned, up to 3 times, nonresponders to our Web and paper surveys. Staff used a standardized script and followed a written protocol, offering to administer the survey via telephone or to mail another copy of the questionnaire. Each respondent’s department/facility was entered in a drawing for $1,000 for quality improvement activities. Local institutional review boards approved the study protocol.

Eligibility and Response Rate

A clinician was eligible for the survey if he/she met 3 criteria: (1) identified himself/herself as a PCP, (2) provided direct patient care at a VHA facility at least a half-day per week, and (3) responded that he/she uses VHA CCRs. Of the 570 respondents, only 31 (5%) were deemed ineligible because they responded that they had never used a VHA CCR. Our weighted sample response rate was 69% (4 sites, 66%; other VHA sites, 69%).

Survey Instrument and Scale Development

Several survey instruments provided items for our questionnaire: Computer System Usability Questionnaire,17 1999 Veterans Affairs Survey of Primary Care Practices,18 and the 2000 Veterans Affairs Survey of HIV/AIDS Programs and Practices.19 In addition, we developed items to triangulate findings from prior observations and interviews about barriers to effective use of CCRs.16

We developed scales from the survey items as a method of data reduction, using the CCR usability framework of Patterson et al. as a guide.9 Higher scores indicate a more favorable attitude toward CCRs; items were reverse scored when necessary to provide consistent direction. We calculated standardized scores (standardized response range, 0–100; original response range, 1–7) to facilitate comparison among items and scales. Table 1 lists the items that contributed to each scale, paraphrased for conciseness. A copy of the survey instrument is available upon request. The scales are: (1) integration with workload/work flow (3 items, Cronbach’s α = .69); (2) clinical/situational specificity (4 items, α = .62); (3) perceived role in CCR use (2 items, α = .72); (4) design/interface factors (6 items, α = .82); (5) sources of training for learning how to use CCRs (4 items, α = .76); (6) VHA management of CCRs (single item); (7) self-efficacy (9 items, α = .68); and (8) global assessment of CCRs (3 items, α = .79). An example of poor clinical/situational specificity is a CCR that prompts a clinician to conduct a diabetic eye-screening exam for a patient with chronic binocular blindness. Our “perceived role in CCR use” scale assesses perceived responsibility for completing CCRs. The design/interface scale assesses issues related to the CCR screen. Self-efficacy is one’s assessment or confidence in his/her ability to effectively use CCRs in his/her VHA clinic, using VHA computers. The global assessment of CCRs scale is composed of 3 survey items that query 3 domains: satisfaction/usability, perceived effectiveness, and usefulness.
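The scoring steps described above can be sketched in Python. The linear rescaling from 1–7 to 0–100 is consistent with the item values reported in Table 1 (multiples of 16.7), but the function names and the use of the standard Cronbach’s alpha formula are illustrative assumptions, not the authors’ code.

```python
from statistics import pvariance


def standardize(response: int, reverse: bool = False) -> float:
    """Map a 1-7 response onto 0-100, reverse scoring first if requested."""
    if not 1 <= response <= 7:
        raise ValueError("response must be between 1 and 7")
    if reverse:
        response = 8 - response  # 1 <-> 7, 2 <-> 6, 3 <-> 5
    return (response - 1) / 6 * 100


def cronbach_alpha(items: list[list[float]]) -> float:
    """Cronbach's alpha, where items[i][j] is respondent j's score on item i."""
    k = len(items)
    # Total score per respondent across all items in the scale.
    totals = [sum(scores) for scores in zip(*items)]
    item_variance = sum(pvariance(scores) for scores in items)
    return k / (k - 1) * (1 - item_variance / pvariance(totals))
```

For example, an original response of 2 on a reverse-scored item becomes 6 after reversal and about 83.3 on the standardized scale.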

Table 1.

Primary Care Physicians’ Perceptions of VHA CCRs (Weighted, Standardized)

| Variable name | n | Median | IQR |
|---|---|---|---|
| Global assessment | 458 | 50.0 | 27.8–61.1 |
| Overall satisfied with CCRs | 460 | 50.0 | 16.7–66.7 |
| Overall CCRs are effective | 460 | 50.0 | 33.3–83.3 |
| Overall CCRs are not more useful in principle than in practice* | 460 | 33.3 | 16.7–66.7 |
| Clinical/situational specificity | 452 | 29.2 | 16.7–41.7 |
| CCR dialog boxes provide appropriate options for MD to resolve CCR* | 457 | 33.3 | 16.7–50.0 |
| Most CCRs apply to MD’s patients* | 460 | 33.3 | 16.7–66.7 |
| Adding “not applicable” would not improve use and effectiveness of CCRs* | 458 | 8.3 | 0–33.3 |
| Adding “pending” would not improve use and effectiveness of CCRs* | 455 | 16.7 | 0–50.0 |
| Integration with workload/flow | 460 | 38.9 | 16.7–50.0 |
| Enough time to complete CCRs under typical clinical workload | 460 | 33.3 | 0–50.0 |
| CCRs do not unnecessarily duplicate information in my progress notes* | 461 | 33.3 | 16.7–66.7 |
| Total CCR number is not too large* | 460 | 33.3 | 16.7–66.7 |
| Sources of training help MD learn CCRs | 451 | 50.0 | 33.3–66.7 |
| Training sessions | 457 | 50.0 | 16.7–83.3 |
| Online documentation | 453 | 50.0 | 16.7–66.7 |
| Performance feedback | 456 | 50.0 | 33.3–83.3 |
| Other clinical staff | 457 | 50.0 | 33.3–83.3 |
| Design/interface | 448 | 52.8 | 38.9–66.7 |
| Easy to use most CCRs | 459 | 50.0 | 16.7–66.7 |
| Easy to learn how to use CCRs | 461 | 66.7 | 50.0–83.3 |
| Expected functions and capabilities are available | 459 | 33.3 | 16.7–66.7 |
| Formats easy to use | 458 | 50.0 | 33.3–83.3 |
| Not surprised by actions of some CCRs* | 452 | 50.0 | 33.3–66.7 |
| Information on CCR screen is presented pleasantly | 457 | 50.0 | 33.3–66.7 |
| Management role | 460 | 66.7 | 50.0–83.3 |
| VHA managing of CCRs increases my completion of CCRs | 460 | 66.7 | 50.0–83.3 |
| Perceived role in CCR use | 459 | 66.7 | 50.0–83.3 |
| Know exactly which CCRs responsible for completing | 461 | 83.3 | 50.0–83.3 |
| Views CCRs as part of core work activity | 459 | 66.7 | 50.0–83.3 |
| Self-efficacy | 446 | 66.7 | 55.6–77.8 |
| CCRs help MD provide care | 459 | 66.7 | 50.0–83.3 |
| MD feels comfortable using CCRs | 457 | 83.3 | 50.0–100 |
| CCRs make MD more productive | 459 | 50.0 | 16.7–83.3 |
| MD recovers quickly when makes mistake using CCRs | 455 | 50.0 | 33.3–83.3 |
| Enough workstations are available | 461 | 83.3 | 66.7–100 |
| Computer speed sufficient to use CCRs* | 460 | 50.0 | 16.7–83.3 |
| Has proficient computer skills to use CCRs* | 460 | 100.0 | 83.3–100 |
| Prefers to use computer while with patient* | 461 | 83.3 | 50.0–100 |
| Makes no notes on paper to use later to complete CCRs* | 460 | 83.3 | 50.0–100 |

Each item response ranges from 0 to 100 where 0 = “strongly disagree” and 100 = “strongly agree”.

*Scores reverse coded from original item; variable labels have also been changed to provide consistent direction within scale.

Statistical Analyses

Main Analyses

To describe physicians’ perceptions of CCRs (main objective), we calculated a weighted median for each survey item and scale, considering the entire sample. To determine whether scale responses differ by data collection method (Web, paper, telephone), scale medians across differing data collection methods were examined with a Kruskal–Wallis test.
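A weighted median can be computed by sorting the responses and accumulating weights until half of the total weight is reached. The Python sketch below assumes this "lower" weighted median definition; statistical packages may apply a different tie-breaking or interpolation rule, and the function name `weighted_median` is hypothetical.

```python
def weighted_median(values: list[float], weights: list[float]) -> float:
    """Smallest value whose cumulative weight reaches half of the total weight."""
    if not values or len(values) != len(weights):
        raise ValueError("values and weights must be non-empty and of equal length")
    pairs = sorted(zip(values, weights))
    half = sum(weights) / 2
    cumulative = 0.0
    for value, weight in pairs:
        cumulative += weight
        if cumulative >= half:
            return value
    return pairs[-1][0]  # only reached through floating-point rounding
```

With equal weights this reduces to the ordinary (lower) median, so the effect of the sampling weights can be checked by comparing the two.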

To identify correlates of more favorable (higher) global assessment of CCRs (secondary objective), we constructed a hierarchical linear mixed (HLM) model to predict global assessment of CCRs. Each subject was assigned to 1 of 5 clusters: the 4 sites that were oversampled each constituted its own cluster and all the remaining sparsely sampled sites were assigned to 1 cluster. We used an HLM model because we suspected that perceptions of CCRs within each site might be correlated, especially within each of the oversampled sites. Variables tested in the model are listed in Table 3. We tested data collection method because PCPs who responded via Web-based survey may have more favorable perceptions of CCRs and PCPs who required more invitations to participate may have less favorable perceptions. Alpha was set at 0.05, and all p values are two-tailed. We used SAS versions 9.1 and 8.2 to conduct the analyses.
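As a notational sketch only, a random-intercept model of the kind described above can be written as follows. The symbols are introduced here for illustration and the exact specification used in the analysis is not given in the text: $y_{ij}$ is the global assessment score of physician $i$ in cluster $j$, $x_{kij}$ are the physician-level, facility-level, and scale covariates, and $u_j$ is a cluster-specific random intercept.

$$
y_{ij} = \beta_0 + \sum_{k} \beta_k x_{kij} + u_j + \varepsilon_{ij}, \qquad u_j \sim N(0, \sigma_u^2), \quad \varepsilon_{ij} \sim N(0, \sigma_\varepsilon^2)
$$

The random intercept $u_j$ is what allows responses from physicians in the same cluster to be correlated, which an ordinary regression would ignore.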

Table 3.

Hierarchical Linear Mixed Model Predicting Global Assessment of CCRs (N = 414)

| Variable | Parameter estimate (95% CI) | p value |
|---|---|---|
| Gender | | |
| Male | Reference | |
| Female | 0.22 (−0.34, 0.77) | .44 |
| Primary care specialty | | |
| Internal medicine | Reference | |
| Family physician | 0.34 (−0.56, 1.24) | .46 |
| Geriatrician | −0.28 (−1.34, 0.79) | .61 |
| Number of clinic half-days | −0.0066 (−0.104, 0.091) | .90 |
| Years since medical school | 0.028 (−0.0060, 0.0618) | .11 |
| Physician academic affiliation | | |
| No | Reference | |
| Yes | 0.66 (0.014, 1.310) | .045* |
| VHA tenure | | |
| <5 years | Reference | |
| 5–9 years | −0.019 (−0.73, 0.69) | .96 |
| 10–14 years | −0.53 (−1.52, 0.46) | .29 |
| 15 or more years | −0.48 (−1.38, 0.42) | .30 |
| Urban location | | |
| No | Reference | |
| Yes | 0.33 (−0.52, 1.18) | .45 |
| Primary care patient visits (log10) | −0.38 (−1.15, 0.39) | .34 |
| Facility academic affiliation | | |
| No | Reference | |
| Yes | −0.19 (−1.09, 0.71) | .67 |
| Region | | |
| South | Reference | |
| Northeast | 0.42 (−0.43, 1.27) | .33 |
| Midwest | −0.50 (−1.33, 0.33) | .24 |
| West | −0.89 (−1.712, −0.073) | .033* |
| Perceptions of CCRs scales | | |
| Clinical/situational specificity | 0.00089 (−0.065, 0.067) | .98 |
| Self-efficacy | 0.10 (0.059, 0.146) | <.001* |
| Integration with workload/flow | 0.23 (0.15, 0.31) | <.001* |
| Training | 0.10 (0.047, 0.154) | <.001* |
| Design/interface | 0.28 (0.22, 0.33) | <.001* |
| Perceived role in CCR use | 0.045 (−0.057, 0.148) | .38 |
| Management role | 0.092 (−0.070, 0.253) | .27 |
| Data collection method | | |
| Web-based questionnaire | Reference | |
| Paper questionnaire | 0.32 (−0.53, 1.16) | .40 |
| Telephone | −0.14 (−1.23, 0.95) | .77 |

*p < .05

Sensitivity Analyses

We also constructed 2 additional HLM models to determine how our imputation of missing facility academic affiliation data affected the results. Because the facilities with missing academic affiliation data (21% of facilities) were primarily satellite clinics, we assumed in the main analyses that these facilities did not have rotating trainees and therefore were not academically affiliated. In the first additional model, we restricted the analysis to facilities that had data available on academic affiliation. In the second additional model, we assumed that all the facilities that were missing facility academic affiliation data were in fact academically affiliated.
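The three treatments of missing facility academic affiliation can be expressed as a single recoding step. The Python sketch below is illustrative only; the function name, the scenario labels, and the use of `None` for missing values are assumptions, not the authors’ code.

```python
from typing import Optional


def recode_affiliation(value: Optional[bool], scenario: str) -> Optional[bool]:
    """Recode facility academic affiliation under one of three scenarios.

    "main":     missing is treated as not academically affiliated
    "complete": missing stays None so that the facility can be excluded
    "all_yes":  missing is treated as academically affiliated
    """
    if value is not None:
        return value  # observed values are never altered
    if scenario == "main":
        return False
    if scenario == "complete":
        return None
    if scenario == "all_yes":
        return True
    raise ValueError(f"unknown scenario: {scenario}")
```

Refitting the same model under each scenario shows whether a conclusion depends on how the missing values were handled.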

RESULTS

Physician and Facility Characteristics

Table 2 displays the physicians’ and facilities’ characteristics (weighted). Of the eligible sample, 73% reported “always” using at least some CCRs, 18% reported “sometimes” using CCRs, and 9% reported only “occasionally” or “rarely” using CCRs. The 5% of respondents who reported that they had “never” used a VHA CCR were ineligible and are not included in these percentages. Our clusters of physicians within facilities were small (87% of sites had 3 or fewer physicians represented).

Table 2.

Physician and Facility Characteristics

| Characteristics of physician and facility | |
|---|---|
| Physician-level categorical variables | Weighted frequency (%) N = 461 |
| Length of VHA service* | |
| <5 years | 47% |
| 5 to 9 years | 23% |
| 10 to 14 years | 11% |
| ≥15 years | 18% |
| Missing | 0% |
| Specialty | |
| Internal medicine | 82% |
| Geriatrics | 7% |
| Family practice | 11% |
| Male gender | 59% |
| Missing | 0% |
| Has academic appointment | 55% |
| Missing | 3% |
| Affiliated with an oversampled site (Greater Los Angeles, Cincinnati, Indianapolis, and Minneapolis) | |
| Yes | 21% |
| No | 79% |
| Physician-level continuous variables | Median (IQR) |
| Number of half-days of direct patient care | 9 (5–10) |
| Missing | 0.43% |
| Years since medical school graduation | 19 (11–27) |
| Missing | 0.21% |
| Facility-level categorical variables | Frequency (%) N = 197 |
| Academic affiliation* | |
| Yes | 61% |
| Missing | 21% |
| Located in metropolitan area | 81% |
| Missing | 0% |
| Facility-level continuous variables | Median (IQR) |
| Number of primary care visits (FY’04) | 148,000 (65,000–296,000) |
| Missing | 1.02% |

*Percentages for this variable do not sum to 100% because of rounding.

PCPs’ Perceptions of CCRs

Figure 1 shows that the global assessment of CCRs was in the midrange and that the clinical/situational specificity items received the lowest overall ratings. Table 1 summarizes responses to the individual survey items. Higher responses suggest a more favorable attitude toward CCRs (0 = strongly disagree to 100 = strongly agree).

Figure 1.

VHA primary care physicians’ perceptions of computerized clinical reminders. 0 = Least favorable perception of CCR, 100 = most favorable perception of CCR, CCR = computerized clinical reminder, VHA = Veterans Health Administration

Only the clinical/situational specificity scale differed depending on the data collection method (p = .044). PCPs who responded by telephone had lower clinical/situational specificity ratings (median 25, n = 59) than Web-based (29.2, n = 307) or paper questionnaire (33.3, n = 95) respondents.

Predictors of Higher Global Assessment of CCRs

Main Analyses

Table 3 presents the results of our HLM model that predicted global assessment of CCRs. Adjusting for all the covariables listed in Table 3, the intraclass (intrafacility) correlation was 3.8%. Thus, variation in PCPs’ global assessment of CCRs within facilities greatly outweighed its variation across facilities. Among the facility-level characteristics, facilities in the west compared to the south viewed CCRs less favorably (p = .033); other regions and urban location were not significant.
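For reference, the intraclass correlation in a random-intercept model is conventionally defined as the share of residual variance attributable to differences between clusters. In the notation below, which is introduced here for illustration, $\sigma_u^2$ is the between-facility variance and $\sigma_\varepsilon^2$ is the within-facility (between-physician) variance:

$$
\rho = \frac{\sigma_u^2}{\sigma_u^2 + \sigma_\varepsilon^2}
$$

Under this definition, $\rho = 0.038$ means that about 96% of the residual variation lies among physicians within the same facility rather than between facilities.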

Of the physician-level characteristics, PCPs’ academic affiliation was associated with more favorable global assessment (p = .045). Gender, type of primary care specialty, number of half-days of clinic per week, number of years in practice, and tenure in the VHA were not statistically significant predictors. The physicians’ method of responding to our survey was not significantly associated with global assessment.

Higher responses on the following scales were predictive of more favorable global assessment of CCRs: self-efficacy (p < .001), integration with workflow/workload (p < .001), training (p < .001), and design/interface (p < .001).

Sensitivity Analyses

When we limited our analysis to cases with known facility academic affiliation data, physicians’ academic affiliation (p = .09) and facilities in the west (p = .12) no longer reached conventional levels of statistical significance, but number of years in practice became a significant predictor of more favorable global assessment (p = .044). In another analysis, we assumed that all of the facilities with missing academic affiliation data had an academic affiliation. In this analysis, physicians’ academic affiliation (p = .051) no longer reached statistical significance, whereas west region remained a significant predictor (p = .032). Self-efficacy (p < .001), integration with workflow/workload (p < .001), training (p < .001), and design/interface (p < .001) remained significant predictors in both sensitivity analyses.

DISCUSSION

This study of PCPs’ perceptions of CCRs demonstrates that there is room for improvement in each of the domains we surveyed. PCPs did not rate the CCRs extremely low in any dimension, but with the exception of items related to the PCPs’ computer skills, no item received very strong ratings either. As for physicians’ global assessment of CCRs, the rating was only in the midrange. Our findings about VHA CCR usability and PCPs’ global assessment of CCRs provide insight into ways to improve CCRs.

The VHA has invested a large amount of resources into the development and implementation of CCRs.20 VHA PCPs are often expected to routinely use CCRs to improve quality of care. Many U.S.-trained clinicians’ earliest experiences with CCRs will come from their VHA experiences, and the VHA’s EHR and CCR system is often held up as a model for other integrated systems.21 Whereas prior studies have assessed CCR effectiveness, a broad-based end-users’ evaluation of usability, usefulness, and overall satisfaction with the VHA’s CCRs has not been conducted. Our results are consistent with a recent study of IT clinical support for quality improvement that identified opportunities to enhance the effective use of IT to support clinical decision making.14

The lowest ratings were related to clinical specificity/appropriateness, suggesting that improved specificity, perhaps by adding options that enable the PCP to resolve the CCR more appropriately (e.g., “not applicable,” “pending”) or modifying the algorithms used to trigger the CCRs, could improve end-users’ experience. An example of poor clinical specificity is a hepatitis C CCR that provides no option to document that the patient does not have hepatitis C. Saleem et al. reported similar findings about the lack of flexibility of CCRs in their study that used direct observation to explore barriers to CCR use.16 In another study of non-VHA clinical reminders, end-users provided feedback about the lack of relevance of some of the clinical reminders (e.g., the provider had previously accepted the advice recommended by the CCR).22 Some may be concerned that making CCRs more flexible will provide PCPs with an excuse to dismiss the CCRs inappropriately. However, whether CCRs in their current format may at times inadvertently encourage overuse or misuse of care is also an important concern. A recent paper described occurrences within the VHA of prostate cancer screening unsupported by guidelines.23 Our study was not designed to assess the contribution of CCRs to these occurrences, but the relationship should be investigated.

Our findings that integration into workflow/workload ratings are only in the midrange suggest that this is another area that warrants attention. A diabetic eye-screening CCR that prompts the PCP to assess for recent fundus examination despite documentation of an upcoming eye clinic appointment in the patient’s electronic chart represents one opportunity for better integration with workflow/workload. In addition, there is growing evidence that primary care encounters require reconciliation of an ever-increasing number of competing demands; for complex patients, the amount of time available to respond to all of the patient’s needs may not be sufficient in a typical primary care encounter.24–27 A study of predictors of CCR use in 8 VHA facilities found strong association between the use of support staff to complete processes of care and higher rates of CCR completion.28 Our findings support the need for more usability testing and refinement of CCRs. Strategies that do not rely solely on the one-on-one clinician–patient encounter to improve care (e.g., group visits or standing orders for non-PCPs to complete CCRs such as counseling for hypertensive patients) may enhance workflow/workload.29

A secondary objective of our study was to identify factors that predict higher global ratings of CCRs. Integration with workflow/workload was a strong predictor, as was better CCR design/interface. The latter suggests that greater attention to the CCR interface should be considered in CCR development, a finding echoed in a study that used direct observation methods.16 More positive ratings related to sources of training and having greater confidence in using CCRs on VHA computers also predicted higher global ratings. A recent study also found that providers at facilities with a cooperative culture rated the implementation of IT support for quality improvement more highly.14 Whether improvements in each of these areas would lead to higher global ratings should be investigated using prospective designs. Physicians’ academic affiliation predicted higher global ratings, and lower global ratings were associated with working in a facility in the west compared with the south, even when adjusting for other physician- and facility-level factors. We do not have definitive explanations for these findings. Perhaps CCRs are primarily developed by academically affiliated physicians, and unmeasured differences in organizational culture, the CCR development process, and end-users’ expectations of CCR systems might explain our findings.

Several study limitations should be noted. Because facilities can develop and implement their own CCRs, PCPs may have been exposed to a variety of types of CCRs and had different types of CCRs in mind when responding to our survey. However, we accounted for clustering at the facility level in our analyses. We assessed perceptions of the overall CCR process rather than actual use or each step in the CCR process because such detailed assessment would have greatly increased survey respondent burden and a related study that used direct observation was underway to answer this question.16 We did not measure the clinical complexity of each PCP’s practice; it is possible that an interaction exists between clinical complexity and PCPs’ attitudes toward CCRs. We surveyed PCPs only and excluded residents; other clinicians may have different perceptions of the VHA’s CCRs. The VHA’s CCRs, performance measures, culture, and incentive structure may differ from other health care systems, limiting the generalizability of our results.

End-users’ assessment of automated decision support tools such as CCRs is an important step to improving these tools. Whereas facilities may receive informal feedback from end-users, a systematic assessment can identify areas that are problematic across facilities. For example, we noted that CCRs could be improved by making them more specific to various clinical scenarios, thus calling for more extensive usability testing during development and implementation. Also, our results suggest that integration of CCRs with workload and workflow may be improved. Future studies should investigate methods of facilitating CCR completion, such as extending visit length, using group visits and standing orders that can be executed by nonphysician staff, and increasing efficiency of the physician–patient interaction by preparing patients previsit about CCR-related topics.

Acknowledgements

The authors are grateful to Barbara Simon, MA for her assistance with the survey, Mingming Wang, MPH for her assistance in programming the facility data, and Joya Golden for her administrative support of the project. This research was supported by the Department of Veterans Affairs, Veterans Health Administration, Health Services Research and Development Service (TRX 02-216). A VA HSR&D Advanced Career Development Award supported Dr. Asch, and a VA HSR&D Merit Review Entry Program Award supported Dr. Patterson. The views expressed in this article are those of the authors and do not necessarily represent the view of the Department of Veterans Affairs.

Conflict of Interest Statement

Drs. Glassman, Asch, and Doebbeling are staff physicians at their respective VHA facilities. After completing this project, Dr. Fung became an employee at Zynx Health Incorporated. Dr. Fung was a staff physician at a VHA facility during the study.

References

1. Shea S, DuMouchel W, Bahamonde L. A meta-analysis of 16 randomized controlled trials to evaluate computer-based clinical reminder systems for preventive care in the ambulatory setting. J Am Med Inform Assoc. 1996;3(6):399–409.

2. Balas EA, Weingarten S, Garb CT, Blumenthal D, Boren SA, Brown GD. Improving preventive care by prompting physicians. Arch Intern Med. 2000;160(3):301–8.

3. Cannon DS, Allen SN. A comparison of the effects of computer and manual reminders on compliance with a mental health clinical practice guideline. J Am Med Inform Assoc. 2000;7(2):196–203.

4. Hoch I, Heymann AD, Kurman I, Valinsky LJ, Chodick G, Shalev V. Countrywide computer alerts to community physicians improve potassium testing in patients receiving diuretics. J Am Med Inform Assoc. 2003;10(6):541–6.

5. Dexter PR, Wolinsky FD, Gramelspacher GP, et al. Effectiveness of computer-generated reminders for increasing discussions about advance directives and completion of advance directive forms: a randomized, controlled trial. Ann Intern Med. 1998;128(2):102–110.

6. Dexter PR, Perkins S, Overhage JM, Maharry K, Kohler RB, McDonald CJ. A computerized reminder system to increase the use of preventive care for hospitalized patients. N Engl J Med. 2001;345(13):965–70.

7. Wagner EH, Austin BT, Von Korff M. Organizing care for patients with chronic illness. Milbank Q. 1996;74(4):511–44.

8. Sequist TD, Gandhi TK, Karson AS, et al. A randomized trial of electronic clinical reminders to improve quality of care for diabetes and coronary artery disease. J Am Med Inform Assoc. 2005;12(4):431–7.

9. Patterson ES, Nguyen AD, Halloran JP, Asch SM. Human factors barriers to the effective use of ten HIV clinical reminders. J Am Med Inform Assoc. 2004;11(1):50–9.

10. Perlin JB, Kolodner RM, Roswell RH. The Veterans Health Administration: quality, value, accountability, and information as transforming strategies for patient-centered care. Am J Manag Care. 2004;10(11 Pt 2):828–36.

11. Demakis JG, Beauchamp C, Cull WL, et al. Improving residents' compliance with standards of ambulatory care: results from the VA Cooperative Study on Computerized Reminders. JAMA. 2000;284(11):1411–6.

12. Fung CH, Woods JN, Asch SM, Glassman P, Doebbeling BN. Variation in implementation and use of computerized clinical reminders in an integrated healthcare system. Am J Manag Care. 2004;10(11 Pt 2):878–85.

13. Agrawal A, Mayo-Smith MF. Adherence to computerized clinical reminders in a large healthcare delivery network. Medinfo. 2004;11(Pt 1):111–4.

14. Doebbeling BN, Vaughn TE, McCoy KD, Glassman P. Informatics Implementation in the Veterans Health Administration (VHA) healthcare system to improve quality of care. AMIA Annu Symp Proc. 2006:204–8.

15. Weeks WB, Yano EM, Rubenstein LV. Primary care practice management in rural and urban Veterans Health Administration settings. J Rural Health. 2002;18(2):298–303.

16. Saleem JJ, Patterson ES, Militello L, Render ML, Orshansky G, Asch SM. Exploring barriers and facilitators to the use of computerized clinical reminders. J Am Med Inform Assoc. 2005;12(4):438–47.

17. Web-based user interface evaluation with questionnaires. http://www1.acm.org/perlman/question.cgi. Accessed August 23, 2006.

18. Yano EM, Simon B, Canelo I, Mittman B, Rubenstein LV. 1999 VHA survey of primary care practices. VA HSR&D Center of Excellence for the Study of Healthcare Provider Behavior; 2000. Report No.: 00-MC12.

19. Yano EM, Asch SM, Phillips B, Anaya H, Bowman C, Chang S, Bozzette S. Organization and management of care for military veterans with HIV/AIDS in Department of Veterans Affairs Medical Centers. Mil Med. 2005;170(11):952–9.

20. McClure DL. Veterans Affairs: Subcommittee Questions Concerning the Department’s Information Technology Program. http://www.gao.gov/new.items/d01691r.pdf. Accessed April 10, 2007.

21. Gaul GM. Revamped Veterans’ Health Care Now a Model. Washington Post. 2005;Sect. A01.

22. Zheng K, Padman R, Johnson MP, Diamond HS. Understanding technology adoption in clinical care: clinician adoption behavior of a point-of-care reminder system. Int J Med Inform. 2005;74(7–8):535–43.

23. Walter LC, Bertenthal D, Lindquist K, Konety BR. PSA screening among elderly men with limited life expectancies. JAMA. 2006;296(19):2336–42.

24. Nutting PA, Rost K, Smith J, Werner JJ, Elliot C. Competing demands from physical problems. Arch Fam Med. 2000;9(10):1059–64.

25. Werner RM, Greenfield S, Fung CH, Turner BJ. Measuring quality of care in complex patients: findings from a conference organized by the Society of General Internal Medicine. http://www.sgim.org/PDF/SGIMReports/MeasuringQualityCareFinalReportOct42006.pdf. Accessed December 4, 2006.

26. Tai-Seale M, McGuire TG, Zhang W. Time allocation in primary care office visits. Health Serv Res. 2007;42(5):1871–94.

27. Werner RM, Asch DA. Clinical concerns about clinical performance measurement. Ann Fam Med. 2007;5(2):159–63.

28. Mayo-Smith MF, Agrawal A. Factors associated with improved completion of computerized clinical reminders across a large healthcare system. Int J Med Inform. 2007;76(10):710–6.

29. McGlynn EA. Intended and unintended consequences: what should we really worry about? Med Care. 2007;45(1):3–5.