Abstract

Background

Clinicians in ambulatory care settings are increasingly called upon to use health information technology (health IT) to improve practice efficiency and performance. Successful adoption of health IT requires an understanding of how clinical tasks and workflows will be affected; yet this has not been well described.

Objective

To describe how health IT functions within a clinical context.

Design

Qualitative study, using in-depth, semi-structured interviews.

Participants

Executives and staff at 4 community health centers, 3 health center networks, and 1 large primary care organization.

Approach

Transcribed audio-recorded interviews, analyzed using the constant comparative method.

Results

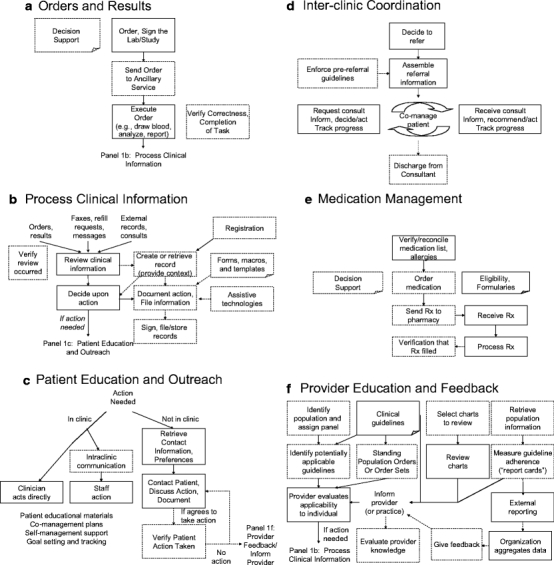

Systematic characterization of clinical context identified 6 primary clinical domains. These included results management, intra-clinic communication, patient education and outreach, inter-clinic coordination, medication management, and provider education and feedback. We generated clinical process diagrams to characterize these domains. Participants suggested that underlying workflows for these domains must be fully operational to ensure successful deployment of health IT.

Conclusions

Understanding the clinical context is a necessary precursor to successful deployment of health IT. Process diagrams can serve as the basis for EHR certification, to identify challenges, to measure health IT adoption, or to develop curricular content regarding the role of health IT in clinical practice.

KEY WORDS: ambulatory care, medical informatics, qualitative research, quality improvement

BACKGROUND

In 2001, the Institute of Medicine (IOM) reported that health information technology (health IT) is a critical component of planning for safe, effective, patient-centered, timely, efficient, and equitable care.1 Subsequently, adoption of health IT has become a national priority. Despite considerable efforts, adoption of ambulatory electronic health record (EHR) systems remains slow, especially among smaller practices, with efforts constrained by financial considerations and insufficient health IT knowledge and expertise.2–4

Even the measurement of such adoption has proven challenging because of the lack of an accepted definition of an EHR. Depending on the definition, adoption of ambulatory EHR systems may be as low as 9%.5 The IOM6 and Health Level Seven7 have made efforts to standardize descriptions of EHR systems, which have served as the foundation for the certification criteria for ambulatory EHR systems.8 However, these perspectives and the marketing efforts of EHR vendors have focused on product features such as medication management,9–12 and not on the broader clinical context of such systems.

Understanding the full clinical context for health IT to the level of tasks, resources, and workflows is a necessary prerequisite for successful adoption of health IT and measurement of its diffusion. The innovation diffusion literature suggests that future health IT users may have more confidence in peer-identified opportunities for application.13 Accordingly, we sought to draw from the experiences of ambulatory health centers to describe how their health IT solutions function within a clinical context.

METHODS

We conducted a qualitative study using in-depth interviews, an approach well suited to exploring organizational experiences with health IT.14,15 The study was approved by the Yale Human Investigation Committee.

Sample

The aim was to construct a sample that was diverse in pertinent characteristics and experiences, specifically sites that deployed different health IT solutions in the ambulatory care setting.16 We purposefully selected15 “national leaders” in use of vendor-supplied electronic health record systems, sites that primarily used registry systems, and sites that developed health IT solutions. Our sampling included both centers and supporting organizations to facilitate discussions among practices with differing levels of existing health IT infrastructure. We focused on community health centers and the organizations providing them with IT expertise because of their experiences reporting quality measures to the Health Disparities Collaboratives.17,18

Potential sites were identified with the assistance of experts from the Health Resources and Services Administration (HRSA), the National Association of Community Health Centers (NACHC), the Community Clinics Initiative of the Tides Foundation, the Community Health Care Association of New York State, and the Connecticut Primary Care Association.

Recruitment

From August 2006 through March 2007, organizations were invited to participate via phone or electronic mail. Organizations that expressed interest were sent an invitation letter, the interview guide, and a letter of support from NACHC. During on-site visits, we conducted in-depth, semi-structured interviews with organization executives. Visits lasted between 2 and 4 hours, and included product demonstrations. Interviews probed clinical uses of health IT, facilitators and barriers to adoption, and benefits and limitations of systems with respect to improving care. Interviews were audio-recorded, transcribed by an independent professional transcription service, and reviewed for accuracy. We enrolled sites and conducted site visits until theoretical saturation was reached.15,16

Analysis

Data were entered into ATLAS.ti 5.2.9 to facilitate data organization, review, and analysis. Coding and analysis were performed by a multidisciplinary team with expertise in medicine, health IT, public health, and qualitative analysis.19 Analysis was conducted throughout the data collection process, iteratively, so that analytic questions and insights informed subsequent data collection.20 An integrated approach to building the code structure was employed, which involved both inductive development from the data and a deductive organizing framework for code types.21 Using the constant comparative method,22 we examined coded text to identify novel ideas and confirm existing concepts, refining the codes as appropriate until “informational redundancy” occurred.23 Differences in independent coding were negotiated during group sessions, and codes were refined as needed.24,25 All data were consistently coded with the final, comprehensive code structure, and were synthesized to create a framework reflecting clinical context for health IT solutions. An audit trail was maintained to document analytic decisions.

We have verified our clinical domains and process diagrams with participating organizations, with health IT experts in one-on-one review sessions, and with community health center executives (for external confirmation).15,20,26

RESULTS

Eight of 10 invited sites agreed to participate. Participating organizations included 4 community health centers and 4 supporting organizations (3 health center networks and 1 private clinic) (Table 1). We completed 20 on-site, in-person interviews with 1 CEO, 1 COO, 4 CIOs, 4 medical directors, and 8 directors or staff responsible for systems implementation.

Table 1.

Characteristics of Participating Organizations

| Organization Number | Location | Sites | Visits/year |
|---|---|---|---|
| 1 | Middletown, CT | 10 sites, 95+ schools | 200,000+ |
| 2 | Napa, CA | 6 sites | 48,000 |
| 3 | Scott Depot, WV | 19 centers, 70 sites | ~400,000 |
| 4 | San Diego, CA | 15 sites | 300,000+ |
| 5 | Doral, FL | 26 centers, 163 sites | Almost 1,000,000 |
| 6 | New Haven, CT | 8 sites plus 8 schools | 162,000 |
| 7 | New York, NY | 10 sites | 120,000 |
| 8 | Palo Alto, CA | 6 locations | 750,000 |

Participants expressed that health IT systems facilitate and support existing clinical processes. Having workable processes was described as a necessary precondition for successful deployment, independent of solution. Table 2 summarizes the 6 primary clinical domains and the spectrum of health IT solutions described by participants. We detail these domains with clinical process diagrams, depicting tasks and workflows that are applicable independent of health IT (Fig. 1).

Table 2.

Primary Clinical Domains and Spectrum of Health IT Solutions

| Area of Health IT Use | Impact on Clinician Workflow: Minimal/None | Impact on Clinician Workflow: Some | Impact on Clinician Workflow: Significant |
|---|---|---|---|
| Orders, results, and results management | Results for ordered tests printed or faxed (paper-based) | Online order sets, online results for clinicians of ordered tests | Tools track and follow up preventive care needs, results, and outcomes |
| Intra-clinic communication | Providers and clinic staff communicate with e-mail, text messages; notes dictated | Clinical tasks assigned electronically, document imaging of paper notes (a.k.a., “Go paperless”) | Multidisciplinary coordinated care; documentation in structured, analyzable format |
| Patient education and outreach | Practice website with educational materials, automated reminders for appointments | Automatically generated forms/care plans (e.g., asthma action plan) | Clinical care managed between visits (includes goal-setting and tracking) |
| Inter-clinic coordination | Basic immunization records | Electronic referral paperwork | Referrals managed per electronically reinforced protocols; information exchanges |
| Medication management | Online drug reference | e-prescribing (with interaction checking), frequently used lists | e-prescribing with diagnosis- and patient-based decision support |
| Provider education and feedback | Web site links, online clinic policy manuals | Integrated or handheld reference materials, online training | Integrated electronic clinical decision support; report cards, assessments |

Figure 1.

Clinical process diagrams. In these diagrams, a rectangle represents a clinical task, a page represents information or resource, and an arrow represents existing workflow. If these symbols are dashed, it means that a study participant described using health IT to transform this task, resource, or workflow.

“If you can’t get a system to work on paper, making it electronic won’t make it any better.” — Medical Director

Clinical Domain 1: Orders, Results, and Results Management

Orders and Results

Orders written by providers are executed by ancillary services in many clinics. Participants noted that this creates the possibility of non-executed orders. Some orders may also take days to produce a result (e.g., blood cultures). Respondents reported a need to reliably identify patients and/or the orders to be executed at the ancillary site. They suggested that health IT can facilitate the identification process via bar codes, can support order transmission, can track whether orders are completed in a timely manner, and can provide decision support to promote evidence-based care.

Processing Clinical Information/Results Management

Once results or other clinical information become available (such as prescription refill requests, faxes/messages, external records, visit notes from Emergency Departments, consult notes), the health care team processes and acts on this information. Electronic records allow a patient’s health to be viewed longitudinally, or in the words of one respondent, you are “able to see... the whole person literally... no matter what the specific issue is that they are coming [in] for.”

“The technology... can really enhance what we do and help us keep track of what we do and particularly prevent the kinds of things that fall through the cracks when you are dealing with paper”—Chief Operating Officer

Health IT depends on a reliable workflow at the provider level. “So one example might be that these EHR’s send you... an email or an e-message... when you get your lab results back. And the management of that Inbox becomes a problem... where they delete the messages,... before they read them” (Senior Director). To address this, respondents suggest that health IT can also be used to collect usage information. Examples included: 1) checking if providers are reading lab result e-mails before deleting them, 2) comparing the number of electronic notes written to the number of visits, and 3) examining when health IT systems are accessed (e.g., registration, charting). These measurements can be used to target providers for special attention and training, and to identify practice inefficiencies.
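The usage measurements described above can be illustrated with a minimal sketch. It is not drawn from any participating site's system: the record layout (`ProviderUsage`), the function names, and the thresholds (`min_ratio`, `max_deleted_unread`) are all hypothetical, chosen only to show how a notes-to-visits comparison and a count of results deleted unread might be combined to target providers for training.

```python
from dataclasses import dataclass


@dataclass
class ProviderUsage:
    """Hypothetical per-provider usage counts for one reporting period."""
    provider_id: str
    visits: int                  # visits seen by the provider
    electronic_notes: int        # electronic notes written for those visits
    results_deleted_unread: int  # result messages deleted before being opened


def note_completion_ratio(u):
    """Electronic notes per visit, or None when there were no visits."""
    return u.electronic_notes / u.visits if u.visits else None


def flag_for_training(usage, min_ratio=0.9, max_deleted_unread=0):
    """Return provider IDs whose usage pattern suggests follow-up.

    Both thresholds are illustrative assumptions, not recommendations.
    """
    flagged = []
    for u in usage:
        ratio = note_completion_ratio(u)
        low_charting = ratio is not None and ratio < min_ratio
        if low_charting or u.results_deleted_unread > max_deleted_unread:
            flagged.append(u.provider_id)
    return flagged


usage = [
    ProviderUsage("A", visits=100, electronic_notes=98, results_deleted_unread=0),
    ProviderUsage("B", visits=80, electronic_notes=60, results_deleted_unread=0),
    ProviderUsage("C", visits=50, electronic_notes=50, results_deleted_unread=3),
]
flagged = flag_for_training(usage)
print(flagged)  # ['B', 'C']
```

In this sketch provider B is flagged for a low notes-to-visits ratio and provider C for deleting results unread; a real deployment would need to decide which audit events the EHR can actually supply.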

“What we’re really finding is that the sites really end up doing a lot more work... they realize there is a lot more there to do because they have the information right in front of them.”—Project Manager

Clinical Domain 2: Intra-clinic Communication

Intra-clinic communication includes communicating clinical tasks to other staff members, and documenting visits and actions taken. Many health IT systems facilitate intra-office communication through text messaging, electronic mail, or “assignable clinical tasks” within their electronic health record system. In one CIO’s words, this is “basically a replacement for the sticky notes syndrome.”

Most clinicians consider visit documentation (electronic charting) to be the primary advantage of electronic health record systems, using templates and macros to enter comprehensive notes. Some participants reported using assistive technologies such as voice recognition or smart pens in attempts to generate structured data while minimizing impact on providers.

“...the thing that they want is something that maximizes the amount of data that they can capture, but simultaneously minimizes the amount of time it takes to do it...”—CIO

Non-clinicians felt that increased charting did not necessarily improve clinical care, especially if free text was generated instead of the high-quality, structured data needed to drive quality improvement efforts. This tension may be related to the concurrent billing and medicolegal uses of the medical record identified by participants.

“I think physician charting is wrong... the level of detail at which some physicians are asked to capture the data way, way exceeds what is necessary for health IT to help them with their case load. Secondly, I think that the techniques for just-in-time harvesting out of direct text are going to get much better. So it is silly to temporarily retrain a whole staff of physicians to become [clerks]”—Senior Director

Clinical Domain 3: Patient Education and Outreach Services

All practices described a need to work with patients to take actions. In the clinic, these actions might be supported by patient educational materials, coordinated care plans, or self-management support, or all of the above. Outside the clinic, this involves telephone calls or mailings for medication recalls, appointment reminders, or to discuss abnormal lab results. One practice reported using health IT to support follow-up of these communications.

One specialized application of health IT used in two organizations is “self-management support.” Providers record specific, measurable goals with patients (e.g., exercise 20 minutes a day, 3 times a week), then track patient progress toward those goals across multiple visits. These tools facilitate goal reinforcement.

Clinical Domain 4: Inter-Clinic Coordination

Managing Referrals

Care coordination between the medical home and subspecialists was a frequently mentioned process. Health IT helps to organize the referral process by ensuring that the pre-referral workup is completed before the subspecialty visit, and by managing interactions between the subspecialist and the medical home. One system used algorithms to determine when subspecialist services were no longer needed. These strategies were felt to reduce unnecessary subspecialist visits, resulting in increased availability and decreased overall cost.

Optimizing Resource Use

Participants also described the ability for health IT to direct patients to the most efficient resources, such as an on-site pharmacy.

“The revenue from pharmacy services went up when they instituted e-prescribing, primarily because it was easier for the providers to send all the prescriptions to on-site pharmacy. So the prescription revenue went up significantly.”—Medical Director

Care Transition Management

Several practices mentioned using health IT to help manage care transitions with external providers, including Emergency Departments, schools, and dental clinics. This included keeping clinical information such as problem and medication lists synchronized. Good transition management was deemed necessary in the mobile, low-utilizing population served by these health centers. Efficient information exchange helps all providers to work in concert to address an individual’s health concerns.

Clinical Domain 5: Medication Management

Health IT can provide real-time decision support including automated interaction checking (drug–drug, drug–allergy, drug–diagnosis), and enhance prescription legibility and clarity. Respondents reported that upgrading prescription transmission capabilities (e.g., electronic transmission) requires simultaneous pharmacy involvement. One practice reported using medication dispensation data obtained from claims databases to improve their medication reconciliation and refill process.

Clinical Domain 6: Provider Education and Feedback

Participants reported a few basic strategies for improving care that we grouped as providing education, prospective feedback, and retrospective feedback.

Provider Education

Providers have extensive educational needs that can be addressed by health IT. Some practices make their clinic- and region-specific guidelines and protocols available online, whereas others make web-based informational resources available at the point-of-care (UpToDate™ was mentioned most frequently). Physicians may also use other informational resources such as journals, audio tapes, podcasts, or handheld reference materials. Finally, health IT can be used to verify that specific resources have been accessed, to assess proficiency, and to support continuing medical education efforts.

Prospective Feedback: Point-of-Care Reminders

With the encouragement of pay-for-performance, one of the desired ways to use health IT is to support preventive care and chronic disease management. Every office visit presents an opportunity to reinforce clinical guidelines to the health care team, by providing patient-specific recommendations at the point of care. However, participants suggested that point-of-care reminders in the form of pop-ups or alerts have well-documented problems, including provider alert fatigue. They felt that reminders needed to be accurate, with an ability to disable those not applicable to specific patients. One practice reported using health IT to create standing orders for a population (e.g., serum hemoglobin A1c for all patients that are due), eliminating the need to enter these orders individually.
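The population-level standing order mentioned above can be sketched as a simple "who is due" query. This is an illustration under stated assumptions: the function name `patients_due`, the input layout, and the 180-day interval are hypothetical and are not a clinical recommendation or any site's actual rule.

```python
from datetime import date, timedelta


def patients_due(last_test_dates, today, interval_days=180):
    """Return sorted IDs of patients with no test on record, or whose
    most recent test is older than the (assumed) interval."""
    due = []
    for patient_id, last in last_test_dates.items():
        if last is None or (today - last) > timedelta(days=interval_days):
            due.append(patient_id)
    return sorted(due)


# Hypothetical registry extract: patient ID -> date of most recent A1c.
last_a1c = {
    "p1": date(2007, 1, 15),  # recent, not due
    "p2": date(2006, 6, 1),   # older than the interval, due
    "p3": None,               # never tested, due
}
due_list = patients_due(last_a1c, today=date(2007, 3, 1))
print(due_list)  # ['p2', 'p3']
```

The resulting list could feed a single batch order instead of individual entries, which is the efficiency the practice described.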

Retrospective Feedback: Chart Review

As part of traditional quality improvement efforts, medical directors often supervise reviews of randomly selected charts, looking for documentation or medical errors. These reviews may not always reveal the true extent of chart deficiencies. With health IT, participants reported selecting charts purposefully (e.g., patients without a pain score documented), allowing more targeted feedback.

Reporting

Data stored in electronic medical record systems can be evaluated for compliance to clinical guidelines and quality metrics. However, before using health IT to report on performance, there are numerous key issues to address. These include guideline selection, data quality and maintenance, identity reconciliation, entering or migrating historical data, identification of the population being managed, assignment of provider panels, system configurability, and standardization of measures. These issues applied to efforts at all sites, irrespective of health IT deployed. We will discuss these prerequisites, and then describe the generation of provider-focused reports.

Specifying Guidelines/Outcome Measures

Participating organizations created clinical committees to select clinical guidelines to adopt and measure. These guidelines originated from the U.S. Preventive Services Task Force recommendations, Health Plan Employer Data and Information Set (HEDIS), practice-specific rules, and best-practice guidelines from specialty organizations such as the American Heart Association and the American Diabetes Association. Practices also created guidelines for other reporting requirements such as to the Health Disparities Collaboratives, and outcomes for research studies. Some practices also chose to create guidelines to measure compliance with documentation needs such as growth charts, vital signs, and developmental/screening assessments.

Identification of Population

The application of population-based guidelines and measurement is predicated upon being able to identify subpopulations of patients as meeting inclusion and exclusion criteria (e.g., patients with a chronic medical condition such as asthma). At one practice, patients were prospectively identified as being likely to have diabetes based on information already contained in their electronic chart (such as weight, random glucose level, and other laboratory results), which could be confirmed at future visits.

“Once you identify a patient as requiring follow-up, that is health IT at its best.”—Director of Quality

Data Quality/Maintenance

Practices identified data quality as a challenge to driving quality improvement efforts. Ideally, demographic and scheduling data come directly from the practice management system, with laboratory and pathology results interfacing directly into the registry system. In practice, these interfaces do not always exist, resulting in a highly manual and error-prone data entry process. One practice suggested that it is sufficient if the people using the data (e.g., case managers) also enter the data, as they develop a strong feeling of ownership. Although that strategy may be helpful, most practices reported difficulty keeping the data clean without significant time investment unless there were electronic interfaces with the practice management, laboratory, and pathology systems.

Identity Reconciliation

Individuals must be uniquely identifiable so that their information can be reliably collected. This demographic information is often stored in the practice management system, and sophisticated algorithms may sometimes be employed to automate the process of identity reconciliation using social security number, address, birth date, and insurance information. A manual reconciliation process can follow when identity remains ambiguous. This reconciliation process, along with consolidation of duplicate records (record de-duplication), allows one set of data to be uniquely associated with and analyzed for each patient. This is critical where decision support and quality measurements are concerned. Clinical information that is spread across disparate records results in erroneous calculations, clinical alerts, and reports.
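A minimal sketch of automated matching followed by manual review is given below. It is not the algorithm used at any site, which participants did not detail. The field weights, the two thresholds, and the function names are assumptions for illustration, and the records are fabricated test values rather than study data.

```python
# Hypothetical weights: stronger identifiers contribute more to the score.
FIELD_WEIGHTS = {"ssn": 3, "birth_date": 2, "address": 1, "insurance_id": 1}


def normalize(value):
    """Lower-case and keep only letters and digits; missing values become ''."""
    return "".join(ch for ch in (value or "").lower() if ch.isalnum())


def match_score(a, b):
    """Sum the weights of fields that are present and equal in both records."""
    score = 0
    for field, weight in FIELD_WEIGHTS.items():
        va, vb = normalize(a.get(field)), normalize(b.get(field))
        if va and va == vb:
            score += weight
    return score


def classify_pair(a, b, auto_merge=5, review=3):
    """Label a record pair 'same', 'manual_review', or 'different'.

    Thresholds are illustrative; ambiguous pairs go to a person.
    """
    score = match_score(a, b)
    if score >= auto_merge:
        return "same"
    if score >= review:
        return "manual_review"
    return "different"


rec1 = {"ssn": "000-00-0001", "birth_date": "1970-01-02",
        "address": "1 Main St.", "insurance_id": "X1"}
rec2 = {"ssn": "000000001", "birth_date": "1970-01-02",
        "address": "1 main st", "insurance_id": None}
rec3 = {"ssn": None, "birth_date": "1970-01-02",
        "address": "1 Main St", "insurance_id": "Z9"}
rec4 = {"birth_date": "1985-05-05"}

print(classify_pair(rec1, rec2))  # same
print(classify_pair(rec1, rec3))  # manual_review
print(classify_pair(rec1, rec4))  # different
```

Pairs labeled "same" would then be candidates for record de-duplication, while the "manual_review" band corresponds to the manual reconciliation step described above.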

Entering (or Migrating) Historical Data

With some clinical guidelines, historical data may be necessary to determine compliance. For example, if cervical cancer screening rates are desired and the health IT system has been recently installed, practices reported that a strategy is needed to account for Pap smears from the previous 3 years.27 Supporting guidelines that require historical data may necessitate a substantial amount of data entry before the benefits of accurate reporting and decision support are realized. Participants mentioned this as a frequently missed consideration. They also reported frustration that historical data stored in electronic “interim solutions” was rarely transferable to new systems.

Assignment of Provider Panels

Although the medical home is at the level of the practice, internal reporting can be at the level of the provider or team, necessitating conventions for assigning patients to provider or team panels. One practice used health IT to determine which provider evaluated a given patient most often, assigning the patient to that provider’s panel.

“Part of the challenge... is, with the open access system... how do you empanel providers and patients... [where] it’s fair to say... the results for this patient will be reflective of this care team.”—CEO
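The "most frequent provider" convention reported by one practice can be sketched as follows. The function name `assign_panels`, the visit tuple layout, and the tie-breaking rule (prefer the provider seen most recently) are assumptions added for illustration; the source does not say how ties were handled.

```python
from collections import Counter


def assign_panels(visits):
    """Assign each patient to the provider who saw them most often.

    visits: iterable of (patient_id, provider_id, visit_date) tuples,
    with dates as ISO 'YYYY-MM-DD' strings so they sort chronologically.
    Ties are broken by most recent visit (an assumed convention).
    """
    counts = {}   # patient -> Counter of provider visit counts
    latest = {}   # (patient, provider) -> most recent visit date
    for patient, provider, visit_date in visits:
        counts.setdefault(patient, Counter())[provider] += 1
        key = (patient, provider)
        if key not in latest or visit_date > latest[key]:
            latest[key] = visit_date

    panels = {}
    for patient, counter in counts.items():
        panels[patient] = max(
            counter,
            key=lambda prov: (counter[prov], latest[(patient, prov)]),
        )
    return panels


visits = [
    ("p1", "drA", "2006-01-10"),
    ("p1", "drA", "2006-05-02"),
    ("p1", "drB", "2006-09-15"),
    ("p2", "drA", "2006-02-01"),
    ("p2", "drB", "2006-11-20"),
]
panels = assign_panels(visits)
print(panels)  # {'p1': 'drA', 'p2': 'drB'}
```

As the CEO's comment suggests, any such rule is a convention: in an open access system a patient's results may still reflect the work of several team members.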

System Configurability

Most practices found it necessary for decision support and data to be configurable, so that they would not have to undergo vendor release cycles to make minor changes. This allowed the flexibility to add support for new guidelines in a timely, independent manner.

Standardization of Measures

Some practices identified difficulties in comparing their performance to other practices because of a lack of equivalence of information being reported, and because data were not interoperable and could not be aggregated.

Generating Reports

The practices reported that functions required for retrospective provider feedback and for external reporting are typically not core functionality in EHR products. As a result, most practices reported having used chart abstraction with tabulation, database reports, spreadsheets, and registry systems.

“We actually had an electronic medical record system... one of the things we learned is that that system made a beautiful note, but it did nothing for quality of care. This system... helps us a lot for quality care although does not make pretty notes but we know that the quality is the reason we are doing this.”

Some practices described creating provider “report cards.” In these practices, clinical data are extracted from the practice management system (diagnosis codes, demographics, schedule), a chronic disease registry, and/or an EHR system (laboratory and other results). These fields are normalized and stored in a secondary database known as a data warehouse. From the warehouse, reports measuring conformance to guidelines (e.g., what percentage of my patients have a hemoglobin A1c over 8.0) are used for provider feedback. They are also used to identify patients most refractory to treatment, allowing for intensive case management resources to target patients needing the most help.
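A report-card measure of this kind can be sketched in a few lines once panels and latest results are available from the warehouse. The function name `a1c_report`, the input dictionaries, and the choice to exclude patients with no result from the denominator are assumptions for illustration; the 8.0 threshold is taken from the example above.

```python
def a1c_report(latest_a1c, panels, threshold=8.0):
    """Percentage of each provider's measured patients whose latest
    hemoglobin A1c exceeds the threshold.

    latest_a1c: patient ID -> most recent A1c value, or None if unmeasured
    panels:     patient ID -> provider ID
    Patients without a value are left out of the denominator (an assumption).
    """
    totals, above = {}, {}
    for patient, provider in panels.items():
        value = latest_a1c.get(patient)
        if value is None:
            continue
        totals[provider] = totals.get(provider, 0) + 1
        if value > threshold:
            above[provider] = above.get(provider, 0) + 1
    return {
        provider: round(100.0 * above.get(provider, 0) / n, 1)
        for provider, n in totals.items()
    }


# Hypothetical warehouse extract.
panels = {"p1": "drA", "p2": "drA", "p3": "drB", "p4": "drB"}
latest_a1c = {"p1": 7.2, "p2": 9.1, "p3": 8.0, "p4": None}

report = a1c_report(latest_a1c, panels)
print(report)  # {'drA': 50.0, 'drB': 0.0}
```

How unmeasured patients are counted changes the result, which is one reason the standardization of measures discussed earlier matters when practices compare performance.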

Practices also discussed reporting to external agencies. Standard data formats (e.g., standard vocabulary for chief complaint) and the ability to exchange data are keys to these efforts.

DISCUSSION

From the experiences of a diverse set of community health centers, we created process diagrams describing the clinical context in which health IT systems are used. These diagrams can serve as a framework for evaluation of EHR systems, or as the basis for use cases for EHR certification. They may also be used as the basis for comparison charts to help providers examine the advantages, disadvantages, and error-susceptible processes of different health IT solutions.

Our findings and associated diagrams can also be used to identify challenges to the effective clinical use of health IT. These challenges include effective documentation of visits, supporting patient outreach and education, and tracking and support for routine patient care and individual goals. Of note, the clinical process diagrams may also help vendors to design better products for the ambulatory care setting.

This study also has further educational and policy implications. From an educational standpoint, this work can be used to further efforts to design curricula about the role of health IT in clinical practice, and to plan how health IT might be used to actively evaluate provider conformance to practice standards. From a policy perspective, providers would benefit from consistent adoption of well-specified national guideline recommendations. Furthermore, as suggested by a recent AHRQ report,28 data specifications and analysis standards for these guidelines should be defined so that performance data can be reported uniformly, in a way that can be meaningfully compared across practices.

There are important limitations to our study. Despite our development of a purposeful sample with input from a variety of sources, the selected sites might not fully represent the existing spectrum of clinical uses of health IT in health centers. It is also possible that sites may have misrepresented their health IT capabilities. However, the on-site extensive data collection by interviewers with substantial IT and clinical expertise and direct observation minimized the likelihood of this occurring. We did not discuss respondents’ views of organizational readiness, change management, training, nonclinical uses, development and maintenance of IT infrastructure (off-site backups, downtime and disaster recovery strategies), privacy, security, or authentication.

Despite these limitations, the clinical process diagrams illuminate the basic issues that practices should consider when planning for new health IT systems. With these models, clinicians, informaticians, and industry will have a common frame of reference when discussing the health IT systems of the present and the future.

Acknowledgments

We would like to thank the Ethel Donaghue Center for Translating Research Into Practice and Policy for research support, and the Robert Wood Johnson Foundation for funding Dr. Leu and Ms. Webster’s time through the Clinical Scholars Program at Yale University. Dr. Bradley is supported by a Patrick and Catherine Weldon Donaghue Medical Research Foundation Investigator Award. We would also like to thank Community Health Center, Inc. for Mr. Cheung’s time.

The funding organizations, apart from the authors themselves, had no role in the design and conduct of the study; collection, management, analysis, and interpretation of the data; or preparation, review, or approval of the manuscript. Dr. Leu, Dr. Curry, and Dr. Burstin have had full access to all the data in the study and take responsibility for the integrity of the data and the accuracy of the data analysis.

We would like to acknowledge Dr. Harlan Krumholz for providing administrative oversight and for supporting this project, and would like to thank Saurish Bhattacharjee and the Human Investigation Committee for their support. We would like to thank our collaborators who helped us with selecting sites to visit, our interviewees at participating organizations, our reviewers, and our families for their ongoing support.

This research was presented as a poster at the National Association of Community Health Centers’ Community Health Institute and Expo, in Dallas, Texas, on August 28, 2007.

Conflict of Interest

None disclosed.

References

- 1. Institute of Medicine. Crossing the Quality Chasm: A New Health System for the 21st Century. Washington, DC: The National Academies Press; 2001.

- 2. Simon S, Kaushal R, Cleary PD, et al. Correlates of electronic health record adoption in office practices: a statewide survey. J Am Med Inform Assoc. 2007;14:110–17.

- 3. Shields A, Shin P, Leu M, et al. Adoption of health information technology in community health centers: results of a national survey. Health Aff. 2007;26:1373–83.

- 4. Bates DW. Physicians and ambulatory electronic health records. Health Aff. 2005;24:1180–9.

- 5. Jha AK, Ferris TG, Donelan K, et al. How common are electronic health records in the United States? A summary of the evidence. Health Aff. 2006;25:w496–507.

- 6. Institute of Medicine. Key Capabilities of an Electronic Health Record System. Washington, DC: The National Academies Press; 2003.

- 7. HL7 2007 EHR-S Functional Model. Ann Arbor, MI: Health Level Seven, Inc. Available at: http://www.hl7.org/documentcenter/public/standards/EHR_Functional_Model/R1/EHR_Functional_Model_R1_Final.zip. Accessed April 3, 2007.

- 8. Ambulatory EHR Functionality 2007 Final Criteria. Chicago, IL: Certification Commission for Healthcare Information Technology. Available at: http://www.cchit.org/work/criteria.htm. Accessed April 3, 2007.

- 9. Osheroff JA, Teich JM, Middleton BF, Steen EB, Wright A, Detmer DE. A Roadmap for National Action on Clinical Decision Support. Bethesda, MD: American Medical Informatics Association; 2006. Available at: http://www.amia.org/inside/initiatives/cds/cdsroadmap.pdf. Accessed April 28, 2007.

- 10. Bell DS, Cretin S, Marken RS, Landman AB. A conceptual framework for evaluating outpatient electronic prescribing systems based on their functional capabilities. J Am Med Inform Assoc. 2004;11:60–70.

- 11. Institute of Medicine. Preventing Medication Errors: Quality Chasm Series. Washington, DC: The National Academies Press; 2006.

- 12. Findings from the Evaluation of E-Prescribing Pilot Sites. Rockville, MD: AHRQ Publication No. 07-0047-EF, 2007.

- 13. Rogers EM. Diffusion of Innovations, 5th ed. New York, NY: Free Press (Simon & Schuster, Inc.); 2003.

- 14. Sofaer S. Qualitative methods: what are they and why use them. Health Serv Res. 1999;34:1101–18.

- 15. Patton M. Qualitative Evaluation and Research Methods, 3rd ed. Thousand Oaks, CA: Sage; 2002.

- 16. Morse JM. The significance of saturation. Qual Health Res. 1995;5:147–9.

- 17. Landon BE, Hicks LS, O’Malley AJ, Lieu TA, Keegan T, McNeil BJ, Guadagnoli E. Improving the management of chronic disease at community health centers. N Engl J Med. 2007;356:921–34.

- 18. Health Disparities Collaboratives. Rockville, MD: HRSA. Available at: http://www.healthdisparities.net/hdc/html/home.aspx. Accessed April 20, 2007.

- 19. Pope C, Mays N. Reaching the parts other methods cannot reach: an introduction to qualitative methods in health and health services research. BMJ. 1995;311:42–45.

- 20. Giacomini M, Cook D. Users’ guides to the medical literature: XXIII. Qualitative research in health care A. Are the results of the study valid. JAMA. 2000;284:357–62.

- 21. Bradley E, Curry L, Devers K. Qualitative Data Analysis for Health Services Research: Developing Taxonomy, Themes, and Theory. Health Serv Res. 2007;42(4):1758–72. doi:10.1111/j.1475-6773.2006.00684.x

- 22. Glaser BG, Strauss AL. The Discovery of Grounded Theory: Strategies for Qualitative Research. Chicago, IL: Aldine; 1967.

- 23. Lincoln YS, Guba EG. Naturalistic Inquiry. Beverly Hills, CA: Sage Publications; 1985.

- 24. Armstrong D, Gosling A, Weinman J, Marteau T. The place of inter-rater reliability in qualitative research: an empirical study. Sociology. 1997;31:597–606.

- 25. Morse JM. Designing funded qualitative research. In: Denzin NK, Lincoln YS, eds. Handbook of Qualitative Research. London, UK: Sage Publications; 1994:220–35.

- 26. Miles MB, Huberman AM. Qualitative Data Analysis: An Expanded Sourcebook, 2nd ed. Thousand Oaks, CA: Sage Publications; 1994.

- 27. U.S. Preventive Services Task Force. Screening for Cervical Cancer. Rockville, MD: AHRQ. Available at: http://www.ahrq.gov/clinic/uspstf/uspscerv.htm. Accessed April 20, 2007.

- 28. AHRQ Conference on Health Care Data Collection and Reporting. Rockville, MD: AHRQ Publication No. 07-0033-EF, 2007.