Abstract

Multiple factors are driving residency programs to explicitly address practice-based learning and improvement (PBLI), yet few information systems exist to facilitate such training. We developed, implemented, and evaluated a Web-based tool that provides Internal Medicine residents at the University of Virginia Health System with population-based reports about their ambulatory clinical experiences. Residents use Systems and Practice Analysis for Resident Competencies (SPARC) to identify potential areas for practice improvement. Thirty-three (65%) of 51 residents completed a survey assessing SPARC’s usefulness, with 94% agreeing that it was a useful educational tool. Twenty-six residents (51%) completed a before–after study indicating increased agreement (5-point Likert scale, with 5=strongly agree) with statements regarding confidence in ability to access population-based data about chronic disease management (mean [SD] 2.5 [1.2] vs. 4.5 [0.5], p < .001, sign test) and information comparing their practice style to that of their peers (2.2 [1.2] vs. 4.6 [0.5], p < .001).

KEY WORDS: practice-based learning and improvement, medical education, databases, quality improvement, information technology

INTRODUCTION

Although the fundamental principles of practice-based learning and improvement (PBLI) have been discussed for decades,1 the inclusion of PBLI as 1 of 6 core competencies specified by the Accreditation Council for Graduate Medical Education (ACGME) has sparked attention within residency programs across the United States. PBLI competence includes the ability to “analyze practice experience and perform practice-based improvement activities...”, and “obtain and use information about their own population of patients...”2 The potential value of data describing the clinical practice experiences of trainees is clear. In describing the necessary elements to facilitate PBLI training, Manning includes “a database making it possible to study practice” and “the opportunity to discuss practice data with colleagues”.3 If competence is a “habit”,4,5 then use of these databases coupled with multiple occasions to discuss the findings will be important components of a PBLI curriculum. Ogrinc et al.6 note that access to health data for setting quality improvement priorities facilitates PBLI teaching. Most clinical information systems, however, are optimized to access individual patient records; although it may be possible to use them to “study practice”, it is not easy.

We previously conducted a needs assessment and created a Web-based prototype with mock population-based reports describing residents’ panel patients.7 In this paper, we describe the development, implementation, and evaluation of an innovative tool to facilitate resident PBLI learning by providing aggregate views of ambulatory clinical practice experiences.

AIMS

Our project included 3 aims: (1) develop an information system to support PBLI training by providing aggregate patient data to residents, (2) introduce this tool into the ambulatory curriculum of our Internal Medicine residency program, and (3) evaluate the tool’s usefulness and impact on short-term learning.

SETTING

UMA Clinic

The Internal Medicine (IM) residency program at the University of Virginia Health System (UVaHS) provides training for approximately 90 residents, each of whom cares for a panel of 50–80 ambulatory patients. The University Medical Associates (UMA) clinic serves as the primary care practice site for residents whose clinics are organized into firms with a dedicated attending physician.

Clinical Data Repository

The Clinical Data Repository (CDR) at the UVaHS is a Web-enabled, enterprise-wide data warehouse containing records on more than 900,000 patients and 12 million inpatient and outpatient encounters.8 The CDR integrates administrative (e.g., financials, claims) and clinical (e.g., laboratory results, medications) data from multiple UVaHS information systems, enabling authorized users to directly query the database using a locally developed interface. Patient identifiers are stored on a highly secure server requiring 2-factor authentication, and a separate server stores de-identified data. Although the CDR was created to facilitate clinical investigation, we have expanded its use to support quality assessment and education.9,10

PROGRAM DESCRIPTION

SPARC Development

The Systems and Practice Analysis for Resident Competencies (SPARC) application is a Web-based information system that provides de-identified population-based reports on residents’ patient panels. Our original specification was based on a needs assessment conducted with 14 Internal Medicine residents and several faculty members. Housestaff identified a variety of information that would be helpful for evaluating their clinical experiences, including demographic information for their patient panels and population-based disease screening and management reports with information about compliance with therapy.7 For SPARC, a physician informaticist (JL) and programmer (KS) worked with 2 IM faculty members (JV, MN) serving as domain experts to create the measurement reports and build a functional interface for a fully operational information system. We obtained periodic feedback from a larger group of stakeholders as needed.

Although the CDR contained most of the required data, we used additional sources to link patients to residents. Panel membership data were obtained from the clinic scheduling system, and we also drew data from a local diabetes registry managed within the resident clinic. This Microsoft Access database contains clinically rich data including measurements of blood pressure and body mass.

System development took 6 months, and required 0.3 full-time equivalents (FTE) of programmer time, 0.05 FTE of clinical faculty time, and 0.2 FTE of physician informaticist time. Development costs were low as we were able to use the hardware and software tools available through the CDR. Open-source tools were used extensively in the development of SPARC: data were linked and transformed with Perl and SQL and stored in a MySQL database on Linux-based machines running the Tomcat application server. Servlets were written in Java. JavaScript-enhanced Hypertext Markup Language (HTML) pages were dynamically generated with templates using Velocity.

The SPARC Tool

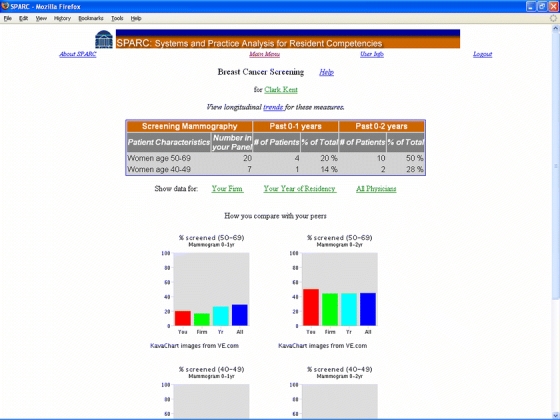

SPARC reports are divided into 3 categories: (1) aggregate reports of demographic and clinical patient characteristics; (2) preventive medicine reports on immunizations, cholesterol testing, and breast, colorectal, and cervical cancer screening; and (3) disease management reports for diabetes, congestive heart failure, and chronic obstructive pulmonary disease (see Fig. 1). Residents can compare their data with those of all residents in the same firm, all residents in the same training year, or all UMA residents and faculty.

Figure 1.

Breast Cancer Screening Report. Actual data for 1 disguised resident are shown, displaying the denominator for the particular measure and the number of patients who met the criteria for the numerator. Residents can view historic rates (updated quarterly) for their panel to assess changes over time.
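The numerator and denominator logic behind a screening report, and the peer comparisons it supports, can be illustrated with a minimal Python sketch. This is not SPARC's actual implementation (which used Java servlets over MySQL). The `PanelPatient` record, its field names, and the `compare_to_peers` helper are hypothetical names chosen for illustration, and the sketch assumes eligibility and screening status have already been determined for each patient.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PanelPatient:
    """One de-identified panel patient (all field names are hypothetical)."""
    resident_id: str  # de-identified key of the responsible resident
    firm: str         # firm label of that resident
    pgy: int          # training year of that resident
    eligible: bool    # True if the patient belongs in the denominator
    screened: bool    # True if the patient meets the numerator criteria


def screening_rate(patients):
    """Return (numerator, denominator, rate) for a group of patients.

    The rate is None when no patient is eligible, so that an empty
    denominator is never reported as 0%.
    """
    eligible = [p for p in patients if p.eligible]
    numerator = sum(1 for p in eligible if p.screened)
    denominator = len(eligible)
    rate = numerator / denominator if denominator else None
    return numerator, denominator, rate


def compare_to_peers(patients, resident_id):
    """Compare one resident's rate with firm, training-year, and clinic-wide rates."""
    own = [p for p in patients if p.resident_id == resident_id]
    if not own:
        raise ValueError(f"no panel patients found for {resident_id!r}")
    firm, pgy = own[0].firm, own[0].pgy
    return {
        "resident": screening_rate(own),
        "same_firm": screening_rate([p for p in patients if p.firm == firm]),
        "same_year": screening_rate([p for p in patients if p.pgy == pgy]),
        "all": screening_rate(patients),
    }
```

Returning the numerator and denominator alongside the rate mirrors the report layout in Fig. 1 and lets a reader judge how much weight a rate from a small panel deserves.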

SPARC includes reports of diagnoses commonly coded for each panel, based on ICD9-CM codes drawn from the CDR. To facilitate query and reporting in the CDR, we supplement the ICD9-CM hierarchy with the Clinical Classifications Software (CCS) categories.11 For SPARC, we needed a classification with fewer categories to allow easier comparison between residents. By elimination and aggregation, we reduced the number of CCS categories from 250 to 139. Users can “drill down” to the individual ICD9-CM codes that comprise each category.
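The aggregation and drill-down described above can be sketched in a few lines of Python. The mapping table below is a tiny illustrative stand-in, not the actual CCS grouping or SPARC's reduced 139-category scheme, and the function name `summarize_diagnoses` and the `"Unclassified"` fallback label are assumptions made for this example.

```python
from collections import Counter, defaultdict

# Illustrative mapping only: a real table would assign every ICD9-CM code
# to one category of the reduced classification.
CODE_TO_CATEGORY = {
    "250.00": "Diabetes mellitus",
    "250.02": "Diabetes mellitus",
    "401.9": "Hypertension",
    "428.0": "Heart failure",
}


def summarize_diagnoses(coded_diagnoses):
    """Group coded diagnoses by category and keep per-code counts.

    Returns {category: {"total": int, "codes": {code: count}}}. The "codes"
    entry is what a drill-down view would display for each category.
    """
    by_category = defaultdict(Counter)
    for code in coded_diagnoses:
        category = CODE_TO_CATEGORY.get(code, "Unclassified")
        by_category[category][code] += 1
    return {
        category: {"total": sum(codes.values()), "codes": dict(codes)}
        for category, codes in by_category.items()
    }
```

Keeping the per-code counts inside each category means the coarse view used for comparison between residents and the detailed drill-down view come from the same pass over the data.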

We provide detailed definitions for all measures in the tool to facilitate interpretation. As SPARC’s focus is to support PBLI learning rather than patient care, we display only de-identified data. If needed (e.g., for a focused chart review), residents may request identifiers through a separate, manual CDR process that releases them for quality improvement initiatives.

SPARC data are limited to events that occurred within UVaHS, and are typically 2–3 months out of date, primarily because of the associated lag time for data transfer to the CDR. Some of the desired data were unavailable within our institution, including prescription-related information.

Use in the Curriculum

Residents complete an 11-session systems-based practice and PBLI seminar series of graduated difficulty, delivered as a required small-group ambulatory block activity over the first 2 training years. In 3 PGY-1 year seminars, residents learn the principles of quality improvement, systems thinking, and human factor analysis. In 8 seminars spread over the PGY-2 year, residents complete an error investigation, conduct root cause analyses, discuss health policy, practice billing, and participate in a clinical health economics simulation.12 As a final curriculum experience, each resident uses SPARC to conduct a population-based analysis and design a quality improvement initiative. In this multipart exercise, faculty members introduce the principles of population-based medicine, and the SPARC project director, an informatics-trained physician, demonstrates SPARC. Trainees then use SPARC to analyze their practice data and identify specific strengths and weaknesses of their ambulatory practices. Based on the analysis, they prepare a process flow map and root cause analysis of a self-identified deficiency and develop a formal Quality Improvement (QI) proposal. The residents’ projects culminate in a presentation to their peers and a written 4- to 5-page report. In this first SPARC year, third year residents naïve to SPARC also performed the complete exercise described above, which had not been available to them as second year residents.

PROGRAM EVALUATION

Methods

To assess SPARC’s usefulness, residents received an anonymous Web-based survey 2 weeks after module completion. The survey used Likert-scale and open-ended questions to measure ease of use and the utility of the system as a teaching tool and for quality improvement. The impact of SPARC on PBLI learning is assessed primarily within pre-, mid-, and postcurriculum assessments of resident competency, which include a 33-item knowledge assessment and short-answer QI scenarios. The complete assessment package, including the SPARC subcomponent, is currently undergoing final-stage validation. For this report, we conducted an initial analysis of short-term learning from SPARC using an 8-item questionnaire (5-point Likert scale) targeting self-rated competence in specific PBLI tasks, administered before and after the module. Pre-post differences were analyzed using the distribution-free sign test. Study completers were compared to noncompleters with respect to gender and year of training (Pearson’s Chi-Square) and pretest scores (Mann–Whitney).
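For readers unfamiliar with the sign test, the following Python sketch shows one common form of it: an exact two-sided test based on the binomial distribution, with tied pairs dropped. The text above does not state whether an exact or approximate version was used or how ties were handled, so both choices here are assumptions, and the function name `sign_test` is hypothetical.

```python
from math import comb


def sign_test(pre, post):
    """Exact two-sided sign test for paired pre/post ratings.

    Returns (n_increased, n_decreased, p_value). Pairs with no change are
    dropped (an assumption; this is the conventional handling of ties).
    """
    if len(pre) != len(post):
        raise ValueError("pre and post must contain the same number of ratings")
    diffs = [after - before for before, after in zip(pre, post) if after != before]
    n = len(diffs)
    if n == 0:
        return 0, 0, 1.0  # no changes at all: no evidence of a shift
    n_increased = sum(1 for d in diffs if d > 0)
    n_decreased = n - n_increased
    k = min(n_increased, n_decreased)
    # Under the null hypothesis, increases and decreases are equally likely,
    # so the smaller count follows Binomial(n, 0.5).
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return n_increased, n_decreased, min(1.0, 2 * tail)
```

Because the test uses only the direction of each change and not its size, it is suited to ordinal Likert responses, where the distance between adjacent scale points cannot be assumed equal.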

RESULTS

During the 6-month intervention period, 51 of 61 second and third year residents participated in the curriculum. Thirty-three residents (65%) completed the usefulness survey. Twenty-six residents (51%) completed pre- and postquestionnaires. The remaining 25 trainees (noncompleters) submitted only the prequestionnaire.

Usefulness Survey

The 33 respondents included 16 second and 17 third year residents. Most respondents (94%) agreed that SPARC was a useful tool for learning about PBLI and would help them improve their patient care. Only 46% of respondents believed the data in SPARC “accurately reflect my practice”. Respondents described several perceived strengths of the system, including “eye-opening data documenting exactly where your practice is lacking” and “Ability to compare one’s practice with those of one’s peers... ” Respondents also identified weaknesses: “It was difficult to know how to interpret data for smaller numbers of patients...”, and “It assumes people get all their medical care at UVA and look at performance based on that.”

Impact on Learning

A comparison of pre- vs. postscores showed statistically significant (p < .001) increases on all 8 items of the instrument. Mean scores with standard deviations are shown in Table 1. Noncompleters were no different from study completers with regard to gender (p = .918, Pearson’s Chi-Square), year of training (p = .877, Pearson’s Chi-Square), or mean responses to the pretest questions (p = .312, Mann–Whitney).

Table 1.

Impact on PBLI Learning, Pre vs. Post (n = 26)

| Question | Pretest, mean (SD) | Posttest, mean (SD) |
|---|---|---|
| I feel confident in my ability to access population-based demographic data on my UMA patient panel (those patients for whom I am the PCP). | 2.2 (1.0) | 4.4 (0.6) |
| I feel confident in my ability to use local electronic databases to access population-based demographic data on my UMA patient panel. | 2.2 (1.2) | 4.4 (0.6) |
| I feel comfortable interpreting demographic data to understand important characteristics of the patients that I take care of. | 2.6 (1.2) | 4.4 (0.6) |
| I feel confident in my ability to access data about disease screening (e.g., mammography) rates in my UMA patient panel. | 2.6 (1.3) | 4.5 (0.5) |
| I feel confident in my ability to use electronic databases to access data about disease screening rates in my UMA patient panel. | 2.0 (1.1) | 4.5 (0.5) |
| I feel confident in my ability to access population-based data about chronic disease management (e.g., Type II DM) in my UMA patient panel. | 2.5 (1.2) | 4.5 (0.5) |
| I feel confident in my ability to use electronic databases to access population-based data about chronic disease management (e.g., Type II DM) in my UMA patient panel. | 2.3 (1.2) | 4.5 (0.5) |
| I can access information that compares my practice style (preventive health practices, disease management) to that of my peers. | 2.2 (1.2) | 4.6 (0.5) |

Using a 5-point Likert Scale (1=Strongly Disagree, 2=Somewhat Disagree, 3=Neutral, 4=Somewhat Agree, 5=Strongly Agree)

*All pre-post differences are significant at the p < .001 level (2-tailed) using the sign test.

DISCUSSION

We developed and implemented SPARC, a Web-based tool for residents to use to evaluate their outpatient continuity practice experiences. Data from our enterprise-wide CDR were supplemented with local data sources that link patients and residents and provide detailed clinical data for diabetic patients.

Residents found SPARC to be useful for learning about PBLI and believed it would improve the care they provide to their patients. Residents also reported increased confidence in their ability to conduct PBLI tasks. At the same time, they expressed skepticism about the accuracy of the data, recognizing the limitations of information gathered for administrative purposes. Although our results reached statistical significance, interpretation is limited by self-assessment bias, a substantial proportion of study noncompleters, and the lack of a control group. Most noncompleters were unable to complete the study because of scheduling conflicts. Our evaluation is also limited by the lack of long-term outcome data on resident knowledge and performance, which will be the focus of further study.

We focused our outcomes on resident learning instead of clinical outcomes for several reasons. The ultimate aim of PBLI is improved patient outcomes, but valid attribution of patient prevention or chronic illness outcomes to an individual resident’s work is confounded by a variety of issues. These factors include the validity of drawing conclusions based on small resident panel sizes and effects of time lags inherent in measuring an individual resident’s performance within a 3-year residency. PGY-1 resident outcomes may be contaminated by the prior resident’s contribution, whereas advanced residents may be close to program completion by the time they have accumulated enough valid individually attributable data, too late to use the information to effect change in many patient outcomes of interest. Future studies focused on short duration medical illness outcomes may remedy these limitations. Despite these problems, ambulatory profiling may be one of the best opportunities because episodes of care can be linked to a specific provider, unlike the current team-based inpatient setting where no resident can be held solely accountable.

Our findings may be especially useful for organizations that have existing clinical data warehouses or are seeking to justify their adoption. We used widely available electronic data combined with open-source tools that are easily available at no cost, increasing the likelihood that our tool can be adopted by others. Because of mounting pressure from professional societies, payors, and educational accreditation groups, residents need to acquire the skills and knowledge to regularly analyze and act upon their practice data. We believe tools like SPARC may be an important component of a PBLI curriculum that directly engages residents and helps them acquire these important skills.

Acknowledgments

This work was supported through an Academic Administrative Units in Primary Care grant funded by the DHHS Health Resources and Services Administration.

Conflict of Interest

None disclosed.

References

- 1. Moore DE Jr, Pennington FC. Practice-based learning and improvement. J Contin Educ Health Prof. 2003;23(Suppl 1):S73–80.

- 2. Accreditation Council for Graduate Medical Education. The ACGME outcome project. Available at: http://www.acgme.org/outcome/. Accessed August 21, 2007.

- 3. Manning PR. Practice-based learning and improvement: a dream that can become a reality. J Contin Educ Health Prof. 2003;23(Suppl 1):S6–9.

- 4. Epstein RM, Hundert EM. Defining and assessing professional competence. JAMA. 2002;287(2):226–35.

- 5. Leach DC. Competence is a habit. JAMA. 2002;287(2):243–4.

- 6. Ogrinc G, Headrick LA, Mutha S, Coleman MT, O’Donnell J, Miles PV. A framework for teaching medical students and residents about practice-based learning and improvement, synthesized from a literature review. Acad Med. 2003;78(7):748–56.

- 7. Schubart J, Lyman J, Scully K, Einbinder J, Knaus W. User-centered design of a Web-based tool for medical residency training. In: Barker P, Rebelsky S, eds. Proceedings of World Conference on Educational Multimedia, Hypermedia and Telecommunications. Chesapeake, VA: AACE; 2002:1758–63.

- 8. Einbinder JS, Scully KW, Pates RD, Schubart JR, Reynolds RE. Case study: a data warehouse for an academic medical center. J Healthc Inf Manag. 2001;15(2):165–75.

- 9. Lyman JA, Cohn W, Knaus W, Einbinder JS. Introducing an academic data warehouse into the undergraduate medical curriculum. Proc AMIA Symp. 2002:474–8.

- 10. Scully KW, Lyman JA, Stukenborg GJ. Improving quality measurement using multiple data sources. Proc AMIA Symp. 2003:1001.

- 11. Agency for Healthcare Research and Quality. Clinical classifications software (CCS) for ICD-9-CM. Available at: http://www.hcup-us.ahrq.gov/toolssoftware/ccs/ccs.jsp. Accessed August 20, 2007.

- 12. Voss JD, Nadkarni MM, Schectman JM. The clinical health economics system simulation (CHESS): a teaching tool for systems- and practice-based learning. Acad Med. 2005;80(2):129–34.