Abstract

Studies of spatial perception during visual saccades have demonstrated compressions of visual space around the saccade target. Here we psychophysically investigated perception of auditory space during rapid head turns, focusing on the “perisaccadic” interval. Using separate perceptual and behavioral response measures we show that spatial compression also occurs for rapid head movements, with the auditory spatial representation compressing by up to 50%. Similar to observations in the visual system, this occurred only when spatial locations were measured by using a perceptual response; it was absent for the behavioral measure involving a nose-pointing task. These findings parallel those observed in vision during saccades and suggest that a common neural mechanism may subserve these distortions of space in each modality.

Keywords: action and perception, auditory localization, head motion, saccades, spatial perception

Rapid movement of the head or eyes toward novel or attention-grabbing stimuli is a basic orienting mechanism, characterized behaviorally by a fast reaction time and a high rate of motion. Because the spatial acuity of the auditory and visual systems is sharpest in the frontal region, both senses rely on this mechanism to bring stimuli of interest into the high-resolution zone of the spatial representation. Even when not reflexively driven by novel stimuli, head and eye movements inevitably form part of normal exploratory behavior in visual and auditory environments. Studies have shown that the auditory system may use the dynamic acoustical cues produced by head motion to improve localization accuracy in stimulus elevation (1) and to resolve ambiguities in the binaural cues (2). Head motion, therefore, provides important information in creating an accurate auditory scene. An analogous behavior exists in vision, where the eyes saccade on average three times per second at high speed (>500°/sec), allowing the rapid foveation of visual stimuli within the field of view so that a detailed representation of visual space can be built up (3).

One key aspect of visual saccades is that they are rapid, ballistic movements. Although not all orienting head movements are ballistic, rapid or reflexive head turns do fall into this category. A rapidly rotating head may approach speeds of 500°/sec or so, and, given the significant mass of the head, the resulting high momentum of a head turning rapidly toward a target makes it effectively ballistic. Thus, rapid head turns and visual saccades share two common characteristics: both are orienting movements, and both are ballistic in nature. Interestingly, both kinds of movement also share a common neural substrate in the superior colliculus (SC), which is the key structure involved in the control of overt orienting of the eyes and head, as well as the covert orienting of spatial attention (4, 5). Many units in the deep layers of SC are bimodal audiovisual neurons with overlapping unimodal receptive fields (6, 7), ensuring a congruent spatial topography that allows orienting movements in both sensory modalities to work together.

In the visual domain, the perceptual consequences of visual saccades have been well studied. Psychophysical studies have demonstrated that, despite extremely fast retinal motion during saccades and an abrupt change in the scene imaged on the retina, visual stability can be preserved across the saccadic interval through two mechanisms: first, saccadic suppression (8, 9) and, second, anticipatory shifts of receptive-field location (10). Saccadic suppression refers to the strong attenuation of visual processing during a saccade, particularly of motion processing, so as to suppress visual transients and visual blurring that would otherwise swamp visual perception (8, 11). It is easily demonstrated by the fact that one cannot see one's own saccadic eye movements when looking into a mirror. There are also anticipatory shifts in receptive field position during saccades that occur in the “perisaccadic” interval, beginning ≈80 ms before the onset of the eye movement and lasting into the early portion of the saccade. These shifts have been well documented at various stages of visual processing and are thought to reveal the visual system in the process of remapping its spatial receptive fields to where they will need to be to maintain spatial correspondence after the saccade is executed (12, 13).

One consequence of anticipatory shifts in receptive field position is that they distort visual space considerably in the perisaccadic interval. During this interval, brief probe stimuli are misperceived as being closer to the saccade target than they actually are (14, 15). This effect is bidirectional: not only are locations between the original fixation point and the new one shifted in the direction of the saccade toward the new fixation point, but probes beyond the new fixation point are misperceived in the opposite direction, back toward the new fixation point. One curious aspect of spatial compression in saccades is that it is reported only when a “perceptual” measure is used to indicate location, as in a verbal report of the perceived location of the stimulus, and not when a behavioral “action” is used (e.g., physically pointing to the probe location) (16). Because rapid head motion and visual saccades are both important orienting behaviors that share a common neural substrate in the SC, the spatial distortions in visual perception observed during visual saccades may also occur in the auditory modality during rapid head motion.

In the auditory domain, whether rapid head turns would influence the stability of the acoustic image and localization accuracy analogously to what occurs in vision with saccades is an open question. It is clear that the same kinds of problem would arise in each modality. For example, transients and smearing could distort perception during rapid head turns, and spatial congruence must be maintained around the head-turn interval when head-defined receptive fields have been rapidly relocated. Given that both kinds of movement are rapid, ballistic orienting movements sharing a common neural substrate in the SC, it is possible that perceptual effects similar to those occurring in visual spatial perception during saccades would also occur during rapid head turns, although to date this issue has scarcely been addressed. One study, using behavioral action to localize sounds, showed that localization accuracy during head motion was comparable to conditions where the head remained stationary during stimulus presentation (17). Although this null result appears consistent with observations in the visual literature that visual distortions do not occur during saccades for action tasks, the more critical experiment would be to test whether spatial distortion effects do indeed occur for perceptual measures (as they do in vision). Another study examined a subject with congenital ophthalmoplegia who compensated for their lack of eye movements by using “head saccades” to perform visual scans (18), with no obvious adverse consequences for their auditory perception and with comparable localization accuracy between “head moving” and “head stationary” conditions. In this case, however, the subject's head-turn speed was slow relative to the average speed of visual saccades (≈50°/sec versus ≈400°/sec), so the results are not surprising.

In the present study, our aim is to test for distortions of auditory spatial perception during fast, saccade-like head turns (>200°/sec) and to measure performance in a perceptual paradigm designed to reveal any perceptual distortions of space. To relate our findings to these previous studies, performance will be compared in perception and action response paradigms. Briefly stated, the design involves subjects turning their heads rapidly to a target location and then localizing sounds that are presented at various spatial locations during the head turn.

Results

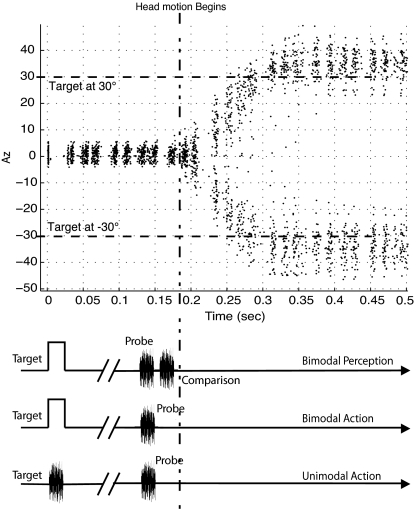

Data from the head tracker show that subjects' average head-turn speeds were much faster than in previous experiments (17, 18) with a mean velocity of 256°/sec and a maximum of 552°/sec, speeds that approach the eye movement speed in visual saccades (10). Reaction times to begin a head turn after the head-turn target was presented varied between 222 ms and 269 ms, which is similar to previous data (18).
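The speed figures above come from the head-tracker trace. As an illustration only, the following sketch shows one way such summary speeds could be derived from a sampled azimuth trace. The function names, the movement threshold, and the example trace are hypothetical. How the mean velocity was actually computed is not stated here, so averaging over samples above a threshold is an assumption. Only the 12-ms sampling interval is taken from the Methods.

```python
def angular_speeds(azimuth_trace, sample_period_ms=12.0):
    """Absolute angular speed (deg/sec) between consecutive tracker samples."""
    dt = sample_period_ms / 1000.0
    return [abs(b - a) / dt for a, b in zip(azimuth_trace, azimuth_trace[1:])]


def turn_speed_summary(azimuth_trace, moving_threshold_dps=20.0):
    """Return (mean, peak) speed over samples where the head is moving.

    moving_threshold_dps is an assumed cutoff that separates movement
    from rest; it is not a value taken from the study.
    """
    moving = [s for s in angular_speeds(azimuth_trace) if s > moving_threshold_dps]
    if not moving:
        return None
    return sum(moving) / len(moving), max(moving)


# Hypothetical trace (degrees azimuth), one value per 12-ms sample.
example_trace = [0.0, 0.0, 3.0, 9.0, 15.0, 21.0, 27.0, 30.0, 30.0]
mean_speed, peak_speed = turn_speed_summary(example_trace)
```

Finite differences over a 12-ms grid are coarse, so peak values obtained this way would be sensitive to tracker noise.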

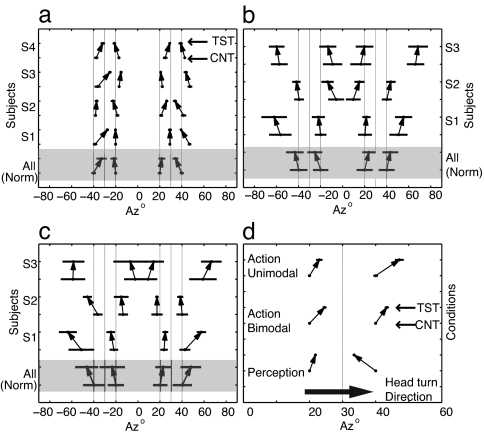

In the perception condition, subjects' responses were obtained by averaging the last 10 trials from two Quest staircases (see Methods). As shown in Fig. 1a, these responses varied over a narrow range—between 1° and 3°—indicating a high response confidence. Overall, subjects perceived the probe stimulus location accurately in the control condition (where head position remained stationary). The mean localization error varied between 1.6° ± 1° and 4.4° ± 4° and compared well with other studies on static sound localization (19). This was not the case, however, for test trials where the probe sound was presented just before a rapid head rotation. On these trials, probe localization data differed significantly from the control data for all four probe locations examined for subjects S2–S4 (±20° and ±40°) and for ±40° probes for subject S1 (one-way ANOVA, P < 0.005). Although localization was quite stable within subjects, there were slight localization biases across subjects, as indicated by an overall shift in the perceived location of both control and test stimuli. To remove this bias the data were normalized by shifting each subject's data so that the median localization position in the control condition was aligned with the actual probe position. These data were averaged across observers and are shown in the bottom (shaded) row of Fig. 1a. Probe localization between test and control trials for these averaged data was significantly different at all probe locations (one-way ANOVA, P < 0.0005). The difference between left and right hemisphere localization was not significant for either control or test conditions (P > 0.05).
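The bias normalization described above can be sketched as follows. This is a minimal sketch, not the analysis code used in the study: the function name and the response values are hypothetical, and it assumes that the shift is computed separately for each subject and probe location.

```python
from statistics import median


def normalize_subject(control, test, probe_deg):
    """Shift one subject's responses so the control median sits on the probe.

    control, test: perceived azimuths (degrees) for one probe location.
    probe_deg: actual probe azimuth (degrees).
    The same offset is removed from both conditions, so the difference
    between test and control responses is unchanged.
    """
    bias = median(control) - probe_deg
    return [r - bias for r in control], [r - bias for r in test]


# Hypothetical responses for a probe at +40 degrees (illustrative values only).
control = [43.0, 44.5, 42.0, 45.0, 43.5]
test = [38.0, 39.5, 37.0, 40.0, 38.5]
norm_control, norm_test = normalize_subject(control, test, 40.0)
```

Because one offset is applied to both conditions, the normalization removes a subject's constant bias without altering any test-versus-control shift.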

Fig. 1.

Data from the experimental conditions (a–c) and summary data (d). (a) Localization results comparing test and control conditions using the perception reporting paradigm. Each row shows the subjects' perceived probe location for the control condition followed by the test condition (control underneath test), joined by arrows. Results are shown for actual probe locations at ±40° and ±20° azimuth, with the head-turn target at ±30°, the arrows starting and ending at the means of the distributions, and the error bars representing the standard deviation. The x axis shows the azimuth, and the y axis shows the subjects. The bottom row (shaded) shows the normalized results. (b and c) Data gathered from subjects responding with the action paradigm, using bimodal (b) and unimodal (c) stimulus pairs, respectively, plotted in the same manner as in a. (d) Summary results quantifying the spatial compression after combining the normalized left- and right-hemisphere data by mirroring them about the midline at 0° azimuth. The y axis shows the results of the three conditions, and the x axis shows the azimuth with the direction of head turn indicated by the gray arrow and the head-turn target at 30°. The error bars represent the standard error of the mean. For the action-unimodal and action-bimodal conditions, subjects' perception of the probe stimuli (20° and 40° azimuth) was biased toward the direction of motion. This head-turn bias disappeared under the perception task, revealing a compression of the spatial percept.

To determine whether this difference between test and control conditions corresponded to a “compression” of auditory space around the head-turn target (analogous to visual spatial compression found during saccades), we devised a measure of spatial extent we called “perceptual width.” This is defined as the distance between the perceived locations for the 40° and 20° probes (right hemisphere) and between −40° and −20° probes (left hemisphere). If there were no distortion of auditory space, perception would be veridical and perceptual width would be 20° in each hemisphere. This is indeed the case in the control condition, where the mean perceptual width averaged over the left and right hemispheres was not significantly different from 20°, ranging from 18.2° ± 1.4° to 22.2° ± 1.5°. However, in the test condition, perceptual width decreased significantly to between 8.8° ± 1.6° and 16.5° ± 1.6°, indicating a compression of auditory space of between 29% and 52% relative to the control value. Across the subject group, auditory spatial compression averaged 41%.
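The perceptual-width measure and the resulting compression percentage can be written out explicitly. The sketch below is illustrative only: the function names are hypothetical, and the example values are round numbers chosen for clarity rather than data from the study.

```python
def perceptual_width(perceived_outer_deg, perceived_inner_deg):
    """Distance (degrees) between the perceived locations of the two probes
    in one hemisphere, e.g. the 40-degree and 20-degree probes."""
    return abs(perceived_outer_deg - perceived_inner_deg)


def compression_percent(control_width_deg, test_width_deg):
    """Reduction of perceptual width in the test condition, expressed as a
    percentage of the control width."""
    return 100.0 * (1.0 - test_width_deg / control_width_deg)


# Illustrative values: veridical control percepts, and test percepts that
# are both drawn 5 degrees toward a head-turn target at 30 degrees.
control_width = perceptual_width(40.0, 20.0)
test_width = perceptual_width(35.0, 25.0)
compression = compression_percent(control_width, test_width)
```

Note that a uniform shift of both probes in the same direction leaves the width unchanged, which is why this measure separates compression from a unidirectional bias.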

It is likely that accurate localization under dynamic conditions requires integrating information about head and body position. Previous studies have suggested that the vestibular system may influence localization accuracy, with stimuli mislocalized in the direction of motion during whole-body rotations (20, 21), whereas other studies have shown that head position relative to the body can also influence sound localization (22). Furthermore, perceptual overshoot has been demonstrated when the subject's head was kept stationary while the stimulus moved (23, 24). In the current experiment the stimuli were presented just before the head turn to ensure that the positions of the head and body were aligned, making it difficult to compare our results with these previous studies. However, it is clear that any systematic bias of this kind would be indicated by a unidirectional shift of the localization means for both the ±20° and ±40° probes in the direction of head motion, yet this pattern is not evident in our data. Instead, as shown in Fig. 1a, the slopes of the arrows connecting the probe localization means for control and test trials at ±20° and ±40° tilt in opposite directions, converging inward toward the head-turn target of ±30°. This bidirectional effect is indicative of spatial compression and is inconsistent with any unidirectional effect predicted by the head-turn direction. In particular, the clear inward slope of the outer arrows in Fig. 1a (at ±40°) is in the direction opposite to what would be expected of dynamic localization errors induced by head rotation. We conclude, therefore, that our perceptual report paradigm revealed spatial compression effects due to rapid, ballistic-like head motion.

To examine whether auditory spatial compression effects will generalize to other types of response measure, and to allow comparison of the present results with the existing literature, we repeated a very similar version of the experiment using a behavioral action measure requiring subjects to indicate sound probe locations using a nose-pointing gesture. We also compared head turns to visual targets (bimodal action condition) with head turns to auditory targets (unimodal action condition). Results for the bimodal-action experiment are shown in Fig. 1b, and several differences from the bimodal-perception data are apparent. First, the spread of probe localization responses was greater (standard deviations ranged from 4° to 13°), and, second, there was a general probe localization bias in the head-turn direction. For the normalized data the test and control conditions were different (P < 0.005) for all probe locations. In this case, and unlike the mislocalization reported in the perception-bimodal data, the biases consistently follow head-turn direction for stimuli on either side of the head-turn target (arrows in Fig. 1b point away from 0°). To test for any compression of auditory space in this condition, we followed the normalization procedure used in the bimodal-perception condition to align the data across subjects and then measured the perceptual width between the ±20° and ±40° probe locations. There was no significant spatial compression in this bimodal-action condition.

The same data analyses were conducted on the unimodal-action data (Fig. 1c). Overall, the data from the unimodal and bimodal action conditions were very similar. In the head-turn condition, there was again a general localization bias in the direction of head turn, and there was no significant compression of space (P > 0.50). There was no significant difference between unimodal and bimodal for probe localization in the control condition at any of the locations (one-way ANOVA, P > 0.50), showing that the sensory modality of the head-turn target is not a relevant factor. In contrast, when comparing the data between the action-bimodal and perception-bimodal experiments, there were highly significant differences (P < 0.0001) in probe localization during head turns for all but one location (20°), whereas probe localization in control conditions did not differ between action and perception at any location (P > 0.50).

The results for all three conditions are summarized in Fig. 1d, combining normalized data from both left and right hemispheres. Clearly, responses from the action tasks exhibited a unidirectional shift along the direction of head movement (as indicated by the horizontal gray arrows); this was true for probe locations (20° and 40°) on either side of the head-turn target (30°). However, this shift disappeared under the perception task, where responses from both probe locations were drawn toward the head-turn target. Importantly, the narrowing of the perceptual width in the test condition relative to the head-stationary condition indicates that our perception of auditory space is compressed during rapid head turns.

Discussion

This study was designed to examine auditory spatial perception during rapid head motion. The results demonstrated that, when using a perceptual measure of auditory location of probe sounds, the perception of auditory space was compressed during rapid head motion for stimuli occurring in the perisaccadic interval. Spatial compression of between 29% and 52% (41% on average) was observed across subjects, with tight confidence limits. Importantly, the narrowing of perceptual width was not simply due to an excessive mislocalization in the direction of head turn. Rather, the compressive effect was bidirectional, with subjects consistently mislocalizing probes presented at ±40° against the direction of head rotation (Fig. 1d). This compression of auditory space was not observed using an action measure to localize probe sounds. The motive for focusing on the perisaccadic time interval in the current study was twofold: first, this is the period where spatial distortions are observed in visual saccades, and, second, before head motion, the subjects' eye, head, and body frames of reference are aligned. Our findings differ from those in a previous case-study report that found that auditory spatial perception was characterized by mislocalization away from the head-turn target (18). This case study, using both action and perception measures, examined a subject who suffered from congenital ophthalmoplegia and who compensated for a lack of eye movements by making analogous head movements. No suggestion of compressive errors was found in that study, and several reasons likely explain this. One is that the spatial range examined was rather small at just ±10°. Although this is appropriate for the typical magnitude of visual saccades, head movements are commonly many times larger than this. A second reason for the lack of spatial compression is that the velocity of the head turns was much lower than that of the head turns we used here and certainly many times lower than that typical of saccadic eye movements. In contrast, our study used much larger head movements (±30°) and fast, ballistic-like head turns that approximate visual saccadic behavior. Given that the temporal window of saccade-related spatial distortion is brief (15) and that dissociation of visual information between action and perception pathways appears to require rapid movements (25), it would require a far higher head-turn velocity than the ≈50°/sec speed used in Jackson et al.'s study (18) for compressive spatial errors to be revealed.

The physiological basis for the compression of auditory space just before a saccade-like head turn is unknown. In vision, it has been postulated that perisaccadic spatial compression is due to anticipatory shifts in receptive field positions of various neurons in areas including the lateral intraparietal area, the frontal eye field, and the SC (10, 12). These shifts are thought to be made on the basis of a corollary discharge of motor signals issued to move the ocular muscles and sent to visual spatial areas so that spatial maps can be updated to maintain continuity when the eye arrives at its new position. Consequently, for a brief period before the eye movement has been made, units with shifted receptive fields erroneously indicate spatial signals to be shifted toward the saccade target (15). Recently, the neural connection relaying the corollary discharge necessary for these anticipatory shifts, from the SC through to the frontal eye field via the mediodorsal nucleus, has been reported (13). Because the SC contains bimodal neurons providing a potential common substrate for visual saccades and saccade-like head turns, it is possible that a similar corollary discharge mechanism also exists in the auditory system. Moreover, because the head is heavy and has high momentum once turning quickly toward a target location, rapid head turns such as those we have used here can be considered as effectively ballistic, as visual saccades are known to be. Hypothetically, this anticipatory shift in auditory receptive field would be triggered when behavioral conditions similar to those of visual saccades occur, such as rapid reaction times and high-velocity head turns.

Finally, we turn to the difference between the perception and action conditions. Our motive for contrasting these two measurement paradigms came from work on visual saccades showing that spatial compression is not observed if subjects reach out and point to the location of the probe stimulus (16). The absence of the compression illusion when behaviorally indicated may be related to functional differences between the dorsal and ventral processing pathways in the visual system (26). Anatomically, these pathways are separated into a dorsal stream that projects from the SC and primary visual cortex to the posterior parietal cortex, and a ventral stream projecting from the primary visual cortex to the temporal lobe. It has been proposed that the dorsal and ventral streams subserve functionally distinct purposes in the visual system. Accumulating evidence suggests that the dorsal action stream is involved in rapid, real-time motor control, whereas the ventral perception stream is slower and mediates perceptual processing. One distinction between the pathways is that behavioral action appears not to be subject to perceptual illusions (27, 28), and Burr et al.'s (16) result of spatial distortions for perception, but not for action, fits into this dichotomy.

Several factors led us to expect that perceptual and behavioral measures would produce a different picture of auditory space during rapid head turns, just as was found in vision for saccade-related compression. Saccadic eye movements and rapid head turns are both ballistic spatial orienting movements that depend critically on the SC. Because SC integrates spatial maps from the different modalities (29), it suggests the likelihood of an integrated multisensory “action space.” Therefore, we reasoned that spatial compressions revealed using a visual orienting task might also be revealed by using an auditory orienting task. Given that this prediction was confirmed, it is tempting to push these parallels further in the search for an explanation. In recent years, separate dorsal and ventral pathways have been found in the auditory system (30–32), and they exhibit timing differences similar to those observed between the visual pathways (31). However, we are cautious about offering an account of our findings in terms of dorsal and ventral pathways, analogous to vision. Neuroimaging has shown that dorsal regions subserve auditory localization whereas ventral regions are involved in pitch processing (32). In our experiments, for both perception and action, the task was always localization. Instead of a “dorsal versus ventral” hypothesis, an alternative explanation of our results could be based on collicular versus cortical processing. The lack of distortion in the action condition may be due to the involvement of SC and its tight and early links with head movement control, whereas the spatial compression found in the perception condition may reflect a more sluggish response from the cortical areas of the dorsal “where” stream.

In conclusion, this article presents psychophysical data showing that auditory spatial perception becomes compressed as a result of rapid, saccade-like head turns. At high speeds, head turns approach the speed of visual saccades and become ballistic, and spatial compressions are observed that parallel those seen in vision during saccades. The spatial compression occurs on both sides of the head-turn target such that brief probe sounds are mislocalized as being closer to the target than they truly are. The magnitude of this compression can approach 50% of the spatial extent estimated by localization with the head stationary. We propose that auditory spatial compression, not seen in previous studies using low head-turn velocities and restricted ranges of movement, requires high velocities. We suggest that the mechanism for this effect may be similar to that thought to underlie visual spatial compression and involve anticipatory spatial shifts of receptive-field locations driven by a corollary discharge from the motor system issuing commands to the neck muscles controlling head position. Also similar to saccade-related spatial compression, spatial compression during head turns required a perceptual measurement paradigm rather than a behavioral one.

Methods

Subjects, Stimulus, and Setup.

The experiments were conducted in a darkened anechoic chamber using a robotic arm capable of placing a sound source at any location [specified by an (azimuth, elevation) coordinate system] around a subject (radius 1 m) with a precision of better than 1°. Stimuli were presented on the frontal audiovisual horizon where 0° azimuth was directly ahead and positive azimuth to the right. Acoustic stimuli were delivered by a loudspeaker (Vifa D26-TG-35-06) mounted on the robotic arm and by two freestanding speakers (Tannoy System 600A) located 1.3 m from the center of the chamber at (30°, −15°) and (−30°, −15°). Visual stimuli were delivered by using red light-emitting diodes (LEDs) located at (30°, −10°) and (−30°, −10°). Feedback was provided via centrally mounted green LEDs.

All sounds (whether targets or probes; see below) consisted of 10 ms of Gaussian broadband white noise modulated by 0.5-ms raised cosine onset and offset windows at an average sound level of 75 dB sound pressure level. When visual stimuli were used as head-turn targets, they were 10-ms flashes from one of the red LEDs. A TDT DD1 system controlled the Vifa speaker and the LEDs, and an RME Hammerfall Multiface combination controlled the Tannoy speakers. The combined audiovisual stimulus delivery system was controlled with Matlab software (Mathworks). The timing of the auditory and visual components was carefully calibrated and verified with reference recordings measured at the center of the anechoic chamber using Brüel & Kjær ¼-inch sound field microphones and photodiodes connected to an oscilloscope (Hameg HM407 Analog/Digital). The subjects' head motion was sampled by using a head-mounted magnetic tracker (Intersense IC3), which sampled every 12 ms.
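For illustration, the noise-burst waveform described above can be sketched as follows. This is a hedged sketch rather than the stimulus code used in the study (which ran under Matlab). The function name, the 48-kHz sample rate, and the fixed random seed are assumptions, and calibration to 75 dB sound pressure level is not modeled.

```python
import math
import random


def noise_burst(duration_ms=10.0, ramp_ms=0.5, sample_rate=48000, seed=0):
    """Gaussian white noise with raised-cosine onset and offset ramps.

    sample_rate and seed are assumed values chosen for the example.
    """
    rng = random.Random(seed)
    n = int(round(duration_ms * sample_rate / 1000.0))
    n_ramp = int(round(ramp_ms * sample_rate / 1000.0))
    samples = [rng.gauss(0.0, 1.0) for _ in range(n)]
    for i in range(n_ramp):
        # Raised-cosine gain rising from 0 toward 1 across the ramp.
        gain = 0.5 * (1.0 - math.cos(math.pi * i / n_ramp))
        samples[i] *= gain          # onset ramp
        samples[n - 1 - i] *= gain  # offset ramp (mirror image)
    return samples


burst = noise_burst()
```

At the assumed sample rate, the 10-ms burst spans 480 samples and each 0.5-ms ramp spans 24 samples.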

The data were collected from four male subjects in the perception paradigm, and three other naive subjects (two males and one female) performed the action series of experiments. All subjects (aged between 21 and 40) had normal hearing by audiometric testing and were well trained in using the nose-pointing gesture to localize auditory stimuli [see Carlile et al. (19) for a review of the nose-pointing localization task].

Experimental Design.

Subjects stood on a platform in the center of the anechoic chamber at a calibrated central start position defined by intersecting orthogonal lasers. At the beginning of each trial they were asked to fixate their gaze and point their noses at (0°, 0°) as indicated by a green LED. Head positioning was verified by using the head tracker system. After a random interval, a stimulus indicating the head-turn target location was played at one of the Tannoy loudspeakers (head-turn targets were always either +30° or −30° in azimuth), and subjects had to rotate their heads rapidly to localize the source (using nose-pointing) while keeping their bodies still. At various spatial and temporal offsets relative to the head-turn target stimulus, a probe stimulus was played by using the loudspeaker on the robot arm (see Fig. 2). Subjects were then asked to localize the position of the probe stimulus (see below). To ensure that subjects made head rotations toward the target as rapidly as possible, training sessions were conducted before the experiment in which subjects were provided with feedback showing their head turning profiles after each trial. The feedback emphasized two behavioral metrics—reaction time to initiate the head turn and the speed of head rotation. During training, subjects adjusted their head turning to minimize the response time and maximize the rotation speed.

Fig. 2.

Stimulus presentation time line and subject head-turn trace. Upper shows a recording of a subject's head turn during an experiment. The target stimuli were at ±30° azimuth. Lower illustrates the stimulus presentation in relation to the head-turn profile. Subjects were asked to fixate at (0°, 0°) and begin moving their heads at the presentation of the head-turn target. The dotted line represents the time at which the subject's movement began. After a variable ISI, the probe stimulus was played and, in the case of the experiments using the perception reporting paradigm, was immediately followed by a comparison stimulus.

Action and Perception Paradigms.

In the action paradigm, subjects were required to identify first the head-turn target location (by pointing their noses at it) followed by the probe location, with the indicated positions recorded via the head tracker. The method of constant stimuli was used, and both spatial and temporal offsets were examined. Spatially, probes were positioned ±10° in azimuth from the target locations (−40°, −20°, +20°, +40°). Temporally, the interstimulus interval (ISI) between target and probe stimuli was varied over four levels to examine the time period just before the head turn began. The ISIs were chosen for each subject to optimally span this preturn period based on the distribution of their head-turn reaction times obtained during the training sessions. Each condition was repeated 10 times, resulting in 320 trials for each subject (4 spatial variants × 4 temporal variants × 10 repeats × 2 hemispheres). These trials were divided into a series of four sessions, each lasting ≈20 min. In the action paradigm, two types of head-turn target were compared: visual targets and auditory targets. In each case, the targets were located at either +30° or −30° azimuth. Visual targets were brief flashes from a red LED (“bimodal” condition), and brief white noise bursts (see above) served as auditory targets (“unimodal” condition). For both action conditions, probe localization data were compared against a no-turn control condition in which the subjects' heads remained stationary throughout the stimulus presentation.

For the purposes of data analysis, we analyzed only trials in which the probe was presented before the moment when the head turn began. This preturn period, which begins with the presentation of the head-turn target and ends when the movement begins, is analogous to the perisaccadic interval in visual saccades when spatial compression is maximal. To determine when the probe was presented with respect to the initiation of the head turn, subjects' head-turn profiles were analyzed post hoc and the moment when the head position first departed from the start position was determined. This post hoc analysis also allowed us to ensure that the behavioral metrics were within the range expected for each subject based on their training data. Trials were discarded if response times or head-turn speeds were slow, as were trials in which subjects prejudged the direction of turn (indicated by abnormally short response times). Interestingly, subjects' localization responses sometimes indicated an order reversal (similar to those mentioned in ref. 33) in which the location of the probe was indicated first and that of the head-turn target was indicated second. These responses were removed for this study.
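A minimal sketch of the post hoc onset analysis is given below, assuming the head tracker yields a sampled azimuth trace. The function names, the baseline window, and the 1° departure threshold are illustrative assumptions; the text states only that onset was taken as the moment the head position first departed from the start position.

```python
def head_turn_onset_ms(times_ms, azimuth_deg, baseline_samples=10, threshold_deg=1.0):
    """Return the time of the first sample that departs from the start position.

    ASSUMPTIONS: the start position is the mean of the first few samples,
    and "departure" means a deviation larger than threshold_deg. Returns
    None if the head never leaves the start position.
    """
    if not azimuth_deg:
        raise ValueError("empty head-position trace")
    n = min(baseline_samples, len(azimuth_deg))
    start = sum(azimuth_deg[:n]) / n
    for t, az in zip(times_ms, azimuth_deg):
        if abs(az - start) > threshold_deg:
            return t
    return None


def is_preturn(probe_time_ms, onset_ms):
    """True if the probe was presented before the head turn began."""
    return onset_ms is not None and probe_time_ms < onset_ms


# Hypothetical trace sampled every 10 ms: stationary, then a turn toward +30 deg.
times = list(range(0, 200, 10))
trace = [0.0] * 12 + [0.5, 2.0, 5.0, 10.0, 18.0, 25.0, 29.0, 30.0]
onset = head_turn_onset_ms(times, trace)
```

Only trials for which `is_preturn` holds would enter the analysis. The threshold trades sensitivity to small movements against tracker noise and would need tuning to the recording hardware.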

In the perception paradigm, subjects turned to indicate the target position at +30° or −30° with a head turn, as in the action condition, but the location of the probe sound was indicated perceptually, relative to the location of a second “comparison” sound that was played shortly after the probe. To avoid confusion and possible interference between sounds, the head-turn target in the perception condition was always a red LED (i.e., a bimodal condition). In the perception condition, subjects remained with their heads oriented toward the head-turn target and indicated whether the comparison stimulus that followed the probe stimulus was perceived as being to the left or to the right of the probe, using button presses to indicate their percept. The spatial separation between the comparison and probe stimuli was varied adaptively using Quest [an adaptive staircase procedure (34)], which terminated after 20 trials. At each probe location two Quest staircases were measured to examine spatial distortion on both the left and right sides of the probe. To reduce subject expectation bias, both Quests ran concurrently and were randomly interleaved. Based on results from our pilot experiments, which suggested that a target-to-probe separation of ±10° azimuth produced quantifiable results, the probe stimuli in this experiment were located at ±40° and ±20°, that is, 10° to either side of the target locations. The ISI between target and probe was individually set for each subject to examine the perisaccadic interval at ≈50 ms before the onset of head rotation. To ensure that subjects' head turns were within their behavioral norm, each Quest trial was analyzed after each stimulus presentation. Trials in which the head-turn rate or response time was slower than the individual's statistical norm by more than one standard deviation were not accepted (and the data were not included in the evolving Quest staircase), and an extra trial was run at the end of the session. Overall, each subject performed 160 trials, divided into two sessions each lasting ≈15 min. As in the two action conditions, probe localization data in the perception condition were compared against a no-turn control condition.
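The trial acceptance rule described above can be sketched as follows. This is an illustration only: it assumes the "statistical norm" is the mean of the subject's training data and uses the sample standard deviation, and the function and parameter names are hypothetical.

```python
import statistics


def within_norm(rt_ms, turn_rate_deg_s, training_rts_ms, training_rates_deg_s):
    """Accept a trial unless it is slower than the subject's norm by more than 1 SD.

    ASSUMPTIONS: the norm is the training mean and the SD is the sample
    standard deviation. "Slower" means a response time above mean + SD or
    a head-turn rate below mean - SD.
    """
    rt_limit = statistics.mean(training_rts_ms) + statistics.stdev(training_rts_ms)
    rate_limit = statistics.mean(training_rates_deg_s) - statistics.stdev(training_rates_deg_s)
    slow_response = rt_ms > rt_limit
    slow_turn = turn_rate_deg_s < rate_limit
    return not (slow_response or slow_turn)


# Hypothetical training data for one subject.
training_rts = [200, 220, 240, 260, 280]      # ms
training_rates = [180, 200, 220, 240, 260]    # deg/s

accepted = within_norm(250, 210, training_rts, training_rates)
rejected_slow_rt = within_norm(300, 210, training_rts, training_rates)
rejected_slow_turn = within_norm(250, 150, training_rts, training_rates)
```

A rejected trial would be excluded from the staircase update and repeated at the end of the session, so that each staircase still receives its full complement of accepted trials.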

Acknowledgments.

This work was supported by the Australian Research Council.

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission. M.G. is a guest editor invited by the Editorial Board.

References

- 1. Perrett S, Noble W. The effect of head rotations on vertical plane sound localization. J Acoust Soc Am. 1997;102:2325–2332. doi: 10.1121/1.419642.

- 2. Wightman FL, Kistler DJ. Resolution of front-back ambiguity in spatial hearing by listener and source movement. J Acoust Soc Am. 1999;105:2841–2853. doi: 10.1121/1.426899.

- 3. Carpenter RHS. Movements of the Eyes. 2nd Ed. London: Pion; 1998.

- 4. Sparks DL. Translation of sensory signals into commands for control of saccadic eye movements: Role of primate superior colliculus. Physiol Rev. 1986;66:118–171. doi: 10.1152/physrev.1986.66.1.118.

- 5. Desimone R, Wessinger M, Thomas L, Schneider W. Attentional control of visual perception: Cortical and subcortical mechanisms. Cold Spring Harbor Symp Quant Biol. 1990;55:963–971. doi: 10.1101/sqb.1990.055.01.090.

- 6. King AJ, Palmer AR. Integration of visual and auditory information in bimodal neurones in the guinea-pig superior colliculus. Exp Brain Res. 1985;60:492–500. doi: 10.1007/BF00236934.

- 7. Meredith MA, Nemitz JW, Stein BE. Determinants of multisensory integration in superior colliculus neurons. I. Temporal factors. J Neurosci. 1987;7:3215–3229. doi: 10.1523/JNEUROSCI.07-10-03215.1987.

- 8. Burr DC, Holt J, Johnstone JR, Ross J. Selective depression of motion sensitivity during saccades. J Physiol. 1982;333:1–15. doi: 10.1113/jphysiol.1982.sp014434.

- 9. Burr DC, Morrone MC, Ross J. Selective suppression of the magnocellular visual pathway during saccadic eye movements. Nature. 1994;371:511–513. doi: 10.1038/371511a0.

- 10. Ross J, Morrone MC, Goldberg ME, Burr DC. Changes in visual perception at the time of saccades. Trends Neurosci. 2001;24:113–121. doi: 10.1016/s0166-2236(00)01685-4.

- 11. Bridgeman B, Hendry D, Stark L. Failure to detect displacement of the visual world during saccadic eye movements. Vision Res. 1975;15:719–722. doi: 10.1016/0042-6989(75)90290-4.

- 12. Duhamel JR, Colby CL, Goldberg ME. The updating of the representation of visual space in parietal cortex by intended eye-movements. Science. 1992;255:90–92. doi: 10.1126/science.1553535.

- 13. Sommer MA, Wurtz RH. Influence of the thalamus on spatial visual processing in frontal cortex. Nature. 2006;444:374–377. doi: 10.1038/nature05279.

- 14. Kaiser M, Lappe M. Perisaccadic mislocalization orthogonal to saccade direction. Neuron. 2004;41:293–300. doi: 10.1016/s0896-6273(03)00849-3.

- 15. Ross J, Morrone MC, Burr DC. Compression of visual space before saccades. Nature. 1997;386:598–601. doi: 10.1038/386598a0.

- 16. Burr DC, Morrone MC, Ross J. Separate visual representations for perception and action revealed by saccadic eye movements. Curr Biol. 2001;11:798–802. doi: 10.1016/s0960-9822(01)00183-x.

- 17. Vliegen J, Van Grootel TJ, Van Opstal AJ. Dynamic sound localization during rapid eye-head gaze shifts. J Neurosci. 2004;24:9291–9302. doi: 10.1523/JNEUROSCI.2671-04.2004.

- 18. Jackson SR, et al. Saccade-contingent spatial and temporal errors are absent for saccadic head movements. Cortex. 2005;41:205–212. doi: 10.1016/s0010-9452(08)70895-5.

- 19. Carlile S, Leong P, Hyams S. The nature and distribution of errors in sound localization by human listeners. Hear Res. 1997;114:179–196. doi: 10.1016/s0378-5955(97)00161-5.

- 20. Lewald J, Karnath HO. Vestibular influence on human auditory space perception. J Neurophysiol. 2000;84:1107–1111. doi: 10.1152/jn.2000.84.2.1107.

- 21. Lewald J, Karnath HO. Sound lateralization during passive whole-body rotation. Eur J Neurosci. 2001;13:2268–2272. doi: 10.1046/j.0953-816x.2001.01608.x.

- 22. Lewald J, Dorrscheidt GJ, Ehrenstein WH. Sound localization with eccentric head position. Behav Brain Res. 2000;108:105–125. doi: 10.1016/s0166-4328(99)00141-2.

- 23. Getzmann S, Lewald J, Guski R. Representational momentum in spatial hearing. Perception. 2004;33:591–599. doi: 10.1068/p5093.

- 24. Getzmann S. Representational momentum in spatial hearing does not depend on eye movements. Exp Brain Res. 2005;165:229–238. doi: 10.1007/s00221-005-2291-0.

- 25. Króliczak G, Heard P, Goodale MA, Gregory RL. Dissociation of perception and action unmasked by the hollow-face illusion. Brain Res. 2006;1080:9–16. doi: 10.1016/j.brainres.2005.01.107.

- 26. Ungerleider LG, Mishkin M. In: Analysis of Visual Behavior. Ingle DJ, Goodale MA, Mansfield RJ, editors. Cambridge, MA: MIT Press; 1982. pp. 549–586.

- 27. Goodale MA, Milner AD. Separate visual pathways for perception and action. Trends Neurosci. 1992;15:20–25. doi: 10.1016/0166-2236(92)90344-8.

- 28. Goodale MA, Westwood DA. An evolving view of duplex vision: Separate but interacting cortical pathways for perception and action. Curr Opin Neurobiol. 2004;14:203–211. doi: 10.1016/j.conb.2004.03.002.

- 29. Stein BE, Meredith MA. The Merging Senses. Cambridge, MA: MIT Press; 1994.

- 30. Rauschecker JP, Tian B. Mechanisms and streams for processing of “what” and “where” in auditory cortex. Proc Natl Acad Sci USA. 2000;97:11800–11806. doi: 10.1073/pnas.97.22.11800.

- 31. Ahveninen J, et al. Task-modulated “what” and “where” pathways in human auditory cortex. Proc Natl Acad Sci USA. 2006;103:14608–14613. doi: 10.1073/pnas.0510480103.

- 32. Alain C, Arnott SR, Hevenor S, Graham S, Grady CL. “What” and “where” in the human auditory system. Proc Natl Acad Sci USA. 2001;98:12301–12306. doi: 10.1073/pnas.211209098.

- 33. Morrone MC, Ross J, Burr D. Saccadic eye movements cause compression of time as well as space. Nat Neurosci. 2005;8:950–954. doi: 10.1038/nn1488.

- 34. Watson AB, Pelli DG. QUEST: A Bayesian adaptive psychometric method. Percept Psychophys. 1983;33:113–120. doi: 10.3758/bf03202828.