Abstract

Guideline developers use a bewildering variety of systems to rate the quality of the evidence underlying their recommendations. Some are facile, some confused, and others sophisticated but complex.

In 2004 the Grading of Recommendations Assessment, Development and Evaluation (GRADE) Working Group presented its initial proposal for patient management.1 In this second of a series of five articles focusing on the GRADE approach to developing and presenting recommendations we show how GRADE has built on previous systems to create a highly structured, transparent, and informative system for rating quality of evidence.

Summary points

A guideline’s formulation should include a clear question with specification of all outcomes of importance to patients

GRADE offers four levels of evidence quality: high, moderate, low, and very low

Randomised trials begin as high quality evidence and observational studies as low quality evidence

Quality may be downgraded as a result of limitations in study design or implementation, imprecision of estimates (wide confidence intervals), variability in results, indirectness of evidence, or publication bias

Quality may be upgraded because of a very large magnitude of effect, a dose-response gradient, or because all plausible biases would reduce an apparent treatment effect

Critical outcomes determine the overall quality of evidence

Evidence profiles provide simple, transparent summaries

A guideline’s formulation should include a clear question

Any question addressing clinical management has four components: patients, an intervention, a comparison, and the outcomes of interest.2 For example, consider the following: in patients with pancreatic carcinoma undergoing surgery what is the impact of a modified resection that preserves the pylorus compared with a standard wide tumour resection—variations of the Whipple procedure—on short term and long term mortality, blood transfusions, bile leaks, hospital stay, and problems with gastric emptying?

Perhaps the most common error in formulating the question is a failure to include all the outcomes that are of importance to patients.3 Critics have, for example, documented the inadequate measurement of side effects and toxicity in randomised trials,4 5 6 7 a limitation that carries over to summaries on evidence. Guideline developers may give excessive credence to surrogate outcomes such as exercise capacity rather than quality of life, or bone density rather than fracture rate. In the Whipple procedure example, a focus on blood loss or operative time rather than blood transfusion and duration of hospital stay would represent such a limitation.

Failure to fully consider all relevant alternatives constitutes another potential problem in treatment recommendations. This may be particularly problematic when guidelines target a global audience; full consideration of less costly alternatives becomes particularly important in such situations.

Guideline developers should address the importance of their outcomes

Ultimately those making recommendations must trade off the benefits and downsides of alternative management strategies. GRADE challenges guideline developers not only to specify all outcomes of importance to patients as they begin the process of guideline development but also to differentiate outcomes that are critical for decision making from those that are important but not critical and those that are not important. Because experts, clinicians, and patients may have different values and preferences,8 input from those affected by the decision (patients or members of the public) may strengthen this process, as long as selection of public representatives avoids conflicts of interest.9 Although exploration of optimal strategies for making decisions about relative importance remains limited, the desirability of making the process transparent is beyond doubt.

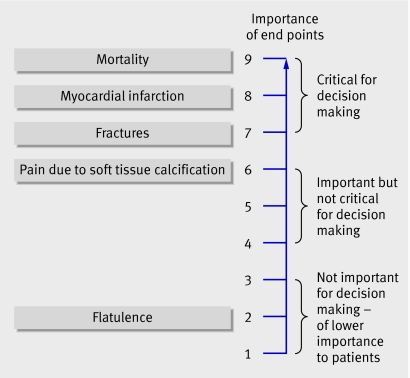

Figure 1 presents a hierarchy of patient important outcomes regarding the impact of phosphate lowering drugs in patients with renal failure. GRADE suggests a nine point scale to judge importance. The upper end of the scale, 7 to 9, identifies outcomes of critical importance. Ratings of 4 to 6 represent outcomes that are important but not critical to decision making. Ratings of 1 to 3 are items of limited importance to decision making. Guideline panels should strive for the sort of explicit approach that this example represents.

Fig 1 Hierarchy of outcomes according to importance to patients to assess effect of phosphate lowering drugs in patients with renal failure and hyperphosphataemia
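The nine point scale described above can be sketched as a small lookup. This is a minimal illustration only: the function name `importance_category` and the example ratings are hypothetical and are not taken from figure 1.

```python
def importance_category(rating: int) -> str:
    """Map a 1 to 9 importance rating to the three bands described in the text."""
    if not 1 <= rating <= 9:
        raise ValueError("rating must be between 1 and 9")
    if rating >= 7:
        return "critical"
    if rating >= 4:
        return "important but not critical"
    return "of limited importance"


# Hypothetical panel ratings, for illustration only (not the values in figure 1).
example_ratings = {"outcome A": 9, "outcome B": 5, "outcome C": 2}
categories = {name: importance_category(r) for name, r in example_ratings.items()}
```

A panel could record each member's rating this way and then discuss outcomes whose ratings straddle a band boundary.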

Judging the quality of evidence requires consideration of the context

Clinicians have an intuitive sense of the importance of designating evidence as higher or lower quality. Inferences are clearly stronger for higher quality than for lower quality evidence. GRADE uses four levels for quality of evidence: high, moderate, low, and very low. These levels imply a gradient of confidence in estimates of treatment effect, and thus a gradient in the consequent strength of inference.

GRADE provides a specific definition for the quality of evidence in the context of making recommendations. The quality of evidence reflects the extent to which confidence in an estimate of the effect is adequate to support a particular recommendation. This definition has two important implications. Firstly, guideline panels must make judgments about the quality of evidence relative to the specific context in which they are using the evidence. Secondly, because systematic reviews do not—or at least should not—make recommendations, they require a different definition. In this case the quality of evidence reflects the extent of confidence that an estimate of effect is correct.

The following example illustrates how guideline developers must make judgments about quality in the context of their particular recommendations. Bear in mind that because quality has to do with our confidence in estimates of benefits and risks, lack of precision (wide confidence intervals) is one factor that decreases the quality of evidence.

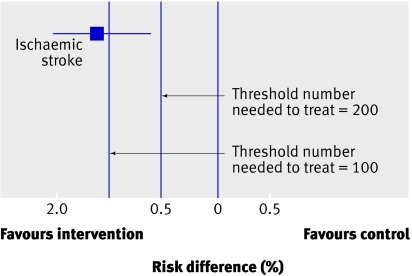

Let us say that a systematic review of randomised trials of a therapy to prevent major strokes yields a pooled estimate of absolute reduction in strokes of 1.3%, with a 95% confidence interval of 0.6% to 2.0%, over one year’s treatment (fig 2). This implies that 77 patients must be treated for one year to prevent one major stroke. The 95% confidence interval around the number needed to treat (NNT), 50 to 167, means that although 77 is the best estimate, it remains plausible that as few as 50 people or as many as 167 may need to be treated for one year to prevent one major stroke.

Fig 2 Downgrading for imprecision: thresholds are key (threshold number needed to treat (NNT) of 200 does not require downgrading whereas the same result with a threshold of 100 requires downgrading)
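The NNT figures in this example follow from taking the reciprocal of the absolute risk reduction. A minimal sketch, in which the helper name `nnt_from_arr` is hypothetical and rounding to the nearest whole patient is assumed because it reproduces the numbers quoted in the text:

```python
def nnt_from_arr(arr: float) -> int:
    """Number needed to treat = 1 / absolute risk reduction (as a proportion)."""
    if arr <= 0:
        raise ValueError("absolute risk reduction must be positive")
    return round(1 / arr)


# Values from the stroke example: 1.3% (95% CI 0.6% to 2.0%).
arr_estimate, arr_lower, arr_upper = 0.013, 0.006, 0.020

nnt_estimate = nnt_from_arr(arr_estimate)
# The bounds swap: the largest plausible benefit gives the smallest NNT.
nnt_ci = (nnt_from_arr(arr_upper), nnt_from_arr(arr_lower))
```

Here `nnt_estimate` is 77 and `nnt_ci` is (50, 167), matching the text.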

Let it be assumed that the intervention is a drug with no serious adverse effects, minimal inconvenience, and modest cost. Under such circumstances we may be willing to enthusiastically recommend the intervention were it to reduce strokes by as little as 0.5% (blue line in fig 2)—this implies that the NNT=200. The confidence interval around the treatment effect excludes a benefit this small. We can therefore conclude that the precision (and thus the quality of the evidence) is sufficient to support a strong recommendation for the intervention.

What if, however, treatment is associated with serious toxicity and higher cost? Under these circumstances we may be reluctant to recommend treatment unless the absolute reduction in strokes is at least 1% (NNT=100; red dashed line in fig 2). The results fail to exclude an absolute benefit appreciably less than 1%. Under these circumstances the precision (and thus the quality of the evidence) is insufficient to support a strong recommendation for treatment. The thresholds chosen in this example are consistent with empirical explorations of patient values and preferences.10

In summary, greater levels of precision may be required to support a recommendation when advantages and disadvantages are closely balanced. Thus when this fine balance exists it is more likely that guideline developers will need to downgrade the evidence for imprecision.
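The threshold reasoning in the stroke example can be expressed as a single comparison: evidence is downgraded for imprecision when the confidence interval still includes benefits smaller than the smallest benefit that would justify treatment. The sketch below is an illustration under that reading of the example; the function name is hypothetical, and real panels weigh more than one number.

```python
def needs_imprecision_downgrade(ci_lower_arr: float, threshold_arr: float) -> bool:
    """True if the CI fails to exclude a benefit smaller than the threshold."""
    return ci_lower_arr < threshold_arr


ci_lower = 0.006  # lower 95% bound on absolute stroke reduction (0.6%)

# Safe, cheap drug: a 0.5% reduction (NNT=200) would be enough to recommend it.
downgrade_low_threshold = needs_imprecision_downgrade(ci_lower, 0.005)

# Toxic, costly drug: at least a 1% reduction (NNT=100) is required.
downgrade_high_threshold = needs_imprecision_downgrade(ci_lower, 0.010)
```

The same trial result gives `False` for the first threshold and `True` for the second, which is the point of figure 2.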

This example illustrates that although judgments are not arbitrary when evidence is of sufficiently high quality, they rely heavily on underlying values and preferences.11 Guideline developers must therefore be transparent both in making such decisions and in providing a justification. In doing so they will find it useful to specifically consider the domains of quality assessment that GRADE has identified (see box).12

Factors in deciding on quality of evidence

Factors that might decrease quality of evidence

Study limitations

Inconsistency of results

Indirectness of evidence

Imprecision

Publication bias

Factors that might increase quality of evidence

Large magnitude of effect

Plausible confounding, which would reduce a demonstrated effect

Dose-response gradient

Study design is important in determining the quality of evidence

Early systems of grading the quality of evidence focused almost exclusively on study design.13 Study design remains critical to judgments about the quality of evidence. For recommendations addressing alternative management strategies—as opposed to issues of establishing prognosis or the accuracy of diagnostic tests—randomised trials provide, in general, stronger evidence than do observational studies. Rigorous observational studies provide stronger evidence than uncontrolled case series. In the GRADE approach to quality of evidence, randomised trials without important limitations constitute high quality evidence. Observational studies without special strengths or important limitations constitute low quality evidence. Limitations or special strengths can, however, modify the quality of the evidence.

Five limitations can reduce the quality of the evidence

The GRADE approach involves making separate ratings for quality of evidence for each patient important outcome and identifies five factors that can lower the quality of the evidence (see box). These limitations can downgrade the quality of observational studies as well as randomised controlled trials.

Study limitations

Confidence in recommendations decreases if studies have major limitations that may bias their estimates of the treatment effect.14 These limitations include lack of allocation concealment; lack of blinding, particularly if outcomes are subjective and their assessment highly susceptible to bias; a large loss to follow-up; failure to adhere to an intention to treat analysis; stopping early for benefit15; or selective reporting of outcomes (typically failing to report those for which no effect was observed). For example, a randomised trial suggests that danaparoid sodium is of benefit in treating heparin induced thrombocytopenia complicated by thrombosis.16 That trial, however, was unblinded and the key outcome was the clinicians’ assessment of when the thromboembolism had resolved, a subjective judgment.

Most of the randomised trials examining the relative impact of a standard compared with modified Whipple procedure were limited by lack of optimal concealment, lack of possible blinding of patients and of adjudicators of outcome, and substantial losses to follow-up. Thus the quality of evidence for each of the important outcomes is no higher than moderate (table 1).

Table 1.

GRADE evidence profile for impact of surgical alternatives for pancreatic cancer from systematic review and meta-analysis of randomised controlled trials in inpatient hospitals of pylorus preserving versus standard Whipple pancreaticoduodenectomy for pancreatic or periampullary cancer by Karanicolas et al19

Columns from study limitations to publication bias form the quality assessment; columns from relative effect to quality form the summary of findings.

| Outcome | No of studies (No of participants) | Study limitations* | Consistency | Directness | Precision | Publication bias | Relative effect† (95% CI) | Best estimate of Whipple group risk | Absolute effect (95% CI) | Quality |
|---|---|---|---|---|---|---|---|---|---|---|
| Five year mortality | 3 (229) | Serious limitations (−1) | No important inconsistency | Direct | No important imprecision | Unlikely | 0.98 (0.87 to 1.11) | 82.5% | 20 less/1000 (120 less to 80 more) | +++, moderate |
| In-hospital mortality | 6 (490) | Serious limitations (−1) | No important inconsistency | Direct | Imprecision (−1)‡ | Unlikely | 0.40 (0.14 to 1.13) | 4.9% | 20 less/1000 (50 less to 10 more) | ++, low |
| Blood transfusions (units) | 5 (320) | Serious limitations (−1) | No important inconsistency | Direct | No important imprecision | Unlikely | — | 2.45 units | −0.66 (−1.06 to −0.25); favours pylorus preservation | +++, moderate |
| Biliary leaks | 3 (268) | Serious limitations (−1) | No important inconsistency | Direct | Imprecision (−1)‡ | Unlikely | 4.77 (0.23 to 97.96) | 0 | 20 more/1000 (20 less to 50 more) | ++, low |
| Hospital stay (days) | 5 (446) | Serious limitations (−1) | No important inconsistency | Direct | Imprecision (−1)‡ | Unlikely | — | 19.17 days | −1.45 (−3.28 to 0.38); favours pylorus preservation | ++, low |
| Delayed gastric emptying | 5 (442) | Serious limitations (−1) | Unexplained heterogeneity (−1)§ | Direct | Imprecision (−1)‡ | Unlikely | 1.52 (0.74 to 3.14) | 25.5% | 110 more/1000 (80 less to 290 more) | +, very low |

*Unclear allocation concealment in all studies, patients blinded in only one study, outcome assessors not blinded in any study, >20% loss to follow-up in three studies, not analysed using intention to treat in one study.

†Relative risks (95% confidence intervals) are based on random effect models.

‡Confidence interval includes possible benefit from both surgical approaches.

§I²=72.6%, P=0.006.

Inconsistent results

Widely differing estimates of the treatment effect (heterogeneity or variability in results) across studies suggest true differences in the underlying treatment effect. Variability may arise from differences in populations (for example, drugs may have larger relative effects in sicker populations), interventions (for example, larger effects with higher drug doses), or outcomes (for example, diminishing treatment effect with time). When heterogeneity exists but investigators fail to identify a plausible explanation then the quality of evidence decreases.

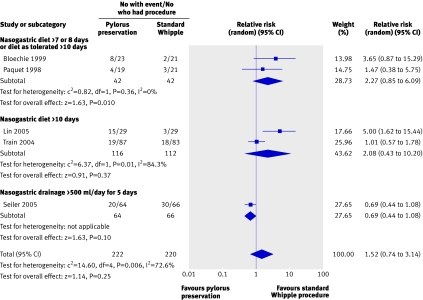

For example, the randomised trials of alternative approaches to the Whipple procedure yielded widely differing estimates of effects on gastric emptying, thus further decreasing the quality of the evidence (fig 3).

Fig 3 Effect on delayed gastric emptying of pylorus preserving pancreaticoduodenectomy compared with standard Whipple procedure for pancreatic adenocarcinoma
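The heterogeneity statistic reported for delayed gastric emptying (I²=72.6%, P=0.006) can be related to Cochran's Q with the standard formula I² = (Q − df)/Q. The sketch below is illustrative only. The source does not report Q; the value 14.6 is back-calculated here on the assumption that all five trials contributed (df = 4), and the function names are hypothetical.

```python
import math


def i_squared(q: float, df: int) -> float:
    """Percentage of variability across studies beyond that expected by chance."""
    if q <= 0:
        return 0.0
    return max(0.0, (q - df) / q) * 100


def chi2_upper_tail_even_df(q: float, df: int) -> float:
    """P(X > q) for a chi-squared variable; closed form valid for even df only."""
    if df <= 0 or df % 2 != 0:
        raise ValueError("this closed form requires a positive even df")
    half = q / 2
    terms = sum(half**k / math.factorial(k) for k in range(df // 2))
    return math.exp(-half) * terms


# ASSUMPTION: Q is not given in the source; 14.6 is back-calculated from I².
q_assumed, df_assumed = 14.6, 4
i2 = i_squared(q_assumed, df_assumed)
p_value = chi2_upper_tail_even_df(q_assumed, df_assumed)
```

Under these assumptions `i2` rounds to 72.6 and `p_value` rounds to 0.006, consistent with the table footnote.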

Indirectness of evidence

Guideline developers face two types of indirectness of evidence. The first occurs when, for example, considering use of one of two active drugs. Although randomised comparisons of the drugs may be unavailable, randomised trials may have compared one of the drugs with placebo and the other with placebo. Such trials allow indirect comparisons of the magnitude of effect of both drugs. Such evidence is of lower quality than would be provided by head to head comparisons of the two drugs.
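One common way to make such an indirect comparison is the adjusted indirect comparison through the shared placebo arm, a technique the text does not itself describe. The sketch below assumes relative risks with symmetric 95% confidence intervals on the log scale. All trial figures are invented for illustration and the function names are hypothetical.

```python
import math

Z95 = 1.96  # normal quantile for a two sided 95% confidence interval


def log_rr_and_se(rr: float, lower: float, upper: float) -> tuple[float, float]:
    """Recover the log relative risk and its standard error from a 95% CI."""
    return math.log(rr), (math.log(upper) - math.log(lower)) / (2 * Z95)


def indirect_comparison(a_vs_placebo, b_vs_placebo):
    """Estimate drug A versus drug B from two placebo controlled results."""
    log_a, se_a = log_rr_and_se(*a_vs_placebo)
    log_b, se_b = log_rr_and_se(*b_vs_placebo)
    log_ab = log_a - log_b
    se_ab = math.sqrt(se_a**2 + se_b**2)  # variances add, so the interval widens
    return (
        math.exp(log_ab),
        math.exp(log_ab - Z95 * se_ab),
        math.exp(log_ab + Z95 * se_ab),
    )


# Hypothetical summaries (RR, lower bound, upper bound), invented for illustration.
drug_a = (0.60, 0.45, 0.80)
drug_b = (0.75, 0.60, 0.94)
rr_ab, lower_ab, upper_ab = indirect_comparison(drug_a, drug_b)
```

Because the two variances add, the indirect interval is always wider than either placebo controlled interval, which is one reason such evidence is rated lower than a head to head comparison.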

Increasingly, recommendations must simultaneously tackle multiple interventions. For example, possible approaches to thrombolysis in myocardial infarction include streptokinase, alteplase, reteplase, and tenecteplase. Attempts to deal with multiple interventions inevitably involve indirect comparisons. A variety of recently developed statistical methods may help in generating estimates of the relative effectiveness of multiple interventions.17 Their confident application requires, in addition to evidence from indirect comparisons, substantial evidence from direct comparisons—evidence that is often unavailable.17

The second type of indirectness arises when the population, intervention, comparator, or outcome of interest differs from those in the relevant studies. Table 2 presents examples of each.

Table 2.

Quality of evidence is weaker if comparisons in trials are indirect

| Question of interest | Source of indirectness |
|---|---|
| Relative effectiveness of alendronate and risedronate in osteoporosis | Indirect comparison: randomised trials have compared alendronate with placebo and risedronate with placebo, but trials comparing alendronate with risedronate are unavailable |
| Oseltamivir for prophylaxis of avian flu caused by influenza A (H5N1) virus | Differences in population: randomised trials of oseltamivir are available for seasonal influenza, but not for avian flu |
| Sigmoidoscopic screening for prevention of mortality from colon cancer | Differences in intervention: randomised trials of faecal occult blood screening provide indirect evidence, bearing on potential effectiveness of sigmoidoscopy |
| Choice of drug for schizophrenia | Differences in comparator: series of trials comparing newer generation neuroleptic agents with fixed doses of haloperidol 20 mg provide indirect evidence of how newer agents would compare with lower, flexible doses of haloperidol that clinicians typically use |
| Rosiglitazone for prevention of diabetic complications in patients at high risk of diabetes | Differences in outcome: randomised trial shows delay in development of biochemical diabetes with rosiglitazone but was underpowered to tackle diabetic complications |

Imprecision

When studies include relatively few patients and few events and thus have wide confidence intervals, a guideline panel judges the quality of the evidence to be lower because of the resulting uncertainty in the results. For example, the confidence intervals for most of the outcomes for alternatives to the Whipple procedure include both important effects and no effect at all, and some include important differences in both directions.

Publication bias

The quality of evidence will be reduced if investigators fail to report studies they have undertaken. Unfortunately guideline panels must often guess about the likelihood of publication bias. A prototypical situation that should elicit suspicion of publication bias occurs when published evidence is limited to a small number of trials, all of which are funded by industry. For example, 14 trials of flavonoids in patients with haemorrhoids have shown apparent large benefits, but enrolled a total of only 1432 patients.18 The heavy involvement of sponsors in most of these trials raises questions of whether unpublished trials suggesting no benefit exist.

A particular body of evidence can have more than one of these limitations, and the greater the limitations the lower the quality of the evidence. For example, despite the availability of five randomised trials only very low quality evidence exists for the effect of alternative surgical procedures in patients with pancreatic carcinoma on the incidence of gastric emptying problems (table 1).19
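The way limitations accumulate can be sketched as a tally on the four level scale. This is a simplified illustration, not a substitute for panel judgment: the function name `rate_quality` is hypothetical, and the downgrade counts are read from the (−1) entries in table 1.

```python
LEVELS = ["very low", "low", "moderate", "high"]
STARTING_LEVEL = {"randomised trial": 3, "observational study": 1}


def rate_quality(design: str, downgrades: int = 0, upgrades: int = 0) -> str:
    """Start from the study design, then move down or up one level per factor."""
    index = STARTING_LEVEL[design] - downgrades + upgrades
    return LEVELS[max(0, min(index, len(LEVELS) - 1))]


# Number of (−1) entries per outcome in table 1 (all randomised trials).
table1_downgrades = {
    "five year mortality": 1,
    "in-hospital mortality": 2,
    "blood transfusions": 1,
    "biliary leaks": 2,
    "hospital stay": 2,
    "delayed gastric emptying": 3,
}
table1_quality = {
    outcome: rate_quality("randomised trial", downgrades=n)
    for outcome, n in table1_downgrades.items()
}
```

The resulting ratings reproduce the quality column of table 1, including the very low rating for delayed gastric emptying.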

Three factors can increase the quality of evidence

Although well done observational studies generally yield low quality evidence, in unusual circumstances they may produce moderate or even high quality evidence (see box).20

Firstly, when methodologically strong observational studies yield large or very large and consistent estimates of the magnitude of a treatment effect, we may be confident about the results. In those situations, although the observational studies are likely to have provided an overestimate of the true effect, the weak study design is unlikely to explain all of the apparent benefit.

The larger the magnitude of effect, the stronger becomes the evidence. For example, a meta-analysis of observational studies showed that bicycle helmets reduce the risk of head injuries in cyclists involved in a crash by a large margin (odds ratio 0.31, 95% confidence interval 0.26 to 0.37).21 This large effect suggests a rating of moderate quality evidence. A meta-analysis of observational studies evaluating the impact of warfarin prophylaxis in cardiac valve replacement found that the relative risk for thromboembolism with warfarin was 0.17 (95% confidence interval 0.13 to 0.24).22 This very large effect suggests a rating of high quality evidence.

Secondly, on occasion all plausible biases from observational studies may be working to underestimate the true treatment effect. If, for instance, only sicker patients receive an experimental intervention or exposure, yet the patients receiving it still fare better, the actual effect of the intervention or exposure is likely to be larger than the data suggest. For example, a rigorous systematic review of observational studies that included a total of 38 million patients found higher death rates in private for profit hospitals compared with private not for profit hospitals. Biases related to different disease severity in patients in the two hospital types, and the spillover effect from well insured patients would both lead to estimates in favour of for profit hospitals.23 Therefore the evidence from these observational studies might be considered as of moderate quality rather than low quality; that is, the effect is likely to be at least as large as was observed and may be larger.

Thirdly, the presence of a dose-response gradient may increase confidence in the findings of observational studies and thereby increase the assigned quality of evidence. For example, the observation that, in patients receiving anticoagulation with warfarin, there is a dose-response gradient between higher levels of the international normalised ratio and an increased risk of bleeding increases confidence that supratherapeutic anticoagulation levels increase the risk of bleeding.24
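The upgrading for magnitude of effect in the helmet and warfarin examples can be sketched as follows. The text gives no numeric cut-offs, so the thresholds here are an assumption borrowed from commonly cited GRADE guidance (relative effect below 0.5 or above 2 counts as large, below 0.2 or above 5 as very large). The function names are hypothetical, and the helmet figure is an odds ratio treated here like a relative risk.

```python
LEVELS = ["very low", "low", "moderate", "high"]


def magnitude_upgrade(relative_effect: float) -> int:
    """Levels to upgrade for effect size, using ASSUMED cut-offs of 0.5 and 0.2."""
    if relative_effect <= 0:
        raise ValueError("relative effect must be positive")
    folded = min(relative_effect, 1 / relative_effect)  # treat 4.0 like 0.25
    if folded < 0.2:
        return 2  # very large effect
    if folded < 0.5:
        return 1  # large effect
    return 0


def observational_quality(upgrades: int) -> str:
    """Observational studies start at low and can rise no higher than high."""
    return LEVELS[min(1 + upgrades, len(LEVELS) - 1)]


helmets = observational_quality(magnitude_upgrade(0.31))   # odds ratio from the text
warfarin = observational_quality(magnitude_upgrade(0.17))  # relative risk from the text
```

With these assumed cut-offs the helmet evidence is rated moderate and the warfarin evidence high, in line with the ratings suggested in the text.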

Critical outcomes determine the rating of evidence quality across outcomes

Recommendations depend on evidence for several patient important outcomes and the quality of evidence for each of those outcomes. This presents two challenges. Firstly, how should guideline developers decide which outcomes are important enough to consider, and which are critical? We suggest that guideline developers should explicitly consider these problems, taking into account the views of those affected.

Secondly, how should the quality of evidence be rated across outcomes if quality differs? This occurred in the Whipple procedure example, in which the evidence varied from moderate to very low quality (table 1).

In cases such as the Whipple procedure example, guideline developers should consider whether undesirable consequences of therapy are important but not critical to the decision on the optimal management strategy, or whether they are critical. If an outcome for which evidence is of lower quality is critical for decision making then the rating of quality of the evidence across outcomes must reflect this lower quality evidence. If the outcome for which evidence is lower quality is important but not critical, the GRADE approach suggests a rating across outcomes that reflects the higher quality evidence from the critical outcomes. Thus for the Whipple procedure example, if those making recommendations thought that gastric emptying problems were critical, the rating of evidence quality across outcomes would be very low. If gastric emptying was important but not critical, the quality rating across outcomes would be low (on the basis of results from the clearly critical perioperative mortality) despite the presence of moderate quality evidence on survival at five years (table 1).
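The rule for rating across outcomes amounts to taking the lowest quality among the outcomes judged critical. A minimal sketch using the ratings from table 1; the function name is hypothetical, and which outcomes count as critical is a panel judgment, so the two sets below are illustrative.

```python
LEVELS = ["very low", "low", "moderate", "high"]

# Quality ratings per outcome, as reported in table 1.
table1_quality = {
    "five year mortality": "moderate",
    "in-hospital mortality": "low",
    "blood transfusions": "moderate",
    "biliary leaks": "low",
    "hospital stay": "low",
    "delayed gastric emptying": "very low",
}


def overall_quality(quality_by_outcome: dict, critical_outcomes: set) -> str:
    """Overall rating is the lowest rating among the critical outcomes."""
    if not critical_outcomes:
        raise ValueError("at least one critical outcome is required")
    return min((quality_by_outcome[o] for o in critical_outcomes), key=LEVELS.index)


# Illustrative judgment 1: only the mortality outcomes are critical.
mortality_only = overall_quality(
    table1_quality, {"five year mortality", "in-hospital mortality"}
)

# Illustrative judgment 2: gastric emptying problems are also critical.
with_gastric_emptying = overall_quality(
    table1_quality,
    {"five year mortality", "in-hospital mortality", "delayed gastric emptying"},
)
```

The first judgment gives low and the second very low, matching the two scenarios described above.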

Evidence profiles provide simple, transparent summaries

Busy clinicians—and busy patients and policy makers—require succinct, transparent, easily digested summaries of evidence. The GRADE process facilitates the creation of such summaries. Table 1, which presents the relative effect of the standard Whipple procedure compared with more limited resection (pylorus preservation) for patients with pancreatic carcinoma, informs us that more limited resection may decrease blood loss and perioperative mortality without increasing long term adverse outcomes, but that the evidence remains limited.

Conclusion

GRADE provides a clearly articulated, comprehensive, and transparent methodology for rating and summarising the quality of evidence supporting management recommendations. Although judgments will always be required for each step, the systematic and transparent GRADE approach facilitates scrutiny of and debate about those judgments.

Contributors: All authors, including the members of the GRADE Working Group, contributed to the development of the ideas in the manuscript and read and approved the manuscript. GG wrote the first draft and collated comments from authors and reviewers for subsequent iterations. He is guarantor for this manuscript. All authors listed in the byline contributed ideas about structure and content, provided examples, and reviewed successive drafts of the manuscript and provided feedback.

The members of the GRADE Working Group are Phil Alderson, Pablo Alonso-Coello, Jeff Andrews, David Atkins, Hilda Bastian, Hans de Beer, Jan Brozek, Francoise Cluzeau, Jonathan Craig, Ben Djulbegovic, Yngve Falck-Ytter, Beatrice Fervers, Signe Flottorp, Paul Glasziou, Gordon H Guyatt, Margaret Haugh, Robin Harbour, Mark Helfand, Sue Hill, Roman Jaeschke, Katharine Jones, Ilkka Kunnamo, Regina Kunz, Alessandro Liberati, Merce Marzo, James Mason, Jacek Mrukowics, Susan Norris, Andrew D Oxman, Vivian Robinson, Holger J Schünemann, Tessa Tan Torres, David Tovey, Peter Tugwell, Mariska Tuut, Helena Varonen, Gunn E Vist, Craig Wittington, John Williams, and James Woodcock.

Funding: No specific funding.

Competing interests: All authors are involved in the dissemination of GRADE, and GRADE’s success has a positive influence on their academic career. Authors listed in the byline have received travel reimbursement and honorariums for presentations that included a review of GRADE’s approach to rating quality of evidence and grading recommendations. GHG acts as a consultant to UpToDate; his work includes helping UpToDate in their use of GRADE. HJS is documents editor and methodologist for the American Thoracic Society; one of his roles in these positions is helping implement the use of GRADE. He is supported by “The human factor, mobility and Marie Curie actions scientist reintegration European Commission grant: IGR 42192—GRADE.”

Provenance and peer review: Not commissioned; externally peer reviewed.

This is the second in a series of five articles that explain the GRADE system for rating the quality of evidence and strength of recommendations

References

- 1.Atkins D, Best D, Briss PA, Eccles M, Falck-Ytter Y, Flottorp S, et al. Grading quality of evidence and strength of recommendations. BMJ 2004;328:1490-4.

- 2.Oxman AD, Guyatt GH. Guidelines for reading literature reviews. CMAJ 1988;138:697-703.

- 3.Guyatt G, Montori V, Devereaux PJ, Schunemann H, Bhandari M. Patients at the center: in our practice, and in our use of language. ACP J Club 2004;140:A11-2.

- 4.Ioannidis JP, Lau J. Completeness of safety reporting in randomized trials: an evaluation of 7 medical areas. JAMA 2001;285:437-43.

- 5.Ioannidis JP, Lau J. Improving safety reporting from randomised trials. Drug Saf 2002;25:77-84.

- 6.Ioannidis JP, Evans SJ, Gotzsche PC, O’Neill RT, Altman DG, Schulz K, et al. Better reporting of harms in randomized trials: an extension of the CONSORT statement. Ann Intern Med 2004;141:781-8.

- 7.Bent S, Padula A, Avins AL. Brief communication: better ways to question patients about adverse medical events: a randomized, controlled trial. Ann Intern Med 2006;144:257-61.

- 8.Devereaux PJ, Anderson DR, Gardner MJ, Putnam W, Flowerdew GJ, Brownell BF, et al. Differences between perspectives of physicians and patients on anticoagulation in patients with atrial fibrillation: observational study. BMJ 2001;323:1218-22.

- 9.Schunemann H, Fretheim A, Oxman AD. Improving the use of research evidence in guideline development: 10. Integrating values and consumer involvement. Health Res Policy Syst 2006;5:4-22.

- 10.Man-Son-Hing M, Gage BF, Montgomery AA, Howitt A, Thomson R, Devereaux PJ, et al. Preference-based antithrombotic therapy in atrial fibrillation: implications for clinical decision making. Med Decis Making 2005;25:548-59.

- 11.Guyatt GH, Haynes RB, Jaeschke RZ, Cook DJ, Green L, Naylor CD, et al. Users’ guides to the medical literature: XXV. Evidence-based medicine: principles for applying the users’ guides to patient care. Evidence-Based Medicine Working Group. JAMA 2000;284:1290-6.

- 12.Schunemann HJ, Jaeschke R, Cook DJ, Bria WF, El-Solh AA, Ernst A, et al. An official ATS statement: grading the quality of evidence and strength of recommendations in ATS guidelines and recommendations. Am J Respir Crit Care Med 2006;174:605-14.

- 13.Fletcher SW, Spitzer WO. Approach of the Canadian Task Force to the periodic health examination. Ann Intern Med 1980;92(2 Pt 1):253-4.

- 14.Guyatt G, Cook D, Devereaux PJ, Meade M, Straus S. Therapy. In: Guyatt G, Rennie D, eds. The users’ guides to the medical literature: a manual for evidence-based clinical practice. Chicago: AMA publications, 2002.

- 15.Montori VM, Devereaux PJ, Adhikari NK, Burns KE, Eggert CH, Briel M, et al. Randomized trials stopped early for benefit: a systematic review. JAMA 2005;294:2203-9.

- 16.Chong BH, Gallus AS, Cade JF, Magnani H, Manoharan A, Oldmeadow M, et al. Prospective randomised open-label comparison of danaparoid with dextran 70 in the treatment of heparin-induced thrombocytopaenia with thrombosis: a clinical outcome study. Thromb Haemost 2001;86:1170-5.

- 17.Caldwell DM, Ades AE, Higgins JP. Simultaneous comparison of multiple treatments: combining direct and indirect evidence. BMJ 2005;331:897-900.

- 18.Alonso-Coello P, Zhou Q, Martinez-Zapata MJ, Mills E, Heels-Ansdell D, Johanson JF, et al. Meta-analysis of flavonoids for the treatment of haemorrhoids. Br J Surg 2006;93:909-20.

- 19.Karanicolas PJ, Davies E, Kunz R, Briel M, Koka HP, Payne DM, et al. The pylorus: take it or leave it? Systematic review and meta-analysis of pylorus-preserving versus standard Whipple pancreaticoduodenectomy for pancreatic or periampullary cancer. Ann Surg Oncol 2007;14:1825-34.

- 20.Glasziou P, Chalmers I, Rawlins M, McCulloch P. When are randomised trials unnecessary? Picking signal from noise. BMJ 2007;334:349-51.

- 21.Thompson DC, Rivara FP, Thompson R. Helmets for preventing head and facial injuries in bicyclists. Cochrane Database Syst Rev 2000;(2):CD001855.

- 22.Cannegieter SC, Rosendaal FR, Briet E. Thromboembolic and bleeding complications in patients with mechanical heart valve prostheses. Circulation 1994;89:635-41.

- 23.Devereaux PJ, Schunemann HJ, Ravindran N, Bhandari M, Garg AX, Choi PT, et al. Comparison of mortality between private for-profit and private not-for-profit hemodialysis centers: a systematic review and meta-analysis. JAMA 2002;288:2449-57.

- 24.Levine MN, Raskob G, Beyth RJ, Kearon C, Schulman S. Hemorrhagic complications of anticoagulant treatment: the Seventh ACCP Conference on Antithrombotic and Thrombolytic Therapy. Chest 2004;126(3 suppl):S287-310.