Abstract

We present a general method for including prior knowledge in a nonnegative matrix factorization (NMF), based on Gaussian process priors. We assume that the nonnegative factors in the NMF are linked by a strictly increasing function to an underlying Gaussian process specified by its covariance function. This allows us to find NMF decompositions that agree with our prior knowledge of the distribution of the factors, such as sparseness, smoothness, and symmetries. The method is demonstrated with an example from chemical shift brain imaging.

1. Introduction

Nonnegative matrix factorization (NMF) [1, 2] is a recent method for factorizing a matrix as the product of two matrices, in which all elements are nonnegative. NMF has found widespread application in many different areas including pattern recognition [3], clustering [4], dimensionality reduction [5], and spectral analysis [6, 7]. Many physical signals, such as pixel intensities, amplitude spectra, and occurrence counts, are naturally represented by nonnegative numbers. In the analysis of mixtures of such data, nonnegativity of the individual components is a reasonable constraint. Recently, a very simple algorithm [8] for computing the NMF was introduced. This has initiated much research aimed at developing more robust and efficient algorithms.

Efforts have been made to enhance the quality of the NMF by adding further constraints to the decomposition, such as sparsity [9], spatial localization [10, 11], and smoothness [11, 12], or by extending the model to be convolutive [13, 14]. Many extended NMF methods are derived by adding appropriate constraints and penalty terms to a cost function. Alternatively, NMF methods can be derived in a probabilistic setting, based on the distribution of the data [6, 15–17]. These approaches have the advantage that the underlying assumptions in the model are made explicit.

In this paper, we present a general method for using prior knowledge to improve the quality of the solutions in NMF. The method is derived in a probabilistic setting, and it is based on defining prior probability distributions of the factors in the NMF model in a Gaussian process framework. We assume that the nonnegative factors in the NMF are linked by a strictly increasing function to an underlying Gaussian process, specified by its covariance function. By specifying the covariance of the underlying process, we can compute NMF decompositions that agree with our prior knowledge of the factors, such as sparseness, smoothness, and symmetries. We refer to the proposed method as nonnegative matrix factorization with Gaussian process priors, or GPP-NMF for short.

2. NMF with Gaussian Process Priors

In the following, we derive a method for including prior information in an NMF decomposition by assuming Gaussian process priors (for a general introduction to Gaussian processes, see, e.g., Rasmussen and Williams [18].) In our approach, the Gaussian process priors are linked to the nonnegative factors in the NMF by a suitable link function. To setup the notation, we start by deriving the standard NMF method as a maximum likelihood (ML) estimator and then move on to the maximum a posteriori (MAP) estimator. Then, we discuss Gaussian process priors and introduce a change of variable that gives better optimization properties. Finally, we discuss the selection of the link function.

2.1. Maximum Likelihood NMF

The NMF problem can be stated as

| (1) |

where X ∈ ℝK×L is a data matrix that is factorized as the product of two element-wise nonnegative matrices, D ∈ ℝ+ K×M and H ∈ ℝ+ M×L, where ℝ+ denotes the nonnegative reals. The matrix N ∈ ℝK×L is the residual noise.

There exists a number of different algorithms [8, 15–17, 19–21] for computing this factorization, some of which can be viewed as maximum likelihood methods under certain assumptions about the distribution of the data. For example, least squares NMF corresponds to i.i.d. Gaussian noise [17], and Kullback-Leibler NMF corresponds to a Poisson process [6].

The ML estimate of D and H is given by

| (2) |

where ℒ X∣D,H(D, H) is the negative log likelihood of the factors.

Example 1 (least squares NMF). —

An example of a maximum likelihood NMF is the least squares estimate. If the noise is i.i.d. Gaussian with variance σN 2, the likelihood of the factors D and H can be written as

(3) The negative log likelihood, which serves as a cost function for optimization, is then

(4) where we use the proportionality symbol to denote equality subject to an additive constant. To compute a maximum likelihood estimate of D and H, the gradient of the negative log likelihood is useful:

(5) and the gradient with respect to D, which is easy to derive, is similar because of the symmetry of the NMF problem.

The ML estimate can be computed by multiplicative update rules based on the gradient [8], projected gradient descent [19], alternating least squares [20], Newton-type methods [21], or any other appropriate constrained optimization method.

2.2. Maximum a Posteriori NMF

In this paper, we propose a method to build prior knowledge into the solution of the NMF problem. We choose a prior distribution pD,H(D, H) over the factors in the model, that captures our prior beliefs and uncertainties of the solution we seek. We then compute the maximum a posteriori (MAP) estimate of the factors. Using Bayes' rule, the posterior is given by

| (6) |

Since the numerator is constant, the negative log posterior is the sum of a likelihood term that penalizes model misfit, and a prior term that penalizes solutions that are unlikely under the prior:

| (7) |

The MAP estimate of D and H is

| (8) |

and it can again be computed using any appropriate optimization algorithm.

Example 2 (nonnegative sparse coding). —

An example of a MAP NMF is nonnegative sparse coding (NNSC) [9, 22], where the prior on H is i.i.d. exponential, and the prior on D is flat with each column constrained to have unit norm

(9) where ||D k|| is the Euclidean norm of the kth column of D. This corresponds to the following penalty term in the cost function:

(10) The gradient of the negative log prior with respect to H is then

(11) and the gradient with respect to D is zero, with the further normalization constraint given in (9).

2.3. Gaussian Process Priors

In the following, we derive the MAP estimate under the assumption that the nonnegative matrices D and H are independently determined by a Gaussian process [18] connected by a link function. The Gaussian process framework provides a principled and practical approach to the specification of the prior probability distribution of the factors in the NMF model. The prior is specified in terms of two functions: (i) a covariance function that describes corellations in the factors and (ii) a link function, that transforms the Gaussian process prior into a desired distribution over the nonnegative reals.

We assume that D and H are independent, so that we may write

| (12) |

In the following, we consider only the prior for H, since the treatment of D is equivalent due to the symmetry of the NMF problem. We assume that there is an underlying variable vector, h ∈ ℝLM, which is zero-mean multivariate Gaussian with covariance matrix Σ h:

| (13) |

and linked to H via a link function, fh: ℝ+ → ℝ as

| (14) |

which operates element-wise on its input. The vec (⋅) function in the expression stacks its matrix operand column by column. The link function should be strictly increasing, which ensures that the inverse exists. Later, we will further assume that the derivatives of fh and fh −1 exist. Under these assumptions, the prior over H is given by (using the change of variables theorem)

| (15) |

where 𝒥(⋅) denotes the Jacobian determinant and fh′ is the derivative of the link function. The negative log prior is then

| (16) |

This expression can be combined with an appropriate likelihood function, such as the least-squares likelihood in (4) and can be optimized to yield the MAP solution; however, in our experiments, we found that a more simple and robust algorithm can be obtained by making a change of variable as explained next.

2.4. Change of Optimization Variable

Instead of optimizing over the nonnegative factors D and H, we introduce the variables δ and η, which are related to D and H by

| (17) |

where the vec−1(⋅) function maps its vector input into a matrix of appropriate size. The matrices C d and C h are the matrix square roots (Cholesky decompositions) of the covariance matrices Σ d and Σ h, such that δ and η are standard i.i.d. Gaussian.

This change of variable serves two purposes. First of all, we found that optimizing over the transformed variables was faster, more robust, and less prone to getting stuck in local minima. Second, the transformed variables are not constrained to be nonnegative, which allows us to use existing unconstrained optimization methods to compute their MAP estimate.

The prior distribution of the transformed variable η is

| (18) |

and the negative log prior is

| (19) |

To compute the MAP estimate of the transformed variables, we must combine this expression for the prior (and a similar expression for the prior of δ) with a likelihood function, in which the same change of variable is made

| (20) |

Then, the MAP solution can be found by optimizing over δ and η as

| (21) |

and, finally, estimates of D and H can be computed using (17).

Example 3 (least squares nonnegative matrix factorization with Gaussian process priors (GPP-NMF)). —

If we use the least squares likelihood in (4), the posterior distribution in (20) is given by

(22) The MAP estimate of δ and η is found by minimizing this expression, for which the derivative is useful

(23) where ⊙ denotes the Hadamard (element-wise) product. The derivative with respect to δ is similar due to the symmetry of the NMF problem.

2.5. Link Function

Any strictly increasing link function that maps the nonnegative reals to the real line can be used in the proposed framework; however, in order to have a better probabilistic interpretation of the prior distribution, we propose a simple principle for choosing the link function. We choose the link function such that fh −1 maps the marginal distribution of the elements of the underlying Gaussian process vector h into a specifically chosen marginal distribution of the elements of H. Such an inverse function can be found as fh −1(h i) = PH −1(Ph(h i)), where P(⋅) denotes the marginal cumulative distribution functions (CDFs).

Since the marginals of a Gaussian process are Gaussian, Ph(h i) is the Gaussian (CDF), and, using (13), the inverse link function is given by

| (24) |

where σi 2 is the ith diagonal element of Σ h and Φ(⋅) is the error function.

Example 4 (exponential-to-Gaussian link function). —

If we choose to have exponential marginals in H, as in NNSC described in Example 2, we select PH as the exponential CDF. The inverse link function is then

(25) where λ is an inverse scale parameter. The derivative of the inverse link function, which is needed for the parameter estimation, is given by

(26)

Example 5 (rectified-Gaussian-to-Gaussian link function). —

Another interesting nonnegative distribution is the rectified Gaussian given by

(27) Using this pdf in (24), the inverse link function is

(28) and the derivative of the inverse link function is

(29)

2.6. Summary of the GPP-NMF Method

The GPP-NMF method can be summarized in the following steps.

Choose a suitable negative log-likelihood function ℒ X∣D,H(D,H) based on knowledge of the distribution of the data or the residual.

For each of the nonnegative factors D and H, choose suitable link and covariance functions according to your prior beliefs. If necessary, draw samples from the prior distribution to examine its properties.

Compute the MAP estimate of δ and η by (21) using any suitable unconstrained optimization algorithm.

Compute D and H using (17).

Our Matlab implemention of the GPP-NMF method is available online [23].

3. Experimental Results

We will demonstrate the proposed method on two examples, first a toy example, and second an example taken from the chemical shift brain imaging literature.

In our experiments, we use the least squares GPP-NMF described in Example 3 and the link functions described in Examples 4-5. The specific optimization method used to compute the GPP-NMF MAP estimate is not the topic of this paper, and any unconstrained optimization algorithm could in principle be used. In our experiments, we used a simple gradient descent with line search to perform a total of 1000 alternating updates of δ and η, after which the algorithm had converged. For details of the implementation, see the accompanying Matlab code [23].

3.1. Toy Example

We generated a 100 × 200 data matrix, Y, by taking a random sample from the GPP-NMF model with two factors. We chose the generating covariance function for both δ and η as a Gaussian radial basis function (RBF)

| (30) |

where i and j are two sample indices, and the length-scale parameter, which determines the smoothness of the factors, was β 2 = 100. We set the covariance between the two factors to zero, such that the factors were uncorrelated. For the matrix D, we used the rectified-Gaussian-to-Gaussian link function with s = 1; and for H, we used the exponential-to-Gaussian link function with λ = 1. Finally, we added independent Gaussian noise with variance σN 2 = 25, which resulted in a signal-to-noise ratio of approximately −7 dB. The generated data matrix is shown in Figure 1.

Figure 1.

Toy example data matrix (upper left), underlying noise-free nonnegative data (upper right), and estimates using the four methods described in the text. The data has a fairly large amount of noise, and the underlying nonnegative factors are smooth in both directions. The LS-NMF and CNMF decompositions are nonsmooth since these methods are not model of correlations in the factors. The GPP-NMF, which uses a smooth prior, finds a smooth solution. When using the correct prior, the soulution is very close to the true underlying data.

We then decomposed the generated data matrix using the following four different methods:

LS-NMF: standard least squares NMF [8]. This algorithm does not allow negative data points, so these were set to zero in the experiment.

CNMF: constrained NMF [6, 7], which is a least squares NMF algorithm that allows negative observations.

GPP-NMF: correct prior: the proposed method with correct link functions, covariance matrix, and parameter values.

GPP-NMF: incorrect prior: to illustrate the sensitivity of the method to prior assumptions, we evaluated the proposed method with incorrect prior assumptions: we switched the link functions, such that for D we used the exponential-to-Gaussian, and for H we used the rectified-Gaussian-to-Gaussian. We used an RBF covariance function with β 2 = 10 and β 2 = 1000 for D and H, respectively.

The results of the decompositions are shown as reconstructed data matrices in Figure 1. All four methods find solutions that visually appear to fit the underlying data. Both LS-NMF and CNMF find nonsmooth solutions, whereas the two GPP-NMF results are smooth in accordance with the priors. In the GPP-NMF with incorrect prior, the dark areas (high-pixel intensities) appear too wide in the first axis direction and too narrow in the section axis direction, due to the incorrect setting of the covariance function. The GPP-NMF with correct prior is visually almost equal to the true underlying data.

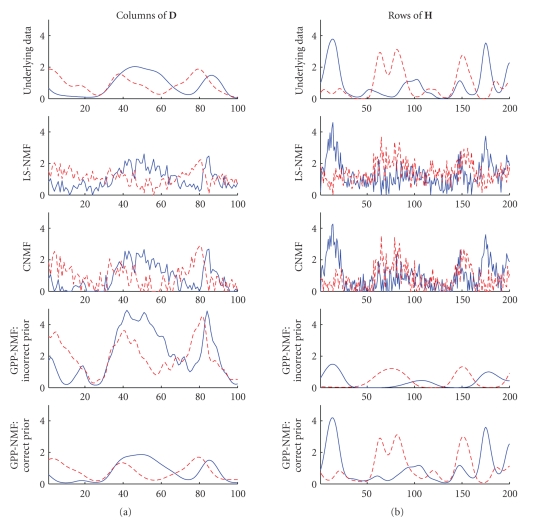

Plots of the estimated factors are show in Figure 2. The factors estimated by the LS-NMF and the CNMF methods appear noisy and are nonsmooth, whereas the factors estimated by the GPP-NMF are smooth. The factors estimated by the LS-NMF method have a positive bias, because of the truncation of negative data. The GPP-NMF with incorrect prior has too many local extrema in the D factor and too few in the H factor due to the incorrect covariance functions. There are only minor difference between the result of the GPP-NMF with the correct prior and the underlying factors.

Figure 2.

Underlying nonnegative factors in the toy example: columns of D (left) and rows of H (right). The factors found by the LS-NMF and the CNMF algorithms are noisy, whereas the factors found by the GPP-NMF method are smooth. When using the correct prior, the factors found are very similar to the true factors.

Measures of root mean squared error (RMSE) of the four decompositions are given in Figure 3. All four methods fit the noisy data almost equally well. (Note that, due to the additive noise with variance 25, a perfect fit to the underlying factors would result in an RMSE of 5 with respect to the noisy data.) The LS-NMF fits the data worst due to the truncation of negative data points, and the CNMF fits the data best, due to overfitting. With respect to the noise-free data and the underlying factors, the RMSE is worst for the LS-NMF and best for the GPP-NMF with correct prior. The GPP-NMF with incorrect prior is better than both LS-NMF and CNMF in this case. This shows that in this situation it is better to use a prior which is not perfectly correct, compared to using no prior as in the LS-NMF and CNMF methods, (which corresponds to a flat prior over the nonnegative reals and no correlations).

Figure 3.

Toy example: root mean squared error (RMSE) with respect to the noisy data, the underlying noise-free data, and the true underlying nonnegative factors. The CNMF solution fits the noisy data slightly better, but the GPP-NMF solution fits the underlying data much better.

3.2. Chemical Shift Srain Imaging Example

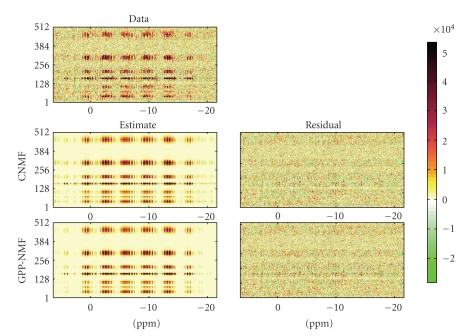

Next, we demonstrate the GPP-NMF method on 1H decoupled 31P chemical shift imaging data of the human brain. We use the data set from Ochs et al. [24], which has also been analyzed by Sajda et al. [6, 7]. The data set, which is shown in Figure 4, consists of 512 spectra measured on an 8 × 8 × 8 grid in the brain.

Figure 4.

Brain imaging data matrix (top) along with the estimated decomposition and residual for the CNMF (middle) and GPP-NMF (bottom) methods. In this view, the results of the two decompositions are very similar, the data appears to be modeled equally well and the residuals are similar in magnitude.

Ochs et al. [24] use PCA to determine that the data set is adequately described by two sources (which correspond to brain and muscle tissue). They propose a bilinear Bayesian approach, in which they use a smooth prior over the constituent spectra, and force to zero the amplitude of the spectral shape corresponding to muscle tissue at 12 positions deep inside the head. Their approach produces physically plausible results, but it is computationally very expensive and takes several hours to compute.

Sajda et al. [6, 7] propose an NMF approach that is reported also to produce physically plausible results. Their method is several orders of magnitude faster, taking less than a second to compute. The disadvantage of the method of Sajda et al. compared to the Bayesian approach of Ochs et al. is that it provides no mechanism for using prior knowledge to improve the solution.

The GPP-NMF approach we propose in this paper bridges the gap between the two previous approaches, in the sense that it is a relatively fast NMF approach, in which priors over the factors can be specified. These priors are specified by the choice of the link and covariance functions. We used prior predictive sampling to find reasonable settings of the the function parameters: we drew random samples from the prior distribution and examined properties of the factors and reconstructed data. We then manually adjusted the parameters of the prior to match our prior beliefs. An example of a random draw from the prior distribution is shown in Figure 5, with the parameters set as described below.

Figure 5.

Brain imaging data: random draw from the prior distribution with the parameters set as described in the text. The prior distribution of the constituent spectra (left) is exponential and smooth, and the spatial distribution (right) in the brain is exponential, smooth, and has a left-to-right symmetry.

We assumed that the factors are uncorrelated, so the covariance between factors is zero. We used a Gaussian RBF covariance function for the constituent spectra, with a length scale of β = 0.3 parts per million (ppm), and we used the exponential-to-Gaussian link function with λd = 1. This gave a prior for the spectra that is sparse with narrow smooth peaks. For the amplitude at the 512 voxels in the head, we used a Gaussian RBF covariance function on the 3D voxel indices, with length scale β = 2. Furthermore, we centered the left-to-right coordinate axis in the middle of the brain, and computed the RBF kernel on the absolute value of this coordinate, so that a left-to-right symmetry was introduced in the prior distribution. Again, we used the exponential-to-Gaussian link function, and we chose λh = 2 ⋅ 10−4 to match the overall magnitude of the data. This gave a prior for the amplitude distribution that is sparse, smooth, and symmetric. The noise variance was set to σN 2 = 108 which corresponds to the noise level in the data set.

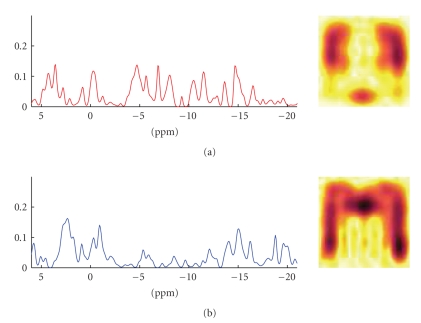

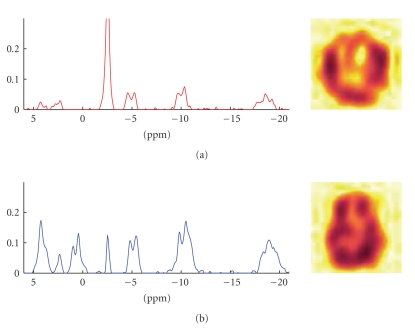

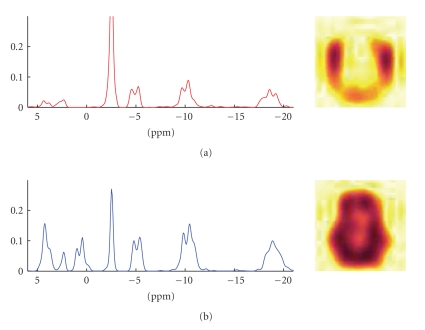

We then decomposed the data set using the proposed GPP-NMF algorithm and, for comparison, reproduced the results of Sajda et al. [7] using their CNMF method. The results of the experiments are shown in Figure 4. An example of a random draw from the prior distribution is shown in Figure 5. The results of the CNMF is shown in Figure 6, and the results of the GPP-NMF is shown in Figure 7. The figures show the constituent spectra and the fifth axial slice of the spatial distribution of the spectra. The 8 × 8 spatial distributions are smoothed in the illustration, similar to the way the results are visualized in the literature.

Figure 6.

CNMF decomposition result. The recovered spectra are physically plausible, and the spatial distribution in the brain for the muscle (top) and brain (bottom) tissue is somewhat separated. Muscle tissue is primarily found near the edge of the skull, whereas brain tissue is primarily found at the inside of the head.

Figure 7.

GPP-NMF decomposition result. The recovered spectra are very similar to the spectra found by the CNMF method, but they are slightly more smooth. The spatial distribution in the brain is highly separated between brain and muscle tissue, and it is more symmetric than the CNMF solution.

The results show that both methods give physically plausible results. The main difference is that the spatial distribution of the spectra corresponding to muscle and brain tissue is much more separated in the GPP-NMF result, which is due to the exponential, smooth, and symmetric prior distribution. By including prior information, we obtain a solution, where the factor corresponding to muscle tissue is clearly located on the edge of the skull.

4. Conclusions

We have introduced a general method for exploiting prior knowledge in nonnegative matrix factorization, based on Gaussian process priors, linked to the nonnegative factors by a link function. The method can be combined with any existing NMF cost function that has a probabilistic interpretation, and any existing unconstrained optimization algorithm can be used to compute the maximum a posteriori estimate.

Experiments on toy data show that with a suitable selection of the prior distribution of the nonnegative factors, the GPP-NMF method gives much better results in terms of estimating the true underlying factors, both when compared to traditional NMF and CNMF.

Experiments on chemical shift brain imaging data show that the GPP-NMF method can be successfully used to include prior knowledge of the spectral and spatial distribution, resulting in better spatial separation between spectra corresponding to muscle and brain tissue.

Acknowledgments

We would like to thank Paul Sajda and Truman Brown for making the brain imaging data available to us. This research was supported by the Intelligent Sound project, Danish Technical Research Council Grant no. 26–02–0092 and partly supported also by the European Commission through the sixth framework IST Network of Excellence: Pattern Analysis, Statistical Modelling and Computational Learning (PASCAL), Contract no. 506778.

References

- 1.Paatero P, Tapper U. Positive matrix factorization: a nonnegative factor model with optimal utilization of error estimates of data values. Environmetrics. 1994;5(2):111–126. [Google Scholar]

- 2.Lee DD, Seung HS. Learning the parts of objects by nonnegative matrix factorization. Nature. 1999;401(6755):788–791. doi: 10.1038/44565. [DOI] [PubMed] [Google Scholar]

- 3.Weixiang L, Nanning Z, Qubo Y. Nonnegative matrix factorization and its applications in pattern recognition. Chinese Science Bulletin. 2006;51(1):7–18. [Google Scholar]

- 4.Ding C, He X, Simon HD. On the equivalence of nonnegative matrix factorization and spectral clustering. In: Proceedings of the 5th SIAM International Conference on Data Mining; April 2005; Newport Beach, Calif, USA. pp. 606–610. [Google Scholar]

- 5.Tsuge S, Shishibori M, Kuroiwa S, Kita K. Dimensionality reduction using nonnegative matrix factorization for information retrieval. In: Proceedings of IEEE International Conference on Systems, Man and Cybernetics, vol. 2; October 2001; Tucson, Ariz, USA. pp. 960–965. [Google Scholar]

- 6.Sajda P, Du S, Parra LC. Recovery of constituent spectra using nonnegative matrix factorization. In: Wavelets: Applications in Signal and Image Processing, vol. 5207; August 2003; San Diego, Calif, USA. pp. 321–331. Proceedings of SPIE. [Google Scholar]

- 7.Sajda P, Du S, Brown TR, Stoyanova R, Shungu DC, Mao X, Parra LC. Nonnegative matrix factorization for rapid recovery of constituent spectra in magnetic resonance chemical shift imaging of the brain. IEEE Transactions on Medical Imaging. 2004;23(12):1453–1465. doi: 10.1109/TMI.2004.834626. [DOI] [PubMed] [Google Scholar]

- 8.Lee DD, Seung HS. Algorithms for nonnegative matrix factorization. In: Proceedings of the 13th Annual Conference on Neural Information Processing Systems (NIPS '00); November-December 2000; Denver, Colo, USA. pp. 556–562. [Google Scholar]

- 9.Hoyer P. Nonnegative sparse coding. In: Proceedings of the 12th IEEE Workshop on Neural Networks for Signal Processing (NNSP '02); September 2002; Martigny, Switzerland. pp. 557–565. [Google Scholar]

- 10.Li SZ, Hou XW, Zhang HJ, Cheng QS. Learning spatially localized, parts-based representation. In: Proceedings of IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR '01), vol. 1; December 2001; Kauai, Hawaii, USA. pp. 207–212. [Google Scholar]

- 11.Chen Z, Cichocki A. Technical Report. Tokyo, Japan: Laboratory for Advanced Brain Signal Processing, RIKEN; 2005. Nonnegative matrix factorization with temporal smoothness and/or spatial decorrelation constraints. [Google Scholar]

- 12.Virtanen T. Sound source separation using sparse coding with temporal continuity objective. In: Proceedings of the International Computer Music Conference (ICMC '03); September-October 2003; Singapore. pp. 231–234. [Google Scholar]

- 13.Smaragdis P. Nonnegative matrix factor deconvolution; extraction of multiple sound sources from monophonic inputs. In: Proceedings of the 5th International Conference on Independent Component Analysis and Blind Signal Separation (ICA '04), vol. 3195; September 2004; Granada, Spain. Springer; pp. 494–499. Lecture Notes in Computer Science. [Google Scholar]

- 14.Schmidt MN, Mørup M. Nonnegative matrix factor 2-d deconvolution for blind single channel source separation. In: Proceedings of the 6th International Conference on Independent Component Analysis and Blind Signal Separation (ICA '06), vol. 3889; March 2006; Charleston, SC, USA. Springer; pp. 700–707. Lecture Notes in Computer Science. [Google Scholar]

- 15.Winther O, Petersen KB. Bayesian independent component analysis: variational methods and nonnegative decompositions. Digital Signal Processing. 2007;17(5):858–872. [Google Scholar]

- 16.Hofmann T. Probabilistic latent semantic indexing. In: Proceedings of the 22nd Annual International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR '99); August 1999; Berkeley, Calif, USA. pp. 50–57. [Google Scholar]

- 17.Cichocki A, Zdunek R, Amari S-I. Csiszar's divergences for nonnegative matrix factorization: family of new algorithms. In: Proceedings of the 6th International Conference on Independent Component Analysis and Blind Signal Separation (ICA '06), vol. 3889; March 2006; Charleston, SC, USA. Springer; pp. 32–39. Lecture Notes in Computer Science. [Google Scholar]

- 18.Rasmussen CE, Williams CKI. Gaussian Processes for Machine Learning. Cambridge, Mass, USA: MIT Press; 2006. [Google Scholar]

- 19.Lin C-J. Projected gradient methods for nonnegative matrix factorization. Neural Computation. 2007;19(10):2756–2779. doi: 10.1162/neco.2007.19.10.2756. [DOI] [PubMed] [Google Scholar]

- 20.Berry MW, Browne M, Langville AN, Pauca VP, Plemmons RJ. Algorithms and applications for approximate nonnegative matrix factorization. Computational Statistics & Data Analysis. 2007;52(1):155–173. [Google Scholar]

- 21.Kim D, Sra S, Dhillon IS. Fast Newton-type methods for the least squares nonnegative matrix approximation problem. In: Proceedings of the 7th SIAM International Conference on Data Mining; April 2007; Minneapolis, Minn, USA. pp. 343–354. [Google Scholar]

- 22.Eggert J, Körner E. Sparse coding and NMF. In: Proceedings of IEEE International Joint Conference on Neural Networks (IJCNN '04), vol. 4; July 2004; Budapest, Hungary. pp. 2529–2533. [Google Scholar]

- 23.Schmidt MN, Laurberg H. Nonnegative matrix factorization with Gaussian process priors. doi: 10.1155/2008/361705. Computational Intelligence and Neuroscience. In press. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Ochs MF, Stoyanova RS, Arias-Mendoza F, Brown TR. A new method for spectral decomposition using a bilinear Bayesian approach. Journal of Magnetic Resonance. 1999;137(1):161–176. doi: 10.1006/jmre.1998.1639. [DOI] [PubMed] [Google Scholar]