Abstract

Laboratory-supported, community-based local surveillance systems for influenza can act as early warning systems in identifying the initial entry points of different influenza strains into the community. Unfortunately, local health departments often have limited resources to implement this type of surveillance. We developed and evaluated an active, local influenza surveillance system in 3 metropolitan Denver, Colo, counties that enabled timely case ascertainment and strain identification at little cost. Our system detected cases 7 to 8 weeks earlier than Colorado’s electronic disease reporting system.

Laboratory-supported, community-based influenza surveillance can allow early detection of epidemics and circulating strains.1–6 Conducted locally, this type of surveillance can enhance early strain detection, as seen with the detection of a novel strain in Hong Kong in 1997.1 Unfortunately, many current sources of locally available influenza surveillance data, such as influenza-like illness and school absenteeism data, lack strain-specific information. Thus, national agencies have emphasized the need for rapidly expandable local surveillance systems to track influenza with strain-specific detail.7–9

Resources can be limited locally, and surveillance is often left to state or national programs.7 For years, the Colorado Department of Public Health and Environment conducted enhanced surveillance through mandatory reporting of all confirmed and probable influenza cases, which identified an early, more-severe influenza season in 2003. However, the state discontinued this surveillance in October 2004; currently only influenza-related hospitalizations and pediatric deaths are required to be reported to the state.10 To overcome this gap, the Tri-County Health Department (TCHD), a metropolitan Denver area health department, developed a local surveillance system. The objective was to create a low-cost, laboratory-supported system for early influenza detection and strain identification that could be rapidly expanded to cover the entire influenza season if needed. We describe this system and compare it with Colorado’s Electronic Disease Reporting System (CEDRS), the state’s surveillance system to which notifiable diseases, influenza-related hospitalizations, and pediatric deaths are reported.10,11

PROGRAM DESCRIPTION AND METHODS

TCHD developed 2 surveillance systems for comparison, an active system and a passive one. The case definition was laboratory based: a confirmed case had a positive culture or polymerase chain reaction test, and a probable case had a positive result on any other test, including rapid antigen, enzyme immunoassay, or direct immunofluorescence antibody tests. For all cases, TCHD obtained demographic, address, and laboratory information.
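The laboratory-based case definition above can be expressed as a small classification rule. The following is a minimal sketch only: the test labels, the `CaseStatus` enum, and the `classify_case` function are hypothetical names chosen for illustration and are not part of any TCHD or CEDRS software.

```python
from enum import Enum


class CaseStatus(Enum):
    CONFIRMED = "confirmed"
    PROBABLE = "probable"
    NOT_A_CASE = "not a case"


# Hypothetical labels for the tests that confirm a case under the definition.
CONFIRMATORY_TESTS = {"culture", "pcr"}


def classify_case(positive_tests):
    """Classify a patient from the names of the tests that came back positive.

    A positive culture or PCR confirms the case; a positive result on any
    other test (rapid antigen, enzyme immunoassay, direct immunofluorescence
    antibody, and so on) makes it probable.
    """
    tests = {name.strip().lower() for name in positive_tests}
    if tests & CONFIRMATORY_TESTS:
        return CaseStatus.CONFIRMED
    if tests:
        return CaseStatus.PROBABLE
    return CaseStatus.NOT_A_CASE


if __name__ == "__main__":
    print(classify_case(["rapid_antigen"]).value)
    print(classify_case(["rapid_antigen", "PCR"]).value)
```

A confirmatory result takes precedence here, so a patient with both a positive rapid antigen test and a positive culture is counted once, as confirmed.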

For the active system, TCHD made weekly phone calls beginning October 1, 2004, to a contact at each of its hospitals. Contacts were mainly infection control practitioners or laboratory professionals. All 8 hospitals in the TCHD jurisdiction agreed to report cases among inpatients and outpatients of emergency departments and clinics. Calls ended after December 31, 2004, when it was determined that influenza A and B were both circulating widely and that surveillance would no longer be useful as an early warning system. TCHD implemented the passive system through its local Health Alert Network, which allows TCHD to fax information to every health professional in its jurisdiction. TCHD sent instructions and report forms to 539 health care providers, nursing homes, and laboratories through the network.

TCHD monitored costs for each system and surveyed hospital contacts’ satisfaction with the system. The department monitored both systems for timeliness, completeness, and other attributes and compared them with CEDRS. Periodically, TCHD faxed publications to inform all health care providers in the jurisdiction of the number of cases and strains identified.

RESULTS

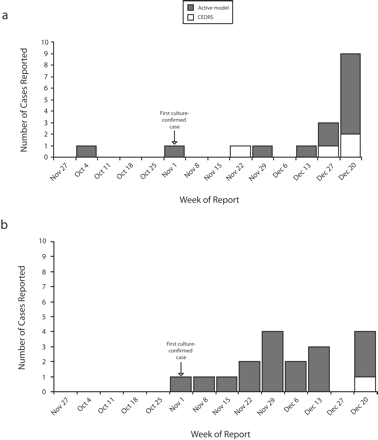

From October 1 to December 31, 2004, TCHD identified 43 probable influenza cases, 4 of which were confirmed. The active system identified 30 cases, the passive system 8 cases, and CEDRS 5 cases. Seventeen cases were identified as influenza A, 18 as influenza B, and 8 as type unspecified. The active system first detected both types of influenza in the TCHD jurisdiction 7 to 8 weeks before CEDRS (Figure 1).

FIGURE 1. Probable and confirmed cases of (a) influenza A and (b) influenza B reported to Tri-County Health Department, by week of first report, through the active model or through Colorado’s Electronic Disease Reporting System: Greenwood Village, Colo.
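As a consistency check on the case counts reported above, the tallies by reporting system and by influenza type can be compared against the stated total of 43. This is an illustrative sketch; the dictionary names are our own, and the share calculation is a derived figure that does not appear in the original report.

```python
# Case counts as reported for October 1 to December 31, 2004.
cases_by_system = {"active": 30, "passive": 8, "CEDRS": 5}
cases_by_type = {"influenza A": 17, "influenza B": 18, "unspecified": 8}

total_by_system = sum(cases_by_system.values())
total_by_type = sum(cases_by_type.values())

# Both breakdowns should account for every identified case.
assert total_by_system == total_by_type == 43

# Derived figure (not stated in the report): share found by the active system.
active_share = cases_by_system["active"] / total_by_system
print(f"Active system share of all cases: {active_share:.1%}")
```

Both breakdowns sum to 43, and the active system accounts for roughly 70% of all cases identified.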

The active system was well accepted by hospitals. Although reporting was voluntary, 100% of hospitals in the TCHD jurisdiction participated. All data fields were complete for the active system and CEDRS. The passive system had missing information for patients’ city of residence (25% missing), date of birth (25% missing), address (50% missing), and zip code (50% missing). The median time from laboratory collection to report to TCHD was 2 days (range: 0–7 days) for the active system, 1.5 days (range: 0–7 days) for CEDRS, and 1 day (range: 0–20 days) for the passive system.
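The timeliness measure above (median and range of days from collection to report) could be computed from paired dates as in the sketch below. The records shown are invented purely for illustration and are not the study data; `report_delays` and `summarize` are hypothetical helper names.

```python
from datetime import date
from statistics import median


def report_delays(records):
    """Return the delay in days between collection and report for each case."""
    return [(reported - collected).days for collected, reported in records]


def summarize(delays):
    """Summarize delays the way the article reports them: median and range."""
    return {"median": median(delays), "min": min(delays), "max": max(delays)}


# Hypothetical (collection date, report date) pairs; NOT the study data.
example_records = [
    (date(2004, 11, 1), date(2004, 11, 1)),
    (date(2004, 11, 3), date(2004, 11, 5)),
    (date(2004, 11, 8), date(2004, 11, 9)),
    (date(2004, 11, 10), date(2004, 11, 17)),
    (date(2004, 11, 15), date(2004, 11, 18)),
]

if __name__ == "__main__":
    print(summarize(report_delays(example_records)))
```

Reporting the range alongside the median matters here: the passive system had the shortest median delay but also the longest single delay (20 days).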

Start-up expenses were approximately $1600 for TCHD’s active system. Maintenance averaged 30 minutes per week for 2 TCHD staff, totaling $26.39 per week. Hospital contact time (phone calls) averaged 7 minutes weekly. For the passive system, the initial Health Alert Network cost was $300, with negligible incremental maintenance expenses.
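To give a sense of what expansion might cost, the reported figures can be projected over a longer surveillance period. This is a rough sketch under stated assumptions: the 13-week pilot length approximates October 1 to December 31, the 30-week season length is a hypothetical value chosen for illustration, and hospital contact time is not costed because the report gives no dollar figure for it.

```python
START_UP_COST = 1600.00    # approximate start-up expense for the active system (USD)
WEEKLY_STAFF_COST = 26.39  # reported weekly maintenance cost for TCHD staff (USD)


def projected_cost(weeks, include_start_up=True):
    """Project the health department's cost of running the active system.

    Assumes the weekly maintenance cost stays constant over the period.
    """
    if weeks < 0:
        raise ValueError("weeks must be non-negative")
    total = weeks * WEEKLY_STAFF_COST
    if include_start_up:
        total += START_UP_COST
    return round(total, 2)


if __name__ == "__main__":
    # 13 weeks approximates the pilot; 30 weeks is an assumed full season.
    for weeks in (13, 30):
        print(f"{weeks} weeks: ${projected_cost(weeks):,.2f}")
```

Under these assumptions the 13-week pilot comes to about $1943 including start-up, and each additional week adds only the $26.39 maintenance cost.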

DISCUSSION

TCHD’s active surveillance system was useful in the timely identification of cases and strains. This system overcame gaps in other available influenza surveillance data, such as CEDRS hospitalization, influenza-like illness, and school absenteeism data, which can lack timeliness or specificity. Compared with CEDRS, the active system detected cases 7 to 8 weeks earlier in the TCHD jurisdiction, indicating that surveillance of hospitalized cases alone can be insufficient in early case detection. Subsequent to this study, the Colorado Department of Public Health and Environment initiated enhanced confirmatory testing of outpatients during the early season to improve outpatient tracking.

Furthermore, TCHD’s laboratory-supported surveillance enabled greater specificity compared with influenza-like illness or school absenteeism data. The proportion of influenza-like illness patients confirmed with influenza can vary greatly depending on the prevalence of influenza viruses in the community.2,12 Laboratory-supported surveillance allows differentiation between influenza and other causes of influenza-like illness.2,3 Moreover, although school absenteeism can be a useful, nonvirologic indicator of influenza, it can also be nonspecific.13–15 In 2004, a large pertussis outbreak in the TCHD jurisdiction forced schools to exclude students coughing for more than 2 days, making absenteeism a poor indicator of influenza activity. Thus, TCHD’s active system proved more timely and specific than other available data.

The active system facilitated communication between TCHD and its hospitals. Its minimal weekly costs easily allow for expansion to cover the entire influenza season, if needed, with few additional resources. Moreover, the system proved flexible in novel situations, such as the 2004 influenza vaccine shortage, during which the weekly calls were quickly adapted to assess hospital vaccine availability.

The active system had limitations, however. First, the system was implemented only in hospitals and their affiliated clinics, which may not be representative of the entire TCHD population. Second, because of the lack of a gold standard facility for comparison, we were unable to assess the system’s sensitivity and specificity.

Despite these limitations, we believe the active system can be a viable model for local health departments. Epidemic and pandemic influenza preparedness requires rapidly expandable local surveillance systems that provide timely case and strain identification. We offer a model that will allow such detection at minimal cost.

Acknowledgments

The authors would like to acknowledge Tom Torok at the Centers for Disease Control and Prevention, Atlanta, Ga; Ken Gershman at the Colorado Department of Public Health and Environment, Glendale; and Nisha Alden and Katya Ledin at the Tri-County Health Department, Greenwood Village, Colo.

Human Participant Protection

No protocol approval was needed for this study.

Peer Reviewed

Contributors

T.S. Ghosh originated the study, supervised its implementation, and led the writing. R.L. Vogt helped conceptualize ideas, interpret findings, and review drafts.

REFERENCES

- 1. Snacken R, Kendal AP, Haaheim LR, Wood JM. The next influenza pandemic: lessons from Hong Kong, 1997. Emerg Infect Dis. 1999;5(2):195–203.

- 2. Kelly H, Murphy A, Leong W, et al. Laboratory-supported influenza surveillance in Victorian sentinel general practices. Commun Dis Intell. 2000;24(12):379–383.

- 3. Rebelo-de-Andrade H, Zambon MC. Different diagnostic methods for detection of influenza epidemics. Epidemiol Infect. 2000;124(3):515–522.

- 4. Effler PV, Leong MC, Tom T, Nakata M. Enhancing public health surveillance for influenza virus by incorporating newly available rapid diagnostic tests. Emerg Infect Dis. 2002;8(1):23–28.

- 5. Tsui FC, Wagner MM, Dato V, Chang CC. Value of ICD-9 coded chief complaints for detection of epidemics. J Am Med Inform Assoc. 2002;9(6)(suppl 1):s41–s47.

- 6. Hashimoto S, Murakami Y, Taniguchi K, Nagai M. Detection of epidemics in their early stage through infectious disease surveillance. Int J Epidemiol. 2000;29(5):905–910.

- 7. Gensheimer KF, Fukuda K, Brammer L, Cox N, Patriarca PA, Strikas RA. Preparing for pandemic influenza: the need for enhanced surveillance. Emerg Infect Dis. 1999;5(2):297–299.

- 8. Department of Health and Human Services. HHS Pandemic Influenza Plan Part 2: Public Health Guidance on Pandemic Influenza for State and Local Partners. Available at: http://www.hhs.gov/pandemicflu/plan/part2.html. Accessed October 17, 2007.

- 9. National Vaccine Program Office. Pandemic Influenza: A Planning Guide for State and Local Officials (Draft 2.1). Atlanta, Ga: Centers for Disease Control and Prevention; 2000.

- 10. Centers for Disease Control and Prevention. Surveillance for laboratory-confirmed, influenza-associated hospitalizations—Colorado, 2004–05 influenza season. MMWR Morb Mortal Wkly Rep. 2005;54(21):535–537.

- 11. Vogt RL, Spittle R, Cronquist A, Patnaik JL. Evaluation of the timeliness and completeness of a web-based notifiable disease reporting system by a local health department. J Public Health Manag Pract. 2006;12:540–544.

- 12. Joseph CA. Virological surveillance of influenza in England and Wales: results of a two-year pilot study 1993/94 and 1994/95. Commun Dis Rep CDR Rev. 1995;5:R141–R145.

- 13. Glezen WP, Couch RB. Inter-pandemic influenza in the Houston area, 1974–76. N Engl J Med. 1978;298(11):587–592.

- 14. Lenaway DD, Ambler A. Evaluation of a school-based influenza surveillance system. Public Health Rep. 1995;110(3):333–337.

- 15. Takahashi H, Fujii H, Shindo N, Taniguchi K. Evaluation of the Japanese school health surveillance system for influenza. Jpn J Infect Dis. 2001;54(1):27–30.