Abstract

Objective

To implement a model of competency-based education in a basic science competency course using WebCT to improve doctor of pharmacy (PharmD) students' understanding and long-term retention of course materials.

Methods

An anatomy-cell biology course was broken down into 23 modules, and worksheets and mirrored examinations were created for each module. Students were allowed to take the proctored examinations using WebCT as many times as they wanted, with each subsequent test containing a new random subset of questions. Examination scores and the number of attempts required to obtain a passing score were analyzed.

Results

Student performance improved with the number of times a module examination was taken. Students who initially had low scores achieved final competency levels similar to those of students who initially had high scores. Module scores (didactic work) correlated with scores on practical work.

Conclusions

Using WebCT to implement a model of competency-based education was effective in teaching foundational anatomy and cell biology to pharmacy students and could potentially be applied to other basic science courses.

Keywords: Keller method, anatomy, basic sciences, competency, WebCT

INTRODUCTION

A main concern in education is that students do not learn as effectively when teachers rely heavily upon traditional didactic lectures.1 The primary reasons for this include: (1) not distinguishing between students with regard to previous knowledge levels, learning styles, and individual learning ability; (2) using the same instructional pace for weak and strong students; and (3) lack of feedback after examinations. In addition, even when graded examinations are returned to them, students rarely undergo the process of correcting their knowledge deficits and retesting that new knowledge.

These educational limitations have been addressed by the Accreditation Council for Pharmacy Education (ACPE) in Standard 12: “Attention should be given to teaching efficiencies and effectiveness as well as innovative ways and means of curricular delivery. Educational techniques and technologies should be appropriately integrated to… meet the needs of diverse learners. (In addition) evidence that the educational process involves students as active, self-directed learners… should be provided.”2

Despite the drawbacks of the large class lecture format and ACPE's recommendation that pharmacy schools use innovative teaching strategies, many pharmacy faculty members still rely heavily upon traditional didactic lectures. To follow through on the ACPE's suggestion, we evaluated the extent to which a model of competency-based education (Keller's Personalized System of Instruction3) provides more effective PharmD instruction in the Anatomy and Cell Biology course at our School of Pharmacy.

The Keller model of competency-based education is founded upon the educational concept of “mastery learning,” in which it is postulated that, regardless of level of intelligence, all individuals can learn a subject given enough time and sufficiently high-quality instruction.4 From this initial hypothesis, 2 primary models of education were developed: Bloom's “Learning for Mastery”5 and Keller's “Personalized System of Instruction.”3 Bloom's model suggested that a mid-unit examination be added to educational sets so that students can remediate deficient knowledge before the final formative examination. Keller's model, on which this project is based, is similar but differs in that educational modules are smaller, students may take examinations as many times as they need to show competency, and there is personalized tutoring. To accomplish “Individualized Instruction” in the classroom, Keller in 1968 laid out the major components of this methodology, which define classroom roles for the instructor, teaching assistants, and students (Table 1).

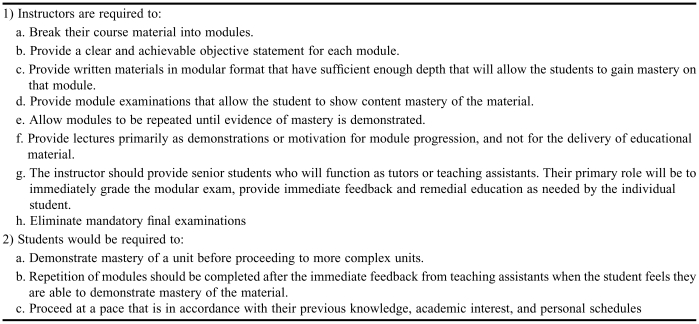

Table 1.

Primary Components of the 1968 Keller Method of Individualized Instruction

A meta-analysis of published research utilizing the Keller competency model suggested that individualized competency-based instruction has a number of advantages over didactic lectures coupled with high-stakes examinations. Specifically, individuals who normally achieve low or midlevel grades learn significantly more and have greater long-term retention. However, the primary disadvantage is the significantly increased faculty workload and the need for teaching assistants.6

Despite this methodology being validated for use in the basic sciences, there are few published reports using the Keller method in graduate medical education and none in the discipline of pharmacy.7 We believe the reasons for this are (1) lack of faculty time, (2) the limited number of student tutors or teaching assistants, and (3) lack of knowledge regarding the methodology. We addressed these challenges by designing and implementing a modified version of the Keller Individualized Instruction model, the primary difference being that we replaced tutors and teaching assistants with a computer system that functions in a similar role. This was not considered to be a major methodological change, as the use of student tutors has been shown to have varying impact on the success of a Keller-style competency course.8

In this manuscript, we present a computer-assisted, module-based course for Anatomy and Cell Biology, provide evidence of student learning within modules that included computer-assisted examinations, provide evaluative data showing modules increase laboratory practical scores, and summarize our conclusions about implementation of competency-module education in pharmacy basic science education.

DESIGN

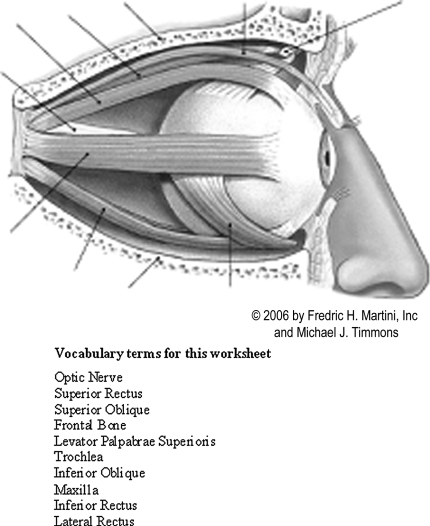

To develop the competency instructional methodology, we broke down the anatomy course material into 23 modules (Table 2). For each module, the expectations were that the student would (1) memorize the correct anatomical terms for a given organ and its structures and (2) be able to spell them correctly. To accomplish this, we assembled a set of vocabulary words that corresponded to an anatomical figure (Figure 1). All figures used in the modules were taken from the textbook Human Anatomy and used with permission of Pearson Education, Inc.9

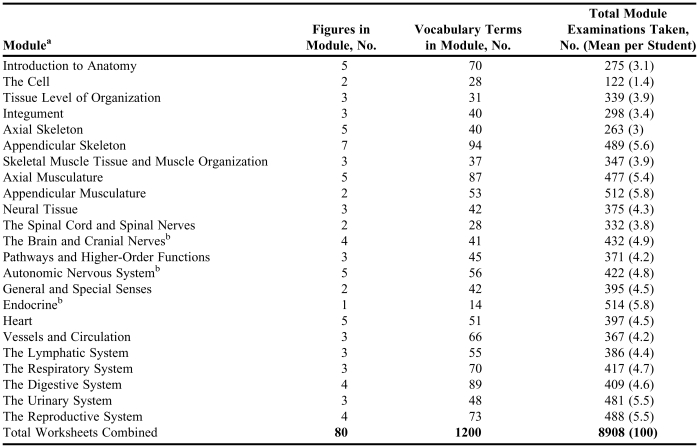

Table 2.

Modules Created for an Anatomy Course

a Students were required to take all modules and earn a minimum grade of 70 to demonstrate competency in the course.

b Indicates that some of the questions in the module were conceptual and not just competency based.

Figure 1.

Example of a module worksheet. Figure 1 shows one figure from the Axial Musculature worksheets. This module contained 5 figures and 87 vocabulary terms. Students would use these worksheets to memorize the anatomical terms and their corresponding anatomical locations prior to taking a module examination through WebCT. Figure is used with permission of Pearson Education, Inc., from the textbook Human Anatomy by Martini, Timmons, and Tallitsch.9

After creation of the student module materials, we assembled 23 module examinations. Examination questions were created using TestGen 6.0 for Human Anatomy. We programmed nearly 5,000 alternative answers in WebCT that would be considered correct but were not included in the original examination questions. After the questions were assembled into individual modules, we imported them into WebCT. WebCT was programmed to present a random subset of approximately 20% of the questions in 1 module to the student for 1 examination. For subsequent module examinations, a new random subset of approximately 20% of the questions from the question bank was presented. This effectively created a different examination for each student and a different examination for students who desired to take the examination more than once. Because the examination was randomly generated with each attempt, it was possible that students saw a repeat question on subsequent attempts. While the initial faculty development time for this setup was extensive (approximately 160 hours), in subsequent years we realized a significant reduction in faculty work time, given that there were no examinations to be graded or administered (graduate students proctored the modular examinations).
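The random-subset behavior described above can be illustrated with a minimal sketch. This is not WebCT's implementation: the function names, the bank size of 87 (borrowed from the vocabulary count of the Axial Musculature module), and the rounding rule are assumptions made only for illustration.

```python
import random


def draw_exam(question_bank, fraction=0.20, rng=None):
    """Return a random subset of the bank, sampled without replacement."""
    rng = rng if rng is not None else random.Random()
    n_questions = max(1, round(len(question_bank) * fraction))
    return rng.sample(question_bank, n_questions)


def expected_repeats(bank_size, exam_size):
    """Expected number of questions on a new exam that also appeared on one earlier exam."""
    # Each question on the new exam had probability exam_size / bank_size
    # of having been drawn for the earlier exam.
    return exam_size * exam_size / bank_size


# Hypothetical bank: one question per vocabulary term (assumed, not from the article)
bank = [f"term_{i}" for i in range(1, 88)]
rng = random.Random(2007)  # fixed seed only so the illustration is repeatable

first_attempt = draw_exam(bank, rng=rng)
second_attempt = draw_exam(bank, rng=rng)
overlap = set(first_attempt) & set(second_attempt)

print(len(first_attempt), "questions per attempt")
print(len(overlap), "questions repeated on the second attempt")
print(round(expected_repeats(len(bank), len(first_attempt)), 1), "repeats expected on average")
```

Under these assumptions, a student retaking an examination would expect a few repeated questions per attempt, which is consistent with the observation that repeats were possible but each attempt was largely a new examination.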

After submission of a module examination, the computer calculated the student's score and provided immediate feedback including the correct answers to questions answered incorrectly. This was a deviation from the Keller method, as he originally had teaching assistants grade the examinations and provide remedial feedback. We believe that our method is as effective, as a student could generate as many random examinations as desired without needing to wait on a teaching assistant to grade the examination or a tutor to provide feedback. (Table 3 summarizes the competency module process for a student.)
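A minimal sketch of this scoring-and-feedback step is shown below. It is an illustration only, not the WebCT grading logic: the function names, the data layout, and the choice to ignore letter case and extra spaces are assumptions, and the two sample questions are hypothetical.

```python
PASSING_SCORE = 70  # minimum module score required to demonstrate competency


def normalize(text):
    """Lowercase and collapse whitespace (an assumed matching rule)."""
    return " ".join(text.lower().split())


def grade_exam(responses, answer_key):
    """Score typed answers and collect the correct answer for each miss.

    responses:  {question_id: typed answer}
    answer_key: {question_id: [primary answer, accepted alternatives, ...]}
    """
    feedback = {}
    n_correct = 0
    for question_id, accepted in answer_key.items():
        typed = normalize(responses.get(question_id, ""))
        if typed in {normalize(answer) for answer in accepted}:
            n_correct += 1
        else:
            # Immediate feedback: show the primary correct answer
            feedback[question_id] = accepted[0]
    score = 100.0 * n_correct / len(answer_key)
    return score, feedback


# Hypothetical two-question examination
answer_key = {
    "q1": ["sternocleidomastoid"],
    "q2": ["rectus abdominis", "rectus abdominis muscle"],
}
responses = {"q1": "sternocliedomastoid", "q2": "Rectus abdominis"}

score, feedback = grade_exam(responses, answer_key)
print(score, score >= PASSING_SCORE, feedback)
```

In this sketch, the misspelled answer to the first question earns no credit and is returned with its correction, mirroring the requirement that students spell each term correctly.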

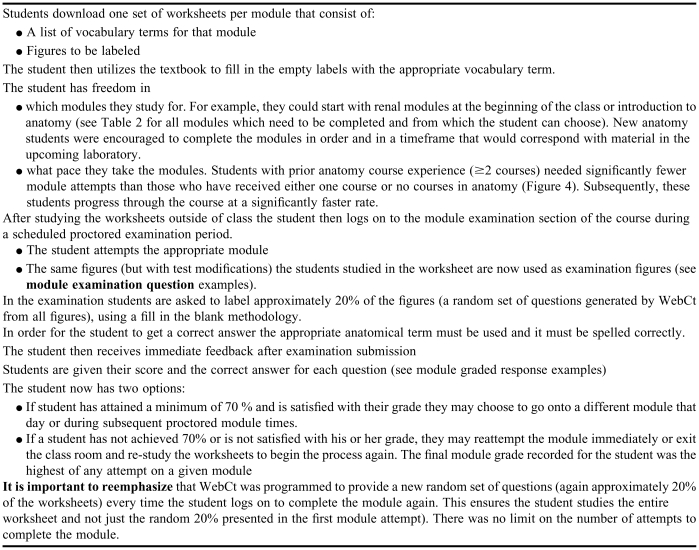

Table 3.

Competency Module Process for the Student

In addition to the modules, the course contained 43 lectures that were complementary to the modules. There were 5 small quizzes dispersed throughout the lectures to encourage lecture attendance and participation. The course also consisted of 5 human cadaver laboratories in which students were allowed to study the material in groups at 8-10 different stations. Prior to the cadaver laboratories, students were provided 8-10 different worksheets to study for the laboratory. Individual worksheets were completed by a group of approximately 10 students and presented to the other students electronically and in a short seminar-style presentation before the cadaver laboratory sessions. Students were tested on their knowledge in the day-long cadaver laboratory by multiple-choice tests in which students had to name organs or organ parts on human cadavers, cross sections, computerized tomography (CT) scans, and anatomical models. Assignment of points in the class was as follows: total module grade was 60 points multiplied by the final module grade (the average % scored on each module); total laboratory grade was 30 points multiplied by the average score (%) earned on the 5 cadaver laboratories; and lastly, the lecture quiz average was worth 10 points.
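The point assignment above can be written out as a short calculation. The sketch below assumes that all scores are percentages from 0 to 100 and that the quiz component scales with the quiz average in the same way as the other two components; the function name and the sample student are hypothetical.

```python
def course_grade(module_scores, lab_scores, quiz_average):
    """Combine the three course components into a grade out of 100 points.

    module_scores: final score (%) on each of the 23 modules
    lab_scores:    score (%) on each of the 5 cadaver laboratories
    quiz_average:  average score (%) on the lecture quizzes
    """
    module_average = sum(module_scores) / len(module_scores)
    lab_average = sum(lab_scores) / len(lab_scores)
    module_points = 60 * module_average / 100
    lab_points = 30 * lab_average / 100
    quiz_points = 10 * quiz_average / 100  # assumed to scale like the others
    return module_points + lab_points + quiz_points


# Hypothetical student: 95% on every module, 80% on every laboratory, 90% quiz average
grade = course_grade([95] * 23, [80] * 5, 90)
print(grade)  # 57 + 24 + 9 = 90 points
```

Because the modules carry 60 of the 100 points, a student who retakes module examinations until reaching a high score secures most of the course grade before the laboratory practicals are counted.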

Statistical analysis included repeated-measures ANOVA followed by Bonferroni's multiple comparison test, or a paired Student t test, as appropriate. Differences were considered statistically significant at p < 0.05. Errors are reported as standard error of the mean. Analyses were performed with GraphPad Prism, version 4.0 for Windows (GraphPad Software, San Diego, Calif).
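For readers unfamiliar with these statistics, the sketch below shows how the standard error of the mean, a paired t statistic, and a Bonferroni-adjusted significance threshold are computed. It uses only the Python standard library and invented scores; it does not reproduce the GraphPad Prism analysis, and the function names are assumptions.

```python
import math
import statistics


def sem(values):
    """Standard error of the mean: sample standard deviation / sqrt(n)."""
    return statistics.stdev(values) / math.sqrt(len(values))


def paired_t(first_scores, second_scores):
    """Paired t statistic and degrees of freedom for matched scores."""
    differences = [b - a for a, b in zip(first_scores, second_scores)]
    t_statistic = statistics.mean(differences) / sem(differences)
    return t_statistic, len(differences) - 1


def bonferroni_alpha(alpha, n_comparisons):
    """Per-comparison threshold that keeps the overall error rate at alpha."""
    return alpha / n_comparisons


# Invented first- and second-attempt scores for three students
first_attempt = [60, 70, 80]
second_attempt = [70, 85, 90]

t_statistic, degrees_of_freedom = paired_t(first_attempt, second_attempt)
print(t_statistic, degrees_of_freedom)
print(bonferroni_alpha(0.05, 5))
```

The p value for a given t statistic would then be obtained from the t distribution with the stated degrees of freedom, for example with a statistics package such as SciPy (`scipy.stats.ttest_rel` performs the paired test directly).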

ASSESSMENT

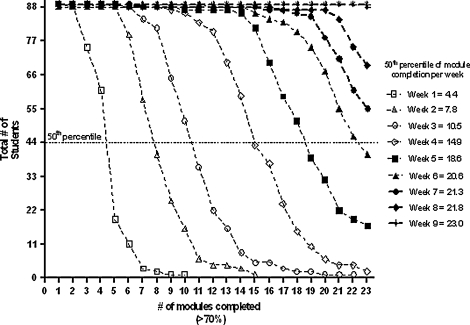

One of the concerns in implementing this approach was the requirement that all modules be completed by the student within the 9-week course; therefore, we tracked the number of completed modules per week (Figure 2). The first line (Week 1) shows that all 89 students had completed 2 modules; 74 students had completed 3 modules; 60 students had completed 4 modules; and 20 students had completed 5 modules. At the end of week 1, there was 1 student who had completed 10 modules. The first student completed all 23 modules by week 4, while 22 other students did not finish their 23rd module until week 9 of the class. All students were able to complete all modules within the prescribed timeframe.

Figure 2.

Progression of module completion by students over time. The 50th percentile of module completion is approximated on the graph (eg, at week 3, the 50th percentile for module completion was approximately 10.5 modules). It is important to note that all students finished all modules in the prescribed timeframe.

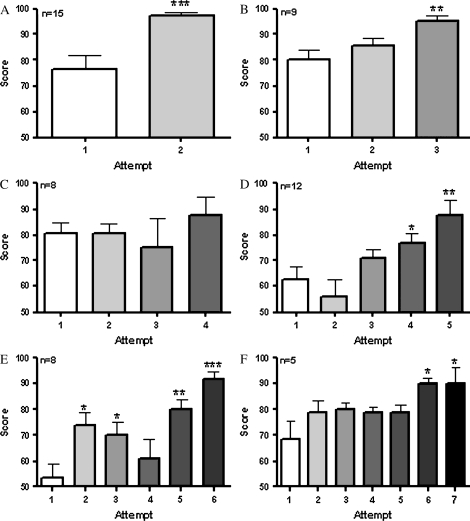

To determine whether students' grades improved during repeated module examinations, we divided students into groups according to the number of attempts at completing a module. For example, students who had taken a module twice were placed into one group, while those who had taken it 3 times were placed into another group, and so forth. Figure 3 shows the results (mean ± SEM) for the module “Appendicular Musculature.” Panel A of Figure 3 shows that there was a significant improvement between students' scores on their first attempt and scores on their second attempt (p < 0.001; n = 15). Similar improvement was seen in scores for all groups where the number of attempts was greater than or equal to 5. Of the remaining students who are not represented in the figure, there were 6 students who took the module 1 time, and 26 students who took the module more than 7 times, with 17 attempts for this module being the highest. There were no significant differences in the mean final module grade among the above 6 groups (ANOVA), suggesting that, given repeated educational guidance, students who need more remediation can earn the same grade as students who need less. Lastly, we saw similar significant increases in students' scores with repeated module examinations for all modules throughout the course (data not shown).

Figure 3.

Evidence of student learning within the “Appendicular Musculature” module. The scores for module examinations are shown for students grouped by the number of times they completed the module examination. For example, students in group A took the module examination 2 times (n = 15) while students in group F took the module examination 7 times (n = 5). There was significant improvement between students' first attempt and their second attempt (p < 0.001). Similar statistical improvements were seen in all groups where the number of attempts was ≥ 5. There were no significant differences in the final module grade among the 6 groups (ANOVA).

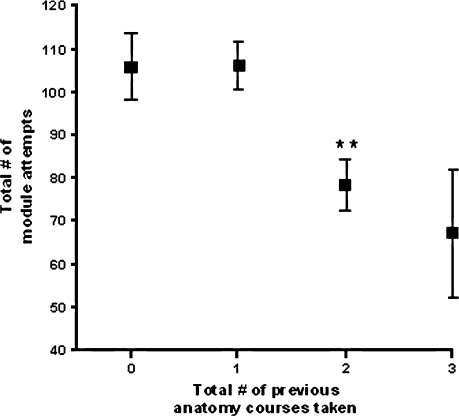

Although a score of 70% or better was all that was required to pass a module, students in all groups and for all modules repeated the module until their grade was greater than 90% (Figure 3). This was commented upon by the students in the course evaluations. For example, one student wrote “The repetition was helpful in learning. I thought it would be memorizing for a short test and then forgetting it, but I wanted good grades on the modules so I kept retaking them. I actually learned, not just memorized.” Similarly, another student commented, “I loved the modules and the fact that we could take it as many times as we wanted. It made me really learn the material by the time I got the grade I wanted.” There were no significant differences (p > 0.05, ANOVA) in the final module grade between the groups who took the module examinations twice and those who took them 7 times. These data suggested that if given repeated educational guidance, students who need more remediation will eventually earn on average the same grade as students who need less remediation (eg, those students who needed to take the module only twice). The data indicate that when this methodology is used in a pharmacy curriculum, students will bring themselves to a high level of competency (approximately 20% greater than what was required), regardless of the amount of effort needed to do so (Figure 4). This conclusion is supported by the finding that the final course module grade for approximately 80% of the students ranged between 95 and 100. For the remaining 20% of students, the final class module grade ranged from 86 to 95. On the other hand, students may have been motivated to obtain good module grades because they were insecure about their performance in the cadaver laboratory examinations.

Figure 4.

The total number of module examination attempts a student required to complete the course was dependent on the number of anatomy courses they had completed prior to taking the course. Data reported as Mean ± SEM.

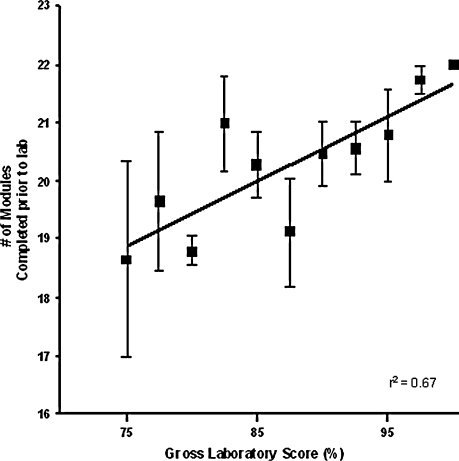

Completing a greater number of module examinations prior to the cadaver laboratory (completion is defined as a score of ≥70%) resulted in a greater score on the final non-comprehensive cadaver laboratory practical (Figure 5). These data strongly suggest that the electronic modules help the student in application of their knowledge in a real world setting (ie, outside the computer and in the laboratory). This was also supported by student comments to the open-ended course evaluation question “Did the modules help you improve your understanding in the gross anatomy lab?” Representative student comments included “if you were to make it a requirement to have all the modules pertaining to the bones completed before going to the anatomy lab that covers the bones, I am willing to bet the scores on the lab tests would improve” and “…I got behind in taking the modules and on labs that I made a C; I had not completed the corresponding modules.”

Figure 5.

Correlation between students' gross laboratory scores and the number of modules students completed (>70%) prior to the laboratory. Data reported as Mean ± SEM.

Another open-ended evaluation question was asked in student evaluations at the end of the course: “Please describe how the modules contributed to your overall understanding of anatomy.” The answers to this question could roughly be broken into 3 major categories: (1) the modules were very valuable as a learning tool for the students, (2) module repetition helped reduce the perception of “cramming” for examinations and the students felt they learned more, and (3) students appreciated the self-paced examination, which they could complete at their convenience. The responses seemed to suggest that the modules were a positive addition to the course not only as the learning tool they were designed to be, but also as a way for students to earn the grade they wanted in the course.

DISCUSSION

We believe the module approach allowed the students to work at a pace that was in accordance with their needs and/or prior academic knowledge. Self-paced progression was considered crucial when the model was implemented since the course had been shortened from 17 to 9 weeks, and we believed students needed to have flexibility in demonstrating subject competency in this short timeframe. The second reason we implemented this model was to develop a system that would show evidence of content mastery, more so than just succeeding on a few multiple-choice examinations. Indeed, there is strong evidence that each student demonstrated competency in each modular subject. While there were significant differences in the number of module examination attempts students needed to demonstrate competency (Figure 3), eventually all students were able to provide evidence they had learned 1200 anatomical terms.

We believe that one of the most effective aspects of the module approach for students was that module examinations provided immediate feedback. With increasing class sizes, it is nearly impossible for faculty members to provide tutoring and feedback to individual students on a large scale. Using this system, we were able to provide immediate feedback on all of the examinations. To understand the scale of feedback that this system provides, the students took approximately 9,000 examinations and the average number of questions per examination was 15, so the total number of questions provided through the computer to the students as a whole was approximately 135,000. We believe that this system shifts a majority of the course from instructor-driven delivery of knowledge to an active student-learning paradigm.
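The scale described above can be worked through in a few lines. The examination and question counts are the approximate figures reported here, and the class size of 89 and the 23 modules come from the Assessment and Design sections; the per-student and per-module averages are derived for illustration and were not reported as such.

```python
exams_taken = 9_000        # approximate total reported for the class
questions_per_exam = 15    # reported average
students = 89              # class size reported in the Assessment section
modules = 23               # number of modules in the course

total_questions = exams_taken * questions_per_exam
exams_per_student = exams_taken / students
attempts_per_module = exams_per_student / modules

print(total_questions)                 # 135000
print(round(exams_per_student))        # roughly 101 examinations per student
print(round(attempts_per_module, 1))   # roughly 4.4 attempts per module
```

Read this way, the approximate totals imply that a typical student sat about 100 examinations over the 9-week course, or between 4 and 5 attempts per module on average.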

There were 2 major categories of student comments that provided insights into possible weaknesses in the module approach. First, a few students indicated that they briefly studied before taking the modules, and then took the modules repeatedly until they documented competency and their score was as high as they desired (generally >90%). Although this may appear to be a weakness, it may just represent a new approach to studying the material. Two facts support this argument: (1) to obtain credit on any module question, a student had to be able to write and spell the correct answer, not simply recognize or ascertain the correct answer from a multiple-choice question; and (2) the questions for each module attempt were randomly chosen by the computer from a database. It is therefore more likely that student learning occurred than that students improved their module scores by chance on subsequent attempts. Changes could be made to allow a finite number of module examination attempts. However, that would limit the students' ability to study by this method and the ability of weaker students to show competency.

The second concern was that while students could demonstrate evidence of mastery within a specific subject of anatomy, they were not asked to exhibit a comprehensive knowledge in the general subject of anatomy. Therefore, it may be reasonable to add a midterm and/or final examination so that overall competency could be documented. However, this change would require a culture shift in the course in that students would be required to complete the modules in a more organized pattern (ie, completing the first half of the modules before the midterm). Taking module examinations in a more regimented manner might improve student learning, as we have shown that completing the module examination before attending the cadaver laboratory increased practical examination scores in the laboratory (Figure 5).

SUMMARY

The competency module approach presented for this course appears to be well suited when students need to comprehend and assimilate large amounts of foundational knowledge. This is appropriate for many basic science courses in pharmacy. For example, basic biochemistry, medicinal chemistry, and pharmaceutics could be taught using this approach. In addition, this method could be implemented to teach students common drugs, including generic and brand names, mechanisms of action, dosing, adverse effects, major interactions, and contraindications.

The Keller approach using a computerized system significantly improves feedback for student learning and allows students to take ownership of gaining competency in the course. Improvement leads to increased competency among various student groups and a self-paced, student-driven, active-learning process. To our knowledge, this is the first documented use of a computerized, self-paced competency model in a pharmacy school. Previous implementation may have been limited because the method requires faculty members to provide student feedback and tutoring on every examination item and to develop multiple examinations for each content module. Our innovation is found in the use of computerized technology that allowed us to create random module examinations and provide immediate feedback and tutoring to the students.

REFERENCES

- 1. Twigg CA. The Pew Learning and Technology Program, Monograph at the National Center for Academic Transformation; 2002. Improving learning and reducing costs: redesigning large enrollment courses; pp. 1–28.

- 2. Accreditation Council for Pharmacy Education. Accreditation Standards and Guidelines for the Professional Program in Pharmacy Leading to the Doctor of Pharmacy Degree, Standard 12. Available at http://www.acpe-accredit.org/shared_info/standards1_view.htm. Accessed October 31, 2007.

- 3. Keller FS. Goodbye, teacher. J Appl Behav Analysis. 1968;1:79–89. doi: 10.1901/jaba.1968.1-79.

- 4. Carroll JB. A model of school learning. Teachers Coll Rec. 1963;64:723–33.

- 5. Bloom B. Learning for mastery, evaluation comment (UCLA- Center for the Study of Evaluation of Instructional Programs) 1968;1:2–12.

- 6. Kulik JA, Kulik C, Cohen PA. A meta-analysis of outcome studies of Keller's personalized system of instruction. Am Psychol. 1979;34:307–18.

- 7. Kulik JA, Kulik C, Carmichael K. The Keller Plan in Science Teaching. Science. 1974;183:379–83. doi: 10.1126/science.183.4123.379.

- 8. Buskist W, Cush D, DeGrandpre RJ. The life and times of PSI. J Behav Educ. 1974;2:215–34.

- 9. Martini FH, Timmons MJ, Tallitsch RB. Human Anatomy. 5th ed. New York: Benjamin Cummings; 2006.