Abstract

Since Ungerleider and Mishkin [Ungerleider LG, Mishkin M (1982) Two cortical visual systems. Analysis of Visual Behavior, eds Ingle DJ, Goodale MA, Mansfield RJW (MIT Press, Cambridge, MA), pp 549–586] proposed separate visual pathways for processing object shape and location, steady progress has been made in characterizing the organization of the two kinds of information in extrastriate visual cortex in humans. However, to date, there has been no broad-based survey of category and location information across all major functionally defined object-selective regions. In this study, we used an fMRI region-of-interest (ROI) approach to identify eight regions characterized by their strong selectivity for particular object categories (faces, scenes, bodies, and objects). Participants viewed four types of stimuli (faces, scenes, bodies, and cars) appearing in each of three different spatial locations (above, below, or at fixation). Analyses based on the mean response and voxelwise patterns of response in each ROI reveal location information in almost all of the known object-selective regions. Furthermore, category and location information can be read out independently of one another such that most regions contain both position-invariant category information and category-invariant position information. Finally, we find substantially more location information in ROIs on the lateral than those on the ventral surface of the brain, even though these regions have equal amounts of category information. Although the presence of both location and category information in most object-selective regions argues against a strict physical separation of processing streams for object shape and location, the ability to extract position-invariant category information and category-invariant position information from the same neural population indicates that form and location information nonetheless remain functionally independent.

Keywords: fusiform face area, position invariance, parahippocampal place area

Ungerleider and Mishkin (1) argued in a seminal article that information about form and location is segregated into separate processing streams in the primate visual system. Subsequent studies, using lesions, neurophysiology, and fMRI, have generally supported this hypothesis or its variants (2). However, other evidence indicates that the two pathways are not completely distinct but instead have multiple interconnections (3) and that the occipitoparietal “where” pathway (4) contains shape information, and the occipitotemporal “what” pathway contains location information (5, 6). Here, we used mean population response and multivariate pattern methods (7–9) with a region-of-interest (ROI) approach to ask how much location information is present in shape-selective cortex in humans, how that location information is distributed across specific functionally defined regions of occipitotemporal cortex, and how location information relates to category information in this pathway.

Extensive fMRI investigations over the last decade have characterized the functional organization of the occipitotemporal pathway in humans. Multiple cortical regions have been defined by their selectivity for general object shape, or by their selectivity for specific categories, such as bodies, faces, and scenes. For each of these kinds of selectivity, two ROIs have been identified, one on the ventral surface of the brain and one on the lateral surface; for example, the body-selective fusiform body area (FBA) (10, 11) lies on the ventral surface, and the extrastriate body area (EBA) (12) lies on the lateral surface.

Although much work has been done to characterize the shape or category selectivity of these regions, very little is known about whether they also process information about object location. At the most general level, some of these regions demonstrate contralateral field biases (13–16), and some of them [e.g., the parahippocampal place area (PPA)] respond more strongly to stimuli presented in the periphery, whereas others [e.g., the fusiform face area (FFA)] respond more strongly to foveal stimuli (17, 18). Studies conducted with retinotopic mapping (19, 20) have shown object-selective responses in certain retinotopically defined regions, although the degree to which these maps overlap object-selective cortex is not yet known. Other studies have found elevation biases (13), and sensitivity to translation around fixation (21) in lateral occipital area (LO). Although each of the aforementioned studies has shown a specific kind of location information in a small number of regions, to date, there has been no comprehensive examination of location information across the many category-selective functionally defined regions spanning both lateral and ventral surfaces. Here, we set out to do just that, using a method sensitive to both retinotopic and spatiotopic location information.

Results

Eye movements were recorded inside the scanner in five of the participants included in this study to confirm that they maintained fixation throughout the experimental runs. No significant differences were found in eye position across stimulus categories or locations [see supporting information (SI) Text].

ROI Localization.

The localizer data were used to identify bilateral extrastriate regions selective for faces, bodies, scenes, and objects. The face-selective FFA and the occipital face area (OFA) were defined by using a faces > objects contrast. The body-selective areas EBA and FBA were defined with a contrast of bodies > objects, and the scene-selective PPA and an area in the transverse occipital sulcus (TOS) were identified with a scenes > objects contrast. Finally, the broadly shape-selective areas that comprise the lateral occipital complex, namely LO and a posterior fusiform area (pFs), were identified with an objects > grid-scrambled objects comparison. All ROIs were defined by using a contrast threshold of P < 0.0001 uncorrected. Because the FFA and FBA are adjacent and appear to overlap, we excluded any overlapping voxels to create the functionally dissociated regions FFA* and FBA*, as described by Schwarzlose et al. (11). Not all ROIs were found in every participant, in part because of the limitations of high-resolution slice coverage and because only clusters comprising 20 or more voxels were counted as ROIs and included in further analyses. The following ROIs were identified, with the number of participants in whom that ROI was identified in parentheses: right FFA (8); left FFA (4); right FFA* (8); left FFA* (4); right OFA (7); left OFA (6); right EBA (9); left EBA (9); right FBA (8); left FBA (4); right FBA* (5); left FBA* (2); right PPA (5); left PPA (5); right TOS (6); left TOS (6); right LO (5); left LO (6); right pFs (5); left pFs (6). See SI Fig. 3 for a mapping of the relative locations of these ROIs on a representative flattened occipital surface. We also analyzed a posterior, visually active region of cortex near the occipital pole for participants whose slice coverage extended that far back. These ROIs, here denoted as early visual area (earlyV) (7), were included in the analyses so that we could compare findings from high-level extrastriate cortex to those from early retinotopic regions (presumably V1 and/or V2).
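The ROI definition rules described above (thresholding at P < 0.0001, removing the FFA/FBA overlap, and requiring at least 20 voxels) can be sketched as follows. This is only an illustration under stated assumptions: each contrast is assumed to be available as a flat array of voxelwise P values, the function names are hypothetical, and the spatial contiguity of clusters is not checked.

```python
import numpy as np

P_THRESHOLD = 1e-4   # uncorrected contrast threshold stated in the text
MIN_VOXELS = 20      # minimum ROI size stated in the text

def threshold_mask(p_values, p_threshold=P_THRESHOLD):
    """Boolean mask of voxels that pass the contrast threshold."""
    return np.asarray(p_values) < p_threshold

def dissociate(mask_a, mask_b):
    """Drop voxels shared by two masks (e.g., FFA/FBA -> FFA*/FBA*)."""
    overlap = mask_a & mask_b
    return mask_a & ~overlap, mask_b & ~overlap

def is_valid_roi(mask, min_voxels=MIN_VOXELS):
    """Size criterion only; contiguity is NOT checked in this sketch."""
    return int(np.sum(mask)) >= min_voxels
```

For example, calling `dissociate(ffa_mask, fba_mask)` on two overlapping masks returns two masks that share no voxels, each of which can then be passed to `is_valid_roi`.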

Mean Response Magnitude in ROIs.

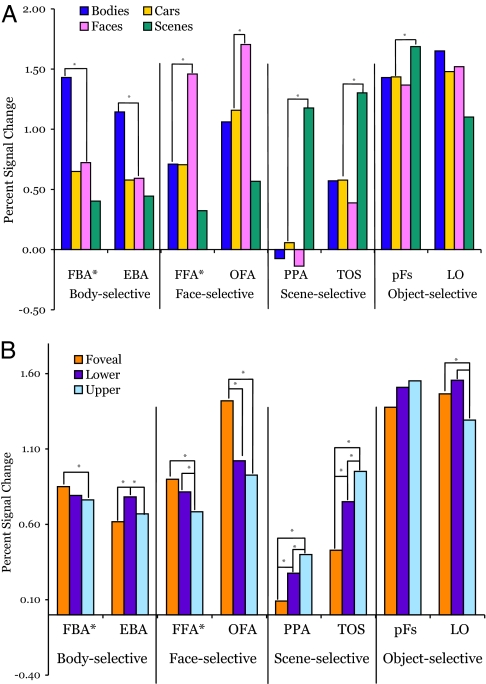

All subsequent ROI analyses were performed by using the independent dataset from the experimental runs. Using these data, we measured the mean magnitude of response to the 12 conditions (3 locations × 4 categories) across all voxels in each ROI. Fig. 1 shows the mean response in each ROI to each category (averaged across locations) and to each location (averaged across categories). These means are based on the data from those participants in whom the ROI could be identified. Each body-, face-, and scene-selective ROI produced a significantly greater response to its preferred category than to the second highest category (all P values < 0.005), replicating the category selectivity of these regions from prior studies and from the localizer by which they were defined.

Fig. 1.

Mean response magnitude in each object-selective ROI. (A) Mean responses across each ROI to each of the four object categories averaged across the three retinal locations. Significant differences (P < 0.05) in individual contrasts are indicated with an asterisk (here shown only between the highest and second highest responses). All ROIs defined by body-, face-, or scene-selectivity show significantly greater responses to their preferred category than to all other categories. (B) Mean responses across each ROI to each of the three stimulus locations averaged across all four object categories. Significant differences in mean response to different locations were found in nearly all ROIs and demonstrate that these regions contain location information.

Eccentricity.

Prior studies have shown that the magnitudes of response in the FFA, PPA, OFA, and TOS vary with the eccentricity of the stimuli (17, 18). In those studies, the peripheral stimuli consisted of multiple objects arranged into a ring or, alternatively, a single object scaled so that its defining boundaries extended into the periphery (17, 22). To determine whether our paradigm, using individual objects, replicated this finding, we pooled the mean responses across the categories and conducted paired t tests comparing the response to images presented at the fovea with the response to those presented in the lower or upper peripheral positions. These averaged response magnitudes are shown in Fig. 1, and the corresponding P values for each contrast are listed in SI Table 1. Our results support the findings of Hasson et al. (18), demonstrating a peripheral bias in the scene-selective regions and a foveal bias in face-selective regions.

Elevation.

Although eccentricity biases (17, 18) and contralateral biases (13–16) have been reported for some higher-level category-selective areas, no studies to date have systematically tested for elevation biases in regions other than LO, which shows a lower visual field bias (13). Therefore, we compared mean response magnitude in each ROI for upper and lower field stimuli at equal eccentricities. These response magnitudes are shown in Fig. 1 and the corresponding P values for this contrast are listed in SI Table 1. Remarkably, most of the ROIs demonstrated significant effects of elevation, such that the scene-selective areas PPA and TOS showed a significantly greater response to upper than lower field images, whereas the reverse was true of FFA*, EBA, and LO. These results provide new evidence that information about elevation is widespread across higher-level category-selective regions and that these regions contain different patterns of location biases across measures of both eccentricity and elevation.
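The eccentricity and elevation comparisons described above can be illustrated with a short sketch. It assumes that, for one participant and one ROI, the mean responses are stored as a 3 × 4 table (locations × categories) in the row order given by `LOCATIONS`; that layout and the function name are assumptions for illustration. The eccentricity contrast here pools the two peripheral positions, which is a simplification of the separate foveal-versus-upper and foveal-versus-lower tests described in the text.

```python
import numpy as np

LOCATIONS = ("above", "fixation", "below")  # assumed row order of the table

def location_contrasts(cond_means):
    """cond_means: (3 locations, 4 categories) mean ROI response, one participant.

    Returns (eccentricity, elevation) after pooling across categories.
    """
    by_location = np.asarray(cond_means, dtype=float).mean(axis=1)
    above, fixation, below = by_location
    eccentricity = fixation - (above + below) / 2.0  # > 0: foveal bias
    elevation = above - below                        # > 0: upper-field bias
    return eccentricity, elevation
```

Computing these two values per participant yields one number per contrast and ROI, which can then be tested against zero across participants.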

Thus, all ROIs showed category information in terms of significantly different responses to different stimulus classes as expected from prior research. However, critically, nearly all ROIs also showed location information in the form of significantly different mean responses to different stimulus locations. These conclusions were reinforced by further analyses of mean responses (see SI Text).

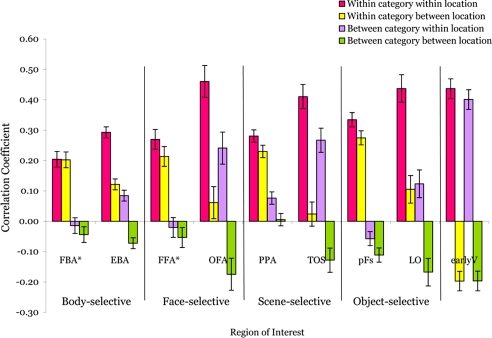

Multivariate Pattern Analyses.

Although our analyses of mean responses already demonstrate location information in nearly all ROIs, the complementary method of pattern analysis (7–9) enables us to see information coded at a finer grain and to ask whether category information is position invariant. To measure information contained in each ROI about object category and object location, we followed the method of Haxby et al. (7) (see Materials and Methods). For each subject and ROI, 144 correlations were computed, one for each of the possible combinations of one pattern from the even runs (12 conditions) with one pattern from the odd runs (12 conditions). For each subject and ROI, we then averaged over these 144 correlation values as a function of whether the even and odd conditions were from the same (within) or different (between) category, and whether they were from the same (within) or different (between) location. The resulting means across subjects are shown in Fig. 2.
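The split-half correlation procedure described above can be sketched as follows for one subject and one ROI. The function name, the (12 × voxels) array layout, and the location-major ordering of conditions are assumptions made for illustration; any preprocessing applied to the patterns before correlation is not reproduced here.

```python
import numpy as np
from itertools import product

N_LOC, N_CAT = 3, 4  # 3 locations x 4 categories = 12 conditions

def split_half_summary(even, odd):
    """even, odd: (12, n_voxels) patterns from even and odd runs.

    Assumed ordering: condition index = location * N_CAT + category.
    Returns the mean correlation in each (same_category, same_location)
    cell and the full 12 x 12 matrix of even-odd correlations.
    """
    n_cond = N_LOC * N_CAT
    # np.corrcoef stacks the rows of both inputs; the upper-right block
    # holds the 144 even-versus-odd correlations.
    r = np.corrcoef(even, odd)[:n_cond, n_cond:]
    cells = {(c, l): [] for c in (True, False) for l in (True, False)}
    for i, j in product(range(n_cond), repeat=2):
        same_cat = (i % N_CAT) == (j % N_CAT)
        same_loc = (i // N_CAT) == (j // N_CAT)
        cells[(same_cat, same_loc)].append(r[i, j])
    means = {key: float(np.mean(vals)) for key, vals in cells.items()}
    return means, r
```

Of the 144 correlations, 12 fall in the within-category/within-location cell, 24 in within-category/between-location, 36 in between-category/within-location, and 72 in between-category/between-location, so the four cell means are based on unequal numbers of values.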

Fig. 2.

Voxelwise pattern information as demonstrated by average correlations across voxels in each ROI. These correlations are plotted as a function of whether the two response patterns are from the same (within) or different (between) categories and the same (within) or different (between) locations. They reveal that nearly all ROIs demonstrate both category and location information.

Omnibus ANOVAs for each ROI.

To determine whether location information can be found with pattern analyses, we ran a 2 × 2 repeated-measures ANOVA with category (within or between) and location (within or between) as factors for each ROI. The results of this analysis can be seen in SI Table 2. In keeping with prior pattern-classification findings (7, 23–25), all object-selective ROIs demonstrated category information, as indicated by a significant main effect of higher correlations within category than between categories (all P values < 0.0005). In contrast, there was no evidence of category information in the posterior retinotopic area earlyV (P = 0.14). These findings were as predicted. However, strikingly, all regions except FBA* (P = 0.40) also demonstrated location information, as indicated by the significant main effect of higher correlations within location than between locations (all P values < 0.05). Among the ROIs, only LO demonstrated a significant interaction between category and location, such that there was more location information within than between categories (P < 0.005). These results show that information about location exists in nearly all category-selective regions in this study and that this location information is independent of category information for almost all of them.

Because foveal stimuli were considerably smaller than peripheral stimuli, the inclusion of data from all three retinal locations confounds location information with size information. To remove the size confound, we repeated the analysis on correlations from only the upper and lower stimulus positions. Results from this ANOVA are shown in SI Fig. 4 and SI Table 3. This analysis yielded the same pattern of results, with all ROIs demonstrating a main effect of category information and all except FBA* (P = 0.73) demonstrating location information. Moreover, this analysis revealed no interaction between category and location information in any of the regions (all P values ≥ 0.10).
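The 2 × 2 repeated-measures ANOVA described above can be sketched with a standard identity: in a fully within-subject 2 × 2 design, each effect has one degree of freedom, and its F(1, n − 1) equals the squared one-sample t statistic of the corresponding per-subject contrast. The function names and input layout are hypothetical, and whether the correlations were transformed before the ANOVA is not stated in the text, so raw cell means are assumed here.

```python
import numpy as np

def one_sample_t(x):
    """t statistic for the mean of x against zero (df = n - 1)."""
    x = np.asarray(x, dtype=float)
    return x.mean() / (x.std(ddof=1) / np.sqrt(x.size))

def rm_anova_2x2(ww, wb, bw, bb):
    """Per-subject mean correlations for the four cells of one ROI.

    ww: within-category / within-location
    wb: within-category / between-location
    bw: between-category / within-location
    bb: between-category / between-location
    Returns F(1, n - 1) for each effect as the squared contrast t.
    """
    ww, wb, bw, bb = (np.asarray(a, dtype=float) for a in (ww, wb, bw, bb))
    contrasts = {
        "category": (ww + wb) / 2 - (bw + bb) / 2,
        "location": (ww + bw) / 2 - (wb + bb) / 2,
        "interaction": (ww - wb) - (bw - bb),
    }
    return {name: one_sample_t(c) ** 2 for name, c in contrasts.items()}
```

To restrict the analysis to the upper and lower positions, the same function can be applied to cell means computed only from those two locations.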

Lateral versus ventral surfaces.

The functional areas defined in this experiment lie either on the ventral temporal or lateral occipital cortical surfaces, and are laid out such that one region with each category selectivity (bodies, faces, scenes, and objects) is situated on each surface. We next asked whether ventral and lateral regions differ in the amount of information they contain about category and location. To address this question we conducted four repeated-measures ANOVAs of different ROI pairs. Each pair had the same category preference, with one ROI on each surface: bodies > objects for FBA* (ventral) and EBA (lateral); faces > objects for FFA* (ventral) and OFA (lateral); scenes > objects for PPA (ventral) and TOS (lateral); and objects > scrambled objects for pFs (ventral) and LO (lateral). These ANOVAs were run separately on each ROI pair to maximize the number of individual ROIs included in the analyses, because no single participant exhibited every functional region in both hemispheres. Each ANOVA had a 2 × 2 × 2 design, with surface (ventral or lateral), category (within or between), and location (within or between) as factors. The results are shown in SI Table 4. All region pairs replicated the main effects of category (all P values < 0.001) and location (all P values < 0.05). None of the four ANOVAs showed a significant interaction between category and surface (all P values ≥ 0.12), suggesting that the lateral and ventral regions contain comparable amounts of category information. However, all four ANOVAs showed a significant interaction between cortical surface and location information, such that lateral regions contained significantly more location information than did ventral regions (all P values < 0.05). Therefore, the amount of location information is a distinguishing characteristic between lateral category-selective regions and their ventral counterparts.

The difference in location information between ventral and lateral surfaces could alternatively be accounted for by ROI size, because lateral ROIs are, on average, larger than their ventral counterparts. One of the best examples of this disparity is the difference in size between the scene-selective ROIs PPA (average size, 68 voxels) and TOS (average size, 160 voxels). To test whether ROI size may be mediating the effect, we created PPA and TOS control ROIs comprising 15 contiguous voxels each (see SI Text for details) and ran the same surface × location × category repeated-measures ANOVA described above. The complete statistical results from that test are shown in SI Table 5. Crucially, the analysis revealed a significant surface × location interaction (P < 0.05), replicating the original finding with whole ROIs of greater location information in lateral than ventral regions and ruling out ROI size as a factor mediating this effect.

Position-invariant category information.

One of the central challenges of object recognition is the ability to identify an object independent of where it appears in the visual field (26). The neural basis of this ability has been investigated at the level of individual neurons by asking whether the neuron's profile of response across different object categories is preserved despite changes in the retinal location of the stimulus (27). The question can also be asked of population codes across multiple neurons (28, 29) or voxels, using pattern analysis methods. Here, we asked whether the category information present in our ROIs is invariant to changes in stimulus position. Note that the presence of location information does not preclude position-invariant category information in an ROI; rather, the same neural pattern can contain both types of information (28, 30). All ROIs except LO fail to show an interaction between category and location, indicating that these ROIs do not contain significantly more category information when position is held constant (i.e., within locations) than when it is not (i.e., between locations). It is important to note, however, that the statistical independence of location and category information in the pattern analyses conducted here does not imply that the two kinds of information do not interact at the level of individual voxels or neurons.

To further test for position-invariant category information, we compared the amount of category information present when stimuli are displayed in different retinal locations (that is, within-category versus between-category correlations when both are between-location), using 2-tailed, paired t tests. By this measure, all eight object- and shape-selective ROIs demonstrated position-invariant category information (all P values ≤ 0.001), whereas the retinotopic control area earlyV did not (P = 0.98). See SI Table 6 for individual P values from these tests of position-invariant category information.

Just as the responses of a neuron or, on a larger scale, a cortical area can contain position-invariant category information, they can also contain category-invariant position information. As a measure of this, we used greater correlations for within than between locations when the stimuli were from two different object categories. The results of this analysis are shown in SI Table 6. We found significant category-invariant position information in all ROIs (P values < 0.005) except FBA* (P = 0.23) and, marginally, FFA* (P = 0.07).
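The two invariance measures described above both compare one cell of the correlation table against the between-category/between-location baseline. A minimal sketch, assuming per-subject cell means are already available as arrays (the function and key names are hypothetical, and only the paired t statistic is computed, not its P value):

```python
import numpy as np

def paired_t(a, b):
    """Paired t statistic for a versus b across subjects (df = n - 1)."""
    d = np.asarray(a, dtype=float) - np.asarray(b, dtype=float)
    return d.mean() / (d.std(ddof=1) / np.sqrt(d.size))

def invariance_tests(wb, bw, bb):
    """wb: within-category / between-location correlations per subject
    bw: between-category / within-location correlations per subject
    bb: between-category / between-location correlations per subject
    """
    return {
        # Category information that survives a change in location.
        "position_invariant_category": paired_t(wb, bb),
        # Location information that survives a change in category.
        "category_invariant_position": paired_t(bw, bb),
    }
```

A large positive t for the first entry indicates that patterns for the same category remain more similar than patterns for different categories even when the stimuli appear at different locations; the second entry is the analogous test for location across categories.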

The results of these analyses indicate that all of the object-selective ROIs contain category information that is independent of stimulus location. This finding is particularly striking given our other analyses showing that nearly all of these regions also contain substantial location information. Representations in these regions are not position-invariant in the strictest sense, because they change with stimulus position. Nonetheless, our data show that the category information represented by the profiles of response in these regions is preserved across changes in stimulus position.

Confirmation of results with independent classification method.

To make sure that our results are not specific to the pattern analysis method we used, we also applied a linear support vector machine to our experimental dataset. See SI Text for the details of this analysis. Classification performance revealed category information in all ROIs (P values < 0.005) and location information in all ROIs (P values < 0.05) except PPA (P = 0.09). Classification performance for each ROI is shown in SI Fig. 5, and individual P values are listed in SI Table 7.

Discussion

Our study provides a broad-based survey of category and location information across functionally defined object-selective regions, as measured by both means and multivariate pattern analyses. A number of important new findings were revealed. First, a substantial amount of information about object location was found in all ROIs except FBA*, even though these ROIs were defined by their selectivity for object shape or category. Second, category and location information are independent of one another in all regions except LO. Thus, despite the substantial amount of location information in nearly all ROIs, every object-selective ROI demonstrated significant position-invariant category information, in the sense that categories could be discriminated based on the pattern of response across voxels in that ROI even when this analysis was conducted across locations. Finally, we found more location information in the ROIs on the lateral surface (EBA, OFA, TOS, and LO) than in those on the ventral surface (FBA*, FFA*, PPA, and pFs), even though the two surfaces did not differ in the amount of information they contained about object category. These findings bear on a number of questions about the overall organization of the occipitotemporal pathway, which we discuss in turn.

Do Category and Location Information Coexist in Object-Selective Regions?

Although studies have shown the presence of location information in some cortical regions with strong selectivity for objects or categories (5, 6, 17, 18), our study shows that location information is a systematic property found in nearly all of the known object- and category-selective regions in humans. This location information is manifested in most of the ROIs by each of our two independent measures (see SI Fig. 6): (i) significant differences in mean response to stimuli presented in different locations (Fig. 1) and (ii) higher correlations across voxels within than between locations (Fig. 2). Category information is also present in all object-selective ROIs by both measures (Figs. 1 and 2). Thus, contrary to the strict interpretation of the original “dual pathway model” (1), category information and location information coexist in object-selective extrastriate cortical regions in humans, including those in the occipitotemporal pathway.

Do Category and Location Information Interact within ROIs?

The finding that information about category and location coexist in the same cortical areas raises the question of whether these types of information interact. This question goes right to the core of our understanding of vision. It is frequently argued that the central problem of vision is object recognition (26), and the crux of object recognition is solving the problem of invariance, that is, appreciating the sameness of an object despite the different images it casts as it moves across the retina. Segregating information into the “what” and “where” pathways is one way to achieve position invariance. However, both kinds of information can be represented independently in the same neural population code in the sense that either kind of information can be easily extracted (with a simple linear classifier) from the same population of neurons (28). Further, by keeping category information and location information together in the same neural substrate, it is possible to both extract position-invariant category and category-invariant position information and unite category and location information for perception, which is needed to solve the “binding problem” (29, 31) and hence to “know what is where by looking” (32). Thus, the ideal representation would contain in the same neural substrate both position-invariant category information and category-invariant position information.

The ROIs investigated here appear to contain just such an ideal representation. Our pattern analyses showed that categories can be distinguished just as well across locations as within locations in nearly all ROIs, providing striking evidence for position invariance of category information in most of the object-selective regions in our analysis. Further, most ROIs also showed a large amount of location information, which was just as strong within categories as between categories. Thus, analyses of fMRI patterns, like previous, more fine-grained analyses of local neural population codes (28), show that location and category information coexist independently at the population level in nearly all of the regions of occipitotemporal cortex.

Why Do Category-Selective Regions Come in Pairs?

A notable feature of extrastriate cortex is that functionally defined category-selective regions seem to come in pairs. This phenomenon has been described for several categories, including bodies, faces, scenes, tools, and shape-selective areas (10, 11, 18, 21, 33). For each category pair, one of these regions is located on the ventral surface of the temporal lobe (FBA*, FFA*, PPA, and pFs), whereas the other is situated on the lateral occipital surface (EBA, OFA, TOS, and LO). The reason for this paired organization is not yet understood. Studies have shown that specific pairs of regions on the two surfaces differ in their sensitivity to features such as motion (18, 33), eccentricity (17), size and location (21), and object completeness (34). However, each of these studies tested only a small subset of object-selective regions. Our study, which systematically examined location information across a large set of object-selective ROIs using pattern analyses, found that lateral regions contain substantially more location information than do ventral regions, despite having equal amounts of category information. This systematic difference in the amount of location information between the two surfaces provides a preliminary clue of how the two surfaces differ in the representations they contain and computations they perform.

Why Are Combinations of Category and Location Selectivity Consistent Across ROIs?

Studies have reported that scene-selective areas show a higher response to stimuli in the periphery, whereas face-selective regions show a higher response to foveal stimuli (17, 18). These studies have proposed that these particular combinations of selectivities reflect the different computational requirements for processing each category: large-scale integration for scenes and fine-grained acuity for faces (17, 35). Although the results of our study generally replicate the peripheral preference of scene-selective regions and the foveal preference of face-selective regions, we also found biases for elevation in some of these and other ROIs. For example, both scene-selective areas, PPA and TOS, responded more strongly to upper than lower visual field locations, even though stimulus eccentricities were matched. Similarly, the EBA preferred lower visual field stimuli to both foveal and upper visual field stimuli, which activated the region equally. These findings, like earlier reports of contralateral biases in object-selective regions (13–16), do not fit within the fovea/periphery framework, and it is not clear how the computational-demands hypothesis (35) could account for them.

An alternative explanation for consistent combinations of category and location selectivities appeals instead to the statistics of experience (36); for example, to the extent that humans naturally tend to foveate faces (35), the foveal bias in face-selective areas and the lower visual field bias in the body-selective area EBA may reflect the locations where these stimuli are typically seen in daily life. Perhaps regions of cortex with a preexisting category selectivity develop location preferences corresponding to the retinal location where that object is typically seen. Alternatively, location biases might arise first in the cortex, with category selectivities arising in those regions of cortex already biased toward the location where that object typically occurs. Note, however, that the difference in location biases in the body-selective areas EBA and FBA* suggests that pairings of category and location selectivity are not perfectly consistent across ROIs. Thus, although experiential statistics would seem to provide a better account of the specific combinations of category and location selectivities than do computational requirements, neither can account for all of the data.

Further Questions.

Although the results of this study yield several new insights about the relationship of location and category information in extrastriate cortex, they also raise many new questions. First, how precise is the location information contained in these category-selective areas? Here we sampled only three locations, many degrees apart; it is unclear how many different locations these ROIs can discriminate and whether such finer-grained location information can be detected with fMRI (at the present or perhaps higher spatial resolution). Second, does the location information reported here reflect retinotopic location or absolute location independent of eye position (16)? Third, is the location information revealed in this study epiphenomenal, or does it contribute to perception and behavior (37)? Fourth, does the location information reflect retinotopic organization within these regions? The correlational analyses used here are blind to the adjacencies of voxels and so cannot answer this question; however, the apparent overlap of some of our object-selective regions with retinotopic visual areas is suggestive. Finally, will the information in each ROI reported here be recoverable when participants view complex scenes containing multiple objects (38)? Regardless of how these questions are ultimately resolved, the present study provides the foundation for a better understanding of the information content and, hence, the function of each of the major object-selective regions in the occipitotemporal pathway.

Materials and Methods

Stimuli and Design.

Participants performed blocked localizer scans to identify ROIs and separate blocked experimental scans to measure the response of these regions to stimuli of different categories in different locations. Scans of each type (localizer and experimental) alternated throughout the scan session.

Participants completed five or six runs of the localizer scans, each of which consisted of three 16-s blocks of fixation and two 16-s blocks for each of five different stimulus classes (headless bodies, faces, outdoor scenes, assorted everyday objects, and grid-scrambled versions of those objects). The conditions were presented in palindromic order within each run, and the serial position of each condition was counterbalanced within participants across the scan session. For each block of the localizer scan, 20 images from a single stimulus class were foveally presented (300 ms per image, with a 500-ms interstimulus interval). Scrambled object stimuli were constructed by superimposing a grid over the objects and relocating the component squares randomly (39). To ensure that participants paid attention when they freely viewed the images, they performed a one-back task in which they were asked to make a key-press whenever images were repeated consecutively, which happened 20 times per scan.

In the same scan session as the localizer runs, participants performed between 8 and 12 runs of a blocked experiment designed to test category and location selectivity in the ROIs. For these scans, participants were instructed to fixate on a central cross while images were presented at one of three locations (at, above, or below fixation, with 5.25° of visual angle between the center of the image and the center of the fixation cross in the above and below conditions). To roughly equate performance across conditions, peripheral images had to be scaled by more than the standard cortical magnification (40). Foveal stimuli were 1.6° wide and high, whereas peripheral stimuli were 7.8° wide and high. Thus, foveal and peripheral images occupied nonoverlapping locations in visual space with an intervening gap of 0.55°, as shown in SI Fig. 7. The stimuli used in these scans belonged to one of four categories: headless bodies, faces, cars, and scenes. Completely nonoverlapping sets of stimulus images (40 images per condition in each) were used for the localizer and experimental scans, and all experimental runs drew from the same set of stimuli. Each stimulus class was presented in each location for one block in every run (resulting in 12 conditions and 12 visual blocks per run). Each 16-s block consisted of 20 image presentations (300 ms per image with a 500-ms ISI). Location and stimulus class remained constant within a block. In addition, each run contained two 16-s fixation blocks. During these experimental scans, participants performed the same one-back task described above. Conditions were counterbalanced across runs to control for block ordering effects.
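The geometry and timing figures quoted above can be checked with a few lines of arithmetic. The Python sketch below uses only values stated in the text (plus the 2-s repetition time given under Functional Imaging); the variable names are illustrative, and the run length it derives ignores any lead-in or discarded volumes, which the text does not describe.

```python
# Values stated in the text (degrees of visual angle, milliseconds, seconds).
ECCENTRICITY_DEG = 5.25       # fixation cross to center of a peripheral image
FOVEAL_SIZE_DEG = 1.6         # width and height of foveal images
PERIPHERAL_SIZE_DEG = 7.8     # width and height of peripheral images
IMAGE_MS, ISI_MS = 300, 500
IMAGES_PER_BLOCK = 20
CATEGORIES = ["bodies", "faces", "cars", "scenes"]
LOCATIONS = ["above", "fixation", "below"]
FIXATION_BLOCKS_PER_RUN = 2
TR_S = 2.0                    # repetition time from the Functional Imaging section

# Gap between the edge of the foveal image and the near edge of a peripheral image.
peripheral_inner_edge = ECCENTRICITY_DEG - PERIPHERAL_SIZE_DEG / 2
foveal_outer_edge = FOVEAL_SIZE_DEG / 2
gap_deg = peripheral_inner_edge - foveal_outer_edge

# Block duration implied by the presentation rate.
block_s = IMAGES_PER_BLOCK * (IMAGE_MS + ISI_MS) / 1000

# One block per category-by-location condition, plus the fixation blocks.
n_conditions = len(CATEGORIES) * len(LOCATIONS)
run_s = (n_conditions + FIXATION_BLOCKS_PER_RUN) * block_s
volumes_per_run = run_s / TR_S

print(f"gap = {gap_deg:.2f} deg, block = {block_s:.0f} s, "
      f"conditions = {n_conditions}, run = {run_s:.0f} s "
      f"({volumes_per_run:.0f} volumes)")
```

The computed gap and block duration agree with the 0.55° and 16 s reported in the text.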

Four participants took part in a separate retinotopic mapping scan session, during which they viewed chromatic, continuously rotating wedges or expanding/contracting rings while performing a contrast decrement detection task at fixation. These participants each viewed three or four runs of rotating wedge angular mapping, two runs of ring eccentricity mapping, and five or six localizer runs. Full details of the retinotopic mapping stimuli and analysis methods are provided in ref. 41.

Functional Imaging.

Thirteen participants were scanned on a 3.0 T Siemens TimTrio scanner at the Martinos Imaging Center at the McGovern Institute for Brain Research, using a Siemens 12-channel Matrix head coil. Images were acquired with a gradient echo single-shot echo planar imaging sequence with a repetition time of 2 s, a flip angle of 90°, and an echo time of 33.7–34.0 ms. Twenty to 26 2-mm-thick slices were manually aligned approximately perpendicular to the calcarine sulcus to cover most of occipital, posterior parietal, and posterior temporal cortex. Voxel dimensions were 1.4 × 1.4 × 2.0 mm with a 0.4-mm interslice gap. In addition, one or two high-resolution MPRAGE anatomical scans were acquired for each participant in the same scan session. The same scan parameters and similar slice prescriptions were used in the retinotopic mapping sessions. For 8 of the 13 participants, we monitored eye movements during the scans with an ISCAN model RK-826PCI pupil/corneal reflection tracking system. The data from four participants (including three who were scanned with the eye tracker) were excluded from further analysis because of excessive head motion (total vector motion >2.5 mm or rotation >3.5°).

Data Analysis.

Data analysis was performed by using Freesurfer and FS-FAST software (http://surfer.nmr.mgh.harvard.edu). The acquired images were motion corrected (42) before statistical analysis and smoothed with a Gaussian kernel of 3 mm full width at half maximum (FWHM) for localizer runs and 2 mm FWHM for retinotopic mapping scans. Data from experimental runs were not smoothed.
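For readers more used to thinking of a Gaussian kernel in terms of its standard deviation, the standard relation is sigma = FWHM / (2 * sqrt(2 * ln 2)), or roughly FWHM / 2.355. The short Python sketch below applies it to the two kernel widths quoted above and also expresses the localizer kernel in units of the 1.4-mm in-plane voxel size. The function and variable names are illustrative and are not part of FS-FAST.

```python
from math import log, sqrt


def fwhm_to_sigma(fwhm_mm):
    """Convert a Gaussian full width at half maximum to a standard deviation."""
    return fwhm_mm / (2.0 * sqrt(2.0 * log(2.0)))


LOCALIZER_FWHM_MM = 3.0     # smoothing applied to localizer runs
RETINOTOPY_FWHM_MM = 2.0    # smoothing applied to retinotopic mapping runs
IN_PLANE_VOXEL_MM = 1.4     # in-plane voxel size from the acquisition

sigma_localizer = fwhm_to_sigma(LOCALIZER_FWHM_MM)
sigma_retinotopy = fwhm_to_sigma(RETINOTOPY_FWHM_MM)
localizer_fwhm_in_voxels = LOCALIZER_FWHM_MM / IN_PLANE_VOXEL_MM

print(f"localizer sigma  = {sigma_localizer:.3f} mm")
print(f"retinotopy sigma = {sigma_retinotopy:.3f} mm")
print(f"localizer FWHM   = {localizer_fwhm_in_voxels:.2f} in-plane voxels")
```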

ROIs were individually defined for each participant, using the localizer scans. We then used fROI (http://froi.sourceforge.net) to extract the response magnitude for each voxel in each ROI to the various conditions in the separate experimental runs. The data and stimuli used to define the ROIs were entirely separate from those used to calculate the response magnitudes to each stimulus in each ROI. Response magnitudes were analyzed in two ways. First, we took the average of the response in all voxels within a given ROI to compute a mean percentage signal change value for each condition. Second, for the pattern analysis we followed the method of Haxby et al. (7). Specifically, we split data from the experimental scans in half, such that the odd runs were assigned to one dataset and the even runs were assigned to the other. Responses in each individual voxel were normalized separately for each dataset by subtracting the voxel's mean response across all stimulus conditions from its response magnitude to each of the individual stimulus conditions. This resulted in normalized responses of each voxel for each condition in each of the two datasets (from even and odd runs), yielding two voxelwise patterns of response (one per dataset) for each condition in each ROI. For each ROI, 144 correlations were computed by correlating the pattern of response for each of the 12 stimulus conditions in one dataset with the pattern for each of the 12 conditions in the other (12 × 12 = 144). Finally, these correlations were binned and averaged based on whether the correlated conditions were within category or location (e.g., face_odd–face_even or upper_odd–upper_even), or between category or location (e.g., face_odd–car_even or upper_odd–lower_even).
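The split-half pattern analysis described above can be sketched in a few lines of Python. This is a minimal illustration on simulated data, not the analysis code used in the study: the condition labels, the simulated voxel responses, and the four-way binning (same or different category crossed with same or different location) are assumptions made for the example.

```python
import random
from itertools import product
from math import sqrt

CATEGORIES = ["face", "body", "scene", "car"]
LOCATIONS = ["upper", "middle", "lower"]
CONDITIONS = list(product(CATEGORIES, LOCATIONS))   # 12 conditions


def normalize(patterns):
    """Subtract each voxel's mean across all conditions (done per dataset)."""
    n_voxels = len(next(iter(patterns.values())))
    means = [sum(p[v] for p in patterns.values()) / len(patterns)
             for v in range(n_voxels)]
    return {cond: [x - m for x, m in zip(p, means)]
            for cond, p in patterns.items()}


def pearson(x, y):
    """Pearson correlation between two equal-length voxel patterns."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    dx = [a - mx for a in x]
    dy = [b - my for b in y]
    num = sum(a * b for a, b in zip(dx, dy))
    return num / sqrt(sum(a * a for a in dx) * sum(b * b for b in dy))


def split_half_bins(odd, even):
    """Correlate every odd-run pattern with every even-run pattern and bin."""
    odd, even = normalize(odd), normalize(even)
    bins = {}
    for (cat_o, loc_o), (cat_e, loc_e) in product(CONDITIONS, CONDITIONS):
        r = pearson(odd[(cat_o, loc_o)], even[(cat_e, loc_e)])
        key = ("within" if cat_o == cat_e else "between",   # category
               "within" if loc_o == loc_e else "between")   # location
        bins.setdefault(key, []).append(r)
    counts = {k: len(v) for k, v in bins.items()}
    averages = {k: sum(v) / len(v) for k, v in bins.items()}
    return averages, counts


# Simulated ROI: each voxel carries a category signal, a location signal,
# and independent noise in each half of the data (all hypothetical).
rng = random.Random(0)
N_VOXELS = 50
cat_signal = {c: [rng.gauss(0, 1) for _ in range(N_VOXELS)] for c in CATEGORIES}
loc_signal = {l: [rng.gauss(0, 1) for _ in range(N_VOXELS)] for l in LOCATIONS}


def simulate_half():
    return {(c, l): [cat_signal[c][v] + loc_signal[l][v] + rng.gauss(0, 0.5)
                     for v in range(N_VOXELS)]
            for c, l in CONDITIONS}


averages, counts = split_half_bins(simulate_half(), simulate_half())
for key in sorted(averages):
    print(key, counts[key], round(averages[key], 3))
```

In this toy ROI, higher within-category than between-category correlations indicate category information, and higher within-location than between-location correlations indicate location information. Comparing category bins across different locations (or location bins across different categories) is one way to ask whether that information is invariant to the other dimension.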

Although the mean population response magnitude and the voxelwise patterns of response can both demonstrate the presence of information about location and category in a region, they are orthogonal measurements that assess different neural phenomena. Pattern analyses assess the pattern of responses of subpopulations of neurons within an ROI and determine the degree to which this pattern is stable across datasets and conditions. If a change in the mean response occurs uniformly across the voxels in the ROI, it will have no effect on the corresponding correlations. Conversely, the spatial pattern can differ greatly between two conditions, but, if the average of the responses across all voxels remains the same, the mean will be unaffected by these changes. See SI Fig. 6, which illustrates the independent effects of changes of means versus spatial patterns.
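The independence of the two measures can be demonstrated with a toy example. In the Python sketch below, the six-voxel response values are made up purely for illustration: adding a constant to every voxel changes the mean but leaves the correlation with a reference pattern untouched, whereas rearranging the same values across voxels leaves the mean untouched but changes the correlation.

```python
from math import sqrt


def mean(x):
    return sum(x) / len(x)


def pearson(x, y):
    """Pearson correlation between two equal-length voxel patterns."""
    mx, my = mean(x), mean(y)
    dx = [a - mx for a in x]
    dy = [b - my for b in y]
    num = sum(a * b for a, b in zip(dx, dy))
    return num / sqrt(sum(a * a for a in dx) * sum(b * b for b in dy))


# Hypothetical responses of six voxels in two halves of the data.
reference = [0.3, 0.6, 0.2, 0.8, 0.5, 0.6]
baseline = [0.2, 0.5, 0.1, 0.9, 0.4, 0.7]

# Case 1: a uniform increase in every voxel (mean changes, pattern does not).
shifted = [v + 1.0 for v in baseline]

# Case 2: the same values rearranged across voxels (pattern changes, mean does not).
rearranged = baseline[::-1]

r_baseline = pearson(baseline, reference)
r_shifted = pearson(shifted, reference)
r_rearranged = pearson(rearranged, reference)

print(f"baseline:   mean = {mean(baseline):.3f}, r = {r_baseline:.3f}")
print(f"shifted:    mean = {mean(shifted):.3f}, r = {r_shifted:.3f}")
print(f"rearranged: mean = {mean(rearranged):.3f}, r = {r_rearranged:.3f}")
```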

Acknowledgments.

We thank Christina Triantafyllou for technical support; Hans Op de Beeck, Nuo Li, David Cox, and Niko Kriegeskorte for comments on the manuscript; and James DiCarlo and Daniel D. Dilks for advice about the analyses. This work was supported by National Institutes of Health Grant EY13455 (to N.K.), a National Science Foundation Graduate Research Fellowship, and a Sheldon Razin Graduate Student Fellowship (to R.F.S.).

Footnotes

The authors declare no conflict of interest.

This article contains supporting information online at www.pnas.org/cgi/content/full/0800431105/DC1.

Tong F, Kim DJ, Vision Sciences Society Annual Meeting, May 6–11, 2005, Sarasota, FL.

References

- 1. Ungerleider LG, Mishkin M. Two cortical visual systems. In: Ingle DJ, Goodale MA, Mansfield RJW, editors. Analysis of Visual Behavior. Cambridge, MA: MIT Press; 1982. pp. 549–586.

- 2. Goodale MA, Milner AD. Separate visual pathways for perception and action. Trends Neurosci. 1992;15:20–25. doi: 10.1016/0166-2236(92)90344-8.

- 3. Merigan WH, Maunsell JH. How parallel are the primate visual pathways? Ann Rev Neurosci. 1993;16:369–402. doi: 10.1146/annurev.ne.16.030193.002101.

- 4. Sereno AB, Maunsell JH. Shape selectivity in primate lateral intraparietal cortex. Nature. 1998;395:500–503. doi: 10.1038/26752.

- 5. Op De Beeck H, Vogels R. Spatial sensitivity of macaque inferior temporal neurons. J Comp Neurol. 2000;426:505–518. doi: 10.1002/1096-9861(20001030)426:4<505::aid-cne1>3.0.co;2-m.

- 6. DiCarlo JJ, Maunsell JH. Anterior inferotemporal neurons of monkeys engaged in object recognition can be highly sensitive to object retinal position. J Neurophysiol. 2003;89:3264–3278. doi: 10.1152/jn.00358.2002.

- 7. Haxby JV, et al. Distributed and overlapping representations of faces and objects in ventral temporal cortex. Science. 2001;293:2425–2430. doi: 10.1126/science.1063736.

- 8. Norman KA, Polyn SM, Detre GJ, Haxby JV. Beyond mind-reading: Multi-voxel pattern analysis of fMRI data. Trends Cogn Sci. 2006;10:424–430. doi: 10.1016/j.tics.2006.07.005.

- 9. Haynes JD, Rees G. Decoding mental states from brain activity in humans. Nat Rev Neurosci. 2006;7:523–534. doi: 10.1038/nrn1931.

- 10. Peelen MV, Downing PE. Selectivity for the human body in the fusiform gyrus. J Neurophysiol. 2005;93:603–608. doi: 10.1152/jn.00513.2004.

- 11. Schwarzlose RF, Baker CI, Kanwisher N. Separate face and body selectivity on the fusiform gyrus. J Neurosci. 2005;25:11055–11059. doi: 10.1523/JNEUROSCI.2621-05.2005.

- 12. Downing PE, Jiang Y, Shuman M, Kanwisher N. A cortical area selective for visual processing of the human body. Science. 2001;293:2470–2473. doi: 10.1126/science.1063414.

- 13. Niemeier M, Goltz HC, Kuchinad A, Tweed DB, Vilis T. A contralateral preference in the lateral occipital area: Sensory and attentional mechanisms. Cereb Cortex. 2005;15:325–331. doi: 10.1093/cercor/bhh134.

- 14. Hemond CC, Kanwisher N, Op de Beeck HP. A preference for contralateral stimuli in human object- and face-selective cortex. PLoS ONE. 2007;2:e574. doi: 10.1371/journal.pone.0000574.

- 15. Macevoy SP, Epstein RA. Position selectivity in scene- and object-responsive occipitotemporal regions. J Neurophysiol. 2007. doi: 10.1152/jn.00438.2007.

- 16. McKyton A, Zohary E. Beyond retinotopic mapping: The spatial representation of objects in the human lateral occipital complex. Cereb Cortex. 2007;17:1164–1172. doi: 10.1093/cercor/bhl027.

- 17. Levy I, Hasson U, Avidan G, Hendler T, Malach R. Center-periphery organization of human object areas. Nat Neurosci. 2001;4:533–539. doi: 10.1038/87490.

- 18. Hasson U, Harel M, Levy I, Malach R. Large-scale mirror-symmetry organization of human occipito-temporal object areas. Neuron. 2003;37:1027–1041. doi: 10.1016/s0896-6273(03)00144-2.

- 19. Brewer AA, Liu J, Wade AR, Wandell BA. Visual field maps and stimulus selectivity in human ventral occipital cortex. Nat Neurosci. 2005;8:1102–1109. doi: 10.1038/nn1507.

- 20. Larsson J, Heeger DJ. Two retinotopic visual areas in human lateral occipital cortex. J Neurosci. 2006;26:13128–13142. doi: 10.1523/JNEUROSCI.1657-06.2006.

- 21. Grill-Spector K, et al. Differential processing of objects under various viewing conditions in the human lateral occipital complex. Neuron. 1999;24:187–203. doi: 10.1016/s0896-6273(00)80832-6.

- 22. Levy I, Hasson U, Harel M, Malach R. Functional analysis of the periphery effect in human building related areas. Hum Brain Mapp. 2004;22:15–26. doi: 10.1002/hbm.20010.

- 23. Ishai A, Ungerleider LG, Martin A, Schouten JL, Haxby JV. Distributed representation of objects in the human ventral visual pathway. Proc Natl Acad Sci USA. 1999;96:9379–9384. doi: 10.1073/pnas.96.16.9379.

- 24. Spiridon M, Kanwisher N. How distributed is visual category information in human occipito-temporal cortex? An fMRI study. Neuron. 2002;35:1157–1165. doi: 10.1016/s0896-6273(02)00877-2.

- 25. O'Toole AJ, Jiang F, Abdi H, Haxby JV. Partially distributed representations of objects and faces in ventral temporal cortex. J Cogn Neurosci. 2005;17:580–590. doi: 10.1162/0898929053467550.

- 26. Ullman S. High-Level Vision: Object Recognition and Visual Cognition. Cambridge, MA: MIT Press; 1996.

- 27. Ito M, Tamura H, Fujita I, Tanaka K. Size and position invariance of neuronal responses in monkey inferotemporal cortex. J Neurophysiol. 1995;73:218–226. doi: 10.1152/jn.1995.73.1.218.

- 28. Hung CP, Kreiman G, Poggio T, DiCarlo JJ. Fast readout of object identity from macaque inferior temporal cortex. Science. 2005;310:863–866. doi: 10.1126/science.1117593.

- 29. Cox DD. Dissertation. Cambridge, MA: Massachusetts Institute of Technology; 2007. Reverse Engineering Object Recognition.

- 30. DiCarlo JJ, Cox DD. Untangling invariant object recognition. Trends Cogn Sci. 2007;11:333–341. doi: 10.1016/j.tics.2007.06.010.

- 31. Treisman A. The binding problem. Curr Opin Neurobiol. 1996;6:171–178. doi: 10.1016/s0959-4388(96)80070-5.

- 32. Marr D. Vision: A Computational Investigation into the Human Representation and Processing of Visual Information. New York: Freeman; 1982.

- 33. Beauchamp MS, Lee KE, Haxby JV, Martin A. Parallel visual motion processing streams for manipulable objects and human movements. Neuron. 2002;34:149–159. doi: 10.1016/s0896-6273(02)00642-6.

- 34. Taylor JC, Wiggett AJ, Downing PE. fMRI analysis of body and body part representations in the extrastriate and fusiform body areas. J Neurophysiol. 2007. doi: 10.1152/jn.00012.2007.

- 35. Malach R, Levy I, Hasson U. The topography of high-order human object areas. Trends Cogn Sci. 2002;6:176–184. doi: 10.1016/s1364-6613(02)01870-3.

- 36. Kanwisher N. Faces and places: of central (and peripheral) interest. Nat Neurosci. 2001;4:455–456. doi: 10.1038/87399.

- 37. Williams MA, Dang S, Kanwisher NG. Only some spatial patterns of fMRI response are read out in task performance. Nat Neurosci. 2007;10:685–686. doi: 10.1038/nn1900.

- 38. Reddy L, Kanwisher N. Category selectivity in the ventral visual pathway confers robustness to clutter and diverted attention. Curr Biol. 2007. doi: 10.1016/j.cub.2007.10.043.

- 39. Kourtzi Z, Kanwisher N. Cortical regions involved in perceiving object shape. J Neurosci. 2000;20:3310–3318. doi: 10.1523/JNEUROSCI.20-09-03310.2000.

- 40. Duncan RO, Boynton GM. Cortical magnification within human primary visual cortex correlates with acuity thresholds. Neuron. 2003;38:659–671. doi: 10.1016/s0896-6273(03)00265-4.

- 41. Swisher JD, Halko MA, Merabet LB, McMains SA, Somers DC. Visual topography of human intraparietal sulcus. J Neurosci. 2007;27:5326–5337. doi: 10.1523/JNEUROSCI.0991-07.2007.

- 42. Cox R, Jesmanowicz A. Real-time 3D image registration for functional MRI. Magn Reson Med. 1999;42:1014–1018. doi: 10.1002/(sici)1522-2594(199912)42:6<1014::aid-mrm4>3.0.co;2-f.
