Abstract

Clinical performance measurement and public reporting are taking center stage nationwide, linked to transparency initiatives and incentive systems that reward physicians for meeting endorsed quality standards. While electronic medical records (EMRs) are increasingly available to measure and improve quality of care, performance measurement continues to be dominated by the use of insurance claims. Limitations to claims-based measurement include challenges in assigning attribution of care to specific physicians, inefficient and incomplete sampling methods, and the coarseness of measures frequently available to insurers. Practice improvement using claims-based approaches is further limited by the inability to provide timely and specific feedback to physicians and their patients. Finally, in claims-based approaches, care is not measured for the 47 million uninsured patients in the United States. In the current presentation I describe how these limitations are being addressed using EMRs, highlighting the design and selected preliminary results of a large trial to improve the care of patients with diabetes.

Introduction and Context

Spurred by the Institute of Medicine's Quality Chasm series, clinical performance measurement and public reporting have taken center stage in the United States over the past half decade (1). The Bush administration has taken a lead role in these initiatives through its commitment to health care transparency and related efforts to encourage the nationwide dissemination of health information technology (2). Adopting other IOM recommendations to “lead by example” (3), the federal government also is beginning to leverage its enormous purchasing power - it operates six major health programs that serve nearly 100 million Americans - to create financial incentives that reward physicians who meet nationally endorsed standards of care for a variety of conditions (4, 5). In establishing such “pay-for-performance” approaches, the federal government expects to accelerate the adoption of similar ongoing programs in the private sector (6, 7).

While electronic medical records (EMRs) are increasingly available to measure and improve quality of care, performance measurement and public reporting to date have relied heavily on administrative, insurance-based claims for services, sometimes augmented by manual abstraction of a small sample of a given physician's medical records. However, there are several limitations to using claims for measurement and practice-centered improvement, beginning with challenges to the accurate assignment of responsibility for care to specific physicians or practices (called “attribution”). In a recent study of Medicare claims-based assignments by Pham and colleagues (8), for example, almost 50% of patients were identified as having changed physicians over a two-year period as determined by their claims-centered algorithm. Further, although there are ongoing efforts that seek to “harmonize” measures across systems (4, 9), different performance standards may be employed by different insurers for a given condition, based, in part, on their information systems' capabilities and their varying ability to access different measures. As a result, physicians may be confused by the variation across standards for a given condition and “lose the value” of performance measurement as a basis for quality improvement (10).

Additional limitations to claims-based performance improvement include the lack of timeliness of relevant feedback (because of the need for claims to be processed and adjudicated beforehand), its narrowness in scope (because “hybrid” approaches sample only a small fraction of a given physician's insured practice panel), inefficiency (especially when the responsibility for records abstraction needs to be borne by the physician or his/her practice), and lack of granularity in measurement (with claims-centered approaches typically unable to identify potentially important exclusions from a given standard, such as a documented allergy or clinical contraindication to a given medication). Others have raised issues of biases intrinsic to claims-based approaches (11–14). While all of these are important challenges to claims-based approaches, the absence of data pertaining to quality of care for America's 47 million uninsured is of special significance. With no common external repository of claims, this creates an essentially “invisible” but increasingly large number of patients for whom the principal sources of data are media-centered anecdotes and analyses of historical survey and utilization data.

In the current paper, I describe how some of these limitations to claims-based measurement and improvement can be addressed using ambulatory practice-based electronic medical records. I will highlight the application of EMRs as they are being employed in a large federally-funded trial to improve the care of patients with diabetes.

OVERVIEW: THE DIABETES IMPROVEMENT GROUP-INTERVENTION TRIAL

The Diabetes Improvement Group-Intervention Trial (DIG-IT), supported by the federal Agency for Health Care Research and Quality, was designed as a cluster-randomized trial of alternative EMR-catalyzed approaches to improve the care of patients with diabetes. Although two health care systems with 24 group practices participated in DIG-IT, to simplify the discussion in this paper, I will focus on the trial's design and preliminary results from the 10 group practices of one of these systems. Since 2000, each system has implemented the same commercial EMR (Epic® Systems, Madison, Wisconsin). Using the EMR's database, we identified pre-intervention data pertaining to the 10 practices to balance their characteristics before assigning them randomly to one of two interventions: (a) Clinical Decision Support (CDS), the experimental intervention, in which we augmented the functions of the EMR to provide prompts, automated links to order sets, and graphical performance feedback to experimental group physicians and practices; and (b) “EMR Only”, the control group intervention, which retained basic decision support functions provided by the commercial EMR system, but was not further enhanced during the period of the trial. The study was approved by the Institutional Review Boards at both participating institutions. A detailed description of the study design and the baseline results of the study groups is provided elsewhere (15). The sections below intertwine our methods and selected preliminary results, highlighting how our EMR-catalyzed experimental intervention addressed selected limitations of claims-based approaches described above.

EMR-BASED METHODS FOR IDENTIFYING PATIENTS AND ATTRIBUTING RESPONSIBILITY FOR THEIR CARE

Premise:

Efforts to measure and report condition-specific and physician- or practice-level performance require accurate and complete linking of all patients with the given condition to the physician or practice to which care is attributed.

Approach:

Patients in the trial were identified by conventional medical records-based codes for diabetes on their problem list or documented prescriptions for insulin or other specific hypoglycemic medication. We wished to identify all of our practices' adult diabetic patients for whom we accepted primary longitudinal responsibility - our systems being “open” and not “closed” or staff-model health maintenance organizations - by requiring at least two kept primary care physician appointments spaced by at least one month. These diagnostic and “continuity” measures were easily retrieved and updated weekly from the EMR database. Over 7000 patients among the 10 participating practices were so identified during the two-year trial period. Current insurance status (classified as Medicare, Medicaid, Commercial, or uninsured) was identified for all patients.
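The identification rule described above can be sketched in a few lines of code. This is only an illustration under stated assumptions, not the trial's actual query: the record layout, the code and drug lists, the adult age cut-off of 18, and the reading of "at least one month" as 30 days are all hypothetical choices made for the example.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta

# Hypothetical stand-ins for EMR fields; not the trial's actual schema.
DIABETES_PROBLEM_CODES = {"250.00", "250.02"}  # illustrative ICD-9 codes only
HYPOGLYCEMIC_DRUGS = {"insulin", "metformin", "glipizide"}  # illustrative subset
MIN_VISIT_SPACING = timedelta(days=30)  # "at least one month", approximated as 30 days
ADULT_AGE = 18  # assumed definition of "adult"


@dataclass
class PatientRecord:
    patient_id: str
    age: int
    problem_list: set = field(default_factory=set)
    medications: set = field(default_factory=set)
    kept_pcp_visits: list = field(default_factory=list)  # dates of kept primary care visits


def has_diabetes_evidence(p: PatientRecord) -> bool:
    """Diabetes code on the problem list, or a documented hypoglycemic prescription."""
    return bool(p.problem_list & DIABETES_PROBLEM_CODES) or bool(
        p.medications & HYPOGLYCEMIC_DRUGS
    )


def meets_continuity(p: PatientRecord) -> bool:
    """At least two kept visits, with the earliest and latest at least a month apart."""
    if len(p.kept_pcp_visits) < 2:
        return False
    return max(p.kept_pcp_visits) - min(p.kept_pcp_visits) >= MIN_VISIT_SPACING


def in_cohort(p: PatientRecord) -> bool:
    return p.age >= ADULT_AGE and has_diabetes_evidence(p) and meets_continuity(p)
```

Because both criteria are computed from data already in the EMR, a filter of this kind could in principle be re-run on each weekly refresh of the database.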

For attributing responsibility for care, there having been no central repository of unambiguous links between patients and their primary care physicians, we used a “majority care” algorithm (8) to identify diabetic patient panels for each of 91 primary care physicians across the system's 10 group practices. After initializing these lists, or patient registries, we confirmed the links by asking each primary care physician two simple questions for each patient on his/her list: “Is this your patient?”, and “Does he/she have diabetes?” If the answer was “no” to either of these questions, we asked for further clarification and informed each physician that ambiguous links would be adjudicated by the group's medical director. Only 6 patient-physician links (<0.1%) required adjudication when the lists were first initialized, and no more than a dozen were questioned as the lists were updated weekly over the two-year period, including the identification of patients whose care transferred from one physician to another. Physicians had an average of 86 (interquartile range: 47–111) diabetic patients.
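A minimal sketch of how patient registries might be initialized follows. The text does not spell out the exact rule, so the sketch assumes that "majority care" means the physician with the largest number of a patient's primary care visits, and that ties or patients with no visits are set aside for manual review. The function names and the tie handling are illustrative assumptions.

```python
from collections import Counter


def assign_primary_physician(visit_physician_ids):
    """Return the physician with the most visits, or None if there is no clear leader."""
    counts = Counter(visit_physician_ids)
    if not counts:
        return None
    ranked = counts.most_common(2)
    if len(ranked) == 2 and ranked[0][1] == ranked[1][1]:
        return None  # tie: leave for manual review (an assumption of this sketch)
    return ranked[0][0]


def build_registries(visits_by_patient):
    """Group patients into per-physician lists; collect unresolved patients separately."""
    registries = {}
    needs_review = []
    for patient_id, physician_ids in visits_by_patient.items():
        physician = assign_primary_physician(physician_ids)
        if physician is None:
            needs_review.append(patient_id)
        else:
            registries.setdefault(physician, []).append(patient_id)
    return registries, needs_review
```

In the trial, the algorithmic assignment was only the starting point: each physician then confirmed or rejected the links, and the medical director adjudicated the few that remained ambiguous.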

EMR-BASED PERFORMANCE MEASURES

Premise:

To be meaningful to the physician and his/her patient, performance measures should reflect accurate and detailed information about actionable, evidence-based indicators of health care and outcomes. If a measure does not apply to a specific patient, the physician and his/her patient should be excluded for that measure.

Approach:

After literature review, we chose to adopt several of the care standards reported and updated annually by the American Diabetes Association (16). These included standards pertaining to monitoring and improving glycemia (hemoglobin A1c testing intervals and all results), renal impairment (testing intervals for microalbuminuria and use of ACE inhibitors or angiotensin receptor blockers, ARBs), lipid status (testing intervals, all LDL cholesterol results, and the use of statin medications), blood pressure (all measurements), body mass index (all measurements), smoking (documentation and current status), and the receipt of an eye examination by an ophthalmologist (within the past 12 months) and a pneumococcal vaccination (ever). Patients with measure-specific exclusions were identified.

Eight of these measures were combined in a composite score reflecting “optimal” care or intermediate outcomes for patients with diabetes. These eight measures were further divided into two groups of four: measures felt a priori to be easily accomplished through physician action, and measures felt to require more active patient engagement. The “physician-centric” measures included obtaining eye examinations, pneumococcal vaccinations, monitoring or treatment of kidney impairment with ACE inhibitors or ARBs, and control of lipid status (LDL cholesterol value <100 mg/dl or on a statin medication). The other, more patient-centered measures included achieving optimal glycemic control (hemoglobin A1c value <7%), blood pressure (<130/80 mm Hg), body mass index (<30), and non-smoking status. As displayed in Figure 2, we produced graphical displays of results for each component of the composite score, for each physician and his/her practice panel, with weekly updates.

EMR-FACILITATED CLINICAL DECISION SUPPORT (CDS)

Premise:

Performance improvement is the central purpose of performance measurement. At its core, effective clinical decision support (CDS) is intended to improve performance by giving the right information to the right person at the right time and place, making the correct action the easiest one to take.

Approach:

We designed several components to the EMR-facilitated CDS intervention, including encounter-based computerized prompts (“Best Practice Alerts”) that were linked to orders (“Smart Sets”) for specific tests, immunizations, treatments, or referrals; diabetes problem-specific educational materials for patients and physicians; and graphical and tabular on-line feedback reports for physicians that summarized their care for specific patients or their patient panels. Two types of CDS are displayed in Figures 1 and 2.

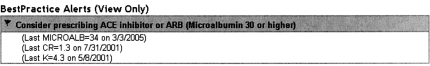

Fig. 1.

“Best Practice Alert” recommending that the physician consider prescribing an ACE inhibitor or angiotensin receptor blocker because of microalbuminuria in the diabetic patient he/she is seeing in the office. See text for further description.

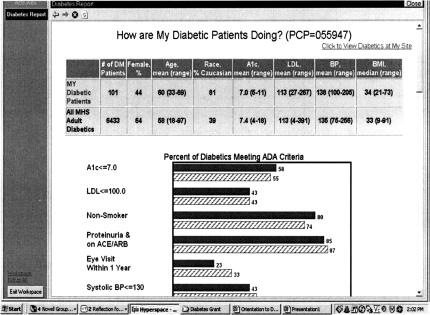

Fig. 2.

Comparative Feedback Report. Updated weekly, this chart compares the performance of a physician's patient panel with panels of other physicians within his/her practice, or with the entire health care organization. See text for further description.

Figure 1 displays an encounter-based “Alert”, prompting the physician to consider prescribing an ACE inhibitor or ARB medication for his/her patient. Key goals in the design of our Alerts were to provide timely and evidence-based recommendations that maximized the use of EMR data to minimize false-positive guidance - guidance that would lead to “alert fatigue” or dismissal of the recommendation.

For example, from Figure 1, we can infer the following about the patient: 1) that she is diabetic and is visiting her primary care physician; 2) that she has significant amounts of microalbuminuria (from laboratory data summarized in the Alert); 3) that she is not on an ACE inhibitor or ARB and has no documented allergies to them (understanding that “the system” has access both to her allergy and medication lists); and 4) that there are no other known contraindications to using an ACE inhibitor or ARB, specifically related to renal dysfunction or hyperkalemia (from laboratory data summarized in the Alert). Further, by clicking the button for the linked “Smart Set”, the physician will be able to choose from several alternative drugs and doses, which by another button click will result in the prescription being printed and the medication being documented in the patient's record.
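The conditions inferred from Figure 1 amount to a rule that fires only when every check passes. The sketch below is a hypothetical rendering of that logic, not the trial's Best Practice Alert configuration: the field names are invented, the laboratory cut-offs are placeholders because the text does not state the values used, and staying silent when a supporting laboratory value is missing is an assumption chosen to limit false-positive guidance.

```python
from dataclasses import dataclass, field
from typing import Optional

ACE_ARB_CLASSES = {"ace_inhibitor", "arb"}

# Hypothetical cut-offs for illustration only; the text does not state the values used.
MICROALBUMIN_THRESHOLD_MG_G = 30.0
MAX_POTASSIUM_MEQ_L = 5.5
MAX_CREATININE_MG_DL = 2.5


@dataclass
class Encounter:
    has_diabetes: bool
    is_primary_care_visit: bool
    urine_albumin_creatinine_mg_g: Optional[float]
    potassium_meq_l: Optional[float]
    creatinine_mg_dl: Optional[float]
    active_drug_classes: set = field(default_factory=set)
    allergy_drug_classes: set = field(default_factory=set)


def should_fire_ace_arb_alert(e: Encounter) -> bool:
    """Fire only if the patient qualifies and no documented reason argues against it."""
    if not (e.has_diabetes and e.is_primary_care_visit):
        return False
    labs = (e.urine_albumin_creatinine_mg_g, e.potassium_meq_l, e.creatinine_mg_dl)
    if any(value is None for value in labs):
        return False  # assumption: stay silent when supporting labs are missing
    if e.urine_albumin_creatinine_mg_g < MICROALBUMIN_THRESHOLD_MG_G:
        return False  # no significant microalbuminuria
    if e.active_drug_classes & ACE_ARB_CLASSES:
        return False  # already on an ACE inhibitor or ARB
    if e.allergy_drug_classes & ACE_ARB_CLASSES:
        return False  # documented allergy
    if e.potassium_meq_l > MAX_POTASSIUM_MEQ_L or e.creatinine_mg_dl > MAX_CREATININE_MG_DL:
        return False  # hyperkalemia or renal dysfunction
    return True
```

Each early return corresponds to one way an alert could be a false positive, which is the kind of guidance the design sought to avoid.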

Figure 2 displays a feedback report that provides comparative information on the current status of a given physician's patient panel with the patients of other physicians in his/her group practice or patients of all other physicians in the health system. It was designed to provide basic comparative data pertaining to the physician's “case mix” (age, sex, race, and average values of selected clinical parameters) as well as his/her panel's status relevant to the eight component measures of the composite diabetes measure described above. Importantly, this report is available through the EMR and is updated weekly, enabling the physician to see changes in performance as a result of changes in his/her actions or those of his/her patients.

MEASURING RESULTS ON ALL PATIENTS: POOR GLYCEMIC CONTROL BY INSURANCE STATUS

Premise:

Performance measurement and improvement should not be limited to the care of patients with coverage by specific payers or insurers. The annual State of Health Care Quality report of the National Committee on Quality Assurance (NCQA) (17) documents systematic variation in performance across patients covered by Medicare, commercial, and Medicaid insurers. These differences, and the measurement of care for patients without health care coverage, should be highlighted.

Approach and Preliminary Results:

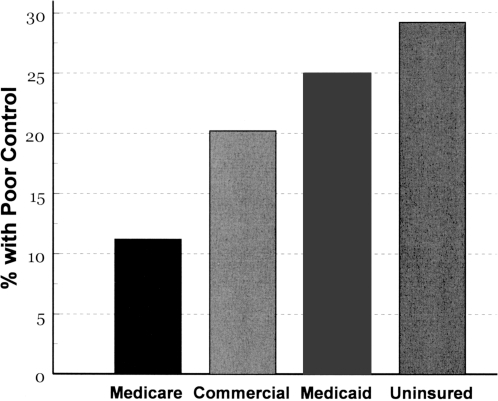

Implicit in the EMR-based practice-centered approach is its ability to measure and highlight challenges to care across all payer groups, including patients without health insurance. Figure 3 highlights pre-trial differences in conventionally defined “poor glycemic control” across patients defined by insurance classification. About 40% of the patients cared for by the 10 group practices were either uninsured or covered by Medicaid. Of these, 25% and almost 30%, respectively, had hemoglobin A1c values above 9%. Pay-for-performance methodologies typically reward physicians or provider groups when less than 20% of their panels have poor glycemic control.

Fig. 3.

Percentage of patients with poor glycemic control (hemoglobin A1c>9%) by insurance status, before the trial. About 40% of the system's patients were either uninsured or covered by Medicaid. See text for discussion.
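The stratified measure summarized in Figure 3 is simple to compute once insurance class and the latest hemoglobin A1c value are available for every patient. The following is a minimal sketch; the input format (pairs of insurance class and A1c value) and the function names are assumptions made for the example, and the 20% target reflects the typical pay-for-performance threshold mentioned in the text.

```python
from collections import defaultdict

POOR_CONTROL_A1C = 9.0   # "poor glycemic control" is hemoglobin A1c above 9%
P4P_TARGET_PERCENT = 20.0  # typical reward threshold cited in the text


def poor_control_rates(patients):
    """patients: iterable of (insurance_class, latest_a1c_percent) pairs."""
    totals = defaultdict(int)
    poor = defaultdict(int)
    for insurance, a1c in patients:
        totals[insurance] += 1
        if a1c > POOR_CONTROL_A1C:
            poor[insurance] += 1
    return {ins: 100.0 * poor[ins] / totals[ins] for ins in totals}


def meets_p4p_target(rate_percent: float) -> bool:
    """True when less than 20% of the group has poor glycemic control."""
    return rate_percent < P4P_TARGET_PERCENT
```

Because uninsured patients appear in the EMR like any others, they receive a rate of their own rather than disappearing from the denominator, as they would in a claims-based calculation.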

HELPING PHYSICIANS DO THE RIGHT THING: BUT WHAT ABOUT THE PATIENTS?

Premise:

An increasing body of evidence identifies the key role of patient engagement, or patient “activation”, in producing better health outcomes, especially in complex chronic conditions such as diabetes. Much less is known about how to effectively engage patients to be more active partners with their health care providers.

Approach and Preliminary Results:

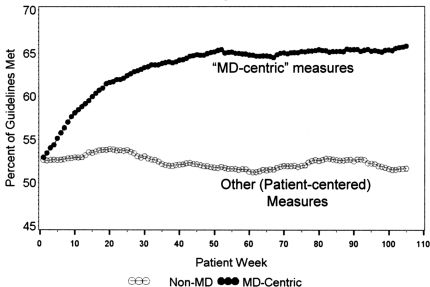

As described above, the DIG-IT project team identified eight “most important” diabetes criteria to summarize a composite measure of performance. Of these eight, four were classified a priori as “physician-centric”, relatively easily influenced by physician actions, and four were classified as “other”, requiring more patient engagement to improve. Although results for the component measures of each physician's panel were made continuously available to each physician in the CDS group, as displayed in Figure 2, neither the composite measure nor the results of each sub-group were examined until the completion of patient enrollment in July 2007. Figure 4 displays preliminary results for the percent of physician-centric and patient-centered measures that were met by CDS group patients over the 104-week (two-year) study period. The figure shows remarkable improvement in the physician-centric measures and disappointing results for measures that require better levels of patient engagement.

Fig. 4.

Changes in the percent of “physician-centric” and “Other” (more “patient-centered”) measures that were met over the trial (preliminary results). See text for discussion.

COMMENT

Several of the recognized limitations of claims-based performance measurement can be effectively addressed using a clinical practice-centered and EMR-based approach. In our trial, patient identification was straightforward, and the challenges of attributing care to specific physicians were addressed easily by using a “majority care” algorithm and asking the physicians two simple questions (“Is she your patient?” “Does she have diabetes?”). The EMR-based approach also has several relative strengths in the area of performance improvement. Clinical decision support using the EMR-based approach can be more timely, detailed, and take into account the particulars of a given patient's problems (e.g., allergies, recent laboratory-based contraindications) when either measuring or making recommendations about appropriate actions. Meaningful patient- and panel-level feedback can be continuous, ongoing, and demonstrably responsive to actions taken by the physician and his/her patients.

In addition, an EMR-based approach knows no insurance boundaries, enabling measurement of all patients, regardless of their ability to pay. Highlighting differences across payer status should focus our attention on class-specific patient barriers to care. Insurance-stratified performance measurement arguably should be reflected in pay-for-performance strategies, especially those sponsored by the government and employers, in order to optimize care and reduce the costs of care for all patients. Our preliminary results also show that even with EMR-facilitated clinical decision support, some measures appear to be much harder to improve than others. These findings should motivate additional research into methods to better engage patients in partnerships with their physicians, and other work to investigate methods to motivate physicians to be better partners themselves, and to welcome activated, well-informed patients.

ACKNOWLEDGMENTS

The work reported in this paper was supported by a grant from the Agency for Health Care Research and Quality: R01-05153. My thanks to all members of the DIG-IT team at the Cleveland Clinic and the MetroHealth System, with special gratitude to Peter J. Greco, M.D., Thomas E. Love, Ph.D., Doug Einstadter, M.D., M.P.H. and Scott S. Husak, M.S. for their help in designing our clinical decision support tools.

DISCUSSION

Schwartz, Philadelphia: The incremental value of doing some of these things in these multi-comorbid patients is, I think, not as evidence-based as we would like to think it is, and perhaps it involves some intuition on the part of the docs.

Cebul, Cleveland: I think that is great. I think that could be important in our whole thinking about how we use physician performance measures, because we don't take into account, a lot of times, the complexity of the patient. A lot of times, doctors may have reasons for not adding that other drug even though there is a guideline out there because of patient difficulties with compliance or other factors. So I think that would be a really good thing to look at.

Winchester, New York: I was thinking as you were going along that you have unique methodology to identify and confirm or refute things that have been happening recently. For instance, did you see any increased cardiovascular risk with Avandia?

Cebul, Cleveland: You know what? We are going to look at that. I have never been a fan of the TZDs in general, but Avandia in particular. We are able to look at that. We will be able to look at some cardiovascular outcomes. We are looking at heart failure visits in the emergency department and hospitalizations for that and so forth.

Jameson, Chicago: I was also interested in the mechanism of the plateau. So it looks like there is a burst of improvement with the medical decision support for 20 to 30 weeks, and then there is a desensitization that happens. I guess you will have ways to explore why that occurs. Are people turning off the decision support? Do they have that capability? Do you have patients who move from one physician to another, and then there is another burst of decision happening?

Cebul, Cleveland: Those are very good questions. They may have plateaued. They didn't appear to have gone down. That is one response to it. On the other hand, and in response to the point that Sandy Schwartz made (have we gotten to a point where there are other issues in the patient's life that may prevent the physician from pursuing that additional 30%?), I think that is a little bit too optimistic an explanation of why it happened. My guess is that there is at least some level of alert fatigue or something that causes physicians not to be as responsive as we might think they should be or that we should be. So I am not really sure what the answer to that is. I think if these, in fact, were linked to, for example, some performance incentives, there might have been more continued improvements than what we saw.

Neilson, Nashville: Randy, I enjoyed your talk. A philosophical question and, certainly, one we struggle with. Is making the measurement enough? Are we really measuring the right thing if we want to change physician behavior, and are there systems that allow you to design tests of changing physician behavior? There are subtle distinctions but, for example, the microalbuminuria question that was just raised. Well, we don't know whether physician behaviors will change that or not. There are lots of variables in all of this, but one is, it seems to me, if the quality movement is to mature, that physicians themselves are committed to being different and responsive to these things. So where is all of this sitting at the moment in this field?

Cebul, Cleveland: The other initiative that I alluded to, that we are part of, is called Aligning Forces for Quality. It is a market-based strategy to improve care and outcomes. The care process includes initiatives to do quality improvement on a regional basis that engages physicians in practices in sort of examining and changing their own performance in relationship to quality measures.

REFERENCES

- 1. Institute of Medicine. Crossing the Quality Chasm: A New Health System for the 21st Century. National Academies Press; 2001.

- 2. The White House. Fact Sheet: Health Care Transparency: Empowering Consumers to Save on Quality Care. http://www.whitehouse.gov/news/releases/2006/08/20060822.html.

- 3. Institute of Medicine. Leadership by Example: Coordinating Government Roles in Improving Health Care Quality. National Academies Press; 2002.

- 4. HHS Value-Driven Health Care Initiative. [Accessed Jan 29, 2007]; www.hhs.gov/transparency.

- 5. Centers for Medicare and Medicaid Services. Physician Quality Reporting Initiative. [Accessed December 30, 2007]; http://www.cms.hhs.gov/pqri/

- 6. Epstein AM. Paying for performance in the United States and abroad. New Engl J Med. 2006;355:406–8. doi: 10.1056/NEJMe068131.

- 7. Rowe JW. Pay-for-performance and accountability: related themes in improving health care. Ann Intern Med. 2006;145:695–9. doi: 10.7326/0003-4819-145-9-200611070-00013.

- 8. Pham, et al. Care patterns in Medicare and their implications for Pay for Performance. New Engl J Med. 2007;356:1130–9. doi: 10.1056/NEJMsa063979.

- 9. American Health Information Community Quality Workgroup Vision and Recommendations. [Accessed Jan 29, 2007]; www.hhs.gov/healthit/ahic/materials/meeting12/qual/WGslides.ppt.

- 10. Wagner EH, Austin B, Coleman C. It Takes a Region: Creating a Framework to Improve Chronic Disease Care. Oakland, CA: California HealthCare Foundation; 2006.

- 11. Institute of Medicine Committee on Redesigning Health Insurance Performance Measures, Payment, and Performance Improvement Programs. Performance Measurement: Accelerating Improvement. Washington, DC: National Academies Press; 2006.

- 12. Benin AL, et al. Validity of using an electronic medical record for assessing quality of care in an outpatient setting. Med Care. 2005;43(7):691–8. doi: 10.1097/01.mlr.0000167185.26058.8e.

- 13. Peabody JW, et al. Assessing the accuracy of administrative data in health information systems. Med Care. 2004;42(11):1066–72. doi: 10.1097/00005650-200411000-00005.

- 14. Jollis JG, et al. Discordance of databases designed for claims payment versus clinical information systems. Implications for outcomes research. Ann Intern Med. 1993;119(8):844–50. doi: 10.7326/0003-4819-119-8-199310150-00011.

- 15. Love TE, Cebul RD, Einstadter D, Jain AK, Miller H, Harris CM, Greco PJ, Husak SS, Dawson NV. Electronic medical record-assisted design of a cluster-randomized trial to improve diabetes care and outcomes. J Gen Intern Med. doi: 10.1007/s11606-007-0454-3. In press.

- 16. American Diabetes Association. Standards of medical care for patients with diabetes mellitus. Diabetes Care. 2005;28:S4–S26.

- 17. NCQA. The State of Health Care Quality. 2007.