Abstract

Rapid antibody tests for the detection of human immunodeficiency virus (HIV) offer an effective means of providing a timely result of HIV serostatus to individuals. The increased use of rapid HIV antibody tests outside the laboratory has highlighted the need for new, cost-effective quality assurance methods to be developed for use in nonlaboratory-based and resource-limited settings. Photographed rapid HIV test results were used in a modified external quality assessment scheme to assess the interpretation proficiency and, therefore, to assess the feasibility of using this method as a basis for a quality assessment program for nonlaboratory-based testing. Participants (n = 148), both experienced and inexperienced in the performance and interpretation of rapid HIV testing, interpreted the photographed results of five rapid HIV assays. These were scored according to the degree of technical discordance. Error scores were grouped according to each participant's technical experience. The accuracy of interpretation for four of the five assays was between 80 and 97%, indicating that the photographed results of samples, including those difficult to read or borderline difficult to read, can be used to assess the proficiency of test operators in interpreting results. Participants had greater difficulty in interpreting samples of weak reactivity; this was consistent across the five assays. Experience played an important role in accurate interpretation, with experienced laboratory participants exhibiting greater proficiency (P < 0.05) in interpreting the results of three of the five rapid HIV assays. It was established that photographed results of rapid HIV assays could be interpreted with accuracy and demonstrated that prior experience resulted in a more accurate interpretation performance.

The determination of human immunodeficiency virus (HIV) serostatus of individuals, and the subsequent understanding of their test results, is an important step in halting the global spread of HIV (28, 30). As such, HIV testing is often the entry point of access to HIV prevention and care programs (28). It is estimated that at least 100 million people worldwide need to be tested for HIV to achieve the goal of universal access to HIV prevention, care, and support by 2010 (26, 28). The increased use of rapid HIV assays and the decentralization of HIV testing from regional to district levels in areas of high HIV prevalence are, therefore, vital in scaling up access to antiretroviral therapy for HIV-infected people (25, 28). A standardized quality assurance system and the adequate training of assay operators are essential to the useful, reliable performance of rapid HIV tests (5). The regulation of the quality of diagnostic tests can have a significant impact on the quality of testing being provided to patients, and the lack of regulation can compromise clinical care (20). Accuracy is also important for effective testing programs, whether for diagnosis, surveillance, or clinical monitoring, because these shape prevention and treatment strategies as well as the care of individuals (29). Thus, accurate and reliable testing is a cornerstone of successful HIV and AIDS programs (5). Consequently, it is important that the rapid HIV assays being used in nonlaboratory settings (e.g., voluntary counseling and testing centers within communities in developing countries) meet the quality expected of laboratories. Inadequate quality assurance and minimal or inappropriate training limit the usefulness and cost effectiveness of nonlaboratory-based rapid diagnostic testing programs (7, 17, 21).

Immunoassays that are subjectively read and interpreted, including Western blot analyses, agglutination assays, and rapid immunoassays, are open to variability in interpretation both by the individual reader and between readers (10). The implementation of the essentials of quality management and the monitoring of outcomes through quality assurance programs will reduce variability and increase the accuracy of interpretation, thereby providing confidence in the testing process (6, 7, 10, 17). Laboratory quality assurance monitors all aspects of the testing process (preanalytic, analytic, and postanalytic) and employs two major methods (5, 7). Quality control (QC) is used to check that the analytical phase (test precision) is optimal (9, 13, 16). External quality assessment (EQA), also called proficiency testing, is the tool used to assess the testing process independently. Importantly, EQA not only monitors technical performance but also identifies training opportunities based on proficiency results (5, 7). Thus, initial and ongoing training needs may be identified through the use of carefully constructed EQA schemes (EQAS), which are an important measure to ensure both proficiency and accuracy (5). The quality assurance of rapid HIV assays (single-use devices) might best include on-site performance monitoring and the retesting of samples as well as EQAS (17). The initial increased cost of EQAS means that testing programs in developing countries often are not deemed to have sufficient resources to allocate to quality assurance (17). Consequently, there is a need for alternative, less expensive methods that can be used in resource-constrained settings. An EQAS specifically for rapid HIV testing in nonlaboratory, resource-limited settings, therefore, must take all of this into account.

The lack of adequate and ongoing training of test operators still represents a severe limitation to achieving accurate diagnostic testing (11, 12, 15, 33). The ability of untrained or nonlaboratory personnel to perform rapid HIV assays has been characterized in a number of different studies (12). Trained nonlaboratory personnel may have difficulties in performing, interpreting, and communicating the results of rapid HIV assays if the initial training is not appropriate or efficacious (5, 15, 17). Deviations from established training methods should be approached with caution; they underscore the importance of standardized, ongoing performance monitoring and training programs, which should be a requirement for all operators (30). Photographed rapid HIV test results may be useful as a cost-effective training tool that could generate ongoing training interaction with the EQAS administrator or other central training body.

The aim of the present study was to investigate the feasibility of using difficult-to-read, photographed rapid HIV test results to assess the proficiency of operator interpretation and, secondly, the potential for using the method as a training tool. It was established that photographed results of rapid HIV assays could be interpreted with relative accuracy and that the prior experience of operators resulted in the more accurate interpretation of photographed results. From this initial study, we propose that assessing operator interpretation proficiency using photographed rapid HIV test results could be used as a complement to a quality assurance and training program or as an alternative to EQAS in resource-limited settings in which conventional EQAS is not practical. This would improve the outcomes of nonlaboratory-based testing where rapid HIV tests are employed, especially in resource-limited settings.

MATERIALS AND METHODS

Participants.

A total of 192 laboratories that were enrolled in the HIV Serology EQAS of the National Serology Reference Laboratory, Australia (NRL), had the quality assurance exercise sent to them. Of these, 121 laboratories (63%) responded. Some laboratories (n = 12) provided independent interpretations from more than one member of staff, resulting in a total of 148 responses (Table 1).

TABLE 1.

Origins of laboratories participating in an external quality assessment exercise assessing photographed results of rapid HIV assays^a

| Countries with laboratories that sent one set of interpretations (no. of laboratories that participated) | Countries with laboratories that sent more than one set of interpretations (no. of results, no. of laboratories) |
|---|---|
| Bangladesh (1) | United Kingdom (4, 1) |
| Brunei Darussalam (1) | Canada (3, 1) |
| Fiji Islands (1) | Pakistan (4, 2) |
| Nepal (1) | Myanmar (3, 2) |
| Tonga (1) | India (6, 5) |
| New Caledonia (1) | Thailand (9, 5) |
| Taiwan, Republic of China (1) | Australia (83, 69) |
| Korea (1) | |
| Philippines (1) | |
| Singapore (1) | |
| Vietnam (1) | |
| Cambodia (2) | |
| Kenya (2) | |
| Indonesia (3) | |
| Japan (3) | |
| Malaysia (3) | |
| Sri Lanka (3) | |
| People's Republic of China (4) | |
| New Zealand (5) | |

^a A total of 191 laboratories were invited to participate; 121 laboratories returned correctly completed worksheets. Twelve of these laboratories returned more than one result each. This gave a total of 148 individual sets of results for analysis.
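As a quick arithmetic check, the counts in Table 1 can be summed to confirm the totals quoted in the text. The sketch below is illustrative only: the dictionary names and the `tally` helper are hypothetical, and it assumes that every laboratory listed in the left-hand column contributed exactly one set of interpretations.

```python
# Counts transcribed from Table 1 (left column): laboratories per country,
# assumed here to have contributed one set of interpretations each.
single_set = {
    "Bangladesh": 1, "Brunei Darussalam": 1, "Fiji Islands": 1, "Nepal": 1,
    "Tonga": 1, "New Caledonia": 1, "Taiwan, Republic of China": 1,
    "Korea": 1, "Philippines": 1, "Singapore": 1, "Vietnam": 1,
    "Cambodia": 2, "Kenya": 2, "Indonesia": 3, "Japan": 3, "Malaysia": 3,
    "Sri Lanka": 3, "People's Republic of China": 4, "New Zealand": 5,
}

# Counts transcribed from Table 1 (right column): (no. of results, no. of laboratories).
multi_set = {
    "United Kingdom": (4, 1), "Canada": (3, 1), "Pakistan": (4, 2),
    "Myanmar": (3, 2), "India": (6, 5), "Thailand": (9, 5),
    "Australia": (83, 69),
}


def tally(single, multi):
    """Return (total laboratories, total sets of results) across both columns."""
    labs = sum(single.values()) + sum(n_labs for _, n_labs in multi.values())
    results = sum(single.values()) + sum(n_results for n_results, _ in multi.values())
    return labs, results


labs, results = tally(single_set, multi_set)
print(labs, results)  # 121 148
```

Under that assumption the table reproduces the 121 responding laboratories and the 148 sets of results analyzed.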

Rapid HIV assay kits.

Kits were selected based on the different formats available, those used by current NRL EQAS participants, and those reviewed in WHO evaluations (10). The formats included two rapid immunochromatographic assays, Abbott Determine HIV-1/2 (Abbott Laboratories, Abbott Park, IL) and Trinity Biotech Plc Uni-Gold HIV (Trinity Biotech Plc., Wicklow, Ireland); one rapid immunofiltration assay, Bio-Rad Multispot HIV-1/HIV-2 (Bio-Rad Laboratories, Inc., Redmond, WA); one rapid latex agglutination assay, Trinity Biotech Plc Capillus HIV-1/HIV-2 (Trinity Biotech Plc., Wicklow, Ireland); and one semirapid particle agglutination assay, Fujirebio Inc. Serodia-HIV (Fujirebio Diagnostics, Inc., Tokyo, Japan).

Sample panels.

Each assay had a defined sample panel (n = 7) consisting of a characterized HIV type 1 (HIV-1) antibody-negative control, an undiluted HIV-1-positive control, and five dilutions of this positive sample prepared so as to provide strong, weak, and negative results (i.e., beyond the detection limits for that assay) (10). Five dilutions or panel members plus two controls were selected on the basis of previous recommendations (8). Base matrix (defibrinated human plasma negative for anti-HIV-1/HIV-2, HBsAg, anti-HBs, anti-hepatitis C virus, and anti-human T-cell leukemia type 1/anti-human T-cell leukemia type 2; BBI Diagnostics) was used as the diluent. An experienced NRL scientist tested all panels with their respective assays by following each manufacturer's instructions.

Worksheets.

For each assay type, the individual test cartridges with reactive/nonreactive bands for each panel member were photographed (with a Nikon D50 SLR digital camera) and formatted into a worksheet. The worksheets also included each manufacturer's interpretation instructions, a table in which scores could be recorded, and questions to assess the level of experience of each participant. Experience was defined by the assessment of any previous training with an estimate of how often an assay was performed by the participant (i.e., rarely, occasionally, or frequently). Each participant received a colored hardcopy of the worksheet so that the quality of the photographs supplied for interpretation purposes was uniform between participants and the NRL scientists.

Analysis of results.

The NRL's simple/rapid HIV testing strategy dictates that two trained scientists interpret a test result, and a third is used for consensus before the result is reported. Three NRL laboratory scientists experienced with reading subjective HIV diagnostic assays (including rapid assays) interpreted the photographed results, and this was taken as the correct interpretation of any given result (24, 29). Participants were asked to score a sample on the basis of the intensity of reactivity as one of strong positive (++), weak positive (+), negative (−), or inconclusive (?) and then give their final interpretations of the results as positive (++/+), negative (−), or inconclusive (?) depending on the observed pattern of reactivity. Participant interpretations were scored against the correct interpretation as correct (0), inconclusive (1), false positive (2), or false negative (3). The sum of the scores for the five samples produced the cumulative error score for each participant and overall for each assay.
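The scoring scheme described above can be sketched in a few lines of Python. This is a minimal illustration, not the procedure actually used in the study: the function names and the single-letter codes (`"P"`, `"N"`, `"I"`) are hypothetical, and the handling of a positive or negative call against an inconclusive reference is an assumption, since the text does not state how such cases were graded.

```python
# Error weights as stated in the text.
CORRECT, INCONCLUSIVE, FALSE_POSITIVE, FALSE_NEGATIVE = 0, 1, 2, 3


def grade(reference, reported):
    """Score one final interpretation against the reference interpretation.

    Both arguments are "P" (positive), "N" (negative), or "I" (inconclusive).
    Assumption: any disagreeing positive call counts as a false positive and
    any disagreeing negative call as a false negative, including when the
    reference itself is inconclusive.
    """
    if reported == reference:
        return CORRECT
    if reported == "I":
        return INCONCLUSIVE
    if reported == "P":
        return FALSE_POSITIVE
    return FALSE_NEGATIVE


def cumulative_error_score(references, reported):
    """Sum the per-sample scores for one participant on one assay."""
    if len(references) != len(reported):
        raise ValueError("one reported interpretation is needed per sample")
    return sum(grade(ref, rep) for ref, rep in zip(references, reported))


# Hypothetical participant: one inconclusive call and one false positive.
reference_calls = ["P", "P", "P", "N", "N"]
participant_calls = ["P", "P", "I", "P", "N"]
print(cumulative_error_score(reference_calls, participant_calls))  # 3
```

Because a false negative carries the largest weight, a participant who misses a weakly reactive sample accumulates error faster than one who flags it as inconclusive.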

The error scores were analyzed in three ways. (i) First, the scores were analyzed as one group by the Kruskal-Wallis, nonparametric, one-way analysis of variance by ranks test on SPSS V14.0 (SPSS Inc., Chicago, IL) to analyze differences between the error scores of the five assays (the null hypothesis $H_0\colon M_{\text{Determine}} = M_{\text{Multispot}} = M_{\text{Uni-Gold}} = M_{\text{Capillus}} = M_{\text{Serodia}}$; n = 740 and α = 0.05, where $M$ is the median and α denotes the level of significance). Dunn's multiple comparison test was used for pair-wise comparisons to determine where any differences occurred. (ii) The second method was to analyze specific experience for each of the five assays by grouping error scores according to participants who indicated having previous experience and those who did not for each individual assay. The results were analyzed by Pearson chi-square tests ($H_0\colon M_{\text{indicated experience}} - M_{\text{no experience}} = 0$; n = 148 and α = 0.05). (iii) Owing to the number of participants indicating specific experience, a second measure of experience was used. Participant interpretations were grouped according to Australian/New Zealand participants and participants from all other countries (international) as a general measure of experience. Location was considered a surrogate indicator for experience with rapid HIV assays. Only 17% of the participants in Australia and New Zealand, compared to 84% of the international participants, reported having had experience with at least one of the five rapid assays. An examination of the national testing strategies of participating laboratories and a record of their use of rapid HIV tests in the NRL's quality assurance programs supported the grouping by experience according to location. The error scores were analyzed by the Kruskal-Wallis test ($H_0\colon M_{\text{Australian/New Zealand}} - M_{\text{international}} = 0$; α = 0.05) for each assay and for the cumulative error scores across the five assays (n = 148).

In summary, the data were analyzed in three ways: (i) all interpretations, (ii) specific experience, and (iii) general laboratory experience.
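For readers unfamiliar with the Kruskal-Wallis test, the following sketch computes its H statistic with the standard correction for ties. Ties are expected to be common with small integer error scores. It is a plain-Python illustration rather than the SPSS procedure used in the study, and the function name and example data are hypothetical. Under the null hypothesis, H is compared against a chi-square distribution with k − 1 degrees of freedom for k groups.

```python
from collections import Counter


def kruskal_wallis_h(groups):
    """Return the tie-corrected Kruskal-Wallis H statistic.

    `groups` is a sequence of non-empty sequences of numeric scores,
    one sequence per group (for example, one per assay).
    """
    pooled = [score for group in groups for score in group]
    n = len(pooled)
    counts = Counter(pooled)
    if len(counts) < 2:
        raise ValueError("all scores are identical; H is undefined")

    # Assign each distinct value the average (mid) rank of its tied block.
    rank_of = {}
    position = 0
    for value in sorted(counts):
        ties = counts[value]
        rank_of[value] = position + (ties + 1) / 2
        position += ties

    # Uncorrected H from the per-group rank sums.
    rank_sum_term = sum(
        sum(rank_of[score] for score in group) ** 2 / len(group)
        for group in groups
    )
    h = 12 / (n * (n + 1)) * rank_sum_term - 3 * (n + 1)

    # Tie correction: divide by 1 - sum(t^3 - t) / (n^3 - n).
    tie_term = sum(t ** 3 - t for t in counts.values())
    return h / (1 - tie_term / (n ** 3 - n))


# Hypothetical error scores for two small groups of participants.
print(round(kruskal_wallis_h([[0, 0, 1], [1, 2, 2]]), 4))  # 3.3333
```

Dunn's pair-wise comparisons, which the study used to locate differences between assays, operate on the same pooled ranks but are not shown here.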

It is anticipated that, in a program utilizing this method, the accuracy of interpretation as measured by the error scores will determine the proficiency of operator interpretation. This in turn will enable the level of improvement to be measured upon the training of the operator.

RESULTS

Photographed results were interpreted accurately.

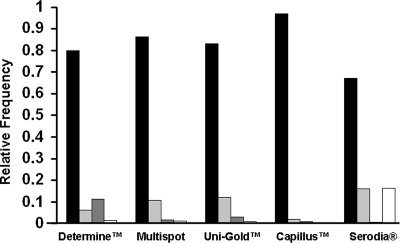

The photographed results of five rapid HIV antibody assays were interpreted by participants enrolled in the NRL HIV serology EQAS. The majority of interpretations for each of the five assays were correct (Fig. 1). The relative frequencies indicate that the number of correct interpretations for the Determine, Multispot, Capillus, and Uni-Gold assays were 80% or greater. However, the accuracy was only 67% for the Serodia assay. The results showed that overall, photographed results could be interpreted with accuracy and highlight where there is need for improved training. Dilutions interpreted as inconclusive were the most common interpretation not in accord with reference interpretations. Compared with the other assays, a greater number of false-positive interpretations were made for the Determine assay (11.5%), while there was a higher number of false-negative interpretations for the Serodia assay (16.2%).

FIG. 1.

Interpretations of photographed results from five rapid HIV assays in a quality assurance exercise. Laboratory technicians (n = 148) from 121 diagnostic laboratories interpreted the photographed results of the five rapid HIV assays (Abbott Determine HIV-1/2, Bio-Rad Multispot HIV-1/HIV-2, Trinity Biotech Plc Uni-Gold HIV, Trinity Biotech Plc Capillus HIV-1/HIV-2, and Fujirebio Inc Serodia-HIV). Interpretations were graded as correct (▪), inconclusive ( ), false positive (▩), and false negative (□) compared to the consensus interpretation by three experienced readers from the NRL. The relative frequency of interpretation is shown for each assay.

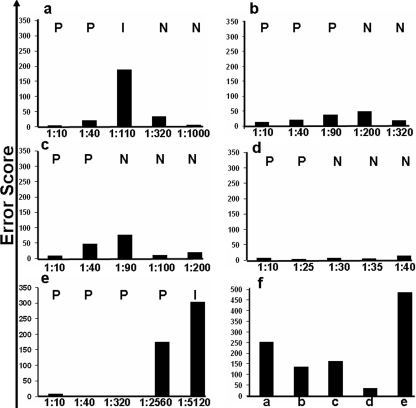

Samples at limits of detection were less accurately interpreted.

A sample-by-sample analysis of the error scores for each assay showed that participants found those results of samples reacting at or near the limits of detection for each assay more difficult to interpret (Fig. 2). This was reflected in higher error scores for dilutions corresponding to those samples reacting at the limits of detection for each assay. Dilutions that were the least accurately interpreted were similar for the Determine (Fig. 2a), Multispot (Fig. 2b), and Uni-Gold (Fig. 2c) assays. The least variation in interpretation was seen with the Capillus assay (Fig. 2d), for which readings were more consistent with the dilutions tested. The interpretation of the results from the Serodia assay (Fig. 2e) showed the greatest variation (Fig. 2f). The total error scores for the participants' interpretations among the five assays (Fig. 2f) were significantly different (χ2 = 292.96; P < 0.0001), except between Multispot and Capillus on a pair-wise comparison.

FIG. 2.

Participants' (n = 148) error scores of photographed assay results for five rapid HIV assays: (a) Abbott Determine HIV-1/2, (b) Bio-Rad Multispot HIV-1/HIV-2, (c) Trinity Biotech Plc Uni-Gold HIV, (d) Trinity Biotech Plc Capillus HIV-1/HIV-2, and (e) Fujirebio Inc. Serodia-HIV. (f) The total error scores for each assay. Reference interpretations (shown as capital letters in the upper portions of each panel) were determined by three experienced and trained NRL staff (P, positive; N, negative; I, inconclusive). Participant interpretations were compared to the reference and graded as correct (0), inconclusive (1), false positive (2), and false negative (3) and summed to give an error score.

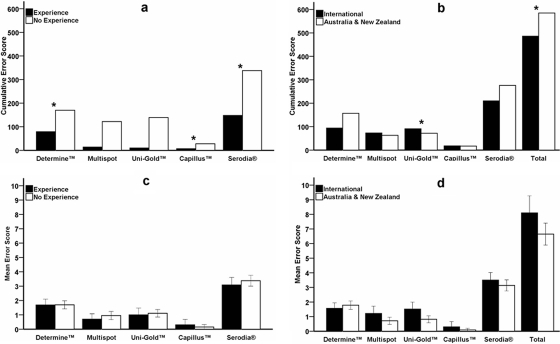

Experience is important for a more accurate interpretation.

It was investigated whether previous experience in performing rapid HIV testing was relevant to an operator's ability to interpret accurately the photographed rapid HIV assay results. An analysis of the cumulative error scores when participants were grouped according to specific experience in performing and, therefore, reading rapid HIV assays showed that experience using a particular assay had an impact on the accuracy of interpretation for three of the five assays. The total error scores for interpretations by participants who indicated experience (Fig. 3a) were lower than those for participants with no experience for Determine (χ2 = 12.08; P = 0.03), Capillus (χ2 = 13.16; P = 0.02), and Serodia (χ2 = 13.84; P = 0.02). There was a trend for experienced participants to have lower cumulative error scores for four of the five assays. Interpretations of results with the Capillus assay were the most accurate, regardless of experience.

FIG. 3.

Influence of experience on interpretation scores from 148 participants in a quality assurance exercise assessing the interpretation of photographed rapid HIV assay results. Two measures of experience were considered, and participants' error scores were grouped according to specific experience on the selected rapid HIV assay (a) and general laboratory experience with rapid HIV assays based on location (international and experienced as well as Australia/New Zealand and less experienced) (b). The mean error scores for specific experience (c) and general experience (d) are shown. The differences in error scores (*) between experienced and inexperienced participants for each assay were observed by the Pearson chi-square test for the Abbott Determine HIV-1/2 (P = 0.034), Trinity Biotech Plc Capillus HIV-1/HIV-2 (P = 0.022), and Fujirebio Inc. Serodia-HIV (P = 0.017) assays (a) and by the Kruskal-Wallis test for the Trinity Biotech Plc Uni-Gold HIV (P = 0.001) (P = 0.032 for all five assays [Total]) (b); the significance was set at P < 0.05.

Cumulative error scores for participants grouped according to general laboratory experience in performing and, by assumption, reading rapid HIV assays showed that previous experience had an impact on the accuracy of the interpretation of photographed results (Fig. 3b). International participants (i.e., countries other than Australia and New Zealand) had lower error scores for the Determine and Serodia assays than for the other three assays. An analysis of variance by ranks found that the error scores for participants from Australia and New Zealand were significantly higher for Uni-Gold (χ2 = 11.143; P = 0.001) as well as when the error scores for all five assays were combined (χ2 = 4.624; P = 0.032).

The mean error scores of participants grouped for both specific assay experience (Fig. 3c) and general laboratory experience (Fig. 3d) with rapid HIV assays were similar. The average error score for the Capillus assay was close to 0, while that for Serodia was close to 4, emphasizing the difference in interpretation accuracy for these two assays. The other three assays performed similarly regardless of whether the grouping was specific or general (mean error score between 1 and 2).

These results suggested that in the present study, both specific assay experience and general laboratory experience in performing rapid assays contributed to the accurate interpretation of the photographed results, but that the mean error scores were similar for both experienced and inexperienced participants.

DISCUSSION

The method investigated in the present study demonstrated that it is feasible to use photographed rapid HIV assay results in the monitoring and evaluation of operators by assessing their ability to interpret correctly the photographed results. This was demonstrated by the accurate interpretation (greater than 80%) of the photographed results, including difficult-to-read samples, for four of the five assays. Further, it was established that participants with previous experience on rapid HIV tests achieved lower error scores than their less experienced colleagues, thus highlighting the important role of experience and, therefore, training in the correct interpretation of the results. The utilization of photographed results as a measure of quality assurance will overcome some of the logistic and resource issues that make impractical the quality assurance of widespread rapid testing. The next step is to test such photographic panels in the field to identify their value in training and proficiency.

Testing strategies for HIV must include an algorithm for the second-round testing of all reactive or inconclusive samples from the initial screening assay to determine whether the initial reactivities were true or false (1, 24, 31). Samples interpreted as inconclusive or reactive should be retested in a second assay that uses a different technology and antigens. Samples that test negative in the primary assay are not retested, so false-negative interpretations are not defined in a standard clinical testing strategy. In the present study, inconclusive was the most common discrepant interpretation, suggesting that participants were able to identify correctly when a sample should be retested for confirmation. However, the number of false-negative interpretations seen for the Serodia assay in this study suggested that the ability of participants to interpret these results should be investigated further and pointed to a potential need for further training. This was reflected in the greater difficulty in the subjective interpretation of this assay, as evidenced by the 67% correct interpretation score for the Serodia assay versus 97% for the Capillus assay (Fig. 1). Field evaluations of rapid HIV serological tests have revealed that while most have high sensitivity and specificity, the Serodia assay did not perform as well (2, 3, 14, 19), confirming our results here regarding relative accuracy. These results suggest that certain assay formats were easier to read than others, although a more extensive analysis would be required to show this definitively.

Photographs have been used to assess eye health, investigate the accuracy of registered general nurses to grade pressure ulcers, assess medical students in emergency medicine certification exams, and assess proficiency in cytogenetic testing (27), and they currently are recommended for training personnel in the use of rapid HIV tests (4). The use of electronic images for quality assessment and training purposes also has been investigated in hematology (10). Thus, there is precedence for incorporating photographed rapid HIV assay results into an EQA program as an inexpensive method of assessing operator interpretation proficiency and, additionally, as a training tool.

Our investigation of the impact of experience on interpretation accuracy found that participants with specific assay or general laboratory experience with rapid HIV assays had a lower error score and were more accurate in their interpretations of the photographed results than were inexperienced participants. Mean error scores were consistent between groups and across the assays (error score below 2), except for the Serodia assay (error score of 4). From the sample-by-sample analysis of the individual dilutions, we identified difficulty in the interpretation of the samples mimicking weak reactivity. These dilutions were the least accurately interpreted photographs for all five assays, confirming previous studies (10, 18). These samples mimicked weak or nonspecific reactivity, similar to that occurring during seroconversion or biological false reactivity, but most importantly do not offer information about the sensitivity and specificity of an assay (16). That even experienced laboratory participants had difficulty interpreting weakly reactive samples implies that inexperience impacts significantly on the accuracy of results with these samples. This emphasizes the importance of training, as has been suggested previously (7, 20-23, 32). Photographed rapid HIV assay results as cost-effective training tools therefore will have importance in both initial and ongoing training programs. Assessing the degree to which trained, nonlaboratory personnel can accurately interpret photographed results is the next step in developing this method as a validated EQA for HIV testing in nonlaboratory or point-of-care settings. The assessment and training of nonlaboratory personnel may then be readily extended through the use of this method for subjectively read rapid HIV tests.

Quality assurance programs monitor performance, thereby maintaining the integrity of the testing process. When diagnostic assays that have not been adequately evaluated find their way into developing countries, the reliability of testing programs is at considerable risk (17). Further, if these assays are not quality assured on a regular basis, then errors resulting from poor performance may not be discovered (29). Therefore, it is important that all phases of diagnostic testing processes, whether performed inside or outside a laboratory, are monitored routinely through an EQA program (21). Currently there is concern over the lack of, and the standard of, diagnostics for other diseases of the developing world, such as tuberculosis and malaria. The method described in this paper has the potential to be used for other types of rapid assays and represents an effective means to help ensure the accuracy of interpreting diagnostic assays when more conventional quality assurance methods are not available.

To summarize, laboratory personnel who participated in this study, both experienced and inexperienced with rapid HIV assays, were able to interpret the photographed rapid HIV assay results with relative accuracy. Further, experience was shown to play an important role in achieving greater accuracy of interpretation. We propose that this method can be used in providing a novel, cost-effective approach to EQA for nonlaboratory rapid HIV testing. Once established, programs could be used for training and monitoring purposes, facilitating the more accurate interpretation of rapid HIV assays and thereby assisting HIV prevention efforts, especially in resource-limited countries. Most importantly, photographed test results will bring a practical and cost-effective approach to quality assurance for the scale-up of nonlaboratory HIV testing that is needed before 2010.

Acknowledgments

We thank all of the laboratories for their participation and timely results. We also thank the staff at the NRL for logistic and technical support and Chris Birch of the Victorian Infectious Diseases Reference Laboratory for his helpful and critical reading of the manuscript.

Footnotes

Published ahead of print on 19 March 2008.

REFERENCES

- 1.Andersson, S., Z. da Silva, H. Norrgren, F. Dias, and G. Biberfeld. 1997. Field evaluation of alternative testing strategies for diagnosis and differentiation of HIV-1 and HIV-2 infections in an HIV-1 and HIV-2-prevalent area. AIDS 11:1815-1822. [DOI] [PubMed] [Google Scholar]

- 2.Blouin, D., L. E. Dagnone, and R. McGraw. 2006. Performance of emergency medicine residents on a novel practice examination using visual stimuli. Can. J. Emerg. Med. 8:21-26. [DOI] [PubMed] [Google Scholar]

- 3.Briggs, S. L. 2006. How accurate are RGNs in grading pressure ulcers? Br. J. Nurs. 15:1230-1234. [DOI] [PubMed] [Google Scholar]

- 4.Burthem, J., M. Brereton, J. Ardern, L. Hickman, L. Seal, A. Serrant, C. V. Hutchinson, E. Wells, P. McTaggart, B. De la Salle, J. Parker-Williams, and K. Hyde. 2005. The use of digital ‘virtual slides’ in the quality assessment of haematological morphology: results of a pilot exercise involving UK NEQAS(H) participants. Br J. Haematol. 130:293-296. [DOI] [PubMed] [Google Scholar]

- 5.Centers for Disease Control and Prevention, WHO, and Office of the United States Global AIDS Coordinator. 2005, posting date. Guidelines for assuring the accuracy and reliability of HIV rapid testing: applying a quality system approach. http://www.who.int/diagnostics_laboratory/publications/HIVRapidsGuide.pdf.

- 6.Chalker, V. J., H. Vaughan, P. Patel, A. Rossouw, H. Seyedzadeh, K. Gerrard, and V. L. James. 2005. External quality assessment for detection of Chlamydia trachomatis. J. Clin. Microbiol. 43:1341-1347. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Chang, D., K. Learmonth, and E. M. Dax. 2006. HIV testing in 2006: issues and methods. Expert Rev. Anti-Infect. Ther. 4:565-582. [DOI] [PubMed] [Google Scholar]

- 8.Constantine, N. T., R. D. Saville, and E. M. Dax. 2005. Retroviral testing and quality assurance: essentials for laboratory diagnosis. MedMira Laboratories, Halifax, Canada.

- 9.Dax, E. M., and A. Arnott. 2004. Advances in laboratory testing for HIV. Pathology 36:551-560. [DOI] [PubMed] [Google Scholar]

- 10.Dax, E. M., and R. O'Connell. 1999. Standardisation of subjectively scored HIV immunoassays: developing a quality assurance program to assist in reproducible interpretation of results using an anti-HIV particle agglutination assay as a model. J. Virol. Methods 82:113-118. [DOI] [PubMed] [Google Scholar]

- 11.Delaney, K. P., B. Branson, and C. Fridlund. 2002. Ability of untrained users to perform rapid HIV antibody screening tests. Abstr. Am. Health Assoc. Annu. Meet., abstr. 109, p. 19.

- 12.Granade, T. C., B. S. Parekh, S. K. Phillips, and J. S. McDougal. 2004. Performance of the OraQuick and Hema-Strip rapid HIV antibody detection assays by non-laboratorians. J. Clin. Virol. 30:229-232. [DOI] [PubMed] [Google Scholar]

- 13.Gust, A., S. Walker, R. J. Chappel, and E. M. Dax. 2001. Anti-HIV quality assurance programs in Australian and the southeast Asian and Western Pacific regions. Accred. Qual. Assur. 6:168-172. [Google Scholar]

- 14.Hoeltge, G. A., G. Dewald, C. G. Palmer, M. A. David-Nelson, I. Saikevych, S. Patil, S. Schwartz, N. R. Schneider, and M. Herrmann. 1993. Proficiency testing in clinical cytogenetics. A 6-year experience with photographs, fixed cells, and fresh blood. Arch. Pathol. Lab. Med. 117:776-779.

- 15.Kanal, K., T. L. Chou, L. Sovann, Y. Morikawa, Y. Mukoyama, and K. Kakimoto. 2005. Evaluation of the proficiency of trained non-laboratory health staffs and laboratory technicians using a rapid and simple HIV antibody test. AIDS Res. Ther. 2:5.

- 16.Kettelhut, M. M., P. L. Chiodini, H. Edwards, and A. Moody. 2003. External quality assessment schemes raise standards: evidence from the UKNEQAS parasitology subschemes. J. Clin. Pathol. 56:927-932.

- 17.Martin, R., T. L. Hearn, J. C. Ridderhof, and A. Demby. 2005. Implementation of a quality systems approach for laboratory practice in resource-constrained countries. AIDS 19(Suppl. 2):S59-S65.

- 18.Maskill, W. J., N. Crofts, E. Waldman, D. S. Healey, T. S. Howard, C. Silvester, and I. D. Gust. 1988. An evaluation of competitive and second generation ELISA screening tests for antibody to HIV. J. Virol. Methods 22:61-73.

- 19.Muller, A., H. T. Vu, J. G. Ferraro, J. E. Keeffe, and H. R. Taylor. 2006. Rapid and cost-effective method to assess vision disorders in a population. Clin. Exp. Ophthalmol. 34:521-525.

- 20.Peeling, R. W., P. G. Smith, and M. M. Bossuyt. 2006. A guide for diagnostic evaluations. Nat. Rev. Microbiol. 4:S2-S6.

- 21.Perkins, M. D., and P. M. Small. 2006. Partnering for better microbial diagnostics. Nat. Biotechnol. 24:919-921.

- 22.Rekart, M. L., M. Krajden, D. Cook, G. McNabb, T. Rees, J. Isaac-Renton, M. Harris, and J. S. Montaner. 2002. Problems with the fast-check HIV rapid test kits. Can. Med. Assoc. J. 167:119.

- 23.Rekart, M. L., J. A. Quon, and T. Rees. 2004. Final evaluation results for the Fast-Check HIV rapid test kits. Can. Med. Assoc. J. 171:1324.

- 24.Respess, R. A., M. A. Rayfield, and T. J. Dondero. 2001. Laboratory testing and rapid HIV assays: applications for HIV surveillance in hard-to-reach populations. AIDS 15(Suppl. 3):S49-S59.

- 25.WHO. 1998. The importance of simple/rapid assays in HIV testing. WHO/UNAIDS recommendations. Wkly. Epidemiol. Rec. 73:321-326.

- 26.WHO. 2006, posting date. Towards universal access by 2010: how WHO is working with countries to scale-up prevention, treatment, care and support. http://www.who.int/hiv/toronto2006/UAreport2006_en.pdf.

- 27.WHO, Centers for Disease Control and Prevention, and U.S. Department of Health and Human Services. April 2007, posting date. HIV rapid test training package. http://www.who.int/hiv/pub/surveillance/en/guidelinesforUsingHIVTestingTechs_E.pdf.

- 28.WHO, Regional Office for Africa, Southern Africa HIV and AIDS Information Dissemination Service, and African AIDS Vaccine Programme. 2006, posting date. HIV/AIDS laboratory capacity: how far we have come and where we are going: an assessment report on the capacity of laboratories to support the scaling-up towards universal access to HIV/AIDS prevention, treatment, care and support services in the WHO/AFRO region. http://www.afro.who.int/aids/laboratory_services/resources/who_afro_labs_report.pdf.

- 29.WHO, UNAIDS, Centers for Disease Control and Prevention, and USAID. 2001, posting date. Guidelines for using HIV testing technologies in surveillance: selection, evaluation and implementation. http://www.who.int/hiv/pub/epidemiology/pub4/en/.

- 30.WHO, WHO/EHT, and UNAIDS. 2002, posting date. HIV assays: operational characteristics (phase I). Report 12. Simple/rapid tests, whole blood specimens. http://www.who.int/diagnostics_laboratory/publications/hiv_assays_rep_12.pdf.

- 31.Wilkinson, D., N. Wilkinson, C. Lombard, D. Martin, A. Smith, K. Floyd, and R. Ballard. 1997. On-site HIV testing in resource-poor settings: is one rapid test enough? AIDS 11:377-381.

- 32.Wooltorton, E. 2002. Failure of rapid HIV tests. Can. Med. Assoc. J. 167:170.

- 33.Ziyambi, Z., P. Osewe, and N. Taruberekera. 2002. Evaluation of the performance of nonlaboratory staff in the use of simple rapid HIV antibody assays at New Start voluntary counselling and testing (VCT) centres. Abstr. Int. Conf. AIDS, Barcelona, Spain, abstr. MoPcB3110, p. 39.