Abstract

Textbooks in learning and behavior commonly describe performance on fixed-ratio schedules as “break and run,” indicating that after reinforcement subjects typically pause and then respond quickly to the next reinforcement. Performance on variable-ratio schedules, on the other hand, is described as steady and fast, with few long pauses. Beginning with Ferster and Skinner's magnum opus, Schedules of Reinforcement (1957), the literature on pausing under ratio schedules has identified the influences on pausing of numerous important variables, in particular ratio size and reinforcement magnitude. As a result, some previously held assumptions have been called into question. For example, research has shown that the length of the pause is controlled not only by the preceding ratio, as Ferster and Skinner and others had assumed (and as implied by the phrase postreinforcement pause), but by the upcoming ratio as well. Similarly, despite the commonly held belief that ratio pausing is unique to the fixed-ratio schedule, there is evidence that pausing also occurs under variable-ratio schedules. If such widely held beliefs are incorrect, then what about other assumptions? This article selectively examines the literature on pausing under ratio schedules over the past 50 years and concludes that although there may indeed be some common patterns, there are also inconsistencies that await future resolution. Several accounts of pausing under ratio schedules are discussed along with the implications of the literature for human performances, most notably the behaviors termed procrastination.

Keywords: fixed-ratio schedule, variable-ratio schedule, postreinforcement pause, preratio pause, animal models, procrastination

The year 2007 marked the 50th anniversary of the publication of Charles Ferster and B. F. Skinner's magnum opus, Schedules of Reinforcement (1957), which reported the results of experiments carried out under contracts to the Office of Naval Research with Harvard University between 1949 and 1955. Although Skinner had previously discussed periodic reconditioning (what was later to become the fixed-interval [FI] schedule) and fixed-ratio (FR) reinforcement (he hadn't yet used the term reinforcement schedule) in The Behavior of Organisms (1938), it wasn't until the publication of Schedules of Reinforcement that he (and Ferster, working as a research fellow under Skinner's direction) distinguished several simple and complex schedules based on the performances of nonhuman subjects (pigeons and rats). In addition, they investigated the effects of many different types of those schedules along with a variety of other variables (e.g., drugs, deprivation level, ablation of brain tissue, and added counters) that were described in terms of rate of response and depicted on cumulative records. Their research documented the power of schedules of reinforcement to control behavior and established the study of schedules as a focus within the experimental analysis of behavior. Ferster and Skinner's research painted a detailed picture of the performances of the subjects as seen on a response-by-response basis. However, their wide-ranging effort did not attempt to provide systematic information about the effects of parametric variations across conditions and subjects. It remained for subsequent researchers to fill in the gaps.

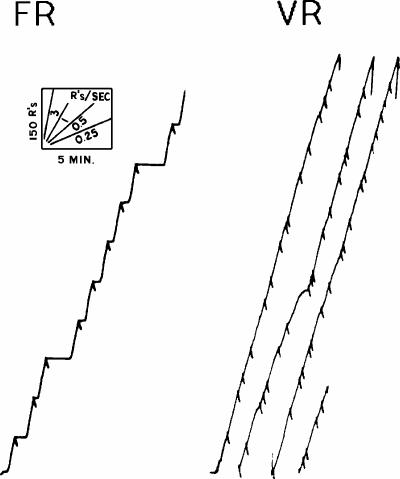

Fifty years and innumerable experiments later, it is common for textbooks on learning to illustrate FR performances with cumulative records and to describe the resulting patterns as “break and run” (see Figure 1). As explained by Lattal (1991), “following a period of nonresponding (a break, more precisely the postreinforcement pause) after food delivery, there is a relatively quick transition to a high steady rate of responding (a run) that is maintained until the next food presentation when the pattern repeats” (p. 95). FR performances are often contrasted with those under variable-ratio (VR) schedules, in which the size of the ratios varies within the schedule. Under VR schedules, performances are “characterized by high response rates with little systematic pausing either after reinforcement or at other times” (p. 95).1

Figure 1. Cumulative records showing performances under FR 90 and VR 50 schedules of reinforcement (Ferster & Skinner, 1957). Reproduced from Figure 2 (Lattal, 1991) and reprinted with permission of Elsevier Science Publishers.

Among the myriad response patterns observed under various schedules of reinforcement, pausing under ratio schedules (especially FR schedules) has attracted special attention. Unlike the pause that follows reinforcement on interval schedules, the pause that follows reinforcement on ratio schedules reduces overall reinforcement rates. Because reinforcement rates under ratio schedules depend strictly on response rates, optimal performance would be for subjects to resume responding immediately. Yet they pause, and the resultant loss of reinforcement persists over extended exposure to the schedule without diminution. The critical question, then, is: Why would an animal pause when that very action delays reinforcement and reduces overall reinforcement rate? One possibility is that the animal is fatigued after working so hard. For example, pauses are generally shorter under FR 10 than under FR 100. Because the FR 100 involves more work, it is possible that subjects rest longer before resuming work. Another possibility is that food consumption creates satiation, which weakens the motivational operation of food deprivation. However, we will see that neither of these seemingly reasonable explanations survives simple tests and that the picture is much more complicated.
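The cost of pausing under a ratio schedule can be made concrete with simple arithmetic. The following is a minimal sketch, assuming a constant running rate once responding begins and treating each cycle as a pause followed by a run. The function names and all numerical values are hypothetical and are not drawn from any of the studies cited here. The interval-schedule function is a deliberate simplification: it assumes that a response is emitted as soon as both the interval and the pause have elapsed.

```python
def ratio_reinforcers_per_hour(ratio, run_rate, pause):
    """Reinforcers per hour on a ratio schedule (illustrative model).

    ratio    -- responses required per reinforcer
    run_rate -- responses per second once responding starts (assumed constant)
    pause    -- seconds of pausing after each reinforcer
    """
    cycle_seconds = pause + ratio / run_rate
    return 3600.0 / cycle_seconds


def interval_reinforcers_per_hour(interval, pause):
    """Reinforcers per hour on an interval schedule (simplified assumption).

    The reinforced response is assumed to occur as soon as both the
    interval and the pause have elapsed, so a pause shorter than the
    interval does not lengthen the cycle.
    """
    cycle_seconds = max(interval, pause)
    return 3600.0 / cycle_seconds
```

Under these assumptions, a hypothetical FR 100 performance at 2 responses per second yields 72 reinforcers per hour with no pause, 60 with a 10-s pause, and 45 with a 30-s pause, whereas a 60-s interval schedule yields 60 reinforcers per hour in all three cases.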

Ratio pausing within the laboratory is also of special interest because it resembles the human problem of procrastination: In both cases, an action is put off even though the resulting delay may be disadvantageous. Social commentators have noted that procrastination is a major contributor to behavioral inefficiency in schools, industry, and our daily lives in general (Steel, 2007). Identification of the variables that control the ratio pause in the laboratory may help to reveal techniques for the modification of procrastination.

Our primary purpose in this article is to identify the variables that govern pausing under ratio schedules. Research on pausing under ratio schedules has come a long way since Ferster and Skinner's (1957) pioneering contributions. They set us on our way, but in the ensuing 50 years increasingly sophisticated questions and experimental designs have revealed a more complex picture. A fundamental issue in research on ratio schedules, then, concerns how pausing should be summarized and described. In the present article we review the research on pausing under ratio schedules of reinforcement in an attempt to glean an understanding of why pausing under such schedules occurs. We first take a look at research on pausing with simple FR schedules, then consider research with complex (multiple and mixed) schedules with FR components, and, finally, look at pausing under VR schedules. We then discuss the real-world implications of ratio pausing, in particular, the bearing of laboratory research on the pervasive social problem of human procrastination. Finally, we offer a set of conclusions that might shed light on the theoretical mechanisms that govern pausing under ratio schedules.

Pausing Under FR Schedules

Skinner (1938) first described the performances of rats under FR and FI reinforcement as including a pause that was under the stimulus control of the previous reinforcer:

In both types of experiment the discrimination from the preceding reinforcement is active, since one reinforcement never occurs immediately after another. A reinforcement therefore acts as an SΔ in both cases. As the result of this discrimination the rat stops responding for a short period just after receiving and ingesting a pellet of food. (p. 288)

Thus, from the very beginning of his research on FR schedules, Skinner assumed that the pause was a function of the preceding reinforcer. Consequently, the phrase postreinforcement pause came to connote not only the pause after reinforcement but also the pause controlled by reinforcement (Griffiths & Thompson, 1973).

Skinner did acknowledge that there were “considerable individual differences” in the length of pausing produced by FR schedules. For example, in describing performance under an FR 192, he wrote,

At one extreme the pause after ingestion may be relatively great and the subsequent acceleration to a maximal or near maximal rate very rapid. … At the other extreme the pause is brief, but the rate immediately following it is low and accelerated slowly. (1938, pp. 289–290)

Recognition of such irregularities also can be found in Morse and Dews' (2002) foreword to the reprinting of Schedules of Reinforcement:

If one leafs through the pages of any chapter, there are clearly differences in the uniformity and reproducibility of performances under a particular type of schedule. Some of these differences in performances come from the continuing technical improvements in the design of keys and feeders, others from differences in the past experiences of subjects before exposure to the current contingencies or from the duration of exposure to current conditions, and sometimes from differences between subjects treated alike. (p. 315)

Inspection of the cumulative records reproduced in Ferster and Skinner's compendium confirms that despite impressive commonalities in performances, there also are unaccounted-for differences both within the performances of the same subject and between those of different subjects. These two facets of Skinner's research—regularities in performance sometimes accompanied by unaccountable variation—are not necessarily at odds. Individual differences should serve as a prod for identifying the variables that control the differences, thus strengthening conclusions about the commonalities.

Building on Skinner's (1938) and Ferster and Skinner's (1957) findings, subsequent research has identified a number of variables that affect the length of the FR pause. These include the size of the ratio (e.g., Felton & Lyon, 1966; Powell, 1968), the amount of response effort (Alling & Poling, 1995; Wade-Galuska, Perone, & Wirth, 2005), the magnitude of the reinforcer (e.g., Lowe, Davey, & Harzem, 1974; Perone & Courtney, 1992; Powell, 1969), the probability of reinforcement (Crossman, 1968; McMillan, 1971), and the level of deprivation (Malott, 1966; Sidman & Stebbins, 1954). One could say that, in general, the duration of the pause increases as a function of variables that weaken responding. These include increases in ratio size and response effort and decreases in reinforcer magnitude, reinforcement probability, and degree of deprivation. Although all of these variables likely interact in complex ways, for present purposes we restrict our discussion to the two most researched: ratio size and reinforcement magnitude.

Effects of FR Size on Pausing

In their chapter on FR schedules, Ferster and Skinner (1957) presented cumulative records of pigeons transitioning from FR 1 or low FR schedules to higher FR schedules, as well as cumulative records of final performances on FR 120 and FR 200 schedules. Although there is some within-subject and between-subjects variability, the cumulative records show that performances by pigeons on ratios as high as FR 60 are characterized by brief pauses. The final performances of 2 birds on higher FR schedules (FR 120 and FR 200) reveal some longer pauses (although at these ratios the variability is even greater), prompting the conclusion that pause length increases with FR size.

Subsequent experiments provided support for the finding that long pauses become more frequent with increases in FR size. In the first parametric study of FR pausing, Felton and Lyon (1966) exposed pigeons to schedules ranging from FR 25 to FR 150. Their results showed that the mean pause duration increased systematically as a function of FR size. A cumulative record from 1 bird reveals consistently brief pauses at FR 50 very much like the performance of Ferster and Skinner's pigeons at similar FR sizes. By comparison, the record for FR 150 shows some longer pauses but many short ones as well.

Felton and Lyon's (1966) results were replicated by Powell (1968) who, beginning with FR 10, moved pigeons through small sequential changes in FR size up to FR 160 and then back down to FR 10. His results showed that mean pausing increased as an accelerating function of FR size. Powell provided a detailed analysis of this effect by including a frequency distribution of individual pauses that showed that as ratio size increased the dispersion of pauses from shorter to longer also increased. Nevertheless, his data showed a relatively greater number of shorter pauses, even at FR 160. These data supply additional evidence that as FR size increases, longer pauses become more frequent. But they also demonstrate that shorter pauses still predominate, a fact that Ferster and Skinner also noted. Taken together, Felton and Lyon's and Powell's results confirm the standard description of performance under ratio schedules as break and run, even though Powell's distribution data and Felton and Lyon's cumulative records show that the majority of pauses were unaffected by increases in ratio size.

One problem with the Felton and Lyon (1966) and Powell (1968) experiments was that the main findings were summarized as functions showing the relation between ratio size and the mean pause, which, in retrospect, we know can obscure important variations in performance and, thus, tell only part of the story of pausing under FR schedules (Baron & Herpolsheimer, 1999).

Effects of Reinforcement Magnitude on Pausing

Ferster and Skinner (1957) did not study the effects of reinforcement magnitude on pausing per se, although they did report that pausing increased when the magazine hopper became partially blocked. Subsequent studies revealed that variations in reinforcement magnitude can have important effects on FR pausing. The results, however, have been conflicting. Powell (1969), in what perhaps was the first systematic study of magnitude, varied pigeons' access to grain using two durations (either 2.5 or 4 s), each correlated with a different colored key light. In different phases, FR values ranged from 40 to 70. His results showed an inverse relation between reinforcement magnitude and pausing. Longer pauses occurred with the shorter food duration, especially at the higher ratios, indicating that magnitude and ratio size interact to determine the extent of pausing. Interestingly, Powell suggested that pause durations could be controlled or stabilized at different FR requirements by manipulating reinforcement magnitudes. In essence, Powell's findings indicated that increasing the magnitude of reinforcement could mitigate the effects of increased ratio size on pausing.

Lowe et al. (1974) obtained different results in an experiment in which rats, performing on an FR 30 schedule, were exposed to a sweetened condensed milk solution mixed with water that varied in concentration from 10% to 70%. Contrary to Powell's (1969) report, Lowe et al. found that the length of the pause was a direct function of the magnitude of reinforcement, and they attributed the results to an unconditioned inhibitory aftereffect of reinforcement. There are a number of procedural differences between the studies by Powell (1969) and Lowe et al. (1974) that might account for the opposite results, including the species of the subject (pigeons vs. rats), the definition of magnitude (duration of access to grain vs. milk concentration), the presence of discriminative stimuli (multiple schedule vs. simple schedule), and the procedure of varying the concentration from one delivery to the next within the same session (within- vs. between-session procedures). It is also noteworthy that the literature on the effects of magnitude on pausing under FR schedules has included carefully done studies in which magnitude effects were absent (e.g., Harzem, Lowe, & Davey, 1975; Perone, Perone, & Baron, 1987).

In a discussion of these and other studies, Perone et al. (1987; see also Perone & Courtney, 1992) proposed an account that relies on an interaction between inhibitory and excitatory control. Specifically, ratio performance is assumed to reflect unconditioned inhibition from the previous reinforcer and excitation from stimuli correlated with the upcoming reinforcer. In Powell's (1969) study, in which increased magnitude reduced pausing, the excitatory stimuli were dominant because the multiple schedule provided cues that were correlated with the magnitude of the upcoming reinforcer. By comparison, in the Lowe et al. (1974) study, such stimuli were absent because the concentration level varied in an unpredictable manner, and the dominant influence was from the aftereffect of the prior reinforcer.

Pausing Under Progressive-Ratio Schedules

Our discussion of ratio pausing has centered on simple FR schedules (i.e., a procedure in which the schedule parameters are unchanged within sessions). A variant is the progressive-ratio (PR) schedule of reinforcement, a schedule in which the ratio increases in a series of steps during the course of the session until the ratio becomes so high that the subject stops responding (the so-called breaking point). The PR schedule was originally developed by Hodos (1960) as a way of assessing the value of a reinforcer using the breaking-point measure. However, the cumulative records provided in some reports (see Hodos & Kalman, 1963; Thomas, 1974) showed that pauses increased in duration as the ratio size increased, a finding that bears obvious similarities to the relation between FR size and pausing.

More recently, quantitative analyses of PR pausing have shown that the rate of increase from ratio to ratio can be described as an exponential function of ratio size throughout most of the range of ratios (Baron & Derenne, 2000; Baron, Mikorski, & Schlund, 1992). A similar relation can be discerned in FR pausing as a function of ratio size (e.g., Felton & Lyon, 1966). However, unlike FR schedules, the PR function steepens markedly during the last few ratios prior to the final breaking point, a finding perhaps not unexpected, given that PR performances ultimately result in extinction.
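One way to read "an exponential function of ratio size" is pause = a · exp(b · ratio), in which b is the slope of the function on a logarithmic pause axis. This functional form, the function name, and the fitting procedure below are assumptions made for illustration and should not be taken as the method used in the cited studies. The sketch estimates a and b by ordinary least squares on the logarithm of pause duration.

```python
import math


def fit_exponential(ratios, pauses):
    """Fit pause = a * exp(b * ratio) by least squares on ln(pause).

    ratios -- ratio sizes (responses per reinforcer)
    pauses -- pause durations in seconds, one per ratio (must be > 0)
    Returns the tuple (a, b).
    """
    if len(ratios) != len(pauses) or len(ratios) < 2:
        raise ValueError("need at least two paired observations")
    if any(p <= 0 for p in pauses):
        raise ValueError("pause durations must be positive to take logs")

    n = len(ratios)
    log_pauses = [math.log(p) for p in pauses]
    mean_x = sum(ratios) / n
    mean_y = sum(log_pauses) / n

    # Standard simple-regression slope and intercept on the log scale.
    sxx = sum((x - mean_x) ** 2 for x in ratios)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(ratios, log_pauses))
    b = sxy / sxx
    a = math.exp(mean_y - b * mean_x)
    return a, b
```

In this formulation, a smaller fitted b corresponds to a shallower pause-ratio function, that is, to pausing that grows more slowly as the ratio increases.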

Along with ratio size, reinforcement magnitude plays a parallel role in PR and FR schedules. Baron et al. (1992) found that the slope of the PR pause-ratio functions decreased with increases in the concentration of sweetened milk reinforcement, thus signifying lesser degrees of pausing. These results show that the PR schedule provides a convenient way to study variables that influence ratio pausing. A complicating factor, however, is that the contingencies embedded in the progression of increasing ratio sizes may also influence performances, particularly at the highest ratios (cf. Baron & Derenne, 2000).

Pausing Within Multiple FR FR Schedules

Identifying the variables that control pausing on simple FR schedules is difficult because such schedules hold fixed characteristics of the ratio, such as size and reinforcement magnitude. Under such schedules, the obvious place to look for the variables that control pausing is the just-completed ratio, as originally suggested by Skinner and subsequent researchers. Perhaps, as mentioned previously, the subject is pausing to rest from its labors, or perhaps the just-delivered food has reduced its deprivation level. The interpretive problem posed by simple-schedule data is that pausing could just as easily be attributed to the upcoming ratio as to the preceding ratio. The solution to this problem is to use multiple schedules with FRs in both components.

As explained by Ferster and Skinner (1957), multiple schedules are compound schedules in which two or more simple schedules of reinforcement, each correlated with a different stimulus, are alternated. Ferster and Skinner presented data on multiple FR FR schedule performances (e.g., FR 30 FR 190). However, these data did not clarify the nature of control over pausing because each of the two components was in effect for entire sessions, making it difficult to assess the contrast between the components. What was needed was a method with two (or more) FR components so that the characteristics of the two ratios could be varied independently. This essentially was the strategy followed by subsequent researchers (e.g., Baron & Herpolsheimer, 1999; Griffiths & Thompson, 1973).

For example, Griffiths and Thompson (1973) observed rats responding under several two-component multiple FR schedules (FR 20 FR 40, FR 30 FR 60, and FR 60 FR 120) that were programmed in semirandom sequences. Mixed schedules (i.e., schedules without correlated stimuli) provided control data. The results showed that prolonged pausing systematically occurred before the high ratios on the multiple schedules but not on the mixed schedules.

Griffiths and Thompson (1973) noted that in mixed or multiple schedules with unpredictable alternation between two ratio components of different lengths, there are four possible occasions for pausing: after a high ratio and before a high ratio (high-high); after a low ratio and before a high ratio (low-high); after a high ratio and before a low ratio (high-low); and after a low ratio and before a low ratio (low-low). The researchers presented data on the relative frequency distributions of pause durations for all four possible ratio combinations. The results showed that the longest pauses (30 s or greater) occurred most frequently before high ratios on the multiple schedules but not the mixed schedules. Moreover, in the multiple schedules, the frequency of longest pauses was greater before the low-high transition than before the high-high transition, suggesting to Griffiths and Thompson that “pausing in ratio schedules is largely a function of the relative size [italics added] of the upcoming ratio” (p. 234). The implication is that the preceding ratio and stimuli correlated with the upcoming ratio interacted to determine the length of the pause. These results led the researchers to suggest that although the term postreinforcement pause could still be used descriptively to refer to the pause after reinforcement, a more functionally appropriate term might be preratio pause, or the even more neutral between-ratio pause.
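The four-way classification of pauses described above can be sketched as a small routine that sorts each pause by the ratio just completed and the ratio about to begin. This is an illustrative sketch only: the function name, the data layout, and the sample values in the usage note are hypothetical and do not reproduce the analysis or data of Griffiths and Thompson.

```python
from collections import defaultdict


def pauses_by_transition(components, pauses):
    """Group pauses by the (preceding, upcoming) ratio pair.

    components -- sequence of "low" or "high", one label per ratio in the
                  order the ratios were presented
    pauses     -- pauses[i] is the pause (in seconds) that preceded ratio i;
                  pauses[0] is ignored because no ratio preceded it
    Returns a dict mapping labels such as "low-high" (after a low ratio,
    before a high ratio) to the list of pauses observed at that transition.
    """
    if len(components) != len(pauses):
        raise ValueError("components and pauses must have the same length")

    grouped = defaultdict(list)
    for i in range(1, len(components)):
        label = f"{components[i - 1]}-{components[i]}"
        grouped[label].append(pauses[i])
    return dict(grouped)
```

For example, calling the function with the hypothetical sequence `["low", "high", "high", "low", "low"]` and pauses `[None, 30, 12, 4, 3]` places the 30-s pause in the low-high category and the 12-s pause in the high-high category.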

Baron and Herpolsheimer (1999) replicated many features of the study by Griffiths and Thompson (1973), but they also uncovered a critical analytic problem. On average, pauses increased in duration as the ratio increased, and pausing was more a function of the upcoming ratio than the preceding ratio. However, the authors addressed a feature of the results that Griffiths and Thompson had not acknowledged: The distributions of individual pauses were positively skewed, and changes in average performance were due more to increased skew than to shifts of the entire distribution. In other words, more pauses tended to be of relatively short duration than relatively long duration, and this occurred even when relatively high ratios (e.g., 150) were employed.

Discrepancies between measures of central tendency and the individual values within the distribution raise a fundamental issue in the study of pausing: Should the individual pause serve as the unit of analysis or should the distribution of pauses be aggregated into a single value? Behavior analysts have traditionally viewed the practice of aggregating data from different subjects with suspicion because the average from a group of individuals may not provide a satisfactory picture of any member of the group (Sidman, 1960). By comparison, methods that characterize an individual's performance through an average of data from that individual are commonly regarded as acceptable. However, the results from studies on pausing suggest that an average based on within-subject aggregation may not provide a satisfactory picture of individual performances either. In particular, the use of the mean may exaggerate the degree of pausing and conceal the fact that often the subject pauses very little, if at all. These questions about ways of aggregating individual responses underscore an important issue raised previously: Although mean pausing increases as a function of ratio size, a significant number of ratios are accompanied by brief pauses. The origin of this mix between long and short pauses remains to be discovered.
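The way a mean can misrepresent a positively skewed distribution of pauses can be illustrated with a short sketch. The pause durations below are invented for illustration and are not data from any study; the 5-s criterion for a "brief" pause and the function name are likewise arbitrary assumptions.

```python
import statistics

# Hypothetical pause durations in seconds (illustrative only).
pauses_small_ratio = [2, 2, 3, 3, 3, 4, 4, 5, 5, 6]
pauses_large_ratio = [2, 2, 3, 3, 3, 4, 4, 5, 40, 90]


def summarize(pauses, brief_criterion=5):
    """Return the mean, the median, and the proportion of brief pauses."""
    return {
        "mean": statistics.mean(pauses),
        "median": statistics.median(pauses),
        "proportion_brief": sum(p <= brief_criterion for p in pauses) / len(pauses),
    }
```

With these invented values, the mean rises from 3.7 s to 15.6 s between the two sets, yet the median is 3.5 s in both and at least 80% of the pauses remain at or below 5 s. The change in the mean is carried entirely by two long pauses in the tail, which is the pattern of increased skew without a shift of the whole distribution.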

As noted earlier, pausing under multiple FR FR schedules depends on the transition from the preceding to the upcoming reinforcement. The research design exemplified by Griffiths and Thompson (1973) originally was directed toward pausing as a function of consecutive ratios that differed in size. Subsequently, Perone and his students investigated transitions from ratios that varied in terms of reinforcer magnitude (Galuska, Wade-Galuska, Woods, & Winger, 2007; Perone, 2003; Perone & Courtney, 1992) and response effort (Wade-Galuska et al., 2005). As is the case when different-sized FR schedules were contrasted, pausing was longest following a transition from a large to a small reinforcer or, in the case of response effort, a transition from a low to a high response-force requirement. By comparison, pauses were relatively short when the transition was from a small to a large magnitude or from a high to a low response force. Taken as a whole, these findings suggest that pausing is affected by the relative “favorableness” of the situation, with pauses becoming most pronounced when the transition is from a more to a less favorable contingency (Wade-Galuska et al., 2005).

We noted that pauses tend to be longest when the upcoming ratio is higher, requires more effort, or produces smaller reinforcement magnitudes, but that pausing may also be influenced by features of the previous ratio. Harzem and Harzem (1981) have summarized the view that pausing is due to an unconditioned inhibitory aftereffect of reinforcement. Such an effect should dissipate with the passage of time, depending on the magnitude of the just-completed reinforcer. One manipulation that can clarify the extent of the inhibitory effect is to include a period of time-out between the delivery of the reinforcer and the start of the next ratio. Several studies have confirmed that pausing is indeed reduced when such time-out periods are interposed on FR schedules (Mazur & Hyslop, 1982; Perone et al., 1987) and PR schedules (Baron et al., 1992).

Pausing Within Mixed FR FR Schedules

Multiple FR FR schedules provide insight into the degree to which pausing is controlled by upcoming, as opposed to preceding, contingencies. Because each component is correlated with a distinct stimulus, the discriminative control exerted by the upcoming ratio can be manipulated within a session. By comparison, mixed FR FR schedules, that is, schedules in which the FR contingencies vary within the session, lack discriminative stimuli associated with upcoming ratios that might exert control over responding. Consequently, control of pausing appears to be limited to characteristics of the previous ratio. When Ferster and Skinner (1957) compared mixed FR FR schedules with markedly different ratio sizes (e.g., FR 30 and FR 190), they found that pausing occurred within the ratio rather than during the transition from one ratio size to the next. Specifically, during FR 190, pausing occurred within the ratio after approximately 30 responses (the size of the lower ratio). Ferster and Skinner called this within-ratio pausing “priming,” stating that, “the emission of approximately the number of responses in the smaller ratio ‘primes’ a pause appropriate to the larger ratio” (p. 580).

Several subsequent experiments have provided quantitative analyses of priming (e.g., Crossman & Silverman, 1973; Thompson, 1964). For example, Crossman and Silverman presented detailed data using procedures that varied the proportion of FR 10 to FR 100 components within a mixed schedule. When most of the ratios were FR 10, pausing occurred chiefly after reinforcement (a finding consistent with performance under simple FR schedules). However, priming emerged with increases in the proportion of high ratios. Once again, subjects paused within the higher ratio after emission of the approximate number of responses in the lower ratio. Taken as a whole, the phenomenon of priming within mixed schedules points to the subject's high degree of sensitivity to the size of the FR. When the upcoming ratio is higher than the preceding one, and this difference is signaled by correlated stimuli (multiple schedule), pausing after reinforcement is the rule. When, however, experimenter-controlled stimuli are not available (mixed schedule), a pause commensurate with the lower ratio appears within the higher one. It appears, then, that animals can discriminate the size of the ratio based on their own responding (Thompson, 1964).

Pausing Under VR Schedules

Having completed our review of FR schedules (and FR variants), we now turn to a consideration of their VR counterparts. Ferster and Skinner (1957) assumed that pausing under FR schedules occurs because the reinforcer delivered at the end of one ratio is also an SΔ for responding at the beginning of the next ratio. Because the size of the upcoming ratio under VR schedules is typically unpredictable and sometimes quite low, the SΔ effects of the reinforcer are weak. As a result, pausing under VR schedules should be minimal.

In line with this interpretation, most of Ferster and Skinner's cumulative records of VR performances reveal uniformly high rates with few irregularities. For example, with typical birds, although response rates occasionally varied, pausing was clearly absent at moderate ratios (e.g., VR 40 or 50). Even on relatively high VR schedules (e.g., VR 360), pauses were short. Perhaps cumulative records such as these, as well as Ferster and Skinner's description of VR performance in general, led to the widespread belief that pauses under VR schedules are either very short or nonexistent. As we have already seen with FR schedules, however, research has revealed a more complex picture than that presented by Ferster and Skinner. Likewise, pausing under VR schedules is also more complex than Ferster and Skinner assumed.

Effects of Ratio Size and Reinforcement Magnitude in VR Schedules

Although studies on pausing under FR schedules are numerous, relatively few studies have been concerned with the variables that control pausing under VR schedules. With regard to the role of ratio size, Crossman, Bonem, and Phelps (1987) confirmed Ferster and Skinner's findings in a study in which pigeons responded under simple FR and VR schedules with ratio sizes ranging from 5 to 80. Both schedules yielded brief pauses at low-to-moderate ratios. However, under the FR 80 schedule pausing was relatively long, whereas under the VR 80 schedule pausing was relatively short.

Other research has called into question the view that significant pausing is absent under VR schedules. In a study with rats, Priddle-Higson, Lowe, and Harzem (1976) varied the mean ratio size (e.g., VR 10, VR 40, VR 80) across sessions and the magnitude of the reinforcer (concentration of sweetened milk) within sessions using a mixed schedule. Results showed that mean pause durations increased as a function of VR size. Moreover, the longest pauses occurred whenever the highest concentrations were employed, indicating that ratio size and reinforcement magnitude interacted. The finding of long pauses with a high-concentration reinforcer (means ranged from approximately 18 s to 43 s for individual subjects) echoes the finding described previously by Lowe et al. (1974) with mixed FR schedules. The implication is not just that marked VR pausing is possible but that VR and FR pausing are controlled by similar variables.

Blakely and Schlinger (1988) also examined interactions between mean ratio size and reinforcer magnitude under VR schedules. Pigeons responded on multiple VR VR schedules ranging from VR 10 to VR 70 in which access to food was available for 2 s in one component and 8 s in the other. Results showed that pausing increased with ratio size at both magnitudes. However, the effect was considerably more marked in the component with the smaller of the two magnitudes, a finding opposite to that of Priddle-Higson et al. (1976). In light of the analysis of excitation and inhibition by Perone et al. (1987), this difference is to be expected because the multiple-schedule procedure of Blakely and Schlinger introduced excitatory control by the stimuli correlated with the upcoming reinforcer magnitude.

A remaining issue concerns why some experiments have shown little or no VR pausing (e.g., Crossman et al., 1987; Ferster & Skinner, 1957), whereas others have shown marked pausing (e.g., Blakely & Schlinger, 1988; Priddle-Higson et al., 1976; Schlinger, Blakely, & Kaczor, 1990). Schlinger and colleagues have suggested that the chief cause is probably the size of the lowest ratio within the distribution of individual ratios that make up the VR schedule. When Ferster and Skinner studied VR schedules, the lowest ratio was “usually 1” (p. 391). For example, on a VR 360 schedule, the actual progression of ratios ranged from 1 to 720. Other researchers who have reported minimal VR pausing have followed suit (e.g., Crossman et al.). When Schlinger et al. set the lowest ratio to 1, they also found that minimal pausing occurred and that other manipulations in the schedule (e.g., mean ratio size and the magnitude of the reinforcer) had little effect on performances. However, when the lowest ratio was higher (additional values were 4, 7, or 10 for the lowest ratio), longer pausing occurred, and other manipulations were observed to have effects that paralleled the results with FR schedules. Thus, a procedural artifact (incorporating 1 as the lowest ratio in the VR distribution, found in much of the early research on pausing under VR schedules) is the likely reason that researchers and textbook authors assert that pausing is absent under VR schedules.
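The role of the lowest ratio can be made concrete with a short sketch. The Python below builds a list of individual ratios as an evenly spaced progression around a target mean, so that two VR schedules with the same mean can differ only in their lowest ratio. The function name `vr_progression`, the even spacing, and the default of 11 values are illustrative assumptions; they are not the progressions used by Ferster and Skinner or by Schlinger et al.

```python
import random


def vr_progression(mean_ratio, lowest_ratio, n_values=11, seed=None):
    """Return a shuffled list of individual ratios for a hypothetical VR schedule.

    Assumption: ratios are spaced evenly between lowest_ratio and
    2 * mean_ratio - lowest_ratio, so their average is (approximately,
    after rounding) mean_ratio.
    """
    if n_values < 2:
        raise ValueError("n_values must be at least 2")
    if not 1 <= lowest_ratio <= mean_ratio:
        raise ValueError("lowest_ratio must lie between 1 and mean_ratio")
    highest_ratio = 2 * mean_ratio - lowest_ratio
    step = (highest_ratio - lowest_ratio) / (n_values - 1)
    ratios = [round(lowest_ratio + i * step) for i in range(n_values)]
    # Shuffle so that the upcoming ratio is unpredictable from the last one.
    random.Random(seed).shuffle(ratios)
    return ratios


# Two VR 40 schedules that differ only in the lowest individual ratio.
vr40_low1 = vr_progression(40, lowest_ratio=1, seed=0)
vr40_low10 = vr_progression(40, lowest_ratio=10, seed=0)
print(sorted(vr40_low1))
print(sorted(vr40_low10))
```

Under these assumptions, both lists average about 40 responses per reinforcer, but only the first ever delivers a reinforcer after a single response, which is the feature that Schlinger et al. linked to minimal pausing.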

Pausing on the Human Level

Ferster and Skinner's (1957) research, together with the various experiments that followed, has yielded a wealth of data pertaining to performances under ratio schedules. This literature has produced a set of empirical principles that provides a framework for control by reinforcement schedules. Equally important is that the study of schedules has provided the basis for an understanding of human affairs as exemplified by Skinner's classic book, Science and Human Behavior (1953). However, a conspicuous feature of the literature we have reviewed so far is that the research comes only from the animal laboratory. We now turn to the question of the applicability of such findings to humans.

Although the value of animal models is sometimes challenged as a way to understand human behavior, this approach has met with considerable success within the behavioral and biological sciences. Skinner (1953) provided a vigorous defense of animal models in the following terms:

Human behavior is distinguished by its complexity, its variety, and its greater accomplishments, but the basic processes are not necessarily different. Science advances from the simple to the complex; it is constantly concerned with whether the processes and laws discovered at one stage are adequate for the next. It would be rash to assert at this point that there is no essential difference between human behavior and the behavior of the lower species; but until an attempt has been made to deal with both in the same terms, it would be equally rash to assert that there is. A discussion of human embryology makes considerable use of research on the embryos of chicks. Treatises on digestion, respiration, circulation, endocrine secretion, and other physiological processes deal with rats, hamsters, rabbits, and so on, even though the interest is primarily in human beings. The study of behavior has much to gain from the same practice. (p. 38)

Skinner's views, as well as those of such pioneering figures as Pavlov, Thorndike, and Watson, were shaped by the Darwinian assumption of biological continuity between species.

Behavioral researchers study nonhuman animals because they are more suited for experimental research. Researchers are reluctant to expose humans to the extreme forms of control and the extended study required for the steady-state method needed for experimentation. In addition, the complex histories brought by humans into the laboratory interact with the conditions under investigation. Of course, the experimental study of nonhuman subjects does not necessarily guarantee correct conclusions about human behavior. The development of comprehensive behavioral principles requires a balanced approach. Thus, detailed experimental data from the animal laboratory, although clearly important, must be combined with knowledge from the study of human behavior. To understand how the data on pausing with nonhuman subjects may relate to human behavior, we first consider results from experiments with human subjects; this is followed by a discussion of how a behavioral interpretation of the human problem of procrastination might proceed. We conclude with a brief consideration of how variables manipulated in experiments with nonhuman animals might be used to reduce human procrastination.

Experiments With Human Subjects

The most direct source of information about ratio pausing in humans comes from experiments with human subjects. The logic is the same as in the biomedical sciences, when an intermediate step is inserted between research with animal subjects and clinical practice. For example, the effectiveness of a drug developed in the animal laboratory is verified through systematic experiments with human volunteers. Only when the drug has been found to pass muster on the human level is it introduced within medical practice. In similar terms, behavioral questions can be addressed in the human laboratory to clarify a range of issues. But it is also the case that experimental research with human subjects, both behavioral and biological, poses ethical and methodological problems. However, within these boundaries, animal-based principles can be examined in experiments with humans, and the results can both buttress behavioral interpretations and inform behavioral interventions.

Ratio schedules of reinforcement appear in several basic and applied experiments with humans. Sometimes, ratio schedules are used as a tool to study some other process, and the particulars of ratio schedule performances are not reported. Even when ratio schedule performances are described, response rates or pausing may be presented in minimal detail. A further complication pertains to the results reported in research on ratio schedules in humans. Following the lead of Ferster and Skinner, most often the data have been in the form of selected cumulative records. In those cases in which the results have not been analyzed in quantitative terms, there often is room for different, sometimes conflicting, interpretations. Moreover, there are noteworthy instances in which a given researcher's conclusions differed from those reported by other researchers.

These difficulties notwithstanding, the available evidence suggests that human subjects do pause under FR schedules and pause very little under VR schedules. The exact degree of pausing varies substantially, depending on idiosyncratic features of the experimental procedure. At one extreme are studies in which FR pausing appears to be entirely absent (e.g., Sanders, 1969; Weiner, 1964). At the other are studies in which the results obtained with humans neatly mirror those found in the animal laboratory (e.g., R. F. Wallace & Mulder, 1973; D. C. Williams, Saunders, & Perone, in press). The remaining studies reveal a range of intermediate performances, depending on the degree of regularity in the pause–run pattern.

We located 13 experiments specifically concerned with human performance under simple FR schedules. In addition to experiments with normally functioning adults (Holland, 1958; Sanders, 1969; Weiner, 1964, 1966), studies include those with infants (Hillman & Bruner, 1972), young children (Long, Hammack, May, & Campbell, 1958; Weisberg & Fink, 1966; Zeiler & Kelley, 1969), mentally retarded persons (Ellis, Barnett, & Pryer, 1960; Orlando & Bijou, 1960; R. F. Wallace & Mulder, 1973; D. C. Williams et al., in press), and schizophrenics (Hutchinson & Azrin, 1961). Perhaps it is not surprising that individuals with a wide range of histories and other personal characteristics have produced such varied outcomes. Aside from subject characteristics, differences include preexperimental instructions (usually these are not explicitly specified), the type of reinforcer (candy, trinkets, points, etc.), and the characteristics of the manipulandum (e.g., telegraph key, plunger, touch screen, etc.). Undoubtedly, developmental level and verbal capability also play important roles.

In light of this variation, it is a formidable task to establish correspondences between specific characteristics of the experimental procedure and the degree of ratio pausing. The scarcity of human experiments that have addressed basic schedule parameters makes it difficult to draw definitive conclusions. Nonetheless, two promising leads are well worth mentioning. In an early study, R. F. Wallace and Mulder (1973) demonstrated that pause duration increased and decreased systematically according to an ascending and descending series of FR sizes. More recently, D. C. Williams et al. (in press), using multiple FR FR schedules (cf. Perone & Courtney, 1992), found that the extent of pausing was maximal when a large-magnitude reinforcer was followed by a small one. Studies such as these can serve as a model of effective research on the human level: replication, steady-state methods, and systematic variation of the controlling variables.

Behavioral Interpretation

In addition to experiments with human subjects, human behavior may be informed by research with nonhumans through behavioral interpretation, that is, correspondences between naturally occurring human behaviors and contingencies studied in the animal laboratory. More simply, researchers interpret complex human behaviors with principles derived from nonhuman laboratory investigations. For example, to illustrate FR response patterns, Mazur (2006) described his own observations when he was a student doing summer work in a hinge-making factory. He reported that the workers were paid on the basis of completion of 100 hinges (a piecework system), and that “once a worker started up the machine, he almost always worked steadily and rapidly until the counter on the machine indicated that 100 pieces had been made. At this point, the worker would record the number completed on a work card and then take a break” (p. 147). In other words, the workers exhibited the familiar break-and-run pattern associated with FR schedules. Although such interpretations of operant performances have an important place within science, by the usual standards they cannot be regarded as definitive. One cannot be certain about the role of the observer's expectation or whether the behavior sample is representative. Also, other theoretical systems may generate equally plausible interpretations of the same behavior.

No doubt, behavioral interpretations pose numerous unresolved questions. An essential first step is to develop ways of systematically recording human performances as they occur in the natural environment. A simple, but instructive, study was reported by the famous novelist Irving Wallace, who kept detailed charts of his own writing output (I. Wallace, 1977). This information, when expressed in the form of cumulative records, showed that “the completion of a chapter always coincided with the termination of writing for the day on which the chapter was completed” (p. 521). In other words, Wallace's records, which were based on the writing of several novels, resembled the FR break-and-run pattern. More broadly, field studies of behaviors in a variety of settings (e.g., the factory or the classroom) can provide detailed information that can interrelate descriptive observations with the results of experimental research (cf. Bijou, Peterson, & Ault, 1968). The science of physics has a long history that relates the events of the natural world (e.g., the tides of the oceans, the orbits of the planets) to the controlled conditions of the experimental laboratory. By comparison, behavior analysis continues to fall short of a set of agreed-upon procedures that characterize naturally occurring behaviors (Baron, Perone, & Galizio, 1991).

Notwithstanding these considerations, there are several aspects of pausing on ratio schedules that bear at least a superficial resemblance to the human problem of procrastination. In simple terms, to procrastinate is to delay or postpone an action or to put off doing something. Such definitions seem to involve something akin to the ratio pause in which an individual pauses before completing a ratio. Moreover, the two phenomena appear to arise from similar causes, in that the schedule parameters that contribute to pausing (e.g., higher ratios, smaller reinforcement magnitudes, greater response effort) are analogous to the situational variables that lead to procrastination (e.g., greater delay to reward, less reward, greater task aversiveness; cf. Howell, Watson, Powell, & Buro, 2006; Senécal, Lavoie, & Koestner, 1997; Solomon & Rothblum, 1984). The relation between pausing and procrastination has received some degree of recognition in past publications (Derenne, Richardson, & Baron, 2006; Shull & Lawrence, 1998), but serious efforts to model procrastination in ratio-schedule terms remain to be attempted.

We must remember, however, that the variables that control the use of the term procrastination may be very different among different speakers. In some instances, such variables may be homologous with those that control the use of the terms postreinforcement or preratio pause. In such cases, interpreting procrastinative behaviors in terms of ratio pausing may be justified. Conversely, when using the term procrastination with nonhumans (see Mazur, 1996, 1998), perhaps we should follow Skinner's lead in his article, “‘Superstition’ in the Pigeon” (1948), by putting “procrastination” in quotation marks, implying that we are speaking analogically. Regardless of whether human behavior is homologous to nonhuman (or even human) performances in the laboratory, knowledge of the variables that control pausing in the animal laboratory has the potential to modify the behaviors described as procrastination.

Applied Analysis of Behavior

A third way that human behavior can be approached is through the direct application of animal-based procedures in clinical settings. Behaviors typical of anxiety disorders and depression as well as socially important behaviors normally deficient among autistic and mentally retarded individuals can be cast within a research framework. For example, the clinician can describe problem behaviors before the intervention is introduced, and systematic records can be maintained of the outcome of the therapy. Applied behavior analysis has led to notable accomplishments in varied settings including clinics, institutions, schools, and organizations. Studies have provided convincing evidence that behavioral interventions lead to therapeutic changes that would not occur without the treatment. But application also has limitations from the standpoint of experimental analysis. In applied research, variables cannot be manipulated solely to advance scientific understanding. Perhaps it goes without saying that the primary concern must be the welfare of the client. When this value comes into conflict with scientific understanding, scientific understanding must give way.

Because some behaviors that we call procrastination may arise from causes similar to those characterized as ratio pausing, the knowledge gleaned from basic research on pausing might be used to ameliorate such behavior. To date, however, procrastination, as an area of clinical concern within applied behavior analysis, has generated interest mostly among behavioral educators concerned with improving academic behaviors (Brooke & Ruthven, 1984; Lamwers & Jazwinski, 1989; Wesp, 1986; Ziesat, Rosenthal, & White, 1978). Studies have shown that it is possible to reduce procrastination, for example, through self-reward or self-punishment procedures (Green, 1982; Harrison, 2005; Ziesat et al.). However, efforts of this kind have not led to widespread adoption, and little is known about how procrastination might be most effectively treated. More important for the present purpose, it appears that there have been no treatments of procrastination based on the results of research on ratio pausing from the animal (or human) laboratory.

Those who desire to reduce procrastination may, thus, be interested in research showing how normal patterns of pausing on ratio schedules can be reduced. Pause durations will shorten, for example, when reinforcement is contingent on completion of the whole ratio within a set length of time (Zeiler, 1970), when the pause alone must end before some criterion duration (R. A. Williams & Shull, 1982), or when time-out punishment is imposed as soon as the pause exceeds some criterion duration (Derenne & Baron, 2001). There is even some evidence that reductions in pausing can remain long after punishment is withdrawn (Derenne et al., 2006). Results from the animal laboratory using time-out punishment are encouraging insofar as they show ways to reduce procrastination-like behavior. However, comparable procedures remain to be developed by applied researchers. There are many unanswered questions. What reinforcers and punishers would be used? How would delivery of the consequences be programmed? What role is played by the individual's history and personal characteristics? Efforts to bridge the gap between basic and applied research on this issue are needed.
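The three pause-reducing contingencies just described can be contrasted in a brief sketch. The Python below classifies a single ratio under each rule, given the pause and the time spent completing the ratio. The function name `ratio_outcome`, the rule labels, and the simplified outcomes are illustrative assumptions loosely modeled on the descriptions above; the actual procedures of Zeiler (1970), R. A. Williams and Shull (1982), and Derenne and Baron (2001) differ in detail.

```python
def ratio_outcome(pause_s, run_s, rule, criterion_s):
    """Classify one ratio under a hypothetical pause-reducing contingency.

    pause_s: time from the last reinforcer to the first response (seconds)
    run_s: time from the first response to completion of the ratio (seconds)
    rule: one of "whole_ratio_limit", "pause_limit", or "timeout"
    criterion_s: the criterion duration used by the rule (seconds)
    """
    if rule == "whole_ratio_limit":
        # Reinforce only if the entire ratio is completed within the limit.
        total_s = pause_s + run_s
        return "reinforcer" if total_s <= criterion_s else "no reinforcer"
    if rule == "pause_limit":
        # Reinforce only if the pause alone ends before the criterion.
        return "reinforcer" if pause_s <= criterion_s else "no reinforcer"
    if rule == "timeout":
        # Impose a time-out as soon as the pause exceeds the criterion.
        return "time-out" if pause_s > criterion_s else "reinforcer"
    raise ValueError(f"unknown rule: {rule}")


# A 15-s pause followed by a 20-s run, judged against each rule.
for rule, criterion in [("whole_ratio_limit", 30), ("pause_limit", 10), ("timeout", 10)]:
    print(rule, ratio_outcome(15, 20, rule, criterion))
```

The sketch makes explicit that the first rule targets the whole ratio, whereas the other two target the pause alone, a distinction that any applied analogue would also have to settle.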

Summary and Conclusions

Ratio schedules of reinforcement follow a simple rule. Reinforcement is delivered after completion of a given number of responses (the FR schedule), a number that varies from ratio to ratio but averages a specified value (the VR schedule), or a number that progressively increases from ratio to ratio (the PR schedule). An essential feature of all three variants is that the reinforcement yield is proportional to the organism's work output. In other words, reinforcement rates are a direct function of response rates.
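The ratio rule lends itself to a compact sketch. The Python below expresses each schedule as a generator of successive response requirements and counts the reinforcers earned for a given number of responses. All names (`fixed_ratio`, `variable_ratio`, `progressive_ratio`, `reinforcers_earned`) are hypothetical, and the arithmetic step used for the PR schedule is an assumption; PR schedules can increase by other rules.

```python
import random


def fixed_ratio(size):
    """FR: every reinforcer requires the same number of responses."""
    while True:
        yield size


def variable_ratio(ratios, seed=None):
    """VR: the requirement is drawn at random from a list of ratios."""
    rng = random.Random(seed)
    while True:
        yield rng.choice(ratios)


def progressive_ratio(start, step):
    """PR: the requirement grows by a fixed step after each reinforcer.

    Assumption: an arithmetic progression, chosen only for illustration.
    """
    requirement = start
    while True:
        yield requirement
        requirement += step


def reinforcers_earned(schedule, total_responses):
    """Count the reinforcers delivered for a given number of responses."""
    earned = 0
    remaining = total_responses
    for requirement in schedule:
        if remaining < requirement:
            break
        remaining -= requirement
        earned += 1
    return earned


# Under FR 50, doubling the responses doubles the reinforcers earned.
print(reinforcers_earned(fixed_ratio(50), 1000))
print(reinforcers_earned(fixed_ratio(50), 2000))
```

Because reinforcers depend only on the count of responses, time spent pausing lowers the obtained reinforcement rate without changing the number of responses required per reinforcer.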

Although the procedures embodied in the ratio rule appear straightforward, numerous experiments have shown considerable complexity in the outcomes. In the case of FR and PR schedules, response rates typically appear as a break-and-run pattern: A pause follows delivery of the reinforcement followed by a high rate of responding to the next reinforcer. The duration of the pause has proven to be influenced by a number of variables, most notably the size of the ratio, the magnitude of the reinforcer, and the force of the response requirement. Moreover, even though performances on VR schedules often are described as indicating little or no pausing, research has shown that depending on the distribution of the individual ratios, such pausing can and does occur and is influenced by some of the same variables as the FR pause.

We have already considered the possibility that pausing is a consequence of the previous ratio. However, pausing on FR schedules cannot be attributed to such factors as time needed to consume the reinforcer, satiation, or fatigue. As a rule, subjects typically pause much less under equivalent-sized VR schedules while they consume as many reinforcers and emit as many responses. Moreover, using multiple FR FR schedules, researchers have shown that the duration of pausing does not depend on the size of the previous ratio, thus effectively countering the fatigue hypothesis. Identification of the variables that control pausing using simple FR schedules is difficult because characteristics of successive ratios, such as the size of the ratio, are held constant. More informative are the results of procedures that vary the size of successive ratios using multiple FR FR schedules that include discrete discriminative stimuli that define the components. These procedures have shown that pausing is more a consequence of the upcoming ratio than the preceding one. In both cases, we can say that pausing is more pronounced when the transition is from a more to a less favorable contingency, that is, from a lower to a higher ratio, from a larger to a smaller reinforcer magnitude, or from a lesser to a greater response-force requirement.

Beginning with Ferster and Skinner's (1957) original studies of ratio schedules, there has been debate about the appropriate interpretation of the results that we have described. Of these, three interpretations warrant special attention: (a) Pausing is the result of interacting processes of inhibition and excitation; (b) pausing is the outcome of a competition between reinforcers scheduled by the experimenter and reinforcers from other sources; and (c) pausing avoids the work needed to meet the ratio requirement. Noteworthy is that such views are primarily concerned with what are usually termed molecular effects; in other words, the models represent moment-to-moment effects on ratio performance, along the lines originally described by Ferster and Skinner (cf. Mazur, 1982).

Inhibitory and Excitatory Processes

In this view, pausing reflects the joint effect of the inhibition and the excitation of responding (see Leslie, 1996). We related the well-documented finding, obtained especially with mixed FR FR schedules, that inhibition originates in the unconditioned effects of the previously delivered reinforcing stimulus (Harzem & Harzem, 1981; Lowe et al., 1974; Perone & Courtney, 1992). However, it is possible that more than one source of inhibition exerts control. We also mentioned Skinner's (1938) suggestion that conditioned inhibition is responsible for pausing, in that the delivery of one reinforcer may serve as an SΔ for subsequent responding. Regardless of the origins of inhibition, there may be several reasons why pausing is kept in check. First, the inhibitory aftereffects of reinforcement dissipate with time. Second, the passage of time since the start of the ratio is correlated with the past delivery of reinforcers; thus, excitation of responding increases with time. Third, there is differential reinforcement of responses that occur soon after reinforcement insofar as shorter pauses lessen the delay to the next reinforcer.

Competing Reinforcers

The second account envisions a competition between concurrently available sources of reinforcement. On the one hand, subjects can work towards the reinforcer scheduled by the experimenter. On the other hand, subjects can obtain sources of reinforcement within the experimental apparatus that are not programmed by the experimenter. According to this view, pausing occurs whenever subjects choose an alternative reinforcer over the scheduled one. Alternative reinforcers may be added to the operant chamber, such as the opportunity to drink water when food pellets are the scheduled reinforcers, but more typical alternatives involve automatic reinforcers inherent in grooming, resting, and exploring (see Derenne & Baron, 2002; Shull, 1979). Although the efficacy of such alternatives may be low by comparison with the scheduled one, such reinforcers are immediately available. Thus, as a general principle, at the beginning of the ratio, when the probability of responding is lowest, subjects would be expected to select unscheduled smaller–sooner reinforcers over the scheduled larger–later one (cf. Rachlin & Green, 1972).

Work Avoidance

The third account focuses on aversive properties of responding. A subject confronted with the task of completing a substantial ratio of responding can preclude an unfavorable situation by pausing, that is, by escaping stimuli that signal the response requirement, thus avoiding having to respond. Research reported by Azrin (1961) and Thompson (1964) provided evidence that, if given the opportunity, subjects will avoid high FR requirements. In both experiments, subjects were provided with concurrent access to an FR schedule of reinforcement and to the opportunity to suspend the FR schedule (i.e., self-imposed time-out). Time-out responses were frequent at the beginning of the ratio (the time at which remaining work was greatest and pausing normally occurred), even though they had the effect of reducing reinforcement rates. More recently, Perone (2003) showed a similar pattern of escape responding under multiple FR FR schedules. Subjects not only paused longest when a large reinforcer on one ratio was followed by a smaller one on the next, but they were also more likely to make an escape response during large–small transitions.

These three accounts (the inhibition–excitation model, the competing reinforcer model, and the work-avoidance model) rely on different mechanisms to explain why pausing occurs. However, it would be incorrect, or at least premature, to conclude that one view is more accurate than the others. Each model addresses a different factor that may contribute to pausing, and these factors need not be viewed as mutually exclusive. Further, each can be used to explain major findings from research with ratio schedules of reinforcement, most notably the well-established findings that pause durations increase with ratio size and pause durations on FR schedules generally exceed those on VR schedules.

Consider the finding that pause durations increase with ratio size. According to the inhibition–excitation model, the delivery of a reinforcer signals the beginning of a period of time during which subsequent reinforcement is not immediately available. The extent to which inhibitory processes depress responding is dependent on the delay to reinforcement. An increase in the size of the ratio necessarily increases the minimum delay to reinforcement; therefore, inhibition (and pausing) increases as a function of ratio size. The competing reinforcer model also points to the delay to reinforcement as a critical variable. In this case, an increase in the delay to reinforcement alters the balance of choice between the delayed scheduled reinforcer and immediately available alternative reinforcers. With greater delay, the scheduled reinforcer becomes less efficacious and subjects therefore will more likely choose alternative sources of reinforcement early in the ratio. The consequence of this shift in choice is that pause durations are lengthened.

For the work-avoidance model, the critical consideration is that higher ratios, of necessity, require increased work. Insofar as increased work is more aversive (i.e., it increases the reinforcing value of escape or avoidance behavior; cf. Azrin, 1961; Thompson, 1964), higher ratios should be accompanied by longer pausing. Research with FR schedules has not been able to show that either the time until the reinforcer or the amount of required work alone is the critical factor responsible for pausing. Killeen (1969) obtained equivalent pause durations under FR and FI schedules of reinforcement when the average interreinforcement interval was held constant across schedules. However, others have found that FR and FI pause durations diverge, suggesting that other aspects of the contingencies, such as the relatively aversive work requirement on FR schedules, also have an important role (Aparicio, Lopez, & Nevin, 1995; Capehart, Eckerman, Guilkey, & Shull, 1980; Lattal, Reilly, & Kohn, 1998).

In the case of differences in pausing between VR and FR schedules, two models once again focus on the time until reinforcement, whereas the third addresses the amount of required work. A key finding is that pauses under VR schedules are controlled in part by the size of the lowest possible ratio (Schlinger et al., 1990). When the lowest possible ratio is 1, pausing is almost nonexistent, and when the ratio increases in size, VR pausing begins to resemble FR pausing in duration. Why should a low minimum ratio reduce pausing? According to the inhibition–excitation model, occasional reinforcement early in the ratio would reduce inhibition (i.e., under VR schedules the delivery of one reinforcer does not clearly predict a period of subsequent reinforcement unavailability). Under the competing reinforcer model, the control exerted by the scheduled reinforcer over behavior early in the ratio is increased when reinforcers are sometimes delivered after only one or a few responses. Therefore, subjects become less likely to select alternative reinforcers over responding for the scheduled reinforcer. For the work-avoidance model, it is the response requirement that matters. Subjects are less likely to put off responding if there is a possibility that the work requirement is very small.

A problem for all three models is the variation in pausing from ratio to ratio. Although research on FR pausing shows overall a direct relation between pause duration and ratio size (e.g., Felton & Lyon, 1966; Powell, 1968) and an inverse relation between pause duration and reinforcement magnitude (e.g., Powell, 1969), cumulative records and frequency distributions of pausing suggest that performances on a typical ratio are minimally affected by these variables. Subsequent research has indicated that aggregated data (most notably means) present an inaccurate picture because means tend to be disproportionately influenced by the extreme scores of skewed distributions (Baron & Herpolsheimer, 1999). A plausible account of ratio-to-ratio variation relies on the aforementioned inhibition–excitation model. Thus, the extent to which responding is regarded as inhibited or excited should wax and wane over time insofar as sequential increases in pausing are counteracted by decreases in pausing. However, evidence of the expected orderly cycles of increasing and decreasing pause durations within sessions has not been forthcoming thus far (Derenne & Baron, 2001).
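The point about means and skewed distributions can be illustrated with a small numerical sketch. The pause durations below are invented for illustration and are not data from Baron and Herpolsheimer (1999); the function name `summarize_pauses` is likewise hypothetical.

```python
import statistics

# Hypothetical pause durations (seconds) for ten successive ratios:
# most pauses are brief, but two are very long.
pauses_s = [3, 4, 4, 5, 5, 6, 6, 7, 90, 120]


def summarize_pauses(pauses):
    """Return the mean, the median, and the share of pauses below the mean."""
    mean_s = statistics.mean(pauses)
    median_s = statistics.median(pauses)
    share_below_mean = sum(p < mean_s for p in pauses) / len(pauses)
    return {"mean": mean_s, "median": median_s, "share_below_mean": share_below_mean}


print(summarize_pauses(pauses_s))
```

With these invented values, the mean is 25 s and the median is 5.5 s, and 8 of the 10 pauses fall below the mean. The mean therefore describes almost none of the individual ratios, which is why a typical ratio can appear largely unaffected by a variable that shifts the mean.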

Another puzzle pertains to the finding that the break-and-run pattern persists despite extensive exposure to the FR schedule. The consequence is that overall reinforcement rates engendered by responding are reduced below optimal levels. By comparison, extended exposure to other schedules (e.g., FI) improves response efficiency insofar as response rates are reduced without impairing reinforcement rate (see Baron & Leinenweber, 1994). However, an analysis in terms of FR pausing does not take into account the potential role of alternative reinforcers. From this standpoint, the net reinforcing consequence of responding must include reinforcement from both sources. Interestingly, pausing can be modified by imposing additional contingencies on the FR schedule. For example, if the pause must be shorter than some criterion duration for reinforcement to be delivered at the end of the ratio, then subjects will typically pause no longer than the criterion allows (R. A. Williams & Shull, 1982). Furthermore, even after the additional contingencies are removed, subjects may continue to pause less than they did before the procedure started, suggesting that forced exposure to a more efficient response pattern may sensitize subjects to overall reinforcement rates (Derenne et al., 2006).

Finally, we addressed the issue of the relevance of animal models for an understanding of the impact of ratio schedules of reinforcement on the world of human affairs. We concluded that the general principles that have emerged from our review, although imperfect, shed light on human behavior. In particular, pausing under ratio schedules may illuminate the human problem of procrastination in the sense that procrastination is influenced by the size of the upcoming task, the relative difficulty in performing it, and perhaps the magnitude of the expected reinforcer. In fact, based on the interpretation of pausing under ratio schedules as an avoidance response, some behaviors we label as procrastination may occur because they avoid the upcoming task. This might also momentarily increase the effectiveness of alternative reinforcers and might explain why, when faced with a large effortful task, we tend to find other things to do even if those other activities involve consequences that are typically not very effective reinforcers (e.g., washing the dishes).

So, what can we conclude about pausing under ratio schedules after 50 years of research? We can begin to answer this question by addressing schedule effects in general. As Zeiler (1984) has written,

Suffice it to say that we still lack a coherent explanation of why any particular schedule has its specific effects on behavior. … Whether the explanation has been based on interresponse time, reinforcement, reinforcer frequency, relations between previous and current output, direct or indirect effects, or whatever, no coherent and adequate theoretical account has emerged. Forty years of research has shown that a number of variables must be involved—schedule performances must be multiply determined—but they provide at best a sketchy picture and no clue as to interactive processes. (p. 489)

So it is with the variables that influence pausing under ratio schedules. They are likely numerous and complex. Nonetheless, our review has revealed that amid the variability, there is a consistent orderliness across myriad experiments over the past 50 years. Zeiler (1984) pessimistically concluded that any attempt to understand schedules of reinforcement at a more molecular level is doomed to fail “because of the complexity of the interactions, and also because many of the controlling variables arise indirectly through the interplay of ongoing behavior and the contingencies” (p. 491). But as we have discovered, innovative methods and probing research questions are moving researchers closer to a more fundamental understanding of why pausing under ratio schedules occurs.

Footnotes

A pause is usually defined as the time from the delivery of a reinforcer until emission of the first response of the subsequent ratio and has been variously referred to as a postreinforcement or preratio pause. Although there is evidence that pauses will sometimes occur after the first response (Ferster & Skinner, 1957; Griffiths & Thompson, 1973; Mazur & Hyslop, 1982), we use the terms pause and pausing to refer to the postreinforcement or preratio pause.

References

- Alling K, Poling A. The effects of differing response-force requirements on fixed-ratio responding of rats. Journal of the Experimental Analysis of Behavior. 1995;63:331–346. doi: 10.1901/jeab.1995.63-331.

- Aparicio C.F, Lopez F, Nevin J.A. The relation between postreinforcement pause and interreinforcement interval in conjunctive and chain fixed-ratio fixed-time schedules. The Psychological Record. 1995;45:105–125.

- Azrin N.H. Time-out from positive reinforcement. Science. 1961;133:382–383. doi: 10.1126/science.133.3450.382.

- Baron A, Derenne A. Progressive-ratio schedules: Effects of later schedule requirements on earlier performances. Journal of the Experimental Analysis of Behavior. 2000;73:291–304. doi: 10.1901/jeab.2000.73-291.

- Baron A, Herpolsheimer L.R. Averaging effects in the study of fixed-ratio response patterns. Journal of the Experimental Analysis of Behavior. 1999;71:145–153. doi: 10.1901/jeab.1999.71-145.

- Baron A, Leinenweber A. Molecular and molar aspects of fixed-interval performance. Journal of the Experimental Analysis of Behavior. 1994;61:11–18. doi: 10.1901/jeab.1994.61-11.

- Baron A, Mikorski J, Schlund M. Reinforcement magnitude and pausing on progressive-ratio schedules. Journal of the Experimental Analysis of Behavior. 1992;58:377–388. doi: 10.1901/jeab.1992.58-377.

- Baron A, Perone M, Galizio M. Analyzing the reinforcement process at the human level: Can application and behavioristic interpretation replace laboratory research? The Behavior Analyst. 1991;14:93–105. doi: 10.1007/BF03392557.

- Bijou S.W, Peterson R.F, Ault M.H. A method to integrate descriptive and experimental field studies at the level of data and empirical concepts. Journal of Applied Behavior Analysis. 1968;1:175–191. doi: 10.1901/jaba.1968.1-175.

- Blakely E, Schlinger H. Determinants of pausing under variable-ratio schedules: Reinforcer magnitude, ratio size, and schedule configuration. Journal of the Experimental Analysis of Behavior. 1988;50:65–73. doi: 10.1901/jeab.1988.50-65.

- Brooke R.R, Ruthven A.J. The effects of contingency contracting on student performance in a PSI class. Teaching of Psychology. 1984;11:87–89.

- Capehart G.W, Eckerman D.A, Guilkey M, Shull R.L. A comparison of ratio and interval reinforcement schedules with comparable interreinforcement times. Journal of the Experimental Analysis of Behavior. 1980;34:61–76. doi: 10.1901/jeab.1980.34-61.

- Crossman E.K. Pause relationships in multiple and chained fixed-ratio schedules. Journal of the Experimental Analysis of Behavior. 1968;11:117–126. doi: 10.1901/jeab.1968.11-117.

- Crossman E.K, Bonem E.J, Phelps B.J. A comparison of response patterns on fixed-, variable-, and random-ratio schedules. Journal of the Experimental Analysis of Behavior. 1987;48:395–406. doi: 10.1901/jeab.1987.48-395.

- Crossman E.K, Silverman L.T. Altering the proportion of components in a mixed fixed-ratio schedule. Journal of the Experimental Analysis of Behavior. 1973;20:273–279. doi: 10.1901/jeab.1973.20-273.

- Derenne A, Baron A. Time-out punishment of long pauses on fixed-ratio schedules of reinforcement. The Psychological Record. 2001;51:39–51.

- Derenne A, Baron A. Preratio pausing: Effects of an alternative reinforcer on fixed- and variable-ratio responding. Journal of the Experimental Analysis of Behavior. 2002;77:273–282. doi: 10.1901/jeab.2002.77-273.

- Derenne A, Richardson J.V, Baron A. Long-term effects of suppressing the preratio pause. Behavioural Processes. 2006;72:32–37. doi: 10.1016/j.beproc.2005.11.011.

- Ellis N.R, Barnett C.D, Pryer M.W. Operant behavior in mental defectives: Exploratory studies. Journal of the Experimental Analysis of Behavior. 1960;3:63–69. doi: 10.1901/jeab.1960.3-63.

- Felton M, Lyon D.O. The post-reinforcement pause. Journal of the Experimental Analysis of Behavior. 1966;9:131–134. doi: 10.1901/jeab.1966.9-131.

- Ferster C.B, Skinner B.F. Schedules of reinforcement. New York: Appleton-Century-Crofts; 1957.

- Galuska C.M, Wade-Galuska T, Woods J.H, Winger G. Fixed-ratio schedules of cocaine self-administration in rhesus monkeys: Joint control of responding by past and upcoming doses. Behavioural Pharmacology. 2007;18:171–175. doi: 10.1097/FBP.0b013e3280d48073.

- Green L. Minority students' self-control of procrastination. Journal of Counseling Psychology. 1982;6:636–644.

- Griffiths R.R, Thompson T. The post-reinforcement pause: A misnomer. The Psychological Record. 1973;23:229–235.

- Harrison H.C. The three-contingency model of self-management. Unpublished doctoral dissertation, Western Michigan University; 2005.

- Harzem P, Harzem A.L. Discrimination, inhibition, and simultaneous association of stimulus properties. In: Harzem P, Zeiler M.D, editors. Predictability, correlation, and contiguity. Chichester, England: Wiley; 1981. pp. 81–128.

- Harzem P, Lowe C.F, Davey G.C.L. Aftereffects of reinforcement magnitude: Dependence on context. Quarterly Journal of Experimental Psychology. 1975;27:579–584.

- Hillman D, Bruner J.S. Infant sucking in response to variations in schedules of feeding reinforcement. Journal of Experimental Child Psychology. 1972;13:240–247. doi: 10.1016/0022-0965(72)90023-9.

- Hodos W. Progressive ratio as a measure of reward strength. Science. 1960;134:943–944. doi: 10.1126/science.134.3483.943.

- Hodos W, Kalman G. Effects of increment size and reinforcer volume on progressive ratio performance. Journal of the Experimental Analysis of Behavior. 1963;6:387–392. doi: 10.1901/jeab.1963.6-387.

- Holland J.G. Human vigilance: The rate of observing an instrument is controlled by the schedule of signal detections. Science. 1958;128:61–67. doi: 10.1126/science.128.3315.61.

- Howell A.J, Watson D.C, Powell R.A, Buro K. Academic procrastination: The pattern and correlates of behavioural postponement. Personality and Individual Differences. 2006;40:1519–1530.

- Hutchinson R.R, Azrin N.H. Conditioning of mental-hospital patients to fixed-ratio schedules of reinforcement. Journal of the Experimental Analysis of Behavior. 1961;4:87–95. doi: 10.1901/jeab.1961.4-87.

- Killeen P. Reinforcement frequency and contingency as factors in fixed-ratio behavior. Journal of the Experimental Analysis of Behavior. 1969;12:391–395. doi: 10.1901/jeab.1969.12-391.

- Lamwers L.L, Jazwinski C.H. A comparison of three strategies to reduce student procrastination in PSI. Teaching of Psychology. 1989;16:8–12.

- Lattal K.A. Scheduling positive reinforcers. In: Iversen I.H, Lattal K.A, editors. Experimental analysis of behavior. New York: Elsevier; 1991. pp. 87–134.

- Lattal K.A, Reilly M.P, Kohn J.P. Response persistence under ratio and interval reinforcement schedules. Journal of the Experimental Analysis of Behavior. 1998;70:165–183. doi: 10.1901/jeab.1998.70-165.

- Leslie J.C. Principles of behaviour analysis. Amsterdam: Harwood Academic Publishers; 1996.

- Long E.R, Hammack J.T, May F, Campbell B.J. Intermittent reinforcement of operant behavior in children. Journal of the Experimental Analysis of Behavior. 1958;1:315–339. doi: 10.1901/jeab.1958.1-315.

- Lowe C.F, Davey G.C.L, Harzem P. Effects of reinforcement magnitude on interval and ratio schedules. Journal of the Experimental Analysis of Behavior. 1974;22:553–560. doi: 10.1901/jeab.1974.22-553.

- Malott R.W. The effects of prefeeding in plain and chained fixed ratio schedules of reinforcement. Psychonomic Science. 1966;4:285–287.

- Mazur J.E. A molecular approach to ratio schedule performance. In: Commons M.L, Herrnstein R.J, Rachlin H, editors. Quantitative analyses of behavior: Vol. 2. Matching and maximizing accounts. Cambridge, MA: Ballinger; 1982. pp. 79–110.

- Mazur J.E. Procrastination by pigeons: Preference for larger, more delayed work requirements. Journal of the Experimental Analysis of Behavior. 1996;65:159–171. doi: 10.1901/jeab.1996.65-159.

- Mazur J.E. Procrastination by pigeons with fixed-interval response requirements. Journal of the Experimental Analysis of Behavior. 1998;69:185–197. doi: 10.1901/jeab.1998.69-185.

- Mazur J.E. Learning and behavior. Upper Saddle River, NJ: Pearson Prentice Hall; 2006.

- Mazur J.E, Hyslop M.E. Fixed-ratio performance with and without a postreinforcement timeout. Journal of the Experimental Analysis of Behavior. 1982;38:143–155. doi: 10.1901/jeab.1982.38-143.

- McMillan J.C. Percentage reinforcement of fixed-ratio and variable-interval performances. Journal of the Experimental Analysis of Behavior. 1971;15:297–302. doi: 10.1901/jeab.1971.15-297.

- Morse W.H, Dews P.B. Foreword to Schedules of Reinforcement. Journal of the Experimental Analysis of Behavior. 2002;77:313–317. doi: 10.1901/jeab.2002.77-313.

- Orlando R, Bijou S.W. Single and multiple schedules of reinforcement in developmentally retarded children. Journal of the Experimental Analysis of Behavior. 1960;3:339–348. doi: 10.1901/jeab.1960.3-339.

- Perone M. Negative effects of positive reinforcement. The Behavior Analyst. 2003;26:1–14. doi: 10.1007/BF03392064.

- Perone M, Courtney K. Fixed-ratio pausing: Joint effects of past reinforcer magnitude and stimuli correlated with upcoming magnitude. Journal of the Experimental Analysis of Behavior. 1992;57:33–46. doi: 10.1901/jeab.1992.57-33.

- Perone M, Perone C.L, Baron A. Inhibition by reinforcement: Effects of reinforcer magnitude and timeout on fixed ratio pausing. The Psychological Record. 1987;37:227–238.

- Powell R.W. The effects of small sequential changes in fixed-ratio size upon the post-reinforcement pause. Journal of the Experimental Analysis of Behavior. 1968;11:589–593. doi: 10.1901/jeab.1968.11-589.

- Powell R.W. The effect of reinforcement magnitude upon responding under fixed-ratio schedules. Journal of the Experimental Analysis of Behavior. 1969;12:605–608. doi: 10.1901/jeab.1969.12-605.

- Priddle-Higson P.J, Lowe C.F, Harzem P. Aftereffects of reinforcement on variable-ratio schedules. Journal of the Experimental Analysis of Behavior. 1976;25:347–354. doi: 10.1901/jeab.1976.25-347.