Abstract

Objective

Previous studies from our laboratory have shown that a restricted stimulus bandwidth can have a negative effect upon the perception of the phonemes / s/ and / z/, which serve multiple linguistic functions in the English language. These findings may have important implications for the development of speech and language in young children with hearing loss because the bandwidth of current hearing aids generally is restricted to 6–7 kHz. The primary goal of the current study was to expand our previous work to examine the effects of stimulus bandwidth on a wide range of speech materials, to include a variety of auditory-related tasks, and to include the effects of background noise.

Design

Thirty-two children with normal hearing and 24 children with sensorineural hearing loss (7–14 years) participated in this study. To assess the effects of stimulus bandwidth, four different auditory tasks were used: 1) nonsense syllable perception, 2) word recognition, 3) novel-word learning, and 4) listening effort. Auditory stimuli recorded by a female talker were low-pass filtered at 5 and 10 kHz and presented in noise.

Results

For the children with normal hearing, significant bandwidth effects were observed for the perception of nonsense syllables and for words, but not for novel-word learning or listening effort. In the 10-kHz bandwidth condition, children with hearing loss showed significant improvements for monosyllabic words, but not for nonsense syllables, novel-word learning, or listening effort. Further examination, however, revealed marked improvements for the perception of specific phonemes. For example, bandwidth effects for the perception of / s/ and / z/ were not only significant, but much greater than those seen in the group with normal hearing.

Conclusions

The current results are consistent with previous studies which have shown that a restricted stimulus bandwidth can negatively affect the perception of / s/ and / z/ spoken by female talkers. Given the importance of these phonemes in the English language and the tendency of early caregivers to be female, an inability to perceive these sounds correctly may have a negative impact on both phonological and morphological development.

Keywords: hearing loss, children, speech perception, novel-word learning, listening effort

Introduction

The fricative / s/ is the third or fourth most frequently occurring consonant and serves multiple linguistic functions in the English language (Rudmin, 1983). For example, word final / s/ and its voiced cognate / z/ can denote plurality of nouns (e.g., trucks), third person present tense (e.g., she drinks), possession (e.g., Susie’s house), and contractions (e.g., it’s, she’s). Studies have shown that fricatives are observed in both babble and early words of children with normal-hearing (NH) (McCune & Vihman, 2001; Sotto, Creach, Hunter, et al., Reference Note 2; Stoel-Gammon, 1985). In a recent longitudinal study of speech and language development in children with hearing loss (HL) and children with NH, however, Moeller, Stelmachowicz, Hoover, et al. (Reference Note 1) found that fricatives are markedly delayed in children with HL compared to their peers with NH. Specifically, 71% of the children with NH produced fricatives in their inventories by 24 months of age. In contrast, only 33% of the children with HL produced fricatives at this same point in time.

Because the peak energy of / s/ spoken by female and child talkers tends to occur in the 6.3–8.8 kHz range (Boothroyd, Erickson, & Medwetsky, 1994; Boothroyd & Medwetsky, 1992; McGowan & Nittrouer, 1988; Nittrouer, 1995; Stelmachowicz, Pittman, Hoover, et al., 2001), it is possible that the typical bandwidth of hearing aids may limit audibility of / s/ and / z/ for these talkers. In turn, inconsistent exposure to / s/ across talkers, situations, and contexts may delay the acquisition of morphological rules. A series of studies from our laboratory was conducted to address this issue. Kortekaas & Stelmachowicz (2000) evaluated the effects of low-pass filtering on the perception of / s/ as part of an inflectional morpheme (e.g., cats, socks) for adults and 5-, 7-, and 10-year-old children with NH. Low-pass filtering had a greater negative effect on the performance of children than adults. In a subsequent study, Stelmachowicz et al. (2001) investigated the effects of low-pass filtering on the perception of / s/ for four groups of listeners (children and adults with NH; children and adults with HL). CV and VC nonsense syllables were created with the vowel / i/ and the consonants / f/, /θ/, and / s/. Test stimuli were produced by an adult female, an adult male, and a child and were low-pass filtered at six frequencies from 2 through 9 kHz. In general, children with NH performed more poorly than the adults and subjects with HL performed more poorly than their counterparts with NH. For children with both NH and HL, mean performance for the male talker reached its maximum at a bandwidth of 5 kHz. For the female and child talkers, however, mean performance continued to improve up to a bandwidth of 9 kHz for both groups of children.

In a follow-up study, Stelmachowicz, Pittman, Hoover, et al. (2002) investigated the ability of 40 children with HL (7–13 years) to perceive / s/ and / z/ as inflectional morphemes while wearing their personal hearing aids. Test items (“Show me babies”) were spoken by an adult male and an adult female and the child’s task was to select the correct picture. Results revealed significant main effects for talker and plurality, with poorer performance for the female talker. A factor analysis determined that the frequency range important for the perception of / s/ and / z/ was 2–4 kHz and 2–8 kHz for the male and female talkers, respectively.

Other studies have suggested that the production and/or perception of fricatives may be difficult for listeners with hearing loss. Elfenbein, Hardin-Jones, & Davis (1994) found that children with hearing loss have difficulty with the accurate production of fricatives and affricates. Cornelisse, Gagné, & Seewald (1991) demonstrated that the spectrum of self-generated speech (measured at the hearing aid microphone) contains less energy above 2 kHz than when listening to another talker in face-to-face conversation. Thus, reduced audibility of the high-frequency components of speech would be expected to impair self-monitoring and thus may contribute to the poorer production of fricatives. To examine the ability of young children to monitor their own speech, Pittman, Stelmachowicz, Lewis, et al. (2003) compared the spectral characteristics of self-generated speech recorded at the ear and at a position 30 cm in front of the mouth. Subjects were adults and 2–4 year old children with NH. Long-term average spectra were calculated for sentences and short-term spectra were calculated for selected phonemes. Relative to the 0° azimuth condition, speech levels at the ear were 8–10 dB lower for frequencies ≥ 4 kHz for both groups.

The results of these studies suggest that the bandwidth of current hearing aids may contribute to known difficulties with the perception and production of fricatives and affricates (Elfenbein et al., 1994) and to delays in the development of noun and verb morphology (Davis, Elfenbein, Schum, et al., 1986). However, it appears that multiple factors (i.e., both listener and talker characteristics, degree of hearing loss, hearing aid bandwidth) may have a negative effect on the audibility of the high-frequency components of speech and that the effects of bandwidth may be task dependent. Because adults with acquired hearing losses generally are able to use semantic and syntactic cues in the perception of speech (Nittrouer & Boothroyd, 1990), these acoustic effects may be inconsequential for this population. However, infants and young children, who are still in the process of developing speech and language, may be disadvantaged by these acoustic effects. The longitudinal data cited earlier (Moeller et al., submitted) support this concern.

However, Pittman, Lewis, Hoover, et al. (2005) examined the effects of stimulus bandwidth on rapid word learning in 5- to 14-year-old children with NH and HL. CVCVC nonsense words were created using the 24 English consonants (3 per word). Half of the test words were low-pass filtered at 4 kHz and the other half at 9 kHz. Children viewed a brief slideshow containing the 8 nonsense words in the context of a simple story. Each word appeared in the story three times. After viewing the story twice, children were asked to identify the words from a closed set of 8 pictures. Although word-learning for the group with HL was significantly poorer than that of the children with NH, bandwidth effects were not significant.

The goal of the current study was to expand our previous work to examine the effects of stimulus bandwidth on a wider range of auditory skills. Although our earlier studies indicate that a reduction in the audibility of the high-frequency components of speech results in degraded performance for / s/ and / z/, no studies have included a wide range of fricatives. It is also of interest to examine the perception of nonsense syllables and of words to determine if the previously observed bandwidth effects also occur at the word level. Although Pittman et al. (2005) failed to demonstrate a bandwidth effect for novel-word learning, this previous study was conducted in quiet. It is possible that the combined effects of restricted bandwidth and noise may influence performance. Finally, it is possible that a restricted bandwidth may increase listening effort even if performance is not affected. Thus, the current study was designed to assess the effects of stimulus bandwidth on four different auditory tasks: 1) nonsense syllable perception, 2) word recognition, 3) novel-word learning, and 4) listening effort in children with NH and HL.

Materials and Methods

Subjects

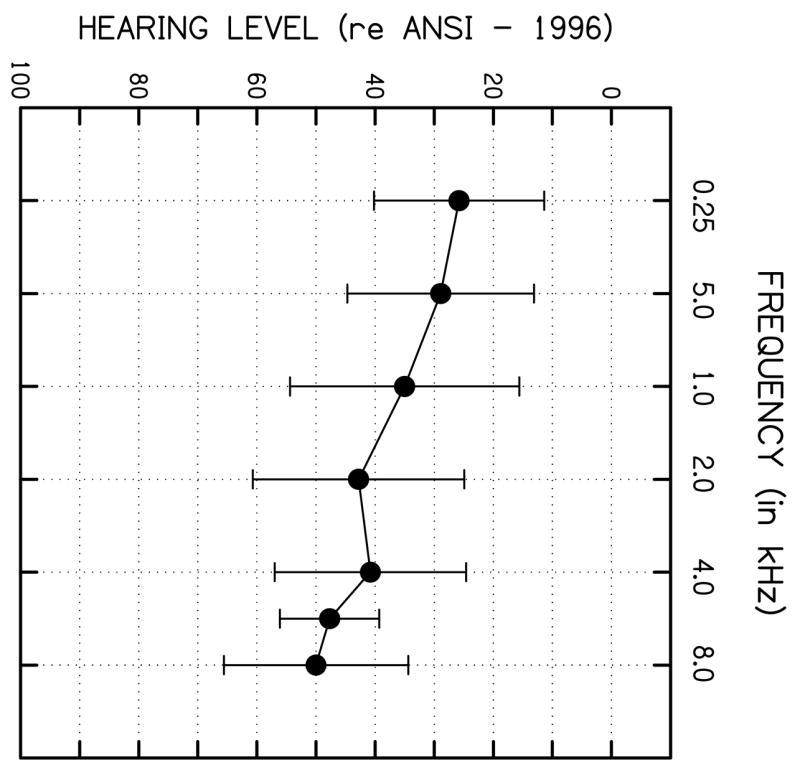

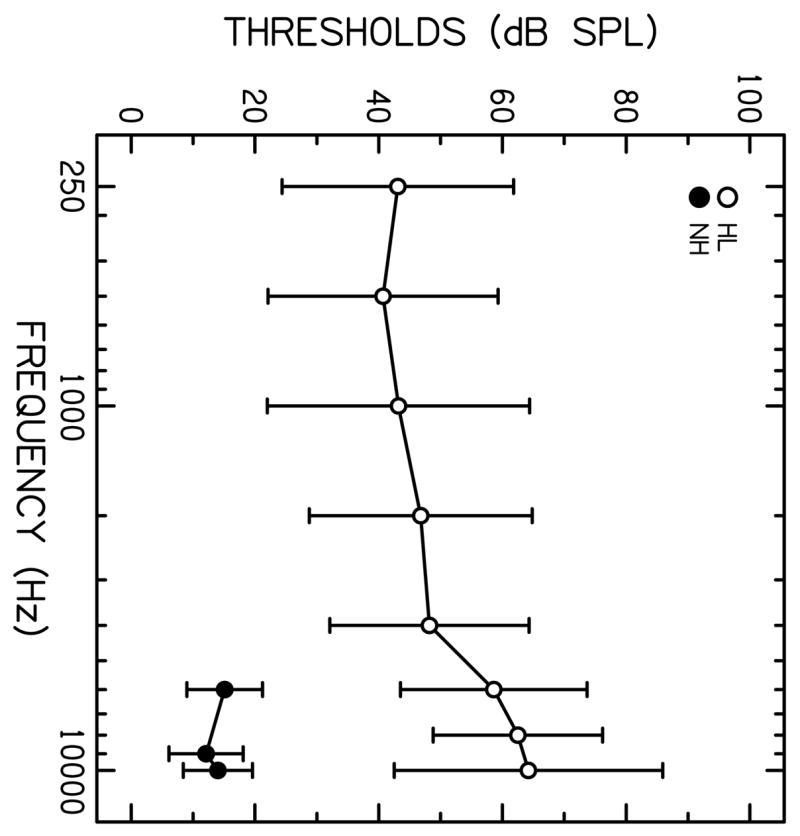

Thirty-two children with NH (16 males and 16 females) and 24 children with HL (15 males and 9 females) participated in this study. All children were between the ages of 7 and 14 years with equal numbers of children in four age groups (7–8, 9–10, 11–12, and 13–14 years). Normal hearing was defined as hearing thresholds <20 dB HL at octave frequencies from 0.25 to 8 kHz. The children with HL had mild-to-moderately-severe sensorineural losses. Fig. 1 shows the average (better ear) audiometric thresholds (+1 SD) for the children with hearing loss. To ensure adequate audibility in the high frequencies for the children with NH, auditory thresholds also were obtained at 6, 9, and 10 kHz. Pure-tone signals were presented via wide-band earphones (Sennheiser, 25D) and thresholds were obtained in 5-dB steps using a customized program that calculates a running mean and standard deviation of all reversals and terminates when the standard error reaches 1.6 dB (Stelmachowicz and Jesteadt, 1984). The same procedure was used to obtain thresholds from 0.25 through 10 kHz for the group with HL. The thresholds for both groups are shown in Fig. 2.
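The stopping rule described above can be illustrated with a minimal sketch. It assumes that the standard error is the sample SD of the reversal levels divided by the square root of their count, and that the threshold estimate is the running mean of the reversals. The function name, the minimum number of reversals, and the example levels are hypothetical and are not taken from the original program (Stelmachowicz and Jesteadt, 1984).

```python
import math
import statistics


def threshold_from_reversals(reversal_levels, se_criterion=1.6, min_reversals=4):
    """Return (threshold_estimate, n_reversals_used), or None if the
    criterion is never met.

    Assumption: SE = sample SD of the reversals / sqrt(number of reversals).
    """
    seen = []
    for level in reversal_levels:
        seen.append(level)
        if len(seen) < min_reversals:
            continue  # too few reversals for a stable SD estimate
        se = statistics.stdev(seen) / math.sqrt(len(seen))
        if se <= se_criterion:
            return statistics.mean(seen), len(seen)
    return None


# Hypothetical reversal levels (dB) from a 5-dB-step track.
result = threshold_from_reversals([30, 20, 30, 20, 25, 20, 25, 20])
```

With these illustrative levels the track stops only after the eighth reversal, which shows how a fixed standard-error criterion lets the number of reversals vary with the consistency of the listener's responses.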

Figure 1.

Mean auditory thresholds (in dB HL) for the children with hearing loss. Values represent the better ear threshold at each frequency. Error bars denote +/− 1 SD from the mean.

Figure 2.

Mean auditory thresholds (in dB SPL) measured with the experimental transducer. Values represent the better ear threshold +/− 1 SD. Only data at 6, 9, and 10 kHz are shown for the group with normal hearing.

It should be noted that we did not attempt to quantify the existence of cochlear “dead regions” in the group with HL. Although the Threshold Equalizing Noise (TEN) test developed by Moore, Huss, Vickers, et al. (2000) was designed as a clinically-feasible method to identify cochlear dead regions, some investigators have questioned the validity of this procedure in identifying non-functional areas of the cochlea (Vestergaard, 2003). For example, Simpson, McDermott, and Dowell (2005) found that nine of ten subjects who were diagnosed with dead regions using the TEN test demonstrated improved speech perception as the audibility of the high-frequency components of speech was increased. Similarly, Mackersie, Crocker, and Davis (2004) reported improvement in consonant identification in quiet and in low-level noise for subjects who were thought to have cochlear dead regions. In addition, Summers, Molis, Musch, et al. (2003) have reported that the results of the TEN test were inconsistent with psychophysical tuning curve results in 8 of 18 subjects tested. In the absence of a valid test for the detection of dead regions, all subjects with thresholds ≤ 70 dB HL from 250–8000 Hz in their better ear were included.

Procedures

Frequency shaping for subjects with HL

To provide appropriate audibility for the children with HL, frequency shaping was applied to all test stimuli using target-sensation-level values (in dB) for average conversational speech provided by the DSL (v.5.0) fitting algorithm (Scollie, Seewald, Cornelisse, et al., 2005). This fitting algorithm only provides targets for frequencies ≤ 6 kHz, so 6 kHz targets also were used to set gain at 8 kHz. Because of the range of hearing thresholds at higher frequencies, system limitations, and concerns for loudness discomfort, the gain applied at 9 and 10 kHz was determined on an individual basis. On average, the peak SL of speech (presented at 65 dB SPL) in the 9–10 kHz region was 14 dB (assuming a 12 dB peak to rms ratio). It is important to note that for a severe hearing loss, this would not necessarily ensure audibility of the weaker components of speech.

Typically, audibility of speech is estimated by comparing hearing levels to the long-term average of the stimuli recorded in the ear canal using a probe microphone. Because small variations in probe-tube placement can result in large variations in SPL in the high frequencies (Gilman & Dirks, 1986), an alternate procedure was used. First, for each child with HL, auditory thresholds were obtained using the same transducer that would be used during the experiment (Sennheiser, 25D). Next, the gain required to meet target sensation levels for the long-term average speech spectrum at 65 dB SPL was calculated across frequency and stored for each child. Stimuli presented to subjects with HL were either preprocessed or frequency shaped online during the course of the experiments.
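The gain calculation described above can be sketched as follows. The sketch assumes that sensation level is the amplified speech band level minus the listener's threshold, both in dB SPL for the same transducer, so that the required gain is threshold plus target SL minus the unamplified speech band level. All numeric values are hypothetical placeholders, not DSL targets or data from this study.

```python
def required_gain(threshold_db, target_sl_db, speech_level_db):
    """Gain (dB) needed so that speech sits target_sl_db above threshold.

    Assumption: SL = (speech level + gain) - threshold, all in dB SPL
    measured with the same transducer.
    """
    return threshold_db + target_sl_db - speech_level_db


# Hypothetical per-frequency values in dB; keys are frequencies in kHz.
thresholds = {1: 50.0, 2: 55.0, 4: 60.0, 6: 65.0, 8: 70.0}
speech_levels = {1: 50.0, 2: 45.0, 4: 40.0, 6: 38.0, 8: 35.0}
target_sl = {1: 20.0, 2: 18.0, 4: 15.0, 6: 12.0}

# Targets stop at 6 kHz, so the 6 kHz target is reused at 8 kHz.
target_sl[8] = target_sl[6]

gains = {
    f: required_gain(thresholds[f], target_sl[f], speech_levels[f])
    for f in thresholds
}
```

Storing the gains per child, as the text describes, allows the same values to be applied whether stimuli are preprocessed or shaped online.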

Measures of phonology, language, and short-term memory

The Bankson-Bernthal Quick Screen of Phonology (Bankson and Bernthal, 1990) was administered to identify production errors that could influence scoring of verbal responses. All but one of the children with NH and all but four of the children with HL scored 100% on this test. Scores for these five children ranged from 82 to 93% and in many cases, test items missed by a particular child reflected the same phonologic error (/ f/ for /θ/ in the words thumb and mouth). Most substitutions appeared to be applied consistently. If a verbal response was unclear, however, the examiner would ask for clarification face to face (e.g., did you mean / f/ as in off or /θ/ as in moth?). To estimate the receptive vocabulary of each child, the Peabody Picture Vocabulary Test [PPVT-III, Form A or B; (Dunn and Dunn, 1997)] was administered. The Digit Span Test (Wechsler, 1991) was administered as a measure of short-term memory. During the administration of these tests, the children with hearing loss wore their personal hearing aids. One child with NH and one child with HL had standard scores > 2 SDs above normal on the PPVT. Two of the children with HL had scores > 2 SDs above average and one had a standard score more than 2 SDs below average on the Digit Span Test. Table 1 shows mean results of the PPVT and Digit Span test by age group for both groups of children.

Table 1.

PPVT standard and Digit Span scale scores (Mean = 10) by age group for both the normal-hearing and hearing-impaired children. Standard deviations are shown in parentheses.

| Age Group (yrs) | NH children: PPVT | NH children: Digit Span | HI children: PPVT | HI children: Digit Span |
|---|---|---|---|---|
| 7–8 | 106 (13.6) | 11.1 (2.4) | 101 (10.8) | 9.0 (3.0) |
| 9–10 | 116 (9.0) | 10.5 (3.6) | 103 (19.4) | 12.2 (1.8) |
| 11–12 | 108 (14.8) | 9.8 (3.1) | 103 (17.6) | 9.5 (3.8) |
| 13–14 | 114 (11.6) | 10.6 (3.3) | 107 (15.1) | 8.5 (5.5) |
| Mean | 111 (10.5) | 10.5 (3.0) | 104 (15.2) | 9.8 (3.8) |

Fricative perception

Test stimuli were nine English fricatives and affricates (target phonemes: tʃ, dʒ, f, v, s, z, ʃ, θ, ð) presented as VCs in an / i/ context. To provide an estimate of performance that would reflect typical conversational environments, three female talkers produced 5 exemplars of each nonsense syllable. These 135 stimuli were digitally recorded (Computerized Speech Lab) using a dynamic microphone with a response from 0.055 to 14 kHz (Shure, SM48). Speech tokens were amplified (Kay, Model 4500) and sampled at a rate of 44.1 kHz with a quantization of 16 bits. These 135 nonsense syllables were low-pass filtered (>100 dB/octave) at both 5 and 10 kHz to create 270 tokens. All stimuli were mixed with generic speech-shaped noise at a 10 dB SNR (i.e., no attempt was made to match the noise spectrum to individual talkers).
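Mixing a token with noise at a fixed SNR can be sketched as below. The sketch assumes that SNR is defined from the overall rms levels of the speech token and the noise; the text does not state how the SNR was computed, and the toy signals and function names are hypothetical.

```python
import math


def rms(samples):
    """Root-mean-square amplitude of a sequence of samples."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))


def mix_at_snr(speech, noise, snr_db):
    """Scale noise so that 20*log10(rms(speech)/rms(noise)) == snr_db, then add."""
    if len(noise) < len(speech):
        raise ValueError("noise must be at least as long as speech")
    noise = noise[: len(speech)]
    scale = rms(speech) / (rms(noise) * 10 ** (snr_db / 20))
    scaled_noise = [n * scale for n in noise]
    mixture = [s + n for s, n in zip(speech, scaled_noise)]
    return mixture, scaled_noise


# Toy signals standing in for a speech token and speech-shaped noise.
speech = [math.sin(2 * math.pi * 0.05 * i) for i in range(200)]
noise = [((i * 37) % 11 - 5) / 5 for i in range(200)]
mixture, scaled_noise = mix_at_snr(speech, noise, snr_db=10)
achieved_snr = 20 * math.log10(rms(speech) / rms(scaled_noise))
```

Because the noise is scaled relative to each token, the same nominal SNR holds across talkers even though the noise spectrum is generic rather than talker-specific.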

Responses were recorded on a touch-screen monitor that displayed a panel with 11 choices (9 phonemes, the vowel / i/ in isolation, and an “other” category). The youngest children were instructed to repeat each nonsense syllable and a second experimenter in the sound booth (who was blinded to the stimuli) voted on behalf of the child. Older children (usually 9 years and above) were familiarized with the response choices and voted independently. Fifteen different exemplars of each nonsense syllable at each bandwidth were presented for a total of 270 presentations. Feedback was given immediately after each response using a video game format. This feedback was not contingent upon correct responses, but was used only to maintain interest in the task. Results were analyzed in terms of overall percent correct and error patterns by bandwidth.

Novel-word learning paradigm

It has been demonstrated that, under certain circumstances, children are able to learn new words with as few as one or two exposures. This concept has been referred to as “fast mapping”, “quick incidental learning”, and “novel-word learning” (Dollaghan, 1987; Gathercole, Hitch, Service, et al., 1997; Gilbertson & Kamhi, 1995; Rice, Buhr, & Nemeth, 1990). Stelmachowicz, Pittman, Hoover, et al. (2004a) found that this type of paradigm was sensitive to presentation level in a study involving children with both NH and HL. They speculated that this type of task may be an effective way to compare various forms of hearing-aid signal processing. In the current study, eight CVC novel words were constructed using 16 commonly occurring consonants in English. Table 2 shows the orthographic representation and the phonetic transcription of each word as well as the associated picture. These eight novel words were embedded in a 4-minute, animated story (Microsoft, PowerPoint). The story was read by a female talker and recorded as described previously. The eight novel words were paired with pictures of fictitious objects which appeared three times within the context of the story. Half of the novel words were filtered at 5 kHz and the other half were filtered at 10 kHz. To reduce the perceptual effects associated with filtering at 5 kHz, story segments immediately preceding and following these four words were also filtered. The story was presented in speech-shaped noise at a 10 dB SNR.

Table 2.

Novel words and their associated pictures.

| Word | Phonetic Transcription | Picture |
|---|---|---|
| Foss | fɔs | |
| Wul | wʌl | |
| Hain | heIn | |
| Kathe | kæð | |
| Mide | maId | |
| Teap | tip | |
| Riv | riv | |
| Zeb | zεb | |

It has been well documented that many factors can affect word learning (Oetting, Rice, & Swank, 1995; Pittman et al., 2005). For example, some children may be more likely to remember a word due to the color or shape of an object, while others may recall words that sound interesting or similar to known words. To minimize the effects of unknown variables on word learning, two versions of the same story were created. To counterbalance the bandwidth conditions, the four words filtered at 5 kHz in Version 1 were filtered at 10 kHz in Version 2 and vice versa. Within each group (NH, HL) half of the children heard Version 1 and the other half heard Version 2. After viewing the story once, the children were given a short break, after which the story was viewed again. Immediately following the second presentation of the story, word learning was evaluated using an identification task in which pictures of all eight words were displayed on a touch-screen monitor. Children were instructed to touch the picture that corresponded to the word presented. As described earlier, feedback was given after each response. Each word was presented 10 times for a total of 80 trials. Results were analyzed in terms of overall percent correct by bandwidth.

Listening effort

A modified dual-task paradigm was used to assess listening effort. In this paradigm, subjects were required to perform two tasks concurrently. The primary task, word recognition, consisted of two conditions (stimuli filtered at either a 5 or 10 kHz bandwidth) and the secondary task was digit recall. Based on a limited capacity model (Kahneman, 1973), one would expect a decrease in performance on one or both tasks when the tasks compete for the same resources. Stimuli for the word recognition task were 100 monosyllabic PBK words (Haskins, 1949) recorded by a female talker and mixed with speech-shaped noise at an SNR of +8 dB¹. These PBK words were filtered at both 5 kHz and 10 kHz to create two versions that were counterbalanced across subjects. Baseline performance for word recognition (single task) was measured for 25 filtered and 25 unfiltered PBK words presented via wideband earphones at 60 dB SPL for the subjects with NH and with appropriate frequency shaping for each subject with HL.

Stimuli for the digit recall task (secondary task) consisted of randomly-generated sets of 5-digit numbers displayed on a computer monitor. Baseline performance for digits was measured for 10 trials of 5-digit items displayed on a computer monitor. Subjects viewed each set of digits for 3 seconds. After an additional 10 seconds, they were asked to verbally repeat the digits in order. It is important to note that the rehearsal of digits, words, or letters in working memory is considered a verbal process, in which the items are stored and repeated in an acoustic-articulatory code. This “phonological loop” is considered to involve the same resources as utilized for word recognition according to Baddeley, Gathercole & Papagno (1998). The evidence supporting the verbal nature of this task is long-standing, starting with the studies of Conrad (1964) demonstrating that errors made recalling visually-presented letters tended to be “sounds-like” errors and not “looks-like” errors. This auditory-articulatory code for visually presented digits, letters and words has been a part of Baddeley’s (1986) working model from the beginning. Subsequent findings of rhyme interference, articulatory suppression and production-duration effects on the memory for visually-presented stimuli have strengthened this view.

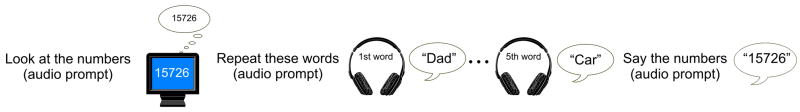

Prior to data collection, children were told that the most important task was repetition of words. Interpretation of results in a dual-task paradigm is contingent upon a focus on the primary task. As the primary task becomes more difficult (in this case by filtering the words), performance on the secondary task (digit recall) is expected to decrease. A memory pre-load technique (Baddeley & Hitch, 1974) was used to increase processing load in short-term memory, creating competition for attentional resources. Fig. 3 illustrates the sequence of events. At the beginning of each trial, 5 digits were displayed on the computer monitor for 3 seconds, followed by a verbal prompt to “Repeat these words”. Five monosyllabic words (either filtered or unfiltered) then were presented via earphones one at a time. Instructions to the child were to “continue rehearsing numbers” throughout the word repetition task. After every five words, children were prompted to recall the preloaded digits. Filter condition was alternated after every five words and counterbalanced across children. This sequence was repeated 10 times for a total of 5 digit recall trials and 25 monosyllabic words for each filter condition. Data were analyzed in terms of percent correct word recognition and digit recall.
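The block structure described above can be sketched as a simple schedule builder. It assumes that each block consists of a digit pre-load, five words in a single filter condition, and a digit recall, and that the starting condition is the variable counterbalanced across children. The function name and the word labels are hypothetical.

```python
def build_dual_task_schedule(words_5k, words_10k, first="5 kHz", block_size=5):
    """Return a list of blocks; each block has a filter condition and its words.

    Conditions alternate after every block of `block_size` words, and each
    block is preceded by a digit pre-load and followed by digit recall.
    """
    pools = {"5 kHz": list(words_5k), "10 kHz": list(words_10k)}
    order = [first, "10 kHz" if first == "5 kHz" else "5 kHz"]
    n_blocks = (len(pools["5 kHz"]) + len(pools["10 kHz"])) // block_size
    schedule = []
    for b in range(n_blocks):
        cond = order[b % 2]
        words = [pools[cond].pop(0) for _ in range(block_size)]
        schedule.append({"condition": cond, "words": words})
    return schedule


# Placeholder word labels; the study used PBK words.
words_5k = [f"w5_{i}" for i in range(25)]
words_10k = [f"w10_{i}" for i in range(25)]
schedule = build_dual_task_schedule(words_5k, words_10k, first="5 kHz")
```

Ten alternating blocks of five words yield 5 digit recall trials and 25 words per filter condition, matching the totals given in the text.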

Figure 3.

Schematic of the task sequence in one dual-task trial.

Results

The primary goal of the current study was to investigate the effects of stimulus bandwidth on a variety of auditory-related skills that play an important role in the development of speech and language. These include perception of nonsense syllables, word recognition, word learning, and listening effort. Results will be presented in each of these areas along with supporting statistical analyses. Unless stated otherwise, a three-way mixed analysis of variance (ANOVA) was conducted with bandwidth as the within-subjects factor and hearing group and age group as between-subjects factors.

Fricative Perception

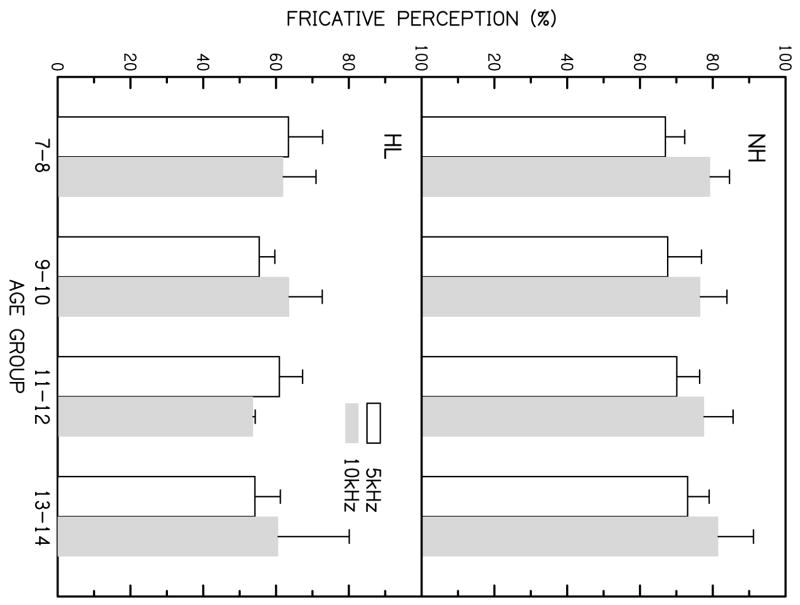

Results of the fricative perception task are shown as a function of age group in Fig. 4. The top and bottom panels show results for the children with NH and HL, respectively. In both cases, no obvious age-related trends are apparent. However, performance at the 10 kHz bandwidth appears to improve only for the group with NH. A three-way ANOVA (bandwidth × hearing status × age group) revealed significant main effects for hearing status [F(1, 48) = 64.63; p< .001] and bandwidth [F(1, 48) = 33.65; p< .001] as well as bandwidth × hearing status [F(1,48) = 15.97; p<.001] and bandwidth × hearing status × age group [F(3, 48) = 3.15; p< .05] interactions. No main effect of age group or hearing × age group interaction was observed. Visual inspection of Fig. 4 suggested that the main effect of bandwidth may be due primarily to the results from the NH group. Therefore, separate two-way ANOVAs (bandwidth × age group) were conducted for each group. Results revealed a significant main effect for bandwidth for the group with NH [F(1,28) = 73.84; p<.001] and a significant bandwidth × age group interaction for the group with HL [F(3,20) = 3.34; p<.05]. An analysis of fricative perception by talker revealed similar scores across all three talkers (74.8%, 74.9%, and 74.2%).

Figure 4.

Mean fricative perception (in %) as a function of age group for children with normal hearing (upper panel) and children with hearing loss (lower panel). Error bars represent +/− 1 SD. Open and filled bars represent the 5 and 10 kHz conditions, respectively.

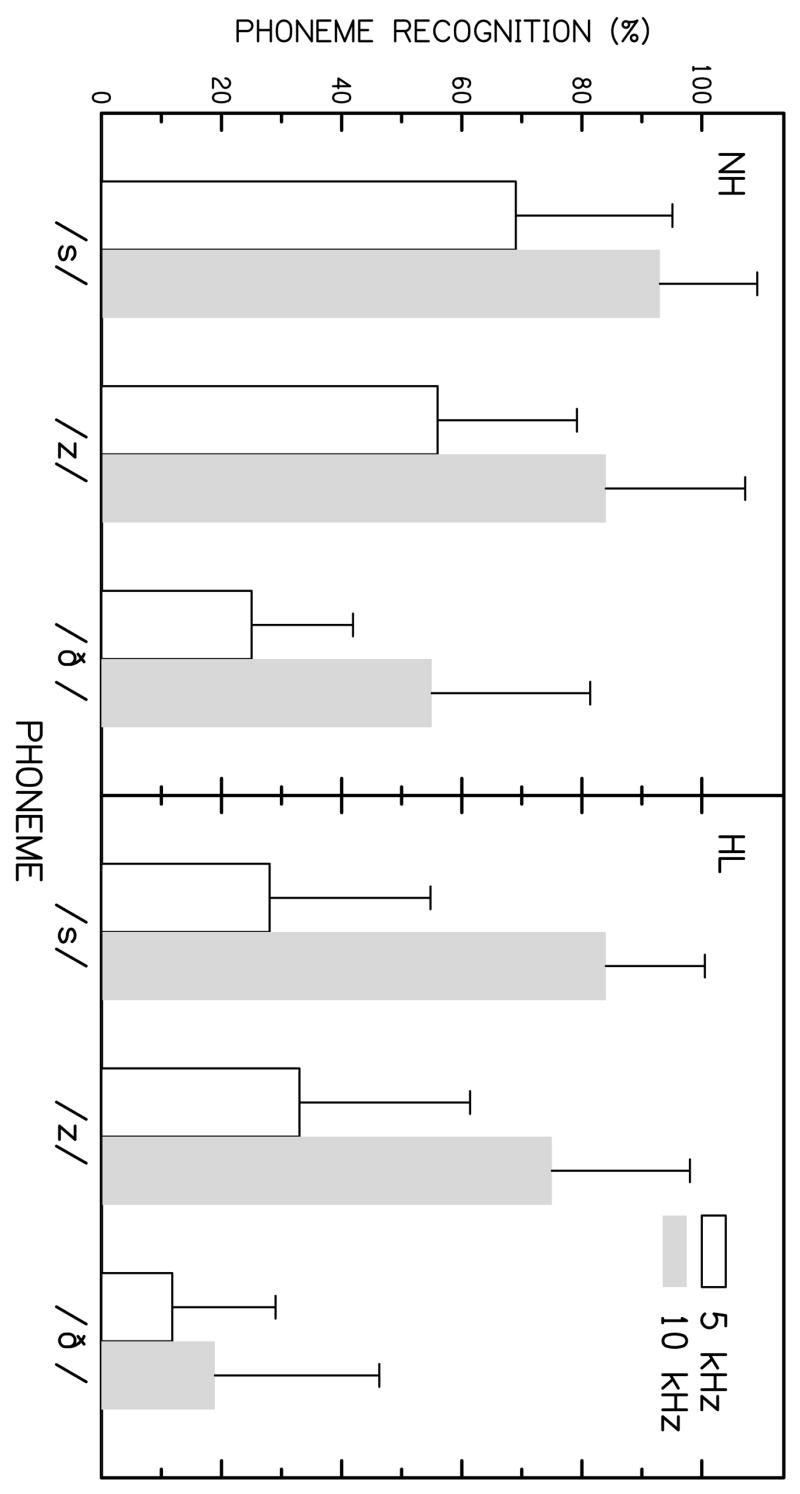

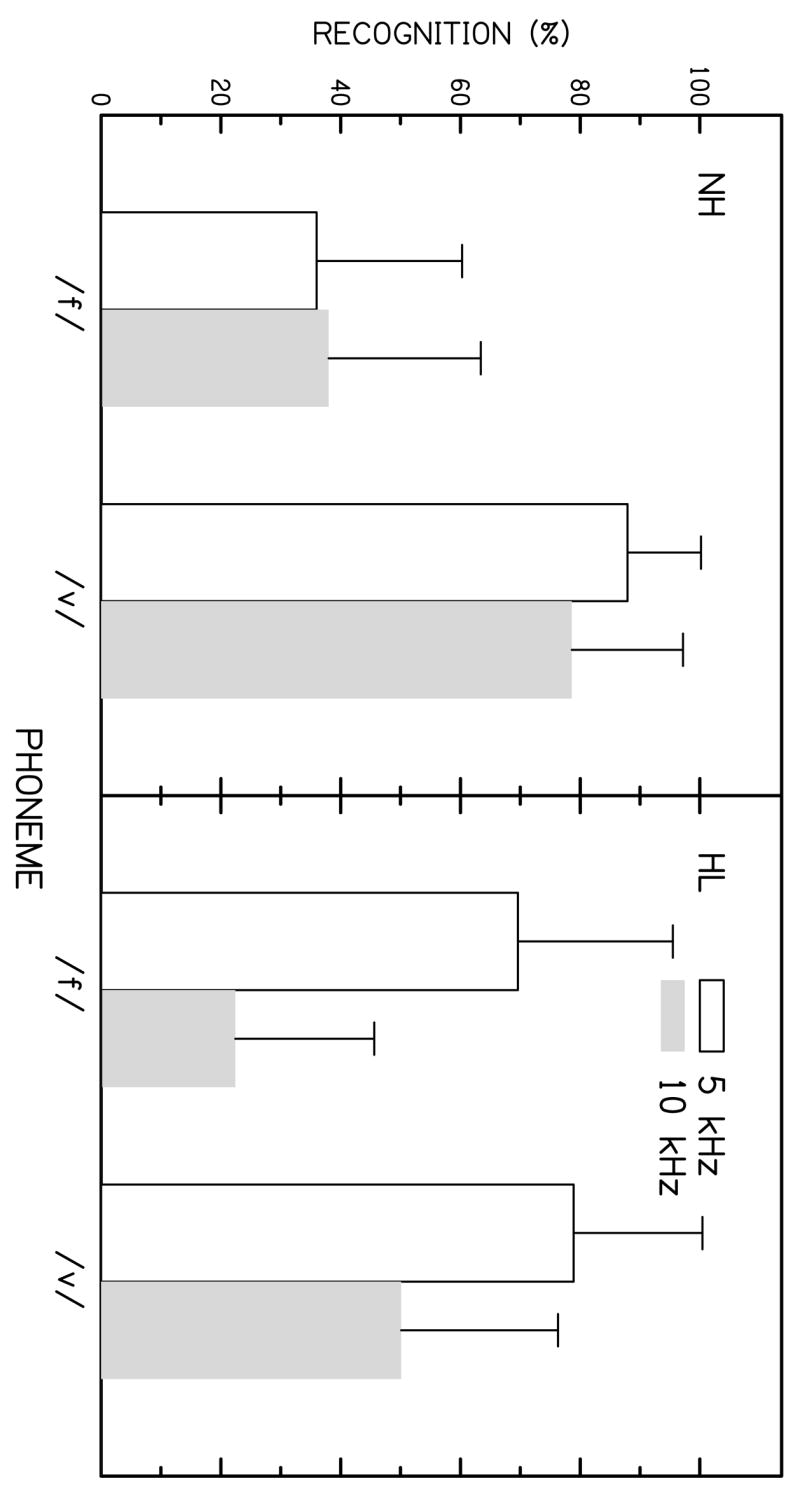

The lack of a significant main effect for bandwidth in the group with HL was surprising given the results of previous studies (Stelmachowicz et al., 2001; 2002; 2004a; Stelmachowicz, Pittman, Hoover, et al., 2004b). To explore these effects in more detail, an error analysis was conducted for the 9 phonemes used in this study. As shown in Fig. 5, results revealed relatively large bandwidth effects (mean absolute differences of 24–56 percentage points) for three phonemes (/ s/, / z/, / ð/) in both groups. For the children with NH, mean improvements at the 10 kHz bandwidth ranged from 24–28 percentage points. For / s/ and / z/, the children with HL showed even larger mean improvements than children with NH (56 and 42 percentage points, respectively), but a smaller improvement for / ð/ (7 percentage points). Follow-up tests revealed significant bandwidth effects for all three phonemes for the children with NH [/ s/: F(1,28) = 30.2, p<.001; / z/: F(1,28) = 87.59, p<.001; / ð/: F(1,28) = 63.13, p<.001], but only for / s/ [F(1,20) = 122.67, p<.001] and / z/ [F(1,20) = 49.61, p<.001] for children with HL. Given the large bandwidth effects for / s/ and / z/, it was somewhat surprising that the main effect of bandwidth was not significant for the group with HL. Further inspection revealed a marked decrement in performance for two other phonemes at the 10 kHz bandwidth for this group. Figure 6 shows phoneme recognition for / f/ and / v/ for both groups of subjects. For the group with NH, no significant changes in the perception of / f/ occurred for the 10 kHz bandwidth but the decrease in the perception of / v/ (11 percentage points) was significant [F(1,28) = 12.08, p<.05]. For the group with HL, significant decrements in performance occurred for both phonemes at the wider bandwidth (-43 and -29 percentage points for / f/ and / v/, respectively) [/ f/: F(1,20) = 61.31, p<.001; / v/: F(1,20) = 44.94, p<.001]. The children with NH tended to confuse / v/ with / ð/. 
Interestingly, the error patterns for the children with HL were different; the most frequently occurring error for / f/ was / s/ (accounting for 42% of errors) and the most common error for / v/ was / z/ (17% of errors). It is important to note that in the current study, an increase in bandwidth also was accompanied by increased gain in order to meet specified audibility requirements. Because both phonemes are relatively low in amplitude, it is possible that the combination of a wider bandwidth and increased amplitude caused the children with HL to mistake these normally low-amplitude sounds for / s/ and / z/. Table 3 shows these confusions as a function of age group. For the / f/ - / s/ confusion, there is a fair amount of variability at all ages and the error rate does not decrease until 13–14 years. Fewer errors and less within group variability occur for the / v/ - / z/ confusion.
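The error analysis reported above expresses each confusion as a share of all errors for a target phoneme. A minimal sketch of that tally follows; the response list is hypothetical and is not data from this study, and the function name is an assumption.

```python
from collections import Counter


def error_shares(target, responses):
    """Proportion of all errors for `target` accounted for by each response."""
    errors = Counter(r for r in responses if r != target)
    total = sum(errors.values())
    if total == 0:
        return {}
    return {resp: count / total for resp, count in errors.items()}


# Hypothetical responses to 20 presentations of /f/ (not data from the study).
responses = ["f"] * 8 + ["s"] * 6 + ["θ"] * 4 + ["other"] * 2
shares = error_shares("f", responses)
```

Note that these shares are computed over errors only, so they describe the pattern of confusions rather than the overall error rate for the phoneme.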

Figure 5.

Mean phoneme recognition (in %) for / s/, / z/, and / ð/. Left and right panels show results for the children with normal hearing and hearing loss, respectively. The parameter in each panel is bandwidth condition.

Figure 6.

Mean phoneme recognition (in %) for / f/ and / v/ following the convention in Fig. 5.

Table 3.

Mean percentage of / f/ - / s/ and / v/ - / z/ confusions made by the HI children in the four age groups. The number in parentheses indicates the SD.

| Age Group (yrs) | / f/ - / s/ confusion | / v/ - / z/ confusion |
|---|---|---|
| 7–8 | 46% (30.0) | 25% (19.9) |
| 9–10 | 40% (22.1) | 12% (11.5) |
| 11–12 | 54% (34.8) | 20% (15.7) |
| 13–14 | 29% (21.7) | 11% (9.1) |

Word Recognition

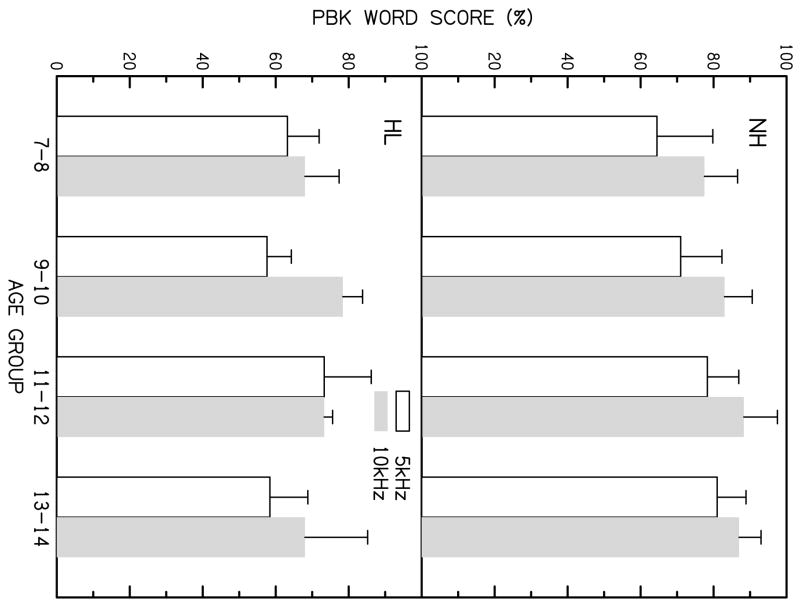

Figure 7 shows results for the monosyllabic word recognition task (derived from the baseline listening effort task) using the same format as in Fig. 4. Results of a three-way ANOVA revealed a significant main effect for bandwidth [F(1, 48) = 31.3; p<.001]. The between subjects factors revealed a significant effect of hearing status [F(1,48) = 39.6; p<.001] but no effect of age group and no hearing × age group interaction. It is of interest to compare these bandwidth effects for words with those shown for nonsense syllables in Fig. 4. The bandwidth effects for the group with NH are similar in both cases. In contrast, the children with HL appear to benefit more from a widened bandwidth when the test stimuli are words. The relatively large decrement in performance for / f/ and / v/ at the 10 kHz bandwidth did not appear to influence the performance for words. It is possible that factors such as linguistic knowledge and phonotactic probabilities may have facilitated perception for word level stimuli (Boothroyd & Nittrouer, 1988; Storkel, 2001).

Figure 7.

Mean PBK scores (in %) as a function of age group for children with normal hearing (upper panel) and children with hearing loss (lower panel). Error bars represent +/− 1 SD.

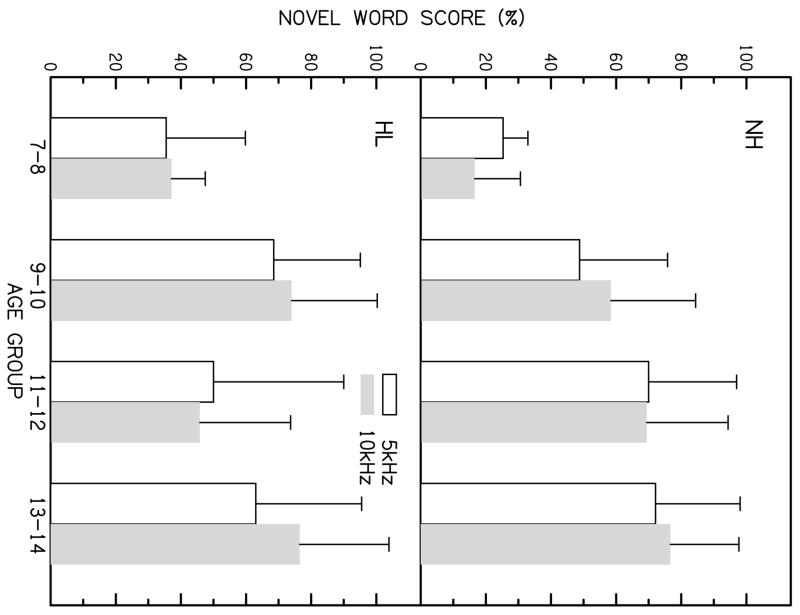

Novel Word Learning

Figure 8 shows mean scores (in %) on the novel-word identification task as a function of age group for both groups of children. In general, the effects of bandwidth appear to be minimal and a systematic increase in performance as a function of age is only apparent for the children with NH. Results of a three-way ANOVA revealed no significant effects of bandwidth. An analysis of between-subjects factors revealed a significant effect of age group [F(3,48)=9.22; p<.001], but no hearing status or hearing × age group interaction, despite the fact that 9–10 year olds performed as well as 13–14 year olds. The lack of a significant hearing × age group interaction is likely due to high variance and unequal sample sizes between the groups.

Figure 8.

Mean novel word scores (in %) as a function of age group for children with normal hearing and hearing loss. Error bars represent +/− 1 SD.

The lack of a significant bandwidth effect is consistent with the results of Pittman et al. (2005), refuting our hypothesis that increasing task difficulty by adding noise would reveal a bandwidth effect. Consistent with previous reports (Lederberg, Prezbindowski, & Spencer, 2000; Pittman et al., 2005; Stelmachowicz et al., 2004a), significant positive correlations were observed between the PPVT raw scores and novel-word learning for both groups (NH: 5 kHz = 0.694; 10 kHz = 0.782; HI: 5 kHz = 0.511; 10 kHz = 0.542). Correlations for the groups with NH and HL were significant at the 0.01 and 0.05 level (2-tailed), respectively. The unexpectedly high novel-word scores for the 9–10 year old children with HL were explored by examining differences in degree of hearing loss, PPVT standard scores, and digit span scale scores across age. Although no significant differences in PTA or PPVT standard scores were observed, mean performance on the digit span test for the 9–10 year old group was approximately one SD above that of the other three groups. These results suggest that the higher novel-word scores for this group are most likely due to differences in short-term memory.
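The significance levels quoted for these correlations can be illustrated by converting each coefficient to a t statistic, t = r·sqrt(n − 2)/sqrt(1 − r²), and comparing it with tabled two-tailed critical values. The sketch below is a check under stated assumptions, not the authors' procedure: it assumes that the coefficients are Pearson correlations and that all 32 children with NH and all 24 children with HL contributed to each coefficient. The critical values are standard t-table entries for df = 30 and df = 22, and the helper names are hypothetical.

```python
import math

# Two-tailed critical t values from standard tables, keyed by df = n - 2.
# The group sizes (n = 32 for NH, n = 24 for HL) are assumptions.
CRITICAL_T = {
    30: {0.05: 2.042, 0.01: 2.750},
    22: {0.05: 2.074, 0.01: 2.819},
}


def t_from_r(r, n):
    """t statistic for testing a Pearson correlation against zero."""
    return r * math.sqrt(n - 2) / math.sqrt(1.0 - r * r)


def strictest_level(r, n):
    """Return the strictest tabled alpha (0.01 or 0.05) that r clears, else None."""
    t = abs(t_from_r(r, n))
    critical = CRITICAL_T[n - 2]
    if t >= critical[0.01]:
        return 0.01
    if t >= critical[0.05]:
        return 0.05
    return None


# PPVT raw score vs. novel-word learning, as reported in the text.
reported = {
    ("NH", "5 kHz"): (0.694, 32),
    ("NH", "10 kHz"): (0.782, 32),
    ("HL", "5 kHz"): (0.511, 24),
    ("HL", "10 kHz"): (0.542, 24),
}

levels = {key: strictest_level(r, n) for key, (r, n) in reported.items()}
for key, level in levels.items():
    print(key, level)
```

Under these assumptions both coefficients for the group with NH clear the 0.01 criterion and both coefficients for the group with HL clear at least the 0.05 criterion, in line with the levels reported above.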

Listening Effort

Table 4 shows performance (collapsed across age) for the two dual-task conditions in comparison to the single-task condition for both groups. As expected, performance on the single-task condition was relatively high and better than performance on either of the dual task conditions for both groups. Intersubject variability was higher for the dual-task conditions than for the single-task condition, but across the three conditions, performance was similar for children with NH and HL.

Table 4.

Mean performance on the digit recall task (in %) for the 5 and 10 kHz dual-task conditions and for the single-task condition for both subject groups. The number in parentheses indicates the SD.

| Group | Dual Task 5 kHz | Dual Task 10 kHz | Single Task |
|---|---|---|---|
| NH | 54.6% (22.5) | 57.4% (26.5) | 87.1% (15.1) |
| HL | 45.5% (24.8) | 52.0% (23.2) | 84.8% (16.6) |

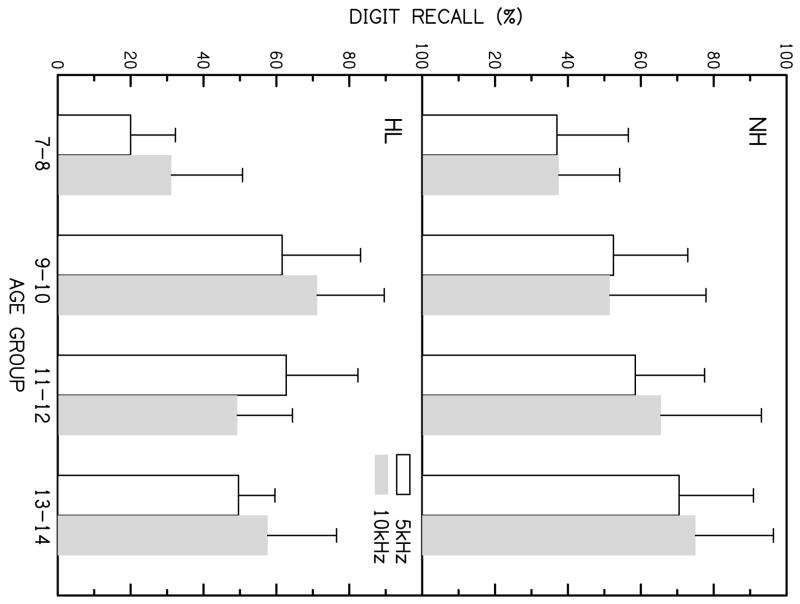

Typically, dual-task results are presented as a difference between single- and dual-task performance for the secondary task. To preserve information about absolute performance, however, Fig. 9 shows performance for the secondary task (digit recall) as a function of age group for both bandwidths. In general, there does not appear to be a marked improvement in performance at the wider bandwidth for either group. In addition, age trends similar to those observed in the novel-word learning task are apparent here. Results of a three-way ANOVA revealed no significant effects of bandwidth on listening effort. Analysis of between-subjects factors revealed a significant effect of age group [F(3, 48) = 8.4; p<.001] but no hearing status or hearing status × age group interaction. As was observed in the novel-word learning task, mean performance for the 9–10 year olds was higher than expected and is most likely due to differences in short-term memory. Interestingly, paired t-tests of the 5- and 10-kHz word repetition data in the dual-task paradigm revealed a statistically significant effect of bandwidth only for the group with NH (p<.05). This implies that, at least for these children, performance improved with bandwidth but listening effort was not affected. As expected, significant positive correlations were observed between the digit span raw scores and digit performance in the dual task (NH: 5 kHz = 0.516; 10 kHz = 0.514; HI: 5 kHz = 0.703; 10 kHz = 0.476). With the exception of the 10 kHz condition for the children with HL (0.05 level), all correlations were significant at the 0.01 level (2-tailed).

Figure 9.

Mean digit recall scores (in %) in the dual-task condition as a function of age group for children with normal hearing and hearing loss. Error bars represent +/− 1 SD.
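For readers who prefer the conventional difference-score presentation, the group means in Table 4 can be converted to dual-task costs (single-task minus dual-task digit recall). The sketch below is illustrative only. It uses the means collapsed across age from Table 4, and the names `TABLE_4` and `dual_task_cost` are hypothetical.

```python
# Mean digit recall (%) from Table 4, collapsed across age group.
TABLE_4 = {
    "NH": {"dual_5kHz": 54.6, "dual_10kHz": 57.4, "single": 87.1},
    "HL": {"dual_5kHz": 45.5, "dual_10kHz": 52.0, "single": 84.8},
}


def dual_task_cost(scores):
    """Single-task minus dual-task score, in percentage points."""
    return {
        condition: round(scores["single"] - value, 1)
        for condition, value in scores.items()
        if condition != "single"
    }


costs = {group: dual_task_cost(scores) for group, scores in TABLE_4.items()}
for group, cost in costs.items():
    print(group, cost)
```

Expressed this way, the mean cost is 32.5 (5 kHz) and 29.7 (10 kHz) percentage points for the group with NH, and 39.3 and 32.8 percentage points for the group with HL. These differences between bandwidth conditions were not statistically significant in the ANOVA reported above.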

Surprisingly, systematic age-related differences in performance only occurred for the word recognition, novel-word, and listening effort (digit recall) tasks and only for the children with NH. These findings may reflect the increased variability in performance typically seen in children with HL, presumably due to factors such as age of identification, hearing aid use, parental support, and educational setting.

Discussion

Previous studies in our laboratory have suggested that a restricted bandwidth, such as that found in typical hearing aids, may have a negative impact on the perception of / s/ for individuals with hearing loss. Recent data from our 5-year longitudinal study of phonological development suggest that the entire fricative class is delayed in young hearing-impaired children more than any other class of phonemes (Moeller et al., submitted). The goal of the current study was to expand our previous work to assess the effects of bandwidth using a wider range of stimuli, a variety of auditory tasks, and including the interfering effects of noise. In the fricative/affricate perception task children had to rely solely on acoustic-phonetic skills to identify VC nonsense syllables. Under these circumstances, a significant bandwidth effect was observed only for the children with NH. This is not surprising because children with NH would be accustomed to listening to wide bandwidth speech. Further inspection of the data from children with HL revealed that the lack of a significant bandwidth effect in this group was due to increased errors for two phonemes (/ f/ and / v/) at the wider bandwidth (/ f/: -48 percentage points; / v/: -29 percentage points). It is likely that the combined effects of an extended bandwidth and increased stimulus amplitude caused the children with HL to mistake / f/ for / s/ and / v/ for / z/. While this might seem to suggest that a wider hearing-aid bandwidth might have substantial negative consequences, it is important to recognize that the children in this study were not able to monitor their own productions as would occur with wearable hearing instruments. In addition, the auditory-only presentation did not allow them to observe the articulatory movements of talkers as would occur in typical conversations. 
If children were able to monitor their own fricative production and benefit from visual cues, it is possible that they would quickly learn auditory distinctions between the phonemes / f/ - / s/ and / v/ - / z/. Because the bandwidth of current hearing aids is restricted, this hypothesis cannot be easily tested at the present time.

An analysis of confusion patterns revealed that both groups of children benefit from an extended bandwidth in the perception of / s/ and / z/, and that, on average, the bandwidth effect was substantially larger in the group with HL. For / s/, the mean benefit of a wider bandwidth was 24 and 56 percentage points for the children with NH and HL, respectively. Similar values for / z/ were 28 and 42 percentage points. As mentioned earlier, the relative importance of these two phonemes in the English language strengthens the importance of this finding.

Significant bandwidth effects also were observed for the repetition of monosyllabic words in both groups. Interestingly, the / f/ and / v/ confusions that occurred for nonsense syllables at the 10 kHz bandwidth were not apparent for word-level stimuli. Factors such as vocalic transitions, linguistic knowledge, and phonotactic probability may account for this finding. It is possible that children in this age range have learned to use secondary acoustic and linguistic cues. However, this may not be the case for younger children.

For both the novel-word learning and listening effort tasks, no effects of bandwidth were observed. Both tasks are more complex than the two perception tasks described above and both involve memory. It has been suggested that experimental details and the strategies of individual children may influence results in novel-word learning paradigms (Horohov & Oetting, 2004; Rice, Oetting, Marquis, et al., 1994). For example, story details, interest value, and the salience of objects or story characters may supplement or override the acoustic effects being investigated. In addition, children would not necessarily need to recall or learn the entire word in order to identify the objects correctly since the identification test was an 8-choice closed set format and the 8 words were not phonetically similar. These factors may have reduced the sensitivity of this type of paradigm to bandwidth effects. The dual task paradigm used to measure listening effort required children to rehearse a string of five digits while repeating five words, after which they were asked to recall the digits. In this paradigm, a reduction in digit scores for the filtered word trials would have suggested that a restricted stimulus bandwidth increases listening effort, but no such effect was found. Because significant improvements in perception were observed with increased bandwidth for PBK words in both the baseline and dual-task conditions, it may be that larger bandwidth effects than those observed here (i.e., 10 percentage points) may be necessary in order to influence listening effort.

Summary and Conclusions

In summary, results of the current study suggest that a restricted stimulus bandwidth can affect the perception of / s/ and / z/ spoken by female talkers. For these two phonemes, the magnitude of the bandwidth effect was larger for the children with HL. The current results are consistent with previous studies (Moeller et al., Reference Note 1; Pittman et al., 2003; Stelmachowicz et al., 2001; 2002) and can be explained largely by the acoustic characteristics of these phonemes spoken by female talkers. For the children with NH, a significant bandwidth effect was observed for both nonsense syllables and words. For the children with HL, no overall bandwidth effect was observed in the nonsense syllable task, but a significant bandwidth effect was found for PBK words.

Despite the lack of a significant overall bandwidth effect for nonsense syllables in the group with HL, large mean improvements were observed for both / s/ (56 percentage points) and / z/ (42 percentage points) in the 10 kHz bandwidth condition. Given the importance of these phonemes in the English language, an inability to perceive these sounds correctly may have a negative impact on phonological and morphological development, as well as speech production. Delays in these areas are likely to have implications for overall language development and academic performance. Further studies are needed to determine if the / s/ - / f/ and / z/ - / v/ confusions found at the 10 kHz bandwidth for children with HL occur with wearable wideband hearing instruments.

It is likely that the lack of significant bandwidth effects for the novel-word learning and listening effort tasks was due to task insensitivity. Alternative approaches may be needed in order to assess potential effects of a limited bandwidth on the development of high-level complex auditory skills.

Reference Notes

Moeller, M.P., Stelmachowicz, P.G., Hoover, B., Putman, C., Arbataitis, K., Bohnenkamp, G., Peterson, B., Wood, S., Lewis, D., & Pittman, A. (submitted). Vocalizations of Infants with Hearing Loss Compared to Infants with Normal Hearing: Part I – Phonetic Development. Ear & Hearing.

Sotto, C. D., Creach, E. R., Hunter, M., Vatsa, R., Neils-Strunjas, J., & Creaghead, N. A. (2004). Occurrence of Fricatives in Babbling and Early Words. Paper presented at the annual meeting of the American Speech-Language Hearing Association, Philadelphia, PA, November.

Acknowledgments

The authors would like to thank Mary Pat Moeller, Kanae Nishi, Darcia Dierking, and Andrew Lotto for helpful comments on earlier versions of this manuscript. This work was supported by grants from the National Institute on Deafness and Other Communication Disorders, National Institutes of Health (R01 DC04300 and P30 DC04662).

Footnotes

The original plan was to use a +10 dB SNR with the PBK words, but pilot data revealed ceiling effects in the group with NH at this SNR. Thus, a +8 dB SNR was used for both groups of children.

References

- Baddeley AD. Working Memory. Oxford: Clarendon Press; 1986.

- Baddeley AD, Hitch GJ. Working memory. In: Bower GH, editor. The Psychology of Learning and Memory. Vol. 8. London: Academic Press; 1974. pp. 47–90.

- Baddeley A, Gathercole S, Papagno C. The phonological loop as a language learning device. Psychological Review. 1998;105:158–173. doi: 10.1037/0033-295x.105.1.158.

- Bankson NW, Bernthal JE. Quick Screen of Phonology. San Antonio, TX: Special Press, Inc; 1990.

- Boothroyd A, Erickson FN, Medwetsky L. The hearing aid input: a phonemic approach to assessing the spectral distribution of speech. Ear & Hearing. 1994;15:432–442.

- Boothroyd A, Medwetsky L. Spectral distribution of /s/ and the frequency response of hearing aids. Ear & Hearing. 1992;13:150–157. doi: 10.1097/00003446-199206000-00003.

- Boothroyd A, Nittrouer S. Mathematical treatment of context effects in phoneme and word recognition. Journal of the Acoustical Society of America. 1988;84:101–114. doi: 10.1121/1.396976.

- Cornelisse LE, Gagné JP, Seewald R. Ear level recordings of the long-term average spectrum of speech. Ear & Hearing. 1991;12:47–54. doi: 10.1097/00003446-199102000-00006.

- Conrad R. Acoustic confusions in immediate memory. British Journal of Psychology. 1964;55:75–84.

- Davis JM, Elfenbein J, Schum R, Bentler RA. Effects of mild and moderate hearing impairments on language, educational, and psychosocial behavior of children. Journal of Speech & Hearing Disorders. 1986;51:53–62. doi: 10.1044/jshd.5101.53.

- Dollaghan CA. Fast mapping in normal and language-impaired children. Journal of Speech & Hearing Disorders. 1987;52:218–222. doi: 10.1044/jshd.5203.218.

- Dunn LM, Dunn LM. Peabody Picture Vocabulary Test. 3rd ed. Circle Pines, MN: American Guidance Service, Inc; 1997.

- Elfenbein JL, Hardin-Jones MA, Davis JM. Oral communication skills of children who are hard of hearing. Journal of Speech & Hearing Research. 1994;37:216–226. doi: 10.1044/jshr.3701.216.

- Gathercole SE, Hitch GJ, Service E, Martin AJ. Phonological short-term memory and new word learning in children. Developmental Psychology. 1997;33:966–979. doi: 10.1037//0012-1649.33.6.966.

- Gilbertson M, Kamhi AG. Novel word learning in children with hearing impairment. Journal of Speech & Hearing Research. 1995;38:630–642. doi: 10.1044/jshr.3803.630.

- Gilman S, Dirks D. Acoustics of ear canal measurement of eardrum SPL in simulators. Journal of the Acoustical Society of America. 1986;80:783–793. doi: 10.1121/1.393953.

- Haskins HA. A phonetically balanced test of speech discrimination for children. Northwestern University; Evanston, IL: 1949. Master’s thesis.

- Horohov J, Oetting J. Effects of input manipulations on the word learning abilities of children with and without specific language impairment. Applied Psycholinguistics. 2004;25:43–65.

- Kahneman D. Attention and effort. Englewood Cliffs, NJ: Prentice-Hall; 1973.

- Kortekaas RW, Stelmachowicz PG. Bandwidth effects on children’s perception of the inflectional morpheme /s/: acoustical measurements, auditory detection, and clarity rating. Journal of Speech, Language, & Hearing Research. 2000;43:645–660. doi: 10.1044/jslhr.4303.645.

- Lederberg AR, Prezbindowski AK, Spencer PE. Word-learning skills of deaf preschoolers: the development of novel mapping and rapid word-learning strategies. Child Development. 2000;71:1571–1585. doi: 10.1111/1467-8624.00249.

- Mackersie CL, Crocker TL, Davis RA. Limiting high-frequency hearing aid gain in listeners with and without suspected cochlear dead regions. Journal of the American Academy of Audiology. 2004;15:498–507. doi: 10.3766/jaaa.15.7.4.

- McCune L, Vihman M. Early Phonetic and Lexical Development: A Productivity Approach. Journal of Speech, Language, & Hearing Research. 2001;44:670–684. doi: 10.1044/1092-4388(2001/054).

- McGowan RS, Nittrouer S. Differences in fricative production between children and adults: evidence from an acoustic analysis of /∫/ and /s/. Journal of the Acoustical Society of America. 1988;83:229–236. doi: 10.1121/1.396425.

- Moore BCJ, Huss M, Vickers DA, Glasberg BR, Alcantara JI. A test for the diagnosis of dead regions in the cochlea. British Journal of Audiology. 2000;34:205–224. doi: 10.3109/03005364000000131.

- Nittrouer S. Children learn separate aspects of speech production at different rates: evidence from spectral moments. Journal of the Acoustical Society of America. 1995;97:520–530. doi: 10.1121/1.412278.

- Nittrouer S, Boothroyd A. Context effects in phoneme and word recognition by young children and older adults. Journal of the Acoustical Society of America. 1990;87:2705–2715. doi: 10.1121/1.399061.

- Oetting JB, Rice ML, Swank LK. Quick Incidental Learning (QUIL) of words by school-age children with and without SLI. Journal of Speech, Language, & Hearing Research. 1995;38:434–445. doi: 10.1044/jshr.3802.434.

- Pittman AL, Stelmachowicz PG, Lewis DE, Hoover BM. Spectral characteristics of speech at the ear: implications for amplification in children. Journal of Speech, Language, & Hearing Research. 2003;46:649–657. doi: 10.1044/1092-4388(2003/051).

- Pittman AL, Lewis DL, Hoover BM, Stelmachowicz PG. Rapid word-learning in normal-hearing and hearing-impaired children: Effects of age, receptive vocabulary, and high-frequency amplification. Ear & Hearing. 2005;26:619–629. doi: 10.1097/01.aud.0000189921.34322.68.

- Rice ML, Buhr JC, Nemeth M. Fast mapping word-learning abilities of language-delayed preschoolers. Journal of Speech & Hearing Disorders. 1990;55:33–42. doi: 10.1044/jshd.5501.33.

- Rice ML, Oetting JB, Marquis J, Bode J, Pae S. Frequency of input effects on word comprehension of children with specific language impairment. Journal of Speech, Language, & Hearing Research. 1994;37:106–122. doi: 10.1044/jshr.3701.106.

- Rudmin F. The why and how of hearing /s/. Volta Review. 1983;85:263–269.

- Scollie S, Seewald R, Cornelisse L, Moodie S, Bagatto M, Laurnagaray D, Beaulac S, Pumford J. Desired Sensation Level Multistate Input/Output Algorithm. Trends in Amplification. 2005;9:159–197. doi: 10.1177/108471380500900403.

- Simpson A, McDermott HJ, Dowell RC. Benefits of audibility for listeners with severe high-frequency hearing loss. Hearing Research. 2005;210:42–52. doi: 10.1016/j.heares.2005.07.001.

- Stelmachowicz PG, Jesteadt W. Psychophysical tuning curves in normal-hearing listeners: test reliability and probe level effects. Journal of Speech & Hearing Research. 1984;27:396–402. doi: 10.1044/jshr.2703.396.

- Stelmachowicz PG, Pittman AL, Hoover BE, Lewis DE, Moeller MP. The importance of high-frequency audibility in the speech and language development of children with hearing loss. Archives of Otorhinolaryngology. 2004b;130:556–562. doi: 10.1001/archotol.130.5.556.

- Stelmachowicz PG, Pittman AL, Hoover BM, Lewis DE. Effect of stimulus bandwidth on the perception of /s/ in normal- and hearing-impaired children and adults. Journal of the Acoustical Society of America. 2001;110:2183–2190. doi: 10.1121/1.1400757.

- Stelmachowicz PG, Pittman AL, Hoover BM, Lewis DE. Aided perception of /s/ and /z/ by hearing-impaired children. Ear & Hearing. 2002;23:316–324. doi: 10.1097/00003446-200208000-00007.

- Stelmachowicz PG, Pittman AL, Hoover BM, Lewis DE. Novel-word learning in children with normal hearing and hearing loss. Ear & Hearing. 2004a;25:47–56. doi: 10.1097/01.AUD.0000111258.98509.DE.

- Stoel-Gammon C. Phonetic Inventories, 15–24 months: A longitudinal study. Journal of Speech & Hearing Research. 1985;28:505–512. doi: 10.1044/jshr.2804.505.

- Storkel HL. Learning new words: phonotactic probability in language development. Journal of Speech, Language, & Hearing Research. 2001;44:1321–1337. doi: 10.1044/1092-4388(2001/103).

- Summers V, Molis MR, Musch H, Walden BE, Surr RK, Cord MT. Identifying dead regions in the cochlea: psychophysical tuning curves and tone detection in threshold-equalizing noise. Ear & Hearing. 2003;24:133–142. doi: 10.1097/01.AUD.0000058148.27540.D9.

- Vestergaard MD. Dead regions in the cochlea: implications for speech recognition and applicability of articulation index theory. International Journal of Audiology. 2003;42:249–261. doi: 10.3109/14992020309078344.

- Wechsler D. Wechsler Intelligence Scale for Children. 3rd ed. London: Psychological Corporation; 1991.