Abstract

Vision is difficult because images are ambiguous about the structure of the world. For object color, the ambiguity arises because the same object reflects a different spectrum to the eye under different illuminations. Human vision typically does a good job of resolving this ambiguity—an ability known as color constancy. The past 20 years have seen an explosion of work on color constancy, with advances in both experimental methods and computational algorithms. Here, we connect these two lines of research by developing a quantitative model of human color constancy. The model includes an explicit link between psychophysical data and illuminant estimates obtained via a Bayesian algorithm. The model is fit to the data through a parameterization of the prior distribution of illuminant spectral properties. The fit to the data is good, and the derived prior provides a succinct description of human performance.

Keywords: color constancy, psychophysical data, illuminants, Bayesian algorithm, computational neuroscience

Introduction

Vision is useful because it informs an organism about the physical environment. Vision is difficult because the retinal stimulus is ambiguous about the state of the world. An example of such ambiguity is illustrated in Figure 1: The same object reflects a different spectrum to the eye when it is viewed under different illuminations. The remarkable feature of human vision is that despite ambiguity in the sense data, our perceptions generally provide a consistent representation of what surrounds us. Objects, for example, tend to appear the same color across changes in illumination, a phenomenon known as color constancy. The core of any general theory of perception must include an understanding of how ambiguity in the stimulus is resolved.

Figure 1.

Physical interaction of surfaces and illuminants. The images show the same house at two different times. The light reflected to the camera differs greatly because of changes in the illuminant. The squares above each image show the same location in each image and emphasize the physical effect. The images were taken by the first author. Automatic color balancing usually performed by the camera was turned off. Despite the physical change recorded by the camera, the house appeared approximately the same yellow at both times. Figure reproduced from Figure 61.1/Plate 36 of Brainard (2004).

An attractive general principle is that vision resolves ambiguity by taking advantage of the statistical structure of natural scenes: Given several physical interpretations that are consistent with the sense data, the visual system chooses the one that is most likely a priori (Adelson & Pentland, 1996; Purves & Lotto, 2003; von Helmholtz, 1866). This principle can be instantiated quantitatively using Bayesian decision theory, and there is great interest in linking perceptual performance explicitly to Bayesian models (Geisler & Kersten, 2002; Kersten & Yuille, 2003; Knill & Richards, 1996; Mamassian, Landy, & Maloney, 2002; Rao, Olshausen, & Lewicki, 2002; Stocker & Simoncelli, 2006; Weiss, Simoncelli, & Adelson, 2002). This article develops a quantitative model of human color constancy that connects estimates of the scene illuminant obtained via a Bayesian algorithm (Brainard & Freeman, 1997) with psychophysical measurements. The model is fit to the data by adjusting parameters that (a) describe the algorithm’s prior knowledge and (b) describe the mapping between illuminant estimates and the psychophysical data.

First, in the Experimental measurements section, we review published measurements of human color constancy made by asking observers to adjust the appearance of test objects, embedded in images of three-dimensional scenes, until they appear achromatic (Delahunt & Brainard, 2004). The degree of constancy exhibited by the visual system varies greatly with the structure of the scenes used to assess it. Thus, a full understanding requires a model that predicts both successes and failures of constancy (Gilchrist et al., 1999). The core idea of our model is to use an explicit algorithm to estimate the scene illuminant from the image data and to use this estimate to predict the color appearance data. When the algorithm provides correct estimates of the illuminant, the model predicts good color constancy. When the algorithm’s estimates are in error, the model predicts failures of constancy.

We apply this modeling principle using a Bayesian illuminant estimation algorithm (Brainard & Freeman, 1997). The algorithm resolves ambiguity in the image through explicit priors that represent the statistical structure of spectra in natural images. These priors provide a parameterization that allows us to control the algorithm’s performance. The model provides a good account of the extant data. The illuminant prior that provides the best fit to the data may be compared to the statistics of natural illuminants.

Experimental measurements

In this section, we review the psychophysical data that we wish to model. The experimental methods and data are presented in considerably more detail by Delahunt and Brainard (2004). The published report also describes a number of control experiments that verify the robustness of the main results.

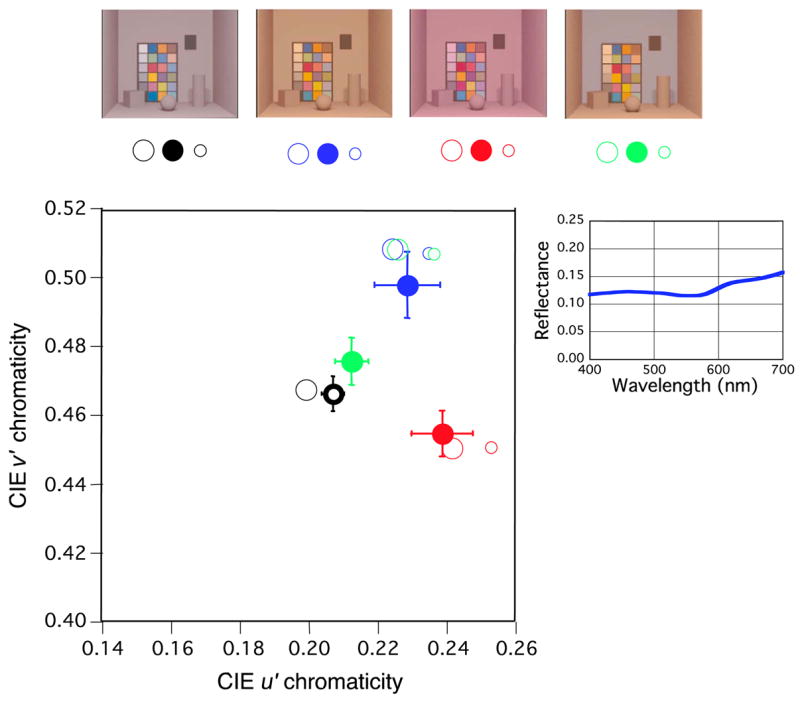

The data consist of achromatic settings made in different contexts. The images at the top right of Figure 2 show 4 of the 17 contextual images used to define these contexts. The first three images are graphics simulations of the same collection of objects rendered under three different illuminants. The left-hand image was rendered under a typical daylight (CIE D65, chromaticity shown as open black circle), whereas the second image was rendered under a more yellowish daylight (spectrum constructed from CIE linear model for daylights, chromaticity shown as open blue circle). The third image was also a rendering of the same set of surfaces, but the illuminant had a chromaticity atypical of daylight (chromaticity shown as open red circle). The right-hand image was rendered under essentially the same illuminant as the second image (open green circle), but a different background surface was simulated. This background was chosen so that the light reflected from it matched that reflected from the background in the left-hand image.

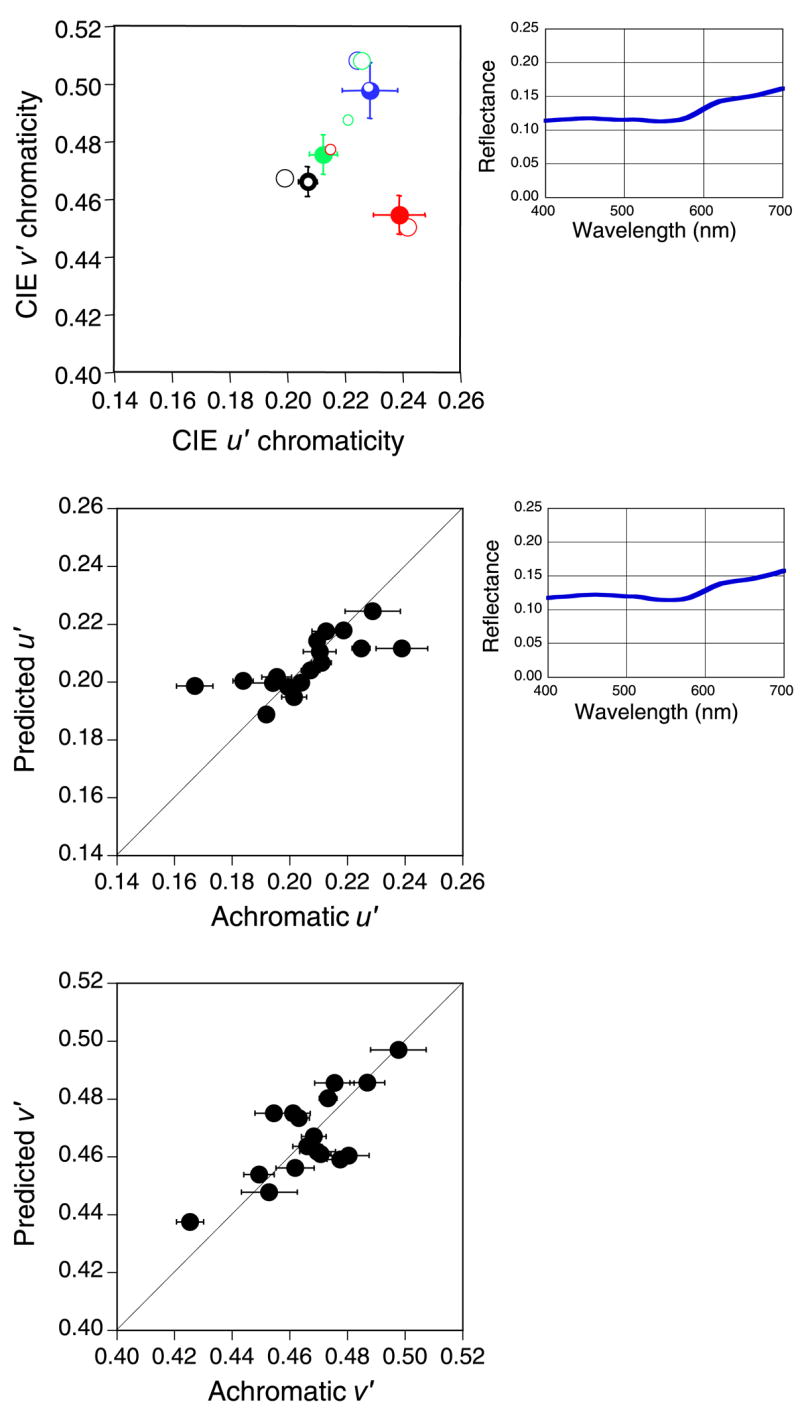

Figure 2.

Sample achromatic adjustment results and interpretation in terms of constancy. Average achromatic chromaticities set by seven observers in four contexts are shown as solid black, blue, red, and green circles. Data are shown in the standard CIE u′v′ chromaticity diagram. Stimuli that plot to the same point in this diagram produce the same relative L-, M-, and S-cone isomerization rates but may have different intensities, whereas stimuli that plot to different points produce different relative isomerization rates. The chromaticities of the four scene illuminants are shown as large open circles. The contextual images are shown above the plot, with the symbols under each identifying the corresponding datum and illuminant. Error bars show ± 1 SD for data from the seven observers. Predictions for a color constant observer are shown as small open circles, as explained in the text. The spectral plot to the right of the chromaticity diagram shows the reflectance of the inferred achromatic surface used to make the constancy predictions.

Stereo pairs corresponding to each image were displayed on a computer-controlled haploscope. Left- and right-eye images for each pair were obtained by rendering the scene from two viewpoints. For each pair, observers adjusted a test patch (location shown as a black rectangle in each image in Figure 2) so that it appeared achromatic (i.e., gray). Achromatic adjustment has been used extensively to characterize color appearance (Bäuml, 2001; Brainard, 1998; Chichilnisky & Wandell, 1996; Helson & Michels, 1948; Kraft & Brainard, 1999; Yang & Maloney, 2001). It provides an excellent first-order characterization of how the visual system has adapted to the context provided by the image. Speigle and Brainard (1999) showed that achromatic adjustments made in two separate contexts may be used to predict asymmetric color matches.

The results of the experiment may be summarized by the chromaticities of the stimuli that appeared achromatic in each contextual image. We can thus represent measurements for the ith experimental image with a two-dimensional vector $\mathbf{x}^a_i$ containing the u′ and v′ coordinates of the stimulus that appeared achromatic. This representation characterizes the spectrum reaching the observer from the test patch (i.e., the proximal stimulus), not the reflectance properties of the simulated surface. The solid black, blue, red, and green circles in the CIE u′v′ chromaticity diagram in Figure 2 plot $\mathbf{x}^a_i$ for the four sample images. The achromatic chromaticity clearly varies with context. Our modeling goal is to predict each $\mathbf{x}^a_i$ from a description of the corresponding contextual image.

Relation between the data and color constancy

As will become clear below, our modeling approach is closely related to an analysis of the achromatic data in terms of color constancy. Indeed, to understand the model, it is best to start by considering how the achromatic data connect to the notion of color constancy.

We begin with the subset of the data shown in Figure 2 and generalize below to constancy predictions for the entire data set. Consider the context defined by the left-hand image in Figure 2. The concept of color constancy provides no guidance about the relation between the chromaticity of the simulated illuminant (in this case, D65) and the corresponding achromatic chromaticity measured psychophysically.1 We can, however, use the achromatic and illuminant chromaticities to determine the spectral reflectance function of an inferred achromatic surface. Such a reflectance function is shown in Figure 2. This function was chosen so that when the simulated illuminant reflects from it, the chromaticity of the reflected light matches the measured achromatic chromaticity.2 This equality is indicated by the overlay of the solid black circle and the small open black circle.

For a color constant observer, a surface that appears achromatic in one context must continue to appear achromatic in any other context. Because we know the simulated illuminants for each scene, we can compute the chromaticity of the light that would be reflected from the inferred achromatic surface in the contexts defined by the other three images. These are shown as the small blue, red, and green open circles in Figure 2. Given the data from the left-hand image and our choice of inferred achromatic surface, a perfectly color constant observer must judge these chromaticities to be achromatic in the context of their respective images.
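The constancy prediction in the preceding paragraph amounts to computing the chromaticity of the light C(λ) = E(λ)S(λ) reflected from one fixed surface under each scene's illuminant. The following is a minimal Python sketch under stated assumptions: spectra are sampled in four coarse wavelength bands, and the color matching functions `CMF`, the illuminants, and the surface are hypothetical placeholders rather than the CIE tables or the experimental spectra. Only the XYZ-to-u′v′ formula is the standard CIE 1976 one; the function names are illustrative.

```python
# Minimal sketch; CMF values, illuminants, and the surface are hypothetical.

def xyz_to_upvp(X, Y, Z):
    """CIE 1976 u'v' chromaticity from XYZ tristimulus values."""
    denom = X + 15.0 * Y + 3.0 * Z
    return 4.0 * X / denom, 9.0 * Y / denom

# Hypothetical color matching functions in 4 bands (NOT the CIE tables).
CMF = [
    [0.3, 0.1, 0.6, 0.8],  # x-bar
    [0.1, 0.5, 0.9, 0.4],  # y-bar
    [1.5, 0.6, 0.1, 0.0],  # z-bar
]

def reflected_upvp(illum, refl, cmf=CMF):
    """u'v' of the light C = E * S reflected from a matte surface."""
    signal = [e * s for e, s in zip(illum, refl)]
    X, Y, Z = (sum(c * x for c, x in zip(row, signal)) for row in cmf)
    return xyz_to_upvp(X, Y, Z)

def constancy_predictions(illuminants, achromatic_refl):
    """A perfectly constant observer keeps the same surface achromatic in
    every context, so predict the chromaticity that surface reflects under
    each context's actual illuminant."""
    return [reflected_upvp(e, achromatic_refl) for e in illuminants]

standard = [1.0, 1.0, 1.0, 1.0]   # hypothetical standard illuminant
yellowish = [0.6, 0.9, 1.1, 1.2]  # hypothetical "yellowish" illuminant
gray = [0.4, 0.45, 0.5, 0.5]      # hypothetical inferred achromatic surface
print(constancy_predictions([standard, yellowish], gray))
```

Because chromaticity discards overall intensity, only the relative shape of the inferred achromatic surface matters, which is why a single surface can serve all contexts.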

The constancy predictions for the two middle contextual images (shown as small open blue and red circles) lie in the general vicinity of the corresponding psychophysically measured achromatic chromaticities (filled blue and red circles), but there are clear deviations in each case. To evaluate the magnitude of the deviation, bear in mind that for an observer with no constancy, the achromatic chromaticity would not vary with the illuminant and would thus overlay the data for the standard context (solid black circle). In each of these two cases, the data are closer to the constancy predictions than to the prediction for no constancy. This pattern of results is typical for studies where only the illuminant is varied and is the basis for assertions in the literature that the human visual system is approximately color constant (Brainard, 2004).

The result for the right-hand contextual image is different. The simulated scene that produced this image has essentially the same illuminant as the second image but with a different background surface. Here, the measured achromatic chromaticity (solid green circle) falls closer to the no-constancy prediction (solid black circle) than to the constancy prediction (small open green circle). We have shown this type of result previously using stimuli that consist of real illuminated surfaces (Kraft & Brainard, 1999). Intuitively, less constancy is shown because the change in background surface silences the cue provided by local contrast. The fact that the achromatic chromaticity is not the same as in the standard context (solid green and solid black circles differ) indicates that local contrast is not the only cue mediating constancy, again replicating the basic structure of our earlier results obtained with real surfaces (Kraft & Brainard, 1999).

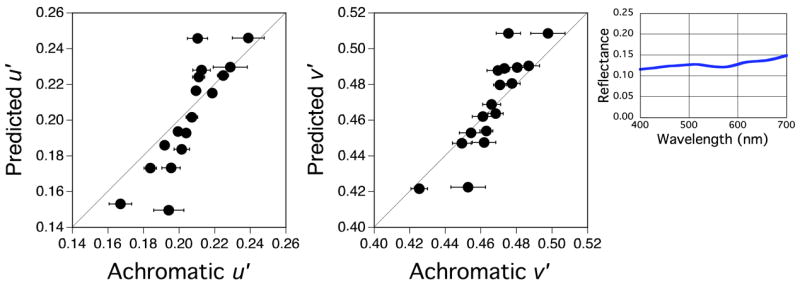

We can generalize the logic of Figure 2 to make constancy predictions for the achromatic chromaticities measured in all 17 contexts used by Delahunt and Brainard. Rather than determining the inferred achromatic surface using only the data from a single context, we can instead use numerical parameter search to find a single inferred achromatic surface, common to all 17 contexts (see Modeling methods section), that minimizes the sum-of-squared errors between the u′v′ achromatic chromaticities obtained in the experiment and the constancy predictions obtained through this surface.3 The predictions for each scene are obtained by computing the chromaticity of the light that would be reflected from the inferred achromatic surface under the known illuminant spectrum for that scene. The best fit to the data is summarized in Figure 3. As one might expect from the sample data shown in Figure 2, the data set is poorly fit by a model that embodies the assumption that the visual system is perfectly color constant.

Figure 3.

Constancy predictions. The left panel shows u′ chromaticity predicted by the constancy analysis described in the text versus achromatic u′ chromaticity measured psychophysically. The middle panel shows predicted v′ chromaticity versus measured v′ achromatic chromaticity. Perfect prediction would be indicated if the data fell along the positive diagonals in both panels. Error bars indicate standard deviations of the data across seven observers. The value of the fit error εa (see the Modeling methods section) is 0.0157. The spectral plot on the right shows the reflectance of the inferred achromatic surface used to make the constancy predictions.

Figures 2 and 3 show that any theory of surface color appearance that simply characterizes the overall degree of constancy (e.g., “humans are approximately color constant,” “human color constancy is poor,” “humans are 83% color constant”) is doomed: The degree of constancy depends critically on how the context is manipulated. The fact that we cannot describe human performance as simply color constant, or as not color constant, causes us to turn our attention to developing a method for predicting the measured achromatic chromaticities $\mathbf{x}^a_i$ from the contextual images.

Modeling approach

There are a number of approaches that may be used to develop predictive models for appearance data. One approach is mechanistic and seeks to formulate models that incorporate known features of the neural processing chain. Examples of models in this category are those that invoke ideas such as gain control (e.g., Burnham, Evans, & Newhall, 1957; Stiles, 1967; von Kries, 1902/1970), contrast coding (e.g., Shevell, 1978; Walraven, 1976), and color opponency (e.g., Hurvich & Jameson, 1957; Webster & Mollon, 1995) to explain various color appearance phenomena. A second approach abstracts further and considers the problem as one of characterizing the properties of a black-box system. Here, the effort focuses on testing principles of system performance (such as linearity) that allow prediction of color appearance in many contexts based on measurements from just a few (Brainard & Wandell, 1992; Krantz, 1968). A third approach is computational in the sense defined by Marr (1982), the idea being to develop models by thinking about what useful function color context effects serve and how a system designed to accomplish this function would perform. In the case of color, one asks how a visual system could process image data to recover descriptions of object surface reflectance that are stable across changes in the scene. We have taken this approach in much of our prior work on color and lightness constancy (Brainard, Brunt, & Speigle, 1997; Brainard, Kraft, & Longère, 2003; Brainard, Wandell, & Chichilnisky, 1993; Bloj et al., 2004; Speigle & Brainard, 1996; see also Adelson & Pentland, 1996; Bloj, Kersten, & Hurlbert, 1999; Boyaci, Doerschner, & Maloney, 2004; Boyaci, Maloney, & Hersh, 2003; Doerschner, Boyaci, & Maloney, 2004; Land, 1964; Maloney & Yang, 2001; McCann, McKee, & Taylor, 1976) and develop it further here. It is useful to keep in mind that the various approaches are not mutually exclusive and that the distinctions between them are not always sharp.

Equivalent illuminant model

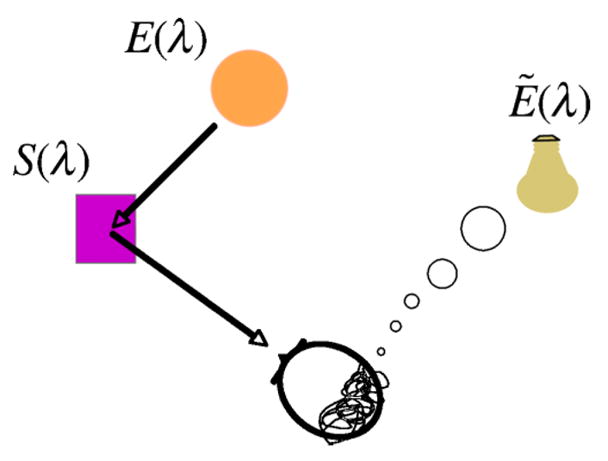

Given that we cannot characterize human vision as even approximately color constant across some scene changes, how do we use computational ideas to develop a model of human performance? Figure 4 illustrates the basic concept. The observer views an image formed when illumination reflects off objects. The illuminant is characterized by its spectral power distribution E(λ), and each surface is characterized by its reflectance function S(λ). The spectrum of the light reaching the eye is given by C(λ) = E(λ)S(λ).4 If a visual system has access to an estimate of the illuminant, Ẽ(λ), it is straightforward for it to produce a representation of object color based on an estimate of surface reflectance S̃(λ) = C(λ)/Ẽ(λ).5 As long as Ẽ(λ) ≈ E(λ), this representation will be stable across changes in context. To put it another way, a visual system with access to a good estimate of the actual illuminant E(λ) can achieve approximate color constancy by computing an estimate of surface reflectance S̃(λ) and using this estimate to determine color appearance.6 If the human visual system applies this strategy, but with illuminant estimates that sometimes deviate substantially from the actual illuminants, there will be commensurate deviations from constancy.

Figure 4.

Equivalent illuminant concept. See description in text.

This analysis suggests that useful models might be developed by starting with the premise that the visual system tries to achieve color constancy, and then allowing for deviations from constancy that occur because of errors in the estimated illuminant Ẽ(λ). It should not be surprising that illuminant estimates are sometimes in error: The reason that constancy is intriguing rests on the fundamental difficulty of achieving it across all possible scenes. We refer to Ẽ(λ) as the equivalent illuminant because, in the model, it replaces the physical illuminant in the constancy calculations. More generally, we refer to the broad class of models pursued here as equivalent illuminant models.
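The reflectance-estimation step S̃(λ) = C(λ)/Ẽ(λ) can be sketched in a few lines of Python. The four-band spectra below are hypothetical numbers, chosen only to show that the estimate is exact when Ẽ(λ) = E(λ) and that, when it is not, the estimated reflectance is multiplied band by band by E(λ)/Ẽ(λ), which is the source of the predicted deviations from constancy.

```python
def estimate_reflectance(color_signal, illum_estimate):
    """S~(λ) = C(λ) / E~(λ), computed band by band."""
    return [c / e for c, e in zip(color_signal, illum_estimate)]

# Hypothetical 4-band spectra.
true_illum = [0.8, 1.0, 1.1, 1.2]  # E(λ)
surface = [0.2, 0.4, 0.6, 0.5]     # S(λ)
signal = [e * s for e, s in zip(true_illum, surface)]  # C(λ) = E(λ)S(λ)

correct = estimate_reflectance(signal, true_illum)  # E~ = E: exact recovery
wrong = estimate_reflectance(signal, [1.0] * 4)     # E~ != E: S scaled by E/E~
print(correct)
print(wrong)
```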

One empirical approach to testing the equivalent illuminant idea is to ask whether explicit judgments of illuminant intensity covary lawfully with explicit judgments of surface lightness. This approach has led to mixed results (Beck, 1959; Logvinenko & Menshikova, 1994; Rutherford & Brainard, 2002; see Gilchrist, 2006); as a whole, these studies cast doubt on the idea that explicit illuminant judgments tap an equivalent illuminant that may be used to predict surface lightness.

Another empirical approach is to ask whether the equivalent illuminant idea leads to parametric models that predict how surface appearance varies with some ancillary stimulus variable (e.g., chromaticity of reflected light, surface slant: Bloj et al., 2004; Boyaci et al., 2004, 2003; Brainard et al., 1997, 2003; Doerschner et al., 2004; Speigle & Brainard, 1996). In this work, the appearance data from each context are used to determine an implicit equivalent illuminant for that context. The question is then whether the parametric variation of appearance within each context is well fit by a calculation that estimates surface reflectance using the equivalent illuminant for that context. This work provides support for the general equivalent illuminant approach but does not provide guidance about how the context determines the equivalent illuminant.

Here, we take the next step in the development by asking whether we can predict human performance from equivalent illuminants that are obtained directly from the image through an illuminant estimation algorithm. We used a Bayesian algorithm that we have described previously (Brainard & Freeman, 1997). The algorithm’s performance depends on specifying a prior over illuminant spectral power distributions and surface reflectance functions. The output of the algorithm is an estimate of the chromaticity of the scene illuminant. We use the illuminant estimate to predict the achromatic chromaticities, through a calculation that achieves good color constancy for contexts where the estimate is correct. The next section provides technical details of the modeling method.

Modeling methods

Bayesian illuminant estimation

The principles underlying the Bayesian illuminant estimation algorithm are described by Brainard and Freeman (1997). The published algorithm was modified to estimate illuminant chromaticity rather than illuminant spectrum, as described below.

To predict the achromatic loci using the equivalent illuminant principle, we wished to estimate the CIE u′v′ chromaticity coordinates of the illuminant. We denoted these by the two-dimensional column vector

$$\mathbf{x} = \begin{bmatrix} u' \\ v' \end{bmatrix}.$$

Our goal was then to estimate x from the data available in the retinal image. We denoted these data by the vector y. The entries of y consisted of the L-, M-, and S-cone quantal absorption rates at a series of Ny image locations:

$$\mathbf{y} = \begin{bmatrix} L_1 & M_1 & S_1 & \cdots & L_{N_y} & M_{N_y} & S_{N_y} \end{bmatrix}^T.$$

The Bayesian approach (Berger, 1985; Lee, 1989) tells us that to estimate x from y, we should calculate the posterior probability

$$p(\mathbf{x} \mid \mathbf{y}) = c \, p(\mathbf{y} \mid \mathbf{x}) \, p(\mathbf{x}), \qquad (1)$$

where c is a normalizing constant that depends on y but not on x, p(y|x) is the likelihood that data y will be observed when the illuminant chromaticity is in fact x, and p(x) is the prior probability of an illuminant with chromaticity x occurring.

To choose an estimate x̃ of the illuminant chromaticity, we maximized the posterior probability:

$$\tilde{\mathbf{x}} = \arg\max_{\mathbf{x}} \, p(\mathbf{x} \mid \mathbf{y}).$$

To find the maximum, we need to evaluate both the likelihood and the prior. Because the constant c is independent of x, it could be ignored in the maximization.
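The maximization of Equation 1 can be sketched as a grid search over candidate chromaticities. This Python fragment is illustrative only: the isotropic Gaussian `log_lik` and `log_prior` are hypothetical stand-ins (in the model, the likelihood comes from Equation 2 and the prior from the bivariate normal described below), the grid is our choice, and the D65 mean is rounded. It also shows why c can be dropped: adding the same constant to every candidate's score does not change the argmax.

```python
def map_estimate(log_likelihood, log_prior, grid):
    """Grid point maximizing log p(y|x) + log p(x); log c is the same for
    every x, so it is omitted."""
    return max(grid, key=lambda x: log_likelihood(x) + log_prior(x))

def isotropic_gaussian_log(x, mean, sd):
    """Log density of an isotropic Gaussian, up to an additive constant."""
    return -sum((xi - mi) ** 2 for xi, mi in zip(x, mean)) / (2.0 * sd ** 2)

step = 0.0005
grid = [(0.17 + i * step, 0.44 + j * step) for i in range(121) for j in range(121)]

def log_lik(x):  # hypothetical: the data favor (0.21, 0.48)
    return isotropic_gaussian_log(x, (0.21, 0.48), 0.01)

def log_prior(x):  # hypothetical: prior centered near D65
    return isotropic_gaussian_log(x, (0.1978, 0.4683), 0.01)

x_map = map_estimate(log_lik, log_prior, grid)
print(x_map)  # lies between the likelihood peak and the prior mean
```

With equal widths, the estimate falls roughly halfway between the data-favored chromaticity and the prior mean; a narrower prior would pull it further toward D65.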

The likelihood of observing a data vector y given an illuminant x depends on the imaging model, the spectrum of the illuminant, and the surfaces that the illuminant reflects from. We assumed that a single diffuse illuminant reflects from Ny distinct matte surfaces. Although this model is simplified relative to the imaging model used to simulate the experimental images, it captures the broad dependence of the spectral properties of the image data on the spectrum of the illuminant and spectral reflectance of the surfaces.

We represented the illuminant spectrum by a column vector e whose entries denote the illuminant power for a set of wavelength bands that sampled the visible spectrum (Brainard, 1995). We represented each individual surface by a column vector si (1 ≤ i ≤ Ny) whose entries specified the reflectance of the surface for the same set of wavelength bands. The reflected light is absorbed by L-, M-, and S-cone photopigment to produce the mean isomerization rates corresponding to each surface. These mean rates may be computed from e, si, and estimates of the photopigment spectral absorption curves using standard methods (Brainard, 1995). We assumed that the observed data specified by y were the mean absorption rates corresponding to each surface perturbed by independent zero-mean Gaussian noise. The standard deviation of this noise for each cone class was taken to be 1% of the mean value of y for that cone class. These assumptions allowed us to compute explicitly the likelihood of the data p(y|e, s1, …, sNy) given e and the vectors si, 1 ≤ i ≤ Ny. To find p(y|x), we needed to compute

$$p(\mathbf{y} \mid \mathbf{x}) = \int p(\mathbf{y} \mid \mathbf{e}, \mathbf{s}_1, \ldots, \mathbf{s}_{N_y}) \, p(\mathbf{e}, \mathbf{s}_1, \ldots, \mathbf{s}_{N_y} \mid \mathbf{x}) \, d\mathbf{e} \, d\mathbf{s}_1 \cdots d\mathbf{s}_{N_y}.$$

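We assumed that the probability of any particular surface occurring in a scene was independent of the other surfaces in the scene and that the probability of a surface occurring in a scene was independent of the illuminant. This let us simplify the above equation to

$$p(\mathbf{y} \mid \mathbf{x}) = \int \left[ \prod_{i=1}^{N_y} \int p(\mathbf{y}_i \mid \mathbf{e}, \mathbf{s}_i) \, p(\mathbf{s}_i) \, d\mathbf{s}_i \right] p(\mathbf{e} \mid \mathbf{x}) \, d\mathbf{e}, \qquad (2)$$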

where the notation yi refers to the three entries of y that depended on the light reflected from the ith surface. In principle, the limits of integration in Equation 2 are [0,∞] for each entry of e and [0,1] for each entry of the si, as lights do not have negative power and we consider only nonfluorescent surfaces that do not reflect more light at any wavelength than is incident at that wavelength. In practice, as described below, the support of each integral is localized and we obtained an approximation with a combination of analytic and numerical methods.
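The noise model behind the likelihood terms p(yi | e, si) in Equation 2 can be sketched as follows. Assumptions: spectra are sampled in four bands, the cone sensitivities `CONES` are hypothetical placeholders rather than real photopigment absorption curves, and y is held as one [L, M, S] row per image location instead of a single stacked vector. The noise standard deviation for each cone class is 1% of that class's mean value in y, as stated above; because the noise is independent across locations, the log likelihood is a sum of per-location terms.

```python
import math

# Hypothetical L, M, S sensitivities in 4 bands (placeholders).
CONES = [
    [0.05, 0.4, 0.9, 0.6],  # L
    [0.1, 0.7, 0.8, 0.2],   # M
    [0.9, 0.3, 0.02, 0.0],  # S
]

def mean_isomerizations(illum, surfaces, cones=CONES):
    """Mean L, M, S rates per surface: sum over bands of sensitivity * E * S."""
    return [[sum(t * e * s for t, e, s in zip(cone, illum, surf)) for cone in cones]
            for surf in surfaces]

def log_likelihood(y, illum, surfaces, cones=CONES, noise_frac=0.01):
    """log p(y | e, s_1..s_Ny): independent zero-mean Gaussian noise whose SD
    for each cone class is noise_frac times the mean of y for that class."""
    means = mean_isomerizations(illum, surfaces, cones)
    n_cls = len(cones)
    sds = [noise_frac * sum(row[k] for row in y) / len(y) for k in range(n_cls)]
    total = 0.0
    for obs, mu in zip(y, means):
        for k in range(n_cls):
            z = (obs[k] - mu[k]) / sds[k]
            total += -0.5 * z * z - math.log(sds[k] * math.sqrt(2.0 * math.pi))
    return total

illum = [0.8, 1.0, 1.1, 1.2]                             # hypothetical e
surfaces = [[0.2, 0.4, 0.6, 0.5], [0.7, 0.6, 0.3, 0.2]]  # hypothetical s_i
y = mean_isomerizations(illum, surfaces)                 # noise-free data
print(log_likelihood(y, illum, surfaces))
```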

We assumed that the prior probability of a surface s occurring in a scene was described by a multivariate normal distribution over the weights of a linear model (Brainard & Freeman, 1997; Zhang & Brainard, 2004). That is,

$$\mathbf{s} = \mathbf{B}_s \mathbf{w}_s, \qquad \mathbf{w}_s \sim N(\mathbf{u}_s, \mathbf{K}_s), \qquad (3)$$

where Bs is a matrix with Ns columns, each of which describes a spectral basis function, ws is an Ns dimensional column vector, us is the mean of ws, Ks is the covariance of ws, and the notation ~ indicates “distributed as.” Equation 3 allowed us to compute the probability p(s) for any s of the form s = Bsws explicitly, in two steps. First, we found the weights ws corresponding to s. Then, we evaluated p(ws) according to the normal density with mean us and covariance Ks. We took the probability for any s not of the form s = Bsws to be zero.
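The two-step evaluation of p(s) just described can be sketched in plain Python. The 5-band basis `B`, mean `u`, and covariance `K` are hypothetical placeholders (the actual ones follow Brainard and Freeman, 1997); the weights are recovered by least squares, a spectrum that the basis cannot reproduce within a small tolerance is assigned probability zero, and the normal log density is then evaluated with Ns = 3.

```python
import math

def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [[float(v) for v in row] + [float(bi)] for row, bi in zip(A, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x

def det3(K):
    """Determinant of a 3x3 matrix."""
    return (K[0][0] * (K[1][1] * K[2][2] - K[1][2] * K[2][1])
            - K[0][1] * (K[1][0] * K[2][2] - K[1][2] * K[2][0])
            + K[0][2] * (K[1][0] * K[2][1] - K[1][1] * K[2][0]))

def surface_log_prior(s, B, u, K, tol=1e-9):
    """log p(s) for s = B w, w ~ N(u, K) with Ns = 3; -inf (probability zero)
    if s is not of the form B w. B holds one row per wavelength band."""
    nb, nw = len(B), len(B[0])
    # Step 1: weights from the normal equations (B^T B) w = B^T s.
    BtB = [[sum(B[l][i] * B[l][j] for l in range(nb)) for j in range(nw)] for i in range(nw)]
    Bts = [sum(B[l][i] * s[l] for l in range(nb)) for i in range(nw)]
    w = solve(BtB, Bts)
    recon = [sum(B[l][i] * w[i] for i in range(nw)) for l in range(nb)]
    if max(abs(r - sl) for r, sl in zip(recon, s)) > tol:
        return float("-inf")
    # Step 2: multivariate normal log density of the weights.
    d = [wi - ui for wi, ui in zip(w, u)]
    maha = sum(di * zi for di, zi in zip(d, solve(K, d)))
    return -0.5 * (maha + math.log(det3(K)) + nw * math.log(2.0 * math.pi))

# Hypothetical 5-band basis (orthogonal columns), mean, and covariance.
B = [[1, 0, 0], [1, 1, 0], [1, 0, 1], [1, -1, 0], [1, 0, -1]]
u = [0.5, 0.1, 0.0]
K = [[0.01, 0, 0], [0, 0.01, 0], [0, 0, 0.01]]
print(surface_log_prior([0.5, 0.6, 0.5, 0.4, 0.5], B, u, K))
```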

We used Ns = 3, and for the main calculations we chose Bs, us, and Ks as described by Brainard and Freeman (1997). Given this surface prior, each of the integrals in brackets in Equation 2 could be approximated analytically using the methods described by Brainard and Freeman (1994) and Freeman (1993).

We also assumed that illuminant spectra were characterized by a linear model, so that

$$\mathbf{e} = \mathbf{B}_e \mathbf{w}_e, \qquad (4)$$

where we chose Be to specify the CIE three-dimensional linear model for daylight. This linear model includes CIE D65 and other CIE standard daylights. Given the linear model constraint, the chromaticity of an illuminant determines the linear model weights up to a free multiplicative scale factor through a straightforward colorimetric calculation, which we denoted as we = af(x). The calculation proceeds in two steps. First, we used the chromaticity x to find a set of XYZ tristimulus coordinates with the same chromaticity (e.g., Wyszecki & Stiles, 1982). Then, we used the known color matching functions and the linear model Be to recover we from the tristimulus coordinates (e.g., Brainard, 1995). Because the recovery of tristimulus coordinates from chromaticity is unique only up to a multiplicative scale factor, the same is true for the recovery of we from chromaticity.
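The two-step calculation of we = a f(x) can be sketched in Python. The conversion from u′v′ to XYZ uses the standard CIE relations through xy chromaticity, with Y = 1 fixing the free scale (which is then carried by a). The 3 × 3 matrix `M`, standing for the color matching functions applied to the columns of Be, is a hypothetical placeholder, not the CIE daylight model.

```python
def det3(A):
    """Determinant of a 3x3 matrix."""
    return (A[0][0] * (A[1][1] * A[2][2] - A[1][2] * A[2][1])
            - A[0][1] * (A[1][0] * A[2][2] - A[1][2] * A[2][0])
            + A[0][2] * (A[1][0] * A[2][1] - A[1][1] * A[2][0]))

def solve3(A, b):
    """Solve a 3x3 system A w = b by Cramer's rule."""
    D = det3(A)
    return [det3([[b[r] if c == k else A[r][c] for c in range(3)] for r in range(3)]) / D
            for k in range(3)]

def upvp_to_xyz(u, v, Y=1.0):
    """XYZ with CIE 1976 chromaticity (u', v'); Y sets the arbitrary scale."""
    denom = 6.0 * u - 16.0 * v + 12.0
    x, y = 9.0 * u / denom, 4.0 * v / denom  # CIE 1931 x, y
    return [x / y * Y, Y, (1.0 - x - y) / y * Y]

def xyz_to_upvp(X, Y, Z):
    denom = X + 15.0 * Y + 3.0 * Z
    return 4.0 * X / denom, 9.0 * Y / denom

# Hypothetical M = (color matching functions) x B_e, so that XYZ = M w_e.
M = [[1.0, 0.2, 0.1], [1.1, 0.1, -0.05], [1.2, -0.6, 0.2]]

def f(u, v):
    """Linear-model weights for chromaticity (u', v') at Y = 1; any positive
    multiple a * f(u, v) has the same chromaticity."""
    return solve3(M, upvp_to_xyz(u, v))

w_e = f(0.1978, 0.4683)  # rounded D65 chromaticity
print(w_e)
```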

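We assumed that the distribution over the scale factor a was uniform so that illuminants with the same chromaticity were equally likely. This is expressed by

$$p(\mathbf{e} \mid \mathbf{x}) = \begin{cases} \text{constant}, & \mathbf{e} = a \, \mathbf{B}_e \mathbf{f}(\mathbf{x}) \text{ for some } a > 0, \\ 0, & \text{otherwise}. \end{cases}$$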

We also set p(e | x) = 0 for any illuminant that had negative power in any wavelength band. Our assumption about illuminant spectra allowed us to approximate the outer integral in Equation 2 numerically. Doing so required choosing limits on the range of the scale factor a. Given the linear model constraints on illuminant and surface spectra, choosing a and x allowed us to recover a unique set of surface reflectance spectra that were perfectly consistent with the observed data y. We found the minimum value of a such that none of these recovered surface reflectance functions exceeded 1 at any wavelength, and we used this as the lower limit of integration. We set the upper limit of integration at three times this value. This upper limit was chosen after examining the behavior of the inner integrals (those in brackets) of Equation 2 — they fall off rapidly with increasing a, so that contributions to Equation 2 from values of a above the upper limit are negligible.
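The integration limits on a follow from the linear-model constraints: for a fixed chromaticity x, the surface reflectances recovered from y scale as 1/a, so the smallest admissible a equals the largest reflectance value recovered at a = 1. A minimal Python sketch, assuming those unit-scale reflectances are already available; the trapezoid rule is our choice (the quadrature method is not specified above), and `inner` is a hypothetical stand-in for the bracketed terms of Equation 2.

```python
import math

def scale_limits(unit_scale_reflectances, upper_factor=3.0):
    """Lower and upper limits on a. Reflectances recovered at scale a equal
    the a = 1 values divided by a, so a_min is the largest a = 1 value."""
    a_min = max(max(refl) for refl in unit_scale_reflectances)
    return a_min, upper_factor * a_min

def trapezoid(g, lo, hi, n=200):
    """Composite trapezoid rule for the integral of g over [lo, hi]."""
    h = (hi - lo) / n
    return h * (0.5 * (g(lo) + g(hi)) + sum(g(lo + k * h) for k in range(1, n)))

# Hypothetical reflectances (per surface, per band) recovered at a = 1.
unit_refl = [[0.3, 0.9, 1.4], [0.8, 1.6, 0.5]]
lo, hi = scale_limits(unit_refl)
at_lo = [[r / lo for r in refl] for refl in unit_refl]
print(max(max(r) for r in at_lo))  # 1.0: the brightest surface just reaches 1

def inner(a):  # hypothetical integrand that falls off with increasing a
    return math.exp(-2.0 * a)

print(trapezoid(inner, lo, hi))
```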

Given that we were able to evaluate the likelihood, computation of the posterior then required only that we define a prior probability over illuminant chromaticities. We assumed that

$$\mathbf{x} \sim N(\mathbf{u}_x, \mathbf{K}_x).$$

For the main calculations, we fixed ux at the chromaticity of CIE illuminant D65. Thus, the illuminant prior had three free parameters, the independent entries of covariance matrix Kx. This parameterization of the illuminant prior is capable of capturing the broad features of a set of measurements of daylight spectra (Figure 5; DiCarlo & Wandell, 2000).
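The illuminant prior and its three free parameters can be written out explicitly. This Python sketch evaluates log N(x; ux, Kx) with Kx = [[σu², σuv], [σuv, σv²]] and tests membership in a 90% isoprobability ellipse of the kind plotted in Figure 5; for a bivariate normal, that ellipse has squared Mahalanobis radius −2 ln(0.1) ≈ 4.61. The D65 coordinates are rounded, and the covariance values in `params` are hypothetical, not the daylight or best-fitting values.

```python
import math

D65_UPVP = (0.1978, 0.4683)  # CIE D65 u'v', rounded

def _mahalanobis_sq(x, mean, var_u, cov_uv, var_v):
    det = var_u * var_v - cov_uv ** 2
    if var_u <= 0 or det <= 0:
        raise ValueError("K_x must be positive definite")
    du, dv = x[0] - mean[0], x[1] - mean[1]
    # Quadratic form using the closed-form inverse of a 2x2 matrix.
    return (var_v * du * du - 2.0 * cov_uv * du * dv + var_u * dv * dv) / det, det

def illuminant_log_prior(x, var_u, cov_uv, var_v, mean=D65_UPVP):
    """log N(x; u_x, K_x); var_u, cov_uv, var_v are the three free parameters."""
    m2, det = _mahalanobis_sq(x, mean, var_u, cov_uv, var_v)
    return -0.5 * m2 - math.log(2.0 * math.pi * math.sqrt(det))

def inside_contour(x, var_u, cov_uv, var_v, mass=0.9, mean=D65_UPVP):
    """True if x lies inside the ellipse containing `mass` of the draws."""
    m2, _ = _mahalanobis_sq(x, mean, var_u, cov_uv, var_v)
    return m2 <= -2.0 * math.log(1.0 - mass)

params = (4e-4, 3e-4, 6e-4)  # hypothetical var_u, cov_uv, var_v
print(illuminant_log_prior(D65_UPVP, *params))
print(inside_contour((0.25, 0.40), *params))  # far from the mean: False
```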

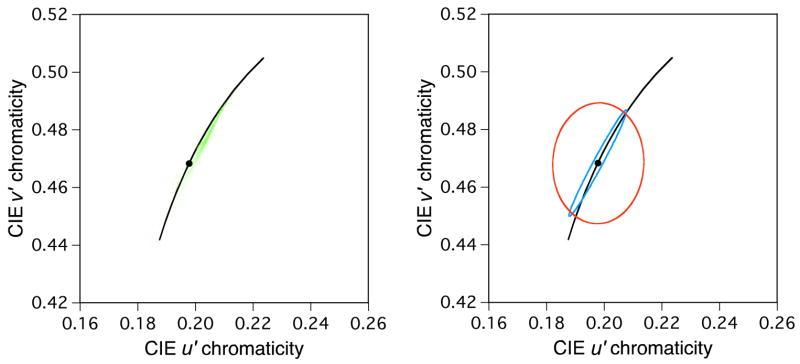

Figure 5.

Illuminant priors. The left panel shows the CIE u′v′ chromaticity of 10,760 daylight measurements made by DiCarlo and Wandell (2000). The right panel shows an isoprobability contour of a bivariate normal distribution chosen to capture the distribution of daylight chromaticities (blue ellipse). This is the daylight prior described in the text. The red ellipse shows an isoprobability contour of a bivariate normal distribution that, in conjunction with our Bayesian algorithm, led to equivalent illuminants that provided good predictions of human performance. Both isoprobability contours are scaled so that they contain 90% of draws from their corresponding normal distributions. For reference, the solid black line in both panels plots the CIE daylight locus for correlated color temperatures between 4000 and 10000 K, and the filled black circle plots the chromaticity of CIE illuminant D65.

To find x̃, we used numerical search and found the value of x that maximized the posterior (Equation 1).

To run the algorithm on images, we selected 24 points at random from the image data. In some conditions, points were selected uniformly from the image. In other conditions, points were drawn using a Gaussian weighting function of specified standard deviation and centered on the test patch. In the experiments, the test patch chromaticity was randomized at the start of every achromatic adjustment. To simulate this, we used the same randomization rule when drawing points that fell within the test patch. For each condition, the 24 points were drawn 10 separate times and the algorithm was run for each of the 10 sets. The resulting illuminant estimates were then averaged to produce the final estimate.
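The point-sampling scheme can be sketched as follows. Several details are assumptions: points are drawn with replacement, the Gaussian weight depends on distance in pixel coordinates from a hypothetical test-patch center, the randomization of test-patch chromaticity is not modeled, and `toy_estimator` is a hypothetical stand-in for the Bayesian algorithm. Only the 24 points, 10 repetitions, and averaging come from the description above.

```python
import math
import random

def sample_points(points, n, rng, center=None, sd=None):
    """Draw n (row, col) locations with replacement: uniformly, or with
    Gaussian weights on distance from `center` (the test patch) if sd is set."""
    if sd is None:
        return rng.choices(points, k=n)
    weights = [math.exp(-((r - center[0]) ** 2 + (c - center[1]) ** 2) / (2.0 * sd ** 2))
               for r, c in points]
    return rng.choices(points, weights=weights, k=n)

def averaged_estimate(points, estimator, rng, n_points=24, n_repeats=10, **weighting):
    """Run the estimator on n_repeats independent draws and average the
    resulting (u', v') estimates."""
    ests = [estimator(sample_points(points, n_points, rng, **weighting))
            for _ in range(n_repeats)]
    return tuple(sum(e[k] for e in ests) / len(ests) for k in range(2))

pixels = [(r, c) for r in range(40) for c in range(40)]
rng = random.Random(0)

def toy_estimator(sample):  # hypothetical stand-in for the Bayesian algorithm
    mean_row = sum(r for r, _ in sample) / len(sample)
    return (0.19 + 0.0005 * mean_row, 0.47)

print(averaged_estimate(pixels, toy_estimator, rng, center=(20, 20), sd=5.0))
```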

Predicting measured achromatic chromaticities from the illuminant estimate

The goal of our calculations was to predict the chromaticities of stimuli judged achromatic (achromatic chromaticities) in the context of different images. Recall that $\mathbf{x}^a_i$ denotes the psychophysically measured achromatic chromaticity for the ith scene, and let x̃i represent the illuminant chromaticity estimated from the image of the ith scene. One way to predict the achromatic chromaticities would be to assume directly that stimuli with chromaticities matched to the observer’s estimate of the illuminant appear achromatic. This assumption strikes us as theoretically unmotivated, as achromaticity is a property of surface appearance. Instead, to link $\mathbf{x}^a_i$ to x̃i, we found the reflectance function of a single hypothetical surface (the inferred achromatic surface) such that the light that would have been reflected from it under each estimated illuminant x̃i had a chromaticity as close as possible to the corresponding achromatic chromaticity $\mathbf{x}^a_i$. Once we chose a single inferred achromatic surface (represented by its linear model weights), we mapped the illuminant estimates to predicted achromatic chromaticities for every scene. This is the same method that we used above (see the Relation between the data and color constancy section) to make constancy predictions, with the one change that the actual illuminant chromaticity was replaced by the equivalent illuminant chromaticity.

In more detail, we made the predictions as follows. We assumed that illuminant spectra were described by the CIE three-dimensional linear model for daylight, as in Equation 4, and that the inferred achromatic surface’s reflectance function was described by the same three-dimensional linear model for surfaces as in Equation 3. Given the linear model constraint on illuminants, we computed the spectrum of each scene’s estimated illuminant (ẽi) from its chromaticity x̃i, up to an undetermined scale factor. Given ẽi and the linear model weights $\mathbf{w}^a$ for the achromatic surface, we computed the spectrum of the light that would be reflected from the inferred achromatic surface, again up to an undetermined scale factor. This then yielded the chromaticity of the light that would be reflected from the inferred achromatic surface under the estimated illuminant. Note that this chromaticity was independent of the undetermined scale factor.

These chromaticities served as our predictions of the psychophysically measured achromatic chromaticities. The predictions depend on the inferred achromatic surface weights, and we used numerical search to find the single set of weights that minimized the fit error εa over the entire data set. Because the predictions depend only on the relative inferred achromatic surface reflectance function, we fixed the first entry of the weight vector to 1 and searched over the remaining two entries.
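
A minimal sketch of this numerical search, using hypothetical toy spectra (redefined here so the block runs on its own). Two further assumptions are flagged: the error measure is taken to be the RMS Euclidean distance in u′v′, which may differ from the paper’s definition of εa, and SciPy’s Nelder-Mead simplex method stands in for whatever search routine was actually used. Fixing the first weight to 1 reflects the point above that only the relative reflectance function matters.

```python
import numpy as np
from scipy.optimize import minimize

# Hypothetical toy spectral data (stand-ins, not CIE tables).
wls = np.arange(400, 701, 10, dtype=float)
t = (wls - 550.0) / 150.0

def gauss(mu, sd):
    return np.exp(-0.5 * ((wls - mu) / sd) ** 2)

T_xyz = np.vstack([gauss(600, 40) + 0.35 * gauss(450, 20),
                   gauss(555, 45),
                   1.8 * gauss(450, 25)])
B = np.column_stack([np.ones_like(t), t, t ** 2])  # toy basis for lights and surfaces

def uv_from_xyz(xyz):
    X, Y, Z = xyz
    d = X + 15 * Y + 3 * Z
    return np.array([4 * X / d, 9 * Y / d])

def predict_uv(est_illums, w_free):
    """Predicted achromatic u'v' per scene; first surface weight fixed to 1."""
    r = B @ np.concatenate([[1.0], w_free])
    return np.array([uv_from_xyz(T_xyz @ (e * r)) for e in est_illums])

def fit_error(w_free, est_illums, measured_uv):
    # Assumed error measure: RMS Euclidean distance in u'v'.
    d = predict_uv(est_illums, w_free) - measured_uv
    return np.sqrt(np.mean(np.sum(d ** 2, axis=1)))

def fit_achromatic_surface(est_illums, measured_uv):
    """Search over the two free weights; returns (all 3 weights, error)."""
    res = minimize(fit_error, x0=np.zeros(2), args=(est_illums, measured_uv),
                   method="Nelder-Mead",
                   options={"xatol": 1e-8, "fatol": 1e-10,
                            "maxiter": 5000, "maxfev": 10000})
    return np.concatenate([[1.0], res.x]), res.fun

# Synthetic check: five hypothetical estimated illuminants and "measured"
# achromatic chromaticities generated from a known surface.
rng = np.random.default_rng(0)
est_illums = [B @ np.array([1.0, *rng.uniform(-0.3, 0.3, 2)]) for _ in range(5)]
measured_uv = predict_uv(est_illums, np.array([0.15, -0.1]))
w_fit, err = fit_achromatic_surface(est_illums, measured_uv)
print(w_fit, err)
```

Because the synthetic data were generated from a surface in the model, the search should recover weights close to (1, 0.15, −0.1) with near-zero error; real data would leave a nonzero residual.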

The same procedure was used to predict achromatic chromaticities from actual illuminant chromaticities. In the sample calculations involving a subset of images (chromaticity plots in Figures 2, 6, and 7), rather than minimizing the prediction error across all 17 contexts, the weights were chosen so that the prediction error for the standard context was zero.
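
For the sample calculations, choosing the weights so that the standard context is fit exactly needs no search under the linear-model assumptions: with the first weight fixed at 1, the two u′v′ constraints become two linear equations in the remaining two weights. A minimal sketch, again with hypothetical toy spectra and a hypothetical standard illuminant; the function name `weights_for_exact_fit` is ours.

```python
import numpy as np

# Hypothetical toy spectral data (stand-ins, not CIE tables).
wls = np.arange(400, 701, 10, dtype=float)
t = (wls - 550.0) / 150.0

def gauss(mu, sd):
    return np.exp(-0.5 * ((wls - mu) / sd) ** 2)

T_xyz = np.vstack([gauss(600, 40) + 0.35 * gauss(450, 20),
                   gauss(555, 45),
                   1.8 * gauss(450, 25)])
B_surf = np.column_stack([np.ones_like(t), t, t ** 2])

def uv_from_xyz(xyz):
    X, Y, Z = xyz
    d = X + 15 * Y + 3 * Z
    return np.array([4 * X / d, 9 * Y / d])

def weights_for_exact_fit(e_std, uv_std):
    """Surface weights (1, w2, w3) whose reflected light under e_std has
    chromaticity uv_std exactly (zero prediction error)."""
    u, v = uv_std
    C = np.array([[u - 4, 15 * u, 3 * u],
                  [v, 15 * v - 9, 3 * v]])
    A = C @ T_xyz @ (e_std[:, None] * B_surf)   # 2x3 linear constraints on weights
    rest = np.linalg.solve(A[:, 1:], -A[:, 0])  # first weight fixed to 1
    return np.concatenate([[1.0], rest])

# Usage: a target generated from a known surface, so the answer can be checked.
e_std = 1.0 + 0.3 * t
uv_std = uv_from_xyz(T_xyz @ (e_std * (B_surf @ np.array([1.0, 0.2, -0.05]))))
w = weights_for_exact_fit(e_std, uv_std)
print(w)
```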

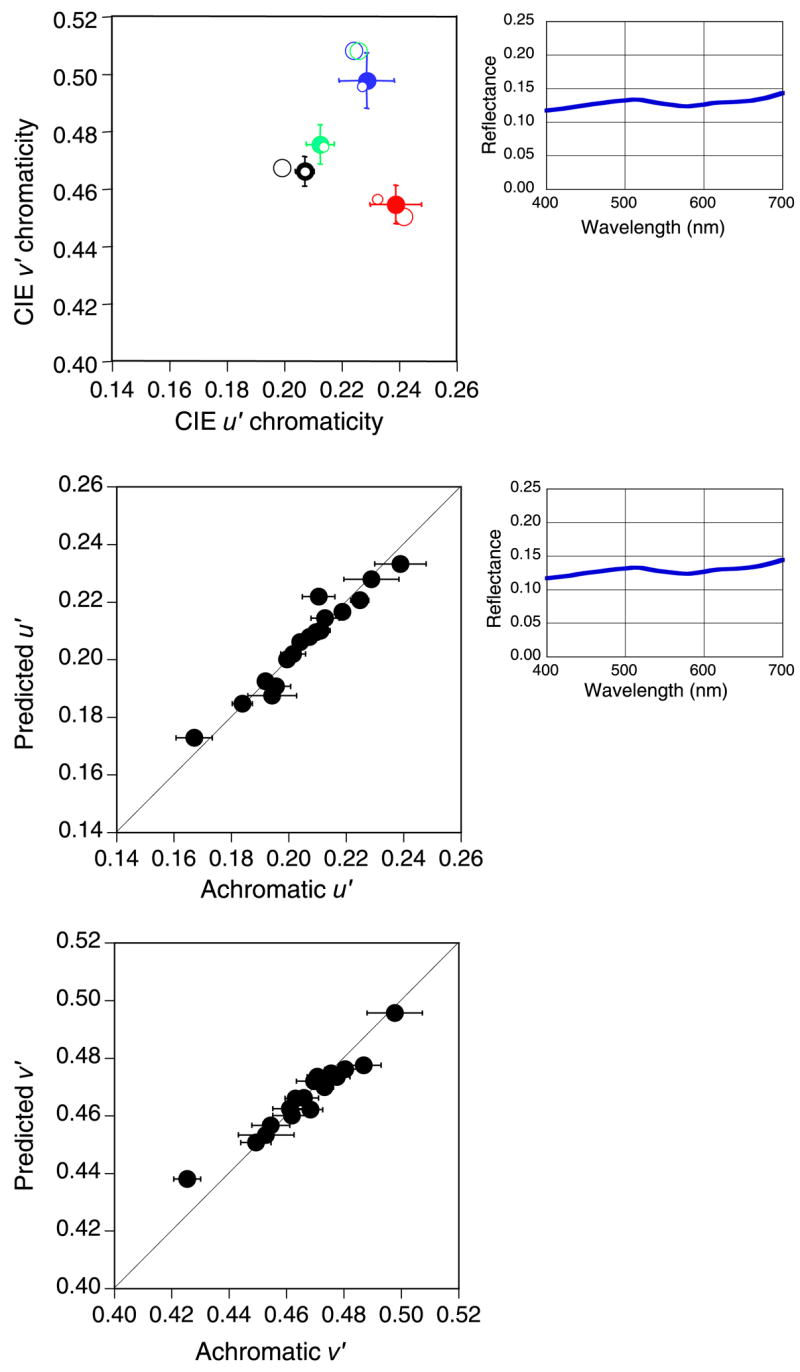

Figure 6.

Model predictions with daylight prior. The chromaticity diagram on the top left shows results and predictions for the same four sample scenes as in Figure 2. Same format as the corresponding diagram in Figure 2. The middle and bottom left panels summarize the prediction performance in the same format as the corresponding panels of Figure 3. The value of the fit error εa (see the Modeling methods section) is 0.0114. The spectral plots on the right show the inferred achromatic surface for the sample calculation (top) and overall predictions (bottom).

Figure 7.

Model predictions with best prior and spatial weighting. Same format as Figure 6. The value of the fit error εa is 0.0044.

Results

Figure 5 illustrates how we characterized the prior over illuminants. The left panel (green dots) shows the u′v′ chromaticity coordinates of 10,760 daylights measured by DiCarlo and Wandell (2000). Consistent with previous reports, these cluster along the CIE daylight locus (black line in both panels). To capture this regularity in broad terms, we modeled the prior probability of illuminant chromaticities as a bivariate normal distribution (see the Modeling methods section). The blue ellipse in the right panel shows an isoprobability contour of the normal distribution we chose to capture the broad statistics of the daylight chromaticity distribution. We set the mean of this distribution to the chromaticity of CIE illuminant D65 and its covariance matrix to that of the DiCarlo and Wandell measurements. We refer to this illuminant prior as the daylight prior. The prior over scene surfaces and other details of the algorithm are provided in the Modeling methods section.
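
The daylight prior can be sketched as a bivariate normal density over u′v′. The mean below is the u′v′ chromaticity of CIE D65 (approximately 0.1978, 0.4683). The covariance entries are hypothetical placeholders, not the DiCarlo and Wandell values, which are not reproduced here. An isoprobability contour like the ellipses in Figure 5 is the set of points at a fixed Mahalanobis distance from the mean; a Cholesky factor of the covariance maps a unit circle onto it.

```python
import numpy as np

MEAN_D65_UV = np.array([0.1978, 0.4683])     # CIE D65 in u'v'
# Hypothetical covariance (placeholder, NOT the DiCarlo and Wandell estimate).
K_DAYLIGHT = np.array([[5.0e-4, 4.5e-4],
                       [4.5e-4, 5.0e-4]])

def log_prior(uv, mean=MEAN_D65_UV, K=K_DAYLIGHT):
    """Log density of the bivariate normal prior at chromaticity uv."""
    d = np.asarray(uv, dtype=float) - mean
    return (-0.5 * d @ np.linalg.solve(K, d)
            - 0.5 * np.log(np.linalg.det(2 * np.pi * K)))

def isoprobability_contour(r=1.0, n=100, mean=MEAN_D65_UV, K=K_DAYLIGHT):
    """n points at Mahalanobis distance r from the mean (an ellipse in u'v')."""
    L = np.linalg.cholesky(K)                 # K = L @ L.T
    theta = np.linspace(0.0, 2 * np.pi, n, endpoint=False)
    circle = np.vstack([np.cos(theta), np.sin(theta)])
    return (mean[:, None] + r * (L @ circle)).T

contour = isoprobability_contour(r=2.0)
print(contour.shape, log_prior(MEAN_D65_UV), log_prior(contour[0]))
```

Every point on the returned contour has the same prior density; scaling up the covariance enlarges the ellipse, which is what a broader prior means geometrically.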

We applied the Bayesian algorithm with the daylight prior to the 17 images used by Delahunt and Brainard (2004) to obtain estimates of the illuminant chromaticities. We then repeated the analysis used to make the constancy predictions shown in Figure 3, but with the algorithm’s estimates in place of the actual illuminant chromaticity for each context.7 In brief, we used numerical search to infer an achromatic surface that minimized the error between the chromaticity of the light that would be reflected from it under each estimated illuminant x̃i and the corresponding psychophysically measured achromatic chromaticity. The resulting predictions are shown in Figure 6. The top panel shows predictions for the same sample contexts as in Figure 2. The middle and bottom panels summarize the prediction quality in the same format as Figure 3. In aggregate, the predictions are slightly improved relative to those obtained using the actual chromaticities of the scene illuminants. The value of the error measure εa is 0.0157 for the predictions based on the actual illuminant chromaticities and 0.0114 for the predictions based on the Bayesian estimates obtained with the daylight prior.

The reason for the net improvement is that, for some scenes, the algorithm fails to estimate the physical illuminant correctly, and these failures are generally consistent with human performance. Two examples of this may be seen by comparing Figure 2 and the top panel of Figure 6: The predictions shown by the small open blue and green circles are better in the latter. The prediction improvement is largest for the case where the background surface in the image was varied to silence local contrast as a cue to the illuminant (green circles), although the prediction for this case still deviates from the data.

On the other hand, not all of the predictions are improved: The small open red circle lies further from the point it predicts in the top panel of Figure 6 than in Figure 2. Here, the Bayesian prediction lies much closer to the daylight locus than the corresponding datum. Intuitively, this occurs because the illuminant prior we used places very little probability weight in the vicinity of the scene illuminant used in the experiment. Overall, the model with the daylight prior does not adequately describe the data.
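
The pull toward the daylight locus can be illustrated with a deliberately simplified model. The Brainard/Freeman algorithm works with the joint posterior over illuminant and surface parameters; the sketch below instead assumes, purely for illustration, that the image evidence reduces to a Gaussian likelihood over u′v′ centered on a cue. With a Gaussian prior, the posterior mean is then a precision-weighted average of the prior mean and the cue, so an atypical illuminant is shrunk toward the prior mean: strongly when the prior is narrow, only slightly when it is broad. All covariances and the cue are hypothetical.

```python
import numpy as np

def posterior_mean(cue_uv, prior_mean, K_prior, S_like):
    """Posterior mean for a Gaussian prior times a Gaussian likelihood.

    Simplified illustration only; not the Brainard/Freeman algorithm."""
    K_inv = np.linalg.inv(K_prior)
    S_inv = np.linalg.inv(S_like)
    return np.linalg.solve(K_inv + S_inv, K_inv @ prior_mean + S_inv @ cue_uv)

prior_mean = np.array([0.1978, 0.4683])   # CIE D65 in u'v'
S = 1e-4 * np.eye(2)                      # hypothetical likelihood covariance
K_narrow = 1e-4 * np.eye(2)               # hypothetical narrow prior
K_broad = 1e-2 * np.eye(2)                # hypothetical broad prior
cue = np.array([0.2300, 0.4400])          # hypothetical atypical illuminant

est_narrow = posterior_mean(cue, prior_mean, K_narrow, S)
est_broad = posterior_mean(cue, prior_mean, K_broad, S)
print(np.linalg.norm(est_narrow - cue), np.linalg.norm(est_broad - cue))
```

With these values the narrow prior places the estimate halfway between the cue and the prior mean, while the broad prior leaves it within about 1% of the cue-to-mean distance from the cue.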

The poor prediction performance obtained with the daylight prior for some scenes is consistent with Delahunt and Brainard’s (2004) conclusion that human color constancy is robust with respect to atypical illuminant changes. Within a Bayesian framework, such robustness can be described through the use of a broader illuminant prior distribution, and we wondered whether allowing this possibility could improve the predictions of the equivalent illuminant model. A second unrealistic aspect of the calculations described above is that the 24 sample points provided to the algorithm were drawn uniformly from the whole image. It seems likely that image regions surrounding the test patch have a larger influence on its appearance than do more distant regions. Thus, we also explored the effect of drawing samples according to a spatial Gaussian weighting function centered on the test patch.
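
A minimal sketch of the two sampling schemes: uniform over the whole image, and Gaussian weighting centered on the test patch. The 24 samples and the 4° standard deviation (the best-fitting value reported in the text) come from the paper; the image size, test patch location, pixels-per-degree conversion, the function name `sample_points`, and the choice to sample without replacement are all hypothetical.

```python
import numpy as np

def sample_points(image_shape, n=24, center=None, sd_pixels=None, rng=None):
    """Draw n distinct pixel locations (row, col).

    sd_pixels=None: uniform over the whole image.
    Otherwise: probability proportional to a 2-D Gaussian centered on
    `center` (e.g., the test patch) with standard deviation sd_pixels.
    """
    rng = np.random.default_rng() if rng is None else rng
    rows, cols = image_shape
    if sd_pixels is None:
        weights = np.ones(image_shape)
    else:
        rr, cc = np.mgrid[0:rows, 0:cols]
        r0, c0 = center
        weights = np.exp(-((rr - r0) ** 2 + (cc - c0) ** 2) / (2.0 * sd_pixels ** 2))
    p = (weights / weights.sum()).ravel()
    idx = rng.choice(rows * cols, size=n, replace=False, p=p)
    return np.column_stack(np.unravel_index(idx, image_shape))

PIXELS_PER_DEG = 20.0                          # hypothetical display geometry
shape, test_patch = (400, 600), (200, 300)     # hypothetical image and patch
rng = np.random.default_rng(1)
pts_uniform = sample_points(shape, rng=rng)
pts_local = sample_points(shape, center=test_patch,
                          sd_pixels=4.0 * PIXELS_PER_DEG, rng=rng)

def mean_distance(pts):
    """Mean distance (pixels) of sampled locations from the test patch."""
    return np.mean(np.hypot(pts[:, 0] - test_patch[0], pts[:, 1] - test_patch[1]))

print(mean_distance(pts_uniform), mean_distance(pts_local))
```

The surfaces at the sampled locations would then be passed to the illuminant estimator; Gaussian weighting concentrates them near the test patch.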

We parameterized the illuminant prior through the three independent entries of its covariance matrix Kx and repeated the calculations for many choices of Kx. We identified the choice of Kx that led to the best prediction results (minimum εa). We then varied the spatial sampling, choosing the standard deviation of the Gaussian weighting function that minimized εa. The isoprobability contour corresponding to the best Kx is shown as the red ellipse in Figure 5. This derived prior distribution is considerably broader than the daylight prior. The best standard deviation for the Gaussian weighting function was 4° of visual angle. Figure 7 shows that the quality of the resulting fit is very good. The predictions for the sample conditions (top panel) all lie close to the measurements, while the points in the summary graphs (middle and bottom panels) are generally near the diagonal. Most of the improvement in εa was due to the change in prior, not to the change in spatial sampling.8

Discussion

Insight about perception is often available from consideration of the computational task faced by the perceptual system (Barrow & Tenenbaum, 1978; Marr, 1982). The premise is that if we formulate explicit algorithms that can accomplish this task, then we can use these as the basis of models for human performance. In the case of color constancy, McCann et al. (1976) initiated this general approach by showing that predictions derived from Land’s retinex algorithm (Land, 1964, 1986; see Brainard & Wandell, 1986) successfully accounted for a range of experimental data. There are conditions, however, where the predictions of retinex-based models deviate from the data (e.g., Delahunt & Brainard, 2000; Kraft & Brainard, 1999).

We also adopted the computational approach to develop a quantitative model of surface appearance. Here, the predictions are derived from a principled analysis of how the illuminant can be estimated from image data, and this analysis is connected to the data through the equivalent illuminant principle.

In contrast with earlier work with equivalent illuminant models, both from our laboratory (Bloj et al., 2004; Brainard et al., 1997; Speigle & Brainard, 1996) and by others (Boyaci et al., 2004, 2003; Doerschner et al., 2004), the equivalent illuminants here were not fit individually to the experimental data from the corresponding condition but were instead obtained through the application of an independent algorithm. We have previously argued that modeling of color appearance may be fruitfully pursued in two steps: First, determine the appropriate parametric form to account for context effects; then, determine how the parameters are determined by the image context (Brainard, 2004; Brainard & Wandell, 1992). Prior work on equivalent illuminant models addresses the first step. The present work tackles the question of how the parameters are determined.

The model fitting was restricted to varying a few parameters that govern the overall behavior of the algorithm, and the same parameters were used for all the experimental conditions. The good quantitative fit obtained illustrates the promise of the approach. The introduction of an explicit algorithm represents a novel stage of theoretical development and increases the force of the general modeling approach. Parameterizing the prior distribution to allow fitting of a Bayesian model to human data has also proved effective for modeling of shape (Mamassian et al., 2002) and motion (Stocker & Simoncelli, 2006; Weiss et al., 2002) perception. In that they provide a principled benchmark against which to compare human performance, these Bayesian models of appearance may be understood as close relatives of the ideal observer models that have been highly successful in clarifying how vision detects and discriminates signals (Geisler, 1989; Green & Swets, 1966).

In addition to providing a predictive quantitative model, the parametric fit allows a succinct summary of human performance across all the conditions, in the form of the illuminant prior and inferred achromatic surface. The inferred achromatic surface is close to spectrally flat, consistent with the properties of achromatic reference surfaces used in the photographic industry. The derived illuminant prior is not uniform over illuminant chromaticity: it describes some illuminants as more likely than others. On the other hand, the derived prior is considerably broader than would be indicated by measurements of natural daylight.

The discrepancy is interesting, and the reasons for it are unclear. Perhaps a different distributional form of the illuminant prior, such as one with heavier tails, would allow the daylight measurements and the derived prior to be reconciled. Alternatively, direct measurements of daylight spectra may not be the appropriate database from which to derive an illuminant prior: In natural scenes, light often reaches surfaces after reflecting from other surfaces in the scene. Extensive measurements of the spectra of light actually reaching objects in typical scenes are not yet available. A final possibility is that formulating the illuminant estimation problem with a different loss function (Brainard & Freeman, 1997; Mamassian et al., 2002) would lead to better performance with the daylight prior.

Although the fits obtained here are good, the experimental conditions were not chosen specifically to test the present model. Future tests will be most forceful if they contain conditions designed to probe it sharply. Kraft and Brainard (1999) emphasized that a good way to do this is to choose contextual images where the scene illuminant differs while the model at hand predicts no difference in performance. Comparing human performance and model prediction for scenes where the illuminant and surface spectral functions are chosen to deviate substantially from those captured by our linear-model-based priors might also provide useful insight.

The general analysis presented here is not specific to the Brainard/Freeman algorithm, in that the same logic may be used to link any illuminant estimation algorithm (Buchsbaum, 1980; D’Zmura & Iverson, 1993a, 1993b; Finlayson, Hubel, & Hordley, 1997; Funt & Drew, 1988; Maloney & Wandell, 1986) to the data. Within the general framework presented here, differentiating between candidate algorithms again requires careful choice of experimental conditions so that the data set includes conditions where different algorithms make substantially different predictions.

The algorithm we used does not take advantage of information carried by the geometric structure of the scene, and the experimental manipulations used were spectral rather than geometric. Much current experimental work on human color and lightness constancy focuses on such geometric manipulations (Adelson, 1999; Bloj et al., 1999; Gilchrist et al., 1999; Ripamonti et al., 2004; Williams, McCoy, & Purves, 1998), and several authors have modeled this type of data with equivalent illuminant models where the illuminant parameters are fit directly to the data in each condition (Bloj et al., 2004; Boyaci et al., 2004, 2003; Doerschner et al., 2004). As algorithms for achieving constancy with respect to geometric manipulations become available, the principles underlying our present work may allow these algorithms to be integrated into the modeling effort.

The model reported here provides a functional description and does not speak directly to processing steps that might implement it in human vision or to the neural mechanisms that mediate color constancy and color appearance. A great deal is known, however, about the early stages of chromatic adaptation (Brainard, 2001; Jameson & Hurvich, 1972; Stiles, 1967; Wyszecki, 1986). An important challenge that remains is to understand how known neural mechanisms might implement the sort of calculations that we have performed using a digital computer. We have begun to consider this question by examining how well parametric models of adaptation can adjust to changes in illumination and the composition of surfaces in a scene. On this note, there is no necessary reason to expect that a neural implementation producing the same performance as our model would contain units that explicitly represent the constructs of the model as we have formulated it. For example, we would be surprised to find units whose outputs explicitly represent the prior probabilities of illuminant chromaticities in the CIE u′v′ representation. The value of our work for constraining models of neural processing lies in its ability to capture the relation between stimulus and percept that a model of the neural implementation must explain.

Gilchrist et al. (1999) have argued that the key to understanding the perception of color and lightness is to model deviations from constancy (in their terms, to model “errors” in perception). They conclude that a computational approach is unlikely to provide a satisfactory account because of the many such deviations observed in the literature. We conclude by noting that the model presented here, which is heavily motivated by computational considerations, successfully accounts for both conditions where the visual system is color constant and where it is not. Our experimental conditions differ substantially from those considered by Gilchrist et al., but it is clear that there is no fundamental constraint that prevents a computationally motivated model from accounting for both successes and failures of constancy.

Acknowledgments

This work was supported by NIH EY 10016 and by a gift from Agilent, Inc. An early version of the theory developed here was outlined in Brainard et al. (2003), and the work in close to its final form was presented at the 2003 COSYNE meeting. We thank J. DiCarlo and B. Wandell for providing us with their spectral measurements of daylight and M. Kahana and the Penn Psychology Department for providing access to a computer cluster.

Footnotes

Commercial relationships: none.

Some readers may have the intuition that color constancy implies that the measured achromatic chromaticity should be the same as that of the illuminant. Although it is true that data which conform to this intuition are consistent with perfect constancy, the converse does not hold. The concept of constancy is that surface color appearance is stable across changes in viewing context. It therefore does not carry any necessary implications about the data from a single context considered in isolation.

Because of metamerism (Brainard, 1995; Wyszecki & Stiles, 1982), there are many surface reflectance functions for which the chromaticity of the light reflected under the simulated illuminant matches the measured achromatic chromaticity. The surface shown was obtained by imposing a linear model constraint, so that its reflectance function is typical of naturally occurring surface reflectances (see the Modeling methods section; Jaaskelainen, Parkkinen, & Toyooka, 1990; Maloney, 1986).

Although determining the inferred achromatic surface from the data of a single context is conceptually simpler than fitting it to all of the data, the more general fitting procedure ensures that the quality of the constancy model is not underestimated simply because the predictions were extrapolated from parameters set by a single datum. In the experiment, none of the seventeen contexts had a privileged status. Comparison of the spectral reflectance function of the inferred achromatic surface shown in Figure 2 with that of Figure 3 reveals that, in the event, the difference between the two methods is very small.

This image model is correct for matte surfaces viewed under spatially diffuse illumination. The reflectance of most real materials has a geometric dependence (Fleming, Dror, & Adelson, 2003; Foley, van Dam, Feiner, & Hughes, 1990), and real illuminations are not diffuse (Debevec, 1998; Dror, Willsky, & Adelson, 2004). The approximation used here allows us to make progress; geometrical effects will need to be taken into account as we extend our thinking about lightness and color towards richer scene geometries (see Bloj et al., 2004; Boyaci et al., 2003, 2004; Doerschner et al., 2004; Ripamonti et al., 2004; Xiao & Brainard, 2006).

The actual estimation is more complicated, as the visual system does not have direct access to C(λ) but instead must use the responses of the L-, M-, and S-cones to make the estimate. There are well-established methods for doing so (Brainard, 1995; Wandell, 1987). The tilde in the notation distinguishes estimated quantities from their physical counterparts.

Even for an otherwise perfect visual system with access to the actual illuminant, errors in constancy may still occur because of metamerism: two surfaces that produce the same cone responses under one illuminant may produce different cone responses under a changed illuminant (Brainard, 1995; Wyszecki & Stiles, 1982).
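
This point about metamerism can be checked numerically. The sketch builds a second reflectance by adding a "metameric black", a spectral perturbation in the null space of the matrix that maps reflectance to sensor responses under the first illuminant, so the two surfaces produce identical responses under that illuminant but not under a second. The sensor curves are hypothetical Gaussian stand-ins for the L-, M-, and S-cones, and the illuminants and reflectance are likewise hypothetical toy curves.

```python
import numpy as np

# Hypothetical toy curves (not real cone fundamentals or illuminants).
wls = np.arange(400, 701, 10, dtype=float)

def gauss(mu, sd):
    return np.exp(-0.5 * ((wls - mu) / sd) ** 2)

T_sens = np.vstack([gauss(565, 50), gauss(540, 45), gauss(445, 30)])  # "L, M, S"
e1 = np.ones_like(wls)                      # flat illuminant
e2 = np.linspace(0.5, 1.5, wls.size)        # more long-wavelength power
r1 = 0.5 + 0.2 * np.sin(wls / 40.0)         # reflectance in [0.3, 0.7]

M1 = T_sens * e1                            # same as T_sens @ np.diag(e1)
M2 = T_sens * e2
# Projector onto the null space of M1 (the metameric blacks under e1).
P1 = np.eye(wls.size) - M1.T @ np.linalg.solve(M1 @ M1.T, M1)
# Project a direction the sensors do see under e2, so the black becomes
# visible once the illuminant changes.
black = P1 @ (M2.T @ np.array([1.0, 0.0, 0.0]))
black *= 0.2 / np.abs(black).max()
r2 = r1 + black                             # stays within [0.1, 0.9]

resp1_e1, resp2_e1 = M1 @ r1, M1 @ r2
resp1_e2, resp2_e2 = M2 @ r1, M2 @ r2
print(resp1_e1, resp2_e1)   # equal up to rounding: a metameric pair under e1
print(resp1_e2, resp2_e2)   # differ under e2
```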

As described in the Modeling methods section, the calculation of the inferred achromatic surface and achromatic predictions depends on the illuminant spectrum only through its chromaticity.

The value of εa with best illuminant prior and spatial weighting was 0.0044. The value of εa obtained with the best illuminant prior when points were drawn from the entire image was 0.0057. We also explored whether variation in the standard deviation of the Gaussian spatial weighting function could produce a similar fit with the daylight prior, and whether varying the surface prior parameters allowed a good fit with the daylight prior. Across all variations we explored, the minimum value of εa obtained with the daylight prior was 0.0110, over twice that obtained with the broadened prior.

Contributor Information

David H. Brainard, Department of Psychology, University of Pennsylvania, Philadelphia, PA, USA

Philippe Longère, Neion Graphics, Valbonne, France

Peter B. Delahunt, Posit Science, San Francisco, CA, USA

William T. Freeman, Department of Electrical Engineering and Computer Science, MIT, Cambridge, MA, USA

James M. Kraft, Faculty of Life Sciences, The University of Manchester, Manchester, UK

Bei Xiao, Department of Neuroscience, University of Pennsylvania, Philadelphia, PA, USA

References

- Adelson EH. Lightness perception and lightness illusions. In: Gazzaniga M, editor. The new cognitive neurosciences. 2. Cambridge, MA: MIT Press; 1999. pp. 339–351.

- Adelson EH, Pentland AP. The perception of shading and reflectance. In: Knill D, Richards W, editors. Visual perception: Computation and psychophysics. New York: Cambridge University Press; 1996. pp. 409–423.

- Barrow HG, Tenenbaum JM. Recovering intrinsic scene characteristics from images. In: Hanson AR, Riseman EM, editors. Computer vision systems. New York: Academic Press; 1978. pp. 3–26.

- Bauml KH. Increments and decrements in color constancy. Journal of the Optical Society of America A, Optics, Image Science, and Vision. 2001;18:2419–2429. doi: 10.1364/josaa.18.002419.

- Beck J. Stimulus correlates for the judged illumination of a surface. Journal of Experimental Psychology. 1959;58:267–274. doi: 10.1037/h0045132.

- Berger TO. Statistical decision theory and Bayesian analysis. New York: Springer-Verlag; 1985.

- Bloj MG, Kersten D, Hurlbert AC. Perception of three-dimensional shape influences colour perception through mutual illumination. Nature. 1999;402:877–879. doi: 10.1038/47245.

- Bloj M, Ripamonti C, Mitha K, Hauck R, Greenwald S, Brainard DH. An equivalent illuminant model for the effect of surface slant on perceived lightness. Journal of Vision. 2004;4(9):735–746. doi: 10.1167/4.9.6. http://journalofvision.org/4/9/6/

- Boyaci H, Doerschner K, Maloney LT. Perceived surface color in binocularly viewed scenes with two light sources differing in chromaticity. Journal of Vision. 2004;4(9):664–679. doi: 10.1167/4.9.1. http://journalofvision.org/4/9/1/

- Boyaci H, Maloney LT, Hersh S. The effect of perceived surface orientation on perceived surface albedo in binocularly viewed scenes. Journal of Vision. 2003;3(8):541–553. doi: 10.1167/3.8.2. http://journalofvision.org/3/8/2/

- Brainard DH. Colorimetry. In: Bass M, editor. Handbook of optics: Volume 1. Fundamentals, techniques, and design. New York: McGraw-Hill; 1995. pp. 1–54. chap. 26.

- Brainard DH. Color constancy in the nearly natural image: 2. Achromatic loci. Journal of the Optical Society of America A, Optics, Image Science, and Vision. 1998;15:307–325. doi: 10.1364/josaa.15.000307.

- Brainard DH. Color vision theory. In: Smelser NJ, Baltes PB, editors. International encyclopedia of the social and behavioral sciences. Vol. 4. Oxford, UK: Elsevier; 2001. pp. 2256–2263.

- Brainard DH. Color constancy. In: Chalupa L, Werner J, editors. The visual neurosciences. Vol. 1. Cambridge, MA: MIT Press; 2004. pp. 948–961.

- Brainard DH, Brunt WA, Speigle JM. Color constancy in the nearly natural image: 1. Asymmetric matches. Journal of the Optical Society of America A, Optics, Image Science, and Vision. 1997;14:2091–2110. doi: 10.1364/josaa.14.002091.

- Brainard DH, Freeman WT. Paper presented at SPIE Conference on Human Vision, Visual Processing, and Display. San Jose, CA: 1994. Bayesian method for recovering surface and illuminant properties from photoreceptor responses; pp. 364–376.

- Brainard DH, Freeman WT. Bayesian color constancy. Journal of the Optical Society of America A, Optics, Image Science, and Vision. 1997;14:1393–1411. doi: 10.1364/josaa.14.001393.

- Brainard DH, Kraft JM, Longère P. Color constancy: Developing empirical tests of computational models. In: Mausfeld R, Heyer D, editors. Colour perception: Mind and the physical world. Oxford: Oxford University Press; 2003. pp. 307–334.

- Brainard DH, Wandell BA. Analysis of the retinex theory of color vision. Journal of the Optical Society of America A, Optics and Image Science. 1986;3:1651–1661. doi: 10.1364/josaa.3.001651.

- Brainard DH, Wandell BA. Asymmetric color matching: How color appearance depends on the illuminant. Journal of the Optical Society of America A, Optics and Image Science. 1992;9:1433–1448. doi: 10.1364/josaa.9.001433.

- Brainard DH, Wandell BA, Chichilnisky EJ. Color constancy: From physics to appearance. Current Directions in Psychological Science. 1993;2:165–170.

- Buchsbaum G. A spatial processor model for object colour perception. Journal of the Franklin Institute. 1980;310:1–26.

- Burnham RW, Evans RM, Newhall SM. Prediction of color appearance with different adaptation illuminations. Journal of the Optical Society of America. 1957;47:35–42.

- Chichilnisky EJ, Wandell BA. Seeing gray through the ON and OFF pathways. Visual Neuroscience. 1996;13:591–596. doi: 10.1017/s0952523800008270.

- Debevec P. Rendering synthetic objects into real scenes: Bridging traditional and image-based graphics with global illumination and high dynamic range photography; Paper presented at SIGGRAPH; Orlando, FL: 1998. pp. 189–198.

- Delahunt PB, Brainard DH. Control of chromatic adaptation: Signals from separate cone classes interact. Vision Research. 2000;40:2885–2903. doi: 10.1016/s0042-6989(00)00125-5.

- Delahunt PB, Brainard DH. Does human color constancy incorporate the statistical regularity of natural daylight? Journal of Vision. 2004;4(2):57–81. doi: 10.1167/4.2.1. http://journalofvision.org/4/2/1/

- DiCarlo JM, Wandell BA. Illuminant estimation: Beyond the bases; Paper presented at IS&T/SID Eighth Color Imaging Conference; Scottsdale, AZ: 2000. pp. 91–96. [Google Scholar]

- Doerschner K, Boyaci H, Maloney LT. Human observers compensate for secondary illumination originating in nearby chromatic surfaces. Journal of Vision. 2004;4(2):92–105. doi: 10.1167/4.2.3. http://journalofvision.org/4/2/3/ [PubMed] [Article] [DOI] [PubMed]

- Dror RO, Willsky AS, Adelson EH. Statistical characterization of real-world illumination. Journal of Vision. 2004;4:821–837. doi: 10.1167/4.9.11. http://journalofvision.org/4/9/11/ [PubMed] [Article] [DOI] [PubMed]

- D’Zmura M, Iverson G. Color constancy: I. Basic theory of two-stage linear recovery of spectral descriptions for lights and surfaces. Journal of the Optical Society of America A, Optics, Image Science, and Vision. 1993a;10:2148–2165. doi: 10.1364/josaa.10.002148. [PubMed] [DOI] [PubMed] [Google Scholar]

- D’Zmura M, Iverson G. Color constancy: II. Results for two-stage linear recovery of spectral descriptions for lights and surfaces. Journal of the Optical Society of America A, Optics, Image Science, and Vision. 1993b;10:2166–2180. doi: 10.1364/josaa.10.002166. [PubMed] [DOI] [PubMed] [Google Scholar]

- Finlayson GD, Hubel PH, Hordley S. Color by correlation; Paper presented at IS&T/SID Fifth Color Imaging Conference; 6–11; Scottsdale, AZ: 1997. [Google Scholar]

- Fleming RW, Dror RO, Adelson EH. Real-world illumination and the perception of surface reflectance properties. Journal of Vision. 2003;3(5):347–368. doi: 10.1167/3.5.3. http://journalofvision.org/3/5/3/ [PubMed] [Article] [DOI] [PubMed]

- Foley JD, van Dam A, Feiner SK, Hughes JF. Computer graphics: Principles and practice. 2. Reading, MA: Addison-Wesley; 1990. [Google Scholar]

- Freeman WT. Exploiting the generic view assumption to estimate scene parameters. Paper presented at 4th IEEE Intl. Conf. Computer Vision: Berlin; 1993. pp. 347–356. [Google Scholar]

- Funt BV, Drew MS. Color constancy computation in near-Mondrian scenes using a finite dimensional linear model; Paper presented at IEEE Comp. Vis. & Pat. Recog; Ann Arbor, MI. 1988. [Google Scholar]

- Geisler WS. Sequential ideal-observer analysis of visual discriminations. Psychological Review. 1989;96:267–314. doi: 10.1037/0033-295x.96.2.267. [PubMed] [DOI] [PubMed] [Google Scholar]

- Geisler WS, Kersten D. Illusions, perception, and Bayes. Nature Neuroscience. 2002;5:508–510. doi: 10.1038/nn0602-508. [PubMed] [Article] [DOI] [PubMed] [Google Scholar]

- Gilchrist A. Seeing black and white. Oxford: Oxford University Press; 2006. [Google Scholar]

- Gilchrist A, Kossyfidis C, Bonato F, Agostini T, Cataliotti J, Li X, et al. An anchoring theory of lightness perception. Psychological Review. 1999;106:795–834. doi: 10.1037/0033-295x.106.4.795. [PubMed] [DOI] [PubMed] [Google Scholar]

- Green DM, Swets JA. Signal detection theory and psychophysics. New York: John Wiley and Sons; 1966. [Google Scholar]

- Helson H, Michels WC. The effect of chromatic adaptation on achromaticity. Journal of the Optical Society of America. 1948;38:1025–1032. doi: 10.1364/josa.38.001025. [DOI] [PubMed] [Google Scholar]

- Hurvich LM, Jameson D. An opponent-process theory of color vision. Psychological Review. 1957;64:384–404. doi: 10.1037/h0041403. [PubMed] [DOI] [PubMed] [Google Scholar]

- Jaaskelainen T, Parkkinen J, Toyooka S. A vector-subspace model for color representation. Journal of the Optical Society of America A, Optics, Image Science, and Vision. 1990;7:725–730. [Google Scholar]

- Jameson D, Hurvich LM. Color adaptation: Sensitivity, contrast, and afterimages. In: Hurvich LM, Jameson D, editors. Handbook of sensory physiology. VII/4. Berlin: Springer; 1972. pp. 568–581. [Google Scholar]

- Kersten D, Yuille A. Bayesian models of object perception. Current Opinion in Neurobiology. 2003;13:150–158. doi: 10.1016/s0959-4388(03)00042-4. [PubMed] [DOI] [PubMed] [Google Scholar]

- Knill D, Richards W, editors. Perception as Bayesian inference. Cambridge: Cambridge University Press; 1996. [Google Scholar]

- Kraft JM, Brainard DH. Mechanisms of color constancy under nearly natural viewing. Proceedings of the National Academy of Sciences of the United States of America. 1999;96:307–312. doi: 10.1073/pnas.96.1.307. [PubMed] [Article] [DOI] [PMC free article] [PubMed] [Google Scholar]

- Krantz D. A theory of context effects based on cross-context matching. Journal of Mathematical Psychology. 1968;5:1. [Google Scholar]

- Land EH. The retinex. In: Reuck AVSd, Knight J., editors. CIBA foundation symposium on color vision. Boston: Little, Brown and Company; 1964. pp. 217–227. [Google Scholar]

- Land EH. Recent advances in retinex theory. Vision Research. 1986;26:7–21. doi: 10.1016/0042-6989(86)90067-2. [PubMed] [DOI] [PubMed] [Google Scholar]

- Lee PM. Bayesian statistics. London: Oxford University Press; 1989. [Google Scholar]

- Logvinenko A, Menshikova G. Trade-off between achromatic colour and perceived illumination as revealed by the use of pseudoscopic inversion of apparent depth. Perception. 1994;23:1007–1023. doi: 10.1068/p231007. [PubMed] [DOI] [PubMed] [Google Scholar]

- Maloney LT. Evaluation of linear models of surface spectral reflectance with small numbers of parameters. Journal of the Optical Society of America A, Optics, Image Science, and Vision. 1986;3:1673–1683. doi: 10.1364/josaa.3.001673. [PubMed] [DOI] [PubMed] [Google Scholar]

- Maloney LT, Wandell BA. Color constancy: A method for recovering surface spectral reflectances. Journal of the Optical Society of America A, Optics, Image Science, and Vision. 1986;3:29–33. doi: 10.1364/josaa.3.000029. [PubMed] [DOI] [PubMed] [Google Scholar]

- Maloney LT, Yang JN. The illuminant estimation hypothesis and surface color perception. In: Mausfeld R, Heyer D, editors. Colour perception: From light to object. Oxford: Oxford University Press; 2001. pp. 335–358. [Google Scholar]

- Mamassian P, Landy MS, Maloney LT. Bayesian modelling of visual perception. In: Rao RPN, Olshausen BA, Lewicki MS, editors. Probabilistic models of the brain: Perception and neural function. Cambridge, MA: MIT Press; 2002. pp. 13–36. [Google Scholar]

- Marr D. Vision. San Francisco: W. H. Freeman; 1982.

- McCann JJ, McKee SP, Taylor TH. Quantitative studies in retinex theory: A comparison between theoretical predictions and observer responses to the ‘Color Mondrian’ experiments. Vision Research. 1976;16:445–458. doi: 10.1016/0042-6989(76)90020-1.

- Purves D, Lotto RB. Why we see what we do: An empirical theory of vision. Sunderland, MA: Sinauer; 2003.

- Rao RPN, Olshausen BA, Lewicki MS, editors. Probabilistic models of the brain: Perception and neural function. Cambridge, MA: MIT Press; 2002.

- Ripamonti C, Bloj M, Hauck R, Mitha K, Greenwald S, Maloney SI, et al. Measurements of the effect of surface slant on perceived lightness. Journal of Vision. 2004;4(9):747–763. doi: 10.1167/4.9.7. http://journalofvision.org/4/9/7/

- Rutherford MD, Brainard DH. Lightness constancy: A direct test of the illumination-estimation hypothesis. Psychological Science. 2002;13:142–149. doi: 10.1111/1467-9280.00426.

- Shevell SK. The dual role of chromatic backgrounds in color perception. Vision Research. 1978;18:1649–1661. doi: 10.1016/0042-6989(78)90257-2.

- Speigle JM, Brainard DH. Luminosity thresholds: Effects of test chromaticity and ambient illumination. Journal of the Optical Society of America A, Optics, Image Science, and Vision. 1996;13:436–451. doi: 10.1364/josaa.13.000436.

- Speigle JM, Brainard DH. Predicting color from gray: The relationship between achromatic adjustment and asymmetric matching. Journal of the Optical Society of America A, Optics, Image Science, and Vision. 1999;16:2370–2376. doi: 10.1364/josaa.16.002370.

- Stiles WS. Mechanism concepts in colour theory. Journal of the Colour Group. 1967;11:106–123.

- Stocker AA, Simoncelli EP. Noise characteristics and prior expectations in human visual speed perception. Nature Neuroscience. 2006;9:578–585. doi: 10.1038/nn1669.

- von Helmholtz H. Treatise on physiological optics. 2nd ed. Southall JPC, editor. New York: Dover; 1962. (Original work published 1866)

- von Kries J. Chromatic adaptation. In: MacAdam DL, editor. Sources of color vision. Cambridge, MA: MIT Press; 1970. pp. 109–119. (Original work published 1902)

- Walraven J. Discounting the background: The missing link in the explanation of chromatic induction. Vision Research. 1976;16:289–295. doi: 10.1016/0042-6989(76)90112-7.

- Wandell BA. The synthesis and analysis of color images. IEEE Transactions on Pattern Analysis and Machine Intelligence. 1987;9:2–13. doi: 10.1109/tpami.1987.4767868.

- Webster MA, Mollon JD. Colour constancy influenced by contrast adaptation. Nature. 1995;373:694–698. doi: 10.1038/373694a0.

- Weiss Y, Simoncelli EP, Adelson EH. Motion illusions as optimal percepts. Nature Neuroscience. 2002;5:598–604. doi: 10.1038/nn0602-858.

- Williams SM, McCoy AN, Purves D. An empirical explanation of brightness. Proceedings of the National Academy of Sciences of the United States of America. 1998;95:13301–13306. doi: 10.1073/pnas.95.22.13301.

- Wyszecki G. Color appearance. In: Boff KR, Kaufman L, Thomas JP, editors. Handbook of perception and human performance: Sensory processes and perception. Vol. 1. New York: John Wiley & Sons; 1986. pp. 9.1–9.56.

- Wyszecki G, Stiles WS. Color science: Concepts and methods, quantitative data and formulae. 2nd ed. New York: John Wiley & Sons; 1982.

- Xiao B, Brainard DH. Color perception of 3D objects: Constancy with respect to variation in surface gloss. Paper presented at ACM Symposium on Applied Perception in Graphics and Visualization; Boston, MA. 2006.

- Yang JN, Maloney LT. Illuminant cues in surface color perception: Tests of three candidate cues. Vision Research. 2001;41:2581–2600. doi: 10.1016/s0042-6989(01)00143-2.

- Zhang X, Brainard DH. Bayesian color-correction method for non-colorimetric digital image sensors. Paper presented at 12th IS&T/SID Color Imaging Conference; Scottsdale, AZ. 2004. pp. 308–314.