Abstract

Rats chose between alternatives that differed in the number of reinforcers and in the delay to each reinforcer. A left leverpress led to two reinforcers, each delivered after a fixed delay. A right leverpress led to one reinforcer after an adjusting delay. The adjusting delay was increased or decreased many times in a session, depending on the rat's choices, in order to estimate an indifference point—a delay at which the two alternatives were chosen about equally often. Both the number of reinforcers and their individual delays affected the indifference points. The overall pattern of results was well described by the hyperbolic-decay model, which states that each additional reinforcer delivered by an alternative increases preference for that alternative but that a reinforcer's effect is inversely related to its delay. Two other possible delay-discounting equations, an exponential equation and a reciprocal equation, did not produce satisfactory predictions for these data. Adding an additional free parameter to the hyperbolic equation as an exponent for delay did not appreciably improve the predictions, suggesting that raising delay to some power other than 1.0 was unnecessary. The results were qualitatively similar to those from a previous experiment with pigeons (Mazur, 1986), but quantitative differences suggested that the rates of delay discounting were several times slower for rats than for pigeons.

When two alternatives differ in both the delay to reinforcement and the number of reinforcers delivered per trial, both of these factors can affect an animal's choices. This is not very surprising, but there have been several different proposals about exactly how these two factors combine to determine preference. In applying delay reduction theory to concurrent-chain schedules with more than one reinforcer in a terminal link, Squires and Fantino (1971) proposed that the delay to the first reinforcer is critical and that additional reinforcers affect preference only to the extent that they increase the overall rate of reinforcement. On the basis of his studies with pigeons in concurrent-chain procedures, Moore (1979, 1982) came to a different conclusion. His pigeons showed a preference for the alternative that delivered multiple reinforcers if they were separated by just a few seconds, but not if there were longer delays between the reinforcers. Moore proposed that when several reinforcers are delivered in rapid succession, this may be functionally similar to an increase in the amount of reinforcement and animals may display a preference for this alternative because of its larger amount. However, he argued that when longer delays separate a series of reinforcers, the separate reinforcers will not function as an increase in reinforcer amount and, in these circumstances, the multiple reinforcers will be no more effective than a single reinforcer.

McDiarmid and Rilling (1965) took a different approach to multiple delayed reinforcers. They proposed that if one alternative in a choice situation delivers multiple delayed reinforcers, each reinforcer adds to the total value of that alternative but the effect of each reinforcer is inversely proportional to its delay, as described by the following equation:

$$V = \sum_{i=1}^{n} P_i \left( \frac{1}{D_i} \right) \tag{1}$$

where V is the value of a series of reinforcers, n is the total number of potential reinforcers in the series, Pi is the probability that reinforcer i will be delivered, and Di is the delay between the choice response and reinforcer i. In a choice situation, an animal will choose whichever alternative has the larger V. This position is distinctly different from the proposals of Squires and Fantino (1971) and Moore (1979), because instead of attributing the effects of multiple reinforcers to changes in the rate or amount of reinforcement, it assumes that each reinforcer has an independent effect that depends on its delay. McDiarmid and Rilling found that Equation 1 could predict the choices of their pigeons in a discrete-trial procedure that involved alternatives with different delays and numbers of reinforcers. Later, Shull and Spear (1987) applied this equation to pigeons' choices in a concurrent-chain procedure with multiple reinforcers in one terminal link, and the equation accounted for their data at an ordinal level, although some departures from the exact quantitative predictions were observed.
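Equation 1 can be illustrated with a minimal Python sketch. The function name `mcdiarmid_rilling_value` and the example delays (two certain reinforcers at 5 and 10 sec, compared with a single certain reinforcer at 5 sec) are hypothetical choices for illustration. They are not conditions from McDiarmid and Rilling (1965).

```python
def mcdiarmid_rilling_value(probs, delays):
    """Equation 1: V = sum_i P_i / D_i (delays in seconds, all > 0)."""
    if len(probs) != len(delays):
        raise ValueError("probs and delays must have the same length")
    if any(d <= 0 for d in delays):
        raise ValueError("Equation 1 is undefined for a zero delay")
    return sum(p / d for p, d in zip(probs, delays))


# Hypothetical example: two certain reinforcers, 5 and 10 sec after the choice.
v_two = mcdiarmid_rilling_value([1.0, 1.0], [5.0, 10.0])
# One certain reinforcer after 5 sec, for comparison.
v_one = mcdiarmid_rilling_value([1.0], [5.0])
print(v_two, v_one)
```

Because the alternative with the larger V is assumed to be chosen, this sketch favors the two-reinforcer series (V = 0.3) over the single reinforcer (V = 0.2). The second reinforcer contributes half as much as the first because its delay is twice as long.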

Mazur (1986) adopted the general approach of McDiarmid and Rilling (1965)—that the total value of a series of reinforcers is the sum of their separate delay-discounted values—but he used a different equation to describe how reinforcer value decreases with increasing delay:

$$V = \sum_{i=1}^{n} P_i \left( \frac{A}{1 + K D_i} \right) \tag{2}$$

where A is a measure of the amount of reinforcement, and K is a decay parameter that determines how quickly V decreases with increases in Di. This equation, known as the hyperbolic-decay model, has been successfully applied to a variety of different choice situations, including self-control choice (Mazur, 1987), choice between fixed and variable reinforcer delays (Mazur, 1984), and choice between certain and uncertain reinforcers (Mazur, 1989; Mazur & Romano, 1992). To test the predictions of Equation 2 for choices between single and multiple delayed reinforcers, Mazur (1986) used an adjusting-delay procedure. The procedure gave pigeons a choice between a standard alternative, which delivered two or three reinforcers after fixed delays (e.g., food deliveries that began 5 and 10 sec after the choice response), and an adjusting alternative (one food delivery after a delay that varied over trials). The delay for the adjusting alternative was systematically increased and decreased over trials, depending on the animal's choices, in order to estimate an indifference point—a delay at which the two alternatives were chosen about equally often. By assuming that at an indifference point, Va = Vs (the value of the adjusting alternative equals the value of the standard alternative), Equation 2 could be used to make predictions for each condition in the experiment. The results of the experiment were well described by Equation 2.
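The indifference-point logic described above can be sketched as follows. The sketch assumes A = 1, all P_i = 1, and K = 1.0, which is the value later reported to describe the pigeon data well. The standard alternative uses the example delays of 5 and 10 sec. The function names are hypothetical. The resulting adjusting delay is simply what this arithmetic yields and is not a value reported by Mazur (1986).

```python
def hyperbolic_value(delays, k, amount=1.0, probs=None):
    """Equation 2: V = sum_i P_i * A / (1 + K * D_i)."""
    if probs is None:
        probs = [1.0] * len(delays)  # certain reinforcers by default
    return sum(p * amount / (1.0 + k * d) for p, d in zip(probs, delays))


def equivalent_single_delay(v, k, amount=1.0):
    """Delay at which one certain reinforcer of size A has value v.

    Solves A / (1 + K * D) = v for D. No nonnegative solution exists
    when v > A, so that case raises an error.
    """
    if not 0.0 < v <= amount:
        raise ValueError("no nonnegative delay gives this value")
    return (amount / v - 1.0) / k


# Standard alternative: food at 5 and 10 sec; K = 1.0 is an assumption here.
v_std = hyperbolic_value([5.0, 10.0], k=1.0)
# Adjusting delay at which V_a = V_s (the predicted indifference point).
d_adj = equivalent_single_delay(v_std, k=1.0)
print(round(v_std, 3), round(d_adj, 2))
```

Under these assumptions the two-reinforcer standard has V_s = 17/66 (about 0.258), which equals the value of a single reinforcer delayed by about 2.88 sec.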

Besides testing the general approach to multiple delayed reinforcers described by Equation 2, Mazur's (1986) experiment was conducted to evaluate whether it was necessary to raise Di in Equation 2 to some exponent other than 1. Several writers have suggested that in order to describe accurately the effects of delayed reinforcers, the time between a response and a reinforcer may need to be scaled by using some exponent, S, that is greater or less than 1 (Davison, 1969; Duncan & Fantino, 1970; Green, Fry, & Myerson, 1994; Killeen, 1968). For instance, Green et al. obtained indifference points from human subjects by giving them a series of hypothetical choices between a large amount of money after a long delay and a smaller amount delivered immediately. In applying the hyperbolic-decay equation to their subjects' indifference points, the authors varied both K and S, and they found that the values of S were significantly different for different age groups. For instance, in one set of conditions, the best-fitting value of S was 0.368 for sixth-grade children, 0.724 for college students, and 5.01 for elderly adults. Green et al. interpreted these large differences in S as indicating different sensitivities to short and long delays among the different age groups.

If delay is raised to some exponent, S, Equation 2 becomes

$$V = \sum_{i=1}^{n} P_i \left( \frac{A}{1 + K D_i^{S}} \right) \tag{3}$$

Variations in S have two main effects. First, larger values of S imply more rapid decreases in reinforcer value as delay increases. Second, varying S can change the shape of the discounting function. For example, if S equals 2 rather than 1 (and suitable adjustments are made in K to keep the two functions as similar as possible), V declines more slowly with short delays but more rapidly with long delays. Because it changes the shape of the discounting function, including S as a free parameter can lead to improved fits to a set of empirical data (adding a free parameter can only leave the fit unchanged or improve it, never make it worse). The question addressed by the present experiment was whether the exponent is really necessary. Mazur (1986) showed that giving animals a series of choices between single and multiple delayed reinforcers provides a particularly sensitive way to determine whether the exponent is necessary, because the predictions of Equation 3 vary substantially depending on the value of S. The pattern of indifference points from the pigeons in that experiment was well described by Equation 3 if S was set to a value of 1, and the predictions were worse if values of S much greater or less than 1 were used. Mazur (1986) therefore concluded that, at least for the pigeons in this procedure, there was no evidence that reinforcer delay needed to be raised to any power other than 1 to describe the relationship between delay and reinforcer value.
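The change in shape can be checked numerically. In this sketch, two single-reinforcer discounting functions (Equation 3 with A = P = 1) are matched so that both give V = 0.5 at a 10-sec delay: S = 1 with K = 0.1, and S = 2 with K = 0.01. Matching at one delay is an assumed way of making the "suitable adjustments" in K, and the parameter values are hypothetical.

```python
def hyperbolic_value_s(delay, k, s):
    """Single-reinforcer form of Equation 3 with A = P = 1: 1 / (1 + K * D**S)."""
    return 1.0 / (1.0 + k * delay ** s)


# Both functions give V = 0.5 at 10 sec (hypothetical matched parameters).
for d in (5.0, 10.0, 20.0):
    v1 = hyperbolic_value_s(d, k=0.1, s=1.0)
    v2 = hyperbolic_value_s(d, k=0.01, s=2.0)
    print(f"D = {d:4.0f} s   S=1: {v1:.3f}   S=2: {v2:.3f}")
```

At 5 sec the S = 2 function is higher (0.800 vs. 0.667), and at 20 sec it is lower (0.200 vs. 0.333). This is the pattern of slower decline at short delays and faster decline at long delays described above.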

This method of adding an exponent to Equation 2 has been used by a number of researchers (Mazur, 1987; Rachlin, 2006; Rodriguez & Logue, 1988). Another approach is to apply S to the entire denominator, so that it becomes $(1 + KD_i)^S$ (e.g., Green et al., 1994; Loewenstein & Prelec, 1992). For the present experiment, Equation 3 was used in order to be consistent with the analysis of Mazur (1986). However, the predictions for this experiment, and how varying S affects those predictions, would not change substantially if the alternate method of adding the exponent were used.

In the present experiment, rats were tested in a procedure similar to that in Mazur (1986). This experiment had two main purposes. The first was to determine whether Equation 3 could predict the results from a different species in choices between single and multiple delayed reinforcers. If it could, the second purpose was to assess whether an exponent other than 1 would be needed to describe the results accurately. An adjusting-delay procedure was used, and a choice of the right lever always delivered one food pellet after an adjusting delay. A choice of the left lever led to the standard alternative, which delivered two food pellets. In one set of conditions, the delay to the first food pellet was x sec, and the delay to the second food pellet was 2x sec (with x varying across conditions). In a second set of conditions, the standard alternative delivered pellets after x and 4x sec. The predictions of the hyperbolic-decay model for this experiment can be obtained as follows. Because neither amount (A) nor probability of reinforcement (Pi) varied in this experiment, Equation 3 can be simplified to

$$V = \sum_{i=1}^{n} \frac{1}{1 + K D_i^{S}} \tag{4}$$

For any pair of standard delays and any values of K and S, Equation 4 can be used to calculate Vs, the value of the standard alternative. Then, assuming that Va = Vs at the indifference point, Equation 4 can be simplified and rewritten to solve for Da, the adjusting delay, as follows:

$$D_a = \left[ \frac{1}{K} \left( \frac{1}{V_s} - 1 \right) \right]^{1/S} \tag{5}$$
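Equations 4 and 5 can be combined into a short prediction routine. This is a sketch that assumes A = 1 and certain reinforcers, as in the present experiment. The function names are hypothetical, and K = 0.073 is borrowed from the group fit reported in the Results only to give a concrete number. Bias correction (multiplying by b) is omitted here.

```python
def standard_value(delays, k, s=1.0):
    """Equation 4: V = sum_i 1 / (1 + K * D_i**S), with A = P_i = 1."""
    return sum(1.0 / (1.0 + k * d ** s) for d in delays)


def adjusting_delay(v_s, k, s=1.0):
    """Equation 5: D_a = ((1 / V_s - 1) / K) ** (1 / S).

    Returns None when V_s > 1, because no nonnegative adjusting delay
    can then match the value of the standard alternative.
    """
    base = (1.0 / v_s - 1.0) / k
    if base < 0:
        return None
    return base ** (1.0 / s)


def predicted_indifference(x, ratio, k, s=1.0):
    """Predicted D_a for standard reinforcers at x and ratio * x sec."""
    return adjusting_delay(standard_value([x, ratio * x], k, s), k, s)


# Condition 1 delays (20 and 40 sec) with K = 0.073 and S = 1.
da = predicted_indifference(20.0, 2.0, k=0.073)
print(round(da, 2))
```

For the Condition 1 delays this returns an adjusting delay of about 7.0 sec. The `None` branch covers the case in which the standard alternative is worth more than an immediate single reinforcer, so that no nonnegative adjusting delay can produce indifference.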

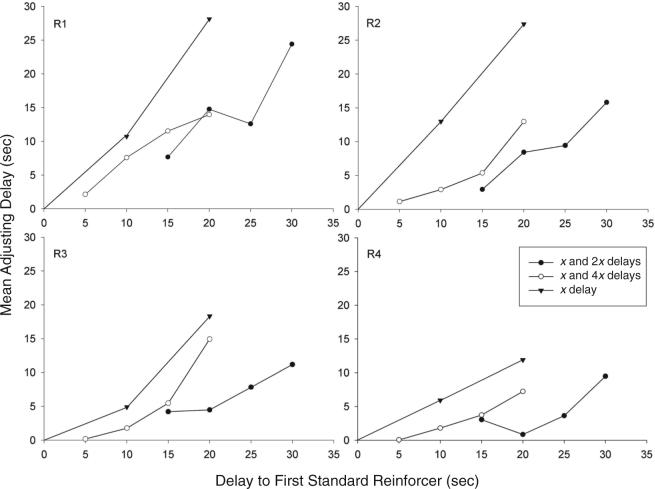

To illustrate how the predictions of the model depend heavily on the value of the exponent, the three panels in Figure 1 show some representative predictions for this experiment, with S set equal to 0.5, 1, and 2, respectively. In this figure, the delay to the first standard reinforcer is plotted on the x-axis, and the predicted adjusting delay is plotted on the y-axis. The solid lines show the predictions for conditions with standard reinforcers delivered after x and 2x sec, and the dashed lines are the predictions for conditions with standard reinforcers delivered after x and 4x sec. As S increases, both the x-intercepts and the slopes of the functions change. The x-intercept shifts to the left with increasing values of S, but this shift is not the most revealing difference, because it can be compensated for by changing the value of K (i.e., lowering the value of K will shift the functions to the right). However, the slopes of the functions are more informative, because after some initial curvature, the functions become nearly linear, and the slopes approach asymptotic values that depend only on S. For example, in the conditions with x and 2x delays, the slopes of the functions approach asymptotes of 0.33, 0.67, and 0.89 when S is equal to 0.5, 1, and 2, respectively. Because these slopes are independent of the value of K, they can be used to determine whether values of S other than 1 are necessary to describe the data accurately.

Figure 1.

Predictions of Equations 4 and 5 for a choice between a standard alternative that delivers two delayed reinforcers and an adjusting alternative that delivers one delayed reinforcer. The delay to the first standard reinforcer is plotted on the x-axis, and the predicted adjusting delay is plotted on the y-axis. The three panels show the predictions with S set equal to 0.5, 1, and 2, respectively.
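The asymptotic slopes described above follow from a large-delay approximation. When $K D^S$ is much larger than 1, $1/(1 + K D^S)$ is approximately $1/(K D^S)$. Substituting this into Equations 4 and 5 for standard delays $x$ and $rx$ cancels $K$ and gives $D_a \approx x(1 + r^{-S})^{-1/S}$. The sketch below evaluates this limit and compares it with a finite-difference slope of the exact prediction at long delays. The function names are hypothetical. For r = 2 the limit is about 0.67 for S = 1 and 0.89 for S = 2. For S = 0.5 it evaluates to about 0.34, a limit that is approached only at very long delays because $K x^{0.5}$ grows slowly.

```python
def asymptotic_slope(ratio, s):
    """Limiting slope of D_a versus x for standard delays x and ratio * x.

    For large delays 1 / (1 + K * D**S) ~ 1 / (K * D**S), so K cancels and
    D_a / x -> (1 + ratio**(-S)) ** (-1 / S).
    """
    return (1.0 + ratio ** (-s)) ** (-1.0 / s)


def numerical_slope(ratio, s, k, x1=1000.0, x2=2000.0):
    """Finite-difference slope of the exact prediction (Equations 4 and 5)."""
    def da(x):
        v_s = 1.0 / (1.0 + k * x ** s) + 1.0 / (1.0 + k * (ratio * x) ** s)
        return ((1.0 / v_s - 1.0) / k) ** (1.0 / s)
    return (da(x2) - da(x1)) / (x2 - x1)


for s in (0.5, 1.0, 2.0):
    print(f"S = {s}: x and 2x -> {asymptotic_slope(2.0, s):.2f}, "
          f"x and 4x -> {asymptotic_slope(4.0, s):.2f}")
```

Because `asymptotic_slope` takes no K argument, the sketch makes explicit why the slopes, unlike the x-intercepts, can diagnose S independently of K.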

METHOD

Subjects

Four male Sprague Dawley rats, about 11 months old at the start of the experiment, were maintained at approximately 80% of their free-feeding weights.

Apparatus

The experimental chamber was a modular test chamber for rats, 30.5 cm long, 24 cm wide, and 21 cm high. The side walls and top of the chamber were Plexiglas, and the front and back walls were aluminum. The floor consisted of steel rods, 0.48 cm in diameter and 1.6 cm apart, center to center. The front wall had two retractable response levers, 11 cm apart, 6 cm above the floor, 4.8 cm long, and extending 1.9 cm into the chamber. Centered on the front wall was a nonretractable lever with the same dimensions, 11.5 cm above the floor. A force of approximately 0.20 N was required to operate each lever, and when a lever was active, each effective response produced a feedback click. Above each lever was a 2-W white stimulus light, 2.5 cm in diameter. A pellet dispenser delivered 45-mg food pellets into a receptacle through a 5.1-cm square opening in the center of the front wall. A 2-W white houselight was mounted at the top center of the rear wall.

The chamber was enclosed in a sound-attenuating box containing a ventilation fan. All the stimuli were controlled and responses recorded by an IBM-compatible personal computer using the Med-state programming language.

Procedure

The rats had previously participated in conditions with an adjusting-delay procedure similar to the one used in this experiment, so no additional training was necessary. The experiment consisted of 10 conditions, which were divided into three phases.

Phase 1 (Conditions 1−4)

Every session lasted for 40 trials or for 60 min, whichever came first. Within a session, each block of 4 trials consisted of 2 forced trials followed by 2 choice trials. At the start of each trial, the houselight was turned off, the light above the center lever was lit, and a response on this lever was required to begin the choice period. On choice trials, after a response on the center lever, the light above this lever was turned off, the two front levers were extended into the chamber, and the lights above the two side levers were turned on. A single response on the left lever constituted a choice of the standard alternative, and a single response on the right lever constituted a choice of the adjusting alternative.

If the adjusting (right) lever was pressed during the choice period, the two side levers were retracted, only the light above the right lever remained on, and there was a delay of adjusting duration (as explained below). At the end of the adjusting delay, the light above the right lever was turned off, one food pellet was delivered, and the chamber was dark for 1 sec. Then the houselight was turned on, and an intertrial interval (ITI) began. For all the adjusting and standard trials, the duration of the ITI was set so that the total time from a choice response to the start of the next trial was 85 sec.

If the standard (left) lever was pressed during the choice period, the two side levers were retracted, and two food pellets were delivered x sec and 2x sec after the choice response, where x varied across conditions. For example, in Condition 1, after a response on the left lever, there was a 20-sec delay with the light above the left lever lit; then the light was turned off, a food pellet was delivered, and the chamber was dark for 1 sec. Next, the light above the left lever was lit for 19 sec; then the second food pellet was delivered (40 sec after the choice response), and the chamber was dark for 1 sec. Finally, the white houselight was lit, and there was an ITI of 44 sec (so the total time from the choice response to the start of the next trial was 85 sec). In Conditions 2−4, the reinforcer delays for the standard alternative were 25 and 50 sec, 30 and 60 sec, and 15 and 30 sec, respectively.

The procedure on the forced trials was the same as that on the choice trials, except that only one lever, left or right, was extended into the chamber after a response on the center lever and only the stimulus light above that lever was lit. A response on this lever led to the same sequence of events as that on choice trials. Of every two forced trials, one involved the left lever and the other the right lever. The temporal order of these two types of trials varied randomly.

After every two choice trials, the duration of the adjusting delay might be changed. If the subject chose the standard lever on both trials, the adjusting delay was decreased by 1 sec. If a subject chose the adjusting lever on both choice trials, the adjusting delay was increased by 1 sec (up to a maximum of 35 sec). If the subject chose each lever on one trial, no change was made. In all three cases, this adjusting delay remained in effect for the next block of four trials. At the start of the first session of a condition, the adjusting delay was 0 sec. At the start of later sessions of the same condition, the adjusting delay was determined by the rules above as if it were a continuation of the preceding session.
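The adjustment rule can be written as a small function. This is a sketch of the rule as described. The string labels, the function name, and the floor of 0 sec are assumptions: the text states a 35-sec maximum but does not state a minimum.

```python
def update_adjusting_delay(delay, choices, step=1.0, maximum=35.0, minimum=0.0):
    """Update the adjusting delay (sec) after one pair of choice trials.

    choices: two labels, each "standard" (left lever) or "adjusting"
    (right lever). The 0-sec floor is an assumption of this sketch.
    """
    n_standard = sum(1 for c in choices if c == "standard")
    if n_standard == 2:      # standard chosen twice: shorten the delay
        return max(minimum, delay - step)
    if n_standard == 0:      # adjusting chosen twice: lengthen, up to the cap
        return min(maximum, delay + step)
    return delay             # one choice of each: no change


delay = 0.0  # value at the start of the first session of a condition
for pair in [("adjusting", "adjusting"), ("adjusting", "adjusting"),
             ("standard", "adjusting"), ("standard", "standard")]:
    delay = update_adjusting_delay(delay, pair)
print(delay)
```

Starting from 0 sec, two pairs of adjusting choices raise the delay to 2 sec, a split pair leaves it at 2 sec, and a pair of standard choices lowers it to 1 sec.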

Phase 2 (Conditions 5−8)

These conditions were similar to those in Phase 1, except that the standard alternative delivered one pellet x sec after a choice response and a second pellet 4x sec after the choice response. In the four conditions, the reinforcer delays for the standard alternative were 5 and 20 sec, 15 and 60 sec, 10 and 40 sec, and 20 and 80 sec, respectively.

Phase 3 (Conditions 9 and 10)

The purpose of these two conditions was to measure any possible biases for the left or right alternative. The procedure was similar to those in the previous phases, except that the standard alternative delivered a single pellet after a fixed delay. The delay was 20 sec in Condition 9 and 10 sec in Condition 10. After a response on the left lever, the two side levers were retracted, and the light above the left lever was lit during the standard delay; then a food pellet was delivered, and the chamber was dark for 1 sec; then the white houselight was lit, and the ITI began.

Stability criteria

Conditions 1−8 lasted for a minimum of 20 sessions, and Conditions 9 and 10 for a minimum of 25 sessions. After the minimum number of sessions, a condition was terminated for each subject individually when several stability criteria were met. To assess stability, each session was divided into two 20-trial blocks, and for each block, the mean adjusting delay was calculated. The results from the first 2 sessions of a condition were not used, and the condition was terminated when the following criteria were met, using the data from all subsequent sessions: (1) Neither the highest nor the lowest single-block mean of a condition could occur in the last six blocks of a condition, (2) the mean adjusting delay across the last six blocks could not be the highest or the lowest six-block mean of the condition, and (3) the mean delay of the last six blocks could not differ from the mean of the preceding six blocks by more than 10% or by more than 1 sec (whichever was larger).
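The three stability criteria can be sketched as a single check on the sequence of half-session block means. Several details are assumptions rather than statements from the text. Ties with an earlier extreme are treated as failures. The "six-block means" in criterion 2 are taken to be the means of every run of six consecutive blocks. The 10% tolerance in criterion 3 is taken relative to the preceding six-block mean. The minimum number of sessions is assumed to be checked separately, and the block means in the example are hypothetical.

```python
from statistics import mean


def is_stable(block_means, min_blocks=12):
    """Sketch of the three stability criteria for one subject and condition.

    block_means: mean adjusting delay (sec) of each 20-trial half-session
    block, in order, with the blocks from the first 2 sessions removed.
    """
    if len(block_means) < min_blocks:
        return False
    last6 = block_means[-6:]
    prev6 = block_means[-12:-6]
    earlier = block_means[:-6]

    # (1) The highest and lowest single-block means must not fall in the
    #     last six blocks (a tie with an earlier extreme counts as failing).
    if max(last6) >= max(earlier) or min(last6) <= min(earlier):
        return False

    # (2) The last six-block mean must be neither the highest nor the lowest
    #     six-block mean (assumed: means of all runs of 6 consecutive blocks).
    window_means = [mean(block_means[i:i + 6])
                    for i in range(len(block_means) - 5)]
    last_mean, others = window_means[-1], window_means[:-1]
    if last_mean >= max(others) or last_mean <= min(others):
        return False

    # (3) The last and preceding six-block means may differ by no more than
    #     10% (assumed: of the preceding mean) or 1 sec, whichever is larger.
    tolerance = max(0.10 * mean(prev6), 1.0)
    return abs(last_mean - mean(prev6)) <= tolerance


example = [20.0, 4.0, 12.0, 10.0, 11.0, 9.0, 10.0, 11.0, 10.0, 9.0, 11.0, 10.0]
print(is_stable(example))  # True for these hypothetical block means
```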

RESULTS

All data analyses were based on the results from the six half-session blocks that satisfied the stability criteria in each condition. For each subject and each condition, the mean adjusting delay from these six half-session blocks was used as a measure of the indifference point.

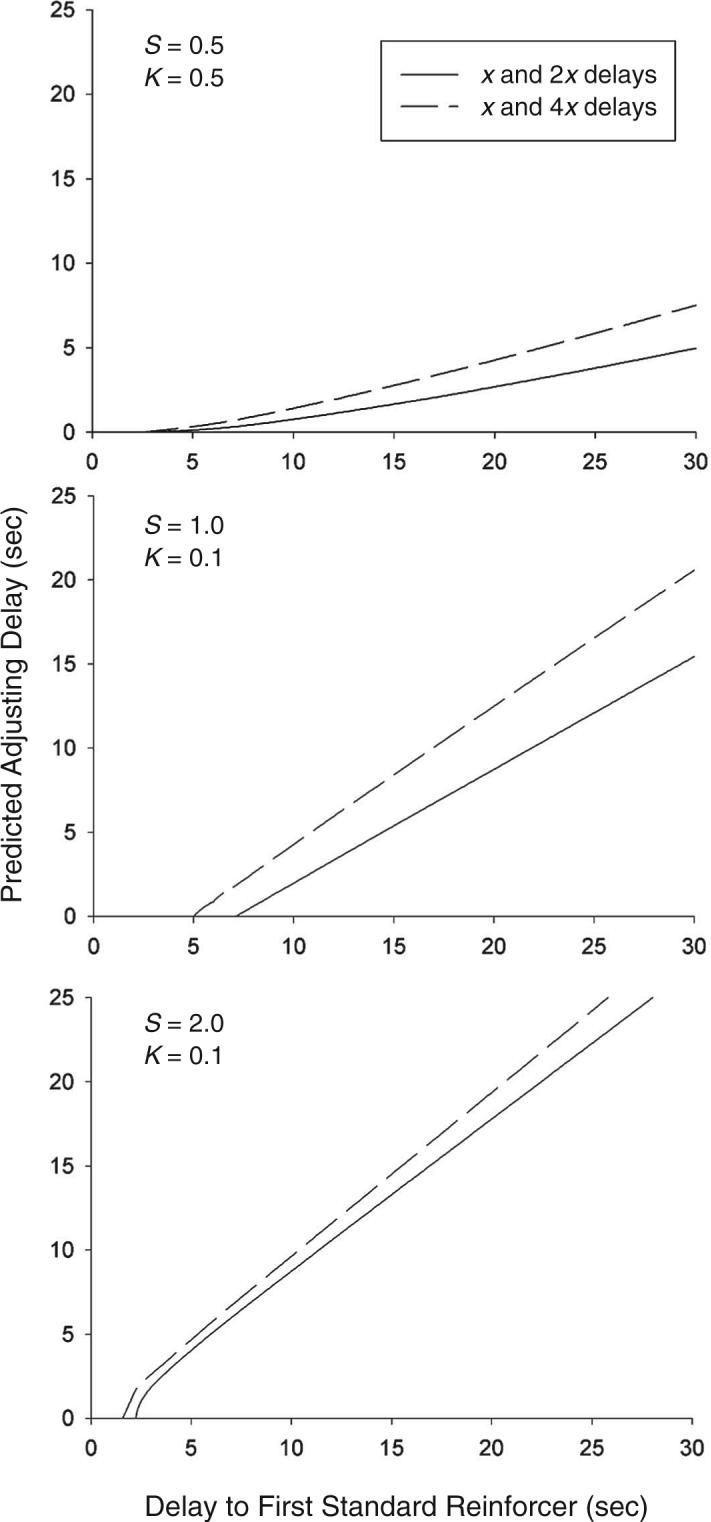

Figure 2 shows the mean adjusting delays from the 10 conditions for each of the 4 rats, plotted as a function of the delay to the first standard reinforcer. As can be seen, the indifference points varied across the three phases of the experiment. Because shorter adjusting delays indicate greater preference for the standard alternative, it was expected that the adjusting delays would be longest in Phase 3 (because there was only one standard reinforcer) and shortest in Phase 1 (because the delay to the second standard reinforcer was shorter than that in Phase 2). This expectation was confirmed, which shows that both the presence of the second standard reinforcer and its temporal location affected the rats' choices.

Figure 2.

For each rat, the mean adjusting delay for each condition, plotted as a function of the delay to the first standard reinforcer.

For each rat, the indifference points from the two conditions with a single reinforcer as the standard alternative were used to obtain an estimate of bias for or against the standard alternative (which could be due to a position preference, for example). If there were no bias, the mean adjusting delays in these two conditions would be equal to the standard delays (10 and 20 sec). However, as is shown in Figure 2, the actual adjusting delays varied among the rats. This bias can be handled in a variety of ways, but for the present experiment, a simple method was used. Data from previous experiments in which an adjusting-delay procedure has been used (e.g., Mazur, 1984) have shown that bias is approximately proportional to the size of the adjusting delay. For instance, an animal that reached an indifference point between a fixed delay of 10 sec and an adjusting delay of 12 sec would also reach an indifference point between a fixed delay of 20 sec and an adjusting delay of about 24 sec. This suggests a bias of 20% toward the adjusting alternative, and to compensate for this bias when a quantitative model is tested, all the predicted indifference points would be increased by 20%.

This was the method used to compensate for bias in the present experiment. To estimate bias for each rat, the equation Da = bDs was fitted to the indifference points from the two conditions that had a single standard reinforcer (where Da is the mean adjusting delay, Ds is the standard delay, and b is the bias parameter). The best-fitting value of b was obtained for each rat, using a least-squares criterion. The values of b are shown for each rat in Table 1.
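For a line through the origin, the least-squares estimate of b has a closed form, $b = \sum D_s D_a / \sum D_s^2$. The sketch below applies it to the hypothetical example given above (indifference at 12 sec against 10 sec and at 24 sec against 20 sec). The function name is hypothetical.

```python
def fit_bias(standard_delays, adjusting_delays):
    """Least-squares b for D_a = b * D_s (a line through the origin).

    Minimizing sum (D_a - b * D_s)**2 gives b = sum(D_s * D_a) / sum(D_s**2).
    """
    num = sum(ds * da for ds, da in zip(standard_delays, adjusting_delays))
    den = sum(ds * ds for ds in standard_delays)
    return num / den


# Hypothetical example from the text: a 20% bias toward the adjusting side.
b = fit_bias([10.0, 20.0], [12.0, 24.0])
print(b)
```

Here b = 600/500 = 1.2, so every predicted indifference point would be multiplied by 1.2.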

Table 1.

Parameter Estimates for b, K, and S and the Percentage of Variance Accounted For (PVAC) When Both K and S Were Varied As Free Parameters and When S Was Set Equal to 1 and Only K Was Varied

| Subject | b (bias) | K (K and S varied) | S (K and S varied) | PVAC (K and S varied) | K (K only varied) | PVAC (K only varied) |
|---|---|---|---|---|---|---|
| R1 | 1.34 | .202 | 0.90 | 85.16 | .114 | 84.00 |
| R2 | 1.37 | .089 | 0.90 | 94.41 | .056 | 92.37 |
| R3 | 0.83 | .047 | 1.11 | 79.50 | .080 | 78.53 |
| R4 | 0.59 | .065 | 1.00 | 73.60 | .065 | 73.60 |
| Group | 1.03 | .085 | 0.97 | 93.93 | .073 | 93.82 |

After estimates of bias were obtained, the best-fitting predictions of the hyperbolic-decay model were obtained for the results from the other eight conditions (those that included two reinforcers for the standard alternative). The predictions were obtained with the same procedure as that used to obtain the predictions shown in Figure 1. First, for a specific set of values for K and S, Equation 4 was used to calculate Vs. Next, assuming that Va = Vs at an indifference point, Equation 5 was used to calculate Da, the equivalent adjusting delay. Lastly, to compensate for bias, each value of Da was multiplied by b to obtain the final predictions of the model.

Two sets of predictions were obtained for each rat, one with both K and S varying as free parameters and another with S held constant at 1. The middle portion of Table 1 shows the best-fitting values of K and S and the percentage of variance accounted for by the model when both were treated as free parameters. The best-fitting values of S were all close to 1, ranging from 0.90 to 1.11. The right portion of the table shows the best-fitting values of K and the percentage of variance accounted for when S was held constant at 1. Keeping S constant had little effect on the goodness of the fits. On average, there was less than a 1% increase in the percentage of variance accounted for when S was treated as a free parameter.
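The prediction and fitting steps can be sketched as below, with S held at 1 by default. This is an illustration under stated assumptions, not the fitting routine used in the article. K is found by a simple grid search (step 0.001), predicted delays are floored at 0 sec when V_s > 1, and PVAC is computed as 100(1 − SSE/SST). The rats' indifference points are reported only in the figures, so the "observed" values here are synthetic, noise-free predictions generated with K = 0.073 and b = 1.03 (the group values in Table 1). The check therefore only confirms that the routine recovers the generating K.

```python
def predict(conditions, k, s=1.0, b=1.0):
    """Equations 4 and 5 for each condition, then multiply by bias b.

    Predictions are floored at 0 sec when V_s > 1 (an assumption of this
    sketch: the adjusting delay cannot be negative).
    """
    preds = []
    for delays in conditions:
        v_s = sum(1.0 / (1.0 + k * d ** s) for d in delays)
        base = max((1.0 / v_s - 1.0) / k, 0.0)
        preds.append(b * base ** (1.0 / s))
    return preds


def pvac(observed, predicted):
    """Percentage of variance accounted for: 100 * (1 - SSE / SST)."""
    m = sum(observed) / len(observed)
    sse = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    sst = sum((o - m) ** 2 for o in observed)
    return 100.0 * (1.0 - sse / sst)


def fit_k(conditions, observed, b, s=1.0):
    """Grid search over K (0.001 to 2.000) minimizing squared error."""
    def sse(k):
        pred = predict(conditions, k, s, b)
        return sum((o - p) ** 2 for o, p in zip(observed, pred))
    best_k = min((i / 1000.0 for i in range(1, 2001)), key=sse)
    return best_k, pvac(observed, predict(conditions, best_k, s, b))


# Standard delays (sec) for the eight two-reinforcer conditions (1-8).
CONDITIONS = [(20, 40), (25, 50), (30, 60), (15, 30),
              (5, 20), (15, 60), (10, 40), (20, 80)]

# Synthetic, noise-free "observations" generated from the model itself.
synthetic = predict(CONDITIONS, k=0.073, s=1.0, b=1.03)
k_hat, fit_pvac = fit_k(CONDITIONS, synthetic, b=1.03)
print(k_hat, round(fit_pvac, 2))
```

Fitting both K and S would extend the same idea to a two-dimensional search. The extra parameter can never lower PVAC, which is why the size of the improvement, rather than its sign, is the informative quantity.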

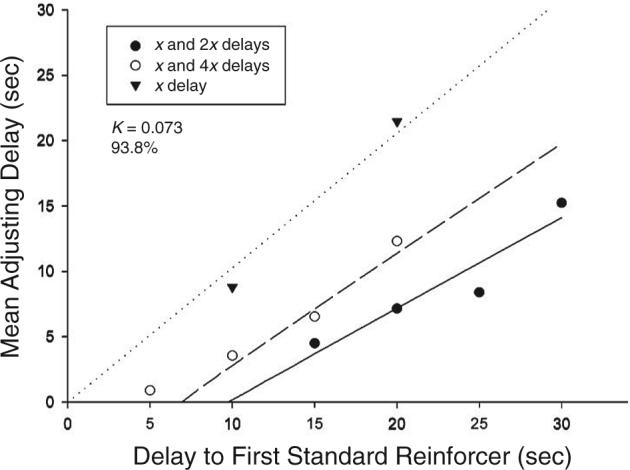

Figure 3 shows the group means from each condition, and the lines are the best-fitting predictions of Equations 4 and 5 when only K was varied as a free parameter. The equations provided good predictions, and with K = 0.073, the one-parameter model accounted for 93.8% of the variance. As is shown in Table 1, when S was also treated as a free parameter, its best-fitting value for the group data was 0.97, and there was an increase of only 0.10% in the percentage of variance accounted for.

Figure 3.

Mean adjusting delay for the group for each condition, plotted as a function of the delay to the first standard reinforcer. The lines are the best-fitting predictions of Equations 4 and 5, with S held constant at 1 and with K varying as a free parameter.

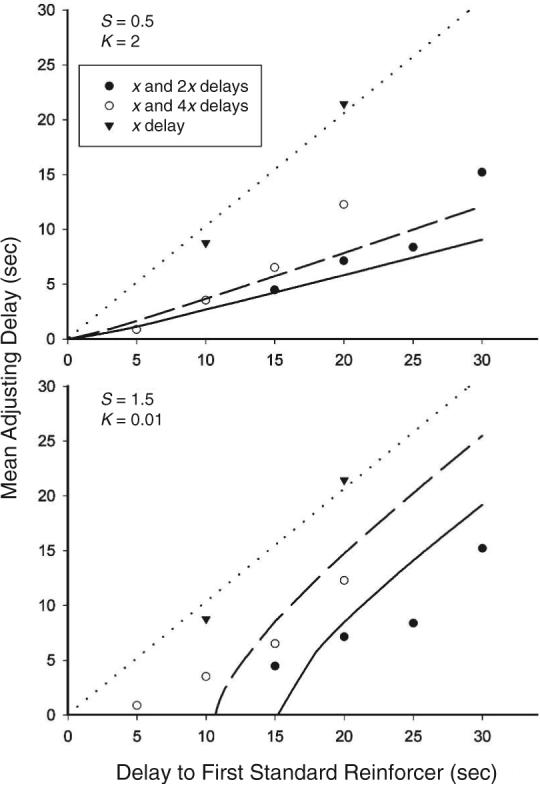

Not only does adding S as a free parameter do little to improve the predictions of the model, but also, if values of S very different from 1 are used, there are large and systematic errors in the predictions. To illustrate this point, Equations 4 and 5 were used to obtain two additional sets of predictions, with S equal to 0.5 and 1.5, respectively. As has already been explained, the slopes of the predicted functions vary greatly depending on the value of S. As is shown in Figure 4, with S = 0.5, the predicted slopes are too shallow to describe the data accurately, and with S = 1.5, the predicted slopes are too steep. These results, combined with the results shown in Table 1, indicate that the best predictions for both the individual rats and the group means were obtained with S equal to or close to 1.0.

Figure 4.

Group means from the experiment compared with the predictions of Equations 4 and 5, with S equal to 0.5 (top panel) and 1.5 (bottom panel).

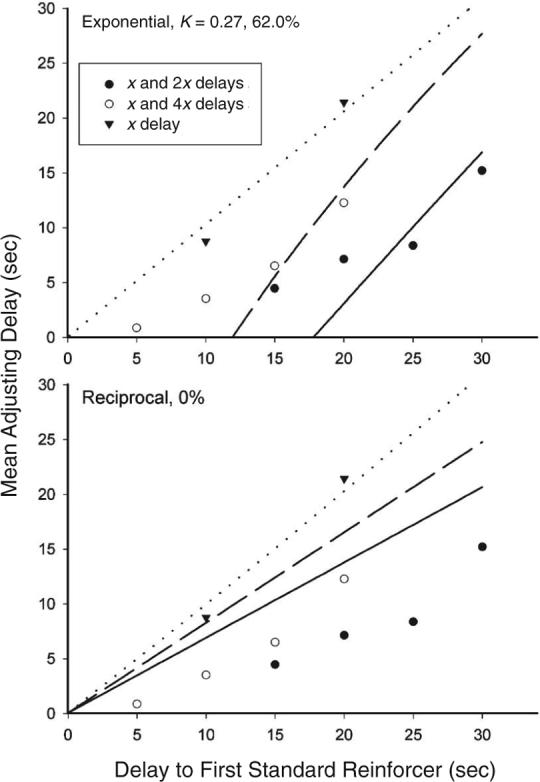

The results from this experiment can also be used to test the predictions of two other functions that have been proposed as potential discounting functions for delayed reinforcers: the exponential and the reciprocal equations. To apply the exponential equation to the results of the present experiment, it can be written as follows:

$$V = \sum_{i=1}^{n} e^{-K D_i} \tag{6}$$

Equation 6 was applied to the group means from this experiment, with K varying as a free parameter to find the best least-squares fit. Ignoring any bias, Equation 6 predicts that the indifference functions should have slopes that are greater than 1.0 and approach 1.0 as an asymptote. (However, because the estimated bias parameter for the group was 1.03, this bias estimate was included in calculating the best fits for both the exponential and the reciprocal equations, just as was done for the hyperbolic equation.) As is shown in the top panel of Figure 5, the exponential equation does not produce satisfactory fits to the data, because the actual slopes were much less than 1.0.

Figure 5.

Group means from the experiment compared with the predictions of an exponential equation (top panel) and a reciprocal equation (bottom panel). The percentage of variance accounted for by each equation is shown.
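The claim that the exponential equation predicts slopes greater than 1.0 that approach 1.0 can be checked with a sketch that ignores bias. Setting $e^{-K D_a}$ equal to $V_s$ gives $D_a = -\ln(V_s)/K$. For standard delays $x$ and $2x$ the exact slope is $(1 + 2e^{-Kx})/(1 + e^{-Kx})$, which exceeds 1 and falls toward 1 as $x$ grows. K = 0.1 and the function names are hypothetical.

```python
import math


def exponential_indifference(x, ratio, k):
    """Exponential discounting (Equation 6) with standard delays x and ratio * x.

    V_s = exp(-K x) + exp(-K ratio x); setting exp(-K D_a) = V_s gives
    D_a = -ln(V_s) / K (a negative value means no indifference point exists).
    """
    v_s = math.exp(-k * x) + math.exp(-k * ratio * x)
    return -math.log(v_s) / k


def local_slope(x, ratio, k, h=1e-4):
    """Central-difference slope of D_a with respect to x."""
    return (exponential_indifference(x + h, ratio, k)
            - exponential_indifference(x - h, ratio, k)) / (2 * h)


for x in (5.0, 20.0, 80.0):
    print(x, round(local_slope(x, 2.0, k=0.1), 4))
```

The printed slopes decrease from about 1.38 at 5 sec toward 1.0, in contrast with the observed slopes, which were well below 1.0.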

The reciprocal equation, as proposed by McDiarmid and Rilling (1965), can include K as a discounting-rate parameter, as in the other equations:

$$V = \sum_{i=1}^{n} \frac{1}{K D_i} \tag{7}$$

However, the value of K has no effect on the predictions of the reciprocal equation for this choice situation. For all values of K, Equation 7 predicts linear functions with y-intercepts of zero and with a slope of 0.67 for the conditions with reinforcer delays of x and 2x and a slope of 0.80 for the conditions with delays of x and 4x. The bottom panel of Figure 5 shows that these predictions were not consistent with the data: The actual adjusting delays were substantially smaller than was predicted in all eight conditions with two standard reinforcers. Therefore, neither the exponential nor the reciprocal equation made predictions that were consistent with the results of this experiment.
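The insensitivity of the reciprocal equation to K can be confirmed directly. With $V = \sum 1/(K D_i)$, the value of the standard alternative is proportional to $1/K$, so setting $1/(K D_a)$ equal to $V_s$ cancels K. The sketch below (function name hypothetical) returns the same adjusting delays for very different values of K: 2/3 and 4/5 of a 10-sec first delay, matching the predicted slopes of 0.67 and 0.80 and intercepts of zero.

```python
def reciprocal_indifference(delays, k):
    """Reciprocal discounting (Equation 7): V = sum 1 / (K * D_i).

    Setting 1 / (K * D_a) equal to V_s gives D_a = 1 / (K * V_s); because
    V_s is proportional to 1 / K, K cancels out.
    """
    v_s = sum(1.0 / (k * d) for d in delays)
    return 1.0 / (k * v_s)


for k in (0.05, 1.0, 20.0):
    print(k, round(reciprocal_indifference([10.0, 20.0], k), 3),
          round(reciprocal_indifference([10.0, 40.0], k), 3))
```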

DISCUSSION

In this experiment, the indifference points from the conditions with x and 2x delays were shorter than those from the conditions with x and 4x delays, which were, in turn, shorter than those from the conditions with a single reinforcer as the standard alternative. These results are not consistent with the suggestion that only the first reinforcer in the sequence is effective (Squires & Fantino, 1971) or with the suggestion that additional reinforcers are effective only if they occur in close proximity to the first reinforcer (Moore, 1979). The results are consistent with the proposal that when one alternative delivers two delayed reinforcers, both reinforcers add to the total value of that alternative, and that their contributions depend on their individual delays, as measured from the moment that the choice response is made (McDiarmid & Rilling, 1965).

Although the general approach to multiple delayed reinforcers suggested by McDiarmid and Rilling (1965) was supported, the simple reciprocal equation they proposed to describe the relation between delay and reinforcer value was not. Figure 5 shows that the predictions of the reciprocal equation were not accurate, and as has already been noted, adding K as a free parameter does not help, because varying K has no effect on the predictions of this equation. Figure 5 also shows that the exponential equation, with K varying as a free parameter, cannot account for the pattern of results either. In contrast, the hyperbolic-decay equation described the results well, accounting for 93.8% of the variance in the group means and for an average of 82.1% of the variance in the data from the individual rats. These results are consistent with the findings of Mazur (1987), who found support for the predictions of the hyperbolic equation, but not for the reciprocal or exponential equations, in an experiment on pigeons' choices between reinforcers differing in delay and amount.

One main purpose of this experiment was to determine whether delay should be raised to some exponent other than 1.0 in the hyperbolic equation. The experiment provided a sensitive test of this possibility because the predicted slopes of the indifference functions are heavily dependent on the value of the exponent S in Equation 4. The results suggested that the addition of the exponent was unnecessary in this experiment with rats. Of course, adding a free parameter can never make the fits to the data worse; but when S was included as a free parameter, (1) the increases in the percentage of variance accounted for were negligible, and (2) the best-fitting values of S were close to 1.0 for all the rats (ranging from 0.90 to 1.11). This consistency of values for S is in striking contrast to estimates obtained from studies with human subjects, where values of the exponent have varied by a factor of 10 or more (e.g., Green et al., 1994).

All of these conclusions are similar to those of Mazur (1986), who conducted a similar experiment with pigeons, using the same adjusting-delay procedure and similar choices, except that the standard alternative included two delayed reinforcers in some conditions and three delayed reinforcers in other conditions. However, one aspect of the results that differed between the rats in this experiment and the pigeons in Mazur (1986) was the value of the discounting parameter, K. For the pigeons, the data were well described with K equal to 1.0, a value that has also worked well in other experiments with pigeons (e.g., Mazur, 1984). In the present experiment, the best-fitting values of K were much lower for all 4 rats (M = 0.10). This difference is consistent with other data suggesting that the rate of temporal discounting for rats is several times slower than that for pigeons (Mazur, 2000; Richards, Mitchell, de Wit, & Seiden, 1997).

In summary, the results from both pigeons (Mazur, 1986) and rats (the present experiment) suggest that no exponent for delay is needed in the hyperbolic-decay equation. How can these results be reconciled with those from studies with human subjects, where curve-fitting procedures have yielded large individual differences in the values of S (e.g., Green et al., 1994; Myerson & Green, 1995; Ohmura, Takahashi, Kitamura, & Wehr, 2006)? There are, of course, many procedural differences between the studies with human and nonhuman subjects (money rather than food as a reinforcer, hypothetical questions that are asked just once rather than repeated trials with actual reinforcers, delays of months or years rather than seconds). Do the large variations in S found with human subjects actually reflect a nonlinear scaling of delay (with very large individual differences), or could they be procedural or measurement artifacts? It would be interesting to give human subjects choices between one and two delayed reinforcers, varying the delays to obtain indifference functions analogous to those in the present experiment. If the large variations in S that have been found with human subjects actually reflect individual differences in the scaling of delay, these differences in S should lead to large and predictable variations in the slopes of the indifference functions when people choose between single and multiple delayed reinforcers.
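The proposed test can be sketched as a fitting exercise. The Python sketch below again assumes that Equation 4 has the form V = A/(1 + KD^S). It generates synthetic, noise-free indifference points for a simulated subject with hypothetical values K = 1.0 and S = 0.5, then recovers K and S by grid search, scoring each candidate by the percentage of variance accounted for (using one common definition, 100 × (1 - SS_res/SS_tot)). The design (two reinforcers at D and D + 10 or D + 20 s), the grids, and all function names are illustrative assumptions; with real, noisy data a finer search or a numerical optimizer would likely be preferable.

```python
import itertools
import math

# Assumed form of Equation 4: V = A / (1 + K * D**S), with A = 1.
def value(d, k, s):
    return 1.0 / (1.0 + k * d ** s)

def adjusting_delay(delays, k, s):
    """Predicted indifference point, or NaN if no positive delay exists."""
    total = sum(value(d, k, s) for d in delays)
    if total >= 1.0:
        return math.nan
    return ((1.0 / total - 1.0) / k) ** (1.0 / s)

def percent_vaf(observed, predicted):
    """Percentage of variance accounted for: 100 * (1 - SS_res / SS_tot)."""
    mean = sum(observed) / len(observed)
    ss_tot = sum((o - mean) ** 2 for o in observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    return 100.0 * (1.0 - ss_res / ss_tot)

def fit_k_s(conditions, observed, k_grid, s_grid):
    """Grid search for the (K, S) pair with the highest %VAF."""
    best = None
    for k, s in itertools.product(k_grid, s_grid):
        predicted = [adjusting_delay(c, k, s) for c in conditions]
        if any(math.isnan(p) for p in predicted):
            continue  # this (K, S) predicts no indifference point somewhere
        vaf = percent_vaf(observed, predicted)
        if best is None or vaf > best[0]:
            best = (vaf, k, s)
    return best

# Hypothetical design: two reinforcers at D and D + gap seconds.
conditions = [[d, d + gap] for gap in (10.0, 20.0) for d in (5.0, 10.0, 15.0, 20.0)]
# Synthetic, noise-free indifference points for a simulated subject with
# K = 1.0 and S = 0.5 (illustrative values, not data from any study).
observed = [adjusting_delay(c, 1.0, 0.5) for c in conditions]

k_grid = [i / 10 for i in range(1, 21)]  # K from 0.1 to 2.0
s_grid = [i / 20 for i in range(5, 41)]  # S from 0.25 to 2.0
free_s = fit_k_s(conditions, observed, k_grid, s_grid)
fixed_s = fit_k_s(conditions, observed, k_grid, [1.0])
print("S free (VAF, K, S):", free_s)
print("S fixed at 1.0 (VAF, K, S):", fixed_s)
```

In this simulation the free-S fit recovers the simulated K = 1.0 and S = 0.5 with 100% of the variance accounted for, while fixing S at 1.0 fits worse, which is the kind of difference the proposed experiment would look for in the slopes of human indifference functions.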

AUTHOR NOTE

This research was supported by Grant MH 38357 from the National Institute of Mental Health. I thank Dawn Biondi, Maureen Lapointe, and Michael Lejeune for their assistance in this research.

REFERENCES

- Davison MC. Preference for mixed-interval versus fixed-interval schedules. Journal of the Experimental Analysis of Behavior. 1969;12:247–252. doi: 10.1901/jeab.1969.12-247.

- Duncan B, Fantino E. Choice for periodic schedules of reinforcement. Journal of the Experimental Analysis of Behavior. 1970;14:73–86. doi: 10.1901/jeab.1970.14-73.

- Green L, Fry AF, Myerson J. Discounting of delayed rewards: A life-span comparison. Psychological Science. 1994;5:33–36.

- Killeen P. On the measurement of reinforcement frequency in the study of preference. Journal of the Experimental Analysis of Behavior. 1968;11:263–270. doi: 10.1901/jeab.1968.11-263.

- Loewenstein G, Prelec D. Anomalies in intertemporal choice: Evidence and an interpretation. In: Loewenstein G, Elster J, editors. Choice over time. Russell Sage Foundation; New York: 1992. pp. 119–146.

- Mazur JE. Tests of an equivalence rule for fixed and variable reinforcer delays. Journal of Experimental Psychology: Animal Behavior Processes. 1984;10:426–436.

- Mazur JE. Choice between single and multiple delayed reinforcers. Journal of the Experimental Analysis of Behavior. 1986;46:67–77. doi: 10.1901/jeab.1986.46-67.

- Mazur JE. An adjusting procedure for studying delayed reinforcement. In: Commons ML, Mazur JE, Nevin JA, Rachlin H, editors. Quantitative analyses of behavior: Vol. 5. The effect of delay and of intervening events on reinforcement value. Erlbaum; Hillsdale, NJ: 1987. pp. 55–73.

- Mazur JE. Theories of probabilistic reinforcement. Journal of the Experimental Analysis of Behavior. 1989;51:87–99. doi: 10.1901/jeab.1989.51-87.

- Mazur JE. Tradeoffs among delay, rate, and amount of reinforcement. Behavioural Processes. 2000;49:1–10. doi: 10.1016/s0376-6357(00)00070-x.

- Mazur JE, Romano A. Choice with delayed and probabilistic reinforcers: Effects of variability, time between trials, and conditioned reinforcers. Journal of the Experimental Analysis of Behavior. 1992;58:513–525. doi: 10.1901/jeab.1992.58-513.

- McDiarmid CG, Rilling ME. Reinforcement delay and reinforcement rate as determinants of schedule preference. Psychonomic Science. 1965;2:195–196.

- Moore J. Choice and number of reinforcers. Journal of the Experimental Analysis of Behavior. 1979;32:51–63. doi: 10.1901/jeab.1979.32-51.

- Moore J. Choice and multiple reinforcers. Journal of the Experimental Analysis of Behavior. 1982;37:115–122. doi: 10.1901/jeab.1982.37-115.

- Myerson J, Green L. Discounting of delayed rewards: Models of individual choice. Journal of the Experimental Analysis of Behavior. 1995;64:263–276. doi: 10.1901/jeab.1995.64-263.

- Ohmura Y, Takahashi T, Kitamura N, Wehr P. Three-month stability of delay and probability discounting measures. Experimental & Clinical Psychopharmacology. 2006;14:318–328. doi: 10.1037/1064-1297.14.3.318.

- Rachlin H. Notes on discounting. Journal of the Experimental Analysis of Behavior. 2006;85:425–435. doi: 10.1901/jeab.2006.85-05.

- Richards JB, Mitchell SH, de Wit H, Seiden LS. Determination of discount functions in rats with an adjusting-amount procedure. Journal of the Experimental Analysis of Behavior. 1997;67:353–366. doi: 10.1901/jeab.1997.67-353.

- Rodriguez ML, Logue AW. Adjusting delay to reinforcement: Comparing choice in pigeons and humans. Journal of Experimental Psychology: Animal Behavior Processes. 1988;14:105–117.

- Shull RL, Spear DJ. Detention time after reinforcement: Effects due to delay of reinforcement? In: Commons ML, Mazur JE, Nevin JA, Rachlin H, editors. Quantitative analyses of behavior: Vol. 5. The effect of delay and of intervening events on reinforcement value. Erlbaum; Hillsdale, NJ: 1987. pp. 187–204.

- Squires N, Fantino E. A model for choice in simple concurrent and concurrent-chains schedules. Journal of the Experimental Analysis of Behavior. 1971;15:27–38. doi: 10.1901/jeab.1971.15-27.