Abstract

Some brain-damaged patients (prosopagnosics) have difficulty recognizing faces but can still recognize facial expressions. The dissociation between these two face-related skills has served as a keystone of models of face processing. We now report that the presence of a facial expression can influence face identification. For normal viewers, the presence of a facial expression impairs performance, whereas for prosopagnosic patients it improves performance dramatically. Thus, although prosopagnosic patients fail to process the facial configuration for the purpose of face identification, that ability returns when the face shows an emotional expression. Accompanying brain-imaging results show activation in brain areas (amygdala, superior temporal sulcus, parietal cortex) outside the occipitotemporal areas that are normally activated during face identification and are lesioned in these patients. This finding suggests that these areas play a modulatory role in face identification that is independent of the occipitotemporal face areas.

Keywords: face recognition, amygdala, configural processes, inversion effect, emotion

Patients with prosopagnosia are unable to recognize people by their faces (1), but their recognition of facial expressions appears to be preserved. This dissociation has been a major contribution of lesion studies to standard models of normal face processing (2). Here, we report that, in a matching task in which the identity of face parts is the critical variable, the performance of prosopagnosic patients improves dramatically in the presence of a facial expression, whereas that of normal viewers deteriorates. More specifically, prosopagnosic patients fail to process the facial configuration, but, surprisingly, that ability returns when the face displays an emotional expression. These findings are consistent with evidence that facial expressions modulate visual processes in normal observers (3–6).

We show this modulatory role of facial expressions in prosopagnosic patients, and at the level at which the facial configuration is processed. This finding challenges accepted models of face processing but is nevertheless consistent with (i) our present understanding of the functional role of the brain areas involved in face perception, (ii) recent findings on residual skills for processing the facial configuration in prosopagnosics, (iii) findings of a relatively early time course for the processing of facial expressions, and (iv) the fact that the ability to process the facial configuration is important not only for face identity but also for recognizing facial expressions.

First, a keystone of the functional explanation of prosopagnosia is a configural deficit, defined as the loss of the ability to treat the face as a whole, or configuration, rather than as a collection of parts. The link between this configural deficit and the neuroanatomy of face identification and prosopagnosia, however, remains unclear. On the one hand, the lesions giving rise to face deficits vary substantially. Bilateral occipitotemporal damage is the most frequent pattern, but cases involving unilateral (left or right) occipitotemporal damage, as well as damage outside those areas, have been reported (7). On the other hand, in recent brain-imaging studies (8–11), the relation between brain activation and the functional role of face areas in normal subjects is not yet understood in sufficient detail to provide strong constraints. For example, the separate processes in which the facial configuration plays a critical role (face detection, structural encoding, categorization, or identification) cannot yet be assigned selectively to one brain area or network of areas. Moreover, the hemodynamic response is relatively slow, which complicates inferences about the separate subprocesses deemed critical in cognitive models.

Second, the configural deficit of prosopagnosics can manifest itself in different ways. The most familiar pattern is that patients attend only to parts of the face, but another pattern, with consequences opposite to a simple loss of configural face processing, has also been reported. Whereas normal viewers recognize upright faces better than upside-down ones (the inversion effect), many prosopagnosic patients no longer show this orientation sensitivity and focus only on face parts irrespective of the image's orientation. In contrast, some other patients have been reported to identify upside-down faces more easily than upright ones, a pattern referred to as the paradoxical inversion effect (12, 13). Paradoxical phenomena have also been reported in other studies of the consequences of brain damage, where they result from disinhibition between processing routes rather than from the absolute loss of a skill (12, 14, 15). The paradoxical effects observed in prosopagnosic patients suggest that the configural processes involved in accessing personal identity from the face and the more general configural face skills (involved in face detection on the one hand and in object recognition on the other) may correspond to two different processing routes (16), such that identification can be lost while configural face processing is preserved (17, 18).

Third, slower processing rates due to brain damage can lead to qualitative differences between face processing in normal and damaged brains. The temporal dynamics within the extended face system could thus override the dissociation between person identification and facial-expression recognition familiar from traditional face-recognition models. In normal viewers, the different subprocesses involved in face processing have different time courses. For example, electroencephalogram studies indicate that facial expressions evoke activity as early as 80–100 ms (19), that is, before the stage referred to as structural encoding. In the presence of a deficit, the time courses of person identification and expression recognition may overlap, such that intact resources used for one task can be applied to the other.

Finally, configural processes are needed not only for face identification but also for recognition of facial expressions, as shown by the increased difficulty of recognizing facial expressions presented upside-down, a condition in which perceptual access to the facial configuration is hindered (20, 21). Thus, a deficit in processing the face configurally need not imply a total loss of configural face abilities.

Against this background, our goal was to find evidence for a modulatory role for facial expression in patients suffering from prosopagnosia by using behavioral and brain-imaging methods. For the behavioral study, we predicted that the presence of a facial expression would positively influence identification of parts. For the functional MRI study, we predicted that other areas that are part of the face-processing network (5), aside from the damaged fusiform face area, would show activation specifically for the facial-expression condition and could thus provide an explanation for the facilitation effect observed. We tested these predictions in four experiments. In two preliminary experiments, we tested for residual configural processes and for normal recognition of facial expressions. The critical experiment compared configural processes in a neutral and in an emotional-expression condition by using a task that requires identification of a face part as belonging to one and not another person. The same task was given to participants in a brain-imaging study.

Behavioral Experiments

Participants. All participants gave informed consent to a protocol approved by the institutional review board of the university or hospital concerned. They were paid for their participation.

Normal control subjects were assessed for near acuity (corrected Snellen acuity better than 20/40 was required for participation), visual fields (by confrontation), and handedness (Edinburgh handedness battery). Seven male patients (FJ, GA, KC, MD, MK, RB, and RG) with prosopagnosia were tested. Anatomical MRI data and the neuropsychological data relevant to the present study are presented in Fig. 1b and Table 1. Patients had extensive clinical and neuropsychological assessments, including neuro-ophthalmologic testing of vision and eye movements and Goldmann perimetry. Detailed case information has been provided in previous reports. FJ is a 38-year-old man with developmental prosopagnosia. GA is a 27-year-old man who suffered a head injury at 18 months (see ref. 22; patient 1 in ref. 23); a structural MRI scan did not reveal any brain damage (11). KC is a 59-year-old man who suffered a right medial occipitotemporal stroke (compare patient 5 in ref. 23 and patient 4 in ref. 24). MD is a 38-year-old man who was shot in the occiput at 20 years of age (compare patient 7 in ref. 23 and patient 2 in ref. 24). MK is a 49-year-old man with a right posterior cerebral artery infarct, which affects the fusiform face area, and a left hemianopia. RB is a 68-year-old man who suffered a left occipital stroke 5 years before the present testing. RG is a 41-year-old man with bilateral posterior occipitotemporal lesions sustained in a car accident 20 years earlier, which caused a subdural hematoma.

Fig. 1.

(a) Example of stimuli used in experiment 3. (b) Anatomical MRI scans of the patients. (c) Functional MRI, with all panels, from left to right, in sagittal, coronal, and horizontal views, respectively. (Top) Patient MD. Activation in the right superior parietal lobule (A–C) and in the right superior temporal sulcus (D–F). (Middle) Patient MK. Activation in the right amygdala (A–C) and in the right orbitofrontal cortex (D–F). (Bottom) Control subject MC. Activation in right fusiform gyrus, right superior temporal sulcus, left amygdala, bilateral orbitofrontal gyrus, and left premotor cortex.

Table 1. Overview of patient information.

| Patients | Gender | Age, yr | Site of lesion | Side | Benton Lines | Benton Faces | BNT Spont | BNT Sem | BNT Phon | VOSP Scr | VOSP Let | VOSP Sil | VOSP Obj Dec | VOSP Prog Sil |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| FJ | Male | 38 | N/A | N/A | 28 | 58 | | | | | | | | |
| GA | Male | 27 | N/A | N/A | 30 | 39 | 20 | 19 | 5 | 10 | 14 | | | |
| KC | Male | 59 | Medial occiptemp | Right | 27 | 35 | 60 | 60 | 60 | 20 | 18 | 24 | 17 | |
| MD | Male | 38 | Occiptemp vent | Bilateral | 30 | 32 | 35 | 50 | 58 | 6 | 10 | | | |
| MK | Male | 49 | Post vent occiptemp | Right | 30 | 43 | 54 | 0 | 4 | 20 | 19 | 13 | 19 | 14 |
| RB | Male | 68 | Occiptemp vent | Left | | | | | | | | | | |
| RG | Male | 41 | Occiptemp vent | Bilateral | 21 | 37 | 33 | 43 | 56 | 19 | 20 | 7 | 14 | 20 |

BNT, Boston Naming Test; VOSP, visual object and space perception battery; Spont, spontaneous; Sem, semantic; Phon, phonetic; Scr, scrambled; Let, letter; Sil, silhouettes; Obj Dec, object decision; Prog Sil, progressive silhouettes; N/A, not applicable; Occiptemp, occipitotemporal; Post, posterior; vent, ventral.

Experiment 1: Identification of faces and objects as wholes or by parts. The goal was to test for residual skills in processing the identity of faces and objects with a matching task. Previous research has shown a whole-face advantage: normal viewers perform better in the uncued Whole condition than in the Part condition (16, 25). To probe how useful explicit cueing was for the patients, two instruction conditions were used. In the Whole condition, participants were instructed to match two stimuli by attending to the whole image. In the Part condition, the instructions specified which part of the image was critical for the matching task. Previous research had indicated that, notwithstanding this explicit cue, normal viewers still perform better in the Whole condition, specifically for faces, indicating that the task taps face processes even though matching could in principle be done purely on the basis of physical resemblance (25).

Materials and procedure. Materials consisted of 12 computer-edited gray-scale pictures, 7 cm wide and 9 cm high, of male faces (see ref. 16 for a detailed description) and 12 house stimuli, 8.5 cm wide and 7.5 cm high, prepared in the same manner.

For each type of stimulus (faces and houses), three blocks were presented, each with different instructions. In the Whole condition, participants were requested to attend to the whole image. In the two Part conditions, attention was cued to a prespecified part (the eyes or the mouth for the faces; the window or the dormer for the houses). Each block consisted of 24 trials (12 same and 12 different) and was preceded by four familiarization trials.

Participants performed a self-paced, delayed-matching task. A target picture appeared in the center of the screen for 1,800 ms, followed by a blank screen (125 ms). A probe stimulus was then presented until response. During the intertrial interval (1,600 ms), a fixation point was presented in the center of the screen. Participants were instructed to judge whether the probe stimulus was the same as or different from the target and to respond as quickly and as accurately as possible by pressing the corresponding button.
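The block and trial structure described above can be sketched as follows. Only the event durations and trial counts come from the text; the randomization of trial order and the same/different balance of the familiarization trials are assumptions, and the names (`Trial`, `make_block`, `trial_duration_ms`) are hypothetical.

```python
import random
from dataclasses import dataclass

# Event durations reported for Experiment 1 (ms)
TARGET_MS = 1800  # target picture
BLANK_MS = 125    # blank screen between target and probe
ITI_MS = 1600     # fixation point during the intertrial interval


@dataclass
class Trial:
    instruction: str  # "whole" or the cued part, e.g. "eyes", "mouth"
    practice: bool    # familiarization trial?
    same: bool        # is the probe identical to the target?


def make_block(instruction, rng, n_test=24, n_practice=4):
    """One block: familiarization trials first, then test trials with
    equal numbers of same and different pairs in shuffled order."""
    test = [Trial(instruction, False, i < n_test // 2) for i in range(n_test)]
    rng.shuffle(test)  # assumption: trial order was randomized
    # Assumption: practice trials are also balanced same/different
    practice = [Trial(instruction, True, i % 2 == 0) for i in range(n_practice)]
    rng.shuffle(practice)
    return practice + test


def trial_duration_ms(response_ms):
    """The probe stays up until response, so trial length depends on RT."""
    return TARGET_MS + BLANK_MS + response_ms + ITI_MS


rng = random.Random(1)
face_blocks = [make_block(instr, rng) for instr in ("whole", "eyes", "mouth")]
```

Because the task is self-paced, total session length is not fixed; each trial lasts 3,525 ms plus the response time.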

Results. As previously reported (25), normal viewers were faster in the Whole than in the Part condition, and this Whole advantage was specific to faces. To analyze the patient data, the two Part conditions were pooled and contrasted with the Whole condition (Table 2). To reduce variability due to excessively long latencies, analyses were done on the 50% fastest responses. All prosopagnosics except MD were significantly slower when they had to attend to the whole face than when cued to a part. Overall, cueing had a significant effect on accuracy (P < 0.01). Reaction times were faster when participants were cued to parts (P < 0.01), and more so for the house stimuli (P < 0.01).
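The trimming and per-patient test described above might look like the sketch below. The text does not say whether the 50% cut was made per condition or over all trials; this sketch assumes per condition and rounds down for odd counts. The function names are hypothetical, and only the t statistic is computed (a one-tailed P value would come from the t distribution with the returned df).

```python
import math
import statistics


def fastest_half(trials):
    """Keep the faster half of (rt_ms, correct) trials, rounded down if odd."""
    ranked = sorted(trials, key=lambda trial: trial[0])
    return ranked[: len(ranked) // 2]


def percent_correct(trials):
    """Accuracy (% correct) over a list of (rt_ms, correct) trials."""
    return 100 * sum(correct for _, correct in trials) / len(trials)


def independent_t(a, b):
    """Student's t for two independent samples with pooled variance.

    Returns (t, df). A one-tailed test of "a slower than b" looks at t > 0.
    """
    na, nb = len(a), len(b)
    pooled = ((na - 1) * statistics.variance(a)
              + (nb - 1) * statistics.variance(b)) / (na + nb - 2)
    t = (statistics.mean(a) - statistics.mean(b)) / math.sqrt(pooled * (1 / na + 1 / nb))
    return t, na + nb - 2


# Made-up trials for illustration only (not data from the study)
whole = [(1620, True), (1410, True), (2300, False), (1500, True)]
part = [(1200, True), (1330, True), (1900, True), (1250, False)]
w_fast, p_fast = fastest_half(whole), fastest_half(part)
t, df = independent_t([rt for rt, _ in w_fast], [rt for rt, _ in p_fast])
```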

Table 2. Experiment 1: Accuracy and reaction times for the delayed-matching task.

| Patients | Faces Whole, % correct | Faces Part, % correct | Houses Whole, % correct | Houses Part, % correct | Faces Whole, ms | Faces Part, ms | Faces Whole—part | Faces P | Houses Whole, ms | Houses Part, ms | Houses Whole—part | Houses P |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Control | 90 | 88 | 91 | 95 | 938 | 1,028 | — | <0.05 | 1,033 | 926 | + | <0.05 |
| KC | 67 (63) | 83 (88) | 100 (88) | 96 (96) | 898 | 782 | + | 0.02 | 1,136 | 771 | + | 0.00 |
| MD | 58 (50) | 83 (75) | 75 (83) | 83 (88) | 1,517 | 1,478 | 0 | 0.36 | 1,727 | 1,330 | + | 0.00 |
| MK | 83 (58) | 96 (92) | 92 (79) | 96 (96) | 1,605 | 1,268 | + | 0.00 | 1,744 | 1,219 | + | 0.00 |
| RB | 100 (75) | 96 (92) | 83 (71) | 100 (94) | 1,913 | 1,727 | + | 0.02 | 2,380 | 1,928 | + | 0.00 |
| RG | 67 (54) | 79 (71) | 83 (63) | 100 (94) | 1,285 | 1,029 | + | 0.00 | 1,504 | 1,078 | + | 0.00 |

Accuracy is for the 50% fastest trials; accuracy for all responses is in parentheses. Reaction times are from the 50% fastest responses. In the “Whole—part” columns, “+” indicates a response-time advantage for the Part instruction, “—” an advantage for the Whole instruction, and “0” a nonsignificant difference. P values are for one-tailed independent t tests, except for the control group, for which they are for one-tailed paired tests. Control data are from Rossion et al. (25) and include all responses.

Discussion. With this experiment, we tested for residual configural processes in the patients by using the whole-face advantage (25). The normal pattern of better performance for whole faces than in the cued-part condition was not observed in any of the patients, indicating that they did not process faces configurally. This result is consistent with the standard explanation of prosopagnosia as a deficit in processing the facial configuration (26). In contrast with the normal pattern, patients were somewhat worse in the Whole condition, as expected if part-based processing is the only procedure available to them. Finally, a similar pattern of results was obtained across the whole patient group, indicating that the site of the lesion (left, right, or bilateral) was not a critical variable.

Experiment 2: Matching facial expressions. Materials consisted of gray-scale photographs of 14 men and 14 women displaying different emotions (anger, fear, happiness, sadness, disgust, surprise, or contempt). On each trial, a target face was shown at the top of a triangular arrangement and two probe faces, with identities different from the target, at the bottom. The task consisted of 28 trials (84 for patient GA). Participants were requested to match the pictures on the basis of similarity of expression and to indicate their responses by a button press.

Most patients performed better when matching faces on the basis of expression than when matching them on the basis of identity.

Experiment 3: The influence of facial expression on matching identical face parts. Experiment 3 investigated feature-based matching in neutral and emotional faces with a design that combined two classical ways of measuring whole- vs. feature-based face identification. The inversion effect refers to the finding that matching upside-down faces is more difficult than matching upright ones, because inversion blocks perceptual access to the configuration (27). The context superiority effect refers to the finding that part-to-whole matching is facilitated when the whole stimulus provides a meaningful context, e.g., a normal face as opposed to an inverted or scrambled one (28); the effect thus provides insight into the procedures used in matching.

Materials and procedure. Materials consisted of 16 different gray-scale photographs, 9.5 cm wide and 9.5 cm high, of neutral faces and of facial expressions (happy or angry) (29). Part stimuli, 4.5 × 1.5 cm, were created by extracting parts (either the mouth or the eyes) from the full images. A trial consisted of a full face (the target) presented together with two part probes underneath (see Fig. 1a for an example), which remained on screen until response. Accuracy and latency were measured from stimulus onset. There were four blocked conditions: neutral-up (a neutral face and two face parts, all upright) and, likewise, neutral-inverted, expression-up, and expression-inverted. Participants were instructed to indicate by a button press whether the right- or the left-side probe matched the corresponding part in the full face. The control group performed 32 trials per condition. The patients performed 64 trials per condition (32 per part type), except for GA and FJ, who performed the same number as the control group. Sessions started with four practice trials.

Results. The response-time data of the control group were submitted to a 2 (Expression) × 2 (Orientation) ANOVA. Both main effects were significant (P < 0.001), indicating an inversion effect (inverted slower than upright) and faster reaction times for neutral than for expressive faces.

Patient data were analyzed at both the individual and the group level. At the individual level, most patients showed an abnormal pattern for the neutral faces, including some striking paradoxical inversion effects (Table 3), but all prosopagnosics except MD showed a normal inversion effect for the expressive faces (t tests, all P < 0.05). In the group analysis, the Emotion × Orientation interaction showed a trend toward significance, F(1, 6) = 4.118, P < 0.1. t tests showed that a normal inversion effect occurred only for faces bearing an emotion, t(6) = 3.409, P < 0.05.
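In a 2 × 2 within-subjects design, the F for each effect equals the squared one-sample t of that effect's contrast score (df = 1, n − 1). The sketch below applies this to the per-patient mean reaction times in Table 3. With these means it yields F(1, 6) ≈ 4.118 for the interaction and t(6) ≈ 3.409 for the inversion effect with expressive faces, matching the values reported above, which suggests (but does not confirm) that the group analysis was run on these means. The function names are hypothetical; the same contrast approach applies to the control-group 2 × 2 ANOVA, whose per-subject data are not given here.

```python
import math
import statistics

# Mean reaction times (ms) per patient, copied from Table 3:
# (neutral upright, neutral inverted, expression upright, expression inverted)
TABLE3_RT = {
    "FJ": (3603, 2822, 2998, 4105),
    "GA": (5510, 5706, 4388, 6085),
    "KC": (3246, 3989, 3829, 4611),
    "MD": (7516, 6722, 6812, 6365),
    "MK": (2819, 2775, 1847, 2498),
    "RB": (6821, 8460, 5973, 7254),
    "RG": (9626, 7212, 6841, 8650),
}


def one_sample_t(scores):
    """t statistic and df for H0: mean(scores) == 0."""
    n = len(scores)
    se = statistics.stdev(scores) / math.sqrt(n)
    return statistics.mean(scores) / se, n - 1


def group_contrasts(rt):
    # Inversion effect (inverted minus upright) within each expression condition
    neutral_inv = [ni - nu for nu, ni, eu, ei in rt.values()]
    expr_inv = [ei - eu for nu, ni, eu, ei in rt.values()]
    # Expression x Orientation interaction: difference between the two inversion effects
    interaction = [e - n for n, e in zip(neutral_inv, expr_inv)]
    t_int, df = one_sample_t(interaction)
    t_expr, _ = one_sample_t(expr_inv)
    return {"F_interaction": t_int ** 2, "df": (1, df), "t_expression_inversion": t_expr}


stats = group_contrasts(TABLE3_RT)
print(f"F{stats['df']} = {stats['F_interaction']:.3f}")  # F(1, 6) = 4.118
print(f"t(6) = {stats['t_expression_inversion']:.3f}")   # t(6) = 3.409
```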

Table 3. Experiment 3: Accuracy and reaction times on the Part to Whole matching task.

| Patients | Neutral upright, % correct | Neutral inverted, % correct | Expression upright, % correct | Expression inverted, % correct | Neutral upright, ms | Neutral inverted, ms | Neutral Inv—up | Neutral P | Expression upright, ms | Expression inverted, ms | Expression Inv—up | Expression P |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Control | 96 ± 3.6 | 94 ± 4.7 | 93 ± 3.8 | 94 ± 5.7 | 1,583 | 1,704 | + | 0.00 | 1,772 | 1,999 | + | 0.00 |
| FJ | 100 | 100 | 97 | 97 | 3,603 | 2,822 | — | 0.02 | 2,998 | 4,105 | + | 0.02 |
| GA | 91 | 84 | 94 | 94 | 5,510 | 5,706 | 0 | 0.41 | 4,388 | 6,085 | + | 0.04 |
| KC | 83 | 91 | 97 | 88 | 3,246 | 3,989 | + | 0.00 | 3,829 | 4,611 | + | 0.02 |
| MD | 86 | 83 | 94 | 84 | 7,516 | 6,722 | — | 0.04 | 6,812 | 6,365 | 0 | 0.22 |
| MK | 88 | 97 | 91 | 88 | 2,819 | 2,775 | 0 | 0.39 | 1,847 | 2,498 | + | 0.00 |
| RB | 89 | 92 | 97 | 97 | 6,821 | 8,460 | + | 0.00 | 5,973 | 7,254 | + | 0.01 |
| RG | 75 | 69 | 84 | 78 | 9,626 | 7,212 | — | 0.00 | 6,841 | 8,650 | + | 0.03 |

A “+” in the “Inv—up” (inverted—upright) column indicates normal inversion/context effect, “—” indicates a paradoxical effect, and “0” indicates no difference (P values are for one-tailed t tests).

Discussion. Our results reveal a striking contrast between neutral and expressive faces in both normal viewers and prosopagnosic patients, but the influence of a facial expression was opposite in the two groups: with facial expressions, the performance of normal viewers deteriorated, whereas that of prosopagnosics improved dramatically.

The current data challenge face-processing theories that assume independence between processing facial identity and processing facial expression. First, although unfamiliar faces were used to avoid confounds with the patients' impaired face skills, in both conditions the identity of the face had to be processed to achieve correct performance. In the Neutral condition, our results are in line with recent findings challenging the notion that prosopagnosia is strictly a matter of loss of configural processing. All three patterns of configural deficit reported in the literature are represented here: a normal context effect (KC and RB), a loss of the context effect (GA and MK), and a paradoxical effect (FJ, MD, and RG). The paradoxical effect is now reported more frequently (18, 30), indicating that such effects are not that unusual once attention is drawn to the phenomenon. Second, the normal context effect in KC and RB is also inconsistent with the view that the core problem of prosopagnosia is a loss of configural processes. Only in two cases (GA and MK) did we find that configuration had no impact at all, in either the upright or the inverted condition, which is the familiar pattern of configural loss known from the older prosopagnosia literature.

No specific relation existed between the pattern of performance and the lesion. The two patients with bilateral occipitotemporal lesions (MD and RG) showed a similar pattern of paradoxical inversion effects, but the third patient with that pattern (FJ) had developmental prosopagnosia. In contrast, KC and RB showed a normal pattern notwithstanding their lesions. The side of the lesion was not a critical variable either, because KC had a right-side lesion and RB a left-side one.

The major finding concerns the performance of patients in the Expression condition. Contrary to what was observed in normal subjects, patients were faster in the Expression than in the Neutral condition, irrespective of stimulus orientation. With the exception of one patient (MD), all prosopagnosics strongly benefited from the presence of a facial expression. This pattern contrasts sharply with the data from normal viewers. Moreover, in the patients the expression did not simply improve overall performance; it reversed their abnormal pattern: in the presence of a facial expression, prosopagnosics exhibited a normal inversion and context effect.

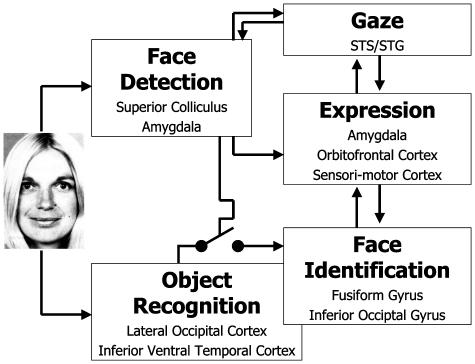

Experiment 4: Imaging experiment. Face identification evokes activity in a bilateral region of the lateral fusiform gyrus (11, 31–35) and in the inferior occipital gyrus (8, 9, 11, 32, 36, 37). Besides these core areas, which are associated with face processing in normal viewers and are damaged in these patients, other areas form part of an extended face-processing network (8). These areas are involved in processing the emotional aspects of the face (occipitotemporal cortices, amygdala, orbitofrontal cortex, basal ganglia, and right parietal cortices) (38), and they are intact in the patients studied here.

Participants. Structural scans and functional magnetic resonance images were collected from four patients (MD, MK, RB, and RG) and from one normal control subject. Informed written consent was obtained from each subject before the scanning session; all procedures were approved under Massachusetts General Hospital Human Studies Protocol numbers 1999P-010946 and 2002P-000228.

Materials and procedure. Stimuli were identical to those used in experiment 3. They were presented with E-PRIME 1.1 (39) on an ASUS A1300 and projected with a Sharp XG-NV6XU Notevision 6 projector (Osaka Microsystems) through a collimating lens onto a screen secured to the head coil. Subjects viewed the images via a tilted mirror placed directly in front of their eyes.

Functional images were acquired with a high-field (3.0-T) high-speed echoplanar imaging device (Siemens, Munich) with a quadrature head coil. Subjects lay on a padded scanner couch in a dimly illuminated room and wore foam earplugs. Foam padding stabilized the head. High-resolution (1.0 × 1.0 × 1.3 mm) structural images were obtained for 3D reconstruction with a magnetization-prepared rapid acquisition gradient-echo sequence [MP-RAGE; 128 slices, 256 × 256 matrix, echo time (TE) = 3.3 ms, repetition time (TR) = 30 ms, flip angle = 40°].

Functional sessions began with an initial sagittal localizer scan, followed by autoshimming to maximize field homogeneity. To register functional data to the 3D reconstructions, a set of high-resolution inversion-time T1-weighted echoplanar images [44 coronal slices perpendicular to the calcarine sulcus, 1.5 × 1.5 mm in-plane, 4 mm thick, no skip; TE = 29 ms, TI = 1,200 ms, TR = 6,000 ms, number of excitations (NEX) = 4] was acquired, along with conventional high-resolution T2-weighted anatomical scans (256 × 256 matrix, TE = 104 ms, TI = 1,200 ms, TR = 11 s, NEX = 2). The coregistered functional series (TR = 4,000 ms, 128 images per slice, TE = 30 ms, flip angle = 90°, field of view = 20 × 20 cm, matrix = 64 × 64, in-plane resolution 3.125 × 3.125 mm) lasted 512 s and used an AB blocked design.
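Several acquisition figures follow directly from the parameters above: the in-plane voxel size is the field of view divided by the matrix (200 mm / 64 = 3.125 mm per side, a cross section of about 9.8 mm²), a run lasts TR × number of volumes (4 s × 128 = 512 s), and the 44 contiguous 4-mm registration slices cover 176 mm. A minimal check of this arithmetic, with hypothetical helper names:

```python
def in_plane_resolution_mm(fov_mm, matrix):
    """Voxel edge length in the slice plane: field of view / matrix size."""
    return fov_mm / matrix


def run_duration_s(tr_ms, n_volumes):
    """Duration of a functional run: one volume is acquired per TR."""
    return tr_ms * n_volumes / 1000


def slab_thickness_mm(n_slices, thickness_mm, gap_mm=0.0):
    """Coverage of a stack of slices ("no skip" means gap_mm = 0)."""
    return n_slices * thickness_mm + (n_slices - 1) * gap_mm


print(in_plane_resolution_mm(200, 64))  # 3.125 (mm per side)
print(run_duration_s(4000, 128))        # 512.0 (s)
print(slab_thickness_mm(44, 4))         # 176.0 (mm)
```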

Each of the functional runs was motion-corrected to the first run by using AFNI (http://afni.nimh.nih.gov/afni/index.shtml) and then spatially smoothed by using a 2D Hanning filter (full width at half-maximum = 8.0 mm). For two-condition comparisons, the phase of the signal at the stimulus frequency was used to distinguish increases from decreases in the magnetic resonance signal. Significance of the activation at each voxel was determined by using an F statistic. During the scanning session, the subjects performed the same match-to-sample task as in experiment 3, except that stimuli were now presented at a fixed pace.
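The text states only that the phase at the stimulus frequency separated increases from decreases and that significance was assessed with an F statistic; it gives neither the block length nor the exact F formulation. The sketch below shows one common way to analyze a periodic AB design in the frequency domain, not the pipeline used here. The number of AB cycles per run (`n_cycles`), the choice of noise bins, and all function names are assumptions. Power at the stimulus frequency is compared with the mean power at the remaining non-harmonic frequencies (approximately F(2, 2M) under Gaussian noise), and the phase is compared with that of an A-on reference boxcar.

```python
import cmath
import math
import random
from dataclasses import dataclass


@dataclass
class FourierResult:
    amplitude: float  # |X_k| at the stimulus frequency
    f_stat: float     # power at stimulus frequency / mean power at noise bins
    df: tuple         # (numerator, denominator) degrees of freedom
    increase: bool    # True if the response is in phase with condition A


def dft_bin(x, k):
    """Discrete Fourier coefficient of series x at frequency bin k."""
    n = len(x)
    return sum(v * cmath.exp(-2j * math.pi * k * t / n) for t, v in enumerate(x))


def ab_reference(n_vols, n_cycles):
    """Hypothetical boxcar: 1 during condition A (first half of each cycle), 0 during B."""
    period = n_vols // n_cycles
    return [1.0 if t % period < period // 2 else 0.0 for t in range(n_vols)]


def fourier_ab(x, n_cycles):
    n = len(x)
    xk = dft_bin(x, n_cycles)
    rk = dft_bin(ab_reference(n, n_cycles), n_cycles)
    # Noise estimate: bins below Nyquist, excluding DC and the stimulus
    # frequency and its harmonics (an assumption, not the paper's choice).
    noise_bins = [j for j in range(1, n // 2) if j % n_cycles != 0]
    noise_power = sum(abs(dft_bin(x, j)) ** 2 for j in noise_bins) / len(noise_bins)
    f_stat = abs(xk) ** 2 / noise_power
    # Phase relative to the reference: within +/-90 degrees means the signal
    # rises during A (an increase); otherwise it falls (a decrease).
    increase = math.cos(cmath.phase(xk) - cmath.phase(rk)) > 0
    return FourierResult(abs(xk), f_stat, (2, 2 * len(noise_bins)), increase)


# Synthetic voxel: 128 volumes as in the functional runs; 8 cycles is hypothetical.
rng = random.Random(0)
ref = ab_reference(128, 8)
voxel = [100 + 2 * r + rng.gauss(0, 0.5) for r in ref]
result = fourier_ab(voxel, 8)
```

The P value would then be read from the F(2, 2M) distribution; that step is omitted to keep the sketch dependency-free.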

Results. Functional data from two patients (RB and RG) could not be used because of excessive head motion in the scanner. Results from the control subject (MC) are consistent with reports in the literature on the brain areas that respond to neutral and emotional faces: for the expression vs. neutral face condition, we observed activation (Fig. 1c) in the right fusiform gyrus (anterior and posterior), the right superior temporal sulcus, the left amygdala, the orbitofrontal gyrus bilaterally, and the left premotor cortex.

For MD (bilateral occipitotemporal lesion), activation was present bilaterally in the superior temporal sulcus and gyrus, in the right temporal lobule, in the right superior frontal gyrus, in the right cingulate gyrus, and in the left inferior frontal gyrus. For patient MK, activation was observed in the right amygdala region and in the right orbitofrontal cortex.

Discussion. As predicted, patients with damage to the brain areas predominantly involved in face identification show activation in other areas belonging to the extended network dedicated to the recognition of facial expressions (6, 8), such as the right fusiform gyrus (anterior and posterior), the right superior temporal sulcus, the amygdala, and the orbitofrontal gyrus. Moreover, activity in the left premotor cortex suggests the involvement of structures related to imitation and action (40). These activations might explain the improved task performance in the expression condition of experiment 3. As expected, given the lesions, the areas active in the patients only partially overlap with those active in the normal subject. For patient MK, we observed activity only in the right amygdala and the right orbitofrontal cortex, consistent with activations in the normal control subject. For patient MD, activity in the superior temporal sulcus corresponded to that observed in the normal control subject, except that in the patient it was bilateral. In addition, activity occurred in the right superior frontal gyrus. MD's activation of the right temporal lobule, the right cingulate gyrus, and the left inferior frontal gyrus is in line with the role of these areas in processing facial expressions (38).

It is interesting to relate the observed brain activation to the results of experiment 3, where, in normal viewers, the presence of an expression had an inhibitory effect on task performance. The matter is complicated by the fact that activation in the same areas accompanies facilitated performance in the patients. That these areas were active even though the facial expression was not the focus of attention, because it was irrelevant to the task, is consistent with the literature on implicit processing of facial expressions (40, 41).

General Discussion

Historically, dissociations between impaired and intact skills observed in lesion studies have greatly contributed to the understanding of normal face processing, essentially by providing evidence for functional dissociations that then defined the building blocks of modular models of normal processes (42). More recently, it has been argued that patient studies can also contribute to a better understanding of the dynamics of brain function, because they can reveal processing routes other than those that are dominant in normal observers (12, 14, 16). This point is particularly relevant when subprocesses differ in processing speed, as suggested for feature and configuration processing (43) and for emotion processing (19). If two routes of comparable importance coexist, the outcome may be determined by only one of them, because the faster route can overtake the slower one. In fact, both a feature-based and a configural procedure may be available in normal face identification, depending on the task and the time constraints imposed. In skilled viewers, however, the configural procedure may be by far the faster one and thus mask the contribution of the feature-based procedure, as in a horse-race model (44). As a consequence of brain damage, the absolute speed of processing decreases, but, more importantly, the relative speed of the different routes may be altered. For example, the route that is slower and less likely to determine the response in normal viewers may become, by comparison, the faster one, or sometimes the only one available, after brain damage.
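The horse-race argument can be illustrated with a toy simulation. This is a sketch under stated assumptions, not a model fitted to the data: finishing times of the configural and feature-based routes are drawn from Gaussian distributions whose means and SDs are entirely hypothetical, and the response on each trial is produced by whichever route finishes first. Slowing the configural route more than the feature-based one flips which route usually wins.

```python
import random
import statistics


def horse_race(config_ms, feature_ms, n_trials=10_000, seed=0):
    """Race between a configural and a feature-based route.

    config_ms, feature_ms: (mean, sd) of each route's finishing time (hypothetical).
    Returns the proportion of trials won by the configural route and the mean
    response time, taken as the finishing time of whichever route wins.
    """
    rng = random.Random(seed)
    config_wins = 0
    rts = []
    for _ in range(n_trials):
        c = rng.gauss(*config_ms)
        f = rng.gauss(*feature_ms)
        config_wins += c < f
        rts.append(min(c, f))
    return config_wins / n_trials, statistics.mean(rts)


# Hypothetical parameters: in the intact system the configural route is much
# faster; after damage both routes slow, but the configural route slows more.
intact = horse_race(config_ms=(400, 50), feature_ms=(700, 100))
damaged = horse_race(config_ms=(1200, 150), feature_ms=(800, 100))
print(f"intact:  configural wins {intact[0]:.1%}, mean RT {intact[1]:.0f} ms")
print(f"damaged: configural wins {damaged[0]:.1%}, mean RT {damaged[1]:.0f} ms")
```

In the intact setting the feature-based route almost never determines the response even though it is always running, which is the masking described above.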

The present results indicate that performance on a face-matching task on which patients are severely impaired can be modified by the presence of a stimulus dimension that is not relevant to the task, such as a facial expression, for which no visual impairment exists. Moreover, the interaction specifically affects the way the configuration of the face is processed, because the presence of an expression normalizes the sensitivity to stimulus orientation and the ensuing configural processes. These results are important for a better understanding of the relationship between the perception of emotional valence and visual processes. It is known from neuropsychological reports that lesions of the visual areas normally involved in processing facial identity do not destroy the ability to recognize facial expressions. Although our study confirms this, it points to a much closer interaction between face identification and facial expression than previously envisaged. Our results have important implications for standard models of face processing (2), which hold that different aspects of face processing, such as facial expression, gender, and speech, are processed independently (assumption of dissociation), and that these different kinds of information are extracted only after structural encoding based on intact configural encoding (assumption of hierarchical processing). Both assumptions are challenged by the present results, in line with a similar challenge from recent studies using time-sensitive methods such as event-related potentials and magnetoencephalography. Indeed, the structural encoding of neutral faces occurs at ≈170 ms, as indicated by a face-specific negative potential (N170) (45).
Recent studies using facial expressions have provided evidence for encoding of expression at latencies earlier than 170 ms, between 80 and 116 ms (46) or ≈110 ms (47), and raised the possibility of a modulation of the face area in the fusiform cortex by this earlier activity. The present results do not allow direct inferences about time course, but the influence of a facial expression as shown here is consistent with those findings.
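Sensitivity to stimulus orientation is usually summarized as an inversion effect. The sketch below assumes one common operationalization, upright accuracy minus inverted accuracy; the function names, the use of accuracy rather than response times, and the ±0.05 tolerance band are hypothetical choices and not necessarily the measures used in this study.

```python
def inversion_effect(acc_upright: float, acc_inverted: float) -> float:
    """Return upright minus inverted accuracy (both as proportions correct).

    Assumption: accuracy-based index; response-time indices are also common.
    """
    for acc in (acc_upright, acc_inverted):
        if not 0.0 <= acc <= 1.0:
            raise ValueError("accuracies must be proportions between 0 and 1")
    return acc_upright - acc_inverted


def describe(effect: float, tolerance: float = 0.05) -> str:
    """Label the sign of the index; the tolerance is an arbitrary cutoff."""
    if effect > tolerance:
        return "normal inversion effect (upright advantage)"
    if effect < -tolerance:
        return "inverted inversion effect (inverted advantage)"
    return "no clear orientation sensitivity"


if __name__ == "__main__":
    # Made-up accuracies chosen only to show the two signs of the index;
    # they are not data from this study.
    print(describe(inversion_effect(0.80, 0.65)))
    print(describe(inversion_effect(0.60, 0.75)))
```

A positive index is the usual signature of configural processing in normal viewers; a negative index marks the reversed pattern, better performance for inverted faces, sometimes called an inverted inversion effect.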

One candidate mechanism for the positive influence of facial expressions on face identification in these prosopagnosic patients is feedback projections from the intact amygdala to the damaged face areas in the fusiform cortex, in line with the modulation of fusiform face activity observed in normal subjects (6, 42). This idea fits the notion that residual skills after brain damage rely on degraded representations whose threshold for influencing performance can be modified by concurrent stimulus input (48). In other words, when a facial expression is present, activation in the damaged face areas would be boosted, leading to improved performance and normalization of the configural effects. This explanation is unlikely here, however, because the lesions of patients MK and MD include the face areas in the fusiform cortex, ruling out projections from the amygdala to the fusiform cortex as the source of the improvement. Moreover, the two foci of activation are both right-sided, indicating independence from the face area in the fusiform cortex, because the latter cannot be a relay in a network that reaches the amygdala and orbitofrontal cortex. For MD (bilateral occipitotemporal lesion), activation was present bilaterally in the superior temporal sulcus and gyrus, the right temporal lobule, the right superior frontal gyrus, the right cingulate gyrus, and the left inferior frontal gyrus (Fig. 2).
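The threshold account attributed to ref. 48 can be illustrated with a toy model in which a sub-threshold residual representation affects responses only once concurrent input lifts it toward threshold. This is a hypothetical sketch: the logistic form, the parameter names, and all numeric values are assumptions for illustration, not estimates for MK, MD, or any patient, and the text above argues that this route is unlikely for these two patients.

```python
import math


def p_correct(residual: float, boost: float = 0.0, threshold: float = 1.0,
              slope: float = 10.0, chance: float = 0.5) -> float:
    """Probability of a correct match under a toy threshold model.

    residual:  activation of the degraded face representation (arbitrary units)
    boost:     extra activation from concurrent input, e.g. an expression
    threshold: activation level at which the representation drives responses
    slope:     steepness of the logistic transition around the threshold
    chance:    guessing rate; 0.5 assumes a two-alternative choice
    """
    z = slope * (residual + boost - threshold)
    # Numerically stable logistic function (avoids overflow for large |z|).
    if z >= 0:
        logistic = 1.0 / (1.0 + math.exp(-z))
    else:
        ez = math.exp(z)
        logistic = ez / (1.0 + ez)
    return chance + (1.0 - chance) * logistic


if __name__ == "__main__":
    # Hypothetical residual activation below threshold: near chance.
    print(p_correct(0.6))
    # Same residual plus a hypothetical boost: well above chance.
    print(p_correct(0.6, boost=0.5))
```

In this sketch the boost does not repair the degraded representation; it only moves the summed activation across the region where the logistic rises, which is the sense in which concurrent input "modifies the threshold for influencing performance."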

Fig. 2. Face-processing model, based on separate routes for face detection and identification.

Finally, these results may help clarify the importance of identifying familiar persons in the diagnosis of prosopagnosia, and possibly in rehabilitation. Our results suggest that the presence of a facial expression can boost face identification. In its oldest definition, prosopagnosia was restricted to an impairment with familiar faces. Later studies extended the definition to include unfamiliar faces as well. This extension raised the question of whether the originally reported cases of prosopagnosia were, at least in part, cases of amnesia for faces. This explanation obviously does not apply to a deficit in identifying unfamiliar faces, but familiar and unfamiliar faces differ in more than whether a face template is stored and can be accessed in memory. Familiar faces are rarely seen with a neutral expression; they are most often viewed with one expression or another, whereas the unfamiliar faces commonly used in clinical tests and in face-identification experiments are typically neutral.

Acknowledgments

This work was supported by National Institutes of Health Grant R01 NS44824-01 (to N.H.).

Abbreviations: TE, echo time; TR, repetition time.

References

1. Bodamer, J. (1947) Arch. Psychiatr. Nervenkr. 179, 6-53.

2. Bruce, V. & Young, A. W. (1986) Br. J. Psychol. 77, 305-327.

3. Taylor, S. F., Liberzon, I., Fig, L. M., Decker, L. R., Minoshima, S. & Koeppe, R. A. (1998) NeuroImage 8, 188-197.

4. Nakamura, K., Kawashima, R., Ito, K., Sugiura, M., Kato, T., Nakamura, A., Hatano, K., Nagumo, S., Kubota, K., Fukuda, H., et al. (1999) J. Neurophysiol. 82, 1610-1614.

5. Dolan, R. J. (2002) Science 298, 1191-1194.

6. Rotshtein, P., Malach, R., Hadar, U., Graif, M. & Hendler, T. (2001) Neuron 32, 747-757.

7. Farah, M. J., Monheit, M. A. & Wallace, M. A. (1991) Neuropsychologia 29, 948-958.

8. Haxby, J. V., Hoffman, E. A. & Gobbini, M. I. (2000) Trends Cognit. Sci. 4, 223-233.

9. Haxby, J. V., Hoffman, E. A. & Gobbini, M. I. (2002) Biol. Psychiatry 51, 59-67.

10. Marotta, J. J., Genovese, C. R. & Behrmann, M. (2001) NeuroReport 12, 1581-1587.

11. Hadjikhani, N. & de Gelder, B. (2002) Hum. Brain Mapp. 16, 176-182.

12. de Gelder, B., Bachoud-Levi, A. C. & Degos, J. D. (1998) Vision Res. 38, 2855-2861.

13. Farah, M. J., Wilson, K. D., Drain, H. M. & Tanaka, J. R. (1995) Vision Res. 35, 2089-2093.

14. Kapur, N. (1996) Brain 119, 1775-1790.

15. Price, C. J. & Friston, K. J. (2002) Neurocase 8, 345-354.

16. de Gelder, B. & Rouw, R. (2000) NeuroReport 11, 3145-3150.

17. de Gelder, B. & Rouw, R. (2000) Cognit. Neuropsychol. 17, 89-102.

18. Boutsen, L. & Humphreys, G. W. (2002) Neuropsychologia 40, 2305-2313.

19. Pizzagalli, D. A., Lehmann, D., Hendrick, A. M., Regard, M., Pascual-Marqui, R. D. & Davidson, R. J. (2002) NeuroImage 16, 663-677.

20. Bartlett, J. C. & Searcy, J. (1993) Cognit. Psychol. 25, 281-316.

21. de Gelder, B., Teunisse, J. P. & Benson, P. J. (1997) Cognit. Emotion 11, 1-23.

22. Kosslyn, S. M., Hamilton, S. E. & Bernstein, J. H. (1995) Brain Cognit. 27, 36-58.

23. Barton, J. J., Cherkasova, M. & O'Connor, M. (2001) Neurology 57, 1161-1168.

24. Barton, J. J., Press, D. Z., Keenan, J. P. & O'Connor, M. (2002) Neurology 58, 71-78.

25. Rossion, B., Dricot, L., Devolder, A., Bodart, J. M., Crommelinck, M., de Gelder, B. & Zoontjes, R. (2000) J. Cognit. Neurosci. 12, 793-802.

26. Levine, D. N. & Calvanio, R. (1989) Brain Cognit. 10, 149-170.

27. Yin, R. K. (1969) J. Exp. Psychol. 81, 141-145.

28. Homa, D., Haver, B. & Schwartz, T. (1976) Mem. Cognit. 4, 176-185.

29. Ekman, P. & Friesen, W. V. (1976) Pictures of Facial Affect (Consulting Psychologists Press, Palo Alto, CA).

30. Gauthier, I., Behrmann, M. & Tarr, M. J. (1999) J. Cognit. Neurosci. 11, 349-370.

31. Clark, V. P., Keil, K., Maisog, J. M., Courtney, S., Ungerleider, L. G. & Haxby, J. V. (1996) NeuroImage 4, 1-15.

32. Halgren, E., Dale, A. M., Sereno, M. I., Tootell, R. B., Marinkovic, K. & Rosen, B. R. (1999) Hum. Brain Mapp. 7, 29-37.

33. Haxby, J. V., Horwitz, B., Ungerleider, L. G., Maisog, J. M., Pietrini, P. & Grady, C. L. (1994) J. Neurosci. 14, 6336-6353.

34. Kanwisher, N., McDermott, J. & Chun, M. M. (1997) J. Neurosci. 17, 4302-4311.

35. McCarthy, G., Puce, A., Gore, J. C. & Allison, T. (1997) J. Cognit. Neurosci. 9, 605-610.

36. Levy, I., Hasson, U., Avidan, G., Hendler, T. & Malach, R. (2001) Nat. Neurosci. 4, 533-539.

37. Puce, A., Allison, T., Asgari, M., Gore, J. C. & McCarthy, G. (1996) J. Neurosci. 16, 5205-5215.

38. Adolphs, R. (2002) Curr. Opin. Neurobiol. 12, 169-177.

39. Schneider, W., Eschman, A. & Zuccolotto, A. (2002) E-Prime User's Guide (Psychology Software Tools, Inc., Pittsburgh).

40. Carr, L., Iacoboni, M., Dubeau, M. C., Mazziotta, J. C. & Lenzi, G. L. (2003) Proc. Natl. Acad. Sci. USA 100, 5497-5502.

41. Whalen, P. J., Rauch, S. L., Etcoff, N. L., McInerney, S. C., Lee, M. B. & Jenike, M. A. (1998) J. Neurosci. 18, 411-418.

42. Morris, J. S., de Gelder, B., Weiskrantz, L. & Dolan, R. J. (2001) Brain 124, 1241-1252.

43. Sagiv, N. & Bentin, S. (2001) J. Cognit. Neurosci. 13, 937-951.

44. Searcy, J. & Bartlett, J. C. (1996) J. Exp. Psychol. Hum. Percept. Perform. 22, 904-915.

45. Bentin, S., Allison, T., Puce, A., Perez, E. & McCarthy, G. (1996) J. Cognit. Neurosci. 8, 551-565.

46. Pizzagalli, D., Koenig, T., Regard, M. & Lehmann, D. (1999) Brain Res. Cognit. Brain Res. 7, 371-377.

47. Halgren, E., Raij, T., Marinkovic, K., Jousmaki, V. & Hari, R. (2000) Cereb. Cortex 10, 69-81.

48. Köhler, S. & Moscovitch, M. (1997) in Cognitive Neuroscience: Studies in Cognition, ed. Rugg, M. (MIT Press, Cambridge, MA), pp. 305-373.