Abstract

We evaluated behavior exhibited by individuals with developmental disabilities using progressive-ratio (PR) schedules. High- and low-preference stimuli were determined based on the results of a paired-stimulus preference assessment and were evaluated in subsequent reinforcer and PR assessments using concurrent and single schedules of presentation. In Experiment 1, results showed that for 2 of 3 participants, stimuli determined to be low-preference functioned as reinforcers when evaluated independent of high-preference stimuli. Further, the results from Experiment 2 showed that low-preference stimuli also functioned as reinforcers under gradually increasing PR requirements. Results suggest that for cases in which a high-preference stimulus is unavailable or impractical, the contingent delivery of relatively less preferred stimuli may maintain appropriate behavior, even as schedule requirements increase.

Keywords: break point, choice, preference assessment, progressive-ratio schedules, reinforcer assessment

The identification of effective positive reinforcers can be extremely valuable for reducing problem behavior (e.g., Ringdahl, Vollmer, Marcus, & Roane, 1997) as well as facilitating appropriate behavior (e.g., Saunders, McEntee, & Saunders, 2005). Even though it may seem intuitive to deliver the most frequently selected stimulus identified via a preference assessment for treatment purposes, prior work has suggested that some assessment methods may be prone to false negatives, resulting in the exclusion of items that could potentially function as effective positive reinforcers (e.g., Roscoe, Iwata, & Kahng, 1999).

Roscoe et al. (1999), for example, demonstrated that stimuli ranked relatively low on a preference hierarchy could function as reinforcers in specific contexts. After assessing preference for edible items, a high-preference (HP) stimulus (i.e., an item approached most frequently during both assessments) and a low-preference (LP) stimulus (i.e., an item for which the results of the two assessments showed the largest discrepancy) were identified for each participant. These stimuli were evaluated in concurrent- and single-schedule reinforcer assessments and delivered on a fixed-ratio (FR) 1 schedule. When tasks associated with HP and LP stimuli were available simultaneously, participants responded almost exclusively on the task associated with the HP stimulus. However, when only the task associated with the LP stimulus was available, all participants engaged in that task, often at rates that approximated those observed for the HP task under concurrent schedules. These results suggested that LP stimuli may maintain responding when presented contingently. Other research has produced similar results (e.g., DeLeon, Iwata, & Roscoe, 1997; Taravella, Lerman, Contrucci, & Roane, 2000). This repeated finding is encouraging because it suggests that those responsible for implementing reinforcement-based programs may select from a wider range of potential reinforcers (i.e., not simply that which was selected more frequently; Egel, 1981).

Roscoe et al. (1999) made a distinction between reinforcer preference and reinforcer potency, noting that preference refers to the choice of one stimulus over another, whereas potency refers to the capacity of a stimulus to maintain performance when delivered contingently. Therefore, even though preference assessments may produce a discrete hierarchy of preferred stimuli, such evaluations do not necessarily indicate which stimuli are likely to maintain performance over an extended period of time (i.e., which stimuli may function as effective and durable reinforcers). Current reinforcer assessments commonly use relatively dense reinforcement schedules (e.g., FR 1), which are not necessarily representative of how behavior is reinforced in the natural environment (Fisher & Mazur, 1997). Therefore, the use of leaner schedules may lead to the identification of stimuli that are more likely to function as potent reinforcers in natural settings (i.e., when schedule requirements are presumably larger).

Tustin (1994) studied the effects of lean schedules of reinforcement by using a computer program that gradually increased the number of responses required to access sensory stimuli or attention across sessions using FR schedules. The FR schedules were presented either in single or concurrent arrangements, either under constant (i.e., FR 5) or varied (e.g., FR 2, FR 10, FR 20) requirements. Results indicated that for 1 participant, preference between reinforcers remained constant as schedule requirements increased. For another individual, when response requirements for two reinforcers increased together, a reversal of preference occurred. In other words, the stimulus that was associated with more responding at low schedule requirements was no longer preferred when response requirements were increased. This outcome suggests that reinforcer value may be altered as a function of increasing schedule requirements.

A type of schedule that is particularly useful in evaluating reinforcer potency is the progressive-ratio (PR) schedule. Under PR schedules, the response requirement gradually increases from ratio to ratio within a session until responding no longer occurs for some period of time (Pierce & Cheney, 2004). For example, under a PR 2, the completion of the first two responses results in the delivery of the reinforcer. The response requirement then systematically increases by two; in this example, the reinforcer is delivered after the completion of four additional responses, and so on, until responding no longer occurs for a predetermined period of time (e.g., 5 min), at which point the session is terminated. PR schedules differ from progressive FR schedules used by Tustin (1994) and others (e.g., DeLeon, Iwata, Goh, & Worsdell, 1997; Johnson & Bickel, 2006) in that PR schedule requirements increase within sessions instead of across sessions. The last schedule value completed in a PR schedule is referred to as the break point, which indicates the maximum number of steps completed to produce the reinforcer (e.g., Roane, Call, & Falcomata, 2005). PR schedules allow the assessment of reinforcer value, in that higher response rates across increasing response requirements are typically associated with more potent reinforcers (Roane, Lerman, & Vorndran, 2001).
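The arithmetic progression described above can be sketched in a few lines. This is a minimal illustration only; the function names `pr_requirements` and `break_point` are hypothetical and do not come from the studies cited, and the sketch assumes that the requirement grows by a fixed step after each reinforcer delivery.

```python
from itertools import count, islice


def pr_requirements(step_size):
    """Yield successive response requirements for an arithmetic PR schedule."""
    for n in count(1):
        yield n * step_size


def break_point(total_responses, step_size):
    """Return the last schedule value fully completed, given the number of
    responses emitted before the session ended (0 if none was completed)."""
    completed = 0
    remaining = total_responses
    for requirement in pr_requirements(step_size):
        if remaining < requirement:
            return completed
        remaining -= requirement
        completed = requirement


# First four requirements under PR 2
print(list(islice(pr_requirements(2), 4)))  # [2, 4, 6, 8]
# Six responses complete the requirements of 2 and 4, so the break point is 4
print(break_point(6, 2))  # 4
```

Responses emitted toward a requirement that is never completed do not change the break point, which is why the same break point can correspond to a range of total response counts.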

Despite the potential usefulness of PR schedules for accurately differentiating between HP and LP stimuli with humans, these schedules have primarily been investigated in the basic laboratory with nonhumans (e.g., Baron, Mikorski, & Schlund, 1992; Hodos, 1961; Hodos & Kalman, 1963). In more recent years, a handful of studies have incorporated PR schedules to address problems of applied significance (e.g., DeLeon, Fisher, Herman, & Crosland, 2000; Shahan, Bickel, Madden, & Badger, 1999). For example, Roane et al. (2001) assessed responding for highly preferred reinforcers when participants' behavior was exposed to PR schedules. For each participant, two stimuli that were highly and similarly ranked in a preference assessment (Fisher et al., 1992) were evaluated in a PR reinforcer assessment using simple tasks (e.g., pressing a button) presented in a single-schedule arrangement. Ratio requirements increased within the session until no responding occurred for 5 min. Results showed that for all participants, differential responding occurred depending on which item was delivered. In other words, one of the two HP stimuli was associated with greater response persistence under increasing response requirements, a finding that was somewhat counterintuitive based on the results of the preference assessment (which showed both stimuli to be approximately equal in preference, and by extension, presumably equal in reinforcing efficacy).

The collective findings of Roane et al. (2001) and Roscoe et al. (1999) warrant a closer investigation of LP stimuli, as determined by common preference-assessment methods, as well as a further evaluation of the applied utility of PR schedules. Therefore, the purpose of Experiment 1 was to systematically replicate the methods used by Roscoe et al., and the purpose of Experiment 2 was to extend those findings by evaluating responding for HP and LP stimuli under PR schedules.

General Method

Participants and Settings

Three children with various developmental disabilities, who attended a public school for individuals with special needs, participated. Aaron was 5 years old and had been diagnosed with Down syndrome, Logan was 3 years old and had unspecified developmental delays, and Derrick was 5 years old and had been diagnosed with autism. All sessions were conducted in each child's regular classroom or an adjacent room. One to three sessions were conducted daily, 4 to 5 days per week, depending on participant availability.

Response Measurement and Interobserver Agreement

During the paired-stimulus preference assessment (Fisher et al., 1992), an approach response was defined as a participant either touching or picking up the stimulus. During the reinforcer assessments and PR assessments, correct task completion was defined as releasing a disk past the plane of the receptacle lid and the subsequent contact between the disk and the bottom of the disk receptacle or another disk already in the receptacle. Stimulus delivery was defined as the time beginning at the presentation of the edible item (determined by preference assessment results) to the participant contingent on meeting the response requirement and ending when the edible item had been consumed.

Trained undergraduate and graduate students served as observers and collected data using data-collection sheets (for the preference assessment and the training portion of the reinforcer assessment) or handheld computers while sitting approximately 1 m from the participant and the experimenter. For all phases, interobserver agreement was assessed by having a second observer simultaneously but independently collect data during each condition. Across all participants, the mean percentage of sessions with interobserver agreement was 100% during the preference assessments, 63% during the reinforcer assessments, and 38% during the PR assessments.

During the preference assessment, observers recorded which of the two concurrently available stimuli was approached on each trial. Agreement was defined as both observers having recorded the same selection or no selection for each trial. Interobserver agreement was calculated by dividing number of agreements by number of agreements plus disagreements and multiplying by 100%. Interobserver agreement for all preference assessments was 100%. During the reinforcer and PR assessments, observers collected data as responses per minute (correct task completion) and recorded the frequency of target responses and stimulus delivery. Each session was divided into consecutive 10-s intervals, and agreement was calculated by dividing the smaller number of responses by the larger number of responses for each interval. These fractions were averaged across all intervals to obtain the percentage of agreement between the two observers. During the reinforcer assessment, Aaron's mean percentage was 97% (range, 87% to 100%) for task completion and 80% (range, 68% to 97%) for stimulus delivery; Logan's mean percentage was 98% (range, 97% to 100%) for task completion and 82% (range, 68% to 99.6%) for stimulus delivery; and Derrick's mean percentage was 99% (range, 95% to 100%) for task completion and 89% (range, 72% to 97%) for stimulus delivery. During the PR assessment, Aaron's mean percentage was 97% (range, 80% to 100%) for responses to the task and 95% (range, 86% to 100%) for stimulus delivery; and Logan's mean percentage was 97% (range, 80% to 100%) for responses to the task and 97% (range, 94% to 100%) for stimulus delivery.
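The two agreement calculations described above can be sketched as follows. The function names are hypothetical, and one detail is an assumption rather than something stated in the text: intervals in which both observers recorded zero responses are scored here as full agreement.

```python
def trial_agreement(obs1, obs2):
    """Trial-by-trial agreement: agreements / (agreements + disagreements) x 100.
    Each entry is the stimulus recorded as selected, or None for no selection."""
    if len(obs1) != len(obs2) or not obs1:
        raise ValueError("records must be non-empty and of equal length")
    agreements = sum(a == b for a, b in zip(obs1, obs2))
    return 100.0 * agreements / len(obs1)


def interval_agreement(counts1, counts2):
    """Proportional agreement: smaller count / larger count per 10-s interval,
    averaged across intervals and expressed as a percentage."""
    if len(counts1) != len(counts2) or not counts1:
        raise ValueError("records must be non-empty and of equal length")
    ratios = []
    for a, b in zip(counts1, counts2):
        if a == b:
            # Assumption: identical counts (including 0 and 0) score as 1.0
            ratios.append(1.0)
        else:
            ratios.append(min(a, b) / max(a, b))
    return 100.0 * sum(ratios) / len(ratios)


# Two intervals: observers recorded 1 vs. 2 responses, then 2 vs. 2
print(interval_agreement([1, 2], [2, 2]))  # 75.0
```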

Experiment 1: Relative and Absolute Reinforcement Effects: A Systematic Replication

Stimulus Preference Assessment: Procedure

Preference for 10 edible stimuli was assessed based on the procedures described by Fisher et al. (1992). Only edible items were used in the assessment and subsequent analyses to expedite consumption and to reduce the overall duration of sessions, especially given the potentially lengthy sessions during the PR assessment. The stimulus that was approached on at least 80% of presentations was considered the HP stimulus, and the stimulus that was approached on 22% of presentations was considered the LP stimulus. If two or more HP stimuli or two or more LP stimuli were approached on an equal number of trials, one stimulus (for each stimulus type) was chosen quasirandomly for use in subsequent phases. The experimenters made an effort to choose the item of most nutritional value for use in the evaluation.
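As an illustration of how approach percentages of this kind are computed, the sketch below tallies presentations and approaches per stimulus. The function name and the trial format are hypothetical. If each of the 10 stimuli were paired once with every other stimulus (an assumption; the number of presentations per pair is not stated here), each stimulus would be presented 9 times, so 8 approaches would correspond to about 89% and 2 approaches to about 22%.

```python
from collections import Counter


def approach_percentages(trials):
    """Return the percentage of presentations on which each stimulus was approached.
    Each trial is (left_stimulus, right_stimulus, chosen_stimulus_or_None)."""
    presented = Counter()
    approached = Counter()
    for left, right, choice in trials:
        presented[left] += 1
        presented[right] += 1
        if choice is not None:
            approached[choice] += 1
    return {s: 100.0 * approached[s] / presented[s] for s in presented}


# Hypothetical three-stimulus example (not data from the study)
trials = [("raisin", "cracker", "raisin"),
          ("raisin", "rice cake", "raisin"),
          ("cracker", "rice cake", None)]
print(approach_percentages(trials))
```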

Reinforcer Assessment: Procedure and Design

The stimuli determined to be the HP and LP items via preference assessment were evaluated using procedures described by Roscoe et al. (1999). Prior to conducting the reinforcer assessment, a training condition was conducted in order to expose participants to the contingencies associated with each task. After this initial training, no further prompts or presession exposure occurred during the reinforcer and PR assessments. In concurrent baseline and concurrent reinforcement conditions, two sets of a disk task were presented to the participant, which were identical except for color (with one color associated with the HP stimulus and another color associated with the LP stimulus). Thus, the different tasks were designated the HP task and the LP task because completion of the task resulted in the delivery of either the HP or the LP stimulus. Participants were free to alter their response allocation between the two tasks at any time, but attempts to engage in both tasks simultaneously were blocked. Under the concurrent baseline condition, responding on either task produced no programmed consequences. Under the concurrent reinforcement condition, correct responding on one task resulted in the delivery of the HP stimulus, and correct responding on the other task resulted in the delivery of the LP stimulus; HP and LP stimuli were delivered on an FR 1 reinforcement schedule. During stimulus delivery and consumption, tasks were moved out of reach of the participant, but were still in full view (i.e., the participant could not engage in the task while consuming the stimulus).

In the LP baseline condition, only the disk task associated with the LP stimulus was available, and no programmed consequences were in place for responding. In the LP reinforcement condition, correct responses to the task resulted in the delivery of the LP stimulus on an FR 1 schedule of reinforcement. All sessions lasted 5 min.

Results and Discussion

Preference Assessment

Aaron's preference assessment indicated raisins as the HP stimulus (selected on 89% of opportunities) and crackers (selected on 22% of opportunities) as the LP stimulus. Although his preference assessment showed rice cakes and raisins as equally ranked (89%), we observed that he never consumed the rice cake and instead often crushed it in his hand. Logan's preference assessment showed raisins as the HP item (selected on 89% of opportunities) and crackers as the LP item (selected on 22% of opportunities). Finally, Derrick's preference assessment yielded crackers as the HP stimulus (selected on 89% of opportunities) and raisins as the LP stimulus (selected on 22% of opportunities). (Data for the preference assessment outcomes for all participants are available from the second author.)

Reinforcer Assessment

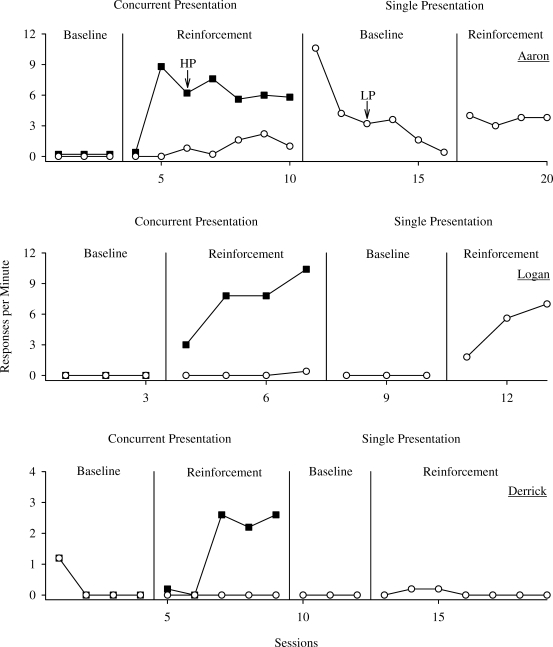

The outcomes of the reinforcer assessment for Aaron, Logan, and Derrick are shown in Figure 1. The results of Aaron's concurrent baseline evaluation showed low rates of responding on the HP task (M = 0.2 responses per minute) and on the LP task (M = 0). Response rates in the concurrent reinforcement condition showed relatively high levels of responding allocated to the HP task (M = 5.8) compared to the LP task (M = 0.8). In the LP baseline condition, responding decreased gradually (M = 3.9). In the LP reinforcement condition, responding stabilized (M = 3.7) and exceeded rates observed in the last two sessions of the LP baseline.

Figure 1. Reinforcer assessment data for Aaron (top), Logan (middle), and Derrick (bottom). Data are depicted as responses per minute.

In the concurrent baseline for Logan, no responding occurred on either task. However, Logan responded at a relatively higher rate on the HP task (M = 7.3 responses per minute) in the concurrent reinforcement condition than on the LP task (M = 0.1). When the LP task and stimuli were presented in the LP reinforcement condition, rates of responding were relatively high and on an increasing trend (M = 4.8) compared to no responding during the LP baseline.

The results of Derrick's reinforcer assessment showed low to zero levels of responding in both the concurrent and LP baseline conditions (Ms = 0.3 responses per minute for both stimuli in the concurrent baseline condition). In the concurrent reinforcement condition, Derrick exclusively responded toward the HP task (M = 1.5). When the LP reinforcement condition was introduced, near-zero levels of responding were observed (M = 0.1), with rates at zero for the last four sessions.

The results of Experiment 1 showed that for all 3 participants, the item that was selected on the highest percentage of trials (HP stimulus) during the paired-stimulus preference assessment maintained relatively high levels of responding when presented contingently and concurrently with an item that was ranked lower on the preference hierarchy. These results suggested that, for these individuals, the outcomes of the preference assessment accurately predicted that HP stimuli would function as reinforcers. For all participants, the contingent presentation of the LP stimulus resulted in zero or very low rates of responding when concurrently presented with a higher ranked item. However, in the LP reinforcement condition, in which only the task associated with the LP stimulus was available, the contingent delivery of LP stimuli maintained responding for 2 participants (Aaron and Logan). These outcomes suggested that in the absence of HP stimuli, the delivery of LP stimuli may support at least moderate rates of responding. This was not the case with Derrick, who rarely responded on the available task when his LP stimulus was arranged contingently. Overall, the results of Experiment 1, including Derrick's data, generally replicate those of Roscoe et al. (1999).

Responses were reinforced on an FR 1 schedule in Roscoe et al. (1999) and Experiment 1 of the current study, requiring relatively little effort on the part of the participant. A next step would be to evaluate response allocation and persistence using a more effortful reinforcement schedule. PR schedules, as previously described, are particularly useful for this purpose, because increasing response requirements can be used to evaluate the extent to which stimulus presentation will continue to support responding. Outcomes from such evaluations may prove to be informative, particularly for selecting effective reinforcers in experimental and applied settings. Therefore, the purpose of Experiment 2 was to extend the results of Experiment 1 and of Roscoe et al. by assessing the reinforcing efficacy of both HP and LP stimuli under more effortful PR schedules.

Experiment 2: PR Assessment

Procedure

Because the results of the reinforcer assessment for Aaron and Logan suggested that their respective LP stimuli functioned as reinforcers, the reinforcing efficacy of these stimuli was evaluated using PR schedules. Because the LP stimulus did not function as a reinforcer for Derrick, he did not participate in the subsequent PR assessment.

The effects of reinforcement on responding under PR schedules were examined using a design similar to that employed for the reinforcer assessment in Experiment 1. For both participants, the PR step size was set at two responses based on the mean response rates obtained during the reinforcement conditions of Experiment 1. In the PR assessment, the ratio requirements continued to increase throughout the session until no responses were emitted for 3 min (Dantzer, 1976). Prior to beginning the subsequent session, the schedule was reset to the lowest step-size value (e.g., PR 2). In the concurrent and LP reinforcement conditions, session duration varied depending on response persistence. The mean session duration during the concurrent and LP reinforcement conditions for Experiment 2 was 14 min across participants.
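The session-termination rule can be illustrated with a short sketch. The function name `responses_before_pause` is hypothetical, and the sketch makes two simplifying assumptions that are not taken from the procedure: response times are measured in seconds from session start, and time spent consuming the reinforcer has already been excluded from those times.

```python
def responses_before_pause(timestamps, pause_s=180.0):
    """Count responses emitted before the first pause of at least pause_s seconds,
    and return that count with the time at which the session would end."""
    emitted = 0
    last = 0.0  # session start
    for t in sorted(timestamps):
        if t - last >= pause_s:
            break  # the termination criterion was met before this response
        emitted += 1
        last = t
    return emitted, last + pause_s


# Three responses, then a 270-s gap: the session ends 180 s after the third response
print(responses_before_pause([10, 20, 30, 300, 310]))  # (3, 210.0)
```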

Concurrent Baseline

This condition was identical to the concurrent baseline conducted in the reinforcer assessment of Experiment 1.

Concurrent Reinforcement

This condition was identical to the concurrent reinforcement condition conducted in the reinforcer assessment, with one notable exception: The response requirement increased by a step size of two following completion of the programmed response requirement. PR step sizes were equal for both HP and LP stimuli and operated independently. For example, given a step size of two, two responses to the task associated with the HP stimulus produced one piece of the HP edible item. Following stimulus consumption, four responses were required to access the HP stimulus. However, only two responses were required to produce the LP stimulus until the first schedule requirement was completed. As in the concurrent reinforcement condition in the reinforcer assessment, participants could freely allocate responding between the two tasks (but not simultaneously), and tasks were placed out of reach during reinforcer delivery and consumption.
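The independence of the two schedules can be made concrete with a small sketch in which each task has its own counter. The class name `PRSchedule` is hypothetical. One detail is an assumption rather than something stated in the procedure: responses already made toward an incomplete requirement on one task are retained when the participant switches to the other task.

```python
class PRSchedule:
    """Tracks one arithmetic PR schedule for a single task."""

    def __init__(self, step_size):
        self.step_size = step_size
        self.requirement = step_size  # current ratio requirement
        self.responses = 0            # responses toward the current requirement
        self.break_point = 0          # last completed requirement

    def respond(self):
        """Register one response; return True if a reinforcer is delivered."""
        self.responses += 1
        if self.responses < self.requirement:
            return False
        self.break_point = self.requirement
        self.requirement += self.step_size
        self.responses = 0
        return True


# Independent schedules for the HP and LP tasks, both with a step size of two
hp, lp = PRSchedule(2), PRSchedule(2)
hp.respond()
delivered = hp.respond()               # second HP response meets the first requirement
print(delivered, hp.requirement, lp.requirement)  # True 4 2
```

After the first HP reinforcer, the HP task requires four responses while the LP task still requires only two, matching the example in the text.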

LP Baseline

This condition was identical to the LP baseline conducted in the reinforcer assessment.

LP Reinforcement

This condition was identical to the LP reinforcement condition conducted in the reinforcer assessment, except, as in the concurrent reinforcement condition of the PR assessment, the response requirement increased by a step size of two, following completion of the programmed response requirement. Thus, when a session began, the participant was required to complete two responses to the task associated with the LP stimulus, which produced one piece of the LP stimulus. Four responses were then required to produce the LP stimulus, followed by six responses, eight responses, and so on. The task was unavailable during reinforcer delivery and consumption.

Results and Discussion

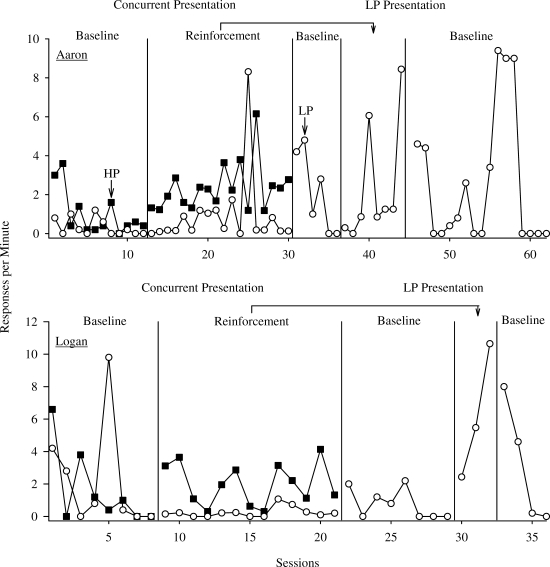

The outcomes of the PR assessment are shown in Figure 2. For Aaron, variable and decreasing levels of responding were observed during the concurrent baseline condition. When the HP and LP stimuli were presented contingent on meeting the response requirement in the concurrent PR reinforcement condition, responding on the task associated with the HP stimulus (M = 2.4 responses per minute) was generally higher than rates on the task associated with the LP stimulus (M = 0.9). In the initial LP baseline, rates of responding eventually decreased to zero levels. When the LP stimulus was delivered contingent on meeting the PR response requirements in the LP reinforcement condition, response rate increased relative to baseline (M = 2.4). A return to baseline resulted in variable responding, with no responding observed during the last four sessions.

Figure 2. Progressive-ratio assessment data for Aaron (top) and Logan (bottom). Data are depicted as responses per minute.

Under the concurrent baseline, Logan's responding gradually decreased to zero levels for both the HP and LP stimuli. He responded at a relatively higher rate on the HP task (M = 2.0 responses per minute) than on the LP task (M = 0.3) in the concurrent reinforcement condition. In the LP baseline, responding was initially variable but eventually decreased to low rates, with no responding in the last three sessions. When the LP reinforcement condition was implemented, a steady increase in response rate was observed (M = 6.2). Finally, in a reversal to the LP baseline, response rates eventually reached zero.

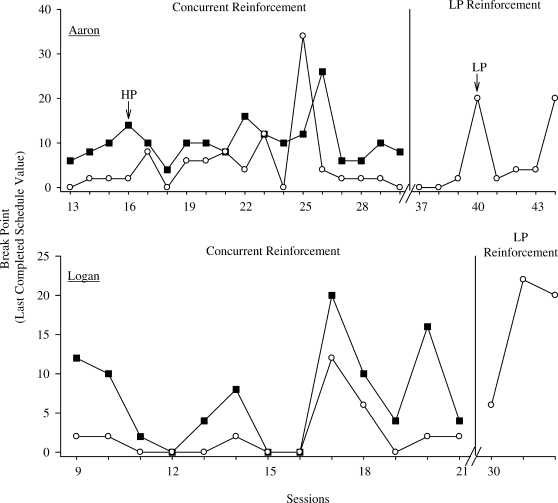

The break points (i.e., the last completed schedule value before a 3-min pause in responding occurred) achieved for each session are depicted in Figure 3. Only data from the reinforcement conditions are included, because PR reinforcement schedules were implemented only under these conditions (and not in baseline). Aaron's data show that for the majority of sessions, higher break points were observed for the HP task (M = 10.3) than for the LP task (M = 5.2) in the concurrent reinforcement condition. Under the LP reinforcement condition, the mean break point for all sessions was 6.5. As with Aaron, Logan's data show generally higher break points for the HP task (M = 6.9) relative to the LP task (M = 2.2) in the concurrent reinforcement condition. Under the LP reinforcement condition, mean break point for responses on the LP task was 16.

Figure 3. Progressive-ratio assessment break-point data for Aaron (top) and Logan (bottom) during the concurrent-schedule presentation and the single-schedule presentation. Session numbers correspond to those in Figure 2, and the break in the x axis indicates the intervening baseline condition.

In Experiment 2, PR schedules were used to evaluate response persistence and reinforcer efficacy when HP and LP stimuli were delivered contingently. Under concurrent reinforcement arrangements, both Aaron and Logan generally allocated more responding toward the task associated with the HP stimulus than to the task associated with the LP stimulus. Similar response patterns were observed previously for both participants under FR 1 reinforcement schedules in the reinforcer assessment of Experiment 1, albeit with less variability. Subsequently, contingent LP stimuli were arranged under single-schedule conditions, and response persistence was observed for both participants. Although Aaron's rates of responding in the LP presentation were quite variable, he responded on the LP task at rates greater than zero. The high response rates are noteworthy given the much leaner reinforcement schedule in place (relative to previous analyses). For Logan, the steady increasing trend in response rates in this condition further underscores the potential of LP stimuli to be effective and durable reinforcers when presented without more highly preferred items.

General Discussion

In Experiment 1, we conducted paired-stimulus preference assessments with individuals with developmental disabilities and used the results to select HP and LP items. These stimuli were then evaluated in reinforcer assessments under concurrent- and single-schedule arrangements to determine the reinforcing efficacy of each stimulus, using an FR 1 schedule. Once we empirically demonstrated that these stimuli functioned as reinforcers, we repeated the concurrent- and single-schedule evaluations, using PR schedules in Experiment 2. The results showed that for both participants, the LP stimulus maintained responding in a single-schedule arrangement and under increasing PR response requirements.

One of the main goals of the present study was to determine whether LP items might function as effective reinforcers in certain contexts. For 2 participants (Aaron and Logan), the results of the reinforcer assessments showed that concurrent presentation of tasks associated with their HP and LP items resulted in relatively higher rates of responding on the HP task. In most sessions of the reinforcer and PR assessments, responding for the HP stimulus was equal to or higher than that for the LP stimulus. This outcome might be expected, and these data support the use of empirically based preference assessments to predict highly preferred items. By contrast, when only the task associated with the LP stimulus was presented, both Aaron and Logan responded on the task associated with the LP item, providing evidence that the LP stimuli functioned as effective positive reinforcers.

The current results are inconsistent with those obtained in a recent study by Glover, Roane, Kadey, and Grow (2008), who found that under single PR reinforcement schedules, individuals rarely allocated responses toward a task that contingently produced LP stimuli. However, there are two notable differences between the procedures used by Glover et al. and the current investigation that may have contributed to the discrepancy in outcomes. The first difference was in the selection of the LP stimulus; Glover et al. evaluated the lowest ranked stimulus from the preference assessment, whereas we selected stimuli that were ranked low on the hierarchy (selected on 22% of opportunities) but not the lowest. In the current study, the item ranked lowest in the preference assessment was not chosen for evaluation to increase the likelihood that the item selected as the LP stimulus might function as a reinforcer (i.e., it was hypothesized that stimuli never approached in the preference assessment would not function as reinforcers). Therefore, it is possible that the lower response rates and break points observed for the LP stimulus in the Glover et al. study under single-schedule conditions were due to the selection of items that were never approached during the preference assessment (for 2 of 3 participants). It is interesting to note, however, that for one participant in the Glover et al. study, whose LP item was selected on 20% of trials in the preference assessment (which was also the lowest ranked item for that participant), response rates and break points achieved were very similar to those observed for Aaron under a similar condition in the current investigation. This similarity in response patterns across studies suggests that there may be a difference between a stimulus that is never selected in a preference assessment and one that is selected on a few trials, and that difference is sufficient to affect reinforcing efficacy.

The second difference between the Glover et al. (2008) study and the current investigation was the manner in which the single-schedule PR conditions were conducted. In the current study, LP stimuli were presented in consecutive single-schedule PR sessions, whereas in the Glover et al. study, LP stimuli were presented singly in a multielement format (alternated with HP stimuli). Thus, the differing results of the two studies may have simply been an artifact of the experimental designs and procedures employed.

In the current investigation the item ranked lowest in the preference assessment was not chosen for evaluation to increase the likelihood that the LP stimulus might function as a reinforcer. That is, we were interested primarily in evaluating the reinforcing efficacy of items that were relatively less preferred and not nonpreferred. Thus, an extension of the current research would be to evaluate the reinforcing efficacy of other stimuli ranked along the preference hierarchy (e.g., those ranked last, as evaluated by Glover et al., 2008; second to last, etc.). Informative results may also be obtained from evaluations of two similarly ranked LP stimuli (taking an approach similar in principle but procedurally opposite to that of Roane et al., 2001).

When the break-point data obtained from the PR assessment are evaluated in terms of total response output, they show that for both participants, under concurrent as well as LP reinforcement conditions, there were several sessions in which a high number of total responses were emitted. As an example, in the concurrent reinforcement condition of the PR assessment for Logan, break points of 20 and 12 were achieved on the HP and LP tasks, respectively, in Session 9. Due to the additive nature of PR schedules, to achieve these break points, Logan had to have made at least 110 responses on the HP task and at least 42 responses on the LP task, resulting in a minimum total of 152 responses emitted during this session. This high combined response output suggests that providing a choice between the delivery of HP and LP stimuli contingent on behavior may result in the occurrence of a large amount of responding overall. Even more compelling are the total numbers of responses emitted during sessions in the LP reinforcement conditions of the PR assessment, when responses produced the LP stimulus. In two sessions (22 and 26), Aaron achieved break points of 20, meaning that he made at least 110 responses in each session before the session ended. In the same condition, Logan made at least 132 and 110 responses in Sessions 15 and 16, respectively, based on break points of 22 and 20. These data suggest that even when responding produces stimuli ranked relatively low in a preference hierarchy, high overall response outputs can be obtained. Such responding may be of particular significance when it is more important to characterize behavior in terms of overall response output rather than rate of responding (e.g., in a classroom setting, where teachers may be more concerned with the amount of work completed than with the rate at which students complete work).
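The minimum response totals cited above follow from summing the completed requirements. With a step size of s and a break point of b = ns, the minimum is s + 2s + ... + ns = s·n(n + 1)/2. The short sketch below reproduces the arithmetic; the function name `min_responses` is hypothetical.

```python
def min_responses(break_point, step_size=2):
    """Minimum number of responses needed to reach a given break point
    under an arithmetic PR schedule with the given step size."""
    if break_point % step_size != 0:
        raise ValueError("break point must be a multiple of the step size")
    n = break_point // step_size  # number of completed requirements
    return step_size * n * (n + 1) // 2


print(min_responses(20))  # 110
print(min_responses(12))  # 42
print(min_responses(22))  # 132
print(min_responses(20) + min_responses(12))  # 152
```

These values are lower bounds because responses made toward a requirement that was not completed before the session ended are not reflected in the break point.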

A primary limitation of the current investigation was the design used for the reinforcer and PR assessments. In Experiment 1, we replicated the design implemented by Roscoe et al. (1999) for the reinforcer assessment. This design limits the conclusions that can be drawn with regard to the absolute reinforcer value of HP stimuli because the HP stimuli were never evaluated in the absence of the LP stimuli. In Experiment 2, we used a design for the PR assessment similar to that of Experiment 1, except that we included a reversal to baseline following the LP reinforcement condition. Even though we showed that the respective LP stimuli functioned as reinforcers in a single-schedule context for Aaron and Logan, the reinforcing effects of the LP item were less apparent for Aaron in the PR assessment. Therefore, the reinforcing efficacy of LP items may have been clearer had a reversal to the LP reinforcement condition (which was not conducted in the current investigation due to time constraints) been included.

The present study adds to the literature in several ways. First, it is a systematic replication of Roscoe et al. (1999), who showed that HP items (based on preference assessment results) maintained responding under concurrent-schedule conditions, and LP items maintained responding under single-schedule conditions. Similar results were obtained in the current investigation with 2 of 3 participants in the reinforcer assessment, whereas Roscoe et al. reported this finding for 6 of 7 participants. Second, we demonstrated that reinforcer value may be more appropriately assessed under PR schedules than under FR 1 schedules. Even though the results of Roscoe et al. and the reinforcer assessment in the current evaluation showed that contingent delivery of LP items maintained responding, participants were required to make only one response to produce access to their LP items. In conducting the PR assessment, we showed that responding to the LP task was maintained when stimuli were contingently delivered under increasing response requirements. Third, the schedules used in the PR assessment are more akin to the leaner schedules of reinforcement typically found in the natural environment (because the reinforcement schedules were gradually thinned within each session) than to the more dense schedules (e.g., FR 1) commonly used in applied research.

The extant basic (nonhuman and human) literature describes three measures commonly used to evaluate reinforcer efficacy: (a) peak response rate from single-schedule arrangements, (b) preference for one alternative over another in a concurrent-schedule arrangement, and (c) PR break point (Bickel, Marsch, & Carroll, 2000; Madden, Smethells, Ewan, & Hursh, 2007). Of the three measures, PR break point has been the least investigated in applied research, although it may provide unique information on parameters such as reinforcer durability. By evaluating stimuli using PR schedules and using information (e.g., break points) derived from these evaluations, we may be better able to articulate under what conditions and to what extent those stimuli function as reinforcers. From both experimental and clinical standpoints, use of PR schedules has the potential to make the reinforcer identification process context specific (i.e., LP stimuli may effectively support responding in some situations but not in others). Roane et al. (2001) suggested that in lieu of conducting extended analyses involving exposure to a single FR schedule value, PR schedules may be more time efficient for examining responding under increasing response requirements because each schedule value occurs only once per session. In addition, response persistence may be directly assessed because sessions are not terminated until the individual ceases to engage in the task for a predetermined period of time (i.e., persistence on a given task is dictated largely by the individual and not by a session duration set by the evaluator).

Furthermore, the break points obtained from PR sessions can be helpful in guiding experimenters and clinicians toward selecting effective stimuli to use in reinforcer-based interventions, because break points provide information on the maximum number of responses the individual will emit given the contingency in place. Selection of durable reinforcers is particularly important because treatments using those stimuli may be implemented over long periods of time or may involve repetitive or effortful tasks. Thus, assessments involving PR schedules may reduce the number of preference or reinforcer assessments that need to be conducted in the future for that individual, especially if several stimuli are evaluated for reinforcer efficacy during initial assessments. Toward that end, one implication of the current findings is that LP stimuli might be useful under certain conditions. In some cases, using LP items could be ideal when the item determined to be highly preferred is inappropriate or infeasible. For example, if a relatively less preferred book can maintain responding and can be used in a classroom setting in lieu of a more highly preferred, but noisy, computer game, such a modification in a behavioral intervention would likely make it more acceptable to teachers, caregivers, and other related individuals. Even though responding may not occur at comparable rates when the LP item is delivered instead of the HP item, the LP item may be useful in producing relatively high overall response output. As the results of the current investigation have shown, evaluating LP stimuli in concurrent- and single-schedule arrangements, as well as under increasing response requirements, can reveal valuable information regarding reinforcer efficacy.

Acknowledgments

This experiment was completed in partial fulfillment of the requirements of the MA degree by the first author. We thank Ken Beauchamp and Carolynn Kohn for their comments on an earlier version of this manuscript. We also thank Stephany Crisolo, Weston Rieland, Noël Ross, Sophia Spearman, and the undergraduate research assistants who were integral in carrying out this research. Portions of this research were presented at the 25th meeting of the California Association for Behavior Analysis in Burlingame and the 33rd meeting of the Association for Behavior Analysis in San Diego, California. Monica Francisco is now at the University of Kansas, and Jolene Sy is now at the University of Florida.

References

- Baron A, Mikorski J, Schlund M. Reinforcement magnitude and pausing on progressive-ratio schedules. Journal of the Experimental Analysis of Behavior. 1992;58:377–388. doi: 10.1901/jeab.1992.58-377.

- Bickel W.K, Marsch L.A, Carroll M.E. Deconstructing relative reinforcing efficacy and situating the measures of pharmacological reinforcement with behavioral economics: A theoretical proposal. Psychopharmacology. 2000;153:44–56. doi: 10.1007/s002130000589.

- Dantzer R. Effect of diazepam on performance of pigs in a progressive ratio schedule. Physiology and Behavior. 1976;17:161–163. doi: 10.1016/0031-9384(76)90286-9.

- DeLeon I.G, Fisher W.W, Herman K.M, Crosland K.C. Assessment of a response bias for aggression over functionally equivalent appropriate behavior. Journal of Applied Behavior Analysis. 2000;33:73–77. doi: 10.1901/jaba.2000.33-73.

- DeLeon I.G, Iwata B.A, Goh H, Worsdell A.S. Emergence of reinforcer preference as a function of schedule requirements and stimulus similarity. Journal of Applied Behavior Analysis. 1997;30:439–449. doi: 10.1901/jaba.1997.30-439.

- DeLeon I.G, Iwata B.A, Roscoe E.M. Displacement of leisure reinforcers by food during preference assessments. Journal of Applied Behavior Analysis. 1997;30:475–484. doi: 10.1901/jaba.1997.30-475.

- Egel A.L. Reinforcer variation: Implications for motivating developmentally disabled children. Journal of Applied Behavior Analysis. 1981;14:345–350. doi: 10.1901/jaba.1981.14-345.

- Fisher W.W, Mazur J.E. Basic and applied research on choice responding. Journal of Applied Behavior Analysis. 1997;30:387–410. doi: 10.1901/jaba.1997.30-387.

- Fisher W, Piazza C.C, Bowman L.G, Hagopian L.P, Owens J.C, Slevin I. A comparison of two approaches for identifying reinforcers for persons with severe and profound disabilities. Journal of Applied Behavior Analysis. 1992;25:491–498. doi: 10.1901/jaba.1992.25-491.

- Glover A.C, Roane H.S, Kadey H.J, Grow L.L. Preference for reinforcers under progressive- and fixed-ratio schedules: A comparison of single and concurrent arrangements. Journal of Applied Behavior Analysis. 2008;41:163–176. doi: 10.1901/jaba.2008.41-163.

- Hodos W. Progressive ratio as a measure of reward strength. Science. 1961;134:943–944. doi: 10.1126/science.134.3483.943.

- Hodos W, Kalman G. Effects of increment size and reinforcer volume on progressive ratio performance. Journal of the Experimental Analysis of Behavior. 1963;6:387–392. doi: 10.1901/jeab.1963.6-387.

- Johnson M.W, Bickel W.K. Replacing relative reinforcing efficacy with behavioral economic demand curves. Journal of the Experimental Analysis of Behavior. 2006;85:73–93. doi: 10.1901/jeab.2006.102-04.

- Madden G.J, Smethells J.R, Ewan E.E, Hursh S.R. Tests of behavioral-economic assessments of relative reinforcer efficacy: Economic substitutes. Journal of the Experimental Analysis of Behavior. 2007;87:219–240. doi: 10.1901/jeab.2007.80-06.

- Pierce W.D, Cheney C.D. Behavior analysis and learning. Mahwah, NJ: Erlbaum; 2004. pp. 235–267.

- Ringdahl J.E, Vollmer T.R, Marcus B.A, Roane H.S. An analogue evaluation of environmental enrichment: The role of stimulus preference. Journal of Applied Behavior Analysis. 1997;30:203–216.

- Roane H.S, Call N.A, Falcomata T.S. A preliminary analysis of adaptive responding under open and closed economies. Journal of Applied Behavior Analysis. 2005;38:335–348. doi: 10.1901/jaba.2005.85-04.

- Roane H.S, Lerman D.C, Vorndran C.M. Assessing reinforcers under progressive schedule requirements. Journal of Applied Behavior Analysis. 2001;34:145–167. doi: 10.1901/jaba.2001.34-145.

- Roscoe E.M, Iwata B.A, Kahng S. Relative versus absolute reinforcement effects: Implications for preference assessments. Journal of Applied Behavior Analysis. 1999;32:479–493. doi: 10.1901/jaba.1999.32-479.

- Saunders R.R, McEntee J.E, Saunders M.D. Interaction of reinforcement schedules, a behavioral prosthesis, and work-related behavior in adults with mental retardation. Journal of Applied Behavior Analysis. 2005;38:163–176. doi: 10.1901/jaba.2005.9-04.

- Shahan T.A, Bickel W.K, Madden G.J, Badger G.J. Comparing the reinforcing efficacy of nicotine containing and de-nicotinized cigarettes: A behavioral economic analysis. Psychopharmacology. 1999;147:210–216. doi: 10.1007/s002130051162.

- Taravella C.C, Lerman D.C, Contrucci S.A, Roane H.S. Further evaluation of low-ranked items in stimulus-choice preference assessments. Journal of Applied Behavior Analysis. 2000;33:105–108. doi: 10.1901/jaba.2000.33-105.

- Tustin R.D. Preference for reinforcers under varying schedule arrangements: A behavioral economic analysis. Journal of Applied Behavior Analysis. 1994;27:597–606. doi: 10.1901/jaba.1994.27-597.