Abstract

Consideration of reinforcer magnitude may be important for maximizing the efficacy of treatment for problem behavior. Nonetheless, relatively little is known about children's preferences for different magnitudes of social reinforcement or the extent to which preference is related to differences in reinforcer efficacy. The purpose of the current study was to evaluate the relations among reinforcer magnitude, preference, and efficacy by drawing on the procedures and results of basic experimentation in this area. Three children who engaged in problem behavior that was maintained by social positive reinforcement (attention, access to tangible items) participated. Results indicated that preference for different magnitudes of social reinforcement may predict reinforcer efficacy and that magnitude effects may be mediated by the schedule requirement.

Keywords: concurrent operants, preference, progressive-ratio schedules, reinforcement magnitude, reinforcer efficacy

Various parameters of reinforcement have been found to influence responding. For example, research findings have demonstrated more responding (i.e., a preference) for response alternatives that produce higher rates of reinforcement (e.g., Mace, Neef, Shade, & Mauro, 1994), higher quality reinforcers (e.g., Koehler, Iwata, Roscoe, Rolider, & O'Steen, 2005; Neef, Mace, Shea, & Shade, 1992), and more immediate reinforcers (e.g., Neef, Mace, & Shade, 1993) under concurrent-operants (i.e., choice) arrangements. Taken together, this line of research suggests that various dimensions of reinforcement may have an important role in determining reinforcer effectiveness.

One parameter of reinforcement that has begun to receive attention in the applied literature is reinforcement magnitude. Magnitude refers to the quantity, intensity, or duration of the reinforcer provided for responding (Hoch, McComas, Johnson, Faranda, & Guenther, 2002). For example, if the reinforcer is a musical toy, magnitude could be manipulated by varying the number of toys available to the individual (quantity), the volume of the music generated by the toy (intensity), or the amount of time that the individual has access to the toy (duration). Results of numerous basic studies with nonhuman subjects suggest that reinforcement magnitude can influence response allocation and response rates (e.g., Catania, 1963; Hodos, 1961; Hutt, 1954; Jenkins & Clayton, 1949; Reed, 1991; Reed & Wright, 1988; Stebbins, Mead, & Martin, 1959). Thus, this parameter may be important to consider when establishing behavior programs for individuals with developmental disabilities. A growing number of applied studies have examined the relation between the magnitude of contingent reinforcers and responding, but the results have been inconsistent (e.g., Hoch et al.; Lerman, Kelley, Van Camp, & Roane, 1999; Lerman, Kelley, Vorndran, Kuhn, & LaRue, 2002; Lovitt & Curtiss, 1969; Peck et al., 1996; Roane, Falcomata, & Fisher, 2007; Volkert, Lerman, & Vorndran, 2005; Vollmer, Borrero, Lalli, & Daniel, 1999). Thus, although this parameter is often discussed in applied textbooks (e.g., Cooper, Heron, & Heward, 2007), few guidelines are available for determining how much of the reinforcer to deliver when using reinforcement-based procedures.

In several studies, different amounts of reinforcement produced little, if any, differences in response acquisition or maintenance (e.g., Lerman et al., 1999, 2002; Lovitt & Curtiss, 1969; Volkert et al., 2005). By contrast, results of other studies suggest that reinforcement magnitude does influence responding under some circumstances (e.g., Hoch et al., 2002; Peck et al., 1996; Roane et al., 2007; Vollmer et al., 1999). For example, Peck et al. evaluated the combined effects of reinforcer magnitude and quality on response allocation while treating problem behavior maintained by attention or escape from demands. Choice between appropriate behavior and either problem behavior or an arbitrary response was evaluated within a concurrent arrangement. All participants allocated more responses to the alternative that produced the longer duration and higher quality reinforcer (e.g., 2 min of enthusiastic attention vs. 10 s of neutral attention). However, the role of reinforcement magnitude was not clear, because magnitude and quality were manipulated simultaneously.

The inconsistent outcomes reported in applied studies on reinforcement magnitude could be due to at least two procedural variations. First, it is possible that magnitude reliably influences responding under concurrent schedules (e.g., Hoch et al., 2002; Peck et al., 1996; Vollmer et al., 1999) but not under single-operant schedules (e.g., Lerman et al., 2002; Volkert et al., 2005). In basic studies, reinforcement magnitude has been found to be positively related (e.g., Hutt, 1954; Jenkins & Clayton, 1949; Reed & Wright, 1988; Stebbins et al., 1959), negatively related (e.g., Belke, 1997; Lowe, Davey, & Harzem, 1974; Staddon, 1970), or unrelated (e.g., Catania, 1963; Keesey & Kling, 1961) to rates of responding under single-operant schedules. By contrast, studies using concurrent schedules generally report a positive relation between reinforcer magnitude and responding (e.g., Catania; Reed, 1991; see Bonem & Crossman, 1988, for a review of basic research).

Second, the effects of magnitude on responding under single-operant schedules may depend on the reinforcement schedule used. Reinforcement has been delivered on a continuous schedule in most applied studies on reinforcement magnitude (e.g., Lerman et al., 1999; Volkert et al., 2005). In a review of basic research, Reed (1991) noted that a positive relation between response rates and reinforcer magnitude has been found under schedules that tend to increase responding (e.g., thin ratio schedules); conversely, a negative relation has been observed under schedule requirements that decrease responding (e.g., differential reinforcement of low-rate behavior).

A positive relation between reinforcer magnitude and responding also has been observed in studies using increasing schedule requirements. For example, Hodos (1961) examined the effects of different reinforcer magnitudes (i.e., sweetened condensed milk diluted with various amounts of water) on response rate under progressive-ratio (PR) schedules. As the reinforcer magnitude was increased (i.e., as less water was added to the milk), responding persisted under higher schedule requirements, suggesting a positive relation between magnitude and reinforcer efficacy.

A growing number of basic and applied studies have shown that PR schedules are useful for comparing the efficacy of different reinforcers and for evaluating variables that may interact with reinforcement schedules to influence responding (e.g., Baron & Derenne, 2000; Baron, Mikorski, & Schlund, 1992; Roane, Lerman, & Vorndran, 2001; Stafford & Branch, 1998; Thomas, 1974). For example, in Roane et al., two stimuli that produced similar reinforcement effects under low schedule requirements (e.g., PR 1) were differentially effective as the schedule requirement was increased. Similar findings have been obtained in applied studies using progressively increasing fixed-ratio (FR) schedule requirements (e.g., DeLeon, Iwata, Goh, & Worsdell, 1997; Tustin, 1994).

Thus, the disparity in applied findings on reinforcement magnitude may be explained, in part, by the schedule arrangement and schedule of reinforcement used. Systematic analysis of schedule arrangements (i.e., concurrent vs. single operant; dense vs. thin ratio schedules) is needed in research on the relation between reinforcer efficacy and magnitude. Despite inconsistent applied findings, basic research indicates that reinforcer magnitude can influence responding in important ways, especially in choice situations or when response requirements are increased systematically over time. These findings are clinically relevant because reinforcement is often available for multiple responses during treatment (e.g., Mace & Roberts, 1993) and because schedule thinning is a common component of reinforcement-based programs (e.g., Hanley, Iwata, & Thompson, 2001; Kahng, Iwata, DeLeon, & Wallace, 2000; Roane, Fisher, Sgro, Falcomata, & Pabico, 2004). Basic findings suggest that treatment will be more successful if the magnitude of the reinforcer is increased as the schedule is thinned, a recommendation that has appeared in applied textbooks (e.g., Cooper et al., 2007). Results of a recent study by Roane et al. (2007) also provided preliminary support for this recommendation in the context of treating problem behavior with differential reinforcement of other behavior.

However, relatively little is known about children's preference for different magnitudes of reinforcers or the extent to which relative preference would be related to differences in reinforcer efficacy in the context of increasing schedule requirements. Thus, the generality of basic findings on magnitude needs to be established with clinical populations and with the types of reinforcers that are typically used in application (e.g., attention, toys).

The purpose of the current study was to evaluate the basic relations among reinforcer magnitude, preference, and efficacy by drawing on the procedures and results of basic research in this area. First, a concurrent-operants preference assessment was used to determine whether children with developmental disabilities would demonstrate a preference for different durations of social reinforcement. A PR schedule of reinforcement was then arranged to evaluate the relative effects of different amounts of the reinforcer on free-operant responding. The use of PR schedules also permitted an examination of potential interactions between the schedule of reinforcement and magnitude. Finally, the relation between preference and efficacy was examined to determine if relative preference was a reliable predictor of relative reinforcer efficacy.

Method

Participants and Settings

Participants were selected from children who had been referred for the functional analysis and treatment of problem behavior (i.e., aggression and disruption). The first 4 children whose problem behavior was found to be maintained by social positive reinforcement (i.e., access to tangible items or attention) participated in the study. Three of these children completed the study. The 4th child did not complete the study because the reinforcer selected for the magnitude assessment failed to maintain responding, suggesting that it no longer functioned as a reinforcer. Seth and Chad were 5-year-old boys who had been diagnosed with autism, and Whitney was an 11-year-old girl who had been diagnosed with autism and a seizure disorder. All participants communicated vocally, both spontaneously and when prompted, and demonstrated good receptive language skills (e.g., followed two-step instructions). None of the participants demonstrated sensory impairments, although Whitney had some delays in fine motor skills. Whitney took medication for her seizure disorder throughout the study.

A functional analysis using procedures described by Iwata, Dorsey, Slifer, Bauman, and Richman (1982/1994; Seth and Chad) or Northup et al. (1991; Whitney) was conducted to identify the reinforcer that maintained each participant's problem behavior (data available from the second author). Results indicated that each participant's problem behavior was maintained by multiple social reinforcers (access to preferred items, attention, and escape from demands for Seth and Chad; access to preferred items and escape from demands for Whitney). For the current investigation, two reinforcers (attention and access to tangible items) were evaluated with Seth, and one reinforcer was evaluated for Chad (attention) and Whitney (access to preferred items).

Trained doctoral students conducted sessions during each magnitude preference and reinforcer assessment. Sessions were conducted at the participant's day care (Seth), in rooms at a university-based summer program for children with autism (Seth and Chad), or in an unused classroom at the participant's school (Whitney). Each room was equipped with materials necessary for sessions, including a table, chairs, and stimuli used during the assessments (e.g., color cards, toys). Other objects (e.g., trash can, textbooks, extra desks, sofa) stored in the room at the day care or school were also present; however, participants were blocked from interacting with these items when necessary.

Response Measurement, Interobserver Agreement, and Procedural Integrity

Graduate and undergraduate students (who had received previous training in direct observation and data collection) used laptop computers to collect frequency and duration data on targeted behaviors during each assessment. During the magnitude preference assessment, data were collected on the number of times each magnitude was selected (defined as the participant touching the stimulus associated with that magnitude). Data were converted to the percentage of trials on which each magnitude was selected by dividing the number of times it was chosen by the total number of trials on which it was presented and multiplying by 100%. A preference for a particular magnitude was indicated if a participant chose that magnitude on a higher percentage of trials than both the comparison magnitude and no reinforcement for at least three consecutive sessions.
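The percentage calculation and the preference criterion can be illustrated with a short sketch. It assumes a hypothetical data layout in which each session maps an option label to a pair (times chosen, times presented), and it assumes that a tie for the highest percentage does not count toward the three-session run; neither detail is specified in the procedures above.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical layout: option label -> (times chosen, times presented)
Session = Dict[str, Tuple[int, int]]


def percent_selected(chosen: int, presented: int) -> float:
    """Percentage of trials on which an option was selected."""
    return 100 * chosen / presented if presented else 0.0


def preferred_option(sessions: List[Session], run_length: int = 3) -> Optional[str]:
    """Return the option chosen on a higher percentage of trials than every
    other option for `run_length` consecutive sessions, or None."""
    current, run = None, 0
    for session in sessions:
        pcts = {label: percent_selected(c, p) for label, (c, p) in session.items()}
        best = max(pcts.values())
        leaders = [label for label, v in pcts.items() if v == best]
        # Assumption: a tie for the highest percentage ends any ongoing run.
        leader = leaders[0] if len(leaders) == 1 else None
        if leader is None:
            current, run = None, 0
        elif leader == current:
            run += 1
        else:
            current, run = leader, 1
        if current is not None and run >= run_length:
            return current
    return None


session = {"120 s": (4, 5), "10 s": (1, 5), "none": (0, 5)}
print(preferred_option([session, session, session]))  # 120 s
```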

During the reinforcer assessment, data were collected on the frequency of the target response. The target response for Seth and Chad was a button press, defined as pressing a button with the finger or hand until an audible click was emitted; for Whitney, the target response was a chip insertion, defined as inserting a poker chip into a slot at the top of an enclosed cylinder. Arbitrary responses were chosen instead of academic responses because each participant's problem behavior was determined to be maintained, at least in part, by escape from academic demands; the target responses were thus selected to reduce the likelihood of occasioning problem behavior, which was placed on extinction throughout the study. Data were converted to the cumulative number of responses emitted across sessions by adding the number of responses emitted in each session to the total emitted in all previous sessions. Cumulative frequency displays were selected on the basis of their use in previous studies using PR schedules (e.g., Roane et al., 2001).
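Converting per-session counts to a cumulative record amounts to a running sum. The sketch below is illustrative only; the optional starting value is a hypothetical convenience for beginning a condition's record from a total already accumulated (e.g., in baseline).

```python
from itertools import accumulate
from typing import List


def cumulative_responses(session_counts: List[int], start: int = 0) -> List[int]:
    """Running total of responses across successive sessions.

    `start` is added before the first session so the record can begin from a
    previously accumulated total (the `initial` argument needs Python 3.8+).
    """
    # accumulate(..., initial=start) yields `start` first; drop that value.
    return list(accumulate(session_counts, initial=start))[1:]


print(cumulative_responses([12, 0, 30]))  # [12, 12, 42]
```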

To assess procedural integrity during both assessments, data were collected on the duration of each reinforcer delivery, defined as the total amount of time the experimenter provided the participant with access to the relevant reinforcer (i.e., access to tangible items or attention). A delivery was scored as correct if its duration was within 5 s above or below the corresponding magnitude of reinforcement. For example, if the 120-s magnitude was chosen, a delivery lasting between 115 s and 125 s was scored as correct. Every reinforcer delivery was scored during all sessions. Procedural integrity was calculated by dividing the number of correct reinforcer deliveries by the total number of reinforcer deliveries and multiplying by 100%. Mean integrity scores for all participants were 94% or higher. During the preference assessments, mean integrity scores for each participant were as follows: Seth (tangible), 98% (range, 33% to 100%); Seth (attention), 98% (range, 50% to 100%); Chad, 96% (range, 50% to 100%); and Whitney, 95% (range, 50% to 100%). Mean integrity scores during the reinforcer assessments were as follows: Seth (tangible), 95% (range, 75% to 100%); Seth (attention), 94% (range, 53% to 100%); Chad, 97% (range, 88% to 100%); and Whitney, 98% (range, 50% to 100%).
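The ±5-s integrity window and the percentage calculation are sketched below. Representing each delivery as a (target, delivered) pair of durations in seconds is an assumption made for illustration.

```python
from typing import List, Tuple


def delivery_correct(target_s: float, delivered_s: float, tolerance_s: float = 5.0) -> bool:
    """Score one delivery: correct if within +/- tolerance of the target duration."""
    return abs(delivered_s - target_s) <= tolerance_s


def integrity_percentage(deliveries: List[Tuple[float, float]]) -> float:
    """Correct deliveries divided by all deliveries, multiplied by 100."""
    if not deliveries:
        raise ValueError("no reinforcer deliveries to score")
    correct = sum(delivery_correct(target, delivered) for target, delivered in deliveries)
    return 100 * correct / len(deliveries)


# 120-s target: 115 s and 125 s are both scored as correct; 130 s is not.
print(integrity_percentage([(120, 115), (120, 125), (120, 130), (10, 12)]))  # 75.0
```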

Interobserver agreement data were collected for 62% of all preference assessment sessions and 64% of all reinforcer assessment sessions by having a second observer simultaneously but independently record occurrences of the target behaviors. Agreement was calculated separately for the preference and reinforcer assessments. During the preference assessment, the responses recorded by each observer were compared on a trial-by-trial basis. An agreement was defined as both observers recording the same stimulus chosen on a trial or both recording that no choice was made. Interobserver agreement coefficients for the preference assessment were calculated by dividing the number of trials with agreements by the total number of trials and multiplying by 100%; these coefficients were collapsed across choices of the large, small or medium, and no-reinforcement stimuli. During the reinforcer assessment, each session was divided into 10-s intervals, and the two observers' data were compared interval by interval. An agreement was defined as both observers scoring the same number of responses within an interval, and a disagreement as the observers scoring different numbers of responses within the interval. Coefficients were calculated by dividing the number of intervals with agreements by the number of intervals with agreements plus disagreements and multiplying by 100%; they were collapsed across the target behaviors for each assessment. For Seth, mean agreement coefficients for the magnitude preference assessments were 100% (tangible) and 99% (range, 83% to 100%; attention), and his coefficients for the reinforcer assessments were 98% (range, 89% to 100%; tangible) and 99% (range, 95% to 100%; attention). For Chad, agreement coefficients were 100% for the preference assessment and 99% (range, 96% to 100%) for the reinforcer assessment. The agreement coefficients for Whitney's preference and reinforcer assessments were 99% (range, 83% to 100%) and 99% (range, 67% to 100%), respectively.
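Both agreement calculations are sketched below under stated assumptions: each observer's preference-assessment record is a list of choices (None for no choice), and each observer's reinforcer-assessment record is a hypothetical list of response times in seconds from session start. Because every interval is scored as either an agreement or a disagreement, agreements divided by (agreements plus disagreements) equals agreements divided by the number of intervals; intervals in which both observers scored zero responses count as agreements.

```python
import math
from typing import List, Optional


def trial_by_trial_ioa(obs1: List[Optional[str]], obs2: List[Optional[str]]) -> float:
    """Percentage of trials on which both observers recorded the same choice."""
    agreements = sum(a == b for a, b in zip(obs1, obs2))
    return 100 * agreements / len(obs1)


def interval_counts(times_s: List[float], n_intervals: int, interval_s: float) -> List[int]:
    """Number of responses falling in each consecutive interval."""
    counts = [0] * n_intervals
    for t in times_s:
        # Clamp a response at the very end of the session into the last interval.
        counts[min(int(t // interval_s), n_intervals - 1)] += 1
    return counts


def exact_count_interval_ioa(times1: List[float], times2: List[float],
                             session_s: float, interval_s: float = 10.0) -> float:
    """Percentage of intervals in which both observers scored the same count."""
    n_intervals = math.ceil(session_s / interval_s)
    c1 = interval_counts(times1, n_intervals, interval_s)
    c2 = interval_counts(times2, n_intervals, interval_s)
    agreements = sum(a == b for a, b in zip(c1, c2))
    return 100 * agreements / n_intervals


print(trial_by_trial_ioa(["large", "small", None, "large", "large"],
                         ["large", "small", None, "small", "large"]))  # 80.0
```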

Procedure

The reinforcers used during the preference and reinforcer assessments were those that had been found to maintain, at least in part, each participant's problem behavior (i.e., access to tangible items and attention for Seth, attention for Chad, and access to tangible items for Whitney). Tangible items were identical to those used during each participant's functional analysis; however, the attention delivered differed from the attention used in the functional analysis (verbal reprimands, statements of concern). During the magnitude preference and reinforcer assessments, attention consisted of verbal and physical interaction in the form of praise, tickles, hugs, songs, verbal stories, and games (e.g., spinning around like an airplane). Each participant was exposed to two assessments. First, a magnitude preference assessment was conducted to identify preference for a particular magnitude (i.e., large or small) of reinforcement and to identify a magnitude value for which no preference was shown. The large magnitude was 120-s access to the reinforcer, and the small magnitude was 10-s access to the reinforcer. These values were chosen because they represented a range commonly used in applied research on the assessment and treatment of problem behavior (e.g., from brief attention [Worsdell, Iwata, Hanley, Thompson, & Kahng, 2000] to 120 s [Volkert et al., 2005]). Following the magnitude preference assessment, a magnitude reinforcer assessment was conducted to evaluate the reinforcer efficacy of each magnitude value. Seth was exposed to the preference and reinforcer assessments separately for two reinforcers (i.e., access to tangible items and attention). Throughout the study, problem behavior was placed on extinction.

Discrimination Training

Prior to the magnitude preference assessment, sessions were conducted to teach each participant to discriminate among three different-colored cards (Seth and Whitney) or white cards with different numbers on them (Chad) that would be correlated with different magnitudes of reinforcement (all cards were 12.7 cm by 12.7 cm). The stimuli were placed on a board an equal distance from each other (approximately 0.6 cm) and from the participant (approximately 30.5 cm). Three phases of discrimination training were conducted in a concurrent-operants arrangement: (a) forced-choice trials during which the experimenter physically guided the participant to choose the stimuli associated with large or small durations of reinforcement or no reinforcement five times each, (b) free-choice trials between two stimuli (i.e., either the small or large magnitude of reinforcement and no reinforcement) that were terminated when the participant selected the large or small magnitude of reinforcement relative to no reinforcement for five consecutive trials, and (c) a free-choice concurrent-operants arrangement in which all three options were available; this was terminated following five consecutive trials in which the participant did not select the no-reinforcement stimulus. Sessions continued until the criteria for each phase of discrimination training were met or 1 hr elapsed, whichever came first. (Additional discrimination training procedures are available from the second author.)
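The termination rules for Phases (b) and (c) both reduce to counting consecutive trials on which the no-reinforcement stimulus was not selected. A minimal sketch follows; the stimulus label is hypothetical, every trial is assumed to end in a selection, and the 1-hr time limit is not modeled.

```python
from typing import Iterable, Optional

NO_REINFORCEMENT = "none"  # hypothetical label for the no-reinforcement card


def trials_to_criterion(choices: Iterable[str], run_length: int = 5) -> Optional[int]:
    """Return the 1-based trial on which `run_length` consecutive selections of a
    reinforcement stimulus were completed, or None if the criterion was not met."""
    run = 0
    for trial, choice in enumerate(choices, start=1):
        # Any selection of the no-reinforcement card resets the count.
        run = run + 1 if choice != NO_REINFORCEMENT else 0
        if run >= run_length:
            return trial
    return None


print(trials_to_criterion(["large", "none", "small", "large", "large", "small", "large"]))  # 7
```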

When tangible items were used as the reinforcer, a multiple-stimulus-without-replacement (MSWO) preference assessment (DeLeon & Iwata, 1996) was conducted prior to each session using the tangible items (toys) that had been evaluated in the functional analysis. For Seth, the items were a vibrating cat, an airplane, a slinky, a transforming action figure, and a toy helicopter. For Whitney, the items were a tape player that played children's songs, a mirror ball, a bumpy ball, a musical toy with a mirror on it, and a vibrating cat. The top two items from the MSWO assessment were used as reinforcers during each subsequent session.
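One common way to rank items from an MSWO assessment, following the selection-percentage logic of DeLeon and Iwata (1996), is to divide the number of times each item was chosen by the number of trials on which it was available. The sketch below assumes that each array is recorded as the order in which items were selected; the number of arrays per pre-session assessment is not stated above, and the selection orders in the example are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Sequence


def selection_percentages(items: Sequence[str], arrays: List[List[str]]) -> Dict[str, float]:
    """Times selected / trials available * 100 for each item across MSWO arrays.

    Each array lists items in the order selected (without replacement): the item
    chosen at 0-based position k was available on k + 1 trials, and an item never
    chosen was available on every trial of that array.
    """
    selected: Counter = Counter()
    available: Counter = Counter()
    for order in arrays:
        for position, item in enumerate(order):
            selected[item] += 1
            available[item] += position + 1
        for item in items:
            if item not in order:
                available[item] += len(order)
    return {item: (100 * selected[item] / available[item]) if available[item] else 0.0
            for item in items}


def top_items(percentages: Dict[str, float], k: int = 2) -> List[str]:
    """Highest-percentage items; ties keep the order in which items were listed."""
    return sorted(percentages, key=percentages.get, reverse=True)[:k]


items = ["cat", "airplane", "slinky", "action figure", "helicopter"]
arrays = [["cat", "slinky", "airplane", "action figure", "helicopter"],  # hypothetical
          ["slinky", "cat", "airplane", "helicopter", "action figure"]]
print(top_items(selection_percentages(items, arrays)))  # ['cat', 'slinky']
```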

Magnitude Preference Assessment

Preference for the three magnitude values (i.e., small, large, and no reinforcement) was evaluated using a concurrent-operants arrangement (Fisher et al., 1992; Vollmer et al., 1999). Each session consisted of five choice trials. Procedures were identical to those described above for the final phase of discrimination training, except that the verbal prompt was changed from an instruction (i.e., saying, “pick one”) to a statement that indicated a choice was available (“Here, you can pick one if you want”). Attempts to select more than one stimulus were blocked, and the experimenter reissued the verbal prompt to select one stimulus. If the participant touched the stimulus associated with either the small or large magnitude of reinforcement, the reinforcer being evaluated (i.e., access to tangible items or attention) was delivered for the corresponding magnitude as previously described. If participants chose the no-reinforcement stimulus, the experimenter continued item restriction (tangible) or turned away (attention) for 10 s. The stimuli were re-presented at the end of each reinforcement (or no reinforcement) interval. If participants did not make a choice, the experimenter stated “You can pick one if you want” every 30 s until a choice was made. Throughout the assessment, the participant was free to move about the room.

As previously noted, preference was defined as the participant's choice of a particular magnitude on a higher percentage of trials than the comparison magnitude and no reinforcement for at least three consecutive preference assessment sessions. The assessment continued until this criterion was met or at least five sessions were conducted, whichever came first. For all participants, a nonpreferred value was identified for use in the subsequent assessment of reinforcer efficacy (see further explanation below). When preference was shown for the large magnitude (Seth, attention and tangible; Chad, attention), the small magnitude was increased systematically by approximately 50% of the distance between the small and large magnitudes (e.g., from 10 s to 60 s for Seth) until a value was identified at which no preference was observed. Because of experimental error, Chad's increase from 60 s to 105 s was slightly larger than this rule specified. When no preference was observed during the initial choice of 10 s versus 120 s for Whitney, the large magnitude was increased by 50% (i.e., to 180 s) to evaluate whether a larger difference between the values would affect preference. A different stimulus was associated with each new value, and discrimination training for all new values was conducted as described above before additional preference assessment sessions were conducted. Preference for one magnitude over another was evaluated in a reversal design for Seth (ABAB) and Chad (ABCAC). An AB design was used for Whitney because no preference was observed.
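The two adjustment rules can be written as simple arithmetic. This is a sketch only: the study describes the small-magnitude step as approximately 50% of the distance, and the values actually used departed from the formula (60 s rather than 65 s for Seth; 105 s rather than 90 s for Chad, because of experimental error).

```python
def raise_small_magnitude(small_s: float, large_s: float, fraction: float = 0.5) -> float:
    """Increase the small magnitude by `fraction` of its distance from the large one."""
    return small_s + fraction * (large_s - small_s)


def raise_large_magnitude(large_s: float, fraction: float = 0.5) -> float:
    """Increase the large magnitude by `fraction` of its current value."""
    return large_s * (1 + fraction)


print(raise_small_magnitude(10, 120))  # 65.0 (Seth's next value was 60 s)
print(raise_small_magnitude(60, 120))  # 90.0 (Chad's next value was 105 s)
print(raise_large_magnitude(120))      # 180.0 (Whitney)
```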

Magnitude Reinforcer Assessment

It was hypothesized that when participants showed a clear preference for a given magnitude, the reinforcer delivered at that magnitude would be more effective at increasing responding than a reinforcer delivered at a less preferred magnitude. Likewise, it was hypothesized that when a clear preference was not displayed, the reinforcing efficacy of the different magnitudes would be similar (i.e., would maintain similar levels of responding). To test these hypotheses, a PR reinforcer assessment was conducted based on the procedures described by Roane et al. (2001). For Seth, the efficacy of three different magnitudes of reinforcement was compared because he demonstrated a preference for 120 s over 10 s but not for 120 s over 60 s. Thus, 10 s, 60 s, and 120 s were compared during the reinforcer assessment. Three magnitudes were also evaluated for Chad (i.e., 10 s, 105 s, and 120 s), who demonstrated a preference for 120 s over 10 s but who did not consistently prefer 120 s over 105 s. Two magnitudes of reinforcement (10 s and 120 s) were compared to no reinforcement for Whitney because a clear preference for 10 s, 120 s, or 180 s was not observed in the magnitude preference assessment. A subsequent analysis comparing 10 s and 30 s was also conducted to evaluate the influence of satiation on responding (described below). The stimulus used during the preference assessment to represent each magnitude (the colored or numbered card) was present during corresponding sessions of the reinforcer assessment to facilitate discrimination among the magnitude alternatives.

Prior to baseline, participants were taught to engage in the target response (i.e., button pressing or chip insertion) to access the reinforcer. Training was conducted using a graduated prompting procedure (successive verbal, model, physical prompts). During training, participants received 20-s access to the reinforcer being evaluated contingent on each occurrence of the targeted response. Training was terminated when the participant emitted the targeted response independently on 10 consecutive trials.

Prior to each baseline and reinforcement session, the experimenter physically guided the participant to engage in the target response once so that he or she would contact the contingencies in place during that session. During baseline, no programmed consequences were arranged for the target response. Each baseline session continued until the participant did not engage in the target response for 5 min or until the session duration reached 60 min; the 60-min limit was never reached (see below for a description of an additional termination criterion for Seth).

During all reinforcement sessions, access to the reinforcer for the specified duration was provided contingent on the completion of an increasing number of responses (e.g., button presses) within each session. A PR schedule of reinforcement was used in which the number of responses required to access the reinforcer increased systematically within each session following completion of the previous schedule requirement. At the beginning of each new session, the schedule requirement was reset to the lowest response requirement (i.e., PR 1). For each participant, the following PR schedule was used: PR 1, PR 1, PR 2, PR 2, PR 5, PR 5, PR 10, PR 10, PR 20, PR 20, PR 30, PR 30, PR 40, PR 40. Each requirement was presented twice to prevent rapid ratio strain (i.e., to increase the likelihood that the procedures were sensitive enough to show any potential differences in resistance across the different reinforcement magnitudes). This schedule was similar to the PR schedules used in previous applied research (e.g., Roane et al., 2001). If a participant completed the final requirement (PR 40) before a termination criterion had been met, the requirement was increased in increments of 10 until a termination criterion was met (as described above).
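The within-session sequence of requirements can be generated as below. The source specifies requirements only through PR 40 and states that they then rose in increments of 10; the sketch assumes, as a hypothesis, that each added value was also presented twice. A new generator is created for each session, reflecting the reset to PR 1. The helper that converts a response total into reinforcers earned assumes that every counted response goes toward the current requirement.

```python
from typing import Iterator, List

BASE_REQUIREMENTS: List[int] = [1, 2, 5, 10, 20, 30, 40]


def pr_requirements(repeats: int = 2, increment: int = 10) -> Iterator[int]:
    """Yield successive response requirements within one session (resets per session)."""
    for requirement in BASE_REQUIREMENTS:
        for _ in range(repeats):
            yield requirement
    requirement = BASE_REQUIREMENTS[-1]
    while True:  # assumption: values beyond PR 40 are also repeated `repeats` times
        requirement += increment
        for _ in range(repeats):
            yield requirement


def reinforcers_earned(total_responses: int) -> int:
    """Number of requirements completed by `total_responses` responses in one session."""
    earned = used = 0
    for requirement in pr_requirements():
        if used + requirement > total_responses:
            return earned
        used += requirement
        earned += 1
    return earned  # not reached: the generator never ends


print(reinforcers_earned(15))  # 5 (1 + 1 + 2 + 2 + 5 = 11; the next 5 is not completed)
```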

An additional termination criterion, based on the total amount of time available to engage in the target response, was used for Seth because of the high rate of responding observed during his initial series of attention sessions. The high rates were a concern because reinforcement time counted toward the 60-min session limit: at high response rates, larger magnitudes would consume more of the session and leave less time to respond, so access to the apparatus could differ across magnitudes. The additional criterion was intended to hold this variable constant across the different magnitudes of reinforcement. Based on the rate of responding, the absolute number of reinforcers that could be earned within 60 min was determined. The absolute duration of reinforcement was then calculated by multiplying 120 s by this number, and this duration was subtracted from 60 min to yield the maximum amount of time Seth could have access to the response apparatus. The resulting criterion for his attention assessment was 27-min access to the button, and it was met only twice during his attention reinforcer assessment. This additional termination criterion was applied across all magnitudes and types of reinforcement.
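The arithmetic behind the additional criterion is sketched below. The text does not say how the number of obtainable reinforcers was derived from the rate of responding, so that number is treated here as an input; the value in the example is hypothetical and is not meant to reproduce the 27-min criterion reported above.

```python
def apparatus_time_limit_s(max_reinforcers: int, session_limit_s: float = 3600.0,
                           magnitude_s: float = 120.0) -> float:
    """Session limit minus total reinforcement time, i.e., the maximum time
    left for access to the response apparatus (never below zero)."""
    total_reinforcement_s = max_reinforcers * magnitude_s
    return max(0.0, session_limit_s - total_reinforcement_s)


print(apparatus_time_limit_s(15) / 60)  # 30.0 min with a hypothetical 15 reinforcers
```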

During the attention reinforcement phase (Seth and Chad), attention was presented for the corresponding duration evaluated during that session. During the tangible reinforcement sessions (Seth and Whitney), the top two items from the MSWO were used as reinforcers during each session. The experimenter delivered access to the tangible items for the corresponding duration being evaluated contingent on the completion of the schedule requirement.

Preferred and nonpreferred values under the PR schedules were compared using a single-operant multielement design with preceding baseline observations. The reinforcer assessment continued until clear differences in responding were obtained (defined as no overlapping data points across the conditions for at least three consecutive sessions) or until no differences in responding were apparent for at least four sessions with each magnitude of reinforcement. For Whitney, an additional comparison phase was implemented based on the results of the initial reinforcer assessment. During the comparison of 10 s and 120 s, responding was more persistent under the 10-s magnitude than under the 120-s magnitude. It was hypothesized that within-session satiation during the large-magnitude sessions may have reduced responding relative to the small-magnitude sessions. Thus, the large magnitude was decreased to 30 s, and, following a return to baseline, 10 s and 30 s were compared.
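One way to operationalize the differentiation criterion is to ask whether the most recent three sessions of two conditions have non-overlapping ranges. This is an interpretation offered for illustration: the source does not specify whether session totals or cumulative values were compared, or how conditions were paired when three magnitudes were evaluated, and the alternative stopping rule (no apparent differences after four sessions per magnitude) rests on visual inspection and is not modeled.

```python
from typing import Sequence


def clearly_differentiated(a: Sequence[float], b: Sequence[float], window: int = 3) -> bool:
    """True if the last `window` sessions of condition A and condition B do not
    overlap, i.e., every value in one exceeds every value in the other."""
    if len(a) < window or len(b) < window:
        return False
    recent_a, recent_b = a[-window:], b[-window:]
    return min(recent_a) > max(recent_b) or min(recent_b) > max(recent_a)


print(clearly_differentiated([10, 25, 40, 60], [5, 8, 12, 15]))  # True
```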

The main dependent variable during the reinforcer assessment was the cumulative number of responses emitted for each magnitude. As a secondary analysis, modified demand curves were constructed for each participant to obtain additional information about the value of the reinforcer (Johnson & Bickel, 2006). The modified demand curve showed the mean number of reinforcers earned at each schedule requirement, calculated by summing the reinforcers earned at each schedule requirement within and across reinforcement sessions and dividing that sum by the total number of reinforcement sessions. Because each requirement was presented twice within a session, the maximum mean number of reinforcers that could be obtained at any given schedule requirement was two. Demand curves were used as an adjunct to the cumulative graphs because they provide a more fine-grained analysis of reinforcer value; that is, they indicate whether participants maximized opportunities for reinforcement under each schedule requirement. Some authors (e.g., Johnson & Bickel) have advocated the use of demand curves to assess relative reinforcer efficacy in lieu of more traditional measures of reinforcer efficacy (e.g., response allocation, PR break points).
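The modified demand curve calculation can be sketched as follows, assuming each session is recorded as the list of requirements completed in that session (e.g., [1, 1, 2, 2, 5]); the session data in the example are hypothetical. Because each requirement was presented twice per session, no mean can exceed 2.

```python
from collections import Counter
from typing import Dict, List, Sequence


def demand_curve(sessions: List[Sequence[int]], requirements: Sequence[int]) -> Dict[int, float]:
    """Mean number of reinforcers earned at each requirement across sessions."""
    totals: Counter = Counter()
    for completed in sessions:
        totals.update(completed)  # count completions at each requirement
    n_sessions = len(sessions)
    return {req: totals[req] / n_sessions for req in requirements}


sessions = [[1, 1, 2, 2, 5], [1, 1, 2], [1, 1, 2, 2, 5, 5, 10]]  # hypothetical
print(demand_curve(sessions, [1, 2, 5, 10, 20]))
```

Plotting these means against the requirement values gives the curve; a mean of 2 at a requirement indicates that every available reinforcer at that requirement was earned in every session.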

Results

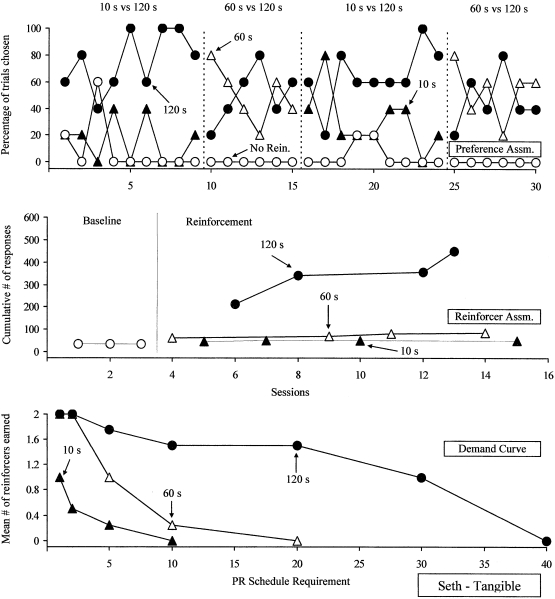

Results of Seth's magnitude preference assessment using tangible reinforcers are presented in Figure 1. Seth allocated more responding to the 120-s magnitude (M = 70%) than to the 10-s magnitude (M = 23%) and to no reinforcement (M = 7%) during the first phase. When the small-magnitude value was increased to 60 s, responding became variable, with response allocation alternating between 60 s (M = 52%) and 120 s (M = 48%). These results were replicated in subsequent phases and indicated that 120-s access to tangible items was preferred over 10-s access but not over 60-s access. Results of Seth's reinforcer assessment (cumulative number of responses) for tangible reinforcers are also presented in Figure 1. Note that cumulative data for each magnitude began from levels of responding observed during baseline. During baseline, button presses were observed only in the first session. During reinforcement, more responses occurred under the 120-s magnitude than under the 10-s and 60-s magnitudes, indicating that 120 s was a more effective magnitude of reinforcement than either 10 s or 60 s.
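The note that cumulative data began from baseline levels can be made concrete. In the hypothetical sketch below, which assumes per-session response counts, the reinforcement-phase record for one magnitude continues from the baseline cumulative total instead of restarting at zero. The example counts merely mimic the pattern described for Seth (responses only in the first baseline session) and are not the study's data.

```python
from itertools import accumulate
from typing import List


def cumulative_record(baseline: List[int], treatment: List[int]) -> List[int]:
    """Cumulative responses for one magnitude, carried forward from baseline."""
    baseline_cum = list(accumulate(baseline))
    # Start the treatment series at the last baseline total (0 if no baseline).
    start = baseline_cum[-1] if baseline_cum else 0
    # accumulate(..., initial=start) emits `start` first; drop it so that each
    # remaining value corresponds to one treatment session.
    treatment_cum = list(accumulate(treatment, initial=start))[1:]
    return baseline_cum + treatment_cum


if __name__ == "__main__":
    # Hypothetical counts: responding in the first baseline session only.
    print(cumulative_record([3, 0, 0], [10, 15, 20]))
```

The `initial` argument of `itertools.accumulate` requires Python 3.8 or later.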

Figure 1.

Percentage of trials in which magnitude values were selected during the tangible preference assessment (top), cumulative number of responses across sessions of the tangible reinforcer assessment (middle), and demand curves (bottom) for Seth.

Figure 1 also depicts the demand curves for Seth. As the schedule requirement increased, more reinforcers were earned for 120 s than for 10 s and 60 s. More specifically, under the richer schedules of reinforcement (i.e., PR 1 and PR 2), similar mean numbers of reinforcers were earned for 120 s and 60 s, and both exceeded the number earned for 10 s. Differences in reinforcer consumption between 120 s and 60 s were not observed until the schedule requirement reached PR 5. Differences also occurred between 10 s and 60 s, with more reinforcers earned, on average, for 60 s than for 10 s under each schedule requirement.

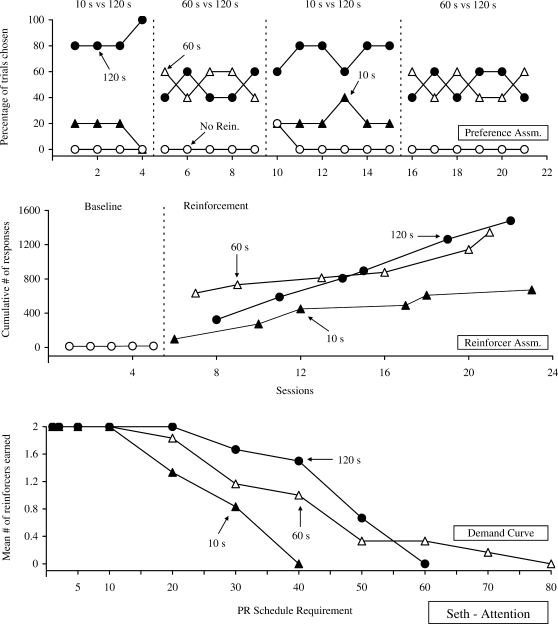

Figure 2 depicts the results of Seth's preference and reinforcer assessments for attention. During the preference assessment, more responses were allocated to the 120-s magnitude (M = 78%) than to the 10-s magnitude (M = 20%) and to no reinforcement (M = 2%) during the first phase. When the small value was increased to 60 s, his choices became variable, alternating between 60 s (M = 51%) and 120 s (M = 49%); no responses were allocated to no reinforcement. These data indicate a preference for 120 s of attention over 10 s but not over 60 s.
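The allocation percentages reported for the preference assessments can be computed as shown below. This sketch rests on an assumption the text does not state: that each reported M is the mean, across sessions, of the per-session percentage of trials on which each option was chosen. Option labels and trial data are hypothetical.

```python
from collections import Counter
from typing import Dict, List


def allocation_percentages(choices: List[str], options: List[str]) -> Dict[str, float]:
    """Percentage of trials in one session on which each option was selected."""
    counts = Counter(choices)
    return {opt: 100.0 * counts[opt] / len(choices) for opt in options}


def mean_allocation(sessions: List[List[str]], options: List[str]) -> Dict[str, float]:
    """Mean per-session percentage for each option (assumed definition of M)."""
    per_session = [allocation_percentages(s, options) for s in sessions]
    return {opt: sum(p[opt] for p in per_session) / len(per_session)
            for opt in options}


if __name__ == "__main__":
    options = ["120 s", "10 s", "none"]
    # Hypothetical trial-by-trial selections for two sessions.
    sessions = [["120 s"] * 8 + ["10 s"] * 2,
                ["120 s"] * 6 + ["10 s"] * 3 + ["none"]]
    print(mean_allocation(sessions, options))
```

Options that are never selected are reported as 0.0, which corresponds to statements such as "no responses were allocated to no reinforcement."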

Figure 2.

Percentage of trials in which magnitude values were selected during the attention preference assessment (top), cumulative number of responses across sessions of the attention reinforcer assessment (middle), and demand curves (bottom) for Seth.

The cumulative number of responses during Seth's reinforcer assessment is also depicted in Figure 2. Very little responding was observed during baseline. During reinforcement, higher levels of responding were observed under the 120-s magnitude than under the 10-s magnitude. Responding also was slightly higher under 120 s than under 60 s. As depicted in the demand curves, more reinforcers were earned for 120 s than for 10 s or 60 s as the schedule requirement increased. More reinforcers also were earned under 60 s than under 10 s. Interestingly, there was no difference between the conditions until the schedule exceeded PR 10, suggesting an interaction between schedule and magnitude. Moreover, slight differences between 120 s and 60 s emerged at PR 20 through PR 50, yet more reinforcers were earned for 60 s than for 120 s at values higher than PR 50.
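The schedule-by-magnitude interaction described above amounts to locating the lowest requirement at which two demand curves part ways. The sketch below assumes each curve is a mapping from PR requirement to mean reinforcers earned. The 0.5 threshold is an arbitrary, hypothetical stand-in for the visual inspection used in the study, and the example curves are invented for illustration.

```python
from typing import Dict, Optional


def first_divergence(curve_a: Dict[int, float],
                     curve_b: Dict[int, float],
                     threshold: float = 0.5) -> Optional[int]:
    """Lowest shared PR requirement at which mean reinforcers earned differ by more than threshold.

    Returns None if the curves never differ by more than threshold.
    """
    for step in sorted(set(curve_a) & set(curve_b)):
        if abs(curve_a[step] - curve_b[step]) > threshold:
            return step
    return None


if __name__ == "__main__":
    # Hypothetical curves for a large and a small magnitude.
    large = {1: 2.0, 2: 2.0, 5: 2.0, 10: 1.5, 20: 1.0}
    small = {1: 2.0, 2: 2.0, 5: 1.5, 10: 0.5, 20: 0.0}
    print(first_divergence(large, small))
```

Because the check uses an absolute difference, it would also flag a reversal in direction, such as the higher values for 60 s than for 120 s above PR 50.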

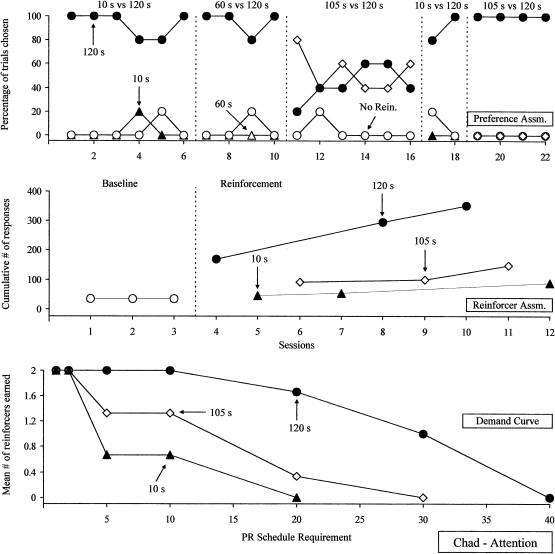

Figure 3 shows the results of Chad's preference and reinforcer assessments. Chad allocated more responses to the 120-s magnitude (M = 93%) than to 10 s (M = 2%), 60 s (M = 0%), and no reinforcement (M = 5%). When the small value was increased, choice initially alternated between 105 s (M = 53%) and 120 s (M = 43%). However, a reversal to 105 s versus 120 s in the final phase showed exclusive responding for 120 s relative to 105 s and to no reinforcement. These results indicate that 120 s of attention was preferred over 10 s and 60 s. Conclusions regarding relative preference for 120 s over 105 s could not be made in light of the inconsistent results obtained for this comparison, and additional reversals to 105 s versus 120 s were not conducted due to time constraints.

Figure 3.

Percentage of trials in which magnitude values were selected during the preference assessment (top), cumulative number of responses across sessions of the reinforcer assessment (middle), and demand curves (bottom) for Chad.

Data from Chad's reinforcer assessment are also presented in Figure 3. During baseline, button presses occurred only in the first session. During the reinforcement phase, more responses occurred under 120 s than under either 105 s or 10 s. The 105-s magnitude also was associated with more responses than the 10-s magnitude. The demand curves were consistent with these data, in that more reinforcers were earned for 120 s than for 105 s and 10 s. Schedule interactions were also observed, in that differences in reinforcer consumption across magnitudes did not emerge until a PR 5 schedule was reached.

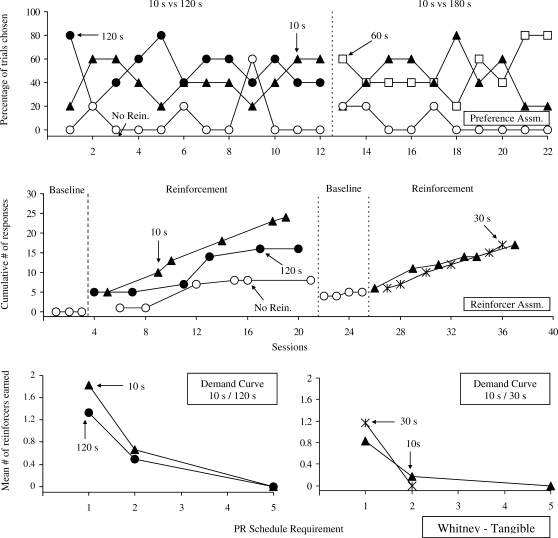

Results for Whitney are presented in Figure 4. During the preference assessment, similar levels of responding were observed for the 10-s magnitude (M = 51%) and the 120-s magnitude (M = 41%). Fewer responses were allocated towards no reinforcement (M = 8%). This lack of preference between small and large magnitudes continued even when the large magnitude was increased to 180 s (M = 44%) and was compared to 10 s (M = 50%) and to no reinforcement (M = 6%). These data indicate that Whitney preferred some reinforcement to no reinforcement, but she did not demonstrate a preference between different durations of reinforcement.

Figure 4.

Percentage of trials in which magnitude values were selected during the preference assessment (top panel), cumulative number of responses across sessions of the reinforcer assessment (middle panel), and demand curves (bottom panels) for Whitney.

Whitney's reinforcer assessment data are also depicted in Figure 4. No responses were observed during baseline. During the reinforcement phase, response output was considerably lower than that of the other participants, with higher levels of responding associated with 10 s and 120 s of reinforcement relative to no reinforcement. More responding was observed for 10 s than for 120 s, suggesting that the 10-s magnitude was a more effective reinforcer than the 120-s magnitude. However, it was hypothesized that responding was lower during the 120-s condition than during the 10-s condition because of within-session satiation. To test this hypothesis, 10 s was compared to 30 s following a return to baseline. Similar levels of responding were observed across magnitudes, suggesting that satiation was at least partly responsible for the results of the previous reinforcement phase.

Whitney's demand curves are shown for both comparisons (Figure 4). During the comparison of 10 s and 120 s, slightly more reinforcers were earned, on average, under 10 s at lower schedule requirements (i.e., PR 1 and PR 2). Only once did she earn reinforcement at the PR 5 schedule requirement during this comparison (under the 120-s magnitude). Similar numbers of reinforcers were earned, on average, during the comparison of 10 s and 30 s, with slightly higher averages observed at PR 1 for 30-s access to tangible items.

Discussion

Preference for a larger magnitude of social reinforcement that had previously maintained problem behavior was demonstrated in three of the four evaluations (both of Seth's evaluations and Chad's evaluation). Furthermore, when a preference among alternatives was observed, greater response persistence occurred for the largest magnitude relative to at least one smaller magnitude. Therefore, it appears that preference for different magnitudes of a positive reinforcer may predict relative reinforcer efficacy. An apparent interaction between magnitude effects and reinforcement schedule was observed, in that differences in responding did not occur under the lowest schedule requirements in three of the four evaluations. Specifically, differences between magnitudes of reinforcement emerged only under more demanding schedule requirements.

The current findings replicate those of previous studies in which reinforcement effects were examined under increasing schedule requirements (e.g., DeLeon et al., 1997; Roane et al., 2001; Tustin, 1994). That is, two stimuli that were similarly effective under low schedule requirements were differentially effective as the schedule was thinned. These results extend this area of research by demonstrating that different magnitudes of the same reinforcer influence response allocation and overall response rate. Taken together, this line of research has established differential reinforcer effectiveness under dense and thin schedules of reinforcement for topographically different reinforcers (e.g., DeLeon et al.; Roane et al.; Tustin) and for topographically similar reinforcers of varying magnitudes (current investigation). Results are also consistent with basic findings suggesting that the effects of magnitude may interact with the schedule arrangement (i.e., concurrent vs. single operant; dense vs. thin ratio schedules; e.g., Catania, 1963; Hodos, 1961; Reed, 1991). Therefore, the methods used to evaluate magnitude effects in previous applied studies may have been responsible for the apparent behavioral insensitivity to this parameter (e.g., Lerman et al., 1999, 2002; Lovitt & Curtiss, 1969).

These findings have important implications for the use of reinforcement-based procedures. When problem behavior cannot be exposed to extinction, clinicians may be able to bias responding towards appropriate behavior by providing longer durations of the reinforcer for a desired response (e.g., sign language) relative to problem behavior (e.g., aggression). In addition, increasing the magnitude of positive reinforcers may enhance treatment effectiveness under single-operant arrangements, especially when the reinforcement schedule is thinned. Thus, these findings are consistent with recommendations and strategies described in some applied textbooks and articles (e.g., Cooper et al., 2007; Roane, Call, & Falcomata, 2005; Roane et al., 2007).

Although the current results indicate that preference assessments might be useful for selecting magnitude values prior to treatment, a reinforcer assessment using PR schedules may be an important adjunct. In some instances, the PR schedule identified differences between magnitudes when the preference assessment did not (60 s vs. 120 s comparison of the tangible reinforcer for Seth; first 105 s vs. 120 s comparison of the attention reinforcer for Chad). This suggests that magnitude effects may be observed more readily (Seth and Chad) or consistently (Chad) under certain schedule arrangements; however, additional research is needed to evaluate this hypothesis.

Results of Whitney's preference and reinforcer assessments warrant further discussion. It is possible that responding was undifferentiated during her preference assessment because the tangible items did not function as potent reinforcers or because the items lost their reinforcing value over the course of the study. Results of the functional analysis indicated that access to toys functioned as a reinforcer for problem behavior, and during the preference assessment she allocated more responses to some reinforcement than to no reinforcement. Results of the reinforcer assessment suggested that toys maintained responding (i.e., inserting a poker chip) but only at lower schedule requirements, regardless of the duration of reinforcement delivered. Thus, it appeared that toys functioned as a reinforcer, but only if the amount of work required to access them was low. Alternatively, results of the preference assessment may have reflected a lack of discrimination between the stimuli associated with the different magnitudes of reinforcement. An initial matching-to-sample or sorting pretest with the colored cards may be useful for evaluating discrimination in future studies. The possible role of satiation in Whitney's responding during the reinforcer assessment also remains unclear. Responding remained low even when the value of the large magnitude was greatly reduced following a reversal to baseline. Although the MSWO assessment was conducted frequently to decrease the likelihood of across-session satiation effects, the same five items were used throughout the study. It is possible that satiation across all of the items was a factor, even though she was able to choose among them frequently (i.e., prior to each session). Access to the items as part of presession exposure to the contingencies (see the description of procedures above) also may have produced satiation effects.

Another limitation is that single-operant and concurrent-operants arrangements were not directly compared during the preference or reinforcer assessments. Results of previous studies indicate that concurrent-operants arrangements provide a better measure of relative reinforcement effects than do single-operant arrangements (e.g., Fisher et al., 1992; Roscoe, Iwata, & Kahng, 1999). Potential interactions between magnitude and reinforcement schedule also could have been examined more directly by comparing responding under continuous reinforcement with responding under the PR schedule. In addition, the same stimulus was associated with the large-magnitude reinforcer during each phase of the preference assessment for Chad and Seth. Thus, prior exposure to this stimulus could have influenced responding during subsequent comparisons, providing one possible explanation for the inconsistent results of Chad's preference assessment. Another possible limitation is that the experimenter turned away for 10 s each time the participant chose the no-reinforcement stimulus when attention was the reinforcer. Although this procedure was used to enhance discrimination between the reinforcement and no-reinforcement stimuli, the experimenter's response may have functioned as punishment (time-out).

The use of PR schedules in the current investigation may also limit the implications for application. Although PR schedules provide a relatively quick evaluation of responding under increasing schedule requirements, they do not exemplify schedules typically used in applied settings (Roane et al., 2005). In practice, reinforcement schedules are typically thinned more gradually across time (see Hanley et al., 2001, for a discussion of schedule thinning). In addition, increases usually involve smaller schedule increments (e.g., FR 1 to FR 2). The current investigation, along with previous work (e.g., Roane et al., 2001), nevertheless suggests some clinical benefits of using PR schedules. Future research is needed to determine whether this method can be used efficiently for application purposes and to evaluate other possible benefits of fading reinforcement via PR schedules.

Despite these limitations, our results begin to identify some of the conditions under which magnitude effects may be observed in application. Additional investigation is needed to further analyze magnitude effects in the treatment context (e.g., functional communication training), with more clinically relevant schedule-thinning procedures, with other sources of reinforcement (e.g., negative reinforcement), and with other types of parametric manipulations (e.g., altering the number of toys provided for responding).

Acknowledgments

This research is based on a master's thesis submitted by the first author under the supervision of the second and third authors in partial fulfillment of the PhD degree at Louisiana State University. We thank Henry Roane and George Noell for their insights regarding the outcomes of this study. In addition, we thank Valerie Volkert, Karen Rader, Amanda Dahir, and numerous undergraduate research assistants for their help during various phases of this investigation. Nathan Call is now at the Marcus Institute. Laura Addison is now at the Institute for Applied Behavior Analysis. Tiffany Kodak is now at the Monroe-Meyer Institute.

References

- Baron A, Derenne A. Progressive-ratio schedules: Effects of later schedule requirements on earlier performances. Journal of the Experimental Analysis of Behavior. 2000;73:291–304. doi: 10.1901/jeab.2000.73-291.

- Baron A, Mikorski J, Schlund M. Reinforcement magnitude and pausing on progressive-ratio schedules. Journal of the Experimental Analysis of Behavior. 1992;58:377–388. doi: 10.1901/jeab.1992.58-377.

- Belke T.W. Running and responding reinforced by the opportunity to run: Effect of reinforcer duration. Journal of the Experimental Analysis of Behavior. 1997;67:337–351. doi: 10.1901/jeab.1997.67-337.

- Bonem M, Crossman E.K. Elucidating the effects of reinforcement magnitude. Psychological Bulletin. 1988;104:348–362. doi: 10.1037/0033-2909.104.3.348.

- Catania A.C. Concurrent performances: A baseline for the study of reinforcement magnitude. Journal of the Experimental Analysis of Behavior. 1963;6:299–300. doi: 10.1901/jeab.1963.6-299.

- Cooper J.O, Heron T.E, Heward W.L. Applied behavior analysis (2nd ed.). Upper Saddle River, NJ: Merrill/Prentice Hall; 2007.

- DeLeon I.G, Iwata B.A. Evaluation of a multiple-stimulus presentation format for assessing reinforcer preferences. Journal of Applied Behavior Analysis. 1996;29:519–533. doi: 10.1901/jaba.1996.29-519.

- DeLeon I.G, Iwata B.A, Goh H, Worsdell A.S. Emergence of reinforcer preference as a function of schedule requirements and stimulus similarity. Journal of Applied Behavior Analysis. 1997;30:439–449. doi: 10.1901/jaba.1997.30-439.

- Fisher W.W, Piazza C.C, Bowman L.G, Hagopian L.H, Owens J.C, Slevin I. A comparison of two approaches for identifying reinforcers for persons with severe and profound disabilities. Journal of Applied Behavior Analysis. 1992;25:491–498. doi: 10.1901/jaba.1992.25-491.

- Hanley G.P, Iwata B.A, Thompson R.H. Reinforcement schedule thinning following treatment with functional communication training. Journal of Applied Behavior Analysis. 2001;34:17–38. doi: 10.1901/jaba.2001.34-17.

- Hoch H, McComas J.J, Johnson L, Faranda N, Guenther S.L. The effects of magnitude and quality of reinforcement on choice responding during play activities. Journal of Applied Behavior Analysis. 2002;35:171–181. doi: 10.1901/jaba.2002.35-171.

- Hodos W. Progressive ratio as a measure of reward strength. Science. 1961;134:943–944. doi: 10.1126/science.134.3483.943.

- Hutt P.J. Rate of bar pressing as a function of quality and quantity of food reward. Journal of Comparative and Physiological Psychology. 1954;47:235–239. doi: 10.1037/h0059855.

- Iwata B.A, Dorsey M.F, Slifer K.J, Bauman K.E, Richman G.S. Toward a functional analysis of self-injury. Journal of Applied Behavior Analysis. 1994;27:197–209. doi: 10.1901/jaba.1994.27-197. (Reprinted from Analysis and Intervention in Developmental Disabilities, 2, 3–20, 1982)

- Jenkins W.O, Clayton F.L. Rate of responding and amount of reinforcement. Journal of Comparative and Physiological Psychology. 1949;42:174–181. doi: 10.1037/h0055036.

- Johnson M.W, Bickel W.K. Replacing relative reinforcing efficacy with behavioral economic demand curves. Journal of the Experimental Analysis of Behavior. 2006;85:73–93. doi: 10.1901/jeab.2006.102-04.

- Kahng S, Iwata B.A, DeLeon I.G, Wallace M.D. A comparison of procedures for programming noncontingent reinforcement schedules. Journal of Applied Behavior Analysis. 2000;33:223–231. doi: 10.1901/jaba.2000.33-223.

- Keesey R.E, Kling J.W. Amount of reinforcement and free-operant responding. Journal of the Experimental Analysis of Behavior. 1961;4:125–132. doi: 10.1901/jeab.1961.4-125.

- Koehler L.J, Iwata B.A, Roscoe E.M, Rolider N.U, O'Steen L.E. Effects of stimulus variation on the reinforcing capability of nonpreferred stimuli. Journal of Applied Behavior Analysis. 2005;38:469–484. doi: 10.1901/jaba.2005.102-04.

- Lerman D.C, Kelley M.E, Van Camp C.M, Roane H.S. Effects of reinforcement magnitude on spontaneous recovery. Journal of Applied Behavior Analysis. 1999;32:197–200. doi: 10.1901/jaba.1999.32-197.

- Lerman D.C, Kelley M.E, Vorndran C.M, Kuhn S.A.C, LaRue R.H., Jr. Reinforcement magnitude and responding during treatment with differential reinforcement. Journal of Applied Behavior Analysis. 2002;35:29–48. doi: 10.1901/jaba.2002.35-29.

- Lovitt T.C, Curtiss K.A. Academic response rate as a function of teacher- and self-imposed contingencies. Journal of Applied Behavior Analysis. 1969;2:49–53. doi: 10.1901/jaba.1969.2-49.

- Lowe C.F, Davey G.C.L, Harzem P. Effects of reinforcement magnitude on interval and ratio schedules. Journal of the Experimental Analysis of Behavior. 1974;22:553–560. doi: 10.1901/jeab.1974.22-553.

- Mace F.C, Neef N.A, Shade D, Mauro B.C. Limited matching on concurrent schedule reinforcement of academic behavior. Journal of Applied Behavior Analysis. 1994;27:585–596. doi: 10.1901/jaba.1994.27-585.

- Mace F.C, Roberts M.L. Factors affecting selection of behavioral intervention. In: Reichle J, Wacker D.P, editors. Communicative alternatives to challenging behavior. Baltimore: Paul H. Brookes; 1993. pp. 113–133.

- Neef N.A, Mace F.C, Shade D. Impulsivity in students with serious emotional disturbance: The interactive effects of reinforcer rate, delay, and quality. Journal of Applied Behavior Analysis. 1993;26:37–52. doi: 10.1901/jaba.1993.26-37.

- Neef N.A, Mace F.C, Shea M.C, Shade D. Effects of reinforcer rate and reinforcer quality on time allocation: Extensions of matching theory to educational settings. Journal of Applied Behavior Analysis. 1992;25:691–699. doi: 10.1901/jaba.1992.25-691.

- Northup J, Wacker D, Sasso G, Steege M, Cigrand K, Cook J, et al. A brief functional analysis of aggressive and alternative behavior in an outclinic setting. Journal of Applied Behavior Analysis. 1991;24:509–522. doi: 10.1901/jaba.1991.24-509.

- Peck S.M, Wacker D.P, Berg W.K, Cooper L.J, Brown K.A, Richman D, et al. Choice-making treatment of young children's severe behavior problems. Journal of Applied Behavior Analysis. 1996;29:263–290. doi: 10.1901/jaba.1996.29-263.

- Reed P. Multiple determinants of the effects of reinforcement magnitude on free-operant response rates. Journal of the Experimental Analysis of Behavior. 1991;55:109–123. doi: 10.1901/jeab.1991.55-109.

- Reed P, Wright J.E. Effects of magnitude of food reinforcement on free-operant response rates. Journal of the Experimental Analysis of Behavior. 1988;49:75–85. doi: 10.1901/jeab.1988.49-75.

- Roane H.S, Call N.A, Falcomata T.S. A preliminary analysis of adaptive responding under open and closed economies. Journal of Applied Behavior Analysis. 2005;38:335–348. doi: 10.1901/jaba.2005.85-04.

- Roane H.S, Falcomata T.S, Fisher W.W. Applying the behavioral economics principle of unit price to DRO schedule thinning. Journal of Applied Behavior Analysis. 2007;40:529–534. doi: 10.1901/jaba.2007.40-529.

- Roane H.S, Fisher W.W, Sgro G.M, Falcomata T.S, Pabico R.R. An alternative method of thinning reinforcer delivery during differential reinforcement. Journal of Applied Behavior Analysis. 2004;37:213–218. doi: 10.1901/jaba.2004.37-213.

- Roane H.S, Lerman D.C, Vorndran C.M. Assessing reinforcers under progressive schedule requirements. Journal of Applied Behavior Analysis. 2001;34:145–167. doi: 10.1901/jaba.2001.34-145.

- Roscoe E.M, Iwata B.A, Kahng S. Relative versus absolute reinforcement effects: Implications for preference assessments. Journal of Applied Behavior Analysis. 1999;32:479–493. doi: 10.1901/jaba.1999.32-479.

- Staddon J.E.R. Effect of reinforcement duration on fixed-interval responding. Journal of the Experimental Analysis of Behavior. 1970;13:9–11. doi: 10.1901/jeab.1970.13-9.

- Stafford D, Branch M.N. Effects of step size and break-point criterion on progressive-ratio performance. Journal of the Experimental Analysis of Behavior. 1998;70:123–138. doi: 10.1901/jeab.1998.70-123.

- Stebbins W.C, Mead P.B, Martin J.M. The relation of amount of reinforcement to performance under a fixed-interval schedule. Journal of the Experimental Analysis of Behavior. 1959;2:351–355. doi: 10.1901/jeab.1959.2-351.

- Thomas J.R. Changes in progressive-ratio performance under increased pressures of air. Journal of the Experimental Analysis of Behavior. 1974;21:553–562. doi: 10.1901/jeab.1974.21-553.

- Tustin R.D. Preference for reinforcers under varying schedule arrangements: A behavioral economic analysis. Journal of Applied Behavior Analysis. 1994;27:597–606. doi: 10.1901/jaba.1994.27-597.

- Volkert V.M, Lerman D.C, Vorndran C.M. The effects of reinforcement magnitude on functional analysis outcomes. Journal of Applied Behavior Analysis. 2005;38:147–162. doi: 10.1901/jaba.2005.111-04.

- Vollmer T.R, Borrero J.C, Lalli J.S, Daniel D. Evaluating self-control and impulsivity in children with severe behavior disorders. Journal of Applied Behavior Analysis. 1999;32:451–466. doi: 10.1901/jaba.1999.32-451.

- Worsdell A.S, Iwata B.A, Hanley G.P, Thompson R.H, Kahng S. Effects of continuous and intermittent reinforcement for problem behavior during functional communication training. Journal of Applied Behavior Analysis. 2000;33:167–179. doi: 10.1901/jaba.2000.33-167.