Abstract

In many cases, the computation of a neural system can be reduced to a receptive field, or a set of linear filters, and a thresholding function, or gain curve, which determines the firing probability; this is known as a linear/nonlinear model. In some forms of sensory adaptation, these linear filters and gain curve adjust very rapidly to changes in the variance of a randomly varying driving input. An apparently similar but previously unrelated issue is the observation of gain control by background noise in cortical neurons: the slope of the firing rate versus current (f-I) curve changes with the variance of background random input. Here, we show a direct correspondence between these two observations by relating variance-dependent changes in the gain of f-I curves to characteristics of the changing empirical linear/nonlinear model obtained by sampling. In the case that the underlying system is fixed, we derive equations relating the change of the gain with respect to both mean and variance to the receptive fields derived from reverse correlation on a white noise stimulus. Using two conductance-based model neurons that display distinct gain modulation properties through a simple change in parameters, we show that the coding properties of both models quantitatively satisfy the predicted relationships. Our results describe how both variance-dependent gain modulation and adaptive neural computation result from intrinsic nonlinearity.

Author Summary

Many neurons are known to achieve a wide dynamic range by adaptively changing their computational input/output function according to the input statistics. These adaptive changes can be very rapid, and it has been suggested that a component of this adaptation could be purely input-driven: even a fixed neural system can show apparent adaptive behavior since inputs with different statistics interact with the nonlinearity of the system in different ways. In this paper, we show how a single neuron's intrinsic computational function can dictate such input-driven changes in its response to varying input statistics, which begets a relationship between two different characterizations of neural function—in terms of mean firing rate and in terms of generating precise spike timing. We then apply our results to two biophysically defined model neurons, which have significantly different response patterns to inputs with various statistics. Our model of intrinsic adaptation explains their behaviors well. Contrary to the picture that neurons carry out a stereotyped computation on their inputs, our results show that even in the simplest cases they have simple yet effective mechanisms by which they can adapt to their input. Adaptation to stimulus statistics, therefore, is built into the most basic single neuron computations.

Introduction

An f-I curve, defined as the mean firing rate in response to a stationary mean current input, is one of the simplest ways to characterize how a neuron transforms a stimulus into a spike train output as a function of the magnitude of a single stimulus parameter. Recently, the dependence of f-I curves on other input statistics such as the variance has been examined: the slope of the f-I curve, or gain, is modulated in diverse ways in response to different intensities of added noise [1]–[4]. This enables multiplicative control of the neuronal gain by the level of background synaptic activity [1]: changing the level of the background synaptic activity is equivalent to changing the variance of the noisy balanced excitatory and inhibitory input current to the soma, which modulates the gain of the f-I curve. It has been demonstrated that such somatic gain modulation, combined with saturation in the dendrites, can lead to multiplicative gain control in a single neuron by background inputs [5]. From a computational perspective, the sensitivity of the firing rate to mean or variance can be thought of as distinguishing the neuron's function as either an integrator (greater sensitivity to the mean) or a differentiator/coincidence detector (greater sensitivity to fluctuations, as quantified by the variance) [3],[6],[7].

An alternative method of characterizing a neuron's input-to-output transformation is through a linear/nonlinear (LN) cascade model [8],[9]. These models comprise a set of linear filters, or receptive field, that selects particular features from the input; the filter output is transformed by a nonlinear threshold stage into a time-varying firing rate. Spike-triggered covariance analysis [10],[11] reconstructs a model with multiple features from a neuron's input/output data. It has been widely employed to characterize both neural systems [12]–[15] and single neurons or neuron models subject to current or conductance inputs [16]–[19].
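As a concrete illustration of the cascade just described, the following is a minimal one-feature LN simulator in Python/NumPy. It is a sketch under stated assumptions (a single causal filter, a static rate nonlinearity, and Bernoulli spiking with at most one spike per time bin); the helper name `ln_spikes` and its arguments are hypothetical and are not taken from the cited works.

```python
import numpy as np

def ln_spikes(stim, filt, nonlinearity, dt, seed=0):
    """Simulate a one-feature LN cascade (hypothetical helper, for illustration only).

    stim: stimulus samples, one per time bin of width dt (s).
    filt: causal linear filter sampled at the same dt; filt[0] is the zero-lag weight.
    nonlinearity: maps the filter output to a firing rate (Hz).
    Returns the filter output, the rate, and a boolean spike train.
    """
    rng = np.random.default_rng(seed)
    # Linear stage: drive[t] = sum_k filt[k] * stim[t - k] * dt (causal convolution).
    drive = np.convolve(stim, filt)[: len(stim)] * dt
    # Nonlinear stage: static gain curve giving the instantaneous rate.
    rate = nonlinearity(drive)
    # Spike generation: at most one spike per bin, with probability rate * dt.
    p_spike = np.clip(rate * dt, 0.0, 1.0)
    spikes = rng.random(len(stim)) < p_spike
    return drive, rate, spikes
```

Keeping the filter and the nonlinearity as separate arguments mirrors the two stages of the cascade, so either can be swapped independently.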

Generally, the results of reverse correlation analysis may depend on the statistics of the stimulus used to sample the model [15], [19]–[25]. While some of this dependence on stimulus statistics may reflect underlying plasticity, in some cases the rapid timescale of the changes suggests that intrinsic nonlinearities in systems with fixed parameters change the effective computation of the neuron [16], [19], [25]–[29].

Our goal here is to unify the f-I curve description of variance-dependent adaptive computation with that given by the LN model: we present analytical results showing that the variance-dependent modulation of the firing rate is closely related to adaptive changes in the recovered LN model if a fixed underlying model is assumed. When the model relies only on a single feature, we find that such a system can show only a single type of gain modulation, accompanied by an interesting asymptotic scaling behavior. With multiple features, the model can show more diverse adaptive behaviors, exemplified by two conductance-based models that we will study.

Results

Diverse Variance-Dependent Gain Modulations without Spike Rate Adaptation

Recently, Higgs et al. [3] and Arsiero et al. [4] identified different forms of variance-dependent change in the f-I curves of various neuron types in avian brainstem and in cortex. Depending on the type, neurons can have either increasing or decreasing gain in the f-I curve with increasing variance. These papers linked the phenomenon to mechanisms underlying spike rate adaptation, such as slow afterhyperpolarization (sAHP) currents and slow sodium channel inactivation. We recently showed [7] that a standard Hodgkin–Huxley (HH) neuron model, lacking spike rate adaptation, can show two different types of variance-dependent gain modulation simply by tuning the maximal conductance parameters of the model. These differences in gain modulation correspond to two different regimes in the space of conductance parameters. In one regime, which includes the standard parameters, a neuron periodically fires to a sufficiently large constant input current. In the other regime, a neuron never fires to a constant input regardless of its magnitude, but responds only to rapid fluctuations. This rarely discussed property has been termed class 3 excitability [30],[31]. Higgs et al. [3] proposed that the type of gain modulation classifies the neuron as an integrator or differentiator.

Here, we examine two models that show these different forms of variance-dependent gain modulation without spike rate adaptation, and study the resulting LN models sampled with different stimulus statistics. We show that these fixed models generate variance-dependent gain modulation, and that this gain modulation is well predicted by aspects of the LN models derived from white noise stimulation. The two models are both based on the HH [32] active currents; one model is the standard HH model, and the other (HHLS) has lower Na⁺ and higher K⁺ conductances. The HHLS model is a class 3 neuron and responds only to a rapidly changing input. For this reason, the HHLS model can be thought of as behaving more like a differentiator than an integrator [3],[7].

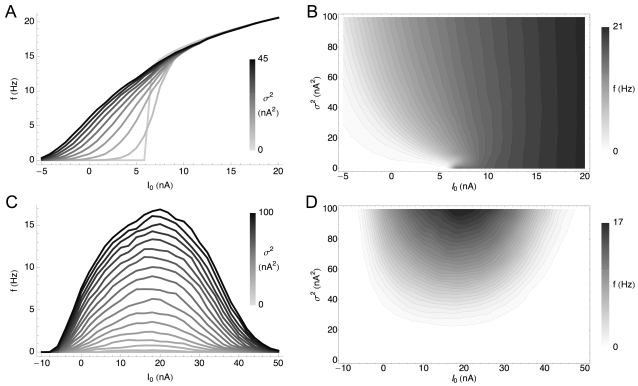

Figure 1 shows the different gain modulation behaviors of the HH and HHLS conductance-based models. For the HH model, Figure 1A, the f-I curves in the presence of noise are similar to the noiseless case except that they are increasingly smoothed at the threshold. In contrast, Figure 1C shows that the f-I curves of the HHLS model never converge toward each other as the noise level increases. This case resembles that of layer 5 pyramidal neurons in rat medial prefrontal cortex [4], as well as nucleus laminaris (NL) neurons in the chick auditory brainstem and some pyramidal neurons in layer 2/3 of rat neocortex [3]. While for these layer 2/3 neurons, there is evidence that this change in f-I curve slope may be related to the sAHP current [3], at steady state this effect can be obtained in general by tuning the maximal conductances without introducing any mechanism for spike rate adaptation [7].

Figure 1. Variance-Dependent Gain Modulation of the HH and HHLS Model.

Each model is simulated as described in the Materials and Methods section. (A) f-I curves of a standard HH model for 10 different variances (σ²) from 0 to 45 nA². The topmost trace is the response to the highest variance. Each curve is obtained with 31 mean values (I₀) ranging from −5 to 20 nA. (B) The same data as (A) plotted in the (mean, variance) plane. Lighter shades represent higher firing rates. We used cubic spline interpolation for points not included in the simulated data. (C,D) f-I curves of the HHLS model as in (A) and (B). Here, 10 means from −10 to 50 nA and 21 variances from 0 to 100 nA² are used.

Gain Modulation and Adaptation of Fixed Models

For a system described by an LN model with a single feature, we derive an equation relating the slopes of the firing rate with respect to stimulus mean and variance. We then consider gain modulation in a system with multiple relevant features and derive a series of equations relating gain change to properties of the spike-triggered average and spike-triggered covariance. Throughout, we assume that the underlying system is fixed, and that its parameter settings do not depend on stimulus statistics. For example, if the model has a single exponential filter with a time constant τ, we assume that τ does not change with the stimulus mean (I₀) or variance (σ²). However, this does not mean that the model shows a single response pattern regardless of the statistical structure of stimuli. The sampled LN description of a nonlinear system with fixed parameters (even when the underlying model is an LN model [25]) can show interaction with the input statistics, leading to different LN model descriptions for different input parameters [19], [25], [27]–[29]. We refer to this as intrinsic adaptation.

One-Dimensional Model

An LN model is composed of its relevant features {ε_μ(t)} (μ = 1, 2, …, n), which act as linear filters on an incoming stimulus, and a probability to spike given the filtered stimulus, P(spike|filtered stimulus). For a Gaussian white noise stimulus with mean I₀ and variance σ², the firing rate is

$$ f(I_0,\sigma^2) = \int d^n x\; P\big(\mathrm{spike}\mid \mathbf{x} + I_0\,\bar{\boldsymbol\epsilon}\big)\,p(\mathbf{x}) \tag{1} $$

where ε̅, with components ε̅_μ = ∫dt ε_μ(t), is the time-integrated filter and x is the mean-subtracted noise stimulus filtered by the n relevant features. p(x) is an n-dimensional Gaussian distribution with zero mean and variance σ², p(x) = (2πσ²)^(−n/2) exp(−|x|²/(2σ²)). We refer to the Materials and Methods section for a more detailed account of the model.

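Equation 1 can be evaluated numerically by sampling the filtered stimulus from p(x). The sketch below (Python/NumPy; function names are hypothetical) does this by Monte Carlo and, as a sanity check, compares the estimate with the closed form for a one-dimensional step nonlinearity, P(spike|y) = 1 for y > θ and 0 otherwise, for which Equation 1 reduces to the Gaussian cumulative distribution Φ((I₀ε̅ − θ)/σ). The threshold θ and the value of ε̅ are arbitrary illustrative choices.

```python
import numpy as np
from math import erf, sqrt

def rate_eq1_mc(i0, sigma, eps_bar, nonlinearity, n_samples=400_000, seed=0):
    """Monte Carlo estimate of Equation 1 (hypothetical helper).

    Draws the mean-subtracted filtered stimulus x ~ N(0, sigma^2 * I_n) and averages
    P(spike | x + i0 * eps_bar), where eps_bar holds the time-integrated filters.
    """
    rng = np.random.default_rng(seed)
    eps_bar = np.atleast_1d(np.asarray(eps_bar, dtype=float))
    x = sigma * rng.standard_normal((n_samples, eps_bar.size))
    return float(np.mean(nonlinearity(x + i0 * eps_bar)))

def rate_step_exact(i0, sigma, eps_bar, theta):
    """Closed form for a 1D step nonlinearity: P(spike | y) = 1 if y > theta, else 0."""
    return 0.5 * (1.0 + erf((i0 * eps_bar - theta) / (sigma * sqrt(2.0))))

theta, eps_bar = 1.0, 0.8
step = lambda y: (y[:, 0] > theta).astype(float)   # y has shape (n_samples, 1)
for i0, sigma in [(0.0, 1.0), (1.5, 0.5), (0.5, 2.0)]:
    print(i0, sigma, rate_eq1_mc(i0, sigma, eps_bar, step),
          rate_step_exact(i0, sigma, eps_bar, theta))
```

The same `rate_eq1_mc` accepts any vectorized nonlinearity of an n-dimensional argument, so it also applies to multi-feature models.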

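For a one-dimensional model (n = 1), Equation 1 can be rewritten with the change of variables x → x − I₀ε̅ as

$$ f(I_0,\sigma^2) = \int dx\; P(\mathrm{spike}\mid x)\,p(x - I_0\bar\epsilon) \tag{2} $$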

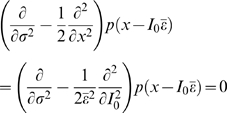

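Since p(x) is Gaussian, it is also the kernel, or Green's function, of a diffusion equation in terms of (x, σ²), and therefore so is p(x − I₀ε̅) in terms of (I₀, σ²). In other words, we have

$$ \frac{\partial}{\partial\sigma^2}\,p(x - I_0\bar\epsilon) = \frac{1}{2\bar\epsilon^{2}}\,\frac{\partial^2}{\partial I_0^2}\,p(x - I_0\bar\epsilon). $$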

Now operate with ∂/∂σ² − (1/(2ε̅²)) ∂²/∂I₀² on both sides of Equation 2. Since p(x − I₀ε̅) is the only term on the right-hand side of Equation 2 that depends on (I₀, σ²), and it obeys this diffusion equation, the right-hand side vanishes. Thus one finds


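$$ \frac{\partial f}{\partial \sigma^2} = \frac{1}{2\bar\epsilon^{2}}\,\frac{\partial^2 f}{\partial I_0^2} \tag{3} $$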

The boundary condition is given by evaluating Equation 2 as σ² → 0; here the Gaussian distribution becomes a delta function,

$$ \lim_{\sigma^2\to 0} f(I_0,\sigma^2) = \int dx\; P(\mathrm{spike}\mid x)\,\delta(x - I_0\bar\epsilon) = P(\mathrm{spike}\mid I_0\bar\epsilon), $$

and the boundary condition is given by the zero-noise f-I curve. Thus, when a model depends only on a single feature, ε(t), the f-I curve with a noisy input is given by a simple diffusion-like equation, Equation 3, with a single parameter, the time-integrated filter ε̅ = ∫dt ε(t), determining the diffusion constant 1/(2ε̅²).


Equation 3 states that the variance-dependent change in the firing rate is simply determined by the curvature of the f-I curve. Thus, a one-dimensional system displays only a single type of noise-induced gain modulation: as in diffusion, an f-I curve is gradually smoothed and flattened as the variance increases. Given a boundary condition, such as an f-I curve for a particular variance, the family of f-I relations can be reconstructed up to a scale factor by solving Equation 3. For example, one can predict how the neuron would respond to a noise stimulus based on its output in the absence of noise. Note that the solution of Equation 3 generalizes a classical result [33] based on a binary nonlinearity to a simple closed form which applies to any type of nonlinearity.
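Because p is the heat kernel, the solution of Equation 3 for a given zero-noise curve f₀ is a Gaussian average, f(I₀, σ²) = ∫dI f₀(I) N(I; I₀, σ²/ε̅²), which follows from Equation 2 with x = ε̅I and P(spike|x) = f₀(x/ε̅). The Python/NumPy sketch below evaluates this for the boundary condition used in Figure 2A, f₀ = (I + 0.1)^(1/2) for I > 0 and 0 otherwise, and checks Equation 3 by finite differences. Helper names, grid sizes and step sizes are arbitrary illustrative choices.

```python
import numpy as np

def f0_fig2(i):
    """Zero-noise f-I curve of Figure 2A on I >= 0: sqrt(I + 0.1) (it is 0 for I <= 0)."""
    return np.sqrt(i + 0.1)

def solve_eq3(i0, var, eps_bar=1.0, n=200_001, width=12.0):
    """f(I0, sigma^2) solving Equation 3 with boundary condition f(I, 0) = f0_fig2(I).

    The heat-kernel solution averages f0 over I ~ N(i0, var / eps_bar^2). Because
    f0 = 0 for I <= 0, the integral only runs over I >= 0 (trapezoid rule).
    """
    s = np.sqrt(var) / eps_bar
    i = np.linspace(0.0, max(i0, 0.0) + width * s, n)
    y = f0_fig2(i) * np.exp(-0.5 * ((i - i0) / s) ** 2) / (s * np.sqrt(2.0 * np.pi))
    h = i[1] - i[0]
    return h * (y.sum() - 0.5 * (y[0] + y[-1]))

def eq3_residual(i0, var, eps_bar=1.0, d_var=1e-2, d_i=5e-2):
    """Finite-difference residual: df/dsigma^2 - (1 / (2 eps_bar^2)) d^2f/dI0^2."""
    lhs = (solve_eq3(i0, var + d_var, eps_bar) - solve_eq3(i0, var - d_var, eps_bar)) / (2 * d_var)
    curv = (solve_eq3(i0 + d_i, var, eps_bar) - 2 * solve_eq3(i0, var, eps_bar)
            + solve_eq3(i0 - d_i, var, eps_bar)) / d_i ** 2
    return lhs - curv / (2 * eps_bar ** 2)
```

Evaluating `solve_eq3` on a grid of (I₀, σ²) shows the curve being progressively smoothed and flattened as σ² grows, and as σ² → 0 it returns the zero-noise curve.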

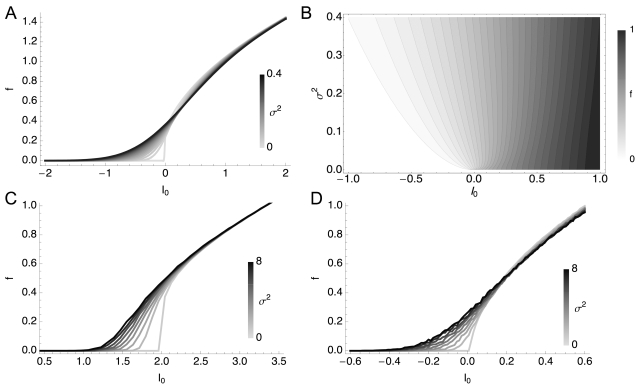

Figure 2A and 2B show a solution of Equation 3. While this one-dimensional model is based on the simplest and most general assumptions, it provides insights into the structure of variance-dependent gain modulation. The boundary condition is an f-I curve with no noise, f = (I + 0.1)^(1/2) for I > 0 and f = 0 for I ≤ 0, which imitates the general behavior of many dynamical neuron models around rheobase [34]–[36]. Compared with the HH conductance-based model, Equation 3 captures qualitative characteristics of the HH f-I curve despite differences due to the greater complexity of the HH model relative to a 1D LN model: in Figure 2A and 2B, there is a positive curvature (second derivative of firing rate with respect to current) of the f-I curve below rheobase, related to the increase of the firing rate with increasing variance. In contrast, the behavior of the HHLS model cannot be described by Equation 3. Even though the f-I curves in Figure 1C mostly have negative curvature, the firing rate keeps increasing with variance, implying that the HHLS model cannot be described by a one-dimensional LN model.

Figure 2. Variance-Dependent Gain Modulation of One-Dimensional Models.

(A) Variance-dependent f-I curves of a one-dimensional model from the solution of Equation 3 with the boundary condition f = (I + 0.1)^(1/2) for I > 0 and f = 0 for I ≤ 0 at zero noise. (B) The firing rates of (A) in the (mean, variance) plane. (C) f-I curves of an LIF model. (D) f-I curves of a QIF model. The model parameters for the LIF and QIF are in the Materials and Methods section. We used 50 mean (I₀) values from 0 to 4 (LIF) and from −2 to 2 (QIF), and 8 variances (σ²) from 0 to 8 for both models.

We also compared Equation 3 with the f-I curves from two commonly used simple neuron models, the leaky integrate-and-fire (LIF) model (Figure 2C), and a similar model with minimal nonlinearity, the quadratic integrate-and-fire (QIF) model [37],[38] (Figure 2D). The f-I curves of the two models are similar but have subtle differences: in the LIF model, the firing rate never decreases with noise, even though parameters were chosen to induce a large negative curvature, as shown analytically in Text S1. The QIF model behavior is much more similar to the 1D LN model, marked by a slight decrease in firing rate at large I₀. From this perspective, the QIF is a simpler model in terms of the LN description despite the dynamical nonlinearity.

It is interesting to note that for one-dimensional models, the gain modulation given by Equation 3 depends only on the boundary condition, which implicitly describes how an input with a given mean samples the nonlinearity, but not explicitly on the details of filters or nonlinearity. An ideal differentiator, where firing rate is independent of the stimulus mean, is realized only when the filter has zero integral, ε̅ = 0. This is also the criterion that would be satisfied if the filter itself were ideally differentiating. We will return to the relationship between the LN model functional description and that of the f-I curves in the Discussion.
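A short worked example of the zero-integral criterion, using a hypothetical biphasic filter ε(t) ∝ (1 − t/τ)e^(−t/τ) for t ≥ 0 (chosen for illustration, not a filter recovered from the models studied here): its positive and negative lobes have equal area, so ε̅ = 0, and Equation 1 with n = 1 no longer depends on I₀. The normalization constant of the filter does not affect the result.

```latex
\bar\epsilon \propto \int_0^\infty \Big(1-\frac{t}{\tau}\Big)e^{-t/\tau}\,dt
  = \tau - \frac{1}{\tau}\int_0^\infty t\,e^{-t/\tau}\,dt = \tau - \tau = 0,
\qquad
f(I_0,\sigma^2) = \int dx\; P(\mathrm{spike}\mid x + I_0\cdot 0)\,p(x)
  = \int dx\; P(\mathrm{spike}\mid x)\,p(x).
```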

Multidimensional Models

Here we examine gain modulation in the case of a system with multiple relevant features. In this case, one cannot derive a single simple equation such as Equation 3. Instead, we derive relationships between the characteristics of f(I₀, σ) curves and quantities calculated using white noise analysis.

Fixed multidimensional models can display far more complex response patterns to different stimulus statistics than one-dimensional models, because linear components in the model can now interact nonlinearly [29]. For example, in white noise analysis, as the stimulus variance increases, the distribution of the filtered stimuli also expands and probes different regions of the nonlinear threshold structure of the model. This induces a variance-dependent rotation among the filters recovered through sampling by white noise analysis, and the corresponding changes in the spike-triggered average, spike-triggered covariance, and the sampled nonlinearity [19].

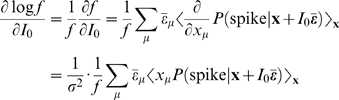

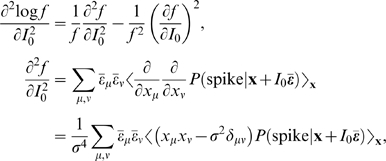

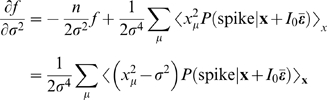

Here, we relate parameters of the changing spike-triggered average and spike-triggered covariance description to the form of the f-I curves. The relationships are derived by taking derivatives of each side of Equation 1 with respect to I 0 and σ2 (see Materials and Methods section). The first order in I 0 establishes the relationship between the STA and the gain of the f-I curve with respect to the mean

| (4) |

The second order leads to a relationship between the second derivative of the f-I curve and the covariance matrix

| (5) |

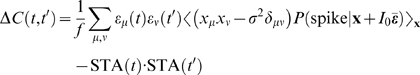

The gain with respect to the variance is

| (6) |

where

Equations 4–6 show how the nonlinear gain of an f-I curve with respect to input mean and variance is related to intrinsic adaptation as observed through changes in the STA and STC. Note that Equations 4–6 apply to one-dimensional LN models as well. In that case, the STA has the same shape as the feature in the model, and only its magnitude varies according to the overlap integral, Equation 1, between the nonlinearity of the model and the prior stimulus. The same holds for the STC, and thus Equations 4–6 are not independent. This leads to a single form of variance gain modulation, given by Equation 3. However, in a multidimensional model, changing the stimulus mean shifts the nonlinearity in a single direction, ε̅, while increasing the variance expands the prior in every direction in the stimulus space. Therefore, the overlap integral can show more diverse behaviors.

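The relations between f-I derivatives and spike-triggered moments can be checked numerically for any multidimensional LN model. The sketch below assumes a hypothetical two-feature nonlinearity, P(spike|y) = 1/(1 + exp(−(y₁ + y₂² − 1)/0.3)), which depends on the first feature and on the magnitude of the second. It weights Gaussian samples of the mean-subtracted filtered stimulus x by P to estimate STA = ⟨x⟩_spike and ΔC = ⟨(x − STA)(x − STA)ᵀ⟩_spike − σ²𝟙, forms the three right-hand sides (f/σ²)ε̅·STA, (f/σ⁴)[ε̅ᵀΔCε̅ + (ε̅·STA)²] and (f/(2σ⁴))[Tr ΔC + |STA|²], and compares them with finite-difference derivatives of the Monte Carlo firing rate computed with common random numbers. The two sides agree only up to Monte Carlo error; all names and parameter values are illustrative.

```python
import numpy as np

def p_spike(y):
    """Hypothetical 2D nonlinearity: depends on feature 1 and on |feature 2|."""
    return 1.0 / (1.0 + np.exp(-(y[:, 0] + y[:, 1] ** 2 - 1.0) / 0.3))

def check_eqs_4_to_6(i0, sigma, eps_bar, n_samples=400_000, seed=1, h=1e-2):
    """Return (finite difference, STA/STC prediction) pairs for the three relations."""
    rng = np.random.default_rng(seed)
    eps_bar = np.asarray(eps_bar, dtype=float)
    z = rng.standard_normal((n_samples, eps_bar.size))   # common random numbers

    def rate(i, var):
        # Monte Carlo version of Equation 1 with x = sqrt(var) * z
        return p_spike(np.sqrt(var) * z + i * eps_bar).mean()

    var = sigma ** 2
    x = sigma * z                                         # mean-subtracted filtered stimulus
    w = p_spike(x + i0 * eps_bar)                         # spike probability of each sample
    f = w.mean()
    sta = (w[:, None] * x).sum(axis=0) / w.sum()          # <x>_spike
    dev = x - sta
    c_spike = np.einsum("n,ni,nj->ij", w, dev, dev) / w.sum()
    d_c = c_spike - var * np.eye(eps_bar.size)            # Delta C = C_spike - sigma^2 * 1

    gain_fd = (rate(i0 + h, var) - rate(i0 - h, var)) / (2 * h)
    curv_fd = (rate(i0 + h, var) - 2 * f + rate(i0 - h, var)) / h ** 2
    dvar_fd = (rate(i0, var + h) - rate(i0, var - h)) / (2 * h)

    es = eps_bar @ sta
    return {
        "gain": (gain_fd, f / var * es),                                              # df/dI0
        "curvature": (curv_fd, f / var ** 2 * (eps_bar @ d_c @ eps_bar + es ** 2)),   # d2f/dI0^2
        "variance_gain": (dvar_fd, f / (2 * var ** 2) * (np.trace(d_c) + sta @ sta)),  # df/dsigma^2
    }

print(check_eqs_4_to_6(0.5, 1.0, [1.0, 0.5]))
```

Using the same standard-normal draws for every rate evaluation keeps the finite differences smooth, so the comparison is limited by sampling error in the spike-triggered moments rather than by differencing noise.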

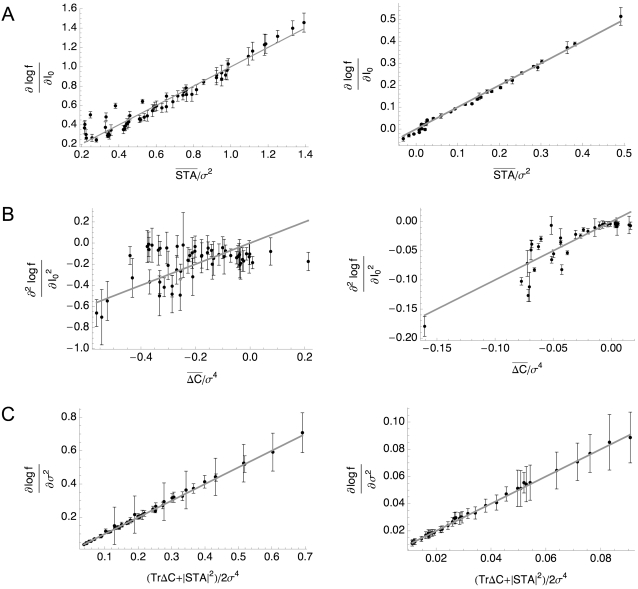

Conductance-Based Models

We now examine whether the gain modulation behaviors we have described can be captured by a multi-dimensional LN model. We tested this by computing f-I curves, spike-triggered averages, and spike-triggered covariance matrices for the noise-driven HH and HHLS models over a range of input statistics. Figure 3A, B, and C show the results of fitting simulation data from the HH (left) and HHLS (right) models to Equations 4, 5, and 6, respectively. The linear relationships are quite clear in Figure 3A and 3C, which show the gains with respect to mean and variance. Figure 3B involves the curvature of the f-I curves, which is more difficult to calculate accurately, and shows larger errors. In every case, the goodness-of-fit p-value is below 1.3×10⁻⁶ for the HH model and below 5.8×10⁻⁶ for the HHLS model; these upper bounds come from the fits to Equation 5 (Figure 3B). These results show that intrinsic adaptation of the LN model predicts the form of noise-induced gain modulation for these models.

Figure 3. Derivatives of the Firing Rate Curves with Respect to Mean and Variance Related to Quantities Obtained by White Noise Analysis for the Standard HH (Left) and HHLS (Right) Models.

Each point is calculated from the simulated data with a selected (mean, variance) input parameter pair, as described in the Materials and Methods section, and the gray lines represent our theoretical predictions, Equations 4–6, which hold when the variance-dependent change in f-I curves is only due to intrinsic adaptation. (A) Gain versus the norm of the STA, as in Equation 4. (B) Gain change versus the spike-triggered covariance term of Equation 5. (C) Change of firing rate with respect to variance versus the function of the STA and spike-triggered covariance given in Equation 6.

Gain Rescaling of One-Dimensional Models

Here we discuss a consequence of intrinsic adaptation for the neuronal encoding of mean and variance information in a one-dimensional model, where Equation 3 completely specifies intrinsic adaptation.

Our first observation is that Equation 3 is invariant under the simultaneous rescaling of the mean and standard deviation, I₀ → αI₀, σ → ασ, where α is an arbitrary positive number. A solution shares this invariance if it depends only on the dimensionless variable I₀/σ, which represents a signal-to-noise ratio if we describe the neuron's input/output function in terms of an f-I curve at a fixed noise level σ. This situation is analogous to the Weber–Fechner law [39],[40] and Fitts' law [41], which state that perception tends to depend only on dimensionless variables that are invariant under scaling of the absolute magnitude of the stimulus [42]. However, the invariance of Equation 3 under the scaling of a stimulus does not necessarily lead to the invariance of a firing rate solution. By rewriting Equation 2 in terms of the “rescaled” variables, y = x/σ and μ = I₀/σ, we get

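$$ f(I_0,\sigma^2) = \int dy\; f_0\!\left(\frac{\sigma y}{\bar\epsilon}\right)\frac{1}{\sqrt{2\pi}}\,e^{-(y-\mu\bar\epsilon)^2/2} \tag{7} $$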

where f₀(I) = P(spike|Iε̅) is the f-I curve with no noise. Thus, the scaling of f(I₀, σ²) with the standard deviation depends on the boundary condition f₀(I), which in principle can be an arbitrary function.
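The scale invariance of Equation 3 noted above can be verified in one line. For a positive scale factor α, define the rescaled function g(I₀, σ²) ≡ f(αI₀, α²σ²) (g is notation introduced here for the derivation); by the chain rule:

```latex
\frac{\partial g}{\partial \sigma^2} - \frac{1}{2\bar\epsilon^2}\frac{\partial^2 g}{\partial I_0^2}
  = \alpha^2\left[\frac{\partial f}{\partial \sigma^2}
  - \frac{1}{2\bar\epsilon^2}\frac{\partial^2 f}{\partial I_0^2}\right]_{(\alpha I_0,\;\alpha^2\sigma^2)} = 0,
\qquad g(I_0, 0) = f_0(\alpha I_0).
```

So g solves Equation 3 whenever f does, but with the rescaled boundary condition f₀(αI₀). The solution is unchanged under rescaling only if f₀(αI) = f₀(I), and it changes by a constant factor only if f₀(αI) = c(α) f₀(I), as for a power law; this is why the scaling of f is set by f₀.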

Nevertheless, in practice, the f-I curves of many dynamical neurons are not completely arbitrary but can share a simple scaling property, at least asymptotically. For example, in the QIF and many other neuron models, the f-I curve with no noise asymptotically follows a power law f₀ ∼ (I₀ − I_c)^(1/2) around the rheobase I_c [34]–[36]. In general, if f₀(I) ∝ I^α asymptotically in such a regime, then from Equation 7 the firing rate is asymptotically factorized into a σ-dependent part and a part that depends only on μ = I₀/σ, as

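$$ f(I_0,\sigma) \simeq \sigma^{\alpha}\,F\!\left(\frac{I_0}{\sigma}\right) \tag{8} $$

where F depends on μ = I₀/σ alone.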

In other words, I₀/σ becomes an intermediate asymptotic of the f-I curves [43].
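A sketch of the step from the power-law assumption to the factorized form, starting from Equation 2 for n = 1 with P(spike|x) = f₀(x/ε̅), and assuming for simplicity that f₀(I) = c I^α holds exactly for I > 0 (with f₀ = 0 otherwise) rather than only asymptotically. Substituting x = σy, and using f₀(σy/ε̅) = σ^α f₀(y/ε̅) for σ > 0:

```latex
f(I_0,\sigma^2) = \int dy\; f_0\!\Big(\frac{\sigma y}{\bar\epsilon}\Big)\,
  \frac{e^{-(y-\mu\bar\epsilon)^2/2}}{\sqrt{2\pi}}
  = \sigma^{\alpha}\int dy\; f_0\!\Big(\frac{y}{\bar\epsilon}\Big)\,
  \frac{e^{-(y-\mu\bar\epsilon)^2/2}}{\sqrt{2\pi}}
  \equiv \sigma^{\alpha}\,F(\mu), \qquad \mu = \frac{I_0}{\sigma}.
```

Here F(μ) is simply the noisy f-I curve at unit noise, evaluated at I₀ = μ. It follows that σ ∂log f/∂I₀ = F′(μ)/F(μ) depends on μ alone.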

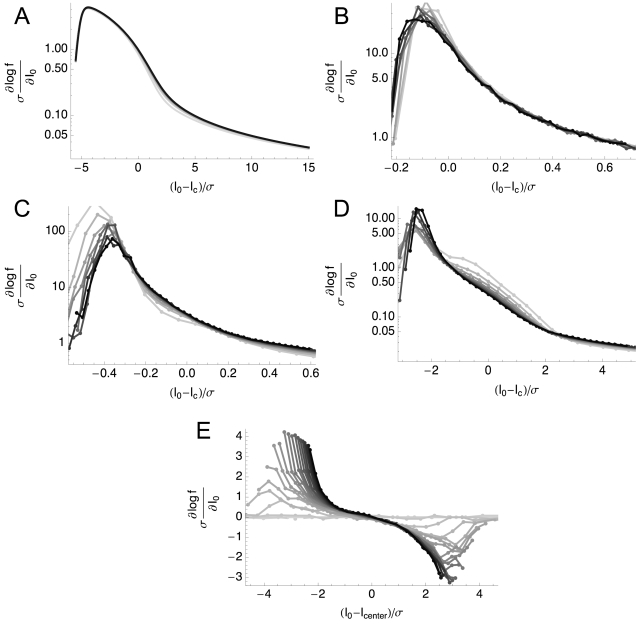

To test to what extent this scaling relationship holds in the models we have considered, we calculated the rescaled relative gain of the f-I curves, which we define as (σ/f) ∂f/∂I₀ = σ ∂log f/∂I₀; for the form of Equation 8, the rescaled relative gain depends only on μ = I₀/σ, not on σ. Thus, if the rescaling strictly holds, the rescaled relative gain becomes a single-valued function of the signal-to-noise ratio, I₀/σ, regardless of the noise level σ.
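For the one-dimensional model, the rescaled relative gain can be computed directly from the heat-kernel solution of Equation 3, a Gaussian average of the zero-noise curve. The Python/NumPy sketch below assumes ε̅ = 1 and compares two boundary conditions: a pure power law f₀ = I^(1/2) for I > 0, for which the collapse onto a single function of μ = I₀/σ is exact, and the Figure 2A curve f₀ = (I + 0.1)^(1/2) for I > 0, for which it should hold only at large σ. Helper names are hypothetical; the substitution I = u² removes the square-root singularity at I = 0 so that the trapezoid rule stays accurate.

```python
import numpy as np

def smoothed_rate(i0, sigma, f0, n=100_001, width=12.0):
    """Heat-kernel solution of Equation 3 with eps_bar = 1: E[f0(I)], I ~ N(i0, sigma^2).

    f0 is taken to vanish for I <= 0, so only I >= 0 contributes; the substitution
    I = u**2 (dI = 2u du) keeps the integrand smooth for square-root-like f0.
    """
    u = np.linspace(0.0, np.sqrt(max(i0, 0.0) + width * sigma), n)
    i = u ** 2
    y = f0(i) * np.exp(-0.5 * ((i - i0) / sigma) ** 2) / (sigma * np.sqrt(2 * np.pi)) * 2 * u
    h = u[1] - u[0]
    return h * (y.sum() - 0.5 * (y[0] + y[-1]))

def rescaled_relative_gain(mu, sigma, f0, d=1e-2):
    """sigma * d(log f)/dI0 at I0 = mu * sigma, by central differences."""
    i0, step = mu * sigma, d * sigma
    up = np.log(smoothed_rate(i0 + step, sigma, f0))
    down = np.log(smoothed_rate(i0 - step, sigma, f0))
    return sigma * (up - down) / (2 * step)

power_law = lambda i: np.sqrt(i)      # f0 = I^(1/2) for I > 0
fig2a = lambda i: np.sqrt(i + 0.1)    # f0 = (I + 0.1)^(1/2) for I > 0
for s in (0.05, 1.0, 20.0):
    print(s, rescaled_relative_gain(0.5, s, power_law), rescaled_relative_gain(0.5, s, fig2a))
```

For the power law, the three printed values in the first column coincide; for the Figure 2A curve, the offset 0.1 sets a current scale below which the collapse fails, and its relative gain approaches the power-law value as σ grows.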

We find evidence for this form of variance rescaling in the QIF, LIF, and HH models. Figure 4 shows the rescaled gains evaluated from the simulated data. The QIF and HH cases (Figure 4B and 4D) match well with the solution of Equation 3 (Figure 4A). In the LIF case (Figure 4C), the relative gain deviates at low variance but approaches a variance-independent limit at large σ; we also present an analytic account in Text S1. In contrast, the HHLS model (Figure 4E) does not exhibit this form of asymptotic scaling at all, and the role of the signal-to-noise ratio, I₀/σ, in the HHLS model appears to be quite distinct from that in the other models. In summary, Equation 3 predicts that one-dimensional LN models tend to decrease their gain with increasing noise level. However, if the f-I curve of a neuron is power-law-like, the resulting gain modulation is such that the neuron's sensitivity to changes in the mean stimulus at various noise levels is governed only by the signal-to-noise ratio.

Figure 4. Rescaled Relative Gains of Variance-Dependent f-I Curves.

(A) The one-dimensional LN, (B) QIF, and (C) LIF models. The same data as Figure 2 are used. (D) The standard HH model from Figure 1A and 1B. (E) The HHLS model from Figure 1C and 1D. Since the HHLS model does not have a rheobase, we instead used I_center = 20 nA, at which the variance-dependent increase in firing rate is maximal.

Discussion

In this paper, we have obtained analytical relationships between noise-dependent gain modulation of f-I curves and properties of the sampled linear/nonlinear model. We have shown that gain control arises as a simple consequence of the nonlinearity of the LN model, even with no changes in any underlying parameters.

For a system described by an LN model with only one relevant feature, a simple single-parameter diffusion relationship relates the f-I curves at different variances, where the role of the diffusion coefficient is taken by the integral of the STA. This form strictly limits the possible forms of gain modulation that may be manifested by such a system. The result qualitatively describes the variance dependent gain modulation of different neuron models such as the LIF, QIF, and standard HH neuron models. Models based on dynamical spike generation, such as QIF, showed better agreement with this result than the LIF model. The QIF model case is a good example of how a nonlinear dynamical system can be mapped onto an LN model description [19],[44]. The QIF model has a single dynamical equation whose subthreshold dynamics are captured approximately by a linear kernel, which takes the role of the feature; one can then determine a threshold which acts as a binary decision boundary for spiking. Thus, it is reasonable that the QIF model and the one-dimensional LN model show a similar response pattern to a noisy input. When the system has multiple relevant features, we obtain equations relating the gain with respect to the input mean and the input variance to parameters of the STA and STC. We verified these results using HH neurons displaying two different forms of noise-induced gain control.

Previous work has related different gain control behaviors to a neuron's function as an integrator or a differentiator [3],[7]. From an LN model perspective, the neuron's function is defined by specific properties of the filter or filters ε(t). An integrating filter would consist of entirely positive weights; for leaky integrators these weights will decay at large negative times. A differentiating filter implements a local subtraction of the stimulus, and so should consist of a bimodal form where the positive weights approximately cancel the negative weights.

In general, characterizations of neural function by LN model and by f-I curves are quite distinct. The f-I approach we have discussed here describes the encoding of stationary statistical properties of the stimulus by time-averaged firing rate, while the LN model describes the encoding of specific input fluctuations by single spikes, generally under a particular choice of stimulus statistics. Indeed, the LN characterization can change with the driving stimulus distribution, even, in principle, from an integrator to a differentiator. Thus, a model may, for example, act as a differentiator on short timescales but as an integrator on longer timescales. For systems whose LN approximation varies with mean and variance, the neuron's effective computation changes with stimulus statistics, and so does the information that is represented. One might then ask how the system can decode the represented information. It has been proposed that statistics of the spike train might provide the information required to decode slower-varying stimulus parameters [22],[45]. The possibility of distinguishing between responses to different stimulus statistics using the firing rate alone depends on the properties of the f-I curves.

The primary focus of this work is the restricted problem of single neurons responding to driving currents, where the integrated synaptic current in an in vivo-like condition is approximated to be a (filtered) Gaussian white noise [46]–[50]. However, our derivations can apply to arbitrary neural systems driven by white noise inputs, if f-I curves are interpreted as tuning functions with respect to the mean stimulus parameter. Given the generality of our results for neural systems, it would be interesting to test our results in cases where firing is driven by an external stimulus. A good candidate would be retinal ganglion cells, which are well-described by LN-type models [9], [14], [51]–[53], show adaptation to stimulus statistics on multiple timescales [23],[54] and display a variety of dimensionalities in their feature space [14].

A limitation of the tests we have performed here is a restriction to the low firing rate regime where spike-triggered reverse correlation captures most of the dependence of firing probability on the stimulus. The effects of interspike interaction can be significant [16],[17],[55] and models with spike history feedback have been developed to account for this [44],[51],[56],[57]. We have not investigated how spike history effects would impact our results.

Although evidence suggests that gain modulation by noise may be enhanced by slow afterhyperpolarization currents underlying spike frequency adaptation [3], these slow currents are not required to generate gain enhancement in simple neuron models [7], [19], [25]–[29]. While diverse types of noise-induced gain modulation can be generated solely by modifying the spike-generating mechanism, independently of spike history [7], in realistic situations slow adaptation currents are present and will affect neural responses over many timescales [58]–[60]. In principle, it is possible to extend our result to include these effects: f-I curves under conditions of spike frequency adaptation have already been discussed [61]–[63] and can be compared to LN models with spike history feedback. However, our goal here was to demonstrate the effects that can occur independently of slow adaptation currents and before such currents have acted to shift neuronal coding properties.

The suggestive form of our result for one-dimensional LN models led us to look for a representation of neuronal output that is invariant under change in the input noise level. Our motivation is based on a simple principle of dimensional analysis: the gains of the f-I curves with noise may be asymptotically described by a signal-to-noise ratio, a dimensionless variable depending only on the stimulus itself. We showed that this may occur if the f-I curve with no noise obeys asymptotic power-law properties. Such a property has been shown to arise both from the bifurcation patterns of spike generation [31],[34],[35] and from spike rate adaptation [61]. This relationship implies that the gain of the firing rate as a function of the mean should scale inversely with the standard deviation. Scaling of the gain of the nonlinear decision function with the stimulus standard deviation has been observed to some degree in a number of neural systems [10], [15], [22]–[25], [29], [64]–[67]. Such scaling can maximize information transmission [10],[22]. As we and others have proposed, a static model might suffice to explain this phenomenon [25],[27], although in some cases slow adaptation currents are known to contribute [65],[66].

In summary, we have presented theoretically derived relationships between the variance-dependent gain modulation of f-I curves and intrinsic adaptation in neural coding. In real neural systems, gain modulation likely arises from many different mechanisms, possibly involving long-timescale dynamics. Our results show that observed forms of gain modulation may result from a pre-existing static nonlinearity that reacts to changes in the stimulus statistics robustly and almost instantaneously.

Materials and Methods

Biophysical Models

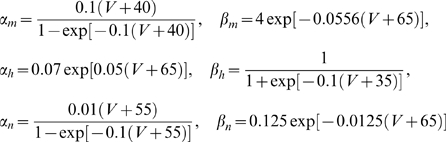

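We used two single-compartment models with Hodgkin–Huxley (HH) active currents. The first is an HH model with standard parameters, while the second (HHLS) has a lower maximal Na+ conductance and a higher maximal K+ conductance. The voltage changes are described by [32]

$$C\,\frac{dV}{dt} = -\bar{g}_{Na}\,m^{3}h\,(V-E_{Na}) - \bar{g}_{K}\,n^{4}\,(V-E_{K}) - \bar{g}_{L}\,(V-E_{L}) + I(t),$$

and the activation variables m, n, and h behave according to

$$\frac{dx}{dt} = \alpha_x(V)\,(1-x) - \beta_x(V)\,x, \qquad x \in \{m, n, h\},$$

where

$$\alpha_m = \frac{0.1\,(V+40)}{1-e^{-(V+40)/10}}, \qquad \beta_m = 4\,e^{-(V+65)/18},$$

$$\alpha_h = 0.07\,e^{-(V+65)/20}, \qquad \beta_h = \frac{1}{1+e^{-(V+35)/10}},$$

$$\alpha_n = \frac{0.01\,(V+55)}{1-e^{-(V+55)/10}}, \qquad \beta_n = 0.125\,e^{-(V+65)/80}.$$

The voltage V is in millivolts (mV), and the rates α and β are in ms⁻¹.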

For the HH model, the maximal conductances are $\bar{g}_K = 36$ mS/cm² and $\bar{g}_{Na} = 120$ mS/cm². The HHLS model has $\bar{g}_K = 41$ mS/cm² and $\bar{g}_{Na} = 79$ mS/cm². All other parameters are common to both models. The leak conductance is $\bar{g}_L = 0.3$ mS/cm², and the membrane capacitance per unit area is $C = 1$ μF/cm². The reversal potentials are $E_L = -54.3$ mV, $E_{Na} = 50$ mV, and $E_K = -77$ mV. The membrane area is 10⁻³ cm², so a current density of 1 μA/cm² corresponds to a current of 1 nA.

All simulations of these models were performed in the NEURON simulation environment [68]. Gaussian white noise currents with various means and variances were generated with an update rate of 5 kHz (dt = 0.2 ms) and delivered to the model via current clamp. For the f-I curves, we simulated 4 min of input for each (mean, variance) pair. The whole procedure was repeated five times to estimate the variability of the f-I relationship, $\sigma_{\text{repeat}}$.
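For readers without NEURON, the HH and HHLS equations above can also be integrated directly. The sketch below is a minimal stand-in under stated assumptions: it uses forward Euler with a 0.01 ms step (NEURON's own integrator differs), holds each white-noise sample for 0.2 ms to mimic the 5 kHz update rate, starts from the steady state at −65 mV, and counts a spike at each upward crossing of 0 mV. The function names, the spike criterion, and the default run length (far shorter than the 4 min used here) are hypothetical. Currents are in μA/cm², equivalent to nA for the 10⁻³ cm² membrane.

```python
import math
import random


def vtrap(x, y):
    """x / (exp(x / y) - 1), with the removable singularity at x = 0 handled."""
    if abs(x / y) < 1e-6:
        return y * (1.0 - x / y / 2.0)
    return x / (math.exp(x / y) - 1.0)


def rates(v):
    """HH opening/closing rates (1/ms) at membrane voltage v (mV)."""
    am = 0.1 * vtrap(-(v + 40.0), 10.0)
    bm = 4.0 * math.exp(-(v + 65.0) / 18.0)
    ah = 0.07 * math.exp(-(v + 65.0) / 20.0)
    bh = 1.0 / (1.0 + math.exp(-(v + 35.0) / 10.0))
    an = 0.01 * vtrap(-(v + 55.0), 10.0)
    bn = 0.125 * math.exp(-(v + 65.0) / 80.0)
    return am, bm, ah, bh, an, bn


def firing_rate(i0, sigma, g_na=120.0, g_k=36.0, t_max=500.0, dt=0.01,
                noise_dt=0.2, seed=0):
    """Mean rate (Hz) for I(t) = i0 + sigma * xi, xi ~ N(0, 1) redrawn every
    noise_dt ms. Defaults are the HH model; g_na=79, g_k=41 gives HHLS."""
    g_l, e_na, e_k, e_l, c = 0.3, 50.0, -77.0, -54.3, 1.0
    rng = random.Random(seed)
    v = -65.0
    am, bm, ah, bh, an, bn = rates(v)
    m, h, n = am / (am + bm), ah / (ah + bh), an / (an + bn)  # steady state
    hold = int(round(noise_dt / dt))
    spikes, above, i_t = 0, False, i0
    for k in range(int(round(t_max / dt))):
        if k % hold == 0:  # new noise sample every noise_dt ms
            i_t = i0 + sigma * rng.gauss(0.0, 1.0)
        am, bm, ah, bh, an, bn = rates(v)
        i_ion = (g_na * m ** 3 * h * (v - e_na) + g_k * n ** 4 * (v - e_k)
                 + g_l * (v - e_l))
        # Forward Euler: all derivatives evaluated at the old state.
        v += dt * (i_t - i_ion) / c
        m += dt * (am * (1.0 - m) - bm * m)
        h += dt * (ah * (1.0 - h) - bh * h)
        n += dt * (an * (1.0 - n) - bn * n)
        if v > 0.0 and not above:  # upward crossing of 0 mV
            spikes += 1
        above = v > 0.0
    return 1000.0 * spikes / t_max


# Illustrative short runs: HH versus HHLS at the same mean and noise level.
print(firing_rate(5.0, 3.0), firing_rate(5.0, 3.0, g_na=79.0, g_k=41.0))
```

An f-I curve would be built by sweeping `i0` at fixed `sigma`, and repeating with different `seed` values gives a rough analogue of the repeated runs used to estimate $\sigma_{\text{repeat}}$.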

We ran a separate set of simulations for reverse correlation analysis, collecting about 100,000 spikes for each stimulus condition. The means and variances of the Gaussian noise stimuli were chosen such that the mean firing rate did not exceed 10 Hz; we used eight means and seven variances for the HH model, and nine means and four variances for the HHLS model.

Integrate-and-Fire-Type Models

In addition to the conductance-based models, we investigated the behavior of two heuristic model neurons driven by a noisy current input. Each model consists of a single dynamical equation for the membrane voltage, of the form

$$C\,\frac{dV}{dt} = L(V) + I(t).$$

The first model is a leaky integrate-and-fire (LIF) model [69],[70], for which $L(V) = -g_L(V-E_L)$. We used the parameters $g_L = 2$, $E_L = 0$, and $C = 1$. Since this choice of L(V) cannot generate a spike, we additionally imposed a spiking threshold $V_{th} = 1$ and a reset voltage $V_{reset} = -3$.

The second is a quadratic integrate-and-fire (QIF) model [31],[37],[38], for which $L(V) = g_L(V-E_L)(V-V_{th})/\Delta V$, where $\Delta V = V_{th}-E_L > 0$. We used $g_L = 0.5$, $E_L = 0$, $V_{th} = 0.1$, and $C = 1$. In this model, the voltage V can increase without bound; such a trajectory is defined to be a spike if it crosses $V_{spike} = 5$. After a spike, the system is reset to $V_{reset} = 0$.

These two models were simulated using a fourth-order Runge–Kutta integration method with a time step of dt = 0.01. The input current I(t) was Gaussian white noise, updated at each time step, with a range of means and variances. The f-I curves were obtained from 1,000 s of stimulation for each (mean, variance) condition. We then compared the f-I curves from these models with the relationship derived in the Results section, Equation 5. A numerical solution of the partial differential equation was obtained using a PDE solver in Mathematica (Wolfram Research, Inc.).
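The integrate-and-fire simulations described above can be sketched as follows, using the LIF and QIF parameters given in the text. As an assumption about the procedure, each RK4 step holds the current fixed at that step's Gaussian sample, so the noise enters as a piecewise-constant input; note that with this scheme the effective noise intensity depends on dt. The function names, the starting voltage, and the shorter default run length are hypothetical.

```python
import math
import random


def lif_rhs(v, i, g_l=2.0, e_l=0.0, c=1.0):
    """LIF: C dV/dt = -g_L (V - E_L) + I."""
    return (-g_l * (v - e_l) + i) / c


def qif_rhs(v, i, g_l=0.5, e_l=0.0, v_th=0.1, c=1.0):
    """QIF: C dV/dt = g_L (V - E_L)(V - V_th) / (V_th - E_L) + I."""
    return (g_l * (v - e_l) * (v - v_th) / (v_th - e_l) + i) / c


# (right-hand side, spike/threshold voltage, reset voltage) from the text.
MODELS = {"lif": (lif_rhs, 1.0, -3.0), "qif": (qif_rhs, 5.0, 0.0)}


def rk4_step(rhs, v, i, dt):
    """One classical RK4 step with the input current held fixed over the step."""
    k1 = rhs(v, i)
    k2 = rhs(v + 0.5 * dt * k1, i)
    k3 = rhs(v + 0.5 * dt * k2, i)
    k4 = rhs(v + dt * k3, i)
    return v + dt * (k1 + 2.0 * k2 + 2.0 * k3 + k4) / 6.0


def if_rate(model, i0, sigma, t_max=200.0, dt=0.01, v0=0.0, seed=0):
    """Spikes per unit (dimensionless) time for I(t) = i0 + sigma * N(0, 1),
    with a new Gaussian sample drawn at every time step."""
    rhs, v_spike, v_reset = MODELS[model]
    rng = random.Random(seed)
    v, spikes = v0, 0
    for _ in range(int(round(t_max / dt))):
        v = rk4_step(rhs, v, i0 + sigma * rng.gauss(0.0, 1.0), dt)
        if v >= v_spike:  # threshold (LIF) or blow-up criterion (QIF)
            spikes += 1
            v = v_reset
    return spikes / t_max


# Noise-free check: for i0 > g_L * V_th the LIF fires with period
# T = (C / g_L) * ln((i0/g_L - V_reset) / (i0/g_L - V_th)); here T = 0.5 ln 9.
print(if_rate("lif", 3.0, 0.0), 1.0 / (0.5 * math.log(9.0)))
```

The noise-free LIF comparison is a convenient sanity check before sweeping the mean and variance of the input.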

Linear/Nonlinear Model

We use the linear/nonlinear (LN) cascade model framework to describe a neuron's input/output relation. We will focus on the dependence of the firing rate of a fixed LN model on the mean and variance of a Gaussian white noise input.

We take the driving input to be $I(t) = I_0 + \xi(t)$, where $I_0$ is the mean and $\xi(t)$ is Gaussian white noise with zero mean and variance $\sigma^2$. The linear part of the model selects, by linear filtering, a subset of the possible stimuli probed by I(t). That subset is expressed as n relevant features $\{\epsilon_\mu(t)\}$, μ = 1, 2, …, n. Interpreted as vectors, the components of any stimulus that are relevant to changing the firing rate can be expressed as projections onto these features. The firing rate of the model for a given temporal sequence I(t) depends only on s, the input filtered by the n relevant features. Thus the firing rate depends on the convolution of the input with all n features and can be written as $P(\text{spike}\mid s = I_0\bar\epsilon + x)$, where

$$s_\mu = \int_0^\infty d\tau\,\epsilon_\mu(\tau)\,I(t-\tau) = I_0\,\bar\epsilon_\mu + x_\mu, \qquad \bar\epsilon_\mu = \int_0^\infty d\tau\,\epsilon_\mu(\tau), \qquad x_\mu = \int_0^\infty d\tau\,\epsilon_\mu(\tau)\,\xi(t-\tau).$$

Since I(t) is white noise with stationary statistics, the projections $x_\mu$ can be taken to be stationary random variables drawn from a Gaussian distribution at each t.

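Given the filtered stimulus, a nonlinear decision function $P(\text{spike}\mid I_0\bar\epsilon + x)$ generates the instantaneous, time-varying firing rate. For a specified model and stimulus statistics, the mean firing rate $f(I_0,\sigma^2) = P(\text{spike})$ is simply

$$f(I_0,\sigma^2) = \int d^n x\; p(x)\,P(\text{spike}\mid I_0\bar\epsilon + x), \qquad (9)$$

where

$$p(x) = (2\pi\sigma^2)^{-n/2}\exp\!\left(-\frac{x\cdot x}{2\sigma^2}\right)$$

is the Gaussian distribution of the projections, taking the features to be orthonormal.

Equation 9 describes an f-I curve of the model in the presence of added noise with variance σ². The slope, or gain, of the firing rate with respect to the mean or the variance can be computed if $P(\text{spike}\mid I_0\bar\epsilon + x)$ is known. However, the gains can also be obtained from the first and second moments of the stimulus weighted by $P(\text{spike}\mid I_0\bar\epsilon + x)$, which can be measured directly by reverse correlation analysis.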
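As a hedged illustration of Equation 9, the sketch below evaluates the f-I curve of a fixed, hypothetical one-dimensional LN model (n = 1, unit-norm feature, so x ~ N(0, σ²)) with a logistic decision function. The logistic shape, its threshold and slope, and ε̄ = 1 are assumptions chosen for illustration, and Gauss–Hermite quadrature replaces the Gaussian integral. Even though the model itself never changes, its gain df/dI0 depends on the noise variance.

```python
import numpy as np
from numpy.polynomial.hermite_e import hermegauss

Z, W = hermegauss(80)
W = W / np.sqrt(2.0 * np.pi)  # sum(W * g(Z)) ~ E[g(Z)], Z ~ N(0, 1)


def decision(s, threshold=1.0, slope=4.0):
    """Hypothetical logistic decision function P(spike | s)."""
    return 1.0 / (1.0 + np.exp(-slope * (s - threshold)))


def f_of_i(i0, var, eps_bar=1.0):
    """Equation 9 for n = 1: f = E[P(spike | i0*eps_bar + x)], x ~ N(0, var)."""
    x = np.sqrt(var) * Z
    return float(np.sum(W * decision(i0 * eps_bar + x)))


def f_i_curve(i0_grid, var):
    """f-I curve and its gain df/dI0 (finite differences) at fixed variance."""
    f = np.array([f_of_i(i0, var) for i0 in i0_grid])
    return f, np.gradient(f, i0_grid)


i0_grid = np.linspace(-1.0, 3.0, 81)
for var in (0.05, 0.5):
    f, gain = f_i_curve(i0_grid, var)
    print(f"var={var}: f(I0=0)={f[20]:.4f}, max gain={gain.max():.3f}")
```

With more noise the curve rises earlier (higher rate for subthreshold means) but has a lower peak slope, a purely input-driven change in gain.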

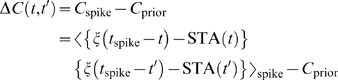

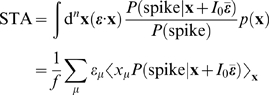

Reverse Correlation Analysis

We used spike-triggered reverse correlation to probe the computation of the model neurons in terms of an LN model. We collected about 100,000 spikes and the corresponding ensemble of spike-triggered stimulus histories, each taken from the 30 ms window preceding a spike.

From the spike-triggered input ensembles, we calculated spike-triggered averages (STAs) and spike-triggered covariances (STCs). The STA is the average of the stimuli that led to spikes, minus the mean of the "prior" stimulus distribution, that is, the distribution of all stimuli regardless of spiking output:

$$\mathrm{STA}(\tau) = \langle I(t_i-\tau)\rangle_i - I_0 = \langle \xi(t_i-\tau)\rangle_i, \qquad (10)$$

where $t_i$ are the spike times and $\langle\cdot\rangle_i$ denotes the average over spikes. Therefore, one may consider only the zero-mean noise part of the stimulus.

When computing the STC, the prior's covariance is subtracted:

$$\Delta C = C_{\text{spike}} - C_{\text{prior}}. \qquad (11)$$
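Equations 10 and 11 can be estimated from a sampled stimulus and spike train as in the sketch below. It assumes a discretized zero-mean white-noise stimulus, uses `np.cov` (which subtracts the STA before forming C_spike, one common convention and an assumption here), and takes C_prior = σ²·I for white noise. The toy LN neuron that generates the spikes (feature shape, logistic nonlinearity, a 30-bin window) is hypothetical and only there to make the example self-contained.

```python
import numpy as np


def sta_and_stc(stimulus, spike_bins, window):
    """Return the STA (Eq. 10) and Delta C = C_spike - C_prior (Eq. 11).
    Column k of the ensemble is the stimulus k bins before the spike bin."""
    spike_bins = spike_bins[spike_bins >= window - 1]
    ens = np.stack([stimulus[b - window + 1:b + 1][::-1] for b in spike_bins])
    sta = ens.mean(axis=0)                       # prior mean is zero here
    c_spike = np.cov(ens, rowvar=False)          # STA-subtracted (assumed convention)
    c_prior = np.var(stimulus) * np.eye(window)  # white-noise prior covariance
    return sta, c_spike - c_prior


# Toy LN neuron (hypothetical): one unit-norm feature, logistic nonlinearity.
rng = np.random.default_rng(0)
window = 30
lags = np.arange(window)
feature = lags * np.exp(-lags / 5.0)
feature /= np.linalg.norm(feature)
xi = rng.standard_normal(200_000)             # zero-mean, unit-variance noise
s = np.convolve(xi, feature)[: xi.size]       # s[t] = sum_k feature[k] * xi[t - k]
p = 1.0 / (1.0 + np.exp(-4.0 * (s - 1.5)))    # spike probability per bin
spike_bins = np.flatnonzero(rng.random(xi.size) < p)

sta, delta_c = sta_and_stc(xi, spike_bins, window)
evals, evecs = np.linalg.eigh(delta_c)
lead = evecs[:, np.argmax(np.abs(evals))]     # most significant STC direction
print(np.dot(sta, feature) / np.linalg.norm(sta))  # close to 1
print(abs(np.dot(lead, feature)))                  # close to 1
```

For this one-dimensional toy model both the STA and the leading eigenvector of ΔC recover the single feature; a multi-feature model would show several significant eigenvalues.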

Statistical Analysis

In calculating the slope and curvature of the f-I curves, we fitted each f-I curve with a polynomial of degree 6–10, using in each case the lowest degree that provided both a good fit and smoothness. From the fitting procedure, we obtained the standard deviation of the residuals, $\sigma_{\text{fit}}$. This was repeated for five f-I curves computed using different noise samples, and from these we computed $\sigma_{\text{repeat}}$, the standard deviation of each computed slope and curvature. We estimated the total error of our calculation as $\sigma_{\text{total}} = (\sigma_{\text{repeat}}^2 + \sigma_{\text{fit}}^2)^{1/2}$. In practice, $\sigma_{\text{repeat}}$ was always greater than $\sigma_{\text{fit}}$ by an order of magnitude. This $\sigma_{\text{total}}$ was used for the error bars in Figure 3.
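The fitting and error-combination steps above can be sketched with NumPy's `Polynomial.fit`, which rescales the input domain and so is better conditioned for degree 6–10 fits than a raw power-basis fit. The helper names, the use of `ddof=1` for $\sigma_{\text{repeat}}$, and the synthetic cubic "f-I curve" are assumptions for illustration; the choice of the lowest adequate degree is left to the caller, as in the text.

```python
import numpy as np
from numpy.polynomial import Polynomial


def slope_and_curvature(i0, rate, degree, at):
    """Fit one f-I curve with a polynomial of the given degree; return the
    fitted slope df/dI0 and curvature d2f/dI0^2 at `at`, plus sigma_fit
    (the standard deviation of the fit residuals)."""
    poly = Polynomial.fit(i0, rate, degree)
    sigma_fit = float(np.std(rate - poly(i0)))
    return float(poly.deriv(1)(at)), float(poly.deriv(2)(at)), sigma_fit


def total_error(estimates_over_repeats, sigma_fit):
    """sigma_total = sqrt(sigma_repeat^2 + sigma_fit^2), with sigma_repeat the
    standard deviation of an estimate across repeated f-I curves."""
    sigma_repeat = float(np.std(estimates_over_repeats, ddof=1))
    return float(np.hypot(sigma_repeat, sigma_fit))


# Usage on a hypothetical f-I curve with five noisy repeats.
rng = np.random.default_rng(1)
i0 = np.linspace(0.0, 5.0, 41)
true_rate = 2.0 + 3.0 * i0 - i0 ** 2 + 0.5 * i0 ** 3  # true slope at I0=2 is 5
fits = [slope_and_curvature(i0, true_rate + rng.normal(0.0, 0.05, i0.size), 6, 2.0)
        for _ in range(5)]
slopes = [fit[0] for fit in fits]
print(np.mean(slopes), total_error(slopes, np.mean([fit[2] for fit in fits])))
```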

To evaluate the goodness of fit in Figure 3, we used the Pearson χ² test with the reduced χ² statistic

$$\frac{\chi^2}{k} = \frac{1}{k}\sum_i \frac{(O_i - E_i)^2}{\sigma_{\text{total},i}^2},$$

where O and E represent the right- and left-hand sides of Equations 4–6, respectively. From this, the p-values were estimated from the cumulative distribution function of the χ² distribution, Q(χ²/k, k). The number of degrees of freedom is k = 54 for the HH model and k = 34 for the HHLS model.
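A minimal sketch of the goodness-of-fit computation, assuming per-point errors $\sigma_{\text{total},i}$ and SciPy's `scipy.stats.chi2`. As an assumption about how Q is evaluated, the p-value here is the upper-tail probability `chi2.sf` of the unreduced χ² sum with k degrees of freedom; since the text writes Q in terms of the reduced statistic, anyone reproducing the numbers should check which convention their routine expects. The function name is hypothetical.

```python
import numpy as np
from scipy.stats import chi2


def reduced_chi2_test(observed, expected, sigma_total, k):
    """Reduced chi-square of O vs E with per-point errors sigma_total, and the
    upper-tail probability of the (unreduced) chi-square with k degrees of freedom."""
    o, e, s = (np.asarray(a, dtype=float) for a in (observed, expected, sigma_total))
    chi_sq = float(np.sum(((o - e) / s) ** 2))
    return chi_sq / k, float(chi2.sf(chi_sq, k))


# Usage: two points, each off by 1 and 2 error bars, with k = 2.
print(reduced_chi2_test([1.0, 2.0], [0.0, 0.0], [1.0, 1.0], 2))
```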

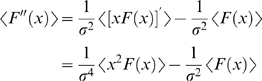

Derivation of Equations 4–6

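We first present two key identities. The first, which depends on s having additive mean and noise components, is a change of variables for the gradient of $P(\text{spike}\mid x + I_0\bar\epsilon)$:

$$\frac{\partial}{\partial I_0}P(\text{spike}\mid x + I_0\bar\epsilon) = \sum_\mu \bar\epsilon_\mu\,\frac{\partial}{\partial x_\mu}P(\text{spike}\mid x + I_0\bar\epsilon). \qquad (12)$$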

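Second, when x is a Gaussian random variable with zero mean and variance σ², with density p(x), integration by parts shows that any function F(x) satisfies

$$\int d^n x\; p(x)\,\frac{\partial F}{\partial x_\mu} = \frac{1}{\sigma^2}\int d^n x\; p(x)\,x_\mu\,F(x), \qquad (13)$$

and, applying Equation 13 twice,

$$\int d^n x\; p(x)\,\frac{\partial^2 F}{\partial x_\mu\,\partial x_\nu} = \frac{1}{\sigma^4}\int d^n x\; p(x)\,(x_\mu x_\nu - \sigma^2\delta_{\mu\nu})\,F(x).$$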
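In one dimension, Equation 13 reads ⟨F′(x)⟩ = ⟨xF(x)⟩/σ², and integrating by parts twice gives ⟨F″(x)⟩ = ⟨(x² − σ²)F(x)⟩/σ⁴. The short numerical check below evaluates both sides by Gauss–Hermite quadrature for two arbitrary test functions; the functions, the variance, and the helper name are illustrative choices. For F = sin x + x³ both sides equal e^(−σ²/2) + 3σ².

```python
import numpy as np
from numpy.polynomial.hermite_e import hermegauss

Z, W = hermegauss(60)
W = W / np.sqrt(2.0 * np.pi)  # sum(W * g(Z)) ~ E[g(Z)], Z ~ N(0, 1)


def gauss_mean(g, var):
    """E[g(x)] for x ~ N(0, var), by Gauss-Hermite quadrature."""
    return float(np.sum(W * g(np.sqrt(var) * Z)))


var = 0.7
# First-order identity with F(x) = sin(x) + x**3, so F'(x) = cos(x) + 3x^2.
lhs1 = gauss_mean(lambda x: np.cos(x) + 3 * x ** 2, var)
rhs1 = gauss_mean(lambda x: x * (np.sin(x) + x ** 3), var) / var
# Second-order identity with F(x) = cos(x) + x**4, so F''(x) = -cos(x) + 12x^2.
lhs2 = gauss_mean(lambda x: -np.cos(x) + 12 * x ** 2, var)
rhs2 = gauss_mean(lambda x: (x ** 2 - var) * (np.cos(x) + x ** 4), var) / var ** 2
print(lhs1, rhs1, np.exp(-var / 2) + 3 * var)  # all three agree
print(lhs2, rhs2)                              # these agree
```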

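Then we take derivatives of both sides of Equation 9 (or equivalently Equation 1) with respect to $I_0$ and $\sigma^2$, and apply Equations 12 and 13. The first-order derivative with respect to $I_0$ is

$$\frac{\partial f}{\partial I_0} = \sum_\mu \bar\epsilon_\mu\int d^n x\; p(x)\,\frac{\partial}{\partial x_\mu}P(\text{spike}\mid x + I_0\bar\epsilon) = \frac{1}{\sigma^2}\sum_\mu \bar\epsilon_\mu\int d^n x\; p(x)\,x_\mu\,P(\text{spike}\mid x + I_0\bar\epsilon). \qquad (14)$$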

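The second-order derivative is given by

$$\frac{\partial^2 f}{\partial I_0^2} = \frac{1}{\sigma^4}\sum_{\mu,\nu}\bar\epsilon_\mu\bar\epsilon_\nu\int d^n x\; p(x)\,(x_\mu x_\nu - \sigma^2\delta_{\mu\nu})\,P(\text{spike}\mid x + I_0\bar\epsilon), \qquad (15)$$

where $\delta_{\mu\nu}$ is the Kronecker delta. The gain with respect to variance is

$$\frac{\partial f}{\partial \sigma^2} = \frac{1}{2\sigma^4}\sum_\mu\int d^n x\; p(x)\,(x_\mu^2 - \sigma^2)\,P(\text{spike}\mid x + I_0\bar\epsilon). \qquad (16)$$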

Now we show how the right-hand sides of Equations 14–16 correspond to the STA and the STC. Given a Gaussian white noise signal ξ(t), we can split it as ξ = ξ∥ + ξ⊥, where ξ∥ belongs to the space spanned by the basis features $\{\epsilon_\mu\}$ and is therefore relevant to spiking, while ξ⊥ is the orthogonal, irrelevant part. For orthonormal features, ξ∥ can be written as

$$\xi_\parallel(t-\tau) = \sum_\mu x_\mu\,\epsilon_\mu(\tau).$$

Again, x is a Gaussian variable drawn from the distribution p(x) of Equation 9.

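The STA is

$$\mathrm{STA}(\tau) = \sum_\mu \epsilon_\mu(\tau)\int d^n x\; x_\mu\,P(x\mid\text{spike}),$$

since ξ⊥ is irrelevant to spiking and averages to zero. Here we use Bayes' theorem,

$$P(x\mid\text{spike}) = \frac{P(\text{spike}\mid s = x + I_0\bar\epsilon)\,P(s = x + I_0\bar\epsilon)}{P(\text{spike})}.$$

As in Equation 9, $P(s = x + I_0\bar\epsilon) = p(x)$, and therefore the STA becomes

$$\mathrm{STA}(\tau) = \frac{1}{f(I_0,\sigma^2)}\sum_\mu \epsilon_\mu(\tau)\int d^n x\; p(x)\,x_\mu\,P(\text{spike}\mid x + I_0\bar\epsilon).$$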

Comparing this result with Equation 14, we obtain Equation 4.

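A similar calculation for the second order [19] shows

$$\int d^n x\; x_\mu x_\nu\,P(x\mid\text{spike}) - \sigma^2\delta_{\mu\nu} = \frac{1}{f(I_0,\sigma^2)}\int d^n x\; p(x)\,(x_\mu x_\nu - \sigma^2\delta_{\mu\nu})\,P(\text{spike}\mid x + I_0\bar\epsilon).$$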

This result, combined with Equations 15 and 16, leads to Equations 5 and 6, respectively.
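For n = 1 the derivatives of Equation 9 can be written as ∂f/∂I0 = (f/σ²)·ε̄·⟨x⟩_spike and ∂f/∂σ² = f·(⟨x²⟩_spike − σ²)/(2σ⁴), where ⟨·⟩_spike averages over P(x | spike); both follow from the Gaussian integration by parts used above. The sketch below checks these numerically against finite-difference gains of a fixed, hypothetical LN model. The logistic decision function, ε̄ = 0.8, and the operating point are illustrative assumptions.

```python
import numpy as np
from numpy.polynomial.hermite_e import hermegauss

Z, W = hermegauss(80)
W = W / np.sqrt(2.0 * np.pi)  # sum(W * g(Z)) ~ E[g(Z)], Z ~ N(0, 1)
EPS_BAR = 0.8                 # hypothetical integral of the single feature


def decision(s):
    """Hypothetical logistic decision function P(spike | s)."""
    return 1.0 / (1.0 + np.exp(-4.0 * (s - 1.0)))


def rate_and_moments(i0, var):
    """f (Equation 9 with n = 1) and the spike-conditional moments <x>, <x^2>."""
    x = np.sqrt(var) * Z
    p = decision(i0 * EPS_BAR + x)
    f = float(np.sum(W * p))
    return f, float(np.sum(W * x * p)) / f, float(np.sum(W * x ** 2 * p)) / f


i0, var, h = 0.5, 0.3, 1e-4
f, mean_x, second_x = rate_and_moments(i0, var)

# Gains from central finite differences of f ...
df_di0 = (rate_and_moments(i0 + h, var)[0] - rate_and_moments(i0 - h, var)[0]) / (2 * h)
df_dvar = (rate_and_moments(i0, var + h)[0] - rate_and_moments(i0, var - h)[0]) / (2 * h)
# ... and the same gains predicted from spike-triggered moments alone.
pred_di0 = f * EPS_BAR * mean_x / var
pred_dvar = f * (second_x - var) / (2 * var ** 2)
print(df_di0, pred_di0)
print(df_dvar, pred_dvar)
```

In an experiment only the right-hand quantities (rate and spike-triggered moments at one stimulus condition) are needed, which is what makes these relations testable from reverse correlation data.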

Supporting Information

Firing Rate of the LIF Model with Noisy Stimuli.

(0.09 MB DOC)

Footnotes

The authors have declared that no competing interests exist.

This work was supported by a Burroughs-Wellcome Careers at the Scientific Interface grant, a Sloan Research Fellowship, and a McKnight Scholar Award to ALF. BNL was supported by grant number F30NS055650 from the National Institute of Neurological Disorders and Stroke, the Medical Scientist Training Program at the University of Washington supported by the National Institute of General Medical Sciences, and an Achievement Rewards for College Scientists fellowship.

References

1. Chance FS, Abbott LF, Reyes AD. Gain modulation from background synaptic input. Neuron. 2002;35:773–782. doi: 10.1016/s0896-6273(02)00820-6.

2. Fellous JM, Rudolph M, Destexhe A, Sejnowski TJ. Synaptic background noise controls the input/output characteristics of single cells in an in vitro model of in vivo activity. Neuroscience. 2003;122:811–829. doi: 10.1016/j.neuroscience.2003.08.027.

3. Higgs MH, Slee SJ, Spain WJ. Diversity of gain modulation by noise in neocortical neurons: regulation by the slow afterhyperpolarization conductance. J Neurosci. 2006;26:8787–8799. doi: 10.1523/JNEUROSCI.1792-06.2006.

4. Arsiero M, Lüscher HR, Lundstrom BN, Giugliano M. The impact of input fluctuations on the frequency–current relationships of layer 5 pyramidal neurons in the rat medial prefrontal cortex. J Neurosci. 2007;27:3274–3284. doi: 10.1523/JNEUROSCI.4937-06.2007.

5. Prescott SA, De Koninck Y. Gain control of firing rate by shunting inhibition: roles of synaptic noise and dendritic saturation. Proc Natl Acad Sci USA. 2003;100:2076–2081. doi: 10.1073/pnas.0337591100.

6. Prescott SA, Ratté S, De Koninck Y, Sejnowski TJ. Nonlinear interaction between shunting and adaptation controls a switch between integration and coincidence detection in pyramidal neurons. J Neurosci. 2006;26:9084–9097. doi: 10.1523/JNEUROSCI.1388-06.2006.

7. Lundstrom BN, Hong S, Fairhall AL. Two computational regimes of a single-compartment neuron separated by a planar boundary in conductance space. Neural Comput. 2008;20:1239–1260. doi: 10.1162/neco.2007.05-07-536.

8. Victor J, Shapley R. A method of nonlinear analysis in the frequency domain. Biophys J. 1980;29:459–483. doi: 10.1016/S0006-3495(80)85146-0.

9. Meister M, Berry MJ II. The neural code of the retina. Neuron. 1999;22:435–450. doi: 10.1016/s0896-6273(00)80700-x.

10. Brenner N, Bialek W, de Ruyter van Steveninck R. Adaptive rescaling maximizes information transmission. Neuron. 2000;26:695–702. doi: 10.1016/s0896-6273(00)81205-2.

11. Simoncelli EP, Paninski L, Pillow J, Schwartz O. Characterization of neural responses with stochastic stimuli. In: Gazzaniga M, editor. The Cognitive Neurosciences. 3rd edition. Cambridge (Massachusetts): MIT Press; 2004.

12. Rust NC, Schwartz O, Movshon JA, Simoncelli EP. Spatiotemporal elements of macaque V1 receptive fields. Neuron. 2005;46:945–956. doi: 10.1016/j.neuron.2005.05.021.

13. Stanley GB, Lei FF, Dan Y. Reconstruction of natural scenes from ensemble responses in the lateral geniculate nucleus. J Neurosci. 1999;19:8036–8042. doi: 10.1523/JNEUROSCI.19-18-08036.1999.

14. Fairhall AL, Burlingame CA, Narasimhan R, Harris RA, Puchalla JL, et al. Selectivity for multiple stimulus features in retinal ganglion cells. J Neurophysiol. 2006;96:2724–2738. doi: 10.1152/jn.00995.2005.

15. Maravall M, Petersen RS, Fairhall AL, Arabzadeh E, Diamond ME. Shifts in coding properties and maintenance of information transmission during adaptation in barrel cortex. PLoS Biol. 2007;5:e19. doi: 10.1371/journal.pbio.0050019.

16. Agüera y Arcas B, Fairhall AL. What causes a neuron to spike? Neural Comput. 2003;15:1715–1749. doi: 10.1162/08997660360675017.

17. Agüera y Arcas B, Fairhall AL, Bialek W. Computation in a single neuron: Hodgkin and Huxley revisited. Neural Comput. 2003;15:1789–1807. doi: 10.1162/08997660360675017.

18. Slee SJ, Higgs MH, Fairhall AL, Spain WJ. Two-dimensional time coding in the auditory brainstem. J Neurosci. 2005;25:9978–9988. doi: 10.1523/JNEUROSCI.2666-05.2005.

19. Hong S, Agüera y Arcas B, Fairhall AL. Single neuron computation: from dynamical system to feature detector. Neural Comput. 2007;19:3133–3172. doi: 10.1162/neco.2007.19.12.3133.

20. Atick JJ. Could information theory provide an ecological theory of sensory processing? Network (Bristol, England). 1992;3:213–251. doi: 10.3109/0954898X.2011.638888.

21. Theunissen FE, Sen K, Doupe AJ. Spectral-temporal receptive fields of nonlinear auditory neurons obtained using natural sounds. J Neurosci. 2000;20:2315–2331. doi: 10.1523/JNEUROSCI.20-06-02315.2000.

22. Fairhall A, Lewen G, Bialek W, de Ruyter van Steveninck RR. Efficiency and ambiguity in an adaptive neural code. Nature. 2001;412:787–792. doi: 10.1038/35090500.

23. Baccus SA, Meister M. Fast and slow contrast adaptation in retinal circuitry. Neuron. 2002;36:909–919. doi: 10.1016/s0896-6273(02)01050-4.

24. Nagel KI, Doupe AJ. Temporal processing and adaptation in the songbird auditory forebrain. Neuron. 2006;51:845–859. doi: 10.1016/j.neuron.2006.08.030.

25. Gaudry KS, Reinagel P. Benefits of contrast normalization demonstrated in neurons and model cells. J Neurosci. 2007;27:8071–8079. doi: 10.1523/JNEUROSCI.1093-07.2007.

26. Rudd ME, Brown LG. Noise adaptation in integrate-and-fire neurons. Neural Comput. 1997;9:1047–1069. doi: 10.1162/neco.1997.9.5.1047.

27. Paninski L, Lau B, Reyes AD. Noise-driven adaptation: in vitro and mathematical analysis. Neurocomputing. 2003;52:877–883.

28. Yu Y, Lee TS. Dynamical mechanisms underlying contrast gain control in single neurons. Phys Rev E. 2003;68:011901. doi: 10.1103/PhysRevE.68.011901.

29. Borst A, Flanagin VL, Sompolinsky H. Adaptation without parameter change: dynamic gain control in motion detection. Proc Natl Acad Sci USA. 2005;102:6172–6176. doi: 10.1073/pnas.0500491102.

30. Hodgkin AL. The local electric changes associated with repetitive action in a non-medullated axon. J Physiol. 1948;107:165–181. doi: 10.1113/jphysiol.1948.sp004260.

31. Izhikevich EM. Dynamical Systems in Neuroscience: The Geometry of Excitability and Bursting. Cambridge (Massachusetts): MIT Press; 2006.

32. Hodgkin AL, Huxley AF. A quantitative description of membrane current and its application to conduction and excitation in nerve. J Physiol. 1952;117:500–544. doi: 10.1113/jphysiol.1952.sp004764.

33. Spekreijse H, Reits D. Sequential analysis of the visual evoked potential system in man: nonlinear analysis of a sandwich system. Ann N Y Acad Sci. 1982;388:72–97. doi: 10.1111/j.1749-6632.1982.tb50785.x.

34. Ermentrout GB. Reduction of conductance-based models with slow synapses to neural nets. Neural Comput. 1994;6:679–695.

35. Rinzel JM, Ermentrout GB. Analysis of neuronal excitability. In: Koch C, Segev I, editors. Methods in Neuronal Modeling: From Synapses to Networks. Cambridge (Massachusetts): MIT Press; 1989. pp. 135–170.

36. Hoppensteadt F, Izhikevich EM. Weakly Connected Neural Nets. Berlin: Springer-Verlag; 1997.

37. Ermentrout GB, Kopell N. Parabolic bursting in an excitable system coupled with a slow oscillation. SIAM J Appl Math. 1986;46:233–253.

38. Ermentrout B. Type I membranes, phase resetting curves, and synchrony. Neural Comput. 1996;8:979–1001. doi: 10.1162/neco.1996.8.5.979.

39. Weber EH. De Pulsu, Resorptione, Auditu et Tactu. Annotationes Anatomicae et Physiologicae. Lipsiae: Koehler; 1834.

40. Fechner G. Elements of Psychophysics. New York: Holt, Rinehart and Winston; 1966.

41. Fitts PM. The information capacity of the human motor system in controlling the amplitude of movement. J Exp Psychol. 1954;47:381–391.

42. Stevens SS. Psychophysics: Introduction to Its Perceptual, Neural, and Social Prospects. Piscataway (New Jersey): Transaction Publishers; 1986.

43. Barenblatt GI. Scaling. Cambridge, UK: Cambridge University Press; 2003.

44. Gerstner W, Kistler W. Spiking Neuron Models: Single Neurons, Populations, Plasticity. Cambridge, UK: Cambridge University Press; 2002.

45. Lundstrom BN, Fairhall AL. Decoding stimulus variance from a distributional neural code of interspike intervals. J Neurosci. 2006;26:9030–9037. doi: 10.1523/JNEUROSCI.0225-06.2006.

46. Gerstein GL, Mandelbrot B. Random walk models for the spike activity of a single neuron. Biophys J. 1964;4:41–68. doi: 10.1016/s0006-3495(64)86768-0.

47. Bryant HL, Segundo JP. Spike initiation by transmembrane current: a white-noise analysis. J Physiol. 1976;260:279–314. doi: 10.1113/jphysiol.1976.sp011516.

48. Mainen ZF, Sejnowski TJ. Reliability of spike timing in neocortical neurons. Science. 1995;268:1503–1506. doi: 10.1126/science.7770778.

49. Destexhe A, Paré D. Impact of network activity on the integrative properties of neocortical pyramidal neurons in vivo. J Neurophysiol. 1999;81:1531–1547. doi: 10.1152/jn.1999.81.4.1531.

50. Rudolph M, Destexhe A. Characterization of subthreshold voltage fluctuations in neuronal membranes. Neural Comput. 2003;15:2577–2618. doi: 10.1162/089976603322385081.

51. Keat J, Reinagel P, Reid RC, Meister M. Predicting every spike: a model for the responses of visual neurons. Neuron. 2001;30:803–817. doi: 10.1016/s0896-6273(01)00322-1.

52. Chichilnisky EJ. A simple white noise analysis of neuronal light responses. Network (Bristol, England). 2001;12:199–213.

53. Pillow JW, Paninski L, Uzzell VJ, Simoncelli EP, Chichilnisky EJ. Prediction and decoding of retinal ganglion cell responses with a probabilistic spiking model. J Neurosci. 2005;25:11003–11013. doi: 10.1523/JNEUROSCI.3305-05.2005.

54. Smirnakis SM, Berry MJ, Warland DK, Bialek W, Meister M. Adaptation of retinal processing to image contrast and spatial scale. Nature. 1997;386:69–73. doi: 10.1038/386069a0.

55. Pillow JW, Simoncelli EP. Biases in white noise analysis due to non-Poisson spike generation. Neurocomputing. 2003;52–54:109–115.

56. Truccolo W, Eden UT, Fellows MR, Donoghue JP, Brown EN. A point process framework for relating neural spiking activity to spiking history, neural ensemble, and extrinsic covariate effects. J Neurophysiol. 2005;93:1074–1089. doi: 10.1152/jn.00697.2004.

57. Paninski L, Pillow J, Lewi J. Statistical models for neural encoding, decoding, and optimal stimulus design. Prog Brain Res. 2006;165:493–507. doi: 10.1016/S0079-6123(06)65031-0.

58. Schwindt PC, Spain WJ, Foehring RC, Stafstrom CE, Chubb MC, et al. Multiple potassium conductances and their functions in neurons from cat sensorimotor cortex in vitro. J Neurophysiol. 1988;59:424–449. doi: 10.1152/jn.1988.59.2.424.

59. Spain WJ, Schwindt PC, Crill WE. Two transient potassium currents in layer V pyramidal neurones from cat sensorimotor cortex. J Physiol. 1991;434:591–607. doi: 10.1113/jphysiol.1991.sp018488.

60. La Camera G, Rauch A, Thurbon D, Lüscher HR, Senn W, et al. Multiple time scales of temporal response in pyramidal and fast spiking cortical neurons. J Neurophysiol. 2006;96:3448–3464. doi: 10.1152/jn.00453.2006.

61. Ermentrout B. Linearization of F-I curves by adaptation. Neural Comput. 1998;10:1721–1729. doi: 10.1162/089976698300017106.

62. Benda J, Herz AVM. A universal model for spike-frequency adaptation. Neural Comput. 2003;15:2523–2564. doi: 10.1162/089976603322385063.

63. La Camera G, Rauch A, Lüscher HR, Senn W, Fusi S. Minimal models of adapted neuronal response to in vivo-like input currents. Neural Comput. 2004;16:2101–2124. doi: 10.1162/0899766041732468.

64. Kim KJ, Rieke F. Temporal contrast adaptation in the input and output signals of salamander retinal ganglion cells. J Neurosci. 2001;21:287–299. doi: 10.1523/JNEUROSCI.21-01-00287.2001.

65. Arganda S, Guantes R, de Polavieja GG. Sodium pumps adapt spike bursting to stimulus statistics. Nat Neurosci. 2007;10:1467–1473. doi: 10.1038/nn1982.

66. Diaz-Quesada M, Maravall M. Intrinsic mechanisms for adaptive gain rescaling in barrel cortex. J Neurosci. 2008;28:696–710. doi: 10.1523/JNEUROSCI.4931-07.2008.

67. Ringach DL, Malone BJ. The operating point of the cortex: neurons as large deviation detectors. J Neurosci. 2007;27:7673–7683. doi: 10.1523/JNEUROSCI.1048-07.2007.

68. Hines ML, Carnevale NT. The NEURON simulation environment. Neural Comput. 1997;9:1179–1209. doi: 10.1162/neco.1997.9.6.1179.

69. Knight BW. Dynamics of encoding in a population of neurons. J Gen Physiol. 1972;59:734. doi: 10.1085/jgp.59.6.734.

70. Tuckwell HC. Introduction to Theoretical Neurobiology. Cambridge, UK: Cambridge University Press; 1988.
