Abstract

Recently, considerable interest in projected gradient (PG) methods has been observed, owing to their high efficiency in solving large-scale convex minimization problems subject to linear constraints. The minimization problems underlying nonnegative matrix factorization (NMF) of large matrices match this class of problems well. We therefore investigate and test some recent PG methods in the context of their applicability to NMF. In particular, the paper focuses on the following modified methods: projected Landweber, Barzilai-Borwein gradient projection, projected sequential subspace optimization (PSESOP), interior-point Newton (IPN), and sequential coordinate-wise. The proposed and implemented NMF PG algorithms are compared in terms of signal-to-interference ratio (SIR) and elapsed time, using a simple benchmark of mixed, partially dependent nonnegative signals.

1. Introduction and Problem Statement

Nonnegative matrix factorization (NMF) finds nonnegative factors (matrices) $A = [a_{ij}] \in \mathbb{R}^{I\times J}$ and $X = [x_{jt}] \in \mathbb{R}^{J\times T}$, with $a_{ij} \ge 0$ and $x_{jt} \ge 0$, such that $Y \cong AX$. The inputs are the observation matrix $Y = [y_{it}] \in \mathbb{R}^{I\times T}$, the lower rank $J$, and possibly other statistical information on the observed data or on the factors to be estimated.

This method has found a variety of real-world applications in areas such as blind separation of images and nonnegative signals [1–6], spectra recovery [7–10], pattern recognition and feature extraction [11–16], dimensionality reduction, segmentation and clustering [17–32], language modeling and text mining [25, 33], music transcription [34], and neurobiology (gene separation) [35, 36].

Depending on the application, the estimated factors may have different interpretations. For example, Lee and Seung [11] introduced NMF as a method for decomposing an image (face) into parts-based representations (parts reminiscent of features such as lips, eyes, nose, etc.). In blind source separation (BSS) [1, 37, 38], the matrix $Y$ represents the observed mixed (superposed) signals or images, $A$ is a mixing operator, and $X$ is a matrix of true source signals or images. Each row of $Y$ or $X$ is a signal or a 1D image representation. $I$ is the number of observed mixed signals and $J$ is the number of hidden (source) components. The index $t$ usually denotes a sample (discrete time instant), and $T$ is the number of available samples. In BSS, we usually have $T \gg I \ge J$. $J$ is either known or can be estimated relatively easily using SVD or PCA.

Our objective is to estimate the mixing matrix $A$ and the sources $X$ subject to nonnegativity constraints on all entries. We are given $Y$ and possibly some knowledge of the statistical distribution of the noisy disturbances.

In general, NMF is not unique: it is subject to scale and permutation indeterminacies. These problems have recently been addressed by many researchers [39–42] and are not discussed in this paper. However, we have shown by extensive computer simulations that, in practice, a unique nonnegative factorization can be achieved with overwhelming probability, neglecting the unavoidable scaling and permutation ambiguities. This holds if the data are sufficiently sparse and a suitable NMF algorithm is applied [43]. This is consistent with very recent theoretical results [40].

The noise distribution is strongly application-dependent; however, in many BSS applications, Gaussian noise is expected. Our considerations are restricted to this case. Alternative NMF algorithms optimized for other noise distributions exist and can be found, for example, in [37, 44, 45].

NMF was proposed by Paatero and Tapper [46, 47], but Lee and Seung [11, 48] greatly popularized the method by using simple multiplicative algorithms to perform NMF. An extensive study on the convergence of multiplicative algorithms for NMF can be found in [49]. In general, multiplicative algorithms are known to converge very slowly for large-scale problems. For this reason, there is a need for more efficient and faster NMF algorithms, and many approaches have been proposed in the literature to alleviate this problem. One of them is to apply projected gradient (PG) algorithms [50–53] or projected alternating least-squares (ALS) algorithms [33, 54, 55] instead of multiplicative ones. Lin [52] suggested applying the Armijo rule to estimate the learning parameters in projected gradient updates for NMF. The NMF PG algorithms we proposed in [53] also address the selection of a learning parameter that gives the steepest descent while keeping some distance from the boundary of the nonnegative orthant (the set of vectors with nonnegative entries). Another very robust technique exploits the information from the second-order Taylor expansion term of a cost function to speed up convergence. This approach was proposed in [45, 56]. There, the mixing matrix $A$ is updated with the projected Newton method, and the sources in $X$ are computed with the projected least-squares method (the fixed-point algorithm).

In this paper, we extend the approach to NMF that we initiated in [53]. We have investigated, extended, and tested several recently proposed PG algorithms, namely the oblique projected Landweber [57], Barzilai-Borwein gradient projection [58], projected sequential subspace optimization [59, 60], interior-point Newton [61], and sequential coordinate-wise [62] methods. All the algorithms presented in this paper are quite efficient for solving large-scale minimization problems subject to nonnegativity and sparsity constraints.

The main objective of this paper is to develop, extend, and/or modify some of the most promising PG algorithms for the standard NMF problem. We also aim to find optimal conditions or parameters for this class of NMF algorithms. The second objective is to compare the performance and complexity of these algorithms on NMF problems, and to identify the most efficient and promising ones. We would like to emphasize that most of the discussed algorithms have not previously been implemented, used, or even tested for NMF problems. Rather, they have been considered for solving a standard system of algebraic equations $A x^{(k)} = y^{(k)}$ (with only $k = 1$), where the matrix $A$ and the vector $y$ are known. In this paper, we consider a much more difficult problem with two sets of parameters and additional nonnegativity and/or sparsity constraints. It was therefore not clear whether such algorithms would work efficiently for NMF problems and, if so, which projected algorithms would be the most efficient. To the best of our knowledge, only the Lin-PG NMF algorithm is widely known and used for NMF problems. We have demonstrated experimentally that several novel PG algorithms are much more efficient and consistent than the Lin-PG algorithm.

In Section 2, we briefly discuss the PG approach to NMF. Section 3 describes the tested algorithms. The experimental results are illustrated in Section 4. Finally, some conclusions are given in Section 5.

2. Projected Gradient Algorithms

In contrast to the multiplicative algorithms, the class of PG algorithms has additive updates. The algorithms discussed here approximately solve the nonnegative least squares (NNLS) problems that arise in the basic alternating minimization technique used in NMF:

$$\min_{x_t \ge 0} D_F(y_t \| A x_t) = \frac{1}{2}\|y_t - A x_t\|_2^2, \qquad t = 1, \dots, T, \tag{1}$$

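$$\min_{a_i \ge 0} D_F(y_i \| X^T a_i) = \frac{1}{2}\|y_i - X^T a_i\|_2^2, \qquad i = 1, \dots, I, \tag{2}$$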

or in the equivalent matrix form

$$\min_{X \ge 0} D_F(Y \| AX) = \frac{1}{2}\|Y - AX\|_F^2, \tag{3}$$

$$\min_{A \ge 0} D_F(Y \| AX) = \frac{1}{2}\|Y - AX\|_F^2, \tag{4}$$

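where $A = [a_1, \dots, a_J] \in \mathbb{R}^{I\times J}$, $A^T = [a_1, \dots, a_I] \in \mathbb{R}^{J\times I}$, $X = [x_1, \dots, x_T] \in \mathbb{R}^{J\times T}$, $X^T = [x_1, \dots, x_J] \in \mathbb{R}^{T\times J}$, $Y = [y_1, \dots, y_T] \in \mathbb{R}^{I\times T}$, $Y^T = [y_1, \dots, y_I] \in \mathbb{R}^{T\times I}$, and usually $I \ge J$. The matrix $A$ is assumed to have full column rank. The NNLS problem is then strictly convex with respect to one set of variables ($X$), so a unique solution $X^* \in \mathbb{R}^{J\times T}$ exists for any matrix $Y$.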

The solution $x_t^*$ to (1) satisfies the Karush-Kuhn-Tucker (KKT) conditions:

$$x_t^* \ge 0, \qquad g_X(x_t^*) \ge 0, \qquad g_X(x_t^*)^T x_t^* = 0, \tag{5}$$

or in the matrix notation

$$X^* \ge 0, \qquad G_X(X^*) \ge 0, \qquad \operatorname{tr}\{G_X(X^*)^T X^*\} = 0, \tag{6}$$

where $g_X$ and $G_X$ are the corresponding gradient vector and gradient matrix:

$$g_X(x_t) = \nabla_{x_t} D_F(y_t \| A x_t) = A^T (A x_t - y_t), \tag{7}$$

$$G_X(X) = \nabla_X D_F(Y \| AX) = A^T (AX - Y). \tag{8}$$
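As a small illustration of (6)-(8), the sketch below computes the gradient matrix $G_X$ and reports how far a candidate $X$ is from satisfying each KKT condition. The examples in this section use Python/NumPy; this is our choice, since the authors' own code is Matlab. The helper name `kkt_violations` is hypothetical, and measuring each violation by its largest entry is our assumption.

```python
import numpy as np


def kkt_violations(A, X, Y):
    """Largest violations of the KKT conditions (6) for min_{X >= 0} 0.5 * ||Y - A X||_F^2."""
    G = A.T @ (A @ X - Y)                          # gradient matrix G_X, eq. (8)
    neg_x = float(np.max(np.maximum(-X, 0.0)))     # violation of X >= 0
    neg_g = float(np.max(np.maximum(-G, 0.0)))     # violation of G_X >= 0
    compl = abs(float(np.sum(G * X)))              # |tr(G_X^T X)|, complementarity
    return neg_x, neg_g, compl


A = np.eye(2)
Y = np.array([[1.0, -2.0], [3.0, -0.5]])
print(kkt_violations(A, np.maximum(Y, 0.0), Y))
```

For $A = I$ the NNLS solution is $\max\{Y, 0\}$ (elementwise), so all three returned values are zero in the example.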

Similarly, the KKT conditions for the solution $a_i^*$ to (2) and the solution $A^*$ to (4) are as follows:

$$a_i^* \ge 0, \qquad g_A(a_i^*) \ge 0, \qquad g_A(a_i^*)^T a_i^* = 0, \tag{9}$$

or in the matrix notation:

$$A^* \ge 0, \qquad G_A(A^*) \ge 0, \qquad \operatorname{tr}\{A^* G_A(A^*)^T\} = 0, \tag{10}$$

where $g_A$ and $G_A$ are the gradient vector and gradient matrix of the objective function:

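$$g_A(a_i) = \nabla_{a_i} D_F(y_i \| X^T a_i) = X (X^T a_i - y_i), \qquad G_A(A) = \nabla_A D_F(Y \| AX) = (AX - Y) X^T. \tag{11}$$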

There are many approaches to solving problems (1) and (2), or equivalently (3) and (4). In this paper, we discuss selected projected gradient methods that can generally be expressed by the iterative updates:

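$$X^{(k+1)} = P_\Omega\big[X^{(k)} + \eta_X^{(k)} P_X^{(k)}\big], \qquad A^{(k+1)} = P_\Omega\big[A^{(k)} + \eta_A^{(k)} P_A^{(k)}\big], \tag{12}$$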

where $P_\Omega[\xi]$ is the projection of $\xi$ onto the convex feasible set $\Omega = \{\xi \in \mathbb{R} : \xi \ge 0\}$, namely, the nonnegative orthant $\mathbb{R}_+$ (the set of nonnegative real numbers). $P_X^{(k)}$ and $P_A^{(k)}$ are descent directions for $X$ and $A$, and $\eta_X^{(k)}$ and $\eta_A^{(k)}$ are learning rates, respectively.

The projection $P_\Omega[\xi]$ can be performed in several ways. One of the simplest techniques is to replace all negative entries in $\xi$ by zero. In practice, a small positive number $\epsilon$ is often used instead of zero to avoid numerical instabilities. Thus,

$$\big[P_\Omega[\xi]\big]_i = \max\{\epsilon, \xi_i\}. \tag{13}$$

However, this is not the only way to carry out the projection $P_\Omega[\xi]$. It is typically more efficient to choose the learning rates $\eta_X^{(k)}$ and $\eta_A^{(k)}$ so as to preserve the nonnegativity of the solutions. Nonnegativity can also be maintained by solving least-squares problems subject to the constraints (6) and (10). Here we present exemplary PG methods that work quite efficiently for NMF problems. We implemented them in the Matlab toolboxes NMFLAB/NTFLAB for signal and image processing [43]. For simplicity, we focus on updating the matrix $X$ and assume that the updates for $A$ can be obtained in a similar way. The updates for $A$ follow readily from solving the transposed system $X^T A^T = Y^T$ with $X$ fixed (updated in the previous step).
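The generic update (12) with the simple projection (13) can be sketched as follows. This is a minimal illustration, not the NMFLAB implementation, and the names `pg_step_X` and `pg_step_A` are hypothetical. The usage loop uses a fixed step of $1/\lambda_{\max}$; this is an assumption, chosen because it guarantees that neither half-step increases the cost. The update for $A$ reuses the update for $X$ on the transposed system $X^T A^T = Y^T$, as described above.

```python
import numpy as np


def project(Z, eps=0.0):
    """P_Omega, cf. (13): elementwise max(eps, z); eps = 0 is the exact projection."""
    return np.maximum(Z, eps)


def pg_step_X(A, X, Y, eta, eps=0.0):
    """One update (12) for X with the descent direction P_X = -G_X = A^T (Y - A X)."""
    P = A.T @ (Y - A @ X)
    return project(X + eta * P, eps)


def pg_step_A(A, X, Y, eta, eps=0.0):
    """The same update for A, obtained from the transposed system X^T A^T = Y^T."""
    return pg_step_X(X.T, A.T, Y.T, eta, eps).T


def cost(A, X, Y):
    return 0.5 * np.linalg.norm(Y - A @ X) ** 2


rng = np.random.default_rng(0)
Y = rng.random((6, 3)) @ rng.random((3, 20))
A, X = rng.random((6, 3)), rng.random((3, 20))
costs = [cost(A, X, Y)]
for _ in range(50):
    # Hypothetical fixed step 1 / lambda_max(.) for each half-step.
    X = pg_step_X(A, X, Y, 1.0 / np.linalg.eigvalsh(A.T @ A).max())
    A = pg_step_A(A, X, Y, 1.0 / np.linalg.eigvalsh(X @ X.T).max())
    costs.append(cost(A, X, Y))
```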

3. Algorithms

3.1. Oblique Projected Landweber Method

The Landweber method [63] performs gradient-descent minimization with the following iterative scheme:

$$X^{(k+1)} = X^{(k)} - \eta\, G_X^{(k)}, \tag{14}$$

where the gradient is given by (8) and the learning rate $\eta \in (0, \eta_{\max})$. This update converges asymptotically to the minimal-norm least-squares solution, with the convergence radius given by

$$\eta_{\max} = \frac{2}{\lambda_{\max}(A^T A)}, \tag{15}$$

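where $\lambda_{\max}(A^T A)$ is the maximal eigenvalue of $A^T A$. Since $A$ is nonnegative, so is $A^T A$, and its largest eigenvalue is bounded by its largest row sum: $\lambda_{\max}(A^T A) \le \max_j [A^T A \mathbf{1}_J]_j$, where $\mathbf{1}_J = [1, \dots, 1]^T \in \mathbb{R}^J$. Thus, the modified Landweber iterations can be expressed by the formula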

| (16) |

In the oblique projected Landweber (OPL) method [57], which can be regarded as a particular case of the PG iterative formula (12), the solution obtained with (14) in each iterative step is projected onto the feasible set. This gives Algorithm 1 for updating $X$.

Algorithm 1 (OPL).
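The body of Algorithm 1 is not reproduced here, so the following is only a sketch of an oblique projected Landweber update under an assumed choice of step sizes. For the $j$th row of $X$, it uses $\eta_j = 1/[A^T A \mathbf{1}_J]_j$, which lies inside $(0, 2/[A^T A \mathbf{1}_J]_j)$. The matrix $\operatorname{diag}(A^T A \mathbf{1}_J) - A^T A$ is diagonally dominant with a nonnegative diagonal, hence positive semidefinite. As a result, this projected step does not increase the cost. The function name and parameters are hypothetical.

```python
import numpy as np


def opl_update_X(A, X, Y, n_inner=1, eps=1e-16):
    """Oblique projected Landweber sketch: X <- P_Omega[X - diag(eta) G_X]."""
    H = A.T @ A
    r = H @ np.ones(H.shape[0])            # [A^T A 1_J]_j, the row-sum bound on lambda_max
    eta = 1.0 / np.maximum(r, eps)         # assumed oblique step sizes, inside (0, 2 / r_j)
    AtY = A.T @ Y
    for _ in range(n_inner):
        G = H @ X - AtY                    # G_X = A^T (A X - Y), eq. (8)
        X = np.maximum(X - eta[:, None] * G, eps)   # gradient step, then projection
    return X


rng = np.random.default_rng(1)
A = rng.random((8, 4))
Y = A @ rng.random((4, 100))
X0 = rng.random((4, 100))
f = lambda X: 0.5 * np.linalg.norm(Y - A @ X) ** 2
X1 = opl_update_X(A, X0, Y, n_inner=1)
X200 = opl_update_X(A, X0, Y, n_inner=200)
```

Using a separate step per row of $X$, rather than the single global bound $2/\lambda_{\max}$, avoids an eigenvalue computation and exploits the cheap row-sum bound directly.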

3.2. Projected Gradient

One of the fundamental PG algorithms for NMF was proposed by Lin [52]. This algorithm, which we refer to as Lin-PG, uses the Armijo rule along the projection arc to determine the step lengths $\eta_X^{(k)}$ and $\eta_A^{(k)}$ in the iterative updates (12). When the cost function is the squared Euclidean distance, the descent directions are the negative gradients, $P_X^{(k)} = (A^{(k)})^T (Y - A^{(k)} X^{(k)})$ and $P_A^{(k)} = (Y - A^{(k)} X^{(k+1)}) (X^{(k+1)})^T$.

For the update of $X$, the step length $\eta_X^{(k)}$ is chosen such that

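$$X^{(k+1)} = P_\Omega\big[X^{(k)} - \eta_X^{(k)} G_X^{(k)}\big], \qquad \eta_X^{(k)} = \beta^{m_k}, \tag{17}$$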

where $m_k$ is the first nonnegative integer $m$ that satisfies

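$$D_F\big(Y \| A^{(k)} X^{(k+1)}\big) - D_F\big(Y \| A^{(k)} X^{(k)}\big) \le \sigma \operatorname{tr}\Big\{\big(G_X^{(k)}\big)^T \big(X^{(k+1)} - X^{(k)}\big)\Big\}. \tag{18}$$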

The parameters $\beta \in (0, 1)$ and $\sigma \in (0, 1)$ determine the convergence speed. In this algorithm, we set $\sigma = 0.01$ and $\beta = 0.1$ by default; these values were chosen experimentally.

The Matlab implementation of the Lin-PG algorithm is given in [52].
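A minimal sketch of one Armijo step along the projection arc is shown below. It follows (17)-(18) with the default $\beta = 0.1$ and $\sigma = 0.01$ given above. It is a simplification and not Lin's code [52]: it only backtracks from $\eta = 1$, whereas Lin's implementation also tries to enlarge the step. The cap `max_backtracks` and the function name are our assumptions.

```python
import numpy as np


def lin_pg_step_X(A, X, Y, beta=0.1, sigma=0.01, max_backtracks=30):
    """One Armijo step along the projection arc, cf. (17)-(18); simplified sketch."""
    f = lambda Z: 0.5 * np.linalg.norm(Y - A @ Z) ** 2
    G = A.T @ (A @ X - Y)                               # gradient G_X, eq. (8)
    fX = f(X)
    for m in range(max_backtracks + 1):
        eta = beta ** m                                 # eta_X = beta^m, cf. (17)
        X_new = np.maximum(X - eta * G, 0.0)            # point on the projection arc
        if f(X_new) - fX <= sigma * np.sum(G * (X_new - X)):   # Armijo condition (18)
            return X_new, eta
    return X, 0.0                                       # no acceptable step found


rng = np.random.default_rng(2)
A = rng.random((8, 4))
Y = A @ rng.random((4, 50))
X0 = rng.random((4, 50))
X1, eta = lin_pg_step_X(A, X0, Y)
```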

3.3. Barzilai-Borwein Gradient Projection

The Barzilai-Borwein gradient projection method [58, 64] is motivated by the quasi-Newton approach: the inverse of the Hessian is replaced with a scaled identity matrix $H_k = \alpha_k I$. The scalar $\alpha_k$ is selected so that $H_k$ behaves similarly to the true inverse Hessian over the most recent iteration. Thus,

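$$\alpha_k = \frac{(\Delta x^{(k)})^T \Delta x^{(k)}}{(\Delta x^{(k)})^T \Delta g^{(k)}}, \qquad \Delta x^{(k)} = x^{(k)} - x^{(k-1)}, \quad \Delta g^{(k)} = g(x^{(k)}) - g(x^{(k-1)}). \tag{19}$$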

Unlike, for example, Lin's method [52], this method does not ensure that the objective function decreases at every iteration, but its general convergence has been proven analytically [58]. The general scheme of the Barzilai-Borwein gradient projection algorithm for updating $X$ is given in Algorithm 2.

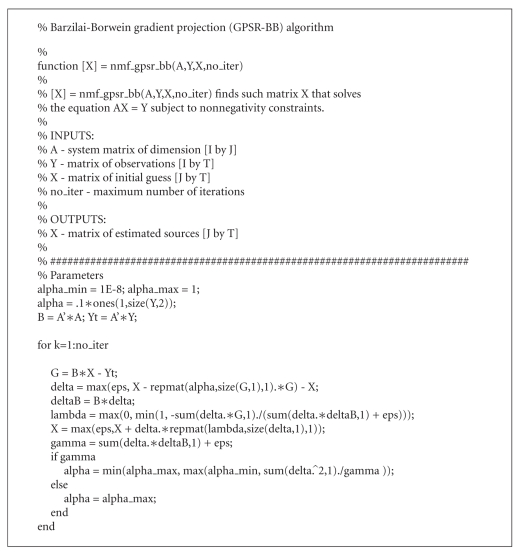

Algorithm 2 (GPSR-BB).

Since $D_F(Y\|AX)$ is a quadratic function, the line search parameter $\lambda^{(k)}$ can be derived in the following closed form:

| (20) |

The Matlab implementation of the GPSR-BB algorithm, which solves the system AX = Y of multiple measurement vectors subject to nonnegativity constraints, is given in Algorithm 4 (see also NMFLAB).

Algorithm 4.
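Algorithms 2 and 4 are not reproduced here, so the following is a hedged sketch of a GPSR-BB-style update for $X$. Each iteration takes a projected step with a Barzilai-Borwein step length. It then performs the exact line search on $[0, 1]$, which is available in closed form for the quadratic cost. This line search makes the sketch a monotone variant: unlike the plain Barzilai-Borwein iteration discussed above, it never increases the cost. The initial step $\alpha = 1$, the bounds `alpha_min` and `alpha_max`, and the function name are assumptions.

```python
import numpy as np


def gpsr_bb_update_X(A, X, Y, n_inner=10, alpha_min=1e-10, alpha_max=1e10):
    """Monotone GPSR-BB-style sketch for min_{X >= 0} 0.5 * ||Y - A X||_F^2."""
    H = A.T @ A
    G = H @ X - A.T @ Y                           # gradient G_X, eq. (8)
    alpha = 1.0                                   # assumed initial BB step
    for _ in range(n_inner):
        D = np.maximum(X - alpha * G, 0.0) - X    # projected gradient direction
        HD = H @ D
        dHd = float(np.sum(D * HD))               # equals ||A D||_F^2
        if dHd <= 0.0:                            # D == 0: X is stationary
            break
        # Exact minimizer of the quadratic cost along X + lam * D, clipped to [0, 1].
        lam = min(max(-float(np.sum(D * G)) / dHd, 0.0), 1.0)
        X = X + lam * D                           # convex combination, stays >= 0
        G = G + lam * HD                          # gradient of a quadratic is affine
        # BB step ||s||^2 / (s^T H s), computed from D (s = lam * D up to scaling).
        alpha = min(max(float(np.sum(D * D)) / dHd, alpha_min), alpha_max)
    return X


cost = lambda A, X, Y: 0.5 * np.linalg.norm(Y - A @ X) ** 2
rng = np.random.default_rng(3)
A = rng.random((8, 4))
Y = A @ rng.random((4, 30))
X0 = rng.random((4, 30))
X1 = gpsr_bb_update_X(A, X0, Y)
```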

3.4. Projected Sequential Subspace Optimization

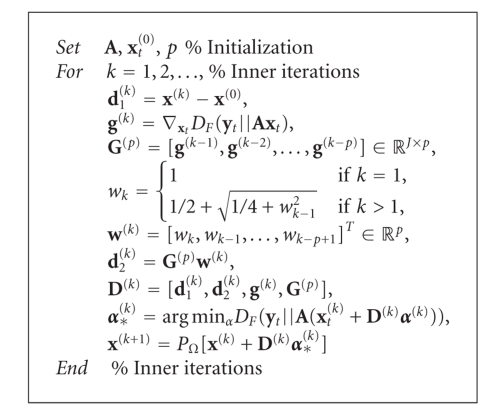

The projected sequential subspace optimization (PSESOP) method [59, 60] carries out a projected minimization of a smooth objective function over a subspace spanned by several directions. These include the current gradient, gradients from previous iterations, and the Nemirovski directions. Nemirovski [65] suggested that smooth convex unconstrained optimization is optimal if it is performed over a subspace that includes:

- the current gradient $g(x)$;
- the direction $d_1^{(k)} = x^{(k)} - x^{(0)}$;
- the linear combination of the previous gradients, $d_2^{(k)} = \sum_{n=0}^{k-1} w_n g(x_n)$, with coefficients $w_n$, $n = 0, \dots, k-1$.

The directions should be orthogonal to the current gradient. Narkiss and Zibulevsky [59] also suggested including another direction, $p^{(k)} = x^{(k)} - x^{(k-1)}$, which is motivated by a natural extension of the conjugate gradient (CG) method to the nonquadratic case. However, in our practical observations this direction did not have a strong impact on the NMF components, so we omitted it from our NMF-PSESOP algorithm. This gives Algorithm 3 for updating $x_t$, which is a single column vector of $X$.

Algorithm 3 (NMF-PSESOP).

The parameter p denotes the number of previous iterates that are taken into account to determine the current update.

The line search vector $\alpha_*^{(k)}$, derived in closed form for the objective function $D_F(y_t\|A x_t)$, is as follows:

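$$\alpha_*^{(k)} = -\Big( (D^{(k)})^T A^T A\, D^{(k)} + \lambda I \Big)^{-1} (D^{(k)})^T A^T \big(A x_t^{(k)} - y_t\big), \qquad \lambda > 0. \tag{21}$$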

The regularization parameter in (21) can be set to a very small constant. This avoids instabilities in inverting a rank-deficient matrix when $D^{(k)}$ has zero-valued or linearly dependent columns.
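The following is a minimal sketch of an NMF-PSESOP-style update for one column $x_t$. It assumes the subspace is spanned by the current gradient, $d_1^{(k)}$, $d_2^{(k)}$, and the last $p$ gradients. Each iteration takes the closed-form regularized step of (21) and then projects. Several details are our assumptions rather than details taken from Algorithm 3:

- the Nemirovski weight recursion $w_k = \tfrac{1}{2} + \sqrt{\tfrac{1}{4} + w_{k-1}^2}$;
- the default values of `p` and `reg`;
- omitting the orthogonalization of the directions.

```python
import numpy as np


def psesop_update_x(A, y, x, n_iter=10, p=2, reg=1e-10, eps=0.0):
    """NMF-PSESOP-style sketch for one column: min_{x >= 0} 0.5 * ||y - A x||_2^2."""
    H = A.T @ A
    Aty = A.T @ y
    x0 = x.copy()
    w = 1.0                                  # Nemirovski weight (assumed recursion)
    d2 = np.zeros_like(x)                    # d_2: weighted sum of previous gradients
    prev = []                                # gradients from previous iterations
    for _ in range(n_iter):
        g = H @ x - Aty                      # current gradient A^T (A x - y)
        recent = prev[-p:] if p > 0 else []
        D = np.column_stack([g, x - x0, d2] + recent)     # subspace directions D^(k)
        M = D.T @ H @ D + reg * np.eye(D.shape[1])        # regularized, cf. (21)
        alpha = -np.linalg.solve(M, D.T @ g)              # closed-form subspace step
        x = np.maximum(x + D @ alpha, eps)                # projection onto x >= eps
        d2 = d2 + w * g                      # add w_k g(x_k) for later iterations
        prev.append(g)
        w = 0.5 + np.sqrt(0.25 + w * w)
    return x


y_pos = np.array([0.5, 1.0, 2.0])
x_id = psesop_update_x(np.eye(3), y_pos, np.ones(3))      # A = I: solution is y itself
rng = np.random.default_rng(7)
x_rand = psesop_update_x(rng.random((8, 4)), rng.random(8), rng.random(4))
```

The regularization term keeps the small system in (21) solvable even when some columns of $D^{(k)}$ are zero, as happens with $x - x_0$ and $d_2$ in the first iteration.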

3.5. Interior Point Newton Algorithm

The interior-point Newton (IPN) algorithm [61] solves the NNLS problem (1) by searching for a solution that satisfies the KKT conditions (5). These conditions can equivalently be expressed by the nonlinear equations

$$D(x_t)\, g(x_t) = 0, \tag{22}$$

where $D(x_t) = \operatorname{diag}\{d_1(x_t), \dots, d_J(x_t)\}$, $x_t \ge 0$, and

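$$d_j(x_t) = \begin{cases} x_{jt} & \text{if } g_j(x_t) \ge 0, \\ 1 & \text{otherwise}. \end{cases} \tag{23}$$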
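To make (22)-(23) concrete, the sketch below computes the diagonal of $D(x_t)$ and the residual $D(x_t)g(x_t)$. This residual vanishes exactly at points that satisfy the KKT conditions (5). The sketch assumes the affine-scaling definition $d_j(x_t) = x_{jt}$ if $g_j(x_t) \ge 0$ and $d_j(x_t) = 1$ otherwise. The function name is hypothetical, and the Newton iteration itself is not implemented here.

```python
import numpy as np


def ipn_scaling(A, y, x):
    """Diagonal of D(x_t), cf. (23), and the residual D(x_t) g(x_t) of (22)."""
    g = A.T @ (A @ x - y)                 # gradient, cf. (7)
    d = np.where(g >= 0, x, 1.0)          # assumed form: d_j = x_jt if g_j >= 0, else 1
    return d, d * g


A = np.eye(2)
y = np.array([1.0, -2.0])
d, res = ipn_scaling(A, y, np.array([1.0, 0.0]))      # the NNLS solution max(y, 0)
d2, res2 = ipn_scaling(A, y, np.array([0.5, 0.5]))    # a non-optimal point
```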

Applying the Newton method to (22), we have in the kth iterative step

| (24) |

where

| (25) |

In [61], the entries of the matrix $E_k(x_t)$ are defined by

| (26) |

for 1 < γ ≤ 2.

The solution is degenerate if, for some $t \in \{1, \dots, T\}$, there exists $j$ such that $x_{jt}^* = 0$ and $g_{jt} = 0$. In that case, the matrix $D_k(x_t) A^T A + E_k(x_t)$ may be singular. To avoid this, the system of equations is rescaled to the following form:

| (27) |

with

| (28) |

for $x_t > 0$. In [61], the system (27) is solved by the inexact Newton method, which leads to the following updates:

| (29) |

| (30) |

| (31) |

where $\sigma \in (0, 1)$, $r_k(x_t) = A^T(A x_t - y_t)$, and $P_\Omega[\cdot]$ is the projection onto the feasible set $\Omega$.

The transformation of the normal matrix $A^T A$ by the matrix $W_k(x_t) D_k(x_t)$ in (27) means that the system matrix $W_k(x_t) D_k(x_t) M_k(x_t)$ is no longer symmetric and positive-definite. There are many methods for handling such systems of linear equations, such as QMR [66], BiCG [67, 68], BiCGSTAB [69], or GMRES-like methods [70]. However, they are more complicated and computationally demanding than, for example, the basic CG algorithm [71]. To apply the CG algorithm, the system matrix in (27) must be converted into a symmetric positive-definite matrix, which can easily be done with normal equations. Methods such as CGLS [72] or LSQR [73] are therefore suitable for such tasks. The transformed system has the form

| (32) |

| (33) |

| (34) |

with and .
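The CGLS idea mentioned above applies CG to the normal equations without forming $B^T B$ explicitly. It can be sketched for a generic least-squares problem $\min_z \|Bz - c\|_2$. This is a textbook CGLS sketch, not the LSQR routine used for (32). `B`, `c`, and the tolerance are illustrative placeholders rather than the specific matrices of (32)-(34).

```python
import numpy as np


def cgls(B, c, max_iter=100, tol=1e-12):
    """CGLS sketch: CG on B^T B z = B^T c without forming B^T B."""
    z = np.zeros(B.shape[1])
    r = np.array(c, dtype=float)            # residual c - B z for z = 0
    s = B.T @ r                             # residual of the normal equations
    p = s.copy()
    gamma = float(s @ s)
    for _ in range(max_iter):
        if np.sqrt(gamma) <= tol:
            break
        q = B @ p
        a = gamma / float(q @ q)            # step length along p
        z += a * p
        r -= a * q
        s = B.T @ r
        gamma_new = float(s @ s)
        p = s + (gamma_new / gamma) * p     # conjugate direction update
        gamma = gamma_new
    return z


rng = np.random.default_rng(4)
B = rng.standard_normal((6, 3))
c = rng.standard_normal(6)
z = cgls(B, c)
```

Working with products $Bp$ and $B^T r$ only is what keeps the squared condition number of $B^T B$ from being formed explicitly.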

Since our cost function is quadratic, its minimization in a single step is performed by combining the projected Newton step with the constrained scaled Cauchy step, which is given in the form

| (35) |

Assuming $x_t^{(k)} + p_k^{(C)} > 0$, $\tau_k$ is chosen to be either the unconstrained minimizer of the quadratic function $\psi_k(-\tau_k D_k(x_t) g_k(x_t))$ or a scalar proportional to the distance to the boundary along $-D_k(x_t) g_k(x_t)$, where

| (36) |

Thus

| (37) |

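where the unconstrained minimizer of $\psi_k(-\tau D_k(x_t) g_k(x_t))$ with respect to $\tau$ is $\dfrac{(g_k(x_t))^T D_k(x_t) g_k(x_t)}{(D_k(x_t) g_k(x_t))^T M_k(x_t) D_k(x_t) g_k(x_t)}$, and $\theta \in (0, 1)$. For $\psi_k(p_k^{(C)}) < 0$, global convergence is achieved if $\mathrm{red}(x_t^{(k+1)} - x_t^{(k)}) \ge \beta$, $\beta \in (0, 1)$, with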

| (38) |

The usage of the constrained scaled Cauchy step leads to the following updates:

| (39) |

with $t \in [0, 1)$, where the projected Newton step and $p_k^{(C)}$ are given by (30) and (35), respectively, and $t$ is the smaller root (lying in $(0, 1)$) of the quadratic equation:

| (40) |

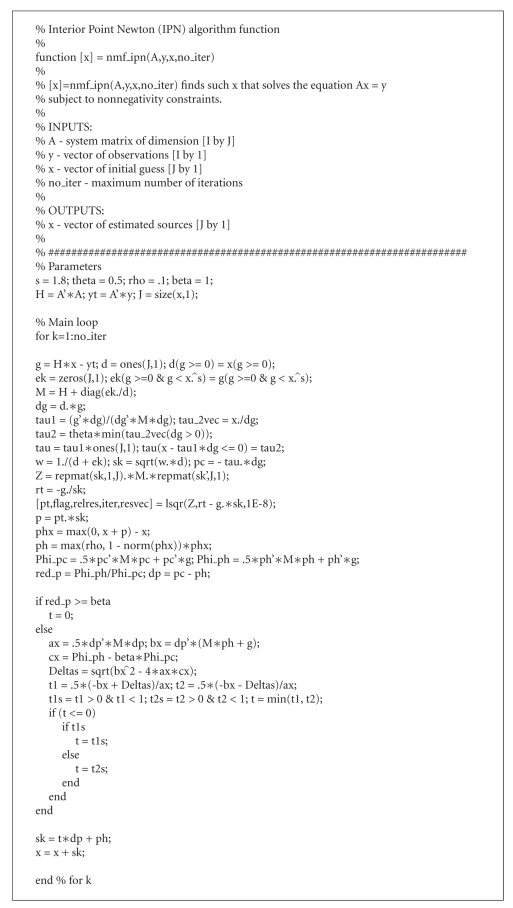

The Matlab code of the IPN algorithm, which solves the system $A x_t = y_t$ subject to nonnegativity constraints, is given in Algorithm 5. To solve the transformed system (32), we use the LSQR method implemented in Matlab 7.0.

Algorithm 5.

3.6. Sequential Coordinate-Wise Algorithm

The NNLS problem (1) can be expressed in terms of the following quadratic problem (QP) [62]:

$$\min_{x_t \ge 0} \Psi(x_t), \tag{41}$$

where

$$\Psi(x_t) = \frac{1}{2} x_t^T H x_t + c_t^T x_t, \tag{42}$$

with $H = A^T A$ and $c_t = -A^T y_t$.

The sequential coordinate-wise algorithm (SCWA), first proposed by Franc et al. [62], solves the QP (41) by updating only a single variable $x_{jt}$ in each iterative step. The sequential updates are easy to perform if the function $\Psi(x_t)$ is equivalently rewritten as

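$$\Psi(x_t) = \frac{1}{2}\sum_{i\in\mathcal{I}}\sum_{l\in\mathcal{I}} x_{it} x_{lt} (H)_{il} + \sum_{i\in\mathcal{I}} x_{it} c_{it} = \frac{1}{2} x_{jt}^2 (H)_{jj} + x_{jt}\beta_{jt} + \gamma_{jt}, \tag{43}$$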

where ℐ = {1, …, J}, and

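$$\beta_{jt} = \sum_{i\in\mathcal{I}\setminus\{j\}} x_{it}(H)_{ij} + c_{jt}, \qquad \gamma_{jt} = \frac{1}{2}\sum_{i\in\mathcal{I}\setminus\{j\}}\sum_{l\in\mathcal{I}\setminus\{j\}} x_{it}x_{lt}(H)_{il} + \sum_{i\in\mathcal{I}\setminus\{j\}} x_{it}c_{it}. \tag{44}$$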

Considering the optimization of $\Psi(x_t)$ with respect to the selected variable $x_{jt}$, the following analytical solution can be derived:

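$$x_{jt}^* = \arg\min_{x_{jt}\ge 0} \Psi(x_t) = \max\Big\{0, -\frac{\beta_{jt}}{(H)_{jj}}\Big\} = \max\Big\{0, x_{jt} - \frac{[H x_t]_j + c_{jt}}{(H)_{jj}}\Big\}. \tag{45}$$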

Updating only a single variable $x_{jt}$ in each iterative step, we have

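$$x_{jt}^{(k+1)} = \max\Big\{0, x_{jt}^{(k)} - \frac{[H x_t^{(k)}]_j + c_{jt}}{(H)_{jj}}\Big\}, \qquad x_{it}^{(k+1)} = x_{it}^{(k)} \quad \forall i \in \mathcal{I}\setminus\{j\}. \tag{46}$$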

Any optimal solution to the QP (41) satisfies the KKT conditions given by (5) and the stationarity condition of the following Lagrange function:

$$\mathcal{L}(x_t, \lambda_t) = \frac{1}{2} x_t^T H x_t + c_t^T x_t - \lambda_t^T x_t, \tag{47}$$

where $\lambda_t \in \mathbb{R}^J$ is a vector of Lagrange multipliers (or dual variables) corresponding to the vector $x_t$. Thus, $\nabla_{x_t} \mathcal{L}(x_t, \lambda_t) = H x_t + c_t - \lambda_t = 0$. In the SCWA, the Lagrange multipliers are updated in each iteration according to the formula

$$\lambda_t^{(k+1)} = \lambda_t^{(k)} + \big(x_{jt}^{(k+1)} - x_{jt}^{(k)}\big) h_j, \tag{48}$$

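where $h_j$ is the $j$th column of $H$, and $\lambda_t^{(0)} = c_t$. This initialization corresponds to $x_t^{(0)} = 0$, since $\lambda_t = H x_t + c_t$.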

Finally, the SCWA performs the following updates:

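$$x_{jt}^{(k+1)} = \max\Big\{0, x_{jt}^{(k)} - \frac{[\lambda_t^{(k)}]_j}{(H)_{jj}}\Big\}, \quad x_{it}^{(k+1)} = x_{it}^{(k)}\ (i \ne j), \quad \lambda_t^{(k+1)} = \lambda_t^{(k)} + \big(x_{jt}^{(k+1)} - x_{jt}^{(k)}\big) h_j. \tag{49}$$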
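The SCWA updates (45)-(49) can be sketched as follows for a single column $x_t$. Initializing $x_t^{(0)} = 0$ makes $\lambda_t^{(0)} = c_t$ consistent with $\lambda_t = H x_t + c_t$. The fixed number of sweeps over the coordinates and the function name are our assumptions, and no stopping test is included.

```python
import numpy as np


def scwa_nnls(A, y, n_sweeps=100):
    """Sequential coordinate-wise sketch for min_{x>=0} 0.5 x^T H x + c^T x, cf. (41)-(49)."""
    H = A.T @ A
    c = -A.T @ y
    x = np.zeros(H.shape[0])
    lam = c.copy()                      # lambda^(0) = H x^(0) + c = c for x^(0) = 0
    for _ in range(n_sweeps):
        for j in range(H.shape[0]):     # one coordinate per inner step
            x_new = max(0.0, x[j] - lam[j] / H[j, j])   # coordinate minimizer, (45)-(46)
            lam += (x_new - x[j]) * H[:, j]             # multiplier update (48)
            x[j] = x_new
    return x, lam


A = np.array([[1.0, 0.0], [1.0, 1.0], [0.0, 1.0]])
x, lam = scwa_nnls(A, A @ np.array([1.0, 0.0]), n_sweeps=5)
```

Keeping $\lambda_t$ up to date costs one column of $H$ per coordinate step, so recomputing the full gradient $H x_t + c_t$ is never needed.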

4. Simulations

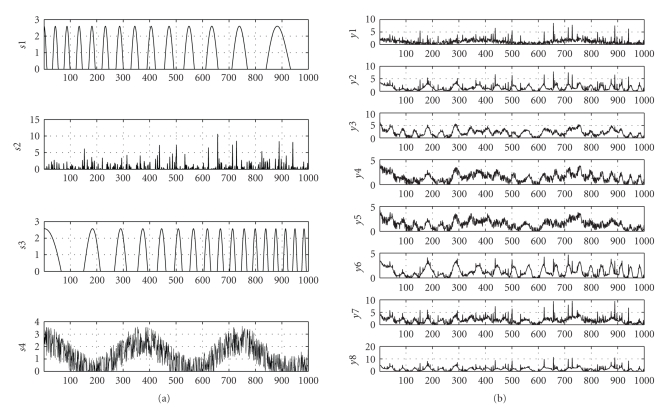

All the proposed algorithms were implemented in our NMFLAB and evaluated with numerical tests related to typical BSS problems. We used a synthetic benchmark of 4 partially dependent nonnegative signals (with only $T = 1000$ samples), illustrated in Figure 1(a). The signals are mixed by a random, uniformly distributed nonnegative matrix $A \in \mathbb{R}^{8\times 4}$ with condition number $\mathrm{cond}\{A\} = 4.11$. The matrix $A$ is given by

| (50) |

The mixed signals are shown in Figure 1(b).

Figure 1.

Dataset: (a) original 4 source signals, (b) observed 8 mixed signals.

The number of variables in $X$ is much greater than in $A$: $I \times J = 32$ versus $J \times T = 4000$. We therefore test the projected gradient algorithms only for updating $A$. The variables in $X$ are updated with the standard projected fixed-point alternating least-squares (FP-ALS) algorithm, which is extensively analyzed in [55].

In general, the FP-ALS algorithm solves the least-squares problem

$$X^* = \arg\min_{X} \frac{1}{2}\|Y - AX\|_F^2 \tag{51}$$

with the Moore-Penrose pseudoinverse of the system matrix, which in our case is the matrix $A$. Since usually $I \ge J$ in NMF, we formulate the normal equations as $A^T A X = A^T Y$. The least-squares solution of minimal $\ell_2$-norm to the normal equations is $X_{LS} = (A^T A)^{-1} A^T Y = A^{+} Y$, where $A^{+}$ is the Moore-Penrose pseudoinverse of $A$. The projected FP-ALS algorithm is obtained with a simple “half-rectified” projection, that is,

$$X = P_\Omega\big[A^{+} Y\big] = P_\Omega\big[(A^T A)^{-1} A^T Y\big]. \tag{52}$$
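The projected FP-ALS step (52) amounts to one line of NumPy. In the sketch below, `eps` plays the role of $\epsilon$ in (13), and the function name is hypothetical. For repeated use with a fixed $A$, computing $A^{+}$ once would be cheaper.

```python
import numpy as np


def fp_als_update_X(A, Y, eps=1e-16):
    """Projected FP-ALS step (52): X = P_Omega[A^+ Y] with a small floor eps."""
    return np.maximum(np.linalg.pinv(A) @ Y, eps)


rng = np.random.default_rng(5)
A = rng.random((8, 4))                 # full column rank with probability one
X_true = rng.random((4, 100))
X = fp_als_update_X(A, A @ X_true)     # exact, nonnegative data: X_true is recovered
```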

The alternating minimization is nonconvex, even though the cost function is convex with respect to each set of variables separately. Thus, most NMF algorithms may get stuck in local minima, and the initialization plays a key role. In the performed tests, we applied the multistart initialization described in [53] with the following parameters:

- $N = 10$ (number of restarts);
- $K_i = 30$ (number of initial alternating steps);
- $K_f = 1000$ (number of final alternating steps).

Each initial sample of $A$ and $X$ was randomly generated from a uniform distribution. Each algorithm was tested with two numbers of inner iterations, $k = 1$ and $k = 5$. The number of inner iterations is the number of iterative steps performed to update $A$ with $X$ fixed, before switching to the update of $X$ (and vice versa). Additionally, the multilayer technique [53, 54] with 3 layers ($L = 3$) is applied.

The multilayer technique can be regarded as a multistep decomposition. In the first step, we perform the basic decomposition $Y = A_1 X_1$ using any available NMF algorithm, where $A_1 \in \mathbb{R}^{I\times J}$ and $X_1 \in \mathbb{R}^{J\times T}$ with $I \ge J$. In the second step, the results of the first step are used to perform a similar decomposition, $X_1 = A_2 X_2$, where $A_2 \in \mathbb{R}^{J\times J}$ and $X_2 \in \mathbb{R}^{J\times T}$. This step may use the same or different update rules, and so on. We continue the decomposition taking into account only the most recently obtained components. The process can be repeated arbitrarily many times until some stopping criteria are satisfied. In each step, we usually obtain gradual improvements in performance. Thus, our model has the form $Y = A_1 A_2 \cdots A_L X_L$, with the basis matrix defined as $A = A_1 A_2 \cdots A_L \in \mathbb{R}^{I\times J}$. Physically, this means that we build up a system that has many layers, or a cascade connection of $L$ mixing subsystems.
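The multilayer scheme can be sketched with any base NMF routine passed in as a function. Purely as a placeholder, the sketch uses the basic multiplicative (Lee-Seung) updates for the squared Euclidean distance as the base algorithm. The names `mu_nmf` and `multilayer_nmf`, the random initialization, and the fixed iteration count are assumptions.

```python
import numpy as np


def mu_nmf(Y, J, n_iter=200, seed=0, eps=1e-12):
    """Basic Lee-Seung multiplicative NMF, used here only as a placeholder base algorithm."""
    rng = np.random.default_rng(seed)
    A = rng.random((Y.shape[0], J))
    X = rng.random((J, Y.shape[1]))
    for _ in range(n_iter):
        X *= (A.T @ Y) / (A.T @ A @ X + eps)
        A *= (Y @ X.T) / (A @ X @ X.T + eps)
    return A, X


def multilayer_nmf(Y, J, n_layers=3, base=mu_nmf):
    """Y = A_1 A_2 ... A_L X_L: each layer factorizes the previous layer's X."""
    factors, X = [], Y
    for _ in range(n_layers):
        A_l, X = base(X, J)             # X_{l-1} = A_l X_l
        factors.append(A_l)
    A = factors[0]
    for A_l in factors[1:]:
        A = A @ A_l                     # basis matrix A = A_1 A_2 ... A_L
    return A, X, factors


rng = np.random.default_rng(9)
Y = rng.random((8, 4)) @ rng.random((4, 50))
A, X, factors = multilayer_nmf(Y, 4, n_layers=3)
```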

There are many stopping criteria for terminating the alternating steps. We stop the iterations if $s \ge K_f = 1000$ or if the condition $\|A^{(s)} - A^{(s-1)}\|_F < \epsilon$ holds, where $s$ is the index of the alternating step and $\epsilon = 10^{-5}$. Note that the condition (20) can also be used as a stopping criterion, especially as the gradient is computed in each iteration of the PG algorithms.
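A sketch of the multistart initialization combined with the stopping rule above is given below. The description in [53] is not reproduced here, so two parts of the sketch are our assumptions:

- the rule of keeping the restart with the lowest cost after $K_i$ steps;
- the inner step, which is one projected gradient step for $A$ with step $1/\lambda_{\max}(XX^T)$, followed by the projected FP-ALS step (52) for $X$.

Default values follow the text: $N = 10$, $K_i = 30$, $K_f = 1000$, and $\epsilon = 10^{-5}$.

```python
import numpy as np


def alternating_step(A, X, Y, eps=1e-16):
    """Hypothetical inner step: PG step for A, then projected FP-ALS (52) for X."""
    XXt = X @ X.T
    eta = 1.0 / max(float(np.linalg.eigvalsh(XXt).max()), eps)
    A = np.maximum(A - eta * (A @ XXt - Y @ X.T), eps)   # G_A = (A X - Y) X^T
    X = np.maximum(np.linalg.pinv(A) @ Y, eps)
    return A, X


def multistart_nmf(Y, J, N=10, K_i=30, K_f=1000, tol=1e-5, seed=0):
    """Multistart initialization followed by final alternating steps with early stopping."""
    rng = np.random.default_rng(seed)
    cost = lambda A, X: 0.5 * np.linalg.norm(Y - A @ X) ** 2
    starts = []
    for _ in range(N):                                    # N random restarts
        A = rng.random((Y.shape[0], J))                   # uniform initial samples
        X = rng.random((J, Y.shape[1]))
        for _ in range(K_i):                              # K_i initial alternating steps
            A, X = alternating_step(A, X, Y)
        starts.append((cost(A, X), A, X))
    _, A, X = min(starts, key=lambda s: s[0])             # assumed rule: lowest cost wins
    for _ in range(K_f):                                  # at most K_f final steps
        A_old = A
        A, X = alternating_step(A, X, Y)
        if np.linalg.norm(A - A_old) < tol:               # ||A^(s) - A^(s-1)||_F < eps
            break
    return A, X, [c for c, _, _ in starts]


rng = np.random.default_rng(6)
Y = rng.random((8, 4)) @ rng.random((4, 200))
A, X, start_costs = multistart_nmf(Y, 4, N=3, K_i=5, K_f=50)
```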

The algorithms were evaluated with signal-to-interference ratio (SIR) measures, calculated separately for each source signal and each column of the mixing matrix. Since NMF suffers from scale and permutation indeterminacies, the estimated components are permuted and rescaled accordingly. First, the source and estimated signals are normalized to a uniform variance. Then the estimated signals are permuted to keep the same order as the source signals. In NMFLAB [43], each estimated signal is compared with each source signal. This yields a performance (SIR) matrix that is used to construct the permutation matrix. Let $x_j$ and $\hat{x}_j$ be the $j$th source and its corresponding (reordered) estimated signal, respectively. Analogously, let $a_j$ and $\hat{a}_j$ be the $j$th column of the true mixing matrix and the corresponding column of the estimated mixing matrix, respectively. Thus, the SIRs for the sources are given by

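$$\mathrm{SIR}_{X_j} = -20 \log_{10} \frac{\|\hat{x}_j - x_j\|_2}{\|x_j\|_2}\ \text{[dB]}, \qquad j = 1, \dots, J, \tag{53}$$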

and similarly for each column in A we have

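$$\mathrm{SIR}_{A_j} = -20 \log_{10} \frac{\|\hat{a}_j - a_j\|_2}{\|a_j\|_2}\ \text{[dB]}, \qquad j = 1, \dots, J, \tag{54}$$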

We test the algorithms with Monte Carlo (MC) analysis, running each algorithm 100 times. Each run was initialized with the multistart procedure. The algorithms were evaluated with the mean-SIR values, calculated as follows:

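$$\text{mean-SIR}_X = \frac{1}{J}\sum_{j=1}^{J}\mathrm{SIR}_{X_j}, \qquad \text{mean-SIR}_A = \frac{1}{J}\sum_{j=1}^{J}\mathrm{SIR}_{A_j}, \tag{55}$$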
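The SIR measures (53)-(55), with permutation and scale correction, can be sketched as follows. NMFLAB's own matching procedure is not described in detail, so this sketch makes two assumptions:

- each row is rescaled to unit $\ell_2$ norm;
- the permutation is found by exhaustive search over all $J!$ orderings, which is feasible for $J = 4$.

For the columns of the mixing matrix, the same function can be applied to $A^T$ and $\hat{A}^T$.

```python
import itertools

import numpy as np


def sir_db(true, est):
    """SIR in dB, cf. (53)-(54)."""
    err = max(float(np.linalg.norm(est - true)), 1e-300)   # guard against log10(0)
    return -20.0 * np.log10(err / np.linalg.norm(true))


def mean_sir(S, S_hat):
    """Mean-SIR (55) over rows, after rescaling and the best permutation."""
    unit = lambda Z: Z / np.linalg.norm(Z, axis=1, keepdims=True)  # assumed rescaling
    S, S_hat = unit(S), unit(S_hat)
    J = S.shape[0]
    return max(
        np.mean([sir_db(S[j], S_hat[perm[j]]) for j in range(J)])
        for perm in itertools.permutations(range(J))
    )


rng = np.random.default_rng(10)
S = rng.random((4, 1000))
S_hat = 3.0 * S[[2, 0, 3, 1]] + 1e-4 * rng.standard_normal((4, 1000))
value = mean_sir(S, S_hat)        # high, since S_hat is a scaled, permuted, slightly noisy S
```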

for each MC sample. Table 1 gives the mean-SIRs for the worst sample (lowest mean-SIR value) and the best sample (highest mean-SIR value), together with the mean over all samples. The number $k$ denotes the number of inner iterations for updating $A$, and $L$ denotes the number of layers in the multilayer technique [53, 54]. The notation $L = 1$ means that the multilayer technique was not used. The elapsed time [in seconds] is measured in Matlab and gives a rough indication of the computational complexity of each algorithm.

Table 1.

Mean-SIRs [dB] obtained with 100 samples of Monte Carlo analysis for the estimation of sources and columns of the mixing matrix from noise-free mixtures of the signals in Figure 1. Sources $X$ are estimated with the projected pseudoinverse. The number of inner iterations for updating $A$ is denoted by $k$, and the number of layers (in the multilayer technique) by $L$. The notation best or worst in parentheses after the algorithm name means that the mean-SIR value is calculated for the best or worst sample from the Monte Carlo analysis, respectively; mean denotes the average over all samples. The last column gives the elapsed time [in seconds] for each algorithm with $k = 1$ and $L = 1$.

| Algorithm | Mean-SIR_A [dB] (L = 1, k = 1) | Mean-SIR_A [dB] (L = 1, k = 5) | Mean-SIR_A [dB] (L = 3, k = 1) | Mean-SIR_A [dB] (L = 3, k = 5) | Mean-SIR_X [dB] (L = 1, k = 1) | Mean-SIR_X [dB] (L = 1, k = 5) | Mean-SIR_X [dB] (L = 3, k = 1) | Mean-SIR_X [dB] (L = 3, k = 5) | Time [s] |
|---|---|---|---|---|---|---|---|---|---|
| M-NMF (best) | 21 | 22.1 | 42.6 | 37.3 | 26.6 | 27.3 | 44.7 | 40.7 | 1.9 |
| M-NMF (mean) | 13.1 | 13.8 | 26.7 | 23.1 | 14.7 | 15.2 | 28.9 | 27.6 | |
| M-NMF (worst) | 5.5 | 5.7 | 5.3 | 6.3 | 5.8 | 6.5 | 5 | 5.5 | |
| OPL (best) | 22.9 | 25.3 | 46.5 | 42 | 23.9 | 23.5 | 55.8 | 51 | 1.9 |
| OPL (mean) | 14.7 | 14 | 25.5 | 27.2 | 15.3 | 14.8 | 23.9 | 25.4 | |
| OPL (worst) | 4.8 | 4.8 | 4.8 | 5.0 | 4.6 | 4.6 | 4.6 | 4.8 | |
| Lin-PG (best) | 36.3 | 23.6 | 78.6 | 103.7 | 34.2 | 33.3 | 78.5 | 92.8 | 8.8 |
| Lin-PG (mean) | 19.7 | 18.3 | 40.9 | 61.2 | 18.5 | 18.2 | 38.4 | 55.4 | |
| Lin-PG (worst) | 14.4 | 13.1 | 17.5 | 40.1 | 13.9 | 13.8 | 18.1 | 34.4 | |
| GPSR-BB (best) | 18.2 | 22.7 | 7.3 | 113.8 | 22.8 | 54.3 | 9.4 | 108.1 | 2.4 |
| GPSR-BB (mean) | 11.2 | 20.2 | 7 | 53.1 | 11 | 20.5 | 5.1 | 53.1 | |
| GPSR-BB (worst) | 7.4 | 17.3 | 6.8 | 24.9 | 4.6 | 14.7 | 2 | 23 | |
| PSESOP (best) | 21.2 | 22.6 | 71.1 | 132.2 | 23.4 | 55.5 | 56.5 | 137.2 | 5.4 |
| PSESOP (mean) | 15.2 | 20 | 29.4 | 57.3 | 15.9 | 34.5 | 27.4 | 65.3 | |
| PSESOP (worst) | 8.3 | 15.8 | 6.9 | 28.7 | 8.2 | 16.6 | 7.2 | 30.9 | |
| IPG (best) | 20.6 | 22.2 | 52.1 | 84.3 | 35.7 | 28.6 | 54.2 | 81.4 | 2.7 |
| IPG (mean) | 20.1 | 18.2 | 35.3 | 44.1 | 19.7 | 19.1 | 33.8 | 36.7 | |
| IPG (worst) | 10.5 | 13.4 | 9.4 | 21.2 | 10.2 | 13.5 | 8.9 | 15.5 | |
| IPN (best) | 20.8 | 22.6 | 59.9 | 65.8 | 53.5 | 52.4 | 68.6 | 67.2 | 14.2 |
| IPN (mean) | 19.4 | 17.3 | 38.2 | 22.5 | 22.8 | 19.1 | 36.6 | 21 | |
| IPN (worst) | 11.7 | 15.2 | 7.5 | 7.1 | 5.7 | 2 | 1.5 | 2 | |
| RMRNSD (best) | 24.7 | 21.6 | 22.2 | 57.9 | 30.2 | 43.5 | 25.5 | 62.4 | 3.8 |
| RMRNSD (mean) | 14.3 | 19.2 | 8.3 | 33.8 | 17 | 21.5 | 8.4 | 33.4 | |
| RMRNSD (worst) | 5.5 | 15.9 | 3.6 | 8.4 | 4.7 | 13.8 | 1 | 3.9 | |
| SCWA (best) | 12.1 | 20.4 | 10.6 | 24.5 | 6.3 | 25.6 | 11.9 | 34.4 | 2.5 |
| SCWA (mean) | 11.2 | 16.3 | 9.3 | 20.9 | 5.3 | 18.6 | 9.4 | 21.7 | |
| SCWA (worst) | 7.3 | 11.4 | 6.9 | 12.8 | 3.8 | 10 | 3.3 | 10.8 | |

For comparison, Table 1 also contains the results obtained for the standard multiplicative NMF algorithm (denoted M-NMF), which minimizes the squared Euclidean distance. The results for the PG algorithms proposed in [53] are also included. The acronyms Lin-PG, IPG, and RMRNSD refer to the following algorithms, respectively:

- Lin-PG: the projected gradient algorithm proposed by Lin [52];
- IPG: the interior-point gradient algorithm;
- RMRNSD: the regularized minimal residual norm steepest descent algorithm, i.e., the regularized version of the MRNSD algorithm proposed by Nagy and Strakos [74].

These NMF algorithms were implemented and investigated in [53] in the context of their usefulness for BSS problems.

5. Conclusions

The performance of the proposed NMF algorithms can be inferred from the results given in Table 1. In particular, the results show how sensitive the algorithms are to initialization, or in other words, how easily they fall into local minima. The complexity of the algorithms can also be estimated from the elapsed time measured in Matlab.

Our NMF-PSESOP algorithm gives the best estimation (the sample with the highest best-SIR value), and its mean-SIR values are only slightly lower than those of the Lin-PG algorithm. In terms of elapsed time, OPL, GPSR-BB, SCWA, and IPG are among the fastest algorithms, while Lin-PG and IPN are the slowest.

The multilayer technique generally improves the performance and consistency of all the tested algorithms if the number of observations is close to the number of nonnegative components. The largest improvement can be observed for the NMF-PSESOP algorithm, especially when the number of inner iterations is greater than one (typically, $k = 5$).

In summary, the best and most promising NMF-PG algorithms are the NMF-PSESOP, GPSR-BB, and IPG algorithms. However, the final selection of the algorithm depends on the size of the problem to be solved. In our tests, the projected gradient NMF algorithms seem to be much better (in terms of speed and performance) than the multiplicative algorithms. This holds provided that we can use the squared Euclidean cost function, which is optimal for data corrupted by Gaussian noise.

References

- 1.Cichocki A, Zdunek R, Amari S. New algorithms for nonnegative matrix factorization in applications to blind source separation. In: Proceedings of IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP '06), vol. 5; May 2006; Toulouse, France. pp. 621–624. [Google Scholar]

- 2.Piper J, Pauca VP, Plemmons RJ, Giffin M. Object characterization from spectral data using nonnegative matrix factorization and information theory. In: Proceedings of the AMOS Technical Conference; September 2004; Maui, Hawaii, USA. pp. 1–12. [Google Scholar]

- 3.Schmidt MN, Mørup M. Nonnegative matrix factor 2-D deconvolution for blind single channel source separation. In: Proceedings of the 6th International Conference on Independent Component Analysis and Blind Signal Separation (ICA '06), vol. 3889; March 2006; Charleston, SC, USA. pp. 700–707. Lecture Notes in Computer Science. [Google Scholar]

- 4.Ziehe A, Laskov P, Pawelzik K, Mueller K-R. Nonnegative sparse coding for general blind source separation. In: Advances in Neural Information Processing Systems 16; 2003; Vancouver, Canada. [Google Scholar]

- 5.Wang W, Luo Y, Chambers JA, Sanei S. Nonnegative matrix factorization for note onset detection of audio signals. In: Proceedings of the 16th IEEE International Workshop on Machine Learning for Signal Processing (MLSP '06); September 2006; Maynooth, Ireland. pp. 447–452. [Google Scholar]

- 6.Wang W. Squared euclidean distance based convolutive nonnegative matrix factorization with multiplicative learning rules for audio pattern separation. In: Proceedings of the 7th IEEE International Symposium on Signal Processing and Information Technology (ISSPIT '07); December 2007; Cairo, Egypt. pp. 347–352. [Google Scholar]

- 7.Li H, Adali T, Wang W, Emge D. Nonnegative matrix factorization with orthogonality constraints for chemical agent detection in Raman spectra. In: Proceedings of the 15th IEEE International Workshop on Machine Learning for Signal Processing (MLSP '05); September 2005; Mystic, Conn, USA. pp. 253–258. [Google Scholar]

- 8.Sajda P, Du S, Brown T, Parra L, Stoyanova R. Recovery of constituent spectra in 3D chemical shift imaging using nonnegative matrix factorization. In: Proceedings of the 4th International Symposium on Independent Component Analysis and Blind (ICA '03); April 2003; Nara, Japan. pp. 71–76. [Google Scholar]

- 9.Sajda P, Du S, Brown TR, et al. Nonnegative matrix factorization for rapid recovery of constituent spectra in magnetic resonance chemical shift imaging of the brain. IEEE Transactions on Medical Imaging. 2004;23(12):1453–1465. doi: 10.1109/TMI.2004.834626.

- 10.Sajda P, Du S, Parra LC. Recovery of constituent spectra using nonnegative matrix factorization. In: Wavelets: Applications in Signal and Image Processing X, vol. 5207; August 2003; San Diego, Calif, USA. pp. 321–331. Proceedings of SPIE.

- 11.Lee DD, Seung HS. Learning the parts of objects by nonnegative matrix factorization. Nature. 1999;401(6755):788–791. doi: 10.1038/44565.

- 12.Liu W, Zheng N. Nonnegative matrix factorization based methods for object recognition. Pattern Recognition Letters. 2004;25(8):893–897.

- 13.Spratling MW. Learning image components for object recognition. Journal of Machine Learning Research. 2006;7:793–815.

- 14.Wang Y, Jia Y, Hu C, Turk M. Nonnegative matrix factorization framework for face recognition. International Journal of Pattern Recognition and Artificial Intelligence. 2005;19(4):495–511.

- 15.Smaragdis P. Nonnegative matrix factor deconvolution; extraction of multiple sound sources from monophonic inputs. In: Proceedings of the 5th International Conference on Independent Component Analysis and Blind Signal Separation (ICA '04), vol. 3195; September 2004; Granada, Spain. pp. 494–499. Lecture Notes in Computer Science.

- 16.Smaragdis P. Convolutive speech bases and their application to supervised speech separation. IEEE Transactions on Audio, Speech and Language Processing. 2007;15(1):1–12.

- 17.Ahn J-H, Kim S, Oh J-H, Choi S. Multiple nonnegative-matrix factorization of dynamic PET images. In: Proceedings of the 6th Asian Conference on Computer Vision (ACCV '04); January 2004; Jeju Island, Korea. pp. 1009–1013.

- 18.Carmona-Saez P, Pascual-Marqui RD, Tirado F, Carazo JM, Pascual-Montano A. Biclustering of gene expression data by non-smooth nonnegative matrix factorization. BMC Bioinformatics. 2006;7, article 78:1–18. doi: 10.1186/1471-2105-7-78.

- 19.Guillamet D, Schiele B, Vitrià J. Analyzing nonnegative matrix factorization for image classification. In: Proceedings of the 16th International Conference on Pattern Recognition (ICPR '02), vol. 2; August 2002; Quebec City, Canada. pp. 116–119.

- 20.Guillamet D, Vitrià J. Nonnegative matrix factorization for face recognition. In: Proceedings of the 5th Catalan Conference on Artificial Intelligence (CCIA '02); October 2002; Castello de la Plana, Spain. pp. 336–344.

- 21.Guillamet D, Vitrià J, Schiele B. Introducing a weighted nonnegative matrix factorization for image classification. Pattern Recognition Letters. 2003;24(14):2447–2454.

- 22.Okun OG. Nonnegative matrix factorization and classifiers: experimental study. In: Proceedings of the 4th IASTED International Conference on Visualization, Imaging, and Image Processing (VIIP '04); September 2004; Marbella, Spain. pp. 550–555.

- 23.Okun OG, Priisalu H. Fast nonnegative matrix factorization and its application for protein fold recognition. EURASIP Journal on Applied Signal Processing. 2006;2006:8 pages. Article ID 71817.

- 24.Pascual-Montano A, Carazo JM, Kochi K, Lehmann D, Pascual-Marqui RD. Non-smooth nonnegative matrix factorization (nsNMF). IEEE Transactions on Pattern Analysis and Machine Intelligence. 2006;28(3):403–415. doi: 10.1109/TPAMI.2006.60.

- 25.Pauca VP, Shahnaz F, Berry MW, Plemmons RJ. Text mining using nonnegative matrix factorizations. In: Proceedings of the 4th SIAM International Conference on Data Mining (SDM '04); April 2004; Lake Buena Vista, Fla, USA. pp. 452–456.

- 26.Shahnaz F, Berry MW, Pauca VP, Plemmons RJ. Document clustering using nonnegative matrix factorization. Journal on Information Processing & Management. 2006;42(2):373–386.

- 27.Li T, Ding C. The relationships among various nonnegative matrix factorization methods for clustering. In: Proceedings of the 6th IEEE International Conference on Data Mining (ICDM '06); December 2006; Hong Kong. pp. 362–371.

- 28.Ding C, Li T, Peng W, Park H. Orthogonal nonnegative matrix tri-factorizations for clustering. In: Proceedings of the 12th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD '06); August 2006; Philadelphia, Pa, USA. pp. 126–135.

- 29.Zass R, Shashua A. A unifying approach to hard and probabilistic clustering. In: Proceedings of the 10th IEEE International Conference on Computer Vision (ICCV '05), vol. 1; October 2005; Beijing, China. pp. 294–301.

- 30.Banerjee A, Merugu S, Dhillon IS, Ghosh J. Clustering with Bregman divergences. In: Proceedings of the 4th SIAM International Conference on Data Mining (SDM '04); April 2004; Lake Buena Vista, Fla, USA. pp. 234–245.

- 31.Cho H, Dhillon IS, Guan Y, Sra S. Minimum sum-squared residue co-clustering of gene expression data. In: Proceedings of the 4th SIAM International Conference on Data Mining (SDM '04); April 2004; Lake Buena Vista, Fla, USA. pp. 114–125.

- 32.Wild S. Seeding nonnegative matrix factorization with the spherical k-means clustering. M.S. thesis. Boulder, Colo, USA: University of Colorado; 2000.

- 33.Berry MW, Browne M, Langville AN, Pauca VP, Plemmons RJ. Algorithms and applications for approximate nonnegative matrix factorization. Computational Statistics and Data Analysis. 2007;52(1):155–173.

- 34.Cho Y-C, Choi S. Nonnegative features of spectro-temporal sounds for classification. Pattern Recognition Letters. 2005;26(9):1327–1336.

- 35.Brunet J-P, Tamayo P, Golub TR, Mesirov JP. Metagenes and molecular pattern discovery using matrix factorization. Proceedings of the National Academy of Sciences of the United States of America. 2004;101(12):4164–4169. doi: 10.1073/pnas.0308531101.

- 36.Rao N, Shepherd SJ. Extracting characteristic patterns from genome-wide expression data by nonnegative matrix factorization. In: Proceedings of the IEEE Computational Systems Bioinformatics Conference (CSB '04); August 2004; Stanford, Calif, USA. pp. 570–571.

- 37.Cichocki A, Zdunek R, Amari S. Csiszár's divergences for nonnegative matrix factorization: family of new algorithms. In: Independent Component Analysis and Blind Signal Separation. Vol. 3889. New York, NY, USA: Springer; 2006. pp. 32–39. Lecture Notes in Computer Science.

- 38.Cichocki A, Zdunek R, Amari S. Nonnegative matrix and tensor factorization. IEEE Signal Processing Magazine. 2008;25(1):142–145.

- 39.Donoho D, Stodden V. When does nonnegative matrix factorization give a correct decomposition into parts? In: Advances in Neural Information Processing Systems 16; 2003; Vancouver, Canada.

- 40.Bruckstein AM, Elad M, Zibulevsky M. Sparse nonnegative solution of a linear system of equations is unique. In: Proceedings of the 3rd International Symposium on Communications, Control and Signal Processing (ISCCSP '08); March 2008; St. Julians, Malta.

- 41.Theis FJ, Stadlthanner K, Tanaka T. First results on uniqueness of sparse nonnegative matrix factorization. In: Proceedings of the 13th European Signal Processing Conference (EUSIPCO '05); September 2005; Antalya, Turkey.

- 42.Laurberg H, Christensen MG, Plumbley MD, Hansen LK, Jensen SH. Theorems on positive data: on the uniqueness of NMF. Computational Intelligence and Neuroscience. 2008;2008:9 pages. doi: 10.1155/2008/764206. Article ID 764206.

- 43.Cichocki A, Zdunek R. NMFLAB for signal and image processing. Tech. Rep. Saitama, Japan: Laboratory for Advanced Brain Signal Processing, BSI, RIKEN; 2006.

- 44.Cichocki A, Amari S, Zdunek R, Kompass R, Hori G, He Z. Extended SMART algorithms for nonnegative matrix factorization. In: Proceedings of the 8th International Conference on Artificial Intelligence and Soft Computing (ICAISC '06), vol. 4029; June 2006; Zakopane, Poland. Springer; pp. 548–562. Lecture Notes in Computer Science.

- 45.Zdunek R, Cichocki A. Nonnegative matrix factorization with quasi-Newton optimization. In: Proceedings of the 8th International Conference on Artificial Intelligence and Soft Computing (ICAISC '06), vol. 4029; June 2006; Zakopane, Poland. pp. 870–879. Lecture Notes in Computer Science.

- 46.Paatero P. Least-squares formulation of robust nonnegative factor analysis. Chemometrics and Intelligent Laboratory Systems. 1997;37(1):23–35.

- 47.Paatero P, Tapper U. Positive matrix factorization: a nonnegative factor model with optimal utilization of error estimates of data values. Environmetrics. 1994;5(2):111–126.

- 48.Lee DD, Seung HS. Algorithms for nonnegative matrix factorization. In: Advances in Neural Information Processing Systems 13; 2000; Denver, Colo, USA. pp. 556–562.

- 49.Lin C-J. On the convergence of multiplicative update algorithms for nonnegative matrix factorization. IEEE Transactions on Neural Networks. 2007;18(6):1589–1596.

- 50.Chu MT, Diele F, Plemmons R, Ragni S. Optimality, computation, and interpretation of nonnegative matrix factorizations. Tech. Rep. Winston-Salem, NC, USA: Departments of Mathematics and Computer Science, Wake Forest University; 2004.

- 51.Hoyer PO. Nonnegative matrix factorization with sparseness constraints. Journal of Machine Learning Research. 2004;5:1457–1469.

- 52.Lin C-J. Projected gradient methods for nonnegative matrix factorization. Neural Computation. 2007;19(10):2756–2779. doi: 10.1162/neco.2007.19.10.2756.

- 53.Cichocki A, Zdunek R. Multilayer nonnegative matrix factorization using projected gradient approaches. International Journal of Neural Systems. 2007;17(6):431–446. doi: 10.1142/S0129065707001275.

- 54.Cichocki A, Zdunek R. Multilayer nonnegative matrix factorization. Electronics Letters. 2006;42(16):947–948.

- 55.Cichocki A, Zdunek R. Regularized alternating least squares algorithms for nonnegative matrix/tensor factorizations. In: Proceedings of the 4th International Symposium on Neural Networks on Advances in Neural Networks (ISNN '07), vol. 4493; June 2007; Nanjing, China. Springer; pp. 793–802. Lecture Notes in Computer Science.

- 56.Zdunek R, Cichocki A. Nonnegative matrix factorization with constrained second-order optimization. Signal Processing. 2007;87(8):1904–1916.

- 57.Johansson B, Elfving T, Kozlov V, Censor Y, Forssén P-E, Granlund G. The application of an oblique-projected Landweber method to a model of supervised learning. Mathematical and Computer Modelling. 2006;43(7-8):892–909.

- 58.Barzilai J, Borwein JM. Two-point step size gradient methods. IMA Journal of Numerical Analysis. 1988;8(1):141–148.

- 59.Narkiss G, Zibulevsky M. Sequential subspace optimization method for large-scale unconstrained problems. Tech. Rep. 559. Haifa, Israel: Department of Electrical Engineering, Technion, Israel Institute of Technology; October 2005.

- 60.Elad M, Matalon B, Zibulevsky M. Coordinate and subspace optimization methods for linear least squares with non-quadratic regularization. Applied and Computational Harmonic Analysis. 2007;23(3):346–367.

- 61.Bellavia S, Macconi M, Morini B. An interior point Newton-like method for nonnegative least-squares problems with degenerate solution. Numerical Linear Algebra with Applications. 2006;13(10):825–846.

- 62.Franc V, Hlaváč V, Navara M. Sequential coordinate-wise algorithm for the nonnegative least squares problem. In: Gagalowicz A, Philips W, editors. Proceedings of the 11th International Conference on Computer Analysis of Images and Patterns (CAIP '05), vol. 3691; September 2005; Versailles, France. Springer; pp. 407–414. Lecture Notes in Computer Science.

- 63.Bertero M, Boccacci P. Introduction to Inverse Problems in Imaging. Bristol, UK: Institute of Physics; 1998.

- 64.Dai Y-H, Fletcher R. Projected Barzilai-Borwein methods for large-scale box-constrained quadratic programming. Numerische Mathematik. 2005;100(1):21–47.

- 65.Nemirovski A. Orth-method for smooth convex optimization. Izvestiia Akademii Nauk SSSR. Tekhnicheskaia Kibernetika. 1982;2 (in Russian).

- 66.Freund RW, Nachtigal NM. QMR: a quasi-minimal residual method for non-Hermitian linear systems. Numerische Mathematik. 1991;60(1):315–339.

- 67.Fletcher R. Conjugate gradient methods for indefinite systems. In: Proceedings of the Dundee Biennial Conference on Numerical Analysis; July 1975; Dundee, Scotland. Springer; pp. 73–89.

- 68.Lanczos C. Solution of systems of linear equations by minimized iterations. Journal of Research of the National Bureau of Standards. 1952;49(1):33–53.

- 69.van der Vorst HA. Bi-CGSTAB: a fast and smoothly converging variant of Bi-CG for the solution of nonsymmetric linear systems. SIAM Journal on Scientific and Statistical Computing. 1992;13(2):631–644.

- 70.Saad Y, Schultz MH. GMRES: a generalized minimal residual algorithm for solving nonsymmetric linear systems. SIAM Journal on Scientific and Statistical Computing. 1986;7(3):856–869.

- 71.Hestenes MR, Stiefel E. Method of conjugate gradients for solving linear systems. Journal of Research of the National Bureau of Standards. 1952;49:409–436.

- 72.Hansen PC. Rank-Deficient and Discrete Ill-Posed Problems. Philadelphia, Pa, USA: SIAM; 1998.

- 73.Paige CC, Saunders MA. LSQR: an algorithm for sparse linear equations and sparse least squares. ACM Transactions on Mathematical Software. 1982;8(1):43–71.

- 74.Nagy JG, Strakos Z. Enforcing nonnegativity in image reconstruction algorithms. In: Mathematical Modeling, Estimation, and Imaging, vol. 4121; July 2000; San Diego, Calif, USA. pp. 182–190. Proceedings of SPIE.