Abstract

Objective

Event-related brain potentials (ERP) may provide tools for examining normal and abnormal language development. To clarify functional significance of auditory ERPs, we examined ERP indices of spectral differences in speech and non-speech sounds.

Methods

Three Spectral Items (BA, DA, GA) were presented as three Stimulus Types: syllables, non-phonetics, and consonant-vowel transitions. Fourteen 7–10-year-old children and 14 adults were presented with equiprobable Spectral Item sequences blocked by Stimulus Type.

Results

Spectral Item effects appeared as P1, P2, N2, and N4 amplitude variations. The P2 was sensitive to all Stimulus Types in both groups. In adults, the P1 was also sensitive to transitions while the N4 was sensitive to syllables. In children, only the 50-ms CVT stimuli elicited N2 and N4 spectral effects. Non-phonetic stimuli elicited larger N1-P2 amplitudes while speech stimuli elicited larger N2-N4 amplitudes.

Conclusions

Auditory feature processing is reflected by P1-P2 and N2-N4 peaks and matures earlier than supra-sensory integrative mechanisms, reflected by N1-P2 peaks. Auditory P2 appears to pertain to both processing types.

Significance

These results delineate an orderly processing organization whereby direct feature mapping occurs earlier in processing and, in part, serves sound detection whereas relational mapping occurs later in processing and serves sound identification.

Keywords: auditory, children, event-related potentials, ERP, non-speech, speech

Introduction

Neural mechanisms underlying language comprehension are not fully understood. While the “innateness” account postulates that humans are born with a pre-wired neural network devoted to language processing (Pinker, 1994), the “emergentist” account (Bates, in press) suggests that, while inborn morpho-functional biases exist, language ability is built on the basis of non-linguistic skills such as sensory, motor, attention, and gestures (MacWhinney, 1999; Dick et al., 2004). Therefore, emergence and development of language should be examined in the context of maturation of its non-linguistic precursors. In this paper, we examine language-relevant aspects of auditory processing, namely processing of spectral auditory features in speech and non-speech sounds.

Event-related potentials (ERPs) offer an excellent non-invasive tool to study functional brain systems and their development. However, for this tool to be instrumental, functional roles of the registered ERP features must be understood. Auditory cortical ERPs consist of P1, N1, P2, N2, and N4 peaks. The generators of the P1 and N1 waves are tonotopically and ampliotopically organized (Pantev et al., 1989; Pantev et al., 1995), (but see Lütkenhöner et al., 2003), and it has been shown that the N1 parameters vary with tone frequency (Näätänen et al., 1988; Wunderlich and Cone-Wesson, 2001; Wunderlich et al., 2006). However, in adults the P1 and N1 waves do not show systematic amplitude or latency changes as a function of perceived sound features, such as loudness or pitch (Parasuraman et al., 1982; Woods et al., 1984; Näätänen and Picton, 1987). Instead, perception of subtle differences between sounds is indexed by the mismatch negativity potential (MMN, 140 – 250 ms, Näätänen and Winkler, 1999; Picton et al., 2000) that has been shown to co-vary with the timing and accuracy of behavioral discrimination of fine acoustic and phonetic contrasts (Lang et al., 1990; Tiitinen et al., 1994; Winkler et al., 1999). However, stimulus feature – ERP response relationships have not been systematically investigated for any of the adult ERP peaks except for the N1. Given that peaks other than N1 predominate ERPs during the age when language is acquired, examining their roles in auditory processing appears to be warranted.

The most salient features of children’s auditory ERPs are two large deflections, the P1/P2 and N2/N4 peaks (Bruneau and Gomot, 1998; Čeponienė et al., 1998; Ponton et al., 2002; Čeponienė et al., 2002). In rudimentary form, this complex is present at birth and is fully formed by 6–9 months of age (Kurtzberg et al., 1986; Kushnerenko et al., 2002). This age is remarkable for rapid acquisition of receptive language. After that, it takes a long period of time, from approximately 7 to 16–18 years of age, for the children’s auditory ERP waveform to transition into an adult-like P1-N1-P2-N2-N4 sequence (Ponton et al., 2000). This suggests that the ERP signatures observed during early childhood (P1-N2-N4), and not necessarily those emerging by adolescence (e.g., N1-P2), reflect the neural processes critical for the development of basic auditory skills, sound recognition, and receptive language. Therefore, it is important to understand the functional roles of the children’s ERP peaks and to map them onto adult correlates. In particular, this is necessary for elucidating mechanisms of neuro-developmental disorders characterized by language and attention impairments.

In an earlier study (Čeponienė et al., 2001), we found that the amplitude of the auditory P1 peak increased linearly with increasing sound complexity (simple tones, complex tones, acoustically matched vowels). The N2, however, increased in amplitude in response to complex tones as compared with simple tones, but did not further increase in amplitude in response to even more complex vowel stimuli. In contrast, the amplitude of the N4 peak was comparable in simple vs. complex tone trials but increased in vowel trials. That is, a non-linear relationship between acoustic complexity and neural activation emerged by the time of the N2 peak and changed in nature by the time of the N4 peak. These data showed that children’s ERPs reflect sound complexity and “speechness” and that successive peaks may reflect orderly organized stages of sound content processing. It appears that early on, acoustic feature mapping is either linear (distinct neural populations responding to simple vs. complex stimuli) or additive (acoustically more complex stimuli excite more neurons) but later on, integrative processing takes over and higher-order sound features, such as those based on relational aspects, determine the brain’s electrophysiological response. Our subsequent study (Čeponienė et al., 2005) extended these findings to consonant-vowel syllable stimuli and included an adult subject group. In that study, the speech and non-phonetic stimuli were maximally matched on acoustic complexity with the goal of identifying ERP indices specific to speech sound processing. The findings of that study corroborated those of Čeponienė et al. (2001) by showing that while the N1 (adults) and P2 (children and adults) peaks were enhanced by the non-phonetic stimuli, the N2 and N4 peaks were enhanced by the syllables.
Overall, these findings are in line with the electric and magnetic data from adults showing that the largest activation differences between speech and non-speech stimuli occur in a time range following the N1 peak (Eulitz et al., 1995; Diesch et al., 1996; Szymanski et al., 1999, 1999).

Therefore, the N2/N4 peaks may reflect either a comprehensive, fine-grained acoustic analysis or a higher-order encoding of sound content features. Either of these is likely to be instrumental for auditory perception and therefore for successful maturation of basic auditory skills and for receptive language. Indeed, neonatal and infantile speech-sound ERP components in the P1, N2, and N4 latency ranges were found to predict language and reading abilities at 3, 5, and 8 years of age (Molfese, 1989), and diminished N2 and N4 peaks have been found in children with developmental dysphasia (Korpilahti and Lang, 1994; Korpilahti, 1995), reading impairment (Neville et al., 1993), and language impairment (Čeponienė et al., 2006).

Stimulus-response relationships of the adult N1/P2 peaks appear to tell quite a different story. The N1 is mostly sensitive to sound audibility and salience and parallels behavioral sound detection thresholds (Martin et al., 1997). Based on its known functional significance in adults as well as late emergence and specific elicitation conditions in children (long inter-stimulus intervals, ISI), we have suggested (Čeponienė et al., 2002; Čeponienė et al., 2005) that, at least partially, the N1 emerges as a complementing and balancing mechanism to the development of focused and sustained attention (Gomes et al., 2000; Coch et al., 2005b; Wible et al., 2005). That is, the auditory N1 may reflect a “gate-keeping” mechanism for sensory information, which depends on the load and direction of the ongoing mental activity. In contrast to the N1, the auditory N2 does not appear to vary with stimulus audibility or salience. For example, the N2 does not increase in amplitude with long ISIs (Čeponienė et al., 1998) or diminish in amplitude with sound repetition, as the N1 does. Instead, in both children and adults, the N2 increased in amplitude with consecutive stimuli (Karhu et al., 1997). This finding was interpreted to reflect a build-up of short-term representation for the repeated sound stimulus.

Another robust maturational change in the ERP waveform is segregation of the P1 and P2 peaks upon the emergence of the N1. Views on the development of the P2 peak are inconsistent. Some researchers posit that the auditory P2 emerges early in infancy (Barnet et al., 1975; Kurtzberg et al., 1984; Novak et al., 1989), while others state that it does not appear until 5–6 years of age (Ponton et al., 2000). This is difficult to resolve since only one positivity, traditionally identified as the P1, is present in infants and young children (Ponton et al., 2000; Kushnerenko et al., 2002; Čeponienė et al., 2002). However, a recent study that used very long inter-stimulus intervals (3–6 sec) was able to demonstrate both the N1 and P2 peaks in infants (Wunderlich et al., 2006). Consistent with this, using an Independent Component Analysis (Makeig et al., 1997), we were able to demonstrate that P2-like activity is present in a later portion of the 7–10-year-old children’s P1 peak (Čeponienė et al., 2005). In that study, the P1- and P2-like activities were distinguished by differences in scalp distribution and responsivity to stimulus salience. Therefore, it appears that children’s P1 represents a correlate of adult P1 and P2 peaks (hence referred to as P1/P2). The children’s auditory P2 activity responded to the more salient non-phonetic stimuli in a manner similar to that of the adult N1 and P2. Therefore, we suggested that the auditory P2 might reflect a sensory-attentional interface that comes online years before the more effective, “shortcut” N1 mechanism becomes functional. Specifically, the P2 is sensitive to stimulus salience: it increases in amplitude with longer ISIs (Williams et al., 2006), shows robust refractoriness effects in children and adults (Coch et al., 2005a), and is enhanced in amplitude during selective attention tasks (Coch et al., 2005b).
On the other hand, to a certain degree the P2 also reflects content feature perception (Crowley and Colrain, 2004): it was enhanced during a discrimination task, as compared with a detection task (Novak et al., 1992), and was larger in amplitude in response to 400-Hz tones than to 3000-Hz tones in neonates, toddlers, children, and adults (Wunderlich et al., 2006). Finally, the P2 was enhanced about 3-fold after perceptual training of initially not discriminable syllables (Tremblay et al., 2001; Tremblay and Kraus, 2002).

The present study is part of an ongoing inquiry into the functional roles of children’s auditory sensory ERPs and mapping them onto adult correlates. Specifically, we aimed to determine which ERP regions, if any, are sensitive to spectral auditory contrasts in speech stimuli and acoustically matched non-phonetic stimuli. Based on the earlier data, we hypothesized that the N4 region of children’s and adults’ ERPs, earlier identified as enhanced specifically in response to speech stimuli, would also be sensitive to spectral differences between speech sounds. Similarly, we hypothesized that the P1/N2 region of children’s and adults’ ERPs, earlier identified as distinguishing between different levels of complexity in non-phonetic stimuli, would also be sensitive to spectral differences in non-phonetic stimuli. No strong predictions could be made for the P2 peak. Overall, we expected to find comparable ERP indices of spectral processing in children and adults, consistent with the notion that processing of sound content acoustics is well mature by mid-childhood.

Methods

Subjects

Seventeen normally developing children were recruited to the study via advertisements in parent magazines and contacts with elementary and middle schools. Participants were screened for developmental or acquired neurological disorders, learning and language disabilities, and hearing, vision, emotional, or behavioral problems. Three subjects were excluded from this report due to excessive noise in their ERP data. The mean age of the remaining 14 children was 8 yr 3 mo (range 7 yr 0 mo – 10 yr 2 mo, 4 males). The adult group consisted of 14 college students (mean age 19 yr 9 mo, range 18 yr 2 mo – 22 yr 8 mo, 6 males) who participated for course credit.

Fourteen adult subjects (10 same as in the ERP experiment, the other 4 matched by age and gender) and 14 children (12 same as in the ERP experiment, the other 2 matched by age and gender) performed a behavioral Syllable Discrimination task. All participants were right-handed native speakers of American English. All of them signed informed consent in accordance with the UCSD Institutional Review Board procedures.

Stimuli

Stimuli were created using the Semi-synthetic Speech Generation method (SSG, Alku et al., 1999). The SSG allows natural speech stimuli to be modified quantitatively according to the particular goal of a study. The SSG method first extracts, from a natural utterance, a glottal excitation waveform generated by the fluctuating vocal folds, which is then used to excite an artificial vocal tract model specific to the phoneme to be created. Using natural glottal excitation renders a realistic prosody and jitter in the periodic structure of the synthesized waveform.

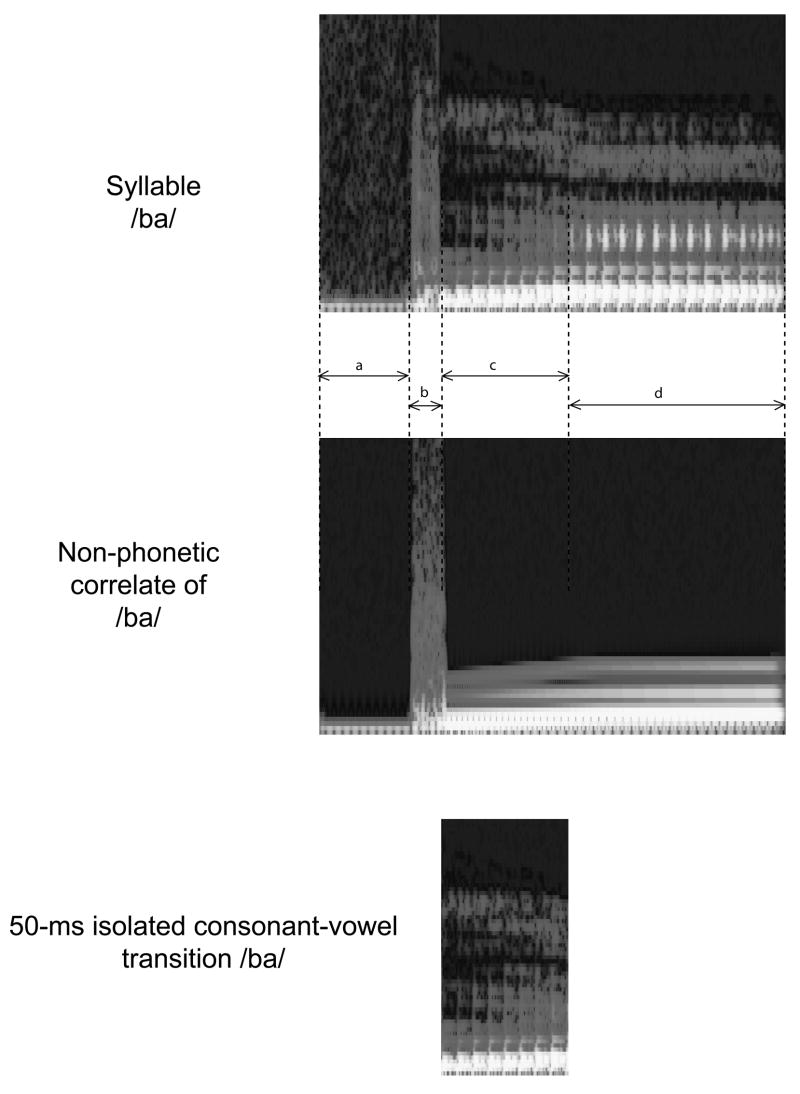

For this study, three consonant-vowel syllables, /ba/, /da/, and /ga/, spoken by a female speaker of American English, were recorded, digitized, and served as raw material for computing the stimulus synthesis parameters with the SSG. The computed parameters were the glottal excitation waveform, the formant frequencies for the three voiced plosive consonants (/b/, /d/, /g/), and the formant frequencies for the vowel /a/. The consonant burst section of the syllables was copied from the corresponding original recordings. A 30-ms pre-consonant voice bar (segment a in Fig. 1), present in the natural /ba/ syllables, was also added to the /da/ and /ga/ stimuli to make the gross structure of all stimuli identical. Using these parameters, the semi-synthetic syllables were computed by means of an adaptive digital vocal tract filter, the formant frequencies of which changed linearly from those estimated from the consonant bursts to the settings estimated from the vowel. The duration of the consonant burst was 10 ms. Three exemplars of each syllable were created that differed in the duration of the consonant-to-vowel transition (CVT, from the end of the consonant burst to the beginning of the steady-state vowel; bottom panel in Figure 1). The three CVTs were 20, 50, and 80 ms in duration. These CVT segments were followed by an identical steady-state vowel /a/ lasting for another 60 ms. Therefore, the total durations of the syllable and non-phonetic correlate stimuli were 120, 150, or 180 ms, with a pre-voicing of 30 ms, a consonant burst of 10 ms, CVTs spanning 20, 50, or 80 ms, respectively, and the steady-state vowel lasting 60 ms. The formants of the steady-state vowel segment were F1 = 1075, F2 = 1445, F3 = 2930, and F4 = 4910 Hz. The starting formant frequencies for the consonant-vowel transitions were: /ba/, F1 = 605, F2 = 1150, F3 = 2700, and F4 = 3960 Hz; /da/, F1 = 540, F2 = 1895, F3 = 3210, and F4 = 4005 Hz; /ga/, F1 = 410, F2 = 1785, F3 = 2650, and F4 = 3790 Hz.
Since the same glottal excitation was used in the synthesis of all syllables, acoustically these stimuli differed only in terms of the plosives and formant transitions, whereas the rest of the sound features (fundamental frequency, intonation, phonation type, intensity, duration) were held equal.
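The segment arithmetic and the linear formant trajectories described above can be illustrated with a minimal sketch. The formant values and segment durations are those given in the text; the function names, the time grid, and the clamping at the transition boundaries are assumptions made for illustration and are not part of the SSG implementation.

```python
# Formant values (Hz) and segment durations (ms) are taken from the text.
# All names below are hypothetical and chosen for illustration only.
VOWEL_A = (1075, 1445, 2930, 4910)
TRANSITION_ONSETS = {
    "ba": (605, 1150, 2700, 3960),
    "da": (540, 1895, 3210, 4005),
    "ga": (410, 1785, 2650, 3790),
}
VOICE_BAR_MS, BURST_MS, VOWEL_MS = 30, 10, 60


def total_duration_ms(cvt_ms):
    """Voice bar + consonant burst + transition + steady-state vowel."""
    return VOICE_BAR_MS + BURST_MS + cvt_ms + VOWEL_MS


def formants_at(syllable, cvt_ms, t_ms):
    """Linearly interpolate F1-F4 at t_ms after the end of the burst."""
    # Assumption: values are held constant outside the transition segment.
    fraction = min(max(t_ms / cvt_ms, 0.0), 1.0)
    return tuple(
        start + fraction * (end - start)
        for start, end in zip(TRANSITION_ONSETS[syllable], VOWEL_A)
    )


for cvt in (20, 50, 80):
    print(cvt, total_duration_ms(cvt), formants_at("ba", cvt, cvt / 2))
```

With these values, a shorter CVT covers the same formant distance in less time, so the 20-ms stimuli have the steepest formant slopes.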

Figure 1.

Examples of stimulus waveforms, spectral view. Top panel: 50-ms CVT syllable /ba/. Middle panel: non-phonetic correlate of the syllable /ba/. Bottom panel: 50-ms consonant-vowel transition isolated from 50-ms CVT syllable /ba/. a – pre-consonant voice bar (30 ms); b – consonant burst (10 ms); c – consonant-to-vowel transition (50 ms); d – steady-state vowel (60 ms).

The non-phonetic correlates of the nine syllables were created as a composition of five sinusoidal tones. The frequencies and intensity levels of the tones were selected on the basis of the formant frequencies of the syllables, as computed by the SSG: the lowest tone of each non-phonetic stimulus was selected to match the fundamental frequency of the underlying syllable, while the rest of the four sinusoidals matched the harmonic component in the vicinity of the four lowest formants in the spectrum of the corresponding syllable. The burst sections were synthesized to match the spectra of the corresponding burst sections in the syllables. Finally, the shapes of the burst-to-steady state formant transitions, the durations, and intensities of the non-phonetic stimuli were equalized to those of the corresponding speech stimuli.

Nine spectrally different stimuli were used in the present study: three syllables (/ba/, /da/, and /ga/), their three non-phonetic correlates, and three isolated consonant-vowel transitions. Each of the 9 stimuli had three consonant-vowel transition durations (20, 50, and 80 ms), which resulted in a total of 27 stimulus items. The stimuli were perceptually validated by 17 adult subjects who performed a sound identification task (Čeponienė et al., 2005).

Stimulus presentation

The stimuli were presented in blocks of 325 (children) or 450 (adults) sounds. Within a block, all sounds were of the same Stimulus Type (syllables, non-phonetics, or isolated CVTs) and the same CVT duration. For example, one block contained the 3 syllables (/ba/, /da/, /ga/), all with a 50-ms CVT duration. Therefore, there were 9 different stimulus blocks (3 stimulus types × 3 CVT durations). Within any given block, the 3 stimuli were presented with equal probabilities. The intensities of the different-duration stimuli were equalized to be 62 dB SPL at the subject’s head. The onset-to-onset inter-stimulus interval varied randomly between 700 and 900 ms. Sounds were delivered by stimulus presentation software (Presentation® software, Version 0.70, www.neurobs.com) and played via 2 loudspeakers, situated 120 cm in front and 40 degrees to the sides of a subject. During the experiment, subjects watched a self-selected soundless video on a computer monitor.
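The block structure can be sketched as follows. This is an illustration only: the text does not state how the randomization was implemented, so independent uniform sampling of items and intervals is an assumption, and all names are hypothetical.

```python
import itertools
import random

STIMULUS_TYPES = ("syllables", "non-phonetics", "transitions")
CVT_DURATIONS_MS = (20, 50, 80)
SPECTRAL_ITEMS = ("ba", "da", "ga")


def make_blocks():
    """One block per Stimulus Type x CVT duration combination."""
    return list(itertools.product(STIMULUS_TYPES, CVT_DURATIONS_MS))


def make_block_sequence(n_sounds, rng):
    """Return (onset_ms, item) pairs for one block.

    Assumption: each item is drawn independently with probability 1/3 and
    each onset-to-onset interval is drawn uniformly from 700-900 ms.
    """
    onset_ms = 0.0
    trials = []
    for _ in range(n_sounds):
        trials.append((onset_ms, rng.choice(SPECTRAL_ITEMS)))
        onset_ms += rng.uniform(700, 900)
    return trials


blocks = make_blocks()
children_block = make_block_sequence(325, random.Random(0))
print(len(blocks), len(children_block))
```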

Behavioral Syllable discrimination task

To provide a perceptual index of syllable discrimination as a function of CVT duration in a syllable, 14 adults and 14 children performed a Syllable Discrimination Task. During this task, the subjects listened to a total of 180 syllable pairs (Same or Different, 50% each) presented at 1-sec inter-stimulus intervals. They pressed a “happy-face” button or a “sad-face” button to indicate whether the sounds were same or different, respectively. The response was considered valid if it occurred within 150 ms – 3 sec after onset of the second stimulus of a pair. Syllables with 20-ms and 80-ms consonant-to-vowel transitions were presented to allow assessing perceptually more difficult and less difficult conditions, respectively. In order to control for potential differences in response bias, we analyzed a measure of discrimination sensitivity, d prime (d′) computed from z transforms of hit and false alarm rates. In this calculation, omissions were excluded from the total number of trials.
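The d′ computation can be sketched as below. The text specifies only that d′ was computed from z transforms of hit and false alarm rates with omissions excluded; treating a "different" response to a Different pair as a hit and to a Same pair as a false alarm, and clipping rates of 0 or 1 by half a trial, are assumptions made here for illustration.

```python
from statistics import NormalDist


def d_prime(hits, misses, false_alarms, correct_rejections):
    """d' = z(hit rate) - z(false-alarm rate).

    Counts include only trials with a valid response, so omissions are
    already excluded from the totals.
    """
    n_signal = hits + misses
    n_noise = false_alarms + correct_rejections
    hit_rate = hits / n_signal
    fa_rate = false_alarms / n_noise
    # Assumption: clip extreme rates so the z transform stays finite.
    hit_rate = min(max(hit_rate, 0.5 / n_signal), 1 - 0.5 / n_signal)
    fa_rate = min(max(fa_rate, 0.5 / n_noise), 1 - 0.5 / n_noise)
    z = NormalDist().inv_cdf
    return z(hit_rate) - z(fa_rate)


print(round(d_prime(80, 10, 10, 80), 2))
```

Because d′ subtracts the z-transformed false alarm rate, a subject who simply favors one response gains nothing, which is why it serves as a bias-free sensitivity measure.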

EEG recording and averaging

Continuous EEG was recorded using a 32-electrode cap (Electrocap, Inc.) with the following electrodes attached to the scalp according to the 10–20 system: FP1, FP2, F7, F8, FC6, FC5, F3, Fz, F4, TC3, TC4 (30% distance from T3 to C3 and T4 to C4, respectively), FC1, FC2, C3, Cz, C4, PT3, PT4 (halfway between P3 – T3 and P4 – T4, respectively), T5, T6, CP1, CP2, P3, Pz, P4, PO3, PO4, O1, O2, and right mastoid. Eye movements were monitored with two electrodes, one attached below the left eye and another at the outer corner of the right eye. During data acquisition, all channels were referenced to the left mastoid; offline, the data were re-referenced to the average of the left- and right-mastoid recordings.
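The offline re-referencing step follows from simple arithmetic. With the left mastoid as the recording reference, its own potential is zero by definition, so the average of the two mastoids equals half of the recorded right-mastoid signal, and subtracting that from every channel yields the average-mastoid reference. A minimal sketch, with hypothetical names and data layout:

```python
def rereference_to_mastoid_average(channels, right_mastoid):
    """Re-reference left-mastoid-referenced data to the mastoid average.

    channels: dict mapping electrode name -> list of samples (microvolts),
              all recorded against the left mastoid.
    right_mastoid: list of samples from the right-mastoid channel.
    """
    return {
        name: [v - 0.5 * rm for v, rm in zip(samples, right_mastoid)]
        for name, samples in channels.items()
    }


recorded = {"Cz": [2.0, 4.0], "Pz": [1.0, 1.0]}
print(rereference_to_mastoid_average(recorded, [1.0, -2.0]))
```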

The EEG (0.01–100 Hz) was amplified 20,000× and digitized at 250 Hz for the off-line analyses. An independent-component analysis (ICA, Jung et al., 2000) was used to correct for eye blinks and lateral eye movements. After this, each data set was visually examined and the artifact rejection values were adjusted individually for each subject to maximize rejection of all visible artifacts. Epochs containing 100 ms pre-stimulus and 800 ms post-stimulus time were baseline-corrected with respect to the pre-stimulus interval and averaged by stimulus type. Frequencies higher than 60 Hz were filtered out by convolving the data with a Gaussian function. For a subject to be included in the final analysis, they had to have at least 60 accepted epochs per smallest bin (e.g., the bin of syllable /ba/ with 20-ms CVT), although most had 75 to 85. On average, children’s individual data contained 233, 233, and 231 accepted trials per syllable bin for /ba/, /da/, and /ga/, respectively; 220, 213, and 215 accepted trials per corresponding non-phonetic bin; and 218, 220, and 218 trials per corresponding transition bin. The respective numbers in the adult data were 397, 396, and 401 (syllables), 389, 390, and 387 (non-phonetics), and 401, 398, and 399 (transitions) accepted trials.
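Epoching, baseline correction, and averaging can be sketched for a single channel as follows. At 250 Hz, the 100-ms baseline spans 25 samples and the 800-ms post-stimulus interval spans 200 samples. The function names and the choice to exclude the sample at exactly +800 ms are assumptions for illustration; artifact rejection and filtering are omitted.

```python
SAMPLING_RATE_HZ = 250
PRE_MS, POST_MS = 100, 800


def ms_to_samples(ms):
    return round(ms * SAMPLING_RATE_HZ / 1000)


def extract_epoch(signal, onset_index):
    """Cut -100..+800 ms around an onset and subtract the baseline mean."""
    pre, post = ms_to_samples(PRE_MS), ms_to_samples(POST_MS)
    epoch = signal[onset_index - pre : onset_index + post]
    baseline = sum(epoch[:pre]) / pre
    return [v - baseline for v in epoch]


def average_epochs(epochs):
    """Point-by-point mean across the accepted epochs of one bin."""
    return [sum(column) / len(column) for column in zip(*epochs)]


# Toy signal: a 3-microvolt offset that steps to 5 microvolts at the onset.
signal = [3.0] * 100 + [5.0] * 300
epoch = extract_epoch(signal, onset_index=100)
print(len(epoch), epoch[0], epoch[-1])
```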

Data measurement and analysis

Peak search windows were determined by visual inspection of syllable, non-phonetic, and CVT grand-average waveforms of each age group (Figures 2 and 4; Table 1). Peak latencies, measured from these windows, were used to center a 24-ms interval over which the mean amplitudes were calculated.
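The measurement procedure can be sketched as below: the peak latency is located within a search window, and the mean amplitude is then taken over a 24-ms interval centered on it. With a 4-ms sampling interval, this sketch averages the 7 samples from −12 to +12 ms inclusive; the rounding of window edges to sample indices and all names are assumptions.

```python
SAMPLE_MS = 4       # 250-Hz sampling rate
BASELINE_MS = 100   # epoch starts 100 ms before stimulus onset


def index_of(latency_ms):
    """Sample index within the epoch for a post-stimulus latency."""
    return (latency_ms + BASELINE_MS) // SAMPLE_MS


def peak_latency_ms(erp, window_ms, polarity):
    """Latency of the largest positive (+1) or negative (-1) sample."""
    first, last = (index_of(t) for t in window_ms)
    segment = erp[first : last + 1]
    pick = max if polarity > 0 else min
    best = pick(range(len(segment)), key=segment.__getitem__)
    return (first + best) * SAMPLE_MS - BASELINE_MS


def mean_amplitude(erp, latency_ms, half_width_ms=12):
    """Mean over the samples within +/- half_width_ms of latency_ms."""
    centre = index_of(latency_ms)
    half = half_width_ms // SAMPLE_MS
    window = erp[centre - half : centre + half + 1]
    return sum(window) / len(window)


# Toy waveform with a triangular positivity peaking at 200 ms.
erp = [0.0] * 225
erp[index_of(196)], erp[index_of(200)], erp[index_of(204)] = 2.0, 4.0, 2.0
latency = peak_latency_ms(erp, (170, 270), polarity=+1)
print(latency, mean_amplitude(erp, latency))
```

Averaging around the peak rather than taking the single peak sample makes the measure less sensitive to residual high-frequency noise.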

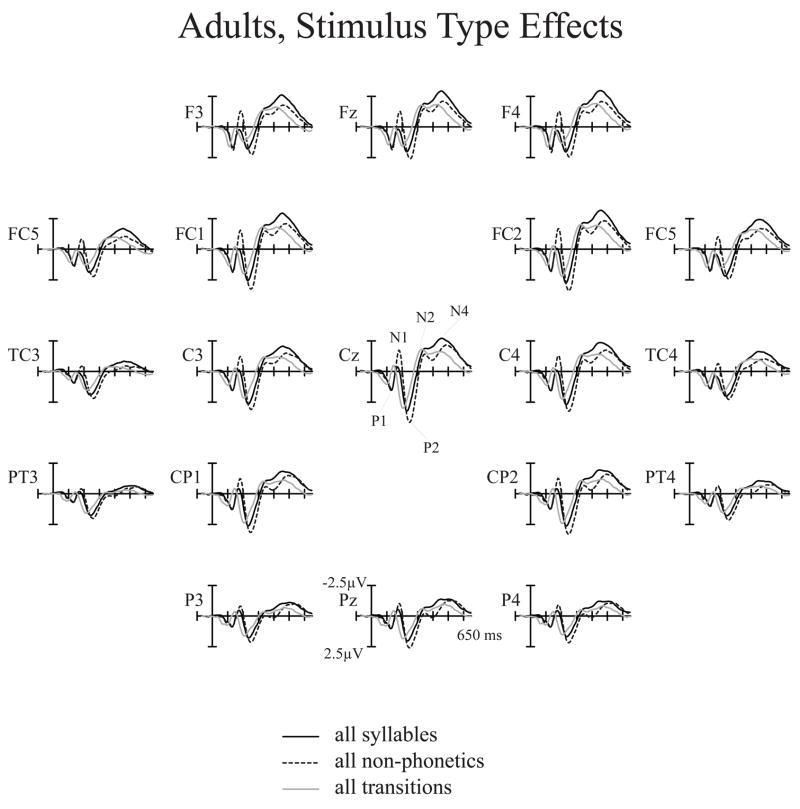

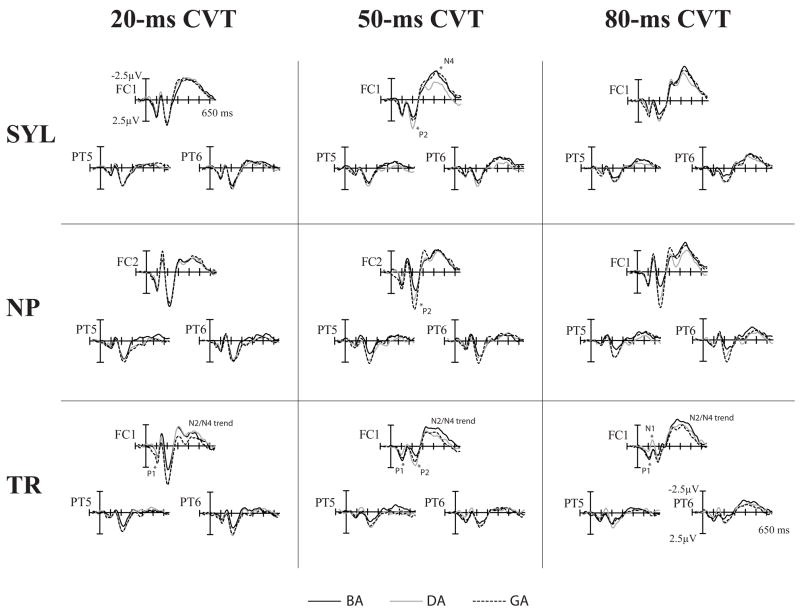

Figure 2.

Stimulus Type effects in adults. Adult syllable, non-phonetic, and transition grand-average ERPs averaged over all CVT durations and spectral items. Non-phonetic stimuli elicited larger N1 and P2 peaks whereas syllable stimuli elicited larger N2 and N4 peaks.

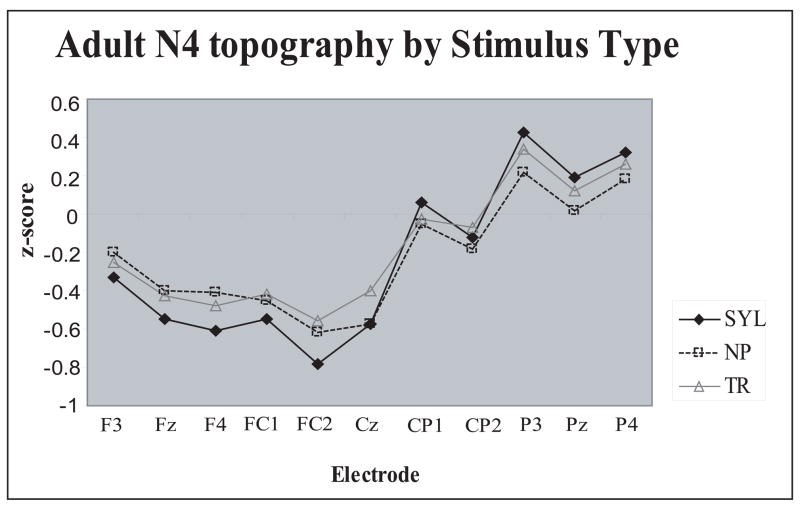

Figure 4.

Anterior-posterior scalp distribution differences of the adult N4 peak as a function of Stimulus Type. Syllable N4 showed the steepest anterior-posterior slope. Z score reflects N4 amplitudes normalized by Stimulus Type.

Table 1.

Peak search windows (ms) derived from the grand-average waveforms. Mean amplitudes were calculated over ±12 ms around peak latencies. SYL – syllables; NP – non-phonetic correlates; TR – transitions.

| Group | Stimulus | P1 | N1 | P2 | N2 | N4 |
|---|---|---|---|---|---|---|
| Adults | SYL | 30–130 | 100–200 | 170–270 | 250–370 | 370–570 |
| | NP | 30–130 | 100–200 | 170–270 | 250–370 | 410–520 |
| | TR | 10–100 | 40–140 | 140–240 | 220–350 | 320–470 |
| Children | SYL | 70–170 | - | - | 270–370 | 350–470 |
| | NP | 70–190 | - | - | 270–390 | 410–520 |
| | TR | 50–150 | - | - | 200–320 | 350–500 |

The initial statistical analysis was a four-way ANOVA run on mean amplitudes and peak latencies with factors of Stimulus Type (Syllables, Non-phonetics, Transitions), CVT duration (20, 50, or 80 ms), Spectral Item (BA, DA, GA), and Electrode (n=21 in adults, n=23 in children). In adults, the 21 electrodes were F3, Fz, F4, FC1, FC2, TC3, TC4, FC5, FC6, C3, Cz, C4, CP1, CP2, PT3, P3, Pz, P4, PT4, PO3, and PO4. In children, the F7 and F8 electrodes were added, in accordance with the extent of the effects seen in their grand-average waveforms. To specifically examine Spectral Item effects, the main focus of this paper, separate three-way ANOVAs were run on Syllable, Non-phonetic, and Transition stimuli. The factors were Spectral Item, CVT duration, and Electrode. The sources of significant interactions were clarified using post hoc pairwise comparisons. All scalp distribution analyses were done using amplitudes normalized with the z-score technique (McCarthy and Wood, 1985). The data were normalized at the level of the analyses of interest (e.g., by Stimulus Type if this variable interacted with the Electrode factor). Because large amplitude differences, and different waveforms, were observed in the two age groups, no direct between-group comparisons were performed. Within each group, separate analyses were performed to assess the laterality differences (Hemisphere effect), by contrasting the amplitudes measured over 8 × 2 lateral scalp sites (F7, F3, FC5, FC1, TC5, C3, TP5, CP1 vs. F8, F4, FC6, FC2, TC6, C4, TP6, CP2). The Huynh-Feldt correction was applied whenever appropriate.
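The purpose of the normalization is to remove overall amplitude differences between conditions so that a Condition × Electrode interaction reflects a difference in the shape of the scalp distribution. One possible reading of the z-score step is sketched below: amplitudes are standardized across electrodes within each condition. Whether this was done per subject or on group means, and whether the population or sample standard deviation was used, is not stated in the text; the choices and names here are assumptions.

```python
from statistics import mean, pstdev


def zscore_across_electrodes(amplitudes):
    """Standardize one condition's amplitudes across electrodes.

    amplitudes: dict mapping electrode name -> mean amplitude (microvolts).
    Assumption: the population standard deviation is used.
    """
    values = list(amplitudes.values())
    mu, sigma = mean(values), pstdev(values)
    return {name: (v - mu) / sigma for name, v in amplitudes.items()}


# Two toy conditions with the same topography but a 3-fold amplitude gap.
small = {"Fz": -2.0, "Cz": -1.0, "Pz": 0.0}
large = {"Fz": -6.0, "Cz": -3.0, "Pz": 0.0}
print(zscore_across_electrodes(small))
print(zscore_across_electrodes(large))
```

In this toy case both conditions yield the same normalized profile, so a pure gain difference would not register as a distribution difference.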

Results

Behavioral Syllable Discrimination Task

Overall, adults were more accurate (F(1,26)=26.36, p<.0001; d’ adults - 4.59, children - 3.48, p <.0001) and faster (F(1,26)=35.71, p<.0001) than children (Table 2).

Table 2.

Behavioral performance on Syllable Discrimination Task.

| | 20-ms syllables: same | 20-ms syllables: diff | 20-ms syllables: all | 80-ms syllables: same | 80-ms syllables: diff | 80-ms syllables: all | All syllables: same | All syllables: diff | All syllables: all |
|---|---|---|---|---|---|---|---|---|---|
| Accuracy (% correct) | | | | | | | | | |
| Adults | 97.6% | 98.6% | 98.2% | 97.1% | 97.7% | 97.5% | 97.3% | 98.1% | 97.8% |
| Children | 88.3% | 84.2% | 85.6% | 88.5% | 83.8% | 85.3% | 88.4% | 83.9% | 85.4% |
| Reaction time for correct responses, ms (standard deviation) | | | | | | | | | |
| Adults | 830 (157) | 880 (144) | 863 (144) | 860 (143) | 823 (168) | 860 (155) | 826 (146) | 880 (151) | 866 (147) |
| Children | 1191 (225) | 1405 (294) | 1331 (249) | 1192 (226) | 1380 (284) | 1317 (251) | 1119 (214) | 1394 (274) | 1349 (246) |

Neither CVT duration nor pairing (Same-Same vs. Same-Different) affected discrimination accuracy in either age group. However, reaction times were shorter for Same than for Different stimulus pairs (F(1,26)=28.72, p<.0001), and this difference was greater in children than in adults (Group × Same-Different interaction F(1,26)=9.63, p<.005, η²=.27; 1192 vs. 1392 ms and 826 vs. 880 ms, respectively).

ERP results - Adults

Main Stimulus Type and CVT duration effects

Stimulus Type effects replicated our earlier findings (Čeponienė et al., 2005), showing that the non-phonetic stimuli elicit larger N1 and P2 peaks, whereas syllables elicit larger N2 and N4 peaks. These effects, which are summarized in Tables 3 and 5 and Figs. 2, 3, and 4, have been interpreted to reflect distinct functions of the N1-P2 peaks (sensory detection, arousal, sensory-attention interface) vs. those of the N2-N4 peaks (integrative processing of sound content).

Table 3.

Main Stimulus Type and CVT duration effects on ERP peak amplitudes.

| | P1 | N1 | P2 | N2 | N4 |
|---|---|---|---|---|---|
| ADULTS | | | | | |
| Stimulus Type | - | SYL < NP > TR; SYL = TR | SYL < NP > TR; SYL = TR | SYL > NP < TR | SYL > NP, TR; NP > TR |
| | - | F(2,26)=18.66, p<.0001, η²=.59 | F(2,26)=4.91, p<.03, η²=.27 | F(2,26)=4.97, p<.02, η²=.28 | F(2,26)=39.75, p<.0001, η²=.75 |
| CVT duration | 20 > 50 > 80 | SYL only: 20 > 50 = 80 | 20 > 50 > 80 | NP only: 20 < 50 < 80 | 20 < 50 < 80 |
| | a trend | F(2,26)=8.04, p<.002, η²=.38 | F(2,26)=9.50, p<.002, η²=.42 | F(2,26)=4.12, p<.03, η²=.24 | F(2,26)=27.23, p<.0001, η²=.68 |
| CHILDREN | | | | | |
| Stimulus Type | SYL < NP | - | - | ST × EL: TR anterior | SYL > NP, TR |
| | F(1,13)=7.44, p<.02, η²=.36 | - | - | F(44,528)=2.67, p<.001, η²=.18 | F(2,24)=10.08, p<.001, η²=.46 |
| CVT duration | N.S. | - | - | 20 < 50 < 80 | 20 < 50 < 80 |
| | - | - | - | F(2,24)=9.14, p<.005, η²=.43 | F(2,24)=10.26, p<.002, η²=.46 |

Unless indicated otherwise, the F statistics refer to the main ANOVA effects for Stimulus Type or CVT duration.

Table 5.

Adult ERP amplitudes averaged over the 21 electrodes.

| | P1 /ba/ | P1 /da/ | P1 /ga/ | N1 /ba/ | N1 /da/ | N1 /ga/ | P2 /ba/ | P2 /da/ | P2 /ga/ | N2 /ba/ | N2 /da/ | N2 /ga/ | N4 /ba/ | N4 /da/ | N4 /ga/ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| SYL | 1.40 (.19) | 1.32 (.17) | 1.61 (.27) | −.25 (.31) | −.27 (.32) | −.03 (.41) | 2.01 (.40) | 2.52 (.40) | 2.47 (.50) | −1.60 (.34) | −1.60 (.36) | −1.58 (.33) | −2.54 (.28) | −2.26 (.28) | −2.46 (.22) |
| NP | 1.38 (.22) | 1.33 (.22) | 1.48 (.19) | −1.21 (.38) | −1.05 (.29) | −1.28 (.34) | 2.37 (.45) | 2.95 (.56) | 3.35 (.59) | −1.04 (.39) | −.81 (.43) | −1.21 (.38) | −2.07 (.30) | −1.92 (.30) | −2.08 (.22) |
| TR | 1.35 (.27) | 1.00 (.23) | 1.44 (.25) | −.43 (.30) | −.67 (.31) | −.24 (.32) | 1.79 (.45) | 2.46 (.43) | 2.31 (.40) | −1.64 (.35) | −1.47 (.38) | −1.21 (.28) | −1.85 (.22) | −1.50 (.31) | −1.36 (.21) |

Data are in microvolts, with the standard error of the mean in parentheses.

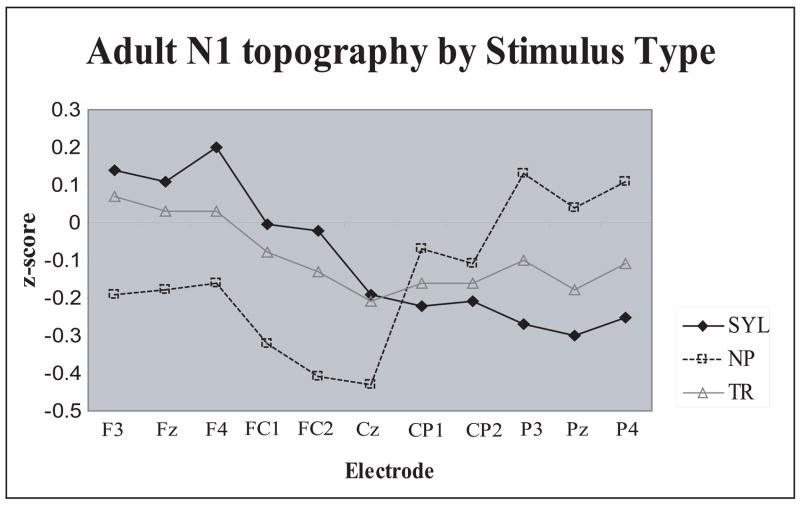

Figure 3.

Anterior-posterior scalp distribution differences of the adult N1 peak as a function of Stimulus Type. The non-phonetic N1 was maximal fronto-centrally, syllable N1 - centro-parietally, and transition N1 did not show a clear predominance. Z score reflects N1 amplitudes normalized by Stimulus Type.

A new Stimulus Type-related finding was the nearly opposite scalp distributions of the N1 and N4 peaks across the three Stimulus Types (normalized amplitudes: Stimulus Type × Electrode interaction, N1: F(40,520)=8.14, p<.0001, η²=.39; N4: F(40,520)=5.09, p<.0001, η²=.28). During the N1 range, the response to the syllables was predominantly parietal, that to the non-phonetic stimuli was central, and the transition N1 was distributed relatively evenly over the anterior-posterior scalp (Figs. 2 and 3). During the N4 range, the response to the syllables was oriented most anteriorly, that to the transitions most posteriorly, and the non-phonetic N4 displayed an intermediate pattern (Fig. 4).

Table 3 also summarizes the main CVT duration effects, which are shown in Fig. 5. These effects are consistent with earlier findings (Kushnerenko et al., 2001) demonstrating larger negativity in response to longer sounds, a finding that was explained by elicitation of a sustained potential and/or of a duration-specific neural response.

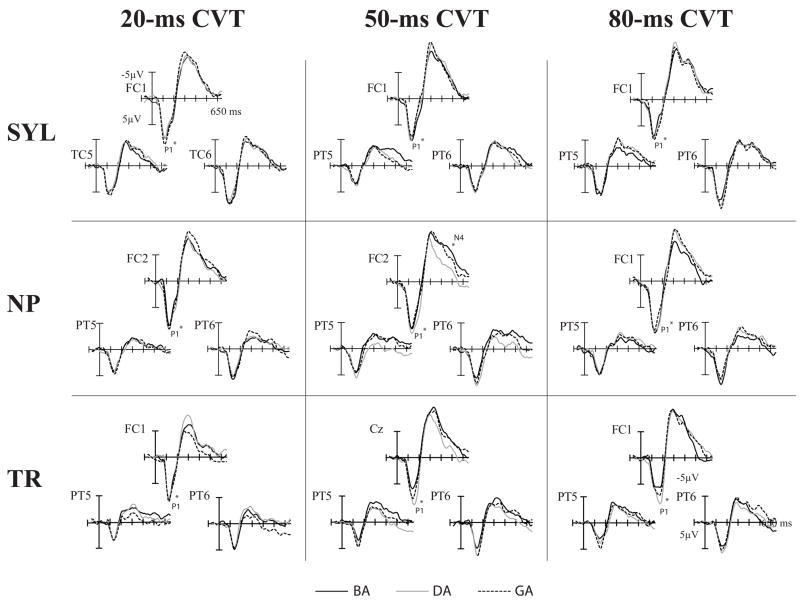

Figure 5.

Spectral Item effects by Stimulus Type and CVT duration in adults. A fronto-central electrode and the right and left lateral electrodes are shown. Significant Spectral Item effects for peak mean amplitudes are indicated by an asterisk (significant scalp distribution differences are not noted).

Spectral Item effects, the main focus of the present study, are reported separately for each of the three Stimulus Types (Fig. 5). A summary is given in Table 4. Of all the peaks, the auditory P2 showed the most consistent Spectral Item effects across the three Stimulus Types. Isolated transitions, the acoustically simplest stimuli, additionally elicited Spectral Item effects in the earlier P1 and N1 peaks, whereas syllables, the most complex, verbal stimuli, additionally elicited Spectral Item effects in the N4 peak.

Table 4.

Spectral Item effects for each Stimulus Type on ERP peak amplitudes.

| | P1 | N1 | P2 | N2 | N4 |
|---|---|---|---|---|---|
| ADULTS | | | | | |
| SYL | - | - | /ba/ < /da/, /ga/; /da/ = /ga/ | latency: /ba/ > /da/, /ga/ | /ba/ > /da/ < /ga/; SI × EL: /ba/ most anterior; diffs over LH |
| NP | - | SI × EL: /da/, /ga/ > /ba/ centrally | /ba/ < /da/, /ga/; /da/ < /ga/ | SI × EL: /ga/ > /ba/, /da/ centrally | SI × EL: /ga/ most anterior; diffs over LH |
| TR | /ba/ > /da/ < /ga/ | /ba/ < /da/ > /ga/ | /ba/ < /da/, /ga/; /da/ = /ga/ | /ba/ > /da/, /ga/ | a trend: /ba/ > /ga/ |
| CHILDREN | | | | | |
| SYL | /ba/ < /ga/ | - | - | Only for 50-ms CVT stimuli: /ba/ > /da/ < /ga/ | N.S. |
| NP | /ba/, /da/ > /ga/ | - | - | Only for 50-ms CVT NP: /ba/ > /da/ < /ga/ | |
| TR | /ba/ < /da/, /ga/ | - | - | N.S. | |

Statistics reported in the text.

P1

Neither the syllable nor the non-phonetic P1 showed significant Spectral Item effects (Tables 4 and 5, Fig. 5, upper panel).

The consonant-vowel transitions were the only stimulus type showing a significant Spectral Item effect for the P1 peak (F(2,26)=10.51, p<.0001, η 2 =.45; Fig. 5, Tables 4 and 5). This effect originated from the tr-/da/ P1 being smaller in amplitude than either the tr-/ba/ P1 (p<.006) or tr-/ga/ P1 (p<.001; Fig. 5, lower panel).

Consistent with this finding, the peak latency of the auditory P1 was shorter for the /da/ as compared with the /ba/ (p<.03) or /ga/ (p<.004) for transition stimuli and also for syllables (Spectral Item effect: F(2,26)=3.39, p<.05, η 2 =.21 and F(2,26)=4.36, p<.04, η 2 =.25, respectively).

N1

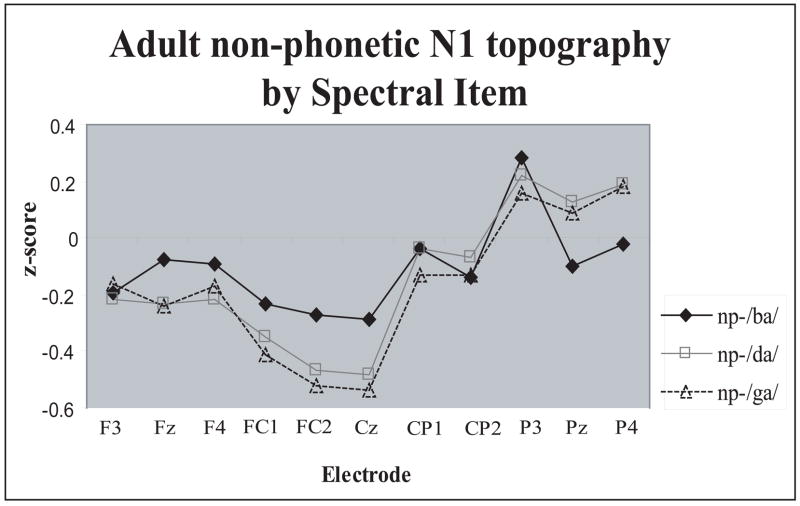

Syllable N1 did not show any Spectral Item related effects (Tables 4 and 5). The only significant effect for the non-phonetic N1 was Spectral Item × Electrode interaction (F(40,520)=3.70, p<.0001, η 2 =.22): the np-/da/ and np-/ga/ elicited larger N1 amplitudes over the FC1, FC2, and Cz electrodes than the np-/ba/ (Fig. 6).

Figure 6.

Anterior-posterior scalp distribution differences of the adult non-phonetic N1 peak as a function of Spectral Item. The difference between /ba/ and /da/, /ga/ was maximal around vertex, a distribution characteristic of non-specific component of the N1. Z score reflects non-phonetic N1 amplitudes normalized by Spectral Item.
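Scalp distribution comparisons of this kind rest on removing overall amplitude differences between conditions so that only the shape of the distribution is compared. The sketch below shows one simple way to do this: z-scoring each Spectral Item's amplitudes across electrodes. This is an assumption for illustration; the exact normalization used for the figures is not described in this section, and the electrode values are hypothetical.

```python
from statistics import mean, pstdev


def zscore_across_electrodes(amplitudes):
    """Z-score one condition's peak amplitudes across electrodes.

    amplitudes: dict mapping electrode label -> amplitude in microvolts.
    Uses the population standard deviation (an assumption of this sketch).
    """
    values = list(amplitudes.values())
    mu = mean(values)
    sd = pstdev(values)
    if sd == 0:
        raise ValueError("amplitudes are identical across electrodes")
    return {electrode: (v - mu) / sd for electrode, v in amplitudes.items()}


# Hypothetical N1 amplitudes (microvolts) for one Spectral Item.
np_da = {"Fz": -2.1, "Cz": -3.4, "Pz": -1.2}
print(zscore_across_electrodes(np_da))
```

After this step every condition has zero mean and unit spread across electrodes, so remaining differences between conditions reflect topography rather than overall response size.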

Similar to the P1 peak, transitions were the only stimulus type producing a significant Spectral Item effect for the N1 amplitudes (F(2,26)=4.40, p<.02, η 2 =.25). The tr-/da/ N1 was larger in amplitude than either the tr-/ba/ N1 (p<.09) or tr-/ga/ N1 (p<.02; Table 5). However, this mostly originated from the 80-ms stimuli, as corroborated by a significant Spectral Item × CVT interaction (F(4,52)=3.17, p<.02, η 2 =.20; Fig. 5, bottom panel).

P2

The overall Spectral Item effect was highly significant for this peak: F(2,26)=18.45, p<.0001, η 2 =.59. It originated from the stimuli with spectral /ba/ characteristics eliciting smaller P2 amplitudes than stimuli with either the /da/ (p<.0001) or /ga/ (p<.0001) characteristics (Tables 4 & 5, Fig. 5).

The syllable P2 was smaller in amplitude in response to the /ba/ than to either the /da/ (p<.0001) or /ga/ stimuli (p<.03; Spectral Item effect F(2,26)=3.98, p<.05, η 2 =.23). However, there was no difference between the /da/-P2 and /ga/-P2.

The Spectral Item effect of the non-phonetic P2 (F(2,26)=3.98, p<.05, η 2 =.23) was such that np-/ba/ elicited smaller P2 amplitude than either the np-/da/ (p<.05) or np-/ga/ (p<.005), and also the np-/da/ stimuli elicited smaller P2 than the np-/ga/ stimuli (p<.07; Tables 4 & 5; Fig. 5, middle panel).

Similar to the syllable P2, the transition P2 was smaller in amplitude in response to the tr-/ba/ than to either the tr-/da/ or tr-/ga/ stimuli (p<.001 and .03, respectively), but there was no difference between tr-/da/ P2 and tr-/ga/ P2 (Spectral Item effect: F(2,26)=7.82, p<.003, η 2 =.38; Tables 4 & 5).

N2

The N2 elicited by each of the three Stimulus Types was larger in amplitude over the right than left hemisphere (syllables: F(1,13)=10.42, p<.007, η 2 =.45; non-phonetics: F(1,13)=3.76, p<.07, η 2 =.22; transitions: F(1,13)=11.91, p<.004, η 2 =.48).

The syllable N2 amplitude showed no Spectral Item-related effects (Table 4). However, the peak latency of /ba/-N2 (328 ms) was longer than that of the /da/-N2 (319 ms, p<.05) or /ga/-N2 (322 ms, p<.09; F(2,26)=3.10, p<.05, η 2 =.20).

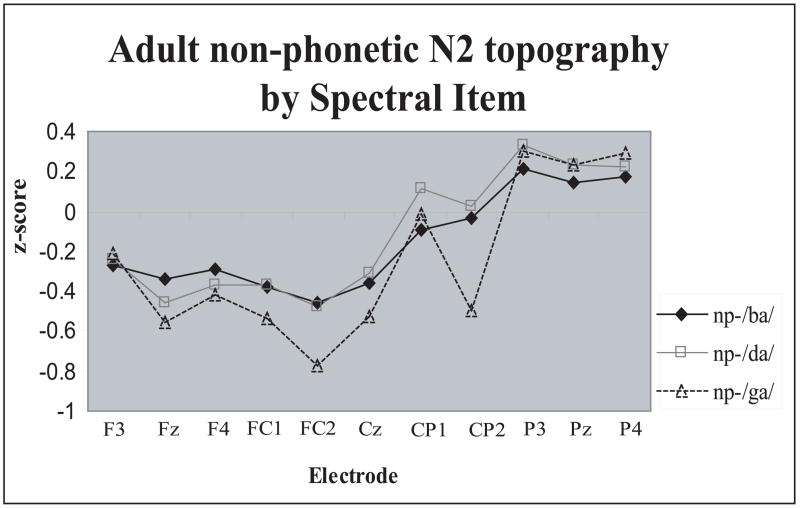

The non-phonetic N2 showed a significant Spectral Item × Electrode interaction (F(40,520)=3.12, p<.001, η 2 =.19): stimuli with /ga/ spectrum elicited larger N2 amplitudes than the other two spectra specifically over the Fz, FC1, FC2, and Cz electrodes (Fig. 5, middle panel, Fig. 7).

Figure 7.

Anterior-posterior scalp distribution differences of the adult non-phonetic N2 peak as a function of Spectral Item. The difference between /ga/ and /ba/, /da/ was maximal anterior to the vertex. Z score reflects non-phonetic N2 amplitudes normalized by Spectral Item.

The transition N2 showed a trend (F(2,26)=2.18, p<.1, η 2 =.14) for the Spectral Item effect, with the tr-/ga/ N2 amplitudes being smaller than either the tr-/ba/ or tr-/da/ N2 amplitudes (Tables 4 & 5).

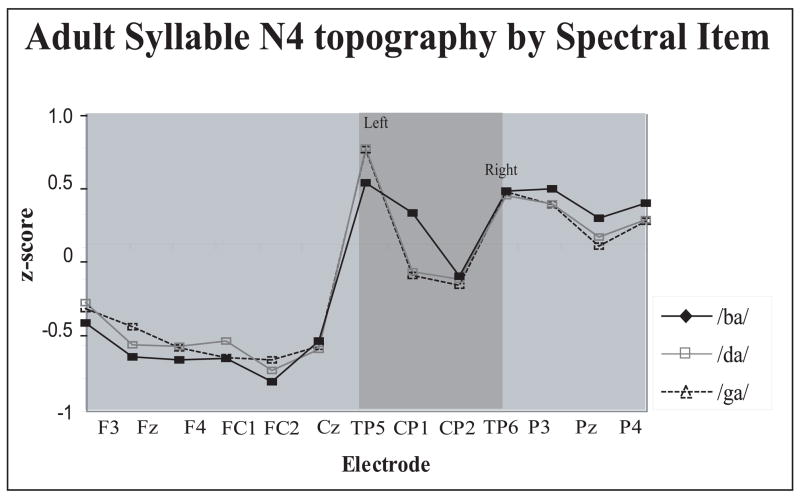

N4

The N4 elicited by each of the three Stimulus Types was larger in amplitude over the right than left hemisphere (syllables: F(1,13)=21.96, p<.001, η 2 =.63; non-phonetics: F(1,13)=16.52, p<.001, η 2 =.56; transitions: F(1,13)=26.64, p<.0001, η 2 =.67).

The syllable N4 showed a trend for Spectral Item × CVT interaction (F(4,52)=1.69, p<.1, η 2 =.13) and Spectral Item × Electrode interaction (F(40,520)=1.90, p<.06, η 2 =.13). The Spectral Item × CVT interaction indicated that there might be Spectral Item N4 differences with the 50-ms CVT syllables but not with the 20- or 80-ms CVT syllables (Fig. 5, top panel). Therefore, the 20-, 50-, and 80-ms CVT syllables were analyzed in separate ANOVAs to elucidate stimulus effects within each CVT category. There were no significant stimulus effects with either the 20- or 80-ms CVT syllables, but 50-ms syllables produced a significant N4 amplitude difference (F(2,26)=4.12, p<.03, η 2 =.24) that originated from the syllable /da/ eliciting smaller N4 amplitude than either the /ba/ (p<.006) or /ga/ (p<.05) syllables (Tables 4 & 5, Fig. 5, top panel).
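The follow-up analysis described above amounts to a one-way repeated-measures ANOVA with Spectral Item as the within-subject factor, run separately for each CVT duration. The sketch below illustrates that computation in plain Python. It is not the authors' analysis code: the function name is hypothetical, the data are randomly generated placeholders, and no sphericity correction is applied.

```python
import random


def rm_anova_oneway(data):
    """One-way repeated-measures ANOVA.

    data: list of rows, one per participant; each row holds one value per
    condition (here, one amplitude per Spectral Item).
    Returns (F, df_effect, df_error, partial_eta_squared).
    """
    n = len(data)          # participants
    k = len(data[0])       # conditions
    grand = sum(sum(row) for row in data) / (n * k)
    cond_means = [sum(row[j] for row in data) / n for j in range(k)]
    subj_means = [sum(row) / k for row in data]

    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_error = ss_total - ss_cond - ss_subj  # condition x subject residual

    df_effect = k - 1
    df_error = (k - 1) * (n - 1)
    f_value = (ss_cond / df_effect) / (ss_error / df_error)
    eta_p2 = ss_cond / (ss_cond + ss_error)
    return f_value, df_effect, df_error, eta_p2


# Placeholder data: 14 participants x 3 Spectral Items (/ba/, /da/, /ga/).
rng = random.Random(0)
fake_n4 = [[rng.gauss(-3.0, 1.0) for _ in range(3)] for _ in range(14)]
f_value, df_effect, df_error, eta_p2 = rm_anova_oneway(fake_n4)
print(df_effect, df_error)  # prints 2 26
```

With 14 participants and three Spectral Items, the degrees of freedom come out as (2, 26), matching the form of the F statistics reported for the single-CVT analyses.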

The Spectral Item × Electrode interaction was caused by subtle differences in scalp distribution among the three syllables. As can be seen in Fig. 8, the /ba/-N4 was distributed somewhat more anteriorly than either the /da/-N4 or /ga/-N4, and also showed a different topography over the left, but not right, centro-parietal regions (shaded area in Fig. 8).

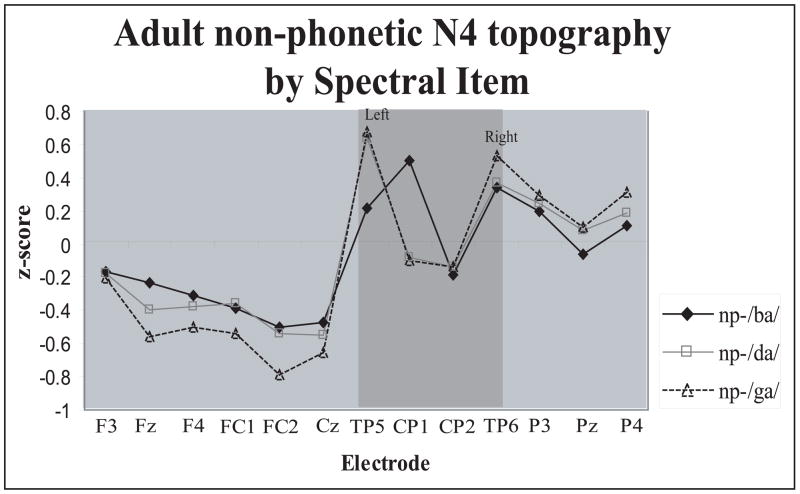

Figure 8.

Scalp distribution differences of the adult syllable N4 peak as a function of Spectral Item. Syllable /ba/ showed distinct topography over the left, but not right, centro-parietal region. Z score reflects syllable N4 amplitudes normalized by Spectral Item.

The non-phonetic N4 showed a significant Spectral Item × Electrode interaction (F(40,520)=2.82, p<.003, η 2 =.18). Almost opposite to the scalp distributions of syllable N4, the most anterior distribution for the non-phonetic stimuli was of the np-/ga/ N4, while the np-/ba/ N4 was the most posterior (Fig. 9). However, the differential distribution over the left lateral temporal area was similar to that of the syllable N4 (shaded area in the Fig. 9).

Figure 9.

Scalp distribution differences of the adult non-phonetic N4 peak as a function of Spectral Item. Non-phonetic /ba/ showed distinct topography over the left, but not right, temporal-parietal region. Z score reflects non-phonetic N4 amplitudes normalized by Spectral Item.

The transition N4 showed a trend for the Spectral Item effect (F(2,26)=2.69, p<.09, η 2 =.18) such that tr-/ba/ elicited larger N4 than the tr-/ga/ (Fig. 5, bottom panel).

ERP results – Children

Main Stimulus Type and CVT duration effects

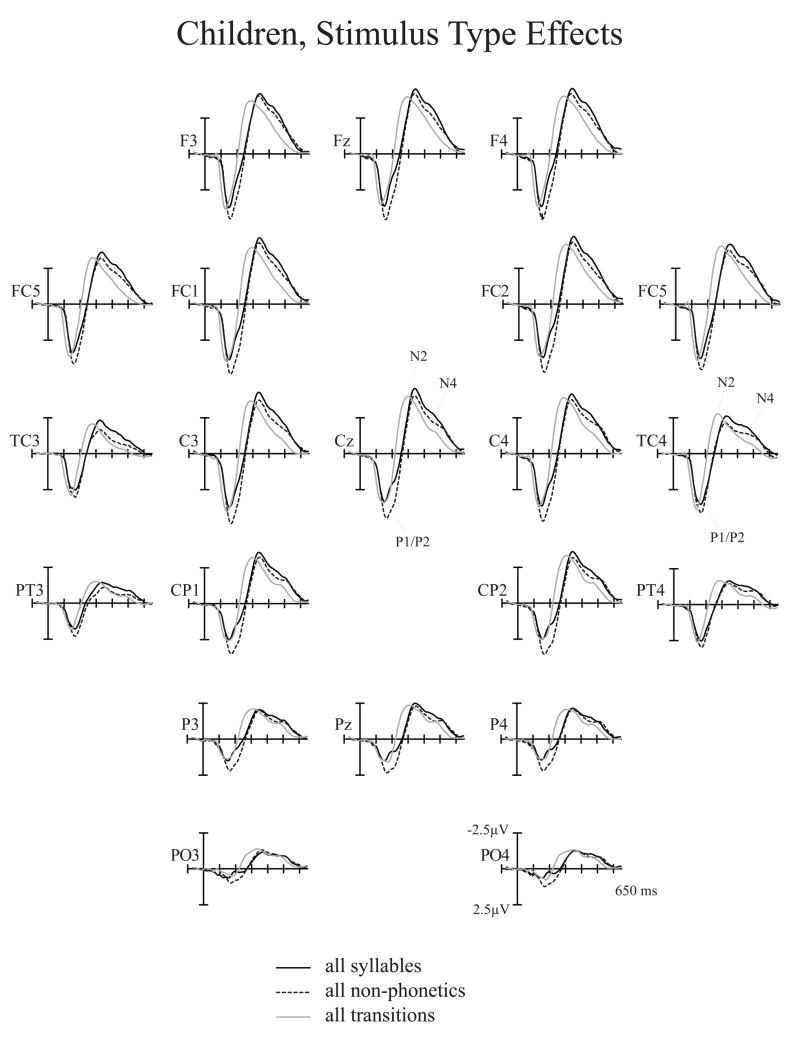

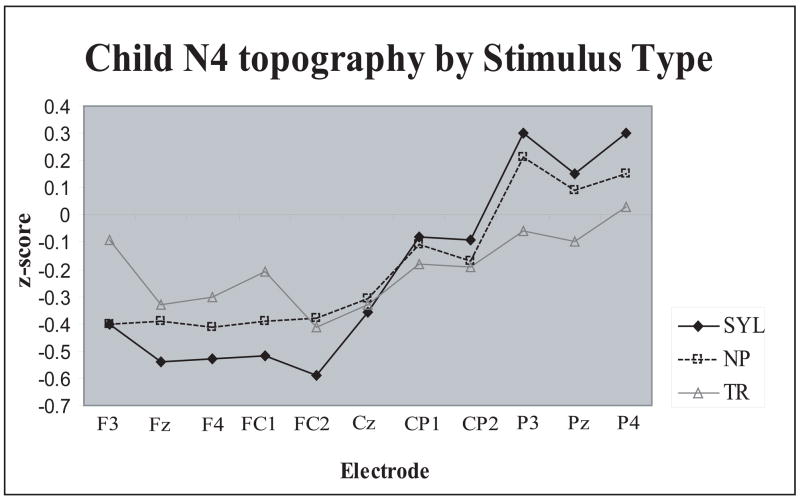

No discernible N1 peak was elicited in the present study in children. Assessed were the P1 peak and the N2/N4 complex (Fig. 10). As in adults, Stimulus Type effects replicated our earlier findings (Čeponienė et al., 2005) demonstrating that non-phonetic stimuli elicit larger P1 peak whereas syllables elicit larger N2/N4 complex (Tables 3 & 6, Fig. 10). The P1 effect was explained by the contribution of P2-like activity indexing sensory detection and arousal, while the N2/N4 effect was explained by the ontogenetically early integrative processing of sound content. A new finding was a highly significant Stimulus Type × Electrode interaction (Fig. 12; F(44,528)=4.44, p<.0001, η 2 =.27) showing that, as in adults, in children syllable N4 was distributed most anteriorly, the transition-N4 was relatively posterior, and the non-phonetic N4 had an intermediate anterior-posterior gradient.

Figure 10.

Stimulus Type effects in children. Child syllable, non-phonetic, and transition grand-average ERPs averaged over all CVT durations and spectral items. Non-phonetic stimuli elicited larger P1/P2 peak whereas syllable stimuli elicited larger N4 peak.

Table 6.

Children’s ERP amplitudes averaged over 23 electrodes.

| | P1 /ba/ | P1 /da/ | P1 /ga/ | N2 /ba/ | N2 /da/ | N2 /ga/ | N4 /ba/ | N4 /da/ | N4 /ga/ |
|---|---|---|---|---|---|---|---|---|---|
| SYL | 6.16 (.37) | 6.11 (.57) | 7.28 (.65) | −6.18 (.85) | −7.51 (.85) | −6.94 (.94) | −5.40 (.80) | −5.72 (.74) | −5.44 (.72) |
| NP | 7.72 (.63) | 7.38 (.70) | 5.95 (.41) | −6.43 (.71) | −6.94 (.89) | −7.79 (.88) | −4.79 (.77) | −4.57 (.75) | −5.36 (.80) |
| TR | 5.63 (.42) | 6.57 (.50) | 6.50 (.49) | −7.07 (.70) | −6.72 (.74) | −6.49 (.65) | −3.36 (.37) | −3.05 (.54) | −3.17 (.46) |

Data are in microvolts, with standard error of the mean in parentheses.

Figure 12.

Anterior-posterior scalp distribution differences of children’s N4 peak as a function of Stimulus Type. Syllable N4 was maximal fronto-centrally, transition N4 centro-parietally, and the non-phonetic N4 showed an intermediate distribution pattern. Z score reflects N4 amplitudes normalized by Stimulus Type.

Table 3 summarizes the main CVT duration effects, which are also reflected in Fig. 11. These CVT duration effects are consistent with earlier findings (Kushnerenko et al., 2001) demonstrating larger negativity in response to longer sounds.

Figure 11.

Spectral Item effects by Stimulus Type and CVT duration in children. Significant Spectral Item effects for peak mean amplitudes are indicated by asterisk (significant scalp distribution differences are not noted).

Children’s Syllable P1 was smaller in amplitude in response to the /da/ than /ga/ stimuli (F(2,26)=4.05, p<.04, η 2 =.24; post hoc: p<.01; Fig. 12, Tables 4 & 6).

For the non-phonetic P1, the relationship was reversed (F(2,26)=7.98, p<.006, η 2 =.38): the np-/ga/ P1 was smaller in amplitude than either the np-/ba/ (p<.009) or np-/da/ P1 (p<.08; Fig. 12). The non-phonetic P1 also showed a Spectral Item × Electrode interaction (F(44,572)=2.43, p<.02, η 2 =.16) due to the np-/ga/ P1 being more weakly expressed over the mid-parietal region than the other two P1 peaks.

The transition P1 also showed a significant Spectral Item effect (F(2,26)=3.77, p<.04, η 2 =.23). For these stimuli, the tr-/ba/ elicited smaller response than either the tr-/da/ (p<.09) or tr-/ga/ (p<.05) (Fig. 12, bottom panel).

N2

The Spectral Item × CVT interaction (F(4,48)=3.14, p<.03, η 2 =.21) showed that the Spectral Item differences were most salient with the stimuli containing 50-ms CVTs. These stimuli elicited smaller N2 amplitudes for the /da/ syllable and its correlates than for either the /ba/ or /ga/ syllables and their correlates (Spectral Item effect: F(2,26)=4.80, p<.02, η 2 =.27). Individually, none of the syllable, non-phonetic, or transition N2 peaks showed significant Spectral Item effects.

N4

There were no significant effects or interactions involving Spectral Item for either the syllable or transition N4.

The non-phonetic N4 showed a significant Spectral Item × CVT interaction, suggesting that Spectral Item differences were CVT-dependent (Fig. 12, middle panel). Separate ANOVAs conducted for stimuli containing 20-, 50-, and 80-ms CVTs revealed a significant Spectral Item effect for the 50-ms CVT stimuli (F(2,26)=3.72, p<.05, η 2 =.22). For these stimuli, the np-/da/ elicited smaller N4 amplitudes than either of the two other stimuli.

Discussion

The broad aim of the present study was to continue inquiry into the functional significance of the child and adult auditory sensory ERPs. Our earlier study showed that in both children and adults (Čeponienė et al., 2005), the N1-P2 peaks are preferentially sensitive to sound salience while the N2-N4 peaks are sensitive to sound content features. The specific goal of the present study was to determine which of the ERP features are sensitive to variations in spectral sound values, specifically those defining consonants in CV syllables. Further, three stimulus types were used (syllables, their non-phonetic correlates, and isolated consonant-vowel transitions) to examine the generalization of the observed effects across speech and non-speech stimuli.

Behavioral performance

Overall, adults were more accurate than children in discriminating the syllables. Although it has been shown that fine frequency discrimination matures until 9–10 years of age (Trehub et al., 1988; Allen and Wightman, 1992), there is little reason to believe that this caused poorer performance in children. The syllable stimuli were chosen to be good representatives of the three phonemes and were identified with high fidelity by adults (96–98% accuracy; Čeponienė et al., 2005) and discriminated well above chance by children and adults (85% and 98% accuracy, respectively). Furthermore, distinguishing between phonemes of one’s native language does not require fine-grained acoustic analysis; this is typically accomplished by utilizing critical, though partial, acoustic cues (Winkler et al., 1999). Therefore, it appears that factors other than perceptual abilities accounted for the accuracy differences between the groups. The likely factors are attentional, motivational, working memory, and/or executive abilities. This is supported by longer reaction times in children as compared with adults.

An interesting finding was shorter reaction times in same-syllable trials. One explanation for this is that stimulus repetition reinforces its neural representation and thus facilitates a behavioral response. The forms of representation in question are the short-term sensory memory trace as well as covert stimulus rehearsal within the phonological loop. On the other hand, once a different stimulus is encountered, additional processes of mismatch detection, evaluation, and feasibly identification of the new stimulus take place, contributing to the delay in reaction times. Based on the significant Pairing × Age Group interaction, it appears that this interference is greater in children than adults.

Sensory “orienting”

This study replicated our previous findings (Čeponienė et al., 2005) regarding Stimulus Type effects on adult auditory sensory ERPs whereby perceptually more salient stimuli, the non-phonetic correlates, elicit larger N1 and P2 peaks and the syllables, most complex though perceptually “soft” sounds (see footnote 3), elicit larger N2 and N4 amplitudes. These findings corroborate the notion that the auditory N1 (Näätänen, 1990; Näätänen and Winkler, 1999) and P2 peaks (Čeponienė et al., 2005) serve sound detection whereas longer-latency peaks (e.g., N2, N4) may reflect integrative processing of sound content. The Spectral Item × Electrode effect of the non-phonetic N1 (Fig. 6) was likely caused by the same phenomenon since it was observed over the vertex, a scalp distribution characteristic of orienting-related, “non-specific” N1 and P2 components. Further, this was the case in both adults and children, as indexed by the behavior of children’s P1 peak that likely includes a correlate of the adult P2 (Čeponienė et al., 2005).

In addition, the three Sound Types yielded significant N1 scalp distribution differences. The non-phonetic N1 was predominant around the vertex, the syllable N1 showed a clear predominance over the centro-parietal areas, and the transition N1 was quite evenly distributed across the anterior-posterior scalp (Fig. 3). One explanation for this finding could be different N1 componentry elicited by these stimulus types. The most attention-getting non-phonetic stimuli may have elicited a larger “non-specific” N1 component, consistent with the vertex-predominant distribution of the non-phonetic N1.

Relevant to the Sound Type-related N1 differences observed here, an earlier MEG study (Diesch and Luce, 1997) reported different N1 source locations for vowels, their isolated formants, and corresponding simple tones. These data suggest that the N1 generators may draw sound detection-facilitating information from several processing levels or sub-streams specialized for different types of acoustic information.

The dual role of the auditory P2

Like the N1, the P2 was also larger in amplitude in response to the non-phonetic sounds than to syllables, which is consistent with earlier reports on the P2 amplitude being larger for more salient sounds (Picton et al., 1974; Čeponienė et al., 2002). However, while the N1 did not show consistent Spectral Item effects, the P2 showed amplitude differences between the three Spectral Items (/ba/, /da/, /ga/), across the three Stimulus Types. Further, this was the case in both adults and children, as indexed by the behavior of children’s P1 peak that likely includes a correlate of the adult P2 (Čeponienė et al., 2005).

Earlier, the P2 was shown to correspond to perception more closely than the N1 by, for example, showing a higher ceiling for intensity saturation and a significant latency-intensity correlation (Crowley and Colrain, 2004). Further, task manipulations have demonstrated larger P2 amplitude during sound discrimination than detection tasks (Novak et al., 1992). In a patient with bilateral damage to auditory cortex, both supra-threshold and below-threshold stimuli elicited the P1-N1 peaks, but only the supra-threshold (perceived) stimuli elicited the P2 peak (Woods et al., 1984). Similarly, perceptually discriminable differences in voice (speech stimuli) and formant (non-speech correlates) onset times elicited significant P2 amplitude variations while acoustically comparable though perceptually not discriminable onset time differences did not (Horev et al., 2007). Finally, robust P2 enhancement was the major change that occurred with perceptual CV syllable discrimination training in young adults (Tremblay et al., 2001; Tremblay and Kraus, 2002). Therefore, the P2 appears to be tightly linked to sound content processing, which is in agreement with its early ontogenetical emergence as well as latency overlap with the discrimination-related ERP component, the MMN.

It has been suggested that one source of the P2 is in extra-lemniscal pathways (Barnet et al., 1975; Ponton et al., 2000). This is supported by its vertex-centered scalp distribution, as well as a maturational trajectory that parallels that of the brainstem potentials (Eggermont, 1988). Contribution of this pathway to P2 is consistent with its reflection of sound detectability and possibly even the conscious perceptual thresholds.

However, data also exist showing that the P2 has temporal-lobe generators within auditory cortices that are tonotopically organized (lemniscal pathway; Rif et al., 1991; Lütkenhöner and Steinsträter, 1998). Lütkenhöner et al. (1998) localized the P2 in primary auditory cortex of Heschl’s gyrus, which is consistent with our observation of spectral sensitivity of the P2 as well as with the findings of Wunderlich et al. (2001, 2006), who showed that the P2 amplitude decreased as a function of tone frequency, and that this variability was present from infancy for the P2 but emerged only in childhood for the N1. In addition, the present findings suggest that the P2 generators might have access to more fine-grained spectral stimulus information than the N1 generators. This level of resolution appears to allow not just stimulus detection but also assignment of relevance (e.g., in selective attention tasks) or identification (e.g., in discrimination tasks). The latter is especially interesting due to the considerable overlap of the latency of the P2 with that of the mismatch negativity (MMN, the so far unsurpassed objective measure of auditory perceptual accuracy; Näätänen and Alho, 1997). While the functions reflected and the mechanisms utilized by the P2 and the MMN are different, the underlying sensory substrates may be shared. Specifically, MMN operates on short-term sensory memories that are not identical to stimulus-evoked transient activation (or synchronization), reflected by transient sensory ERPs (for review, see Näätänen and Winkler, 1999). However, the type of acoustic analysis performed during the P2 range may provide information relevant for constructing and maintaining the memory traces underlying MMN generation. Indeed, MMN evidence exists that certain perceptually relevant sound features, such as those identifying phonemic distinctions, can be extracted during the MMN – P2 latency range, in parallel with and before the fine-grained sensory analysis is complete (Winkler et al., 1999).

Altogether, available results support the dual role of the auditory P2. Specifically, the auditory P2 appears to be concerned with both stimulus detection and possibly some level of identification, in part based on the spectral information afforded by the P2 generators.

Interestingly, the only peak in the children’s ERPs that showed similar Spectral Item effects was their auditory P1. Using Independent Component Analysis (Makeig et al., 1997), earlier we suggested that children’s P1 contains functional correlates of both the adult P1 and P2 peaks (Čeponienė et al., 2005). Consistent with the “detection” role of the adult auditory P2, children’s P1 was larger in amplitude in response to the non-phonetic stimuli than to syllables. On the other hand, consistent with spectral sensitivity of the adult P2, children’s P1 showed Spectral Item-related amplitude variation. Isolated transitions, the stimuli that yielded the most consistent Spectral Item effects, elicited the same P2 amplitude patterns in both children and adults (BA<DA, GA). Therefore, the present findings of similar behaviors between the adult P2 and child P1 peaks support the notion that, at least functionally, children’s P1 is a correlate of the adult P1 and P2, and that both children’s and adult P2 is reflective of spectral auditory processing.

Non-linearity of spectral encoding: simple vs. complex acoustically rich stimuli

Isolated consonant-vowel transitions, the simplest stimuli of the present study, elicited the most consistent Spectral Item-related effects. These effects were significant for the earlier P1, N1, and P2 peaks in the adults and for the P1 peak in children. Assuming that auditory attributes are encoded at all levels of the auditory pathways, possibly at different levels of complexity and abstraction (e.g., direct simple acoustic mapping, direct complex acoustic mapping, relational mapping), it is reasonable to expect that spectral stimulus differences can be detected at several processing stages, indexed by several ERP peaks. However, isolated transition stimuli elicited detectable Spectral Item effects above and beyond the peak ranges of the other two stimulus types. One reason for this might be their “rich simplicity”: although spectrally rich, the transitions had a simple, linear shape, were relatively brief, and carried no phonemic information. Apparently, such a form of information delivery (just the on-off features and the spectra of sweeps) permitted robust frequency mapping at the earlier processing levels (e.g., P1), utilization of this information for sound salience response (the N1), and also maintained the robustness of spectral representation during the P2 range since it was spectrally rich but not obscured by, e.g., a complex envelope. Therefore, these stimuli elicited the cleanest ERP indices of spectral processing. Similar stimuli may be considered for use in populations with suspected or known spectral processing deficits (e.g., children with language impairment).

Late sensory processing: comprehensive integration

In adults, both the N2 and N4 peaks showed amplitude as well as scalp distribution differences related to Stimulus Type, which were opposite to those shown by the N1. Specifically, syllable N2 and N4 showed the most anterior predominance while the non-phonetic stimuli showed the most parietal predominance. In addition, the N2 and N4 responses to syllables were larger in amplitude than those to the non-phonetic sounds. This indicates that Stimulus Type effects were caused by different factors in the N2/N4 than the N1 latency ranges. Two earlier studies (Woods and Elmasian, 1986; Čeponienė et al., 2001) found that the P1 and N1 peaks were larger in response to acoustically complex than simple tones, but the N4 increased specifically in response to vowels. The present study replicated this finding for consonant stimuli. This supports the above notion that structurally complex stimulus features, and/or those favoring pre-established coding rules, are being preferentially processed during 250–400 ms after stimulus.

The robustness of Spectral Item effects evoked by the transitions in the adults diminished by the N2 - N4 range. There were trends but not statistically significant effects. Instead, at those latencies, significant differences were found among the non-phonetic Spectral Items (N2 scalp distribution) and 50-ms CVT syllabic Spectral Items (N4 amplitudes). This might suggest that at these later processing stages, larger-scale acoustic structures, such as complex envelopes, are taken into account and that auditory analysis is exhausted once the temporally local, spectrally distinct features are utilized to produce a larger temporal scale, higher spectral-code level transient sound representations. One putative role of such transient traces might be facilitation of phoneme matching during online auditory processing, especially in poor audibility conditions (Diesch and Luce, 1997; Näätänen et al., 2001; Čeponienė et al., 2005). Further, this level of encoding could be used for identification of novel complex auditory objects such as syllables and words during language acquisition in infancy or in foreign language learning after childhood. A possibly corroborating finding was the differential distribution of Spectral Item activities recorded over the left, but not right, lateral temporal regions (Figs. 8 & 9). This pattern was observed exclusively for the N4 peak, but for both the syllable and non-phonetic stimuli. This suggests differential involvement of the left auditory cortices during the N4 range, supporting a link of late sensory processing with the higher-order, though sub-phonemic, sensory encoding.

An interesting observation was that only syllables, and only those with the 50-ms CVT – the most prototypical versions – evoked a detectable consonant effect on the N4 peak in adults. It has been suggested that due to their brief, complex, and unstructured character, consonants are retained only in coded form in short-term memory (Studdert-Kennedy, 1993) and that categorization of consonants requires attention (Cowan et al., 1990). Therefore, the unattended conditions of the present study make it rather unlikely that phonemic consonant representations were tapped, which is supported by the right-hemisphere predominance found for the N2 and N4 peaks. Nonetheless, it appears that the most prototypical stimuli were able to pre-attentively activate different spectral items in higher-order sensory networks. While this will have to be verified in a follow-up study using both attended and unattended stimuli, it provides additional support for there being coded, yet sub-phonemic, speech sound representations in auditory cortex serving, for example, facilitation of phoneme recognition.

In children, both the Stimulus Type and the consonant effect were exactly the same as in adults for the N4 peak. Specifically, syllables showed the most anterior scalp distribution while the non-phonetics showed the most posterior distribution. Further, syllable /da/ elicited smaller N4 amplitudes than syllables /ga/ or /ba/, and this effect was seen with 50-ms CVT stimuli only. This suggests persisting functional roles of the later auditory sensory peaks from childhood to adulthood even in the wake of robust changes in amplitudes and overall waveform morphology.

Developmental conjectures

The present study provided additional evidence for the notion that children’s P1 contains functional correlates of the adult P1 and P2 peaks. Since these peaks emerge during the first 6 months of life, it appears that it is this early when infants become equipped with both sound detection and spectral processing devices. However, this is not to be taken to assume that these mechanisms are as efficient or precise during infancy as in their mature form. In fact, the P2-based sound detection, although possibly better rooted in stimulus features, may not be as efficient or sensitive for the purposes of mere sound detection and calling for attention as the later-emerging N1 mechanism.

The close similarity between the child and adult N4 Spectral Item effects provides inter-group validation and demonstrates the developmental stability of the observed results. Although the children of the present study were well beyond the major developmental milestones of sensory-motor and language development, their N1-P2 complex was still not defined (e.g., compare Figs. 2 & 10). Compared to this, the stimulus-response relationship of the N4 peak stands out as rather mature. It may appear counterintuitive that the longer-latency peak, feasibly reflecting higher-order processing, shows relative maturity earlier in development than earlier-latency peaks. However, an explanation exists. First, data show that the N4 emerges as early as by 3 months of age (Kushnerenko et al., 2002). If the N4 indeed reflects larger-scale sound organization, its early emergence would be consistent with the evidence showing that infants are capable of processing larger-scale stimulus attributes, such as prosody, words en bloc, and distinct streams of auditory information (Werker and Polka, 1993; Best, 1994; Winkler et al., 2003). If so, it is reasonable to expect that such a developmentally early processor appears quite mature at the age of 7 to 10 years. Nonetheless, even if the present account is correct, the substrates feeding to this generator likely undergo robust maturation from birth to late childhood, yielding emergence of fine grading of spectral information. The effects of such maturation on the N4 functionality are yet to be uncovered.

No robust Stimulus Type or Spectral Item effects were found for the auditory N2 peak in the children of the present study. This is at odds with the earlier results of the 50-ms CVT stimuli showing significant Stimulus Type effects. One possibility is that inclusion of very short, 20-ms CVT stimuli diminished the resolving power of this large peak. Further studies are needed to clarify the functional significance of the N2 in children, including conditions with attended stimuli and those with more easily detectable spectral distinctions, for example, vowels.

Conclusions

Even for complex stimuli presented under unattended conditions, auditory sensory ERPs showed robust indices of differential spectral processing in child and adult groups. The most consistent Spectral Item effects were found for the P2 peak in adults and its correlate in children (P1). Based on its spectral as well as stimulus salience sensitivity, it appears that P2 serves both stimulus detection and identification functions. Further, spectral processing showed Stimulus Type-associated nonlinearity across processing stages, as reflected by the more robust Spectral Item-related ERP differences during earlier peaks (P1, N1) for simpler stimuli (transitions) and more robust Spectral Item-related ERP differences during later peaks (N2, N4) for more complex stimuli (non-phonetics and syllables).

The Stimulus Type effects replicated earlier findings of stimulus salience-linked sensitivity of the N1-P2 complex and content-feature sensitivity of the N2-N4 peaks. Together, the Spectral Item and Stimulus Type effects delineate an orderly processing organization whereby direct mapping of linear acoustic features occurs earlier in processing and, in part, serves sound detection, whereas a more integrative, relational mapping occurs later in processing and, in part, serves sound identification.

Children demonstrated Spectral Item effects rather comparable to those of the adults, indicating relative maturity of spectral sound content processing at the age of 7–10 years.

Acknowledgments

This study was funded by National Institutes of Health Grant NINDS NS22343.

Footnotes

Similar to the visual spectrum of colors, the auditory spectrum refers to acoustic sound frequencies and their combinations, such as formants in speech sounds.

For a more detailed description of stimulus generation, see Ceponiene et al., 2005.

Speech is rendered perceptually “soft” by band-width formants, low-pass envelopes, and low-energy antiformants.

References

- Alku P, Tiitinen H, Näätänen R. A method for generating natural-sounding speech stimuli for cognitive brain research. Clin Neurophysiol. 1999;110:1329–1333. doi: 10.1016/s1388-2457(99)00088-7.

- Allen P, Wightman F. Spectral pattern discrimination by children. J Speech Hear Res. 1992;35:222–233. doi: 10.1044/jshr.3501.222.

- Barnet AB, Ohlrich ES, Weiss IP, Shanks B. Auditory evoked potentials during sleep in normal children from ten days to three years of age. Electroenceph Clin Neurophysiol. 1975;39:29–41. doi: 10.1016/0013-4694(75)90124-8.

- Bates E. On the nature and nurture of language. In: Levi-Montalcini R, Baltimore D, Dulbecco R, Jacob FSE, Bizzi E, Calissano P, Volterra VVE, editors. Frontiere della biologia [Frontiers of biology]. The brain of homo sapiens. Rome: Giovanni Treccani; in press.

- Best CT. The emergence of native-language phonological influences in infants: A perceptual assimilation model. In: Goodman JC, Nusbaum HC, editors. The Development of Speech Perception: The Transition from Speech Sounds to Spoken Words. Cambridge: A Bradford Book, The MIT Press; 1994. pp. 167–224.

- Bruneau N, Gomot M. Auditory evoked potentials (N1 wave) as indices of cortical development. In: Garreau B, editor. Neuroimaging in Child Neuropsychiatric Disorders. Springer Verlag; 1998. pp. 113–124.

- Čeponienė R, Alku P, Westerfield M, Torkki M, Townsend J. Event-related potentials differentiate syllable and non-phonetic correlate processing in children and adults. Psychophysiology. 2005;42:391–406. doi: 10.1111/j.1469-8986.2005.00305.x.

- Čeponienė R, Cheour M, Näätänen R. Interstimulus interval and auditory event-related potentials in children: evidence for multiple generators. Electroenceph Clin Neurophysiol. 1998;108:345–354. doi: 10.1016/s0168-5597(97)00081-6.

- Čeponienė R, Rinne T, Näätänen R. Maturation of cortical sound processing as indexed by event-related potentials. Clin Neurophysiol. 2002;113:870–882. doi: 10.1016/s1388-2457(02)00078-0.

- Čeponienė R, Shestakova A, Balan P, Alku P, Yaguchi K, Näätänen R. Children’s auditory event-related potentials index stimulus complexity and “speechness”. Int J Neurosci. 2001;109:245–260. doi: 10.3109/00207450108986536.

- Čeponienė R, Townsend J, Williams C, Cummings A, Evans M, Wulfeck B. Auditory processing of speech in children with Specific Language Impairment: evidence for spectral processing deficits. Annual Cognitive Neuroscience Society Meeting; San Francisco, CA. 2006, April 8–12.

- Coch D, Sanders LD, Neville HJ. An event-related potential study of selective auditory attention in children and adults. J Cogn Neurosci. 2005b;17:605–22. doi: 10.1162/0898929053467631.

- Coch D, Skendzel W, Neville HJ. Auditory and visual refractory period effects in children and adults: an ERP study. Clin Neurophysiol. 2005a;116:2184–203. doi: 10.1016/j.clinph.2005.06.005.

- Cowan N, Lichty W, Grove TR. Properties of memory for unattended spoken syllables. J Exp Psychol Learn Mem Cogn. 1990;16:258–269. doi: 10.1037//0278-7393.16.2.258.

- Crowley KE, Colrain IM. A review of the evidence for P2 being an independent component process: age, sleep and modality. Clin Neurophysiol. 2004;115:732–44. doi: 10.1016/j.clinph.2003.11.021.

- Dick F, Saygin AP, Moineau S, Aydelott J, Bates E. Language in an embodied brain: the role of animal models. Cortex. 2004;40:226–7. doi: 10.1016/s0010-9452(08)70960-2.

- Diesch E, Eulitz C, Hampson S, Ross B. The neurotopography of vowels as mirrored by evoked magnetic field measurements. Brain Lang. 1996;53:143–168. doi: 10.1006/brln.1996.0042.

- Diesch E, Luce T. Magnetic fields elicited by tones and vowel formants reveal tonotopy and nonlinear summation of cortical activation. Psychophysiology. 1997;34:501–10. doi: 10.1111/j.1469-8986.1997.tb01736.x.

- Eggermont JJ. On the rate of maturation of sensory evoked potentials. Electroenceph Clin Neurophysiol. 1988;70:293–305. doi: 10.1016/0013-4694(88)90048-x.

- Eulitz C, Diesch E, Pantev C, Hampson S, Elbert T. Magnetic and electric brain activity evoked by the processing of tone and vowel stimuli. J Neurosci. 1995;15:2748–55. doi: 10.1523/JNEUROSCI.15-04-02748.1995.

- Gomes H, Molholm S, Christodoulou C, Ritter W, Cowan N. The development of auditory attention in children. Front Biosci. 2000;5:108–120. doi: 10.2741/gomes.

- Horev N, Most T, Pratt H. Categorical Perception of Speech (VOT) and Analogous Non-Speech (FOT) signals: Behavioral and electrophysiological correlates. Ear Hear. 2007;28:111–28. doi: 10.1097/01.aud.0000250021.69163.96.

- Jung TP, Makeig S, Westerfield M, Townsend J, Courchesne E, Sejnowski TJ. Removal of eye activity artifacts from visual event-related potentials in normal and clinical subjects. Clin Neurophysiol. 2000;111:1745–58. doi: 10.1016/s1388-2457(00)00386-2.

- Karhu J, Herrgård E, Pääkkönen A, Luoma L, Airaksinen E, Partanen J. Dual cerebral processing of elementary auditory input in children. NeuroReport. 1997;8:1327–1330. doi: 10.1097/00001756-199704140-00002.

- Korpilahti P. Auditory discrimination and memory functions in SLI children: A comprehensive study with neurophysiological and behavioral methods. Scand J Logoped Phon. 1995;20:131–139.

- Korpilahti P, Lang HA. Auditory ERP components and mismatch negativity in dysphasic children. Electroenceph Clin Neurophysiol. 1994;91:256–264. doi: 10.1016/0013-4694(94)90189-9.

- Kurtzberg D, Hilpert PL, Kreuzer JA, Vaughan HGJ. Differential maturation of cortical auditory evoked potentials to speech sounds in normal fullterm and very low-birthweight infants. Dev Med Child Neurol J. 1984;26:466–475. doi: 10.1111/j.1469-8749.1984.tb04473.x.

- Kurtzberg D, Stone CLJ, Vaughan HGJ. Cortical responses to speech sounds in the infant. In: Cracco R, Bodis-Wollner I, editors. Front Clin Neurosci. New York: Alan R. Press; 1986. pp. 513–520.

- Kushnerenko E, Čeponienė R, Balan P, Fellman V, Huotilainen M, Näätänen R. Maturation of the auditory event-related potentials during the first year of life. NeuroReport. 2002;13:47–51. doi: 10.1097/00001756-200201210-00014.

- Kushnerenko E, Čeponienė R, Fellman V, Huotilainen M, Winkler I. Event-related potential correlates of sound duration: similar pattern from birth to adulthood. NeuroReport. 2001;12:3777–3781. doi: 10.1097/00001756-200112040-00035.

- Lang H, Nyrke T, Ek M, Aaltonen O, Raimo I, Näätänen R. Pitch discrimination performance and auditory event-related potentials. In: Brunia CHM, Gaillard AWK, Kok A, Mulder G, Verbaten MN, editors. Psychophysiological Brain Research. Tilburg: Tilburg University Press; 1990. pp. 294–298.

- Lütkenhöner B, Krumbholz K, Seither-Preisler A. Studies of tonotopy based on wave N100 of the auditory evoked field are problematic. NeuroImage. 2003;19:935–49. doi: 10.1016/s1053-8119(03)00172-1.

- Lütkenhöner B, Steinsträter O. High-precision neuromagnetic study of the functional organization of the human auditory cortex. Audiol Neurootol. 1998;3:191–213. doi: 10.1159/000013790.

- MacWhinney B. The emergence of language. Mahwah, NJ: Lawrence Erlbaum Associates; 1999.

- Makeig S, Jung TP, Bell AJ, Ghahremani D, Sejnowski TJ. Blind separation of auditory event-related brain responses into independent components. Proc Natl Acad Sci U S A. 1997;94:10979–84. doi: 10.1073/pnas.94.20.10979.

- Martin BA, Sigal A, Kurtzberg D, Stapells DR. The effects of decreased audibility produced by high-pass noise masking on cortical event-related potentials to speech sounds /ba/ and /da/. J Acoust Soc Am. 1997;101:1585–99. doi: 10.1121/1.418146.

- McCarthy G, Wood CC. Scalp distributions of event-related potentials: an ambiguity associated with analysis of variance models. Electroenceph Clin Neurophysiol. 1985;62:203–208. doi: 10.1016/0168-5597(85)90015-2.

- Molfese DL. The use of auditory evoked responses recorded from newborn infants to predict later language skills. Birth Defects Orig Artic Ser. 1989;25:47–62.

- Näätänen R. The role of attention in auditory information processing as revealed by event-related potentials and other brain measures of cognitive function. Behav Brain Sci. 1990;13:201–288.

- Näätänen R, Alho K. Mismatch negativity - the measure for central sound representation accuracy. Audiol Neurootol. 1997;2:341–353. doi: 10.1159/000259255.

- Näätänen R, Picton T. The N1 wave of the human electric and magnetic response to sound: a review and an analysis of the component structure. Psychophysiology. 1987;24:375–425. doi: 10.1111/j.1469-8986.1987.tb00311.x.

- Näätänen R, Sams M, Alho K, Paavilainen P, Reinikainen K, Sokolov EN. Frequency and location specificity of the human vertex N1 wave. Electroenceph Clin Neurophysiol. 1988;69:523–531. doi: 10.1016/0013-4694(88)90164-2.

- Näätänen R, Tervaniemi M, Sussman E, Paavilainen P, Winkler I. “Primitive intelligence in the auditory cortex”. Trends Neurosci. 2001;24:283–8. doi: 10.1016/s0166-2236(00)01790-2.

- Näätänen R, Winkler I. The concept of auditory stimulus representation in cognitive neuroscience. Psychol Bull. 1999;125:826–59. doi: 10.1037/0033-2909.125.6.826.

- Neville H, Coffey S, Holcomb P, Tallal P. The neurobiology of sensory and language processing in language-impaired children. J Cogn Neurosci. 1993;5:235–253. doi: 10.1162/jocn.1993.5.2.235.

- Novak G, Ritter W, Vaughan HGJ. Mismatch detection and the latency of temporal judgements. Psychophysiology. 1992;29:398–411. doi: 10.1111/j.1469-8986.1992.tb01713.x.

- Novak GP, Kurtzberg D, Kreuzer JA, Vaughan HGJ. Cortical responses to speech sounds and their formants in normal infants: maturational sequence and spatiotemporal analysis. Electroenceph Clin Neurophysiol. 1989;73:295–305. doi: 10.1016/0013-4694(89)90108-9.

- Pantev C, Bertrand O, Eulitz C, Verkindt C, Hampson S, Schuierer G, Elbert T. Specific tonotopic organizations of different areas of the human auditory cortex revealed by simultaneous magnetic and electric recordings. Electroenceph Clin Neurophysiol. 1995;94:26–40. doi: 10.1016/0013-4694(94)00209-4.

- Pantev C, Hoke M, Lehnertz K, Lütkenhöner B. Neuromagnetic evidence of an ampliotopic organization of the human auditory cortex. Electroenceph Clin Neurophysiol. 1989;72:225–31. doi: 10.1016/0013-4694(89)90247-2.

- Parasuraman R, Richer F, Beatty J. Detection and recognition: concurrent processes in perception. Percept Psychophys. 1982;31:1–12. doi: 10.3758/bf03206196.

- Picton TW, Alain C, Otten L, Ritter W, Achim A. Mismatch negativity: different water in the same river. Audiol Neurootol. 2000;5:111–139. doi: 10.1159/000013875.

- Picton TW, Hillyard SA, Krausz HI, Galambos R. Human auditory evoked potentials. I. Evaluation of components. Electroenceph Clin Neurophysiol. 1974;36:179–190. doi: 10.1016/0013-4694(74)90155-2.

- Pinker S. The language instinct. New York, NY: William Morrow & Co; 1994.

- Ponton C, Eggermont JJ, Khosla D, Kwong B, Don M. Maturation of human central auditory system activity: separating auditory evoked potentials by dipole source modeling. Clin Neurophysiol. 2002;113:407–20. doi: 10.1016/s1388-2457(01)00733-7.

- Ponton CW, Eggermont JJ, Kwong B, Don M. Maturation of human central auditory system activity: Evidence from multi-channel evoked potentials. Clin Neurophysiol. 2000;111:220–236. doi: 10.1016/s1388-2457(99)00236-9.

- Rif J, Hari R, Hämäläinen MS, Sams M. Auditory attention affects two different areas in the human supratemporal cortex. Electroenceph Clin Neurophysiol. 1991;79:464–472. doi: 10.1016/0013-4694(91)90166-2.

- Studdert-Kennedy M. Discovering phonetic function. J Phon. 1993;21:147–155.

- Szymanski MD, Rowley HA, Roberts TP. A hemispherically asymmetrical MEG response to vowels. NeuroReport. 1999;10:2481–6. doi: 10.1097/00001756-199908200-00009.

- Tiitinen H, May P, Reinikainen K, Näätänen R. Attentive novelty detection in humans is governed by pre-attentive sensory memory. Nature. 1994;372:90–92. doi: 10.1038/372090a0.