Abstract

During goal-directed movements, primates are able to rapidly and accurately control an online trajectory despite substantial delay times incurred in the sensorimotor control loop. To address the problem of large delays, it has been proposed that the brain uses an internal forward model of the arm to estimate current and upcoming states of a movement, which are more useful for rapid online control. To study online control mechanisms in the posterior parietal cortex (PPC), we recorded from single neurons while monkeys performed a joystick task. Neurons encoded the static target direction and the dynamic movement angle of the cursor. The dynamic encoding properties of many movement angle neurons reflected a forward estimate of the state of the cursor that is neither directly available from passive sensory feedback nor compatible with outgoing motor commands and is consistent with PPC serving as a forward model for online sensorimotor control. In addition, we found that the space–time tuning functions of these neurons were largely separable in the angle–time plane, suggesting that they mostly encode straight and approximately instantaneous trajectories.

Keywords: internal forward model, space–time tuning, trajectory, sensorimotor control, neurophysiology

The posterior parietal cortex (PPC) lies at the functional interface between sensory and motor representations in the primate brain. Known sensory inputs to PPC arrive from visual and proprioceptive pathways (Fig. 1A). Previous work has suggested how these sensory inputs could be integrated to compute a goal vector in eye-centered coordinates for an impending reach (1–3). In addition, psychophysical and clinical studies in humans have clearly established a role for PPC in rapid online updating and correction of continuous movement (4–6). For a brain area to play an effective role in rapid online control, it would have to represent an estimate of the state of the movement (position, direction, speed, etc.) that is derived from mechanisms other than sensory feedback alone, because sensory feedback is generally considered too slow to support rapid control in many situations (7, 8). Another possible input to PPC is an efference copy signal that relays replicas of recent movement commands from downstream motor areas back to PPC with little or no delay (9).

Fig. 1.

Model and experimental design. (A) Diagram of sensorimotor integration for online control in PPC. Inputs to PPC consist of visual and proprioceptive sensory signals and, potentially, an efference copy signal. Plausible PPC outputs to be tested are (i) the static target direction (goal angle) and (ii) the dynamic cursor state (movement angle). (B) Diagram of actual trajectory showing the goal angle and movement angle and their respective origins of reference. The filled green and red circles represent the target and fixation point, respectively. (C) Example trajectories for center-out task. The dashed green circle is the starting location of the target and is not visible once the target has been jumped to the periphery. Dots represent cursor position sampled at 15-ms intervals along the trajectory (black, monkey 1; magenta, monkey 2). (D) Example trajectories for obstacle task. Targets, fixation points, and cursor representations are identical to center-out task. Blue filled circle represents the obstacle.

Growing evidence supports the idea that the brain overcomes long sensory delay times by using an internal forward model that combines efference copy signals with a model of the system dynamics to generate estimates of upcoming states of the effector (otherwise not inferable from late-arriving sensory feedback), which are more suitable for the rapid control of movement (10, 11). Because the output of a forward model reflects a best guess of the next state of the arm in lieu of delayed sensory feedback, it is also likely that the online controller continually integrates later-arriving sensory observations to refine the forward-model estimate over time (12).

In addition, the output of a forward model can be used to create an internal estimate of the sensory consequences of a movement in a timely manner (i.e., the expected visual/proprioceptive state of the effector in the environment), providing a mechanism for transforming between intrinsic motor representations and task-based sensory representations (8, 10). In particular, a forward model may be useful for distinguishing the motion of an effector from motion of the external environment. For example, when we make eye movements, it is widely believed that the brain makes use of an internal reference signal to avoid misinterpreting shifts of the visual scene on our retina as motion in the outside world (13). von Holst and Mittelstaedt (14) originally proposed a reafference-cancelling model that performs a subtractive comparison of efference copy and sensory signals to remove the retinal shift from our perception. However, a more recent study has provided evidence that this comparative mechanism actually uses a forward model of the expected sensory outcome of an eye movement rather than raw, unmodified efference copy as originally envisaged by von Holst and Mittelstaedt (15). Interestingly, additional clinical evidence presented by Haarmeier and colleagues (16) suggested that parieto-occipital regions may be involved in performing the comparison between self-induced and external sensory motion during smooth-pursuit eye movements.

Neurophysiological evidence that identifies the neural substrate of the internal forward model for sensorimotor control of limb movement has yet to be reported. PPC, specifically the parietal reach region (PRR) and area 5, is a plausible site for the forward model, given its many feedback connections from frontal areas and its substantial sensory input from both visual and somatosensory domains (17, 18). Therefore, we investigated the neural representation of online directional control signals in PPC by analyzing the correlations of single neuron activity with the static goal angle (fixed angle from the starting cursor position to the target) and the dynamic movement angle of the cursor (angle of heading) during a joystick task (Fig. 1B). We monitored single-unit neuronal activity in PPC while monkeys performed center-out and obstacle-avoidance tasks with central eye fixation (Fig. 1 C and D). Importantly, monkeys were required to fixate centrally during the entire movement so as to maintain a constant visual reference frame and to rule out any effects due to eye movements. This control was instituted because earlier studies have shown that PRR encodes visual targets for reaching in eye coordinates and area 5 in both eye and hand coordinates (1, 2). We found strong evidence that both of these angles were encoded in PPC: a representation of the static target direction and a dynamic estimate of the state of the cursor. The temporal encoding properties of dynamically tuned neurons provide the first evidence that PRR and area 5 encode the current state of the cursor, consistent with the operation of a forward model for online sensorimotor control. Furthermore, these state-estimating neurons appear to encode rather simple trajectories: instantaneous and mostly straight paths in space.

Results

Space–Time Tuning.

We characterized the encoding properties of each PPC neuron during the movement period by constructing a space–time tuning function (STTF) (Fig. 2 B and C) (19). Each horizontal slice in the STTF plots a neuron's instantaneous firing rate as a function of angle (goal or movement) measured at a particular lag time (e.g., Fig. 2A, 0-ms lag time slice). Importantly, lag time, τ, denotes the relative time difference between the instantaneous firing rate and the time that a particular behavioral angle occurred and should not be confused with the absolute elapsed time. Therefore, the STTF of a neuron can be thought of as a description of the average temporal dynamics of the angle that can be recovered from the firing rate, for example, by downstream neurons faced with the task of decoding the goal or movement angle at different relative times in the trajectory. We also calculated the mutual information between firing rate and angle for each lag time in the STTF to generate a temporal encoding function (TEF). Because mutual information is a nonparametric measure of statistical dependency between two random variables, this measure allowed us to more directly quantify a neuron's encoding strength. The TEF of a neuron plots the amount of information that could be recovered from the instantaneous firing rate about the angle at different lag times (i.e., from past (τ < 0) to future (τ > 0) angles). The lag time that contained the maximal mutual information was defined as the optimal lag time (OLT), denoting the relative time at which a neuron's firing rate contained the most information about the angle.
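The STTF and TEF computations described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the analysis code used here: it assumes the firing rate and movement angle have already been resampled onto a common time grid, uses a plug-in (histogram) mutual-information estimator with a hypothetical choice of 8 bins, and pairs the rate at time t with the angle at time t + τ, so that positive lags correspond to future angles. The function names and the simulated neuron are hypothetical.

```python
import numpy as np


def mutual_information(x, y, bins=8):
    """Plug-in (histogram) estimate of I(X; Y) in bits."""
    joint, _, _ = np.histogram2d(x, y, bins=bins)
    pxy = joint / joint.sum()
    px = pxy.sum(axis=1, keepdims=True)  # marginal of x, shape (bins, 1)
    py = pxy.sum(axis=0, keepdims=True)  # marginal of y, shape (1, bins)
    nz = pxy > 0                         # skip empty cells (0 log 0 = 0)
    return float(np.sum(pxy[nz] * np.log2(pxy[nz] / (px @ py)[nz])))


def temporal_encoding_function(rate, angle, max_lag, bins=8):
    """MI between rate(t) and angle(t + lag) for lag in [-max_lag, max_lag] samples."""
    lags = np.arange(-max_lag, max_lag + 1)
    t = np.arange(max_lag, len(rate) - max_lag)  # same rate samples at every lag
    tef = np.array([mutual_information(rate[t], angle[t + k], bins) for k in lags])
    return lags, tef


def space_time_tuning(rate, angle, max_lag, n_angle_bins=8):
    """Mean rate(t) per angle bin of angle(t + lag); rows = lags, columns = angle bins."""
    edges = np.linspace(-np.pi, np.pi, n_angle_bins + 1)
    t = np.arange(max_lag, len(rate) - max_lag)
    sttf = np.full((2 * max_lag + 1, n_angle_bins), np.nan)
    for row, k in enumerate(range(-max_lag, max_lag + 1)):
        idx = np.clip(np.digitize(angle[t + k], edges) - 1, 0, n_angle_bins - 1)
        for b in range(n_angle_bins):
            in_bin = idx == b
            if in_bin.any():
                sttf[row, b] = rate[t][in_bin].mean()
    return sttf


# Hypothetical simulated neuron: cosine-tuned to the angle 3 samples in the future.
rng = np.random.default_rng(0)
n = 20000
angle = np.angle(np.exp(1j * np.cumsum(rng.normal(0.0, 0.3, n))))  # wrapped random walk
rate = 10 + 8 * np.cos(np.roll(angle, -3)) + rng.normal(0.0, 0.5, n)

lags, tef = temporal_encoding_function(rate, angle, max_lag=10)
olt = lags[np.argmax(tef)]  # optimal lag time, in samples
sttf = space_time_tuning(rate, angle, max_lag=10)
print(olt, sttf.shape)
```

Because the same window of rate samples is reused at every lag, differences across the TEF reflect the lag alone rather than changes in sample count, loosely mirroring the sampling scheme of Fig. 2B.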

Fig. 2.

Representative neuron and STTF analysis. (A) Movement angle tuning curve, plotting firing rate as a function of movement angle measured at zero lag time. The tuning curve was well fit by a cosine model (R² = 0.92). (B) Diagram describing space–time tuning analysis. Neural activity was sampled from the middle of the movement period, and movement angle was sampled across the entire movement period, from movement onset to the time the cursor entered the target zone. This sampling scheme allowed each firing-rate sample to be paired with angle samples at all possible lag times considered. (C) Movement angle STTF. A contour plot shows the average firing rate of a cell that occurred for different movement angles measured over a range of lag times (−120 ms ≤ τ ≤ 120 ms) relative to the firing rate. (D) Movement angle TEF and corresponding goal-angle TEF, where mutual information between firing rate and each angle is plotted as a function of lag time. The firing rate contained the most information about the movement angle at an optimal lag time of 0 ms. All error bars denote 95% confidence intervals. Because the target was stationary during each trial (i.e., goal angle did not change during a trajectory), the goal-angle information was approximately constant across lag time. The dashed lines denote surrogate TEFs, for both movement (red-dashed) and goal (green-dashed) angles, that were derived from surrogate spike trains and actual angles. Note that there is no temporal tuning structure in the surrogate movement-angle TEF.

Fig. 2C shows a movement angle STTF for a single neuron. This neuron contained significantly more information about the movement angle than the goal angle at its OLT of 0 ms (Fig. 2D). However, because it is not possible to classify cells as encoding purely goal angle or purely movement angle (because of implicit partial correlation between these two angles), we instead determined whether tuning for movement angle was significant, independent of tuning for goal angle, and vice versa [supporting information (SI) Text]. If so, we included that cell in the movement-angle population. Similarly, if the cell contained significant information about the goal angle, independent of the movement angle, we included it in the goal-angle population.

During the center-out task, we recorded from 652 neurons from two monkeys. Using the above-mentioned criteria, we found that 390 neurons were significantly tuned for either the movement angle or goal angle or both. Of these 390 neurons, 220 (56%) significantly encoded the movement angle and 292 of 390 (75%) significantly encoded the goal angle. During the obstacle task, we recorded from 221 neurons from monkey 1, and 212 of these were significantly tuned for either the movement angle or goal angle. One hundred sixty-eight of 212 (79%) neurons significantly encoded the movement angle and 197 of 212 (93%) significantly encoded the goal angle. Because our analysis relies on the neural tuning properties being stationary in time, the above population counts do not include any cells that exhibited nonstationarity (SI Text).

Interestingly, we found an anatomical correlate for the representation of goal angle and movement angle in the medial intraparietal area: The mutual information for goal angle tended to increase gradually with the depth of the recording electrode, whereas information for movement angle (peak information, measured at OLT) decreased with depth. A least-squares linear regression was performed to quantify the relationship between encoded information and depth, and 100(1 − α)% confidence intervals were obtained for the slope. The average movement-angle information decreased by ≈30% over a 10-mm span (α = 0.038). The average goal-angle information increased with depth in the sulcus, by ≈60%, over 10 mm (α = 0.002). A stronger encoding of target-related signals deeper in the intraparietal sulcus (IPS) and, conversely, a favored representation of arm movement-related activity in surface regions of the IPS is consistent with previous PPC studies of reach planning, in which eye-centered target signals were commonly found in deeper structures such as PRR, and more hand-related activity was reported for surface area 5 neurons (2).

Static Encoding of Goal Angle.

Neurons that were significantly tuned for the goal angle persistently encoded information about the static direction to the target (measured from the starting cursor position, which is also the fixation point), independent of the changing state of the cursor. These cells were consistent with previous descriptions of target-sensitive tuning in area 5 (20). This target representation is most likely not due simply to a cue response, because the neural activity we analyzed typically occurred >220 ms after cue onset. Therefore, the intended goal of the trajectory is maintained in the PPC population during control of the movement. Knowledge of the target direction during the movement could be used downstream, for example, by motor cortices, to adjust upcoming motor commands to more accurately constrain the trajectory toward the target. Similarly, a forward model that estimates current and future states of the cursor could also exploit this online target information to generate more accurate estimates of the state of the cursor.

Temporal Encoding of Movement Angle.

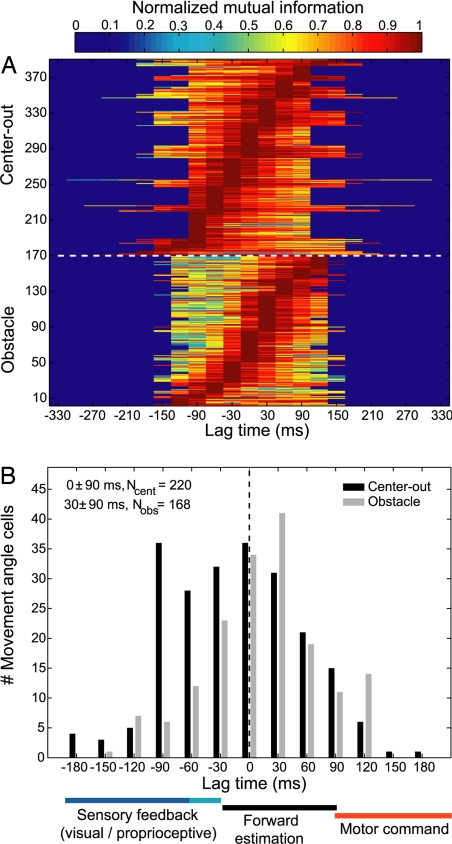

PPC neurons tuned for the movement angle encode dynamic information about the changing state of the cursor. Fig. 3A shows TEFs for the entire movement-angle population, normalized on a per-cell basis by each cell's maximal mutual information. TEFs were typically single-peaked at each cell's OLT. The histogram in Fig. 3B summarizes the distribution of OLTs for the movement-angle population, which was centered at 0 ± 90 ms and 30 ± 90 ms, for the center-out and obstacle tasks, respectively [median ± interquartile range (IQR)]. Both of these plots show that movement-angle neurons contained a temporal distribution of information about the state of the ongoing movement; some neurons best represented states in the near future (positive lag time), some best represented states in the recent past (negative lag time), and many peaked around the current state (zero lag time). Passive sensory feedback would require at least 30–90 ms (proprioceptive to visual) to reach PPC, which is consistent with some of the negative OLTs (≤−30 ms) observed here (21–24). Conversely, if PPC neurons were encoding an outgoing motor command, subsequent motor processing and execution of the movement would require at least 90 ms to produce the corresponding cursor motion, resulting in positive OLTs >90 ms (21). For instance, similar analyses for velocity have been performed in the primary motor cortex and report average OLTs of ≈90–100 ms (19, 20). Therefore, it is unlikely that PPC is driving motor cortex with feedforward commands, because PPC would then be expected to lead the movement state by more than motor cortex does, on average (i.e., OLT >90 ms). Previous studies have reported that velocity information is present in area 5 and have suggested that those neurons best reflect noncausal, sensory information (20, 25). We performed an additional temporal encoding analysis for velocity and obtained very similar results to those reported here for movement angle (SI Text).
Neither passive sensory feedback nor efferent motor explanations best account for many of the OLTs observed in our data. In contrast, the most reasonable description of neurons whose optimal lag times lie between −30 and 90 ms is that they encode a forward estimate, which is used to monitor the current and upcoming state of the movement angle, before the arrival of delayed sensory feedback. We suggest that these forward-state estimates most likely reflect the operation of a forward model, which relies on efference copy and a model of the dynamics of the cursor to mimic the causal process that governs how the cursor transitions from one state to the next.
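The lag-time argument above amounts to partitioning OLTs into three ranges. The sketch below makes that partition explicit and reports the median and IQR used to summarize Fig. 3B. The boundaries come from the text; assigning the boundary values themselves (−30 ms to sensory, 90 ms to motor) is an assumption of this sketch, and the OLT values are hypothetical.

```python
import statistics

# Lag-time boundaries (ms) from the text; treating the boundary values as
# inclusive on the sensory and motor sides is an assumption.
SENSORY_MAX_MS = -30
MOTOR_MIN_MS = 90


def classify_olt(olt_ms):
    """Label an optimal lag time as 'sensory', 'forward', or 'motor'."""
    if olt_ms <= SENSORY_MAX_MS:
        return "sensory"  # lags the cursor by at least the sensory delay
    if olt_ms >= MOTOR_MIN_MS:
        return "motor"    # leads the cursor by at least the motor delay
    return "forward"      # neither delayed feedback nor an outgoing command


def summarize(olts_ms):
    """Category counts plus median and interquartile range of the OLTs."""
    counts = {"sensory": 0, "forward": 0, "motor": 0}
    for olt in olts_ms:
        counts[classify_olt(olt)] += 1
    q1, _, q3 = statistics.quantiles(olts_ms, n=4)
    return counts, statistics.median(olts_ms), q3 - q1


# Hypothetical OLTs (ms) for a handful of cells, for illustration only.
counts, median, iqr = summarize([-60, -30, 0, 0, 30, 60, 90, 120])
print(counts, median, iqr)
```

`statistics.quantiles` defaults to its "exclusive" interpolation method; other quartile conventions give slightly different IQRs for small samples.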

Fig. 3.

Population temporal encoding results. (A) Population TEFs plotted for all movement-angle neurons showing cell-normalized mutual information as a function of lag time. TEFs are sorted from lowest to highest OLT. The population encoded a distribution of temporal information, including past, present, and future states of the movement angle. Note that some neurons' TEFs had more data than others because one monkey made slightly faster movements than the other. (B) Histogram summarizing the OLTs for movement-angle neurons for both center-out and obstacle tasks. Many of these neurons' OLTs were consistent with a forward estimate of the state of the movement angle, which neither directly reflected delayed sensory feedback to PPC nor was compatible with outgoing motor commands from PPC. Color-coded horizontal bars beneath the abscissa denote the approximate lag time ranges for sensory (blue), forward estimate (black), and motor (red) representations of the state of the movement angle.

We also observed that the peak information (mutual information at the OLT) encoded by those neurons that were clearly forward-estimating (0 ≤ OLT ≤ 60 ms) was significantly larger than the peak information encoded by the remaining population of movement-angle neurons (OLT ≤ −30 ms or OLT ≥ 90 ms) [P < 0.001, Wilcoxon rank sum test (SI Text)]. Fig. S1 A and B plots the peak mutual information that each cell encoded for movement angle at its OLT. This result shows that, in addition to PPC having a central tendency to encode the current state of the movement angle, these forward-estimating neurons also, on average, encode more information than neurons at other OLTs, further supporting the idea that the population best represents forward estimates of the state of the cursor. In addition, we observed that the median OLT for the obstacle task distribution was significantly larger than the median OLT for the center-out task distribution, shifting forward from 0 to 30 ms (P < 10⁻⁴, Wilcoxon rank sum test). This result suggests that, during situations in which a more demanding “control load” is placed on the sensorimotor control system (e.g., the obstacle task), PPC responds by encoding a more forward estimate of the state of the cursor, presumably relying on a more anticipatory estimate for online control.
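Both comparisons above use the Wilcoxon rank sum test. The following standard-library sketch implements the two-sided test with the large-sample normal approximation and no tie correction of the variance; whether the SI analysis used an exact, tie-corrected, or approximate version is not stated here, so treat this as an illustration only. The OLT samples are hypothetical.

```python
import math


def average_ranks(values):
    """1-based ranks, with tied values sharing the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1  # mean of ranks i+1 .. j+1
        i = j + 1
    return ranks


def rank_sum_test(x, y):
    """Two-sided Wilcoxon rank-sum test, normal approximation (no tie correction)."""
    n1, n2 = len(x), len(y)
    ranks = average_ranks(list(x) + list(y))
    w = sum(ranks[:n1])  # rank sum of the first sample
    mean_w = n1 * (n1 + n2 + 1) / 2
    sd_w = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - mean_w) / sd_w
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p


# Hypothetical OLTs (ms) for two small groups of cells, for illustration only.
center_out = [-30, 0, 0, 0, 30, 30, 60]
obstacle = [0, 30, 30, 60, 60, 90, 90]
print(rank_sum_test(obstacle, center_out))
```

A positive z indicates that the first sample tends to rank higher, which is the direction of the obstacle-versus-center-out OLT shift described above.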

Dynamic Tuning and Separability of Movement-Angle STTF.

We further analyzed the spatiotemporal-encoding properties of movement-angle neurons by measuring changes in the preferred direction of a neuron, θpd, over a range of lag times. θpd is the movement angle at which a neuron fired maximally for a particular lag time. We reasoned that if θpd did not vary significantly as a function of lag time compared with changes that occurred in the movement angle itself, then that neuron encoded a mostly straight trajectory. Fig. 4A shows an example of a neuron recorded during the obstacle task for which θpd changed smoothly in time. Specifically, θpd changed by 0.87 radians from −120 ms to 0 ms lag time and by an additional 0.61 radians from 0 ms to 120 ms lag time, implying that this neuron, on average, encoded a slightly nonlinear trajectory. Across the population, most neurons' STTFs exhibited small changes in θpd as a function of lag time. We quantified the tendency for θpd to vary across the movement-angle population by computing, for each lag time, the circular standard deviation of the distribution of all neurons' angle changes (26). We found that the standard deviation of θpd changes increased with time away from the OLT and rose to a maximum of 0.36 ± 0.03 and 0.72 ± 0.03 radians over 120 ms for the center-out and obstacle tasks, respectively (Fig. 4B). These deviations, although significantly greater than 0, were significantly smaller than deviations measured in the movement angle itself, which were 0.74 ± 0.03 and 1.91 ± 0.06 radians over 120 ms for the center-out and obstacle tasks, respectively (Fig. 4B). This result shows that the PPC population encoded significantly less change in movement direction than was observed in the actual trajectories themselves (less than half). We also calculated the average curvature of the monkeys' trajectories and the curvature of simulated trajectories derived from a neuron's STTF, which were considered to be the “preferred trajectory” of a neuron.
Consistent with the preferred direction results, the average curvature of preferred trajectories (0.06 ± 0.01 and 0.15 ± 0.04, for center-out and obstacle tasks, respectively) was significantly less than the average curvature of the actual trajectories (0.15 ± 0.04 and 0.26 ± 0.02, for center-out and obstacle tasks, respectively). This result further substantiates the claim that movement-related neurons encoded mostly instantaneous straight-line trajectories, which contained less curvature than was present in the actual executed movements. For additional example movement-angle STTFs and TEFs containing a variety of tuning characteristics and OLTs, see Fig. S2.
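The two quantities used in this section can be sketched as follows, with assumptions stated plainly. The circular standard deviation is taken to be the usual definition sqrt(−2 ln R̄), where R̄ is the mean resultant length; the curvature formula |x′y″ − y′x″| / (x′² + y′²)^(3/2) is a standard choice for a sampled planar path, but the exact curvature measure and the construction of "preferred trajectories" from an STTF are not specified here, so both functions are hypothetical illustrations rather than the method used.

```python
import numpy as np


def circular_std(angles):
    """Circular standard deviation sqrt(-2 ln R), R = mean resultant length."""
    r = np.abs(np.mean(np.exp(1j * np.asarray(angles))))
    r = min(r, 1.0)  # guard against rounding slightly above 1
    return float(np.sqrt(-2.0 * np.log(r)))


def curvature(x, y):
    """Unsigned curvature |x'y'' - y'x''| / (x'^2 + y'^2)^(3/2) of a sampled path."""
    dx, dy = np.gradient(x), np.gradient(y)
    ddx, ddy = np.gradient(dx), np.gradient(dy)
    return np.abs(dx * ddy - dy * ddx) / (dx**2 + dy**2) ** 1.5


# Hypothetical per-neuron changes in preferred direction (radians) at one lag;
# for small spreads the circular SD is close to the ordinary SD.
print(circular_std([-0.1, 0.1]))

t = np.linspace(0.0, np.pi / 2, 200)
arc = curvature(2.0 * np.cos(t), 2.0 * np.sin(t))  # circle of radius 2
line = curvature(t, 2.0 * t)                       # straight line
print(arc[2:-2].mean(), line.max())
```

Curvature is invariant to how the path is parameterized, so derivatives taken with respect to sample index are sufficient; the two endpoints on each side use one-sided differences and are less accurate.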

Fig. 4.

Curvature and separability of STTFs. (A) Example STTF containing slight curvature. The θpd of this cell (dashed line) changed smoothly as a function of lag time. These small changes in θpd over time do not, however, imply a nonseparable STTF; the percentage of fractional energy (FE) accounted for by the first singular vectors for this cell was 89%. (B) Standard deviation of the population's distribution of θpd changes (σdθ), plotted as a function of time relative to the OLT. For both center-out and obstacle tasks, the population σdθ (neural, solid lines) was less than the σdθ for the movement angle (behavior, dashed lines) over the same time range. Data points represent means, and error bars denote 95% confidence intervals derived from bootstrapped distributions of σdθ. (C) Population summary of FE accounted for by each singular vector (dots denote median FE, and error bars depict interquartile range). The majority of energy in movement-angle STTFs was captured by the first singular vectors for both center-out and obstacle tasks. (D) Population histogram showing distribution of FE of first singular value for all movement-angle cells. Unfilled (white) regions of bars denote the fraction of cells that were not significantly separable. Overall, movement-angle STTFs were largely separable in both tasks.

We performed an additional separability analysis to further characterize the relationship between angle and lag time encoded by a neuron's STTF. A perfectly separable STTF indicates that lag time and angle are encoded independently of one another. We determined that the population of movement-angle neurons was largely separable in the angle–time plane by using singular value decomposition (SVD) (27, 28). We calculated the fractional energy contained in the singular values for each cell's movement-angle STTF. The first singular value contained 92.0 ± 14.7% and 78.9 ± 25.8% of the energy (median ± IQR) for the center-out and obstacle tasks, respectively (Fig. 4C). Two hundred nine of 220 (95%) and 130 of 168 (77%) movement-angle STTFs were significantly separable when compared with their corresponding surrogate STTFs for the center-out and obstacle tasks, respectively. Fig. 4D shows the distribution of fractional energies of the first singular value for both tasks. These SVD results show that movement angle and time were primarily encoded independently by the PPC population and suggest that they could be combined in a multiplicative fashion to create the observed STTFs.
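The fractional-energy computation can be sketched with NumPy's SVD. This assumes "energy" means the squared singular values, a common convention in separability analyses of space–time receptive fields; the surrogate-based significance test is not reproduced, and the example STTF is hypothetical.

```python
import numpy as np


def fractional_energy(sttf):
    """Fraction of total energy (sum of squared singular values) per singular value."""
    s = np.linalg.svd(np.asarray(sttf, dtype=float), compute_uv=False)
    energy = s**2
    return energy / energy.sum()


def rank1_approximation(sttf):
    """Best separable (outer-product) approximation: sigma_1 * u_1 v_1^T."""
    u, s, vt = np.linalg.svd(np.asarray(sttf, dtype=float))
    return s[0] * np.outer(u[:, 0], vt[0])


# Hypothetical separable STTF: a temporal profile times an angular tuning curve.
lags = np.linspace(-120, 120, 9)                        # ms
angles = np.linspace(-np.pi, np.pi, 8, endpoint=False)  # rad
temporal = np.exp(-(lags / 80.0) ** 2)
tuning = 10 + 8 * np.cos(angles)
separable = np.outer(temporal, tuning)
print(fractional_energy(separable)[0])  # 1.0 up to rounding
```

A perfectly multiplicative STTF has rank 1, so all of its energy sits in the first singular value; curvature in θpd across lags spreads energy into later singular values.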

Together, the above analyses suggest that dynamic sensorimotor control mechanisms in PPC encode mostly straight trajectories, with a less substantial component of neurons' firing rates arising because of nonlinear encoding mechanisms that may reflect the slight curvature we observed in the STTFs. This interpretation is consistent with PPC neurons encoding a state estimate of the movement angle, such that the majority of information is encoded at a cell's OLT, with decreasing information encoded away from the OLT.

Discussion

Our data suggest that neurons in PPC are involved not only in forming plans for movement (3, 29) but also in monitoring the goal and the dynamic state of the trajectory during movement execution. This monitoring is likely important for, among other purposes, continuous control and error correction. Rapid online control of movement cannot rely completely on slow sensory feedback but instead must make continuous adjustments to motor commands based on a best estimate of the current and future states of the effector (7, 8). The temporal encoding properties of many movement-angle neurons suggest that PPC computes an estimate of the state of the cursor forward in time, consistent with the operation of a theoretical forward model. Furthermore, the distribution of OLTs we report in PPC spans a continuum of dynamic state estimates, bridging purely sensory with purely motor representations of the state of a continuous movement while having a central tendency to encode a forward estimate of the current state of the cursor. We suggest that PPC movement-angle neurons may provide a sensorimotor linkage necessary for translating recent motor commands into an estimate of the current state of the cursor that is useful for rapid control of the limb and that can be subsequently updated by delayed sensory information fed into PPC (12, 32). It should be noted that the forward estimate measured by our analysis corresponds to the expected movement state of an external object (i.e., the sensory outcome of the cursor motion on the computer screen). However, for our joystick task, the movement of the cursor is strongly correlated with the movement of the hand, and therefore it is also possible that these state estimates might reflect an intrinsic representation of the hand itself.
Further experiments should be carried out to determine to what extent the forward model in PPC encodes a motor, sensory (visual and/or proprioceptive) or intermediate representation of the expected state of a movement.

A forward model must rely on both efference copy and a model of the dynamics of the cursor/hand to estimate the next state of a movement from the previous state. Efference copy signals could be central in origin (fed back from motor and premotor cortices) or may be signals of noncortical origin that lead the current state of the movement. Previous psychophysical, clinical, and theoretical studies have pointed to both the parietal lobe and the cerebellum as candidate neural substrates for a forward model (30–33). Because both areas are reciprocally connected [cerebellum projects to parietal cortex via the thalamus (34); parietal cortex projects to cerebellum via the pons (35)], it is quite possible that the two areas might comprise a “functional loop” responsible for monitoring and updating the internal state of the limb for online control (31). The extent to which forward model control is distributed across multiple brain areas, and the distinguishing functional roles of these areas, are important directions for further investigation.

The finding that movement-angle neurons are largely separable in the angle–time plane implies that online directional tuning is mostly stable over time during the movement. Although PPC neurons do encode some curvature during our tasks, both the average change in θpd (approximately π/6 radians in 120 ms) and the amount of curvature encoded by PPC neurons were not large and, moreover, were both significantly smaller than their corresponding values measured for the movement itself over the same time range. Therefore, these preferred trajectories are not complex functions of time but, for the most part, provide a simple dynamic encoding scheme: state estimation of movement direction at a particular OLT. This explanation is conceptually similar to the claim that M1 neurons encode an instantaneous estimate of movement direction (or velocity) at a particular lag time (36, 37). Alternatively, Hatsopoulos and colleagues (38) have recently suggested that neurons in M1 encode more complex “pathlets,” composed of a broad range of temporally extensive trajectory shapes. This complex spatiotemporal-encoding scheme may, in part, reflect M1's involvement in the execution of actions in coordinate frames appropriate for musculoskeletal control, although a single coordinate frame for M1 has not been identified (39). In contrast, we suggest that the encoding of space and time that we observe in PPC may best reflect a visuomotor representation of the trajectory, which seems reasonable given the strong sensory input to PPC and its substantial reciprocal connections with downstream motor areas (17, 18).

A representation of the expected state of the cursor may also be useful for reconciling whether the outcome of a movement in space is caused by self-induced or external sensory motion. PPC would be a reasonable brain area to perform such a reafference-cancelling computation during continuous sensorimotor control, given both the presence of a forward model estimate of the sensory consequences of movement and the convergence of substantial sensory inputs. Unfortunately, because the desired sensory outcome and the actual sensory outcome of the movement coincide closely in our task, we cannot determine from our data whether a comparison between these two signals is encoded in PPC. A future experiment that perturbs the visual feedback of the cursor so that it is incongruent with the movement of the joystick, dissociating the intended movement state from the actual visual state, might reveal cells that encode such a comparison but did not respond during our task.

Eye behavior-related signals have also been described in PPC (40). For instance, some smooth pursuit-sensitive cells in area MST appear to reflect the integration of an extraretinal signal related to the continuous movement of the eye and continue to respond during periods of the pursuit when the stimulus is blinked off (41). One use of this signal may be for perceptual stability during pursuit eye movements (15, 42). It would be interesting to determine whether MST cells estimate the current direction of eye movements during pursuit, similar to the forward estimation of movement angle we observe for arm movements. Discrete eye behavior-related signals, such as saccade and fixation responses, have also been described in PPC. Whereas area 7a saccade responses begin largely after the saccade, area LIP saccade responses tend to occur before, during, or after saccades (43). These dynamics have led to the suggestion that this saccade activity is important for perceptual stability and coordinate transformations but not for the execution of eye movements (43). [Note that although LIP does not appear to generate the execution signal for saccades, it does appear to be involved in the formulation of the plan or intent to saccade (29).] The saccade response dynamics in LIP appear to estimate when a saccade is occurring. Fixation-related activity commonly found in PPC is sensitive to eye position, and this response characteristic is often multiplicatively combined with the sensory and saccade-related activity of single neurons (43). The eye-position signal in PPC could be derived from proprioceptive inputs from the eye muscles (44) and/or the integration of saccade command signals. It would be interesting to see whether a component of the eye-position signal might also provide anticipatory information (ahead of passive sensory feedback) about the current state of the eye position during fixations between saccades.

Finally, the availability of both goal and dynamic arm-movement information in PPC makes this brain area an attractive target for a neural prosthesis. For example, a continuous decoder estimating the dynamic state of the cursor could be improved by incorporating target information to constrain the decoded cursor trajectory toward the goal (45). Moreover, at any instant during the movement, the trajectory decoder could be switched to a target decoder once sufficient information about the target becomes available, rapidly jumping the cursor to the correct endpoint. Last, because these neurons appear to encode mostly straight trajectories in visual space, their activity may prove more straightforward to decode for controlling a variety of end effectors.

Methods

General.

In our experiment, two monkeys were trained to perform a 2D center-out joystick (ETI Systems) reaction task. Both monkeys performed the center-out task. The first monkey was also trained to perform an obstacle-avoidance task, which enforced more curvature in the trajectories and further decorrelated goal angle and movement angle [correlation coefficient, center-out task: ρT = 0.70 ± 0.06 and ρT = 0.57 ± 0.12 for monkeys 1 and 2, respectively; obstacle task: ρT = 0.16 ± 0.11; mean ± SD, T-linear association test (26)]. Example trajectories for both tasks are shown in Fig. 1 C and D. During joystick trials, we recorded single-unit activity simultaneously from multiple neurons (up to four electrodes) in the medial bank of the intraparietal sulcus of the PPC. The chamber coordinates were centered at 5 mm posterior and 6 mm lateral.

Behavioral Task.

The monkeys sat 45 cm in front of an LCD monitor. Eye position was monitored with an infrared oculometer (ISCAN). The monkeys initiated a trial by moving a white cursor (0.9°) into a central green target circle (4.4°) and then fixating a red concentric circle (1.6°). After 350 ms, the target was jumped to one of eight (or 12) random peripheral locations (14.7°). The monkeys then guided the cursor smoothly into the peripheral target while maintaining central fixation. Once the cursor had been held within 2.2° of the target center for 350 ms, the monkeys were rewarded with a drop of juice. In the obstacle task, the monkey initiated the trial as before. After 350 ms, a blue obstacle circle (10.0°) appeared, and the target was simultaneously jumped to one of eight (or 12) target locations. The obstacle was centered on the line between the initial and final target positions, equidistant from both. During movement, the monkey maintained central fixation and guided the cursor around the obstacle and into the peripheral target. If the cursor intersected the obstacle or fixation was broken, the trial was aborted. In the center-out task, the time from movement onset to cursor entry into the target was 259 ± 80 ms for monkey 1 and 392 ± 173 ms for monkey 2 (mean ± SD). In the obstacle task, this time was 360 ± 99 ms for monkey 1.

Space–Time Tuning Analysis.

Spike trains and raw joystick position data were smoothed with a Gaussian kernel (SD = 20 ms). The kernel SD was derived from the SD of the interspike interval (ISI) distribution of a typical neuron. Specifically, a Gaussian curve was fit to each tuned neuron's ISI distribution. The median ISI SD across all neurons was 23 ms, which we rounded to 20 ms.
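The smoothing step can be sketched as follows. This is a minimal illustration that assumes spike times in milliseconds and 1-ms sampling. The function name `smooth_spike_train` and the truncation of the kernel at ±4 SD are illustrative choices, not details taken from the original analysis.

```python
import numpy as np


def smooth_spike_train(spike_times_ms, duration_ms, sd_ms=20.0):
    """Hypothetical helper: firing-rate estimate (Hz), one sample per ms,
    from convolving a 1-ms binary spike train with a unit-area Gaussian.
    Truncating the kernel at +/-4 SD is an assumption of this sketch."""
    train = np.zeros(int(duration_ms))
    idx = np.asarray(spike_times_ms, dtype=int)
    idx = idx[(idx >= 0) & (idx < train.size)]
    np.add.at(train, idx, 1.0)  # count spikes in each 1-ms bin

    half = int(np.ceil(4 * sd_ms))
    t = np.arange(-half, half + 1)
    kernel = np.exp(-0.5 * (t / sd_ms) ** 2)
    kernel /= kernel.sum()  # unit area, so the total spike count is preserved

    # 'full' convolution sliced back to the input length keeps the kernel
    # centered on each spike, even when the train is shorter than the kernel.
    full = np.convolve(train, kernel, mode="full")
    return full[half:half + train.size] * 1000.0  # spikes/ms -> spikes/s
```

The same kernel can be applied to each joystick coordinate trace by passing that trace in place of `train`.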

We evaluated the dynamic tuning properties of PPC neurons by constructing an STTF for each neuron, described by the bivariate function N(θ,τ) (19). Each value in the STTF represented the expected value of the firing rate R measured at some time t during the movement, given that a particular behavioral angle θ occurred at time t + τ. The STTF is then expressed as the conditional expectation

$$N(\theta,\tau) = E\left[R(t) \mid \theta(t+\tau) = \theta\right] \tag{1}$$

where τ is the lag time, which was sampled in 30-ms increments, and E is the expected value operator. To compute the average firing rate for a particular STTF bin, N(θbin, τbin), we accumulated firing-rate samples at all times {ti} for which θ = θbin at times {ti + τbin}, across all trials in a session. This sum was then divided by the total number of firing-rate samples that contributed to that (θbin, τbin) bin, giving an average firing rate. Both goal- and movement-angle bins were discretized into π/4-radian increments (π/6-radian increments for 12-target experiments), whereas firing rates were binned into 1-Hz increments. We tried a variety of bin-size resolutions for both parameters, none of which had any qualitative effect on the results reported in this study. On average, >70,000 firing-rate samples were used per session. These were compiled over 489 ± 300 and 498 ± 233 trials (mean ± SD) for the center-out and obstacle tasks, respectively, typically resulting in >500 samples per (θ, τ) bin and >40 trials per target.
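The binned conditional average can be sketched as follows. This is a minimal illustration that assumes per-trial rate and angle arrays sampled every 1 ms. The `windows` argument is a hypothetical interface for the firing-rate segment of each trial. Angle bins are centered on multiples of 2π/n_bins, so that the target directions fall at bin centers; this is an assumption, not a stated detail.

```python
import numpy as np


def compute_sttf(rates, angles, windows, lags_ms, n_bins=8):
    """Sketch of N(theta, tau) = E[R(t) | theta(t + tau) in angle bin].

    rates, angles : lists of 1-D arrays (one per trial), 1-ms samples;
                    angles in radians.
    windows       : hypothetical list of (start, stop) sample indices giving
                    the firing-rate segment of each trial; t + tau must stay
                    inside the trial for every lag.
    lags_ms       : integer lags in ms, e.g. range(-120, 121, 30).
    Returns an (n_bins, len(lags_ms)) array of mean rates (NaN = empty bin).
    """
    width = 2 * np.pi / n_bins
    sums = np.zeros((n_bins, len(lags_ms)))
    counts = np.zeros((n_bins, len(lags_ms)))
    for rate, angle, (start, stop) in zip(rates, angles, windows):
        t = np.arange(start, stop)
        for j, tau in enumerate(lags_ms):
            # Bin k is centered on angle k * width (e.g., the target directions).
            k = np.round(np.mod(angle[t + tau], 2 * np.pi) / width).astype(int) % n_bins
            np.add.at(sums, (k, j), rate[t])   # accumulate R(t) into its bin
            np.add.at(counts, (k, j), 1.0)     # number of samples in the bin
    with np.errstate(invalid="ignore"):
        return sums / counts  # 0/0 -> NaN for bins that were never visited
```

For 12-target sessions, `n_bins=12` gives the π/6 bins described above.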

The movement angle and goal angle were sampled every millisecond over the entire trajectory, from movement onset to the instant the cursor entered the target circle. Importantly, however, firing rates were sampled only from the middle segment of a trial. This ensured that each firing-rate sample could be paired with angles sampled uniformly over the entire range of lag times we considered (Fig. 2B), which eliminated unwanted edge effects at large-magnitude lag times. For example, without this restriction, firing rates paired with τ = −120 ms would be more likely to come from later times in the trajectory, and these samples would be less likely to have corresponding angle pairs for τ > 0. The length of this segment of neural activity depended on trial duration and averaged 145 ± 22 ms and 147 ± 18 ms (mean ± SD) for the center-out and obstacle tasks, respectively. In addition, we considered only firing rates beginning 90 ms after movement onset. This allowed sufficient time for sensory feedback about the state of the movement to arrive in PPC, and it ensured that none of the PPC neural activity we report reflected encoding schemes that operated without access to visual or proprioceptive feedback during online control of the cursor. Finally, activity in this period is unlikely to reflect responses to stimulus onset or reward. It began >220 ms (reaction time + at least 90 ms) after cue onset and ended >440 ms (350-ms hold + at least 90 ms) before reward delivery. The reaction time to initiate movement was 138 ± 51 ms and 159 ± 19 ms (mean ± SD) for the center-out and obstacle tasks, respectively.

A bootstrap Monte Carlo resampling method (>100 iterations) was used to generate a distribution of STTFs (and TEFs). These were averaged to yield the reported STTF, and 95% confidence intervals were derived from the same distribution. To construct a null distribution for significance testing, we built surrogate STTFs (TEFs) from the actual trajectory data (to preserve kinematics) but substituted surrogate spike trains for the neural activity. Surrogate spike trains were generated by sampling uniformly from a neuron's actual interspike-interval distribution, so that surrogate neurons had the same ISI statistics and mean firing rate as the actual neurons. The surrogate trains were then smoothed as before. Using this surrogate neural activity and the actual behavior (movement or goal angles), we performed bootstrap Monte Carlo resampling again to create a distribution of surrogate STTFs (TEFs).
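One way to read "sampling uniformly from a neuron's actual interspike-interval distribution" is to draw ISIs with replacement and chain them into a new train. The sketch below follows that interpretation. The function name and the random phase of the first spike are illustrative assumptions.

```python
import numpy as np


def surrogate_spike_times(spike_times_ms, duration_ms, rng):
    """Hypothetical helper: surrogate spike train built by drawing ISIs
    uniformly, with replacement, from the neuron's empirical ISIs.
    ISI statistics and mean rate are preserved, while any relation to
    behavior is destroyed. The random first-spike offset is an assumption."""
    isis = np.diff(np.sort(np.asarray(spike_times_ms, dtype=float)))
    isis = isis[isis > 0]
    if isis.size == 0:
        raise ValueError("need at least two distinct spike times")
    t = rng.uniform(0.0, isis.mean())  # random phase of the first spike
    spikes = []
    while t < duration_ms:
        spikes.append(t)
        t += rng.choice(isis)  # next interval drawn from the empirical ISIs
    return np.array(spikes)
```

Each surrogate train would then be smoothed with the same Gaussian kernel and paired with the real trajectories to build one surrogate STTF per bootstrap iteration.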

Cosine goodness-of-fit was also assessed for all lag times in a neuron's STTF (i.e., an angular tuning curve for each lag time) according to the following model

$$N_\tau(\theta) = c + a\cos(\theta - \theta_{pd}) \tag{2}$$

where c is the baseline firing rate, a is the gain, θpd is the preferred direction of the neuron, and Nτ denotes the angular tuning function for a particular lag time. This model typically provided a good fit for the population of movement-angle neurons. The coefficient of determination R² of the fit was 0.70 ± 0.43 and 0.83 ± 0.19 (mean ± SD) for the center-out and obstacle tasks, respectively. θpd was computed by using the mean direction method (26). Specifically, vector addition was used to compute the resultant vector B, whose direction gives the angle at which a cell fired maximally for a given lag time, as follows

$$\mathbf{B} = \left(\sum_{i=1}^{M} N_i\cos\theta_i,\; \sum_{i=1}^{M} N_i\sin\theta_i\right), \qquad \theta_{pd} = \operatorname{atan2}\!\left(\sum_{i=1}^{M} N_i\sin\theta_i,\; \sum_{i=1}^{M} N_i\cos\theta_i\right) \tag{3}$$

where M is the number of angle bins (8 or 12), and Ni is a neuron's average firing rate for a particular bin angle θi.
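The two steps above can be sketched for a single lag time as follows: the vector-addition preferred direction and a least-squares cosine fit with its R². This is a minimal illustration with hypothetical function names. The fit uses the identity a cos(θ − θpd) = a cos θpd cos θ + a sin θpd sin θ, which makes the model linear in its coefficients. For equally spaced bins, the phase of this fit coincides with the mean direction.

```python
import numpy as np


def mean_direction(rates, angles):
    """Preferred direction theta_pd as the direction of the resultant
    B = sum_i N_i (cos theta_i, sin theta_i), in [0, 2*pi)."""
    bx = np.sum(rates * np.cos(angles))
    by = np.sum(rates * np.sin(angles))
    return np.mod(np.arctan2(by, bx), 2 * np.pi)


def fit_cosine(rates, angles):
    """Least-squares fit of N(theta) = c + a cos(theta - theta_pd).

    Solved linearly as c + b1 cos(theta) + b2 sin(theta), with
    b1 = a cos(theta_pd) and b2 = a sin(theta_pd).
    Returns (c, a, theta_pd, R^2); R^2 is undefined for a flat curve."""
    X = np.column_stack([np.ones_like(angles), np.cos(angles), np.sin(angles)])
    coef = np.linalg.lstsq(X, rates, rcond=None)[0]
    c, b1, b2 = coef
    resid = rates - X @ coef
    r2 = 1.0 - np.sum(resid ** 2) / np.sum((rates - rates.mean()) ** 2)
    return c, np.hypot(b1, b2), np.mod(np.arctan2(b2, b1), 2 * np.pi), r2
```

Here `rates` would be one column of the STTF (one lag time), and `angles` the bin centers, e.g. `np.arange(8) * np.pi / 4`.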

Note that the STTFs we report here differ somewhat from the spatiotemporal receptive fields used in sensory cortices, which are generally mapped with white-noise stimuli that probe a rich set of space–time representations (46, 47). Natural goal-directed arm movements are autocorrelated. We therefore do not interpret the structure of STTFs as what PPC neurons would directly encode for all possible stimuli. Instead, it reflects the movement-angle dynamics that can be inferred from the firing rate in our particular task.

Information Theoretic Analysis.

Tuning depth and linear correlation metrics, such as Pearson's correlation coefficient, do not capture all types of statistical dependence. We therefore calculated the Shannon mutual information between firing rate and angle for each lag time of the STTF to construct a TEF (19, 48). For two examples of individual cells' residual TEFs, see Fig. S3. This method allowed us to quantify the dependence of the firing rate on the movement or goal angle at different lag times. Mutual information is an entropy-based measure that quantifies how much the variability of one variable (here, the neural firing rate N) depends on the variability of another (here, the goal or movement angle θ). It is defined as

$$I(N;\theta) = \int p(N) \int p(\theta\mid N)\,\log_2\frac{p(\theta\mid N)}{p(\theta)}\,d\theta\,dN \tag{4}$$

Here p(N) is the prior probability distribution of firing rates, p(θ∣N) is the conditional probability distribution of angle given that a particular firing rate was observed, and p(θ) is the prior probability distribution of angle. We computed the above integral with a nonparametric binning approach. Rather than approximating these distributions with a parametric model fit, we estimated them directly from the binned data and evaluated the integral in Eq. 4 as a finite sum according to

$$I(N;\theta) = \sum_{N}\sum_{\theta} p(N)\,p(\theta\mid N)\,\log_2\frac{p(\theta\mid N)}{p(\theta)} \tag{5}$$

To ensure that mutual information values for goal and movement angle were comparable (e.g., to avoid any bias due to possible differences in the individual goal-angle or movement-angle entropies), we normalized mutual information using the symmetric uncertainty measure introduced by Särndal (49)

$$NI = \frac{2\,I(N;\theta)}{H(\theta) + H(N)} \tag{6}$$

The normalized information NI varied between 0 and 1, with NI = 1 denoting complete mutual dependence between firing rate and angle. H(θ) and H(N) are the entropies of the angle (goal or movement) and the firing rate, respectively, and were computed in a similar manner, for example,

$$H(\theta) = -\sum_{\theta} p(\theta)\,\log_2 p(\theta) \tag{7}$$

Because NI is a ratio of information quantities, it is dimensionless. It is therefore not reported in bits and should instead be interpreted simply as a nonparametric measure of the dependence of firing rate on angle.
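The binned (plug-in) mutual information and its symmetric-uncertainty normalization can be sketched for one lag time as follows. This is a minimal illustration that assumes the inputs are already discrete labels, for example firing rates floored to 1-Hz bins and angle-bin indices. The function names are hypothetical. The sum uses p(N) p(θ∣N) = p(N, θ) and p(θ∣N)/p(θ) = p(N, θ)/(p(N) p(θ)), so it evaluates the finite sum over bins directly.

```python
import numpy as np


def _entropy_bits(p):
    """Shannon entropy in bits of a discrete distribution (zeros ignored)."""
    p = p[p > 0]
    return float(-np.sum(p * np.log2(p)))


def normalized_information(rate_bins, angle_bins):
    """Hypothetical helper: plug-in I(N; theta) in bits and the symmetric
    uncertainty NI = 2 I / (H(theta) + H(N)) from paired discrete labels."""
    _, n_idx = np.unique(np.ravel(rate_bins), return_inverse=True)
    _, a_idx = np.unique(np.ravel(angle_bins), return_inverse=True)
    joint = np.zeros((n_idx.max() + 1, a_idx.max() + 1))
    np.add.at(joint, (n_idx, a_idx), 1.0)
    joint /= joint.sum()                       # p(N, theta)
    p_n = joint.sum(axis=1, keepdims=True)     # p(N), shape (R, 1)
    p_a = joint.sum(axis=0, keepdims=True)     # p(theta), shape (1, A)
    nz = joint > 0
    # sum over bins of p(N,theta) log2[ p(N,theta) / (p(N) p(theta)) ]
    mi = float(np.sum(joint[nz] * np.log2(joint[nz] / (p_n @ p_a)[nz])))
    h = _entropy_bits(p_n.ravel()) + _entropy_bits(p_a.ravel())
    return mi, (2.0 * mi / h if h > 0 else 0.0)
```

Calling this once per lag time, with angles taken at t + τ, would give one point of a TEF.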

Note that goal angle TEFs were mostly flat and did not contain temporal-encoding structure (e.g., Fig. 1D). This is because, for any given trial, all firing-rate samples in the neural-activity period were paired with the same goal angle (i.e., a single target) at all possible lag times. Therefore, each trial contributed only a mean rate for one particular goal angle, with no temporal information. When repeated across all trials, the resultant goal-angle STTF contained angular tuning but no temporal tuning, resulting in a flat TEF. Importantly, a flat TEF does not imply that goal angle information was fixed in absolute trial time, but instead represents the average tuning strength for target direction during the neural-activity period that we sampled.

Last, we performed an additional analysis to determine whether the mutual information encoded for movement angle at a cell's OLT was significantly larger than at all other lag times. The outcome of all of these comparisons is summarized graphically in the 95% confidence OLT plots of Fig. S4.

Temporal Dynamics and Curvature of Movement-Angle STTF.

To assess how the encoded movement angle varied as a function of lag time, the difference between θpd at a cell's OLT and θpd at all other significantly tuned lag times was calculated for a neuron's STTF. This calculation was carried out for all cells that had significantly tuned lag times in the range of −120 to 120 ms. So that we could compare these changes in θpd with changes that occurred in the movement angle itself, we also calculated the difference between the movement angle for all times {ti} and the movement angle at all times {ti + τ}, for a range of different lag times (−120 ≤ τ ≤ 120 ms). This process was then repeated for all trials in a session and for all sessions in which movement-angle neurons were reported. These procedures resulted in a distribution of angle differences associated with each lag time, both for the neural activity (i.e., changes in θpd) and the behavior (i.e., changes in movement angle) (Fig. 4B). We then computed the circular SD σdθ for each lag time's distribution of angle-differences (26) to summarize the average tendency of the angle to deviate over lag time. A bootstrap Monte Carlo resampling procedure was used to generate multiple distributions of angle differences, from which the mean and 95% CIs for σdθ were derived (Fig. 4B).
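The behavioral half of this comparison can be sketched as follows. The sketch pools θ(t + τ) − θ(t) over trials for each lag and summarizes the pooled differences with the circular SD √(−2 ln R̄) from Fisher (26), where R̄ is the mean resultant length. The function names and the per-trial 1-ms angle arrays are assumptions. The same `circular_sd` could be applied to the θpd differences from the neural STTFs.

```python
import numpy as np


def circular_sd(angles):
    """Circular SD sqrt(-2 ln R_bar), where R_bar is the mean resultant length.
    Wrapping is implicit because only exp(i * angle) enters the calculation."""
    r_bar = np.abs(np.mean(np.exp(1j * np.asarray(angles, dtype=float))))
    r_bar = min(r_bar, 1.0)  # guard against round-off slightly above 1
    return float(np.sqrt(-2.0 * np.log(r_bar)))


def movement_angle_spread(trials, lags_ms):
    """Hypothetical helper: circular SD of theta(t + tau) - theta(t),
    pooled over trials, for each lag. Each trial is a 1-D array of
    movement angles (radians) sampled every 1 ms."""
    spreads = []
    for tau in lags_ms:
        diffs = []
        for theta in trials:
            n = len(theta)
            if tau >= 0:
                diffs.append(theta[tau:] - theta[:n - tau])
            else:
                diffs.append(theta[:n + tau] - theta[-tau:])
        spreads.append(circular_sd(np.concatenate(diffs)))
    return np.array(spreads)
```

The bootstrap CIs described above would come from repeating this on trials resampled with replacement.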

In addition to the preferred-direction analysis, we calculated the curvature of both the actual trajectories themselves and a neuron's preferred trajectory, which we simulated from a neuron's STTF. First, to derive the curvature of an actual trajectory, a circle was fit to a series of points sampled every 30 ms along a 240-ms trajectory segment by using a nonlinear least-squares estimation approach presented by Gander and colleagues (50) and implemented in the Matlab program, “fitcircle,” by R. Brown (University of Canterbury, Canterbury, U.K.). The curvature was then calculated simply as the inverse of the radius of the circle. This calculation was repeated for a series of subsequent 240-ms trajectory segments within each trial, each beginning 30 ms apart (i.e., 0–240 ms, then 30–270 ms…), and then repeated for all trials in a session. Second, to construct the preferred trajectory encoded by a neuron's STTF, we combined unit vectors, which were derived from each lag time's θpd, in a tip-to-tail fashion from −120 ms to +120 ms. The curvature of the resultant trajectory was then computed as described above, and then this procedure was repeated for each neuron in the population.
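The curvature step can be sketched as follows. This is not the `fitcircle` program cited above. It is a stand-in that solves the same geometric least-squares problem by Gauss-Newton iterations on the residuals ‖pᵢ − c‖ − r, initialized by an algebraic (Kåsa) fit. The function names, the iteration count and the collinearity tolerance are assumptions. For actual trajectories, the nine points would be samples every 30 ms over a 240-ms segment. For a neuron, they would be the tip-to-tail chain of unit vectors along θpd from −120 to +120 ms.

```python
import numpy as np


def fit_circle_curvature(points, n_iter=20):
    """Hypothetical helper: curvature (1 / radius) of a least-squares circle
    through 2-D points. Collinear points give 0 (infinite radius)."""
    p = np.asarray(points, dtype=float)
    sv = np.linalg.svd(p - p.mean(axis=0), compute_uv=False)
    if sv[-1] <= 1e-9 * sv[0]:
        return 0.0  # straight segment: zero curvature

    # Algebraic (Kasa) fit of x^2 + y^2 + D x + E y + F = 0, linear in D, E, F
    A = np.column_stack([p[:, 0], p[:, 1], np.ones(len(p))])
    D, E, F = np.linalg.lstsq(A, -(p[:, 0] ** 2 + p[:, 1] ** 2), rcond=None)[0]
    cx, cy = -D / 2.0, -E / 2.0
    r = np.sqrt(cx ** 2 + cy ** 2 - F)

    # Gauss-Newton refinement of the geometric (orthogonal-distance) fit
    for _ in range(n_iter):
        dx, dy = p[:, 0] - cx, p[:, 1] - cy
        d = np.hypot(dx, dy)
        J = np.column_stack([-dx / d, -dy / d, -np.ones_like(d)])
        step = np.linalg.lstsq(J, -(d - r), rcond=None)[0]
        cx, cy, r = cx + step[0], cy + step[1], r + step[2]
        if np.linalg.norm(step) < 1e-12:
            break
    return 1.0 / abs(r)


def preferred_trajectory(theta_pd):
    """Chain unit vectors along each lag's preferred direction, tip to tail,
    starting at the origin (e.g., nine lags from -120 to +120 ms)."""
    steps = np.column_stack([np.cos(theta_pd), np.sin(theta_pd)])
    return np.vstack([np.zeros((1, 2)), np.cumsum(steps, axis=0)])
```

A constant θpd yields a straight chain and zero curvature. A steady rotation of θpd across lags yields points on a circle whose curvature reflects the rate of turning.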

Separability of Movement Angle STTF.

To assess the separability of an STTF with SVD, we modeled the movement-angle STTF matrix N as the sum of an offset term α and a multiplicative component

$$N = \alpha + U S V^{\mathsf{T}} \tag{8}$$

where U and V are orthogonal matrices containing the singular vectors, and S is a diagonal matrix containing the nonzero singular values of N − α. If an STTF was completely separable, then it could be factored by using only the first singular vectors and the first singular value

$$N = \alpha + s_1\,\mathbf{u}_1\mathbf{v}_1^{\mathsf{T}} \tag{9}$$

in which case all of the energy is captured in the space spanned by the first singular vectors (all other singular values are zero). We varied α from the minimum to the maximum firing rate of a neuron (in 1-Hz increments) and, for each value, fit the separable model of Eq. 9 to reconstruct the matrix N. This optimization determined the optimal offset αopt, which minimized the mean-squared error of the reconstruction of N, similar to the approach of Peña and Konishi (28). Typically, αopt was strongly correlated with the mean value of N (correlation coefficient ρcc = 0.97 and ρcc = 0.90 for the center-out and obstacle tasks, respectively). Using αopt, we then performed the full SVD once more according to Eq. 8 and calculated the fractional energy (FE) contained in each set of singular vectors as

$$\mathrm{FE}_k = \frac{s_k^2}{\sum_{j} s_j^2} \tag{10}$$

To determine whether an STTF was significantly separable, we also performed SVD on surrogate STTFs (derived from the same behavior but using surrogate spike trains) and compared their separability with that of the actual STTF. An STTF was considered significantly separable if the FE of its first singular value exceeded the 95th percentile of the first-singular-value FEs obtained from the surrogate STTFs.
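The separability procedure can be sketched in three steps: a search over the offset α for the best rank-1 reconstruction (the model of Eq. 9), the fractional energy of each singular value, and a percentile comparison against surrogate STTFs. This is a minimal illustration with hypothetical function names. Defining fractional energy with squared singular values, s_k² / Σ s_j², is an assumption of this sketch.

```python
import numpy as np


def optimal_offset(N, alphas):
    """Offset alpha whose rank-1 model alpha + s1 u1 v1^T reconstructs N
    with the smallest mean-squared error."""
    best_alpha, best_mse = None, np.inf
    for alpha in alphas:
        U, s, Vt = np.linalg.svd(N - alpha, full_matrices=False)
        recon = alpha + s[0] * np.outer(U[:, 0], Vt[0])
        mse = np.mean((N - recon) ** 2)
        if mse < best_mse:
            best_alpha, best_mse = alpha, mse
    return best_alpha


def fractional_energy(N, alpha):
    """Fraction of energy per singular value of N - alpha
    (assumed definition: s_k^2 / sum_j s_j^2)."""
    s = np.linalg.svd(N - alpha, compute_uv=False)
    return s ** 2 / np.sum(s ** 2)


def significantly_separable(fe1, surrogate_fe1, q=95.0):
    """True if FE_1 of the real STTF exceeds the q-th percentile of the
    FE_1 values computed from surrogate STTFs."""
    return bool(fe1 > np.percentile(surrogate_fe1, q))
```

Following the text, the candidate offsets would span the neuron's firing-rate range in 1-Hz steps, e.g. `np.arange(np.floor(N.min()), np.ceil(N.max()) + 1.0)`.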

Supplementary Material

Acknowledgments.

We thank R. Bhattacharyya, E. Hwang, I. Kagan, and Z. Nadasdy for comments on the manuscript; K. Pejsa and N. Sammons for animal care; and V. Shcherbatyuk and T. Yao for technical and administrative assistance. This work was supported by the National Eye Institute, the James G. Boswell Foundation, the Defense Advanced Research Projects Agency, and a National Institutes of Health training grant fellowship (to G.H.M.).

Footnotes

The authors declare no conflict of interest.

This article contains supporting information online at www.pnas.org/cgi/content/full/0802602105/DCSupplemental.

References

- 1. Batista AP, Buneo CA, Snyder LH, Andersen RA. Reach plans in eye-centered coordinates. Science. 1999;285:257–260. doi: 10.1126/science.285.5425.257.

- 2. Buneo CA, Jarvis MR, Batista AP, Andersen RA. Direct visuomotor transformations for reaching. Nature. 2002;416:632–636. doi: 10.1038/416632a.

- 3. Snyder LH, Batista AP, Andersen RA. Coding of intention in the posterior parietal cortex. Nature. 1997;386:167–170. doi: 10.1038/386167a0.

- 4. Della-Maggiore V, Malfait N, Ostry DJ, Paus T. Stimulation of the posterior parietal cortex interferes with arm trajectory adjustments during the learning of new dynamics. J Neurosci. 2004;24:9971–9976. doi: 10.1523/JNEUROSCI.2833-04.2004.

- 5. Desmurget M, et al. Role of the posterior parietal cortex in updating reaching movements to a visual target. Nat Neurosci. 1999;2:563–567. doi: 10.1038/9219.

- 6. Pisella L, et al. An “automatic pilot” for the hand in human posterior parietal cortex: Toward reinterpreting optic ataxia. Nat Neurosci. 2000;3:729–736. doi: 10.1038/76694.

- 7. Desmurget M, Grafton S. Forward modeling allows feedback control for fast reaching movements. Trends Cognit Sci. 2000;4:423–431. doi: 10.1016/s1364-6613(00)01537-0.

- 8. Miall RC, Wolpert DM. Forward models for physiological motor control. Neural Networks. 1996;9:1265–1279. doi: 10.1016/s0893-6080(96)00035-4.

- 9. Kalaska JF, Caminiti R, Georgopoulos AP. Cortical mechanisms related to the direction of two-dimensional arm movements: Relations in parietal area 5 and comparison with motor cortex. Exp Brain Res. 1983;51:247–260. doi: 10.1007/BF00237200.

- 10. Jordan MI, Rumelhart DE. Forward models: Supervised learning with a distal teacher. Cognit Sci. 1992;16:307–354.

- 11. Wolpert DM, Ghahramani Z, Jordan MI. An internal model for sensorimotor integration. Science. 1995;269:1880–1882. doi: 10.1126/science.7569931.

- 12. Goodwin GC, Sin KS. Adaptive Filtering Prediction and Control. Englewood Cliffs, NJ: Prentice-Hall; 1984.

- 13. von Helmholtz H. Handbook of Physiological Optics. 3rd Ed. New York: Dover Publications; 1866.

- 14. von Holst E, Mittelstaedt H. Das Reafferenzprinzip. Naturwissenschaften. 1950;37:464–476.

- 15. Haarmeier T, Bunjes F, Lindner A, Berret E, Thier P. Optimizing visual motion perception during eye movements. Neuron. 2001;32:527–535. doi: 10.1016/s0896-6273(01)00486-x.

- 16. Haarmeier T, Thier P, Repnow M, Petersen D. False perception of motion in a patient who cannot compensate for eye movements. Nature. 1997;389:849–852. doi: 10.1038/39872.

- 17. Jones EG, Powell TP. An anatomical study of converging sensory pathways within the cerebral cortex of the monkey. Brain. 1970;93:793–820. doi: 10.1093/brain/93.4.793.

- 18. Johnson PB, Ferraina S, Bianchi L, Caminiti R. Cortical networks for visual reaching: Physiological and anatomical organization of frontal and parietal lobe arm regions. Cereb Cortex. 1996;6:102–119. doi: 10.1093/cercor/6.2.102.

- 19. Paninski L, Fellows MR, Hatsopoulos NG, Donoghue JP. Spatiotemporal tuning of motor cortical neurons for hand position and velocity. J Neurophysiol. 2004;91:515–532. doi: 10.1152/jn.00587.2002.

- 20. Ashe J, Georgopoulos AP. Movement parameters and neural activity in motor cortex and area 5. Cereb Cortex. 1994;4:590–600. doi: 10.1093/cercor/4.6.590.

- 21. Miall RC, Weir DJ, Wolpert DM, Stein JF. Is the cerebellum a Smith predictor? J Mot Behav. 1993;25:203–216. doi: 10.1080/00222895.1993.9942050.

- 22. Flanders M, Cordo PJ. Kinesthetic and visual control of a bimanual task: Specification of direction and amplitude. J Neurosci. 1989;9:447–453. doi: 10.1523/JNEUROSCI.09-02-00447.1989.

- 23. Petersen N, Christensen LOD, Morita H, Sinkjaer T, Nielsen J. Evidence that a transcortical pathway contributes to stretch reflexes in the tibialis anterior muscle in man. J Physiol. 1998;512:267–276. doi: 10.1111/j.1469-7793.1998.267bf.x.

- 24. Raiguel SE, Xiao DK, Marcar VL, Orban GA. Response latency of macaque area MT/V5 neurons and its relationship to stimulus parameters. J Neurophysiol. 1999;82:1944–1956. doi: 10.1152/jn.1999.82.4.1944.

- 25. Averbeck BB, Chafee MV, Crowe DA, Georgopoulos AP. Parietal representation of hand velocity in a copy task. J Neurophysiol. 2005;93:508–518. doi: 10.1152/jn.00357.2004.

- 26. Fisher NI. Statistical Analysis of Circular Data. Cambridge, UK: Cambridge Univ Press; 1993.

- 27. Mazer JA, Vinje WE, McDermott J, Schiller PH, Gallant JL. Spatial frequency and orientation tuning dynamics in area V1. Proc Natl Acad Sci USA. 2002;99:1645–1650. doi: 10.1073/pnas.022638499.

- 28. Peña JL, Konishi M. Auditory spatial receptive fields created by multiplication. Science. 2001;292:249–252. doi: 10.1126/science.1059201.

- 29. Andersen RA, Buneo CA. Intentional maps in posterior parietal cortex. Annu Rev Neurosci. 2002;25:189–220. doi: 10.1146/annurev.neuro.25.112701.142922.

- 30. Bastian AJ. Learning to predict the future: The cerebellum adapts feedforward movement control. Curr Opin Neurobiol. 2006;16:645–649. doi: 10.1016/j.conb.2006.08.016.

- 31. Blakemore SJ, Sirigu A. Action prediction in the cerebellum and in the parietal lobe. Exp Brain Res. 2003;153:239–245. doi: 10.1007/s00221-003-1597-z.

- 32. Wolpert DM, Goodbody SJ, Husain M. Maintaining internal representations: The role of the human superior parietal lobe. Nat Neurosci. 1998;1:529–533. doi: 10.1038/2245.

- 33. Wolpert DM, Miall RC, Kawato M. Internal models in the cerebellum. Trends Cognit Sci. 1998;2:338–347. doi: 10.1016/s1364-6613(98)01221-2.

- 34. Clower DM, West RA, Lynch JC, Strick PL. The inferior parietal lobule is the target of output from the superior colliculus, hippocampus, and cerebellum. J Neurosci. 2001;21:6283–6291. doi: 10.1523/JNEUROSCI.21-16-06283.2001.

- 35. Glickstein M. How are visual areas of the brain connected to motor areas for the sensory guidance of movement? Trends Neurosci. 2000;23:613–617. doi: 10.1016/s0166-2236(00)01681-7.

- 36. Moran DW, Schwartz AB. Motor cortical representation of speed and direction during reaching. J Neurophysiol. 1999;82:2676–2692. doi: 10.1152/jn.1999.82.5.2676.

- 37. Schwartz AB, Kettner RE, Georgopoulos AP. Primate motor cortex and free arm movements to visual targets in 3-dimensional space. 1. Relations between single cell discharge and direction of movement. J Neurosci. 1988;8:2913–2927. doi: 10.1523/JNEUROSCI.08-08-02913.1988.

- 38. Hatsopoulos NG, Xu QQ, Amit Y. Encoding of movement fragments in the motor cortex. J Neurosci. 2007;27:5105–5114. doi: 10.1523/JNEUROSCI.3570-06.2007.

- 39. Wu W, Hatsopoulos N. Evidence against a single coordinate system representation in the motor cortex. Exp Brain Res. 2006;175:197–210. doi: 10.1007/s00221-006-0556-x.

- 40. Mountcastle VB, Lynch JC, Georgopoulos A, Sakata H, Acuna C. Posterior parietal association cortex of monkey: Command functions for operations within extrapersonal space. J Neurophysiol. 1975;38:871–908. doi: 10.1152/jn.1975.38.4.871.

- 41. Newsome WT, Wurtz RH, Komatsu H. Relation of cortical areas MT and MST to pursuit eye movements. 2. Differentiation of retinal from extraretinal inputs. J Neurophysiol. 1988;60:604–620. doi: 10.1152/jn.1988.60.2.604.

- 42. Bradley DC, Maxwell M, Andersen RA, Banks MS, Shenoy KV. Mechanisms of heading perception in primate visual cortex. Science. 1996;273:1544–1547. doi: 10.1126/science.273.5281.1544.

- 43. Andersen RA, Essick GK, Siegel RM. Neurons of area 7 activated by both visual stimuli and oculomotor behavior. Exp Brain Res. 1987;67:316–322. doi: 10.1007/BF00248552.

- 44. Wang XL, Zhang MS, Cohen IS, Goldberg ME. The proprioceptive representation of eye position in monkey primary somatosensory cortex. Nat Neurosci. 2007;10:640–646. doi: 10.1038/nn1878.

- 45. Srinivasan L, Brown EN. A state–space framework for movement control to dynamic goals through brain-driven interfaces. IEEE Trans Biomed Eng. 2007;54:526–535. doi: 10.1109/TBME.2006.890508.

- 46. DeAngelis GC, Ghose GM, Ohzawa I, Freeman RD. Functional micro-organization of primary visual cortex: Receptive field analysis of nearby neurons. J Neurosci. 1999;19:4046–4064. doi: 10.1523/JNEUROSCI.19-10-04046.1999.

- 47. Kowalski N, Depireux DA, Shamma SA. Analysis of dynamic spectra in ferret primary auditory cortex. 1. Characteristics of single-unit responses to moving ripple spectra. J Neurophysiol. 1996;76:3503–3523. doi: 10.1152/jn.1996.76.5.3503.

- 48. Cover TM, Thomas JA. Elements of Information Theory. New York: Wiley; 1991.

- 49. Särndal CE. A comparative study of association measures. Psychometrika. 1974;39:165–187.

- 50. Gander W, Golub GH, Strebel R. Least-squares fitting of circles and ellipses. BIT. 1994;34:558–578.
